Networks and Grids for HENP as Global eScience

Harvey B. Newman, California Institute of Technology
CHEP 2004, Interlaken, September 30, 2004

ICFA and Global Networks for Collaborative Science
- Given the worldwide spread and data-intensive challenges in our field, national and international networks with sufficient (rapidly increasing) capacity and seamless end-to-end capability are essential for:
  - The daily conduct of collaborative work in both experiment and theory
  - Experiment development and construction on a global scale
  - Grid systems supporting analysis involving physicists in all world regions
  - The conception, design and implementation of next-generation facilities as “global networks”
- “Collaborations on this scale would never have been attempted, if they could not rely on excellent networks”

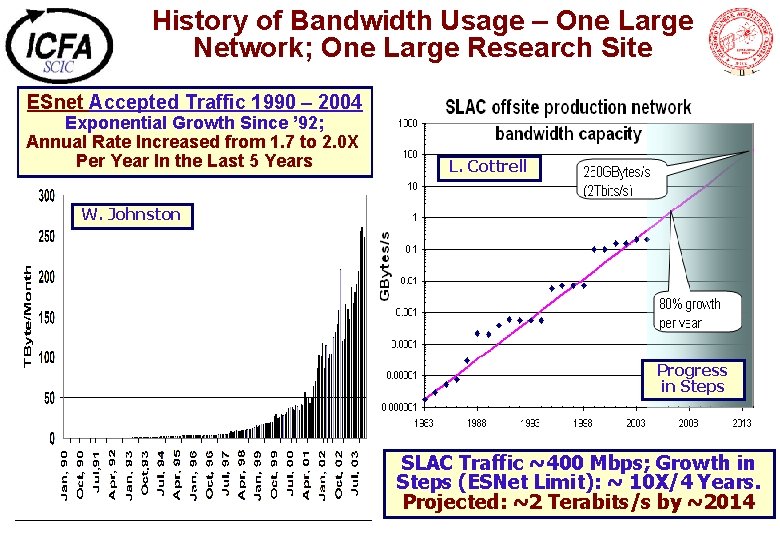

History of Bandwidth Usage: One Large Network; One Large Research Site (L. Cottrell, W. Johnston)
- ESnet accepted traffic, 1990-2004: exponential growth since 1992; the annual rate increased from 1.7X to 2.0X per year in the last 5 years
- SLAC traffic ~400 Mbps; progress in steps (ESnet limit): ~10X per 4 years
- Projected: ~2 Terabits/s by ~2014
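To compare a step rate such as "~10X per 4 years" with the quoted 1.7-2.0X per year, both can be converted to an equivalent steady yearly factor. This is a minimal sketch; the helper names `annual_factor` and `years_to_grow` are illustrative and not from the talk, and real traffic grows in steps rather than smoothly.

```python
import math


def annual_factor(total_factor: float, years: float) -> float:
    """Constant per-year growth factor equivalent to total_factor over years."""
    return total_factor ** (1.0 / years)


def years_to_grow(total_factor: float, per_year: float) -> float:
    """Years needed to grow by total_factor at a constant per-year factor."""
    return math.log(total_factor) / math.log(per_year)


# SLAC: growth in steps of ~10X every 4 years
print(f"10X per 4 years = {annual_factor(10, 4):.2f}X per year")

# ESnet: 1.7X to 2.0X per year; how long does a 10X step take?
for rate in (1.7, 2.0):
    print(f"{rate}X per year -> 10X in {years_to_grow(10, rate):.1f} years")
```

The SLAC step rate works out to about 1.78X per year, inside the 1.7-2.0X band quoted for ESnet.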

Int’l Network Bandwidth on Major Links for HENP: US-CERN Example
- Rate of progress >> Moore’s Law

| Bandwidth | Period | Increase over 1985 |
|---|---|---|
| 9.6 kbps analog | 1985 | (baseline) |
| 64-256 kbps digital | 1989-1994 | X 7-27 |
| 1.5 Mbps shared (IBM) | 1990-93 | X 160 |
| 2-4 Mbps | 1996-1998 | X 200-400 |
| 12-20 Mbps | 1999-2000 | X 1.2k-2k |
| 155-310 Mbps | 2001-02 | X 16k-32k |
| 622 Mbps | 2002-03 | X 65k |
| 2.5 Gbps | 2003-04 | X 250k |
| 10 Gbps | 2005 | X 1M |
| 4 x 10 Gbps or 40 Gbps | 2007-08 | X 4M |

- A factor of ~1M bandwidth increase since 1985 (~4M by 2007-08); a factor of ~5k since 1995
- HENP has become a leading applications driver, and also a co-developer of global networks
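The growth factors in the US-CERN history are simply each link speed divided by the 1985 baseline of 9.6 kbps. The sketch below recomputes them and, as an illustration not made on the slide, converts the 1985-2005 increase into a steady yearly factor. The `LINKS` list and `growth` helper are hypothetical names; periods are copied from the history, and overlapping periods are kept as given.

```python
BASELINE_KBPS = 9.6  # 1985 analog US-CERN link

# (period, low, high) in kbps, taken from the US-CERN history above
LINKS = [
    ("1989-94", 64, 256),
    ("1990-93", 1_500, 1_500),
    ("1996-98", 2_000, 4_000),
    ("1999-2000", 12_000, 20_000),
    ("2001-02", 155_000, 310_000),
    ("2002-03", 622_000, 622_000),
    ("2003-04", 2_500_000, 2_500_000),
    ("2005", 10_000_000, 10_000_000),
    ("2007-08", 40_000_000, 40_000_000),
]


def growth(kbps: float) -> float:
    """Bandwidth increase relative to the 1985 baseline."""
    return kbps / BASELINE_KBPS


for period, low, high in LINKS:
    print(f"{period:>10}: X {growth(low):,.0f} - {growth(high):,.0f}")

# Equivalent steady yearly factor for 9.6 kbps (1985) -> 10 Gbps (2005)
per_year = growth(10_000_000) ** (1 / (2005 - 1985))
print(f"~{per_year:.2f}X per year over 20 years")
```

The yearly factor comes out at almost exactly 2X: on this link, bandwidth has roughly doubled every year for two decades.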

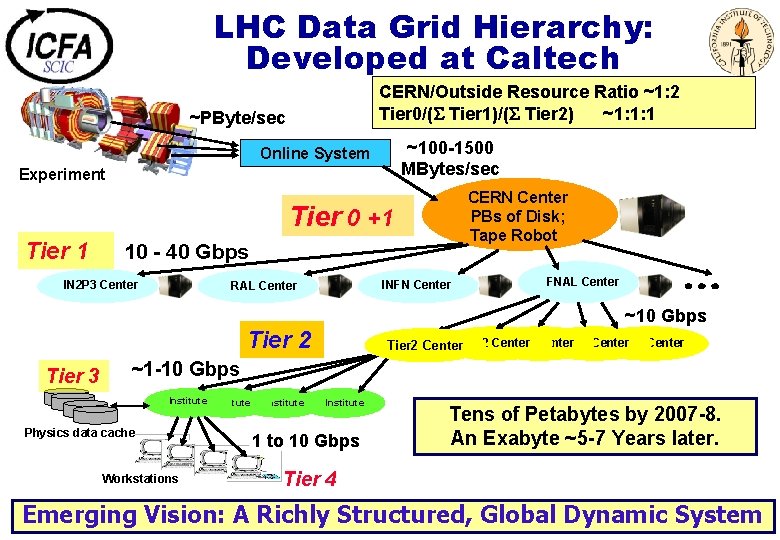

LHC Data Grid Hierarchy: Developed at Caltech
- Experiment → Online System: ~PByte/sec
- Online System → Tier 0+1 (CERN Center: PBs of disk; tape robot): ~100-1500 MBytes/sec
- Tier 0+1 → Tier 1 centers (IN2P3, INFN, RAL, FNAL): 10-40 Gbps
- Tier 1 → Tier 2 centers: ~10 Gbps
- Tier 2 → Tier 3 (institutes with physics data caches): ~1-10 Gbps
- Tier 3 → Tier 4 (workstations): 1 to 10 Gbps
- CERN/outside resource ratio ~1:2; Tier 0 / (Σ Tier 1) / (Σ Tier 2) ~1:1:1
- Tens of Petabytes by 2007-08; an Exabyte ~5-7 years later
- Emerging vision: a richly structured, global dynamic system
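One consequence of a tiered hierarchy is that an end-to-end flow is limited by the slowest hop it crosses. The sketch below models the inter-tier links as a list and takes the minimum along a path. The `Hop` class and `bottleneck_gbps` function are hypothetical; treating "~10 Gbps" as exactly 10 Gbps, and ignoring link sharing and protocol overhead, are simplifying assumptions.

```python
from dataclasses import dataclass


@dataclass
class Hop:
    upper: str
    lower: str
    low_gbps: float
    high_gbps: float


# Nominal inter-tier link speeds from the hierarchy slide (Gbps ranges)
HIERARCHY = [
    Hop("Tier 0+1 (CERN)", "Tier 1", 10, 40),
    Hop("Tier 1", "Tier 2", 10, 10),
    Hop("Tier 2", "Tier 3", 1, 10),
    Hop("Tier 3", "Tier 4", 1, 10),
]


def bottleneck_gbps(path: list[Hop], pessimistic: bool = True) -> float:
    """An end-to-end flow can go no faster than the slowest hop it crosses."""
    return min(h.low_gbps if pessimistic else h.high_gbps for h in path)


cern_to_tier3 = HIERARCHY[:3]
print("CERN -> Tier 3:", bottleneck_gbps(cern_to_tier3), "to",
      bottleneck_gbps(cern_to_tier3, pessimistic=False), "Gbps")
```

Even with 10-40 Gbps at the top of the hierarchy, a transfer to a Tier 3 institute may be capped at 1 Gbps by its last hop.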

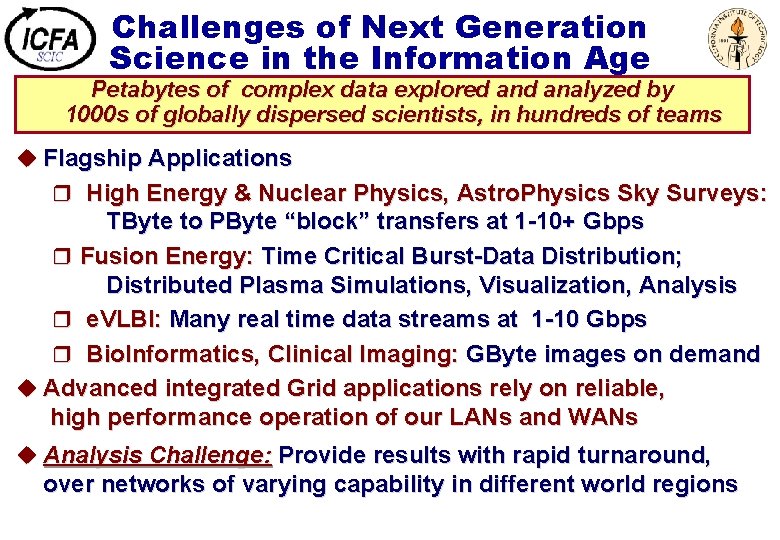

Challenges of Next Generation Science in the Information Age
- Petabytes of complex data explored and analyzed by thousands of globally dispersed scientists, in hundreds of teams
- Flagship applications:
  - High Energy & Nuclear Physics, Astrophysics sky surveys: TByte to PByte “block” transfers at 1-10+ Gbps
  - Fusion Energy: time-critical burst-data distribution; distributed plasma simulations, visualization, analysis
  - e-VLBI: many real-time data streams at 1-10 Gbps
  - BioInformatics, clinical imaging: GByte images on demand
- Advanced integrated Grid applications rely on reliable, high-performance operation of our LANs and WANs
- Analysis challenge: provide results with rapid turnaround, over networks of varying capability in different world regions
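To see why TByte-to-PByte block transfers need 1-10+ Gbps, it helps to compute the transfer times directly. This is a minimal sketch assuming decimal units (1 TB = 10^12 bytes) and a fully used path; the `efficiency` parameter is a hypothetical knob for protocol and host overheads, not a figure from the talk.

```python
TB = 1e12  # decimal terabyte, in bytes
PB = 1e15  # decimal petabyte, in bytes


def transfer_time_s(size_bytes: float, rate_gbps: float,
                    efficiency: float = 1.0) -> float:
    """Seconds to move size_bytes at rate_gbps; efficiency < 1 models overheads."""
    return size_bytes * 8 / (rate_gbps * 1e9 * efficiency)


for size, label in ((TB, "1 TB"), (PB, "1 PB")):
    for rate in (1, 10):
        t = transfer_time_s(size, rate)
        print(f"{label} at {rate:>2} Gbps: {t / 3600:8.1f} h = {t / 86400:6.2f} days")
```

A 1 PB block takes more than nine days even on a fully used 10 Gbps path, while 1 TB at 10 Gbps takes about 13 minutes.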

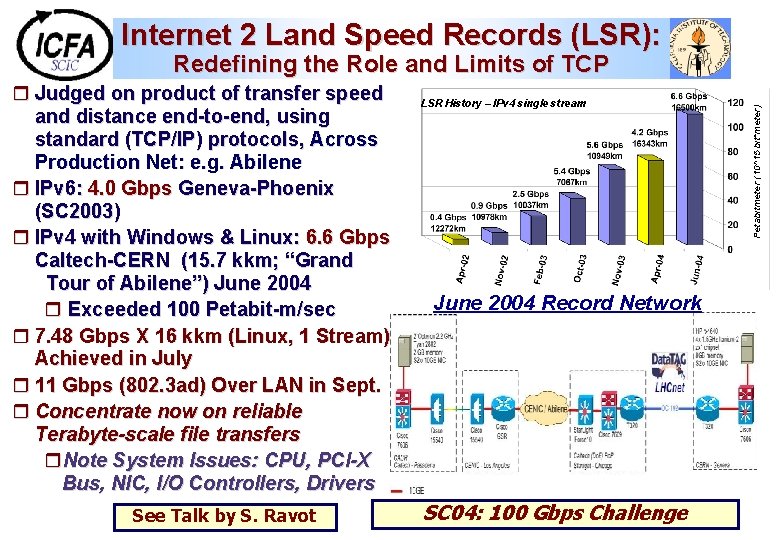

Internet2 Land Speed Records (LSR)
- Judged on the product of transfer speed and end-to-end distance, using standard (TCP/IP) protocols, across production networks such as Abilene
- IPv6: 4.0 Gbps Geneva-Phoenix (SC2003)
- IPv4 with Windows & Linux: 6.6 Gbps Caltech-CERN (15.7 kkm; “Grand Tour of Abilene”), June 2004
  - Exceeded 100 Petabit-m/sec
- 7.48 Gbps X 16 kkm (Linux, 1 stream) achieved in July
- 11 Gbps (802.3ad) over LAN in September
- Concentrating now on reliable Terabyte-scale file transfers
- Note system issues: CPU, PCI-X bus, NIC, I/O controllers, drivers

See talk by S. Ravot.
[Figures: LSR history, IPv4 single stream, in Petabit-meters (10^15 bit·meter); monitoring of the Abilene traffic in LA during the June 2004 record; SC04 network for the 100 Gbps Challenge]
Redefining the Role and Limits of TCP
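The LSR figure of merit is throughput multiplied by end-to-end distance. The sketch below reproduces the "exceeded 100 Petabit-m/sec" claim from the quoted numbers; it is a simplification that ignores the contest's detailed rules for measuring path distance, and the function name is hypothetical.

```python
def lsr_metric_pbm_per_s(throughput_gbps: float, distance_km: float) -> float:
    """Throughput x distance, expressed in petabit-metres per second."""
    bit_metres_per_s = throughput_gbps * 1e9 * distance_km * 1e3
    return bit_metres_per_s / 1e15


june_2004 = lsr_metric_pbm_per_s(6.6, 15_700)   # Caltech-CERN, IPv4
july_2004 = lsr_metric_pbm_per_s(7.48, 16_000)  # Linux, single stream
print(f"June: {june_2004:.1f} Pb-m/s, July: {july_2004:.1f} Pb-m/s")
```

This gives about 103.6 Pb-m/s for the June record and about 119.7 Pb-m/s for the July single-stream result.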

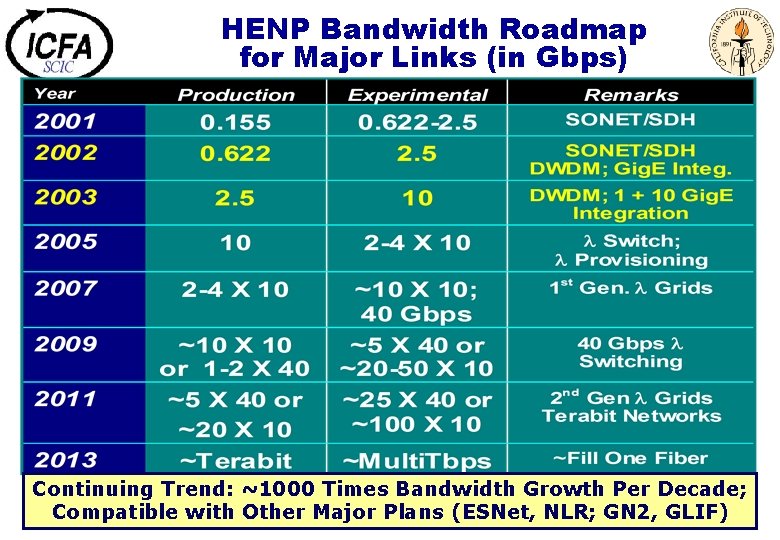

HENP Bandwidth Roadmap for Major Links (in Gbps)
Continuing trend: ~1000 times bandwidth growth per decade; compatible with other major plans (ESnet, NLR; GN2, GLIF)

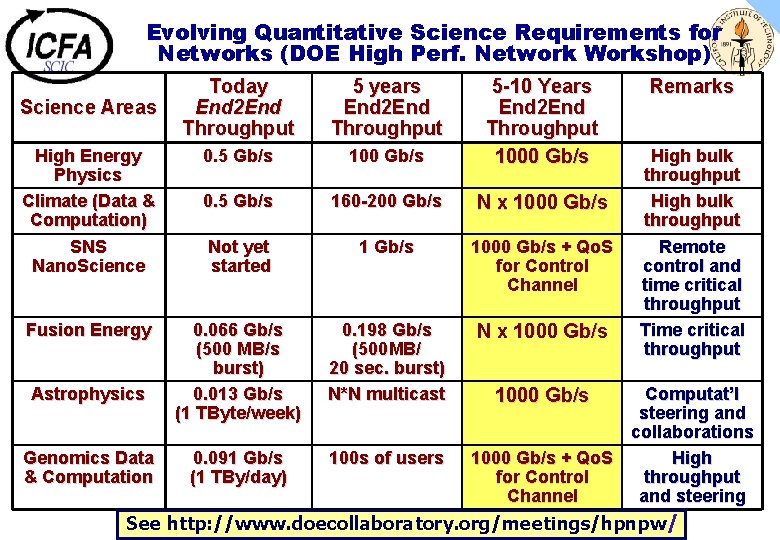

Evolving Quantitative Science Requirements for Networks (DOE High Performance Network Workshop)

| Science Areas | Today End2End Throughput | 5 Years End2End Throughput | 5-10 Years End2End Throughput | Remarks |
|---|---|---|---|---|
| High Energy Physics | 0.5 Gb/s | 100 Gb/s | 1000 Gb/s | High bulk throughput |
| Climate (Data & Computation) | 0.5 Gb/s | 160-200 Gb/s | N x 1000 Gb/s | High bulk throughput |
| SNS NanoScience | Not yet started | 1 Gb/s | 1000 Gb/s + QoS for control channel | Remote control and time critical throughput |
| Fusion Energy | 0.066 Gb/s (500 MB/s burst) | 0.198 Gb/s (500 MB/20 sec. burst) | N x 1000 Gb/s | Time critical throughput |
| Astrophysics | 0.013 Gb/s (1 TByte/week) | N*N multicast | 1000 Gb/s | Computational steering and collaborations |
| Genomics Data & Computation | 0.091 Gb/s (1 TByte/day) | 100s of users | 1000 Gb/s + QoS for control channel | High throughput and steering |

See http://www.doecollaboratory.org/meetings/hpnpw/
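Several entries in the DOE requirements table express a data volume per interval (1 TByte/week, 1 TByte/day, 500 MB per 20 s burst) as an average rate. The sketch below shows that conversion, assuming decimal units. The 0.013 Gb/s astrophysics figure matches; the table's 0.198 and 0.091 Gb/s differ slightly from these results (0.200 and about 0.093 Gb/s), presumably because of different rounding or unit conventions, which is an assumption on our part.

```python
DAY_S = 86_400
WEEK_S = 7 * DAY_S


def avg_gbps(volume_bytes: float, interval_s: float) -> float:
    """Average rate needed to move volume_bytes within interval_s seconds."""
    return volume_bytes * 8 / interval_s / 1e9


astro = avg_gbps(1e12, WEEK_S)        # 1 TByte/week
genomics = avg_gbps(1e12, DAY_S)      # 1 TByte/day
fusion_burst = avg_gbps(500e6, 20)    # 500 MB in a 20 s burst
print(f"{astro:.4f} {genomics:.4f} {fusion_burst:.3f} Gb/s")
```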

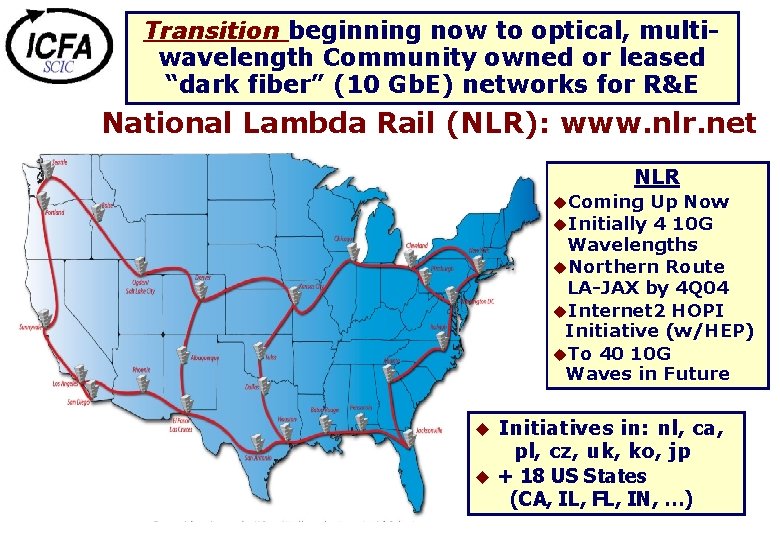

Transition beginning now to optical, multiwavelength, community-owned or leased “dark fiber” (10 GbE) networks for R&E
National Lambda Rail (NLR): www.nlr.net
- Coming up now
- Initially 4 10G wavelengths
- Northern Route LA-JAX by 4Q04
- Internet2 HOPI initiative (with HEP)
- To 40 10G waves in the future

Initiatives in: nl, ca, pl, cz, uk, ko, jp
- + 18 US states (CA, IL, FL, IN, …)

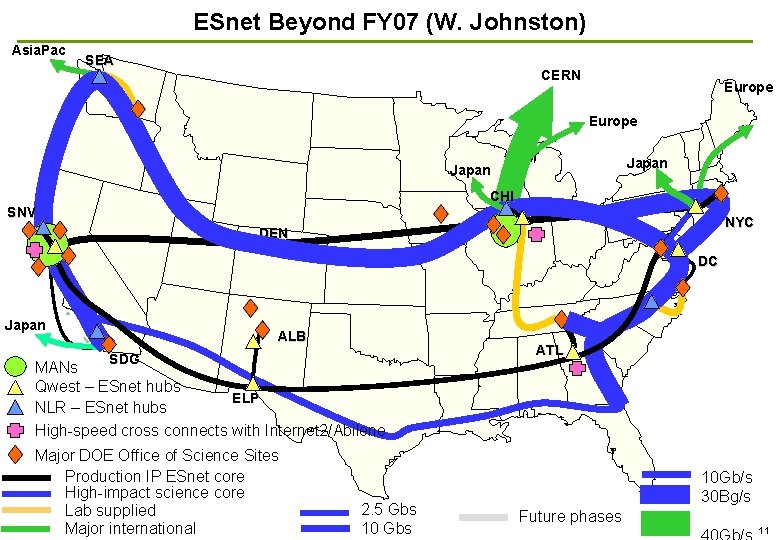

ESnet Beyond FY07 (W. Johnston)
[Map: ESnet production IP core and high-impact science core, with hubs (SEA, SNV, SDG, ELP, DEN, CHI, ATL, DC, NYC, ALB), MANs, Qwest-ESnet and NLR-ESnet hubs, high-speed cross-connects with Internet2/Abilene, major DOE Office of Science sites, lab-supplied links, and major international links to Asia-Pacific, Japan, Europe and CERN; link classes 2.5 Gb/s, 10 Gb/s and 30 Gb/s, plus future phases]

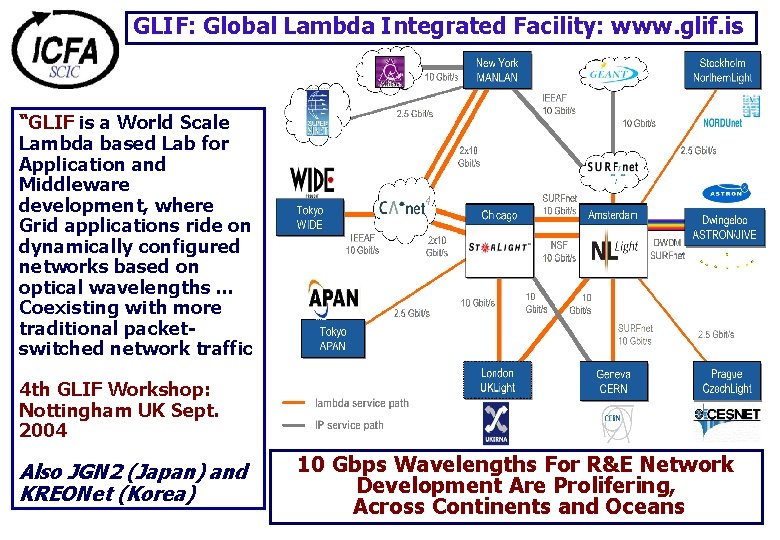

GLIF: Global Lambda Integrated Facility: www.glif.is
“GLIF is a World Scale Lambda based Lab for Application and Middleware development, where Grid applications ride on dynamically configured networks based on optical wavelengths ... Coexisting with more traditional packet-switched network traffic”
4th GLIF Workshop: Nottingham, UK, Sept. 2004
Also JGN2 (Japan) and KREONet (Korea)
10 Gbps wavelengths for R&E network development are proliferating, across continents and oceans

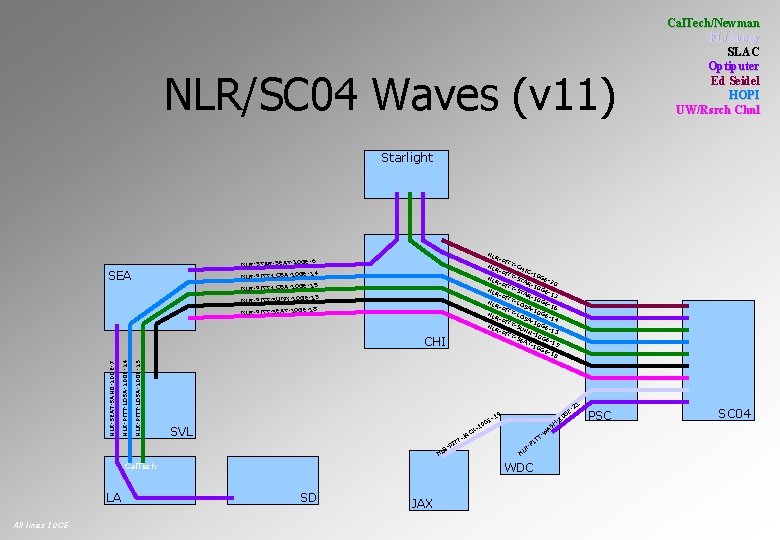

NLR/SC04 Waves (v11)
[Map: NLR 10 GbE waves to SC04 in Pittsburgh (PSC), with endpoints including Seattle, Sunnyvale, Los Angeles, San Diego, Chicago (StarLight), Jacksonville and Washington DC; all lines 10 GbE. Wave users: Caltech/Newman, FL/Avery, SLAC, OptIPuter, Ed Seidel, HOPI, UW/Research Channel, StarLight]

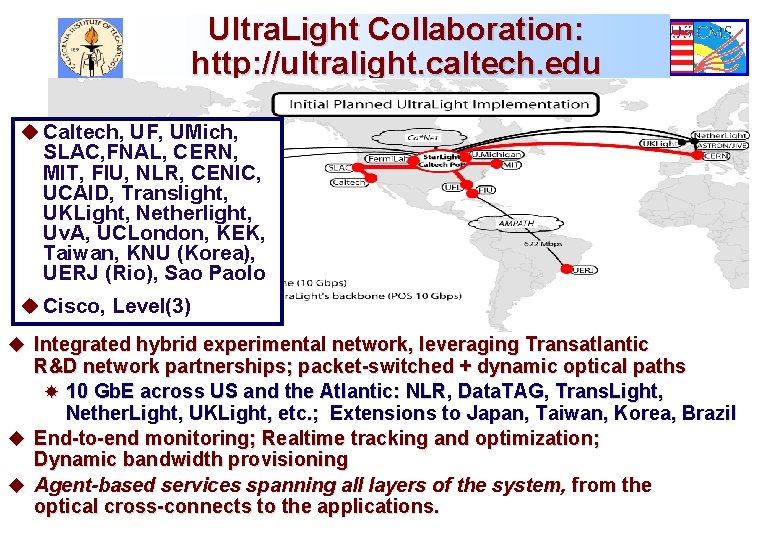

UltraLight Collaboration: http://ultralight.caltech.edu
- Caltech, UF, UMich, SLAC, FNAL, CERN, MIT, FIU, NLR, CENIC, UCAID, TransLight, UKLight, NetherLight, UvA, UCLondon, KEK, Taiwan, KNU (Korea), UERJ (Rio), São Paulo
- Cisco, Level(3)
- Integrated hybrid experimental network, leveraging transatlantic R&D network partnerships; packet-switched + dynamic optical paths
  - 10 GbE across the US and the Atlantic: NLR, DataTAG, TransLight, NetherLight, UKLight, etc.; extensions to Japan, Taiwan, Korea, Brazil
- End-to-end monitoring; realtime tracking and optimization; dynamic bandwidth provisioning
- Agent-based services spanning all layers of the system, from the optical cross-connects to the applications

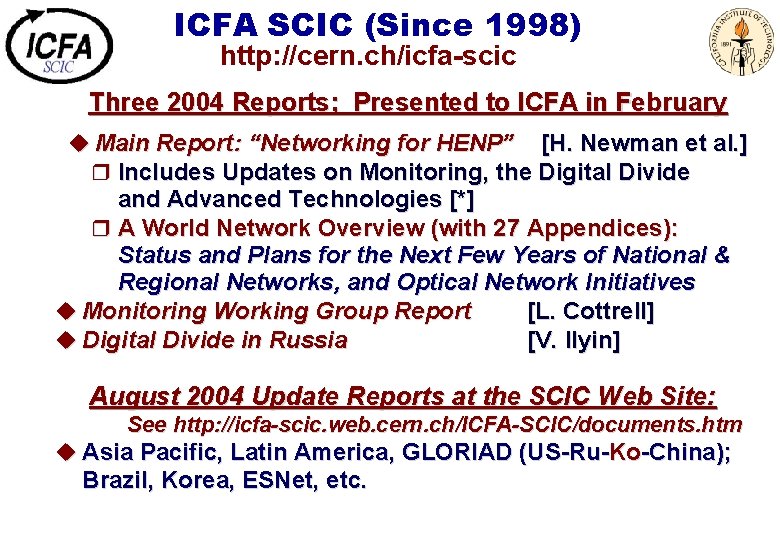

ICFA SCIC (since 1998): http://cern.ch/icfa-scic
Three 2004 reports, presented to ICFA in February:
- Main report: “Networking for HENP” [H. Newman et al.]
  - Includes updates on monitoring, the Digital Divide and advanced technologies [*]
  - A world network overview (with 27 appendices): status and plans for the next few years of national & regional networks, and optical network initiatives
- Monitoring Working Group report [L. Cottrell]
- Digital Divide in Russia [V. Ilyin]

August 2004 update reports at the SCIC web site: see http://icfa-scic.web.cern.ch/ICFA-SCIC/documents.htm
- Asia Pacific, Latin America, GLORIAD (US-Ru-Ko-China); Brazil, Korea, ESnet, etc.

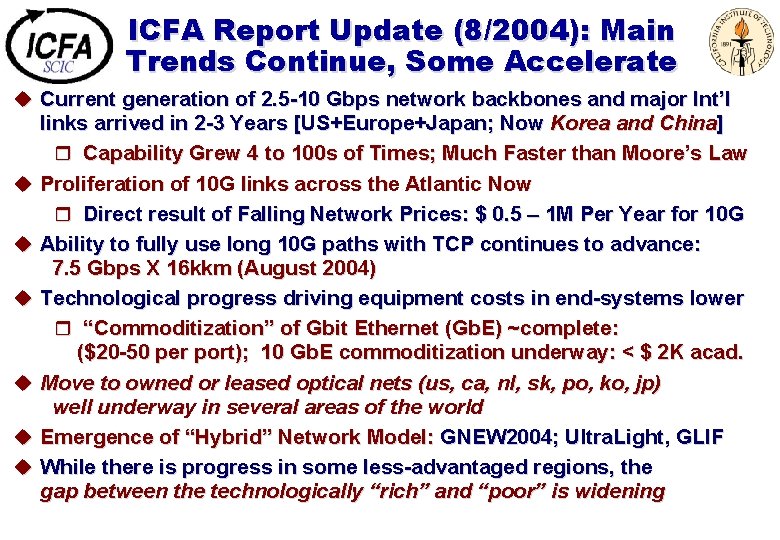

ICFA Report Update (8/2004): Main Trends Continue, Some Accelerate
- The current generation of 2.5-10 Gbps network backbones and major international links arrived in 2-3 years [US+Europe+Japan; now Korea and China]
  - Capability grew 4 to 100s of times; much faster than Moore’s Law
- Proliferation of 10G links across the Atlantic now
  - Direct result of falling network prices: $0.5-1M per year for 10G
- Ability to fully use long 10G paths with TCP continues to advance: 7.5 Gbps X 16 kkm (August 2004)
- Technological progress driving equipment costs in end-systems lower
  - “Commoditization” of Gigabit Ethernet (GbE) ~complete ($20-50 per port); 10 GbE commoditization underway (< $2K academic)
- Move to owned or leased optical nets (us, ca, nl, sk, po, ko, jp) well underway in several areas of the world
- Emergence of the “hybrid” network model: GNEW2004; UltraLight, GLIF
- While there is progress in some less-advantaged regions, the gap between the technologically “rich” and “poor” is widening

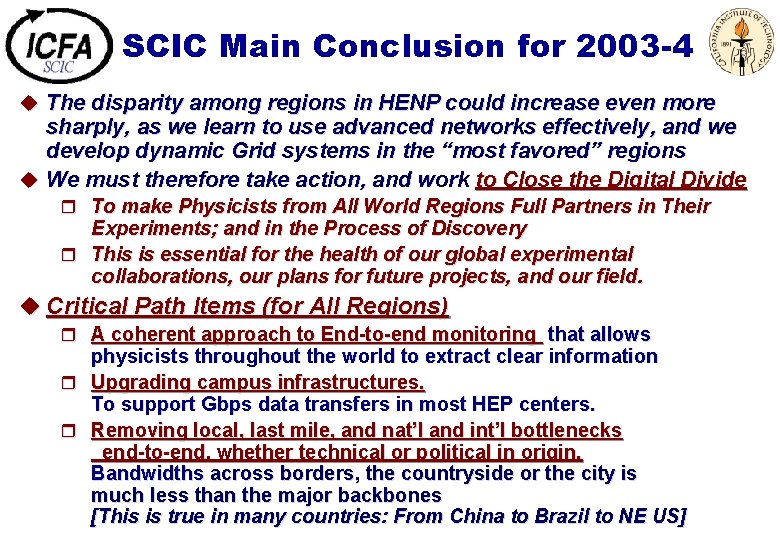

SCIC Main Conclusion for 2003-4
- The disparity among regions in HENP could increase even more sharply, as we learn to use advanced networks effectively and develop dynamic Grid systems in the “most favored” regions
- We must therefore take action, and work to close the Digital Divide
  - To make physicists from all world regions full partners in their experiments, and in the process of discovery
  - This is essential for the health of our global experimental collaborations, our plans for future projects, and our field
- Critical path items (for all regions):
  - A coherent approach to end-to-end monitoring that allows physicists throughout the world to extract clear information
  - Upgrading campus infrastructures to support Gbps data transfers in most HEP centers
  - Removing local, last-mile, national and international bottlenecks end-to-end, whether technical or political in origin. Bandwidths across borders, the countryside or the city are much lower than on the major backbones [This is true in many countries: from China to Brazil to the NE US]

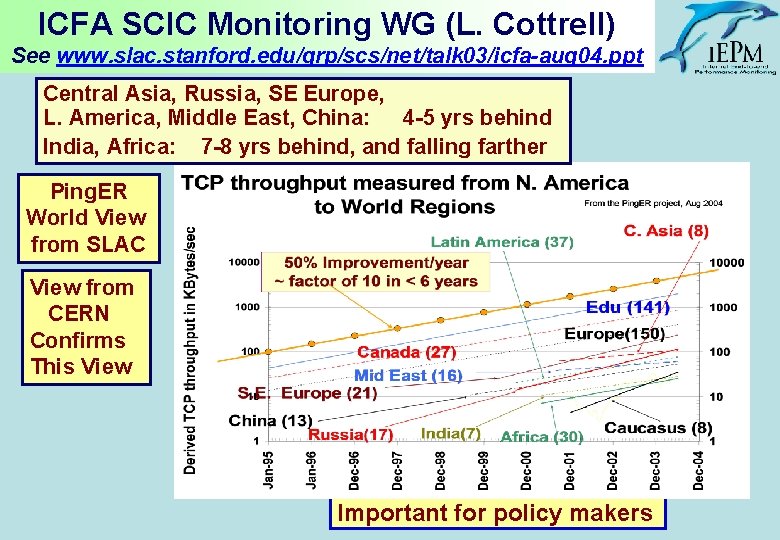

ICFA SCIC Monitoring WG (L. Cottrell)
See www.slac.stanford.edu/grp/scs/net/talk03/icfa-aug04.ppt
- PingER world view from SLAC; the view from CERN confirms this view
- Central Asia, Russia, SE Europe, Latin America, Middle East, China: 4-5 years behind
- India, Africa: 7-8 years behind, and falling farther behind
- Important for policy makers

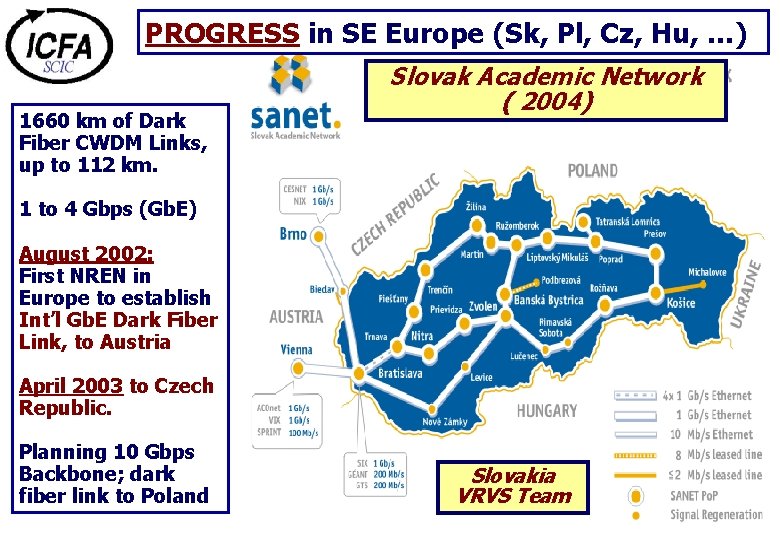

PROGRESS in SE Europe (Sk, Pl, Cz, Hu, …)
- Slovak Academic Network (2004): 1660 km of dark fiber; CWDM links up to 112 km; 1 to 4 Gbps (GbE)
- August 2002: first NREN in Europe to establish an international GbE dark fiber link, to Austria; April 2003, to the Czech Republic
- Planning a 10 Gbps backbone; dark fiber link to Poland

Slovakia VRVS Team

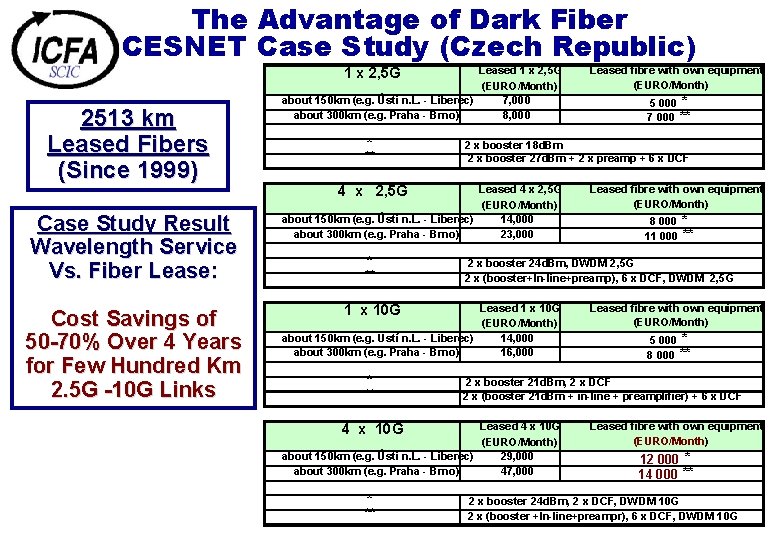

The Advantage of Dark Fiber: CESNET Case Study (Czech Republic)
CESNET: 2513 km of leased fibers (since 1999)
Case study result, wavelength service vs. fiber lease: cost savings of 50-70% over 4 years for 2.5G-10G links of a few hundred km

Monthly costs in EURO/month, for links of about 150 km (e.g. Ústí n.L. - Liberec) and about 300 km (e.g. Praha - Brno):

| Capacity | Leased, ~150 km | Leased, ~300 km | Leased fibre with own equipment, ~150 km (*) | Leased fibre with own equipment, ~300 km (**) |
|---|---|---|---|---|
| 1 x 2.5G | 7,000 | 8,000 | 5,000 | 7,000 |
| 4 x 2.5G | 14,000 | 23,000 | 8,000 | 11,000 |
| 1 x 10G | 14,000 | 16,000 | 5,000 | 8,000 |
| 4 x 10G | 29,000 | 47,000 | 12,000 | 14,000 |

Own-equipment configurations:
- 1 x 2.5G: (*) 2 x booster 18 dBm; (**) 2 x booster 27 dBm + 2 x preamp + 6 x DCF
- 4 x 2.5G: (*) 2 x booster 24 dBm, DWDM 2.5G; (**) 2 x (booster + in-line + preamp), 6 x DCF, DWDM 2.5G
- 1 x 10G: (*) 2 x booster 21 dBm, 2 x DCF; (**) 2 x (booster 21 dBm + in-line + preamplifier) + 6 x DCF
- 4 x 10G: (*) 2 x booster 24 dBm, 2 x DCF, DWDM 10G; (**) 2 x (booster + in-line + preamp), 6 x DCF, DWDM 10G
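The CESNET monthly figures can be turned into percentage savings of leased fibre with own equipment over a leased wavelength service. This is a sketch: the pairing of leased and own-equipment prices per link type is our reading of the case-study figures, so treat it as an assumption, and `COSTS` and `savings_pct` are hypothetical names.

```python
# Monthly EUR costs: (link type, approx. km) -> (leased service, leased fibre + own equipment)
COSTS = {
    ("1 x 2.5G", 150): (7_000, 5_000),
    ("1 x 2.5G", 300): (8_000, 7_000),
    ("4 x 2.5G", 150): (14_000, 8_000),
    ("4 x 2.5G", 300): (23_000, 11_000),
    ("1 x 10G", 150): (14_000, 5_000),
    ("1 x 10G", 300): (16_000, 8_000),
    ("4 x 10G", 150): (29_000, 12_000),
    ("4 x 10G", 300): (47_000, 14_000),
}


def savings_pct(leased: float, own: float) -> float:
    """Percentage saved by running your own equipment on leased fibre."""
    return 100 * (leased - own) / leased


for (link, km), (leased, own) in COSTS.items():
    print(f"{link:>9} ~{km} km: {savings_pct(leased, own):5.1f}% saved, "
          f"EUR {(leased - own) * 48:,} over 4 years")
```

On these monthly figures alone, the 10G configurations fall in the 50-70% range, while the smaller 2.5G links save less. The 4-year totals simply multiply the monthly difference by 48 and ignore any separate equipment purchase or depreciation, which the case study may account for differently.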

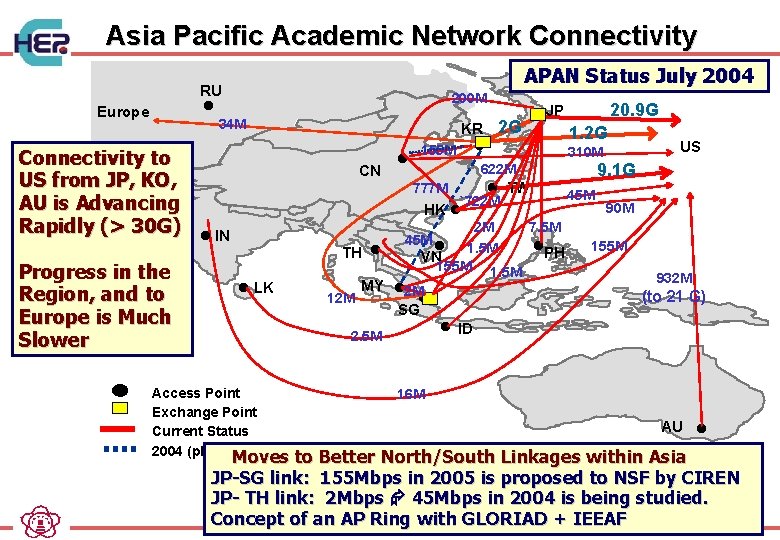

Asia Pacific Academic Network Connectivity: APAN Status July 2004
- Connectivity to the US from JP, KO, AU is advancing rapidly (> 30G)
- Progress in the region, and to Europe, is much slower

[Map: APAN access points and exchange points, current status and 2004 plans, for RU, CN, KR, JP, TW, HK, TH, VN, PH, MY, SG, ID, IN, LK, AU, the US and Europe; link capacities range from 1.5 Mbps to 20.9 Gbps]

- Moves to better North/South linkages within Asia
  - JP-SG link: 155 Mbps in 2005 is proposed to NSF by CIREN
  - JP-TH link: 2 Mbps to 45 Mbps in 2004 is being studied
- Concept of an AP ring with GLORIAD + IEEAF

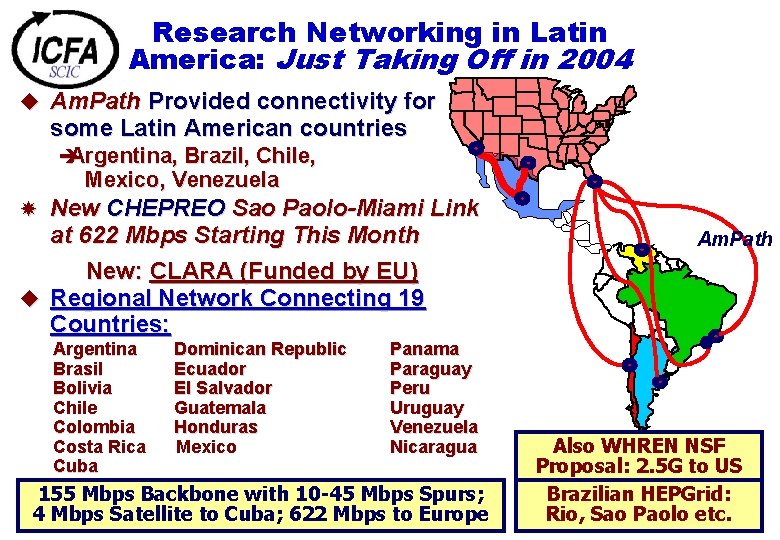

Research Networking in Latin America: Just Taking Off in 2004
- AmPath provided connectivity for some Latin American countries: Argentina, Brazil, Chile, Mexico, Venezuela
- New CHEPREO São Paulo-Miami link at 622 Mbps starting this month
- New: CLARA (funded by the EU)
  - Regional network connecting 19 countries: Argentina, Bolivia, Brazil, Chile, Colombia, Costa Rica, Cuba, Dominican Republic, Ecuador, El Salvador, Guatemala, Honduras, Mexico, Nicaragua, Panama, Paraguay, Peru, Uruguay, Venezuela
  - 155 Mbps backbone with 10-45 Mbps spurs; 4 Mbps satellite to Cuba; 622 Mbps to Europe
- Also the WHREN NSF proposal: 2.5G to the US
- Brazilian HEPGrid: Rio, São Paulo, etc.

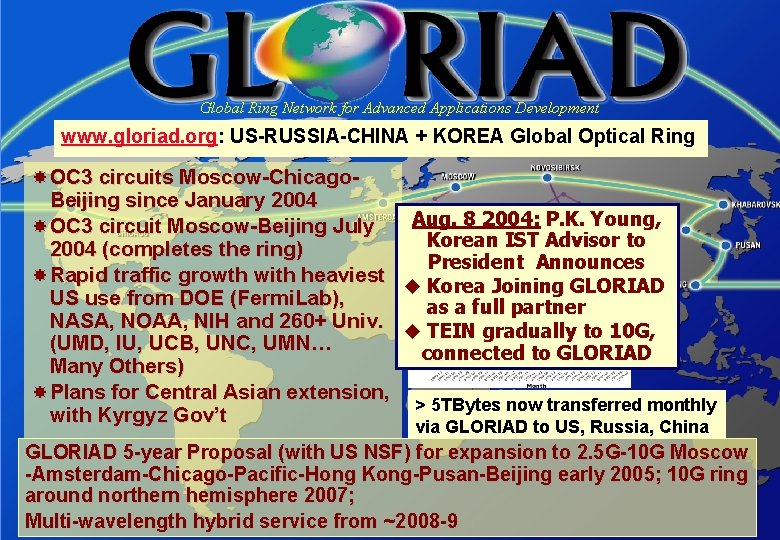

Global Ring Network for Advanced Applications Development (GLORIAD): www.gloriad.org
US-Russia-China + Korea Global Optical Ring
- OC3 circuits Moscow-Chicago-Beijing since January 2004
- OC3 circuit Moscow-Beijing, July 2004 (completes the ring)
- Rapid traffic growth, with the heaviest US use from DOE (FermiLab), NASA, NOAA, NIH and 260+ universities (UMD, IU, UCB, UNC, UMN, … many others)
- Plans for a Central Asian extension, with the Kyrgyz government
- Aug. 8, 2004: P.K. Young, Korean IST Advisor to the President, announces:
  - Korea joining GLORIAD as a full partner
  - TEIN gradually to 10G, connected to GLORIAD
- > 5 TBytes now transferred monthly via GLORIAD to the US, Russia, China
- GLORIAD 5-year proposal (with US NSF) for expansion: 2.5G-10G Moscow-Amsterdam-Chicago-Pacific-Hong Kong-Pusan-Beijing in early 2005; a 10G ring around the northern hemisphere in 2007; multi-wavelength hybrid service from ~2008-9

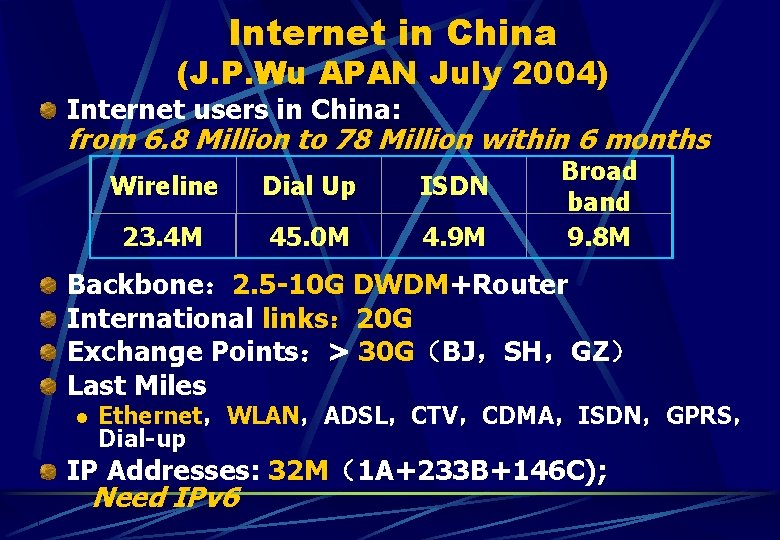

Internet in China (J.P. Wu, APAN, July 2004)
- Internet users in China: from 6.8 million to 78 million within 6 months
- Access (wireline / dial-up / ISDN): 23.4M / 45.0M / 4.9M; broadband: 9.8M
- Backbone: 2.5-10G DWDM + router
- International links: 20G
- Exchange points: > 30G (BJ, SH, GZ)
- Last miles: Ethernet, WLAN, ADSL, CTV, CDMA, ISDN, GPRS, dial-up
- IP addresses: 32M (1 A + 233 B + 146 C); need IPv6
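The "32M (1 A + 233 B + 146 C)" figure counts China's IPv4 holdings in classful block equivalents: a class A block holds 2^24 addresses, a class B 2^16, a class C 2^8. The sketch below checks the total and its share of the 2^32 IPv4 space; the helper name is hypothetical. With tens of millions of users against about 32M addresses, the "need IPv6" conclusion follows directly.

```python
# Addresses per classful IPv4 block (pre-CIDR terminology)
CLASS_SIZE = {"A": 2**24, "B": 2**16, "C": 2**8}


def classful_total(a: int, b: int, c: int) -> int:
    """Total IPv4 addresses in a blocks of class A, b of class B, c of class C."""
    return a * CLASS_SIZE["A"] + b * CLASS_SIZE["B"] + c * CLASS_SIZE["C"]


china = classful_total(1, 233, 146)
share = china / 2**32
print(f"{china:,} addresses = {share:.2%} of the IPv4 space")
```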

AFRICA: NectarNet Initiative (W. Matthews, Georgia Tech), www.nectarnet.org
- Growing need to connect academic researchers, medical researchers & practitioners to many sites in Africa. Examples:
  - CDC & NIH: Global AIDS Project, Dept. of Parasitic Diseases, Nat’l Library of Medicine (Ghana, Nigeria)
  - Gates $50M HIV/AIDS Center in Botswana
  - Monsoon Project, Dakar [cf. East US hurricanes to Africa]
  - US Geological Survey: Global Spatial Data Infrastructure
  - Distance learning: Emory Univ.-Ibadan (Nigeria); Research Channel
- But Africa is hard: 11M sq. miles, 600M people, 54 countries
  - Little telecommunications infrastructure
- Approach: use the SAT-3/WASC cable (South Africa to Portugal), GEANT across Europe, the AMS-NY link across the Atlantic, and peer with Abilene in NYC
  - Cable landings in 8 West African countries and South Africa
  - Pragmatic approach to reach end points: VSAT, ADSL, microwave, etc.
- Note: World Conference on Physics and Sustainable Development, 10/31-11/2/05 in Durban, South Africa; part of the World Year of Physics 2005. Sponsors: UNESCO, ICTP, IUPAP, APS, SAIP

HEP Active in the World Summit on the Information Society 2003-2005
- GOAL: to create an “Information Society”. Common definition adopted (Tokyo Declaration, January 2003): “… One in which highly developed ICT networks, equitable and ubiquitous access to information, appropriate content in accessible formats, and effective communication can help people achieve their potential”
- Kofi Annan challenged the scientific community to help (3/03)
- WSIS I (Geneva 12/03): SIS Forum, CERN/Caltech online stand
- CERN RSIS event
- Visitors at WSIS I:
  - Kofi Annan, UN Sec’y General
  - John H. Marburger, Science Adviser to US President
  - Ion Iliescu, President of Romania, …
- Planning Now Underway for

HEPGRID and Digital Divide Workshop, UERJ, Rio de Janeiro, Feb. 16-20, 2004
Theme: Global Collaborations, Grids and Their Relationship to the Digital Divide
For the past three years the SCIC has focused on understanding and seeking the means of reducing or eliminating the Digital Divide. It proposed to ICFA that these issues, as they affect our field of High Energy Physics, be brought to our community for discussion. This led to ICFA’s approval, in July 2003, of the First Digital Divide and HEP Grid Workshop.
- Review of R&E networks; major Grid projects
- Perspectives on Digital Divide issues by major HEP experiments and regional representatives
- Focus on Digital Divide issues in Latin America; relate to problems in other regions

Tutorials: C++; Grid Technologies; Grid-Enabled Analysis; Networks; Collaborative Systems
More info: http://www.lishep.uerj.br
Sponsors: CLAF, CNPQ, FAPERJ, UERJ
Sessions & tutorials available (with video) on the web

International ICFA Workshop on HEP Networking, Grids and Digital Divide Issues for Global e-Science
Dates: May 23-27, 2005; Venue: Daegu, Korea
Dongchul Son, Center for High Energy Physics, Kyungpook National University
ICFA, Beijing, China, Aug. 2004; approved by ICFA August 20, 2004

International ICFA Workshop on HEP Networking, Grids and Digital Divide Issues for Global e-Science
Workshop goals:
- Review the current status, progress and barriers to effective use of major national, continental and transoceanic networks used by HEP
- Review progress, strengthen opportunities for collaboration, and explore the means to deal with key issues in Grid computing and Grid-enabled data analysis, for high energy physics and other fields of data-intensive science, now and in the future
- Exchange information and ideas, and formulate plans to develop solutions to specific problems related to the Digital Divide in various regions, with a focus on Asia Pacific, as well as Latin America, Russia and Africa
- Continue to advance a broad program of work on reducing or eliminating the Digital Divide, and ensuring global collaboration, as related to all of the above aspects

Center for High Energy Physics

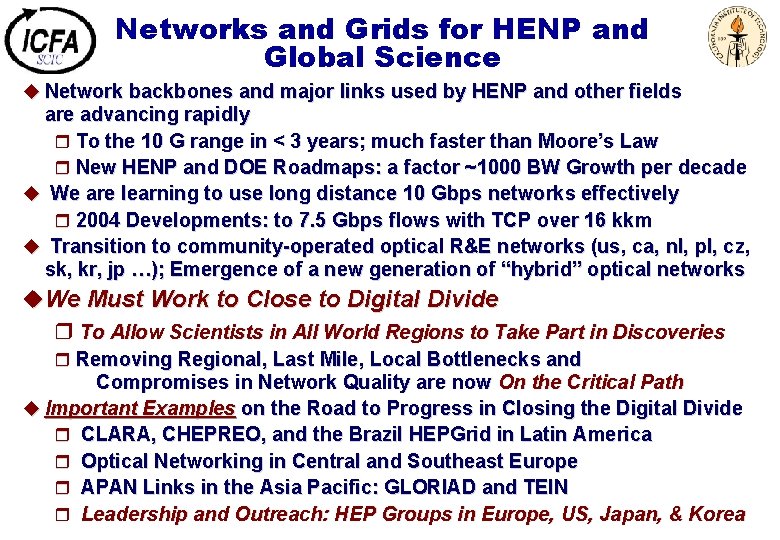

Networks and Grids for HENP and Global Science
- Network backbones and major links used by HENP and other fields are advancing rapidly
  - To the 10G range in < 3 years; much faster than Moore’s Law
  - New HENP and DOE roadmaps: a factor of ~1000 bandwidth growth per decade
- We are learning to use long-distance 10 Gbps networks effectively
  - 2004 developments: up to 7.5 Gbps flows with TCP over 16 kkm
- Transition to community-operated optical R&E networks (us, ca, nl, pl, cz, sk, kr, jp …); emergence of a new generation of “hybrid” optical networks
- We must work to close the Digital Divide
  - To allow scientists in all world regions to take part in discoveries
  - Removing regional, last-mile and local bottlenecks and compromises in network quality is now on the critical path
- Important examples on the road to progress in closing the Digital Divide
  - CLARA, CHEPREO, and the Brazil HEPGrid in Latin America
  - Optical networking in Central and Southeast Europe
  - APAN links in the Asia Pacific: GLORIAD and TEIN
  - Leadership and outreach: HEP groups in Europe, US, Japan, & Korea

Extra Slides Follow

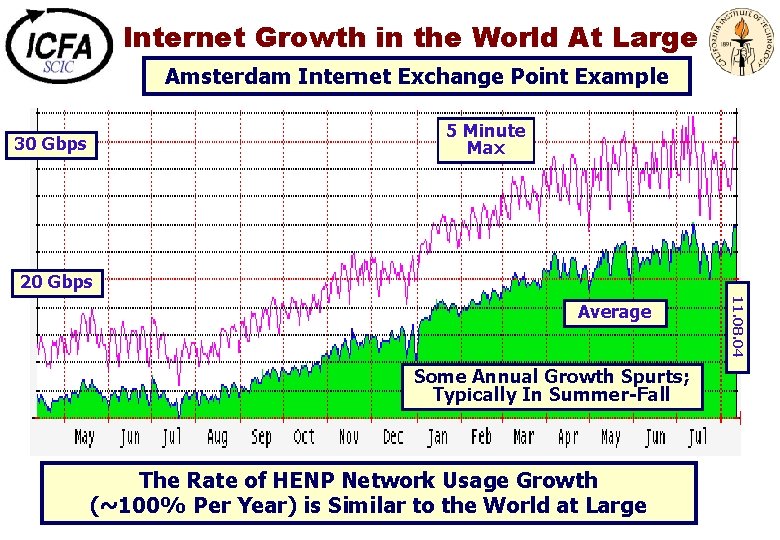

Internet Growth in the World At Large: Amsterdam Internet Exchange Point Example
[Figure: Amsterdam Internet Exchange traffic, 5-minute maximum and average, on a 20-30 Gbps scale, through 11.08.04]
- Some annual growth spurts, typically in summer-fall
- The rate of HENP network usage growth (~100% per year) is similar to the world at large

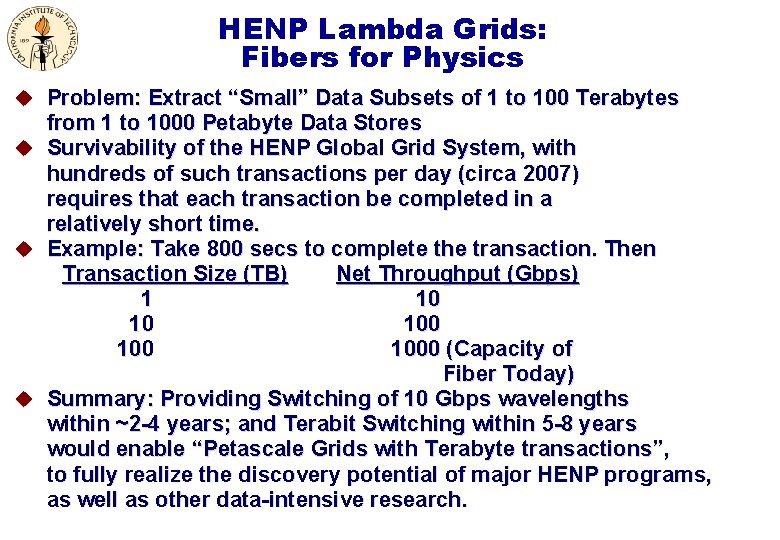

HENP Lambda Grids: Fibers for Physics
- Problem: extract “small” data subsets of 1 to 100 Terabytes from 1 to 1000 Petabyte data stores
- Survivability of the HENP global Grid system, with hundreds of such transactions per day (circa 2007), requires that each transaction be completed in a relatively short time
- Example: take 800 seconds to complete the transaction. Then:

| Transaction Size (TB) | Net Throughput (Gbps) |
|---|---|
| 1 | 10 |
| 10 | 100 |
| 100 | 1000 (capacity of fiber today) |

- Summary: providing switching of 10 Gbps wavelengths within ~2-4 years, and Terabit switching within 5-8 years, would enable “Petascale Grids with Terabyte transactions”, to fully realize the discovery potential of major HENP programs, as well as other data-intensive research
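The throughput column of the 800-second example follows from size x 8 / time. The sketch below reproduces it with decimal terabytes, and adds one illustrative calculation not on the slide: how many 800 s transactions a single path could complete back-to-back in a day. The function and variable names are hypothetical.

```python
def required_gbps(transaction_tb: float, seconds: float = 800) -> float:
    """Throughput needed to finish a transaction of transaction_tb TB in `seconds`."""
    return transaction_tb * 8e12 / seconds / 1e9


for size_tb in (1, 10, 100):
    print(f"{size_tb:>4} TB in 800 s -> {required_gbps(size_tb):,.0f} Gbps")

# Back-to-back 800 s transactions that one path can serve per day
per_path_per_day = 86_400 // 800
print(per_path_per_day, "transactions per path per day")
```

Since one path handles only about 108 such transactions per day when run serially, "hundreds per day" suggests several wavelengths in parallel, which is consistent with the call for switched 10 Gbps and Terabit paths.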

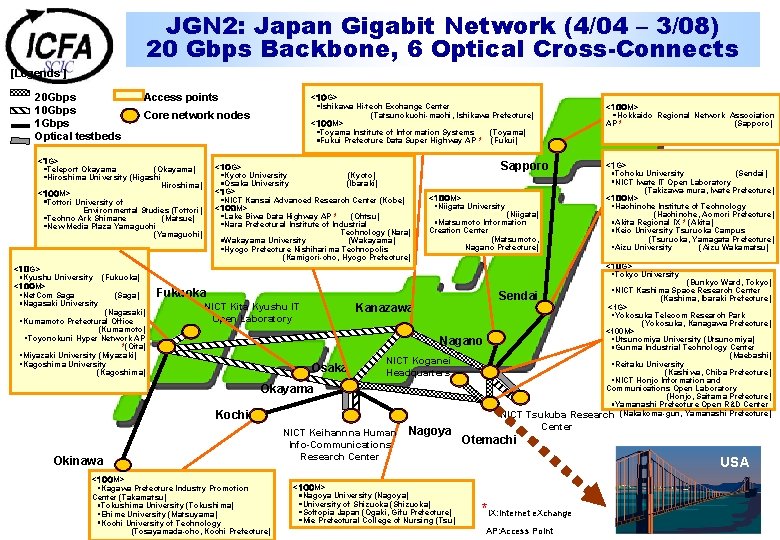

JGN2: Japan Gigabit Network (4/04 – 3/08)
20 Gbps backbone, 6 optical cross-connects
[Map: JGN2 core network nodes, including Sapporo, Sendai, Kanazawa, Nagano, Otemachi (Tokyo), NICT Koganei Headquarters, NICT Tsukuba Research Center, Nagoya, Osaka, NICT Keihanna Human Info-Communications Research Center, Okayama, Kochi, Fukuoka, NICT Kita Kyushu IT Open Laboratory and Okinawa, with a link to the USA; access points at universities, prefectural centers and NICT laboratories from Hokkaido to Kyushu, attached at 10 Gbps (e.g. Tokyo University, Kyoto University, Osaka University, Kyushu University, Ishikawa Hi-tech Exchange Center), 1 Gbps or 100 Mbps. Legend: 20 Gbps and 1 Gbps links, optical testbeds. IX: Internet eXchange; AP: Access Point]

ICFA Standing Committee on Interregional Connectivity (SCIC)
- Created by ICFA in July 1998 in Vancouver
- CHARGE: make recommendations to ICFA concerning the connectivity between the Americas, Asia and Europe
- As part of the process of developing these recommendations, the committee should:
  - Monitor traffic
  - Keep track of technology developments
  - Periodically review forecasts of future bandwidth needs
  - Provide early warning of potential problems
- Representatives: major labs, ECFA, ACFA; North American and Latin American physics communities
- Monitoring, Advanced Technologies, and Digital Divide working groups formed in 2002

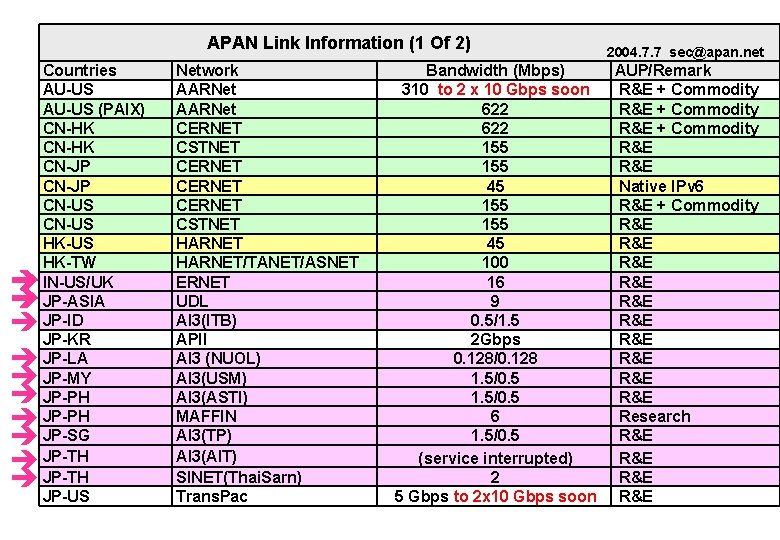

APAN Link Information (1 of 2), 2004.7.7, sec@apan.net
- Country pairs: AU-US (PAIX), CN-HK, CN-JP, CN-US, HK-US, HK-TW, IN-US/UK, JP-ASIA, JP-ID, JP-KR, JP-LA, JP-MY, JP-PH, JP-SG, JP-TH, JP-US
- Networks: AARNet, CERNET, CSTNET, CERNET, CSTNET, HARNET/TANET/ASNET, ERNET, UDL, AI3 (ITB), APII, AI3 (NUOL), AI3 (USM), AI3 (ASTI), MAFFIN, AI3 (TP), AI3 (AIT), SINET (ThaiSarn), TransPac
- Bandwidth (Mbps): 310 (to 2 x 10 Gbps soon); 622; 155; 155; 45; 100; 16; 9; 0.5/1.5; 2 Gbps; 0.128/0.128; 1.5/0.5; 6; 1.5/0.5 (service interrupted); 2; 5 Gbps (to 2 x 10 Gbps soon)
- AUP/Remark: R&E + Commodity; R&E; Native IPv6; R&E + Commodity; R&E; R&E; R&E; Research; R&E; R&E

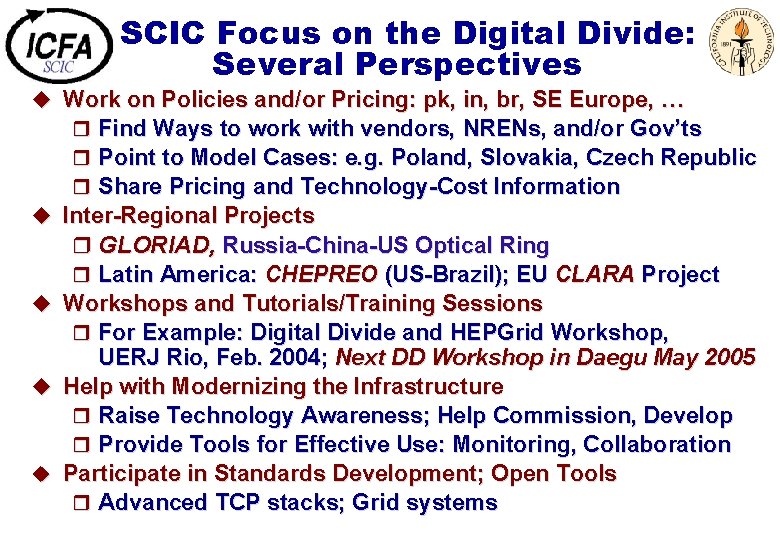

SCIC Focus on the Digital Divide: Several Perspectives
- Work on policies and/or pricing: pk, in, br, SE Europe, …
  - Find ways to work with vendors, NRENs, and/or governments
  - Point to model cases: e.g. Poland, Slovakia, Czech Republic
  - Share pricing and technology-cost information
- Inter-regional projects
  - GLORIAD, Russia-China-US optical ring
  - Latin America: CHEPREO (US-Brazil); EU CLARA project
- Workshops and tutorials/training sessions
  - For example: Digital Divide and HEPGrid Workshop, UERJ Rio, Feb. 2004; next DD workshop in Daegu, May 2005
- Help with modernizing the infrastructure
  - Raise technology awareness; help commission, develop
  - Provide tools for effective use: monitoring, collaboration
- Participate in standards development; open tools
  - Advanced TCP stacks; Grid systems

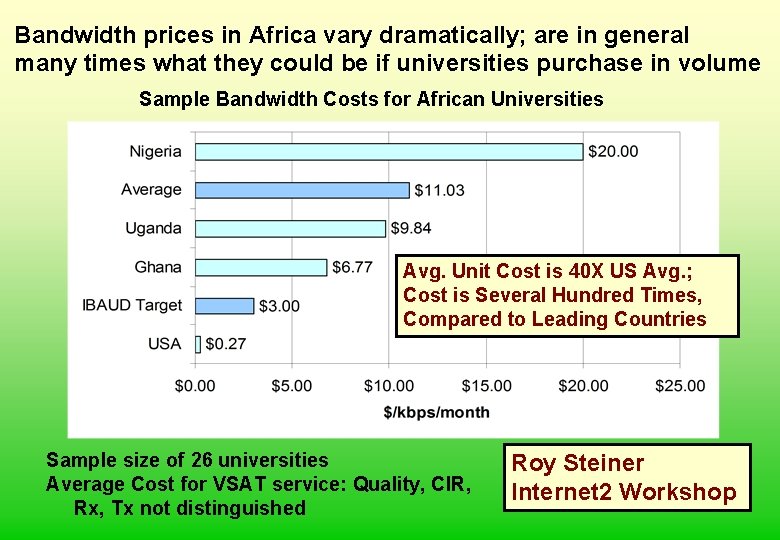

Bandwidth prices in Africa vary dramatically, and are in general many times what they could be if universities purchased in volume
Sample Bandwidth Costs for African Universities (Roy Steiner, Internet2 Workshop)
- Average unit cost is 40X the US average; cost is several hundred times that in leading countries
- Sample size: 26 universities
- Average cost for VSAT service; quality, CIR, Rx, Tx not distinguished

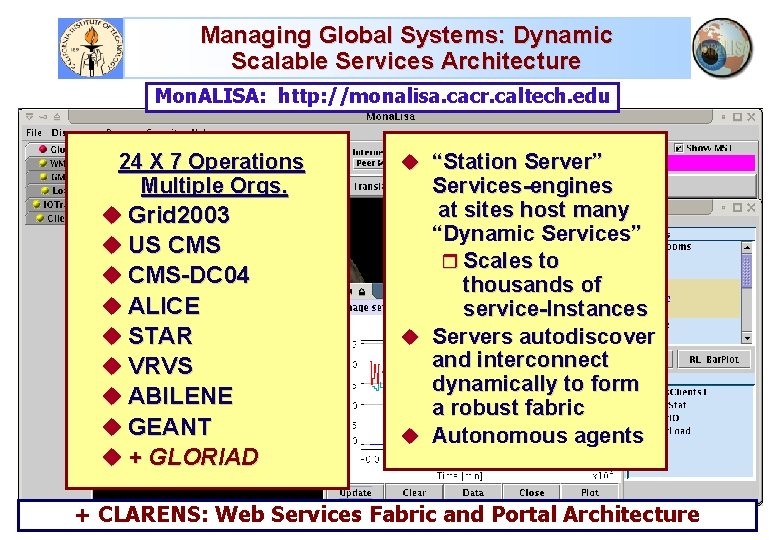

Managing Global Systems: Dynamic Scalable Services Architecture
MonALISA: http://monalisa.cacr.caltech.edu
- 24 X 7 operations, multiple organizations: Grid2003, US CMS, CMS-DC04, ALICE, STAR, VRVS, Abilene, GEANT, + GLORIAD
- “Station Server” services-engines at sites host many “Dynamic Services”
  - Scales to thousands of service instances
- Servers autodiscover and interconnect dynamically to form a robust fabric
- Autonomous agents + CLARENS: Web services fabric and portal architecture

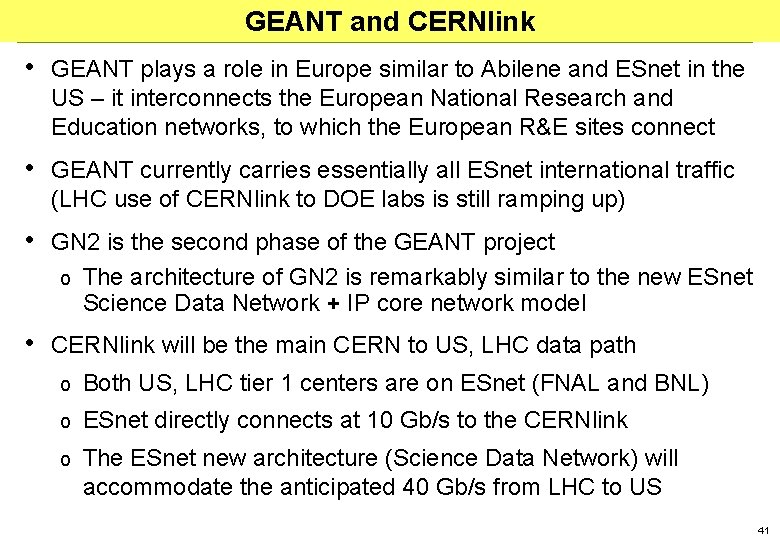

GEANT and CERNlink
- GEANT plays a role in Europe similar to Abilene and ESnet in the US: it interconnects the European National Research and Education Networks, to which the European R&E sites connect
- GEANT currently carries essentially all ESnet international traffic (LHC use of CERNlink to DOE labs is still ramping up)
- GN2 is the second phase of the GEANT project
  - The architecture of GN2 is remarkably similar to the new ESnet Science Data Network + IP core network model
- CERNlink will be the main CERN-to-US LHC data path
  - Both US LHC Tier 1 centers (FNAL and BNL) are on ESnet
  - ESnet connects directly to CERNlink at 10 Gb/s
  - The new ESnet architecture (Science Data Network) will accommodate the anticipated 40 Gb/s from the LHC to the US

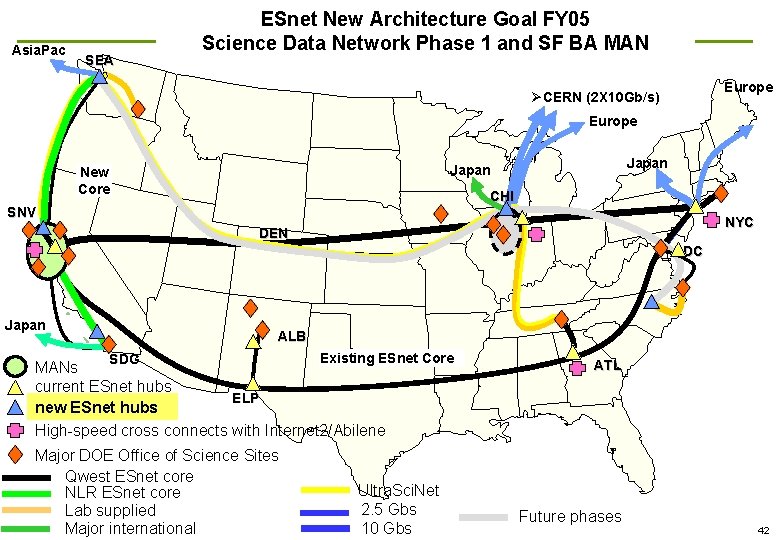

ESnet New Architecture Goal FY05: Science Data Network Phase 1 and SF Bay Area MAN
[Map: new ESnet core and existing ESnet core, with current and new ESnet hubs (SEA, SNV, SDG, ELP, DEN, CHI, ATL, DC, NYC, ALB), MANs, Qwest and NLR ESnet cores, UltraSciNet, high-speed cross-connects with Internet2/Abilene, major DOE Office of Science sites, lab-supplied links, and major international links to Asia-Pacific, Japan and Europe, including CERN (2 X 10 Gb/s); link classes 2.5 Gb/s and 10 Gb/s, plus future phases]

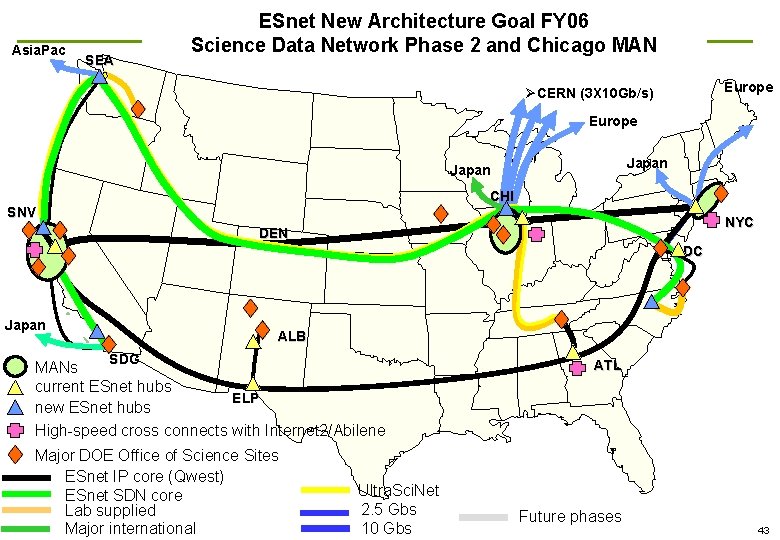

ESnet New Architecture Goal FY06: Science Data Network Phase 2 and Chicago MAN
[Map: ESnet IP core (Qwest) and ESnet SDN core, with current and new ESnet hubs (SEA, SNV, SDG, ELP, DEN, CHI, ATL, DC, NYC, ALB), MANs, UltraSciNet, high-speed cross-connects with Internet2/Abilene, major DOE Office of Science sites, lab-supplied links, and major international links to Asia-Pacific, Japan and Europe, including CERN (3 X 10 Gb/s); link classes 2.5 Gb/s and 10 Gb/s, plus future phases]

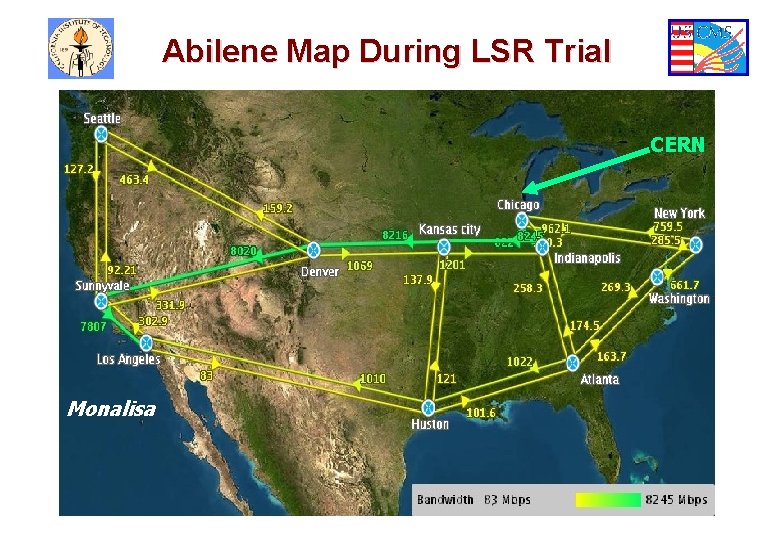

Abilene Map During LSR Trial (CERN; MonALISA view)

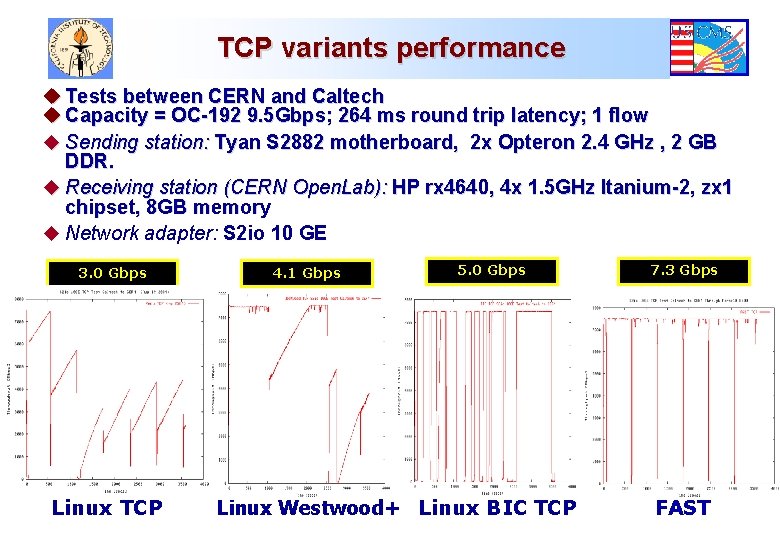

TCP Variants Performance
- Tests between CERN and Caltech
- Capacity = OC-192 (9.5 Gbps); 264 ms round-trip latency; 1 flow
- Sending station: Tyan S2882 motherboard, 2 x Opteron 2.4 GHz, 2 GB DDR
- Receiving station (CERN OpenLab): HP rx4640, 4 x 1.5 GHz Itanium-2, zx1 chipset, 8 GB memory
- Network adapter: S2io 10 GbE

| TCP variant | Throughput |
|---|---|
| Linux TCP | 3.0 Gbps |
| Linux Westwood+ | 4.1 Gbps |
| Linux BIC TCP | 5.0 Gbps |
| FAST | 7.3 Gbps |
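Why standard TCP struggles on this path can be seen from the bandwidth-delay product (BDP) of a 9.5 Gbps, 264 ms link, and from how long idealized Reno-style AIMD takes to regrow its window after a single loss (halve the window, then add one packet per RTT). This is a textbook simplification (no slow start, one isolated loss, no delayed ACKs), not a model of the stacks tested above; the function names and the 1500/9000-byte packet sizes are assumptions for illustration.

```python
def bdp_bytes(capacity_gbps: float, rtt_ms: float) -> float:
    """Bandwidth-delay product: bytes in flight needed to fill the pipe."""
    return capacity_gbps * 1e9 * (rtt_ms / 1e3) / 8


def reno_recovery_s(capacity_gbps: float, rtt_ms: float,
                    packet_bytes: int = 1500) -> float:
    """Idealized AIMD: after one loss cwnd halves, then regains 1 packet per RTT."""
    window_pkts = bdp_bytes(capacity_gbps, rtt_ms) / packet_bytes
    return (window_pkts / 2) * (rtt_ms / 1e3)


bdp = bdp_bytes(9.5, 264)
print(f"BDP = {bdp / 1e6:.1f} MB")
for size in (1500, 9000):
    hours = reno_recovery_s(9.5, 264, size) / 3600
    print(f"{size}-byte packets: ~{hours:.1f} h to recover from a single loss")
```

About 313 MB must be in flight to fill the path, and in this model a single loss costs hours of reduced throughput, which helps explain why variants with more aggressive or delay-based window control, such as BIC and FAST, do better here.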

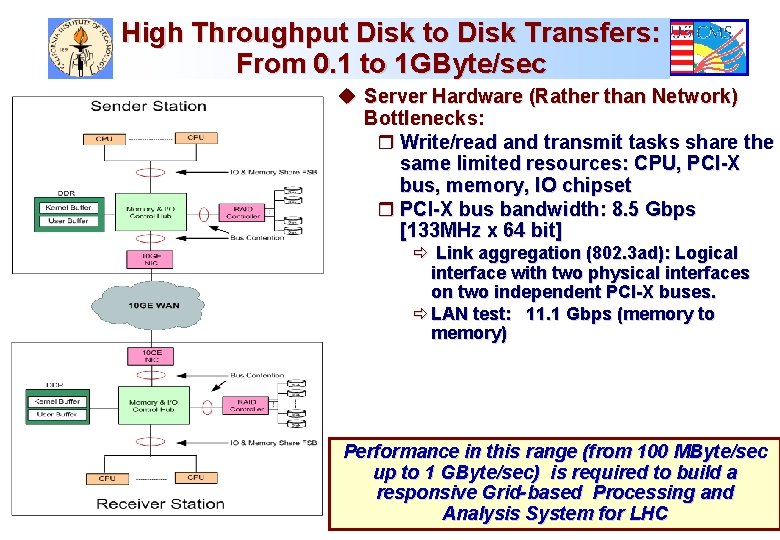

High Throughput Disk-to-Disk Transfers: From 0.1 to 1 GByte/sec
- Server hardware (rather than network) bottlenecks:
  - Write/read and transmit tasks share the same limited resources: CPU, PCI-X bus, memory, I/O chipset
  - PCI-X bus bandwidth: 8.5 Gbps [133 MHz x 64 bit]
- Link aggregation (802.3ad): a logical interface with two physical interfaces on two independent PCI-X buses
  - LAN test: 11.1 Gbps (memory to memory)
- Performance in this range (from 100 MByte/sec up to 1 GByte/sec) is required to build a responsive Grid-based processing and analysis system for the LHC
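The 8.5 Gbps PCI-X figure is the bus clock times its data width, and bonding NICs on two independent buses doubles that ceiling. The sketch below reproduces the arithmetic. These are theoretical peaks that ignore arbitration and protocol overhead, and `bus_gbps` is a hypothetical helper.

```python
def bus_gbps(clock_mhz: float, width_bits: int) -> float:
    """Theoretical peak of a parallel bus: clock rate x data width."""
    return clock_mhz * 1e6 * width_bits / 1e9


pcix = bus_gbps(133, 64)      # one 133 MHz, 64-bit PCI-X bus
two_buses = 2 * pcix          # 802.3ad bond with one NIC per independent bus
one_gbyte_s = 8.0             # 1 GByte/s expressed in Gbps
print(f"PCI-X peak {pcix:.1f} Gbps; two buses {two_buses:.1f} Gbps; "
      f"1 GByte/s uses {one_gbyte_s / pcix:.0%} of one bus")
```

A 1 GByte/s disk-to-disk target would need about 94% of one bus's theoretical peak, so it is plausible that the single bus, rather than the network, is the limit; the measured 11.1 Gbps over two buses is consistent with that picture.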

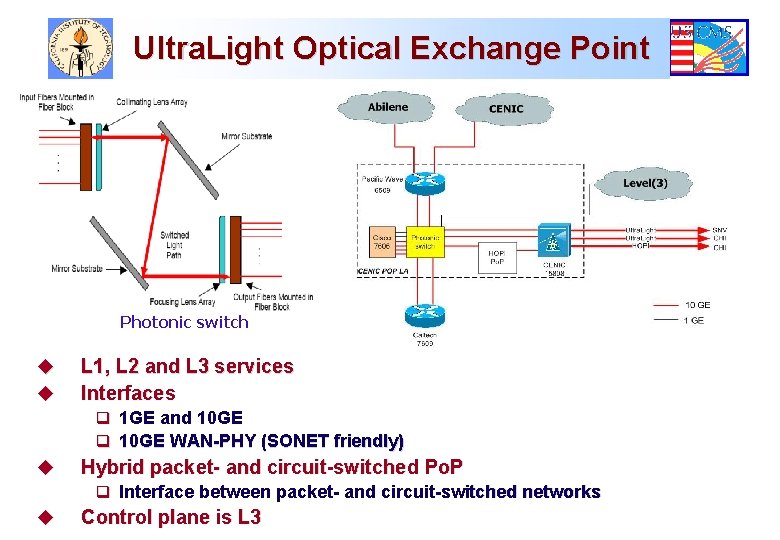

UltraLight Optical Exchange Point
- Photonic switch
- L1, L2 and L3 services
- Interfaces:
  - 1 GE and 10 GE WAN-PHY (SONET friendly)
- Hybrid packet- and circuit-switched PoP
  - Interface between packet- and circuit-switched networks
- Control plane is L3

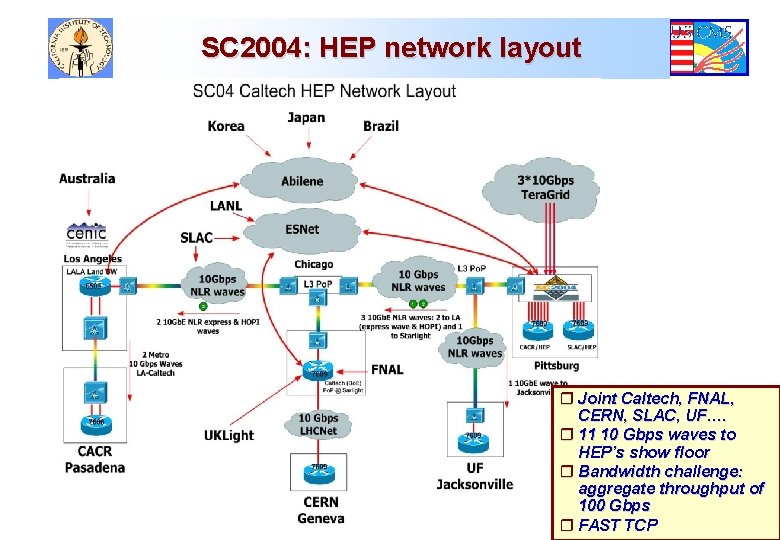

SC2004: HEP Network Layout
- Joint Caltech, FNAL, CERN, SLAC, UF, …
- 11 10 Gbps waves to HEP’s show floor
- Bandwidth challenge: aggregate throughput of 100 Gbps
- FAST TCP
