Network Topology and Geometry

- Slides: 21

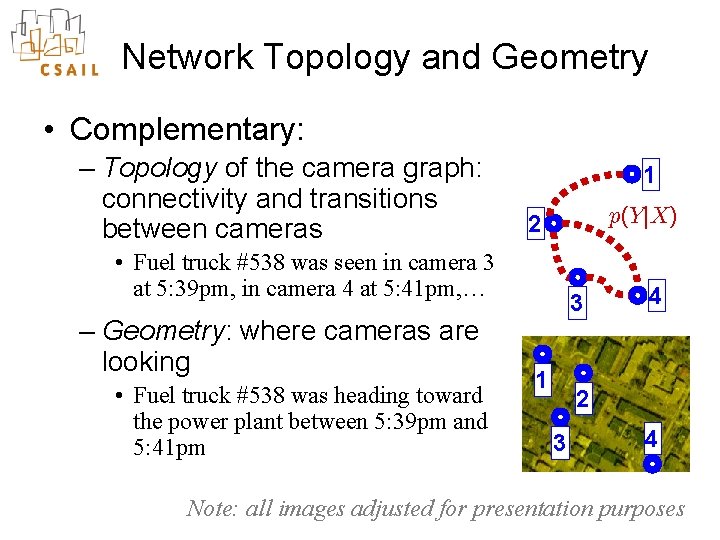

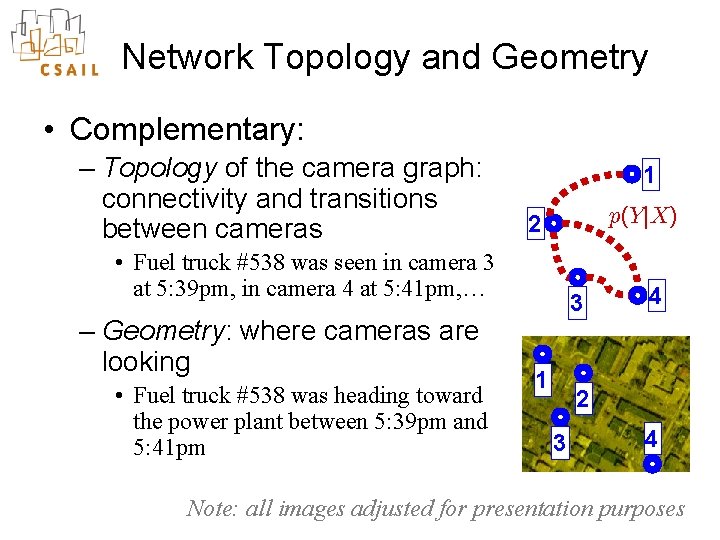

Network Topology and Geometry

- Complementary:
  - Topology of the camera graph: connectivity and transitions between cameras, p(Y|X)
    - Fuel truck #538 was seen in camera 3 at 5:39 pm, in camera 4 at 5:41 pm, …
  - Geometry: where cameras are looking
    - Fuel truck #538 was heading toward the power plant between 5:39 pm and 5:41 pm

Note: all images adjusted for presentation purposes
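The transition model p(Y|X) on the camera graph can be illustrated with a simple counting estimate. This is only a minimal sketch under a simplifying assumption that the talk itself does not make: vehicle identities are taken as known here, whereas the talk works without given correspondence. The function name `transition_probabilities` and the sample sightings are hypothetical.

```python
from collections import Counter, defaultdict


def transition_probabilities(sightings):
    """Estimate p(Y | X) from time-ordered (vehicle_id, camera_id) sightings.

    ASSUMPTION: vehicle identities are known, which the talk does not assume.
    """
    counts = defaultdict(Counter)
    last_camera = {}
    for vehicle, camera in sightings:
        if vehicle in last_camera:
            # One observed transition: previous camera X -> current camera Y.
            counts[last_camera[vehicle]][camera] += 1
        last_camera[vehicle] = camera
    # Normalize each row of counts into a conditional distribution.
    return {
        x: {y: n / sum(row.values()) for y, n in row.items()}
        for x, row in counts.items()
    }


# Hypothetical sightings, already sorted by time.
sightings = [
    ("truck538", 3),
    ("van12", 3),
    ("truck538", 4),
    ("van12", 1),
]
probs = transition_probabilities(sightings)
print(probs)
```

With these toy sightings, camera 3 is followed once by camera 4 and once by camera 1, so each transition out of camera 3 gets probability 0.5.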

Motivating Scenario

- Large network of cameras
  - i.e. hundreds or thousands
  - Location unknown, e.g.
    - Existing installations
    - Very rapid physical installation requirements
- Regular traffic instrumented with GPS receivers (patrols, service vehicles, etc.)
- …need to know camera locations

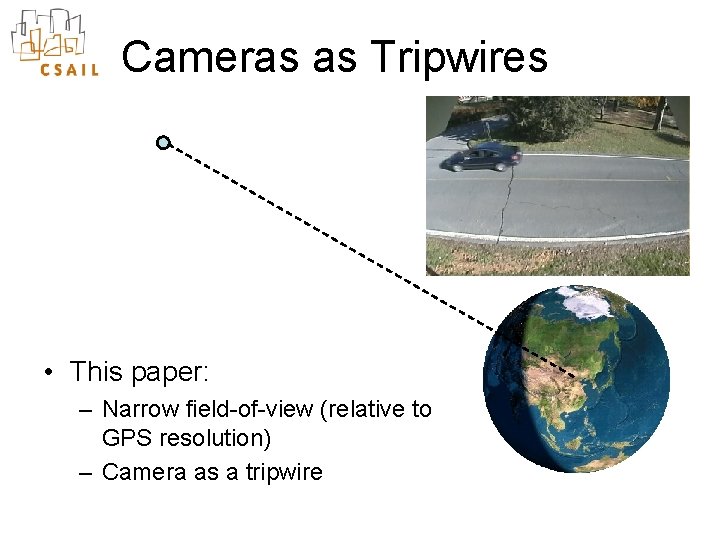

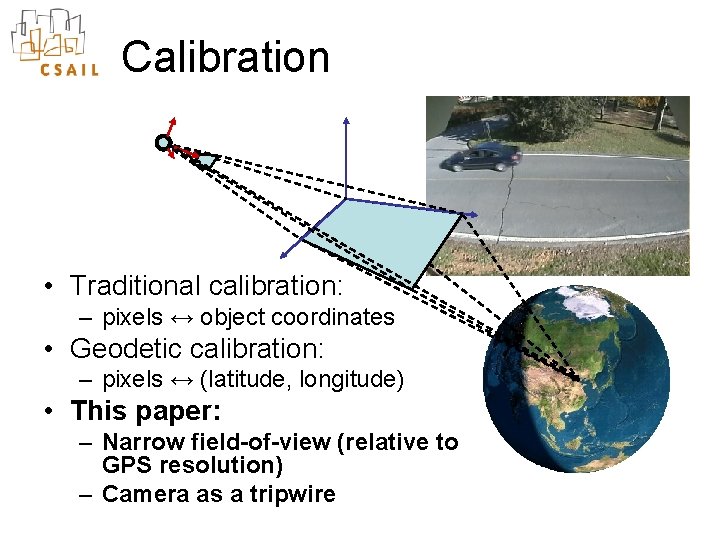

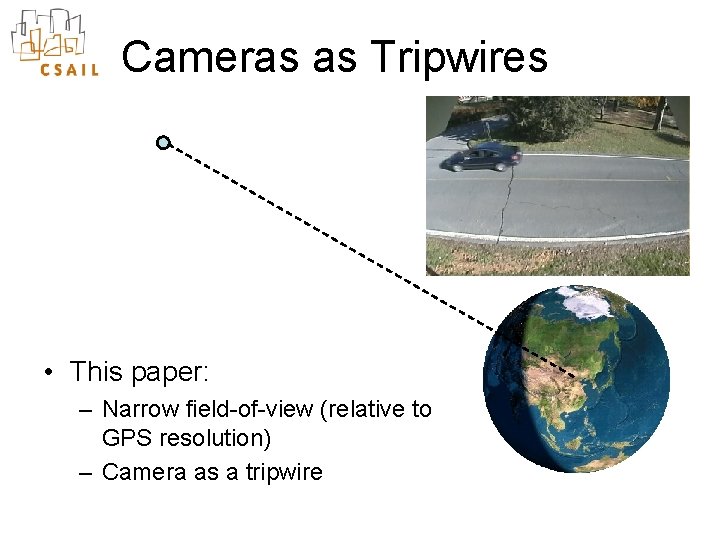

Cameras as Tripwires

- This paper:
  - Narrow field-of-view (relative to GPS resolution)
  - Camera as a tripwire

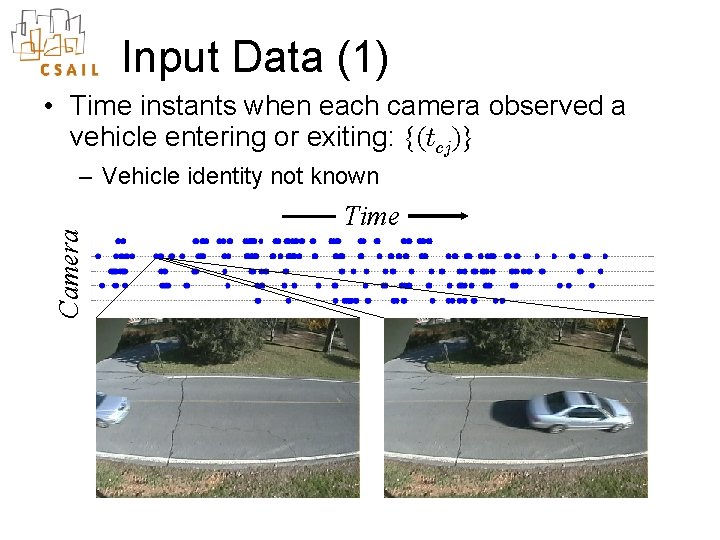

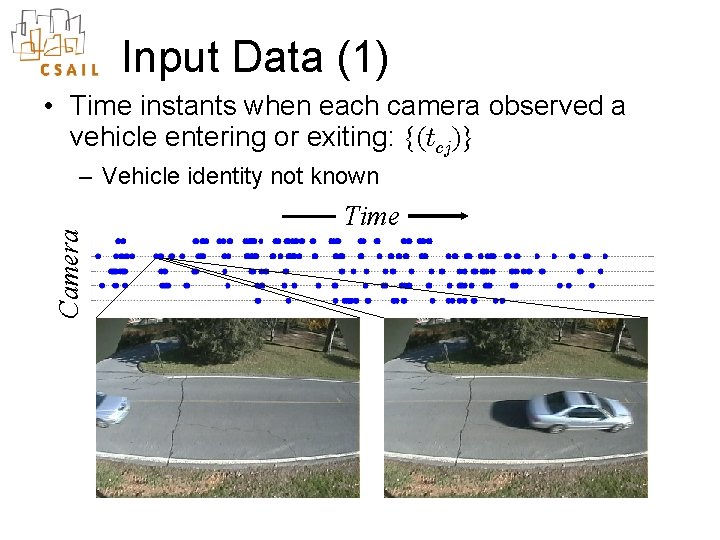

Input Data (1)

- Time instants when each camera observed a vehicle entering or exiting: $\{(t^c_j)\}$
  - Vehicle identity not known

Figure axes: camera vs. time

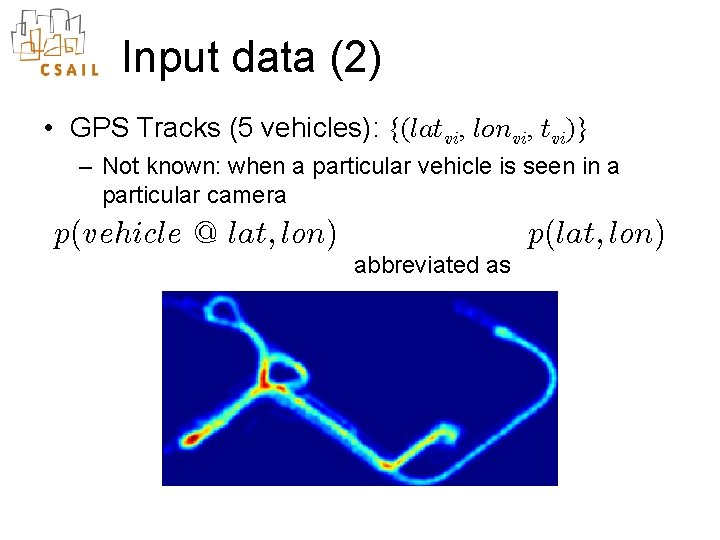

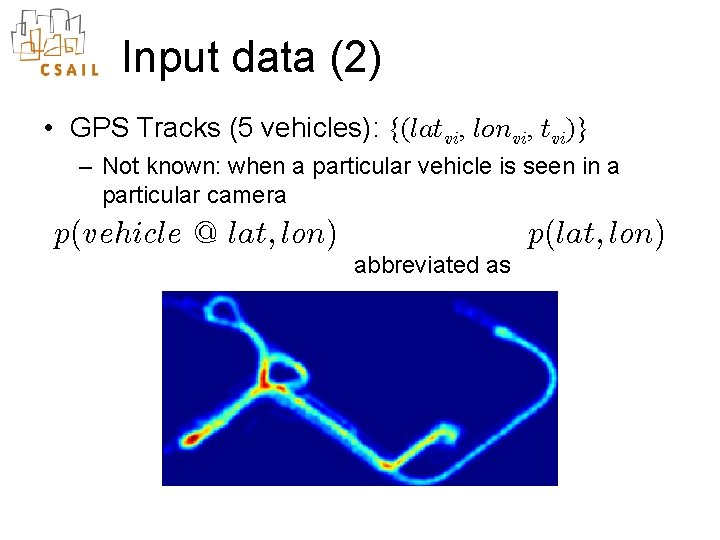

Input Data (2)

- GPS tracks (5 vehicles): $\{(\mathrm{lat}^v_i, \mathrm{lon}^v_i, t^v_i)\}$
  - Not known: when a particular vehicle is seen in a particular camera

$p(\text{vehicle @ lat}, \mathrm{lon})$ is abbreviated as $p(\mathrm{lat}, \mathrm{lon})$
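To relate the two inputs, one needs a vehicle's position at a camera's trip time, which generally falls between GPS samples. The following is a minimal sketch assuming linear interpolation between consecutive samples of a time-sorted track; the function name `position_at` and the sample coordinates are hypothetical and not taken from the slides.

```python
from bisect import bisect_right


def position_at(track, t):
    """Interpolate (lat, lon) at time t from a time-sorted list of (lat, lon, t).

    Returns None when t lies outside the time span covered by the track.
    ASSUMPTION: straight-line motion between consecutive GPS samples.
    """
    times = [sample[2] for sample in track]
    if not track or t < times[0] or t > times[-1]:
        return None
    i = bisect_right(times, t)  # index of the first sample strictly after t
    if i == len(track):
        # t equals the final timestamp: return the last sample as is.
        return track[-1][0], track[-1][1]
    lat0, lon0, t0 = track[i - 1]
    lat1, lon1, t1 = track[i]
    w = (t - t0) / (t1 - t0)  # t1 > t >= t0, so the denominator is positive
    return lat0 + w * (lat1 - lat0), lon0 + w * (lon1 - lon0)


# Hypothetical two-sample track: the vehicle moves west over 10 seconds.
track = [(42.0, -71.0, 0.0), (42.0, -71.002, 10.0)]
midpoint = position_at(track, 5.0)
print(midpoint)
```

Calling `position_at` once per vehicle for each trip time in $\{(t^c_j)\}$ yields the candidate locations that a camera's trip events could correspond to.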

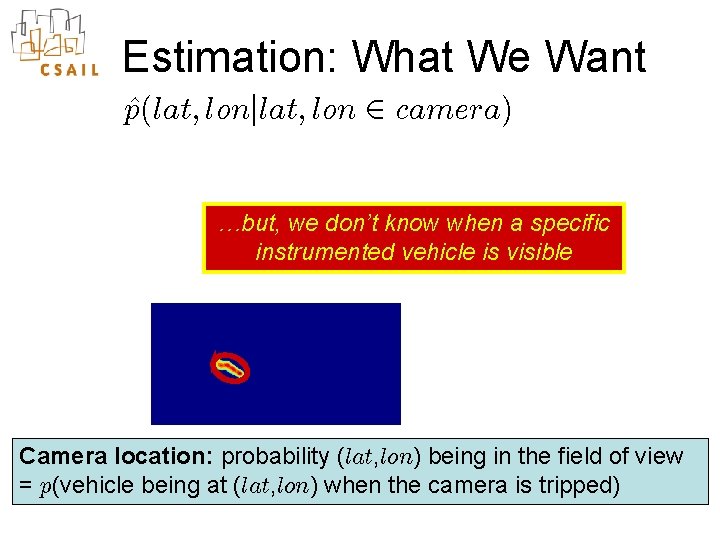

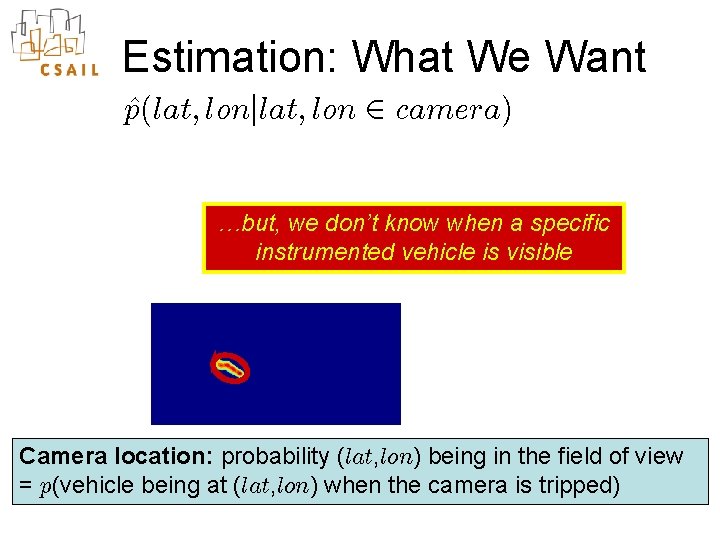

Estimation: What We Want

$$\hat{p}(\mathrm{lat}, \mathrm{lon} \mid \mathrm{lat}, \mathrm{lon} \in \mathrm{camera})$$

Camera location: probability of (lat, lon) being in the field of view = p(vehicle being at (lat, lon) when the camera is tripped)

…but we don't know when a specific instrumented vehicle is visible

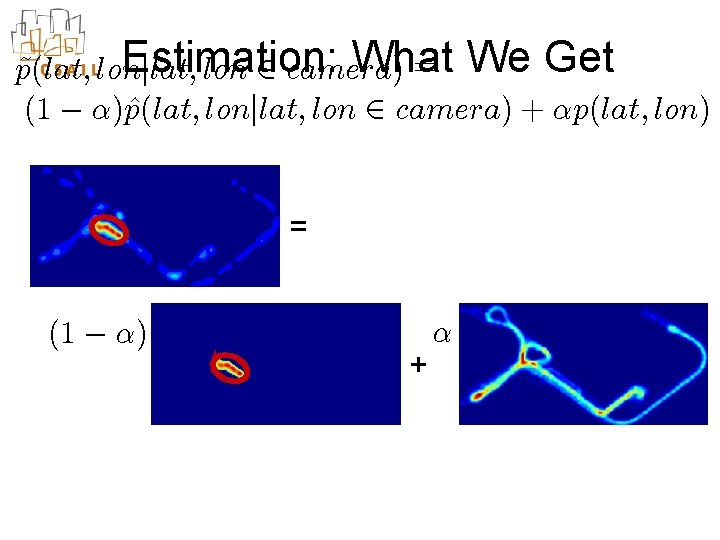

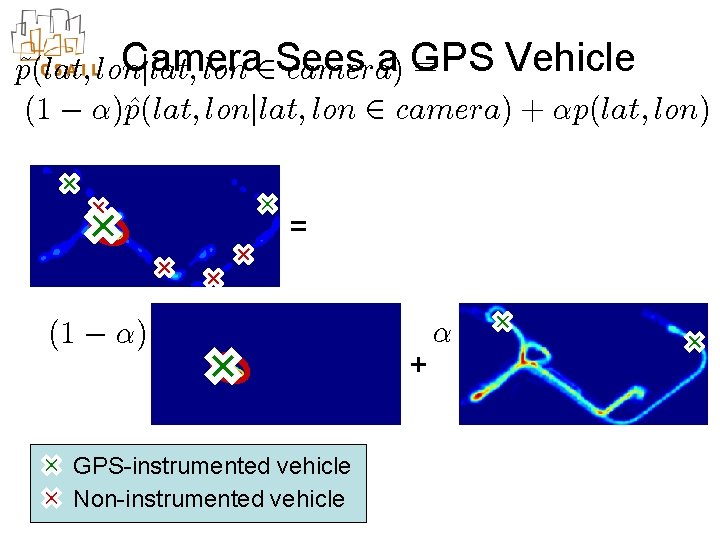

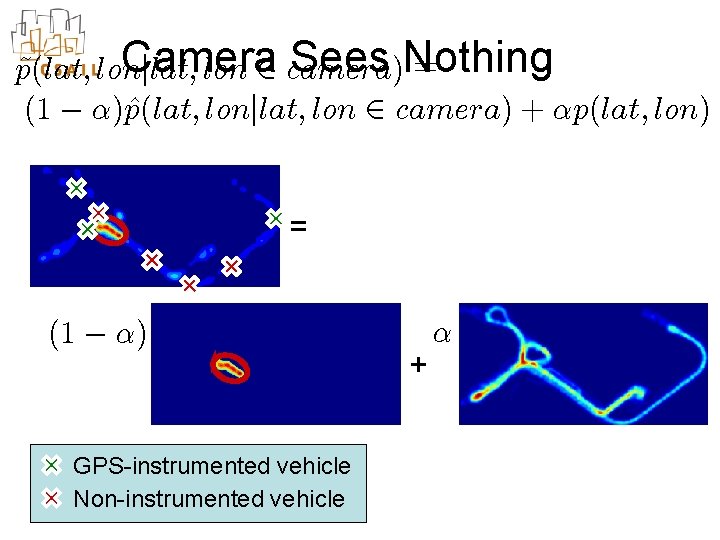

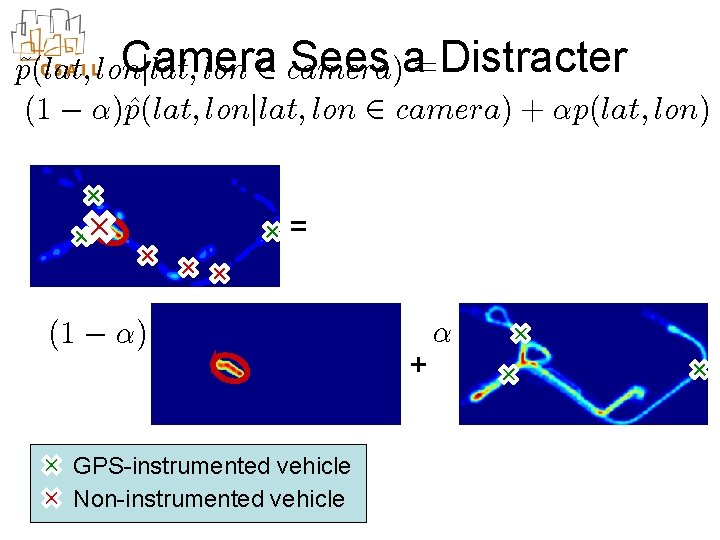

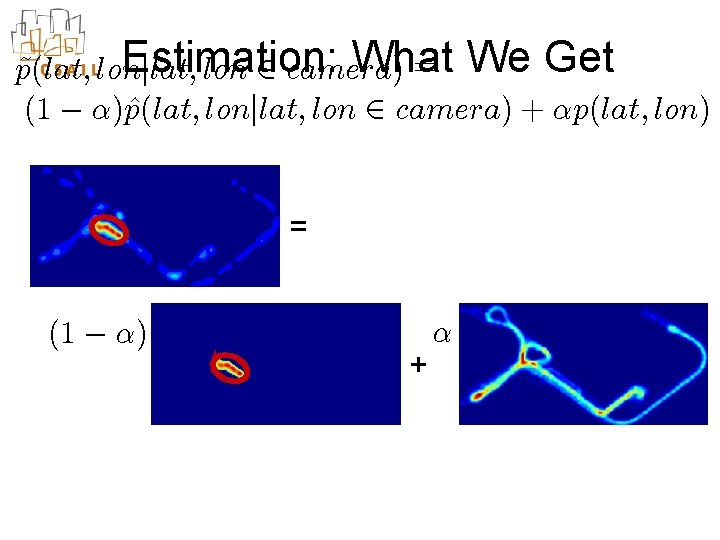

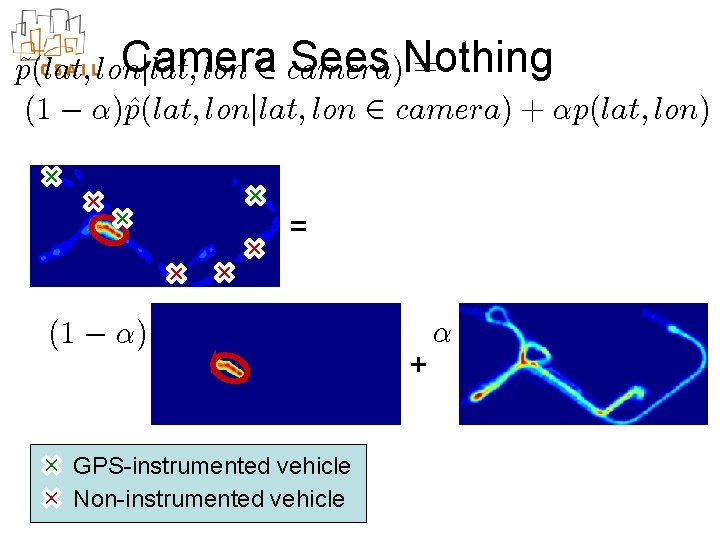

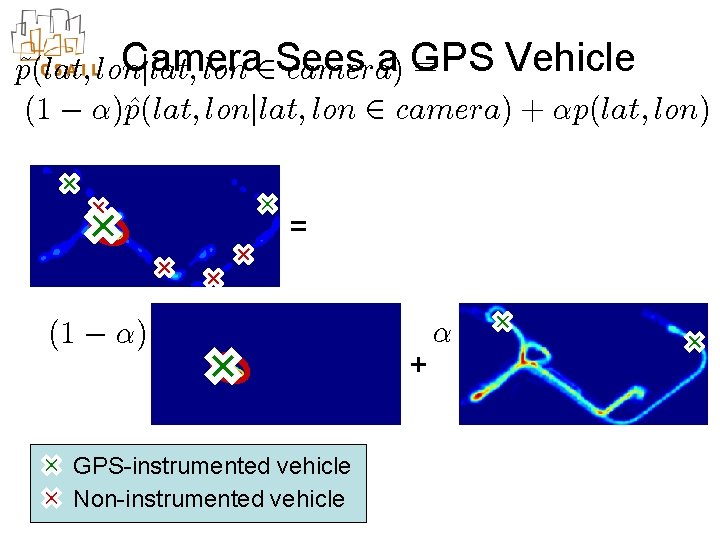

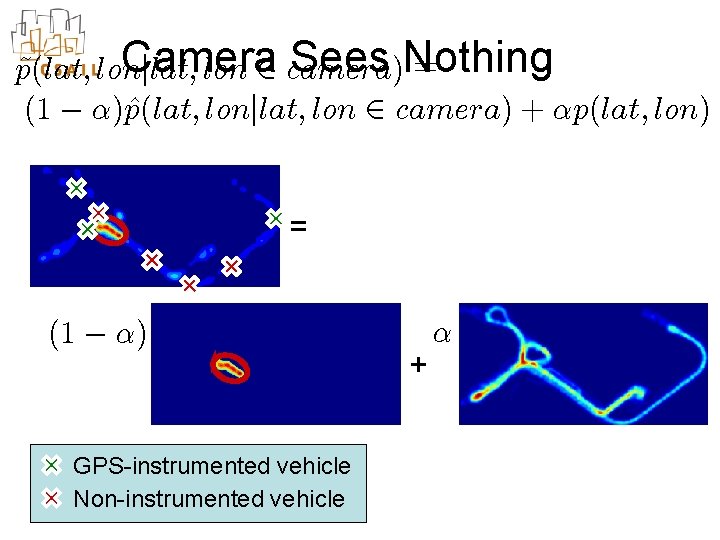

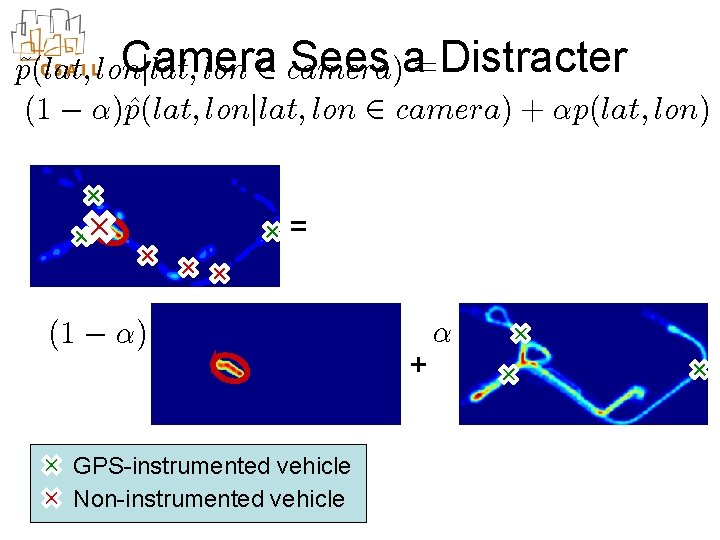

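Estimation: What We Get

$$\tilde{p}(\mathrm{lat}, \mathrm{lon} \mid \mathrm{lat}, \mathrm{lon} \in \mathrm{camera}) = (1 - \alpha)\,\hat{p}(\mathrm{lat}, \mathrm{lon} \mid \mathrm{lat}, \mathrm{lon} \in \mathrm{camera}) + \alpha\, p(\mathrm{lat}, \mathrm{lon})$$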
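One plausible reading of this mixture is as a voting scheme: since it is not known which vehicle tripped the camera, every instrumented vehicle casts a vote at its current location each time the camera trips. Votes from a vehicle that is actually in view pile up in the field of view (the $\hat{p}$ term), while all other votes follow the general traffic distribution (the $\alpha\,p$ term). The sketch below illustrates that reading only; the grid cells, the track layout, and the name `vote_map` are assumptions made for the example, not the authors' implementation.

```python
from collections import Counter


def vote_map(trip_times, tracks):
    """Accumulate one vote per vehicle per trip time and normalize the result.

    tracks maps vehicle -> {time: grid_cell}. ASSUMPTION: positions are
    already discretized into grid cells and sampled at the trip times.
    """
    votes = Counter()
    for t in trip_times:
        for track in tracks.values():
            cell = track.get(t)
            if cell is not None:
                votes[cell] += 1
    total = sum(votes.values())
    return {cell: n / total for cell, n in votes.items()} if total else {}


# Hypothetical data: vehicle A passes cell (2, 2) at both trip times,
# vehicle B is elsewhere and only contributes background votes.
tracks = {
    "A": {0: (2, 2), 1: (3, 2), 2: (2, 2), 3: (1, 2)},
    "B": {0: (0, 0), 1: (0, 1), 2: (5, 5), 3: (0, 2)},
}
trip_times = [0, 2]
p_tilde = vote_map(trip_times, tracks)
peak = max(p_tilde, key=p_tilde.get)
print(peak, p_tilde[peak])
```

In this toy case half of the votes land in cell (2, 2) and the rest are spread over cells visited by the unrelated vehicle, which is the qualitative shape the mixture describes.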

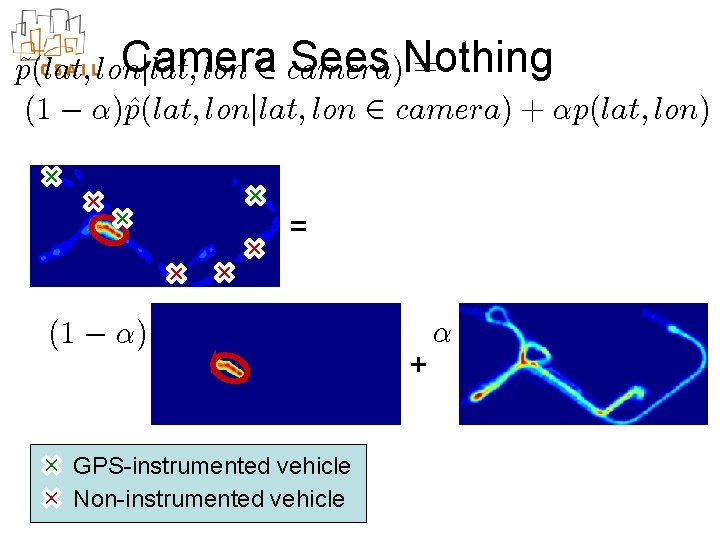

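Camera Sees Nothing

$$\tilde{p}(\mathrm{lat}, \mathrm{lon} \mid \mathrm{lat}, \mathrm{lon} \in \mathrm{camera}) = (1 - \alpha)\,\hat{p}(\mathrm{lat}, \mathrm{lon} \mid \mathrm{lat}, \mathrm{lon} \in \mathrm{camera}) + \alpha\, p(\mathrm{lat}, \mathrm{lon})$$

Legend: GPS-instrumented vehicle, non-instrumented vehicle

Camera Sees a GPS Vehicle

$$\tilde{p}(\mathrm{lat}, \mathrm{lon} \mid \mathrm{lat}, \mathrm{lon} \in \mathrm{camera}) = (1 - \alpha)\,\hat{p}(\mathrm{lat}, \mathrm{lon} \mid \mathrm{lat}, \mathrm{lon} \in \mathrm{camera}) + \alpha\, p(\mathrm{lat}, \mathrm{lon})$$

Legend: GPS-instrumented vehicle, non-instrumented vehicle

Camera Sees Nothing

$$\tilde{p}(\mathrm{lat}, \mathrm{lon} \mid \mathrm{lat}, \mathrm{lon} \in \mathrm{camera}) = (1 - \alpha)\,\hat{p}(\mathrm{lat}, \mathrm{lon} \mid \mathrm{lat}, \mathrm{lon} \in \mathrm{camera}) + \alpha\, p(\mathrm{lat}, \mathrm{lon})$$

Legend: GPS-instrumented vehicle, non-instrumented vehicle

Camera Sees a Distracter

$$\tilde{p}(\mathrm{lat}, \mathrm{lon} \mid \mathrm{lat}, \mathrm{lon} \in \mathrm{camera}) = (1 - \alpha)\,\hat{p}(\mathrm{lat}, \mathrm{lon} \mid \mathrm{lat}, \mathrm{lon} \in \mathrm{camera}) + \alpha\, p(\mathrm{lat}, \mathrm{lon})$$

Legend: GPS-instrumented vehicle, non-instrumented vehicle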

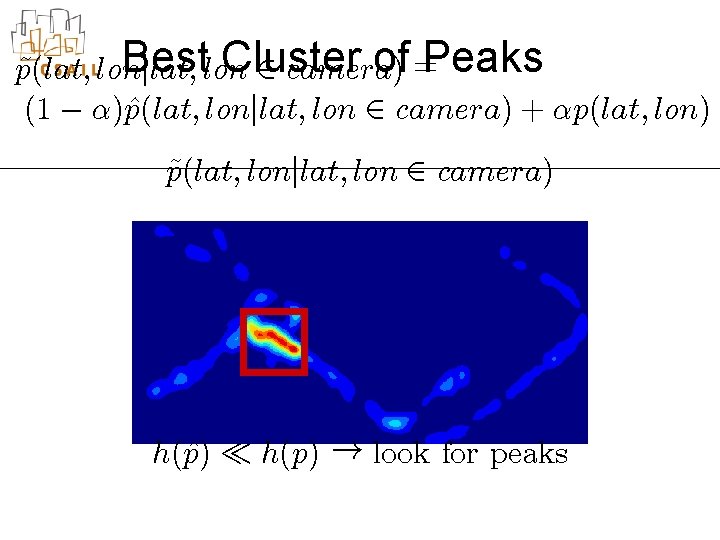

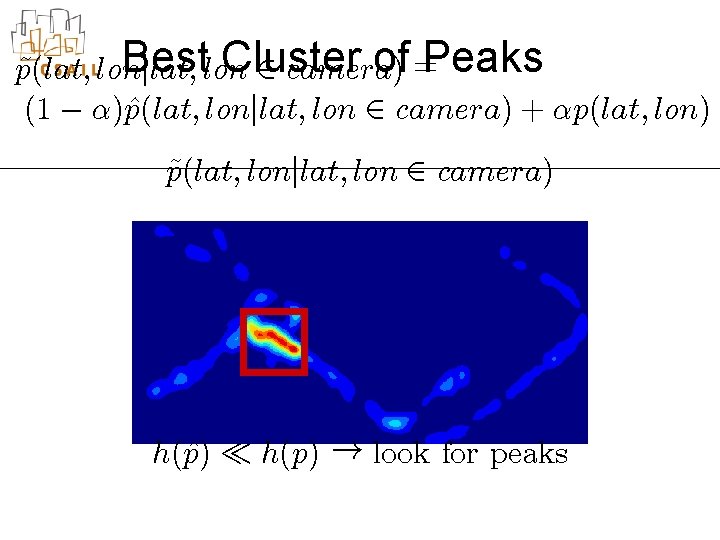

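Best Cluster of Peaks

$$\tilde{p}(\mathrm{lat}, \mathrm{lon} \mid \mathrm{lat}, \mathrm{lon} \in \mathrm{camera}) = (1 - \alpha)\,\hat{p}(\mathrm{lat}, \mathrm{lon} \mid \mathrm{lat}, \mathrm{lon} \in \mathrm{camera}) + \alpha\, p(\mathrm{lat}, \mathrm{lon})$$

$h(\hat{p}) \ll h(p)$ → look for peaks in $\tilde{p}(\mathrm{lat}, \mathrm{lon} \mid \mathrm{lat}, \mathrm{lon} \in \mathrm{camera})$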
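The reasoning on this slide appears to be an entropy argument: the distribution of positions inside one camera's narrow field of view is far more concentrated than the overall traffic distribution, so the camera shows up as a peak in the vote map. The sketch below illustrates that idea on a four-cell toy grid. It assumes Shannon entropy for $h$, and it ranks cells by how much the vote map exceeds the prior, which is a stand-in criterion chosen for this example rather than the clustering method used in the talk. The value of `alpha` and all cell coordinates are hypothetical.

```python
import math


def entropy_bits(dist):
    """Shannon entropy (in bits) of a discrete distribution {cell: prob}."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def peaks(p_tilde, prior, k=1):
    """Return the k cells where the vote map most exceeds the prior.

    ASSUMPTION: 'excess over the prior' is an illustrative peak criterion.
    """
    excess = {cell: p_tilde[cell] - prior.get(cell, 0.0) for cell in p_tilde}
    return sorted(excess, key=excess.get, reverse=True)[:k]


# Toy setup: traffic is uniform over four cells, the camera sees only one.
prior = {(0, 0): 0.25, (1, 0): 0.25, (2, 0): 0.25, (3, 0): 0.25}
p_hat = {(2, 0): 1.0}
alpha = 0.6  # hypothetical share of votes that come from the background
p_tilde = {
    cell: (1 - alpha) * p_hat.get(cell, 0.0) + alpha * prior[cell]
    for cell in prior
}

print(entropy_bits(p_hat), entropy_bits(prior))
print(peaks(p_tilde, prior))
```

Here the camera distribution has zero entropy while the prior has two bits, and the single cell in the field of view is the only one whose vote share rises above its prior share.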

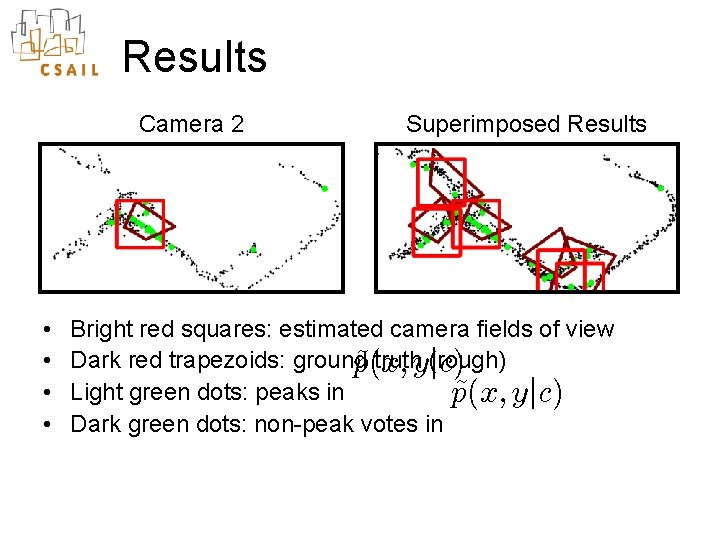

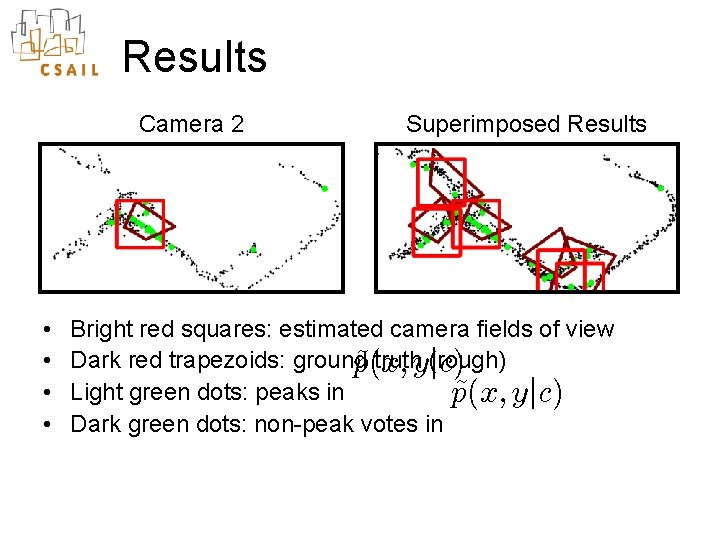

Results

- Camera 2
- Superimposed results

Legend:

- Bright red squares: estimated camera fields of view
- Dark red trapezoids: ground truth (rough)
- Light green dots: peaks in $\tilde{p}(x, y \mid c)$
- Dark green dots: non-peak votes in $\tilde{p}(x, y \mid c)$

Conclusions

- No given correspondence
- Topology
  - Tripwire data → network topology and transitions
  - Can model appearance changes
- Geometry
  - Tripwire data + GPS side information → camera locations

Questions… Thank you

Extra Slides…

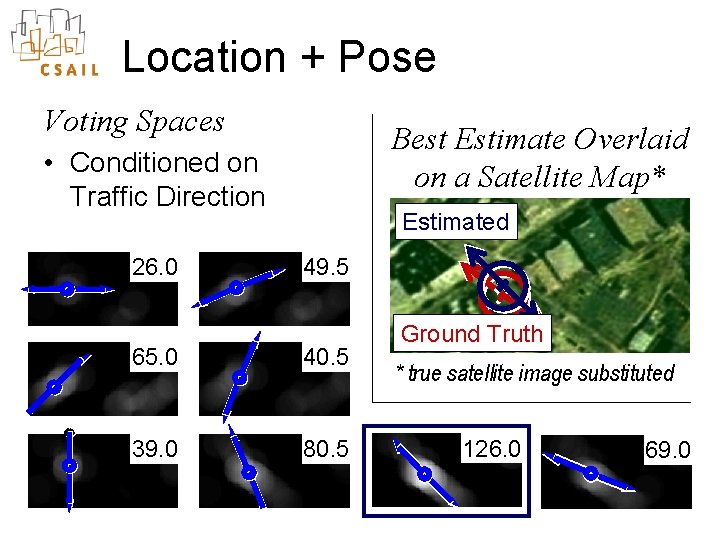

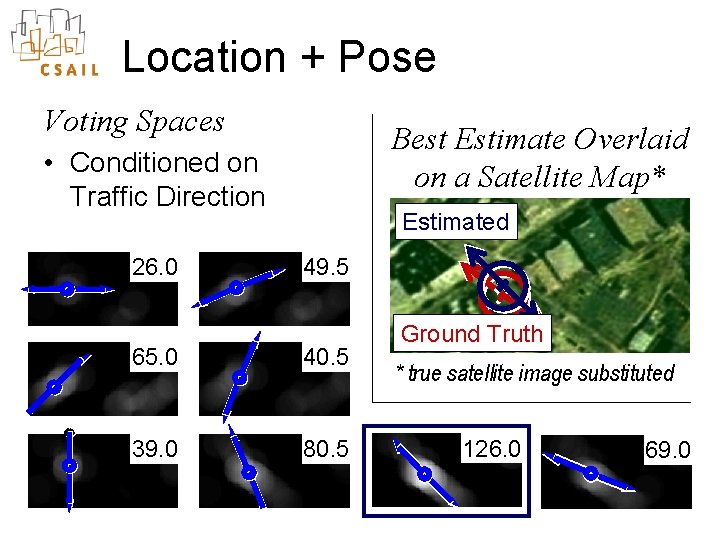

Location + Pose

- Conditioned on traffic direction

Figure panels: voting spaces; best estimate overlaid on a satellite map* (estimated vs. ground truth)

\* true satellite image substituted

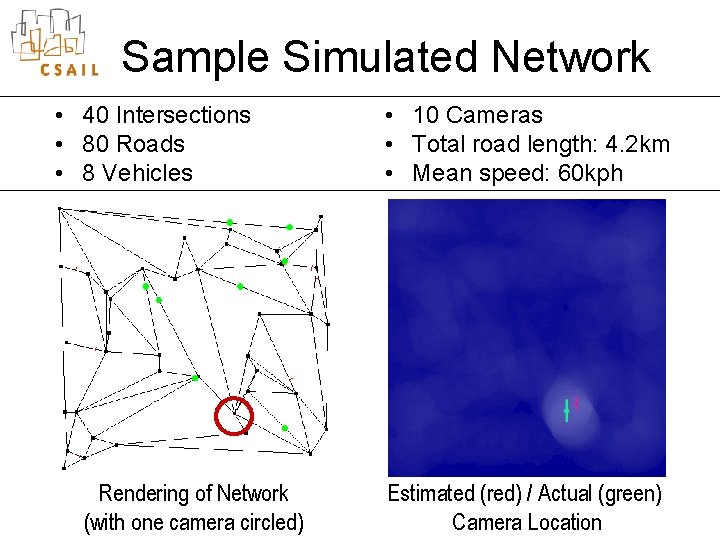

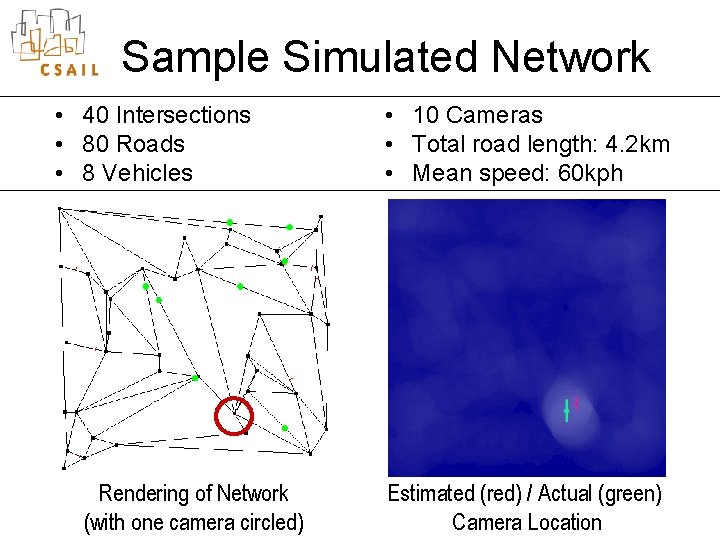

Sample Simulated Network

- 40 intersections
- 80 roads
- 8 vehicles
- 10 cameras
- Total road length: 4.2 km
- Mean speed: 60 km/h

Figure panels: rendering of the network (with one camera circled); estimated (red) / actual (green) camera location

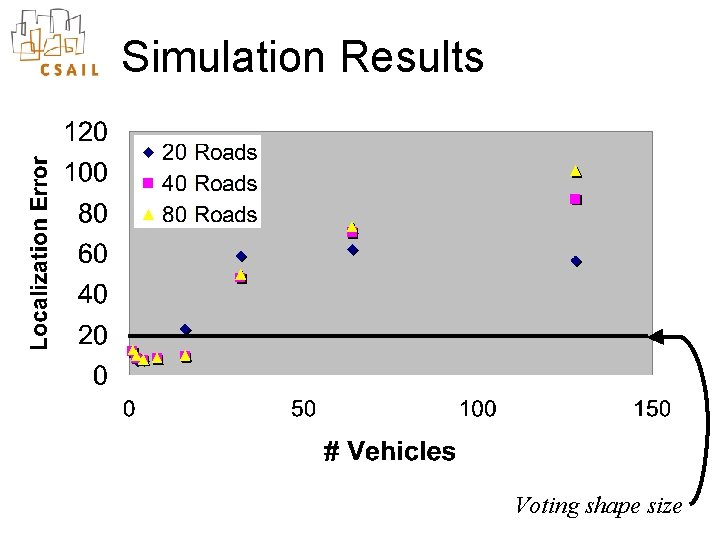

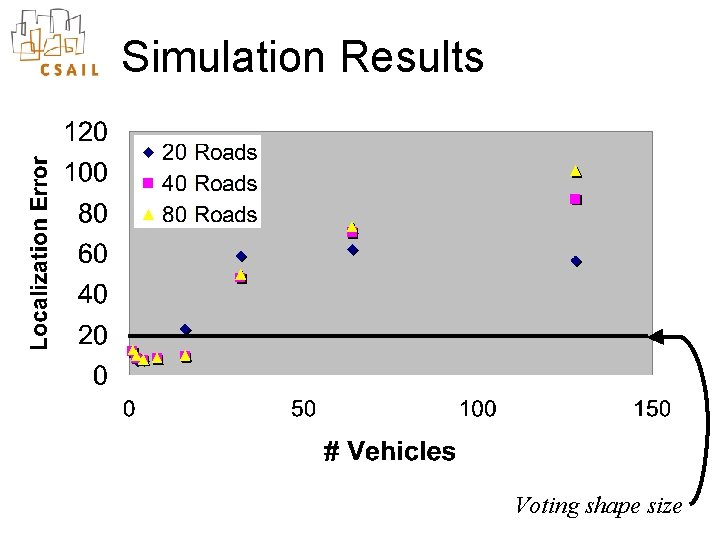

Simulation Results

Plot axis: voting shape size

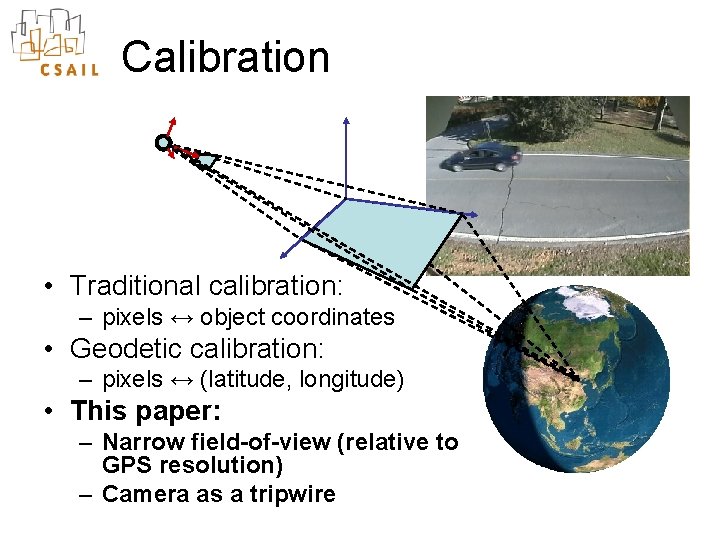

Calibration

- Traditional calibration:
  - pixels ↔ object coordinates
- Geodetic calibration:
  - pixels ↔ (latitude, longitude)
- This paper:
  - Narrow field-of-view (relative to GPS resolution)
  - Camera as a tripwire

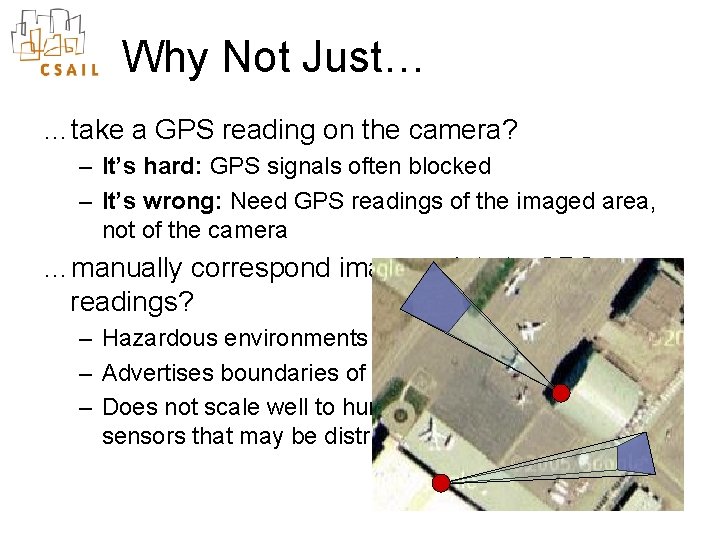

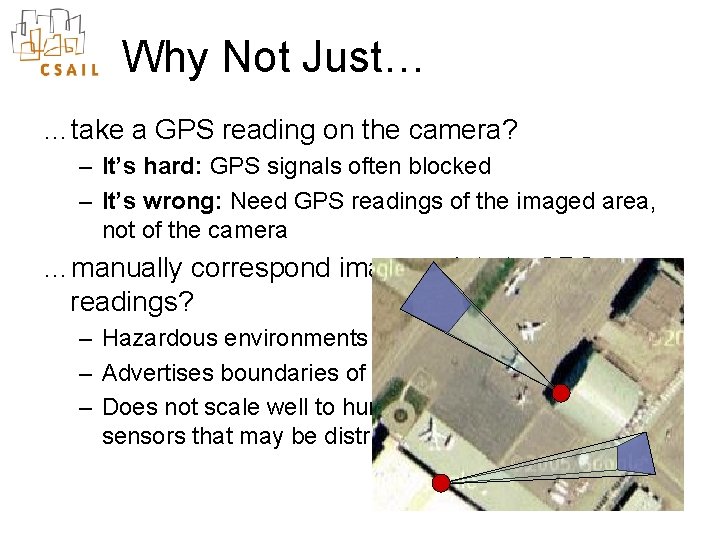

Why Not Just…

- …take a GPS reading on the camera?
  - It's hard: GPS signals are often blocked
  - It's wrong: we need GPS readings of the imaged area, not of the camera
- …manually correspond image points to GPS readings?
  - Hazardous environments
  - Advertises the boundaries of surveillance
  - Does not scale well to hundreds or thousands of sensors that may be distributed very rapidly

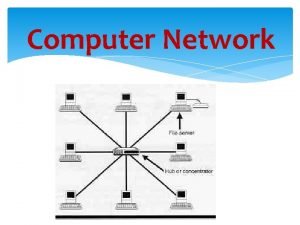
