Netflix UID CNAME AGE User Tran ID Rents

- Slides: 6

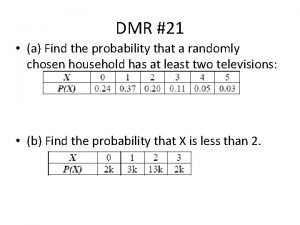

Schema (from the slide diagram): User(UID, CNAME, AGE), Movie(MID, Mname, Date), and the Rents relationship between them, which carries TranID, Rating, and Date.

We classify using a training table on a column (possibly composite) called the class label column. The Netflix Contest classification uses the Rents training table, Rents(MID, UID, Rating, Date), with class label Rating, to classify new (MID, UID, Date) tuples (i.e., to predict ratings).

How, since there are no features except Date?

Nearest Neighbor User Voting: We won't base "near" on a distance over features, since there is only one feature, Date. Instead, a user uid votes if it is near enough to UID in its ratings of the movies M = {mid1, ..., midk} (i.e., "near" is based on a user-user correlation over M). We need to select the user correlation (Pearson, cosine, or another?) and the set M = {mid1, ..., midk}.

Nearest Neighbor Movie Voting: A movie mid votes if its ratings by the users U = {uid1, ..., uidk} are near enough to those of MID (i.e., "near" is based on a movie-movie correlation over U). We need to select the movie correlation (Pearson, cosine, or another?) and the set U = {uid1, ..., uidk}.

Netflix Cinematch predicts potential new ratings. Using these predicted ratings, Netflix recommends next rentals (those that are predicted to be 5s or 4s?).
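As a minimal sketch of nearest neighbor user voting, the following assumes ratings are held in a nested dictionary (user to movie to rating, with unrated movies simply absent), takes M to be all movies co-rated by the two users, uses Pearson as the user-user correlation, and combines votes by a correlation-weighted average. The function names, the threshold default, and the weighting rule are illustrative assumptions, not the deck's actual implementation.

```python
from math import sqrt

def pearson(a, b):
    """Pearson correlation of two equal-length rating lists; 0.0 if undefined."""
    n = len(a)
    if n < 2:
        return 0.0
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / sqrt(var_a * var_b)

def user_vote_predict(ratings, MID, UID, threshold=0.5):
    """Predict rating(MID, UID) from users who rated MID and correlate with UID.

    ratings: dict uid -> dict mid -> rating in 1..5 (hypothetical layout).
    Returns None when no user is near enough to vote.
    """
    target = ratings[UID]
    num = den = 0.0
    for uid, rated in ratings.items():
        if uid == UID or MID not in rated:
            continue  # only users who rated MID can vote
        # M = movies rated by both users, excluding the movie being predicted
        M = sorted((set(target) & set(rated)) - {MID})
        corr = pearson([target[m] for m in M], [rated[m] for m in M])
        if corr >= threshold:  # "near enough" to UID
            num += corr * rated[MID]
            den += corr
    return num / den if den > 0 else None
```

Movie voting would follow the same pattern with the roles of users and movies exchanged.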

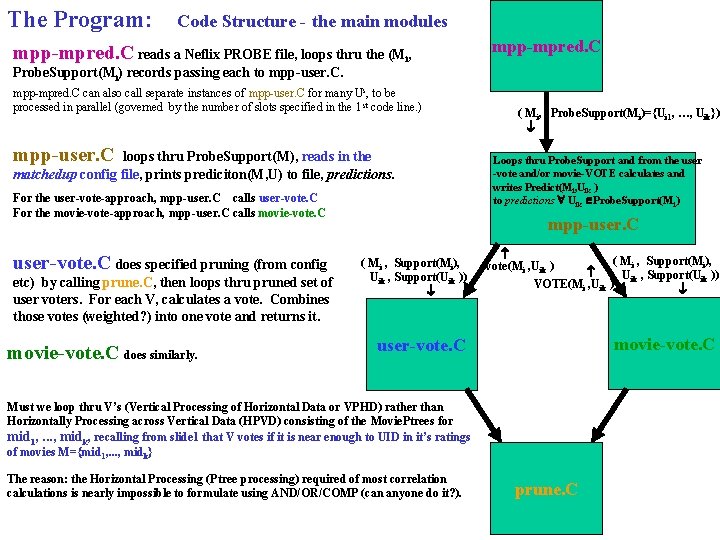

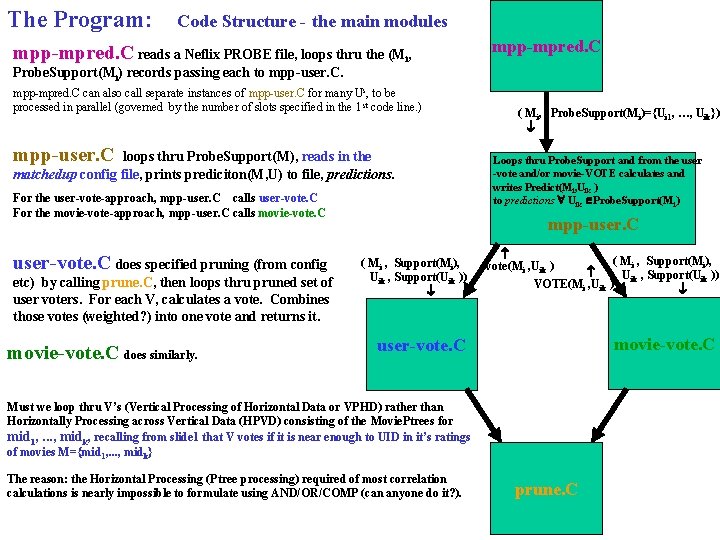

The Program: Code Structure - the main modules

- mpp-mpred.C reads a Netflix PROBE file and loops through the (Mi, ProbeSupport(Mi)) records, passing each to mpp-user.C. mpp-mpred.C can also call separate instances of mpp-user.C for many Us, to be processed in parallel (governed by the number of slots specified in the first code line).
- mpp-user.C loops through ProbeSupport(M), reads in the matched-up config file, and prints prediction(M, U) to the file predictions. For the user-vote approach, mpp-user.C calls user-vote.C. For the movie-vote approach, mpp-user.C calls movie-vote.C.
- user-vote.C does the specified pruning (from the config, etc.) by calling prune.C, then loops through the pruned set of user voters. For each voter V, it calculates a vote. It combines those votes (weighted?) into one vote and returns it.
- movie-vote.C does similarly.

Data flow (from the slide diagram): mpp-mpred.C passes (Mi, ProbeSupport(Mi) = {Ui1, ..., Uik}) to mpp-user.C. For each Uik ∈ ProbeSupport(Mi), mpp-user.C passes (Mi, Support(Mi), Uik, Support(Uik)) to user-vote.C and/or movie-vote.C, receives vote(Mi, Uik) and/or VOTE(Mi, Uik) in return, and from these calculates and writes Predict(Mi, Uik) to predictions. Both vote modules call prune.C.

Must we loop through the V's (Vertical Processing of Horizontal Data, or VPHD) rather than Horizontally Processing across Vertical Data (HPVD) consisting of the MoviePtrees for mid1, ..., midk? Recall from slide 1 that V votes if it is near enough to UID in its ratings of the movies M = {mid1, ..., midk}. The reason: the horizontal processing (Ptree processing) required by most correlation calculations is nearly impossible to formulate using AND/OR/COMP (can anyone do it?).
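The module structure can be sketched in a few lines. This is only an illustration of the control flow: the function names mirror the file names, the vote functions are passed in as stand-in callables, a thread pool stands in for the "slots", and averaging the user vote with the movie vote is an assumption (the slides do not say how the two are combined).

```python
from concurrent.futures import ThreadPoolExecutor

def mpp_user(M, probe_support, user_vote=None, movie_vote=None):
    """Return [(M, U, prediction)] for every U in ProbeSupport(M)."""
    rows = []
    for U in probe_support:
        # Call whichever voting approaches were supplied (hypothetical callables).
        votes = [f(M, U) for f in (user_vote, movie_vote) if f is not None]
        votes = [v for v in votes if v is not None]
        # Assumption: combine the available votes by a plain average.
        prediction = sum(votes) / len(votes) if votes else None
        rows.append((M, U, prediction))
    return rows

def mpp_mpred(probe, slots, user_vote=None, movie_vote=None):
    """Process each (M, ProbeSupport(M)) record, up to `slots` movies at a time.

    probe: dict M -> list of U (hypothetical in-memory form of the PROBE file).
    """
    with ThreadPoolExecutor(max_workers=slots) as pool:
        futures = [
            pool.submit(mpp_user, M, users, user_vote, movie_vote)
            for M, users in probe.items()
        ]
        # Collect results in submission order so output is deterministic.
        return [row for future in futures for row in future.result()]
```

Each vote callable would, in the real program, prune its voter set before looping over the remaining voters.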

What kind of pruning can be specified? Again, all parameters are specified in a configuration file, and the values specified there are consumed at runtime using, e.g., the call: mpp -i Input_.txt_file -c config -n 16, where Input_.txt_file is the input Probe subset file and 16 is the number of parallel threads that mpp-mpred.C will generate (here, 16 movies are processed in parallel, each sent to a separate instantiation of mpp-user.C). A sample config file is given later.

There are up to 3 types of pruning used, for pruning down support(M), the set of all users that rate M, or for pruning down support(U), the set of all movies rated by U:

1. correlation or similarity threshold based pruning
2. count based pruning
3. ID window based pruning

Under correlation or similarity threshold based pruning, and using support(M) = supM as the example (pruning support(U) is similar), we allow any function f: supM × supM → [0, HighValue] to be called a user correlation, provided only that f(u, u) = HighValue for every u in supM. Examples include Pearson_Correlation, Gaussian_of_Distance, 1_perp_Correlation (see appendix of these notes), relative_exact_rating_match_count (Tingda is using this), dimension_of_common_cosupport, and functions based on standard deviations.

Under count based pruning, we usually order by one of the correlations above first (into a multimap), then prune down to a specified count of the most highly correlated.

Under ID window based pruning, we prune down to a window of userIDs within supM (or movieIDs within supU) by specifying a leftside (a number added to U, so leftside is relative to U as a userID) and a width.
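The three pruning types can be sketched as small filters over a list of candidate voter IDs. The function names are hypothetical, `corr` is any similarity function of a voter ID, and the half-open window [U + leftside, U + leftside + width) is an assumption about how leftside and width are interpreted.

```python
def threshold_prune(voters, corr, threshold):
    """Keep voters whose correlation with the target meets the threshold."""
    return [v for v in voters if corr(v) >= threshold]

def count_prune(voters, corr, count):
    """Order by correlation (highest first) and keep the top `count` voters."""
    return sorted(voters, key=corr, reverse=True)[:count]

def id_window_prune(voters, U, leftside, width):
    """Keep voter IDs inside a window positioned relative to the target ID U."""
    lo = U + leftside
    return [v for v in voters if lo <= v < lo + width]

# Example: similarities of five candidate users to target user 100.
similarity = {100: 0.9, 105: 0.2, 110: 0.6, 120: 0.8, 200: 0.1}
voters = list(similarity)

print(threshold_prune(voters, similarity.get, 0.5))  # [100, 110, 120]
print(count_prune(voters, similarity.get, 2))        # [100, 120]
print(id_window_prune(voters, 100, 5, 20))           # [105, 110, 120]
```

The prunings compose, so a window prune can be followed by a threshold prune and then a count prune on what remains.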

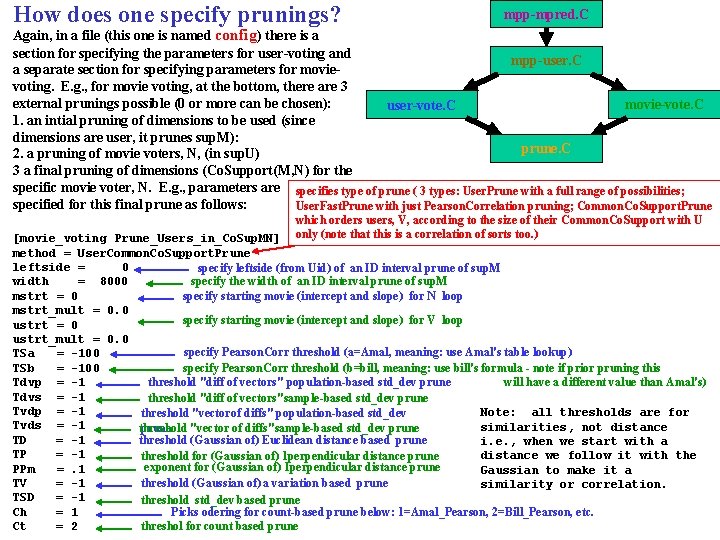

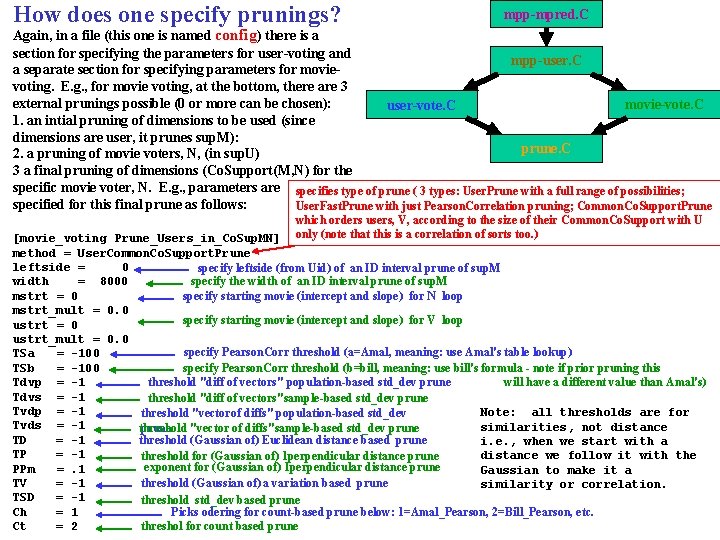

How does one specify prunings? Again, in a file (this one is named config) there is a section for specifying the parameters for user voting and a separate section for specifying the parameters for movie voting. E.g., for movie voting, at the bottom, there are 3 external prunings possible (0 or more can be chosen):

1. an initial pruning of dimensions to be used (since dimensions are users, it prunes supM);
2. a pruning of movie voters, N (in supU);
3. a final pruning of dimensions (CoSupport(M, N)) for the specific movie voter, N.

E.g., parameters are specified for this final prune as follows, in the section [movie_voting Prune_Users_in_CoSupMN]:

- method = UserCommonCoSupportPrune: specifies the type of prune. There are 3 types: UserPrune, with a full range of possibilities; UserFastPrune, with just PearsonCorrelation pruning; and CommonCoSupportPrune, which orders users, V, according to the size of their CommonCoSupport with U only (note that this is a correlation of sorts too).
- leftside = 0: specify the leftside (from Uid) of an ID interval prune of supM
- width = 8000: specify the width of an ID interval prune of supM
- mstrt = 0, mstrt_mult = 0.0: specify the starting movie (intercept and slope) for the N loop
- ustrt = 0, ustrt_mult = 0.0: specify the starting user (intercept and slope) for the V loop
- TSa = -100: specify the PearsonCorr threshold (a = Amal, meaning: use Amal's table lookup)
- TSb = -100: specify the PearsonCorr threshold (b = Bill, meaning: use Bill's formula; note that if there is prior pruning this will have a different value than Amal's)
- Tdvp = -1: threshold for the "diff of vectors" population-based std_dev prune
- Tdvs = -1: threshold for the "diff of vectors" sample-based std_dev prune
- Tvdp = -1: threshold for the "vector of diffs" population-based std_dev prune
- Tvds = -1: threshold for the "vector of diffs" sample-based std_dev prune
- TD = -1: threshold for the (Gaussian of) Euclidean distance based prune
- TP = -1: threshold for the (Gaussian of) 1 perpendicular distance prune
- PPm = .1: exponent for the (Gaussian of) 1 perpendicular distance prune
- TV = -1: threshold for the (Gaussian of) a variation based prune
- TSD = -1: threshold for the std_dev based prune
- Ch = 1: picks the ordering for the count-based prune below: 1 = Amal_Pearson, 2 = Bill_Pearson, etc.
- Ct = 2: threshold for the count based prune

Note: all thresholds are for similarities, not distances; i.e., when we start with a distance we follow it with the Gaussian to make it a similarity or correlation.
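To illustrate how such a section might be consumed, here is a sketch that reads a few of the parameters with Python's standard configparser module. It assumes the config file is INI-style, which the bracketed section header and key = value lines suggest but the slides do not confirm. The helper name and the sample text are hypothetical, and the real mpp program reads its config in its own code.

```python
import configparser

SAMPLE = """
[movie_voting Prune_Users_in_CoSupMN]
method = UserCommonCoSupportPrune
leftside = 0
width = 8000
TSa = -100
PPm = .1
Ch = 1
Ct = 2
"""

def load_prune_section(text, section):
    """Parse one pruning section into a dict with numeric values converted."""
    parser = configparser.ConfigParser()
    parser.optionxform = str  # keep the case of keys such as TSa and PPm
    parser.read_string(text)
    params = {}
    for key, raw in parser[section].items():
        try:
            # Values containing a dot are read as floats, others as ints.
            params[key] = float(raw) if "." in raw else int(raw)
        except ValueError:
            params[key] = raw  # non-numeric values (e.g. method) stay strings
    return params

params = load_prune_section(SAMPLE, "movie_voting Prune_Users_in_CoSupMN")
print(params["method"], params["width"], params["PPm"])
```

Keeping every threshold in one dictionary makes it simple to hand the whole section to a pruning routine.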

APPENDIX: The Program (old)

- mpp-mpred.C reads a Netflix PROBE file and loops through the (Mi, ProbeSupport(Mi)) records, passing each to mpp-user.C, which calculates and prints PredictedRating(Mi, U) to the file "prediction" for every U ∈ ProbeSupport(Mi). mpp-mpred.C can also call separate instances of mpp-user.C for many Us, to be processed in parallel (governed by the number of "slots" specified in the first code line).
- mpp-user.C loops through ProbeSupport(M), reads in the matched-up config file, and prints prediction(M, U) to the file predictions. For the user-vote approach, mpp-user.C calls user-vote.C, passing (M, Support(M), U, Support(U)). For the movie-vote approach, mpp-user.C calls movie-vote.C, passing (M, Support(M), U, Support(U)).
- user-vote.C does the specified pruning (from the config, etc.) by calling prune.C, then loops through the pruned set of user voters. For each voter V, it calculates a vote, then combines those votes and returns predictedRating(M, U).
- movie-vote.C is similar.

Data flow (from the slide diagram): mpp-mpred.C passes (Mi, ProbeSupport(Mi)) to mpp-user.C, which passes (M, Support(M), U, Support(U)) to user-vote.C and movie-vote.C, receives vote(M, U) and VOTE(M, U) in return, and from the votes calculates and writes Predict(Mi, U) to predictions for every U ∈ ProbeSupport(Mi). Both vote modules call prune.C.

Must we loop through the V's (Vertical Processing of Horizontal Data, or VPHD) rather than calculate the vote by Horizontally Processing across the Vertical Data (HPVD) consisting of the MoviePtrees for mid1, ..., midk? Recall from slide 1 that V = uid votes if it is near enough to UID in its ratings of the movies M = {mid1, ..., midk}. The reason: the horizontal processing (Ptree processing) required by most correlation calculations is nearly impossible to formulate using AND/OR/COMP (so far, anyway).

APPENDIX: Collaborative Filtering is the prediction of likes and dislikes (retail or rental) from the history of previously expressed ratings (filtering new likes through the historical filter of "collaborator" likes).

E.g., the $1,000,000 Netflix Contest was to develop a ratings prediction program that can beat the one Netflix currently uses (called Cinematch) by 10% in predicting what rating users gave to movies, i.e., predict rating(M, U) where (M, U) ∈ QUALIFYING(MovieID, UserID). Netflix uses Cinematch to decide which movies a user will probably like next (based on all past rating history).

All ratings are "5-star" ratings (5 is highest, 1 is lowest). Caution: 0 means "did not rate". Unfortunately, rating = 0 does not mean that the user "disliked" that movie, but that it wasn't rated at all. Most "ratings" are 0. That's the main reason we don't want to use standard vector space distance for "near".

A "history of ratings given by users to movies", TRAINING(MovieID, UserID, Rating, Date), is provided with which to train your predictor, which will predict the ratings given to QUALIFYING movie-user pairs (Netflix knows the ratings given to the QUALIFYING pairs, but we don't). Since TRAINING is very large, Netflix also provides a "smaller, but representative subset" of TRAINING, PROBE(MovieID, UserID) (~2 orders of magnitude smaller than TRAINING).

Netflix gave 5 years to submit QUALIFYING predictions. That contest was won in the late summer of 2009, when the submission window was about 1/2 gone.

The Netflix Contest Problem is an example of the Collaborative Filtering Problem, which is ubiquitous in the retail business world (how do you filter out what a customer will want to buy or rent next, based on similar customers?).
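A small worked example shows why treating 0 ("did not rate") as a rating misleads a standard vector space distance. The rating vectors and function names below are made up for illustration: user v agrees with u on every movie both rated, while user w disagrees strongly, yet the plain Euclidean distance ranks w as nearer.

```python
from math import sqrt

def euclidean_all(a, b):
    """Distance over every movie, treating 0 ('did not rate') as a rating."""
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def euclidean_corated(a, b):
    """Distance over movies both users rated; None if there are none."""
    pairs = [(x, y) for x, y in zip(a, b) if x != 0 and y != 0]
    if not pairs:
        return None
    return sqrt(sum((x - y) ** 2 for x, y in pairs))

u = [5, 4, 0, 0, 0, 0]
v = [5, 4, 5, 5, 0, 0]  # agrees with u on both co-rated movies
w = [1, 1, 0, 0, 0, 0]  # disagrees with u on both co-rated movies

# Over all movies, w looks nearer to u than v does, because v's extra
# ratings are compared against u's zeros.
print(euclidean_all(u, v) > euclidean_all(u, w))  # True

# Restricted to co-rated movies, v is at distance 0.0 and w at distance 5.0.
print(euclidean_corated(u, v), euclidean_corated(u, w))  # 0.0 5.0
```

Restricting the comparison to co-rated movies is the same idea as basing "near" on a correlation over a chosen set of movies rather than on the full rating vector.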