NetFPGA: Programmable Networking for High-Speed Network Prototypes

NetFPGA: Programmable Networking for High-Speed Network Prototypes, Research and Teaching
Presented by: Andrew W. Moore (University of Cambridge)
CHANGE/OFELIA, Berlin, Germany, November 10th, 2011
http://NetFPGA.org

Tutorial Outline
• Motivation
  – Introduction
  – The NetFPGA Platform
• Hardware Overview
  – NetFPGA 1G
  – NetFPGA 10G
• The Stanford Base Reference Router
  – Motivation: Basic IP review
  – Example 1: Reference Router running on the NetFPGA
  – Example 2: Understanding buffer size requirements using NetFPGA
• Community Contributions
  – Altera-DE4 NetFPGA Reference Router (UMass Amherst)
  – NetThreads (University of Toronto)
• Concluding Remarks

Section I: Motivation

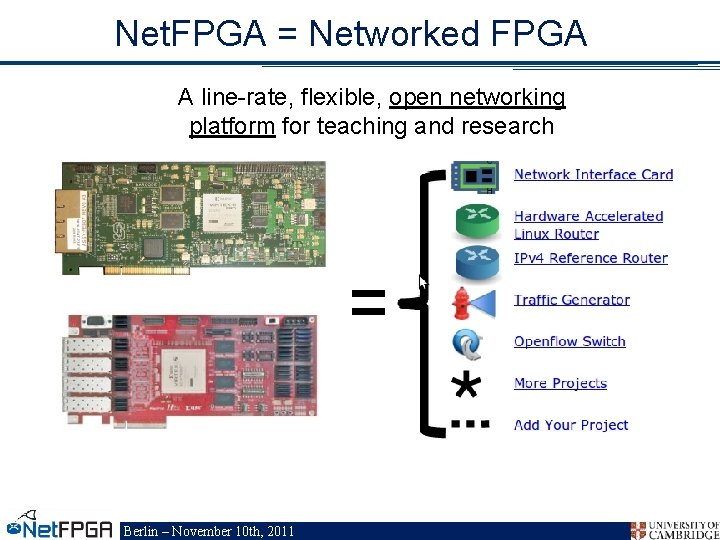

NetFPGA = Networked FPGA
A line-rate, flexible, open networking platform for teaching and research

NetFPGA consists of…
Four elements:
• NetFPGA board (pictured: NetFPGA 1G board, NetFPGA 10G board)
• Tools + reference designs
• Contributed projects
• Community

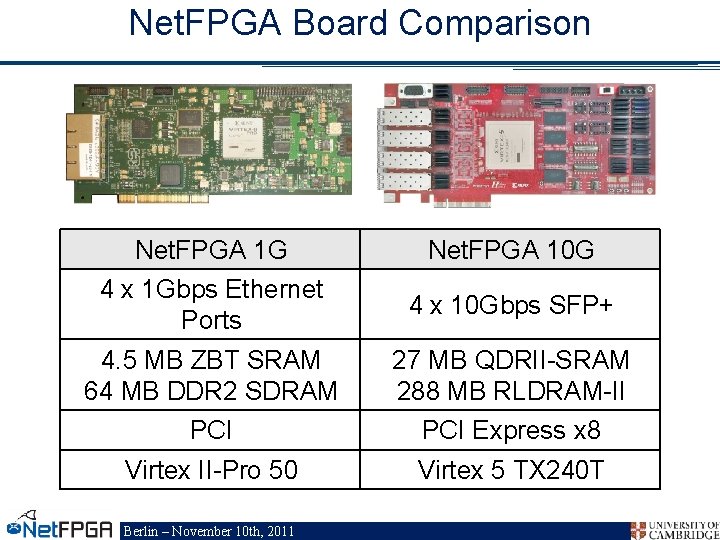

NetFPGA Board Comparison

| NetFPGA 1G | NetFPGA 10G |
| --- | --- |
| 4 x 1 Gbps Ethernet ports | 4 x 10 Gbps SFP+ |
| 4.5 MB ZBT SRAM, 64 MB DDR2 SDRAM | 27 MB QDRII-SRAM, 288 MB RLDRAM-II |
| PCI | PCI Express x8 |
| Virtex II-Pro 50 | Virtex 5 TX240T |

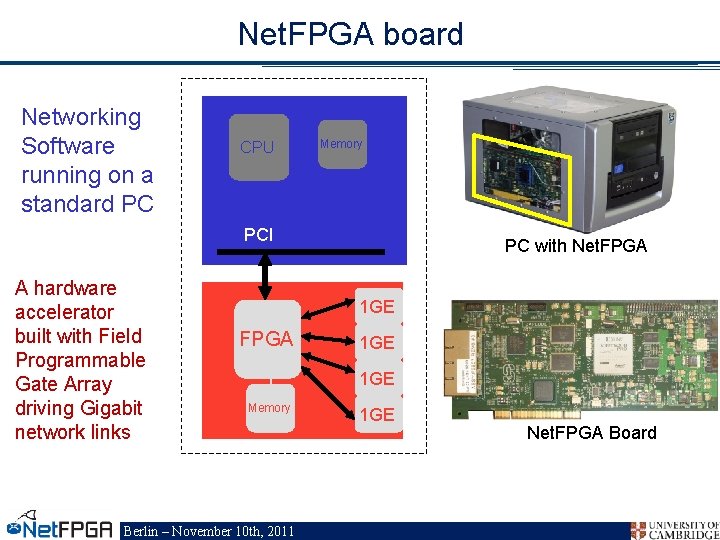

NetFPGA board
• Networking software running on a standard PC (diagram labels: CPU, Memory, PCI)
• A hardware accelerator built with a Field Programmable Gate Array driving Gigabit network links (diagram labels: NetFPGA board, Memory, 1GE ports)
Diagram caption: PC with NetFPGA

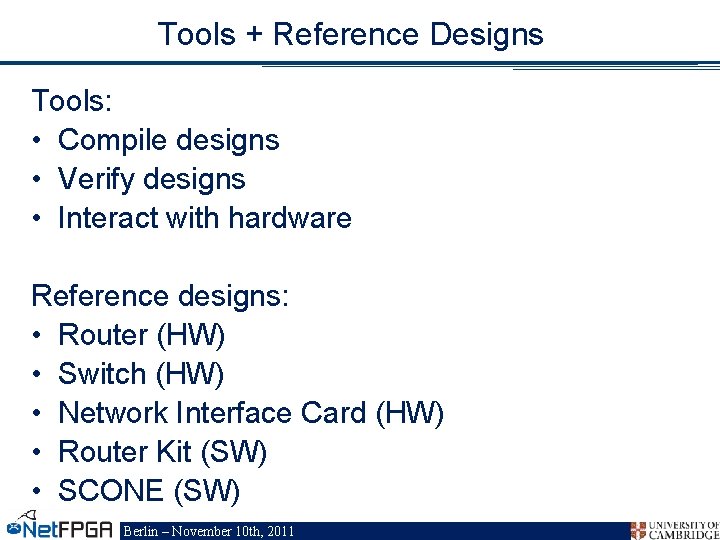

Tools + Reference Designs
Tools:
• Compile designs
• Verify designs
• Interact with hardware
Reference designs:
• Router (HW)
• Switch (HW)
• Network Interface Card (HW)
• Router Kit (SW)
• SCONE (SW)

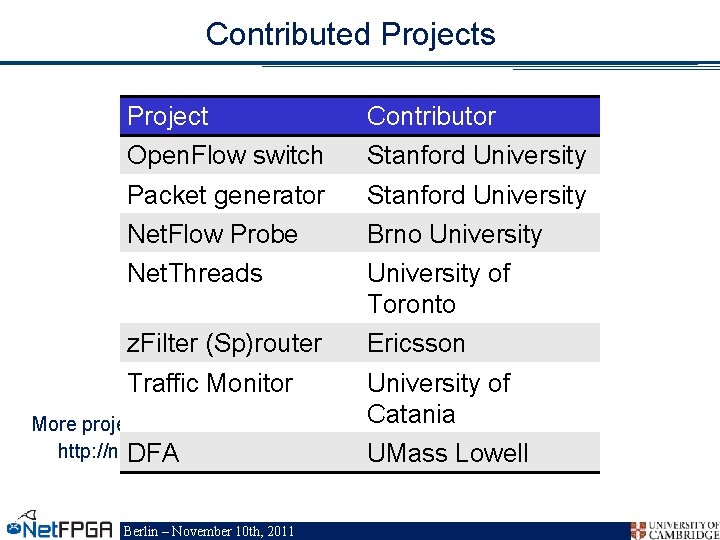

Contributed Projects

| Project | Contributor |
| --- | --- |
| OpenFlow switch | Stanford University |
| Packet generator | Stanford University |
| NetFlow Probe | Brno University |
| NetThreads | University of Toronto |
| zFilter (Sp)router | Ericsson |
| Traffic Monitor | University of Catania |
| DFA | UMass Lowell |

More projects: http://netfpga.org/foswiki/NetFPGA/OneGig/ProjectTable

Community
Wiki
• Documentation
  – User's Guide
  – Developer's Guide
• Encourage users to contribute
Forums
• Support by users for users
• Active community: 10s-100s of posts/week

International Community
Over 1,000 users, using 1,900 cards at 150 universities in 32 countries

NetFPGA's Defining Characteristics
• Line-Rate
  – Processes back-to-back packets
    • Without dropping packets
    • At full rate of Gigabit Ethernet links
  – Operating on packet headers
    • For switching, routing, and firewall rules
  – And packet payloads
    • For content processing and intrusion prevention
• Open-source Hardware
  – Similar to open-source software
    • Full source code available
    • BSD-style license
  – But harder, because
    • Hardware modules must meet timing
    • Verilog & VHDL components have more complex interfaces
    • Hardware designers need high confidence in specification of modules

Test-Driven Design
• Regression tests
  – Have repeatable results
  – Define the supported features
  – Provide clear expectation on functionality
• Example: Internet Router
  – Drops packets with bad IP checksum
  – Performs Longest Prefix Matching on destination address
  – Forwards IPv4 packets of length 64-1500 bytes
  – Generates ICMP message for packets with TTL <= 1
  – Defines how packets with IP options or non-IPv4 …
  – … and dozens more …
Every feature is defined by a regression test
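
To make the idea of "every feature is defined by a regression test" concrete, here is a minimal Python sketch of two of the listed router behaviours (bad checksum, TTL <= 1) with the kind of check a regression test might make. The helper names `build_header` and `classify` are hypothetical and are not part of the NetFPGA test suite; the real tests drive the hardware or its simulation.

```python
import struct


def ipv4_checksum(header: bytes) -> int:
    """One's-complement checksum over 16-bit words of an IPv4 header."""
    if len(header) % 2:
        header += b"\x00"
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_header(ttl: int, total_len: int = 64) -> bytes:
    """Build a 20-byte IPv4 header with a valid checksum (hypothetical test helper)."""
    raw = struct.pack("!BBHHHBBH4s4s", 0x45, 0, total_len, 0, 0, ttl, 6, 0,
                      bytes([10, 0, 0, 1]), bytes([10, 0, 0, 2]))
    return raw[:10] + struct.pack("!H", ipv4_checksum(raw)) + raw[12:]


def classify(header: bytes) -> str:
    """Toy decision covering two of the behaviours listed on the slide."""
    if ipv4_checksum(header) != 0:  # a valid header sums to zero
        return "drop"
    if header[8] <= 1:  # byte 8 is the TTL field
        return "icmp"
    return "forward"


good = build_header(ttl=64)
corrupted = bytearray(good)
corrupted[8] ^= 0xFF  # flip the TTL byte without fixing the checksum
print(classify(good), classify(build_header(ttl=1)), classify(bytes(corrupted)))
```

Each expected outcome is stated once, as a repeatable check, which is the property the slide asks of regression tests.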

Who, How, Why
Who uses the NetFPGA?
  – Teachers
  – Students
  – Researchers
How do they use the NetFPGA?
  – To run the Router Kit
  – To build modular reference designs
    • IPv4 router
    • 4-port NIC
    • Ethernet switch, …
Why do they use the NetFPGA?
  – To measure performance of Internet systems
  – To prototype new networking systems

Section II: Hardware Overview

NetFPGA-1G

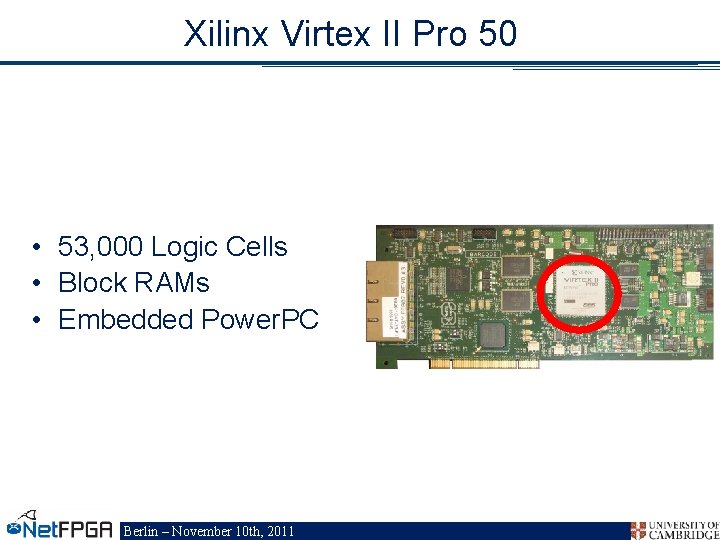

Xilinx Virtex II Pro 50
• 53,000 Logic Cells
• Block RAMs
• Embedded PowerPC

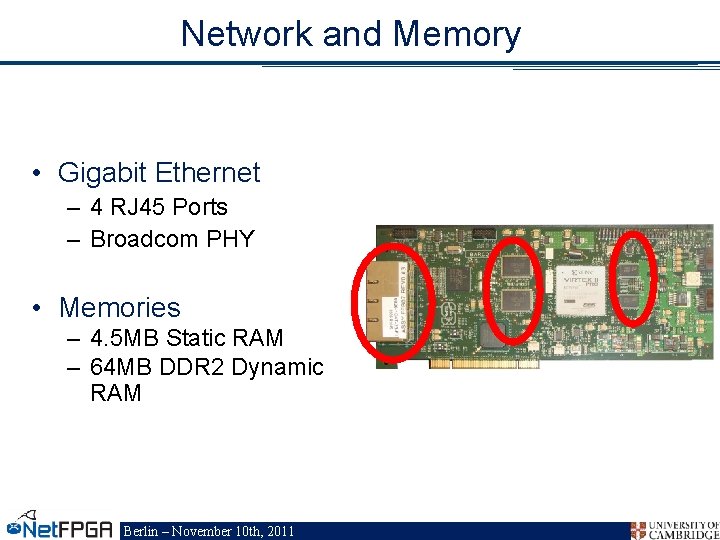

Network and Memory
• Gigabit Ethernet
  – 4 RJ45 Ports
  – Broadcom PHY
• Memories
  – 4.5 MB Static RAM
  – 64 MB DDR2 Dynamic RAM

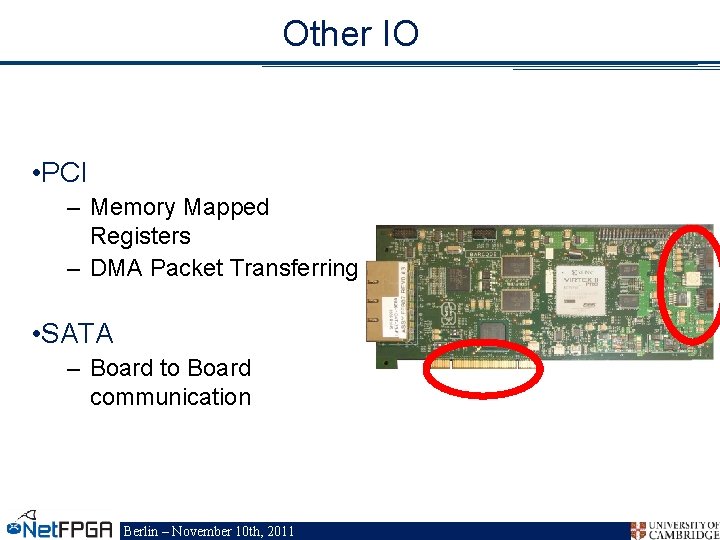

Other IO
• PCI
  – Memory Mapped Registers
  – DMA Packet Transferring
• SATA
  – Board to Board communication

NetFPGA-10G
• A major upgrade
• State-of-the-art technology

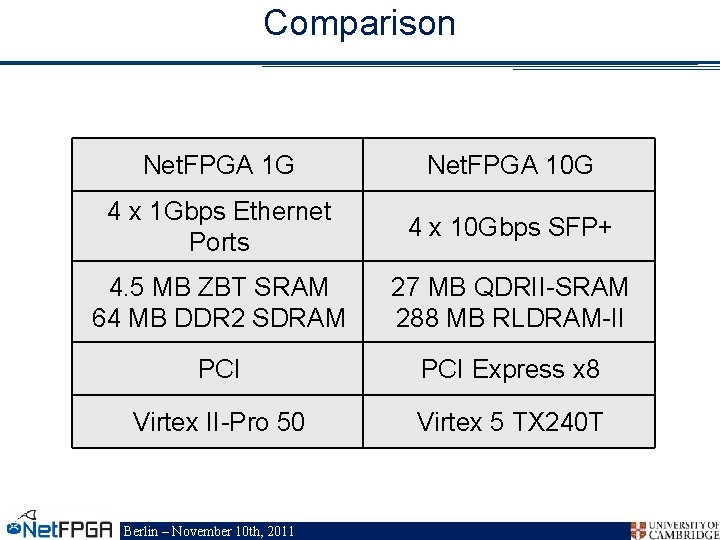

Comparison

| NetFPGA 1G | NetFPGA 10G |
| --- | --- |
| 4 x 1 Gbps Ethernet ports | 4 x 10 Gbps SFP+ |
| 4.5 MB ZBT SRAM, 64 MB DDR2 SDRAM | 27 MB QDRII-SRAM, 288 MB RLDRAM-II |
| PCI | PCI Express x8 |
| Virtex II-Pro 50 | Virtex 5 TX240T |

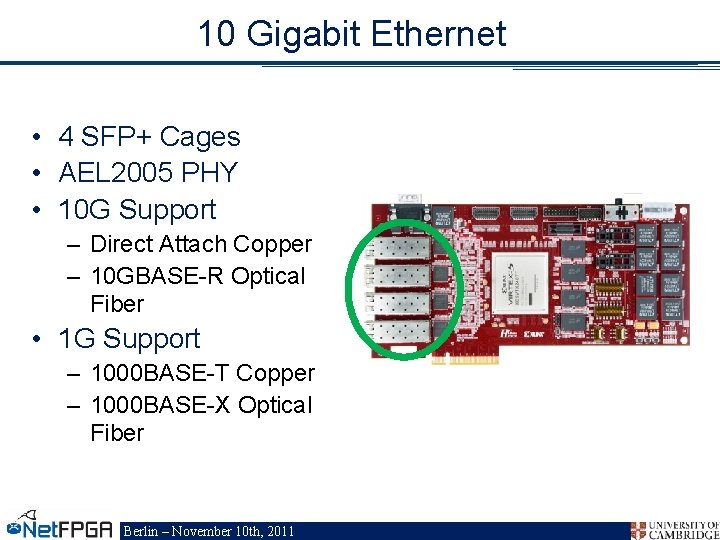

10 Gigabit Ethernet
• 4 SFP+ Cages
• AEL2005 PHY
• 10G Support
  – Direct Attach Copper
  – 10GBASE-R Optical Fiber
• 1G Support
  – 1000BASE-T Copper
  – 1000BASE-X Optical Fiber

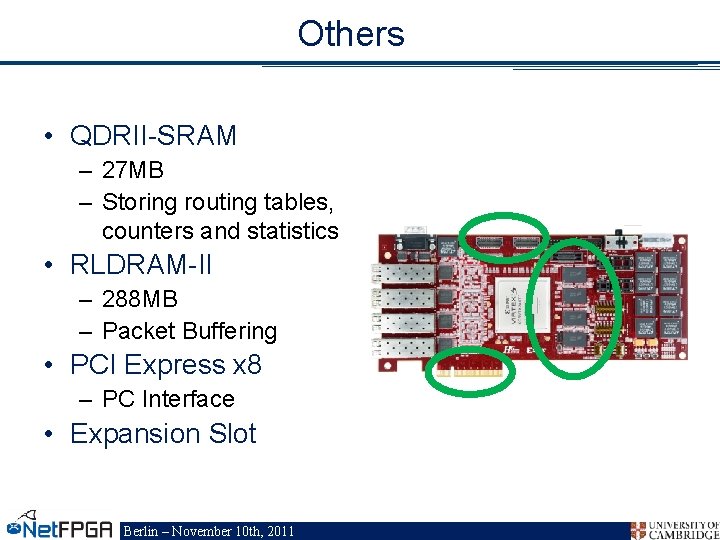

Others
• QDRII-SRAM
  – 27 MB
  – Storing routing tables, counters and statistics
• RLDRAM-II
  – 288 MB
  – Packet Buffering
• PCI Express x8
  – PC Interface
• Expansion Slot

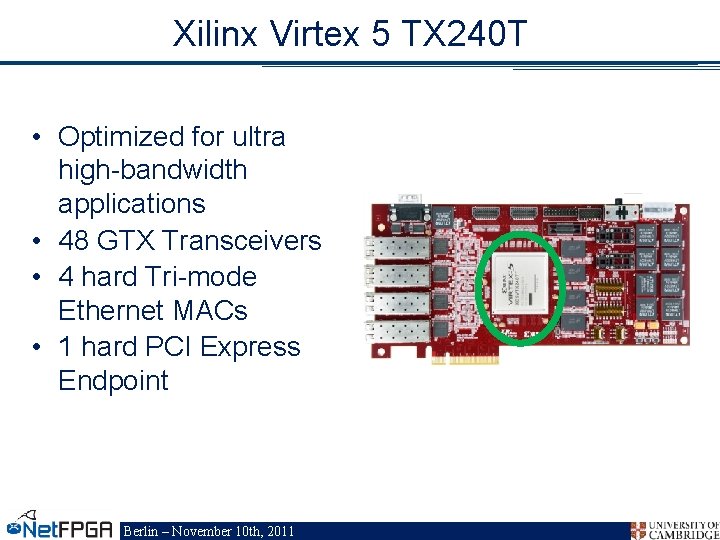

Xilinx Virtex 5 TX240T
• Optimized for ultra high-bandwidth applications
• 48 GTX Transceivers
• 4 hard Tri-mode Ethernet MACs
• 1 hard PCI Express Endpoint

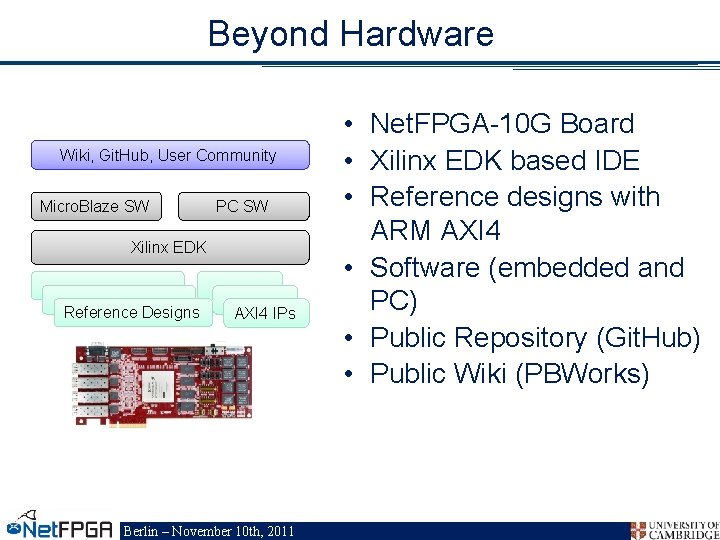

Beyond Hardware
• NetFPGA-10G Board
• Xilinx EDK based IDE
• Reference designs with ARM AXI4
• Software (embedded and PC)
• Public Repository (GitHub)
• Public Wiki (PBWorks)
Diagram labels: Wiki, GitHub, User Community; MicroBlaze SW; PC SW; Xilinx EDK; Reference Designs; AXI4 IPs

NetFPGA-1G Cube Systems
• PCs assembled from parts
  – Stanford University
  – Cambridge University
• Pre-built systems available
  – Accent Technology Inc.
• Details are in the Guide: http://netfpga.org/static/guide.html

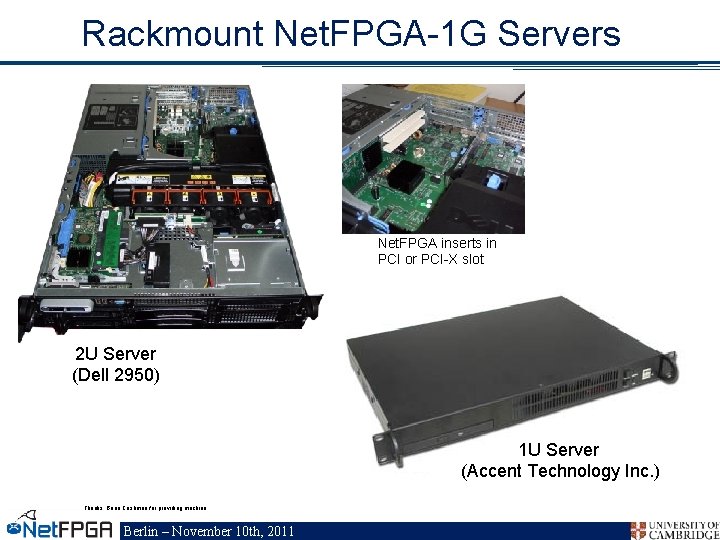

Rackmount NetFPGA-1G Servers
• NetFPGA inserts in PCI or PCI-X slot
• 2U Server (Dell 2950)
• 1U Server (Accent Technology Inc.)
Thanks: Brian Cashman for providing machine

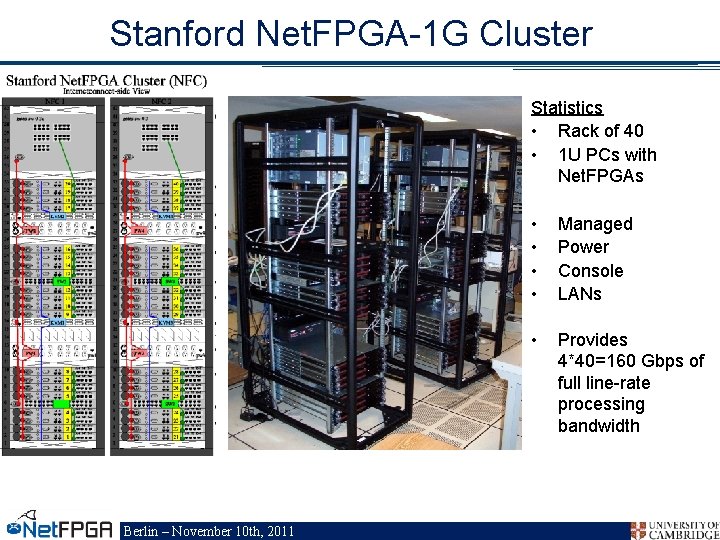

Stanford NetFPGA-1G Cluster
Statistics
• Rack of 40 1U PCs with NetFPGAs
• Managed Power
• Console LANs
• Provides 4*40=160 Gbps of full line-rate processing bandwidth

Section III: Network review

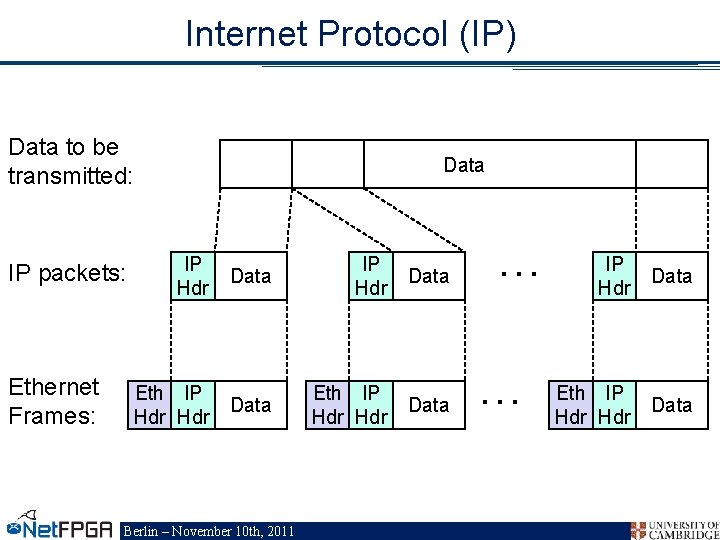

Internet Protocol (IP)
• Data to be transmitted: Data
• IP packets: IP Hdr + Data, IP Hdr + Data, …
• Ethernet frames: Eth Hdr + IP Hdr + Data, Eth Hdr + IP Hdr + Data, …

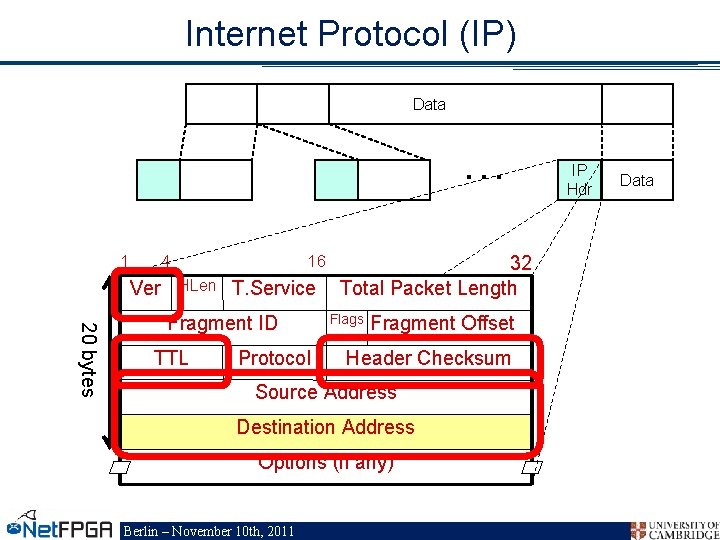

Internet Protocol (IP)
An IP packet is an IP Hdr followed by Data. Header layout (32 bits per row, 20 bytes without options):
• Ver (bits 1-4), HLen (bits 5-8), T.Service (bits 9-16), Total Packet Length (bits 17-32)
• Fragment ID, Flags, Fragment Offset
• TTL, Protocol, Header Checksum
• Source Address
• Destination Address
• Options (if any)
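
As an illustration of the header layout above, the following Python sketch unpacks the fixed 20-byte part of an IPv4 header with the standard `struct` module. The function name `parse_ipv4_header` and the sample bytes are made up for this example; nothing here comes from the NetFPGA code base, where this parsing is done in hardware.

```python
import ipaddress
import struct


def parse_ipv4_header(packet: bytes) -> dict:
    """Split the fixed 20-byte IPv4 header into its named fields."""
    (ver_hlen, tos, total_len, frag_id, flags_frag,
     ttl, proto, checksum, src, dst) = struct.unpack("!BBHHHBBH4s4s", packet[:20])
    header_len = (ver_hlen & 0x0F) * 4  # HLen counts 32-bit words
    return {
        "version": ver_hlen >> 4,
        "header_len": header_len,
        "type_of_service": tos,
        "total_length": total_len,
        "fragment_id": frag_id,
        "flags": flags_frag >> 13,            # top 3 bits
        "fragment_offset": flags_frag & 0x1FFF,
        "ttl": ttl,
        "protocol": proto,
        "header_checksum": checksum,
        "src": str(ipaddress.IPv4Address(src)),
        "dst": str(ipaddress.IPv4Address(dst)),
        "options": packet[20:header_len],     # empty when HLen == 5
    }


# Sample header bytes chosen for illustration only.
sample = bytes.fromhex("45000073000040004011b861c0a80001c0a800c7")
fields = parse_ipv4_header(sample)
print(fields["src"], "->", fields["dst"], "ttl", fields["ttl"])
```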

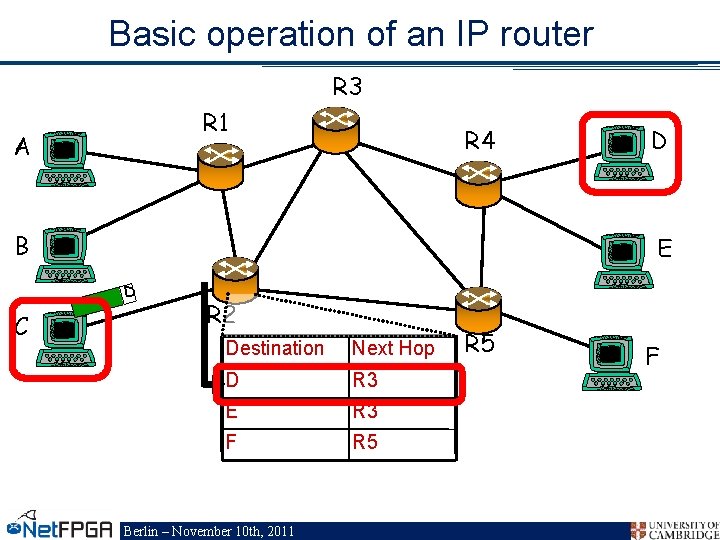

Basic operation of an IP router
Diagram labels: hosts A, B, C, D, E, F; routers R1, R2, R3, R4, R5; a packet addressed to D

| Destination | Next Hop |
| --- | --- |
| D | R3 |
| E | R3 |
| F | R5 |

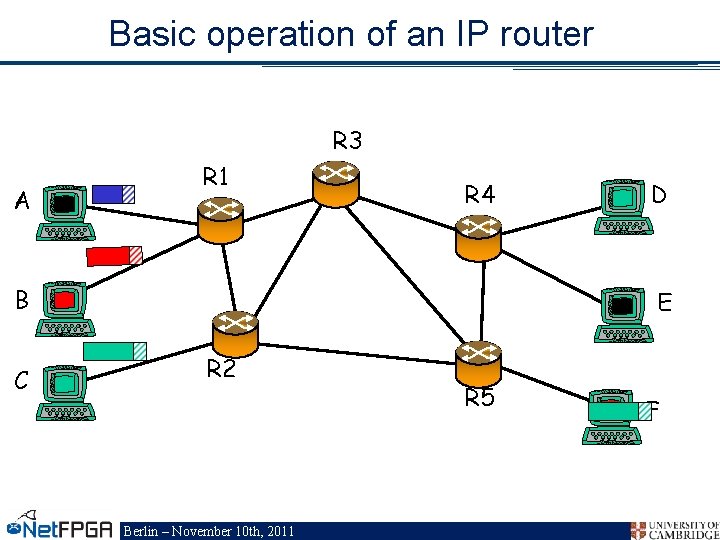

Basic operation of an IP router
Diagram labels: hosts A, B, C, D, E, F; routers R1, R2, R3, R4, R5

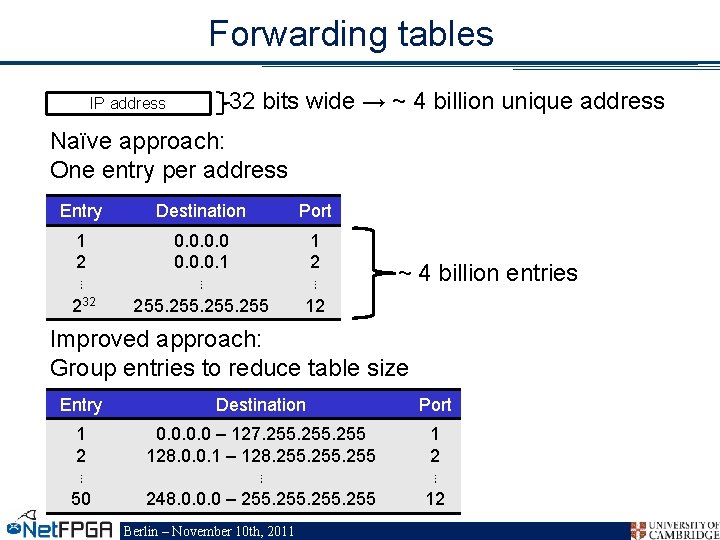

Forwarding tables
IP address 32 bits wide → ~4 billion unique addresses

Naïve approach: one entry per address (~4 billion entries)

| Entry | Destination | Port |
| --- | --- | --- |
| 1 | 0.0.0.0 | 1 |
| 2 | 0.0.0.1 | 2 |
| ⋮ | ⋮ | ⋮ |
| 2^32 | 255.255.255.255 | 12 |

Improved approach: group entries to reduce table size

| Entry | Destination | Port |
| --- | --- | --- |
| 1 | 0.0.0.0 – 127.255.255.255 | 1 |
| 2 | 128.0.0.1 – 128.255.255.255 | 2 |
| ⋮ | ⋮ | ⋮ |
| 50 | 248.0.0.0 – 255.255.255.255 | 12 |
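
The grouping step can be illustrated with Python's standard `ipaddress` module, which can collapse a contiguous address range into prefixes. This is only a sketch: the two ranges are taken from the grouped table, while the `GROUPED` dictionary and the `lookup` helper are hypothetical names introduced for the example.

```python
import ipaddress

TOTAL_ADDRESSES = 2 ** 32  # one entry per address in the naive table


def summarize(first: str, last: str) -> list:
    """Collapse an inclusive address range into the prefixes that cover it."""
    return list(ipaddress.summarize_address_range(
        ipaddress.IPv4Address(first), ipaddress.IPv4Address(last)))


# Two of the grouped entries: (first address, last address) -> output port.
GROUPED = {
    ("0.0.0.0", "127.255.255.255"): 1,
    ("248.0.0.0", "255.255.255.255"): 12,
}


def lookup(address: str):
    """Return the port of the range containing the address, or None."""
    addr = ipaddress.IPv4Address(address)
    for (first, last), port in GROUPED.items():
        if ipaddress.IPv4Address(first) <= addr <= ipaddress.IPv4Address(last):
            return port
    return None


print(TOTAL_ADDRESSES)
print(summarize("0.0.0.0", "127.255.255.255"))
print(lookup("250.1.2.3"))
```

A range that aligns with a power-of-two boundary collapses to a single prefix, which is why a handful of entries can stand in for billions of per-address rows.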

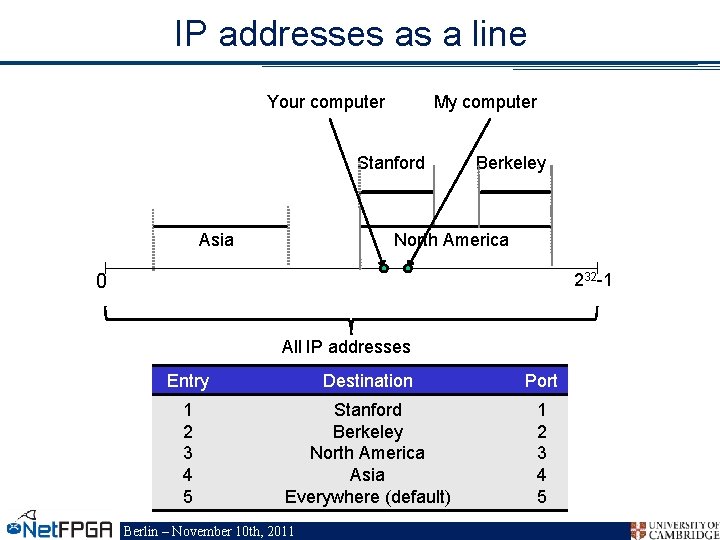

IP addresses as a line
Diagram: all IP addresses drawn as a line from 0 to 2^32-1, with regions labeled Your computer, My computer, Stanford, Berkeley, North America, Asia

| Entry | Destination | Port |
| --- | --- | --- |
| 1 | Stanford | 1 |
| 2 | Berkeley | 2 |
| 3 | North America | 3 |
| 4 | Asia | 4 |
| 5 | Everywhere (default) | 5 |

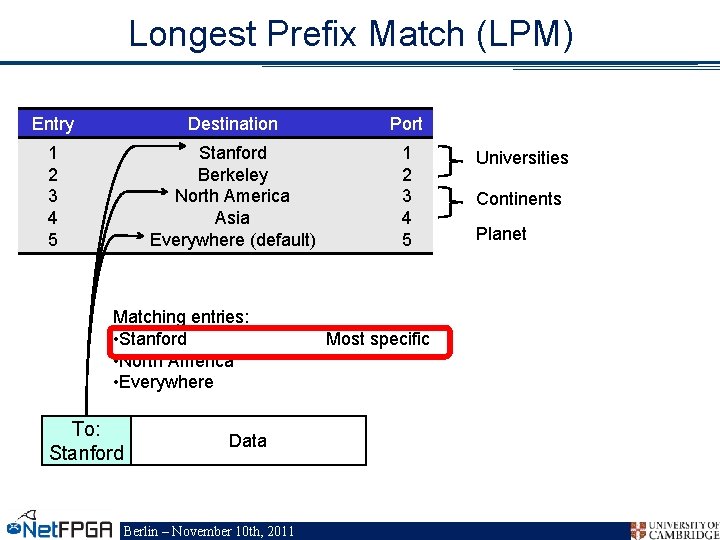

Longest Prefix Match (LPM)

| Entry | Destination | Port |
| --- | --- | --- |
| 1 | Stanford | 1 |
| 2 | Berkeley | 2 |
| 3 | North America | 3 |
| 4 | Asia | 4 |
| 5 | Everywhere (default) | 5 |

Packet: To: Stanford, Data
Matching entries:
• Stanford
• North America
• Everywhere
Specificity scale, most specific first: Universities, Continents, Planet

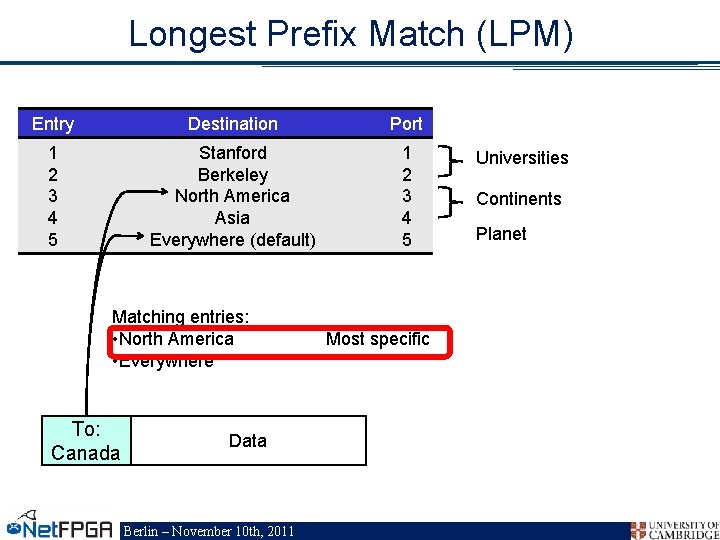

Longest Prefix Match (LPM)

| Entry | Destination | Port |
| --- | --- | --- |
| 1 | Stanford | 1 |
| 2 | Berkeley | 2 |
| 3 | North America | 3 |
| 4 | Asia | 4 |
| 5 | Everywhere (default) | 5 |

Packet: To: Canada, Data
Matching entries:
• North America
• Everywhere
Specificity scale, most specific first: Universities, Continents, Planet

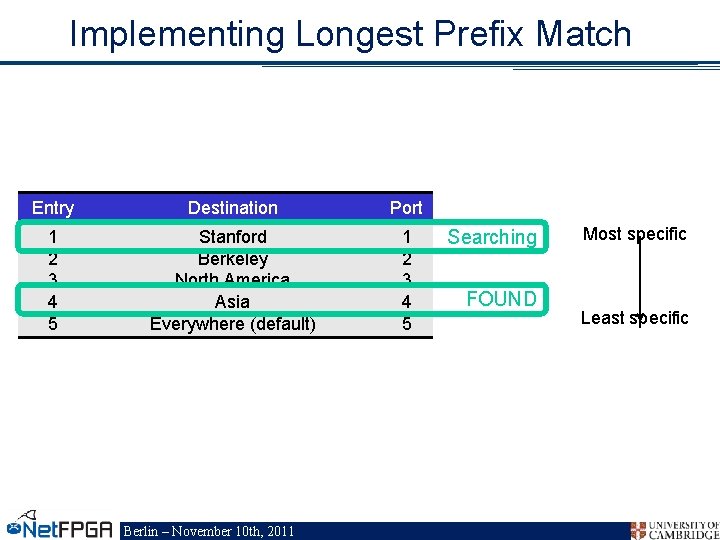

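Implementing Longest Prefix Match

| Entry | Destination | Port |
| --- | --- | --- |
| 1 | Stanford | 1 |
| 2 | Berkeley | 2 |
| 3 | North America | 3 |
| 4 | Asia | 4 |
| 5 | Everywhere (default) | 5 |

Diagram: entries are ordered from most specific (top) to least specific (bottom); searching proceeds from the top until a matching entry is FOUND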
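
The ordered search on this slide can be sketched in a few lines of Python. This is a software illustration under stated assumptions, not the NetFPGA lookup logic: the class name `ForwardingTable` is hypothetical, the prefixes are invented stand-ins for "university", "continent" and "everywhere", and a linear scan replaces whatever lookup structure the hardware uses.

```python
import ipaddress


class ForwardingTable:
    """Longest prefix match by scanning entries from most to least specific."""

    def __init__(self):
        self._entries = []  # list of (network, port)

    def add(self, prefix: str, port: int) -> None:
        self._entries.append((ipaddress.ip_network(prefix), port))
        # Longest prefixes first, so the first hit is the most specific one.
        self._entries.sort(key=lambda entry: entry[0].prefixlen, reverse=True)

    def lookup(self, address: str):
        addr = ipaddress.ip_address(address)
        for network, port in self._entries:
            if addr in network:
                return port
        return None  # no default route installed


table = ForwardingTable()
table.add("0.0.0.0/0", 5)     # "Everywhere (default)"
table.add("10.1.0.0/16", 3)   # illustrative "continent" prefix
table.add("10.1.2.0/24", 1)   # illustrative "university" prefix

print(table.lookup("10.1.2.7"))   # matches all three; the /24 is chosen
print(table.lookup("10.1.9.9"))   # matches the /16 and the default
print(table.lookup("8.8.8.8"))    # only the default matches
```

Keeping the table sorted at insert time means the lookup itself never has to compare prefix lengths, which mirrors the "first match from the top" picture on the slide.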

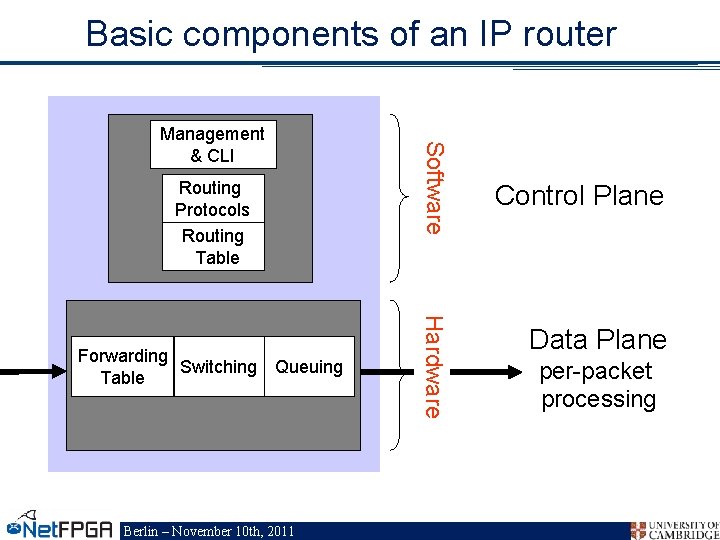

Basic components of an IP router
• Software, control plane: Management & CLI, Routing Protocols, Routing Table
• Hardware, data plane (per-packet processing): Forwarding Table, Switching, Queuing

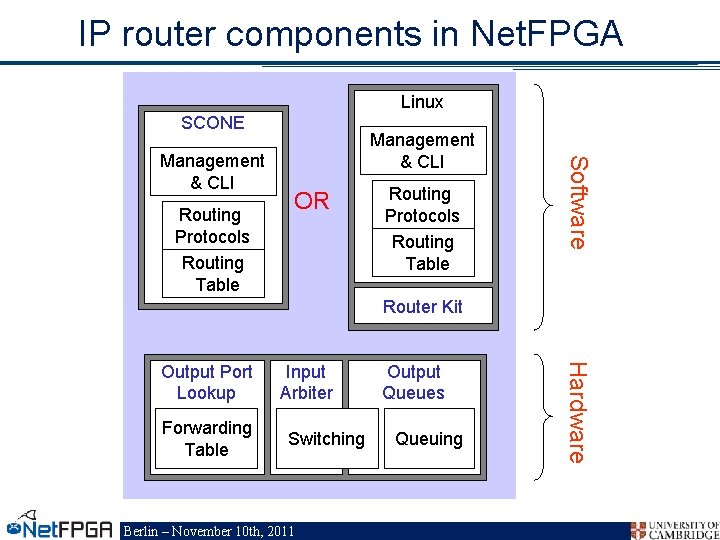

IP router components in NetFPGA
• Software: SCONE (Management & CLI, Routing Protocols, Routing Table) OR Linux (Routing Protocols, Routing Table) with the Router Kit
• Hardware: Output Port Lookup (Forwarding Table), Input Arbiter (Switching), Output Queues (Queuing)

Section IV: Example I

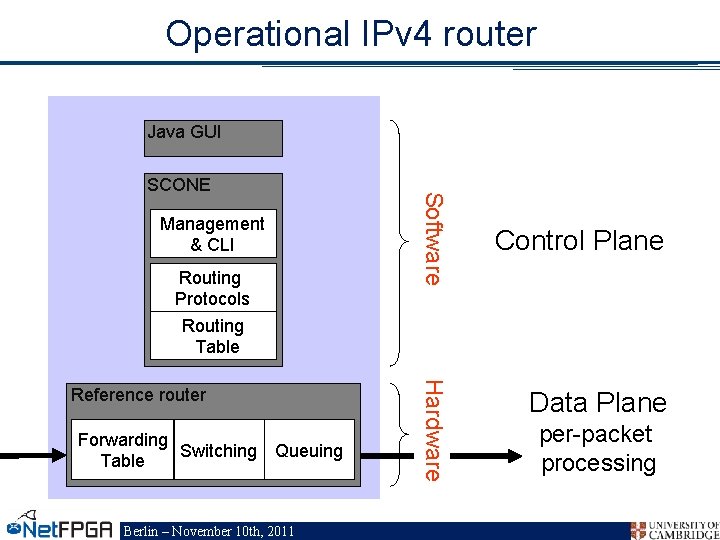

Operational IPv4 router
• Software, control plane: SCONE with Java GUI (Management & CLI, Routing Protocols, Routing Table)
• Hardware, data plane (per-packet processing): Reference router (Forwarding Table, Switching, Queuing)

Streaming video

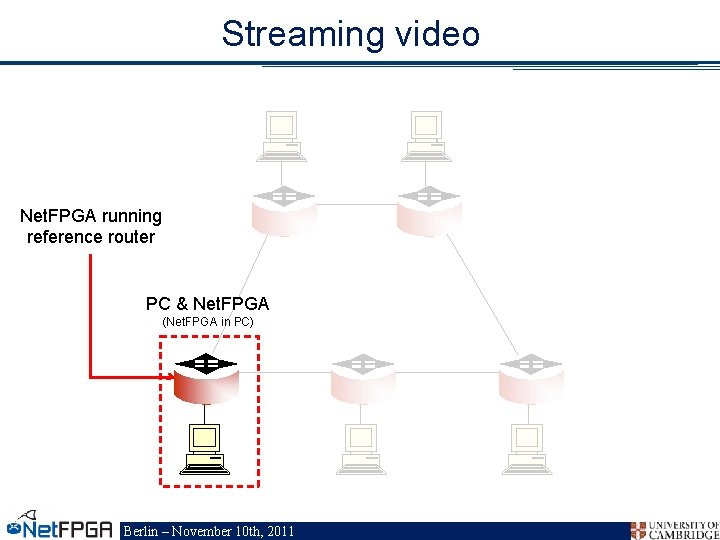

Streaming video
Diagram labels: NetFPGA running reference router; PC & NetFPGA (NetFPGA in PC)

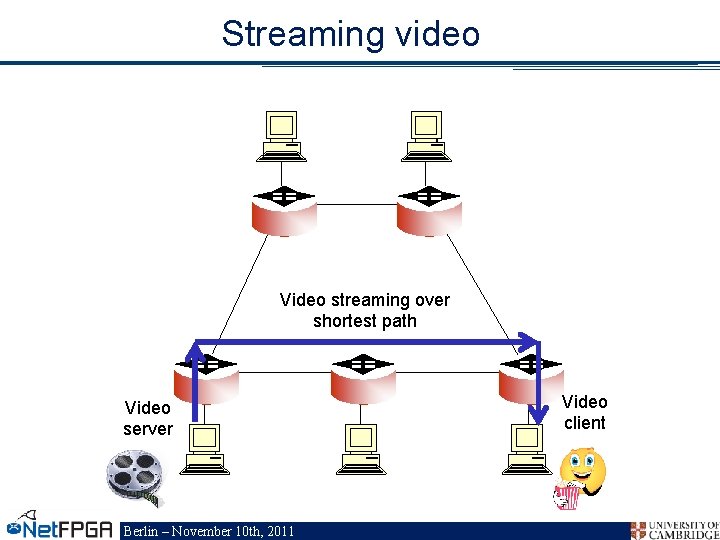

Streaming video
Video streaming over shortest path
Diagram labels: Video server, Video client

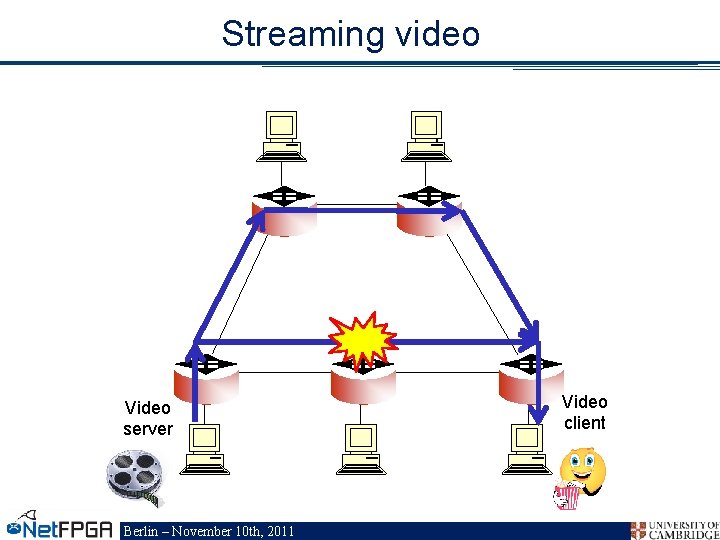

Streaming video
Diagram labels: Video server, Video client

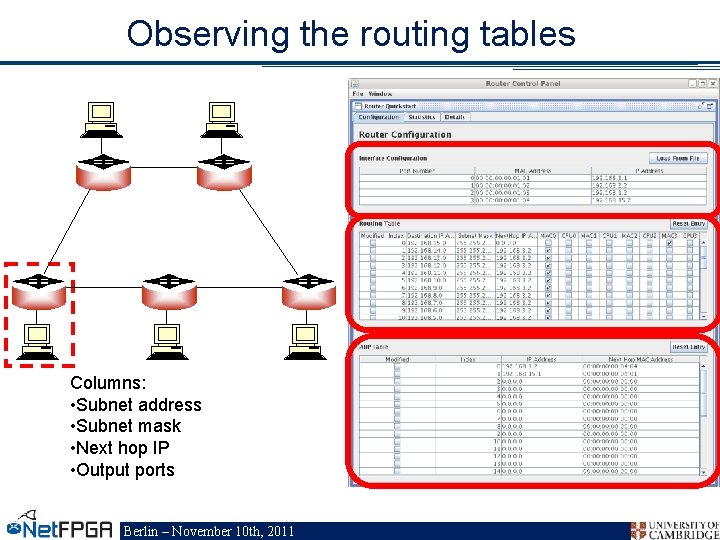

Observing the routing tables
Columns:
• Subnet address
• Subnet mask
• Next hop IP
• Output ports

Example 1
http://www.youtube.com/watch?v=xU5DM5Hzqes

Review Exercise 1
NetFPGA as IPv4 router:
• Reference hardware + SCONE software
• Routing protocol discovers topology
Example 1:
• Ring topology
• Traffic flows over shortest path
• Broken link: automatically route around failure
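
The behaviour seen in Example 1 (shortest path on a ring, then rerouting after a link break) can be mimicked with a small Python sketch. It assumes a five-router ring with invented names R1 to R5 and uses a plain breadth-first search in place of the routing protocol that the routers actually run, so it shows the outcome rather than the protocol.

```python
from collections import deque


def shortest_path(links, src, dst):
    """Breadth-first search over undirected links; returns a hop list or None."""
    neighbours = {}
    for a, b in links:
        neighbours.setdefault(a, set()).add(b)
        neighbours.setdefault(b, set()).add(a)
    previous = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:  # walk back to the source
                path.append(node)
                node = previous[node]
            return path[::-1]
        for nxt in sorted(neighbours.get(node, ())):
            if nxt not in previous:
                previous[nxt] = node
                queue.append(nxt)
    return None


# Hypothetical ring of five routers.
ring = [("R1", "R2"), ("R2", "R3"), ("R3", "R4"), ("R4", "R5"), ("R5", "R1")]
print(shortest_path(ring, "R1", "R3"))

# Break one link on the short side; traffic goes the long way round.
broken = [link for link in ring if link != ("R2", "R3")]
print(shortest_path(broken, "R1", "R3"))
```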

Section IV: Example II

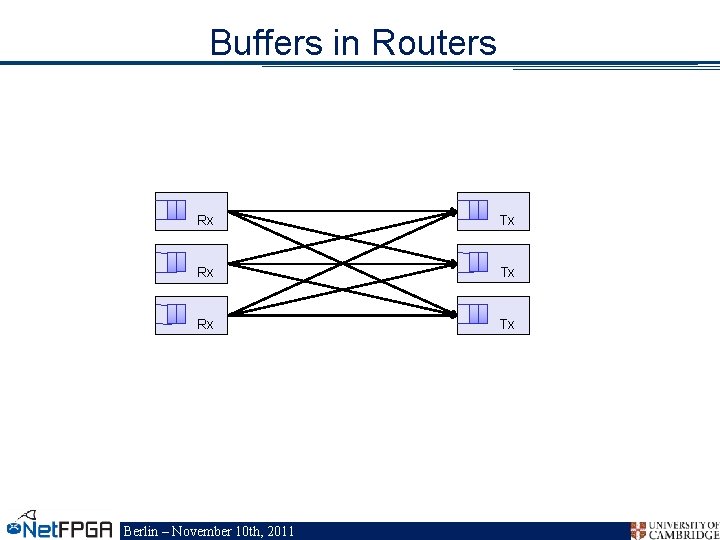

Buffers in Routers
• Internal Contention
• Congestion
• Pipelining

Buffers in Routers
Diagram labels: Rx, Tx

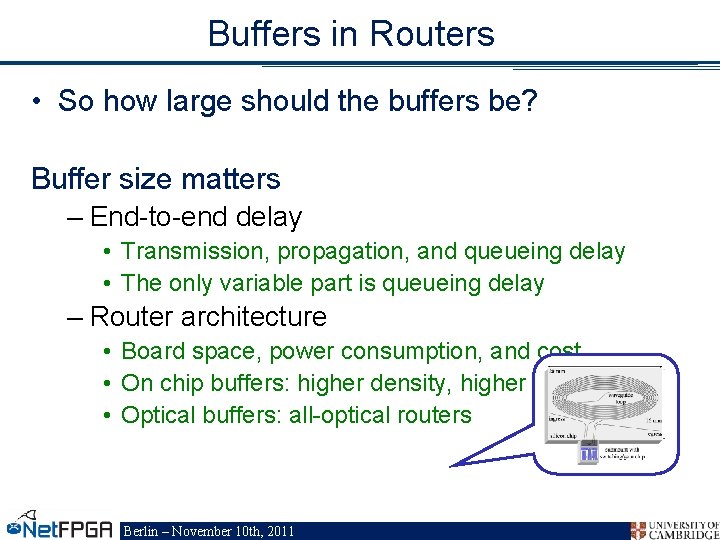

Buffers in Routers
• So how large should the buffers be?
Buffer size matters
  – End-to-end delay
    • Transmission, propagation, and queueing delay
    • The only variable part is queueing delay
  – Router architecture
    • Board space, power consumption, and cost
    • On chip buffers: higher density, higher capacity
    • Optical buffers: all-optical routers

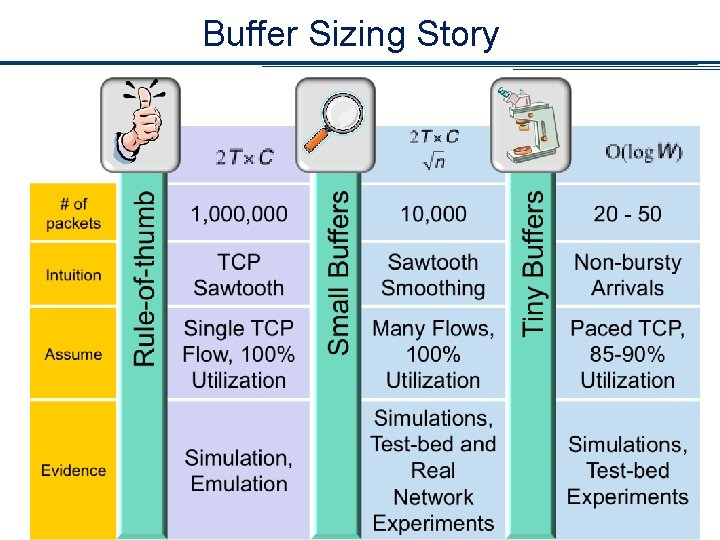

Buffer Sizing Story

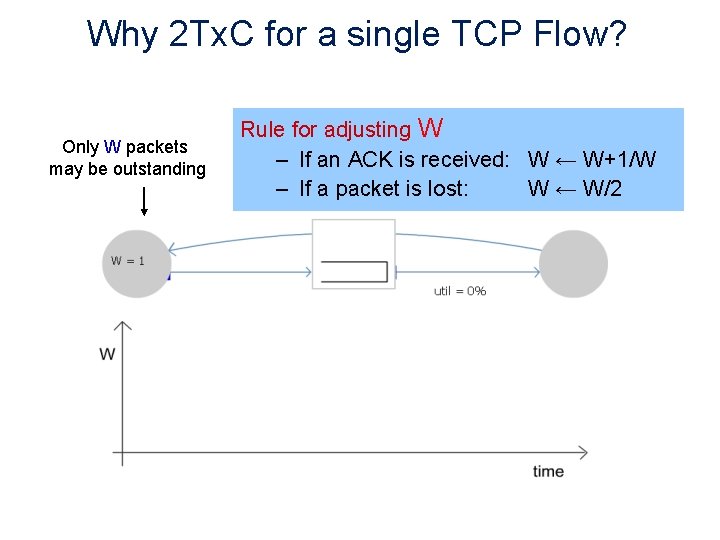

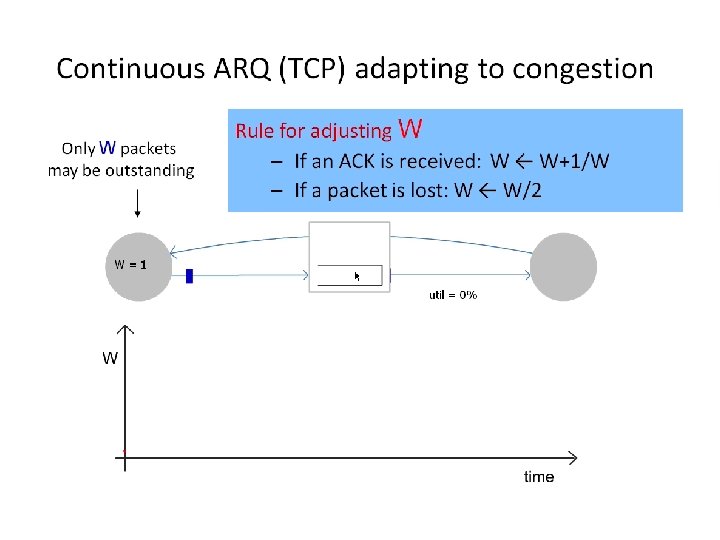

Why 2T×C for a single TCP Flow?
Only W packets may be outstanding
Rule for adjusting W
  – If an ACK is received: W ← W+1/W
  – If a packet is lost: W ← W/2
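
The window rule can be written out directly, together with the 2T×C product from the slide title. This is a sketch with assumptions: the slide does not define T or C, so the code reads 2T as the round-trip propagation time and C as the bottleneck link capacity, and the function names are invented for the example.

```python
def aimd_step(window: float, event: str) -> float:
    """Apply the slide's rule: W <- W + 1/W on an ACK, W <- W/2 on a loss."""
    if event == "ack":
        return window + 1.0 / window
    if event == "loss":
        return window / 2.0
    raise ValueError(f"unknown event: {event!r}")


def window_after_acks(window: float, n_acks: int) -> float:
    """Window after n consecutive ACKs with no loss."""
    for _ in range(n_acks):
        window = aimd_step(window, "ack")
    return window


def rule_of_thumb_buffer_bits(round_trip_s: float, capacity_bps: float) -> float:
    """Buffer of 2T x C, with 2T taken as round-trip time (assumption)."""
    return round_trip_s * capacity_bps


# One window's worth of ACKs (about one round trip) adds roughly one packet.
print(window_after_acks(10.0, 10))
# A loss halves the window.
print(aimd_step(10.0, "loss"))
# Example figures (not from the slides): 100 ms round trip on a 1 Gbps link.
print(rule_of_thumb_buffer_bits(0.1, 1e9) / 8 / 1e6, "MB")
```

With those example figures the product comes to 12.5 MB, which gives a feel for why the rule of thumb is expensive on fast links.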

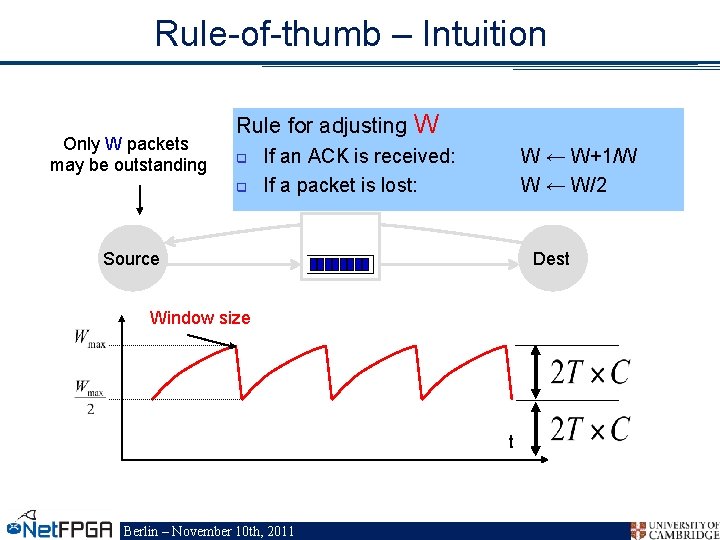

Rule-of-thumb – Intuition
Only W packets may be outstanding
Rule for adjusting W
  – If an ACK is received: W ← W+1/W
  – If a packet is lost: W ← W/2
Diagram labels: Source, Dest; plot of window size against t

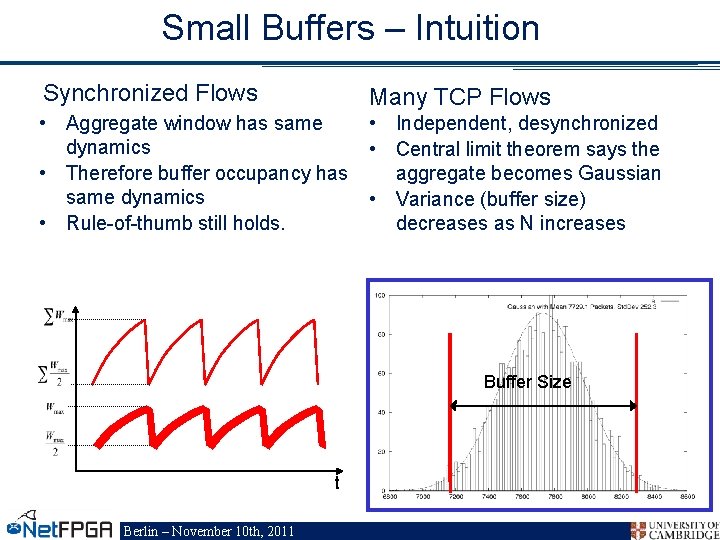

Small Buffers – Intuition
Synchronized Flows
• Aggregate window has same dynamics
• Therefore buffer occupancy has same dynamics
• Rule-of-thumb still holds.
Many TCP Flows
• Independent, desynchronized
• Central limit theorem says the aggregate becomes Gaussian
• Variance (buffer size) decreases as N increases
Plot labels: Buffer Size against t; Probability Distribution
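
The central-limit argument can be checked numerically with a small Monte Carlo sketch. The model here is an assumption made for illustration, not taken from the slides: each flow's window is drawn uniformly between half its peak and its peak (a sawtooth sampled at a random phase), flows are independent, and the total peak is held fixed as N grows. Under that model the spread of the aggregate shrinks roughly as 1/√N, in line with the 2T×C/√N sizing usually associated with this argument.

```python
import random
import statistics


def aggregate_window_std(n_flows: int, total_peak: float = 1000.0,
                         samples: int = 2000, seed: int = 1) -> float:
    """Standard deviation of the summed windows of n desynchronized flows."""
    rng = random.Random(seed)
    per_flow_peak = total_peak / n_flows  # keep the aggregate peak constant
    totals = []
    for _ in range(samples):
        total = sum(rng.uniform(per_flow_peak / 2, per_flow_peak)
                    for _ in range(n_flows))
        totals.append(total)
    return statistics.pstdev(totals)


single = aggregate_window_std(1)
many = aggregate_window_std(100)
# With 100 flows the spread should be roughly sqrt(100) = 10 times smaller.
print(round(single, 1), round(many, 1), round(single / many, 1))
```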

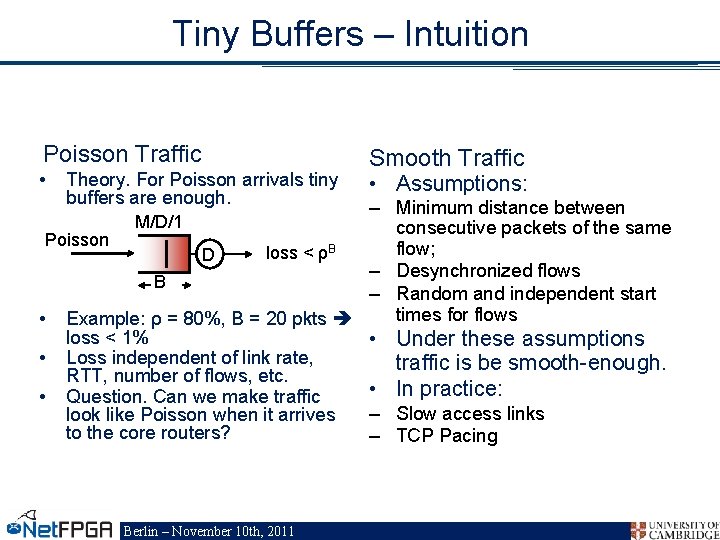

Tiny Buffers – Intuition
Poisson Traffic
• Theory. For Poisson arrivals tiny buffers are enough (M/D/1 queue with buffer B: loss < ρ^B).
• Example: ρ = 80%, B = 20 pkts, loss < 1%
• Loss independent of link rate, RTT, number of flows, etc.
• Question. Can we make traffic look like Poisson when it arrives to the core routers?
Smooth Traffic
• Assumptions:
  – Minimum distance between consecutive packets of the same flow
  – Desynchronized flows
  – Random and independent start times for flows
• Under these assumptions traffic is smooth enough.
• In practice:
  – Slow access links
  – TCP Pacing
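
The ρ^B bound is easy to evaluate, and doing so shows how quickly it falls with buffer size. The helper names below are invented for this sketch. For the slide's example the bound itself comes out at about 1.15%, so the quoted "loss < 1%" is best read as approximate, or as reflecting that the real loss sits below the bound.

```python
import math


def loss_bound(rho: float, buffer_pkts: int) -> float:
    """Upper bound rho**B on loss for load rho and a buffer of B packets."""
    if not 0.0 <= rho < 1.0:
        raise ValueError("load rho must be in [0, 1)")
    return rho ** buffer_pkts


def min_buffer_for(rho: float, target_loss: float) -> int:
    """Smallest B for which the bound rho**B drops to target_loss or below."""
    return math.ceil(math.log(target_loss) / math.log(rho))


print(f"{loss_bound(0.8, 20):.4f}")   # bound for the slide's example
print(min_buffer_for(0.8, 0.01))      # packets needed for the bound to reach 1%
```

Note that neither function takes link rate, RTT or flow count as input, which is the slide's point about the loss being independent of them.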

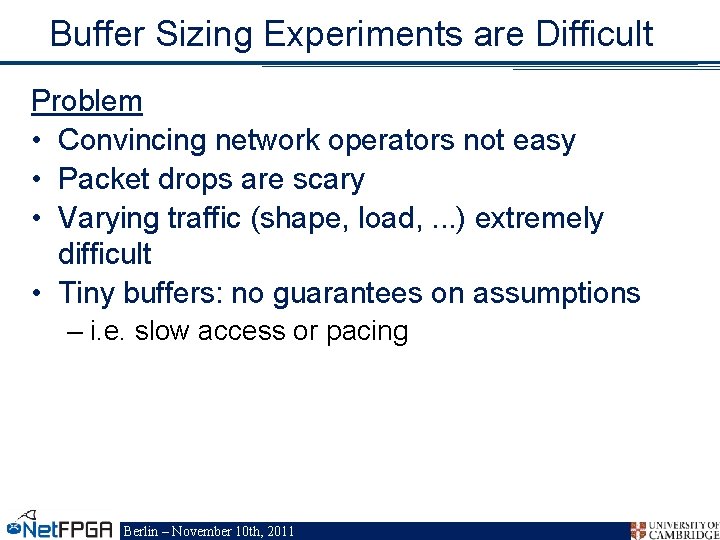

Buffer Sizing Experiments are Difficult
Problem
• Convincing network operators not easy
• Packet drops are scary
• Varying traffic (shape, load, ...) extremely difficult
• Tiny buffers: no guarantees on assumptions
  – i.e. slow access or pacing

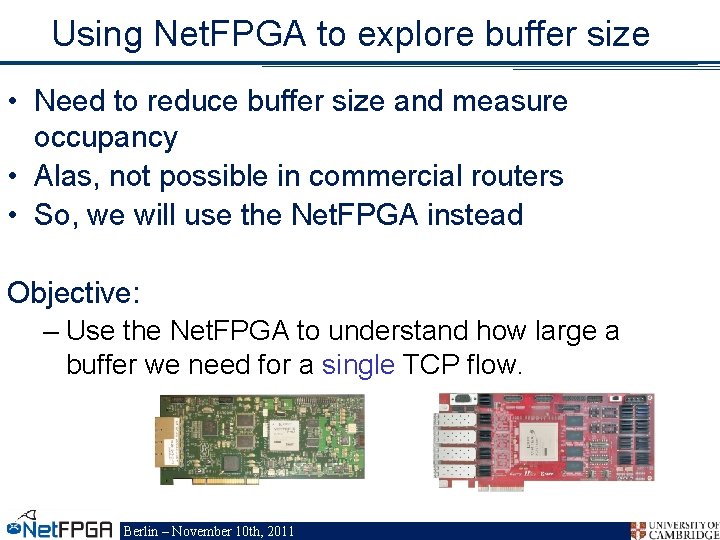

Using NetFPGA to explore buffer size
• Need to reduce buffer size and measure occupancy
• Alas, not possible in commercial routers
• So, we will use the NetFPGA instead
Objective:
  – Use the NetFPGA to understand how large a buffer we need for a single TCP flow.

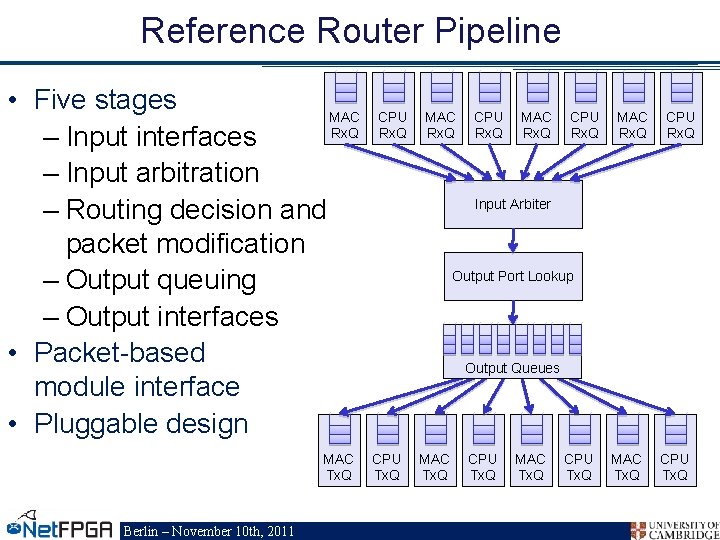

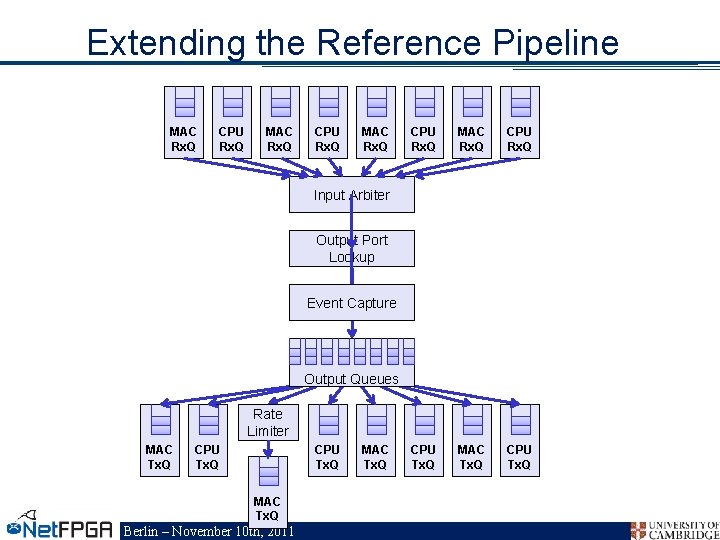

Reference Router Pipeline
• Five stages
  – Input interfaces
  – Input arbitration
  – Routing decision and packet modification
  – Output queuing
  – Output interfaces
• Packet-based module interface
• Pluggable design
Pipeline diagram: MAC RxQ and CPU RxQ inputs → Input Arbiter → Output Port Lookup → Output Queues → MAC TxQ and CPU TxQ outputs
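
The "packet-based module interface" and "pluggable design" points can be pictured with a software analogy. The sketch below is Python, not the Verilog used on the board, and every name in it (`FORWARDING`, `run_pipeline`, `with_stage_before`, the packet dictionary) is hypothetical. It only illustrates the idea that each stage consumes and produces a packet, so a new stage can be slotted in without touching the others.

```python
from typing import Any, Callable, Dict, List, Optional

Packet = Dict[str, Any]
Stage = Callable[[Packet], Optional[Packet]]

# Hypothetical forwarding table: destination name -> output port.
FORWARDING = {"D": 2, "E": 2, "F": 3}


def input_arbiter(pkt: Packet) -> Optional[Packet]:
    pkt["path"].append("input_arbiter")
    return pkt


def output_port_lookup(pkt: Packet) -> Optional[Packet]:
    pkt["path"].append("output_port_lookup")
    port = FORWARDING.get(pkt["dst"])
    if port is None:
        return None  # no route: the packet is dropped
    pkt["out_port"] = port
    return pkt


def output_queues(pkt: Packet) -> Optional[Packet]:
    pkt["path"].append("output_queues")
    return pkt


def run_pipeline(stages: List[Stage], pkt: Packet) -> Optional[Packet]:
    """Pass the packet through each stage; a stage returning None drops it."""
    for stage in stages:
        result = stage(pkt)
        if result is None:
            return None
        pkt = result
    return pkt


def with_stage_before(stages: List[Stage], new_stage: Stage,
                      before: Stage) -> List[Stage]:
    """Return a new pipeline with new_stage inserted ahead of `before`."""
    index = stages.index(before)
    return stages[:index] + [new_stage] + stages[index:]


REFERENCE = [input_arbiter, output_port_lookup, output_queues]
print(run_pipeline(REFERENCE, {"dst": "D", "path": []}))
```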

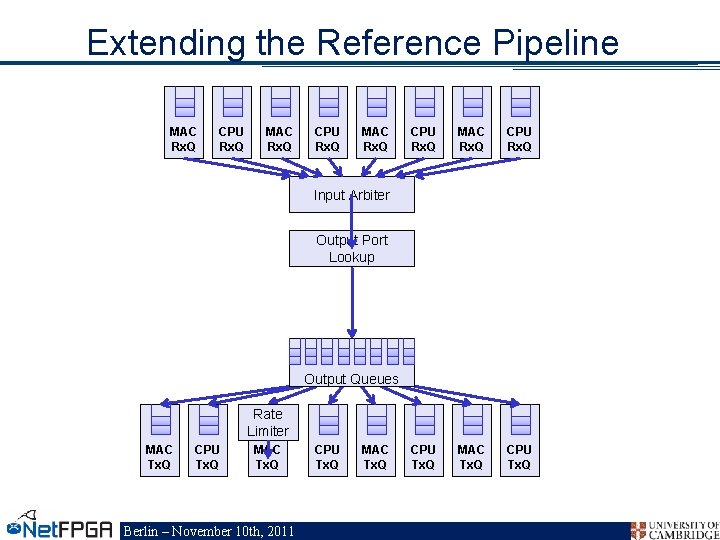

Extending the Reference Pipeline
Pipeline diagram: MAC RxQ and CPU RxQ inputs → Input Arbiter → Output Port Lookup → Output Queues → Rate Limiter → MAC TxQ and CPU TxQ outputs

Extending the Reference Pipeline
Pipeline diagram: MAC RxQ and CPU RxQ inputs → Input Arbiter → Output Port Lookup → Event Capture → Output Queues → Rate Limiter → MAC TxQ and CPU TxQ outputs

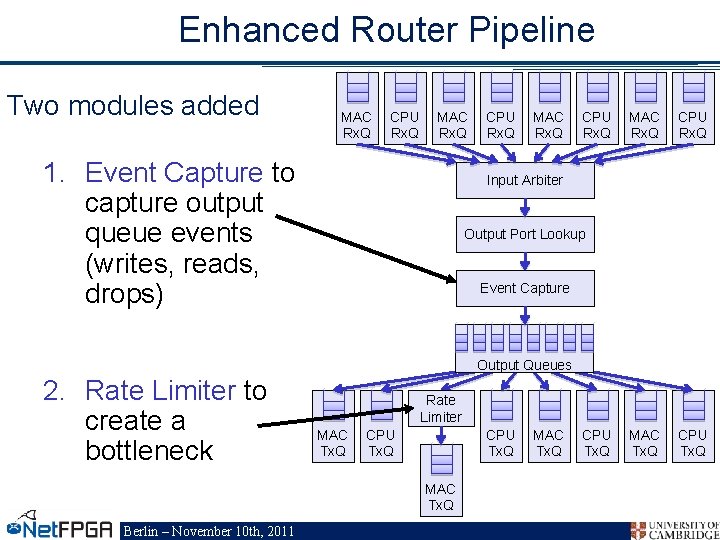

Enhanced Router Pipeline
Two modules added
1. Event Capture to capture output queue events (writes, reads, drops)
2. Rate Limiter to create a bottleneck
Pipeline diagram: MAC RxQ and CPU RxQ inputs → Input Arbiter → Output Port Lookup → Event Capture → Output Queues → Rate Limiter → MAC TxQ and CPU TxQ outputs
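
A toy software model can show what the two added modules contribute together: the rate limiter creates a bottleneck so the queue actually fills, and the event capture records every write, read and drop so occupancy can be reconstructed afterwards. The classes and the `simulate` helper below are hypothetical Python stand-ins for the hardware modules, with time counted in abstract ticks.

```python
from collections import deque


class EventCapturingQueue:
    """Bounded FIFO that logs (time, kind, occupancy) for each queue event."""

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.packets = deque()
        self.events = []

    def enqueue(self, now: int, pkt) -> bool:
        if len(self.packets) >= self.capacity:
            self.events.append((now, "drop", len(self.packets)))
            return False
        self.packets.append(pkt)
        self.events.append((now, "write", len(self.packets)))
        return True

    def dequeue(self, now: int):
        if not self.packets:
            return None
        pkt = self.packets.popleft()
        self.events.append((now, "read", len(self.packets)))
        return pkt


class RateLimiter:
    """Allow at most one packet to leave every `interval` ticks."""

    def __init__(self, interval: int):
        self.interval = interval
        self.next_allowed = 0

    def may_send(self, now: int) -> bool:
        if now >= self.next_allowed:
            self.next_allowed = now + self.interval
            return True
        return False


def simulate(n_arrivals: int, capacity: int, interval: int):
    """One arrival per tick into a rate-limited queue; returns the event log."""
    queue = EventCapturingQueue(capacity)
    limiter = RateLimiter(interval)
    for now in range(n_arrivals):
        queue.enqueue(now, now)
        if queue.packets and limiter.may_send(now):
            queue.dequeue(now)
    return queue.events


for event in simulate(n_arrivals=6, capacity=2, interval=3):
    print(event)
```

Shrinking `capacity` or lengthening `interval` changes the mix of drops and reads in the log, which is the kind of experiment the enhanced pipeline is built to support.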

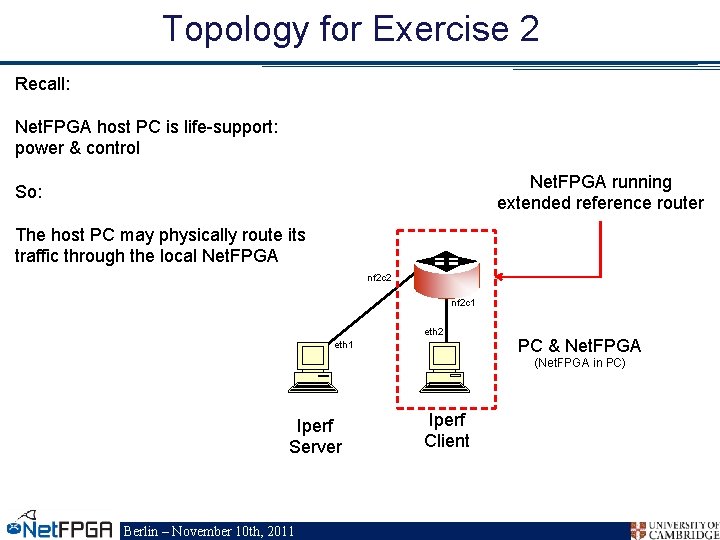

Topology for Exercise 2
Recall: NetFPGA host PC is life-support: power & control
So: The host PC may physically route its traffic through the local NetFPGA
Diagram labels: NetFPGA running extended reference router; PC & NetFPGA (NetFPGA in PC); interfaces nf2c2, nf2c1, eth2, eth1; Iperf Server; Iperf Client

Example 2

Review
NetFPGA as flexible platform:
• Reference hardware + SCONE software
• New modules: event capture and rate-limiting
Example 2: Client, Router, Server topology
  – Observed router with new modules
  – Started TCP transfer, looked at queue occupancy
  – Observed queue change in response to TCP ARQ

Section V: Community Contributions

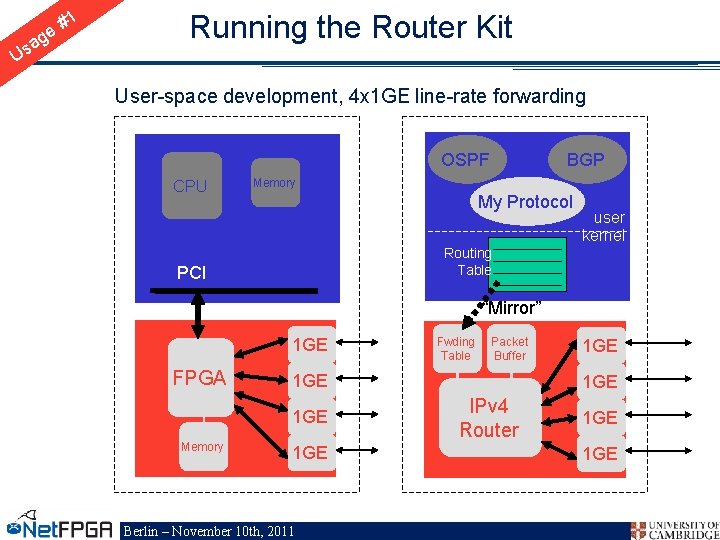

Usage #1: Running the Router Kit
User-space development, 4 x 1GE line-rate forwarding
Diagram labels: OSPF, BGP, My Protocol (user); Routing Table (kernel); "Mirror"; CPU, Memory, PCI; FPGA with IPv4 Router, Fwding Table, Packet Buffer; Memory; 1GE ports

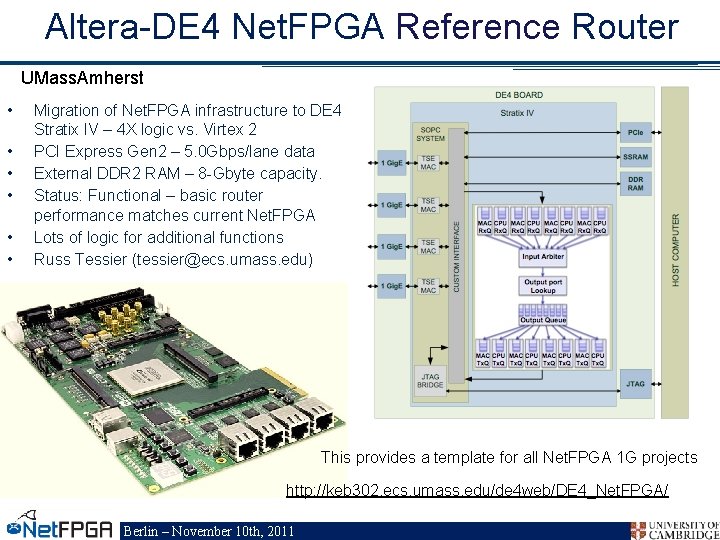

Altera-DE4 NetFPGA Reference Router (UMass Amherst)
• Migration of NetFPGA infrastructure to DE4
  – Stratix IV: 4X logic vs. Virtex 2
  – PCI Express Gen2: 5.0 Gbps/lane data
  – External DDR2 RAM: 8-Gbyte capacity
• Status: Functional; basic router performance matches current NetFPGA
• Lots of logic for additional functions
• This provides a template for all NetFPGA 1G projects
Russ Tessier (tessier@ecs.umass.edu)
http://keb302.ecs.umass.edu/de4web/DE4_NetFPGA/

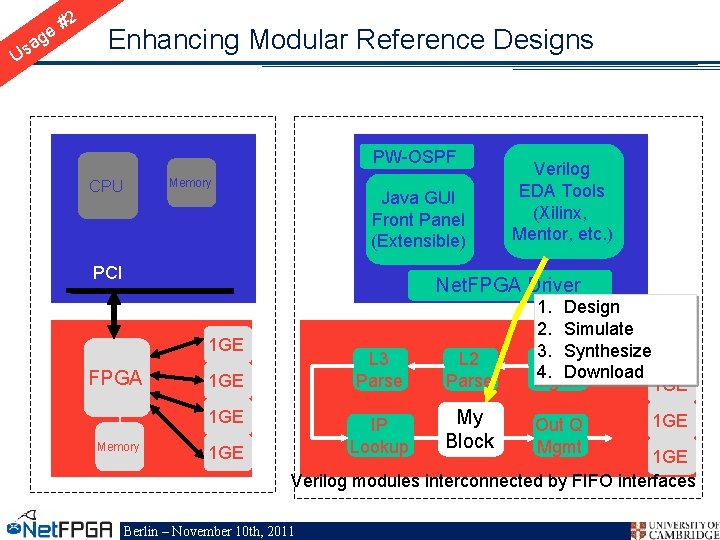

Usage #2: Enhancing Modular Reference Designs
Design flow: 1. Design 2. Simulate 3. Synthesize 4. Download
Verilog modules interconnected by FIFO interfaces
Diagram labels: PW-OSPF; Java GUI Front Panel (Extensible); NetFPGA Driver; CPU, Memory, PCI; Verilog EDA Tools (Xilinx, Mentor, etc.); FPGA modules In Q, L2 Parse, L3 Parse, IP Lookup, My Block, Out Q, Mgmt; Memory; 1GE ports

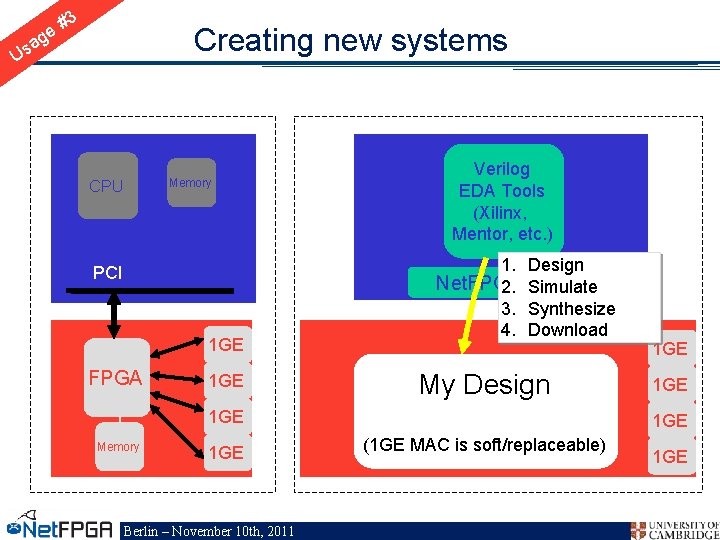

Usage #3: Creating new systems
Design flow: 1. Design 2. Simulate 3. Synthesize 4. Download
Diagram labels: CPU, Memory, PCI; NetFPGA Driver; Verilog EDA Tools (Xilinx, Mentor, etc.); FPGA with My Design; Memory; 1GE ports (1GE MAC is soft/replaceable)

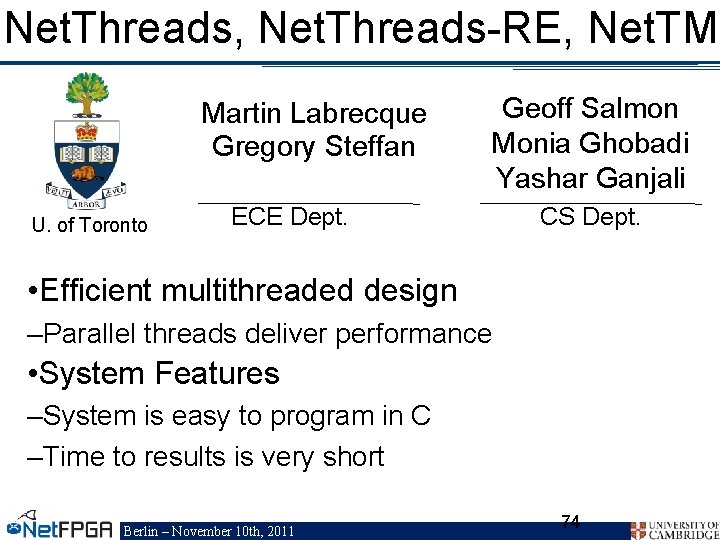

NetThreads, NetThreads-RE, NetTM
Martin Labrecque, Gregory Steffan (ECE Dept.); Geoff Salmon, Monia Ghobadi, Yashar Ganjali (CS Dept.); U. of Toronto
• Efficient multithreaded design
  – Parallel threads deliver performance
• System Features
  – System is easy to program in C
  – Time to results is very short

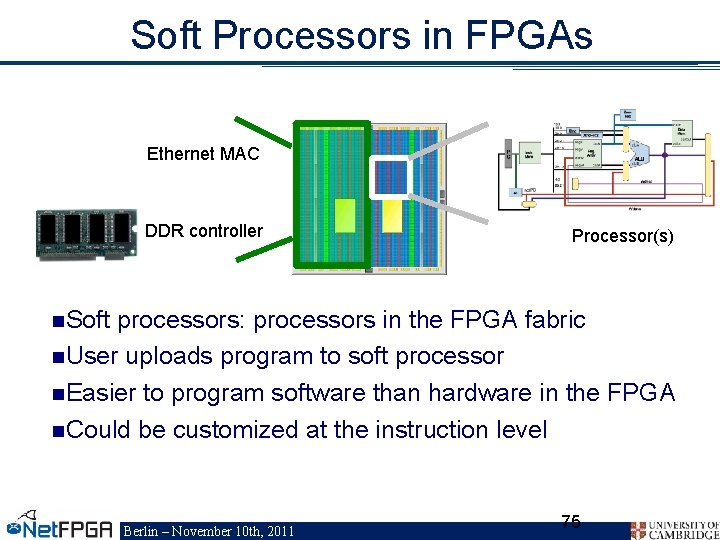

Soft Processors in FPGAs
• Soft processors: processors in the FPGA fabric
• User uploads program to soft processor
• Easier to program software than hardware in the FPGA
• Could be customized at the instruction level
Diagram labels: FPGA, Ethernet MAC, DDR controller, Processor(s)

NetThreads
Martin Labrecque, martin.L@eecg.utoronto.ca
NetThreads, NetThreads-RE & NetTM available with supporting software at:
http://www.netfpga.org/foswiki/bin/view/NetFPGA/OneGig/NetThreadsRE
http://netfpga.org/foswiki/bin/view/NetFPGA/OneGig/NetTM

Section VI: What to do next?

To get started with your project
1. Get familiar with hardware description language
2. Prepare for your project
  a) Learn NetFPGA by yourself
  b) Get a hands-on tutorial

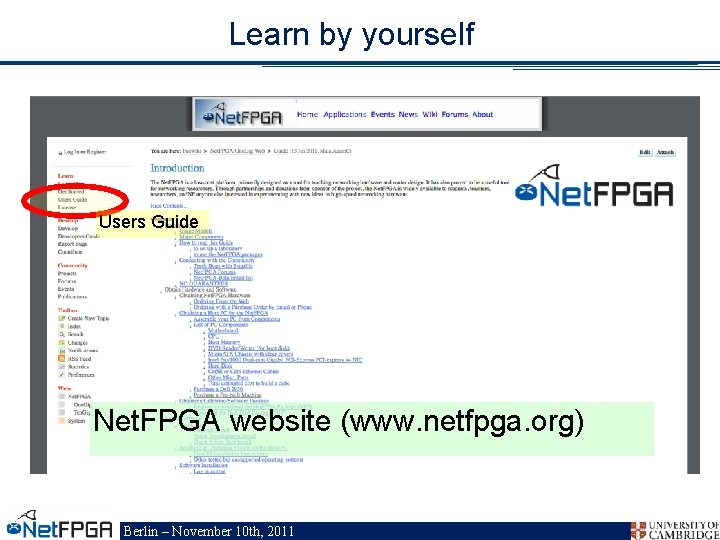

Learn by yourself
Users Guide
NetFPGA website (www.netfpga.org)

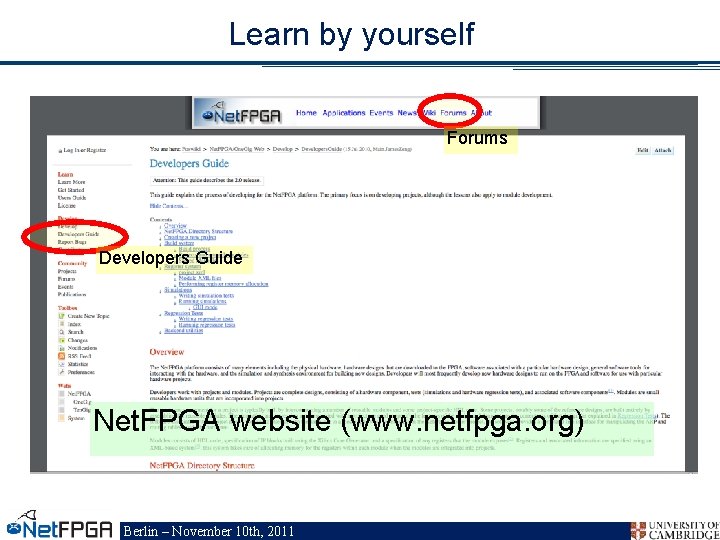

Learn by yourself
Forums
Developers Guide
NetFPGA website (www.netfpga.org)

Learn by yourself
Online tutor: coming soon!
Support for NetFPGA enhancements provided by

Get a hands-on tutorial
Cambridge
Stanford

Get a hands-on tutorial
Events
NetFPGA website (www.netfpga.org)

Section VII: Conclusion

Conclusions
• NetFPGA Provides
  – Open-source, hardware-accelerated packet processing
  – Modular interfaces arranged in reference pipeline
  – Extensible platform for packet processing
• NetFPGA Reference Code Provides
  – Large library of core packet processing functions
  – Scripts and GUIs for simulation and system operation
  – Set of projects for download from repository
• The NetFPGA Base Code
  – Well-defined functionality defined by regression tests
  – Function of the projects documented in the Wiki Guide

Acknowledgments
NetFPGA Team at Stanford University (Past and Present): Nick McKeown, Glen Gibb, Jad Naous, David Erickson, G. Adam Covington, John W. Lockwood, Jianying Luo, Brandon Heller, Paul Hartke, Neda Beheshti, Sara Bolouki, James Zeng, Jonathan Ellithorpe, Sachidanandan Sambandan, Eric Lo
NetFPGA Team at University of Cambridge (Past and Present): Andrew Moore, Shahbaz Muhammad, David Miller, Martin Zadnik
All Community members (including but not limited to): Paul Rodman, Kumar Sanghvi, Wojciech A. Koszek, Yashar Ganjali, Martin Labrecque, Jeff Shafer, Eric Keller, Tatsuya Yabe, Bilal Anwer
Kees Vissers, Michaela Blott, Shep Siegel

Special thanks to our Partners:
Ram Subramanian, Patrick Lysaght, Veena Kumar, Paul Hartke, Anna Acevedo
Xilinx University Program (XUP)
Other NetFPGA Tutorial Presented At: SIGMETRICS
See: http://NetFPGA.org/tutorials/

Thanks to our Sponsors:
• Support for the NetFPGA project has been provided by the following companies and institutions
Disclaimer: Any opinions, findings, conclusions, or recommendations expressed in these materials do not necessarily reflect the views of the National Science Foundation or of any other sponsors supporting this project.
