Needs Assessment and Program Evaluation

- Slides: 28


Needs Assessment is:

- A type of applied research. Data is collected for a purpose!
- Can be either a descriptive or an exploratory study.
- Can use either quantitative or qualitative methods.
- Can use a combination of qualitative and quantitative data. We have more confidence in our data if we reach similar findings using both methods.
- Most often involves collecting information from community participants or from existing data (indicators or case records).
- In some cases, subjects or participants can be involved in research design, data collection, and analysis.

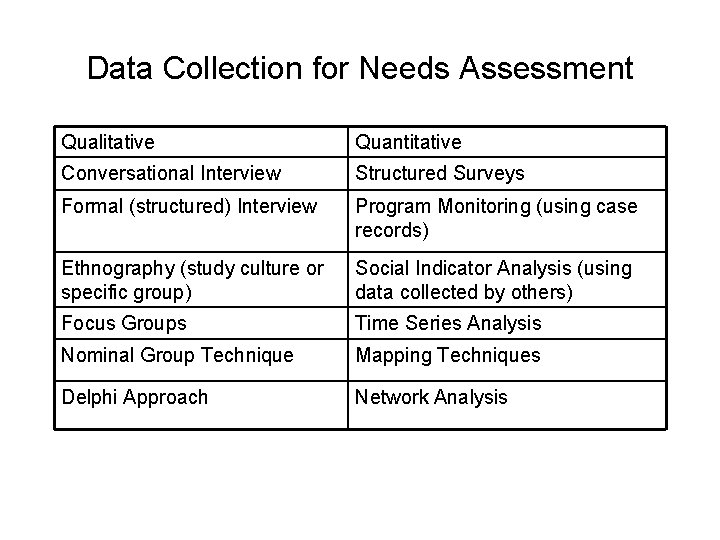

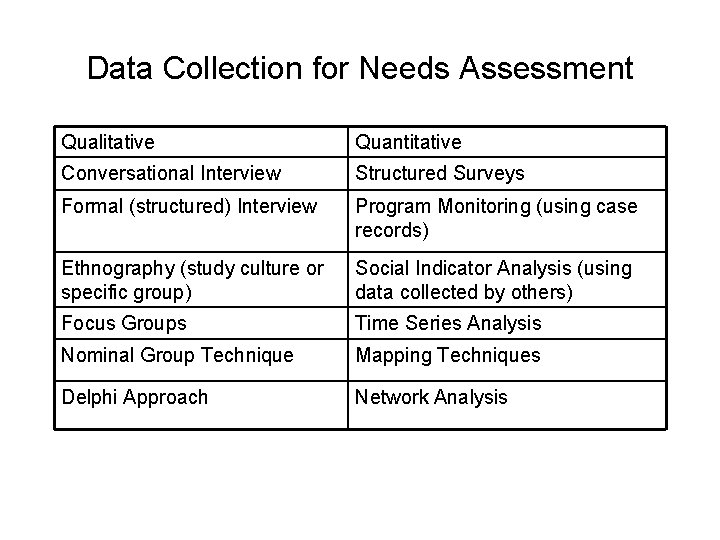

Data Collection for Needs Assessment

| Qualitative | Quantitative |
|---|---|
| Conversational Interview | Structured Surveys |
| Formal (structured) Interview | Program Monitoring (using case records) |
| Ethnography (study culture or specific group) | Social Indicator Analysis (using data collected by others) |
| Focus Groups | Time Series Analysis |
| Nominal Group Technique | Mapping Techniques |
| Delphi Approach | Network Analysis |

Needs Assessment is most commonly undertaken:

- To document the needs of community residents and plan interventions (community organization).
- To provide a justification for new programs or services.
- As a component of a grant proposal.
- To identify gaps in services.

Most commonly used techniques for empirical studies:

- Formal interviews with clients, workers, community residents, or key informants
- Surveys (respondents are usually asked to rank a list of needs)
- Focus groups
- Social indicator or case record analysis
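Survey rankings of needs have to be combined before they can guide planning. One simple option, sketched below in Python, is to average each need's rank across respondents. The function name `rank_needs`, the need categories, and the three responses are hypothetical, and mean rank is only one of several reasonable ways to combine rankings.

```python
from collections import defaultdict
from statistics import mean


def rank_needs(responses: list[dict[str, int]]) -> list[tuple[str, float]]:
    """Return needs ordered by mean rank (1 = most important).

    Each response maps a need to the rank that respondent gave it.
    A need a respondent did not rank is simply skipped for that respondent.
    """
    ranks: dict[str, list[int]] = defaultdict(list)
    for response in responses:
        for need, rank in response.items():
            ranks[need].append(rank)
    averaged = [(need, mean(values)) for need, values in ranks.items()]
    # A lower mean rank means respondents placed the need nearer the top.
    return sorted(averaged, key=lambda item: (item[1], item[0]))


# Hypothetical survey responses, for illustration only.
survey = [
    {"housing": 1, "child care": 2, "transportation": 3},
    {"housing": 2, "child care": 1, "transportation": 3},
    {"housing": 1, "child care": 3, "transportation": 2},
]

for need, avg in rank_needs(survey):
    print(f"{need}: mean rank {avg:.2f}")
```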

Other characteristics of needs assessment:

- No interventions.
- Not easily tied to theoretical literature.
- Few standardized methods for instrument development or data collection.
- Instruments and findings can be expected to vary substantially by setting and population group.
- Tests for reliability and validity are limited to pre-testing the instrument.
- Some features of ideal quantitative designs are not necessary here. For example, sampling is often convenience or purposive rather than random.

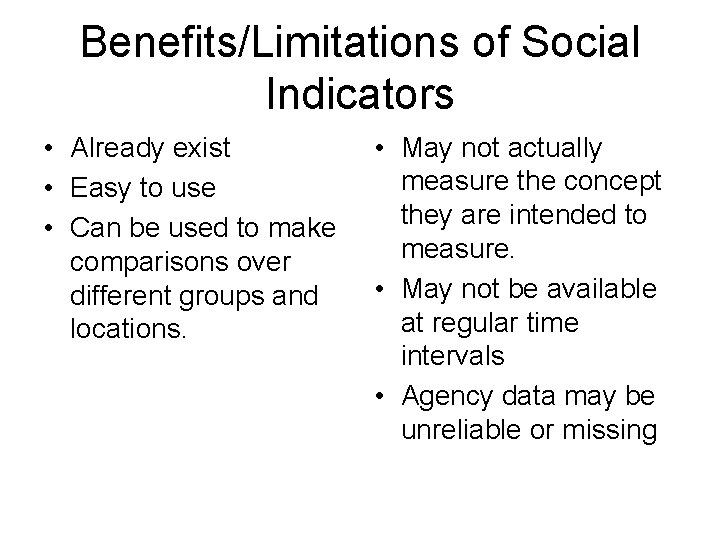

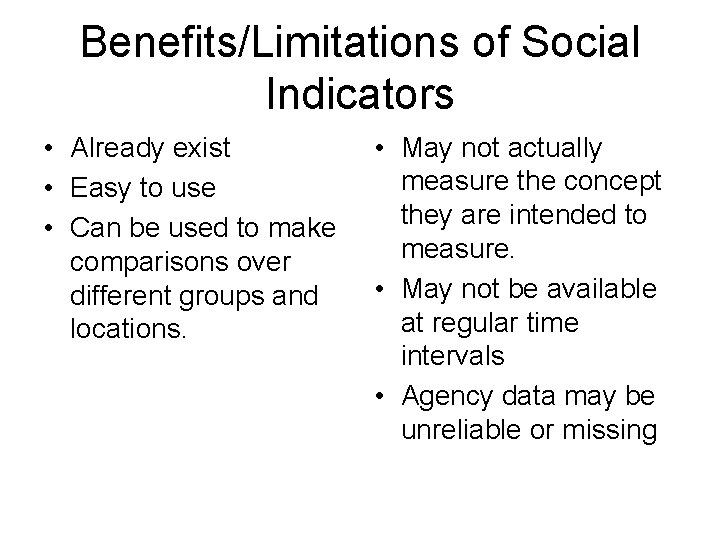

Benefits/Limitations of Social Indicators

Benefits:

- Already exist.
- Easy to use.
- Can be used to make comparisons across different groups and locations.

Limitations:

- May not actually measure the concept they are intended to measure.
- May not be available at regular time intervals.
- Agency data may be unreliable or missing.
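Social indicators are often raw counts, so comparing groups or locations usually means converting them to rates first. The minimal Python sketch below assumes hypothetical county names, counts, and populations; it converts counts to rates per 1,000 residents and keeps missing agency data as `None` instead of treating it as zero.

```python
from typing import Optional


def rate_per_1000(count: Optional[int], population: int) -> Optional[float]:
    """Convert a raw indicator count into a rate per 1,000 residents.

    Missing counts (or an unusable population) return None, so gaps in
    agency data stay visible instead of being silently treated as zero.
    """
    if count is None or population <= 0:
        return None
    return count * 1000 / population


# Hypothetical counts and populations, for illustration only.
counties = {
    "County A": (150, 50_000),
    "County B": (90, 20_000),
    "County C": (None, 35_000),  # agency did not report this year
}

for name, (count, population) in counties.items():
    rate = rate_per_1000(count, population)
    label = "missing" if rate is None else f"{rate:.1f} per 1,000"
    print(f"{name}: {label}")
```

In this made-up example County B has fewer cases than County A but the higher rate, which is exactly the difference raw counts would hide.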

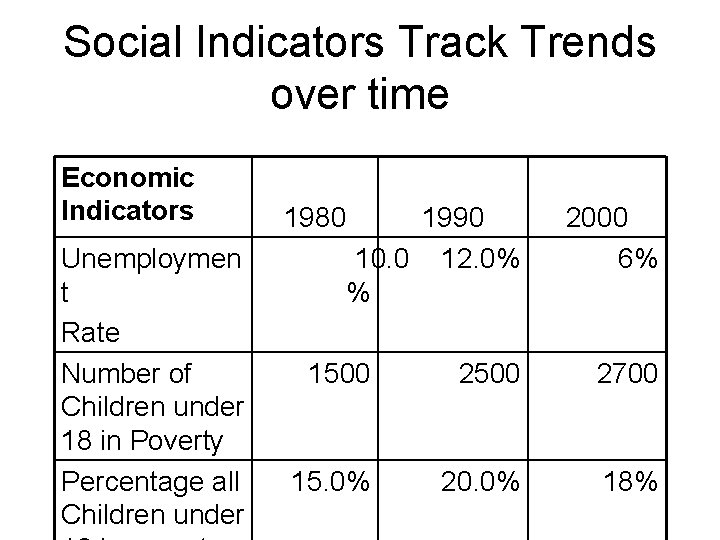

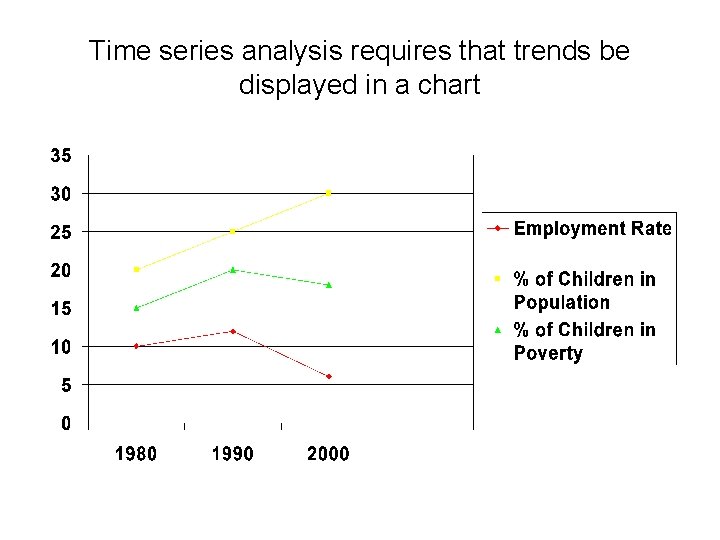

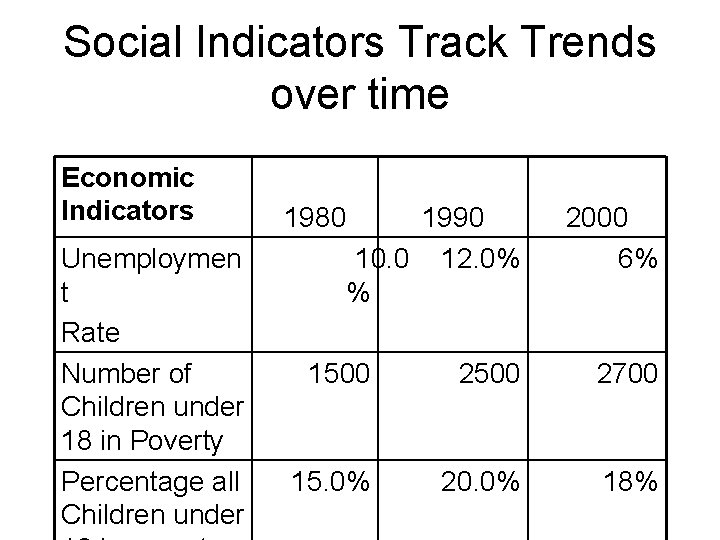

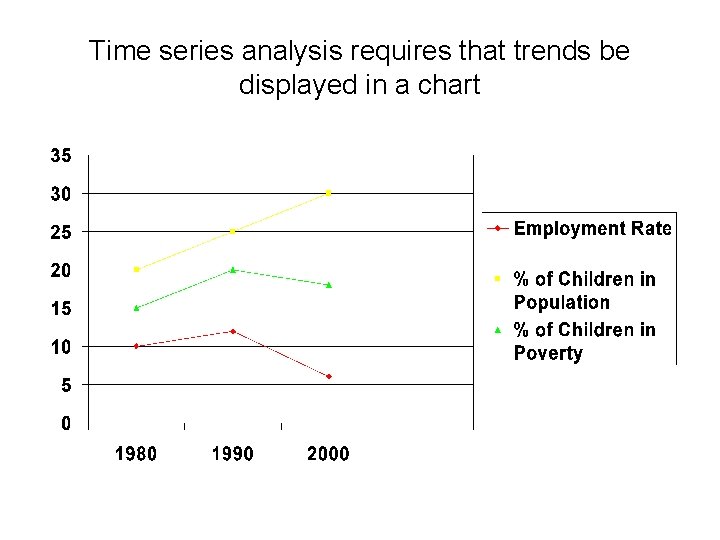

Social Indicators Track Trends over Time

| Economic Indicators | 1980 | 1990 | 2000 |
|---|---|---|---|
| Unemployment Rate | 10.0% | 12.0% | 6% |
| Number of Children under 18 in Poverty | 1500 | 2700 |  |
| Percentage of All Children under 18 in Poverty | 15.0% | 20.0% | 18% |

Time series analysis requires that trends be displayed in a chart
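A chart is the usual display, but the period-to-period changes it shows can also be computed directly. The Python sketch below uses our reading of the percentages on the social indicators slide (unemployment 10.0%, 12.0%, 6%; children in poverty 15.0%, 20.0%, 18% for 1980, 1990, 2000) and prints a rough text chart; the function names are hypothetical. Like any time series, it describes change without explaining its cause.

```python
YEARS = [1980, 1990, 2000]
# Percentages as read from the social indicators slide (our reading of it).
INDICATORS = {
    "Unemployment rate": [10.0, 12.0, 6.0],
    "Children under 18 in poverty": [15.0, 20.0, 18.0],
}


def period_changes(years, values):
    """Percentage-point change between each pair of consecutive observations."""
    changes = []
    for i in range(1, len(values)):
        label = f"{years[i - 1]}-{years[i]}"
        changes.append((label, round(values[i] - values[i - 1], 1)))
    return changes


def text_chart(years, values, points_per_mark=2):
    """Rough text bar chart: one '#' for every `points_per_mark` percentage points."""
    rows = []
    for year, value in zip(years, values):
        bar = "#" * int(value // points_per_mark)
        rows.append(f"{year} | {bar} {value:.1f}%")
    return "\n".join(rows)


for name, values in INDICATORS.items():
    print(name)
    print(text_chart(YEARS, values))
    print("change (percentage points):", period_changes(YEARS, values))
    print()
```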

Time series limitations:

- Does not establish cause and effect.
- Does not control for confounding variables.
- There may be unexplained effects.
- Data pertains to the general population rather than subgroups. Consequently, the sample cannot be divided into control and experimental groups.

Program evaluations measure: Program effectiveness, efficiency, quality, and participant satisfaction with the program.

Program evaluation can also measure: How or why a program is effective or is not effective

Program evaluation looks at a program or a component of a program. It is often used to assess whether a program has actually been implemented in the intended manner.

The program’s goals and objectives serve as the starting place for program evaluations.

- Objectives must:
  - Be measurable, time-limited, and contain an evaluation mechanism.
  - Be developed in relation to a specific program or intervention plan.
  - Specify processes and tasks to be completed.
  - Incorporate the program’s theory of action, describing how the program works and what it is expected to do (outcomes).
- To start an evaluation, the evaluator must find out what program participants identify as the goal (evaluability assessment).

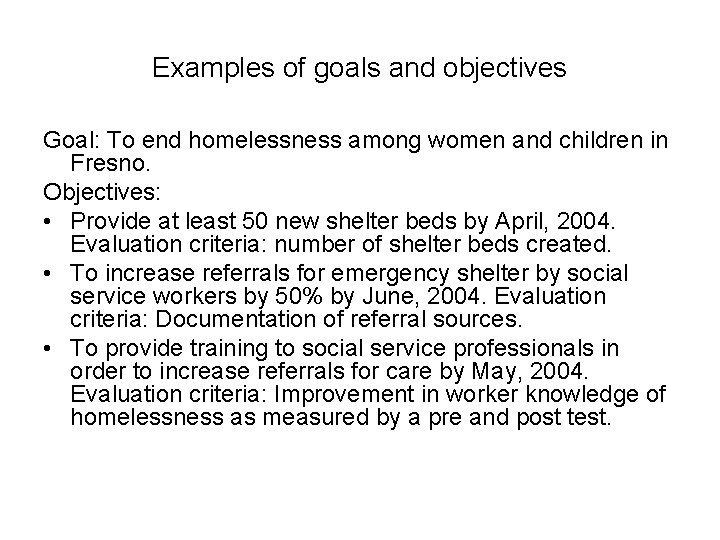

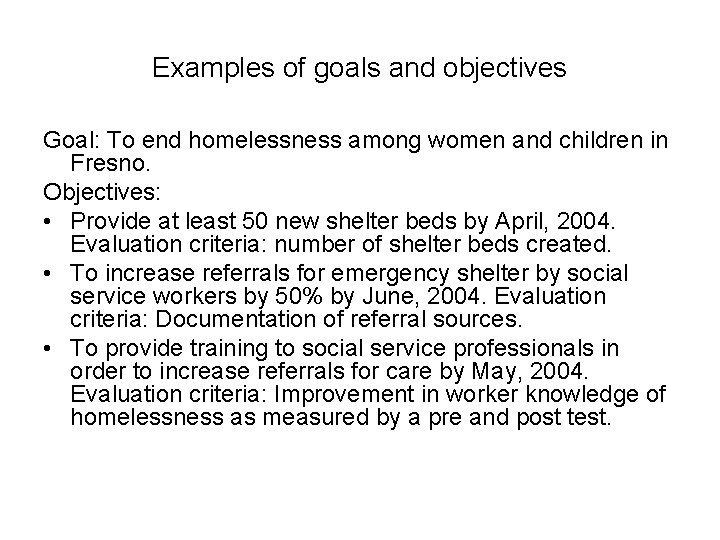

Examples of goals and objectives

Goal: To end homelessness among women and children in Fresno.

Objectives:

- To provide at least 50 new shelter beds by April 2004. Evaluation criteria: number of shelter beds created.
- To increase referrals for emergency shelter by social service workers by 50% by June 2004. Evaluation criteria: documentation of referral sources.
- To provide training to social service professionals in order to increase referrals for care by May 2004. Evaluation criteria: improvement in worker knowledge of homelessness as measured by a pre-test and post-test.
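Goal attainment evaluation asks whether each objective's target was met by its deadline. The Python sketch below encodes two of the Fresno objectives. The `Objective` class, the end-of-month deadlines, the baseline of 40 referrals, and the observed values are all hypothetical placeholders, not data from the program.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Objective:
    description: str
    target: float       # value that counts as achieving the objective
    deadline: date
    observed: float     # value produced by the evaluation criterion
    measured_on: date

    def attained(self) -> bool:
        """Achieved only if the target was reached on or before the deadline."""
        return self.observed >= self.target and self.measured_on <= self.deadline


# Hypothetical figures for illustration; no real program data.
beds = Objective(
    description="At least 50 new shelter beds",
    target=50,
    deadline=date(2004, 4, 30),
    observed=42,
    measured_on=date(2004, 4, 30),
)

baseline_referrals = 40  # assumed referral count before the program
referrals = Objective(
    description="Increase shelter referrals by 50%",
    target=baseline_referrals * 1.5,
    deadline=date(2004, 6, 30),
    observed=63,
    measured_on=date(2004, 6, 15),
)

for objective in (beds, referrals):
    status = "attained" if objective.attained() else "not attained"
    print(f"{objective.description}: {status}")
```

The training objective could be handled the same way, with the target set to a required improvement between pre-test and post-test scores.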

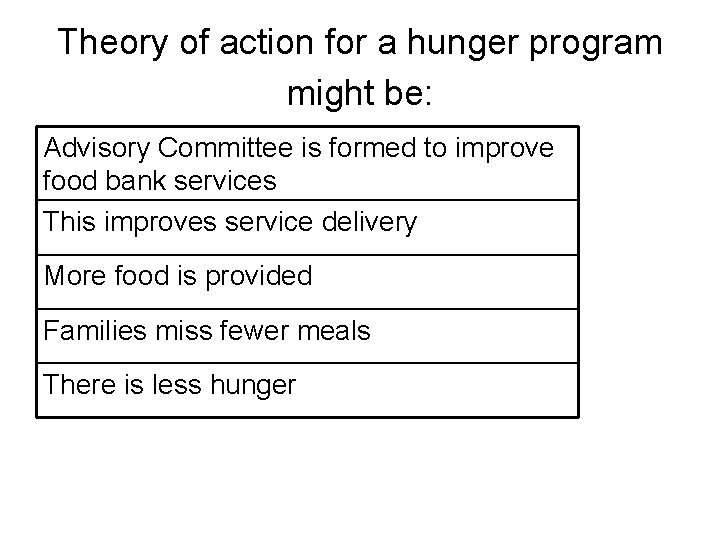

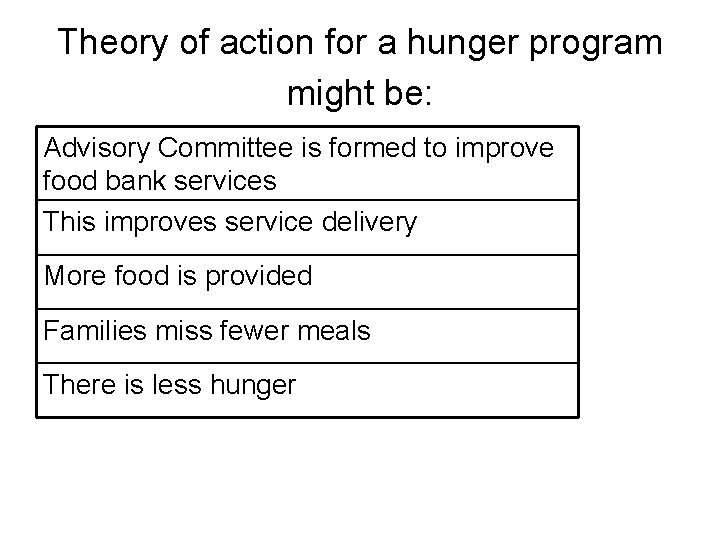

Theory of action for a hunger program might be: an advisory committee is formed to improve food bank services → this improves service delivery → more food is provided → families miss fewer meals → there is less hunger.
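Writing the theory of action down as an ordered chain makes it possible to ask where it breaks. In the hypothetical Python sketch below, each link carries a yes/no flag for whether an evaluation found evidence for it (the flags are invented for illustration). `first_broken_link` returns the earliest unsupported step, which is where a process evaluation would start looking for how or why the program fell short.

```python
# Hypothetical evidence flags: True means the evaluation found support for the step.
THEORY_OF_ACTION = [
    ("Advisory committee is formed to improve food bank services", True),
    ("Service delivery improves", True),
    ("More food is provided", False),
    ("Families miss fewer meals", False),
    ("There is less hunger", False),
]


def first_broken_link(chain):
    """Return the first step without supporting evidence, or None if every step holds."""
    for step, supported in chain:
        if not supported:
            return step
    return None


broken = first_broken_link(THEORY_OF_ACTION)
if broken is None:
    print("Every link in the theory of action is supported.")
else:
    print(f"The chain first breaks at: {broken}")
```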

Evaluations can measure process or outcomes:

- Qualitative methods are used to answer "how" and "why" questions (process).
- Quantitative methods are used to answer "what" questions: what outcomes were produced, and was the program successful, effective, or efficient?

Most common types:

- Outcome evaluation (quantitative; may or may not use control groups to measure effectiveness).
- Goal attainment (have objectives been achieved?).
- Process evaluation (qualitative; looks at how or why a program works or doesn’t work).
- Implementation analysis (mixed methods; was the program implemented in the manner intended?).
- Program monitoring (mixed methods; is the program meeting its goals? Conducted while the program is in progress).

Client satisfaction surveys are often used as one component of a program evaluation.

- They can provide valuable information about how clientele perceive the program and may suggest how the program can be changed to make it more effective or accessible.
- They also have methodological limitations.

Limitations include:

- It is difficult to define and measure “satisfaction.”
- Few standardized satisfaction instruments that have been tested for validity and reliability exist.
- Most surveys find that 80-90% of participants are satisfied with the program. Most researchers are skeptical that such high levels of satisfaction exist. Hence, most satisfaction surveys are believed to be unreliable.
- Since agencies want to believe their programs are good, the wording of survey questions may be biased.
- Clients who depend on the program for services or who fear retaliation may not provide accurate responses.

Problems with client satisfaction surveys can be addressed by:

- Pre-testing to ensure face validity and reliability.
- Asking respondents to indicate their satisfaction level with various components of the program.
- Ensuring that administration of the survey is separated from service delivery and that the confidentiality of clients/consumers is protected.
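Asking about components separately lets a weak part of the program show up even when overall ratings look high. The Python sketch below summarizes 1-5 ratings per component. The component names, the responses, and the rule that a 4 or 5 counts as "satisfied" are assumptions made for illustration.

```python
from statistics import mean

SATISFIED_AT = 4  # assumption: 4 or 5 on a 1-5 scale counts as "satisfied"


def component_summary(responses):
    """Per-component mean score, share of ratings at or above SATISFIED_AT, and n.

    `responses` is a list of dicts mapping a program component to a 1-5 rating.
    Components a respondent skipped are left out of that component's totals.
    """
    by_component = {}
    for response in responses:
        for component, score in response.items():
            by_component.setdefault(component, []).append(score)
    return {
        component: {
            "mean": round(mean(scores), 2),
            "share_satisfied": round(sum(s >= SATISFIED_AT for s in scores) / len(scores), 2),
            "n": len(scores),
        }
        for component, scores in by_component.items()
    }


# Hypothetical ratings, for illustration only.
responses = [
    {"intake": 5, "wait time": 2, "staff courtesy": 5},
    {"intake": 4, "wait time": 3, "staff courtesy": 4},
    {"intake": 5, "wait time": 2},
    {"intake": 4, "wait time": 4, "staff courtesy": 5},
]

for component, stats in component_summary(responses).items():
    print(
        f"{component}: mean {stats['mean']}, "
        f"satisfied {stats['share_satisfied']:.0%} (n={stats['n']})"
    )
```

Here "wait time" stands out as the weak component, something a single overall satisfaction question would blur.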

Process and Most Implementation Evaluations:

- Assume that the program is a “black box” with input, throughput, and output.
- Use some mixture of interviews, document analysis, observations, or semi-structured surveys.
- Gather information from a variety of organization participants: administrators, front-line staff, and clients.
- Also examine communication patterns, program policies, and the interaction among individuals, groups, programs, or organizations in the external environment.

Use the following criteria to determine the type of evaluation:

- Research question to be addressed.
- Amount of resources and time that can be allocated for research.
- Ethics (can you reasonably construct control groups or hold confounding variables constant?).
- Will the evaluation be conducted by an internal or external evaluator?
- Who is the audience for the evaluation?
- How will the data be used?
- Who will be involved in the evaluation?

Choice of sampling methods:

- Probability sampling: random sampling; systematic sampling; cluster sampling.
- Nonprobability sampling: purposive sampling; convenience sampling; quota sampling; snowball sampling.
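The probability methods above differ mainly in how units are drawn from the sampling frame. The Python sketch below contrasts simple random, systematic, and cluster sampling with a convenience sample. The client list, the three sites, and the function names are hypothetical, and a fixed seed is used only so the example is repeatable. Purposive, quota, and snowball sampling depend on the researcher's judgment or on referrals, so they are not coded here.

```python
import random


def simple_random_sample(frame, n, rng):
    """Every unit in the frame has the same chance of selection."""
    return rng.sample(frame, n)


def systematic_sample(frame, n, rng):
    """Every k-th unit after a random start, with k = len(frame) // n."""
    if not 0 < n <= len(frame):
        raise ValueError("n must be between 1 and the size of the frame")
    k = len(frame) // n
    start = rng.randrange(k)
    return frame[start::k][:n]


def cluster_sample(clusters, n_clusters, rng):
    """Randomly choose whole clusters (e.g. sites) and keep every unit in them."""
    chosen = rng.sample(sorted(clusters), n_clusters)
    return [unit for name in chosen for unit in clusters[name]]


def convenience_sample(frame, n):
    """Nonprobability: whoever is easiest to reach, here simply the first n listed."""
    return frame[:n]


rng = random.Random(2024)  # fixed seed so the example is repeatable
frame = [f"client-{i:03d}" for i in range(100)]
clusters = {
    "site A": frame[0:5],
    "site B": frame[5:10],
    "site C": frame[10:15],
}

print("simple random:", simple_random_sample(frame, 5, rng))
print("systematic:   ", systematic_sample(frame, 10, rng))
print("cluster:      ", cluster_sample(clusters, 2, rng))
print("convenience:  ", convenience_sample(frame, 5))
```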

Types of research design:

- Experimental design
- Quasi-experimental design (constructed control/experimental groups)
- One group only
- Time series

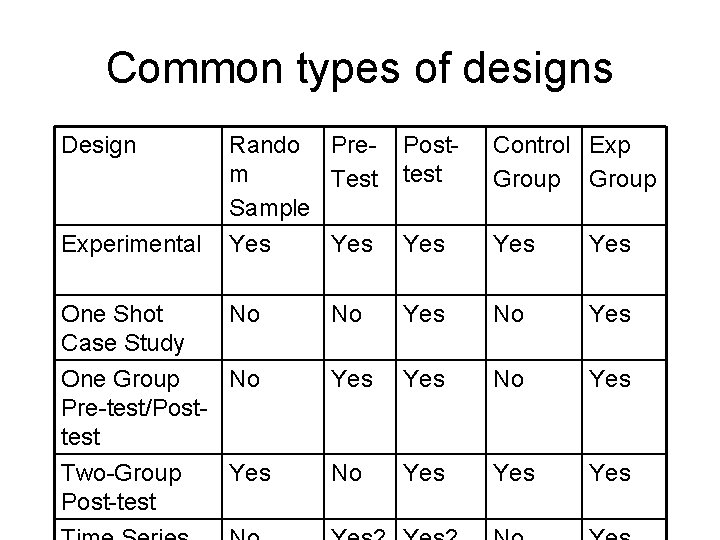

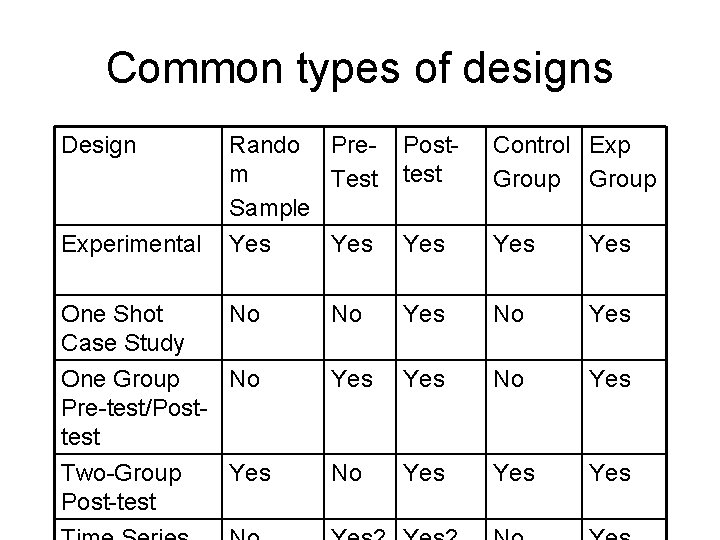

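Common types of designs

| Design | Random Sample | Pretest | Posttest | Control Group | Experimental Group |
|---|---|---|---|---|---|
| Experimental | Yes | Yes | Yes | Yes | Yes |
| One-Shot Case Study | No | No | Yes | No | Yes |
| One-Group Pretest/Posttest | No | Yes | Yes | No | Yes |
| Two-Group Posttest | No | No | Yes | Yes | Yes |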
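These designs lead to different effect estimates from the same kind of data. The Python sketch below uses invented test scores (four people per group, purely illustrative) to compare the one-group pretest/posttest change, the two-group posttest difference, and an experimental design that subtracts the control group's change. The function names are hypothetical; the one-shot case study has nothing to compare against, so it is left out.

```python
from statistics import mean


def one_group_change(pretest, posttest):
    """One-group pretest/posttest: mean change within the single group."""
    return mean(posttest) - mean(pretest)


def two_group_posttest_difference(experimental_post, control_post):
    """Two-group posttest only: difference between group means after the program."""
    return mean(experimental_post) - mean(control_post)


def experimental_estimate(exp_pre, exp_post, ctrl_pre, ctrl_post):
    """Pretest/posttest with a control group: the experimental group's change
    minus the change the control group shows without the program."""
    return one_group_change(exp_pre, exp_post) - one_group_change(ctrl_pre, ctrl_post)


# Invented scores (higher is better), four people per group.
exp_pre, exp_post = [10, 12, 11, 9], [15, 16, 14, 15]
ctrl_pre, ctrl_post = [10, 11, 12, 9], [12, 13, 13, 12]

print(f"one-group change:        {one_group_change(exp_pre, exp_post):.1f}")
print(f"two-group posttest diff: {two_group_posttest_difference(exp_post, ctrl_post):.1f}")
print(f"experimental estimate:   {experimental_estimate(exp_pre, exp_post, ctrl_pre, ctrl_post):.1f}")
```

With these numbers the one-group design credits the program with a 4.5-point gain, but the control group also improved by 2 points without the program, so the designs with a control group estimate 2.5 points. The arithmetic does not show randomization; random assignment is what makes the control group a credible comparison.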

Problems with designs:

- Internal validity: can possible alternative explanations be found for the cause-and-effect relationship between the independent and dependent variables? Are there confounding variables that influence the results?
- External validity: can the findings be generalized to other settings or people?

Common threats to internal and external validity:

- Internal validity:
  - Selection bias
  - History
  - Maturation
  - Testing
  - Instrumentation
  - Mortality
  - Statistical regression
  - Interaction among any of these factors
- External validity:
  - Hawthorne effect
  - Interaction of selection and intervention
  - Multiple treatment interaction
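Statistical regression is easy to underestimate, so a small simulation can help. The Python sketch below assumes arbitrary score distributions (true scores around 50, plus independent measurement error on each test), selects the lowest-scoring tenth on a pretest, and retests them with no intervention at all. Their average rises anyway, a gain that a one-group design would wrongly credit to the program. All parameters and the function name are hypothetical.

```python
import random
from statistics import fmean


def simulate_regression_to_mean(n=10_000, seed=1):
    """Return (overall pretest mean, selected group's pretest mean, same group's posttest mean).

    True scores are fixed per person; each test adds independent measurement
    error. No intervention happens between the two tests.
    """
    rng = random.Random(seed)
    true_scores = [rng.gauss(50, 10) for _ in range(n)]
    pretest = [t + rng.gauss(0, 8) for t in true_scores]
    posttest = [t + rng.gauss(0, 8) for t in true_scores]

    # Select the lowest-scoring 10% on the pretest, as a program targeting
    # the people "most in need" might.
    k = n // 10
    selected = sorted(range(n), key=lambda i: pretest[i])[:k]

    return (
        fmean(pretest),
        fmean(pretest[i] for i in selected),
        fmean(posttest[i] for i in selected),
    )


overall, selected_pre, selected_post = simulate_regression_to_mean()
print(f"overall pretest mean:  {overall:.1f}")
print(f"selected group, pre:   {selected_pre:.1f}")
print(f"selected group, post:  {selected_post:.1f}")
```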