Near Duplicate Image Detection: min-Hash and tf-idf weighting


- Slides: 29

Near Duplicate Image Detection: min-Hash and tf-idf weighting

Ondřej Chum, Center for Machine Perception, Czech Technical University in Prague

Co-authors: James Philbin and Andrew Zisserman

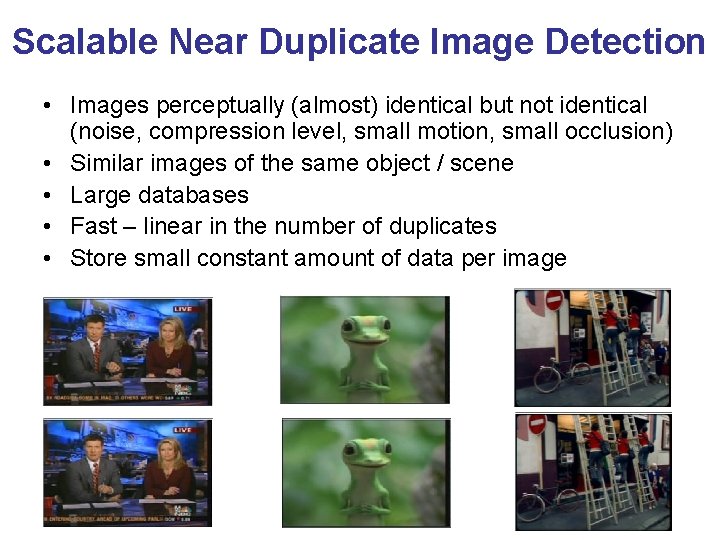

Outline

- Near duplicate detection and large databases (find all groups of near duplicate images in a database)
- min-Hash review
- Novel similarity measures
- Results on TRECVid 2006
- Results on the University of Kentucky database (Nister & Stewenius)
- Beyond near duplicates

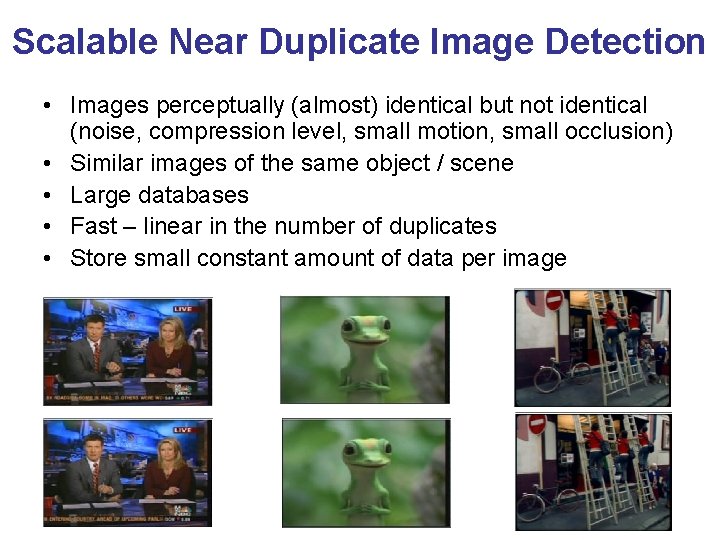

Scalable Near Duplicate Image Detection

- Images perceptually (almost) identical but not identical (noise, compression level, small motion, small occlusion)
- Similar images of the same object / scene
- Large databases
- Fast: linear in the number of duplicates
- Store a small constant amount of data per image

![Image Representation: SIFT descriptor (Lowe '04), feature detector, vector quantization](https://slidetodoc.com/presentation_image_h/f382034f27217ab59c2db2c6124892b8/image-4.jpg)

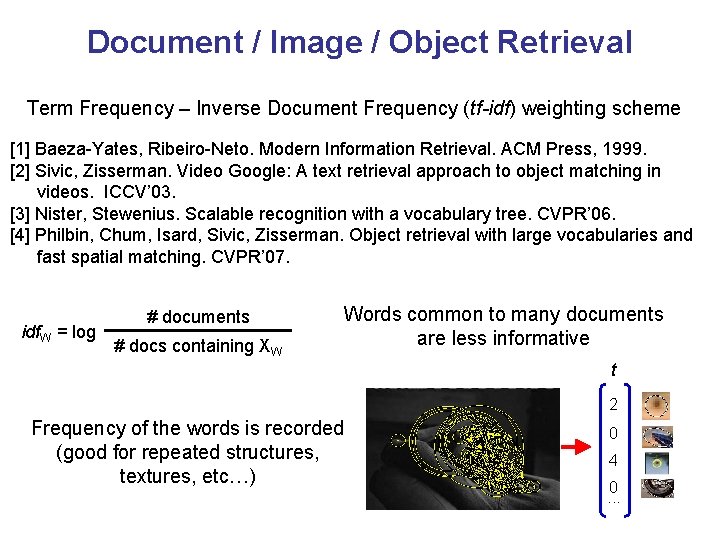

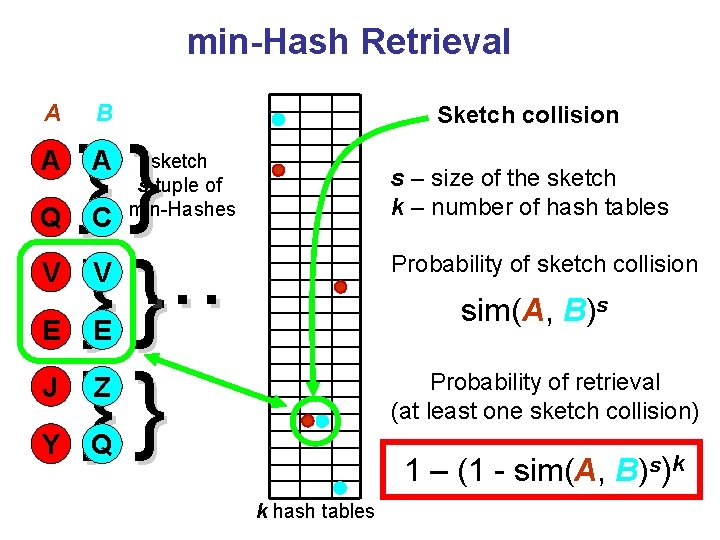

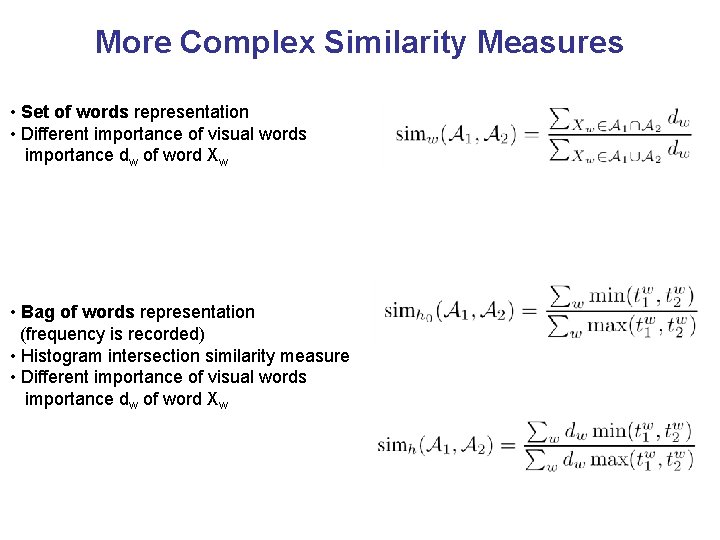

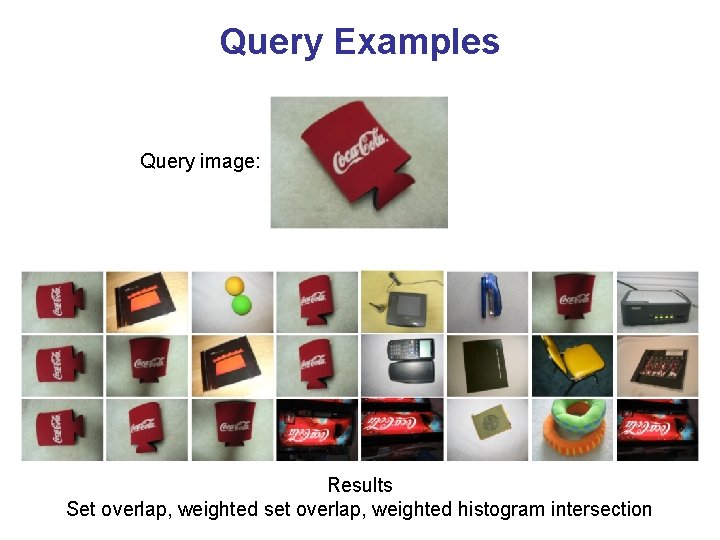

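Image Representation

- Feature detector
- SIFT descriptor [Lowe '04]
- Vector quantization against a visual vocabulary
- Resulting representation: a set of words (which visual words occur) or a bag of words (how often each visual word occurs, e.g. counts such as 1 2 0 0 1 4 0 ...)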
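The quantization step can be sketched in a few lines. This is only an illustration under stated assumptions: the toy 2-D descriptors stand in for 128-D SIFT descriptors, the four-word vocabulary is made up, and the helper names `quantize`, `bag_of_words`, and `set_of_words` are hypothetical rather than taken from the talk.

```python
import numpy as np

def quantize(descriptors: np.ndarray, vocabulary: np.ndarray) -> np.ndarray:
    """Assign each descriptor to the index of its nearest visual word."""
    # Squared Euclidean distance between every descriptor and every word.
    diff = descriptors[:, None, :] - vocabulary[None, :, :]
    dists = (diff ** 2).sum(axis=2)
    return dists.argmin(axis=1)

def bag_of_words(word_ids: np.ndarray, vocab_size: int) -> np.ndarray:
    """Histogram of visual word counts (frequency is recorded)."""
    return np.bincount(word_ids, minlength=vocab_size)

def set_of_words(word_ids: np.ndarray) -> set:
    """Only the presence of each visual word is recorded."""
    return {int(w) for w in word_ids}

# Toy data (assumption): 2-D descriptors and a 4-word vocabulary.
vocabulary = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
descriptors = np.array([[0.1, 0.0], [0.9, 0.1], [1.0, -0.1], [0.9, 0.9]])

word_ids = quantize(descriptors, vocabulary)
bow = bag_of_words(word_ids, len(vocabulary))
sow = set_of_words(word_ids)
print(bow, sow)
```

The brute-force nearest-word search is fine for a toy vocabulary; large vocabularies would need an approximate search structure.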

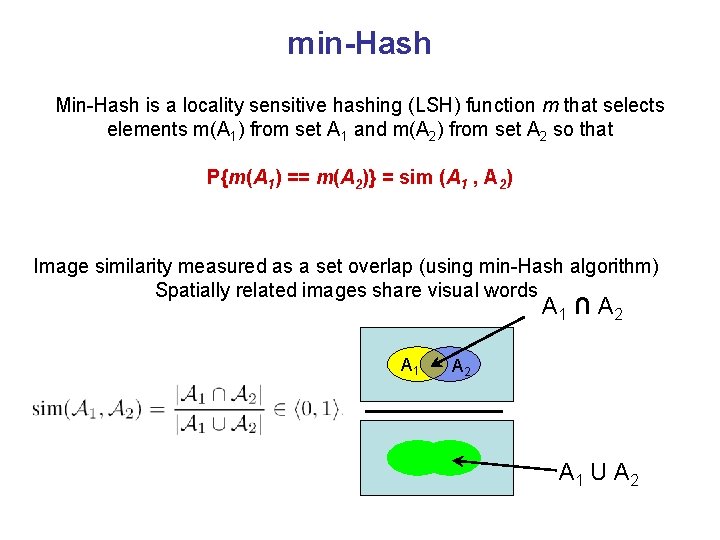

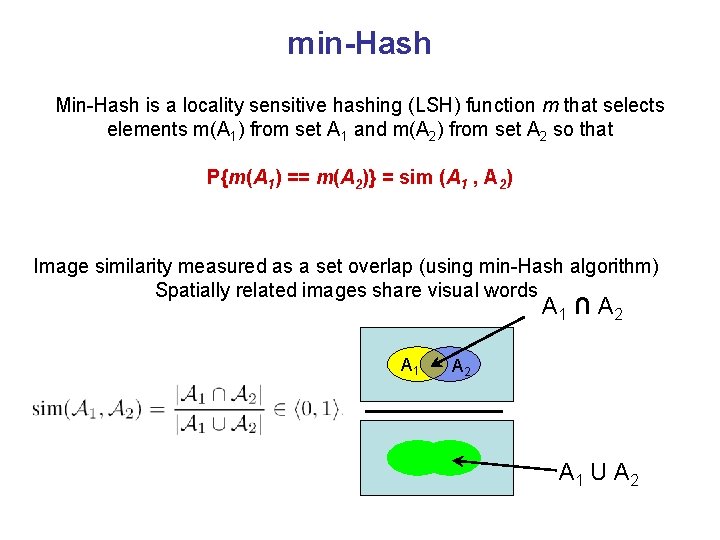

min-Hash

Min-Hash is a locality sensitive hashing (LSH) function $m$ that selects elements $m(A_1)$ from set $A_1$ and $m(A_2)$ from set $A_2$ so that

$$P\{m(A_1) = m(A_2)\} = \mathrm{sim}(A_1, A_2)$$

Image similarity is measured as a set overlap (using the min-Hash algorithm):

$$\mathrm{sim}(A_1, A_2) = \frac{|A_1 \cap A_2|}{|A_1 \cup A_2|}$$

Spatially related images share visual words.
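The collision property can be checked empirically. The sketch below assumes each min-Hash function assigns an independent uniform random value to every word and returns the word with the smallest value; the function names, the toy sets, and the number of trials are illustrative choices, not part of the talk.

```python
import random

def jaccard(a: set, b: set) -> float:
    """Exact set overlap |A ∩ B| / |A ∪ B|."""
    return len(a & b) / len(a | b)

def make_hash(vocabulary, rng):
    """One min-Hash function: an independent Un(0,1) value per word."""
    return {w: rng.random() for w in vocabulary}

def min_hash(words: set, h: dict):
    """The word of the set with the smallest hash value."""
    return min(words, key=h.__getitem__)

def estimate_similarity(a, b, vocabulary, n_hashes, seed=0):
    """Fraction of hash functions on which both sets yield the same min-Hash."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_hashes):
        h = make_hash(vocabulary, rng)
        hits += min_hash(a, h) == min_hash(b, h)
    return hits / n_hashes

vocabulary = list(range(50))
a1 = set(range(0, 30))
a2 = set(range(10, 40))

exact = jaccard(a1, a2)  # 20 shared words out of 40 in the union
approx = estimate_similarity(a1, a2, vocabulary, n_hashes=2000)
print(exact, approx)
```

With more hash functions the estimate concentrates around the exact overlap, at the cost of more storage per image.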

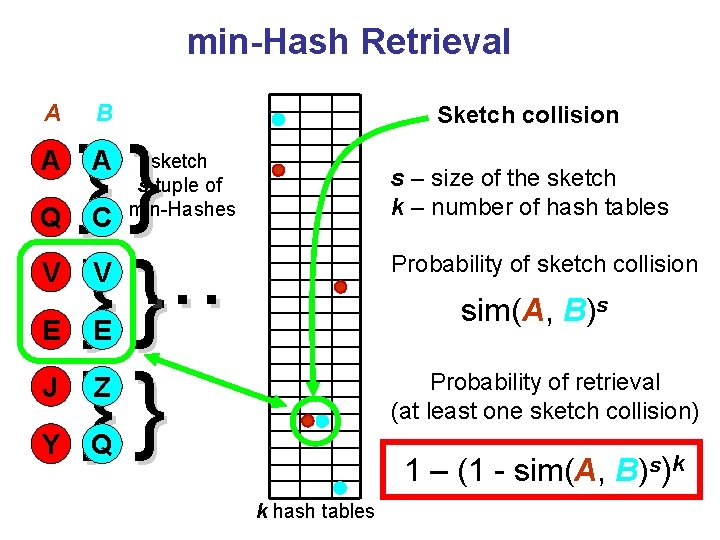

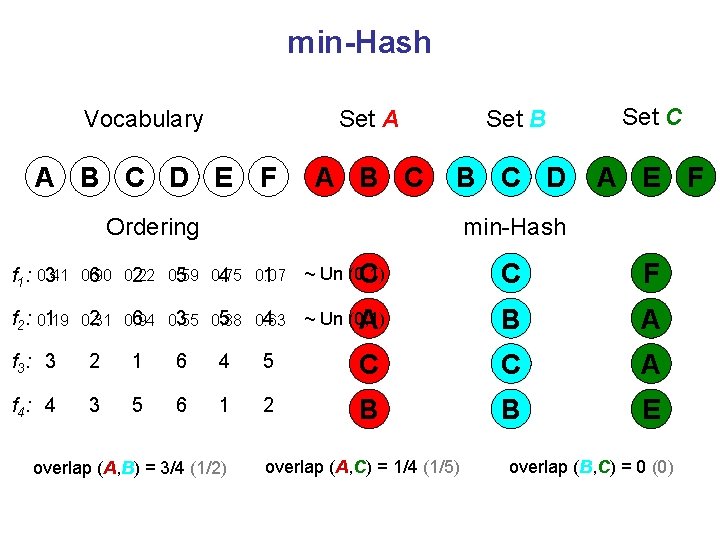

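min-Hash

Vocabulary: A B C D E F

Set A = {A, B, C}, Set B = {B, C, D}, Set C = {A, E, F}

Each hash function assigns a value to every word (rank in the induced ordering in parentheses); the min-Hash of a set is its word with the smallest value.

| Hash function | A | B | C | D | E | F | min-Hash of Set A | min-Hash of Set B | min-Hash of Set C |
|---|---|---|---|---|---|---|---|---|---|
| f1 ~ Un(0, 1) | 0.41 (3) | 0.90 (6) | 0.22 (2) | 0.59 (4) | 0.75 (5) | 0.07 (1) | C | C | F |
| f2 ~ Un(0, 1) | 0.19 (1) | 0.31 (2) | 0.94 (6) | 0.55 (3) | 0.88 (5) | 0.63 (4) | A | B | A |
| f3 (ordering) | 3 | 2 | 1 | 6 | 4 | 5 | C | C | A |
| f4 (ordering) | 4 | 3 | 5 | 6 | 1 | 2 | B | B | E |

Estimated overlap (exact value in parentheses):

- overlap(A, B) = 3/4 (1/2)
- overlap(A, C) = 1/4 (1/5)
- overlap(B, C) = 0 (0)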
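The worked example can be replayed in code. The sketch assumes the three sets are Set A = {A, B, C}, Set B = {B, C, D}, and Set C = {A, E, F}, which is the reading consistent with the exact overlaps 1/2, 1/5, and 0 quoted on the slide; f3 and f4 are given directly as rankings, so they are used as hash values unchanged.

```python
VOCAB = "ABCDEF"

# Assumed reading of the slide's three sets.
SETS = {"A": set("ABC"), "B": set("BCD"), "C": set("AEF")}

# Hash value of each vocabulary word under the four hash functions.
HASHES = {
    "f1": [0.41, 0.90, 0.22, 0.59, 0.75, 0.07],
    "f2": [0.19, 0.31, 0.94, 0.55, 0.88, 0.63],
    "f3": [3, 2, 1, 6, 4, 5],
    "f4": [4, 3, 5, 6, 1, 2],
}

def min_hash(words, values):
    """Word of the set with the smallest hash value."""
    value_of = dict(zip(VOCAB, values))
    return min(words, key=value_of.__getitem__)

# One min-Hash per hash function for every set.
signatures = {
    name: [min_hash(words, HASHES[f]) for f in HASHES]
    for name, words in SETS.items()
}

def estimated_overlap(x, y):
    """Fraction of hash functions on which the two min-Hashes agree."""
    matches = sum(a == b for a, b in zip(signatures[x], signatures[y]))
    return matches / len(HASHES)

def exact_overlap(x, y):
    return len(SETS[x] & SETS[y]) / len(SETS[x] | SETS[y])

for pair in (("A", "B"), ("A", "C"), ("B", "C")):
    print(pair, estimated_overlap(*pair), exact_overlap(*pair))
```

With only four hash functions the estimate is coarse (multiples of 1/4), which is why the estimated and exact overlaps differ.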

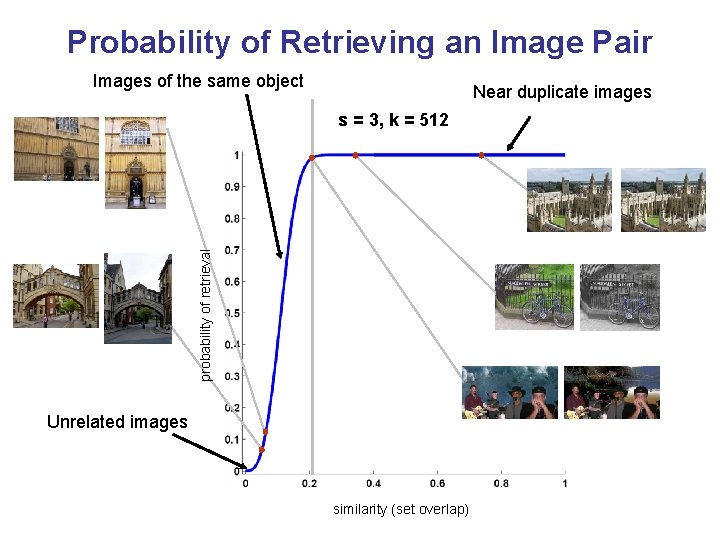

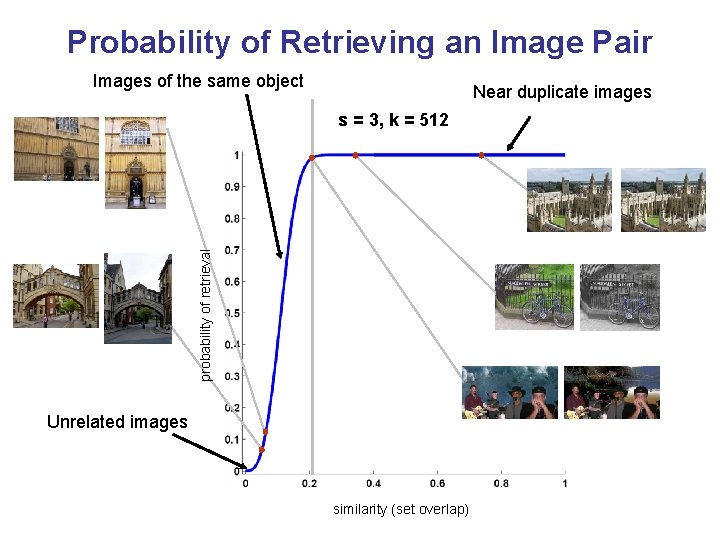

min-Hash Retrieval

- Sketch: an s-tuple of min-Hashes
- $s$: size of the sketch
- $k$: number of hash tables
- Probability of sketch collision: $\mathrm{sim}(A, B)^s$
- Probability of retrieval (at least one sketch collision in the $k$ hash tables): $1 - (1 - \mathrm{sim}(A, B)^s)^k$
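The two probabilities are easy to tabulate. The sketch below evaluates them for s = 3 and k = 512, the setting quoted on the following slide; the function names and the sampled similarity values are arbitrary illustrative choices.

```python
def p_sketch_collision(sim: float, s: int) -> float:
    """Probability that all s min-Hashes of one sketch agree."""
    return sim ** s

def p_retrieval(sim: float, s: int, k: int) -> float:
    """Probability of at least one sketch collision in k hash tables."""
    return 1.0 - (1.0 - p_sketch_collision(sim, s)) ** k

if __name__ == "__main__":
    s, k = 3, 512
    for sim in (0.02, 0.05, 0.1, 0.2, 0.5):
        print(f"sim={sim:.2f}  P(retrieval)={p_retrieval(sim, s, k):.4f}")
```

Raising s suppresses collisions between dissimilar images, while raising k recovers recall for similar ones; together they shape the S-curve of retrieval probability against similarity.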

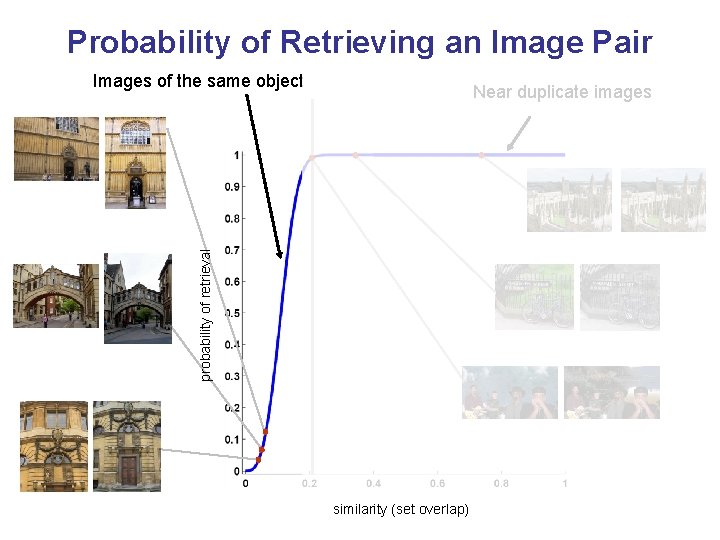

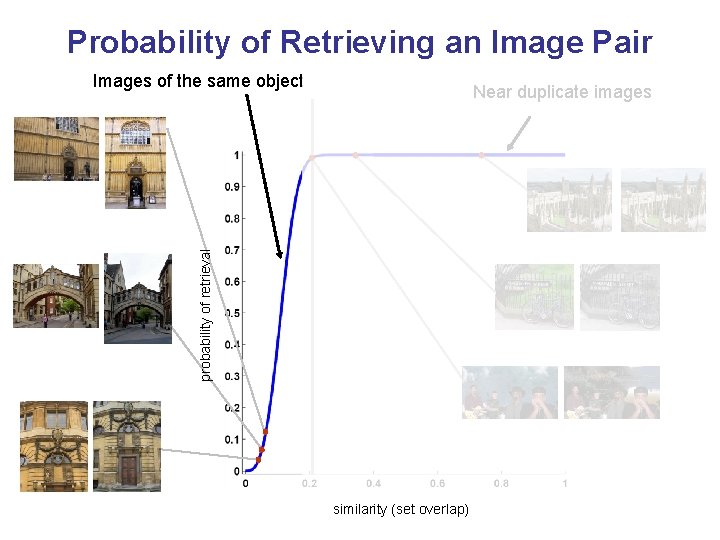

Probability of Retrieving an Image Pair

[Plot: probability of retrieval versus similarity (set overlap) for s = 3, k = 512, with regions marked for unrelated images, images of the same object, and near duplicate images.]

More Complex Similarity Measures

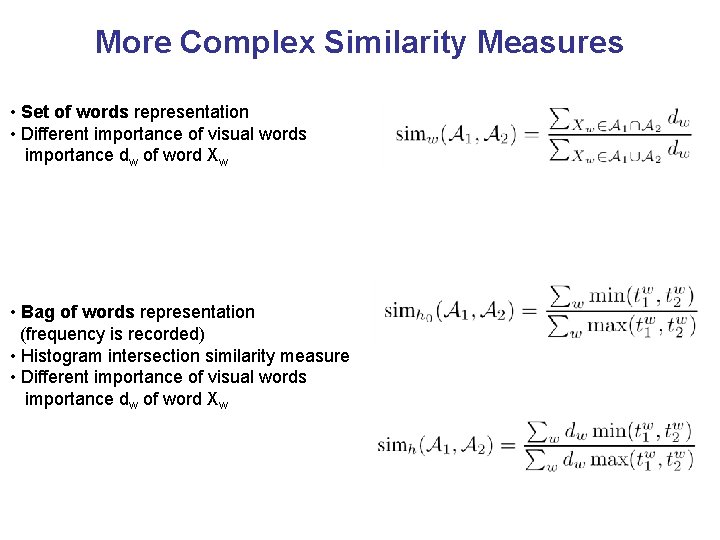

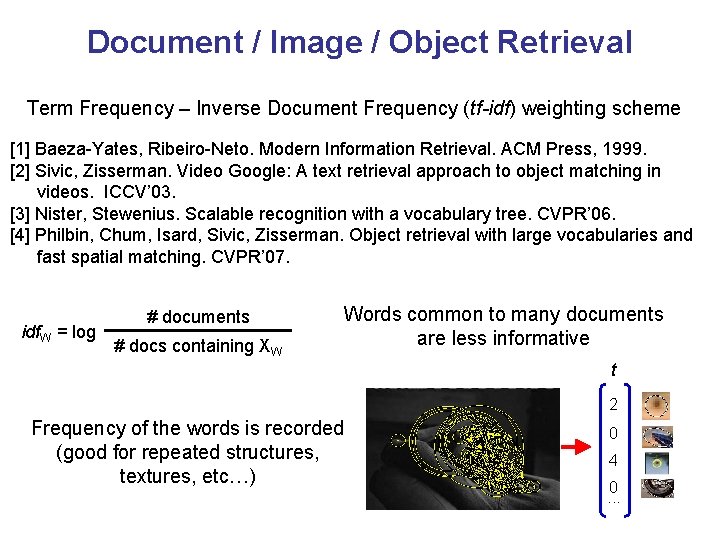

Document / Image / Object Retrieval

Term Frequency - Inverse Document Frequency (tf-idf) weighting scheme

$$\mathrm{idf}_w = \log \frac{\#\,\text{documents}}{\#\,\text{documents containing } X_w}$$

- idf: words common to many documents are less informative
- tf: frequency of the words is recorded (good for repeated structures, textures, etc.)

References:

- [1] Baeza-Yates, Ribeiro-Neto. Modern Information Retrieval. ACM Press, 1999.
- [2] Sivic, Zisserman. Video Google: A text retrieval approach to object matching in videos. ICCV '03.
- [3] Nister, Stewenius. Scalable recognition with a vocabulary tree. CVPR '06.
- [4] Philbin, Chum, Isard, Sivic, Zisserman. Object retrieval with large vocabularies and fast spatial matching. CVPR '07.
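A minimal sketch of the weighting, assuming the natural logarithm and raw counts as the term frequency (many tf-idf variants normalize the counts, which the slide does not specify). The toy documents and the helper names are hypothetical.

```python
import math
from collections import Counter

def idf_weights(documents):
    """idf_w = log(#documents / #documents containing word w)."""
    n_docs = len(documents)
    doc_freq = Counter()
    for doc in documents:
        doc_freq.update(set(doc))  # count each word once per document
    return {w: math.log(n_docs / df) for w, df in doc_freq.items()}

def tf_idf(doc, idf):
    """Raw term frequency times idf (unnormalized, by assumption)."""
    tf = Counter(doc)
    return {w: count * idf[w] for w, count in tf.items()}

# Toy "documents": lists of visual word labels.
documents = [
    ["a", "b", "b"],
    ["a", "c"],
    ["a", "b", "d", "d", "d"],
]

idf = idf_weights(documents)
vec = tf_idf(documents[2], idf)
print(idf)
print(vec)
```

Word `a` occurs in every document, so its idf is zero and it contributes nothing to the score, which is the "less informative" effect the slide describes.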

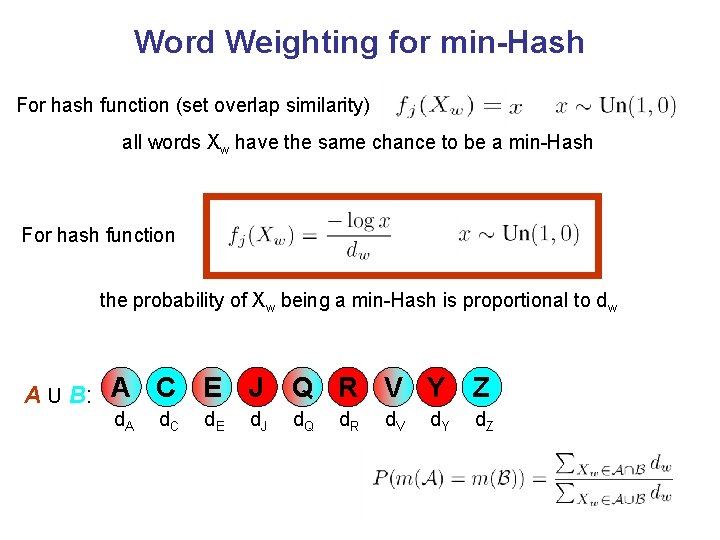

More Complex Similarity Measures

- Set of words representation
  - Different importance of visual words: importance $d_w$ of word $X_w$
- Bag of words representation (frequency is recorded)
  - Histogram intersection similarity measure
  - Different importance of visual words: importance $d_w$ of word $X_w$
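The formulas themselves did not survive in this transcript, so the sketch below uses the normalized forms these names usually denote, which is an assumption: weighted set overlap as the weight of the intersection over the weight of the union, and (weighted) histogram intersection as the sum of per-word minima over the sum of per-word maxima. The weights and histograms are made-up toy values.

```python
def weighted_set_overlap(a: set, b: set, d: dict) -> float:
    """sum of d_w over A ∩ B divided by sum of d_w over A ∪ B."""
    union = sum(d[w] for w in a | b)
    return sum(d[w] for w in a & b) / union if union else 0.0

def histogram_intersection(ta: dict, tb: dict, d: dict = None) -> float:
    """sum_w d_w * min(ta_w, tb_w) / sum_w d_w * max(ta_w, tb_w).

    With d=None every word has weight 1 (plain histogram intersection).
    """
    num = den = 0.0
    for w in set(ta) | set(tb):
        weight = 1.0 if d is None else d[w]
        num += weight * min(ta.get(w, 0), tb.get(w, 0))
        den += weight * max(ta.get(w, 0), tb.get(w, 0))
    return num / den if den else 0.0

# Toy data (assumption): word counts and importance weights d_w.
t_a = {"A": 2, "B": 1, "C": 3}
t_b = {"B": 2, "C": 3, "D": 1}
d = {"A": 1.0, "B": 2.0, "C": 0.5, "D": 1.0}

wso = weighted_set_overlap(set(t_a), set(t_b), d)
hi = histogram_intersection(t_a, t_b)
whi = histogram_intersection(t_a, t_b, d)
print(wso, hi, whi)
```

Setting all weights to 1 and all counts to 0 or 1 reduces each measure to the plain set overlap.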

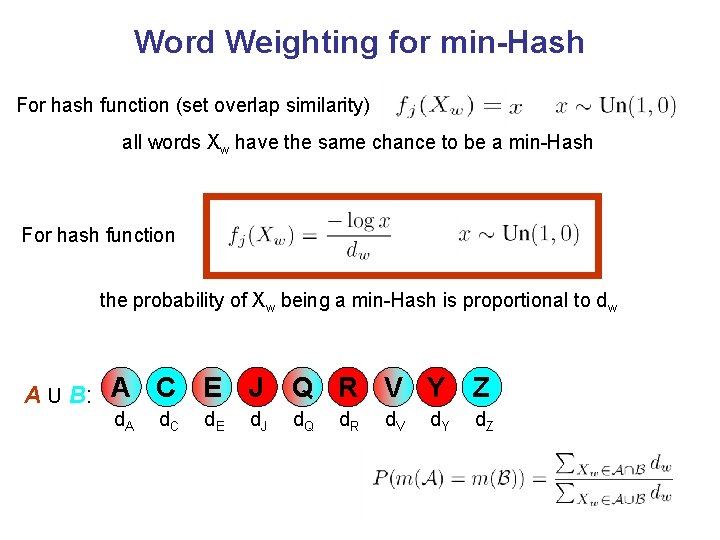

Word Weighting for min-Hash

- For the hash function used with set overlap similarity, all words $X_w$ have the same chance to be a min-Hash.
- For the weighted hash function, the probability of $X_w$ being a min-Hash is proportional to $d_w$.
- Illustration: $A \cup B$ = {A, C, E, J, Q, R, V, Y, Z} with word weights $d_A, d_C, d_E, d_J, d_Q, d_R, d_V, d_Y, d_Z$.
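The hash function itself is not legible in this transcript. One standard construction with the stated property, offered here as an assumption rather than as the authors' exact formula, maps a uniform random number u to -log(u) / d_w: the values are then exponentially distributed with rate d_w, and the minimum of independent exponentials falls on word w with probability d_w divided by the sum of all weights. The weights below are toy values.

```python
import math
import random
from collections import Counter

def weighted_hash(weights: dict, rng: random.Random) -> dict:
    """One weighted hash function: value -log(u) / d_w for every word.

    Assumes all weights d_w are strictly positive.
    """
    # 1 - rng.random() lies in (0, 1], so the logarithm is always defined.
    return {w: -math.log(1.0 - rng.random()) / d for w, d in weights.items()}

def min_hash(words, h: dict):
    """Word with the smallest hash value."""
    return min(words, key=h.__getitem__)

# Toy weights (assumption): word C is twice as important as A and E.
weights = {"A": 1.0, "C": 2.0, "E": 1.0}
rng = random.Random(0)
n_trials = 20000

counts = Counter(
    min_hash(weights.keys(), weighted_hash(weights, rng))
    for _ in range(n_trials)
)
freq = {w: counts[w] / n_trials for w in weights}
print(freq)  # frequencies should approach 0.25, 0.5, 0.25
```

With all weights equal, this reduces to the unweighted case in which every word is equally likely to be the min-Hash.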

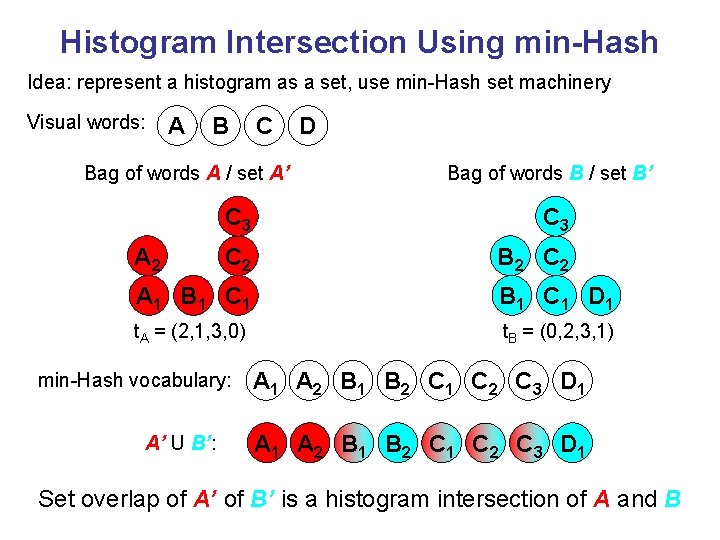

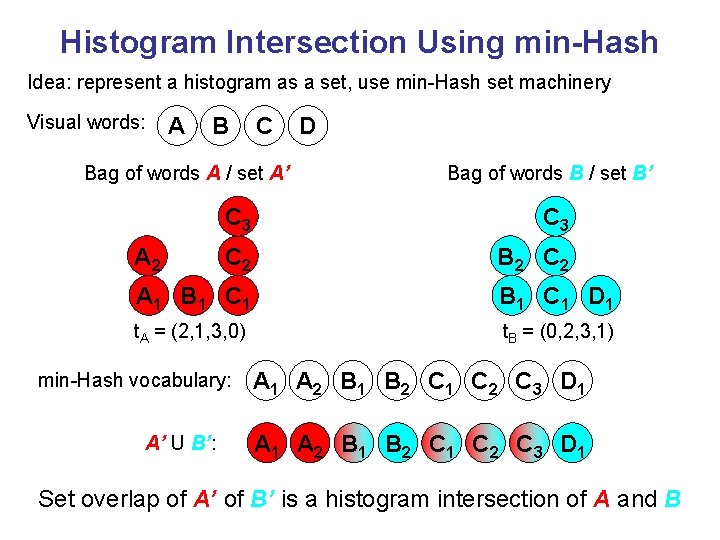

Histogram Intersection Using min-Hash

Idea: represent a histogram as a set, then use the min-Hash set machinery.

- Visual words: A B C D
- Bag of words A: $t_A = (2, 1, 3, 0)$, represented as set A' = {A1, A2, B1, C1, C2, C3}
- Bag of words B: $t_B = (0, 2, 3, 1)$, represented as set B' = {B1, B2, C1, C2, C3, D1}
- min-Hash vocabulary A' ∪ B': A1 A2 B1 B2 C1 C2 C3 D1

The set overlap of A' and B' is the histogram intersection of A and B.
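The equivalence can be verified on the slide's two histograms. In this sketch the i-th occurrence of a word becomes its own set element (A1, A2, ...); the helper name `histogram_to_set` is hypothetical, and the histogram intersection is taken in its normalized form (sum of minima over sum of maxima), which is an assumption about the intended definition.

```python
def histogram_to_set(hist: dict) -> set:
    """Expand word counts into distinct elements: count 3 for C -> C1, C2, C3."""
    return {f"{w}{i}" for w, count in hist.items() for i in range(1, count + 1)}

t_a = {"A": 2, "B": 1, "C": 3, "D": 0}
t_b = {"A": 0, "B": 2, "C": 3, "D": 1}

a_set = histogram_to_set(t_a)
b_set = histogram_to_set(t_b)

# Set overlap of the expanded sets.
set_overlap = len(a_set & b_set) / len(a_set | b_set)

# Normalized histogram intersection of the original histograms.
hist_inter = (
    sum(min(t_a[w], t_b[w]) for w in t_a)
    / sum(max(t_a[w], t_b[w]) for w in t_a)
)

print(sorted(a_set | b_set))
print(set_overlap, hist_inter)
```

Because the expansion turns counts into ordinary set elements, any min-Hash implementation for sets can be reused without modification.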

Results

- Quality of the retrieval
- Speed: the number of documents considered as near-duplicates

TRECVid Challenge

- 165 hours of news footage, different channels, different countries
- 146,588 key-frames, 352 × 240 pixels
- No ground truth on near duplicates

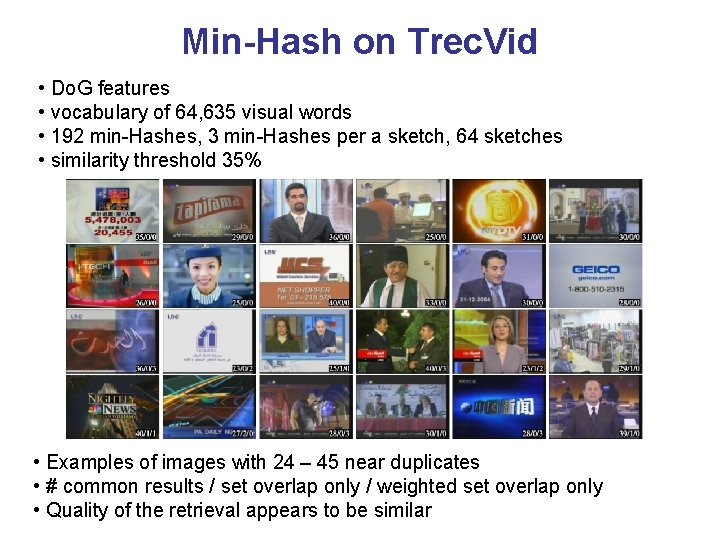

Min-Hash on TRECVid

- DoG features
- Vocabulary of 64,635 visual words
- 192 min-Hashes, 3 min-Hashes per sketch, 64 sketches
- Similarity threshold 35%
- Examples of images with 24 to 45 near duplicates
- Reported per example: # common results / set overlap only / weighted set overlap only
- Quality of the retrieval appears to be similar

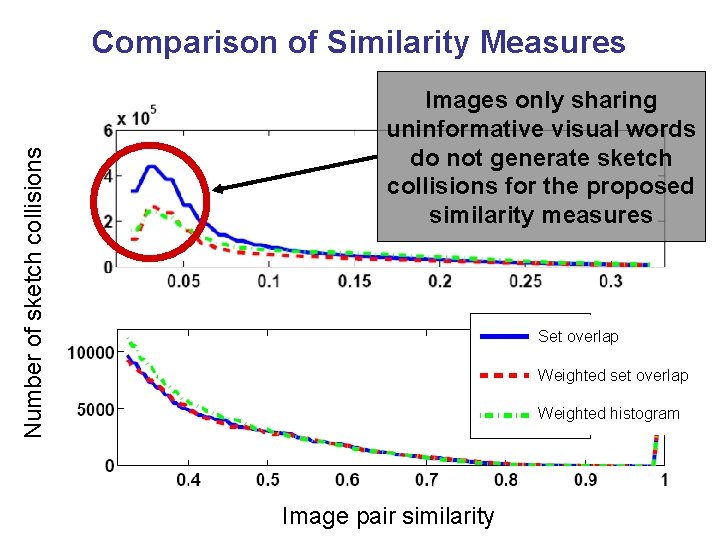

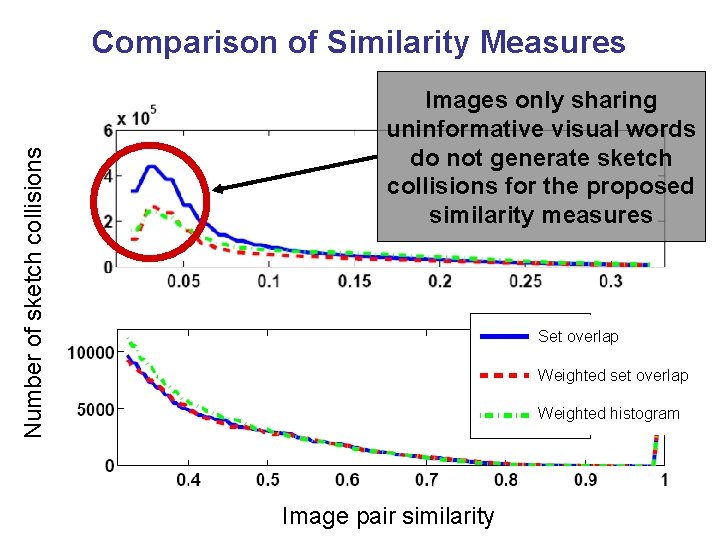

Comparison of Similarity Measures

Images only sharing uninformative visual words do not generate sketch collisions for the proposed similarity measures.

[Plot: number of sketch collisions versus image pair similarity, for set overlap, weighted set overlap, and weighted histogram.]

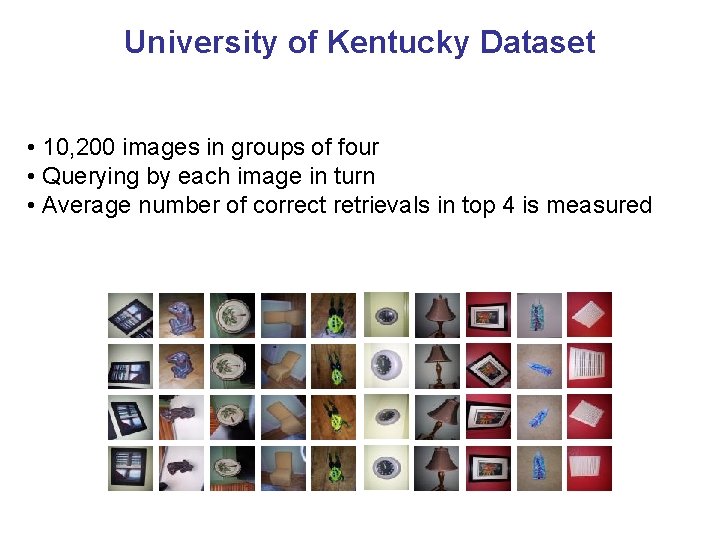

University of Kentucky Dataset

- 10,200 images in groups of four
- Querying by each image in turn
- Average number of correct retrievals in top 4 is measured
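The measure can be sketched as follows. Two assumptions are made for illustration: image ids are consecutive integers so that the group of image i is i // 4, and the query image itself counts as a correct retrieval when returned, which makes 4 the maximum score. The rankings are toy data.

```python
def kentucky_score(rankings: dict, group_size: int = 4) -> float:
    """Average number of same-group images among the top results per query.

    rankings maps a query image id to its ranked list of retrieved ids.
    """
    total = 0
    for query, ranked in rankings.items():
        group = query // group_size  # assumed grouping by consecutive ids
        total += sum(1 for r in ranked[:group_size] if r // group_size == group)
    return total / len(rankings)

# Toy rankings (assumption): three queries with 4, 2, and 1 correct results.
rankings = {
    0: [0, 1, 2, 3],
    1: [1, 0, 5, 6],
    4: [4, 8, 9, 10],
}

score = kentucky_score(rankings)
print(score)
```

A hashing-based method may return fewer than four candidates for a query, in which case the missing positions simply contribute nothing to the sum.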

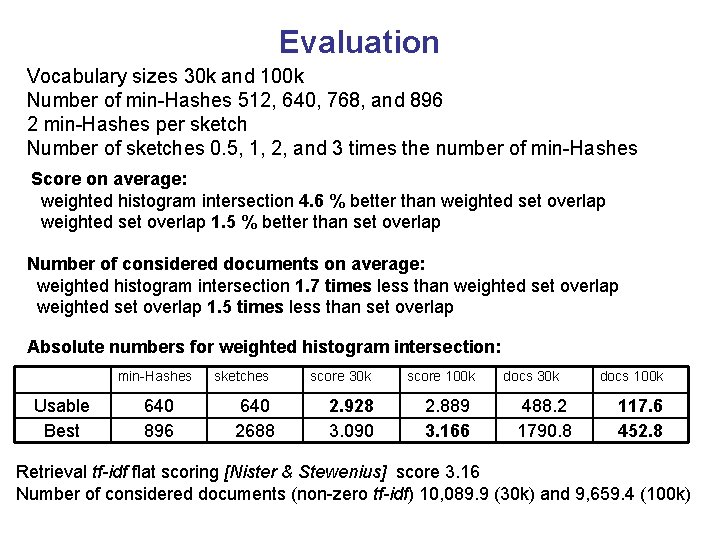

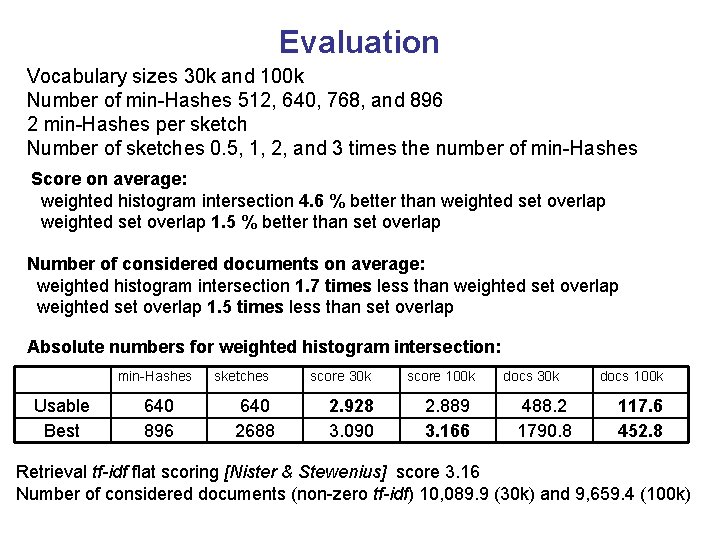

Evaluation

- Vocabulary sizes: 30k and 100k
- Number of min-Hashes: 512, 640, 768, and 896
- 2 min-Hashes per sketch
- Number of sketches: 0.5, 1, 2, and 3 times the number of min-Hashes

Score on average: weighted histogram intersection is 4.6% better than weighted set overlap, which is 1.5% better than set overlap.

Number of considered documents on average: weighted histogram intersection considers 1.7 times fewer than weighted set overlap, which considers 1.5 times fewer than set overlap.

Absolute numbers for weighted histogram intersection:

| | Usable | Best |
|---|---|---|
| min-Hashes | 640 | 896 |
| sketches | 640 | 2688 |
| score, 30k | 2.928 | 3.090 |
| score, 100k | 2.889 | 3.166 |
| docs, 30k | 488.2 | 1790.8 |
| docs, 100k | 117.6 | 452.8 |

Retrieval with tf-idf flat scoring [Nister & Stewenius]: score 3.16; number of considered documents (non-zero tf-idf): 10,089.9 (30k) and 9,659.4 (100k).

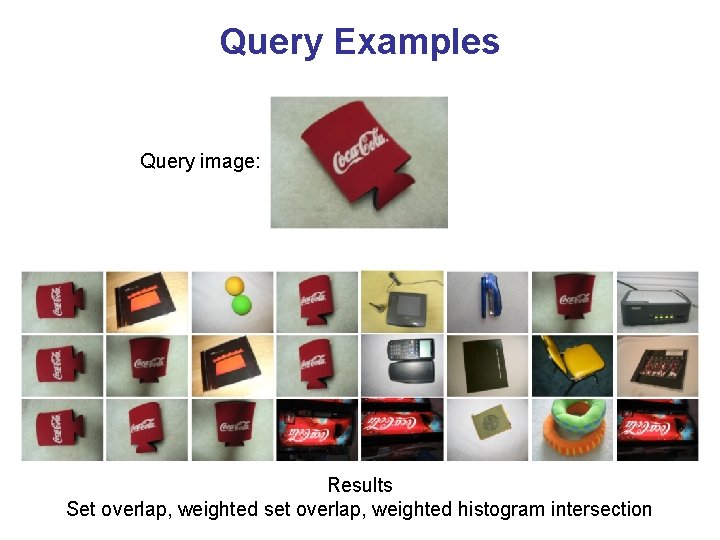

Query Examples

[Figure: a query image with results for set overlap, weighted set overlap, and weighted histogram intersection.]

Beyond Near Duplicate Detection

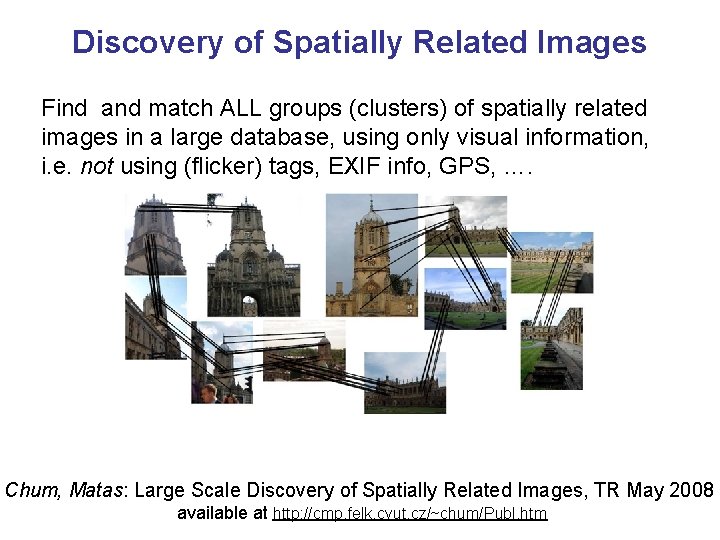

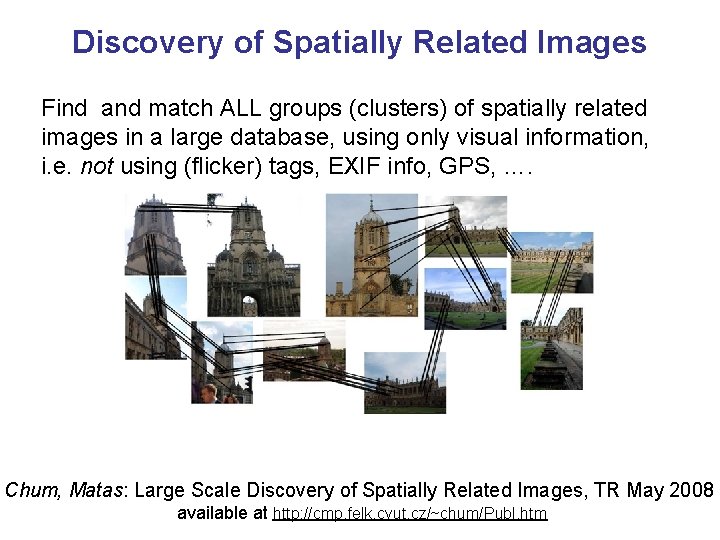

Discovery of Spatially Related Images

Find and match ALL groups (clusters) of spatially related images in a large database, using only visual information, i.e. not using (Flickr) tags, EXIF info, GPS, etc.

Chum, Matas: Large Scale Discovery of Spatially Related Images, TR May 2008, available at http://cmp.felk.cvut.cz/~chum/Publ.htm

Probability of Retrieving an Image Pair

[Plot: probability of retrieval versus similarity (set overlap), with regions marked for images of the same object and near duplicate images.]

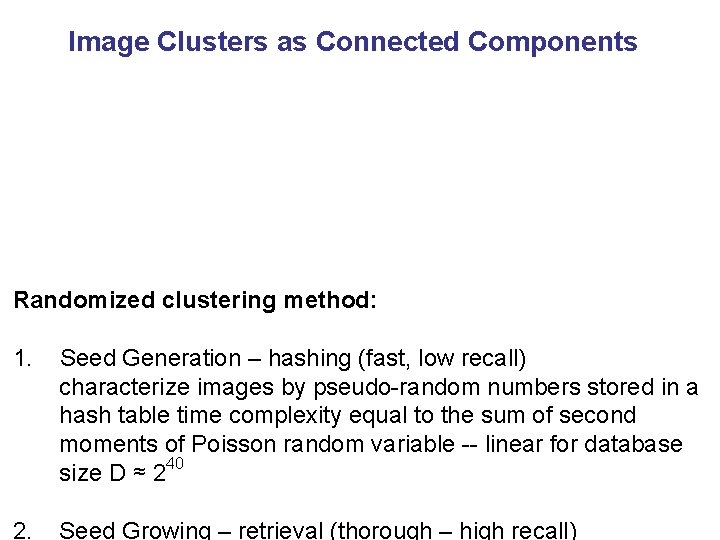

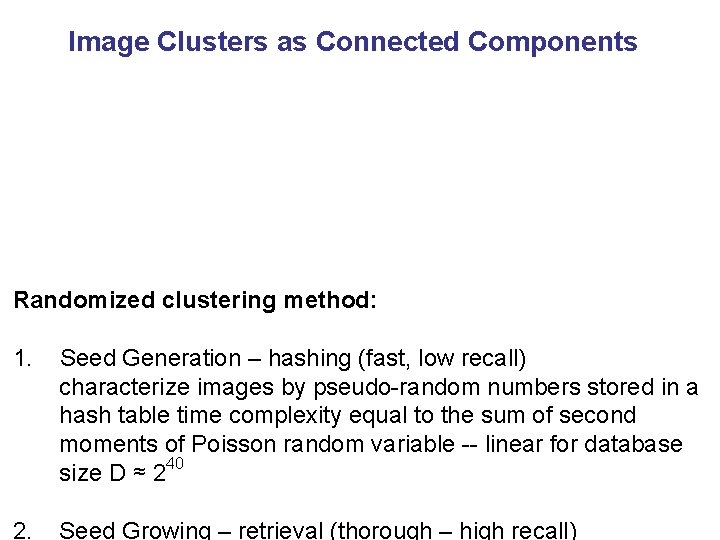

Image Clusters as Connected Components

Randomized clustering method:

1. Seed Generation: hashing (fast, low recall)
   - characterize images by pseudo-random numbers stored in a hash table
   - time complexity equal to the sum of second moments of Poisson random variable; linear for database size D ≈ 240
2. Seed Growing: retrieval (thorough, high recall)
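The "connected components" bookkeeping can be sketched with a union-find structure. This covers only the grouping of already matched image pairs; it assumes the pairs come from the seed generation and seed growing stages and does not reproduce those stages. The function name and the toy pairs are hypothetical.

```python
def connected_components(n_images: int, matched_pairs):
    """Group image ids into clusters connected by matched pairs."""
    parent = list(range(n_images))

    def find(x: int) -> int:
        # Follow parent links to the root, halving the path on the way.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for a, b in matched_pairs:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a  # merge the two components

    clusters = {}
    for i in range(n_images):
        clusters.setdefault(find(i), []).append(i)
    # Images without any match form singleton components and are dropped.
    return [members for members in clusters.values() if len(members) > 1]

# Toy matches (assumption): images 0-1-2 are linked, as are 4-5.
pairs = [(0, 1), (1, 2), (4, 5)]
clusters = connected_components(7, pairs)
print(clusters)
```

A single wrong match is enough to merge two unrelated clusters under this scheme, so the pairs fed in would need to be verified matches.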

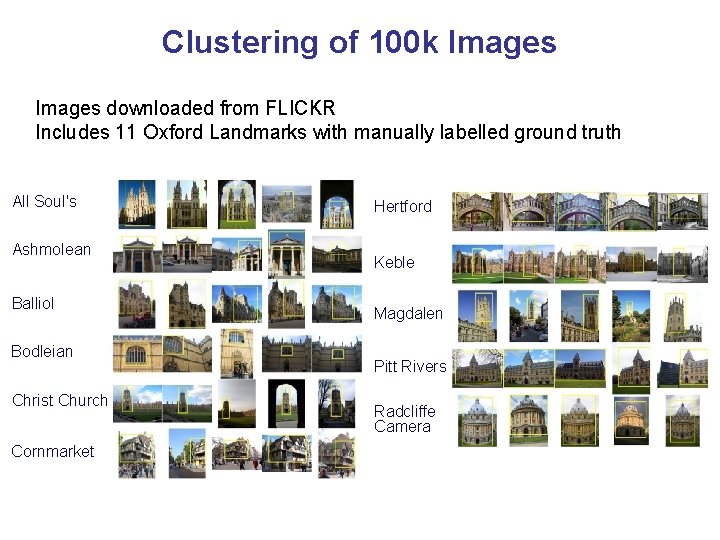

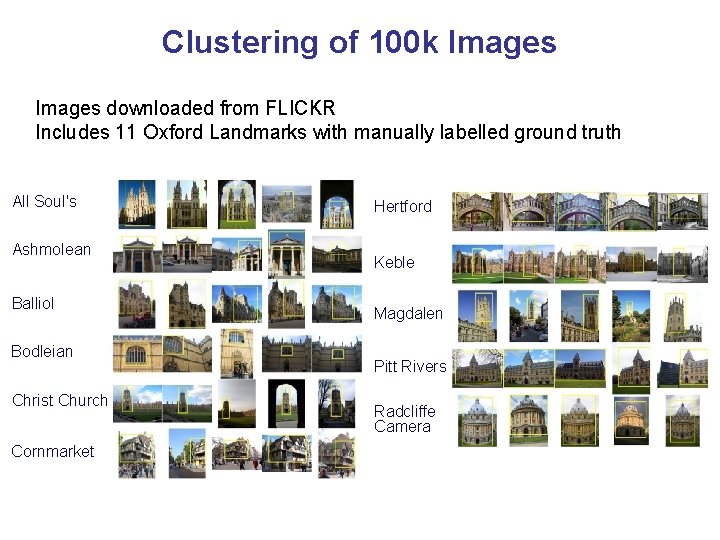

Clustering of 100k Images Downloaded from Flickr

Includes 11 Oxford landmarks with manually labelled ground truth: All Souls, Ashmolean, Balliol, Bodleian, Christ Church, Cornmarket, Hertford, Keble, Magdalen, Pitt Rivers, Radcliffe Camera.

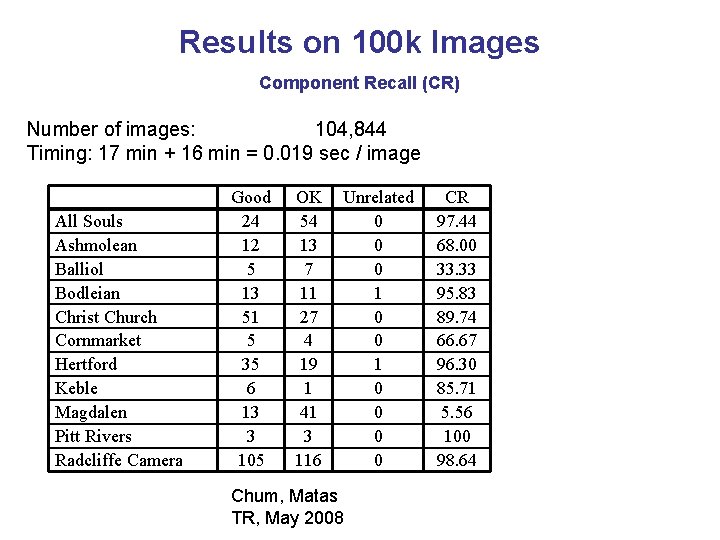

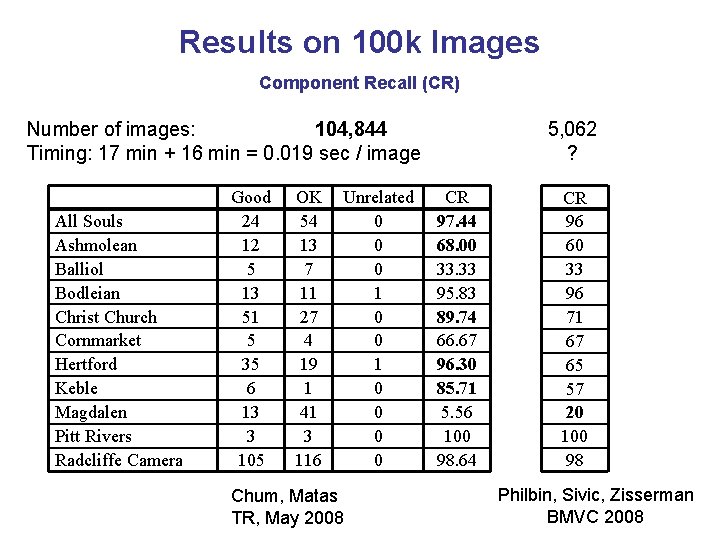

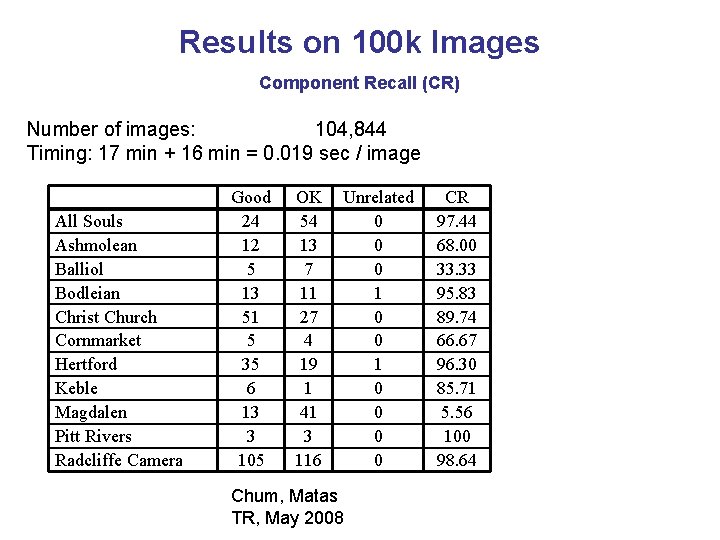


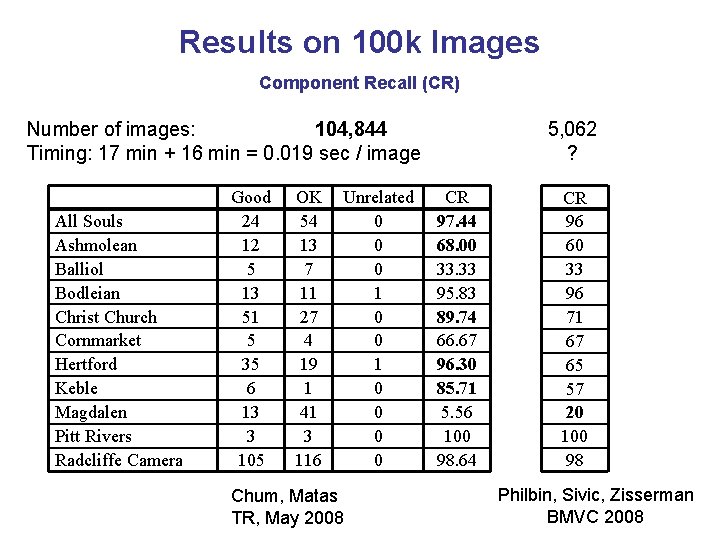

Results on 100k Images

Component Recall (CR)

- Chum, Matas TR, May 2008: 104,844 images; timing: 17 min + 16 min = 0.019 sec / image
- Philbin, Sivic, Zisserman BMVC 2008: 5,062 images; timing: ?

| Landmark | Good | OK | CR (Chum, Matas TR, May 2008) | CR (Philbin, Sivic, Zisserman BMVC 2008) |
|---|---|---|---|---|
| All Souls | 24 | 54 | 97.44 | 96 |
| Ashmolean | 12 | 13 | 68.00 | 60 |
| Balliol | 5 | 7 | 33.33 | 33 |
| Bodleian | 13 | 11 | 95.83 | 96 |
| Christ Church | 51 | 27 | 89.74 | 71 |
| Cornmarket | 5 | 4 | 66.67 | 67 |
| Hertford | 35 | 19 | 96.30 | 65 |
| Keble | 6 | 1 | 85.71 | 57 |
| Magdalen | 13 | 41 | 5.56 | 20 |
| Pitt Rivers | 3 | 3 | 100 | 100 |
| Radcliffe Camera | 105 | 116 | 98.64 | 98 |

Unrelated: 0 0 0 1 0 0

Conclusions

- New similarity measures were derived for the min-Hash framework:
  - Weighted set overlap
  - Histogram intersection
  - Weighted histogram intersection
- Experiments show that the similarity measures are superior to the state of the art:
  - in the quality of the retrieval (up to 7% on the University of Kentucky dataset)
  - in the speed of the retrieval (up to 2.5 times)
- min-Hash is a very useful tool for randomized image clustering

Thank you!