Nature Inspired Learning: Classification and Prediction Algorithms

- Slides: 33

Nature Inspired Learning: Classification and Prediction Algorithms. Šarūnas Raudys, Computational Intelligence Group, Department of Informatics, Vilnius University, Lithuania. E-mail: sarunas@raudys.com. Juodkrante, 2009 05 22.

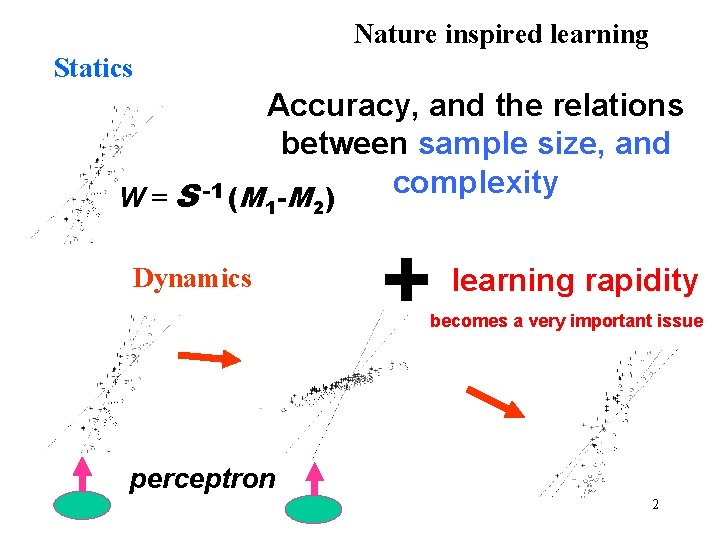

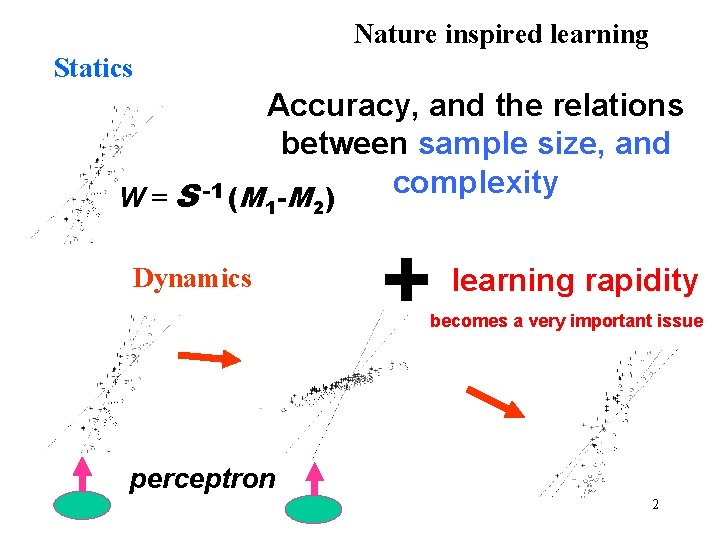

Nature inspired learning. Statics: accuracy, and the relations between sample size and complexity, e.g. $W = S^{-1}(M_1 - M_2)$. Dynamics: in addition, learning rapidity becomes a very important issue (perceptron).


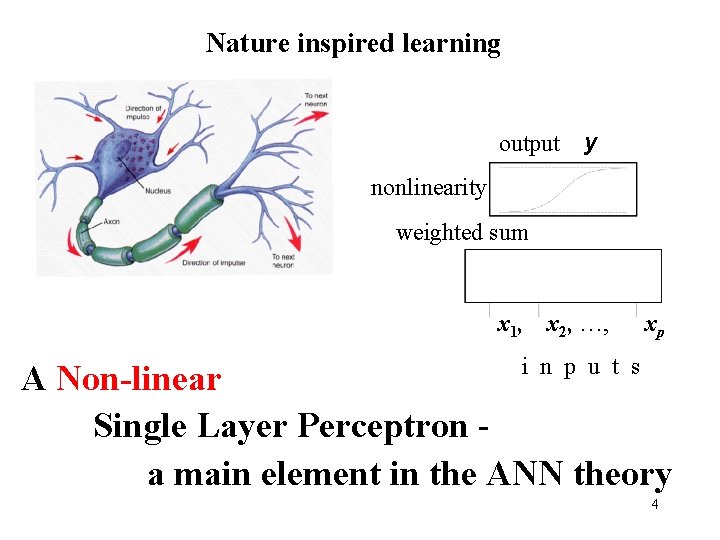

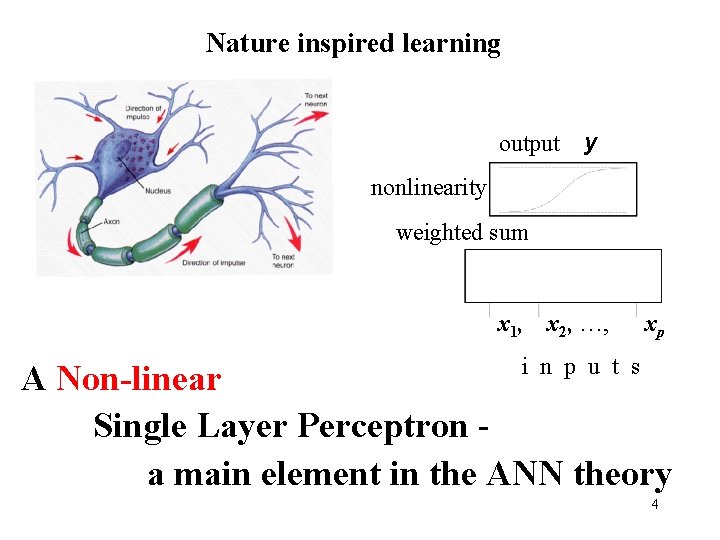

A non-linear single layer perceptron (SLP), a main element in ANN theory. Diagram labels: inputs $x_1, x_2, \dots, x_p$; weighted sum; nonlinearity; output $y$.

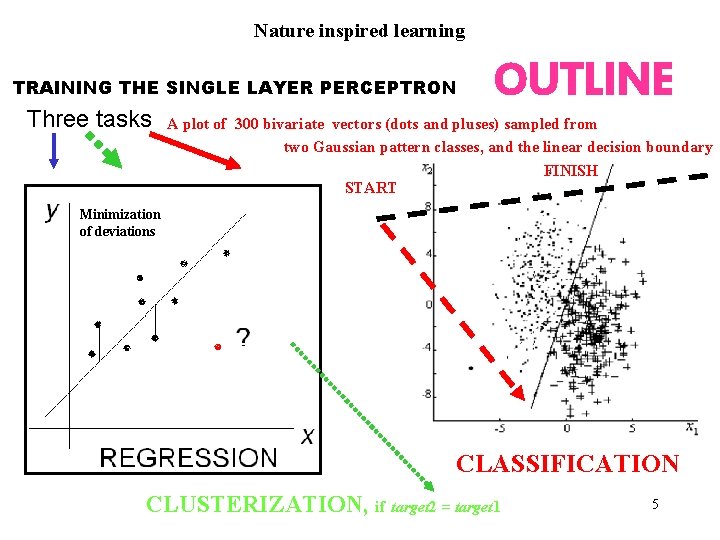

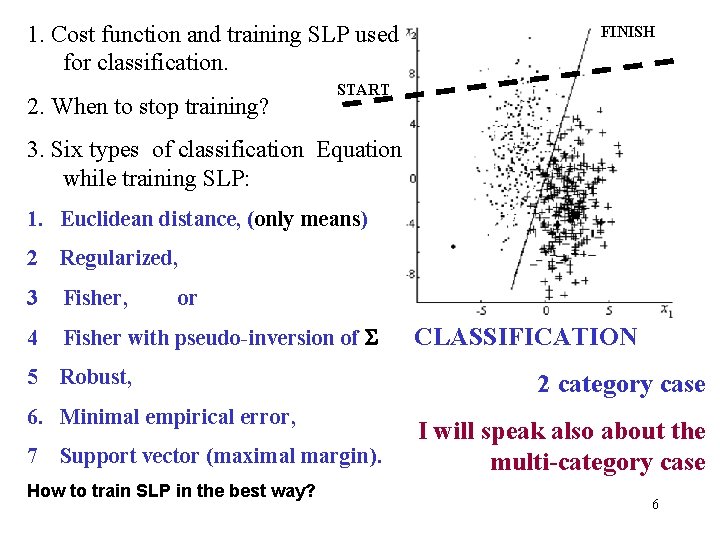

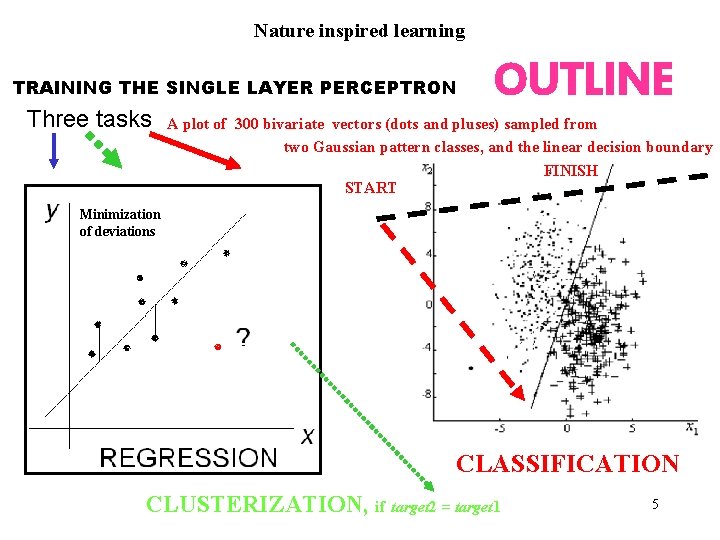

TRAINING THE SINGLE LAYER PERCEPTRON. Outline, three tasks: minimization of deviations; CLASSIFICATION; CLUSTERIZATION, if target 2 = target 1. Figure: a plot of 300 bivariate vectors (dots and pluses) sampled from two Gaussian pattern classes, and the linear decision boundary, with the START and FINISH positions marked.

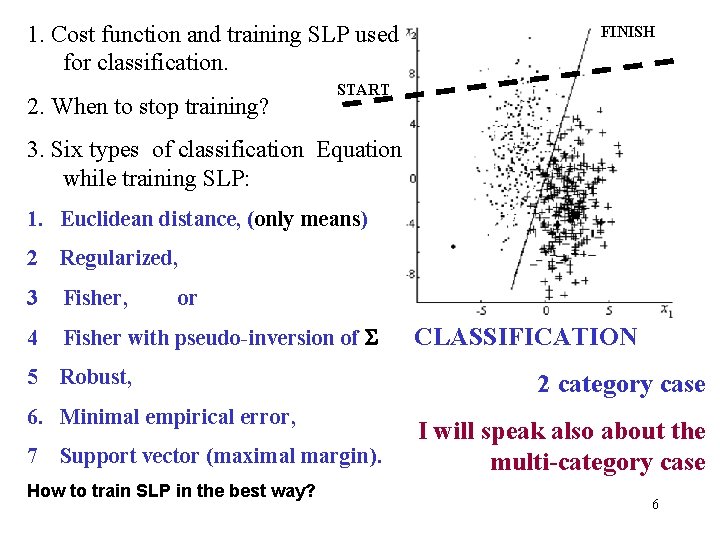

CLASSIFICATION: the two-category case (I will also speak about the multi-category case).

1. Cost function and training of the SLP used for classification.
2. When to stop training?
3. Seven types of classification equation obtained while training the SLP: (1) Euclidean distance (only means), (2) regularized, (3) Fisher, (4) Fisher with pseudo-inversion of S, (5) robust, (6) minimal empirical error, (7) support vector (maximal margin).

How to train the SLP in the best way?

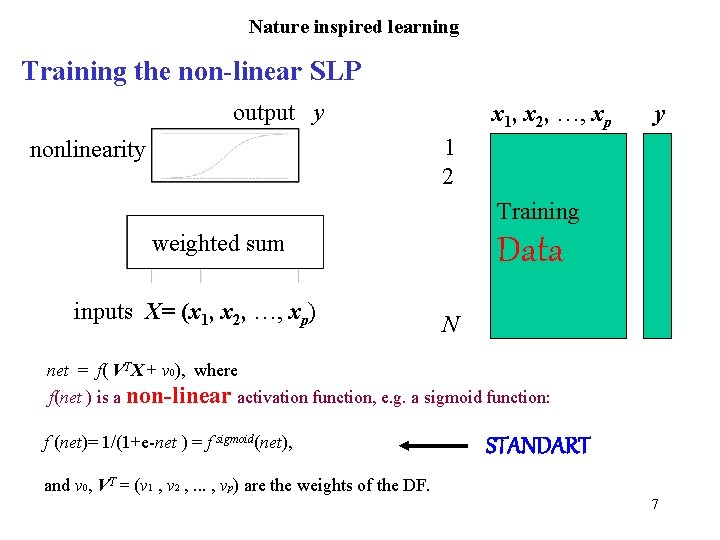

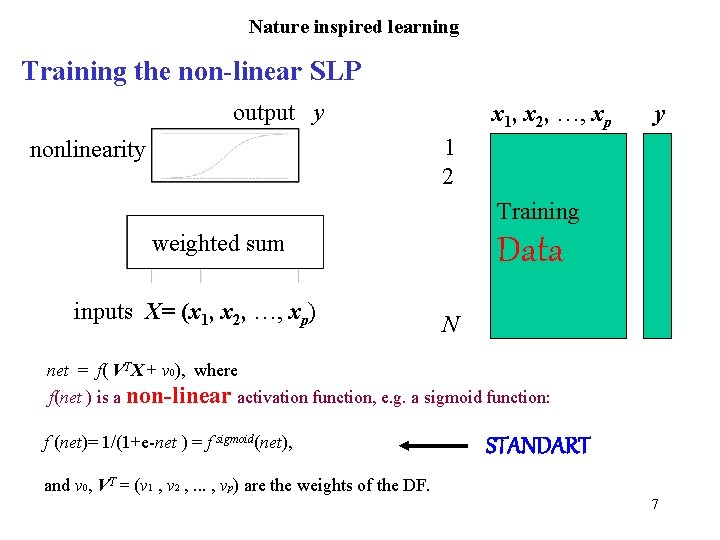

Training the non-linear SLP. Inputs: $X = (x_1, x_2, \dots, x_p)$; training data: $N$ vectors from classes 1 and 2. The output is $o = f(\mathrm{net})$ with $\mathrm{net} = V^T X + v_0$, where $f(\mathrm{net})$ is a non-linear activation function, e.g. the standard sigmoid function $f(\mathrm{net}) = 1/(1 + e^{-\mathrm{net}}) = f_{\mathrm{sigmoid}}(\mathrm{net})$, and $v_0$, $V^T = (v_1, v_2, \dots, v_p)$ are the weights of the discriminant function (DF).
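The output computation on this slide can be sketched in a few lines of NumPy. The function names `sigmoid` and `slp_output` and the numeric values are illustrative assumptions, not part of the slides.

```python
import numpy as np

def sigmoid(net):
    """Standard sigmoid activation: f(net) = 1 / (1 + exp(-net))."""
    return 1.0 / (1.0 + np.exp(-net))

def slp_output(X, V, v0):
    """Output of a single layer perceptron for every row of X.

    X  : (N, p) array of input vectors
    V  : (p,) weight vector
    v0 : scalar bias weight
    """
    net = X @ V + v0          # weighted sum
    return sigmoid(net)       # nonlinearity

# Illustrative (made-up) inputs and weights.
X = np.array([[0.0, 0.0], [1.0, 2.0], [-1.0, -2.0]])
V = np.array([0.5, 0.25])
v0 = 0.0
outputs = slp_output(X, V, v0)
print(outputs)
```

With a zero weighted sum the output sits at 0.5, the midpoint of the sigmoid; larger weights push the outputs towards 0 or 1.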

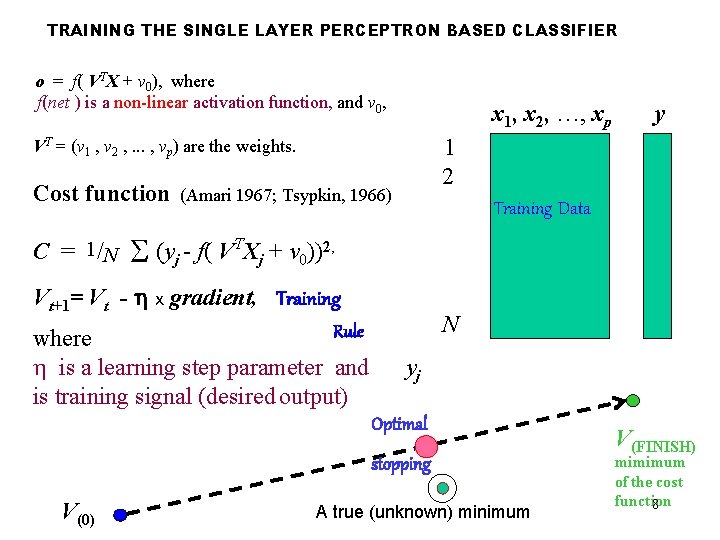

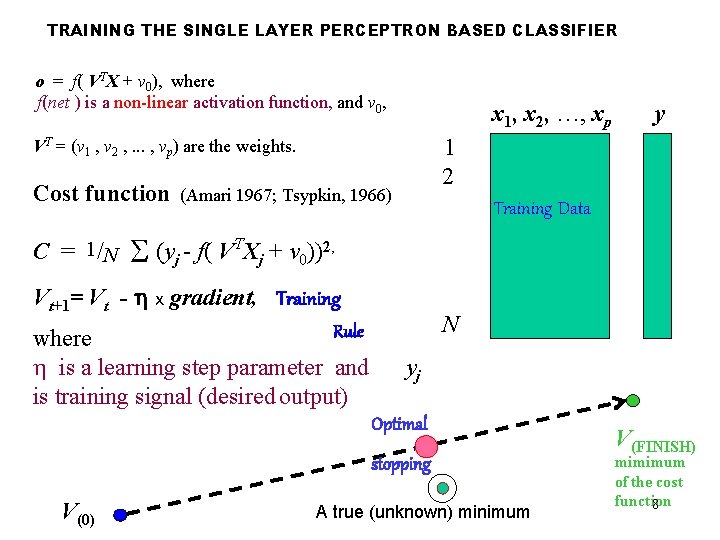

TRAINING THE SINGLE LAYER PERCEPTRON BASED CLASSIFIER. $o = f(V^T X + v_0)$, where $f(\mathrm{net})$ is a non-linear activation function, and $v_0$, $V^T = (v_1, v_2, \dots, v_p)$ are the weights. Cost function (Amari, 1967; Tsypkin, 1966): $C = \frac{1}{N} \sum_{j=1}^{N} \left(y_j - f(V^T X_j + v_0)\right)^2$. Training rule: $V_{t+1} = V_t - \eta \times \mathrm{gradient}$, where $\eta$ is the learning step parameter and $y_j$ is the training signal (desired output). Figure labels: $V(0)$; optimal stopping; the true (unknown) minimum; $V(\mathrm{FINISH})$, the minimum of the cost function.
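A minimal sketch of the training rule above, assuming total (batch) gradient descent, a sigmoid activation and targets 1 and 0. The data, the learning step value and the number of epochs are made up for illustration.

```python
import numpy as np

def sigmoid(net):
    return 1.0 / (1.0 + np.exp(-net))

def cost(X, y, V, v0):
    """Sum of squares cost C = 1/N * sum_j (y_j - f(V^T X_j + v0))^2."""
    return np.mean((y - sigmoid(X @ V + v0)) ** 2)

def train_slp(X, y, eta=0.5, epochs=200):
    """Batch gradient descent starting from zero weights (an assumption)."""
    N, p = X.shape
    V, v0 = np.zeros(p), 0.0
    history = [cost(X, y, V, v0)]
    for _ in range(epochs):
        o = sigmoid(X @ V + v0)
        # Derivative of the squared deviation with respect to the weighted sum.
        delta = -2.0 * (y - o) * o * (1.0 - o)
        V = V - eta * (X.T @ delta) / N      # V_{t+1} = V_t - eta * gradient
        v0 = v0 - eta * delta.mean()
        history.append(cost(X, y, V, v0))
    return V, v0, history

# Two Gaussian pattern classes (illustrative data).
rng = np.random.default_rng(0)
X = np.vstack([rng.normal(+1.0, 1.0, size=(100, 2)),
               rng.normal(-1.0, 1.0, size=(100, 2))])
y = np.concatenate([np.ones(100), np.zeros(100)])

V, v0, history = train_slp(X, y)
train_error = np.mean((sigmoid(X @ V + v0) > 0.5) != (y == 1))
print(history[0], history[-1], train_error)
```

The `history` list records the cost on the training data only; the optimal stopping point discussed on the slides refers to the true (unknown) cost, which needs separate validation data to estimate.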

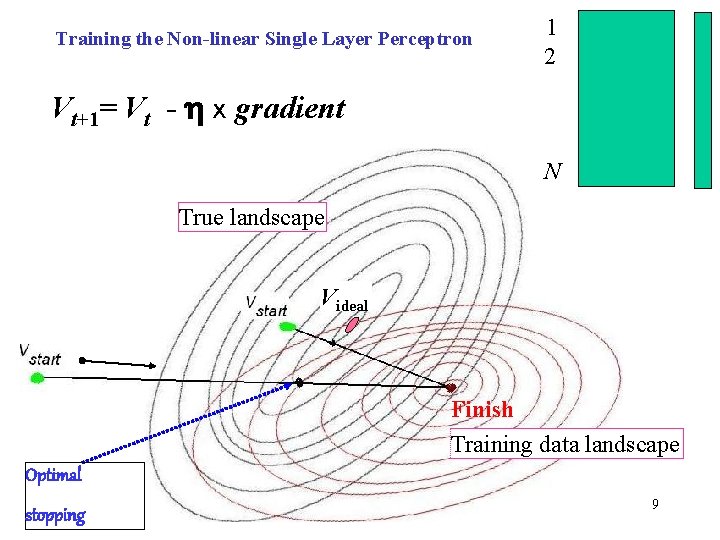

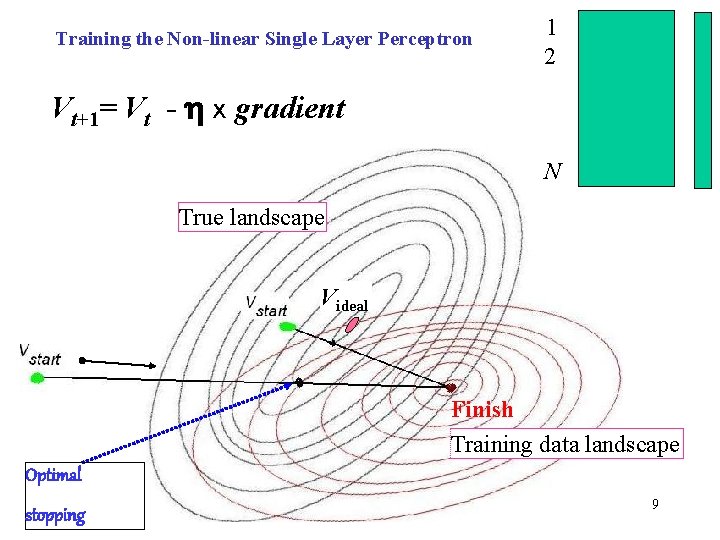

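Training the Non-linear Single Layer Perceptron. $V_{t+1} = V_t - \eta \times \mathrm{gradient}$, with learning step $\eta$. Figure labels: training data ($N$ vectors, classes 1 and 2); the true landscape with $V_{\mathrm{ideal}}$; the training data landscape with Finish; optimal stopping.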

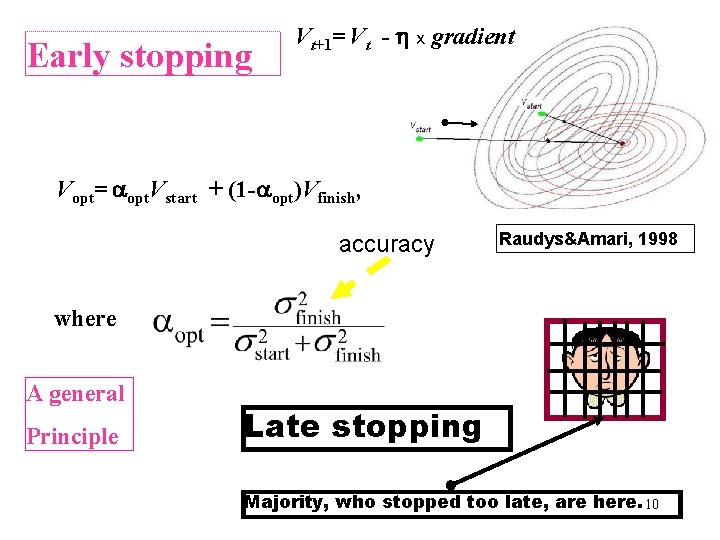

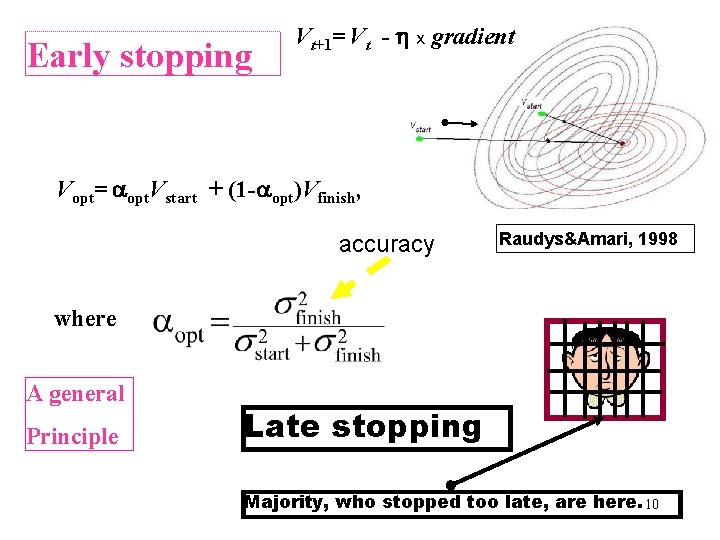

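Early stopping: a general principle. $V_{t+1} = V_t - \eta \times \mathrm{gradient}$, with learning step $\eta$; $V_{\mathrm{opt}} = \alpha_{\mathrm{opt}} V_{\mathrm{start}} + (1 - \alpha_{\mathrm{opt}}) V_{\mathrm{finish}}$ (Raudys & Amari, 1998). Figure labels: accuracy; late stopping: the majority, who stopped too late, are here.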
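The interpolation between the starting and the final weight vectors can be illustrated with a toy example. The slide does not give the expression for the optimal mixing coefficient, so the sketch below simply picks it by a grid search over a validation cost; that selection rule, the quadratic `validation_cost` and all numeric values are assumptions made for illustration.

```python
import numpy as np

def interpolate_weights(V_start, V_finish, alpha):
    """V = alpha * V_start + (1 - alpha) * V_finish."""
    return alpha * V_start + (1.0 - alpha) * V_finish

def choose_alpha(V_start, V_finish, validation_cost, alphas):
    """Grid search for the mixing coefficient with the lowest validation cost."""
    costs = [validation_cost(interpolate_weights(V_start, V_finish, a))
             for a in alphas]
    best = int(np.argmin(costs))
    return alphas[best], costs[best]

# Toy setting: training overshoots the ideal weights (hypothetical numbers).
V_start = np.array([0.0, 0.0])
V_finish = np.array([2.0, 2.0])
V_ideal = np.array([1.0, 1.0])

def validation_cost(V):
    """Stand-in for a cost estimated on validation data."""
    return float(np.sum((V - V_ideal) ** 2))

alphas = np.linspace(0.0, 1.0, 11)
alpha_opt, cost_opt = choose_alpha(V_start, V_finish, validation_cost, alphas)
V_opt = interpolate_weights(V_start, V_finish, alpha_opt)
print(alpha_opt, V_opt)
```

In this toy case both endpoints are equally far from the ideal weights, so the best point lies halfway between them; stopping at `V_finish` would be "too late".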

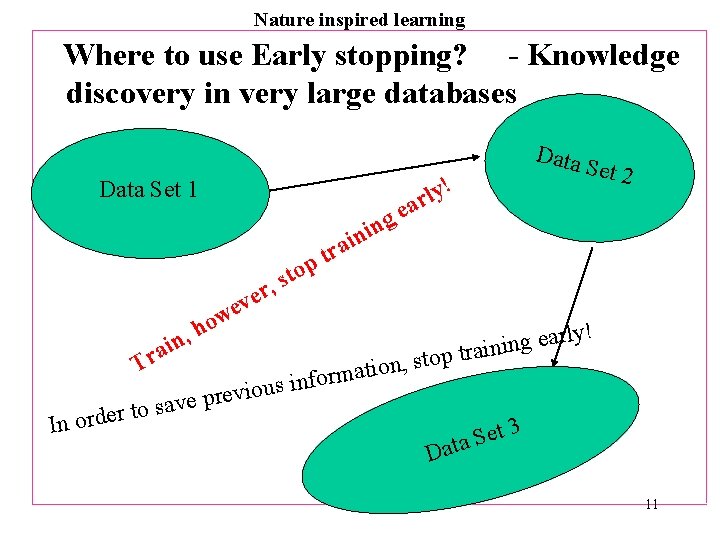

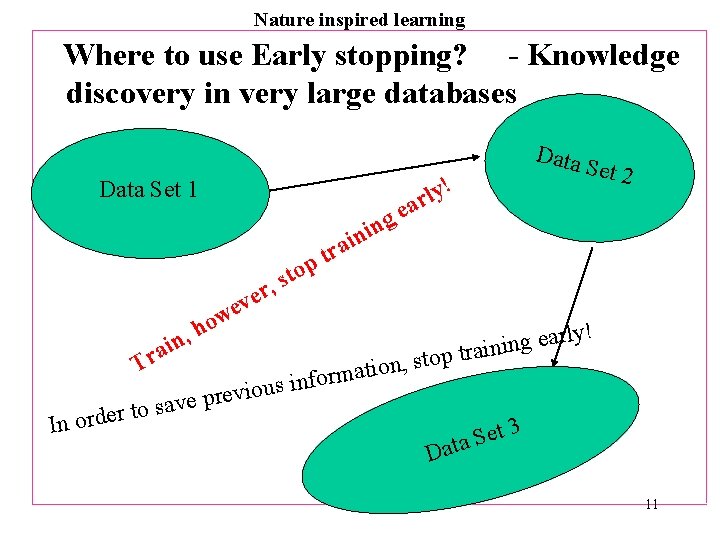

Where to use early stopping? Knowledge discovery in very large databases. Data Set 1, Data Set 2, Data Set 3: train, however, stop training early! In order to save previous information, stop training early!

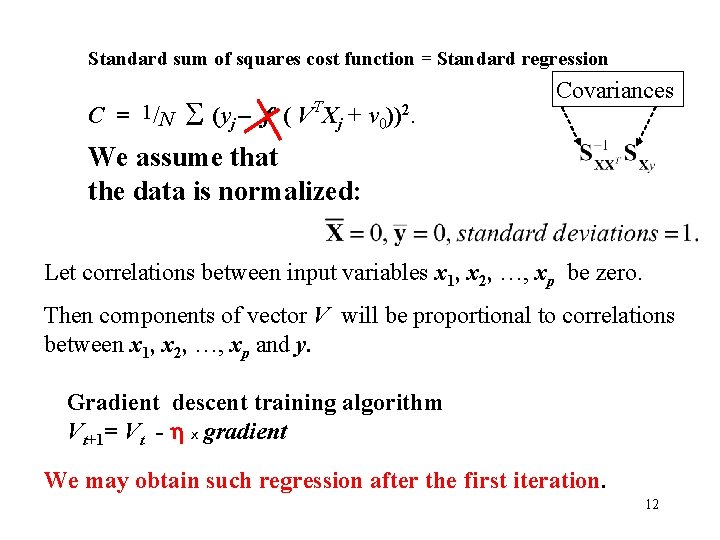

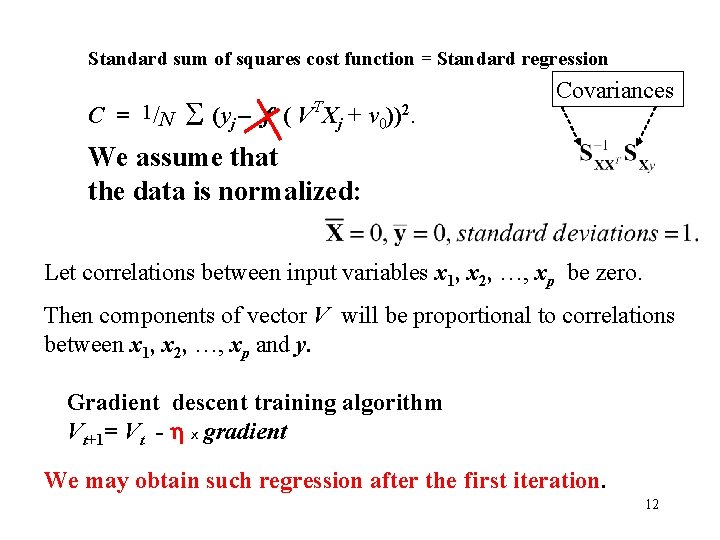

Standard sum of squares cost function = standard regression: $C = \frac{1}{N} \sum_{j=1}^{N} \left(y_j - f(V^T X_j + v_0)\right)^2$. We assume that the data is normalized. Let the correlations between the input variables $x_1, x_2, \dots, x_p$ be zero. Then the components of the vector $V$ will be proportional to the correlations between $x_1, x_2, \dots, x_p$ and $y$. With the gradient descent training algorithm, $V_{t+1} = V_t - \eta \times \mathrm{gradient}$ (learning step $\eta$), we may obtain such a regression after the first iteration.
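A small numerical check of the first-iteration claim, under assumptions not stated on the slide: a linear activation $f(\mathrm{net}) = \mathrm{net}$, zero starting weights, inputs and target standardized to zero mean and unit variance, and a learning step of 1/2. The data are synthetic.

```python
import numpy as np

rng = np.random.default_rng(1)
N, p = 500, 3
X = rng.normal(size=(N, p))                       # nearly uncorrelated inputs
y = X @ np.array([1.0, -0.5, 0.0]) + 0.3 * rng.normal(size=N)

# Normalise the data: zero mean, unit variance for inputs and target.
Xn = (X - X.mean(axis=0)) / X.std(axis=0)
yn = (y - y.mean()) / y.std()

eta = 0.5                                          # learning step (assumed)
V0 = np.zeros(p)                                   # zero starting weights
gradient = -2.0 / N * Xn.T @ (yn - Xn @ V0)        # gradient of the cost at V0
V1 = V0 - eta * gradient                           # first iteration

# Sample correlations between each input and the target.
correlations = np.array([np.corrcoef(X[:, i], y)[0, 1] for i in range(p)])
print(V1)
print(correlations)
```

After this single step the weights equal the input-target correlations; they coincide with the regression solution only to the extent that the inputs are really uncorrelated.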

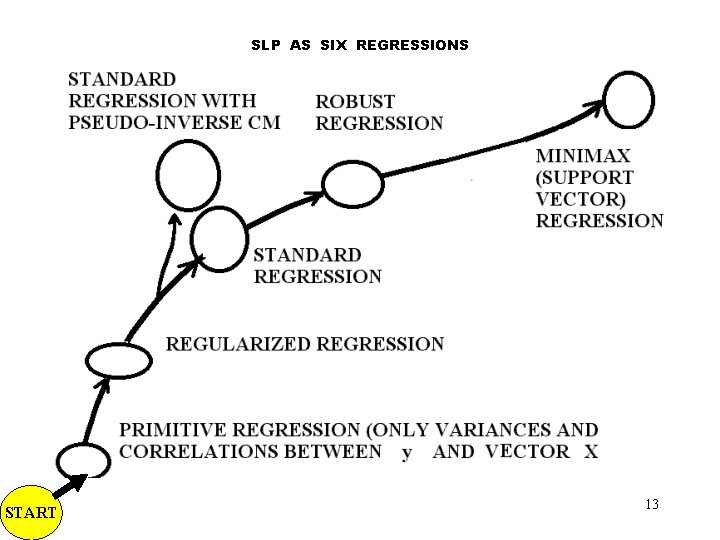

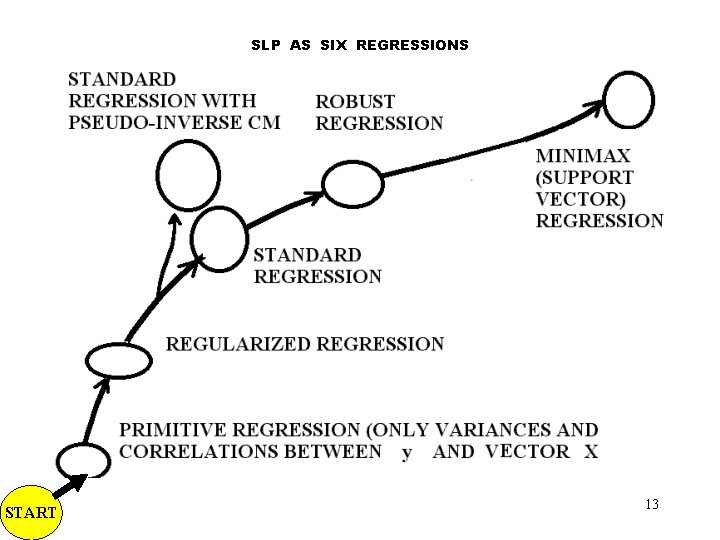

SLP AS SIX REGRESSIONS (figure label: START)

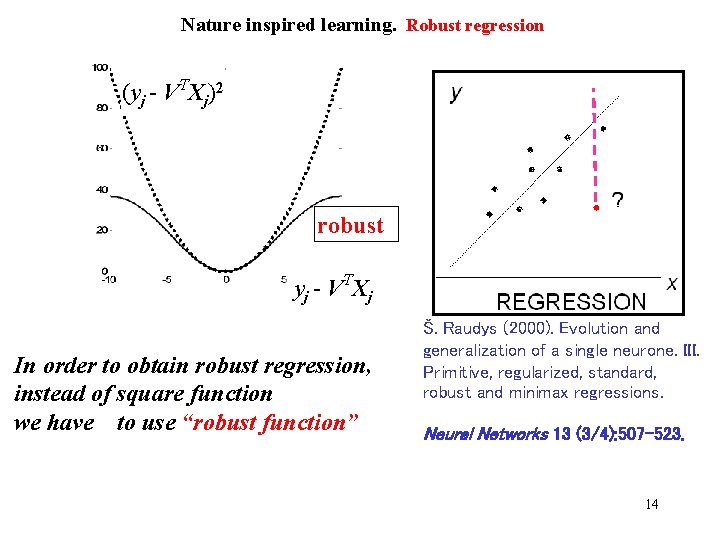

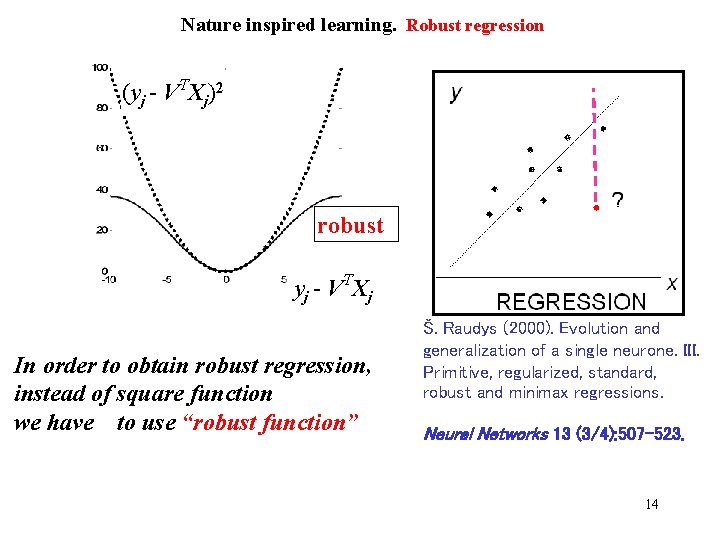

Robust regression. In order to obtain robust regression, instead of the square function $(y_j - V^T X_j)^2$ we have to use a “robust function” of the deviation $y_j - V^T X_j$. Figure: the square function and the robust function plotted against $y_j - V^T X_j$. Š. Raudys (2000). Evolution and generalization of a single neurone. III. Primitive, regularized, standard, robust and minimax regressions. Neural Networks 13 (3/4): 507-523.
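The effect of replacing the square function can be sketched as follows. The slide does not specify the robust function, so this example swaps in the Huber function (quadratic for small deviations, linear for large ones) purely as an assumption; the data, the clipping threshold and the learning step are also made up.

```python
import numpy as np

def square_psi(r):
    """Derivative of the loss r^2 / 2: every deviation counts in full."""
    return r

def huber_psi(r, c=1.0):
    """Derivative of the Huber loss: large deviations are clipped at +-c."""
    return np.clip(r, -c, c)

def fit_linear(x, y, psi, eta=0.1, epochs=3000):
    """Gradient descent on mean loss(y_j - v0 - v * x_j); returns [v0, v]."""
    N = x.shape[0]
    Xa = np.column_stack([np.ones(N), x])    # bias column plus the input
    W = np.zeros(2)
    for _ in range(epochs):
        r = y - Xa @ W                       # deviations y_j - V^T X_j
        W = W + eta * Xa.T @ psi(r) / N      # step against the loss gradient
    return W

# Line y = 2x with small noise; 10% of the points are gross outliers.
rng = np.random.default_rng(2)
N = 200
x = rng.normal(size=N)
y = 2.0 * x + 0.1 * rng.normal(size=N)
outliers = np.argsort(x)[-20:]
y[outliers] = -20.0

true_W = np.array([0.0, 2.0])
W_square = fit_linear(x, y, square_psi)
W_robust = fit_linear(x, y, huber_psi)
err_square = np.linalg.norm(W_square - true_W)
err_robust = np.linalg.norm(W_robust - true_W)
print(W_square, W_robust)
```

The squared loss lets the twenty outliers drag the fitted line away, while the clipped derivative limits the pull of each outlier, so the robust fit stays much closer to the underlying line.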

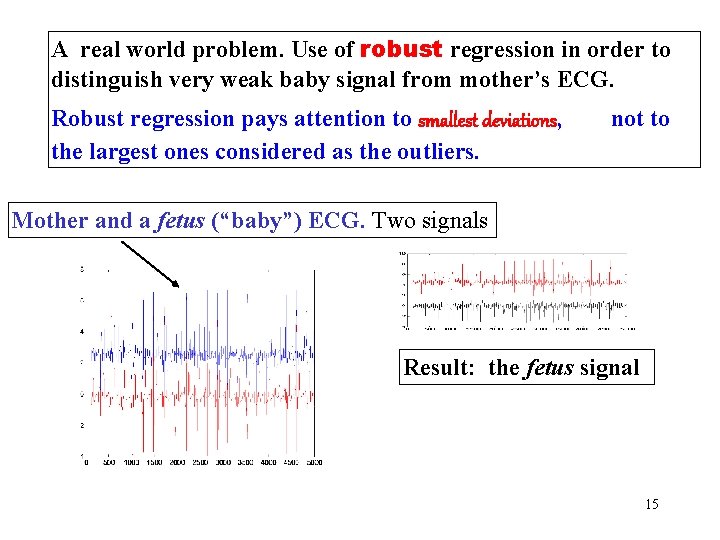

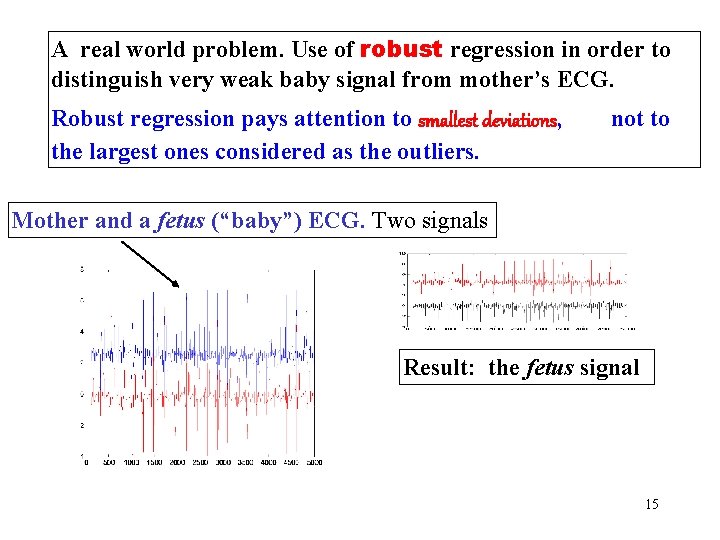

A real-world problem: use of robust regression to distinguish a very weak baby signal from the mother’s ECG. Robust regression pays attention to the smallest deviations, not to the largest ones, which are considered outliers. Figure: two signals, the mother’s and the fetus (“baby”) ECG; result: the fetus signal.

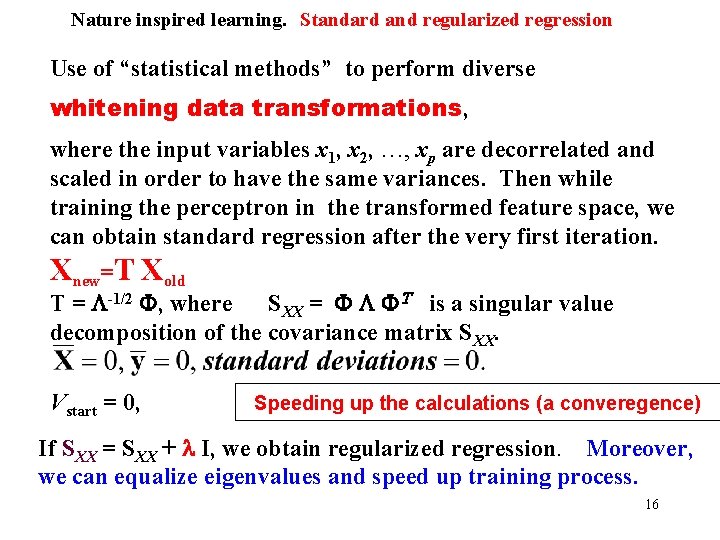

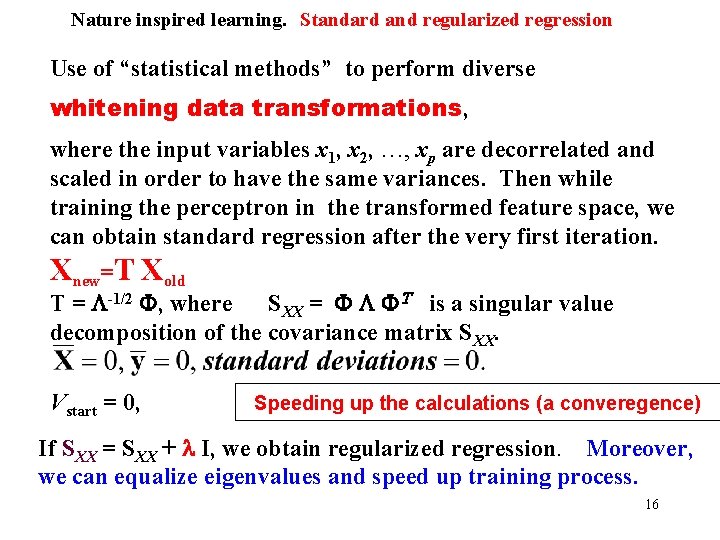

Standard and regularized regression. Use of “statistical methods” to perform diverse whitening data transformations, where the input variables $x_1, x_2, \dots, x_p$ are decorrelated and scaled in order to have the same variances. Then, while training the perceptron in the transformed feature space with $V_{\mathrm{start}} = 0$, we can obtain standard regression after the very first iteration. The transformation is $X_{\mathrm{new}} = T X_{\mathrm{old}}$ with $T = \Lambda^{-1/2} \Phi^T$, where $S_{XX} = \Phi \Lambda \Phi^T$ is a singular value decomposition of the covariance matrix $S_{XX}$. This speeds up the calculations (the convergence). If $S_{XX}$ is replaced by $S_{XX} + \lambda I$, we obtain regularized regression. Moreover, we can equalize the eigenvalues and speed up the training process.
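The whitening transformation and its effect on the first iteration can be checked numerically. This is a sketch under assumptions: a linear activation, centred data, zero starting weights, a learning step of 1/2, and the transformation read as $T = \Lambda^{-1/2} \Phi^T$. The helper names and the synthetic data are hypothetical.

```python
import numpy as np

def whitening_matrix(S, lam=0.0):
    """T = (Lambda + lam*I)^(-1/2) Phi^T from S = Phi Lambda Phi^T."""
    eigvals, Phi = np.linalg.eigh(S)
    return np.diag(1.0 / np.sqrt(eigvals + lam)) @ Phi.T

# Correlated inputs and a linear target (synthetic data), centred.
rng = np.random.default_rng(3)
N, p = 400, 3
A = np.array([[1.0, 0.8, 0.0], [0.0, 1.0, 0.5], [0.0, 0.0, 1.0]])
X = rng.normal(size=(N, p)) @ A
y = X @ np.array([1.0, -2.0, 0.5]) + 0.2 * rng.normal(size=N)
X = X - X.mean(axis=0)
y = y - y.mean()

S = X.T @ X / N              # sample covariance matrix S_XX
s_xy = X.T @ y / N           # covariances between the inputs and y

def one_step_regression(lam):
    """One gradient step from V = 0 in the transformed space, eta = 1/2."""
    T = whitening_matrix(S, lam)
    X_new = X @ T.T                          # X_new = T X_old for every vector
    V1 = 0.5 * 2.0 / N * X_new.T @ y         # V_1 = 0 - eta * gradient
    return T.T @ V1                          # weights in the original space

T0 = whitening_matrix(S)
cov_new = T0 @ S @ T0.T                      # covariance after whitening
V_standard = one_step_regression(0.0)
V_regularized = one_step_regression(0.1)
print(V_standard, V_regularized)
```

With `lam=0.0` the single step reproduces the least squares solution $S_{XX}^{-1} s_{xy}$; a positive `lam` gives the ridge-type solution $(S_{XX} + \lambda I)^{-1} s_{xy}$.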

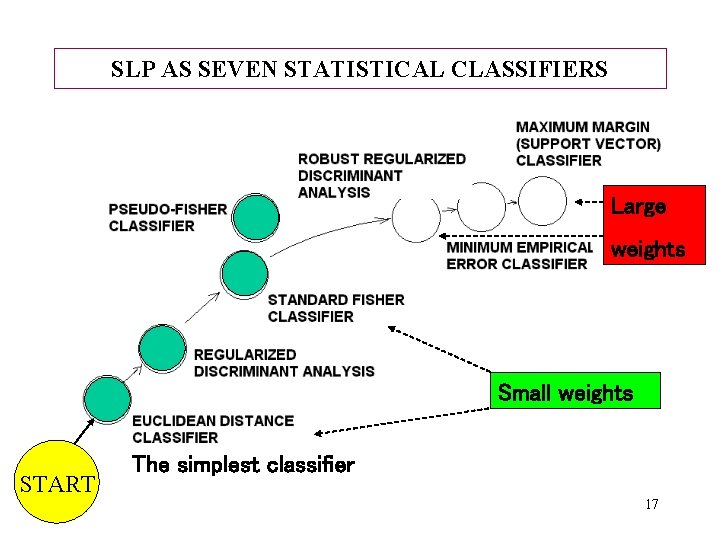

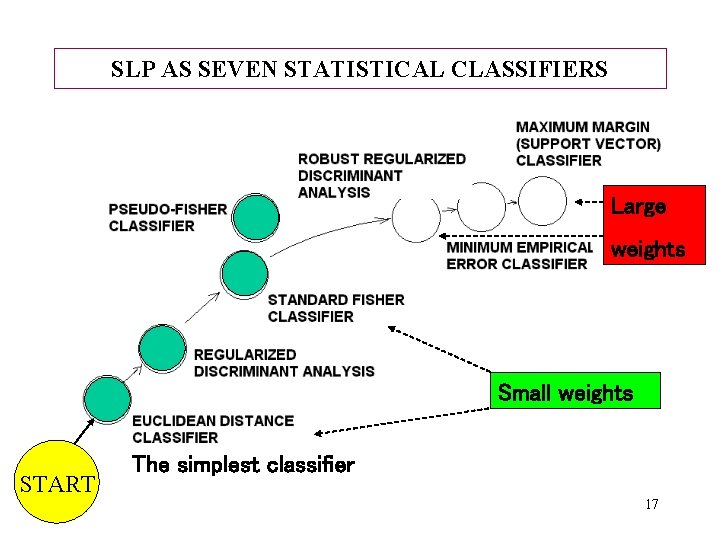

SLP AS SEVEN STATISTICAL CLASSIFIERS. Figure labels: START; small weights; large weights; the simplest classifier.

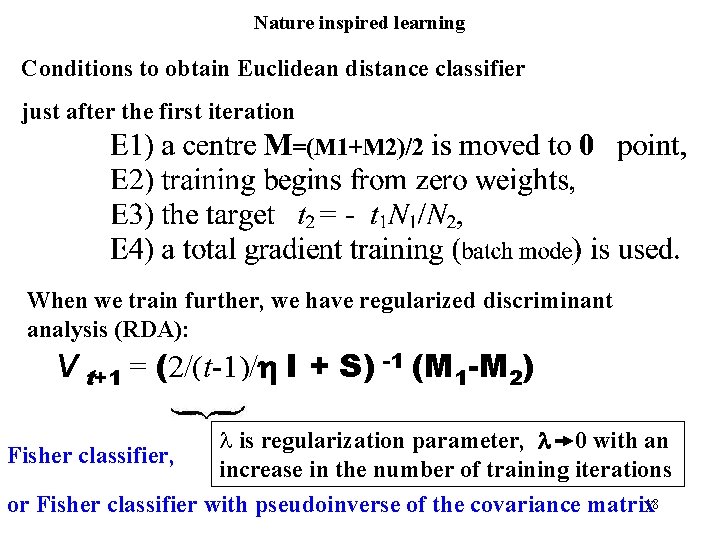

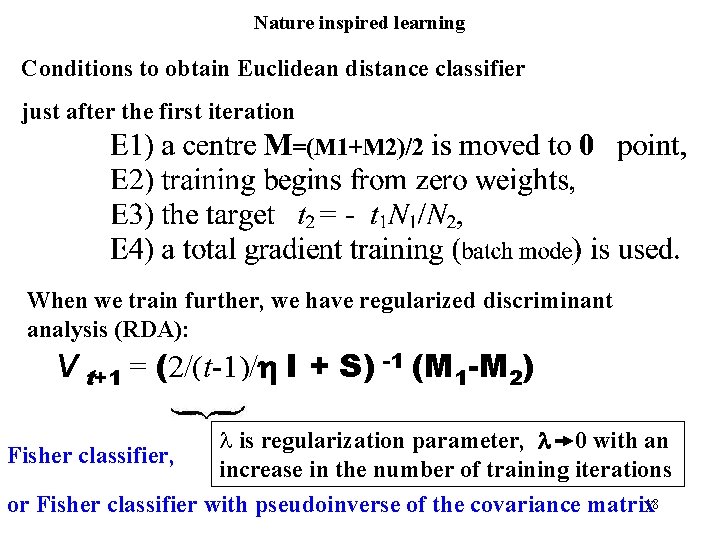

Conditions to obtain the Euclidean distance classifier just after the first iteration. When we train further, we have regularized discriminant analysis (RDA): $V_{t+1} = \left(\frac{2}{(t-1)\eta} I + S\right)^{-1} (M_1 - M_2)$, where $\eta$ is the learning step and $\lambda = \frac{2}{(t-1)\eta}$ is the regularization parameter; $\lambda \to 0$ with an increase in the number of training iterations, which gives the Fisher classifier, or the Fisher classifier with pseudoinverse of the covariance matrix.
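The path from the Euclidean distance classifier to the Fisher classifier can be illustrated by evaluating the RDA weights for a decreasing regularization parameter. The sketch reads the slide's expression as $2/((t-1)\eta)$; the synthetic data, the pooled covariance estimate and the value of the learning step are assumptions.

```python
import numpy as np

rng = np.random.default_rng(4)
p, n = 4, 200
A = rng.normal(size=(p, p))                  # mixing matrix: correlated features
X1 = rng.normal(size=(n, p)) @ A + 1.0       # class 1 training vectors
X2 = rng.normal(size=(n, p)) @ A - 1.0       # class 2 training vectors

M1, M2 = X1.mean(axis=0), X2.mean(axis=0)
S = ((X1 - M1).T @ (X1 - M1) + (X2 - M2).T @ (X2 - M2)) / (2 * n - 2)

def rda_weights(lam):
    """V = (lam * I + S)^(-1) (M1 - M2)."""
    return np.linalg.solve(lam * np.eye(p) + S, M1 - M2)

def lam_of_t(t, eta):
    """Regularization parameter after t iterations, read as 2 / ((t - 1) * eta)."""
    return 2.0 / ((t - 1) * eta)

def cosine(a, b):
    return a @ b / (np.linalg.norm(a) * np.linalg.norm(b))

V_edc = M1 - M2                              # Euclidean distance classifier
V_fisher = np.linalg.solve(S, M1 - M2)       # Fisher classifier

cos_large = cosine(rda_weights(1e6), V_edc)      # strong regularization
cos_small = cosine(rda_weights(1e-9), V_fisher)  # almost no regularization
for t in (2, 10, 100, 10000):
    V_t = rda_weights(lam_of_t(t, eta=0.1))
    print(t, cosine(V_t, V_edc), cosine(V_t, V_fisher))
```

Early iterations (large regularization) point in the direction of the difference of the means; late iterations approach the Fisher direction.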

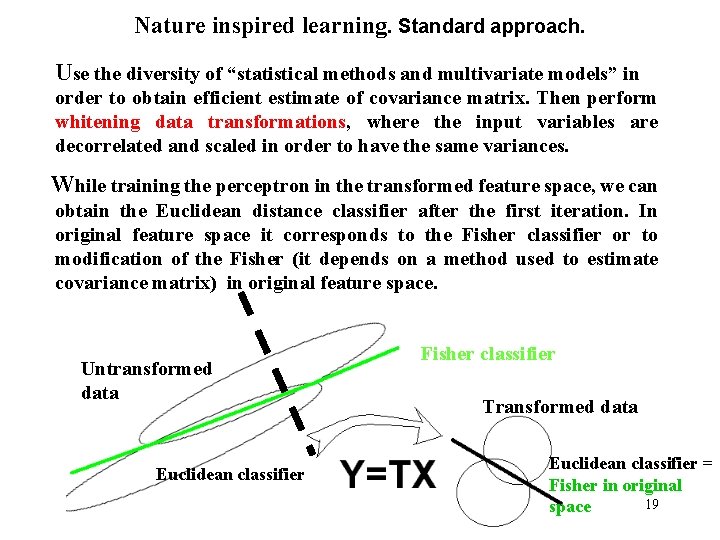

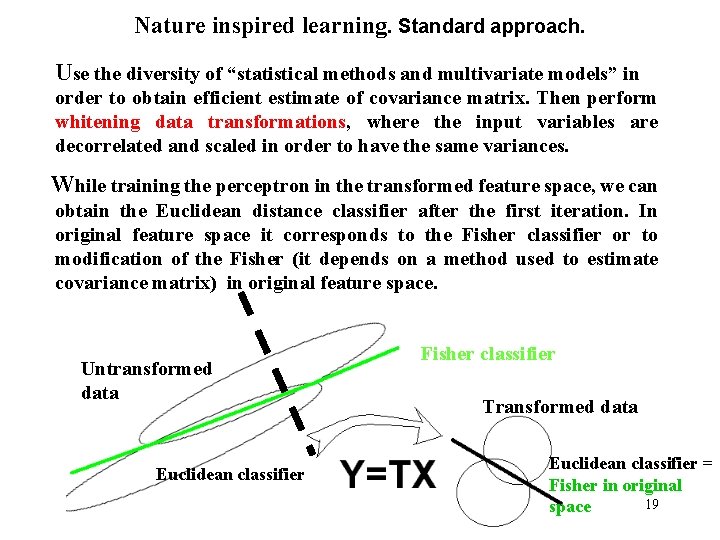

Standard approach. Use the diversity of “statistical methods and multivariate models” in order to obtain an efficient estimate of the covariance matrix. Then perform a whitening data transformation, where the input variables are decorrelated and scaled in order to have the same variances. While training the perceptron in the transformed feature space, we can obtain the Euclidean distance classifier after the first iteration. In the original feature space it corresponds to the Fisher classifier or to a modification of the Fisher classifier (it depends on the method used to estimate the covariance matrix). Figure labels: untransformed data, Euclidean classifier and Fisher classifier; transformed data, Euclidean classifier = Fisher in the original space.
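The equivalence stated above can be verified numerically: the Euclidean distance classifier built in the whitened space gives the same discriminant scores as the Fisher classifier in the original space. The sketch assumes the pooled sample covariance matrix as the estimate and uses synthetic data.

```python
import numpy as np

rng = np.random.default_rng(5)
p, n = 3, 150
A = np.array([[1.0, 0.7, 0.2], [0.0, 1.0, 0.6], [0.0, 0.0, 1.0]])
shift = np.array([1.0, 0.0, 0.5])
X1 = rng.normal(size=(n, p)) @ A + shift     # class 1
X2 = rng.normal(size=(n, p)) @ A - shift     # class 2

M1, M2 = X1.mean(axis=0), X2.mean(axis=0)
S = ((X1 - M1).T @ (X1 - M1) + (X2 - M2).T @ (X2 - M2)) / (2 * n - 2)

# Whitening transformation T = Lambda^(-1/2) Phi^T.
eigvals, Phi = np.linalg.eigh(S)
T = np.diag(eigvals ** -0.5) @ Phi.T

# Euclidean distance classifier in the transformed space.
M1_new, M2_new = T @ M1, T @ M2
w_new = M1_new - M2_new
b_new = -0.5 * (M1_new + M2_new) @ w_new

# Fisher classifier in the original space.
w_fisher = np.linalg.solve(S, M1 - M2)
b_fisher = -0.5 * (M1 + M2) @ w_fisher

X_all = np.vstack([X1, X2])
scores_edc_whitened = (X_all @ T.T) @ w_new + b_new
scores_fisher = X_all @ w_fisher + b_fisher
print(np.max(np.abs(scores_edc_whitened - scores_fisher)))
```

A different covariance estimate (for example a structured or regularized one) would change `T` and therefore yield a modification of the Fisher classifier rather than the Fisher classifier itself.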

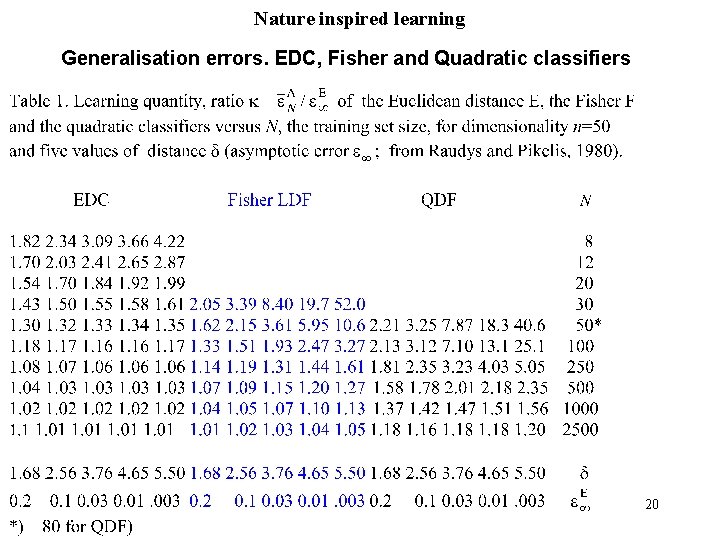

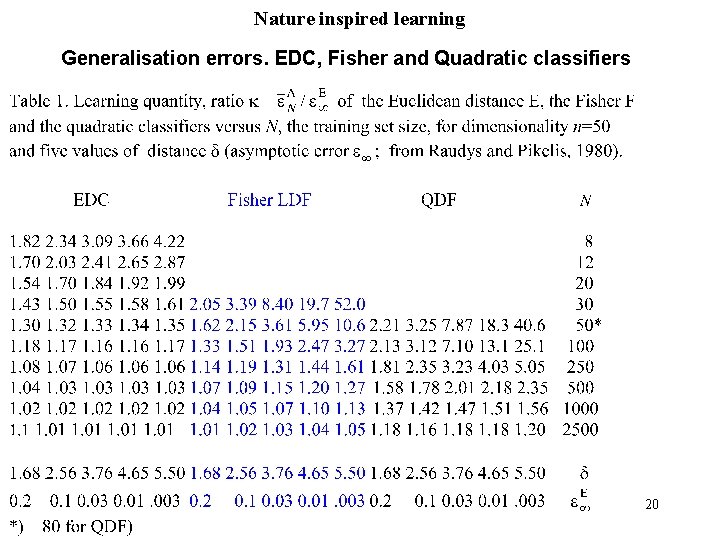

Generalisation errors: EDC, Fisher and quadratic classifiers.

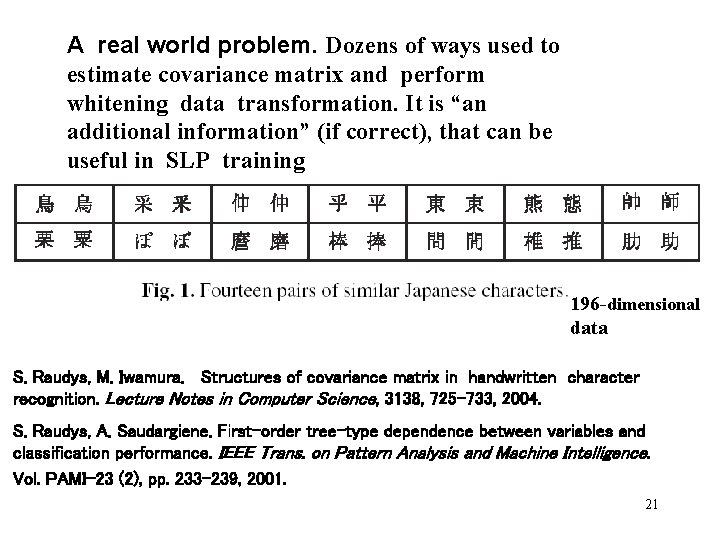

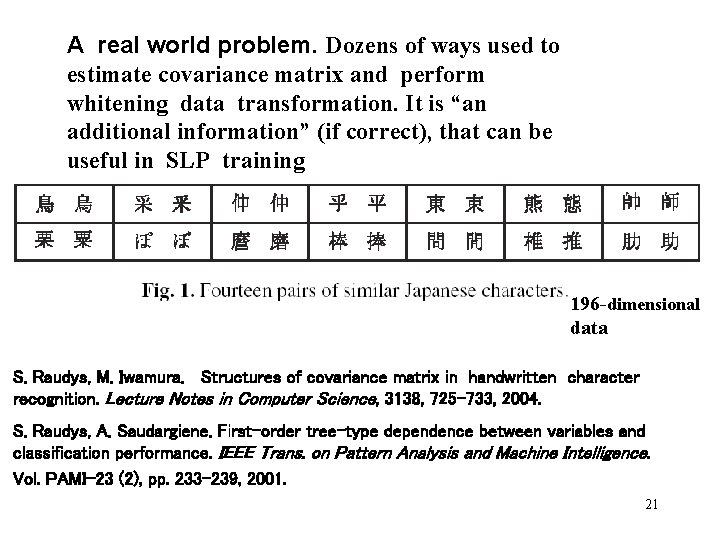

A real-world problem: 196-dimensional data. Dozens of ways are used to estimate the covariance matrix and perform the whitening data transformation. This is “additional information” (if correct) that can be useful in SLP training. S. Raudys, M. Iwamura. Structures of covariance matrix in handwritten character recognition. Lecture Notes in Computer Science, 3138, 725-733, 2004. S. Raudys, A. Saudargiene. First-order tree-type dependence between variables and classification performance. IEEE Trans. on Pattern Analysis and Machine Intelligence, Vol. PAMI-23 (2), pp. 233-239, 2001.

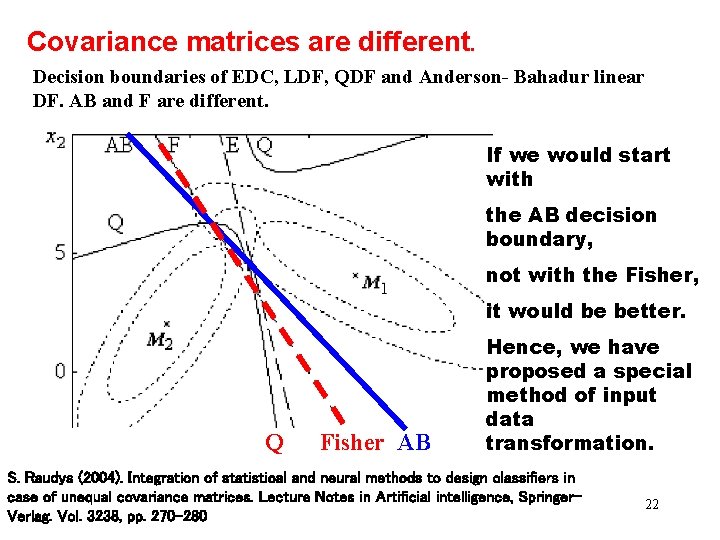

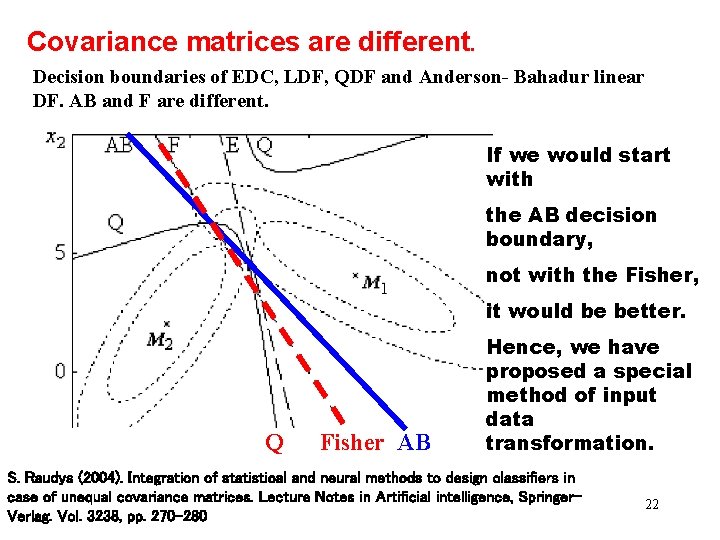

Covariance matrices are different. Decision boundaries of the EDC, LDF, QDF and the Anderson-Bahadur (AB) linear DF (figure labels: Q, Fisher, AB). The AB and Fisher (F) boundaries are different. If we started with the AB decision boundary rather than with the Fisher one, it would be better. Hence, we have proposed a special method of input data transformation. S. Raudys (2004). Integration of statistical and neural methods to design classifiers in case of unequal covariance matrices. Lecture Notes in Artificial Intelligence, Springer-Verlag, Vol. 3238, pp. 270-280.

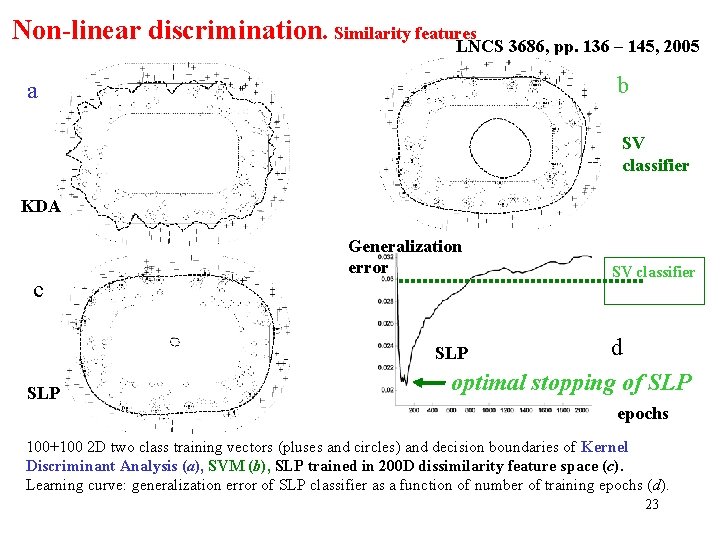

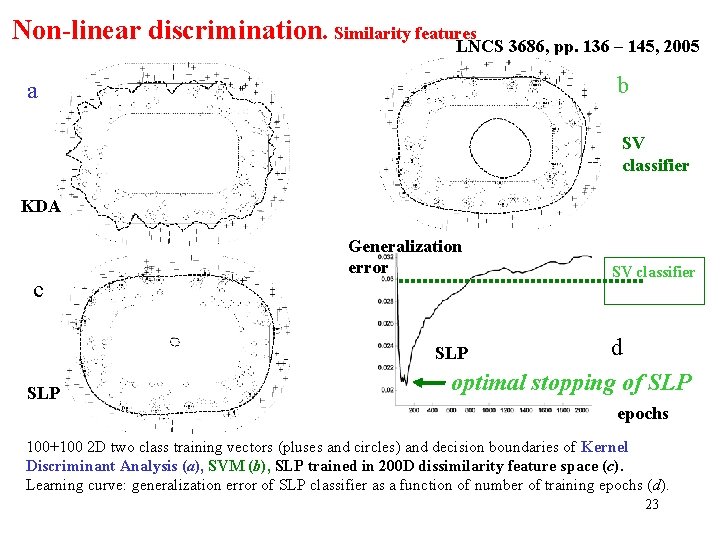

Non-linear discrimination. Similarity features (LNCS 3686, pp. 136-145, 2005). Figure: 100+100 2D two-class training vectors (pluses and circles) and decision boundaries of Kernel Discriminant Analysis (a), SVM (b), and SLP trained in 200D dissimilarity feature space (c). Learning curve (d): generalization error of the SLP classifier as a function of the number of training epochs, with the optimal stopping of the SLP and the SV classifier marked.

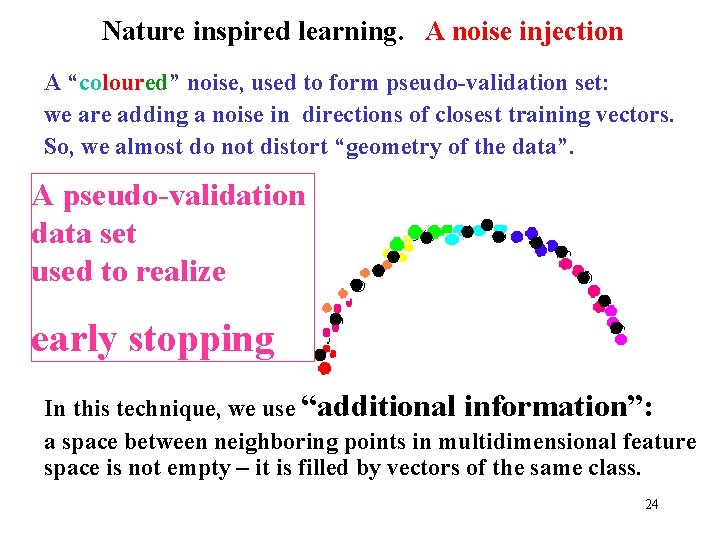

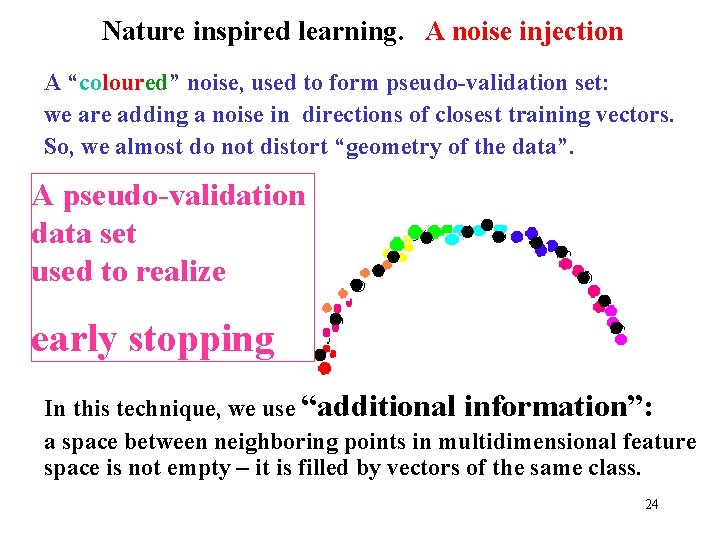

A noise injection. A “coloured” noise is used to form a pseudo-validation set: we add noise in the directions of the closest training vectors, so we almost do not distort the “geometry of the data”. The pseudo-validation data set is used to realize early stopping. In this technique, we use “additional information”: the space between neighbouring points in the multidimensional feature space is not empty; it is filled by vectors of the same class.
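One possible reading of this noise injection is sketched below: each training vector of a class is perturbed along the directions to its nearest neighbours of the same class. The function name, the Gaussian scaling of the directions and the parameter values (`k`, `copies`, `scale`) are assumptions; the slide does not specify them.

```python
import numpy as np

def coloured_noise_copies(X, k=2, copies=3, scale=0.5, rng=None):
    """Pseudo-validation vectors for ONE class.

    Each vector is shifted by a random combination of the directions to its
    k nearest neighbours within X, so the noise follows the local data shape.
    """
    rng = np.random.default_rng() if rng is None else rng
    N = X.shape[0]
    d2 = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)   # squared distances
    np.fill_diagonal(d2, np.inf)                 # a vector is not its own neighbour
    neighbours = np.argsort(d2, axis=1)[:, :k]   # indices of the k nearest vectors
    directions = X[neighbours] - X[:, None, :]   # shape (N, k, p)
    out = []
    for _ in range(copies):
        g = scale * rng.normal(size=(N, k, 1))   # random step along each direction
        out.append(X + (g * directions).sum(axis=1))
    return np.vstack(out)

# Toy class whose vectors lie on the line x2 = 2 * x1.
t = np.linspace(-1.0, 1.0, 20)
X_class = np.column_stack([t, 2.0 * t])
pseudo = coloured_noise_copies(X_class, rng=np.random.default_rng(6))
print(pseudo.shape)
```

In the toy example the generated vectors stay on the same line as the training vectors, which is the sense in which the noise does not distort the geometry of the data; spherical noise would scatter them off the line.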

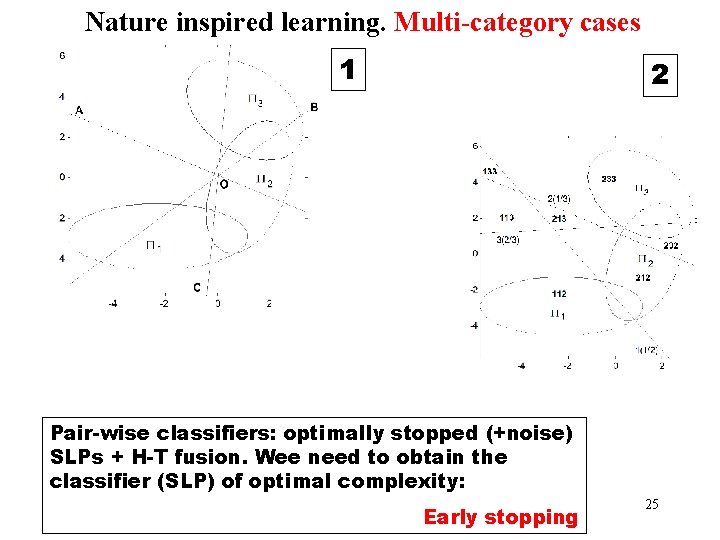

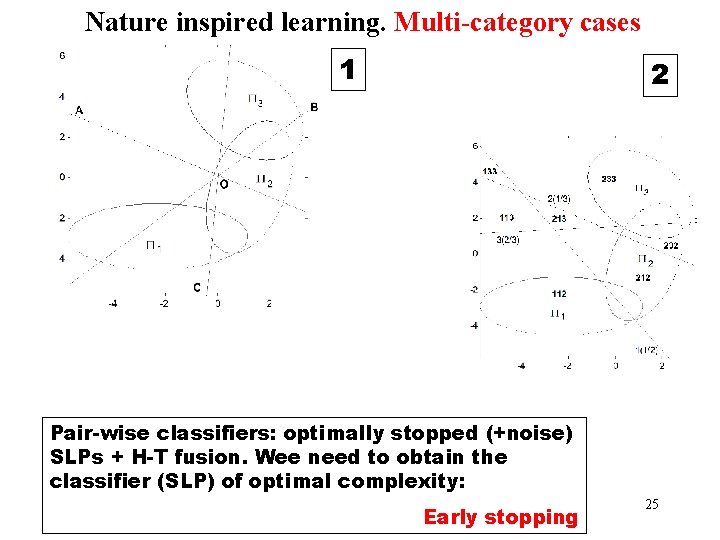

Multi-category cases. Pair-wise classifiers: optimally stopped (+noise) SLPs + H-T fusion. We need to obtain a classifier (SLP) of optimal complexity: early stopping.

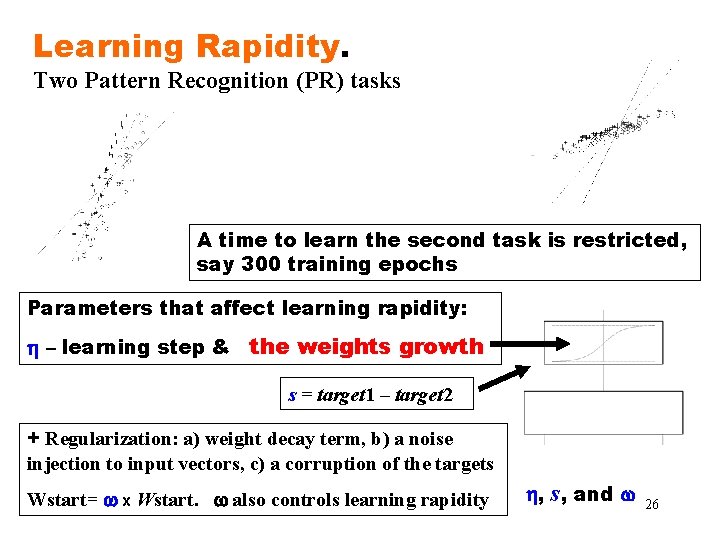

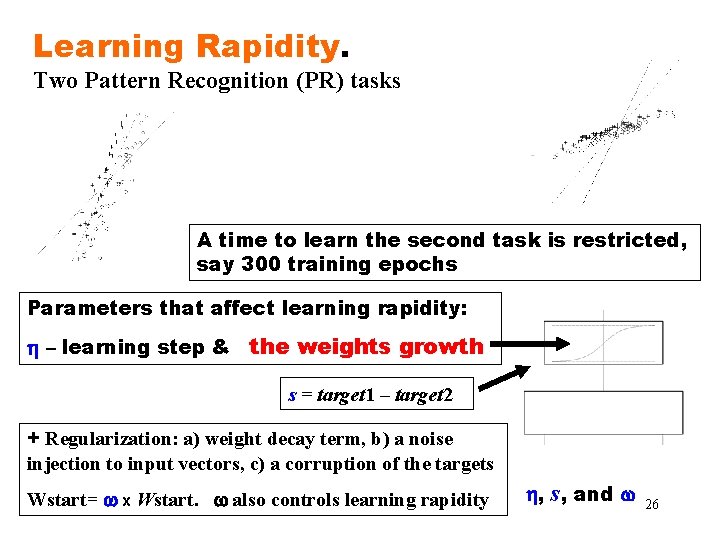

Learning rapidity. Two pattern recognition (PR) tasks. The time to learn the second task is restricted, say to 300 training epochs. Parameters that affect learning rapidity: $\eta$, the learning step, and the growth of the weights; $s$ = target 1 - target 2; plus regularization: a) a weight decay term, b) a noise injection to the input vectors, c) a corruption of the targets. The starting weights are rescaled, $W_{\mathrm{start}} = w \times W_{\mathrm{start}}$; $w$ also controls learning rapidity. The three parameters are therefore $\eta$, $s$ and $w$.

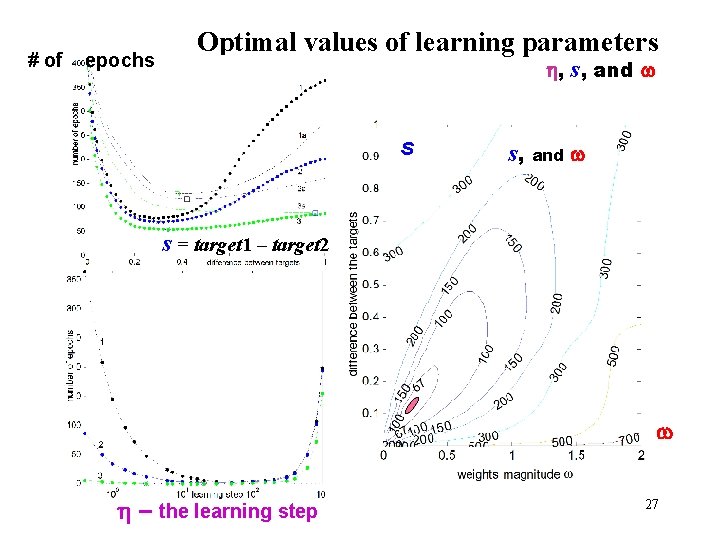

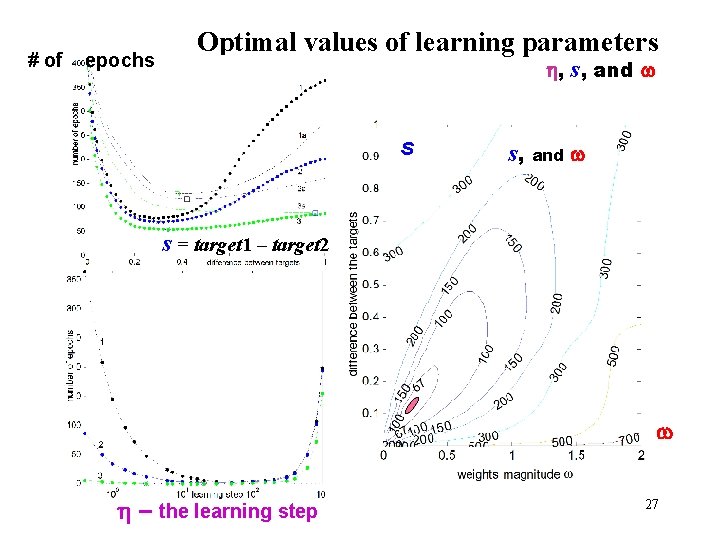

Optimal values of the learning parameters $\eta$ (the learning step), $s$ = target 1 - target 2, and $w$. Figure axis: number of epochs.

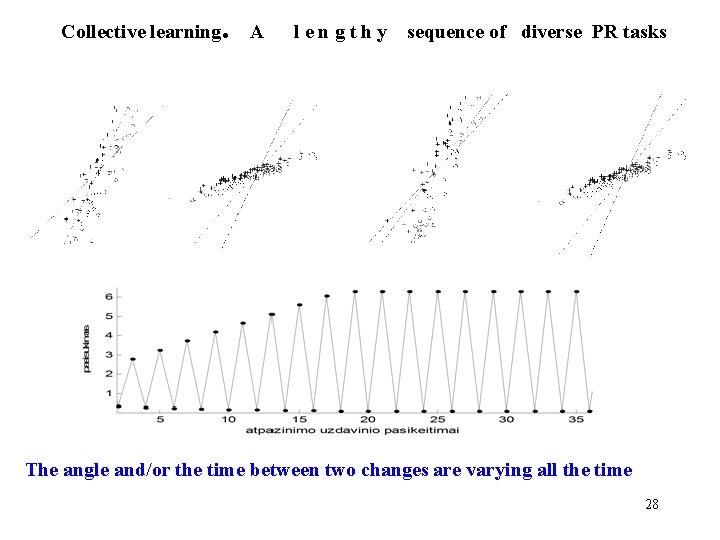

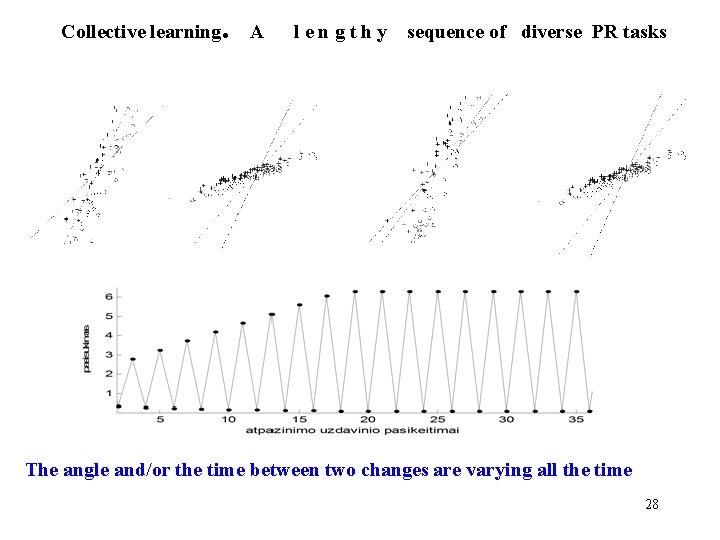

Collective learning. A lengthy sequence of diverse PR tasks. The angle and/or the time between two changes vary all the time.

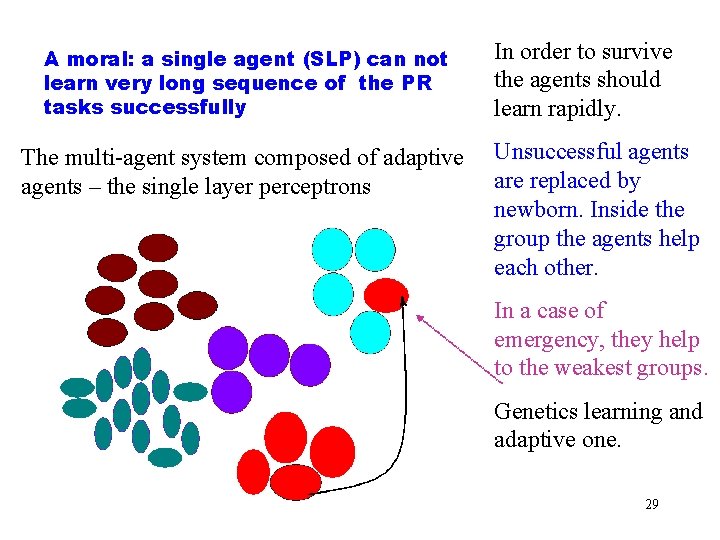

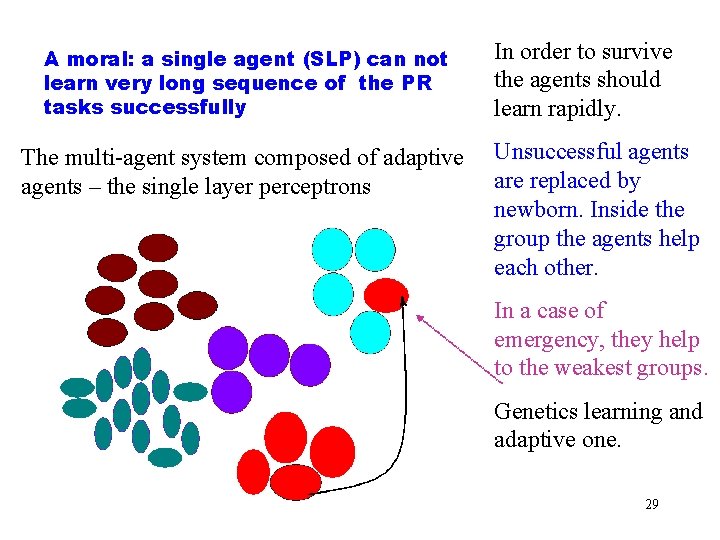

A moral: a single agent (SLP) cannot successfully learn a very long sequence of PR tasks. The multi-agent system is composed of adaptive agents, the single layer perceptrons. In order to survive, the agents should learn rapidly. Unsuccessful agents are replaced by newborns. Inside the group the agents help each other. In case of emergency, they help the weakest groups. Genetic learning and adaptive learning.

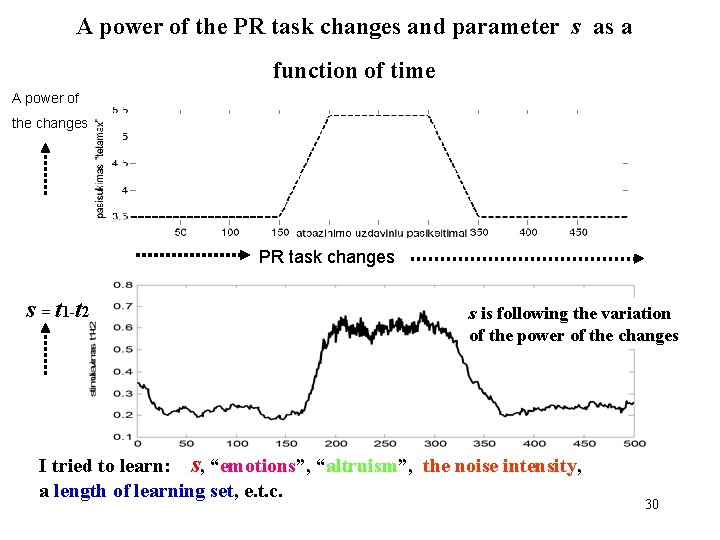

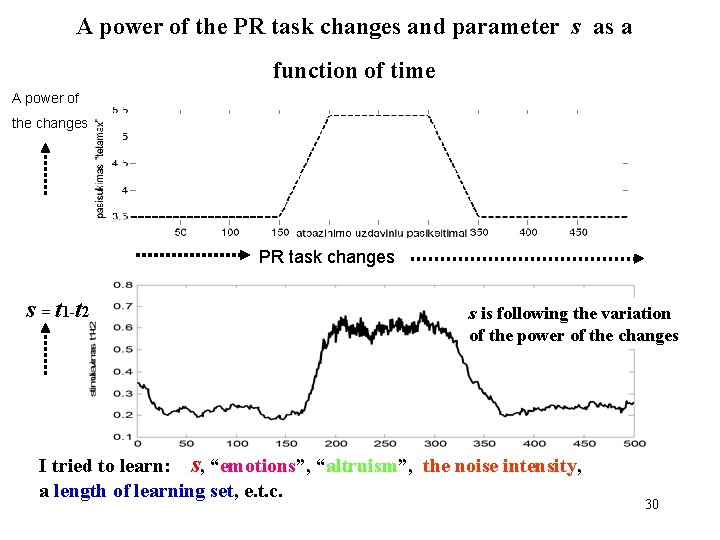

The power of the PR task changes and the parameter $s$ as functions of time, where $s = t_1 - t_2$ (target 1 minus target 2). $s$ follows the variation of the power of the changes. I tried to learn: $s$, “emotions”, “altruism”, the noise intensity, the length of the learning set, etc.

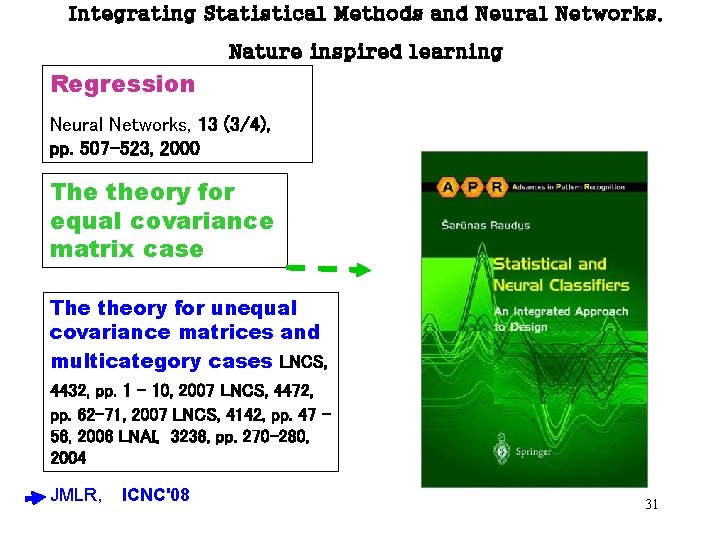

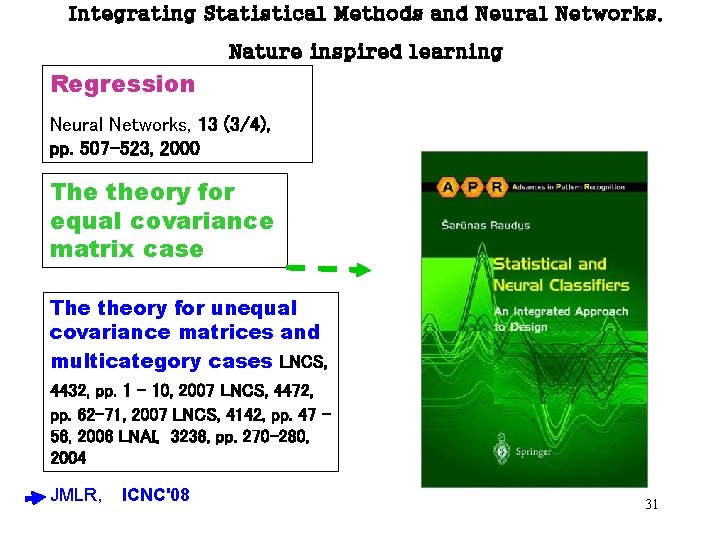

Integrating Statistical Methods and Neural Networks. Regression: Neural Networks, 13 (3/4), pp. 507-523, 2000. The theory for the equal covariance matrix case. The theory for unequal covariance matrices and multi-category cases. References shown: LNCS, 4432, pp. 1-10, 2007; LNCS, 4472, pp. 62-71, 2007; LNCS, 4142, pp. 47-56, 2006; LNAI, 3238, pp. 270-280, 2004; JMLR; ICNC'08.

