Natural Language Processing Lecture Notes 3 (11/4/2020)

- Slides: 36

Morphology (Ch 3)

- Finite-state methods are particularly useful in dealing with a lexicon.
- So we’ll switch to talking about some facts about words and then come back to computational methods.

English Morphology

- Morphology is the study of the ways that words are built up from smaller meaningful units called morphemes
- We can usefully divide morphemes into two classes
  - Stems: the core meaning-bearing units
  - Affixes: bits and pieces that adhere to stems to change their meanings and grammatical functions

Examples

- Insubstantial, trying, unreadable

English Morphology

- We can also divide morphology up into two broad classes
  - Inflectional
  - Derivational

Word Classes

- By word class, we have in mind familiar notions like noun and verb
- We’ll go into the gory details when we cover POS tagging
- Right now we’re concerned with word classes because the way that stems and affixes combine is based to a large degree on the word class of the stem

Inflectional Morphology

- Inflectional morphology concerns the combination of stems and affixes where the resulting word
  - Has the same word class as the original
  - Serves a grammatical purpose different from the original (agreement, tense)
- Examples: birds; likes or liked

Nouns and Verbs (English)

- Nouns are simple
  - Markers for plural and possessive
- Verbs are only slightly more complex
  - Markers appropriate to the tense of the verb

Regulars and Irregulars

- OK, so it gets a little complicated by the fact that some words misbehave (refuse to follow the rules)
  - Mouse/mice, goose/geese, ox/oxen
  - Go/went, fly/flew
- The terms regular and irregular will be used to refer to words that follow the rules and those that don’t. (This is a different meaning than in “regular” languages!)

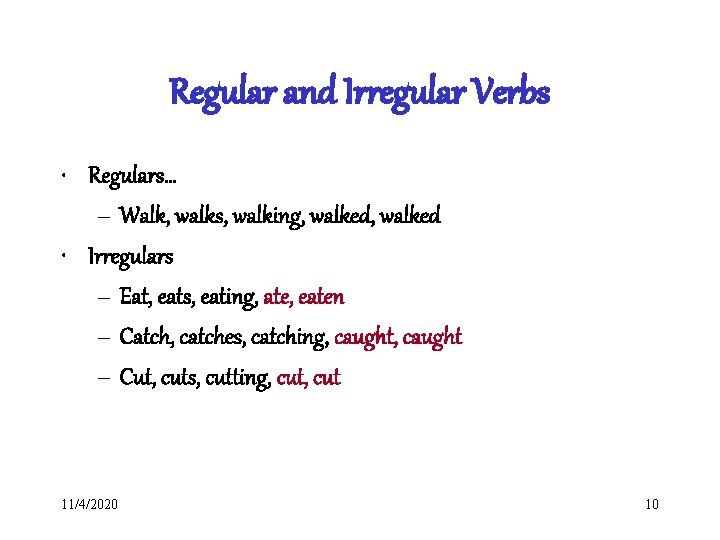

Regular and Irregular Verbs

- Regulars
  - Walk, walks, walking, walked
- Irregulars
  - Eat, eats, eating, ate, eaten
  - Catch, catches, catching, caught
  - Cut, cuts, cutting, cut

Regular Verbs

- If you know a regular verb stem, you can predict the other forms by adding a predictable ending and making regular spelling changes (details in the chapter)
- The regular class is productive: it includes new verbs.
  - Emailed, instant-messaged, faxed, googled, …
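
To make "predictable ending plus regular spelling changes" concrete, here is a minimal Python sketch. The function name `regular_forms` and the particular spelling rules are assumptions chosen for illustration; they cover only a few common cases (consonant doubling, as in stop/stopped, is deliberately left out) and are not the chapter's full rule set.

```python
VOWELS = set("aeiou")


def regular_forms(stem):
    """Return the -s, -ing and -ed forms of a regular English verb stem."""
    consonant_y = len(stem) > 1 and stem.endswith("y") and stem[-2] not in VOWELS

    # -s form: "es" after sibilant endings, consonant + y becomes "ies".
    if stem.endswith(("s", "x", "z", "ch", "sh")):
        third = stem + "es"
    elif consonant_y:
        third = stem[:-1] + "ies"
    else:
        third = stem + "s"

    # -ing and -ed forms: a final "e" is dropped before -ing (unless "ee")
    # and only "d" is added; consonant + y becomes "ied" in the past form.
    if stem.endswith("e"):
        ing = stem + "ing" if stem.endswith("ee") else stem[:-1] + "ing"
        past = stem + "d"
    elif consonant_y:
        ing, past = stem + "ing", stem[:-1] + "ied"
    else:
        ing, past = stem + "ing", stem + "ed"

    return {"stem": stem, "-s": third, "-ing": ing, "-ed": past}


for verb in ["walk", "email", "fax", "google"]:
    print(regular_forms(verb))
```

Because the rules look only at the stem's spelling, they apply unchanged to newly coined verbs such as "google", which is what makes the regular class productive.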

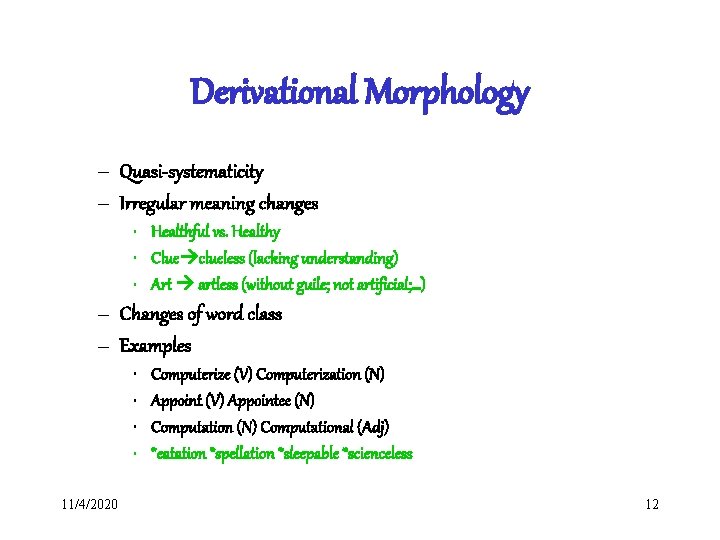

Derivational Morphology

- Quasi-systematicity
- Irregular meaning changes
  - Healthful vs. healthy
  - Clue → clueless (lacking understanding)
  - Art → artless (without guile; not artificial; …)
- Changes of word class
- Examples
  - Computerize (V) → Computerization (N)
  - Appoint (V) → Appointee (N)
  - Computational (Adj)
  - Ill-formed: *eatation, *spellation, *sleepable, *scienceless

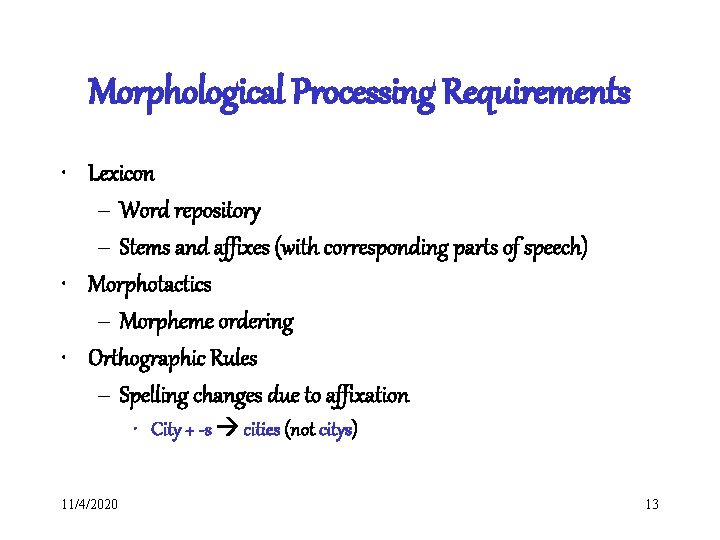

Morphological Processing Requirements

- Lexicon
  - Word repository
  - Stems and affixes (with corresponding parts of speech)
- Morphotactics
  - Morpheme ordering
- Orthographic Rules
  - Spelling changes due to affixation
  - City + -s → cities (not citys)
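
As a rough sketch of how the three requirements fit together, the Python below uses a toy lexicon, a table of which affixes each word class accepts, and a single spelling rule. The names `LEXICON`, `MORPHOTACTICS`, and `attach` are invented for illustration, and the word lists and the one rule shown are assumptions, not the chapter's inventory.

```python
import re

# Lexicon: stems with their parts of speech (toy entries).
LEXICON = {"city": "N", "cat": "N", "walk": "V"}

# Morphotactics: which affixes may follow a stem of each word class.
MORPHOTACTICS = {"N": {"-s"}, "V": {"-s", "-ed", "-ing"}}


def attach(stem, affix):
    """Combine stem and affix, enforcing morphotactics and one spelling rule."""
    word_class = LEXICON[stem]  # KeyError if the stem is not in the lexicon
    if affix not in MORPHOTACTICS[word_class]:
        raise ValueError(f"{affix} cannot attach to a {word_class} stem")
    suffix = affix.lstrip("-")
    # Orthographic rule: consonant + y becomes "ie" before -s.
    if suffix == "s" and re.search(r"[^aeiou]y$", stem):
        return stem[:-1] + "ies"
    return stem + suffix


print(attach("city", "-s"))   # cities
print(attach("cat", "-s"))    # cats
print(attach("walk", "-ed"))  # walked
```

Keeping the three pieces in separate tables means a new stem only touches the lexicon, while a new spelling change only touches the rule code.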

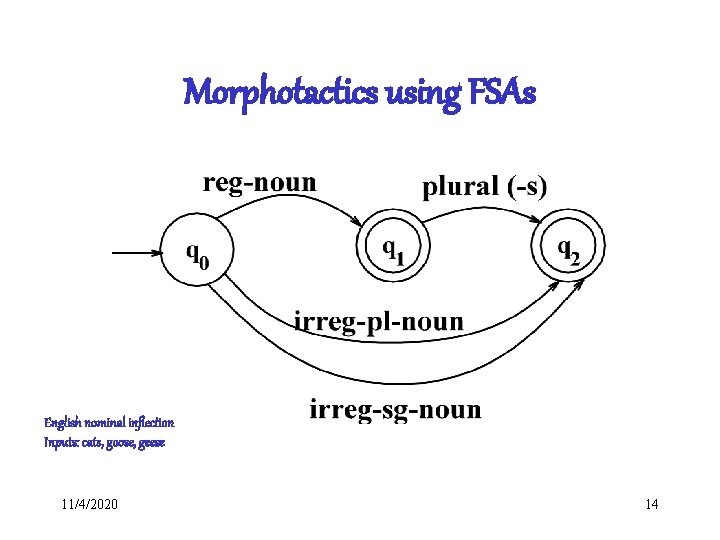

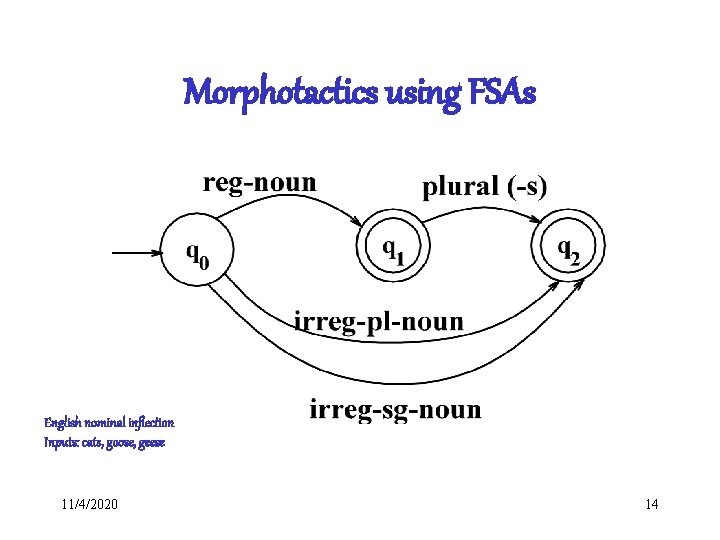

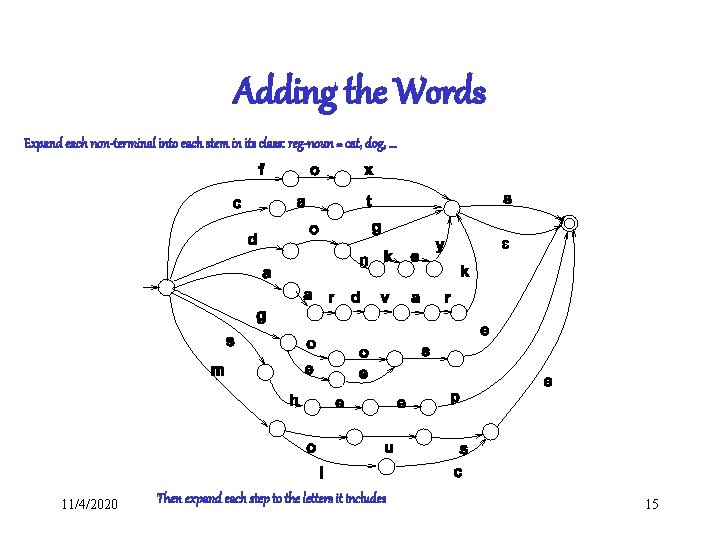

Morphotactics using FSAs

- English nominal inflection
- Inputs: cats, goose, geese

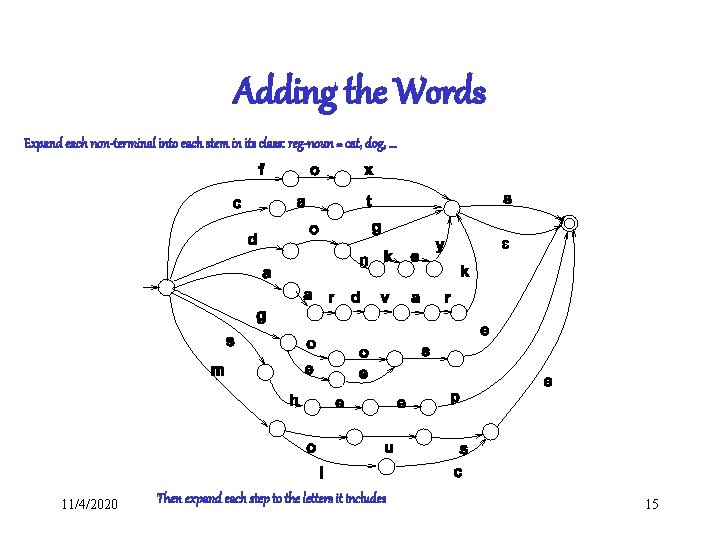

Adding the Words

- Expand each non-terminal into each stem in its class: reg-noun = cat, dog, …
- Then expand each step to the letters it includes

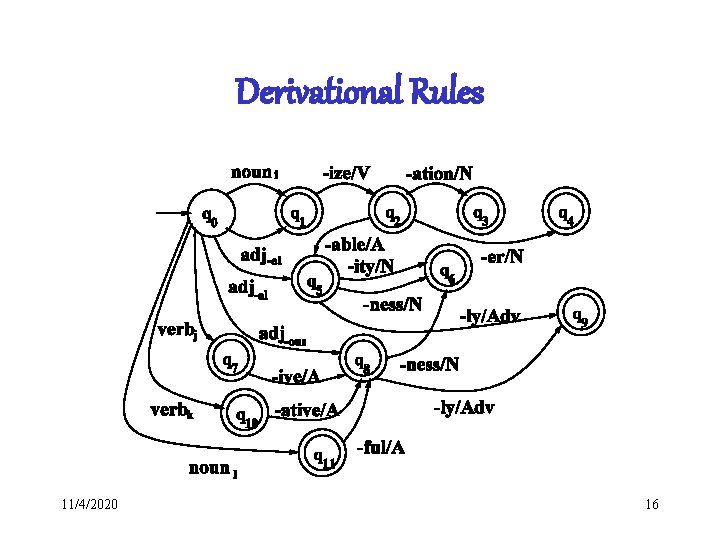

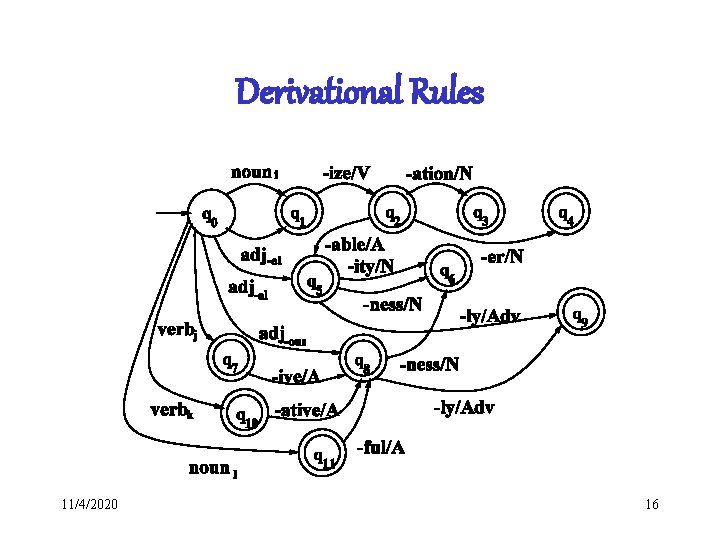
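
The expansion described above can be sketched in Python as follows. This is a minimal illustration, not the machine in the figures: the word lists, the state numbering, and the names `build_fsa` and `recognize` are assumptions, and no spelling rules are applied (so a stem like "fox" would wrongly pluralize as "foxs").

```python
def build_fsa(reg_nouns, irreg_sg, irreg_pl):
    """Return (transitions, accepting) for a letter-level noun FSA."""
    transitions = {}   # (state, letter) -> next state; state 0 is the start
    accepting = set()

    def add_path(word):
        state = 0
        for letter in word:
            # Follow an existing arc, or create one leading to a fresh state.
            state = transitions.setdefault((state, letter), len(transitions) + 1)
        accepting.add(state)

    for stem in reg_nouns:
        add_path(stem)         # reg-noun alone (singular)
        add_path(stem + "s")   # reg-noun followed by plural -s
    for word in list(irreg_sg) + list(irreg_pl):
        add_path(word)         # irregular forms are listed whole
    return transitions, accepting


def recognize(word, fsa):
    """Return True if the FSA accepts the word."""
    transitions, accepting = fsa
    state = 0
    for letter in word:
        state = transitions.get((state, letter))
        if state is None:      # no arc for this letter: reject
            return False
    return state in accepting


FSA = build_fsa(["cat", "dog"], ["goose", "mouse"], ["geese", "mice"])
for w in ["cats", "goose", "geese", "gooses"]:
    print(w, recognize(w, FSA))
```

The first three inputs are accepted and "gooses" is rejected, because the plural -s arc is only reachable after a regular noun stem.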

Derivational Rules

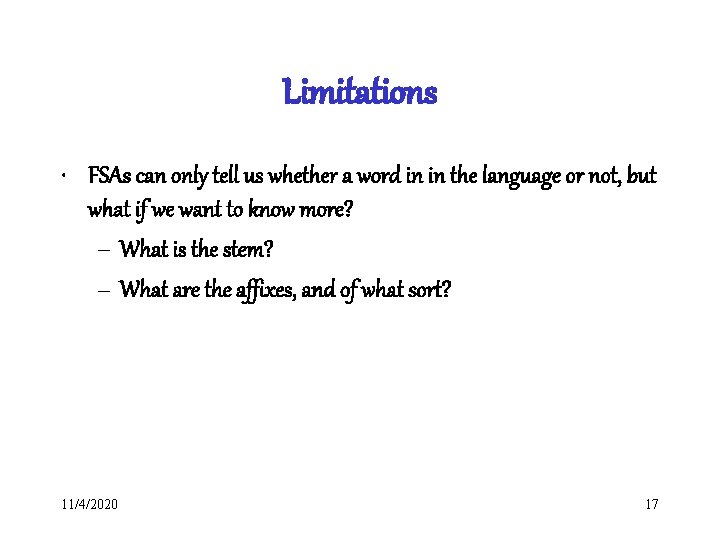

Limitations

- FSAs can only tell us whether a word is in the language or not, but what if we want to know more?
  - What is the stem?
  - What are the affixes, and of what sort?

Parsing/Generation vs. Recognition

- Recognition is usually not quite what we need.
  - Usually if we find some string in the language we need to find the structure in it (parsing)
  - Or we have some structure and we want to produce a surface form (production/generation)
- Examples
  - From “cats” to “cat +N +PL”
  - From “cat +N +PL” to “cats”

Applications

- The kind of parsing we’re talking about is normally called morphological analysis
- It can either be
  - An important stand-alone component of an application (spelling correction, information retrieval)
  - Or simply a link in a chain of processing

Finite State Transducers

- The simple story
  - Add another tape
  - Add extra symbols to the transitions
  - On one tape we read “cats”, on the other we write “cat +N +PL”, or the other way around.

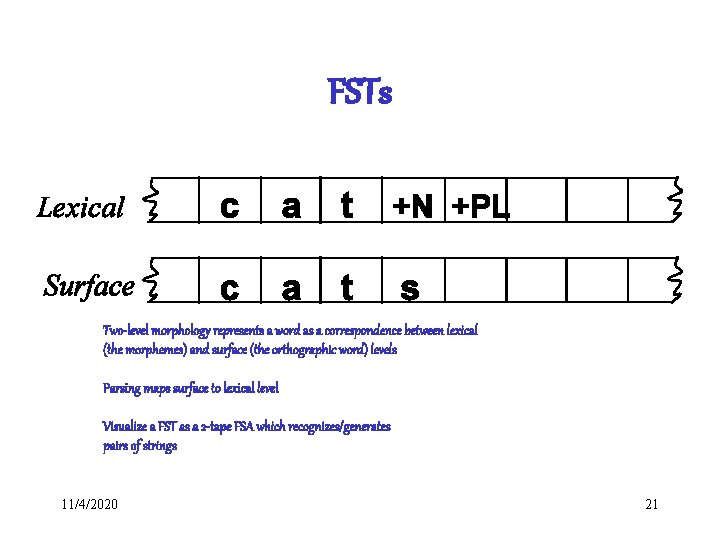

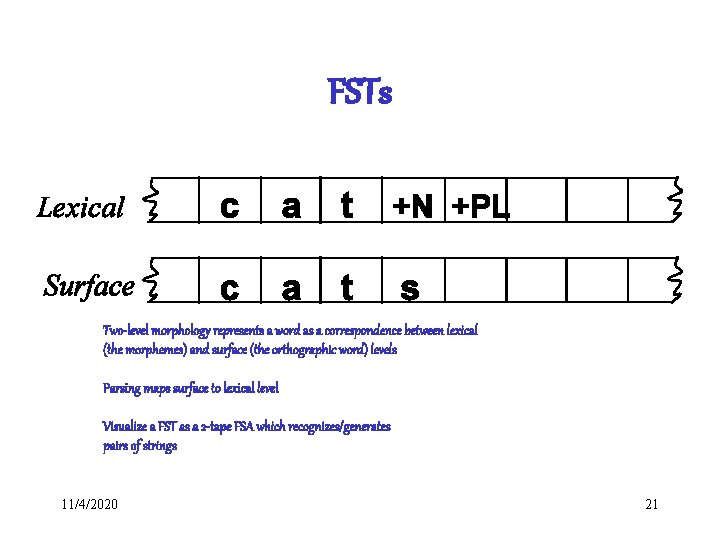

FSTs

- Two-level morphology represents a word as a correspondence between lexical (the morphemes) and surface (the orthographic word) levels
- Parsing maps the surface level to the lexical level
- Visualize an FST as a 2-tape FSA which recognizes/generates pairs of strings

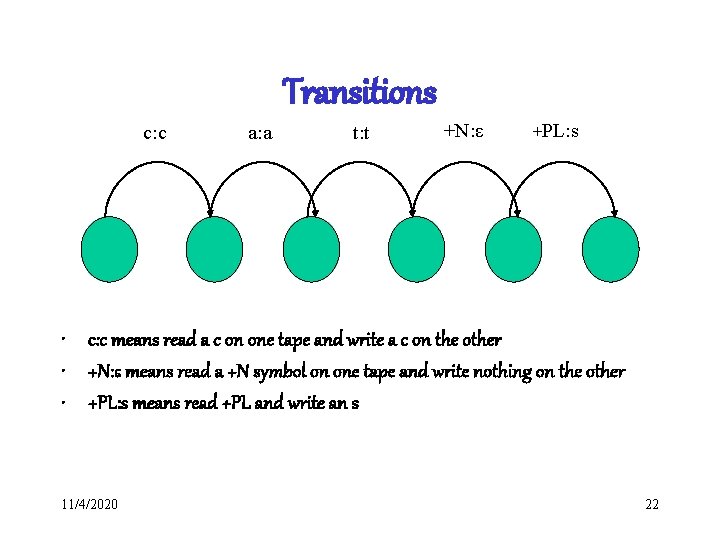

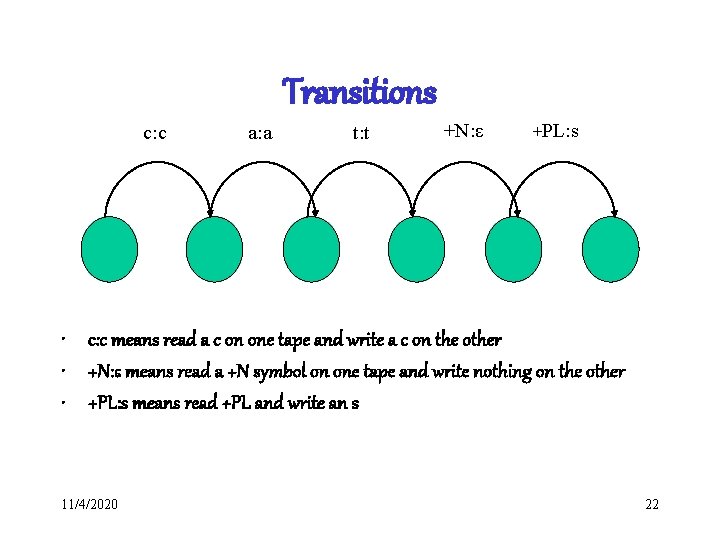

Transitions

- c:c, a:a, t:t, +N:ε, +PL:s
- c:c means read a c on one tape and write a c on the other
- +N:ε means read a +N symbol on one tape and write nothing on the other
- +PL:s means read +PL and write an s
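
The five transitions above are enough for a tiny working transducer. The Python below is a sketch under stated assumptions: the state numbers, the arc-list representation, and the names `transduce`, `generate`, and `parse` are invented for illustration, and the search assumes the machine has no cycles of ε arcs.

```python
EPS = ""  # epsilon: the arc reads or writes nothing on that tape

# (source state, lexical symbol, surface symbol, target state)
ARCS = [
    (0, "c", "c", 1),
    (1, "a", "a", 2),
    (2, "t", "t", 3),
    (3, "+N", EPS, 4),
    (4, "+PL", "s", 5),
]
FINAL = {5}


def transduce(symbols, read_lexical):
    """Return every output sequence the FST pairs with the input symbols.

    read_lexical=True reads the lexical tape and writes the surface tape;
    read_lexical=False reads the surface tape and writes the lexical tape.
    """
    results = []
    stack = [(0, 0, [])]  # (state, input position, output so far)
    while stack:
        state, pos, out = stack.pop()
        if state in FINAL and pos == len(symbols):
            results.append(out)
        for src, lex, surf, dst in ARCS:
            if src != state:
                continue
            read, write = (lex, surf) if read_lexical else (surf, lex)
            new_out = out + [write] if write != EPS else out
            if read == EPS:
                stack.append((dst, pos, new_out))       # consume no input
            elif pos < len(symbols) and symbols[pos] == read:
                stack.append((dst, pos + 1, new_out))
    return results


def generate(lexical):
    """Lexical to surface, e.g. 'cat +N +PL' to 'cats'."""
    symbols = []
    for token in lexical.split():
        # Tags stay whole; stems are split into letters.
        symbols.extend([token] if token.startswith("+") else list(token))
    return ["".join(out) for out in transduce(symbols, read_lexical=True)]


def parse(surface):
    """Surface to lexical, e.g. 'cats' to 'cat +N +PL'."""
    outputs = transduce(list(surface), read_lexical=False)
    return ["".join(" " + s if s.startswith("+") else s for s in out)
            for out in outputs]


print(parse("cats"))            # ['cat +N +PL']
print(generate("cat +N +PL"))   # ['cats']
```

The same arc list serves both directions; only the choice of which symbol on each arc is read and which is written changes.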

Typical Uses

- Typically, we’ll read from one tape using the first symbol on the machine transitions (just as in a simple FSA).
- And we’ll write to the second tape using the other symbols on the transitions.

Ambiguity

- Recall that in non-deterministic recognition multiple paths through a machine may lead to an accept state.
  - It didn’t matter which path was actually traversed
- In FSTs the path to an accept state does matter, since different paths represent different parses and different outputs will result

Ambiguity

- What’s the right parse for unionizable?
  - Union-ize-able
  - Un-ion-ize-able
- Each represents a valid path through the derivational morphology machine.

Ambiguity

- There are a number of ways to deal with this problem
  - Simply take the first output found
  - Find all the possible outputs (all paths) and return them all (without choosing)
  - Bias the search so that only one or a few likely paths are explored
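
To see the "all paths" option in action, the sketch below enumerates every prefix-stem-suffix segmentation of a word against a toy morpheme list. Everything here is an assumption for illustration: the three dictionaries, the two-state prefix/suffix machine, the name `analyses`, and the shortcut of listing "iz" as a spelling of "ize" instead of using a real spelling rule.

```python
PREFIXES = {"un": "un"}
STEMS = {"union": "union", "ion": "ion"}
# Surface spelling -> morpheme; "iz" stands in for "ize" with its e dropped.
SUFFIXES = {"ize": "ize", "iz": "ize", "able": "able"}


def analyses(word):
    """Return every segmentation of word as prefixes, one stem, suffixes."""
    results = []

    def walk(rest, state, path):
        # state says which morpheme classes may come next:
        # "prefix" = still before the stem, "suffix" = stem already seen.
        if not rest:
            if state == "suffix":
                results.append("-".join(path))
            return
        if state == "prefix":
            for form, morph in PREFIXES.items():
                if rest.startswith(form):
                    walk(rest[len(form):], "prefix", path + [morph])
            for form, morph in STEMS.items():
                if rest.startswith(form):
                    walk(rest[len(form):], "suffix", path + [morph])
        else:
            for form, morph in SUFFIXES.items():
                if rest.startswith(form):
                    walk(rest[len(form):], "suffix", path + [morph])

    walk(word, "prefix", [])
    return sorted(results)


all_parses = analyses("unionizable")
print(all_parses)      # every path: ['un-ion-ize-able', 'union-ize-able']
print(all_parses[0])   # "take the first output found"
```

Returning the whole list leaves the choice to a later component; taking only the first element is cheaper, but which parse comes first depends entirely on the search order (here, the sort).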

The Gory Details

- Of course, it’s not as easy as
  - “cat +N +PL” <-> “cats”
- As we saw earlier there are geese, mice and oxen
- But there is also a whole host of spelling/pronunciation changes that go along with inflectional changes

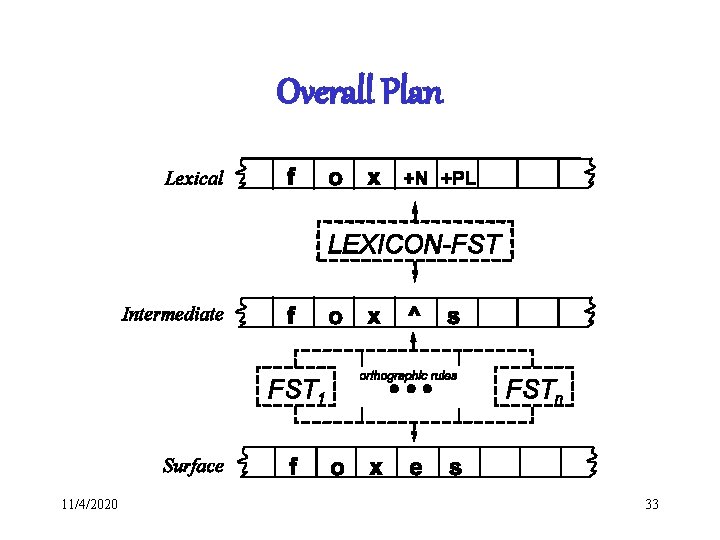

Multi-Tape Machines

- To deal with this we can simply add more tapes and use the output of one machine as the input to the next
- So to handle irregular spelling changes we’ll add intermediate tapes with intermediate symbols

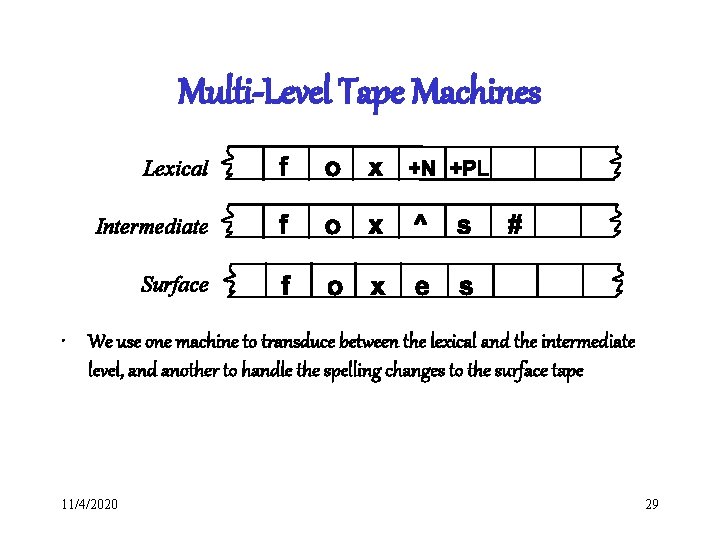

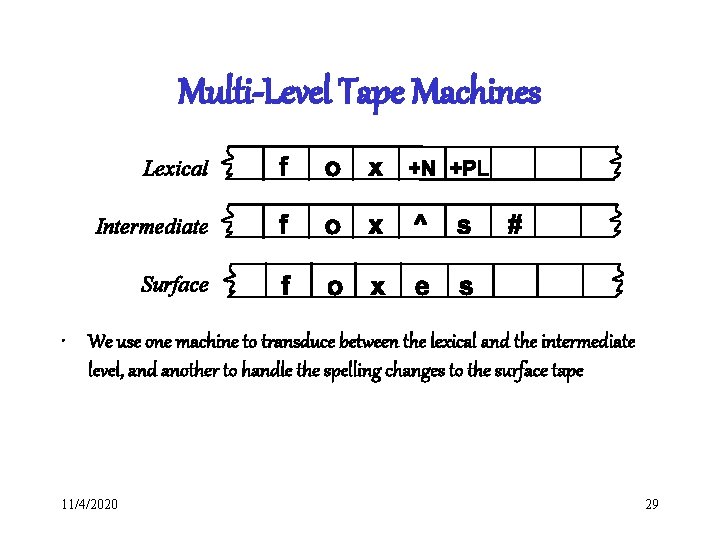

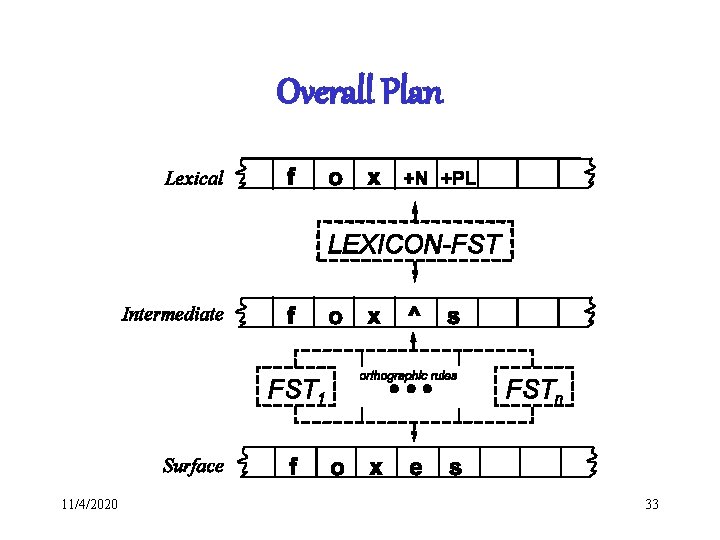

Multi-Level Tape Machines

- We use one machine to transduce between the lexical and the intermediate level, and another to handle the spelling changes to the surface tape

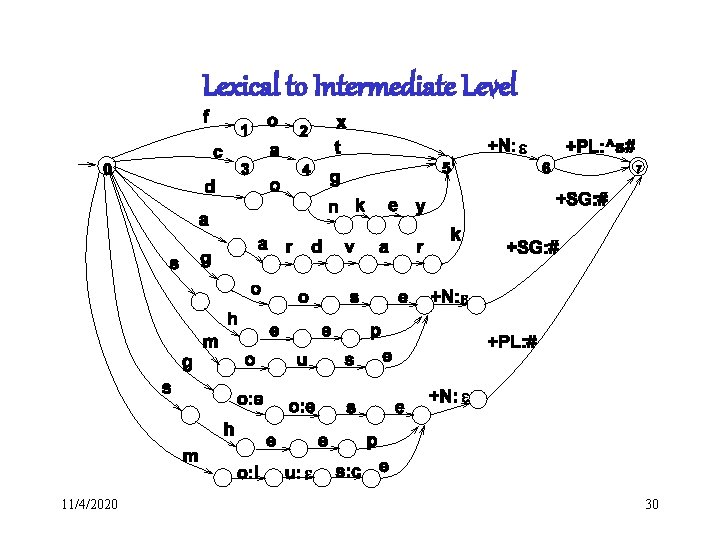

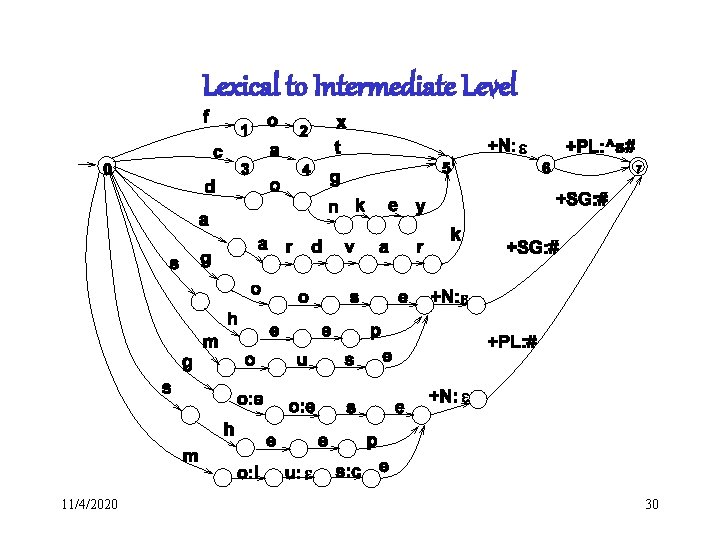

Lexical to Intermediate Level

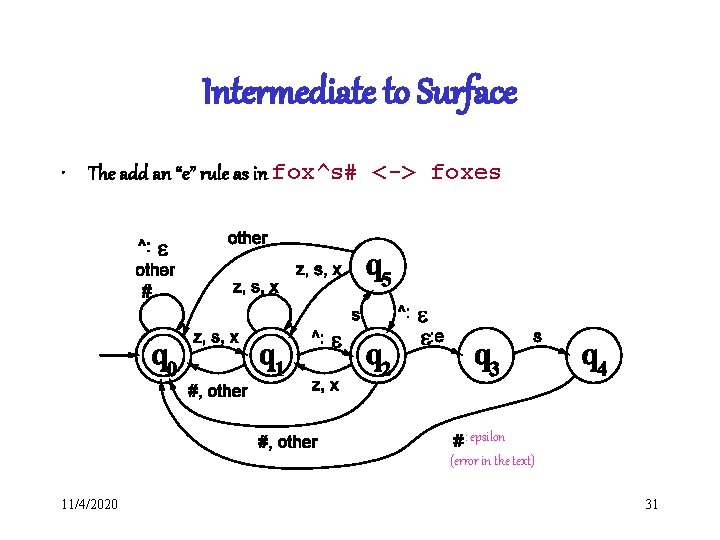

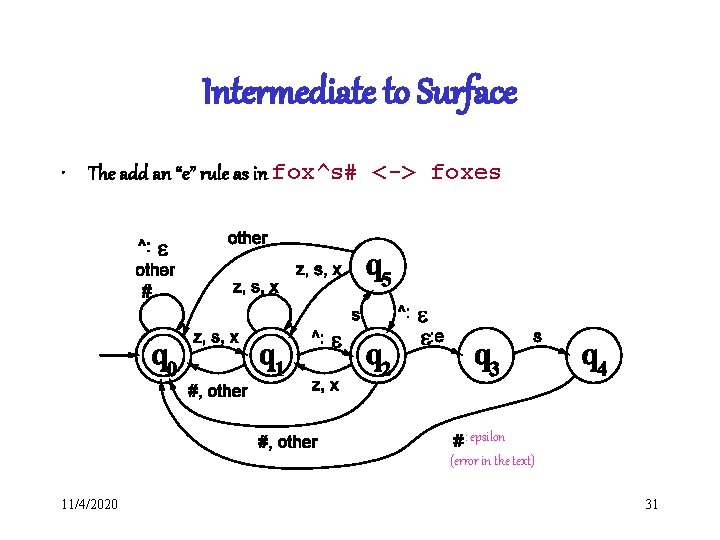

Intermediate to Surface

- The “add an e” rule, as in fox^s# <-> foxes
- Note on the figure: epsilon (error in the text)

Note

- A key feature of this machine is that it doesn’t do anything to inputs to which it doesn’t apply.
- Meaning that they are written out unchanged to the output tape.
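
A regular-expression substitution gives a compact stand-in for this spelling machine. The sketch below is an assumption-laden illustration, not the transducer in the figure: the function name `intermediate_to_surface` is invented, and the trigger set is taken to be x, s, or z before the morpheme boundary.

```python
import re


def intermediate_to_surface(form):
    """Map an intermediate form such as 'fox^s#' to its surface spelling."""
    # E-insertion: after x, s or z, the boundary ^ before "s#" surfaces as e.
    form = re.sub(r"(?<=[xsz])\^(?=s#)", "e", form)
    # Inputs the rule does not apply to pass through unchanged, apart from
    # the boundary symbols ^ and #, which have no surface realization.
    return form.replace("^", "").replace("#", "")


for item in ["fox^s#", "cat^s#", "geese#"]:
    print(item, "->", intermediate_to_surface(item))
```

Only "fox^s#" triggers the rule; "cat^s#" and "geese#" come out as "cats" and "geese" with nothing but the boundary markers removed.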

Overall Plan

Summing Up

- FSTs allow us to take an input and deliver a structure based on it
- Or take a structure and create a surface form
- Or take a structure and create another structure

Summing Up

- In many applications it’s convenient to decompose the problem into a set of cascaded transducers where
  - The output of one feeds into the input of the next.
  - We’ll see this scheme again for deeper semantic processing.
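
The cascade idea can be sketched with ordinary function composition. In the Python below, plain functions stand in for the two transducers; the names `lexical_to_intermediate`, `intermediate_to_surface`, and `cascade`, the toy irregular-plural table, and the `+SG` tag are all assumptions made for illustration.

```python
import re

IRREGULAR_PLURALS = {"goose": "geese", "mouse": "mice"}  # toy lexicon


def lexical_to_intermediate(lexical):
    """'fox +N +PL' -> 'fox^s#'; irregular plurals are looked up whole."""
    stem, *tags = lexical.split()
    if tags == ["+N", "+PL"]:
        if stem in IRREGULAR_PLURALS:
            return IRREGULAR_PLURALS[stem] + "#"
        return stem + "^s#"
    if tags == ["+N", "+SG"]:
        return stem + "#"
    raise ValueError(f"unsupported analysis: {lexical}")


def intermediate_to_surface(form):
    """Apply e-insertion, then drop the boundary symbols ^ and #."""
    form = re.sub(r"(?<=[xsz])\^(?=s#)", "e", form)
    return form.replace("^", "").replace("#", "")


def cascade(*stages):
    """Chain stages so the output of one feeds the input of the next."""
    def run(value):
        for stage in stages:
            value = stage(value)
        return value
    return run


generate = cascade(lexical_to_intermediate, intermediate_to_surface)
for analysis in ["fox +N +PL", "cat +N +PL", "goose +N +PL", "goose +N +SG"]:
    print(analysis, "->", generate(analysis))
```

Each stage can be written and tested on its own, and adding another level (say, a further spelling rule) only means passing one more stage to `cascade`.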

Summing Up

- FSTs provide a useful tool for implementing a standard model of morphological analysis (two-level morphology)
- Toolkits such as the AT&T FSM Toolkit are available
- Other approaches are also used, e.g., the rule-based Porter Stemmer and memory-based learning