Natural Language Processing Lecture Notes 10 Chapter 19

- Slides: 30

Computational Lexical Semantics, Part 1: Supervised Word-Sense Disambiguation
9/2/2021

Computational Lexical Semantics
• Word-Sense Disambiguation (WSD)
• Computing word similarity
• Semantic role labeling
  – Also known as case role or thematic role assignment

WSD
• As we saw, thematic roles and selectional restrictions depend on word senses
• WSD could help machine translation, question answering, information extraction, information retrieval, and text classification

WSD
• Prototypical setup:
  – Input: a word instance in context and a fixed inventory of possible senses for that word
  – Output: one of the senses
• The sense inventory depends on the task. E.g., for machine translation (MT) from English to Spanish, the sense inventory for an English word might be the set of its possible Spanish translations

Two Current Tasks
• SENSEVAL: a community competition organized by ACL SIGLEX
• Lexical sample task: a pre-selected set of target words, with a fixed sense inventory for each
  – Supervised machine learning
  – One classifier per word (“line”, “interest”, “plant”)
• All-words task: the system must sense-tag all the content words in the corpus (those that appear in the lexicon)
  – Data sparseness makes supervised learning less feasible
  – There are so many polysemous words that one classifier per word becomes less feasible

Supervised ML Approaches
• A training corpus of words manually tagged in context with senses is used to train a classifier that can tag words in new text

Representations
• Most supervised ML approaches represent the training data as
  – Vectors of feature/value pairs
• So our first task is to extract training data from a corpus with respect to a particular instance of a target word
  – This typically consists of features of a window of text surrounding the target

Representations
• This is where ML and NLP intersect
  – If you stick to trivial surface features that are easy to extract from a text, then most of the work is in the ML system
  – If you decide to use features that require more analysis (say, parse trees), then the ML part may be doing (relatively) less work

Surface Representations
• Collocational and co-occurrence information
  – Collocational
    • Encode features about the words that appear in specific positions to the right and left of the target word
    • Often limited to the words themselves and their parts of speech
    • Warning: “collocation” may also mean ‘a word statistically correlated with the classification’, even in the statistical NLP community
  – Co-occurrence (bag-of-words)
    • Features characterizing the words that occur anywhere in the window, regardless of position

Examples
• Example text (WSJ)
  – An electric guitar and bass player stand off to one side not really part of the scene, just as a sort of nod to gringo expectations perhaps
  – Assume a window of ±2 words around the target word, bass


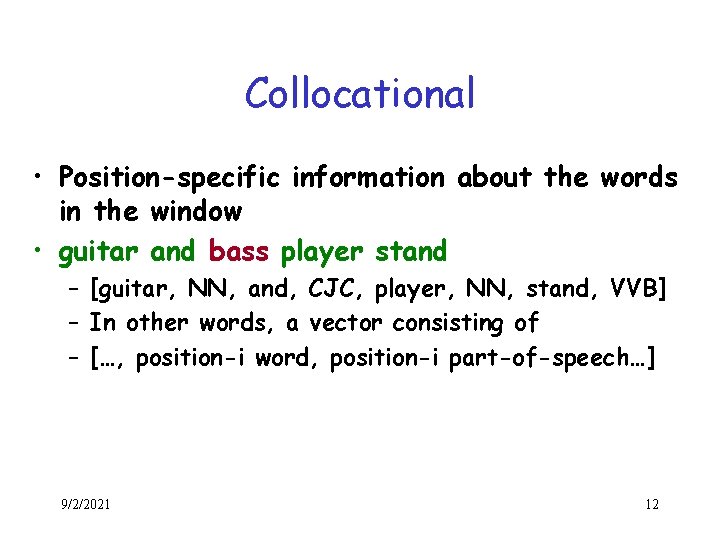

Collocational
• Position-specific information about the words in the window
• guitar and bass player stand
  – [guitar, NN, and, CJC, player, NN, stand, VVB]
  – In other words, a vector consisting of
  – […, position-i word, position-i part-of-speech, …]
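A minimal sketch of collocational feature extraction, assuming the sentence is already POS-tagged as (word, tag) pairs. The function name `collocational_features` and the `<PAD>` placeholder for positions outside the sentence are illustrative choices, not part of the lecture; the context tags are the ones shown above, and the tag for bass itself is assumed (the target's own tag is not emitted as a feature here).

```python
from typing import List, Tuple

def collocational_features(tagged: List[Tuple[str, str]], target_index: int,
                           k: int = 2) -> List[str]:
    """Return [w_-k, pos_-k, ..., w_-1, pos_-1, w_+1, pos_+1, ..., w_+k, pos_+k].

    Positions that fall outside the sentence are filled with a "<PAD>" placeholder
    (an assumption of this sketch).
    """
    features: List[str] = []
    for offset in list(range(-k, 0)) + list(range(1, k + 1)):
        i = target_index + offset
        if 0 <= i < len(tagged):
            word, pos = tagged[i]
        else:
            word, pos = "<PAD>", "<PAD>"
        features.extend([word, pos])
    return features

# Context tags as on the slide; the "NN" tag on "bass" is assumed.
window = [("guitar", "NN"), ("and", "CJC"), ("bass", "NN"),
          ("player", "NN"), ("stand", "VVB")]
print(collocational_features(window, target_index=2))
# ['guitar', 'NN', 'and', 'CJC', 'player', 'NN', 'stand', 'VVB']
```

Because each slot in the vector is tied to a fixed offset, "and" at position −1 and "and" at position +1 would be different features, which is exactly what distinguishes collocational from bag-of-words features.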

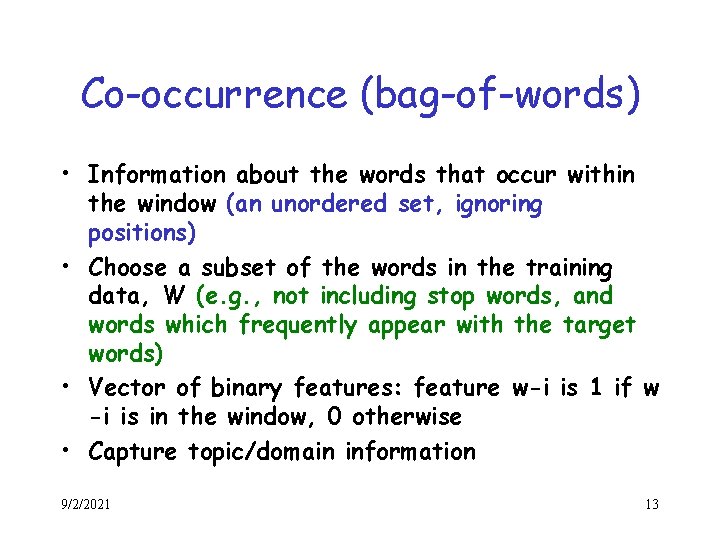

Co-occurrence (bag-of-words)
• Information about the words that occur within the window (an unordered set, ignoring positions)
• Choose a subset W of the words in the training data (e.g., words that frequently appear with the target word, excluding stop words)
• Vector of binary features: feature w_i is 1 if word w_i is in the window, 0 otherwise
• These features capture topic/domain information

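Bag-of-Words Example
• Assume we’ve settled on a possible vocabulary of 12 words that includes guitar and player but not and or stand
• guitar and bass player stand
  – A 12-element binary vector with 1s in the positions for guitar and player and 0s elsewhere, e.g. [0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0] if player and guitar are the 4th and 11th vocabulary words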
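A minimal sketch of the bag-of-words encoding under stated assumptions: the 12-word vocabulary below is hypothetical (chosen only so that it contains guitar and player but not and or stand), the window is ±2 words, and the target word itself is excluded from the window.

```python
from typing import List

def bag_of_words_vector(tokens: List[str], target_index: int,
                        vocabulary: List[str], k: int = 2) -> List[int]:
    # Collect the words in the +/-k window as an unordered set, skipping the target.
    lo, hi = max(0, target_index - k), min(len(tokens), target_index + k + 1)
    window = {tokens[i].lower() for i in range(lo, hi) if i != target_index}
    # Feature i is 1 if vocabulary word i occurs anywhere in the window, else 0.
    return [1 if w in window else 0 for w in vocabulary]

# Hypothetical vocabulary: includes "guitar" and "player", not "and" or "stand".
vocabulary = ["fishing", "big", "sound", "player", "fly", "rod",
              "pound", "double", "runs", "playing", "guitar", "band"]
tokens = "An electric guitar and bass player stand off to one side".split()
print(bag_of_words_vector(tokens, tokens.index("bass"), vocabulary))
# [0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0]
```

Unlike the collocational vector, reordering the words inside the window leaves this vector unchanged.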

Classifiers
• Once we cast the WSD problem as a classification problem, all sorts of techniques are possible
  – Naïve Bayes (the right thing to try first)
  – Decision lists
  – Decision trees
  – Boosting
  – Support vector machines
  – Nearest neighbor methods
  – …

Classifiers
• The choice of technique depends, in part, on the set of features that have been used
  – Some techniques work better or worse with numerically valued features
  – Some techniques work better or worse with features that have large numbers of possible values
    • For example, the feature “word to the left” has a large number of possible values

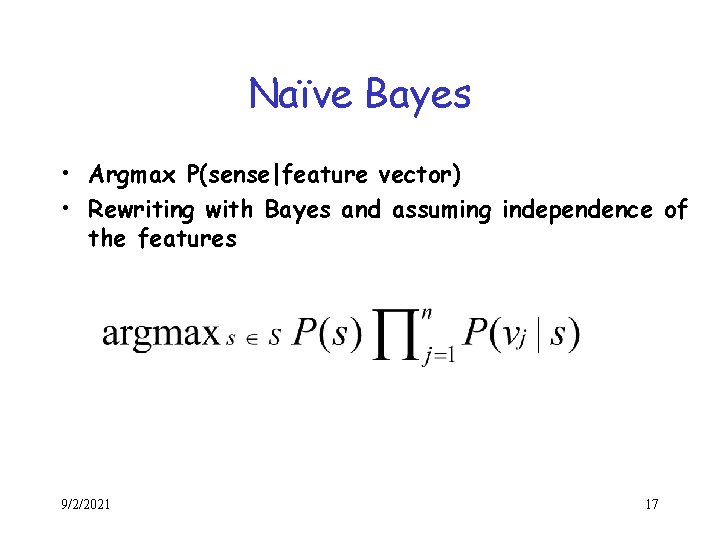

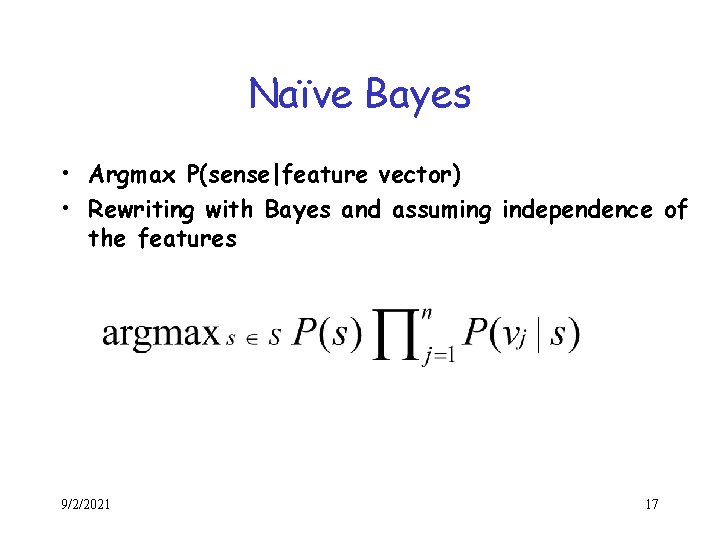

Naïve Bayes
• Choose the sense s that maximizes P(s | feature vector)
• Rewrite with Bayes’ rule and assume the features are independent given the sense
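Written out, the rewrite goes as follows, assuming S is the sense inventory for the target word and \(\vec{f} = (v_1, \dots, v_n)\) is its feature vector. The denominator \(P(\vec{f})\) is dropped because it is the same for every candidate sense, and the last step is the naïve independence assumption.

```latex
\hat{s} = \operatorname*{argmax}_{s \in S} P(s \mid \vec{f})
        = \operatorname*{argmax}_{s \in S} \frac{P(\vec{f} \mid s)\, P(s)}{P(\vec{f})}
        = \operatorname*{argmax}_{s \in S} P(\vec{f} \mid s)\, P(s)
        \approx \operatorname*{argmax}_{s \in S} P(s) \prod_{j=1}^{n} P(v_j \mid s)
```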

Naïve Bayes
• P(s) is just the prior of that sense
  – As with part-of-speech tagging, not all senses occur with equal frequency
• P(v_j|s) is the conditional probability of a particular feature value given a particular sense
• You can get both of these from a sense-tagged corpus with the features encoded
• Smoothing is needed for feature values that appear in test data but not in training data
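A minimal sketch of training and applying naïve Bayes from a sense-tagged corpus. Assumptions: each feature value is encoded as a `"feature=value"` string (so collocational and bag-of-words features can be mixed), P(v_j|s) is estimated with the multinomial event model, and add-alpha smoothing handles unseen values. The function names and toy data are hypothetical; this is one common estimator, not necessarily the one used in the lecture.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Sequence, Tuple

def train_nb(examples: List[Tuple[Sequence[str], str]]):
    """examples: (feature values, sense) pairs taken from a sense-tagged corpus."""
    sense_counts = Counter(sense for _, sense in examples)    # for P(s)
    value_counts: Dict[str, Counter] = defaultdict(Counter)   # for P(v_j | s)
    vocab = set()
    for values, sense in examples:
        value_counts[sense].update(values)
        vocab.update(values)
    return sense_counts, value_counts, vocab

def predict_nb(model, values: Sequence[str], alpha: float = 1.0) -> str:
    """argmax_s log P(s) + sum_j log P(v_j | s), with add-alpha smoothing."""
    sense_counts, value_counts, vocab = model
    total = sum(sense_counts.values())
    size = len(vocab) + 1  # +1 reserves probability mass for values never seen in training
    best_sense, best_score = None, -math.inf
    for sense, count in sense_counts.items():
        score = math.log(count / total)
        denom = sum(value_counts[sense].values()) + alpha * size
        for v in values:
            # Unseen values get (0 + alpha) / denom instead of a zero probability.
            score += math.log((value_counts[sense][v] + alpha) / denom)
        if score > best_score:
            best_sense, best_score = sense, score
    return best_sense

# Hypothetical toy training data for "bass".
train = [
    (["w-1=electric", "bow=guitar", "bow=play"], "music"),
    (["w-1=striped", "bow=fish", "bow=caught"], "fish"),
    (["bow=play", "bow=guitar"], "music"),
    (["bow=fish", "bow=river"], "fish"),
]
model = train_nb(train)
print(predict_nb(model, ["bow=guitar", "bow=amplifier"]))  # music
```

Working in log space avoids numeric underflow when many small probabilities are multiplied; the argmax is unchanged because log is monotonic.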

Naïve Bayes Test
• On a corpus of examples of uses of the word line, naïve Bayes achieved about 73% correct
• Is that good?
  – Standalone evaluation: precision, recall, accuracy, F-measure
    • Baseline: most frequent sense
    • Baseline: the Lesk algorithm (later)
  – Improving an NLP task: does the WSD-aware system perform significantly better on that task?
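A minimal sketch of standalone scoring, assuming the convention (as in SENSEVAL-style scoring) that a system may leave some instances untagged: precision is measured over the instances it attempted, recall over all instances, and both equal accuracy when every instance is tagged. `score_wsd` and the toy labels are hypothetical.

```python
from typing import Dict, List, Optional

def score_wsd(gold: List[str], predicted: List[Optional[str]]) -> Dict[str, float]:
    """predicted[i] is None when the system declines to tag instance i."""
    if len(gold) != len(predicted):
        raise ValueError("gold and predicted must have the same length")
    attempted = sum(p is not None for p in predicted)
    correct = sum(p == g for p, g in zip(predicted, gold) if p is not None)
    precision = correct / attempted if attempted else 0.0
    recall = correct / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}

gold = ["music", "fish", "music", "fish"]
pred = ["music", "music", None, "fish"]   # the third instance is left untagged
print(score_wsd(gold, pred))
```

Here 2 of the 3 attempted instances are correct, so precision is 2/3 while recall is 2/4.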

Most-Frequent-Sense Baseline
• The most frequent sense depends on the corpus
• McCarthy et al. (best paper at ACL 2005):
  – Unsupervised method for finding the most frequent sense in a corpus
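A minimal sketch of the supervised version of this baseline, assuming sense-tagged training data from the same kind of corpus as the test data (the unsupervised method mentioned above is not shown). The sense labels are hypothetical. Because the most frequent sense is estimated from the training corpus, moving to a corpus from another domain can change both the chosen sense and the baseline score.

```python
from collections import Counter
from typing import List

def most_frequent_sense(train_senses: List[str]) -> str:
    # Sense seen most often in training; Counter.most_common breaks ties by first occurrence.
    return Counter(train_senses).most_common(1)[0][0]

def mfs_baseline_accuracy(train_senses: List[str], test_senses: List[str]) -> float:
    # Tag every test instance with the single most frequent training sense.
    mfs = most_frequent_sense(train_senses)
    return sum(s == mfs for s in test_senses) / len(test_senses)

train = ["product", "product", "phone", "product", "text"]
test = ["product", "phone", "product", "product"]
print(most_frequent_sense(train))          # product
print(mfs_baseline_accuracy(train, test))  # 0.75
```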

Naïve Bayes
• One problem with NB (and other algorithms such as SVMs) is that their conclusions are opaque
• It is difficult to examine the results and understand why the WSD system decided what it did
• Decision lists and decision trees are more transparent. We’ll look at decision lists…

Decision Lists
• Like case/switch statements in programming languages: the tests are tried in order, and the first one that matches determines the sense
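A minimal sketch of applying a decision list, assuming each instance is represented as a set of `"feature=value"` strings and each rule is a (test, sense) pair. The rules for bass below are hypothetical; the point is the control flow: the first matching test wins, and a default plays the role of a switch statement's default branch.

```python
from typing import List, Set, Tuple

def apply_decision_list(rules: List[Tuple[str, str]], features: Set[str],
                        default: str) -> str:
    for test, sense in rules:      # tests are tried strictly in order
        if test in features:       # the first matching test decides, like a matched case
            return sense
    return default                 # like the default branch of a switch

# Hypothetical rules for "bass", most reliable first.
rules = [("w+1=player", "music"), ("bow=fish", "fish"), ("bow=play", "music")]
print(apply_decision_list(rules, {"w-1=and", "w+1=player", "bow=fish"}, "fish"))  # music
print(apply_decision_list(rules, {"bow=river"}, "fish"))                          # fish
```

In the first call both "w+1=player" and "bow=fish" are present, but only the earlier rule is consulted, which is what makes the decision easy to explain after the fact.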

Learning DLs: one approach
• Restrict the lists to rules that test a single feature (1-DL rules)
• Evaluate each possible test and rank the tests by how well they work
  – Log-likelihood ratio: how discriminative a feature F is between two senses S1 and S2
    • $\left|\log \frac{P(S_1 \mid F)}{P(S_2 \mid F)}\right|$
• Use the top N tests, in order, as the decision list
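A minimal sketch of learning a 1-DL for a binary sense distinction by ranking single-feature tests with the absolute log-likelihood ratio. Since P(S1|F) and P(S2|F) share the denominator count(F), their ratio reduces to count(F, S1) / count(F, S2). The smoothing constant `alpha` (to avoid dividing by zero), the function name, and the toy data are assumptions of this sketch.

```python
import math
from collections import Counter
from typing import List, Set, Tuple

def learn_decision_list(examples: List[Tuple[Set[str], str]], sense1: str, sense2: str,
                        top_n: int = 10, alpha: float = 0.1) -> List[Tuple[str, str, float]]:
    """Rank single-feature tests by |log(P(S1|F) / P(S2|F))| and keep the top N."""
    counts = {sense1: Counter(), sense2: Counter()}
    for features, sense in examples:
        counts[sense].update(features)
    rules = []
    for f in sorted(set(counts[sense1]) | set(counts[sense2])):
        # P(S1|F) / P(S2|F) = count(F, S1) / count(F, S2); alpha is assumed smoothing.
        ratio = (counts[sense1][f] + alpha) / (counts[sense2][f] + alpha)
        llr = math.log(ratio)
        rules.append((f, sense1 if llr > 0 else sense2, abs(llr)))
    rules.sort(key=lambda rule: rule[2], reverse=True)  # most discriminative test first
    return rules[:top_n]

# Hypothetical sense-tagged examples for "bass".
examples = [
    ({"bow=play", "w+1=player"}, "music"),
    ({"bow=play", "bow=guitar"}, "music"),
    ({"bow=fish", "bow=river"}, "fish"),
    ({"bow=fish", "bow=play"}, "fish"),
]
for feature, sense, strength in learn_decision_list(examples, "music", "fish"):
    print(f"{strength:.2f}  if {feature} then {sense}")
```

Note that "bow=play" ends up last: it occurs with both senses, so its ratio is close to 1 and its log is close to 0.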

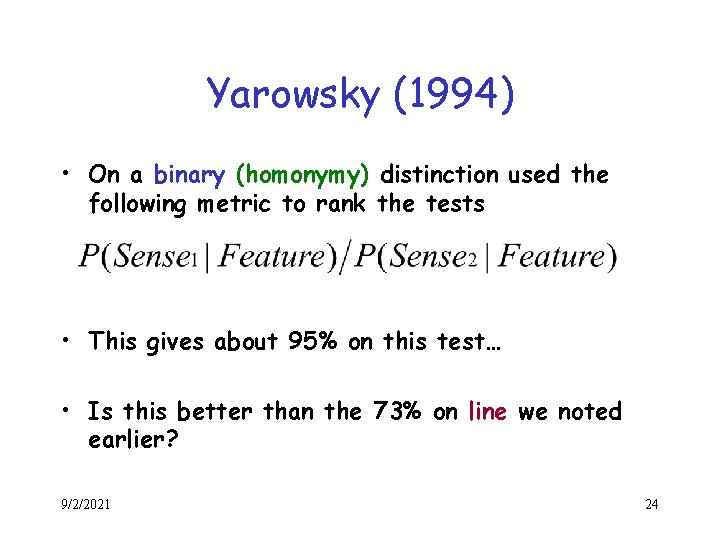

Yarowsky (1994)
• On a binary (homonymy) distinction, used the following metric to rank the tests
• This gives about 95% accuracy on this test…
• Is this better than the 73% on line we noted earlier?

Bootstrapping
• What if you don’t have enough data to train a system…
• Bootstrap
  – Pick a word that you, as an analyst, think will co-occur with your target word in a particular sense
  – Grep through your corpus for your target word and the hypothesized word
  – Assume that the sense associated with the hypothesized word is the right tag

Bootstrapping
• For bass
  – Assume play occurs with the music sense and fish occurs with the fish sense
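A minimal sketch of the seed-word labeling step for bass, using play and fish as the seeds. Assumptions of this sketch: tokenization is a simple lowercase regex, only exact seed-word matches count, and a sentence containing seeds for both senses is skipped rather than guessed. The corpus sentences and function name are hypothetical.

```python
import re
from typing import Dict, List, Tuple

def seed_label(sentences: List[str], target: str,
               seeds: Dict[str, str]) -> List[Tuple[str, str]]:
    """Tag sentences that contain the target and seed words for exactly one sense."""
    labeled = []
    for s in sentences:
        words = set(re.findall(r"[a-z]+", s.lower()))
        if target not in words:
            continue
        senses = {sense for seed, sense in seeds.items() if seed in words}
        if len(senses) == 1:   # skip sentences whose seeds point to different senses
            labeled.append((s, senses.pop()))
    return labeled

corpus = [
    "He likes to play bass in a jazz band.",
    "They caught a striped bass, a big fish.",
    "The bass line was too loud.",
    "We saw fish and heard him play bass.",
]
seeds = {"play": "music", "fish": "fish"}
for sentence, sense in seed_label(corpus, "bass", seeds):
    print(sense, "|", sentence)
```

The third sentence contains no seed and the fourth contains both, so only the first two become (noisy) training examples.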

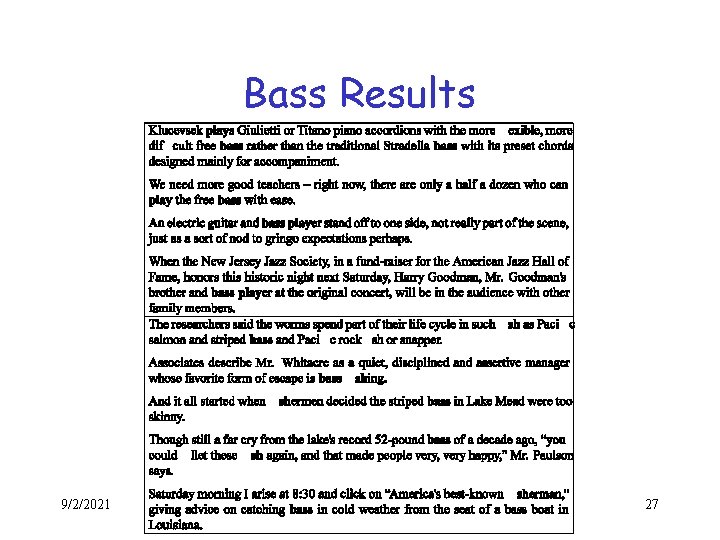

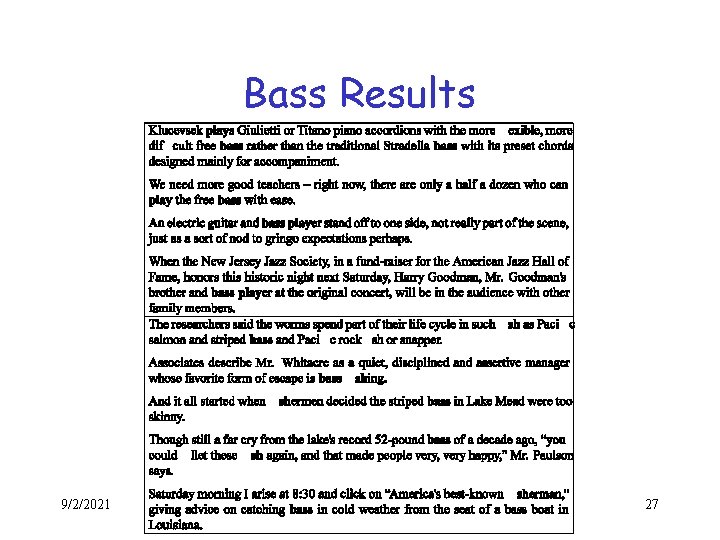

Bass Results

Bootstrapping
• Perhaps better
  – Use the little training data you have to train an inadequate system
  – Use that system to tag new data
  – Use that larger set of training data to train a new system
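A minimal self-training sketch of the loop above. Assumptions: the classifier is a small add-one-smoothed naïve Bayes over sets of features, only newly tagged instances whose posterior probability reaches a hypothetical `threshold` are added to the training data, and the loop stops when nothing new is confident enough or after `max_rounds`. All names and data are illustrative.

```python
import math
from collections import Counter, defaultdict
from typing import List, Set, Tuple

def train(examples: List[Tuple[Set[str], str]]):
    priors = Counter(sense for _, sense in examples)
    counts = defaultdict(Counter)
    for feats, sense in examples:
        counts[sense].update(feats)
    vocab = {f for feats, _ in examples for f in feats}
    return priors, counts, vocab

def classify(model, feats: Set[str]) -> Tuple[str, float]:
    """Best sense and its posterior probability under add-one smoothed naive Bayes."""
    priors, counts, vocab = model
    total = sum(priors.values())
    logp = {}
    for sense, n in priors.items():
        denom = sum(counts[sense].values()) + len(vocab) + 1
        logp[sense] = math.log(n / total) + sum(
            math.log((counts[sense][f] + 1) / denom) for f in feats)
    m = max(logp.values())
    z = sum(math.exp(v - m) for v in logp.values())  # normalize in a numerically safe way
    best = max(logp, key=logp.get)
    return best, math.exp(logp[best] - m) / z

def self_train(seed: List[Tuple[Set[str], str]], unlabeled: List[Set[str]],
               threshold: float = 0.9, max_rounds: int = 5):
    labeled, pool = list(seed), list(unlabeled)
    for _ in range(max_rounds):
        model = train(labeled)                       # train on what we have so far
        confident, rest = [], []
        for feats in pool:                           # tag the untagged data
            sense, p = classify(model, feats)
            (confident if p >= threshold else rest).append((feats, sense))
        if not confident:
            break                                    # nothing new to learn from
        labeled += confident                         # grow the training set
        pool = [feats for feats, _ in rest]
    return train(labeled), labeled

seed = [({"play", "guitar"}, "music"), ({"fish", "river"}, "fish")]
unlabeled = [{"guitar", "band"}, {"river", "boat"}, {"band", "concert"}, {"boat", "lake"}]
model, labeled = self_train(seed, unlabeled, threshold=0.6)
for feats, sense in labeled:
    print(sorted(feats), sense)
```

With this toy data, "band" and "boat" are only learned in the first round, which is what lets the second round tag {band, concert} and {boat, lake}; the threshold trades off coverage against the risk of feeding the system its own mistakes.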

Problems
• Given these general ML approaches, how many classifiers do I need to perform WSD robustly?
  – One for each ambiguous word in the language
• How do you decide what set of tags/labels/senses to use for a given word?
  – It depends on the application

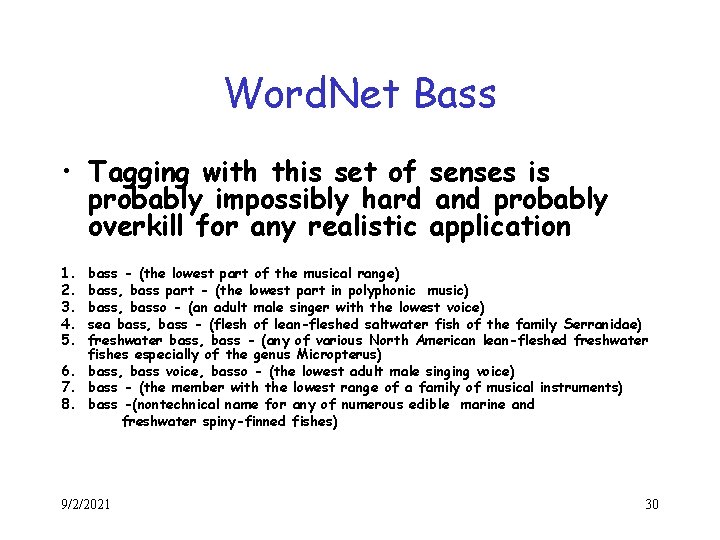

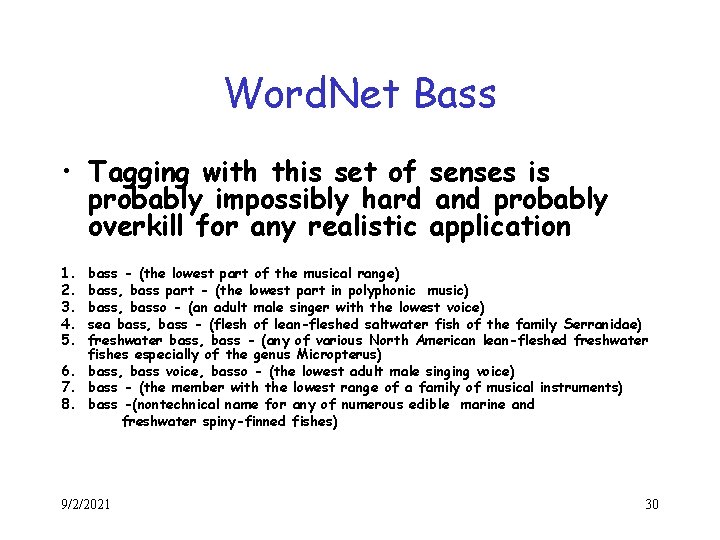

WordNet Bass
• Tagging with this set of senses is probably impossibly hard and probably overkill for any realistic application
1. bass - (the lowest part of the musical range)
2. bass, bass part - (the lowest part in polyphonic music)
3. bass, basso - (an adult male singer with the lowest voice)
4. sea bass, bass - (flesh of lean-fleshed saltwater fish of the family Serranidae)
5. freshwater bass, bass - (any of various North American lean-fleshed freshwater fishes especially of the genus Micropterus)
6. bass, bass voice, basso - (the lowest adult male singing voice)
7. bass - (the member with the lowest range of a family of musical instruments)
8. bass - (nontechnical name for any of numerous edible marine and freshwater spiny-finned fishes)
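One way an application can avoid this level of granularity is to collapse the fine-grained senses into the coarse distinction it actually needs. A minimal sketch, assuming a hypothetical hand-made mapping from the eight numbered WordNet senses listed above to the two coarse senses (music, fish) used in the bootstrapping example; the grouping is an illustrative judgment, not something WordNet provides.

```python
from typing import Dict, List

# Hypothetical grouping of the eight numbered WordNet senses of "bass".
COARSE_BASS: Dict[int, str] = {1: "music", 2: "music", 3: "music", 4: "fish",
                               5: "fish", 6: "music", 7: "music", 8: "fish"}

def coarsen(fine_senses: List[int]) -> List[str]:
    # Map each fine-grained sense number to its coarse application-level sense.
    return [COARSE_BASS[s] for s in fine_senses]

def coarse_match(predicted: int, gold: int) -> bool:
    # A fine-grained prediction counts as correct if it lands in the gold coarse sense.
    return COARSE_BASS[predicted] == COARSE_BASS[gold]

print(coarsen([3, 4, 7]))   # ['music', 'fish', 'music']
print(coarse_match(6, 3))   # True
```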