
Natural Language Processing Lecture 2: Semantics

Last Lecture
- Motivation
- Paradigms for studying language
- Levels of NL analysis
- Syntax
  - Parsing
    - Top-down
    - Bottom-up
    - Chart parsing

Today’s Lecture
- DCGs and parsing in Prolog
- Semantics
  - Logical representation schemes
  - Procedural representation schemes
  - Network representation schemes
  - Structured representation schemes

Parsing in PROLOG
- How do you represent a grammar in PROLOG?

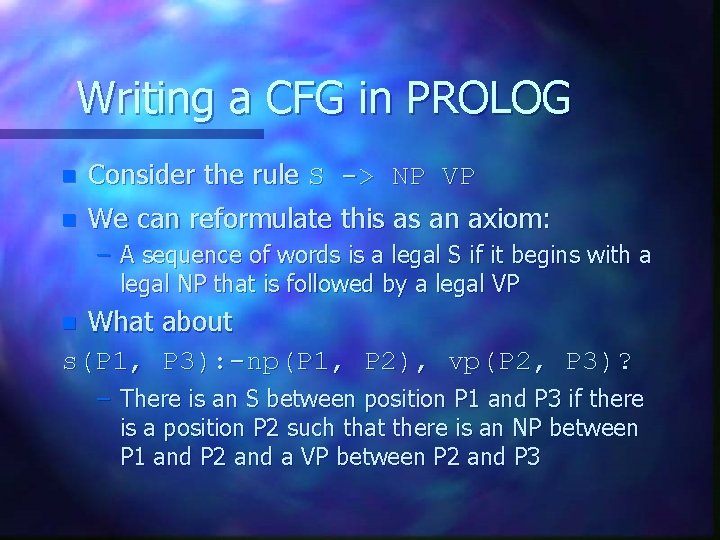

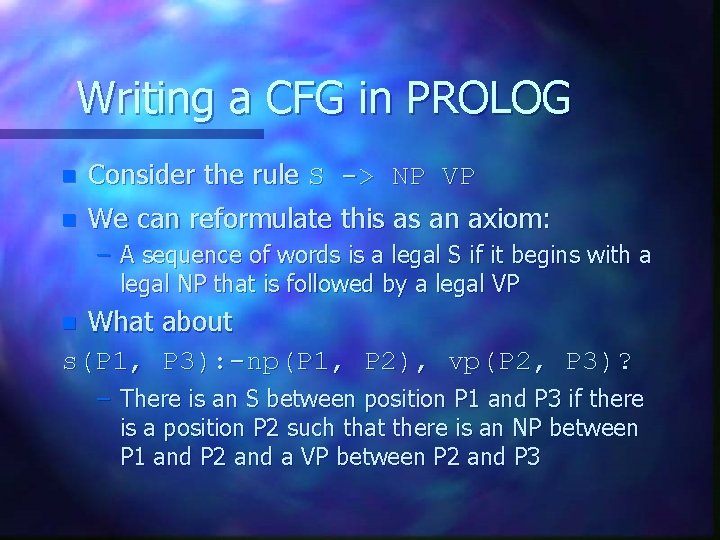

Writing a CFG in PROLOG
- Consider the rule S -> NP VP
- We can reformulate this as an axiom:
  - A sequence of words is a legal S if it begins with a legal NP that is followed by a legal VP
- What about s(P1, P3) :- np(P1, P2), vp(P2, P3)?
  - There is an S between positions P1 and P3 if there is a position P2 such that there is an NP between P1 and P2 and a VP between P2 and P3

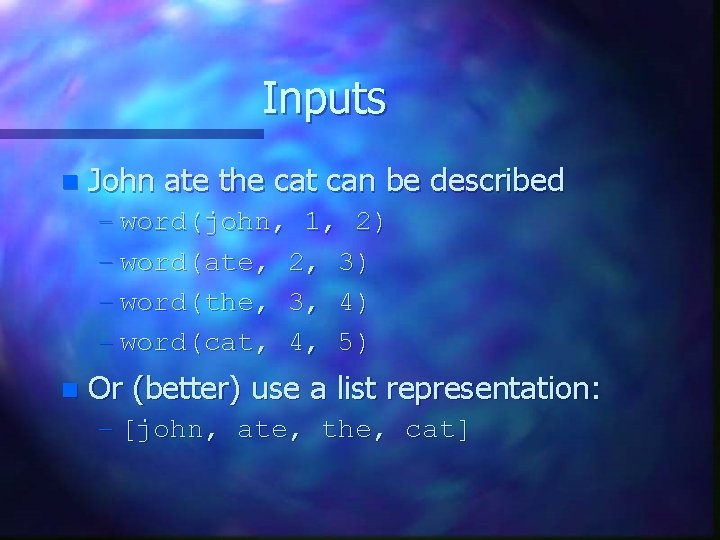

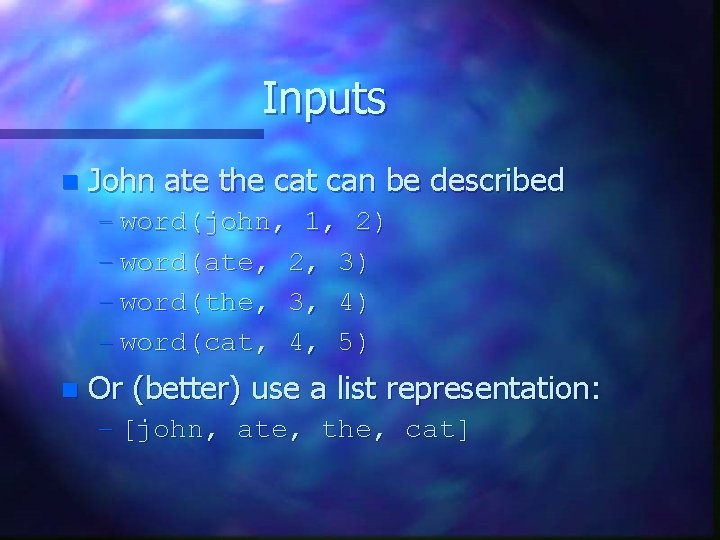

Inputs
- John ate the cat can be described as:
  - word(john, 1, 2)
  - word(ate, 2, 3)
  - word(the, 3, 4)
  - word(cat, 4, 5)
- Or (better) use a list representation:
  - [john, ate, the, cat]
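
The two input representations carry the same information. As a minimal sketch (assuming SWI-Prolog; the helper load_words/1 is hypothetical, not part of the lecture), the list form can be turned into word/3 facts by numbering the positions between words:

```prolog
:- dynamic word/3.

% load_words(+Words): hypothetical helper that replaces any existing
% word/3 facts with one fact per word, numbering the positions between
% words from 1, so [john, ate] yields word(john, 1, 2), word(ate, 2, 3).
load_words(Words) :-
    retractall(word(_, _, _)),
    load_words(Words, 1).

% load_words(+Words, +From): assert each word between From and From+1.
load_words([], _).
load_words([W|Ws], From) :-
    To is From + 1,
    assertz(word(W, From, To)),
    load_words(Ws, To).
```

For example, the query load_words([john, ate, the, cat]), word(cat, From, To) binds From = 4 and To = 5.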

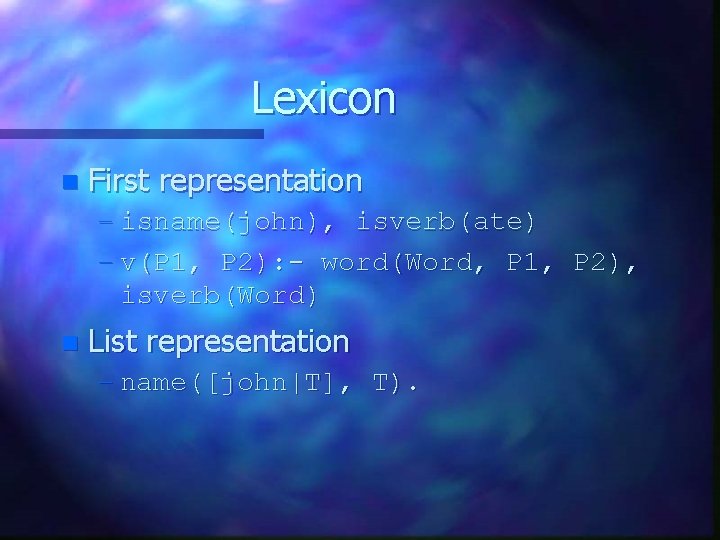

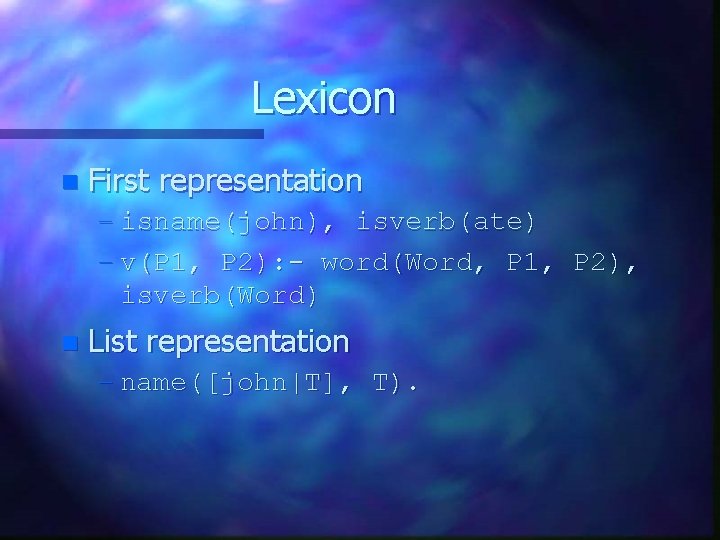

Lexicon
- First representation
  - isname(john), isverb(ate)
  - v(P1, P2) :- word(Word, P1, P2), isverb(Word).
- List representation
  - name([john|T], T).
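
In the list representation, each lexical entry consumes one word from the front of the input and returns the rest of the list. A minimal sketch, assuming SWI-Prolog, where name/2 is already a built-in predicate, so the proper-name entry is renamed to the hypothetical propn/2:

```prolog
% List (difference-list) lexicon: each clause matches one word at the
% front of the input list and returns the remaining words as T.
% propn/2 stands in for the slide's name/2 (a SWI-Prolog built-in).
propn([john|T], T).
v([ate|T], T).
art([the|T], T).
n([cat|T], T).
```

The query propn([john, ate, the, cat], Rest) binds Rest = [ate, the, cat], while propn([the, cat], Rest) fails.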

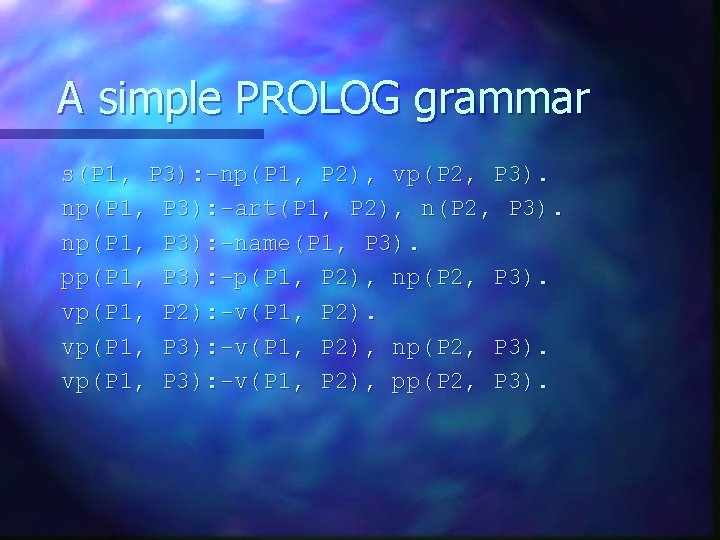

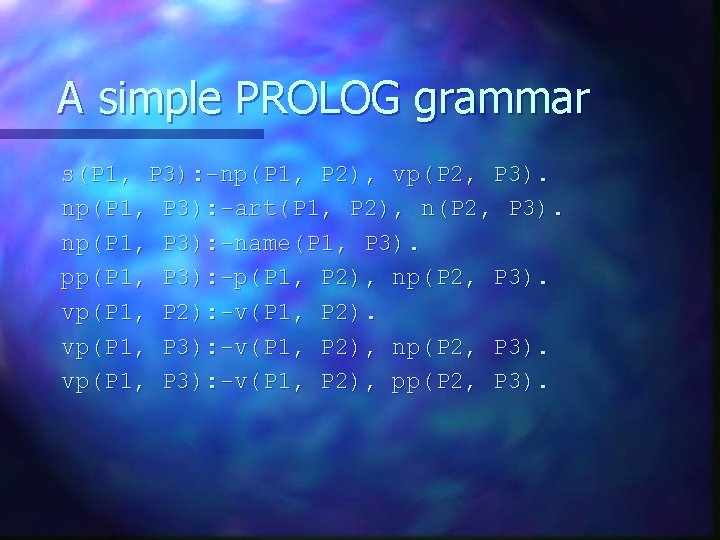

A simple PROLOG grammar

    s(P1, P3) :- np(P1, P2), vp(P2, P3).
    np(P1, P3) :- art(P1, P2), n(P2, P3).
    np(P1, P3) :- name(P1, P3).
    pp(P1, P3) :- p(P1, P2), np(P2, P3).
    vp(P1, P2) :- v(P1, P2).
    vp(P1, P3) :- v(P1, P2), np(P2, P3).
    vp(P1, P3) :- v(P1, P2), pp(P2, P3).
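
Putting the word/3 input, the first lexicon representation, and this grammar together gives a program that can be run directly. This is a sketch assuming SWI-Prolog; the isart/1, isnoun/1 and isprep/1 facts are hypothetical extensions of the slide's isname/1 and isverb/1, and name/2 is renamed propn/2 because SWI-Prolog already defines name/2 as a built-in:

```prolog
% Input "John ate the cat" as word(Word, From, To) facts.
word(john, 1, 2).
word(ate,  2, 3).
word(the,  3, 4).
word(cat,  4, 5).

% Word categories (isart/1, isnoun/1, isprep/1 are hypothetical).
isname(john).
isverb(ate).
isart(the).
isnoun(cat).
isprep(with).

% Preterminals: a category spans P1..P2 if the word there has that category.
propn(P1, P2) :- word(W, P1, P2), isname(W).
v(P1, P2)     :- word(W, P1, P2), isverb(W).
art(P1, P2)   :- word(W, P1, P2), isart(W).
n(P1, P2)     :- word(W, P1, P2), isnoun(W).
p(P1, P2)     :- word(W, P1, P2), isprep(W).

% The grammar from the slide, with name/2 renamed to propn/2.
s(P1, P3)  :- np(P1, P2), vp(P2, P3).
np(P1, P3) :- art(P1, P2), n(P2, P3).
np(P1, P3) :- propn(P1, P3).
pp(P1, P3) :- p(P1, P2), np(P2, P3).
vp(P1, P2) :- v(P1, P2).
vp(P1, P3) :- v(P1, P2), np(P2, P3).
vp(P1, P3) :- v(P1, P2), pp(P2, P3).
```

The query s(1, 5) succeeds because an S spans positions 1 to 5; s(1, 4) fails because "John ate the" is not a sentence under this grammar.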

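Definite clause grammars
- PROLOG provides an operator, -->, that supports definite clause grammars (DCGs)
- Rules look like CFG notation
- PROLOG automatically translates these rules into ordinary clauses with two extra arguments: the input list and the list remaining after the phrase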

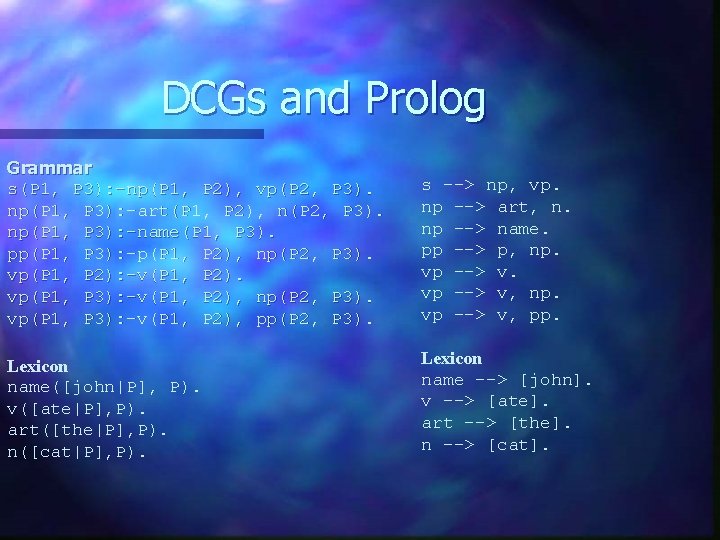

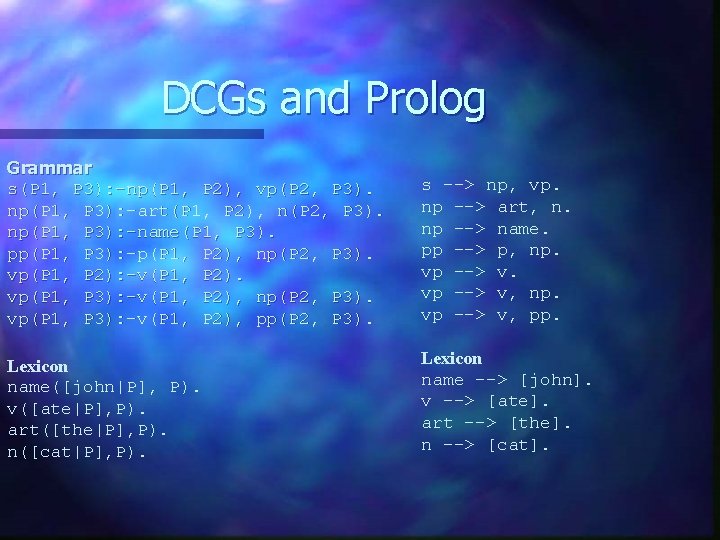

DCGs and Prolog

Grammar as Prolog clauses:

    s(P1, P3) :- np(P1, P2), vp(P2, P3).
    np(P1, P3) :- art(P1, P2), n(P2, P3).
    np(P1, P3) :- name(P1, P3).
    pp(P1, P3) :- p(P1, P2), np(P2, P3).
    vp(P1, P2) :- v(P1, P2).
    vp(P1, P3) :- v(P1, P2), np(P2, P3).
    vp(P1, P3) :- v(P1, P2), pp(P2, P3).

Grammar as a DCG:

    s --> np, vp.
    np --> art, n.
    np --> name.
    pp --> p, np.
    vp --> v.
    vp --> v, np.
    vp --> v, pp.

Lexicon as Prolog clauses:

    name([john|P], P).
    v([ate|P], P).
    art([the|P], P).
    n([cat|P], P).

Lexicon as a DCG:

    name --> [john].
    v --> [ate].
    art --> [the].
    n --> [cat].
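
The DCG can be run with phrase/2, which calls the translated s/2 with the word list as input and [] as the required remainder. A sketch assuming SWI-Prolog; it includes the vp --> v and vp --> v, np rules from the clause version and uses the hypothetical propn in place of name, because a nonterminal name//0 would be translated to name/2, which is a SWI-Prolog built-in:

```prolog
% Grammar: each rule is translated to a clause with two list arguments.
s  --> np, vp.
np --> art, n.
np --> propn.
pp --> p, np.
vp --> v.
vp --> v, np.
vp --> v, pp.

% Lexicon: terminals are written as lists of words.
propn --> [john].
v     --> [ate].
art   --> [the].
n     --> [cat].
p     --> [with].
```

The query phrase(s, [john, ate, the, cat]) is equivalent to s([john, ate, the, cat], []). In SWI-Prolog, calling expand_term/2 on a rule such as (s --> np, vp) shows the clause that PROLOG generates from it.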

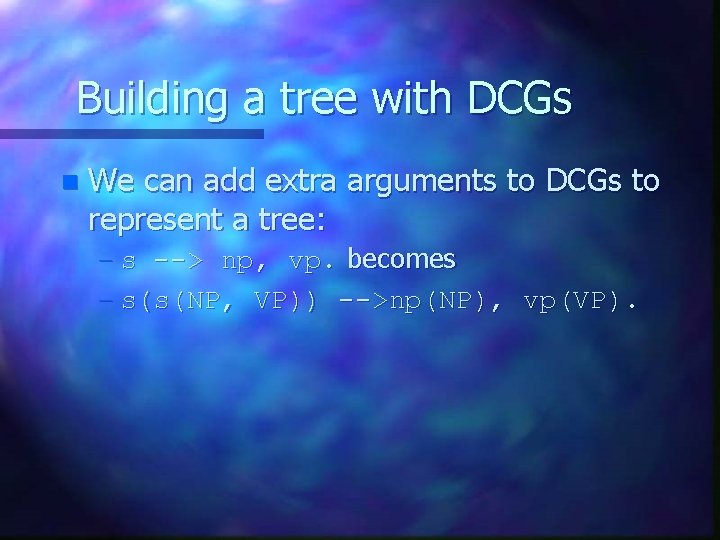

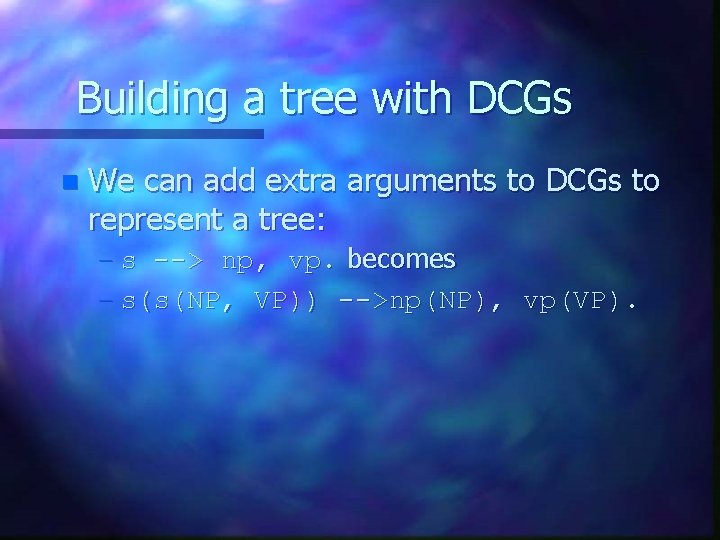

Building a tree with DCGs
- We can add extra arguments to DCGs to represent a tree:
  - s --> np, vp.
  - becomes
  - s(s(NP, VP)) --> np(NP), vp(VP).
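
A minimal tree-building grammar for "John ate the cat", assuming SWI-Prolog. Each nonterminal returns the subtree it recognized in its extra argument; propn is a hypothetical stand-in for the slide's name category:

```prolog
% Each rule builds a term whose functor names the phrase and whose
% arguments are the subtrees returned by its daughters.
s(s(NP, VP))   --> np(NP), vp(VP).
np(np(ART, N)) --> art(ART), n(N).
np(np(PN))     --> propn(PN).
vp(vp(V))      --> v(V).
vp(vp(V, NP))  --> v(V), np(NP).

% Lexicon: leaves wrap the word in its category.
propn(propn(john)) --> [john].
v(v(ate))          --> [ate].
art(art(the))      --> [the].
n(n(cat))          --> [cat].
```

The query phrase(s(T), [john, ate, the, cat]) binds T = s(np(propn(john)), vp(v(ate), np(art(the), n(cat)))).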

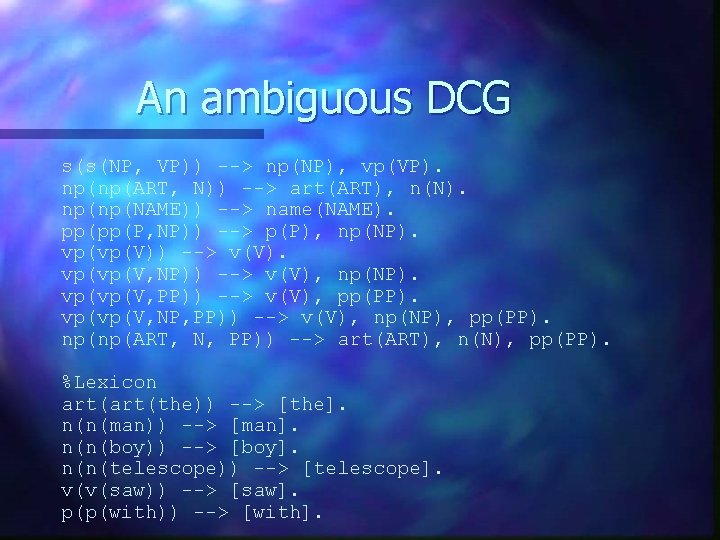

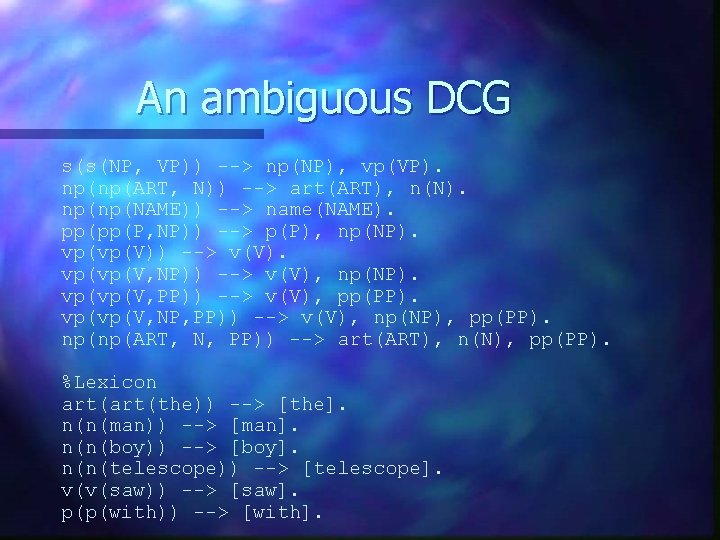

An ambiguous DCG

    s(s(NP, VP)) --> np(NP), vp(VP).
    np(np(ART, N)) --> art(ART), n(N).
    np(np(NAME)) --> name(NAME).
    pp(pp(P, NP)) --> p(P), np(NP).
    vp(vp(V)) --> v(V).
    vp(vp(V, NP)) --> v(V), np(NP).
    vp(vp(V, PP)) --> v(V), pp(PP).
    vp(vp(V, NP, PP)) --> v(V), np(NP), pp(PP).
    np(np(ART, N, PP)) --> art(ART), n(N), pp(PP).
    % Lexicon
    art(art(the)) --> [the].
    n(n(man)) --> [man].
    n(n(boy)) --> [boy].
    n(n(telescope)) --> [telescope].
    v(v(saw)) --> [saw].
    p(p(with)) --> [with].
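
Running the ambiguous grammar on "the man saw the boy with the telescope" exposes the PP-attachment ambiguity as two distinct trees. A sketch assuming SWI-Prolog: the np rules are grouped together (SWI-Prolog warns about discontiguous clauses otherwise), the name rule is left out because the lexicon defines no names, and parses/2 is a hypothetical helper that collects every tree with findall/3:

```prolog
s(s(NP, VP))       --> np(NP), vp(VP).

np(np(ART, N))     --> art(ART), n(N).
np(np(ART, N, PP)) --> art(ART), n(N), pp(PP).   % PP attached to the noun

pp(pp(P, NP))      --> p(P), np(NP).

vp(vp(V))          --> v(V).
vp(vp(V, NP))      --> v(V), np(NP).
vp(vp(V, PP))      --> v(V), pp(PP).
vp(vp(V, NP, PP))  --> v(V), np(NP), pp(PP).     % PP attached to the verb

% Lexicon
art(art(the))      --> [the].
n(n(man))          --> [man].
n(n(boy))          --> [boy].
n(n(telescope))    --> [telescope].
v(v(saw))          --> [saw].
p(p(with))         --> [with].

% parses(+Words, -Trees): every parse tree the grammar assigns to Words.
parses(Words, Trees) :-
    findall(T, phrase(s(T), Words), Trees).
```

Of the two trees returned, the first attaches "with the telescope" to the boy (np(ART, N, PP)) and the second attaches it to the verb phrase (vp(V, NP, PP)). The unambiguous "the man saw the boy" gets a single tree.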

Semantics
- What does it mean?

Semantic ambiguity
- A sentence may have a single syntactic structure, but multiple semantic structures
  - Every boy loves a dog (one dog loved by all the boys, or possibly a different dog for each boy)
- Vagueness: some senses are more specific than others
  - “Person” is more vague than “woman”
  - Quantifiers: Many people saw the accident

Logical forms
- Most common is first-order predicate calculus (FOPC)
- PROLOG is an ideal implementation language
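
Since PROLOG terms can represent FOPC formulas directly, the two readings of "Every boy loves a dog" can be written as terms and checked against a small model. This is a sketch under stated assumptions: the model facts, the holds/1 evaluator, and the restricted-quantifier notation all(Var, Restriction, Body) and exists(Var, Restriction, Body) are hypothetical, and quantified variables are ordinary shared Prolog variables:

```prolog
% A tiny hypothetical model: each boy loves a different dog.
boy(b1).
boy(b2).
dog(d1).
dog(d2).
loves(b1, d1).
loves(b2, d2).

% holds(+Formula): Formula is true in the model above.
holds(and(A, B))       :- !, holds(A), holds(B).
holds(not(A))          :- !, \+ holds(A).
% exists: some binding satisfying the restriction also satisfies the body.
holds(exists(_, R, B)) :- !, holds(R), holds(B).
% all: every binding satisfying the restriction satisfies the body.
holds(all(_, R, B))    :- !, forall(holds(R), holds(B)).
% Anything else is an atomic formula, run as a goal against the facts.
holds(Atomic)          :- call(Atomic).

% The two scope readings of "Every boy loves a dog".
reading(every_wide, all(X, boy(X), exists(Y, dog(Y), loves(X, Y)))).
reading(a_wide,     exists(Y, dog(Y), all(X, boy(X), loves(X, Y)))).
```

In this model the reading where "every" takes wide scope is true, while the reading where "a dog" takes wide scope (one dog loved by all boys) is false, so the same sentence gets different truth values depending on its logical form.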

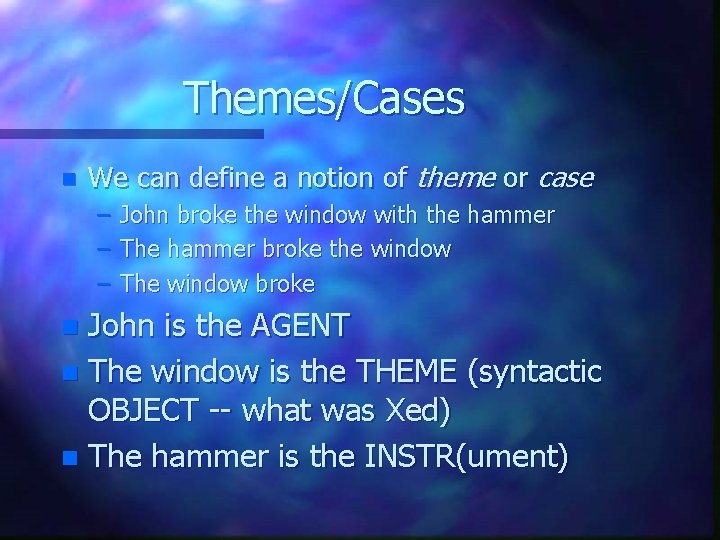

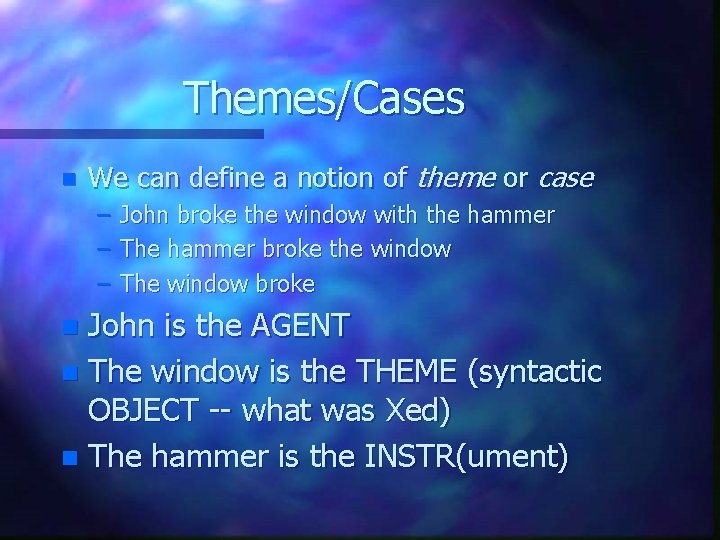

Thematic roles
- Consider the following sentences:
  - John broke the window with the hammer
  - The hammer broke the window
  - The window broke
- The syntactic structure is different, but John, the hammer, and the window play the same semantic roles in every sentence in which they appear

Themes/Cases
- We can define a notion of theme or case
  - John broke the window with the hammer
  - The hammer broke the window
  - The window broke
- John is the AGENT
- The window is the THEME (what was Xed: the syntactic OBJECT in the first two sentences, but the subject in the third)
- The hammer is the INSTR(ument)
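
One way to make the AGENT/THEME/INSTR assignment concrete for "break" is a rule that looks at syntactic position and animacy. The rule below is a simplified assumption for illustration, not a general theory of case assignment: without an object, the subject is the THEME; otherwise the object is the THEME, an animate subject is the AGENT, an inanimate subject is the INSTR, and a with-phrase is the INSTR. The animate/1 fact and roles/4 are hypothetical:

```prolog
:- use_module(library(lists)).

% Hypothetical animacy lexicon: only john is animate here.
animate(john).

% roles(+Subj, +Obj, +With, -Roles): thematic roles for "break".
% Obj and With are the atom none when the sentence lacks them.
roles(Subj, none, none, [theme=Subj]) :- !.     % "The window broke"
roles(Subj, Obj, With, Roles) :-
    Obj \== none,
    % Subject role depends on animacy.
    (   animate(Subj) -> SubjRole = agent ; SubjRole = instr ),
    Base = [SubjRole=Subj, theme=Obj],
    % A with-phrase, if present, fills INSTR.
    (   With == none -> Roles = Base ; append(Base, [instr=With], Roles) ).
```

With this rule, roles(john, window, hammer, R) gives [agent=john, theme=window, instr=hammer], roles(hammer, window, none, R) gives [instr=hammer, theme=window], and roles(window, none, none, R) gives [theme=window]: the window is the THEME in all three sentences.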

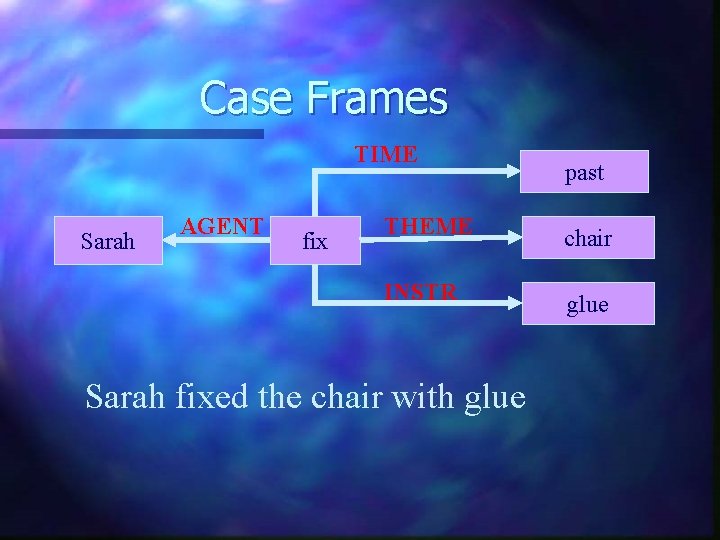

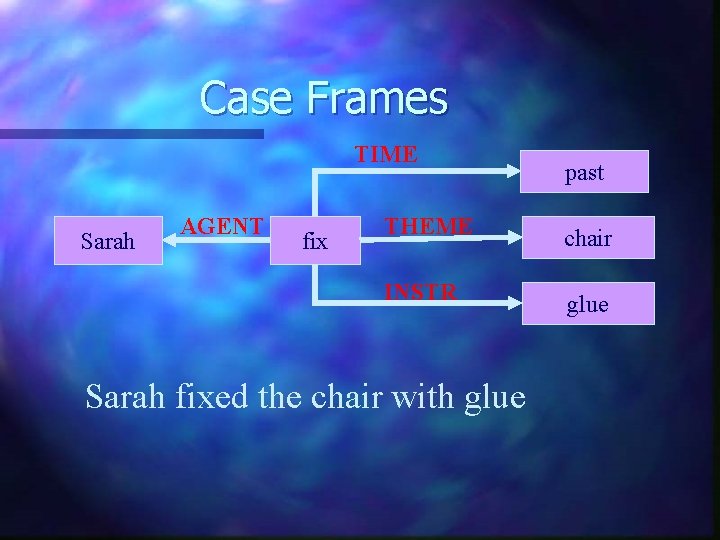

Case Frames
- Sarah fixed the chair with glue
  - fix
    - AGENT: Sarah
    - THEME: chair
    - INSTR: glue
    - TIME: past
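
A case frame can also be built during parsing by giving the DCG an extra argument. A minimal sketch assuming SWI-Prolog; the grammar, the frame/2 term and the role=filler list are hypothetical and cover only this one sentence pattern:

```prolog
% The sentence rule fills the frame's slots from its constituents.
sentence(frame(Verb, [agent=Agent, theme=Theme, instr=Instr, time=Time])) -->
    np(Agent), verb(Verb, Time), np(Theme), [with], np(Instr).

np(X) --> [the], noun(X).
np(X) --> propn(X).
np(X) --> mass_noun(X).          % "glue" appears without an article

noun(chair)     --> [chair].
mass_noun(glue) --> [glue].
propn(sarah)    --> [sarah].

% The surface form "fixed" maps to the predicate fix with TIME = past.
verb(fix, past) --> [fixed].
```

The query phrase(sentence(F), [sarah, fixed, the, chair, with, glue]) binds F = frame(fix, [agent=sarah, theme=chair, instr=glue, time=past]).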

Network Representations
- Examples:
  - Semantic networks
  - Conceptual dependencies
  - Conceptual graphs

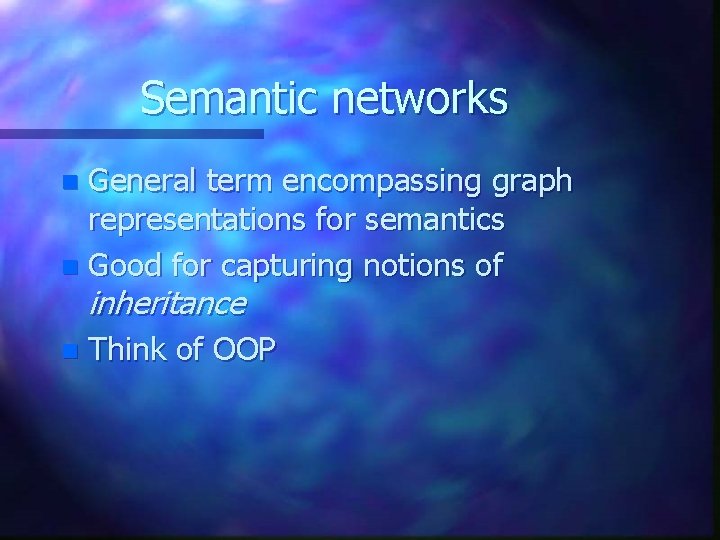

Semantic networks
- General term encompassing graph representations for semantics
- Good for capturing notions of inheritance
  - Think of OOP

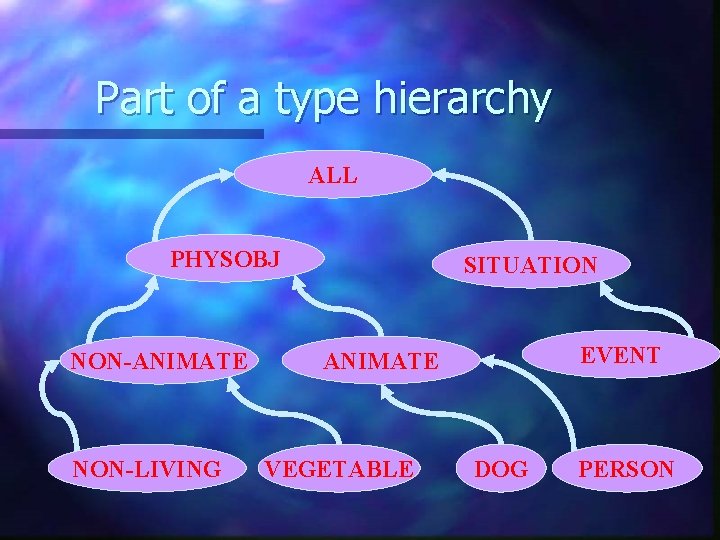

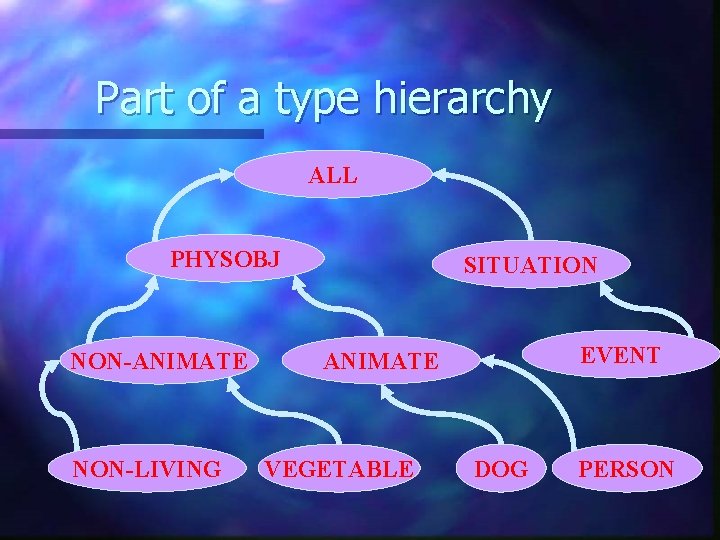

Part of a type hierarchy
- ALL
  - PHYSOBJ
    - NON-ANIMATE
      - NON-LIVING
      - VEGETABLE
    - ANIMATE
      - DOG
      - PERSON
  - SITUATION
    - EVENT
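
The inheritance that semantic networks capture can be sketched in PROLOG with direct isa/2 links and their transitive closure. This assumes the hierarchy reads ALL > {PHYSOBJ, SITUATION}, PHYSOBJ > {NON-ANIMATE, ANIMATE}, NON-ANIMATE > {NON-LIVING, VEGETABLE}, ANIMATE > {DOG, PERSON}, SITUATION > {EVENT}; the predicate names and the properties has_mass and self_moving are hypothetical:

```prolog
% isa(Sub, Super): direct links in the type hierarchy.
isa(physobj,     all).
isa(situation,   all).
isa(non_animate, physobj).
isa(animate,     physobj).
isa(non_living,  non_animate).
isa(vegetable,   non_animate).
isa(dog,         animate).
isa(person,      animate).
isa(event,       situation).

% kind_of(Type, Ancestor): Type is Ancestor or lies below it.
kind_of(T, T).
kind_of(T, Ancestor) :- isa(T, Parent), kind_of(Parent, Ancestor).

% Properties stored once, at the most general type they apply to.
prop(physobj, has_mass).
prop(animate, self_moving).

% has_prop(Type, Prop): Type inherits Prop from itself or an ancestor.
has_prop(Type, Prop) :- kind_of(Type, Owner), prop(Owner, Prop).
```

Here has_prop(person, has_mass) succeeds because the property is stored once on physobj and inherited, while has_prop(vegetable, self_moving) fails because vegetable is not below animate.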

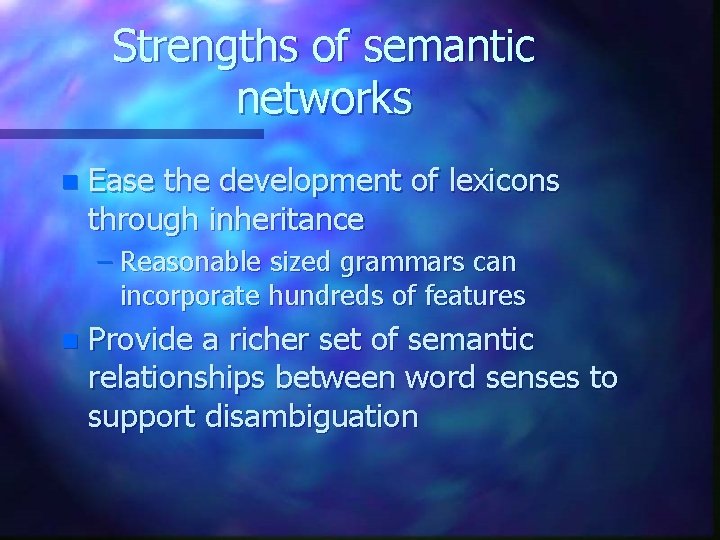

Strengths of semantic networks
- Ease the development of lexicons through inheritance
  - Reasonably sized grammars can incorporate hundreds of features
- Provide a richer set of semantic relationships between word senses to support disambiguation

Conceptual dependencies
- Influential in early semantic representations
- Base representation on a small set of primitives

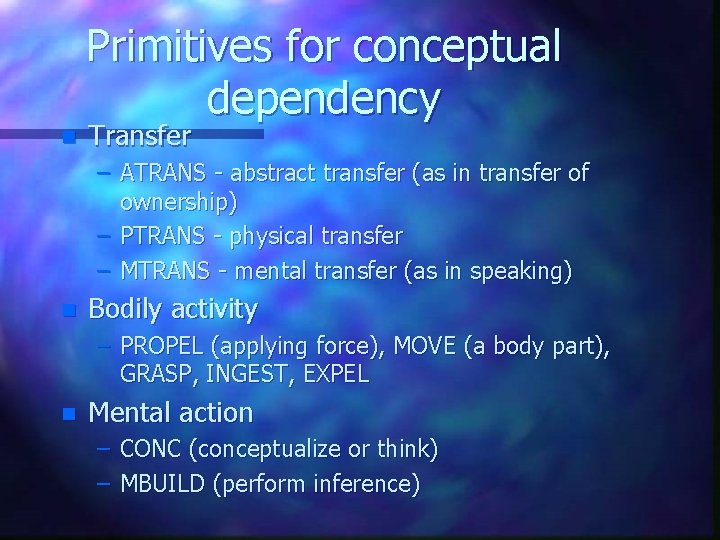

Primitives for conceptual dependency
- Transfer
  - ATRANS: abstract transfer (as in transfer of ownership)
  - PTRANS: physical transfer
  - MTRANS: mental transfer (as in speaking)
- Bodily activity
  - PROPEL (applying force), MOVE (a body part), GRASP, INGEST, EXPEL
- Mental action
  - CONC (conceptualize or think)
  - MBUILD (perform inference)
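
The point of a small set of primitives is that different verbs map to the same structure, so an inference rule can be attached to the primitive once. A minimal sketch; the argument order atrans(Actor, Object, From, To), the verb terms and the inference rules are assumptions for illustration, and "sell" is simplified to a single ATRANS of the object (a fuller analysis would add a second ATRANS for the money):

```prolog
% cd(+Sentence, -Primitive): hypothetical verb-to-primitive mapping.
% Primitive arguments are assumed to be (Actor, Object, From, To).
cd(give(Actor, Obj, To),  atrans(Actor, Obj, Actor, To)).
cd(sell(Actor, Obj, To),  atrans(Actor, Obj, Actor, To)).   % simplified
cd(tell(Actor, Info, To), mtrans(Actor, Info, Actor, To)).

% infer(+Primitive, -Fact): one rule per primitive, shared by all verbs.
infer(atrans(_, Obj, _, To),  possesses(To, Obj)).
infer(mtrans(_, Info, _, To), knows(To, Info)).

% consequence(+Sentence, -Fact): map to a primitive, then infer.
consequence(Sentence, Fact) :-
    cd(Sentence, Primitive),
    infer(Primitive, Fact).
```

Both consequence(give(john, book, mary), F) and consequence(sell(john, car, bill), F) go through ATRANS, giving possesses(mary, book) and possesses(bill, car) from the same rule.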

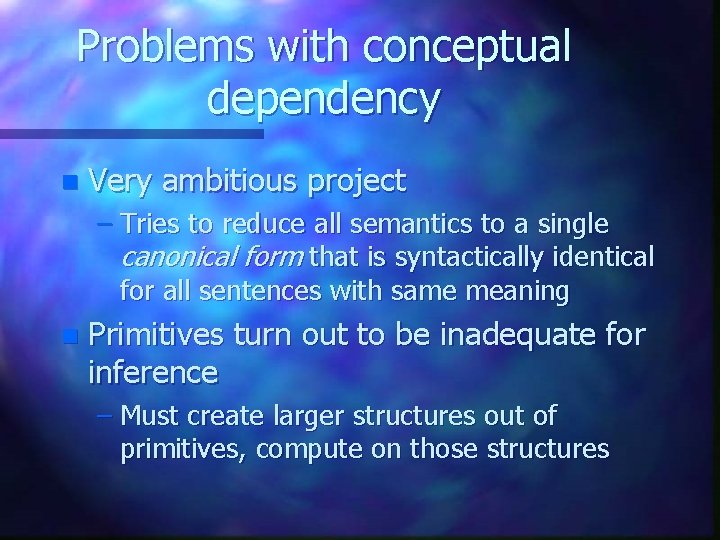

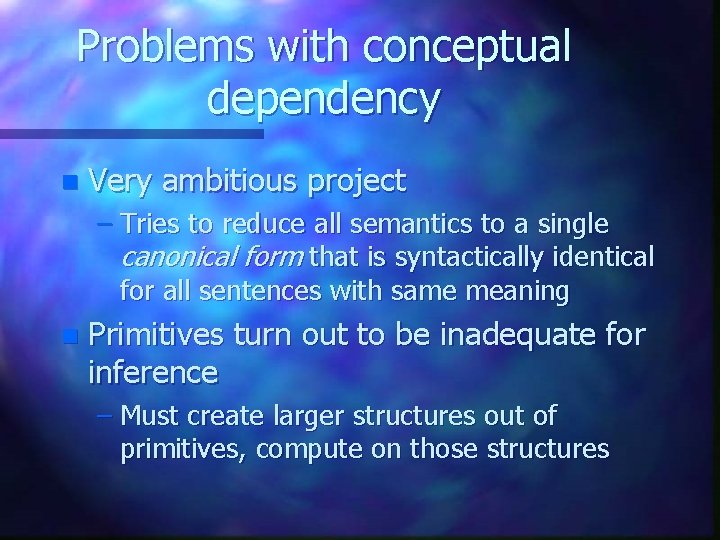

Problems with conceptual dependency
- Very ambitious project
  - Tries to reduce all semantics to a single canonical form that is syntactically identical for all sentences with the same meaning
- Primitives turn out to be inadequate for inference
  - Must create larger structures out of primitives and compute on those structures

Structured representation schemes
- Frames
- Scripts

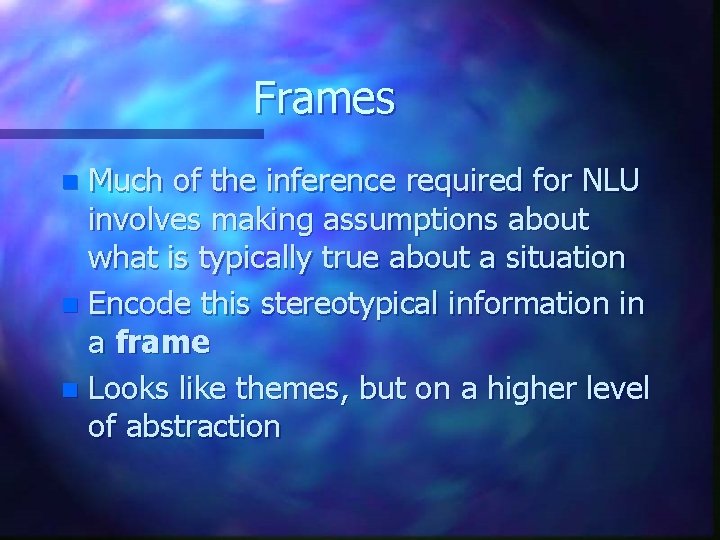

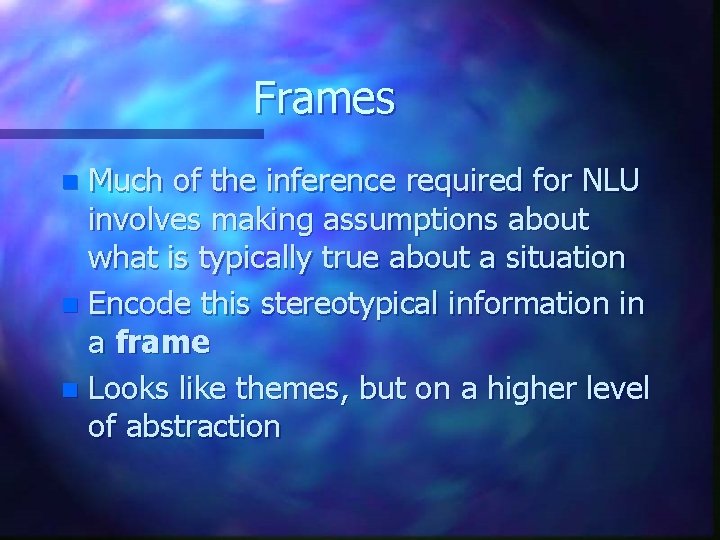

Frames
- Much of the inference required for NLU involves making assumptions about what is typically true about a situation
- Encode this stereotypical information in a frame
- Looks like themes, but at a higher level of abstraction

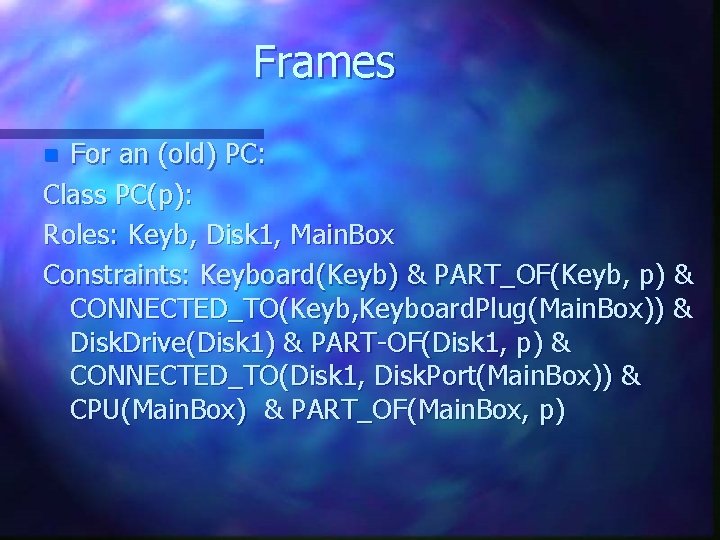

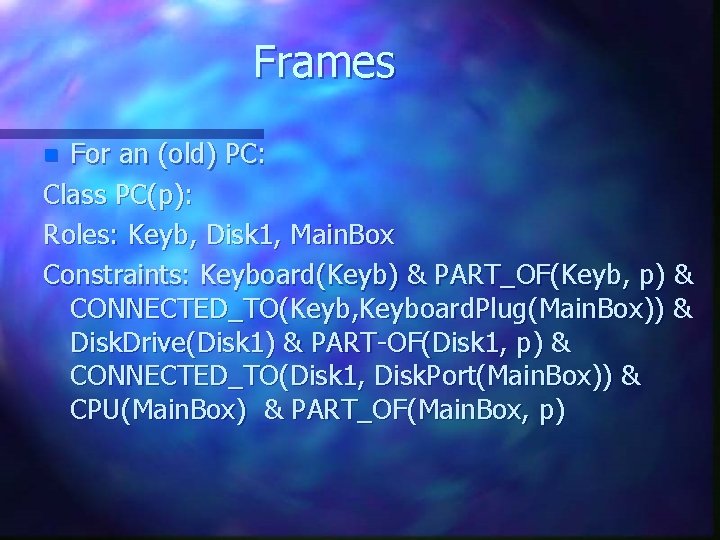

Frames
For an (old) PC:

    Class PC(p):
      Roles: Keyb, Disk1, MainBox
      Constraints: Keyboard(Keyb) & PART_OF(Keyb, p)
        & CONNECTED_TO(Keyb, KeyboardPlug(MainBox))
        & DiskDrive(Disk1) & PART_OF(Disk1, p)
        & CONNECTED_TO(Disk1, DiskPort(MainBox))
        & CPU(MainBox) & PART_OF(MainBox, p)
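
The frame's constraints read naturally as a PROLOG rule whose arguments are the role fillers. A sketch with hypothetical instance facts for one machine, pc1; the functions KeyboardPlug and DiskPort become the compound terms keyboard_plug/1 and disk_port/1:

```prolog
% Hypothetical instance data for one PC, pc1.
keyboard(kb1).
disk_drive(d1).
cpu(box1).
part_of(kb1,  pc1).
part_of(d1,   pc1).
part_of(box1, pc1).
connected_to(kb1, keyboard_plug(box1)).
connected_to(d1,  disk_port(box1)).

% pc(P, Keyb, Disk1, MainBox): P satisfies the PC frame, with the
% given fillers for the roles Keyb, Disk1 and MainBox.
pc(P, Keyb, Disk1, MainBox) :-
    keyboard(Keyb),    part_of(Keyb, P),
    connected_to(Keyb, keyboard_plug(MainBox)),
    disk_drive(Disk1), part_of(Disk1, P),
    connected_to(Disk1, disk_port(MainBox)),
    cpu(MainBox),      part_of(MainBox, P).
```

The query pc(pc1, Keyb, Disk1, MainBox) succeeds with Keyb = kb1, Disk1 = d1 and MainBox = box1, so checking the constraints also recovers the role fillers.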

Scripts
- A means of identifying common situations in a particular domain
- A means of generating expectations
  - We precompile information, rather than recomputing from first principles

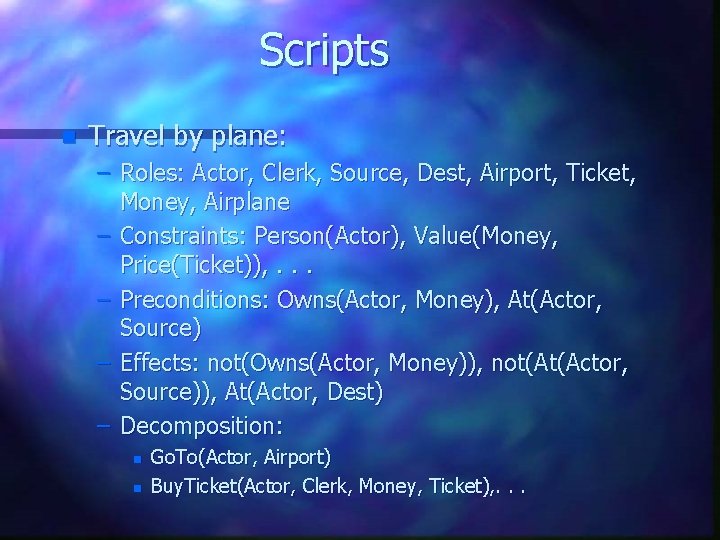

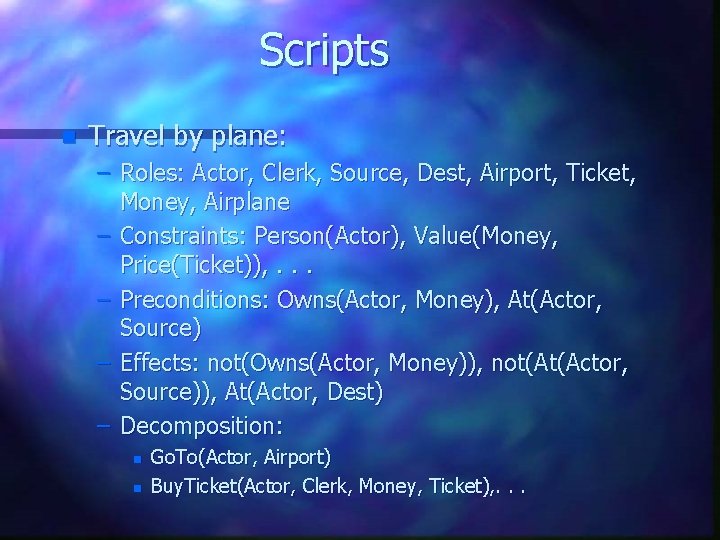

Scripts
- Travel by plane:
  - Roles: Actor, Clerk, Source, Dest, Airport, Ticket, Money, Airplane
  - Constraints: Person(Actor), Value(Money, Price(Ticket)), . . .
  - Preconditions: Owns(Actor, Money), At(Actor, Source)
  - Effects: not(Owns(Actor, Money)), not(At(Actor, Source)), At(Actor, Dest)
  - Decomposition:
    - GoTo(Actor, Airport)
    - BuyTicket(Actor, Clerk, Money, Ticket), . . .
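
The preconditions and effects of the travel-by-plane script can be read as an operator on a world state. A minimal sketch, assuming SWI-Prolog's library(lists); the state is a list of ground facts, the negated effects are modelled by deleting the corresponding facts, and the script/4 and apply_script/3 names are hypothetical:

```prolog
:- use_module(library(lists)).

% script(Name, Preconditions, DeletedFacts, AddedFacts)
script(travel_by_plane(Actor, Money, Source, Dest),
       [owns(Actor, Money), at(Actor, Source)],   % preconditions
       [owns(Actor, Money), at(Actor, Source)],   % not(...) effects
       [at(Actor, Dest)]).                        % positive effects

% apply_script(+Script, +State0, -State): if every precondition holds
% in State0, remove the negated effects and add the positive ones.
apply_script(Script, State0, State) :-
    script(Script, Pre, Del, Add),
    subset(Pre, State0),
    subtract(State0, Del, State1),
    append(State1, Add, State).
```

Applying travel_by_plane(ann, cash, boston, denver) to the state [owns(ann, cash), at(ann, boston)] yields [at(ann, denver)]; without owns(ann, cash) in the state, the precondition check fails and the script does not apply.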

Issues with Scripts
- Script selection
  - How do we decide which script is relevant?
- Where are we in the script?

NLP -- Where are we?
- We’re five years away (??)
- Call 1-888-NUANCE9 (banking/airline ticket demo)
- 1-888-LSD-TALK (Weather information)
- Google
- Ask Jeeves
- Office Assistant