
NATURAL LANGUAGE PROCESSING AND INFORMATION RETRIEVAL
Dr. Eleni Galiotou
Assistant Professor, Department of Informatics, TEI of Athens
Feb 24, 2004, Eleni Galiotou: Tempus Seminar

(Computer-based) Information Retrieval
• Locate (electronically available) documents that satisfy users' information needs
• Information need: expressed as a statement in a query language, which is matched against document surrogates (title, abstract, keywords, etc.)
• Outcome of the IR process: articles, memos, reports, books, annotated image and sound files

The IR strategy
• Purpose:
  – Retrieve all relevant documents
  – Retrieve as few non-relevant documents as possible
• Techniques in classical IR:
  – Empirical and ad hoc
  – Quantitative methods
• IR is also a Natural Language Processing problem
• Heterogeneous collections of full-text documents
  – Need for content understanding => NLP techniques

Main areas of research in IR
• Content analysis
• Relationships between documents, used to improve the efficiency and effectiveness of IR strategies
• Measurement of retrieval effectiveness
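Retrieval effectiveness is usually measured with precision (the fraction of retrieved documents that are relevant) and recall (the fraction of relevant documents that are retrieved), which correspond to the two goals of the IR strategy. The Python sketch below computes both for a single query; the document identifiers are hypothetical.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) for one query.

    retrieved: document ids returned by the system
    relevant:  document ids judged relevant for the query
    """
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)  # relevant documents actually retrieved
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical run: 4 documents retrieved, 3 of the 5 relevant ones among them.
p, r = precision_recall(["d1", "d2", "d3", "d7"], ["d1", "d2", "d3", "d4", "d5"])
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.75 recall=0.60
```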

Example: the vector-space model (1)
• The SMART Text Retrieval System
• Documents and queries are represented as vectors in a T-dimensional space (T: number of distinct terms in the document collection)
• Automated indexing: assigning terms to a piece of text
• Terms are weighted to reflect their relative importance in the text
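A minimal Python sketch of turning texts into T-dimensional term vectors, assuming whitespace tokenization, lowercasing and raw term counts as weights (SMART itself applies more elaborate term weighting). The function names and sample documents are hypothetical.

```python
from collections import Counter


def build_vocabulary(docs):
    """Sorted list of the T distinct terms in the collection."""
    return sorted({term for doc in docs for term in doc.lower().split()})


def to_vector(text, vocabulary):
    """Represent a text as a T-dimensional vector of raw term counts."""
    counts = Counter(text.lower().split())
    return [counts[term] for term in vocabulary]  # absent terms count as 0


docs = ["joint venture in Athens", "venture capital", "Athens news"]
vocab = build_vocabulary(docs)
print(vocab)  # ['athens', 'capital', 'in', 'joint', 'news', 'venture']
print(to_vector("venture in Athens venture", vocab))  # [1, 0, 1, 0, 0, 2]
```

A query is indexed with the same function, so queries and documents live in the same vector space.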

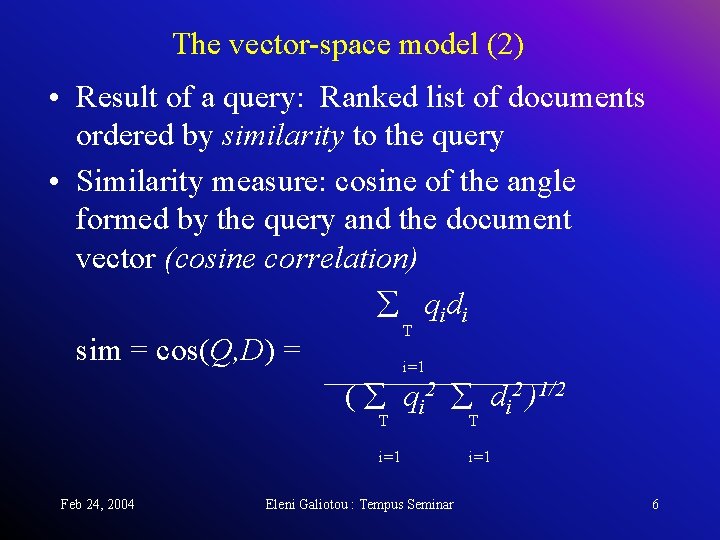

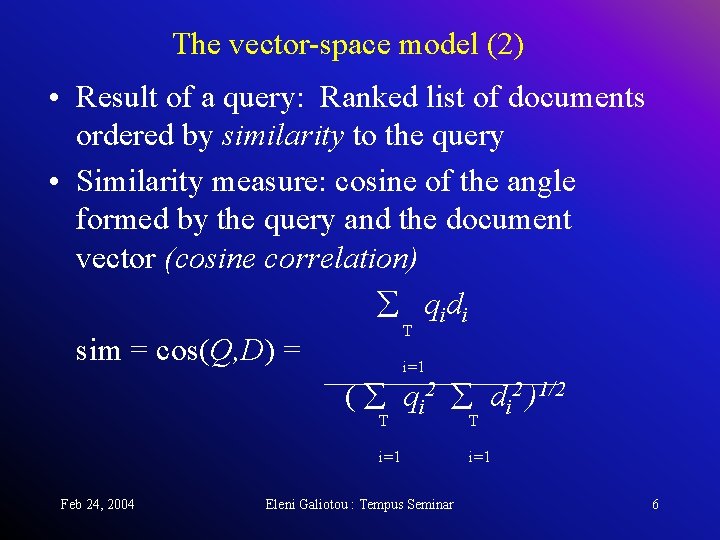

The vector-space model (2)
• Result of a query: a ranked list of documents ordered by similarity to the query
• Similarity measure: cosine of the angle formed by the query vector and the document vector (cosine correlation):

  sim(Q, D) = \cos(Q, D) = \frac{\sum_{i=1}^{T} q_i d_i}{\left( \sum_{i=1}^{T} q_i^2 \cdot \sum_{i=1}^{T} d_i^2 \right)^{1/2}}
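The cosine correlation and the resulting ranked list can be sketched in Python as follows. The vectors are hypothetical and assumed to be already weighted; a zero vector is given similarity 0 to avoid division by zero.

```python
import math


def cosine(q, d):
    """Cosine correlation between query vector q and document vector d."""
    dot = sum(qi * di for qi, di in zip(q, d))
    norm = math.sqrt(sum(qi * qi for qi in q) * sum(di * di for di in d))
    return dot / norm if norm else 0.0


def rank(query_vec, doc_vecs):
    """Return (doc_id, similarity) pairs, most similar first."""
    scores = {doc_id: cosine(query_vec, vec) for doc_id, vec in doc_vecs.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical 3-term collection (T = 3).
docs = {"d1": [1, 0, 1], "d2": [0, 1, 0], "d3": [1, 1, 0]}
for doc_id, score in rank([1, 0, 1], docs):
    print(doc_id, round(score, 3))
# d1 1.0
# d3 0.5
# d2 0.0
```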

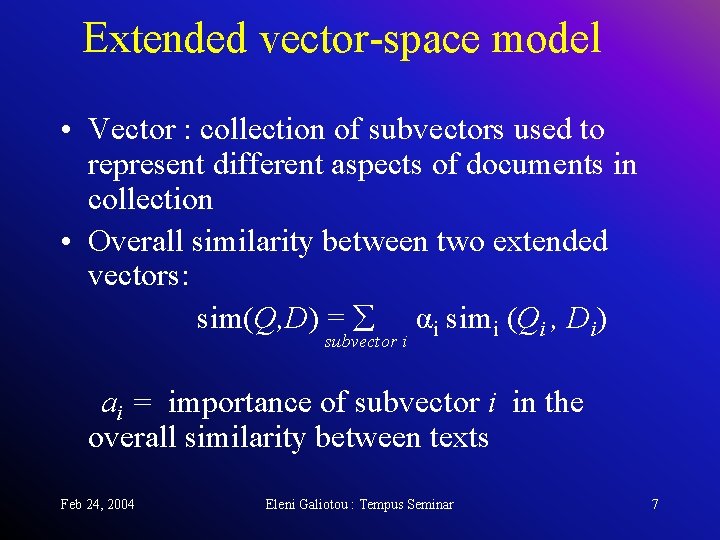

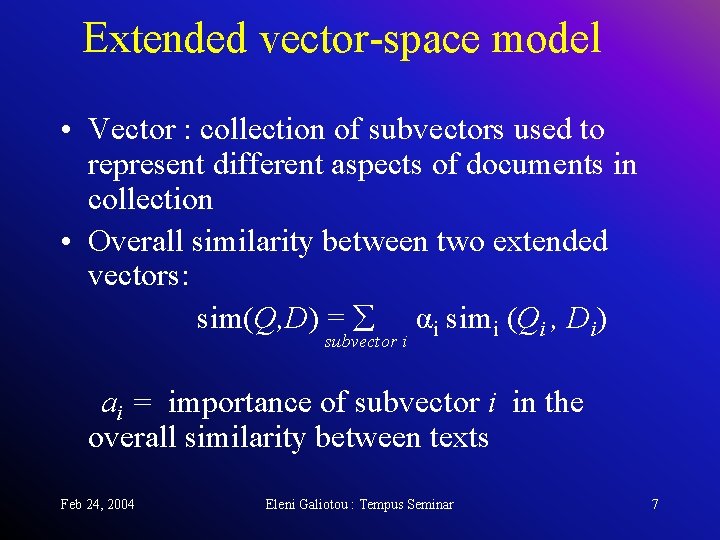

Extended vector-space model
• Vector: a collection of subvectors used to represent different aspects of the documents in the collection
• Overall similarity between two extended vectors:

  sim(Q, D) = \sum_{i} \alpha_i \, sim_i(Q_i, D_i)

  where the sum runs over the subvectors i and α_i is the importance of subvector i in the overall similarity between texts
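A sketch of the extended model's weighted sum, assuming (the slide does not say) that every sim_i is the cosine correlation of the plain vector-space model. The subvector names "terms" and "authors" and the α weights are hypothetical.

```python
import math


def cosine(q, d):
    """Cosine correlation, used here as every per-subvector sim_i (an assumption)."""
    dot = sum(a * b for a, b in zip(q, d))
    norm = math.sqrt(sum(a * a for a in q) * sum(b * b for b in d))
    return dot / norm if norm else 0.0


def extended_sim(query, doc, alphas):
    """sim(Q, D) = sum over subvectors i of alpha_i * sim_i(Q_i, D_i).

    query, doc: dicts mapping a subvector name to its vector
    alphas:     dict mapping the same names to importance weights
    """
    return sum(alphas[name] * cosine(query[name], doc[name]) for name in alphas)


# Hypothetical subvectors: content terms and author names.
query = {"terms": [1, 1, 0], "authors": [1, 0]}
doc = {"terms": [1, 1, 0], "authors": [0, 1]}
print(extended_sim(query, doc, {"terms": 0.8, "authors": 0.2}))  # 0.8
```

Raising the weight of a subvector makes agreement on that aspect (here, authorship) count more towards the overall similarity.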

Indexing
• An index language is used to describe documents and requests
• Pre-coordinate index terms: a logical combination of index terms used as a label to identify a class of documents
• Post-coordinate terms: classes of documents labeled with the individual index terms are combined at search time
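To make the distinction concrete, here is a toy Python sketch: the post-coordinate search combines the document classes of individual terms at query time (here with set intersection, i.e. a Boolean AND), whereas the pre-coordinate index stores the combination itself as a label. The documents, terms and the choice of AND are illustrative assumptions.

```python
from collections import defaultdict

# Hypothetical documents with their individual index terms.
doc_terms = {
    "d1": {"joint", "venture", "greece"},
    "d2": {"joint", "pain"},
    "d3": {"venture", "capital"},
}

# Post-coordinate index: one class of documents per individual term.
postings = defaultdict(set)
for doc_id, terms in doc_terms.items():
    for term in terms:
        postings[term].add(doc_id)


def post_coordinate(*terms):
    """Combine (here: intersect) the classes of the individual terms at search time."""
    classes = [postings.get(t, set()) for t in terms]
    return set.intersection(*classes) if classes else set()


# Pre-coordinate index: the combination "joint AND venture" is itself a label,
# assigned at indexing time.
pre_coordinated = {("joint", "venture"): {"d1"}}

print(sorted(post_coordinate("joint", "venture")))  # ['d1']
print(sorted(pre_coordinated[("joint", "venture")]))  # ['d1']
```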

LMI vs. NLI
• Non-Linguistic Indexing (NLI): removing stopwords, applying statistical criteria
• Linguistically Motivated Indexing (LMI):
  – Applying syntactic and/or semantic techniques for term identification and description formation
  – Identifying multi-word units and characterizing their internal structure
• Is NLP needed for automated indexing?!

Index Term Weighting
• tf(t): within-document frequency of term t
• idf(t): inverse document frequency = log(N/n)
  – N: total number of documents in the collection
  – n: number of documents containing term t
• General weighting scheme: w(t) = tf(t) × idf(t)
• The assumption of term independence is often false
• The situation is worse when single-word terms are intermixed with phrasal terms
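The general weighting scheme can be sketched in Python as below. It assumes documents are already tokenized and uses the natural logarithm (the slide does not fix the base, which only rescales the weights). A term that occurs in every document, like "joint" in this hypothetical collection, gets idf = log(N/N) = 0.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Weight every term of every document as w(t) = tf(t) * log(N / n).

    docs: list of token lists
    Returns one {term: weight} dict per document.
    """
    N = len(docs)
    df = Counter(term for doc in docs for term in set(doc))  # n for each term
    weights = []
    for doc in docs:
        tf = Counter(doc)
        weights.append({t: tf[t] * math.log(N / df[t]) for t in tf})
    return weights


docs = [["joint", "venture", "venture"], ["joint", "stock"], ["joint", "pain"]]
w = tf_idf(docs)
print(w[0])  # 'joint' occurs in all 3 documents, so its weight is 0.0
```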

NLP-Based Indexing
• Example: TREC experiments
  – “joint venture” is important in the Wall Street Journal database
  – “joint” and “venture” were dropped from the system's list of terms because their idf was too low
• Identify word groups that form meaningful phrases
• Simple collocations, statistically validated N-grams, part-of-speech-tagged sequences, syntactic structures, semantic concepts
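One way to find statistically validated N-grams is to keep adjacent word pairs that recur and whose pointwise mutual information (PMI) is high. The Python sketch below does this for bigrams; PMI, the thresholds and the sample tokens (assumed to be stopword-free already) are illustrative choices, not necessarily what the TREC system used.

```python
import math
from collections import Counter


def collocations(tokens, min_count=2, min_pmi=1.0):
    """Return adjacent word pairs that recur and co-occur more often than chance.

    PMI(x, y) = log2( P(x, y) / (P(x) * P(y)) ), estimated from raw counts.
    """
    unigrams = Counter(tokens)
    bigrams = Counter(zip(tokens, tokens[1:]))
    n = len(tokens)
    result = {}
    for (x, y), c in bigrams.items():
        if c < min_count:
            continue  # too rare to be trusted
        pmi = math.log2((c / (n - 1)) / ((unigrams[x] / n) * (unigrams[y] / n)))
        if pmi >= min_pmi:
            result[(x, y)] = pmi
    return result


tokens = "joint venture approved joint venture expand joint pain venture capital".split()
print(list(collocations(tokens)))  # [('joint', 'venture')]
```

The recovered pair can then be indexed as a phrasal term even though its single-word components have low idf.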

Obstacles in the application of NLP techniques in IR
• Lack of robustness and efficiency
• The representations produced are complex structures that must be compared effectively to determine relevance
• Solution: use NLP to assist an IR system (Boolean, statistical, probabilistic) in representing documents for search purposes
  – Off-line database indexing

Stream-based IR Model (1)
• Combination of statistical and NLP techniques
• Term extraction steps:
  1. Elimination of stopwords (no-content or low-content words: determiners, prepositions, pronouns, very frequent words)
  2. Morphological stemming (affix-stripping process or morphological analysis)
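Steps 1 and 2 can be sketched in Python as follows. The stopword and suffix lists are hypothetical and deliberately tiny, and the crude suffix stripper is only a stand-in for a real stemmer (e.g. Porter's) or a lexicon-based morphological analyser.

```python
# Hypothetical, deliberately small resources.
STOPWORDS = {"the", "a", "an", "of", "in", "on", "for", "to", "and", "it", "they"}
SUFFIXES = ("ation", "ing", "ed", "es", "s")  # tried longest first


def strip_affix(word, min_stem=3):
    """Crude suffix stripping: remove the first matching suffix, keeping a minimal stem."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem:
            return word[: -len(suffix)]
    return word


def extract_terms(text):
    """Step 1: drop stopwords; step 2: strip affixes from the remaining words."""
    tokens = [t.strip(".,;:!?").lower() for t in text.split()]
    return [strip_affix(t) for t in tokens if t and t not in STOPWORDS]


print(extract_terms("They reported the proliferation of weapons in the region."))
# ['report', 'prolifer', 'weapon', 'region']
```

The truncated stem "prolifer" shows why morphological analysis, which would yield the lemma "proliferate", can give cleaner index terms than blind affix stripping.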

Stream-based IR Model (2)
  4. Phrase extraction: shallow text-processing techniques (POS tagging, phrase boundary detection, word co-occurrence metrics) are used to identify relatively stable groups of words
  5. Phrase normalization: “head+modifier” pairs normalize across syntactic variants and reduce them to a common “concept”, e.g. “weapon proliferation”, “proliferation of weapons” → weapon+proliferate
  6. Proper name extraction: people's names and titles, location names and organization names are used for indexing
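Phrase normalization (step 5) can be illustrated with a toy Python function that maps the two syntactic variants from the example onto the same pair, written in the order used on the slide (weapon+proliferate). The lemma table stands in for morphological analysis, and only the "MOD HEAD" and "HEAD of MOD" patterns are handled; both are assumptions for illustration.

```python
# Hypothetical lemma table; a real system would use morphological analysis.
LEMMAS = {"weapons": "weapon", "proliferation": "proliferate"}


def lemma(word):
    return LEMMAS.get(word.lower(), word.lower())


def head_modifier(phrase):
    """Map a two-noun phrase to a normalized 'modifier+head' string.

    Handles only two patterns: 'MOD HEAD' and 'HEAD of MOD'.
    """
    words = phrase.lower().split()
    if len(words) == 3 and words[1] == "of":
        head, modifier = words[0], words[2]
    elif len(words) == 2:
        modifier, head = words
    else:
        raise ValueError(f"unsupported phrase pattern: {phrase!r}")
    return f"{lemma(modifier)}+{lemma(head)}"


print(head_modifier("weapon proliferation"))      # weapon+proliferate
print(head_modifier("proliferation of weapons"))  # weapon+proliferate
```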

Stream-based IR Model (3)
• Final results: the ranked lists of documents obtained by searching all streams with appropriately preprocessed queries are merged
• Contributions from each stream are weighted using an effective combination of alternative retrieval and routing methods
  – Meta-search strategy that maximizes the contribution of each stream (base search engines: SMART v. 11, PRISE v. 2, etc.)
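The slide does not give the merging formula, so the following Python sketch assumes a simple weighted score fusion: each stream's scores are min-max normalized, multiplied by a per-stream weight and summed per document. The stream names, scores and weights are hypothetical.

```python
from collections import defaultdict


def merge_streams(stream_results, stream_weights):
    """Merge per-stream ranked lists into one list by weighted score fusion.

    stream_results: {stream: [(doc_id, score), ...]}
    stream_weights: {stream: weight}
    Scores are min-max normalized within each stream so that streams with
    different score scales can be combined.
    """
    merged = defaultdict(float)
    for stream, results in stream_results.items():
        if not results:
            continue
        scores = [s for _, s in results]
        lo, hi = min(scores), max(scores)
        for doc_id, s in results:
            norm = (s - lo) / (hi - lo) if hi > lo else 1.0
            merged[doc_id] += stream_weights[stream] * norm
    return sorted(merged.items(), key=lambda item: item[1], reverse=True)


streams = {
    "stems": [("d1", 12.0), ("d2", 8.0), ("d3", 4.0)],
    "phrases": [("d2", 0.9), ("d4", 0.3)],
}
weights = {"stems": 1.0, "phrases": 0.8}
print([doc for doc, _ in merge_streams(streams, weights)])  # ['d2', 'd1', 'd3', 'd4']
```

Here d2 overtakes d1 because it is also supported by the phrase stream; tuning the per-stream weights is one way to fine-tune the combined system.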

Advantages of the Stream Architecture
• Easier to compare the contributions of different indexing features or representations
• Convenient testbed for experimenting with algorithms designed to merge results obtained using different IR engines and/or techniques
• Easier to fine-tune the system to obtain optimum performance
• Allows IR engines to be used without having to adapt them

Part-Of-Speech Tagging (1)
• Allows resolution of lexical ambiguities in running text, assuming a known general type of text and the context in which a word is used
  – Result: more accurate lexical normalization and phrase boundary detection
• Assigns POS label(s) to each word in a text depending on the labels assigned to the preceding words

POS Tagging (2)
• Best-tag-only option: only the top-ranked tag for each word is output
  – Gain in speed and robustness of subsequent processes (e.g. parsing)
• Brill's rule-based tagger, trained on Wall Street Journal texts, is used to preprocess the linguistic streams used by SMART
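A Brill-style tagger first gives each word its most frequent tag and then applies contextual rules that patch those tags, outputting only the best tag per word. The Python sketch below hard-codes a hypothetical lexicon and two hypothetical rules (Penn Treebank-style tag names) instead of learning them from a tagged corpus such as the Wall Street Journal texts.

```python
# Hypothetical lexicon: most frequent tag for each word.
LEXICON = {"to": "TO", "the": "DT", "they": "PRP", "plan": "NN", "venture": "NN", "a": "DT"}

# Hypothetical contextual rules: (from_tag, to_tag, required previous tag).
RULES = [("NN", "VB", "TO"), ("NN", "VB", "PRP")]


def tag(tokens):
    """Brill-style sketch: initial most-frequent tag, then rule-based patches."""
    tags = [LEXICON.get(t.lower(), "NN") for t in tokens]  # unknown words default to NN
    for from_tag, to_tag, prev_tag in RULES:  # rules applied in order
        for i in range(1, len(tags)):
            if tags[i] == from_tag and tags[i - 1] == prev_tag:
                tags[i] = to_tag
    return list(zip(tokens, tags))  # best tag only, one per word


print(tag("they plan to venture".split()))
# [('they', 'PRP'), ('plan', 'VB'), ('to', 'TO'), ('venture', 'VB')]
```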

Syntactic Tagging (1)
• Capturing semantic dependencies is critical for accurate text indexing
• Need to exploit the syntactic structures produced by a fairly comprehensive parser
• TREC experiment: TTP (Tagged Text Parser), based on the Linguistic String Grammar
• Full-grammar parser with a built-in timer regulating the amount of time allowed for parsing a sentence

Syntactic Tagging (2)
• If no parse is returned before the allotted time elapses, the parser enters “skip-and-fit” mode
• Result: an approximate parse
• Fragments skipped in the first pass are:
  – analyzed by a simple phrasal parser looking for noun phrases and relative clauses
  – attached to the main parse structure

Corpus-based disambiguation of long Noun Phrases (1)
• Relationships between words in complex phrases are required to decompose longer phrases into meaningful head+modifier pairs
• The pair extractor looks at the distribution statistics of compound terms
  – to decide whether the association between any two words in a noun phrase is syntactically valid and semantically significant

Corpus-based disambiguation (2)
• Phrasal terms are extracted in two phases:
  1. Only unambiguous head+modifier pairs are generated
  2. Distributional statistics gathered in the first phase are used to predict the strength of alternative modifier-modified links within ambiguous phrases
• Example: multiple unambiguous occurrences of “insider trading”, a few of “trading case”, and numerous phrases such as “insider trading case” and “insider trading legislation”
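The two phases can be sketched in Python as follows. Phase 1 counts pairs from unambiguous two-word phrases; phase 2 brackets a three-word phrase by comparing the support for "w1 modifies w2" with "w1 modifies w3". This adjacency-style comparison, the tie-breaking towards [[w1 w2] w3] and the toy corpus are assumptions; the slide does not specify the exact scoring.

```python
from collections import Counter


def phase_one(phrases):
    """Phase 1: count (modifier, head) pairs from unambiguous two-word phrases."""
    pairs = Counter()
    for phrase in phrases:
        words = phrase.split()
        if len(words) == 2:
            pairs[tuple(words)] += 1
    return pairs


def phase_two(phrase, pairs):
    """Phase 2: bracket a three-word noun phrase using phase-1 statistics.

    w2 modifies the head w3 in both readings; the question is whether
    w1 attaches to w2 or directly to w3.
    """
    w1, w2, w3 = phrase.split()
    if pairs[(w1, w2)] >= pairs[(w1, w3)]:
        return [(w1, w2), (w2, w3)]  # [[w1 w2] w3]
    return [(w1, w3), (w2, w3)]      # [w1 [w2 w3]]


corpus = ["insider trading"] * 5 + ["trading case"] * 2 + ["insider trading case"]
stats = phase_one(corpus)
print(phase_two("insider trading case", stats))
# [('insider', 'trading'), ('trading', 'case')]
```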

Language Resources
• Machine-Readable Dictionaries (MRDs): mixed results in experiments
• Knowledge bases
  – CYC: huge knowledge base of common-sense knowledge; its contribution to IR is untested
  – WordNet: models the lexical knowledge of a native speaker of English

Usage of WordNet in Information Retrieval Tasks (1)
• WordNet is organized around logical groupings of related terms (synsets)
• Synset: a list of synonymous word forms, plus semantic pointers describing the relationships between the current synset and other synsets
• Knowledge base: the nouns in WordNet
• Nouns are the most content-bearing of all word classes and occur in every sentence

Usage of WordNet (2)
• WordNet is partitioned into Hierarchical Concept Graphs (HCGs) based on the IS-A links between synsets
• The information content of each synset is approximated by estimating the probability of occurrence of all nouns in all its subordinate synsets
• Semantic similarity between two nouns (i.e. between the synsets from which the nouns are drawn): the information content of the first synset that subsumes both synsets
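The information-content similarity described above (the same idea as Resnik's measure) can be sketched in Python over a tiny hypothetical IS-A tree with invented noun counts. Walking up from the first noun and taking the first synset that also subsumes the second gives the most specific common subsumer, assuming a tree; WordNet's multiple inheritance would require taking the maximum information content over all common subsumers.

```python
import math

# Hypothetical IS-A hierarchy: child synset -> parent synset.
PARENT = {"car": "vehicle", "bicycle": "vehicle", "dog": "animal", "cat": "animal",
          "vehicle": "entity", "animal": "entity"}
# Hypothetical corpus frequencies of nouns belonging to each synset.
COUNTS = {"car": 40, "bicycle": 10, "dog": 30, "cat": 20,
          "vehicle": 0, "animal": 0, "entity": 0}


def ancestors(synset):
    """The synset itself plus every synset that subsumes it, most specific first."""
    chain = [synset]
    while chain[-1] in PARENT:
        chain.append(PARENT[chain[-1]])
    return chain


def information_content(synset):
    """IC(c) = -log p(c) = log(total / freq), where freq covers c and all its subordinates."""
    total = sum(COUNTS.values())
    freq = sum(n for s, n in COUNTS.items() if synset in ancestors(s))
    return math.log(total / freq)


def similarity(s1, s2):
    """Information content of the first (most specific) synset subsuming both."""
    common = [s for s in ancestors(s1) if s in ancestors(s2)]
    return information_content(common[0])


print(round(similarity("car", "bicycle"), 3))  # 0.693, subsumer 'vehicle': log(100/50)
print(round(similarity("car", "dog"), 3))      # 0.0, subsumer 'entity': log(100/100)
```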

Usage of WordNet (3)
• A simple word sense disambiguation process for documents chooses the single most likely sense of each noun occurrence
• Experiments: the top 1000 documents were prefetched from the collection using term weighting (a conventional IR technique), and an exhaustive word-distance-based measure was then applied to these documents

Usage of WordNet (4)
• Retrieval effectiveness using word distances (in terms of precision and recall) was poor compared to the tf × idf term-weighting strategy
• Possible sources of error: syntactic tagging of documents, word sense disambiguation, semantic matching between words

Other Roles for NLP (1)
• Routing (filtering): the amount of training data is the dominant factor in performance
• Text categorization (automatic assignment to predefined headings): using complex terms had no additional beneficial effect
• Real contribution to selective, content-based information management?

Other Roles for NLP (2)
• Displaying information about whole documents: selected phrases are more informative than highlighted matching terms or lists of key individual words
  – Information extraction and summarizing
• Real role of NLP: supporting more demanding information-management functions within a larger, multi-functional whole