Natural Language Inference in Natural Language Terms

Ido Dagan, Bar-Ilan University, Israel

BIU NLP lab - Acknowledgments Chaya Liebeskind

Let’s look at state-of-the-art common practice…

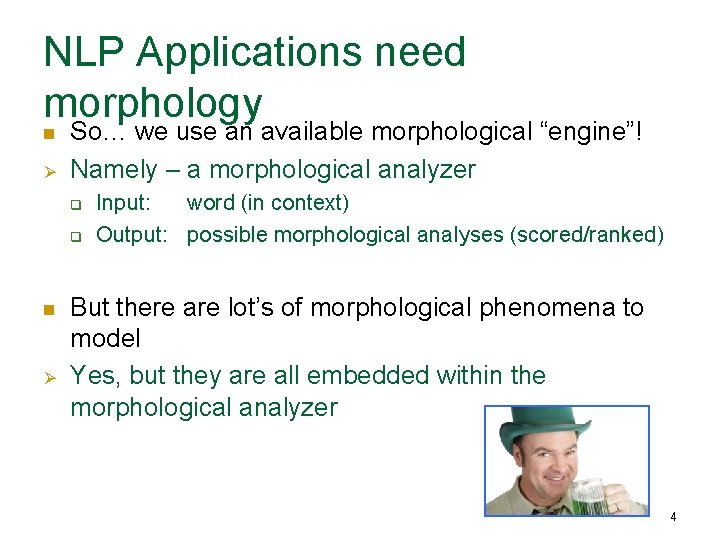

NLP Applications need morphology

- So… we use an available morphological “engine”!
- Namely, a morphological analyzer
  - Input: word (in context)
  - Output: possible morphological analyses (scored/ranked)
- But there are lots of morphological phenomena to model
- Yes, but they are all embedded within the morphological analyzer

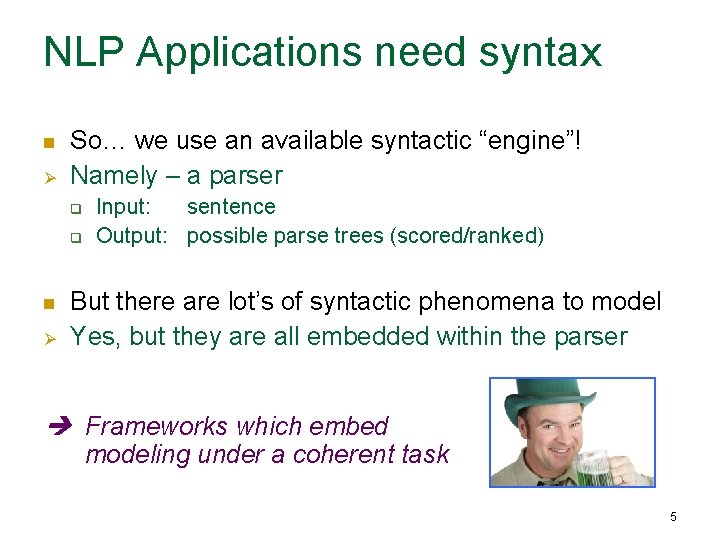

NLP Applications need syntax

- So… we use an available syntactic “engine”!
- Namely, a parser
  - Input: sentence
  - Output: possible parse trees (scored/ranked)
- But there are lots of syntactic phenomena to model
- Yes, but they are all embedded within the parser

Frameworks which embed modeling under a coherent task

NLP Applications need semantics

- So… what do we do?
  - Use NER, WordNet, SRL, WSD, statistical similarities, syntactic matching, negation detection, etc.
  - Assemble and implement bits and pieces
- Scattered & redundant application-dependent research
- Focus & critical mass lacking, s l o w progress
- Can we have a generic semantic inference framework?
  - Semantic interpretation into logic? But hardly adopted (why?), so I put it aside till later…

What is inference?

From dictionary.com:

- inferring: to derive by reasoning; conclude or judge from premises or evidence.
- reasoning: the process of forming conclusions, judgments, or inferences from facts or premises.

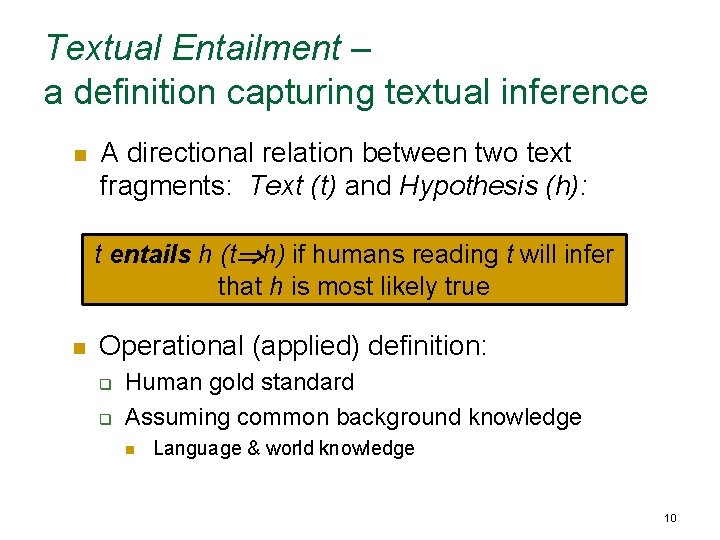

Textual Inference

- Inferring new textual expressions from given ones
- Captures two types of inference:
  1. Inferences about the “extra-linguistic” world
     - it rained yesterday => it was wet yesterday
  2. Inferences about language variability
     - I bought a watch => I purchased a watch
- No definite boundary between the two

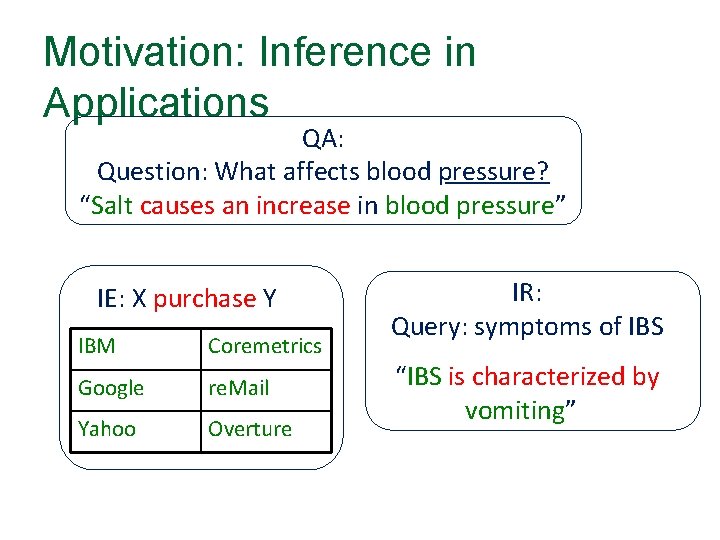

Textual Entailment: a definition capturing textual inference

- A directional relation between two text fragments, Text (t) and Hypothesis (h):
  - t entails h (t ⇒ h) if humans reading t will infer that h is most likely true
- Operational (applied) definition:
  - Human gold standard
  - Assuming common background knowledge
    - Language & world knowledge

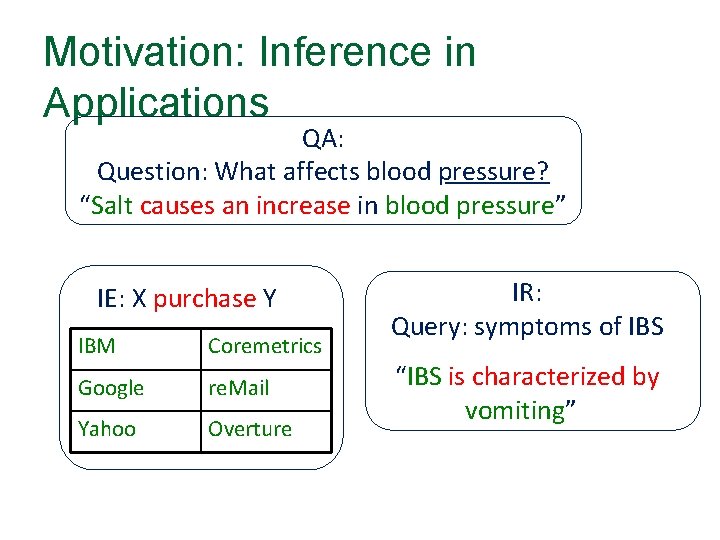

Motivation: Inference in Applications

- QA: Question: What affects blood pressure?
  - “Salt causes an increase in blood pressure”
- IE: X purchase Y
  - IBM, Coremetrics
  - Google, reMail
  - Yahoo, Overture
- IR: Query: symptoms of IBS
  - “IBS is characterized by vomiting”

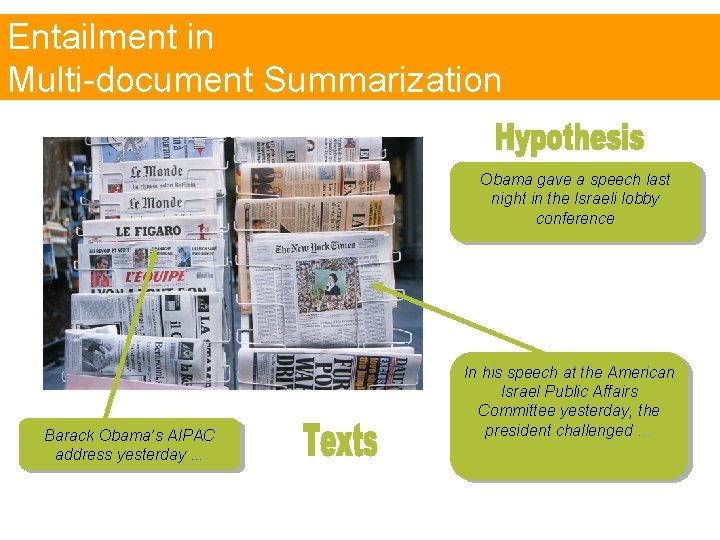

Entailment in Multi-document Summarization

- Obama gave a speech last night in the Israeli lobby conference
- Barack Obama’s AIPAC address yesterday ...
- In his speech at the American Israel Public Affairs Committee yesterday, the president challenged …

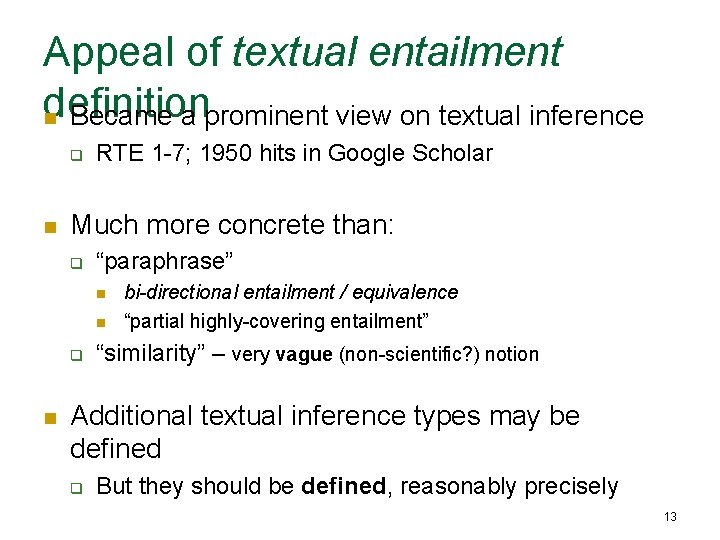

Appeal of textual entailment definition

- Became a prominent view on textual inference
  - RTE 1-7; 1950 hits in Google Scholar
- Much more concrete than:
  - “paraphrase”
    - bi-directional entailment / equivalence
    - “partial highly-covering entailment”
  - “similarity”: very vague (non-scientific?) notion
- Additional textual inference types may be defined
  - But they should be defined, reasonably precisely

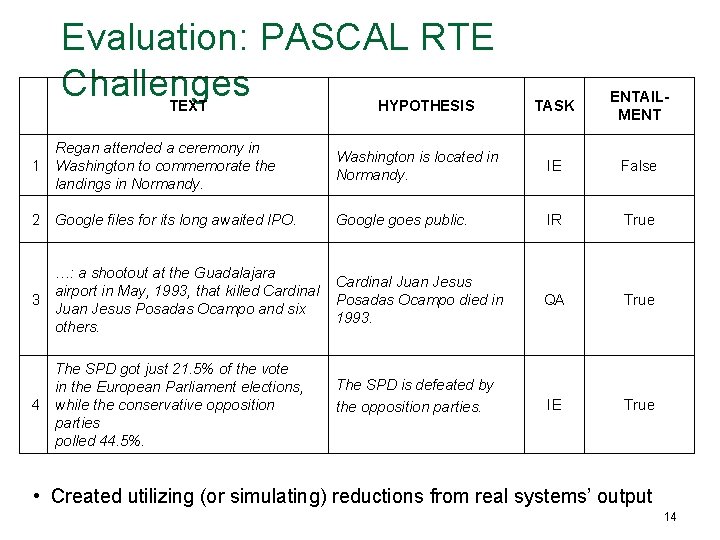

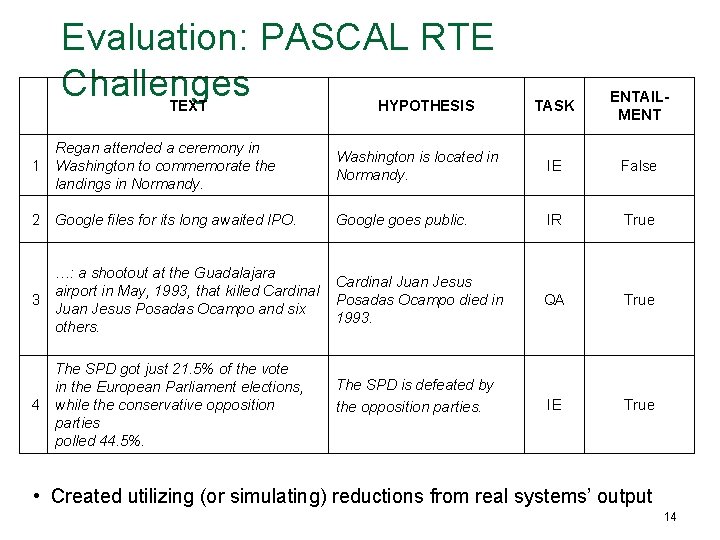

Evaluation: PASCAL RTE Challenges

| # | TEXT | HYPOTHESIS | TASK | ENTAILMENT |
|---|---|---|---|---|
| 1 | Regan attended a ceremony in Washington to commemorate the landings in Normandy. | Washington is located in Normandy. | IE | False |
| 2 | Google files for its long awaited IPO. | Google goes public. | IR | True |
| 3 | …: a shootout at the Guadalajara airport in May, 1993, that killed Cardinal Juan Jesus Posadas Ocampo and six others. | Cardinal Juan Jesus Posadas Ocampo died in 1993. | QA | True |
| 4 | The SPD got just 21.5% of the vote in the European Parliament elections, while the conservative opposition parties polled 44.5%. | The SPD is defeated by the opposition parties. | IE | True |

- Created utilizing (or simulating) reductions from real systems’ output

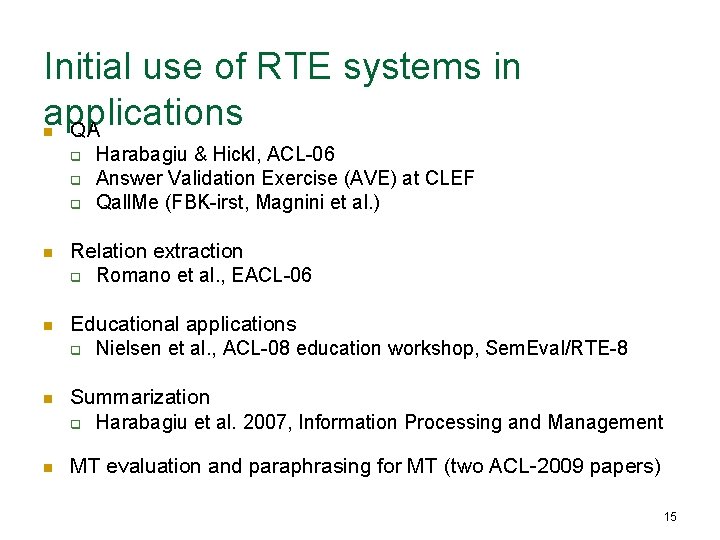

Initial use of RTE systems in applications

- QA
  - Harabagiu & Hickl, ACL-06
  - Answer Validation Exercise (AVE) at CLEF
  - QallMe (FBK-irst, Magnini et al.)
- Relation extraction
  - Romano et al., EACL-06
- Educational applications
  - Nielsen et al., ACL-08 education workshop, SemEval/RTE-8
- Summarization
  - Harabagiu et al. 2007, Information Processing and Management
- MT evaluation and paraphrasing for MT (two ACL-2009 papers)

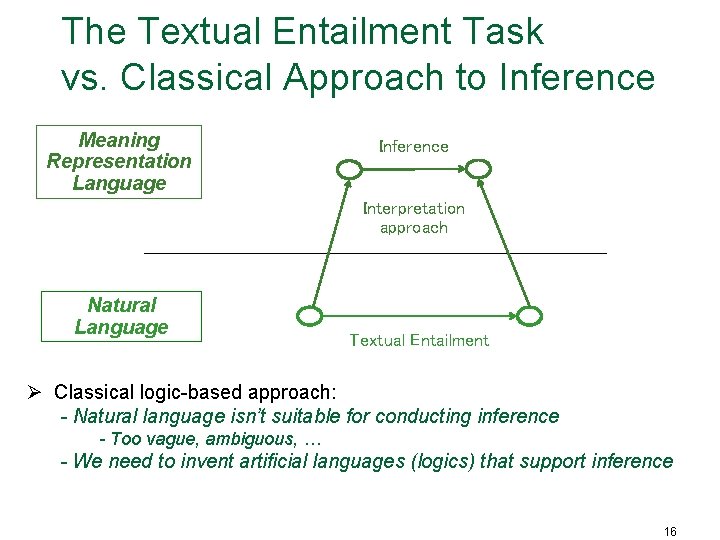

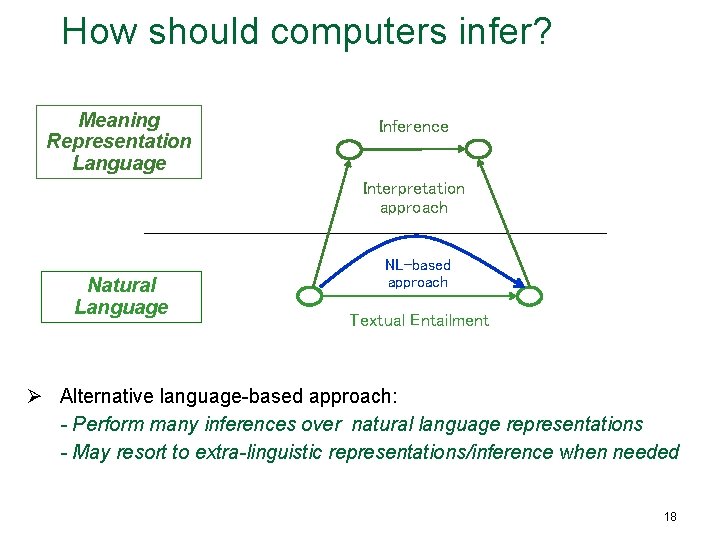

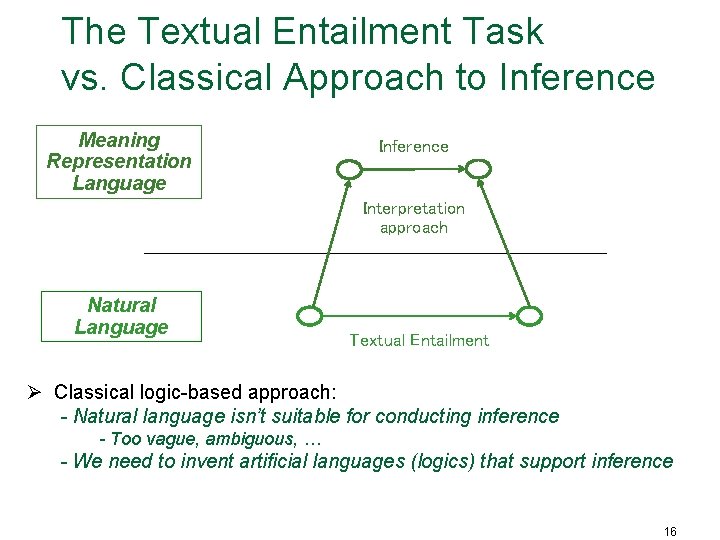

The Textual Entailment Task vs. Classical Approach to Inference

[Diagram labels: Meaning Representation Language, Inference, Interpretation approach, Natural Language, Textual Entailment]

- Classical logic-based approach:
  - Natural language isn’t suitable for conducting inference
  - Too vague, ambiguous, …
  - We need to invent artificial languages (logics) that support inference

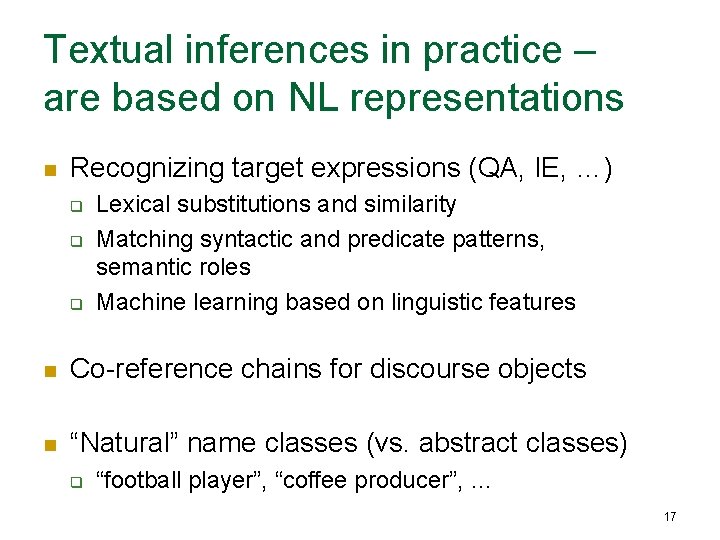

Textual inferences in practice are based on NL representations

- Recognizing target expressions (QA, IE, …)
  - Lexical substitutions and similarity
  - Matching syntactic and predicate patterns, semantic roles
  - Machine learning based on linguistic features
- Co-reference chains for discourse objects
- “Natural” name classes (vs. abstract classes)
  - “football player”, “coffee producer”, …

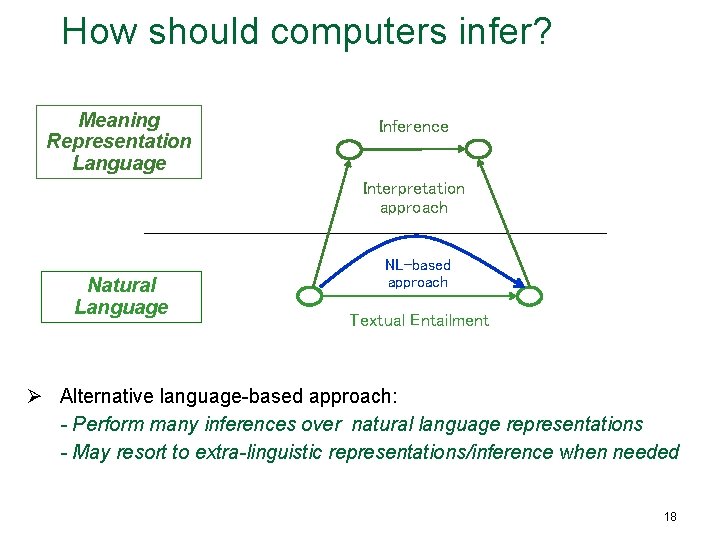

How should computers infer?

[Diagram labels: Meaning Representation Language, Inference, Interpretation approach, Natural Language, NL-based approach, Textual Entailment]

- Alternative language-based approach:
  - Perform many inferences over natural language representations
  - May resort to extra-linguistic representations/inference when needed

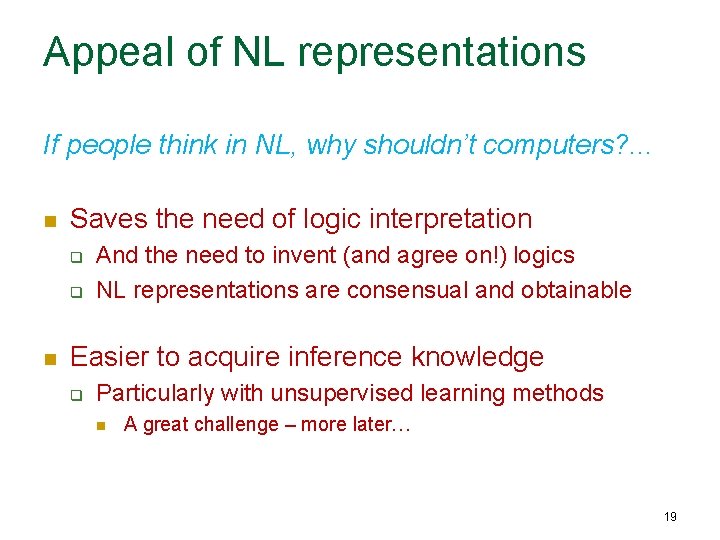

Appeal of NL representations

If people think in NL, why shouldn’t computers? ...

- Saves the need of logic interpretation
  - And the need to invent (and agree on!) logics
  - NL representations are consensual and obtainable
- Easier to acquire inference knowledge
  - Particularly with unsupervised learning methods
- A great challenge, more later…

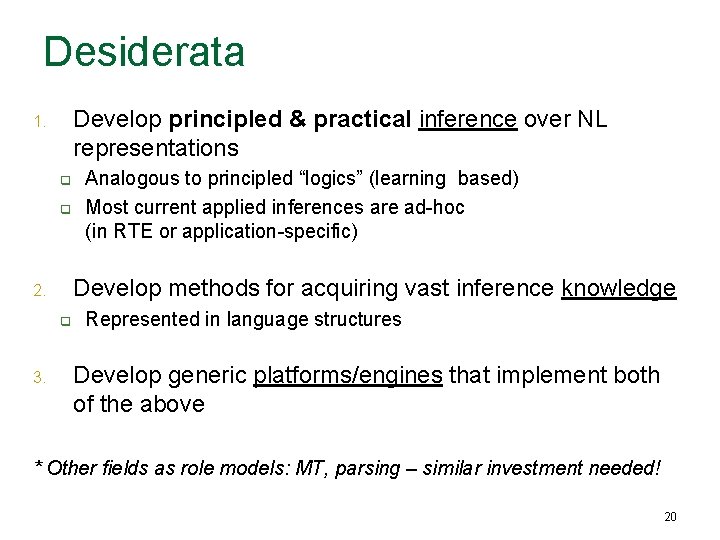

Desiderata

1. Develop principled & practical inference over NL representations
   - Analogous to principled “logics” (learning based)
   - Most current applied inferences are ad-hoc (in RTE or application-specific)
2. Develop methods for acquiring vast inference knowledge
   - Represented in language structures
3. Develop generic platforms/engines that implement both of the above

\* Other fields as role models: MT, parsing. Similar investment needed!

Principled Learning-based Inference Mechanisms - over language structures

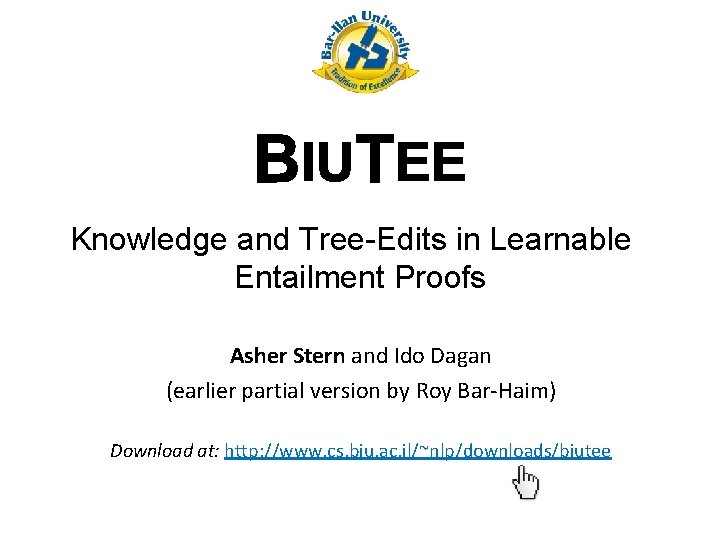

Knowledge and Tree-Edits in Learnable Entailment Proofs

Asher Stern and Ido Dagan (earlier partial version by Roy Bar-Haim)

Download at: http://www.cs.biu.ac.il/~nlp/downloads/biutee

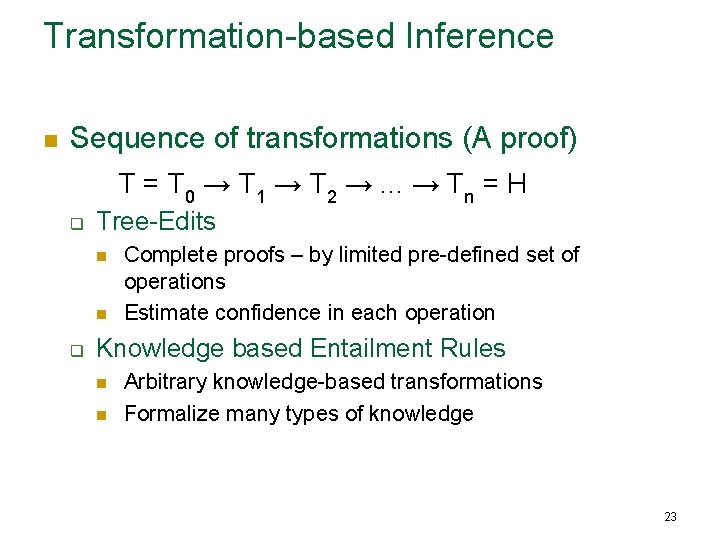

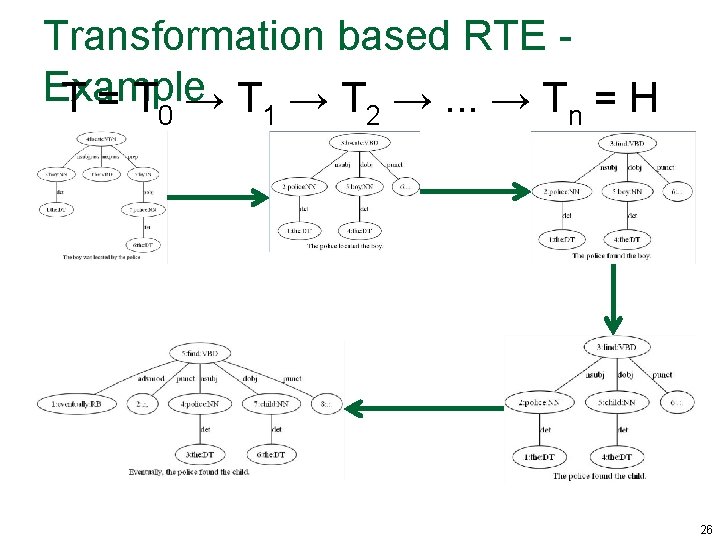

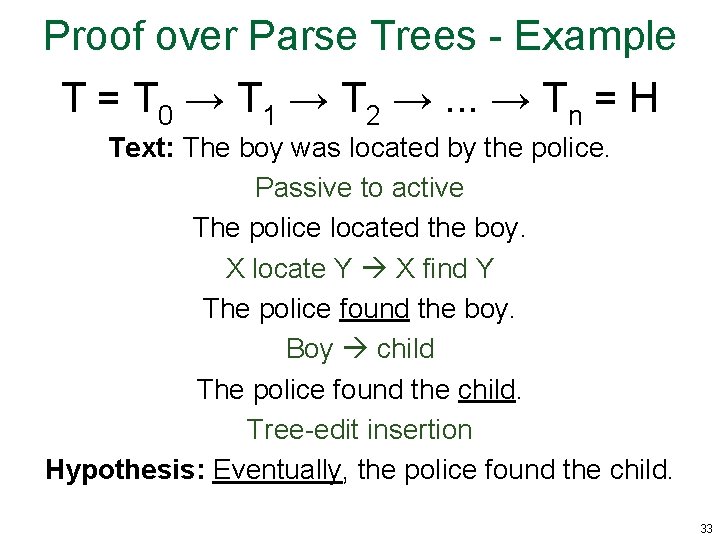

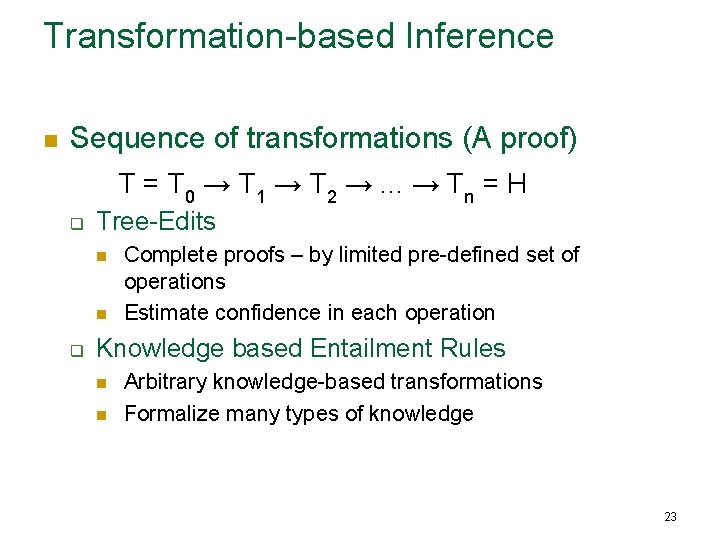

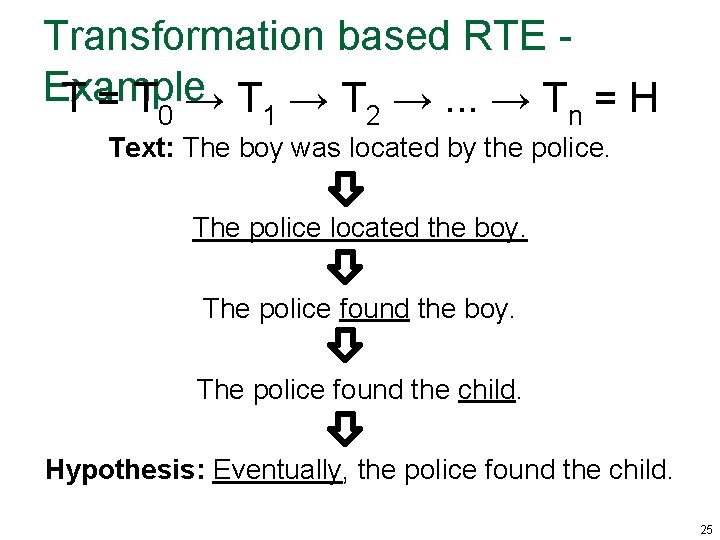

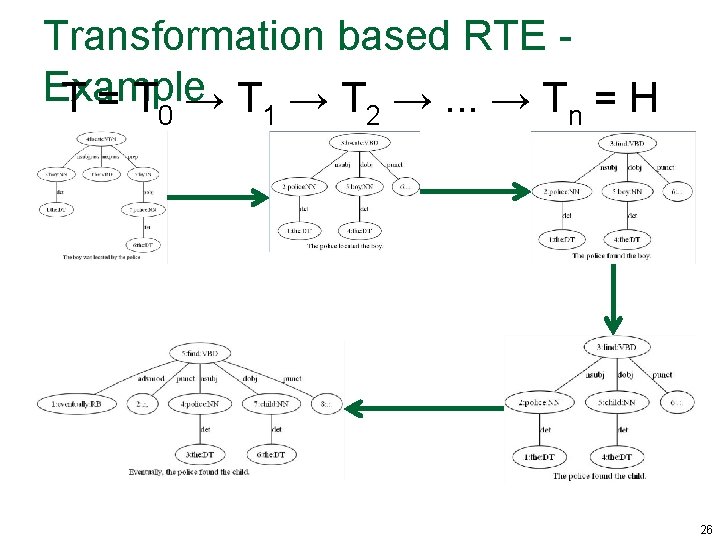

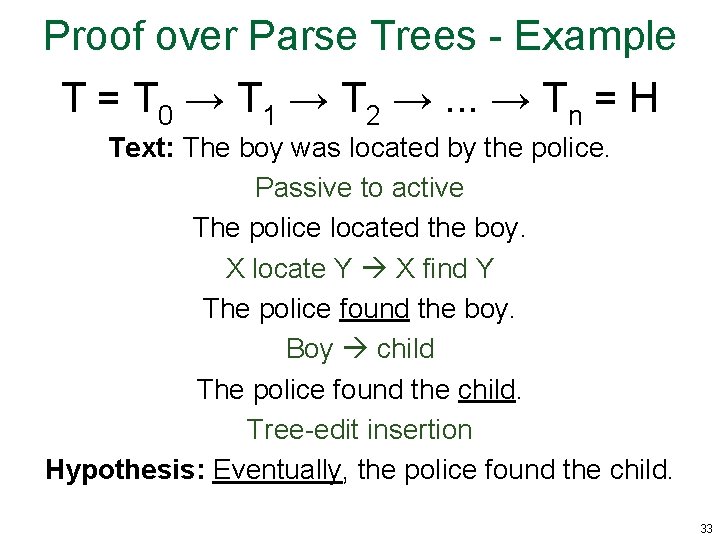

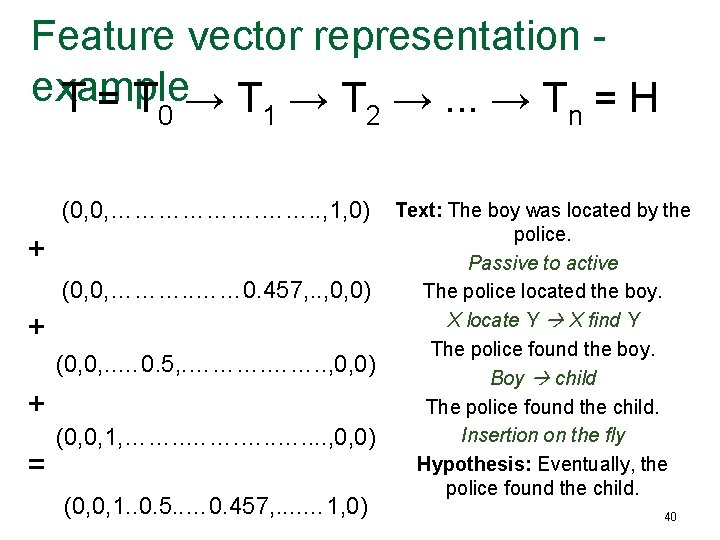

Transformation-based Inference

- Sequence of transformations (a proof): T = T0 → T1 → T2 → ... → Tn = H
  - Tree-Edits
    - Complete proofs, by a limited pre-defined set of operations
    - Estimate confidence in each operation
  - Knowledge-based Entailment Rules
    - Arbitrary knowledge-based transformations
    - Formalize many types of knowledge

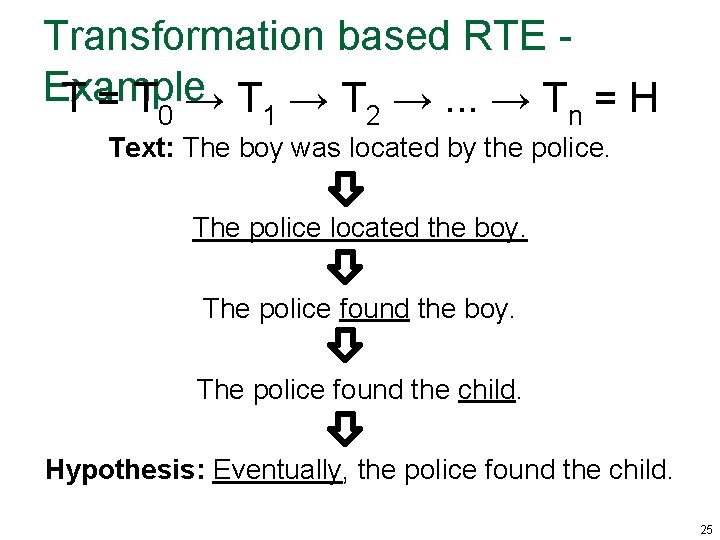

Transformation based RTE - Example

T = T0 → T1 → T2 → ... → Tn = H

- Text: The boy was located by the police.
- The police located the boy.
- The police found the child.
- Hypothesis: Eventually, the police found the child.

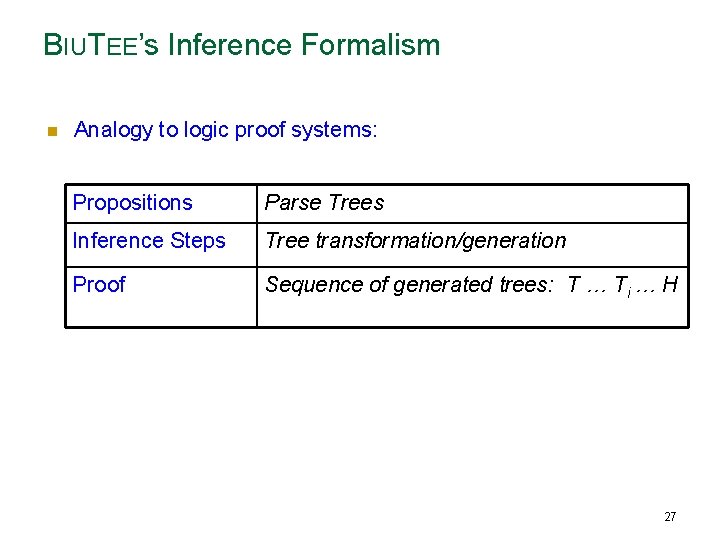

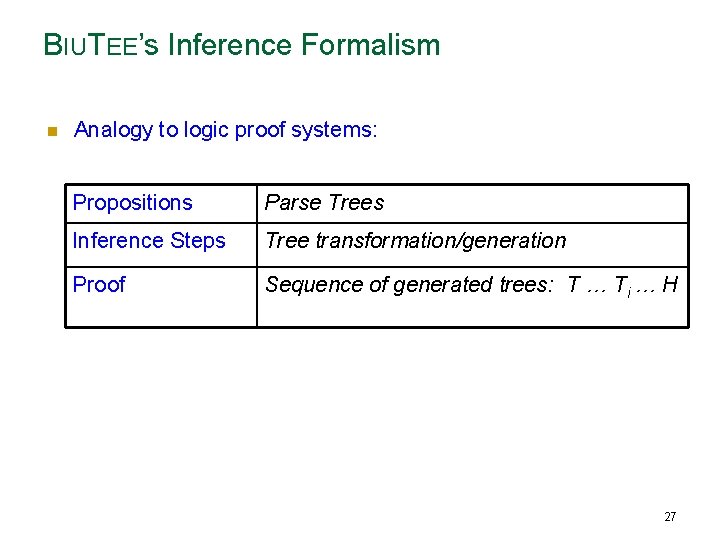

BIUTEE’s Inference Formalism

- Analogy to logic proof systems:

| Logic proof systems | BIUTEE |
|---|---|
| Propositions | Parse trees |
| Inference steps | Tree transformation/generation |
| Proof | Sequence of generated trees: T … Ti … H |

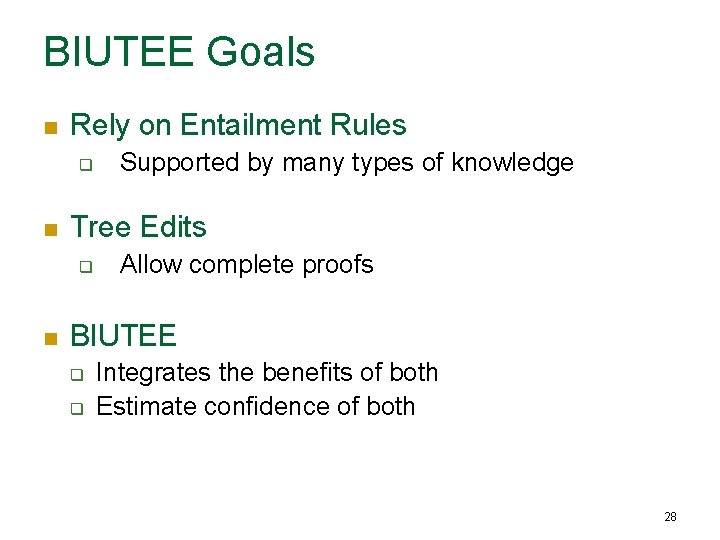

BIUTEE Goals

- Rely on Entailment Rules
  - Supported by many types of knowledge
- Tree Edits
  - Allow complete proofs
- BIUTEE
  - Integrates the benefits of both
  - Estimate confidence of both

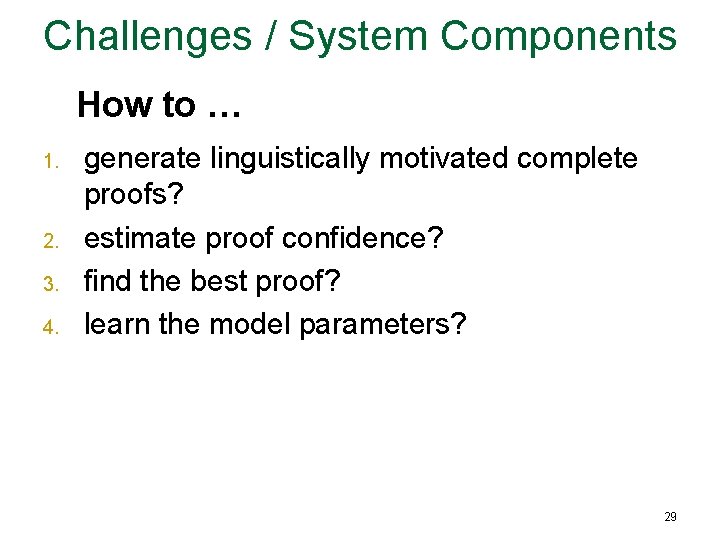

Challenges / System Components

How to …

1. generate linguistically motivated complete proofs?
2. estimate proof confidence?
3. find the best proof?
4. learn the model parameters?

1. Generate linguistically motivated complete proofs

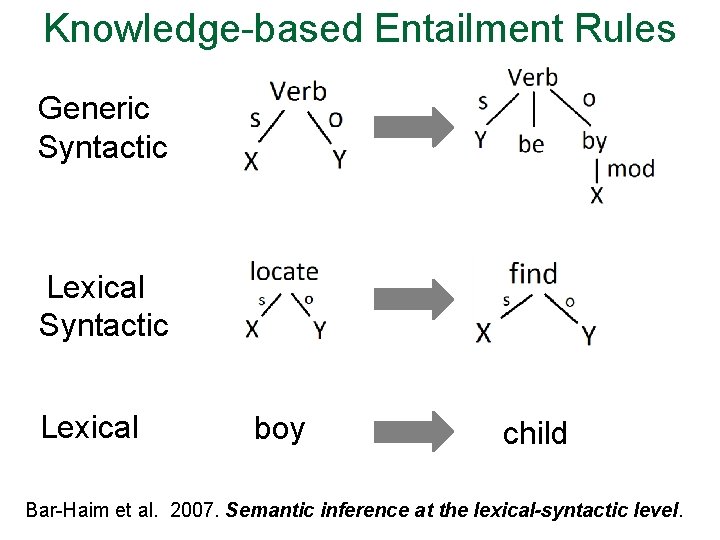

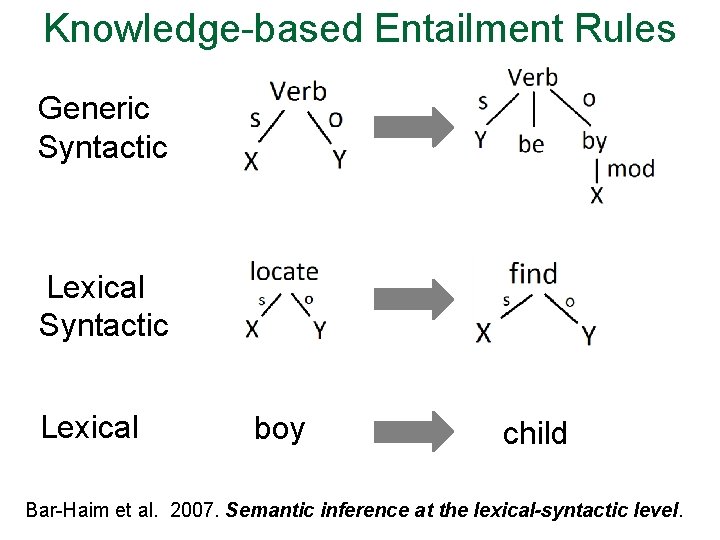

Knowledge-based Entailment Rules

- Rule types shown: Generic Syntactic; Lexical (boy → child)
- Bar-Haim et al. 2007. Semantic inference at the lexical-syntactic level.

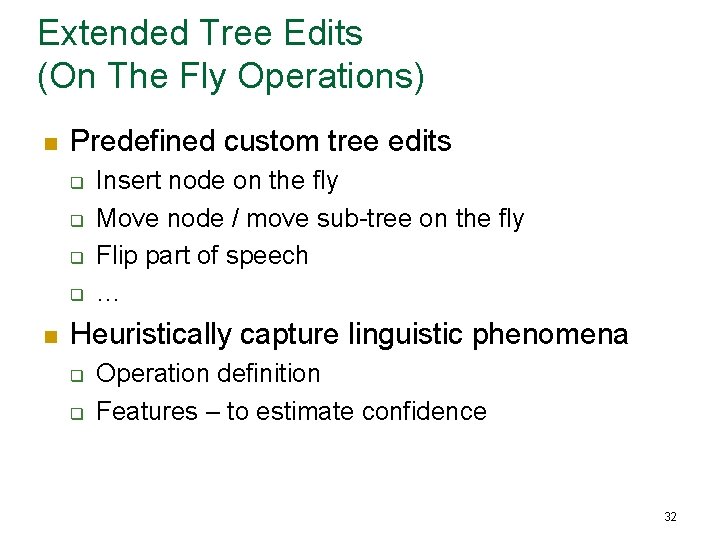

Extended Tree Edits (On The Fly Operations)

- Predefined custom tree edits
  - Insert node on the fly
  - Move node / move sub-tree on the fly
  - Flip part of speech
  - …
- Heuristically capture linguistic phenomena
  - Operation definition
  - Features, to estimate confidence

Proof over Parse Trees - Example

T = T0 → T1 → T2 → ... → Tn = H

1. Text: The boy was located by the police.
2. Passive to active: The police located the boy.
3. X locate Y → X find Y: The police found the boy.
4. boy → child: The police found the child.
5. Tree-edit insertion: Eventually, the police found the child. (Hypothesis)

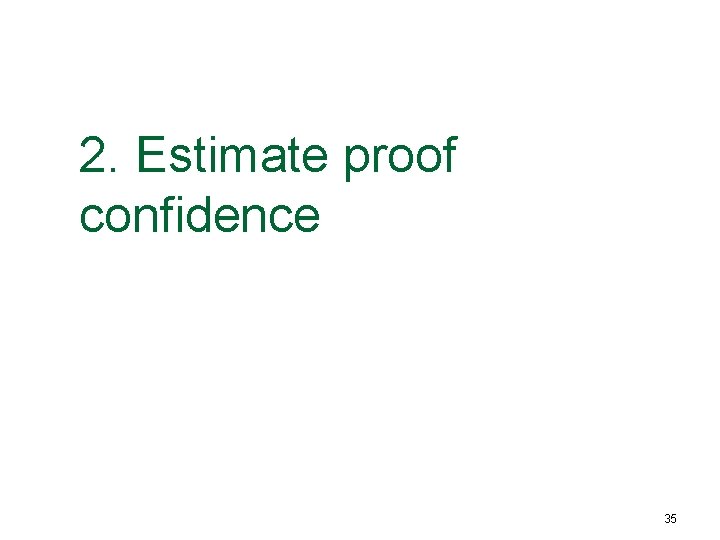

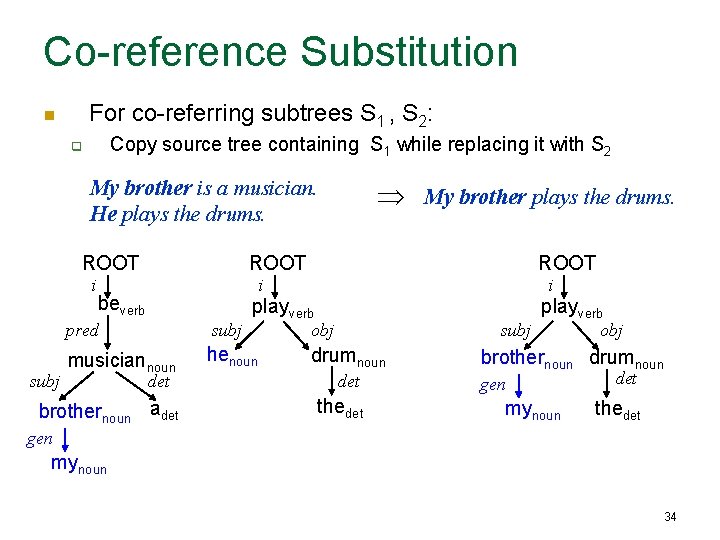

Co-reference Substitution

- For co-referring subtrees S1, S2: copy the source tree containing S1 while replacing it with S2
  - My brother is a musician. He plays the drums. ⇒ My brother plays the drums.

[Figure: dependency parse trees of the two source sentences and of the generated sentence]

2. Estimate proof confidence

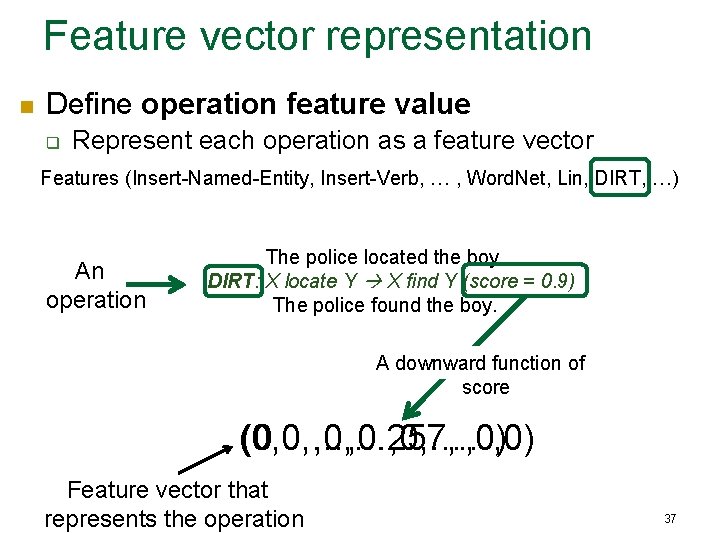

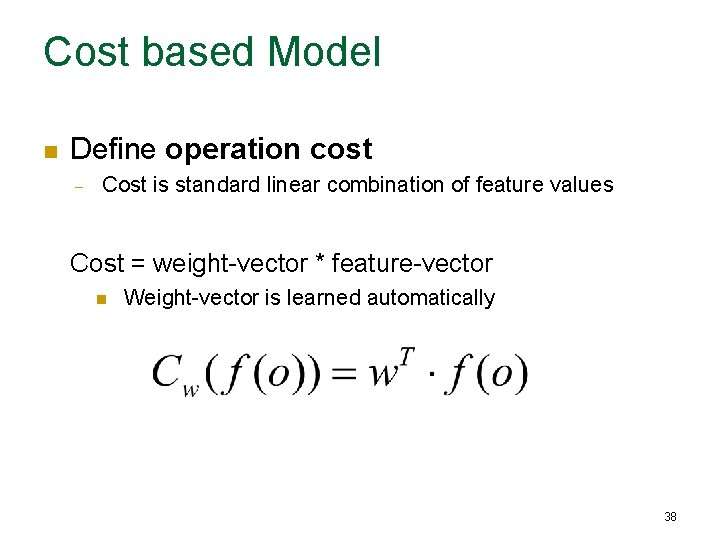

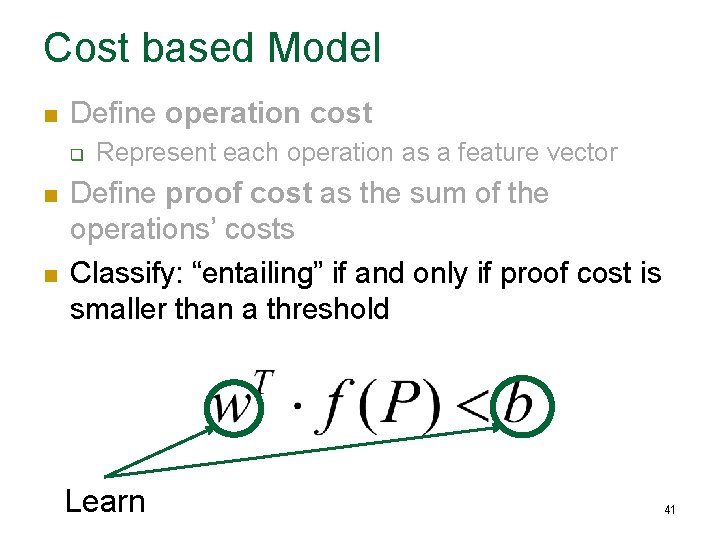

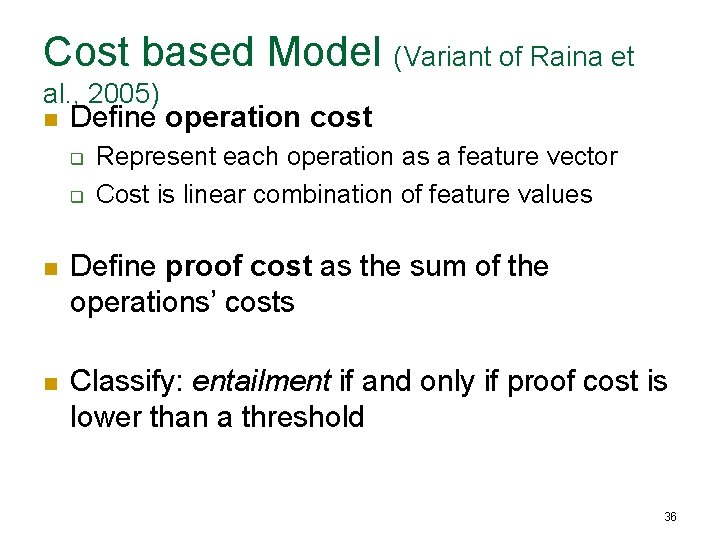

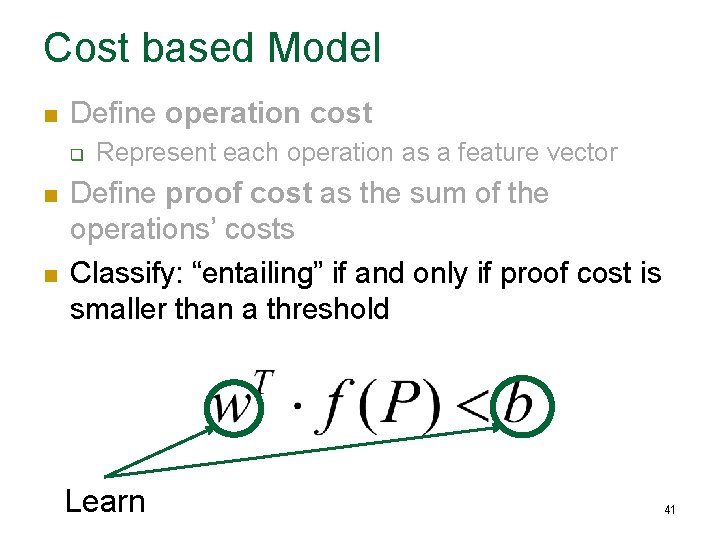

Cost based Model (Variant of Raina et al., 2005)

- Define operation cost
  - Represent each operation as a feature vector
  - Cost is a linear combination of feature values
- Define proof cost as the sum of the operations’ costs
- Classify: entailment if and only if proof cost is lower than a threshold

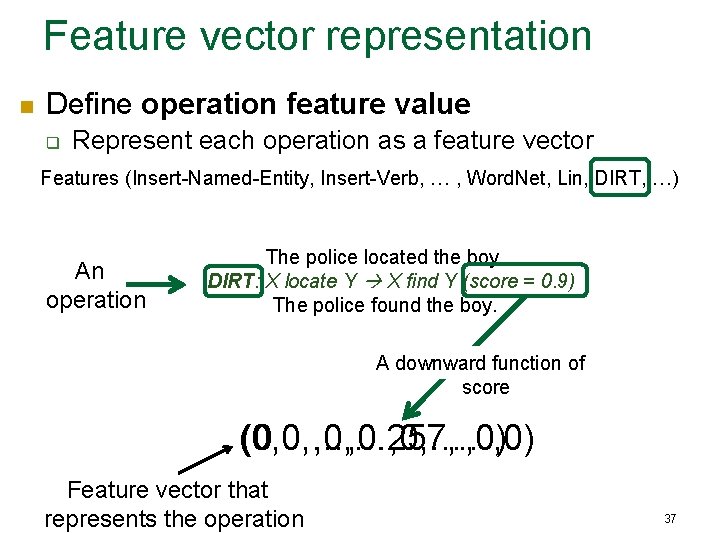

Feature vector representation

- Define operation feature value
  - Represent each operation as a feature vector
- Features: (Insert-Named-Entity, Insert-Verb, …, WordNet, Lin, DIRT, …)
- An operation: The police located the boy. → The police found the boy.
  - DIRT: X locate Y → X find Y (score = 0.9)
- Feature vector that represents the operation: (0, 0, …, 0.257, …, 0)
  - The non-zero value is a downward function of the score

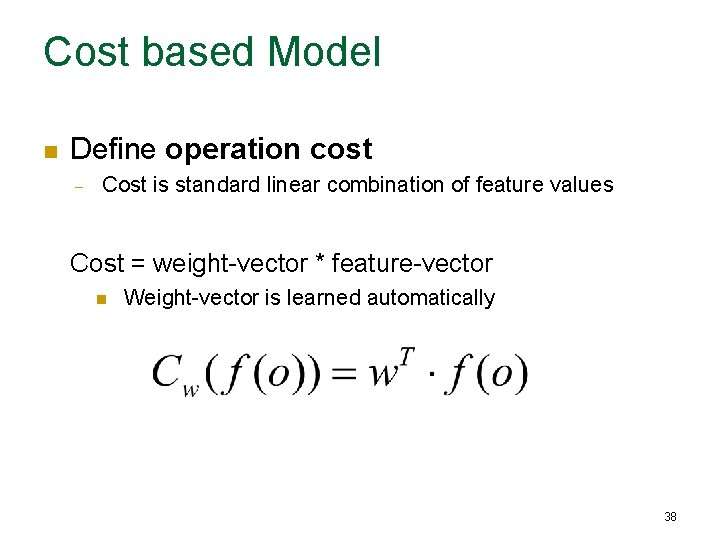

Cost based Model

- Define operation cost: a standard linear combination of feature values
  - Cost = weight-vector · feature-vector
- Weight-vector is learned automatically
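The cost model above can be sketched in a few lines. This is only an illustration under stated assumptions: the three feature names, the weights and the threshold are made up here, whereas in BIUTEE the feature list is much longer and the weights are learned.

```python
# Hypothetical feature order and weights, for illustration only.
FEATURES = ["insert_verb", "wordnet", "dirt"]
WEIGHTS = [2.0, 0.5, 1.0]


def operation_cost(weights, feature_vector):
    """Cost of one operation: dot product of weight and feature vectors."""
    return sum(w * f for w, f in zip(weights, feature_vector))


def proof_cost(weights, operations):
    """Cost of a proof: sum of the costs of its operations."""
    return sum(operation_cost(weights, op) for op in operations)


def entails(weights, operations, threshold):
    """Classify as entailment iff the proof cost is below the threshold."""
    return proof_cost(weights, operations) < threshold


# A toy proof: one DIRT rule application (feature value 0.257)
# followed by one verb insertion.
proof = [[0.0, 0.0, 0.257], [1.0, 0.0, 0.0]]
total = proof_cost(WEIGHTS, proof)
print(total, entails(WEIGHTS, proof, threshold=3.0))
```

Keeping the cost linear is what lets a proof be summarised by a single feature vector later on.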

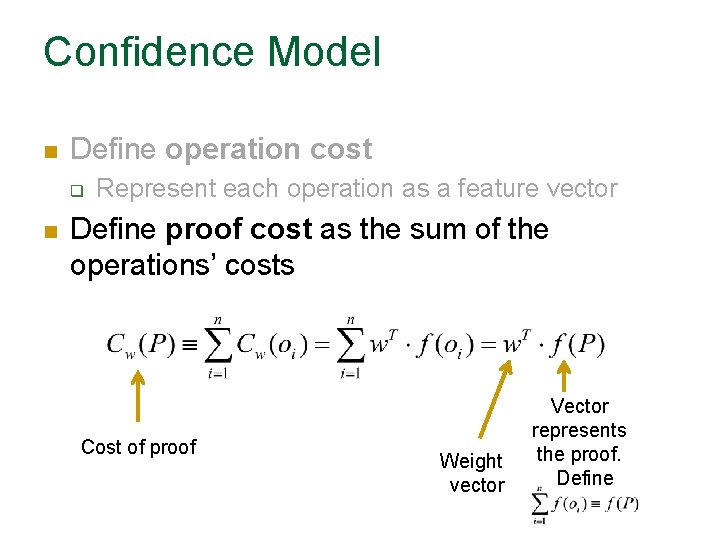

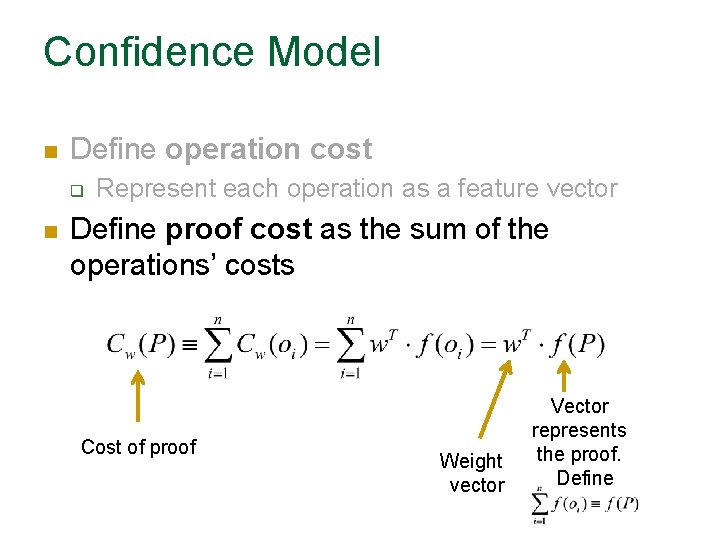

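Confidence Model

- Define operation cost
  - Represent each operation as a feature vector
- Define proof cost as the sum of the operations’ costs
  - Cost of proof = weight vector · vector that represents the proof: $C_w(P) = \sum_{i=1}^{n} w \cdot f(o_i) = w \cdot f(P)$
  - Define the vector that represents the proof as the sum of its operations’ vectors: $f(P) = \sum_{i=1}^{n} f(o_i)$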

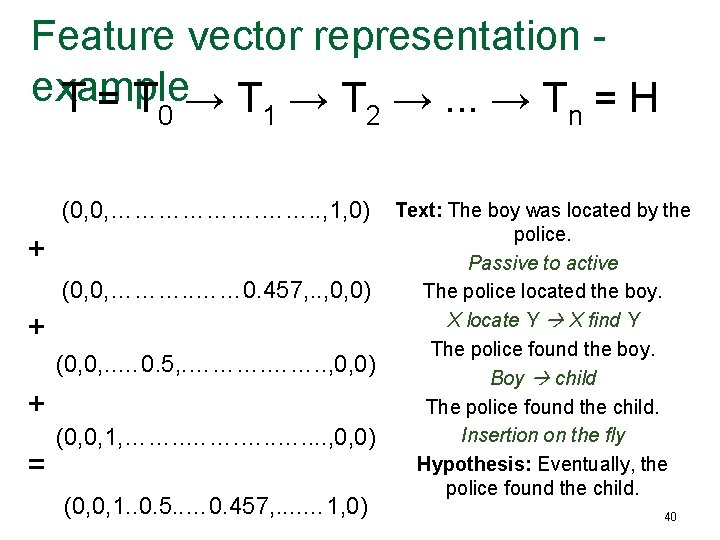

Feature vector representation - example

T = T0 → T1 → T2 → ... → Tn = H

| Step | Result | Feature vector |
|---|---|---|
| Text | The boy was located by the police. | |
| Passive to active | The police located the boy. | (0, 0, …, 1, 0) |
| X locate Y → X find Y | The police found the boy. | (0, 0, …, 0.457, …, 0, 0) |
| boy → child | The police found the child. | (0, 0, …, 0.5, …, 0, 0) |
| Insertion on the fly | Hypothesis: Eventually, the police found the child. | (0, 0, 1, …, 0, 0) |
| Sum | | (0, 0, 1, …, 0.5, …, 0.457, …, 1, 0) |
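The summation in this example can be checked with a short sketch. It assumes a hypothetical five-feature space and made-up weights; only the non-zero values (1, 0.457, 0.5, 1) come from the slide. It also shows why summing is safe: with a linear cost, the cost of the summed vector equals the sum of the per-operation costs.

```python
def add_vectors(vectors):
    """Element-wise sum of equally long feature vectors."""
    return [sum(column) for column in zip(*vectors)]


def dot(u, v):
    """Dot product of two equally long vectors."""
    return sum(a * b for a, b in zip(u, v))


# Hypothetical 5-feature layout; the positions are assumptions.
operation_vectors = [
    [0, 0, 0, 0, 1],      # passive to active
    [0, 0, 0.457, 0, 0],  # X locate Y -> X find Y
    [0, 0.5, 0, 0, 0],    # boy -> child
    [1, 0, 0, 0, 0],      # insertion on the fly
]
weights = [1.5, 0.8, 1.0, 0.3, 0.2]  # made-up weights

proof_vector = add_vectors(operation_vectors)
cost_from_proof_vector = dot(weights, proof_vector)
cost_from_operations = sum(dot(weights, v) for v in operation_vectors)
print(proof_vector, cost_from_proof_vector)
```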

Cost based Model

- Define operation cost
  - Represent each operation as a feature vector
- Define proof cost as the sum of the operations’ costs
- Classify: “entailing” if and only if proof cost is smaller than a threshold
- Learn the model parameters

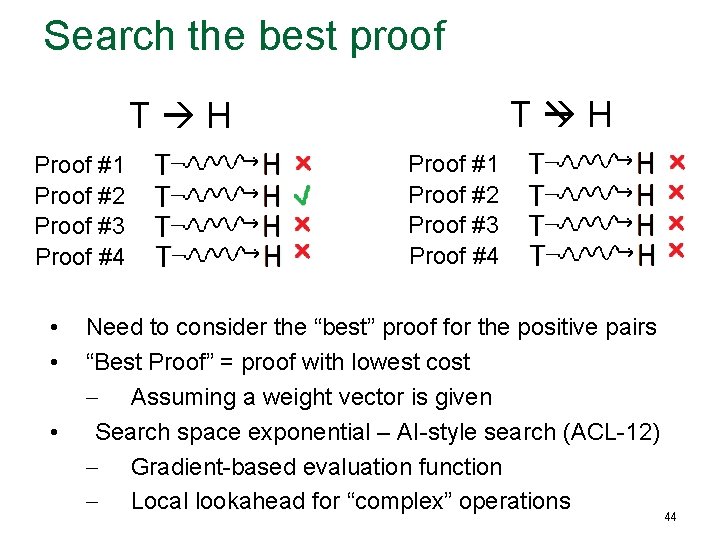

3. Find the best proof

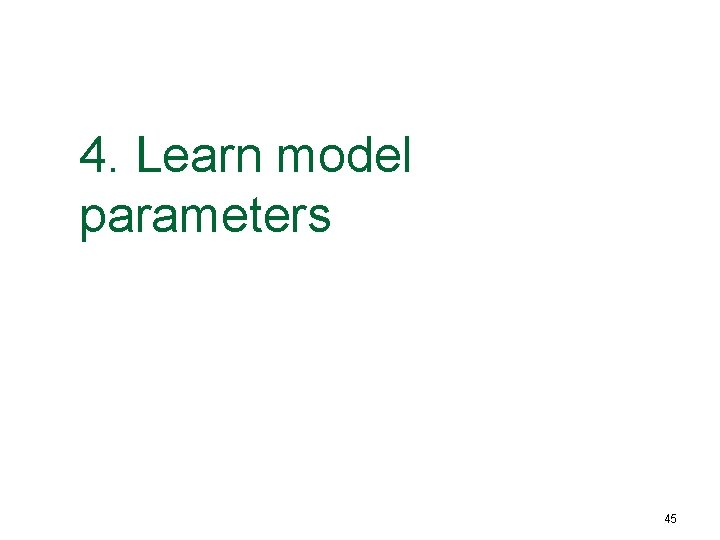

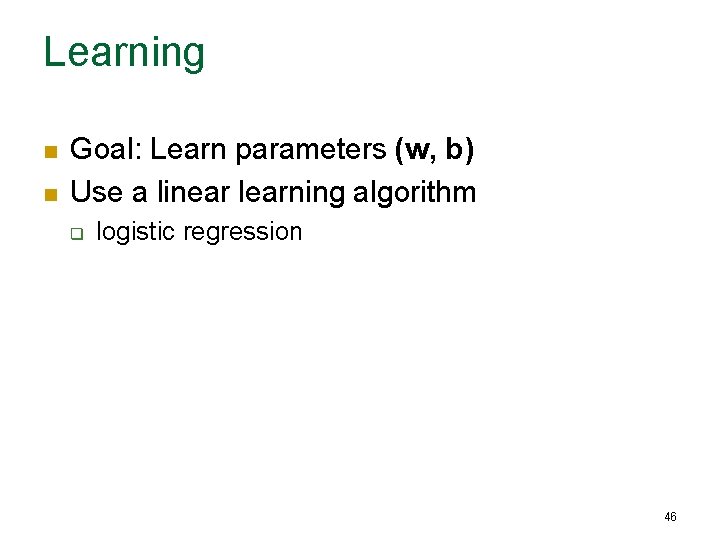

Search the best proof

- Each T-H pair has many candidate proofs (Proof #1, Proof #2, Proof #3, Proof #4, …)
- Need to consider the “best” proof for the positive pairs
- “Best Proof” = proof with lowest cost
  - Assuming a weight vector is given
- Search space is exponential: AI-style search (ACL-12)
  - Gradient-based evaluation function
  - Local lookahead for “complex” operations
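To make “proof with lowest cost” concrete, here is a sketch that swaps in plain uniform-cost search over a tiny hand-built graph. It is not the ACL-12 search algorithm (no gradient-based evaluation function, no lookahead); the states, edges and step costs are hypothetical.

```python
import heapq


def cheapest_proof(start, goal, successors):
    """Uniform-cost search; returns (cost, list of states) or None."""
    frontier = [(0.0, 0, start, [start])]
    settled = {}
    counter = 1  # tie-breaker so the heap never compares states or paths
    while frontier:
        cost, _, state, path = heapq.heappop(frontier)
        if state == goal:
            return cost, path
        if state in settled and settled[state] <= cost:
            continue
        settled[state] = cost
        for nxt, step_cost in successors.get(state, []):
            heapq.heappush(frontier, (cost + step_cost, counter, nxt, path + [nxt]))
            counter += 1
    return None


T = "The boy was located by the police."
A = "The police located the boy."
B = "The police found the boy."
C = "The police found the child."
H = "Eventually, the police found the child."

# Hypothetical operations and costs; T -> H directly stands for an
# expensive sequence of pure tree edits.
successors = {
    T: [(A, 0.2), (H, 5.0)],
    A: [(B, 0.457)],
    B: [(C, 0.5)],
    C: [(H, 1.5)],
}

best_cost, best_path = cheapest_proof(T, H, successors)
print(best_cost, len(best_path))
```

Exhaustive search like this only works on toy inputs, which is why the slide points to a dedicated search algorithm for the exponential space.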

4. Learn model parameters

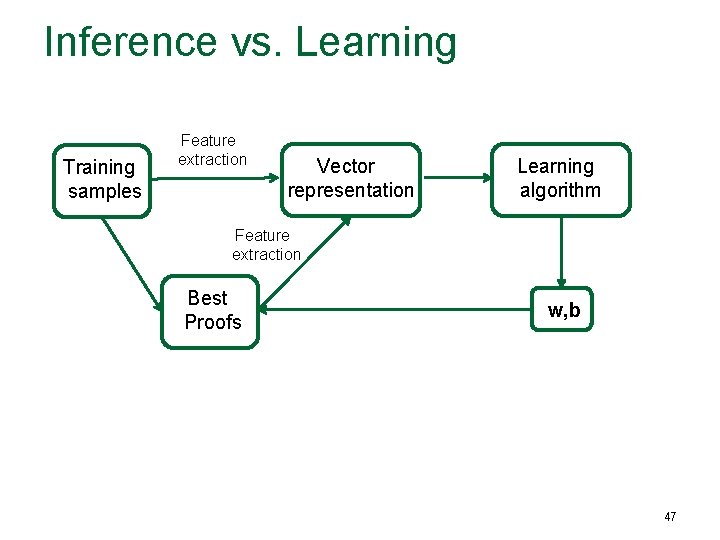

Learning

- Goal: learn parameters (w, b)
- Use a linear learning algorithm
  - logistic regression

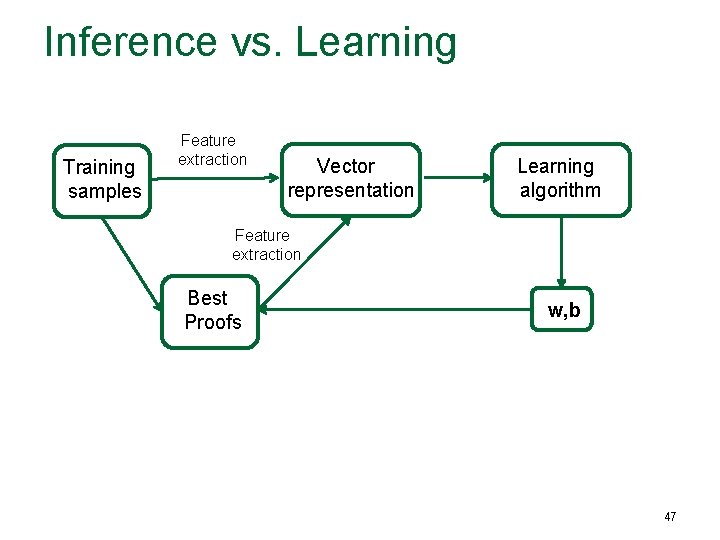

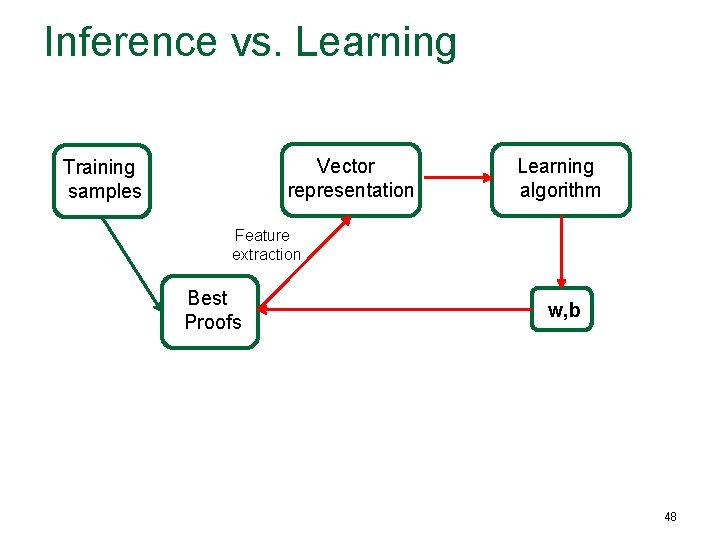

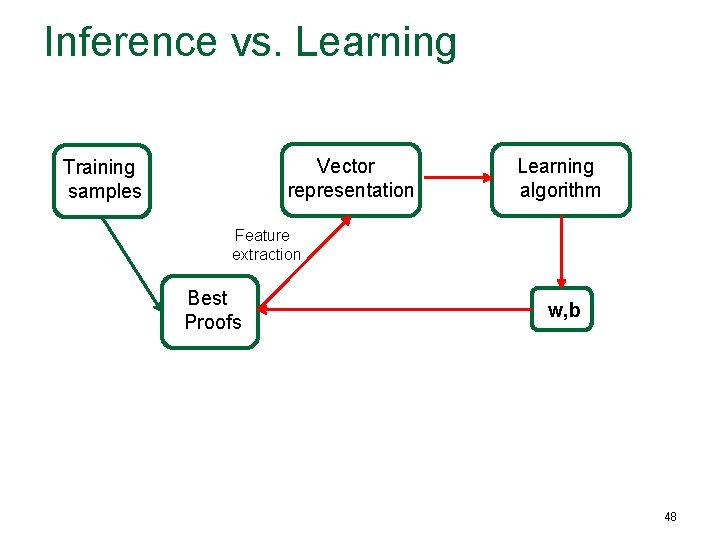

Inference vs. Learning

[Diagram labels: Training samples, Best Proofs, Feature extraction, Vector representation, Learning algorithm, w, b]

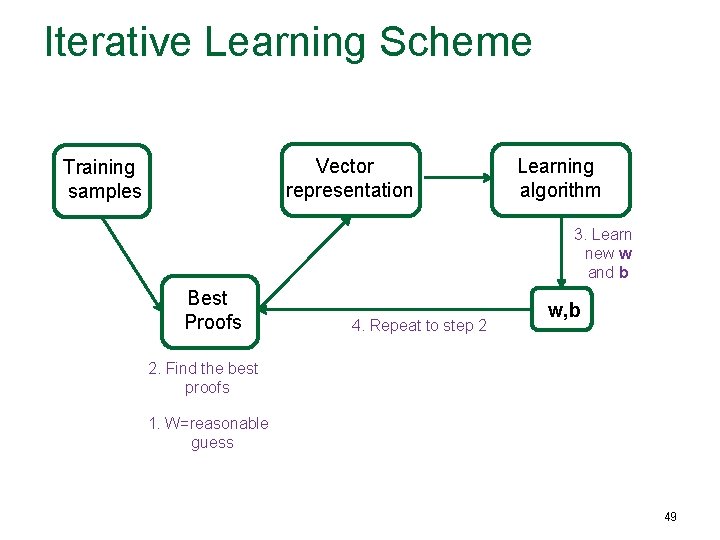

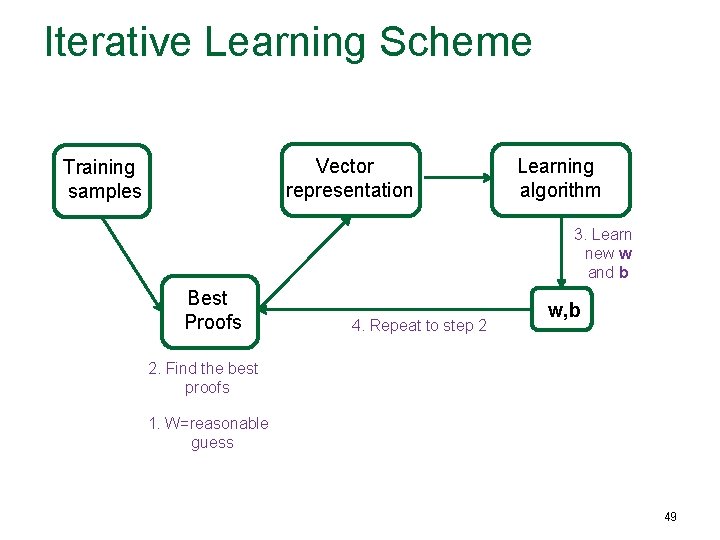

Iterative Learning Scheme

1. w = reasonable guess
2. Find the best proofs
3. Learn new w and b
4. Return to step 2

[Diagram labels: Training samples, Best Proofs, Vector representation, Learning algorithm, w, b]
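The four steps can be sketched as an alternating loop. Everything concrete here is an assumption for illustration: the toy training pairs, the two-feature space, the initial guess, and a hand-written logistic regression trained by stochastic gradient steps with P(entail) = sigmoid(b − w·f), so that low cost means entailment.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def best_proof(w, candidates):
    """Step 2: lowest-cost candidate proof vector under weights w."""
    return min(candidates, key=lambda f: dot(w, f))


def fit_logistic(samples, w, b, epochs=200, lr=0.5):
    """Step 3: logistic regression with P(entail) = sigmoid(b - w.f)."""
    w = list(w)
    for _ in range(epochs):
        for f, label in samples:
            p = 1.0 / (1.0 + math.exp(-(b - dot(w, f))))
            err = label - p  # gradient of the log-likelihood w.r.t. (b - w.f)
            b += lr * err
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
    return w, b


def iterative_learning(pairs, n_features, rounds=5):
    w, b = [1.0] * n_features, 1.0  # step 1: initial guess
    for _ in range(rounds):         # step 4: repeat
        samples = [(best_proof(w, cands), label) for cands, label in pairs]
        w, b = fit_logistic(samples, w, b)
    return w, b


def predict(w, b, candidates):
    """Entailing iff the best proof costs less than the threshold b."""
    return dot(w, best_proof(w, candidates)) < b


# Hypothetical training pairs: (candidate proof vectors, gold label).
PAIRS = [
    ([[0.2, 0.1], [1.0, 0.9]], 1),
    ([[0.3, 0.0], [0.8, 1.2]], 1),
    ([[1.5, 1.0], [2.0, 0.7]], 0),
    ([[1.2, 1.4], [1.8, 1.1]], 0),
]

w, b = iterative_learning(PAIRS, n_features=2)
predictions = [predict(w, b, cands) for cands, _ in PAIRS]
print(predictions)
```

The loop is needed because the best proof depends on w, while w is learned from the vectors of the best proofs.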

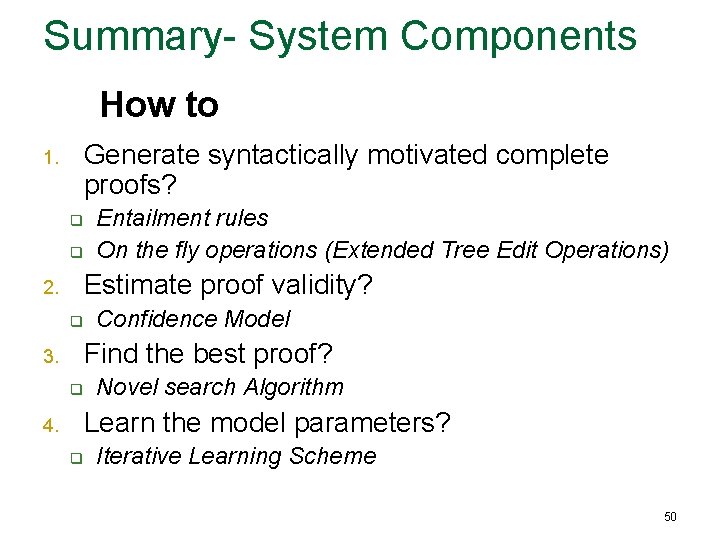

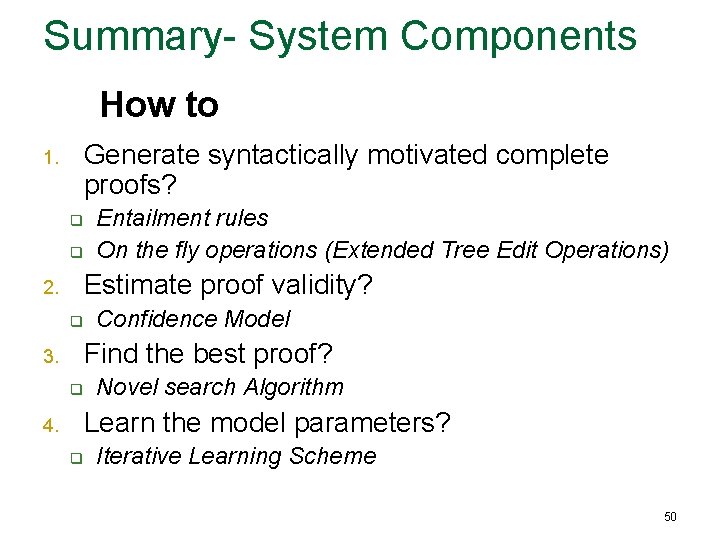

Summary - System Components

How to

1. Generate syntactically motivated complete proofs?
   - Entailment rules
   - On the fly operations (Extended Tree Edit Operations)
2. Estimate proof validity?
   - Confidence Model
3. Find the best proof?
   - Novel search algorithm
4. Learn the model parameters?
   - Iterative Learning Scheme

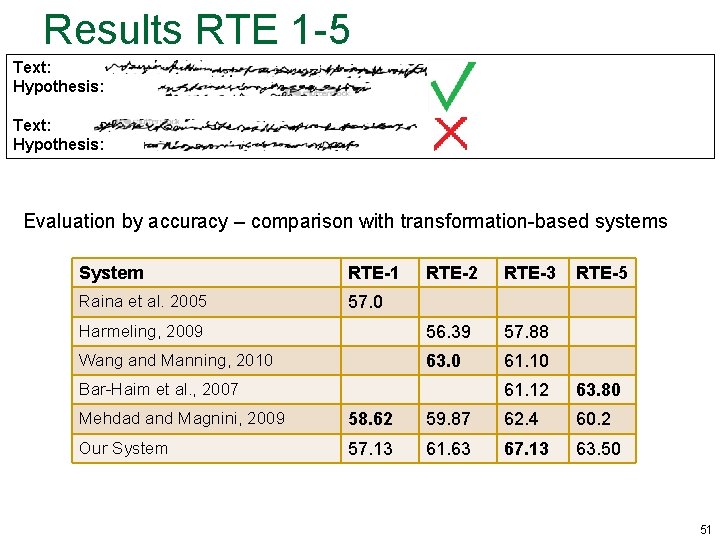

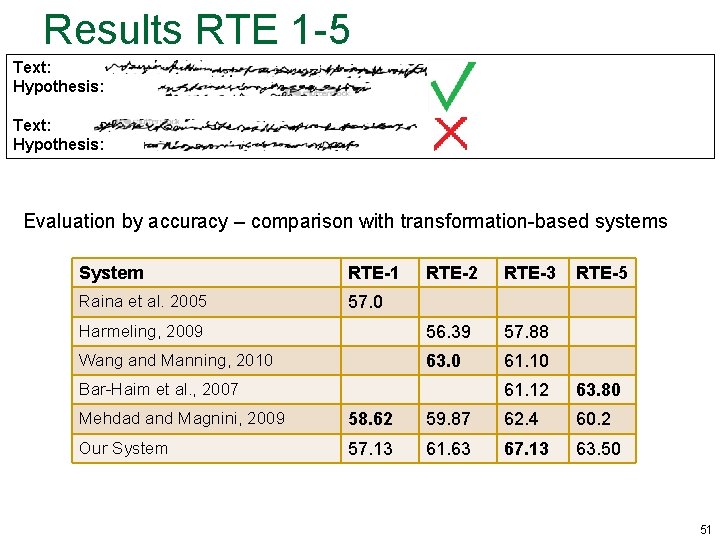

Results RTE 1-5

Evaluation by accuracy: comparison with transformation-based systems

| System | RTE-1 | RTE-2 | RTE-3 | RTE-5 |
|---|---|---|---|---|
| Raina et al., 2005 | 57.0 | | | |
| Harmeling, 2009 | | 56.39 | 57.88 | |
| Wang and Manning, 2010 | | 63.0 | 61.10 | |
| Bar-Haim et al., 2007 | | 61.12 | 63.80 | |
| Mehdad and Magnini, 2009 | 58.62 | 59.87 | 62.4 | 60.2 |
| Our System | 57.13 | 61.63 | 67.13 | 63.50 |

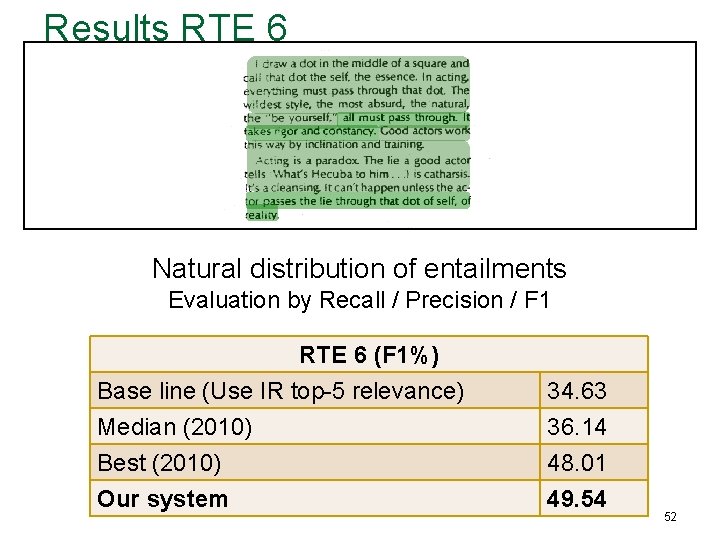

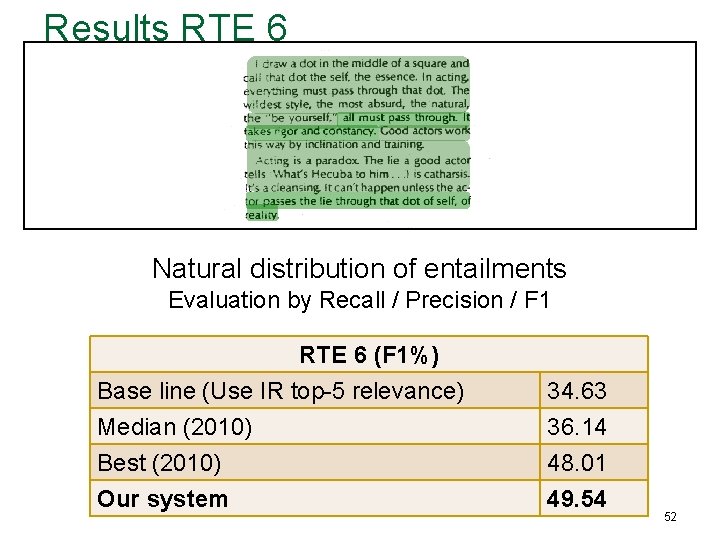

Results RTE 6

- Natural distribution of entailments
- Evaluation by Recall / Precision / F1

| System | RTE 6 (F1 %) |
|---|---|
| Baseline (use IR top-5 relevance) | 34.63 |
| Median (2010) | 36.14 |
| Best (2010) | 48.01 |
| Our system | 49.54 |

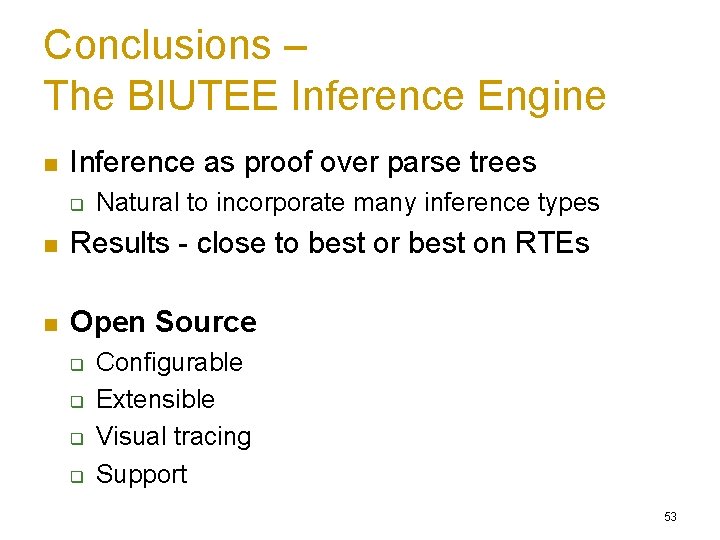

Conclusions – The BIUTEE Inference Engine

- Inference as proof over parse trees
  - Natural to incorporate many inference types
- Results: close to best or best on RTEs
- Open Source
  - Configurable
  - Extensible
  - Visual tracing
  - Support

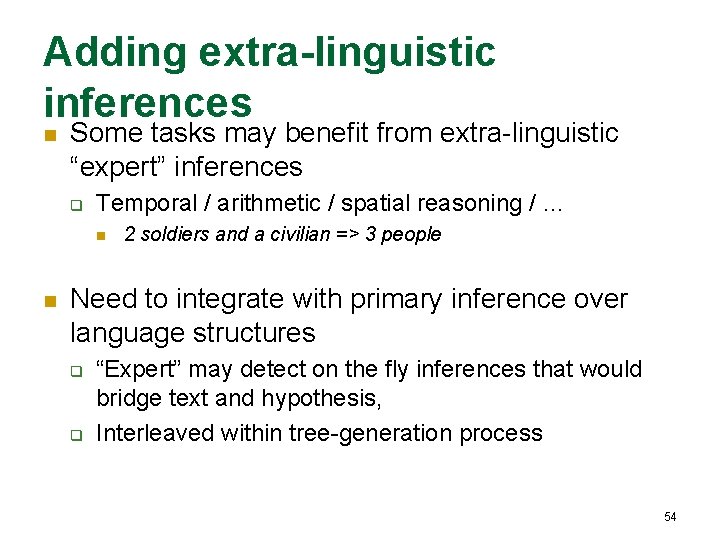

Adding extra-linguistic inferences

- Some tasks may benefit from extra-linguistic “expert” inferences
  - Temporal / arithmetic / spatial reasoning / …
    - 2 soldiers and a civilian => 3 people
- Need to integrate with primary inference over language structures
  - “Expert” may detect on the fly inferences that would bridge text and hypothesis
  - Interleaved within tree-generation process

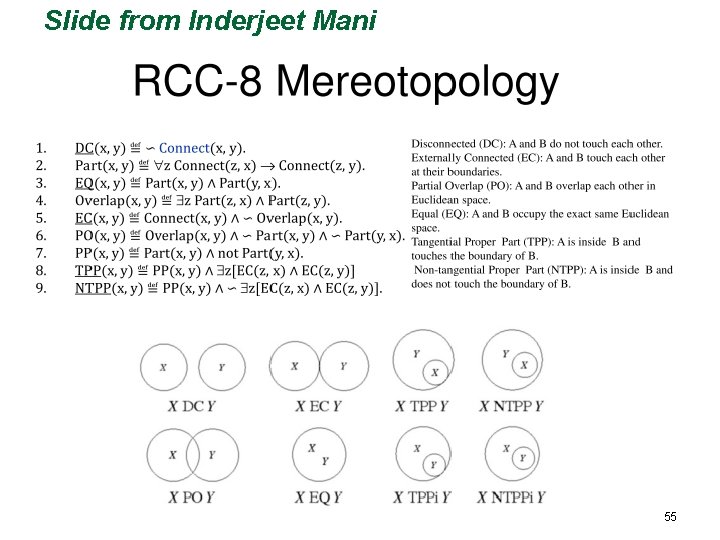

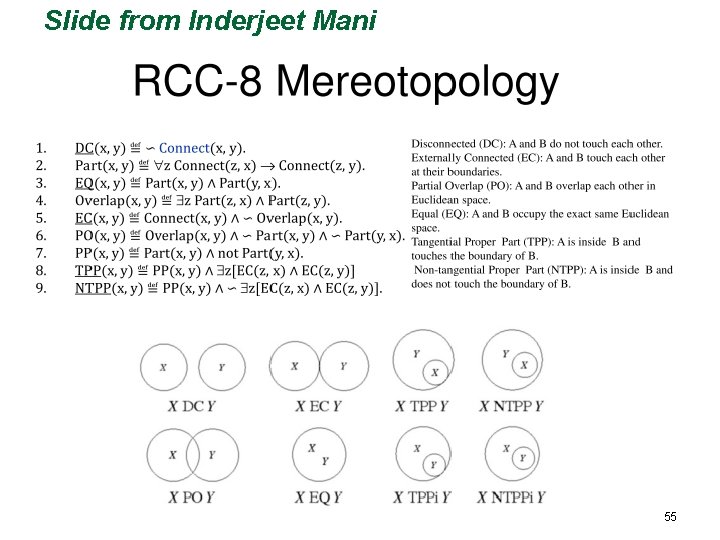

Slide from Inderjeet Mani

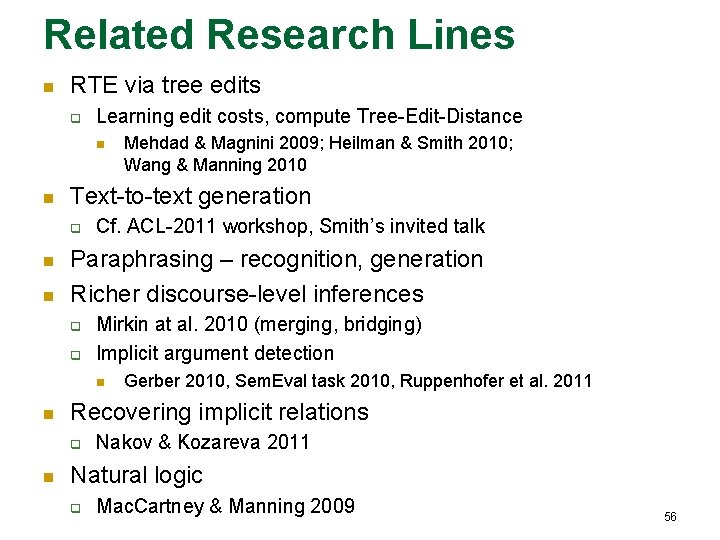

Related Research Lines

- RTE via tree edits
  - Learning edit costs, compute Tree-Edit-Distance
    - Mehdad & Magnini 2009; Heilman & Smith 2010; Wang & Manning 2010
- Text-to-text generation
  - Cf. ACL-2011 workshop, Smith’s invited talk
- Paraphrasing: recognition, generation
- Richer discourse-level inferences
  - Mirkin et al. 2010 (merging, bridging)
  - Implicit argument detection
    - Gerber 2010, SemEval task 2010, Ruppenhofer et al. 2011
  - Recovering implicit relations
    - Nakov & Kozareva 2011
- Natural logic
  - MacCartney & Manning 2009

![Lexical Textual Inference (Eyal Shnarch)](https://slidetodoc.com/presentation_image/050c2bbd080f527b973c925f82ef0048/image-57.jpg)

Lexical Textual Inference [Eyal Shnarch]

- Complex systems use a parser
- Lexical inference rules link terms from T to H
- Lexical rules come from lexical resources
  - 1st or 2nd order co-occurrence
- H is inferred from T iff all its terms are inferred
- Text: In the Battle of Waterloo, 18 Jun 1815, the French army, led by Napoleon, was crushed.
- Hypothesis: In Belgium Napoleon was defeated

Lexical textual inference → principled probabilistic model (PLIS - Probabilistic Lexical Inference System) → improves state-of-the-art
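The rule “H is inferred from T iff all its terms are inferred” can be sketched deterministically. This is not the probabilistic PLIS model; the tokenisation and the two lexical rules below are hypothetical stand-ins for rules that would come from lexical resources.

```python
def term_inferred(h_term, text_terms, rules):
    """A hypothesis term is inferred if it occurs in T or a rule links a T term to it."""
    return h_term in text_terms or any((t, h_term) in rules for t in text_terms)


def lexically_entails(text_terms, hyp_terms, rules):
    """H is inferred from T iff every term of H is inferred."""
    return all(term_inferred(h, text_terms, rules) for h in hyp_terms)


text_terms = {"battle", "waterloo", "french", "army", "led", "napoleon", "crushed"}
hyp_terms = {"belgium", "napoleon", "defeated"}

# Hypothetical lexical rules (left-hand term entails right-hand term).
rules = {("waterloo", "belgium"), ("crushed", "defeated")}

print(lexically_entails(text_terms, hyp_terms, rules))
```

Dropping either rule leaves one hypothesis term uncovered, so the all-terms test fails; a probabilistic model replaces this hard conjunction with graded evidence.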

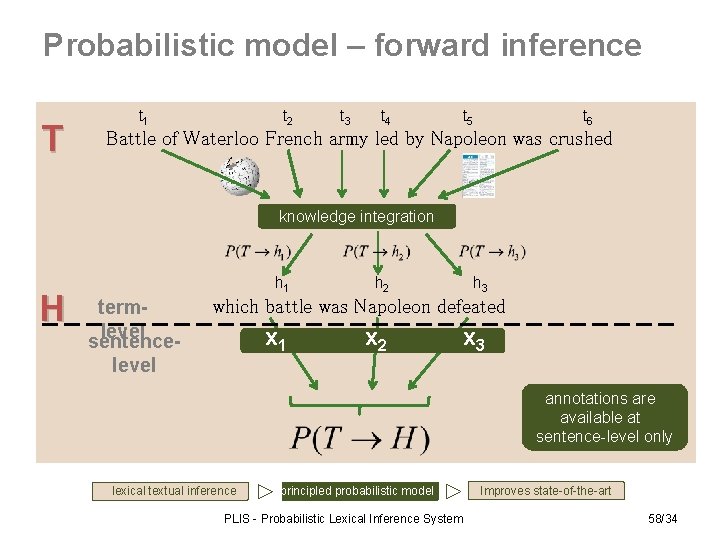

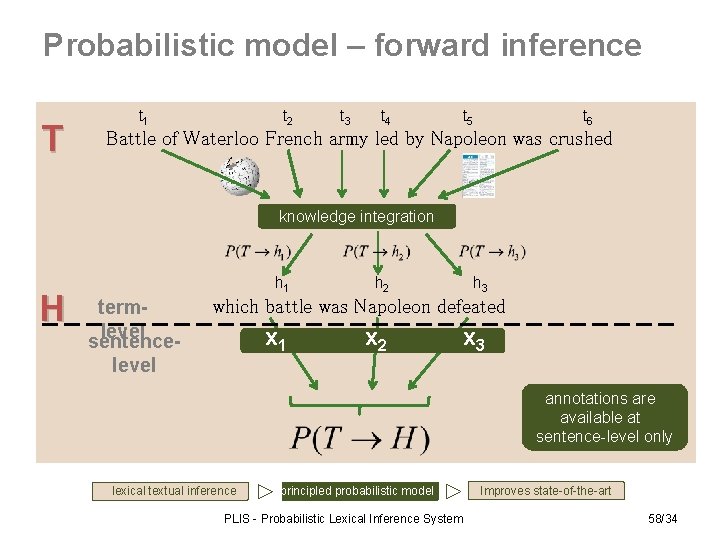

Probabilistic model – forward inference

- T (terms t1 … t6): Battle of Waterloo French army led by Napoleon was crushed
- H (terms h1 … h3): which battle was Napoleon defeated
- [Diagram labels: knowledge integration, term-level (x1, x2, x3), sentence-level]
- Annotations are available at sentence-level only

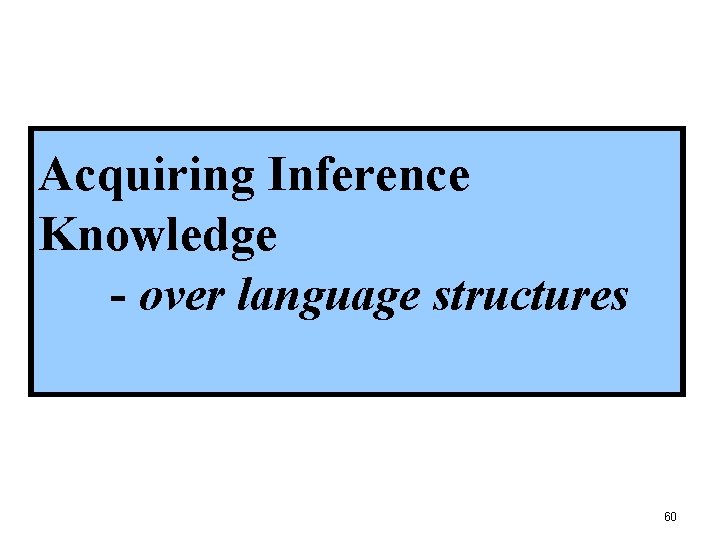

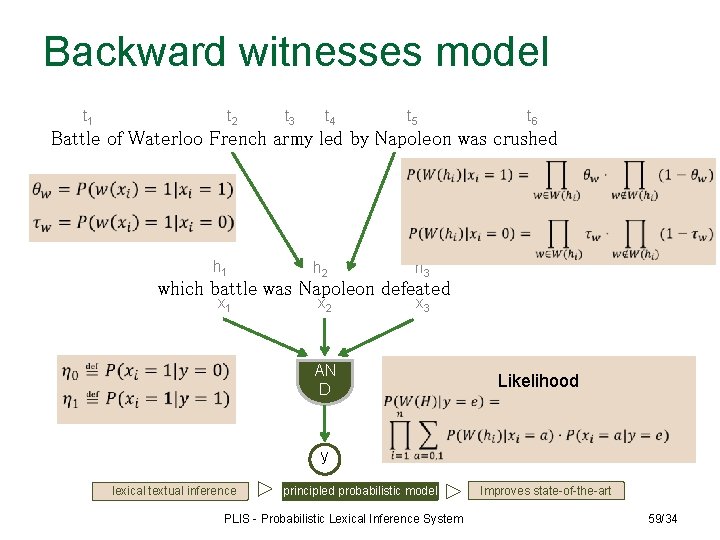

Backward witnesses model

- T (terms t1 … t6): Battle of Waterloo French army led by Napoleon was crushed
- H (terms h1 … h3): which battle was Napoleon defeated
- [Diagram labels: x1, x2, x3, AND, Likelihood, y]

Acquiring Inference Knowledge - over language structures

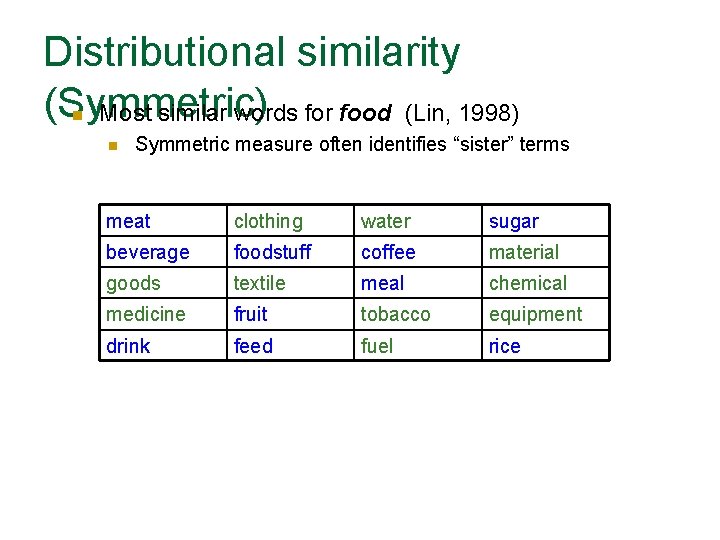

Knowledge acquisition sources

- Learning from corpora
- Mining human-oriented knowledge resources
  - Wikipedia, dictionary definitions
- Computational NLP resources
  - WN, FrameNet, NOMLEX, …
- Manual knowledge engineering
  - Recent Mechanical Turk potential

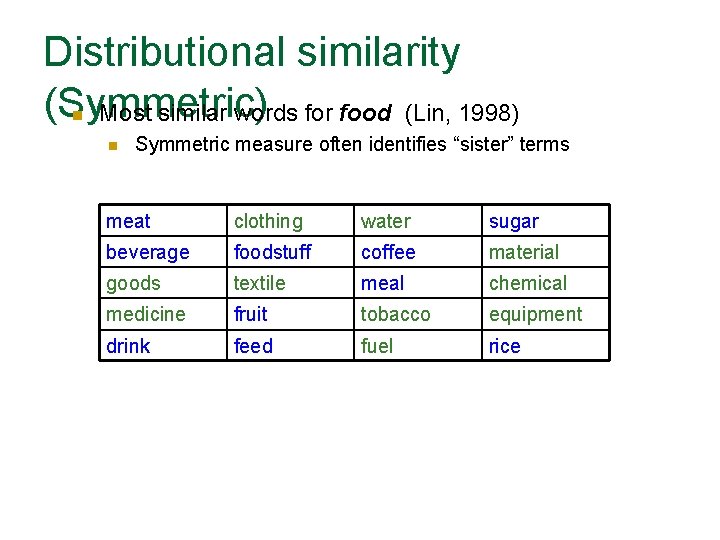

Distributional similarity (Symmetric)

- Most similar words for food (Lin, 1998): meat, clothing, water, sugar, beverage, foodstuff, coffee, material, goods, textile, meal, chemical, medicine, fruit, tobacco, equipment, drink, feed, fuel, rice
- Symmetric measure often identifies “sister” terms

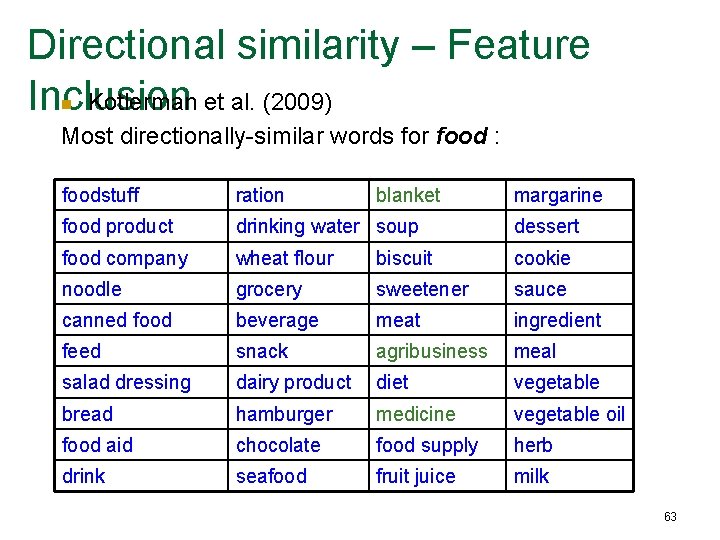

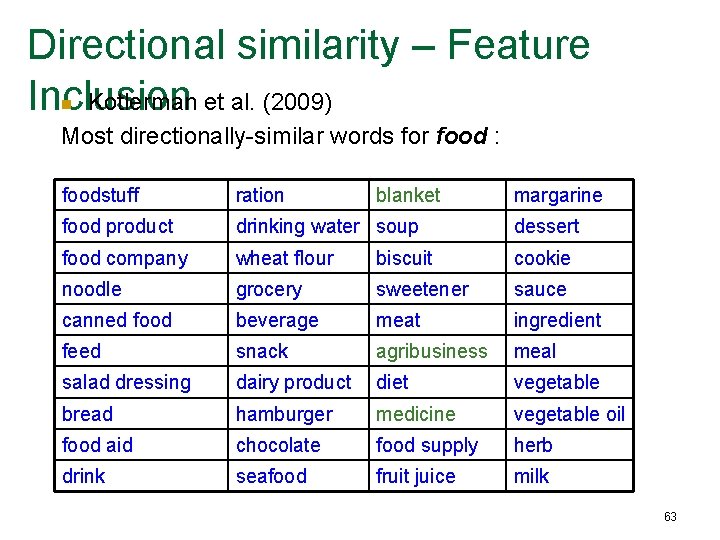

Directional similarity – Feature Inclusion

Kotlerman et al. (2009)

- Most directionally-similar words for food: foodstuff, ration, blanket, margarine, food product, drinking water, soup, dessert, food company, wheat flour, biscuit, cookie, noodle, grocery, sweetener, sauce, canned food, beverage, meat, ingredient, feed, snack, agribusiness, meal, salad dressing, dairy product, diet, vegetable, bread, hamburger, medicine, vegetable oil, food aid, chocolate, food supply, herb, drink, seafood, fruit juice, milk
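The feature-inclusion idea can be illustrated with a simple weighted coverage score: how much of a narrower term's context-feature weight falls on features that the broader term also has. This is a generic sketch, not the measure defined in the cited paper, and the feature weights are made up.

```python
def inclusion(narrow, broad):
    """Share of narrow's feature weight carried by features that broad also has."""
    total = sum(narrow.values())
    if total == 0:
        return 0.0
    covered = sum(weight for feat, weight in narrow.items() if feat in broad)
    return covered / total


# Hypothetical context-feature weights.
bread = {"eat": 3.0, "bake": 2.0, "fresh": 1.0}
food = {"eat": 5.0, "fresh": 2.0, "cook": 4.0, "buy": 3.0, "bake": 1.0}

bread_to_food = inclusion(bread, food)
food_to_bread = inclusion(food, bread)
print(bread_to_food, food_to_bread)
```

The asymmetry is the point: all of the contexts of bread here also occur with food, but not the other way round, which suggests bread → food rather than food → bread.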

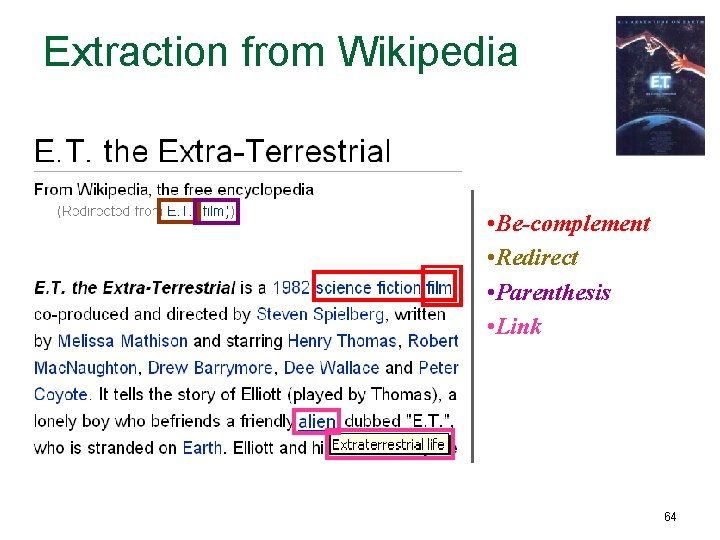

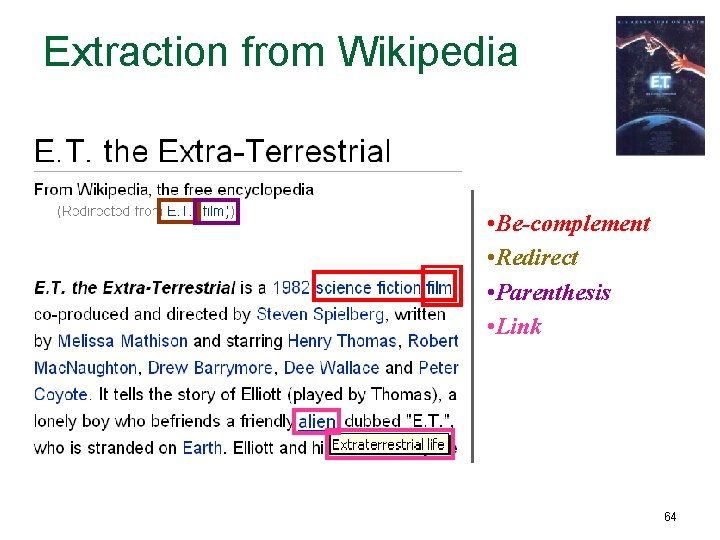

Extraction from Wikipedia

- Be-complement
- All-nouns (Top)
- All-nouns (Bottom)
- Parenthesis
- Redirect: various terms to canonical title
- Link

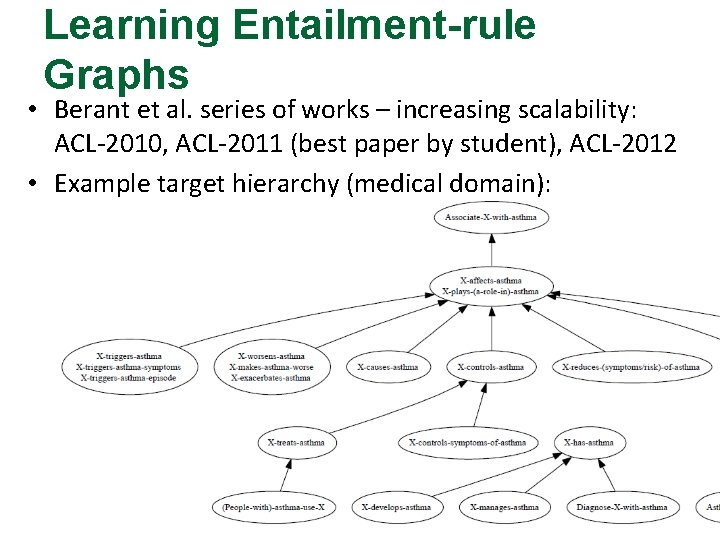

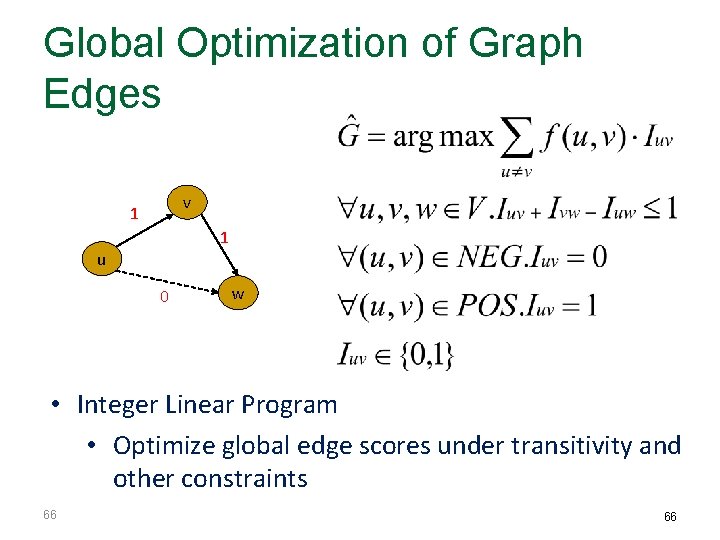

Learning Entailment-rule Graphs

- Berant et al. series of works, increasing scalability: ACL-2010, ACL-2011 (best paper by student), ACL-2012
- Example target hierarchy (medical domain):

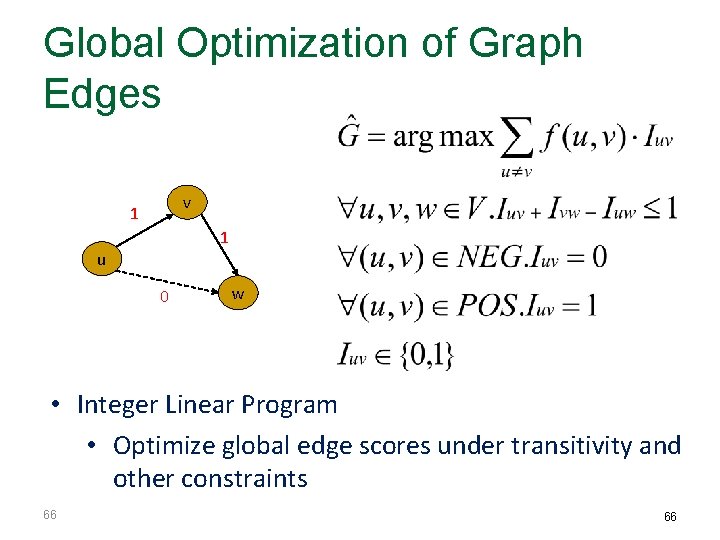

Global Optimization of Graph Edges

[Figure: nodes u, v, w with edge values 1, 1, 0]

- Integer Linear Program
- Optimize global edge scores under transitivity and other constraints
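The effect of the transitivity constraint can be seen on a three-node toy graph. This sketch replaces the Integer Linear Program with exhaustive enumeration, which is only feasible for a handful of edges; the local edge scores are hypothetical.

```python
from itertools import combinations


def is_transitive(edges):
    """True if a->b and b->c always imply a->c (for a != c)."""
    return all(
        (a, c) in edges
        for (a, b) in edges
        for (b2, c) in edges
        if b == b2 and a != c
    )


def best_transitive_graph(scores):
    """Highest-scoring transitive edge set, found by brute force."""
    candidates = list(scores)
    best_edges, best_total = frozenset(), 0.0
    for k in range(1, len(candidates) + 1):
        for subset in combinations(candidates, k):
            edges = frozenset(subset)
            total = sum(scores[e] for e in edges)
            if total > best_total and is_transitive(edges):
                best_edges, best_total = edges, total
    return best_edges, best_total


# Hypothetical local scores: positive favours keeping the edge.
scores = {
    ("u", "v"): 1.0,
    ("v", "w"): 0.8,
    ("u", "w"): -0.3,
    ("w", "v"): -1.0,
}

edges, total = best_transitive_graph(scores)
print(sorted(edges), total)
```

Locally, u → w would be rejected because its score is negative, but keeping u → v and v → w forces it in; the global optimum accepts the weak edge rather than give up a strong one.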

Syntactic-driven Entailments

- Active-passive transformations
- Recover relative clause arguments
- Extract conjuncts
- Appositions
- …
- TruthTeller: annotate truth for predicates and clauses
  - Positive: John called(+) Mary.
  - Negative: John forgot to call(−) Mary.
  - Unknown: John wanted to call(?) Mary.
- Constructed via human linguistic engineering
  - May be combined with automatic learning

Mechanical Turk & Community Knowledge-engineering

- Validating automatically-learned rules
- Generating paraphrases/entailments
- Zeichner et al., ACL-2012
- Potential for community contribution
  - Stipulating domain knowledge in NL

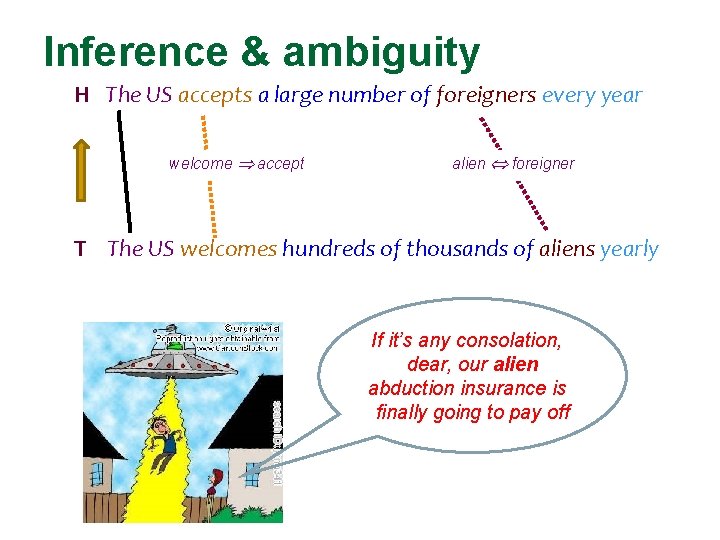

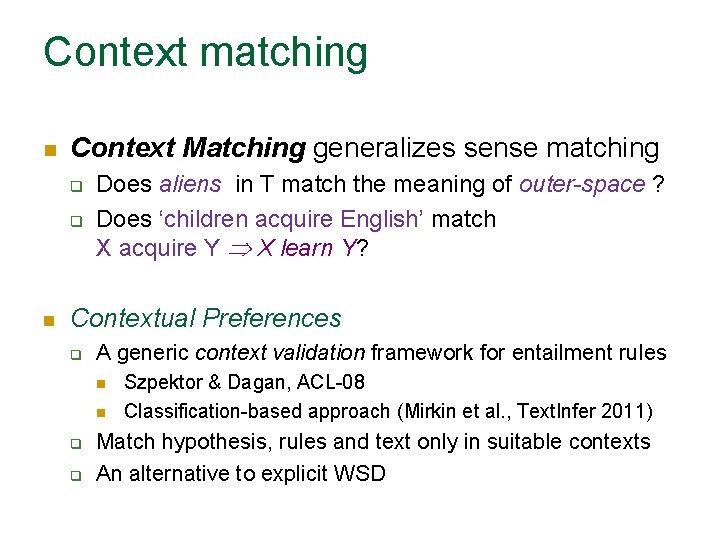

Inference & ambiguity

- H: The US accepts a large number of foreigners every year
- T: The US welcomes hundreds of thousands of aliens yearly
- Rules: welcome → accept, alien → foreigner
- Another context for “alien”: If it’s any consolation, dear, our alien abduction insurance is finally going to pay off

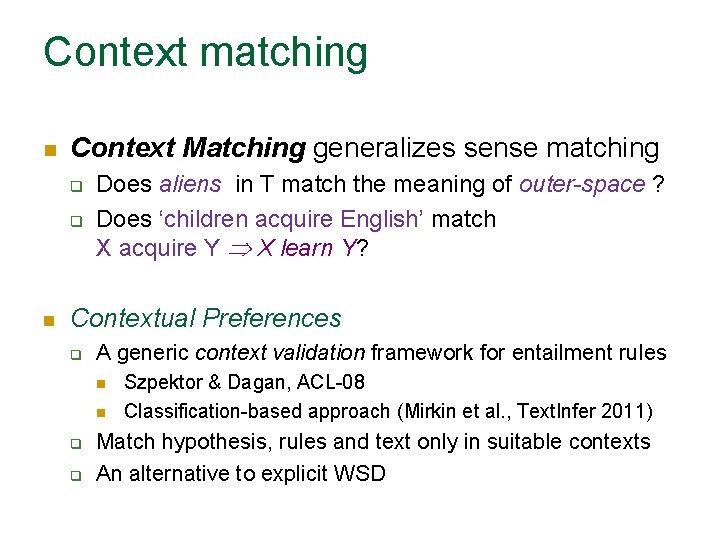

Context matching

- Context Matching generalizes sense matching
  - Does aliens in T match the meaning of outer-space?
  - Does ‘children acquire English’ match X acquire Y → X learn Y?
- Contextual Preferences
  - A generic context validation framework for entailment rules
    - Szpektor & Dagan, ACL-08
    - Classification-based approach (Mirkin et al., TextInfer 2011)
  - Match hypothesis, rules and text only in suitable contexts
  - An alternative to explicit WSD

BIUTEE Demo

EXCITEMENT: towards Textual-inference Platform - Open source & community

A Textual Inference Platform

- Starting with BIUTEE, moving to EXCITEMENT
  - Goal: build MOSES-like environment
    - Incorporate partners’ inference systems
  - Addressing two types of research communities:
    - Applications which can benefit from textual inference
    - Technologies which can improve inference technology
- Partners:
  - Academic: FBK, Heidelberg, DFKI, Bar-Ilan
  - Industrial: NICE (Israel), AlmaWave (Italy), OMQ (Germany)

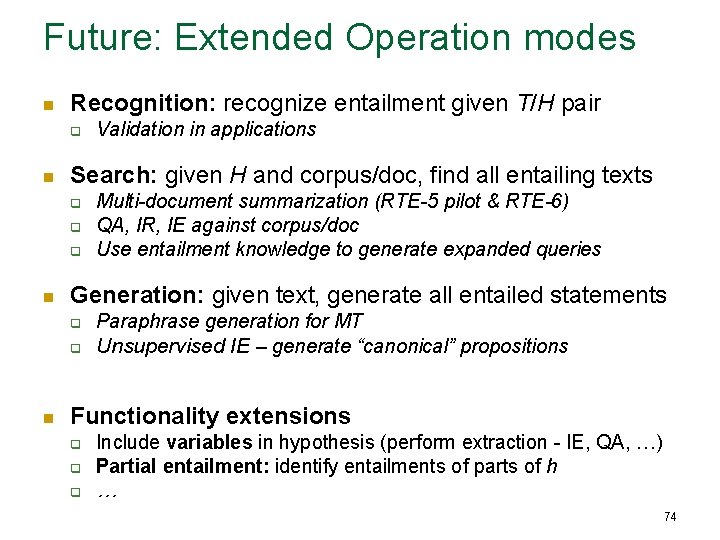

Future: Extended Operation modes

- Recognition: recognize entailment given T/H pair
  - Validation in applications
- Search: given H and corpus/doc, find all entailing texts
  - Multi-document summarization (RTE-5 pilot & RTE-6)
  - QA, IR, IE against corpus/doc
  - Use entailment knowledge to generate expanded queries
- Generation: given text, generate all entailed statements
  - Paraphrase generation for MT
  - Unsupervised IE: generate “canonical” propositions
- Functionality extensions
  - Include variables in hypothesis (perform extraction: IE, QA, …)
  - Partial entailment: identify entailments of parts of h
  - …

Entailment-based Text Exploration with Application to the Healthcare Domain Meni Adler, Jonathan Berant, Ido Dagan ACL 2012 Demo

Motivation

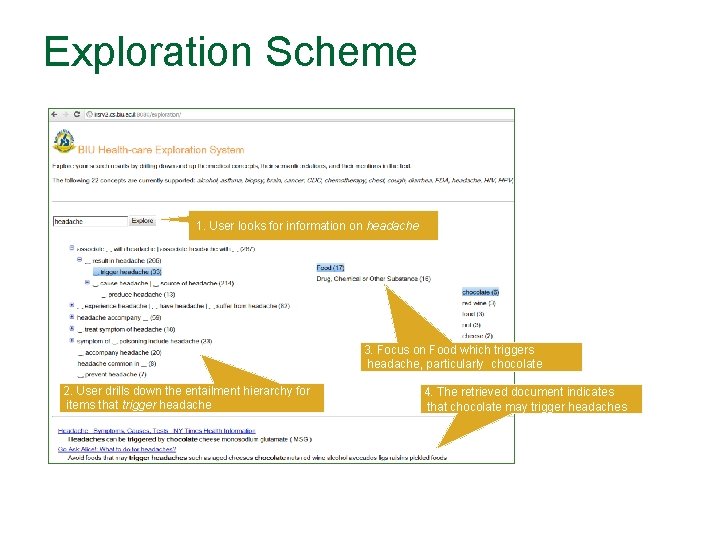

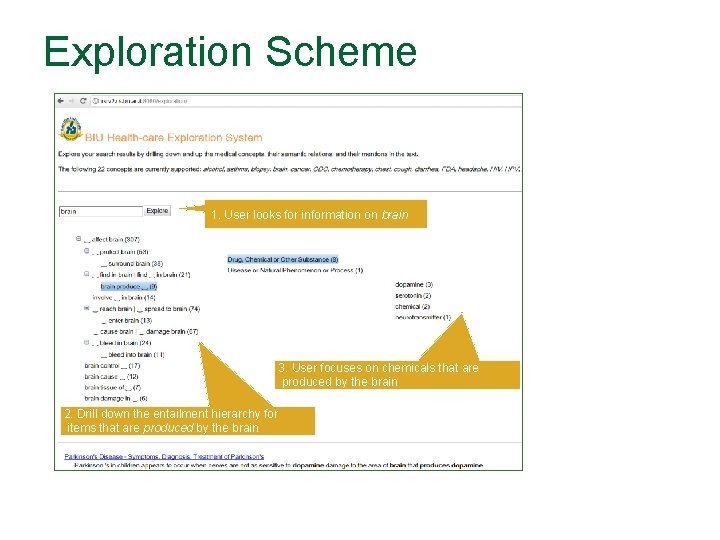

Goal

- Guide users through concrete facts in unfamiliar domains
- Common exploration approaches: via concepts/categories
  - “what are the documents talking about?”
- Our approach: exploration of statements/facts
  - “what do the documents actually say?”

Entailment-Based Exploration Approach

- Key Points
  - Collect statements/facts about the target concept
    - Open IE (Etzioni et al.)
    - How to organize it?
  - Organize statements (propositions) by entailment hierarchies

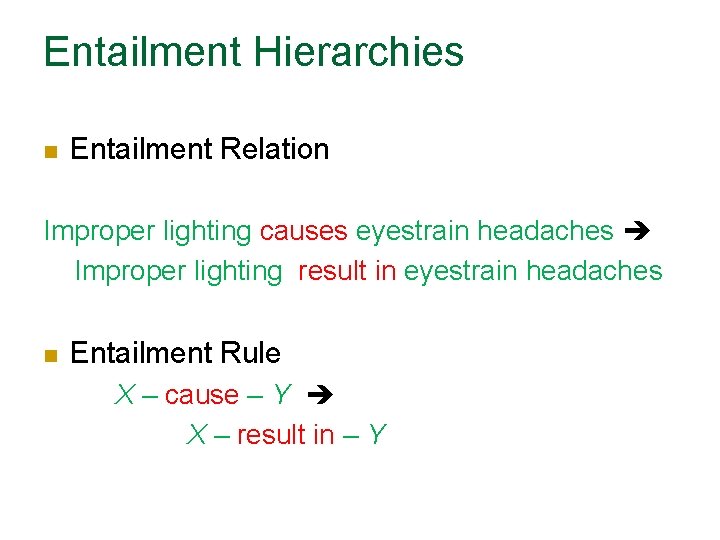

Statement Level

- Propositions
  - Predicate and Arguments
- Example: Improper lighting causes eyestrain headaches
  - X – cause – Y

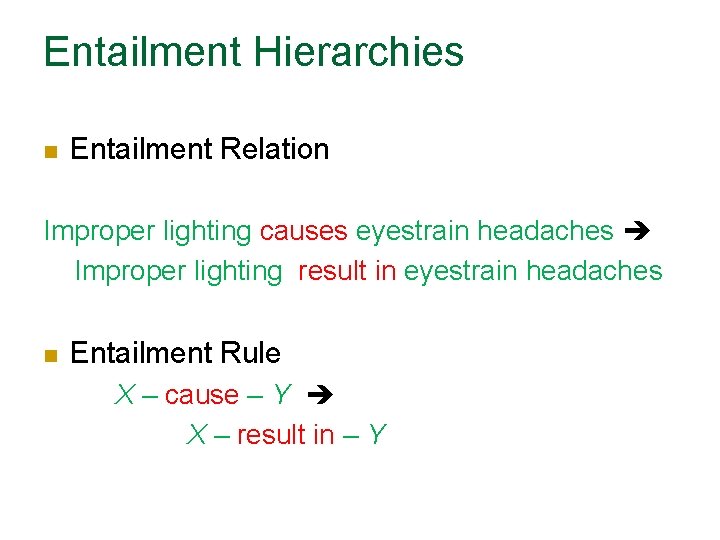

Entailment Hierarchies

- Entailment Relation
  - Improper lighting causes eyestrain headaches → Improper lighting results in eyestrain headaches
- Entailment Rule
  - X – cause – Y → X – result in – Y

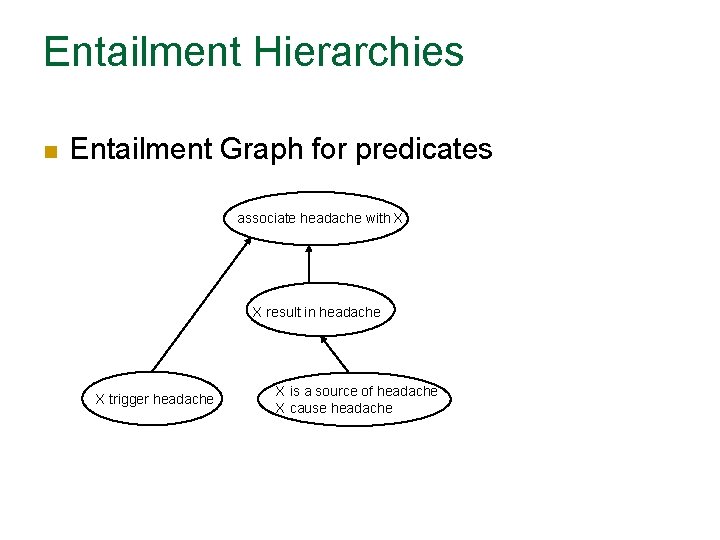

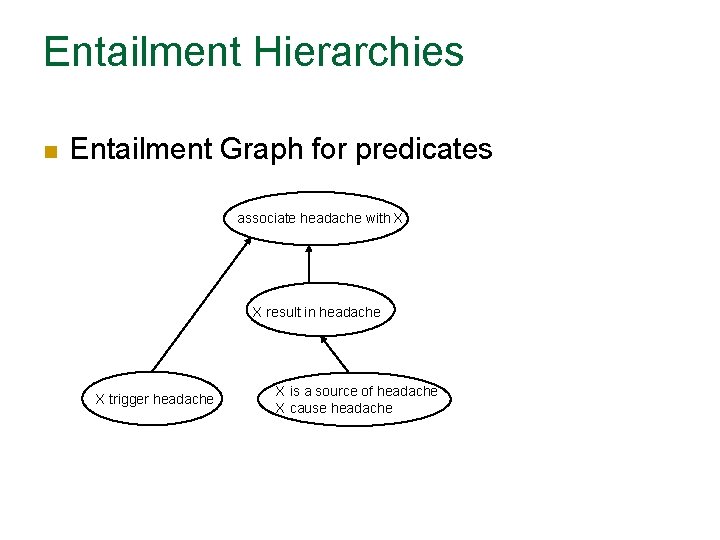

Entailment Hierarchies

- Entailment Graph for predicates, with nodes:
  - associate headache with X
  - X result in headache
  - X trigger headache
  - X is a source of headache
  - X cause headache
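One use of such a graph is to collect every predicate that entails a chosen target, so that matching propositions can be grouped under it. The sketch below does this with a reverse graph traversal. The edge directions are assumptions made for illustration; the slide's graph image, which holds the real edges, is not recoverable from the text.

```python
def entailing_predicates(target, edges):
    """All predicates from which `target` is reachable via entailment edges, plus itself."""
    reverse = {}
    for src, dst in edges:
        reverse.setdefault(dst, set()).add(src)
    seen, stack = {target}, [target]
    while stack:
        node = stack.pop()
        for src in reverse.get(node, ()):
            if src not in seen:
                seen.add(src)
                stack.append(src)
    return seen


# Hypothetical edges (left entails right).
EDGES = [
    ("X trigger headache", "X cause headache"),
    ("X result in headache", "X cause headache"),
    ("X is a source of headache", "X cause headache"),
    ("X cause headache", "associate headache with X"),
]

under_cause = entailing_predicates("X cause headache", EDGES)
under_associate = entailing_predicates("associate headache with X", EDGES)
print(sorted(under_cause))
```

Under these assumed edges, a proposition such as chocolate – trigger – headache would be listed when the user drills down from “X cause headache”.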

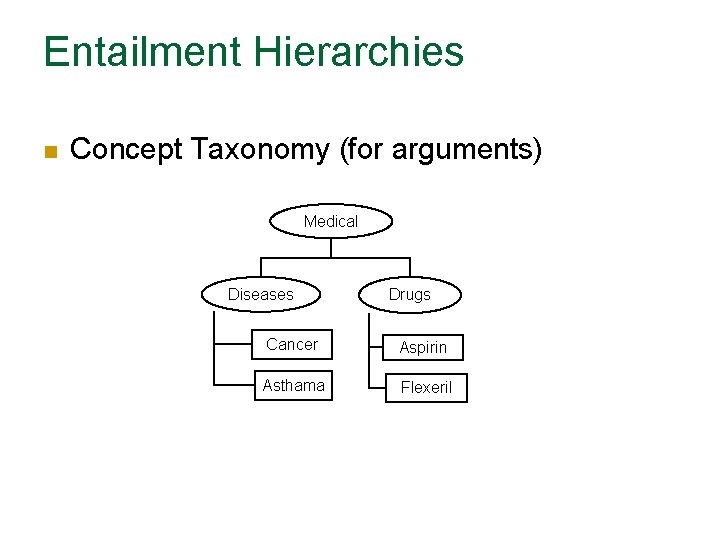

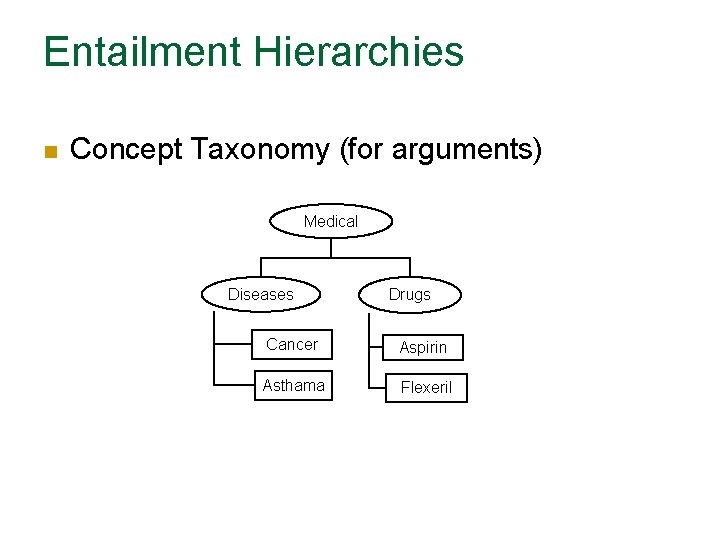

Entailment Hierarchies

- Concept Taxonomy (for arguments)
  - Medical
    - Diseases
      - Cancer
      - Asthma
    - Drugs
      - Aspirin
      - Flexeril

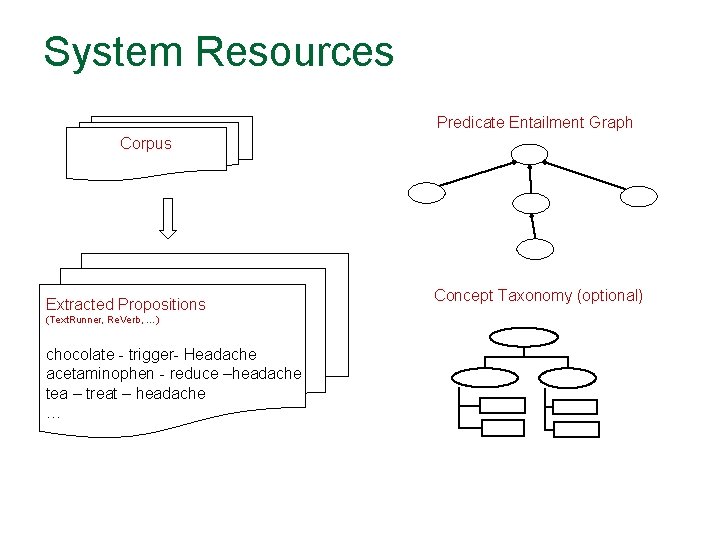

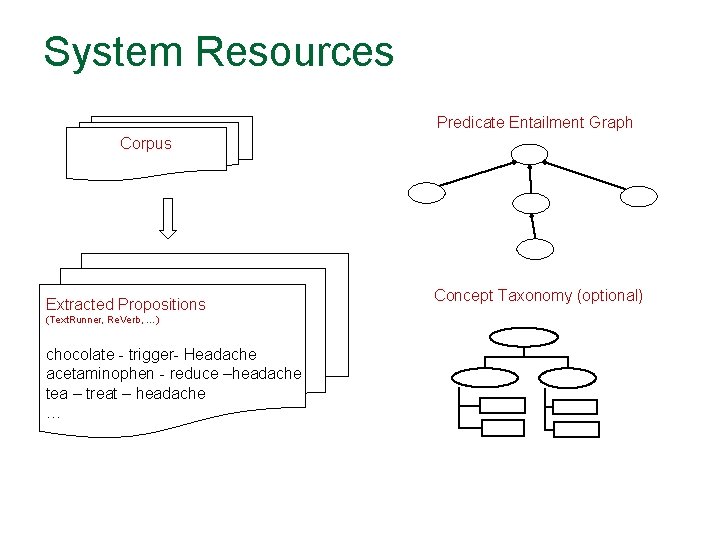

System Resources

- Corpus
- Extracted Propositions (TextRunner, ReVerb, …)
  - chocolate – trigger – headache
  - acetaminophen – reduce – headache
  - tea – treat – headache
  - …
- Predicate Entailment Graph
- Concept Taxonomy (optional)

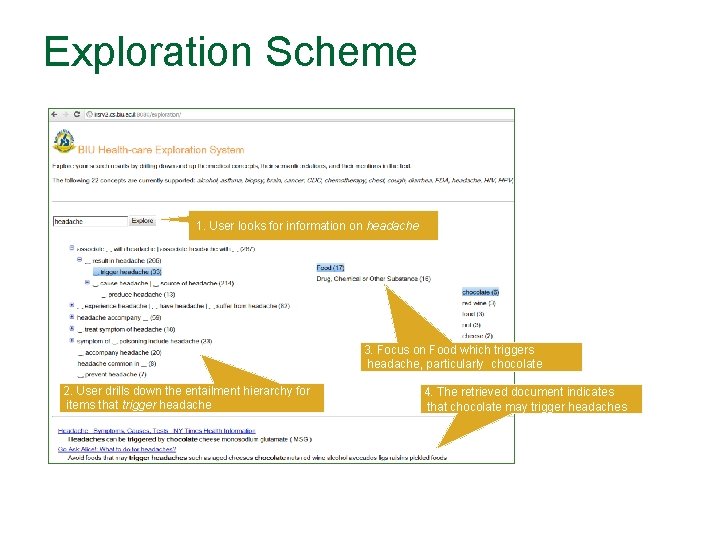

Exploration Scheme

1. User looks for information on headache
2. User drills down the entailment hierarchy for items that trigger headache
3. Focus on Food which triggers headache, particularly chocolate
4. The retrieved document indicates that chocolate may trigger headaches

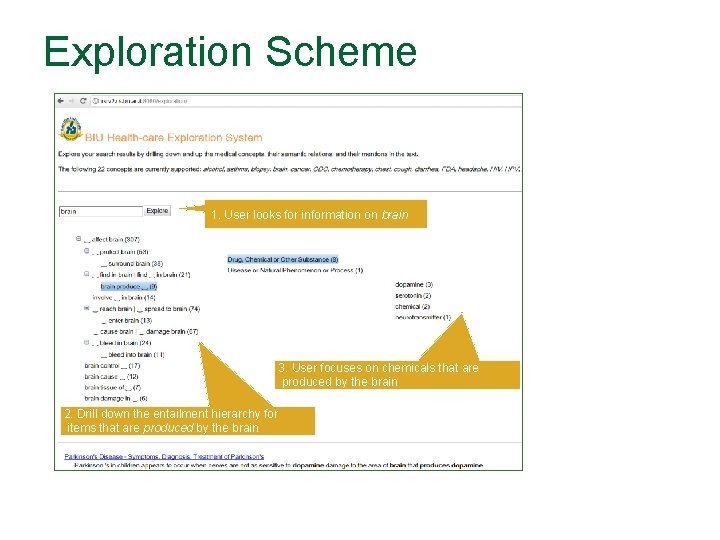

Exploration Scheme

1. User looks for information on brain
2. Drill down the entailment hierarchy for items that are produced by the brain
3. User focuses on chemicals that are produced by the brain

Conclusions – Exploration System

- A novel powerful exploration paradigm
  - Organize extracted statements by entailment hierarchies
  - Enables fast acquisition of knowledge by users
- Current Work
  - Learning a general entailment graph for the health-care domain
    - Prototype available
  - Investigating appropriate evaluation methods

Overall Takeout

- Time to develop textual inference
  - Generic, applied, principled
- Proposal:
  - Base core inference on language-based representations
    - Parse trees, co-references, lexical contexts, …
  - Extra-linguistic/logical inference for specific suitable cases
- Breakthrough potential: current and future applications
- It’s a long-term endeavor, but it’s here!

http://www.cs.biu.ac.il/~nlp/downloads/biutee