NASA OSMA SAS 01 Understanding Simulators Using TreeQuery

- Slides: 19

NASA OSMA SAS '01

Understanding Simulators Using Tree-Query Language

New idea: Treatment learners

- Tim Menzies (1), Ying Hu (1), James D. Kiper (2)
- (1) SE, ECE, UBC, Canada
- (2) Com Sci, Miami, Oxford, Ohio, USA
- tim@menzies.com, huying_ca@yahoo.com, kiperjd@muohio.edu

Sponsored by

2021-10-15

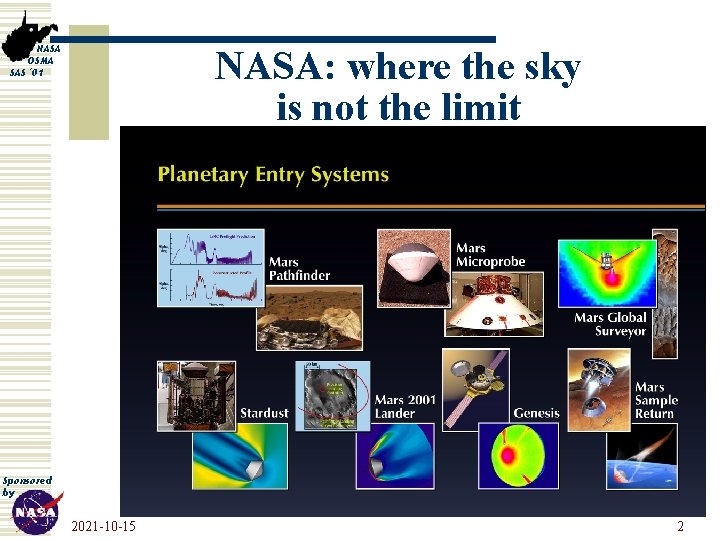

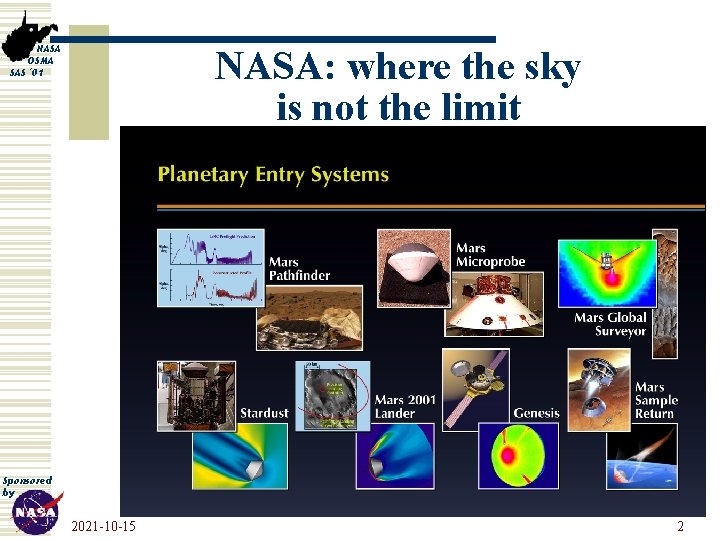

NASA: where the sky is not the limit

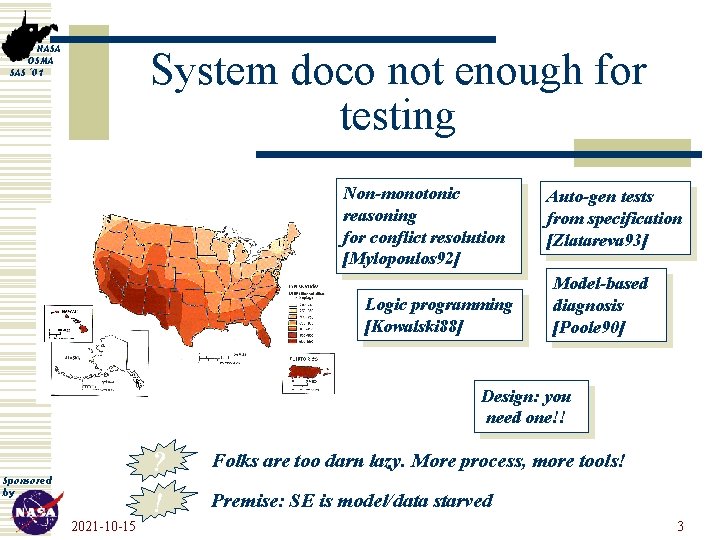

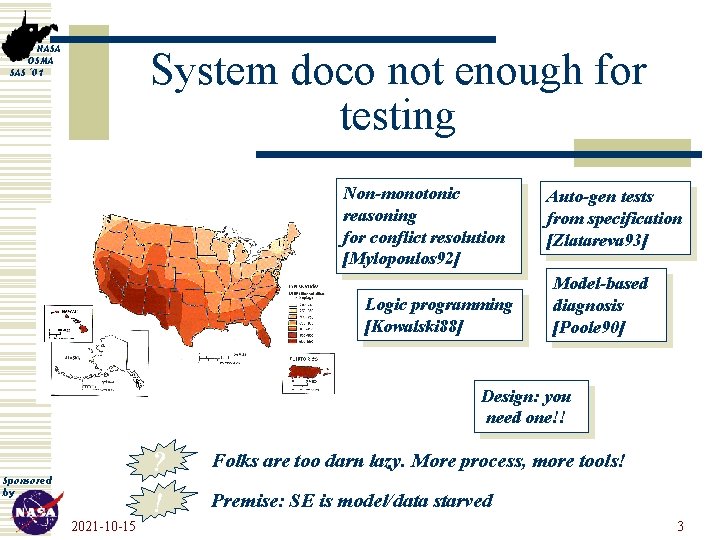

System doco not enough for testing

- Design: you need one!
- Non-monotonic reasoning for conflict resolution [Mylopoulos 92]
- Logic programming [Kowalski 88]
- Auto-gen tests from specification [Zlatareva 93]
- Model-based diagnosis [Poole 90]
- Folks are too darn lazy. More process, more tools!
- Premise: SE is model/data starved

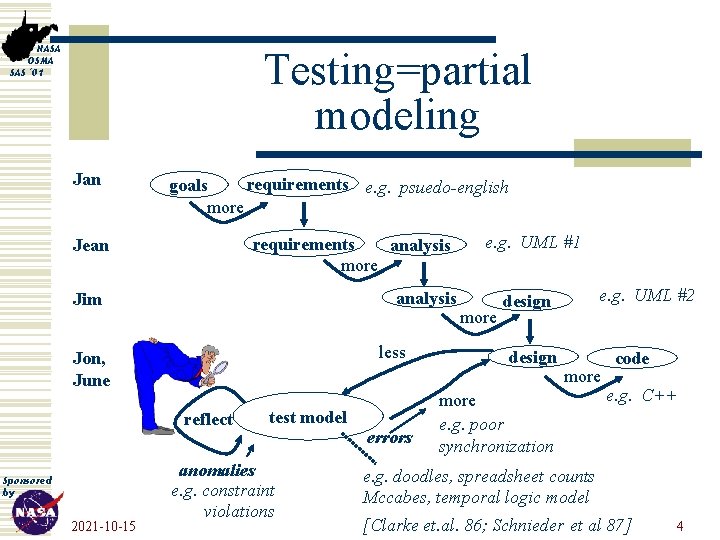

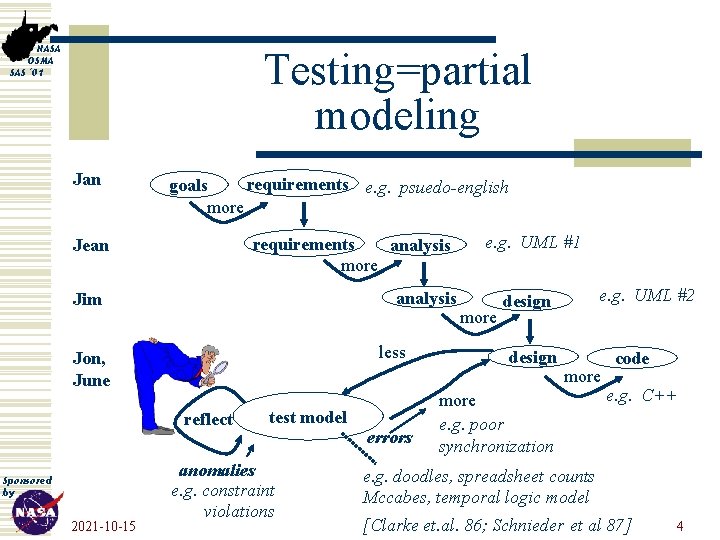

Testing = partial modeling

Figure labels:

- requirements (e.g. pseudo-English goals)
- analysis (e.g. UML #1)
- design (e.g. UML #2)
- code (e.g. C++)
- test; reflect
- model anomalies (e.g. constraint violations)
- errors (e.g. poor synchronization)
- less ... more: e.g. doodles, spreadsheet; counts, McCabe's, temporal logic model [Clarke et al. 86; Schnieder et al. 87]
- Jan, Jean, Jim, Jon, June

Reflect faster (reflect less)

- Ask fewer questions
- Propose least changes
- Insert fewest monitors
- Method:
  - Knowledge farming
  - Treatment learning

Knowledge Farming

- If data is plentiful:
  - Then data mining (e.g. Khoshgoftaar & Allen)
- Else, farm it:
  1. Plant a seed: quickly build (or borrow) a model
  2. Grow the data: Monte Carlo (ish) simulations
  3. Harvest: summarize
- Happy surprise:
  - When we explore a large space of "maybes"…
  - …stable conclusions exist.
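The plant/grow/harvest loop on this slide can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the toy `seed_model`, the attribute names in `RANGES`, and the choice to summarize by mean score per range are all hypothetical and do not come from the slides.

```python
import random
from collections import defaultdict

# Hypothetical input ranges; names and values are illustrative only.
RANGES = {
    "effort": ["low", "medium", "high"],
    "reviews": ["none", "some", "lots"],
}

def seed_model(inputs, rng):
    """Plant a seed: a toy stand-in for a quickly built (or borrowed) model."""
    score = {"none": 0, "some": 1, "lots": 3}[inputs["reviews"]]
    score += {"low": 0, "medium": 1, "high": 1}[inputs["effort"]]
    return score + rng.random()  # noise stands in for internal "maybes"

def grow(n_runs, seed=1):
    """Grow the data: Monte Carlo (ish) runs over randomly chosen inputs."""
    rng = random.Random(seed)
    runs = []
    for _ in range(n_runs):
        inputs = {name: rng.choice(values) for name, values in RANGES.items()}
        runs.append((inputs, seed_model(inputs, rng)))
    return runs

def harvest(runs):
    """Harvest: summarize as the mean score seen for each attribute=range pair."""
    totals = defaultdict(lambda: [0.0, 0])
    for inputs, score in runs:
        for pair in inputs.items():
            totals[pair][0] += score
            totals[pair][1] += 1
    return {pair: total / count for pair, (total, count) in totals.items()}

summary = harvest(grow(2000))
best = max(summary, key=summary.get)
print(best, round(summary[best], 2))
```

In this toy setting the same range wins across different random seeds, which is the kind of stable conclusion the slide refers to.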

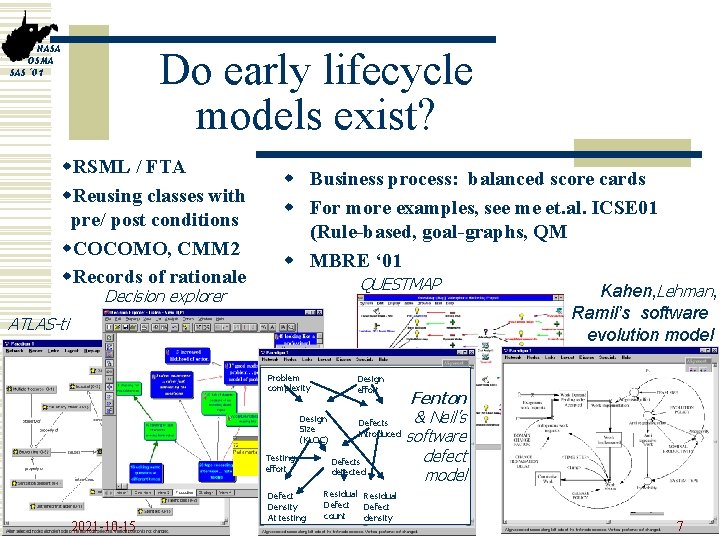

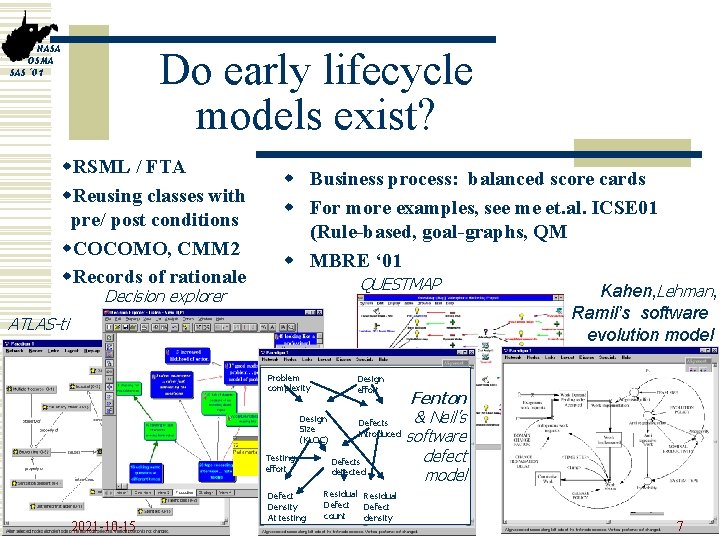

Do early lifecycle models exist?

- RSML / FTA
- Reusing classes with pre/post conditions
- COCOMO, CMM 2
- Records of rationale
- Business process: balanced score cards
- For more examples, see me et al. ICSE 01 (Rule-based, goal-graphs, QM)
- MBRE '01

Figure labels:

- QUESTMAP, Decision explorer, ATLAS-ti
- Kahen, Lehman, Ramil's software evolution model
- Fenton & Neil's software defect model: Problem complexity, Design effort, Testing effort, Design size (KLOC), Defects introduced, Defects detected, Defect density at testing, Residual defect count, density

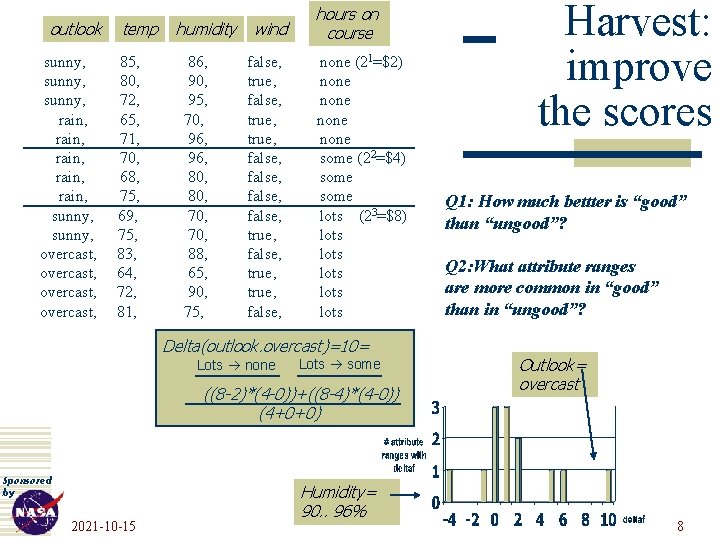

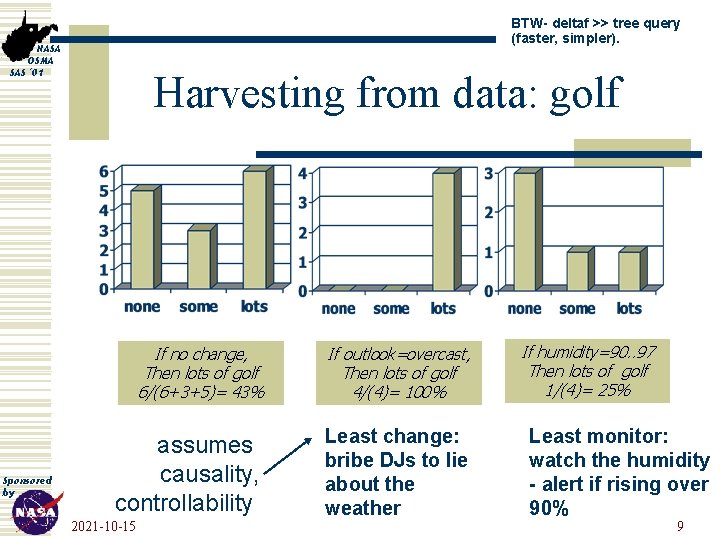

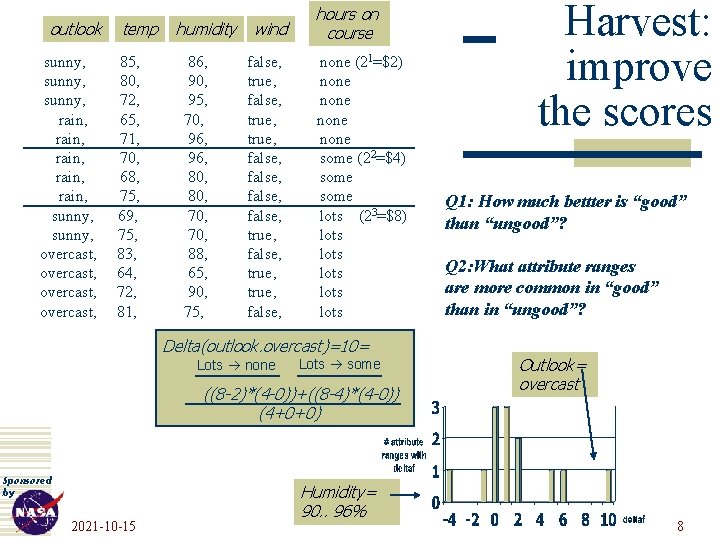

Harvest: improve the scores

- Q1: How much better is "good" than "ungood"?
- Q2: What attribute ranges are more common in "good" than in "ungood"?

Golf data (values as they survive on the slide):

- outlook: sunny, rain, rain, sunny, overcast,
- temp: 85, 80, 72, 65, 71, 70, 68, 75, 69, 75, 83, 64, 72, 81,
- humidity: 86, 90, 95, 70, 96, 80, 70, 88, 65, 90, 75,
- wind: false, true, false, true, false,
- hours on course: none (2^1 = \$2), some (2^2 = \$4), lots (2^3 = \$8)

Comparing lots with none and lots with some:

$$\Delta(\text{outlook}=\text{overcast}) = \frac{(8-2)(4-0) + (8-4)(4-0)}{4+0+0} = 10$$

Ranges highlighted: Outlook = overcast; Humidity = 90..96%
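The Delta arithmetic on this slide can be checked with a short Python sketch. The class scores (2, 4, 8) and the overcast frequencies (4, 0, 0) are taken from the slide. The function name `delta` and the extension to every pair of classes are assumptions: the slide only shows the lots/none and lots/some terms, and the some/none term is zero here.

```python
from itertools import combinations

# Class scores from the slide: none = 2^1, some = 2^2, lots = 2^3.
SCORES = {"none": 2, "some": 4, "lots": 8}

def delta(freq):
    """Score-weighted frequency difference, normalized by total frequency.

    `freq` maps each class to how often the attribute range occurs in it.
    """
    total = sum(freq.values())
    weighted = 0
    for a, b in combinations(SCORES, 2):
        # Order each pair so the higher-scoring class comes first.
        better, worse = (a, b) if SCORES[a] > SCORES[b] else (b, a)
        weighted += (SCORES[better] - SCORES[worse]) * (freq[better] - freq[worse])
    return weighted / total

overcast = {"lots": 4, "some": 0, "none": 0}
print(delta(overcast))  # 10.0
```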

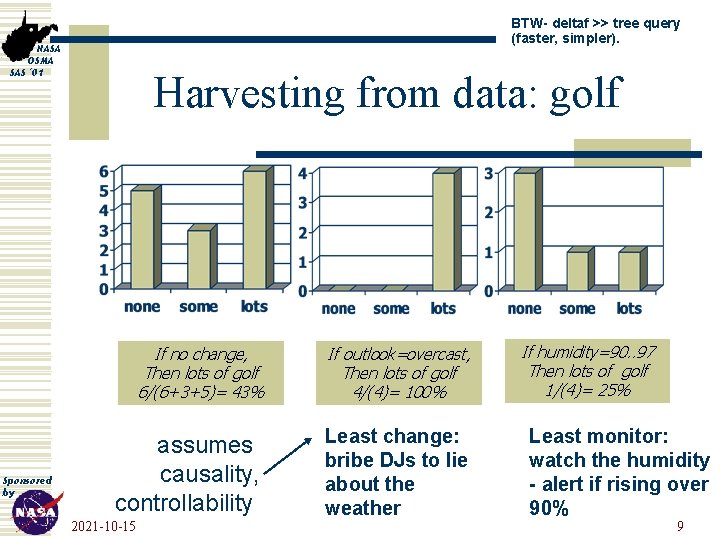

Harvesting from data: golf

- If no change, then lots of golf: 6/(6+3+5) = 43%
- If outlook=overcast, then lots of golf: 4/(4) = 100%
  - Least change: bribe DJs to lie about the weather
  - Assumes causality, controllability
- If humidity=90..97, then lots of golf: 1/(4) = 25%
  - Least monitor: watch the humidity, alert if rising over 90%
- BTW: deltaf >> tree query (faster, simpler).
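The three percentages on this slide can be reproduced and ranked with a small Python sketch. The counts are the ones quoted on the slide. The names `TREATMENTS` and `lots_share` are hypothetical, and ranking by the share of "lots" is a simplification of what a treatment learner would do.

```python
# (lots, total) counts as quoted on the slide.
TREATMENTS = {
    "no change": (6, 6 + 3 + 5),
    "outlook=overcast": (4, 4),
    "humidity=90..97": (1, 4),
}

def lots_share(lots, total):
    """Fraction of examples with lots of golf under a treatment."""
    return lots / total

ranked = sorted(TREATMENTS, key=lambda t: lots_share(*TREATMENTS[t]), reverse=True)
for name in ranked:
    print(f"{name}: {lots_share(*TREATMENTS[name]):.0%}")

action = ranked[0]    # best candidate for a change
monitor = ranked[-1]  # worst case, worth watching for
```

Here the top-ranked treatment (overcast) suggests the action and the bottom-ranked one (high humidity) suggests the monitor.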

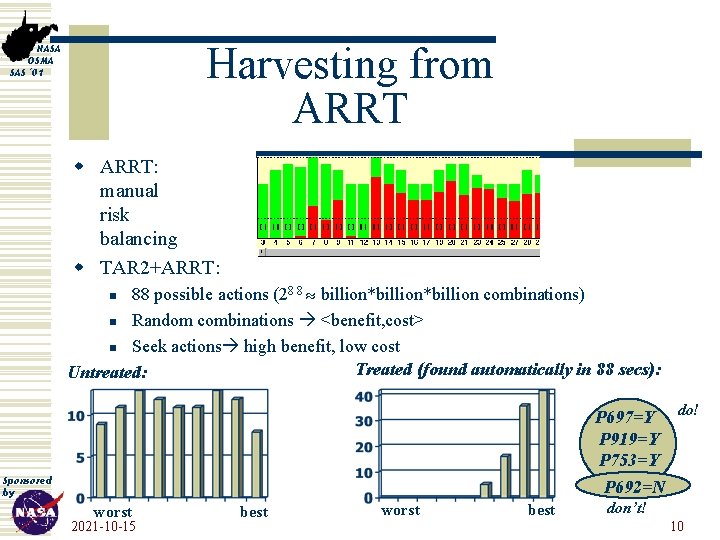

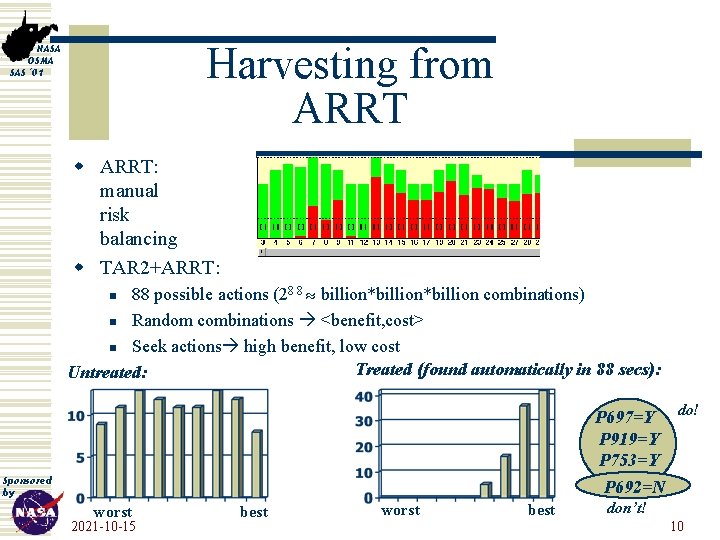

Harvesting from ARRT

- ARRT: manual risk balancing
- TAR2+ARRT: 88 possible actions (2^88, billion*billion, combinations)
  - Random combinations <benefit, cost>
  - Seek actions with high benefit, low cost
- Treated (found automatically in 88 secs):
  - Do: P697=Y, P919=Y, P753=Y
  - Don't: P692=N

Figure: untreated vs. treated results, each plotted on a worst-to-best axis.
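A rough sketch of the random-combination search, assuming the 88 actions are independent yes/no choices. The per-action benefit and cost numbers below are random placeholders, and the additive scoring is an assumption made for illustration. A real ARRT model would supply its own benefit and cost calculation.

```python
import random

N_ACTIONS = 88  # from the slide
print(2 ** N_ACTIONS)  # size of the full space of yes/no combinations

rng = random.Random(0)
# Placeholder numbers only; not ARRT data.
benefit = [rng.random() for _ in range(N_ACTIONS)]
cost = [rng.random() for _ in range(N_ACTIONS)]

def random_combination():
    """Switch each action on or off with equal probability."""
    return [rng.random() < 0.5 for _ in range(N_ACTIONS)]

def evaluate(combo):
    """Return <benefit, cost> for one combination (additive, by assumption)."""
    b = sum(v for v, on in zip(benefit, combo) if on)
    c = sum(v for v, on in zip(cost, combo) if on)
    return b, c

samples = [evaluate(random_combination()) for _ in range(1000)]
# Seek high benefit, low cost.
best = max(samples, key=lambda bc: bc[0] - bc[1])
```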

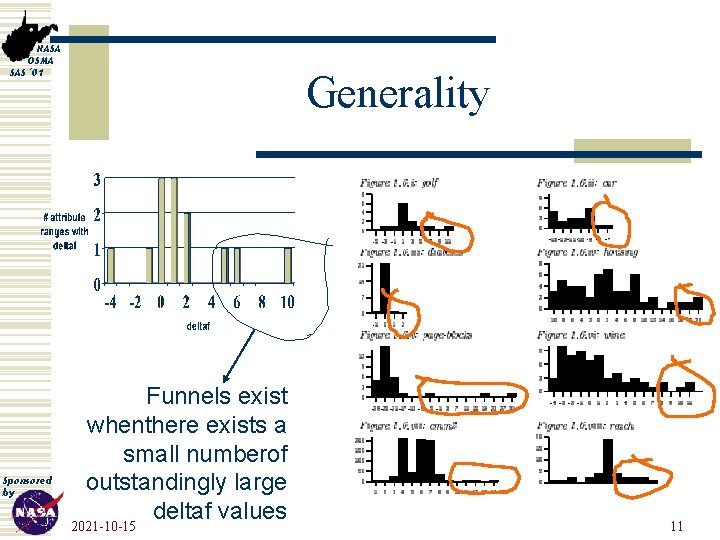

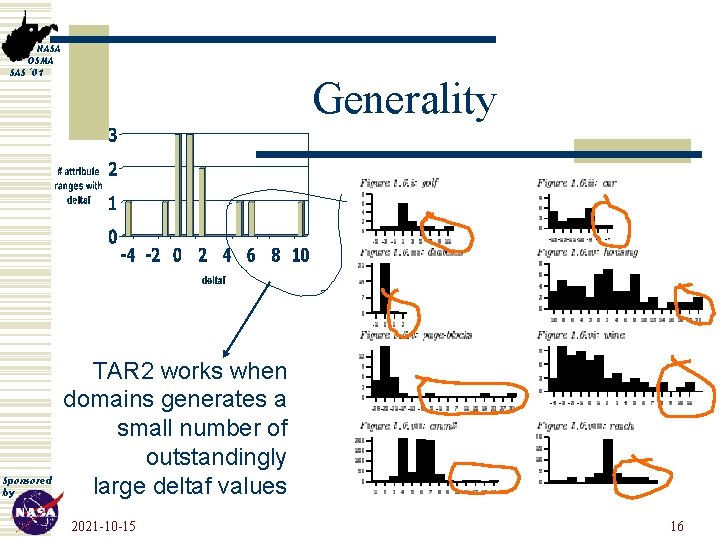

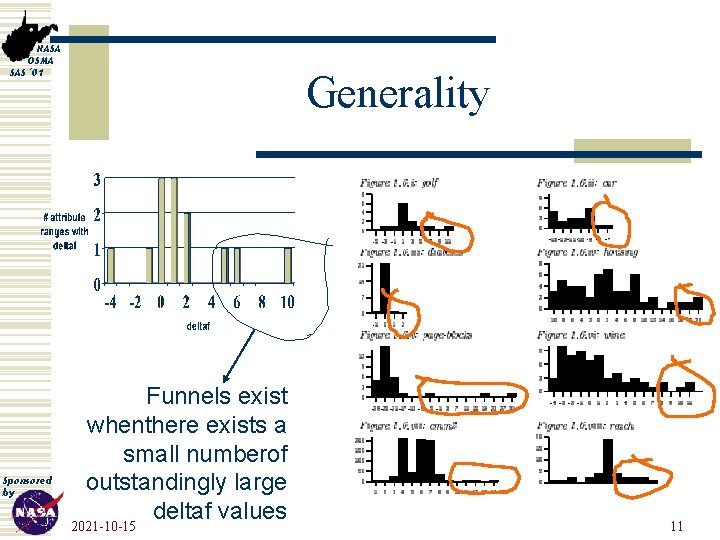

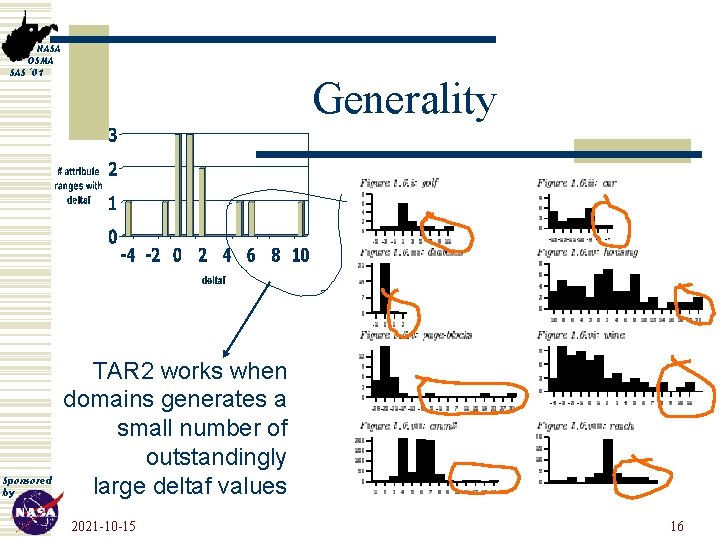

Generality

- Funnels exist when there exists a small number of outstandingly large deltaf values

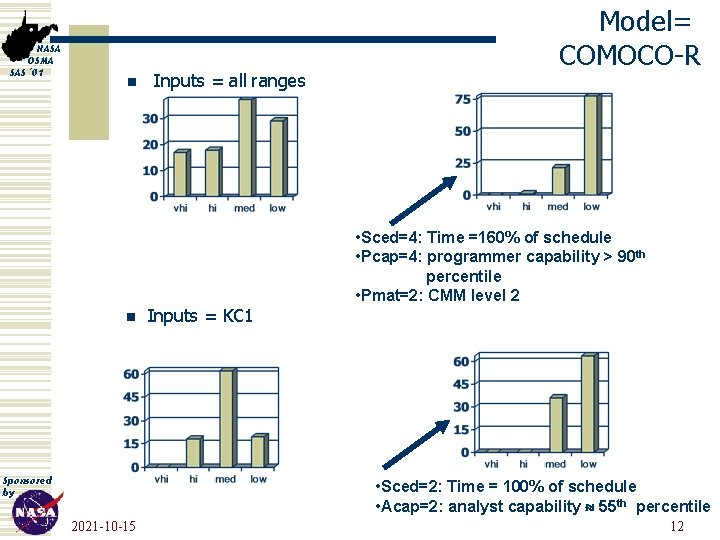

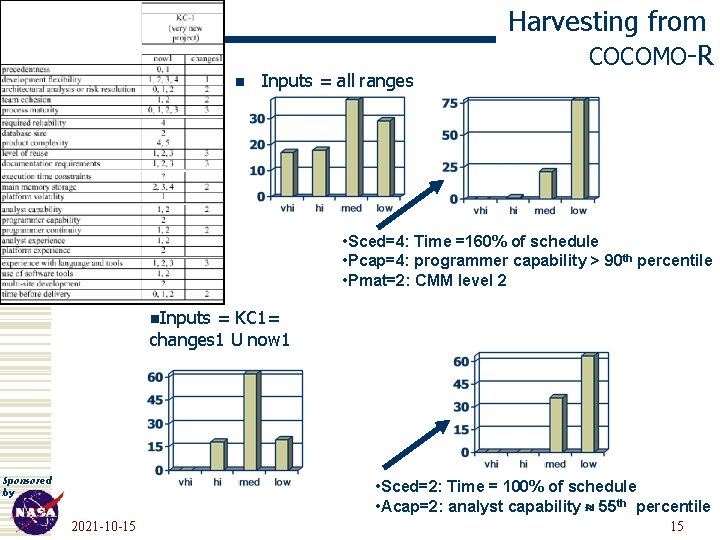

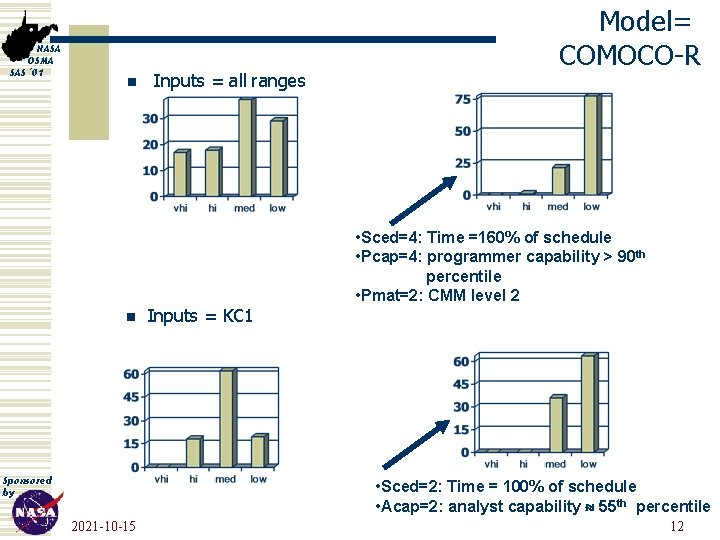

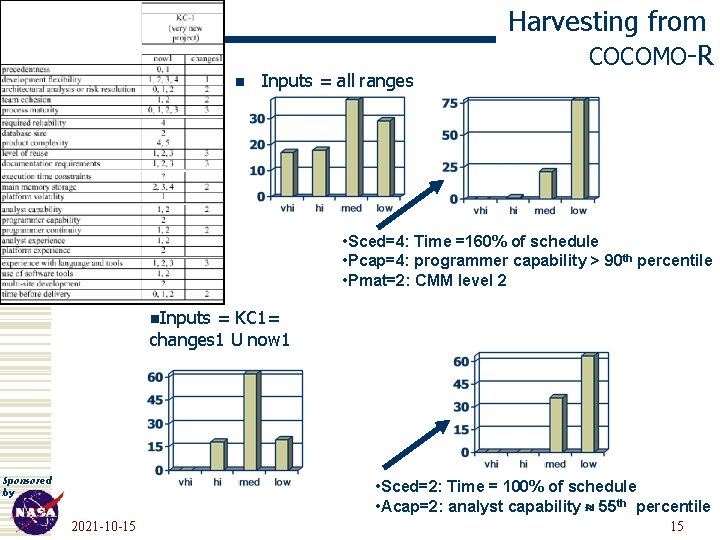

Model = COCOMO-R

- Inputs = all ranges
  - Sced=4: Time = 160% of schedule
  - Pcap=4: programmer capability > 90th percentile
  - Pmat=2: CMM level 2
- Inputs = KC1
  - Sced=2: Time = 100% of schedule
  - Acap=2: analyst capability 55th percentile

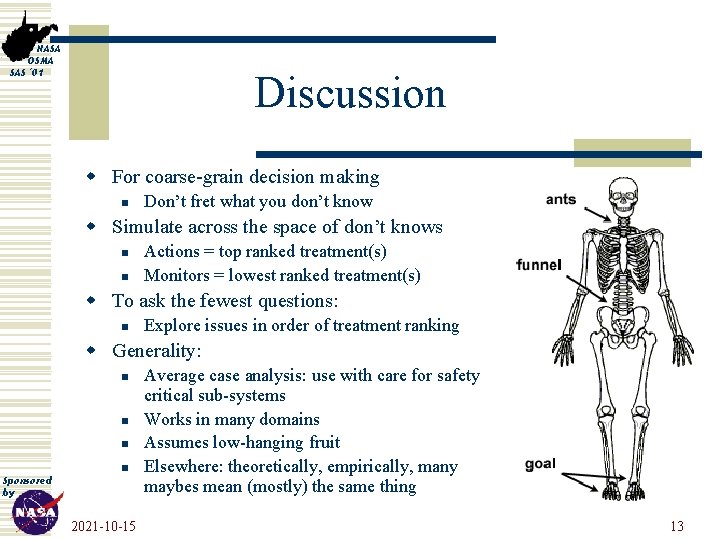

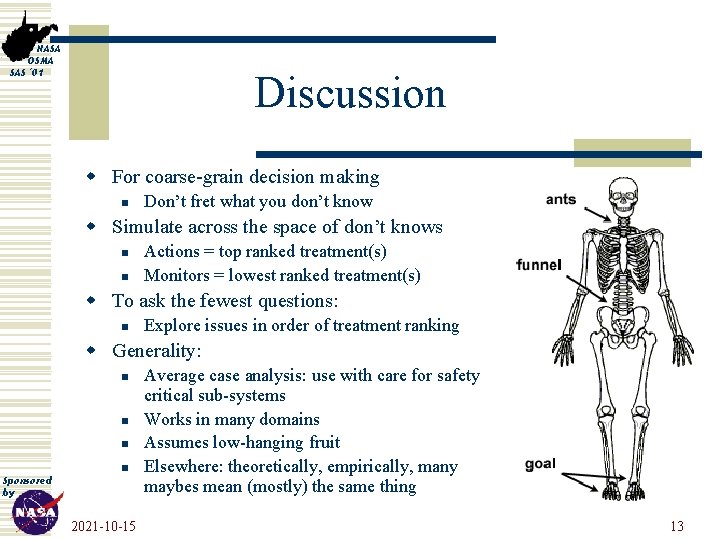

Discussion

- For coarse-grain decision making
  - Don't fret what you don't know
- Simulate across the space of don't knows
  - Actions = top ranked treatment(s)
  - Monitors = lowest ranked treatment(s)
- To ask the fewest questions:
  - Explore issues in order of treatment ranking
- Generality:
  - Average case analysis: use with care for safety critical sub-systems
  - Works in many domains
  - Assumes low-hanging fruit
  - Elsewhere: theoretically, empirically, many maybes mean (mostly) the same thing

Questions or comments? (Some random other slides follow)

Harvesting from COCOMO-R

- Inputs = all ranges
  - Sced=4: Time = 160% of schedule
  - Pcap=4: programmer capability > 90th percentile
  - Pmat=2: CMM level 2
- Inputs = KC1 = changes1 U now1
  - Sced=2: Time = 100% of schedule
  - Acap=2: analyst capability 55th percentile

Generality

- TAR2 works when a domain generates a small number of outstandingly large deltaf values

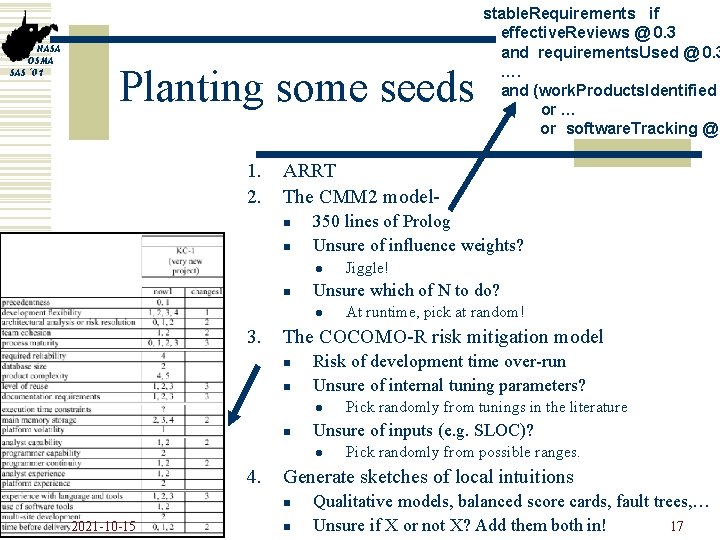

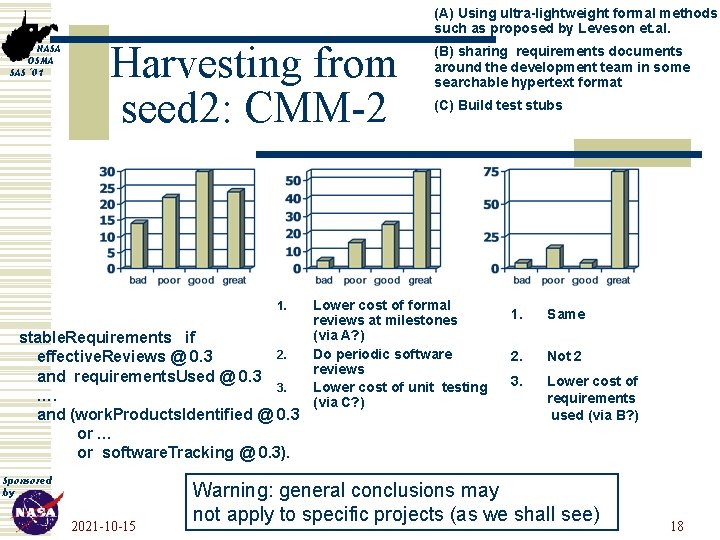

Planting some seeds

1. ARRT
2. The CMM 2 model
   - stableRequirements if effectiveReviews @ 0.3 and requirementsUsed @ 0.3 … and (workProductsIdentified or … or softwareTracking @
   - Unsure of influence weights? Jiggle!
   - Unsure which of N to do? At runtime, pick at random!
3. The COCOMO-R risk mitigation model
   - 350 lines of Prolog
   - Risk of development time over-run
   - Unsure of internal tuning parameters? Pick randomly from tunings in the literature
   - Unsure of inputs (e.g. SLOC)? Pick randomly from possible ranges.
4. Generate sketches of local intuitions
   - Qualitative models, balanced score cards, fault trees, …
   - Unsure if X or not X? Add them both in!
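The "unsure? pick at random" tactics on this slide might look like the following in Python. This is a minimal sketch: the helper names, the spread of 0.1, the SLOC range, and the two tuning dictionaries are all hypothetical placeholders, not values from the CMM 2 or COCOMO-R models.

```python
import random

rng = random.Random(42)

def jiggle(weight, spread=0.1):
    """Unsure of an influence weight? Perturb it, clamped to [0, 1]."""
    return min(1.0, max(0.0, weight + rng.uniform(-spread, spread)))

def pick_from_range(low, high):
    """Unsure of an input (e.g. SLOC)? Pick randomly from its possible range."""
    return rng.uniform(low, high)

def pick_tuning(tunings):
    """Unsure of internal tuning parameters? Pick one candidate tuning."""
    return rng.choice(tunings)

def pick_one_of(options):
    """Unsure which of N to do? At runtime, pick at random."""
    return rng.choice(options)

# Illustrative values only.
weight = jiggle(0.3)
sloc = pick_from_range(10_000, 50_000)
tuning = pick_tuning([{"a": 2.4, "b": 1.05}, {"a": 3.0, "b": 1.12}])
x = pick_one_of([True, False])  # X or not X: both stay in play across runs
```

Each simulation run would draw fresh values this way, so repeated runs cover the space of "maybes".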

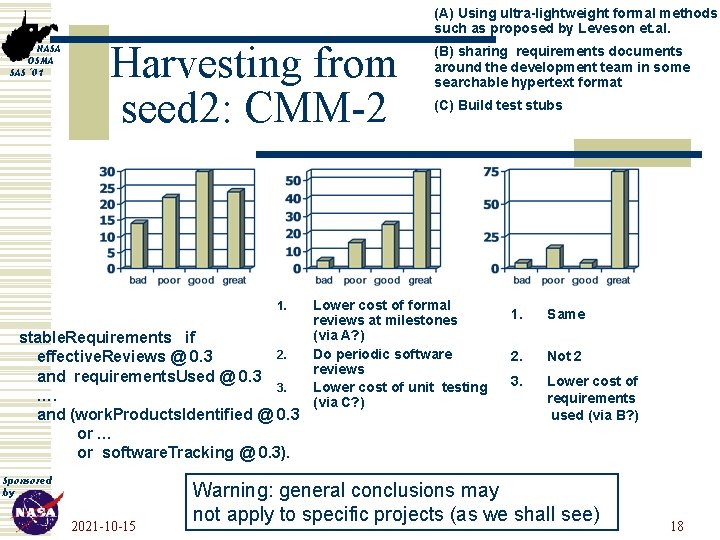

Harvesting from seed 2: CMM-2

1. stableRequirements if
2. effectiveReviews @ 0.3 and requirementsUsed @ 0.3
3. … and (workProductsIdentified @ 0.3 or … or softwareTracking @ 0.3).

Treatments:

- Lower cost of formal reviews at milestones (via A?)
- Do periodic software reviews
- Lower cost of unit testing (via C?)
- 1. Same 2. Not 2 3. Lower cost of requirements used (via B?)

How:

- (A) Using ultra-lightweight formal methods such as proposed by Leveson et al.
- (B) Sharing requirements documents around the development team in some searchable hypertext format
- (C) Build test stubs

Warning: general conclusions may not apply to specific projects (as we shall see)

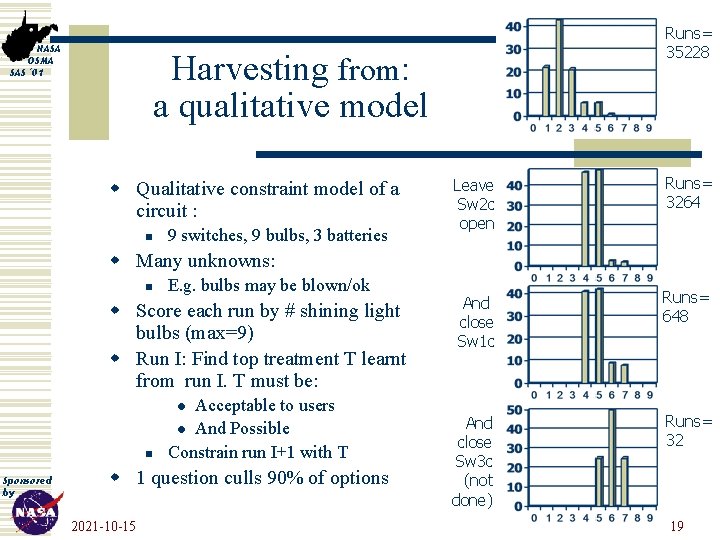

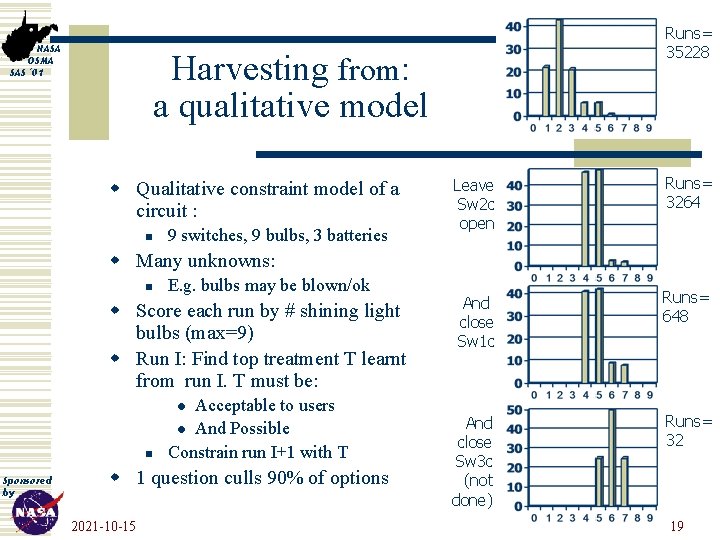

Harvesting from: a qualitative model

- Qualitative constraint model of a circuit:
  - 9 switches, 9 bulbs, 3 batteries
- Many unknowns:
  - E.g. bulbs may be blown/ok
- Score each run by # shining light bulbs (max=9)
- Run I: find top treatment T learnt from run I. T must be:
  - Acceptable to users
  - And possible
- Constrain run I+1 with T
- Results:
  - Runs = 35228
  - Leave Sw2c open: runs = 3264
  - And close Sw1c: runs = 648
  - And close Sw3c (not done): runs = 32
- 1 question culls 90% of options
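The culling claim can be checked against the run counts on this slide. The sketch below only does that arithmetic: the counts and constraint names are from the slide, while `STEPS` and `culled` are hypothetical names.

```python
# Run counts quoted on the slide, in the order the constraints were applied.
STEPS = [
    ("no constraints", 35228),
    ("leave Sw2c open", 3264),
    ("and close Sw1c", 648),
    ("and close Sw3c", 32),
]

def culled(before, after):
    """Fraction of runs removed by adding one more constraint."""
    return 1 - after / before

for (_, before), (name, after) in zip(STEPS, STEPS[1:]):
    print(f"{name}: {after} runs left, {culled(before, after):.0%} culled")
```

The first constraint alone removes roughly nine tenths of the runs, in line with "1 question culls 90% of options".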