Name Entity Recognition Using Language Models, Zhong-Hua Wang

- Slides: 18

Name Entity Recognition Using Language Models
Zhong-Hua Wang, IBM T. J. Watson Research Center, Yorktown Heights, NY 10598, USA
Presented by Yi-Ting

Outline
- Introduction
- Name entity recognition using language models
  - The algorithm for name entity recognition
  - A bootstrap technique
  - Uniform language model for the general language model
- Experiments
- Conclusion

Introduction
- Problems with most approaches:
  - Collecting and manually annotating a sufficient amount of sentence data is an expensive process.
  - A good system requires the training data to be annotated consistently.
  - The collected text data is usually domain-dependent, and so is the trained model.
- This paper proposes a new technique to address these problems.

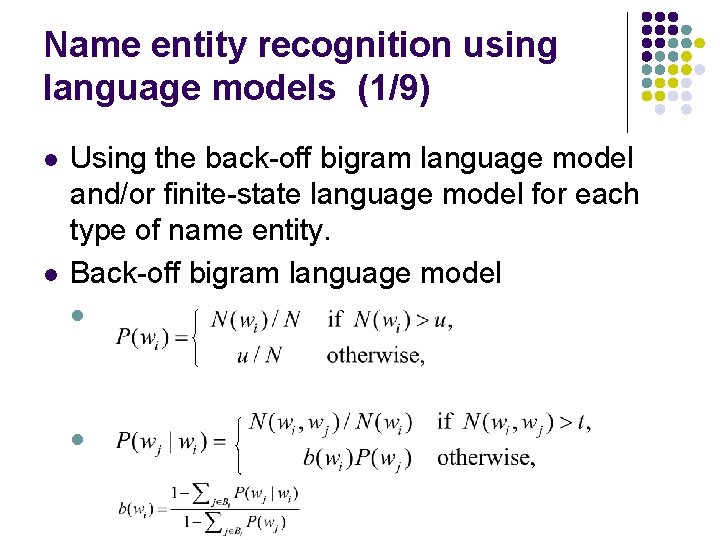

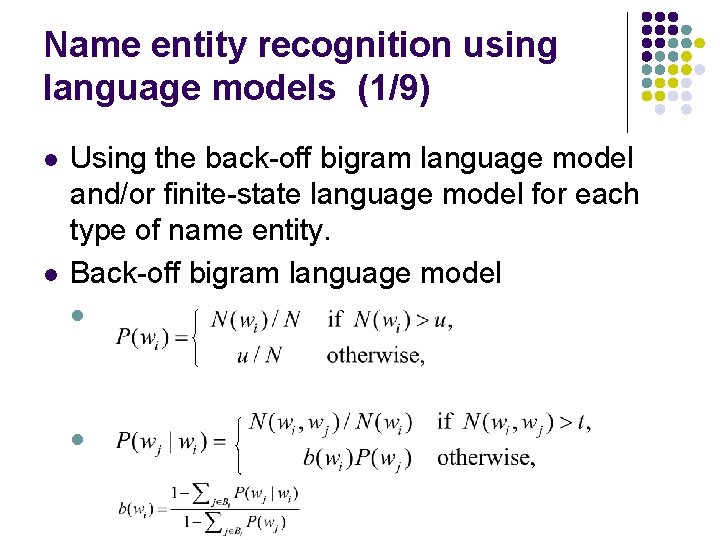

Name entity recognition using language models (1/9)
- A back-off bigram language model and/or a finite-state language model is used for each type of name entity.
- Back-off bigram language model
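The back-off formula from this slide did not survive in the text, so the following is only a minimal sketch of one common variant: a bigram model with absolute discounting that backs off to unigram probabilities. The class name `BackoffBigram`, the `discount` value, and the toy corpus are assumptions for illustration, not the estimator used in the paper.

```python
from collections import Counter


class BackoffBigram:
    """Simplified back-off bigram (absolute discounting; an assumed scheme)."""

    def __init__(self, sentences, discount=0.5):
        self.discount = discount
        self.unigrams = Counter()
        self.bigrams = Counter()
        for words in sentences:
            self.unigrams.update(words)
            self.bigrams.update(zip(words, words[1:]))
        self.total = sum(self.unigrams.values())
        # How often each word occurs as a bigram history, and what follows it.
        self.history = Counter()
        self.followers = {}
        for (prev, word), count in self.bigrams.items():
            self.history[prev] += count
            self.followers.setdefault(prev, set()).add(word)

    def unigram(self, word):
        return self.unigrams[word] / self.total

    def prob(self, prev, word):
        seen = self.followers.get(prev)
        if not seen:
            # Unknown history: fall back to the unigram estimate.
            return self.unigram(word)
        if word in seen:
            # Seen bigram: discounted relative frequency.
            return (self.bigrams[(prev, word)] - self.discount) / self.history[prev]
        # Unseen bigram: share the discounted mass in proportion to unigrams.
        freed = self.discount * len(seen) / self.history[prev]
        unseen_mass = 1.0 - sum(self.unigram(w) for w in seen)
        if unseen_mass <= 0.0:
            return 0.0
        return freed * self.unigram(word) / unseen_mass


# Hypothetical toy corpus, not data from the paper.
corpus = [
    ["buy", "fidelity", "fund"],
    ["sell", "fidelity", "fund"],
    ["buy", "vanguard", "fund"],
]
lm = BackoffBigram(corpus)
```

With this scheme the probabilities following a seen history such as "buy" still sum to one over the vocabulary, because the mass removed from seen bigrams is exactly what the unseen ones receive.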

Name entity recognition using language models (2/9)
- Finite-state language model
  - A finite-state automaton is defined by a sextuple (Q, V, I, T, δ, Z), where Q is a finite set of states, V is the vocabulary, I is the initial state, T is the terminal state, δ is the transition function, and Z is the probability function.
  - A word sequence corresponding to a complete path represents a valid utterance defined by this finite-state language model.
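To make the sextuple concrete, here is a minimal sketch of such an automaton as a data structure. It assumes a deterministic automaton (at most one arc per state and word), stores the transition function in a dictionary called `delta`, and uses a made-up AMOUNT grammar; none of these details come from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class FiniteStateLM:
    states: set        # Q: finite set of states
    vocab: set         # V: vocabulary
    initial: str       # I: initial state
    terminal: str      # T: terminal state
    delta: dict = field(default_factory=dict)  # (state, word) -> next state
    z: dict = field(default_factory=dict)      # (state, word) -> probability

    def add_arc(self, src, word, dst, prob):
        self.delta[(src, word)] = dst
        self.z[(src, word)] = prob

    def score(self, words):
        """Probability of `words` if they spell a complete path I -> T, else 0.0."""
        state, prob = self.initial, 1.0
        for word in words:
            if (state, word) not in self.delta:
                return 0.0
            prob *= self.z[(state, word)]
            state = self.delta[(state, word)]
        return prob if state == self.terminal else 0.0


# Hypothetical AMOUNT grammar: ("five" | "ten") "dollars".
amount = FiniteStateLM(
    states={"I", "q1", "T"},
    vocab={"five", "ten", "dollars"},
    initial="I",
    terminal="T",
)
amount.add_arc("I", "five", "q1", 0.5)
amount.add_arc("I", "ten", "q1", 0.5)
amount.add_arc("q1", "dollars", "T", 1.0)
```

Here `amount.score(["five", "dollars"])` follows a complete path and returns 0.5, while `amount.score(["five"])` stops short of the terminal state and returns 0.0.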

Name entity recognition using language models (3/9)
- There are K name entities of interest, each represented by a bigram or a finite-state language model.
- There is also a general language model.
- Together they form an ergodic Markov chain with (K+1) states.
- Assume that we have a priori knowledge of each name entity of interest.
- The central issue is how to estimate the general language model.

Name entity recognition using language models (4/9)
- The utterance to be decoded is represented by a sequence of words $W = w_1 w_2 \dots w_n$.
- The state aligned with $w_t$ is denoted by $s_t$.
- The name entities are replaced by the corresponding labels.

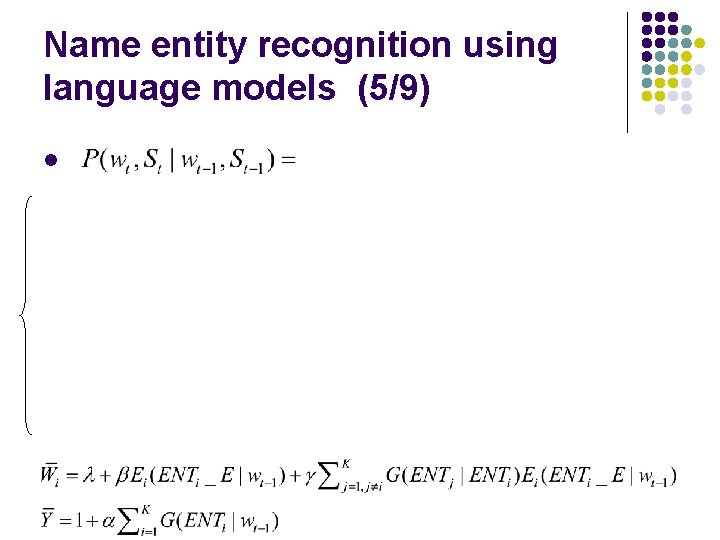

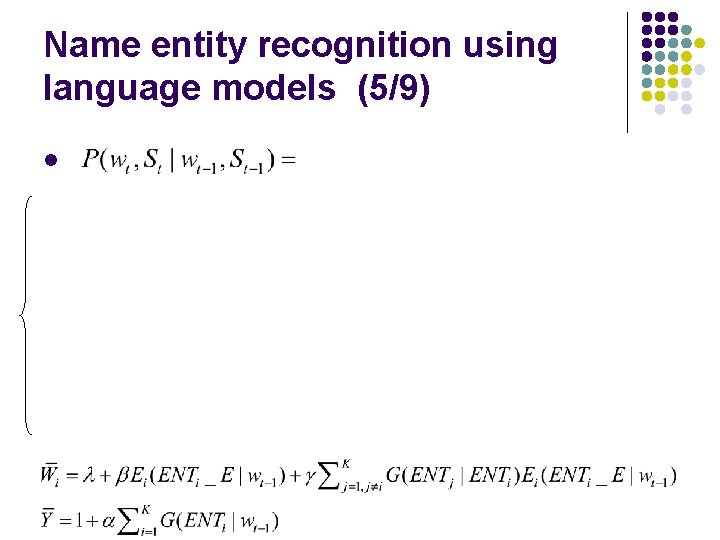
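The label replacement step can be sketched as follows, assuming each word has already been aligned with a state. The function name `to_pattern`, the state name `GENERAL`, and the example utterance are illustrative assumptions.

```python
def to_pattern(words, states, general="GENERAL"):
    """Collapse each run of words aligned with a name entity state into its label."""
    pattern = []
    previous = general
    for word, state in zip(words, states):
        if state == general:
            pattern.append(word)      # ordinary word: keep it
        elif state != previous:
            pattern.append(state)     # first word of an entity: emit the label once
        previous = state
    return pattern


# Hypothetical utterance and alignment.
words = "transfer five hundred dollars to fidelity magellan".split()
states = ["GENERAL", "AMOUNT", "AMOUNT", "AMOUNT", "GENERAL", "FUND", "FUND"]
pattern = to_pattern(words, states)
# pattern == ["transfer", "AMOUNT", "to", "FUND"]
```

One limitation of this simple version: two adjacent entities of the same type would be merged into a single label, since it only tracks the state name.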

Name entity recognition using language models (5/9)

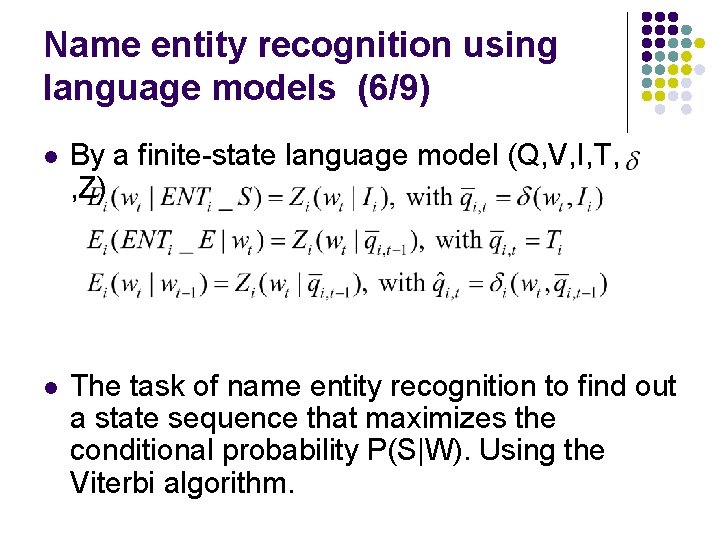

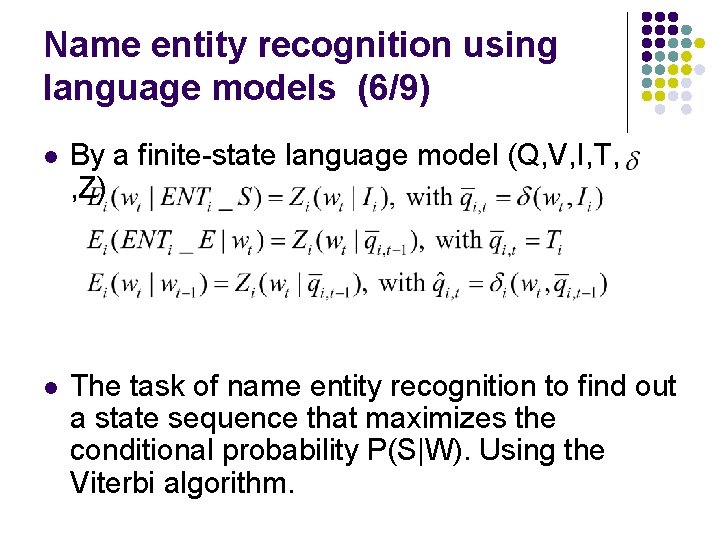

Name entity recognition using language models (6/9)
- For a name entity represented by a finite-state language model (Q, V, I, T, δ, Z)
- The task of name entity recognition is to find the state sequence S that maximizes the conditional probability P(S|W), using the Viterbi algorithm.

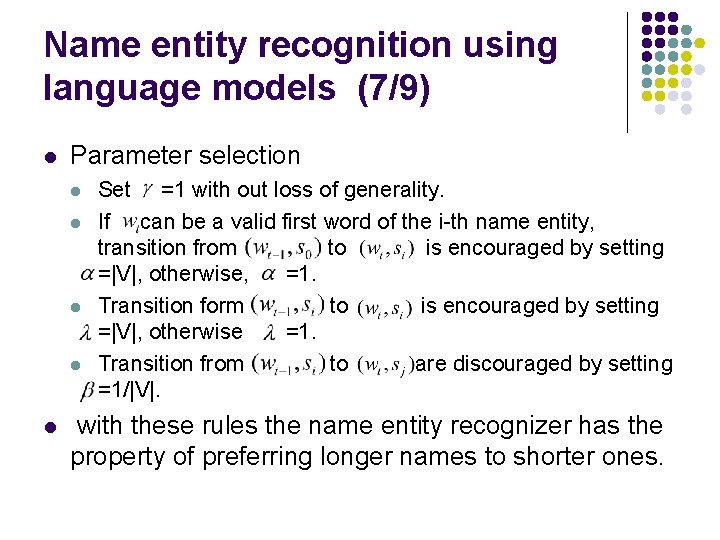

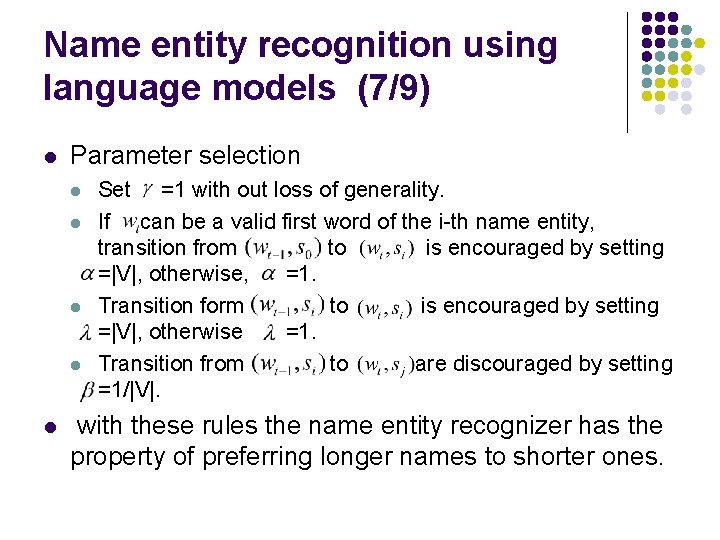
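A minimal sketch of Viterbi decoding over a small set of states is given below. The callback `step_logprob` is a hypothetical stand-in for the model's combined transition and word probabilities, and `toy_logprob` with its numbers is invented purely to make the example runnable; neither reflects the paper's actual parameters.

```python
import math


def viterbi(words, states, step_logprob, start="<s>"):
    """Return the state sequence with the highest summed step log-score.

    step_logprob(prev_state, prev_word, state, word) is a hypothetical
    callback; prev_state is None for the first word.
    """
    if not words:
        return []
    best = {s: step_logprob(None, start, s, words[0]) for s in states}
    backpointers = []
    for t in range(1, len(words)):
        scores, pointers = {}, {}
        for s in states:
            def total(p):
                return best[p] + step_logprob(p, words[t - 1], s, words[t])
            prev = max(states, key=total)
            scores[s] = total(prev)
            pointers[s] = prev
        best = scores
        backpointers.append(pointers)
    # Trace back from the best final state.
    state = max(states, key=lambda s: best[s])
    path = [state]
    for pointers in reversed(backpointers):
        state = pointers[state]
        path.append(state)
    return path[::-1]


FUND_WORDS = {"fidelity", "magellan"}  # hypothetical fund vocabulary


def toy_logprob(prev_state, prev_word, state, word):
    # Invented scores: fund words fit the FUND state, other words fit GENERAL.
    if state == "FUND":
        emit = 0.45 if word in FUND_WORDS else 0.01
    else:
        emit = 0.01 if word in FUND_WORDS else 0.2
    stay = 0.7 if prev_state in (None, state) else 0.3
    return math.log(emit * stay)


decoded = viterbi("buy fidelity magellan now".split(), ["GENERAL", "FUND"], toy_logprob)
# decoded == ["GENERAL", "FUND", "FUND", "GENERAL"]
```

Working in log space avoids numerical underflow on long utterances, which is why the scores are summed rather than multiplied.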

Name entity recognition using language models (7/9)
- Parameter selection
  - Set =1 without loss of generality.
  - If can be a valid first word of the i-th name entity, the transition from to is encouraged by setting =|V|; otherwise, =1.
  - The transition from to is encouraged by setting =|V|; otherwise, =1.
  - The transition from to is discouraged by setting =1/|V|.
  - With these rules, the name entity recognizer prefers longer names to shorter ones.

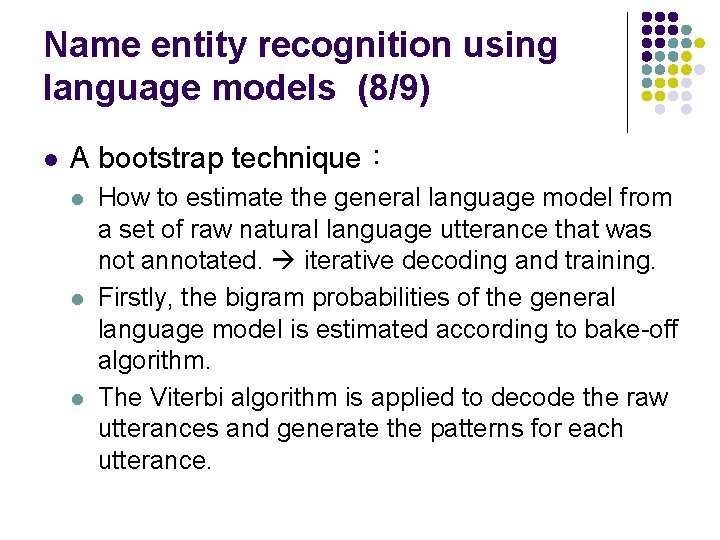

Name entity recognition using language models (8/9)
- A bootstrap technique
  - The problem: how to estimate the general language model from a set of raw natural language utterances that were not annotated.
  - The solution: iterative decoding and training.
  - First, the bigram probabilities of the general language model are estimated with the back-off algorithm.
  - The Viterbi algorithm is then applied to decode the raw utterances and generate the patterns for each utterance.

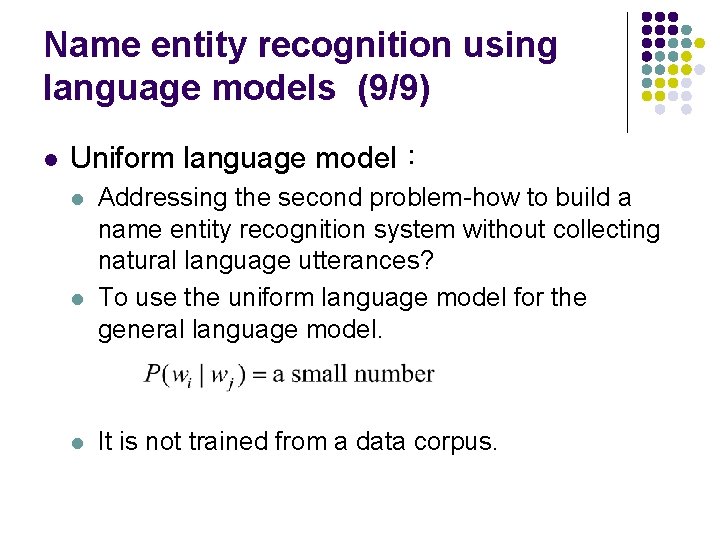
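The iterative decode-and-retrain loop can be sketched as below. This is a sketch under stated assumptions: the initial model is taken to be trained on the raw utterances, `decode` is a hypothetical callback standing in for the Viterbi recognizer, and plain bigram counts stand in for the back-off estimates.

```python
from collections import Counter


def train_bigram_counts(patterns):
    """Collect bigram counts (a stand-in for back-off bigram estimation)."""
    counts = Counter()
    for pattern in patterns:
        tokens = ["<s>"] + list(pattern) + ["</s>"]
        counts.update(zip(tokens, tokens[1:]))
    return counts


def bootstrap(utterances, decode, iterations=3):
    """Alternate decoding raw utterances into patterns and re-training on them."""
    model = train_bigram_counts(utterances)  # assumed start: raw, unannotated text
    for _ in range(iterations):
        patterns = [decode(words, model) for words in utterances]
        model = train_bigram_counts(patterns)
    return model


def toy_decode(words, model):
    # Hypothetical recognizer: labels one known fund name and ignores the model.
    return ["FUND" if w == "magellan" else w for w in words]


raw = [["buy", "magellan"], ["sell", "magellan"]]
general_lm = bootstrap(raw, toy_decode)
```

After re-training, the general model sees the label rather than the name itself: `general_lm[("buy", "FUND")]` is 1, and the bigram `("buy", "magellan")` no longer occurs.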

Name entity recognition using language models (9/9)
- Uniform language model
  - This addresses the second problem: how to build a name entity recognition system without collecting natural language utterances?
  - The answer is to use a uniform language model as the general language model.
  - It is not trained from a data corpus.
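As a short illustration of why no corpus is needed, a uniform model assigns every vocabulary word the same probability regardless of history. The notation below ($w_t$ for the word at position $t$, $|V|$ for the vocabulary size) is assumed for this sketch.

$$
P(w_t \mid w_{t-1}) = \frac{1}{|V|} \quad \text{for every } w_t \in V,
\qquad
P(w_1 \dots w_n) = |V|^{-n}.
$$

For example, with a hypothetical vocabulary of $|V| = 1000$ words, any three-word sequence would receive probability $1000^{-3} = 10^{-9}$ under the general model.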

Experiments (1/5)
- Present the experimental results of the new name entity recognition algorithm in a mutual fund trading application.
- Compare its performance with that of the technique using a decision tree model.
- Determine the performance when each of three kinds of bigram language models is used as the general language model.

Experiments (2/5)
- Name entities: ACCOUNT, AMOUNT, SHARES, PERCENT, NUMBER, FUND.
- There are 43296 raw natural language utterances. The test data includes 3041 utterances.
- There are three kinds of errors: substitution, deletion, and insertion.
- The error rate is calculated as the sum of these three kinds of errors divided by the number of name entities in the test set.

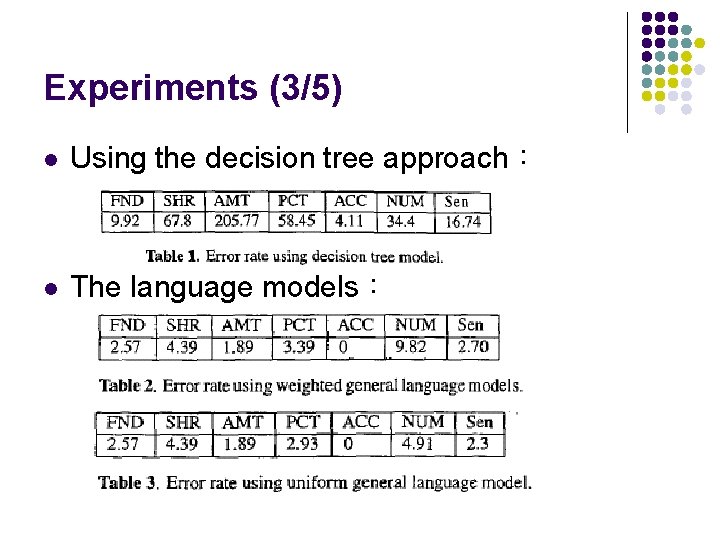

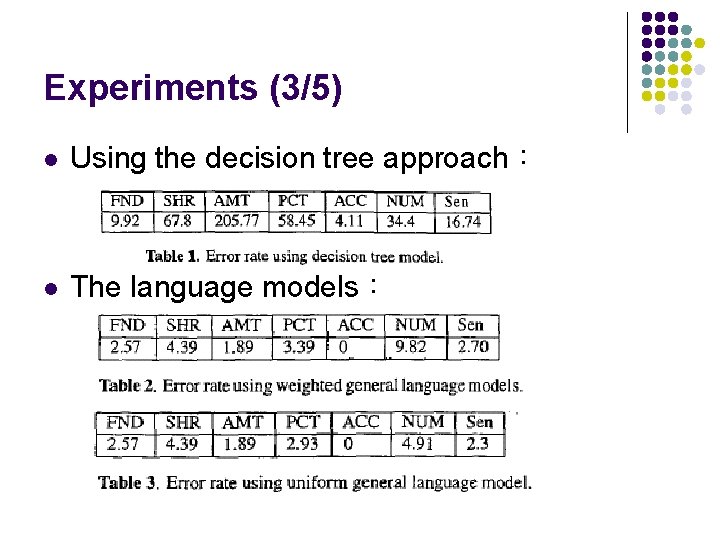
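The error rate definition above translates directly into a small helper. The function name and the counts in the example are hypothetical and are not results from the paper.

```python
def entity_error_rate(substitutions, deletions, insertions, reference_entities):
    """(substitutions + deletions + insertions) / name entities in the test set."""
    if reference_entities <= 0:
        raise ValueError("reference_entities must be positive")
    return (substitutions + deletions + insertions) / reference_entities


# Hypothetical counts: 3 substitutions, 2 deletions, 1 insertion, 200 entities.
rate = entity_error_rate(3, 2, 1, 200)
# rate == 0.03, i.e. 3%
```

Because insertions are counted, this rate can exceed 100% when the recognizer produces many spurious entities.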

Experiments (3/5)
- Using the decision tree approach:
- The language models:

Experiments (4/5)
- The decision tree approach does not achieve good results on the test data, because of:
  - The inconsistency of the data annotation.
  - Insufficient occurrences of some name entities in the training data.
- If serious errors are found with the new approach, the only effort needed is to improve the name entity language model.

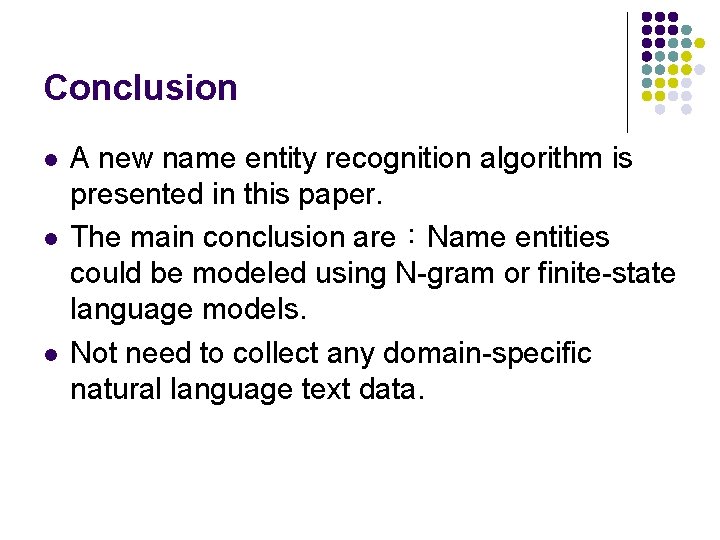

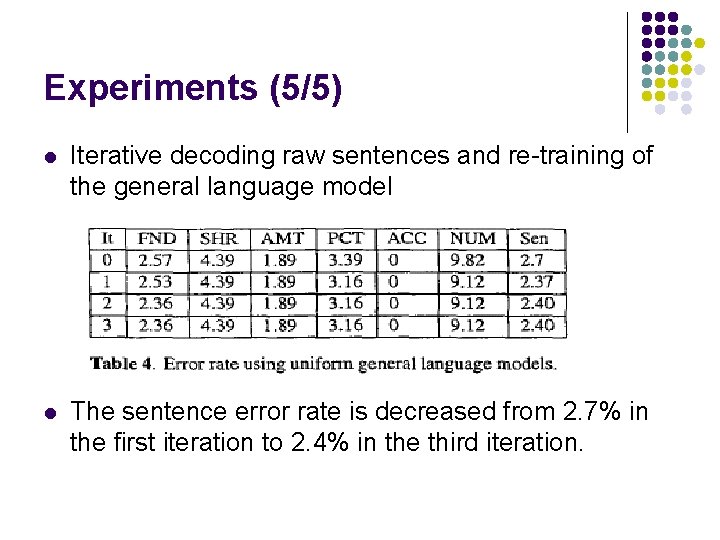

Experiments (5/5)
- Iterative decoding of raw sentences and re-training of the general language model
  - The sentence error rate decreases from 2.7% in the first iteration to 2.4% in the third iteration.
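To put the two figures in perspective, the relative reduction in sentence error rate between the first and third iterations works out as:

$$
\frac{2.7\% - 2.4\%}{2.7\%} = \frac{0.3}{2.7} \approx 11.1\%.
$$

That is an absolute drop of 0.3 percentage points, or roughly an 11% relative improvement.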

Conclusion
- A new name entity recognition algorithm is presented in this paper.
- The main conclusions are:
  - Name entities can be modeled using N-gram or finite-state language models.
  - There is no need to collect any domain-specific natural language text data.