Naive Bayes for Document Classification: Illustrative Example

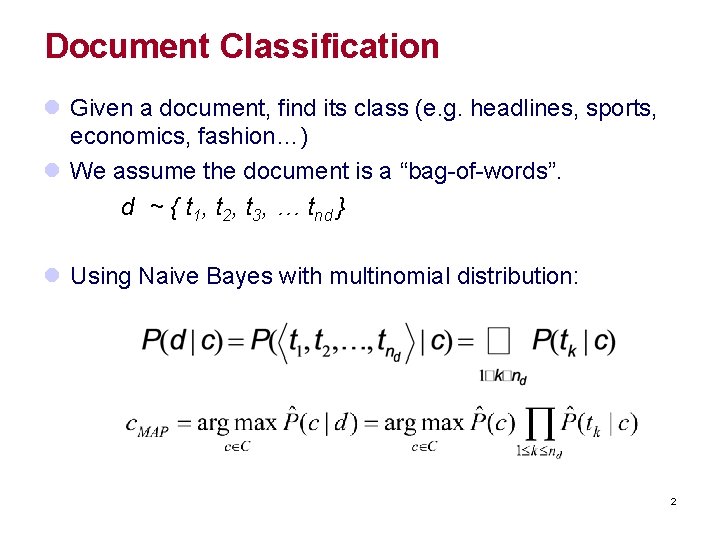

Document Classification
- Given a document, find its class (e.g. headlines, sports, economics, fashion…).
- We assume the document is a “bag-of-words”: $d \sim \{t_1, t_2, t_3, \ldots, t_{n_d}\}$.
- Using Naive Bayes with a multinomial distribution: $P(c \mid d) \propto P(c) \prod_{1 \le k \le n_d} P(t_k \mid c)$
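A minimal sketch of the bag-of-words assumption, assuming plain whitespace tokenization (real systems usually also lowercase and strip punctuation). `bag_of_words` is a hypothetical helper, not a library function. Two documents containing the same words in a different order map to the same bag:

```python
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Represent a document as a multiset of terms; word order is discarded."""
    return Counter(text.split())


d1 = bag_of_words("Chinese Beijing Chinese")
d2 = bag_of_words("Beijing Chinese Chinese")
print(d1)        # Counter({'Chinese': 2, 'Beijing': 1})
print(d1 == d2)  # True: same bag, different word order
```

Only the term counts survive, which is exactly the information the multinomial model uses.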

Binomial Distribution
- $n$ independent trials (Bernoulli trials), each of which results in success with probability $p$.
- The binomial distribution gives the probability of any particular combination of numbers of successes for the two categories.
- E.g. you flip a coin 10 times with $P(\text{Heads}) = 0.6$. What is the probability of getting 8 H and 2 T?
- $P(k) = \binom{n}{k} p^k (1-p)^{n-k}$, with $k$ being the number of successes (or, to see the similarity with the multinomial, consider that the first class is selected $k$ times, …).

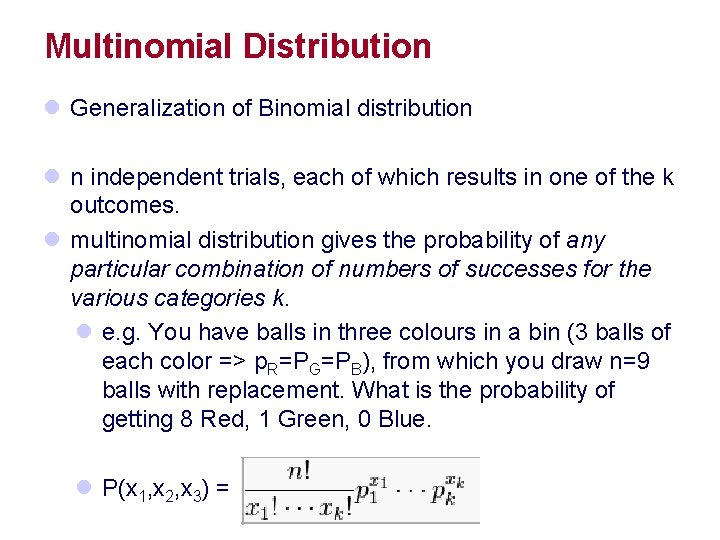
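The coin example can be checked numerically using $P(k) = \binom{n}{k} p^k (1-p)^{n-k}$ with $n = 10$, $k = 8$, $p = 0.6$, which gives $45 \cdot 0.6^8 \cdot 0.4^2 \approx 0.1209$. A short Python sketch; the helper name `binomial_pmf` is illustrative:

```python
from math import comb


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent Bernoulli trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


# Coin example: 10 flips, P(Heads) = 0.6, probability of 8 heads and 2 tails.
p_8_heads = binomial_pmf(8, 10, 0.6)
print(round(p_8_heads, 4))  # 0.1209
```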

Multinomial Distribution
- Generalization of the binomial distribution.
- $n$ independent trials, each of which results in one of $k$ outcomes.
- The multinomial distribution gives the probability of any particular combination of numbers of successes for the $k$ categories.
- E.g. you have balls of three colours in a bin (3 balls of each colour, so $p_R = p_G = p_B$), from which you draw $n = 9$ balls with replacement. What is the probability of getting 8 red, 1 green and 0 blue?
- $P(x_1, x_2, x_3) = \dfrac{n!}{x_1!\,x_2!\,x_3!}\, p_1^{x_1} p_2^{x_2} p_3^{x_3}$

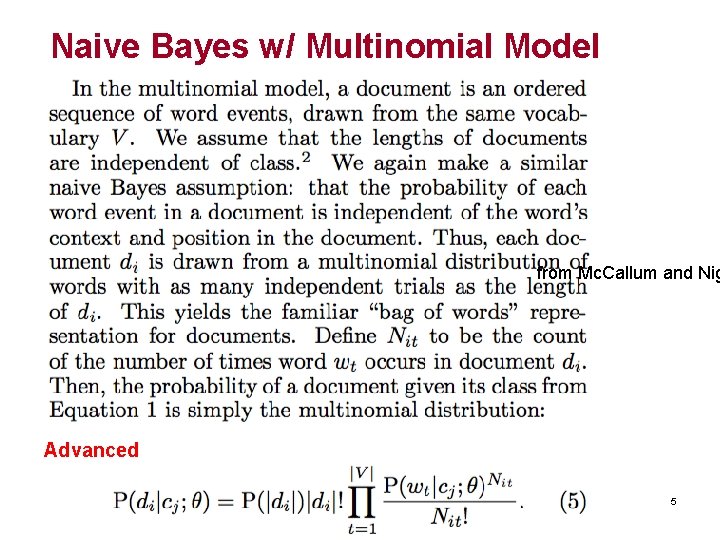
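For the ball example, $\frac{9!}{8!\,1!\,0!}\left(\tfrac13\right)^8\left(\tfrac13\right)^1\left(\tfrac13\right)^0 = \frac{9}{3^9} = \frac{1}{2187} \approx 0.00046$. The sketch below computes the multinomial probability exactly with `fractions.Fraction`; `multinomial_pmf` is a hypothetical helper name:

```python
from fractions import Fraction
from math import factorial, prod


def multinomial_pmf(counts, probs):
    """P(x_1, ..., x_k) = n! / (x_1! ... x_k!) * p_1^x_1 * ... * p_k^x_k."""
    n = sum(counts)
    coeff = factorial(n)
    for x in counts:
        coeff //= factorial(x)  # each partial quotient is still an integer
    return coeff * prod(p**x for p, x in zip(probs, counts))


# Ball example: n = 9 draws with replacement, p_R = p_G = p_B = 1/3,
# probability of 8 red, 1 green, 0 blue.
third = Fraction(1, 3)
print(multinomial_pmf([8, 1, 0], [third, third, third]))  # 1/2187
```

With only two categories the same function reduces to the binomial probability from the previous slide.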

Naive Bayes with the Multinomial Model, from McCallum and Nigam (Advanced)

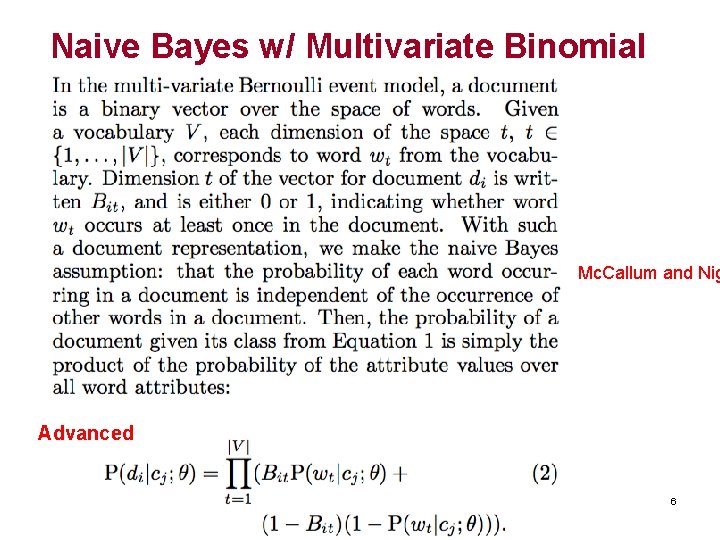
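In the multinomial event model a document is treated as a sequence of term draws, so every occurrence of a term contributes one factor $P(t \mid c)$. A minimal sketch, assuming the class-conditional probabilities are already estimated; the function name and the toy two-term vocabulary are hypothetical. The multinomial coefficient is dropped, and so is the document-length probability under the usual assumption that length does not depend on the class, since neither changes which class wins:

```python
from collections import Counter
from math import log


def multinomial_log_likelihood(tokens, term_probs):
    """log P(d | c), up to terms that are identical for every class.

    tokens: the document as a list of terms (order is ignored).
    term_probs: hypothetical dict mapping term -> P(t | c) for one class c.
    """
    counts = Counter(tokens)
    # Each occurrence of t contributes log P(t | c), so weight by its count.
    return sum(n * log(term_probs[t]) for t, n in counts.items())


# Hypothetical class-conditional probabilities over a two-term vocabulary.
probs_c = {"goal": 0.7, "market": 0.3}
print(multinomial_log_likelihood(["goal", "goal", "market"], probs_c))
```

Repeating a term changes the likelihood here, which is the key difference from the Bernoulli model on the next slide.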

Naive Bayes with the Multivariate Binomial (Bernoulli) Model, from McCallum and Nigam (Advanced)

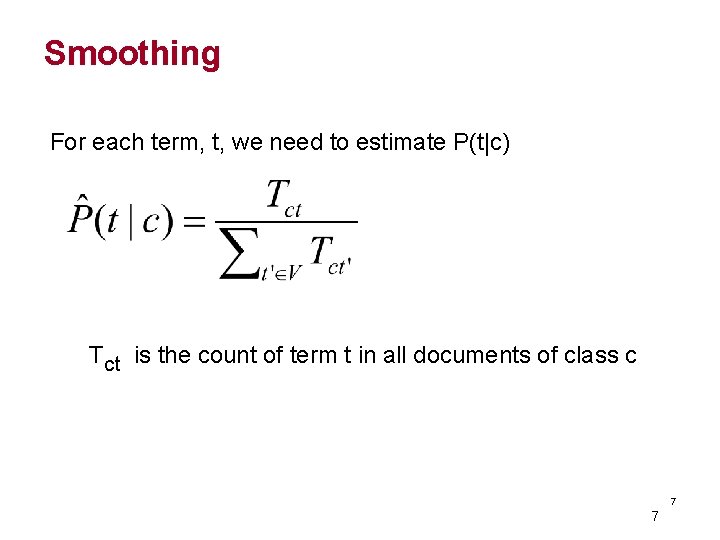
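In the multivariate Bernoulli model a document is a binary vector over the whole vocabulary: each term contributes $P(t \mid c)$ if it occurs and $1 - P(t \mid c)$ if it does not, and repeated occurrences are ignored. Here $P(t \mid c)$ means the probability that a document of class $c$ contains $t$. A minimal sketch with a hypothetical function name and toy probabilities; tokens outside the vocabulary are ignored:

```python
from math import log


def bernoulli_log_likelihood(tokens, doc_probs):
    """log P(d | c) under the multivariate Bernoulli model.

    doc_probs: hypothetical dict mapping every vocabulary term t to
    P(t appears in a document | c).
    """
    present = set(tokens)  # only presence/absence matters, not counts
    total = 0.0
    for t, p in doc_probs.items():
        # Present terms contribute P(t|c); absent terms contribute 1 - P(t|c).
        total += log(p) if t in present else log(1.0 - p)
    return total


probs_c = {"goal": 0.8, "market": 0.1}
print(bernoulli_log_likelihood(["goal", "goal"], probs_c))
```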

Smoothing
- For each term $t$, we need to estimate $P(t \mid c)$: $\hat{P}(t \mid c) = \dfrac{T_{ct}}{\sum_{t' \in V} T_{ct'}}$
- $T_{ct}$ is the count of term $t$ in all documents of class $c$.

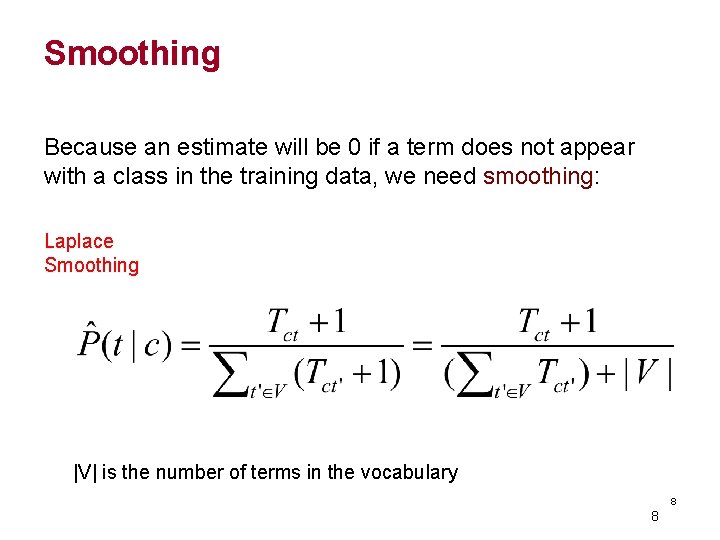
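A sketch of the unsmoothed (maximum-likelihood) estimate, applied to the three “China” training documents of the example in this section; `mle_term_probs` is a hypothetical helper name. Exact fractions make the zero estimate for an unseen term visible:

```python
from collections import Counter
from fractions import Fraction


def mle_term_probs(class_docs, vocabulary):
    """Unsmoothed estimate P(t|c) = T_ct / sum over t' in V of T_ct'.

    class_docs: token lists of the training documents of one class c.
    vocabulary: the term set V.
    """
    counts = Counter(t for doc in class_docs for t in doc)  # T_ct for each t
    total = sum(counts[t] for t in vocabulary)
    return {t: Fraction(counts[t], total) for t in vocabulary}


china_docs = [["Chinese", "Beijing", "Chinese"], ["Chinese", "Shanghai"], ["Chinese", "Macao"]]
V = ["Beijing", "Chinese", "Japan", "Macao", "Tokyo", "Shanghai"]
probs = mle_term_probs(china_docs, V)
print(probs["Chinese"], probs["Tokyo"])  # 4/7 0
```

Any test document containing “Tokyo” would then get probability 0 for “China”, whatever its other terms are.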

Smoothing
- Because an estimate will be 0 if a term does not appear with a class in the training data, we need smoothing.
- Laplace (add-one) smoothing: $\hat{P}(t \mid c) = \dfrac{T_{ct} + 1}{\sum_{t' \in V} T_{ct'} + |V|}$
- $|V|$ is the number of terms in the vocabulary.

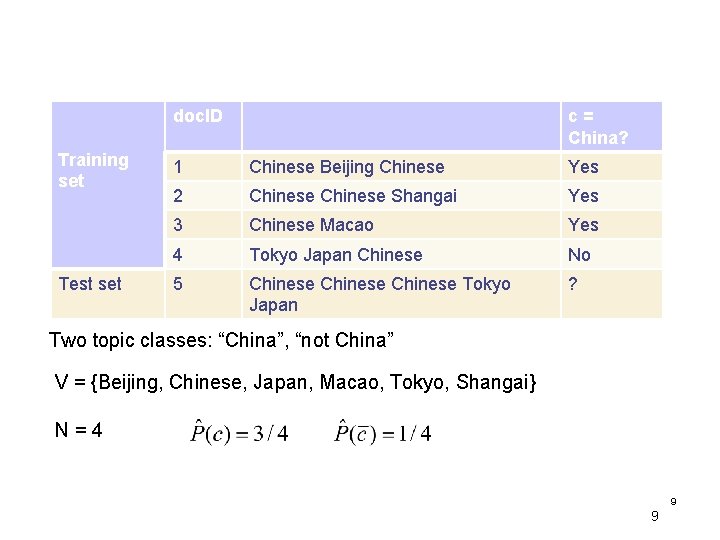

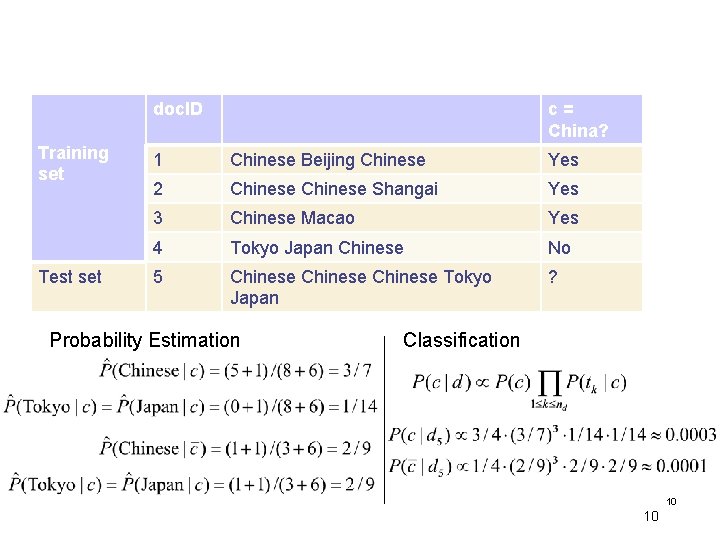
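Add-one smoothing adds 1 to every count and $|V|$ to the denominator, so the estimates still sum to 1 but none is zero. A sketch on the three “China” training documents of the example; `laplace_term_probs` is a hypothetical helper name:

```python
from collections import Counter
from fractions import Fraction


def laplace_term_probs(class_docs, vocabulary):
    """Add-one estimate P(t|c) = (T_ct + 1) / (sum over t' in V of T_ct' + |V|)."""
    counts = Counter(t for doc in class_docs for t in doc)  # T_ct
    total = sum(counts[t] for t in vocabulary) + len(vocabulary)
    return {t: Fraction(counts[t] + 1, total) for t in vocabulary}


china_docs = [["Chinese", "Beijing", "Chinese"], ["Chinese", "Shanghai"], ["Chinese", "Macao"]]
V = ["Beijing", "Chinese", "Japan", "Macao", "Tokyo", "Shanghai"]
probs = laplace_term_probs(china_docs, V)
print(probs["Chinese"], probs["Tokyo"])  # 5/13 1/13
```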

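| docID | Set | Words in document | c = China? |
|---|---|---|---|
| 1 | Training | Chinese Beijing Chinese | Yes |
| 2 | Training | Chinese Shanghai | Yes |
| 3 | Training | Chinese Macao | Yes |
| 4 | Training | Tokyo Japan Chinese | No |
| 5 | Test | Chinese Tokyo Japan | ? |

- Two topic classes: “China” and “not China”.
- $V$ = {Beijing, Chinese, Japan, Macao, Tokyo, Shanghai}
- $N = 4$ (number of training documents)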

Probability Estimation and Classification
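The estimation and classification steps can be reproduced with a short Python sketch: priors $N_c/N$ (with $N_c$ the number of training documents in class $c$), Laplace-smoothed $P(t \mid c)$, and the score $P(c)\prod_k P(t_k \mid c)$ for the test document. It uses exact fractions so the numbers can be compared with a hand calculation, and it takes the documents exactly as listed in the table of this example; the function names are hypothetical:

```python
from collections import Counter
from fractions import Fraction

# Training documents as listed in the example table: (tokens, class).
TRAIN = [
    ("Chinese Beijing Chinese".split(), "China"),
    ("Chinese Shanghai".split(), "China"),
    ("Chinese Macao".split(), "China"),
    ("Tokyo Japan Chinese".split(), "not China"),
]
TEST_DOC = "Chinese Tokyo Japan".split()


def train_multinomial_nb(train):
    """Return the vocabulary, priors P(c) and Laplace-smoothed P(t|c)."""
    vocab = sorted({t for tokens, _ in train for t in tokens})
    priors, cond = {}, {}
    for c in sorted({label for _, label in train}):
        docs = [tokens for tokens, label in train if label == c]
        priors[c] = Fraction(len(docs), len(train))             # N_c / N
        counts = Counter(t for tokens in docs for t in tokens)  # T_ct
        denom = sum(counts.values()) + len(vocab)               # sum T_ct' + |V|
        cond[c] = {t: Fraction(counts[t] + 1, denom) for t in vocab}
    return vocab, priors, cond


def nb_score(tokens, prior, cond_c):
    """P(c) times the product of P(t_k|c) over the tokens; unknown terms are skipped."""
    score = prior
    for t in tokens:
        if t in cond_c:
            score *= cond_c[t]
    return score


vocab, priors, cond = train_multinomial_nb(TRAIN)
scores = {c: nb_score(TEST_DOC, priors[c], cond[c]) for c in priors}
for c, s in scores.items():
    print(f"{c}: {s} ~ {float(s):.4f}")
print("predicted:", max(scores, key=scores.get))
```

With the counts as transcribed, the scores are $\frac{3}{4}\cdot\frac{5}{13}\cdot\frac{1}{13}\cdot\frac{1}{13} = \frac{15}{8788} \approx 0.0017$ for “China” and $\frac{1}{4}\cdot\left(\frac{2}{9}\right)^3 = \frac{2}{729} \approx 0.0027$ for “not China”, so this sketch predicts “not China”. Every estimate depends only on token counts, so a test document that repeated “Chinese” more often could change the outcome.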

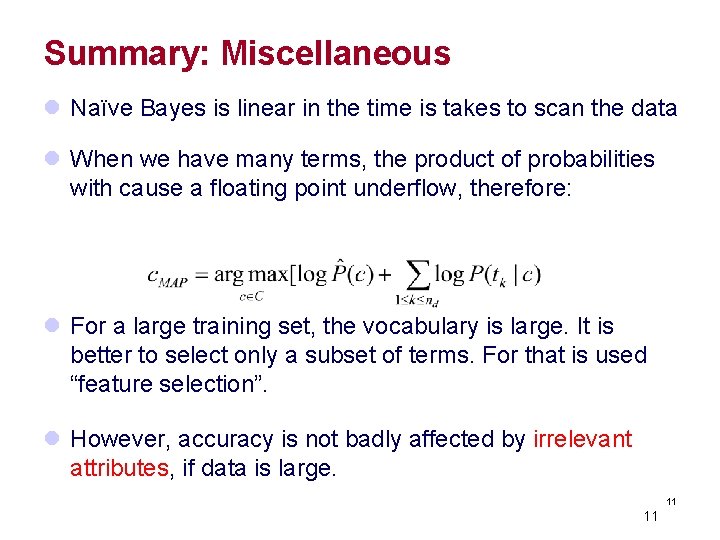

Summary: Miscellaneous
- Naïve Bayes training is linear in the time it takes to scan the data.
- When we have many terms, the product of probabilities will cause a floating-point underflow; therefore, we add the logarithms of the probabilities instead of multiplying the probabilities.
- For a large training set, the vocabulary is large, so it is better to select only a subset of terms; this is called “feature selection”.
- However, if the data set is large, accuracy is not badly affected by irrelevant attributes.
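The underflow problem is easy to reproduce. A sketch with hypothetical numbers: a document of 2000 tokens, each with $P(t \mid c) = 0.001$. The direct product ($10^{-6000}$) is far below the smallest representable double, while the sum of logarithms is an ordinary number. Because $\log$ is monotonic, comparing $\log P(c) + \sum_k \log P(t_k \mid c)$ across classes picks the same class as comparing the products:

```python
from math import log, prod

# Hypothetical long document: 2000 tokens, each with P(t|c) = 0.001.
probs = [0.001] * 2000

direct = prod(probs)                    # true value 1e-6000 underflows
log_space = sum(log(p) for p in probs)  # 2000 * log(0.001), about -13815.5
print(direct)  # 0.0
print(log_space)
```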

- Slides: 11