My SQL HA Ignition Operationally Deploying Ignition when

- Slides: 42

My. SQL HA & Ignition Operationally Deploying Ignition when Uptime Really Matters Tony Holmes Principal Sales Engineer - Oracle / My. SQL

Safe Harbour Statement The following is intended to outline our general product direction. It is intended for information purposes only, and may not be incorporated into any contract. It is not a commitment to deliver any material, code, or functionality, and should not be relied upon in making purchasing decisions. The development, release, and timing of any features or functionality described for Oracle’s products remains at the sole discretion of Oracle.

Introduction to HA

What Is High Availability? “High availability is a system design protocol and associated implementation that ensures a certain degree of operational continuity during a given measurement period. ” http: //en. wikipedia. org/wiki/High_availability

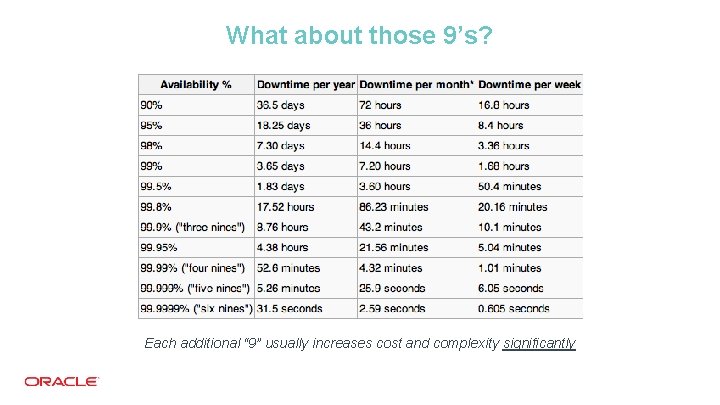

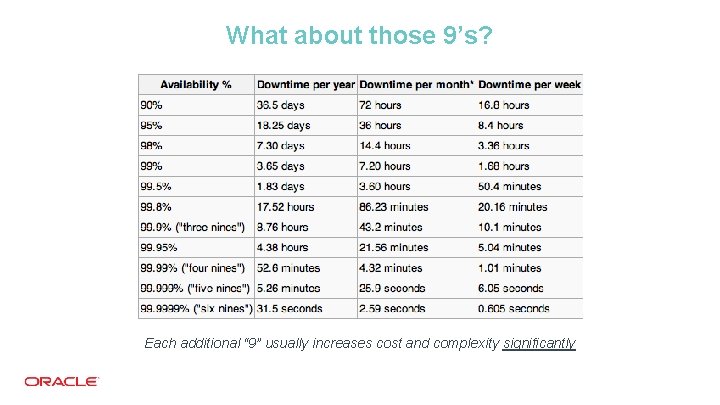

What about those 9’s? Each additional “ 9” usually increases cost and complexity significantly

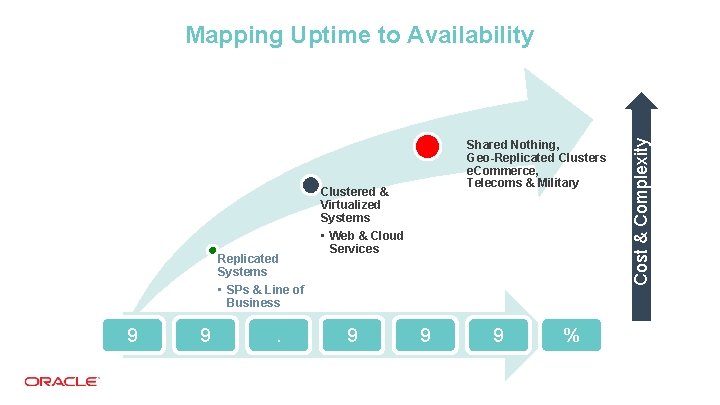

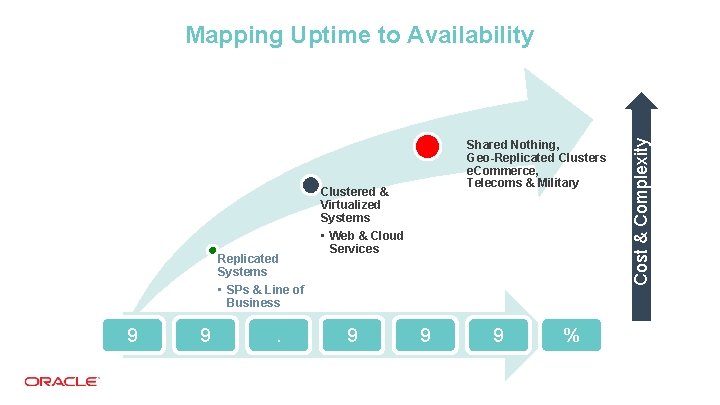

Replicated Systems • SPs & Line of Business 9 9 . Shared Nothing, Geo-Replicated Clusters e. Commerce, Telecoms & Military Clustered & Virtualized Systems • Web & Cloud Services 9 9 9 % Cost & Complexity Mapping Uptime to Availability

What Else Should I Know? “The Recovery Point Objective (RPO) describes the acceptable amount of data loss measured in time. ” http: //en. wikipedia. org/wiki/Recovery_time_objective “The Recovery Time Objective (RTO) is the duration of time and a service level within which a business process must be restored after a disaster (or disruption) in order to avoid unacceptable consequences associated with a break in business continuity. ” http: //en. wikipedia. org/wiki/Recovery_time_objective

Anything Else? “Disaster recovery is the process, policies and procedures related to preparing for recovery or continuation of technology infrastructure critical to an organization after a natural or human-induced disaster. ”

HA on My. SQL

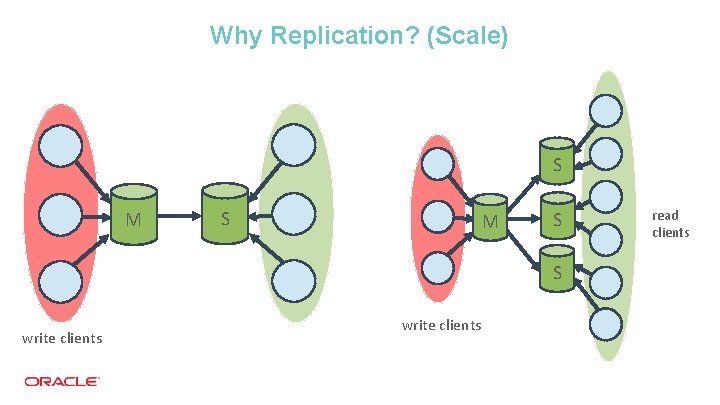

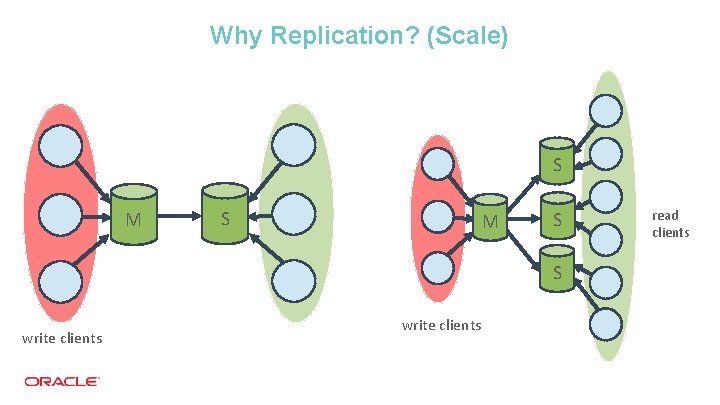

Why Replication? (Scale) S M S S write clients read clients

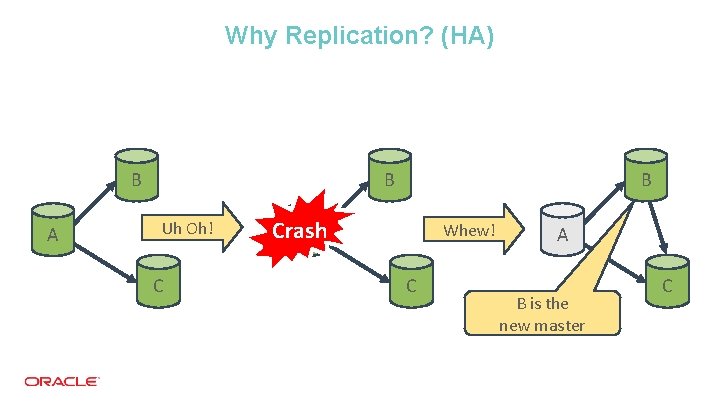

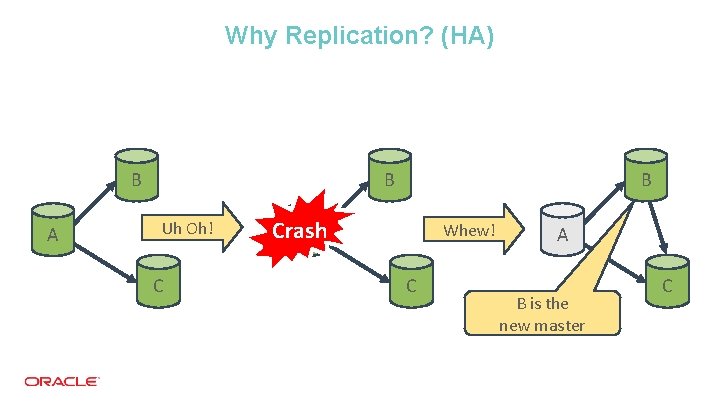

Why Replication? (HA) B A B B Uh Oh! C Crash A Whew! C A B is the new master C

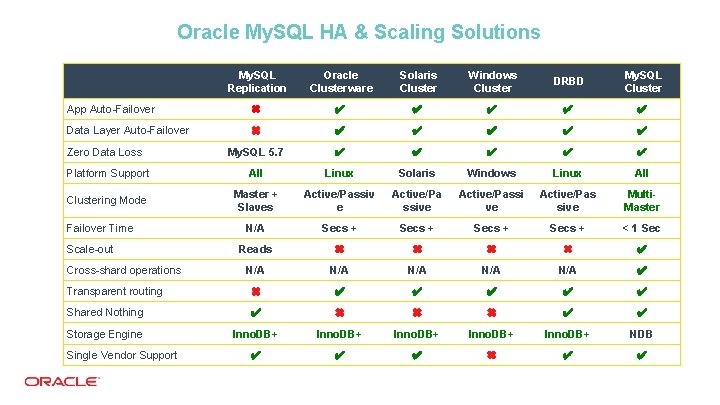

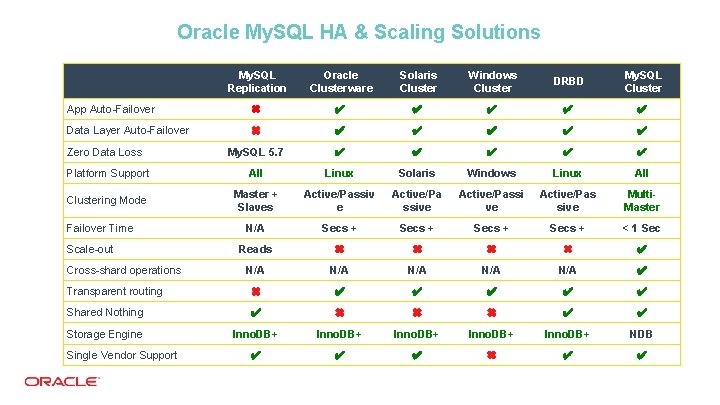

Oracle My. SQL HA & Scaling Solutions My. SQL Replication Oracle Clusterware Solaris Cluster Windows Cluster DRBD My. SQL Cluster App Auto-Failover ✖ ✔ ✔ ✔ Data Layer Auto-Failover ✖ ✔ ✔ ✔ My. SQL 5. 7 ✔ ✔ ✔ Platform Support All Linux Solaris Windows Linux All Clustering Mode Master + Slaves Active/Passiv e Active/Pa ssive Active/Passi ve Active/Pas sive Multi. Master N/A Secs + < 1 Sec Reads ✖ ✖ ✔ N/A N/A N/A ✔ Transparent routing ✖ ✔ ✔ ✔ Shared Nothing ✔ ✖ ✖ ✖ ✔ ✔ Storage Engine Inno. DB+ NDB ✔ ✔ ✔ ✖ ✔ ✔ Zero Data Loss Failover Time Scale-out Cross-shard operations Single Vendor Support

February 2015 14

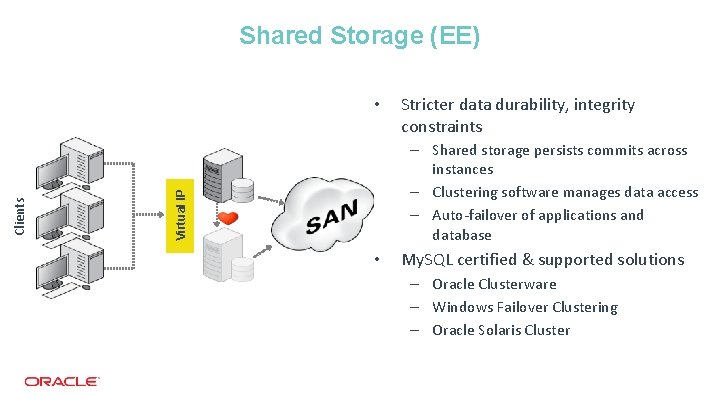

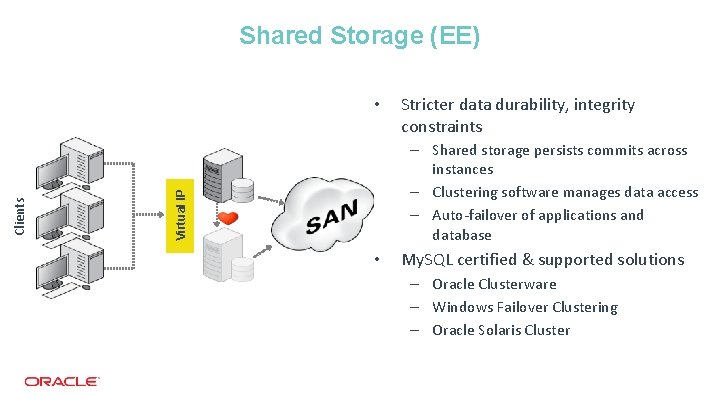

Shared Storage (EE) Stricter data durability, integrity constraints – Shared storage persists commits across instances – Clustering software manages data access – Auto-failover of applications and database Virtual IP Clients • • My. SQL certified & supported solutions – Oracle Clusterware – Windows Failover Clustering – Oracle Solaris Cluster

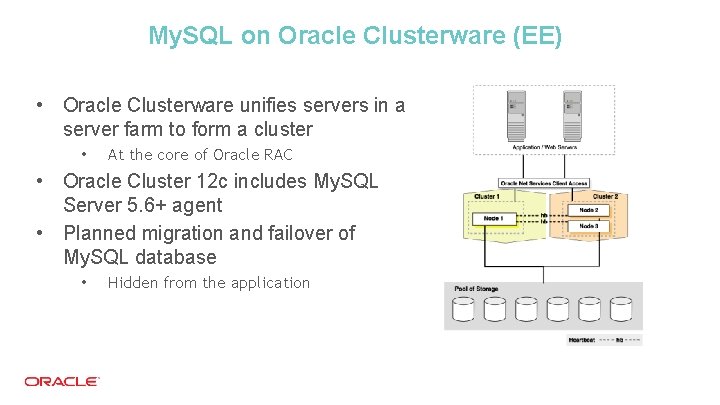

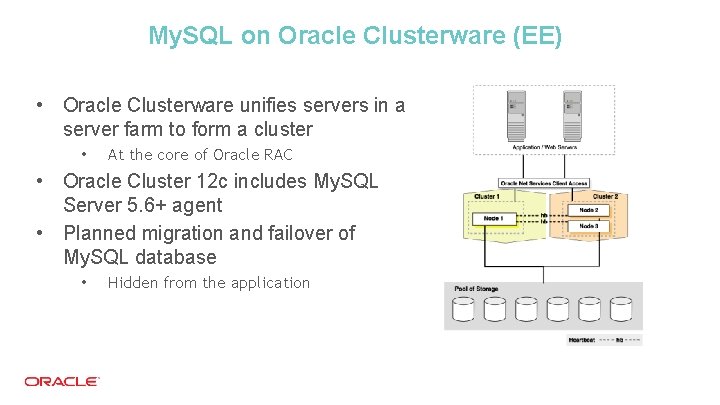

My. SQL on Oracle Clusterware (EE) • Oracle Clusterware unifies servers in a server farm to form a cluster • At the core of Oracle RAC • Oracle Cluster 12 c includes My. SQL Server 5. 6+ agent • Planned migration and failover of My. SQL database • Hidden from the application

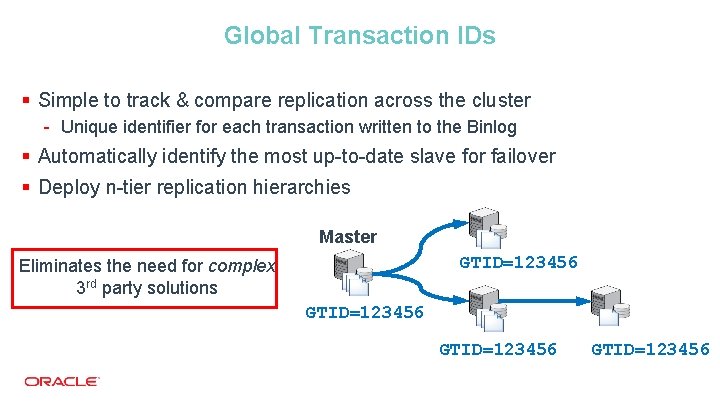

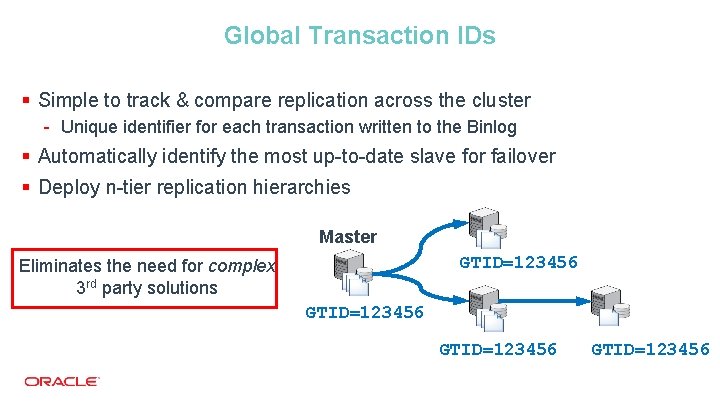

Global Transaction IDs § Simple to track & compare replication across the cluster - Unique identifier for each transaction written to the Binlog § Automatically identify the most up-to-date slave for failover § Deploy n-tier replication hierarchies Master GTID=123456 Eliminates the need for complex 3 rd party solutions GTID=123456

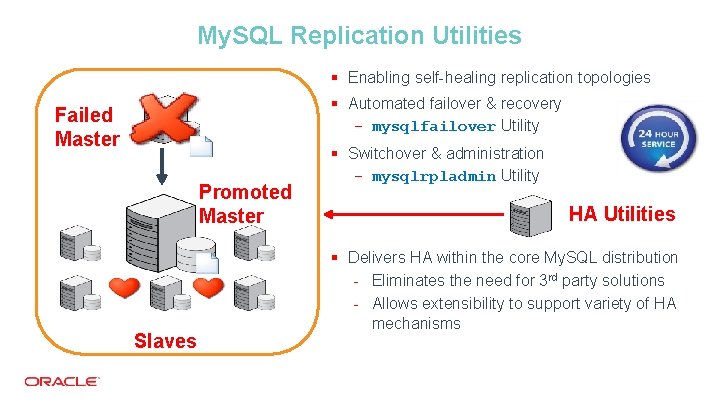

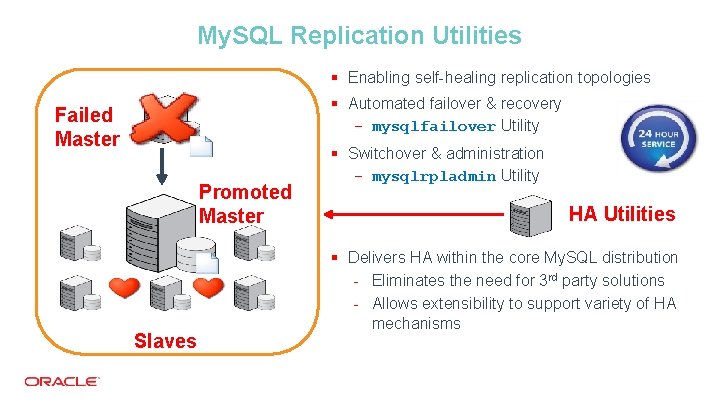

My. SQL Replication Utilities § Enabling self-healing replication topologies § Automated failover & recovery - mysqlfailover Utility Failed Master Promoted Master Slaves § Switchover & administration - mysqlrpladmin Utility HA Utilities § Delivers HA within the core My. SQL distribution - Eliminates the need for 3 rd party solutions - Allows extensibility to support variety of HA mechanisms

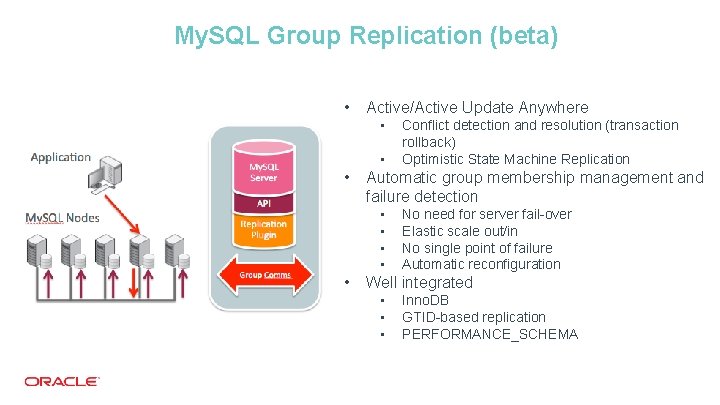

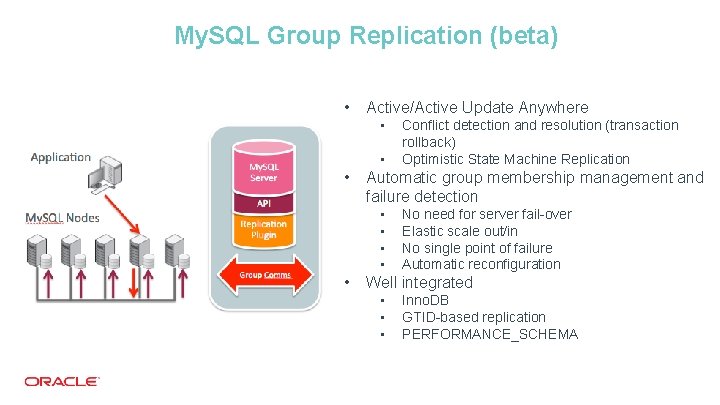

My. SQL Group Replication (beta) • Active/Active Update Anywhere • • • Automatic group membership management and failure detection • • • Conflict detection and resolution (transaction rollback) Optimistic State Machine Replication No need for server fail-over Elastic scale out/in No single point of failure Automatic reconfiguration Well integrated • • • Inno. DB GTID-based replication PERFORMANCE_SCHEMA

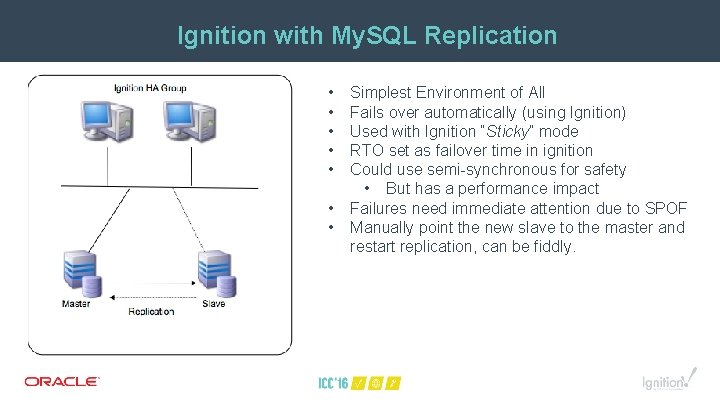

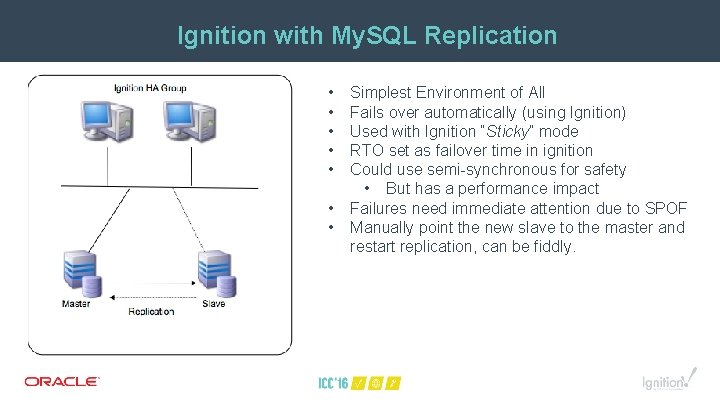

Ignition with My. SQL Replication • • Simplest Environment of All Fails over automatically (using Ignition) Used with Ignition “Sticky” mode RTO set as failover time in ignition Could use semi-synchronous for safety • But has a performance impact Failures need immediate attention due to SPOF Manually point the new slave to the master and restart replication, can be fiddly.

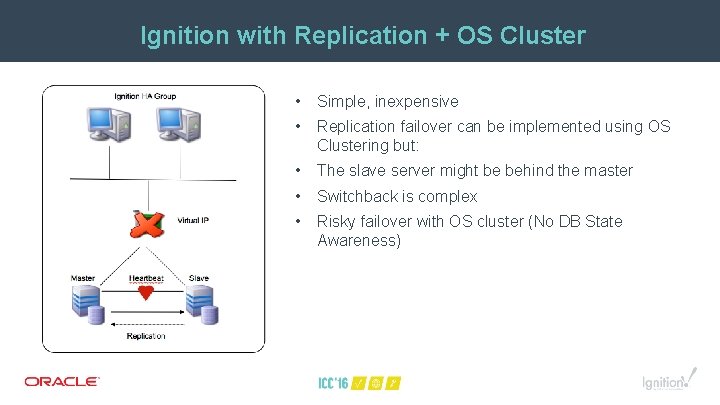

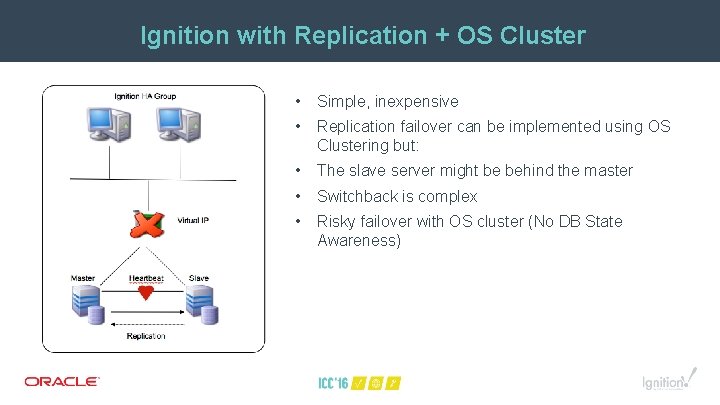

Ignition with Replication + OS Cluster • Simple, inexpensive • Replication failover can be implemented using OS Clustering but: • The slave server might be behind the master • Switchback is complex • Risky failover with OS cluster (No DB State Awareness)

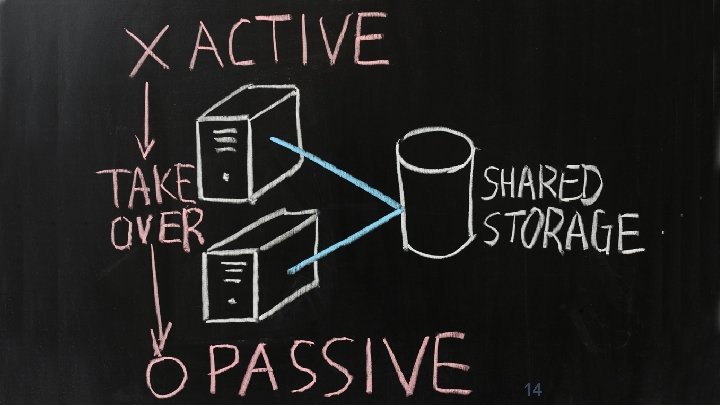

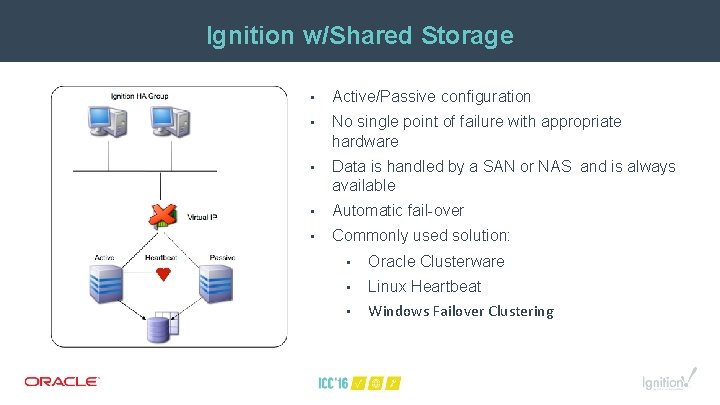

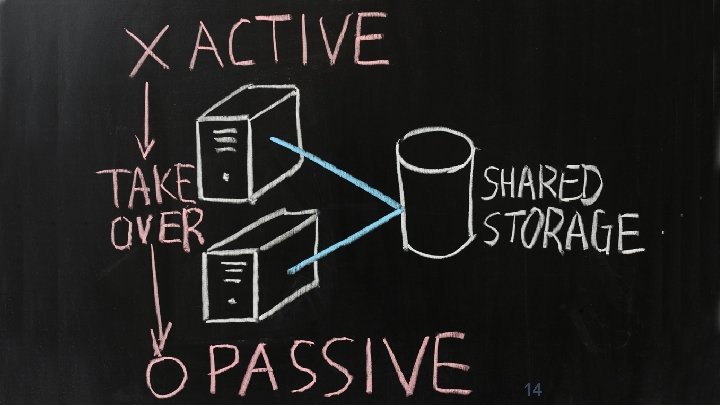

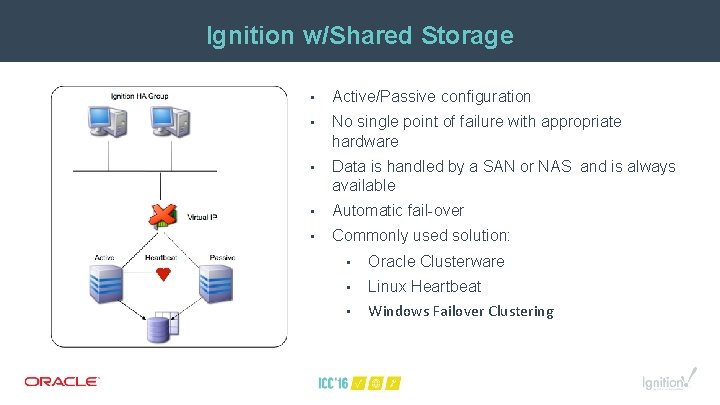

Ignition w/Shared Storage • Active/Passive configuration • No single point of failure with appropriate hardware • Data is handled by a SAN or NAS and is always available • Automatic fail-over • Commonly used solution: • Oracle Clusterware • Linux Heartbeat • Windows Failover Clustering

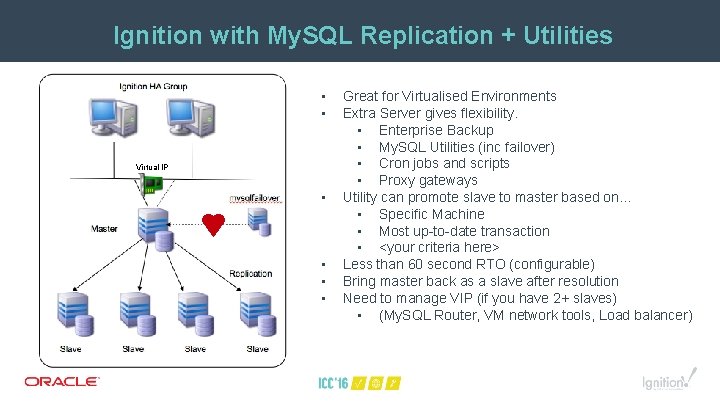

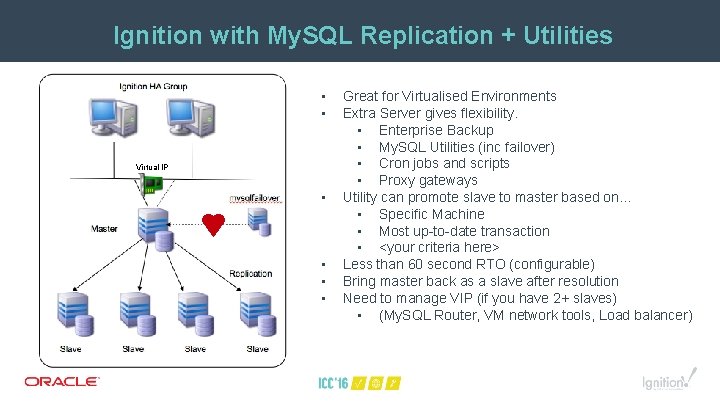

Ignition with My. SQL Replication + Utilities • • Virtual IP • • Great for Virtualised Environments Extra Server gives flexibility. • Enterprise Backup • My. SQL Utilities (inc failover) • Cron jobs and scripts • Proxy gateways Utility can promote slave to master based on… • Specific Machine • Most up-to-date transaction • <your criteria here> Less than 60 second RTO (configurable) Bring master back as a slave after resolution Need to manage VIP (if you have 2+ slaves) • (My. SQL Router, VM network tools, Load balancer)

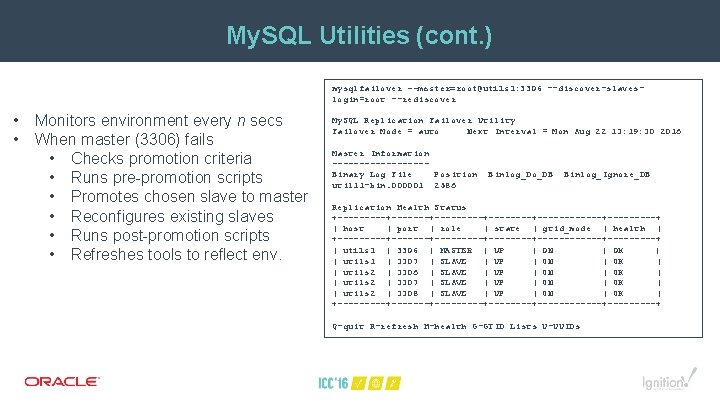

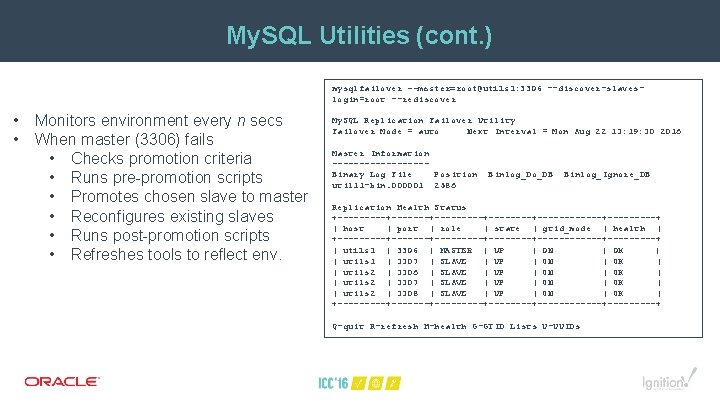

My. SQL Utilities (cont. ) mysqlfailover --master=root@utils 1: 3306 --discover-slaveslogin=root --rediscover • • Monitors environment every n secs When master (3306) fails • Checks promotion criteria • Runs pre-promotion scripts • Promotes chosen slave to master • Reconfigures existing slaves • Runs post-promotion scripts • Refreshes tools to reflect env. My. SQL Replication Failover Utility Failover Mode = auto Next Interval = Mon Aug 22 13: 19: 30 2016 Master Information ---------Binary Log File Position util 11 -bin. 000001 2586 Binlog_Do_DB Binlog_Ignore_DB Replication Health Status +---------+--------+------+-----+ | host | port | role | state | gtid_mode | health | +---------+--------+------+-----+ | utils 1 | 3306 | MASTER | UP | ON | OK | | utils 1 | 3307 | SLAVE | UP | ON | OK | | utils 2 | 3306 | SLAVE | UP | ON | OK | | utils 2 | 3307 | SLAVE | UP | ON | OK | | utils 2 | 3308 | SLAVE | UP | ON | OK | +---------+--------+------+-----+ Q-quit R-refresh H-health G-GTID Lists U-UUIDs

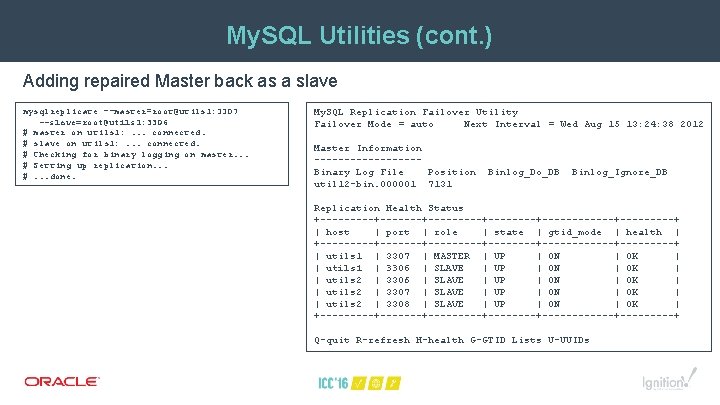

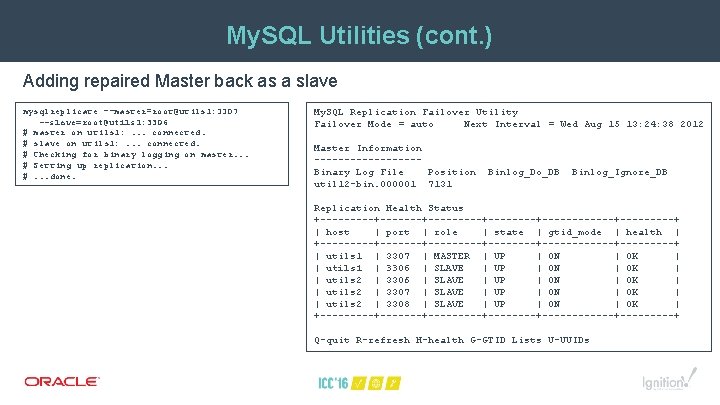

My. SQL Utilities (cont. ) Adding repaired Master back as a slave mysqlreplicate --master=root@utils 1: 3307 --slave=root@utils 1: 3306 # master on utils 1: . . . connected. # slave on utils 1: . . . connected. # Checking for binary logging on master. . . # Setting up replication. . . #. . . done. My. SQL Replication Failover Utility Failover Mode = auto Next Interval = Wed Aug 15 13: 24: 38 2012 Master Information ---------Binary Log File Position util 12 -bin. 000001 7131 Binlog_Do_DB Binlog_Ignore_DB Replication Health Status +---------+--------+------+-----+ | host | port | role | state | gtid_mode | health | +---------+--------+------+-----+ | utils 1 | 3307 | MASTER | UP | ON | OK | | utils 1 | 3306 | SLAVE | UP | ON | OK | | utils 2 | 3307 | SLAVE | UP | ON | OK | | utils 2 | 3308 | SLAVE | UP | ON | OK | +---------+--------+------+-----+ Q-quit R-refresh H-health G-GTID Lists U-UUIDs

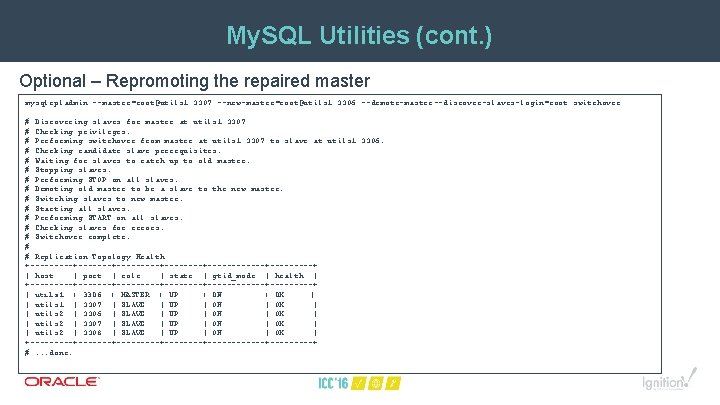

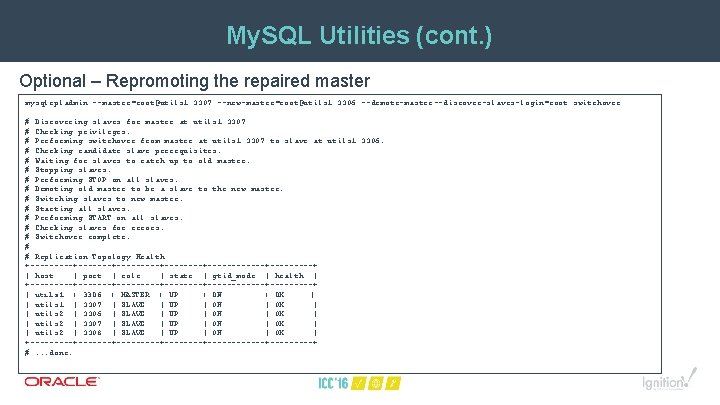

My. SQL Utilities (cont. ) Optional – Repromoting the repaired master mysqlrpladmin --master=root@utils 1: 3307 --new-master=root@utils 1: 3306 --demote-master --discover-slaves-login=root switchover # Discovering slaves for master at utils 1: 3307 # Checking privileges. # Performing switchover from master at utils 1: 3307 to slave at utils 1: 3306. # Checking candidate slave prerequisites. # Waiting for slaves to catch up to old master. # Stopping slaves. # Performing STOP on all slaves. # Demoting old master to be a slave to the new master. # Switching slaves to new master. # Starting all slaves. # Performing START on all slaves. # Checking slaves for errors. # Switchover complete. # # Replication Topology Health: +---------+--------+------+-----+ | host | port | role | state | gtid_mode | health | +---------+--------+------+-----+ | utils 1 | 3306 | MASTER | UP | ON | OK | | utils 1 | 3307 | SLAVE | UP | ON | OK | | utils 2 | 3306 | SLAVE | UP | ON | OK | | utils 2 | 3307 | SLAVE | UP | ON | OK | | utils 2 | 3308 | SLAVE | UP | ON | OK | +---------+--------+------+-----+ #. . . done.

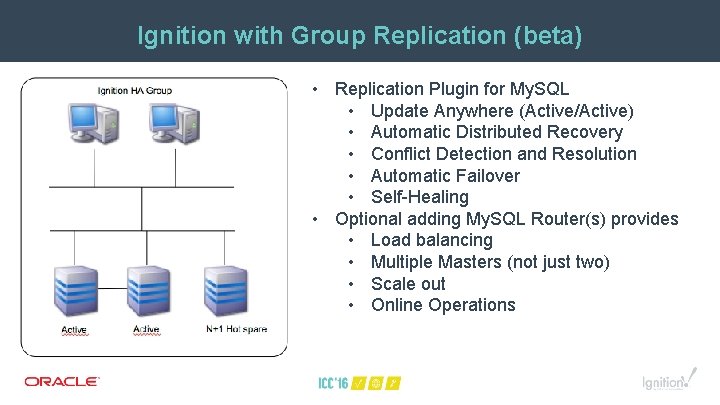

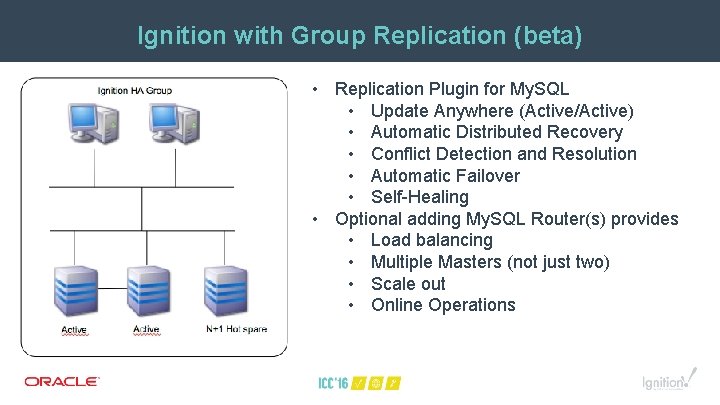

Ignition with Group Replication (beta) • Replication Plugin for My. SQL • Update Anywhere (Active/Active) • Automatic Distributed Recovery • Conflict Detection and Resolution • Automatic Failover • Self-Healing • Optional adding My. SQL Router(s) provides • Load balancing • Multiple Masters (not just two) • Scale out • Online Operations

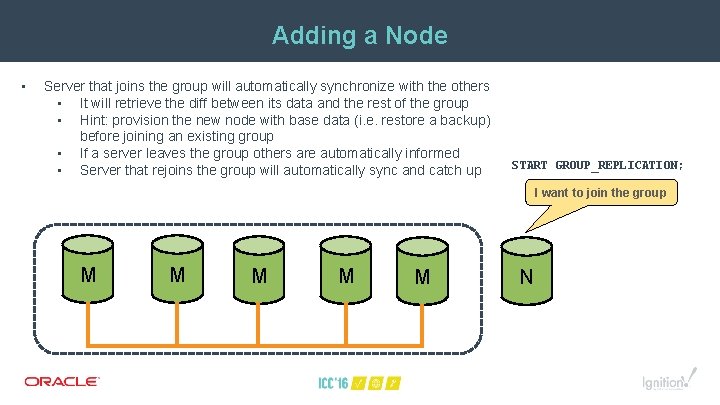

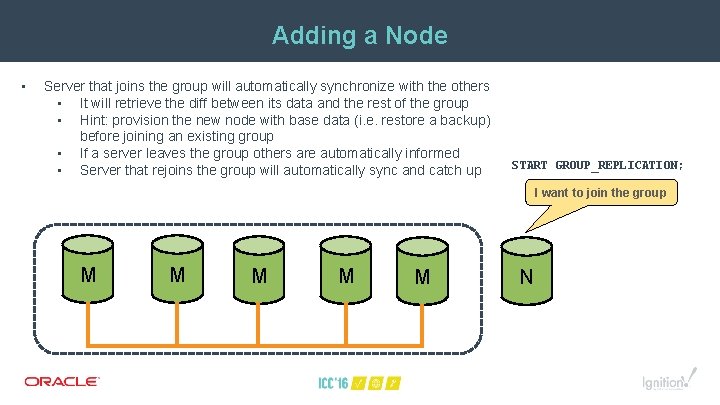

Adding a Node • Server that joins the group will automatically synchronize with the others • It will retrieve the diff between its data and the rest of the group • Hint: provision the new node with base data (i. e. restore a backup) before joining an existing group • If a server leaves the group others are automatically informed • Server that rejoins the group will automatically sync and catch up START GROUP_REPLICATION; I want to join the group M M M N

Other Operational Considerations

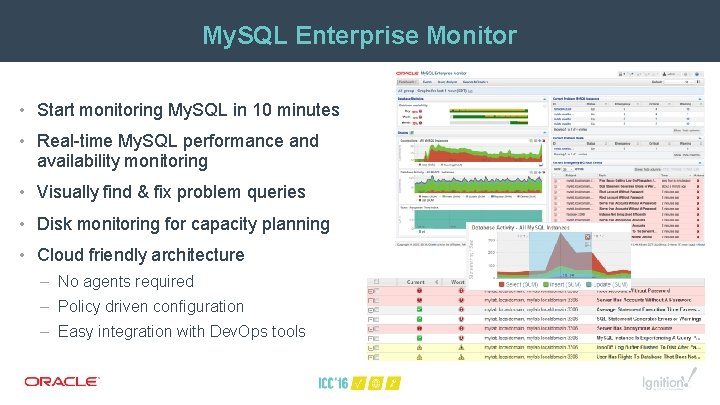

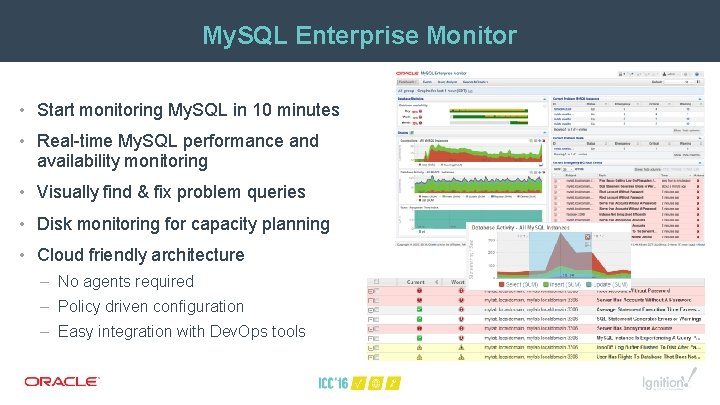

My. SQL Enterprise Monitor • Start monitoring My. SQL in 10 minutes • Real-time My. SQL performance and availability monitoring • Visually find & fix problem queries • Disk monitoring for capacity planning • Cloud friendly architecture – No agents required – Policy driven configuration – Easy integration with Dev. Ops tools

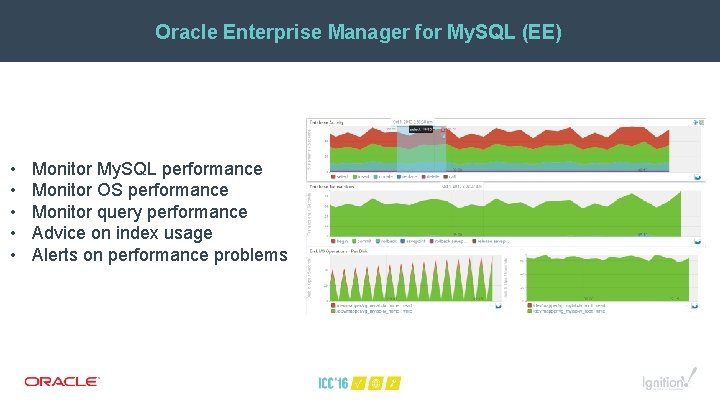

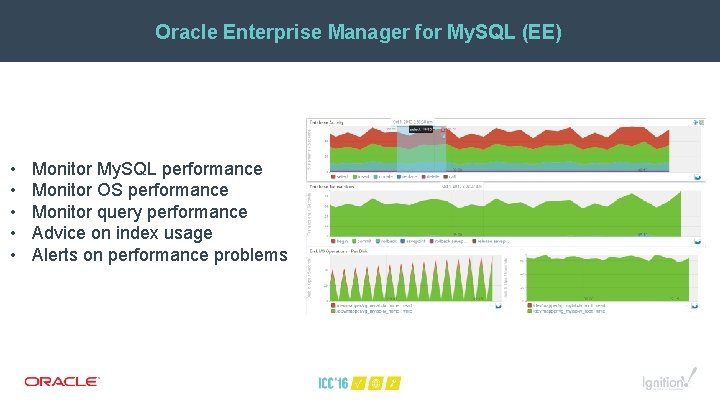

Oracle Enterprise Manager for My. SQL (EE) • • • Monitor My. SQL performance Monitor OS performance Monitor query performance Advice on index usage Alerts on performance problems

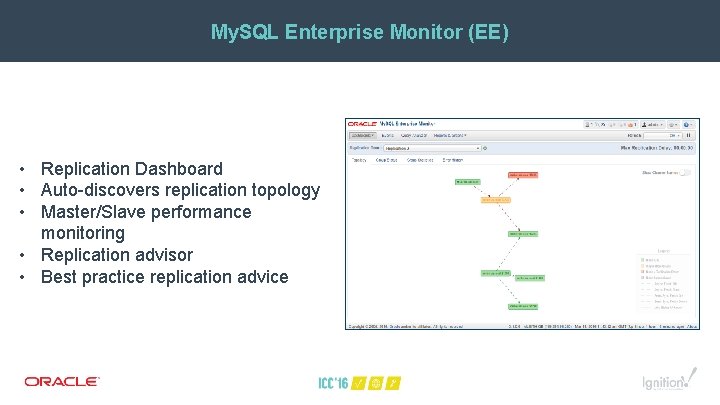

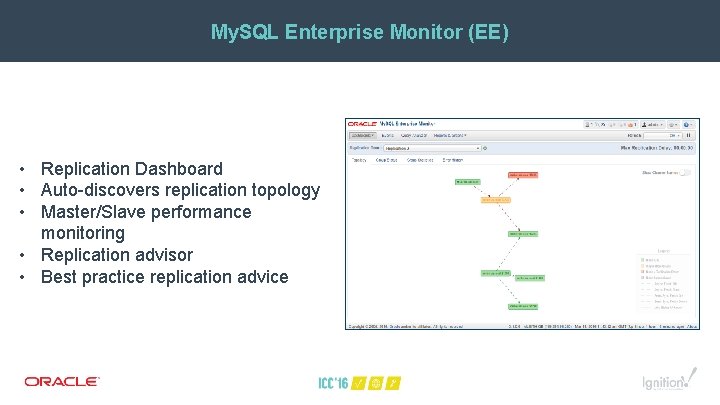

My. SQL Enterprise Monitor (EE) • Replication Dashboard • Auto-discovers replication topology • Master/Slave performance monitoring • Replication advisor • Best practice replication advice

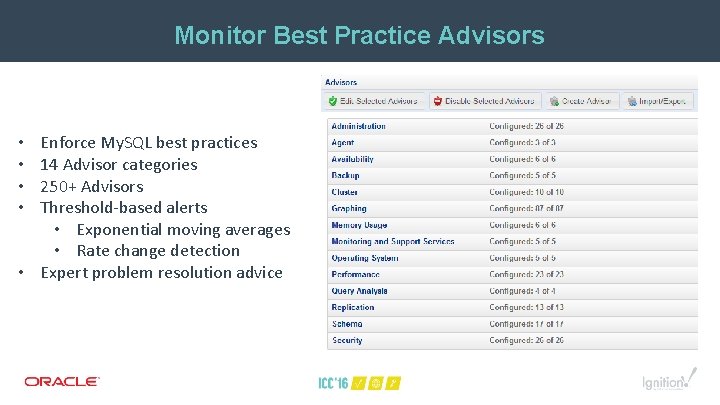

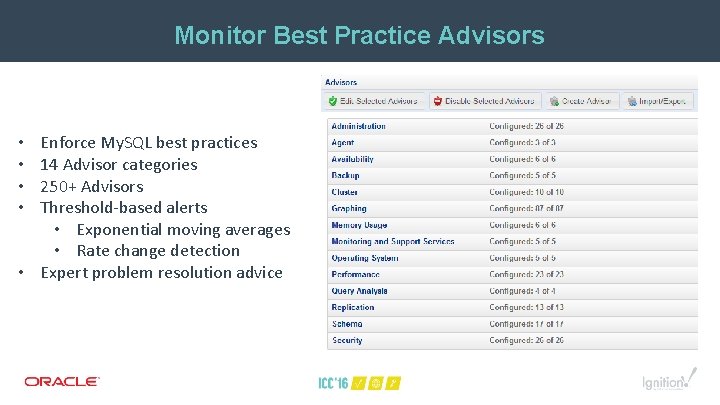

Monitor Best Practice Advisors Enforce My. SQL best practices 14 Advisor categories 250+ Advisors Threshold-based alerts • Exponential moving averages • Rate change detection • Expert problem resolution advice • •

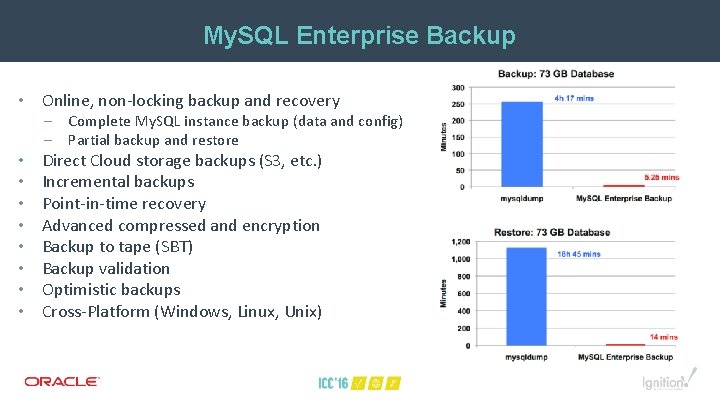

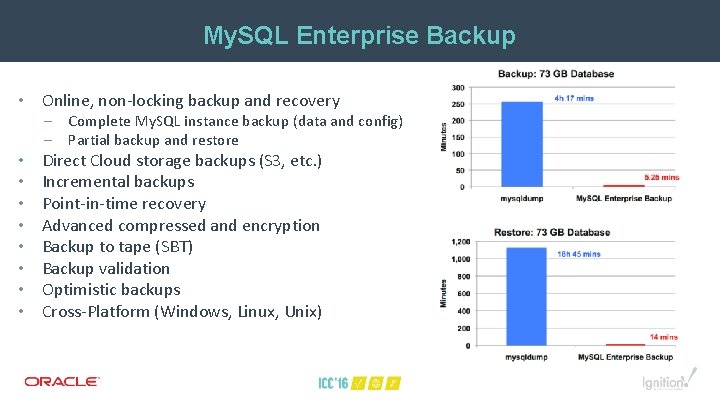

My. SQL Enterprise Backup • Online, non-locking backup and recovery • • – Complete My. SQL instance backup (data and config) – Partial backup and restore Direct Cloud storage backups (S 3, etc. ) Incremental backups Point-in-time recovery Advanced compressed and encryption Backup to tape (SBT) Backup validation Optimistic backups Cross-Platform (Windows, Linux, Unix)

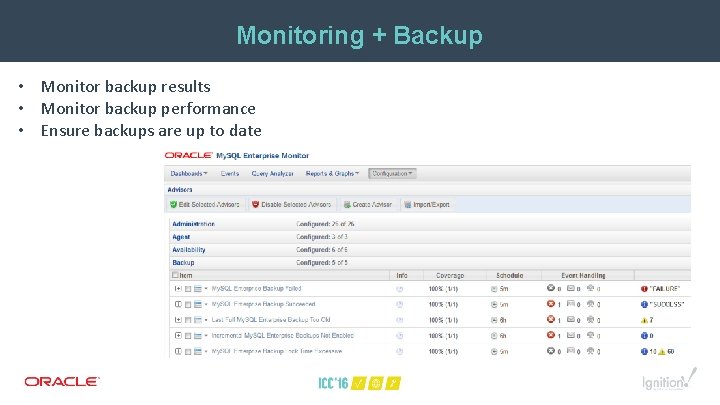

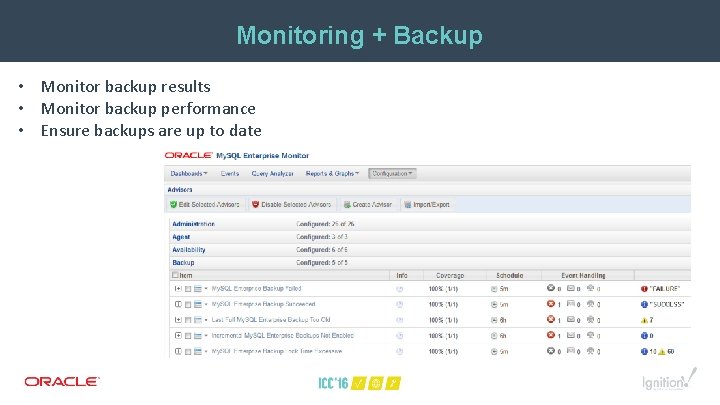

Monitoring + Backup • Monitor backup results • Monitor backup performance • Ensure backups are up to date

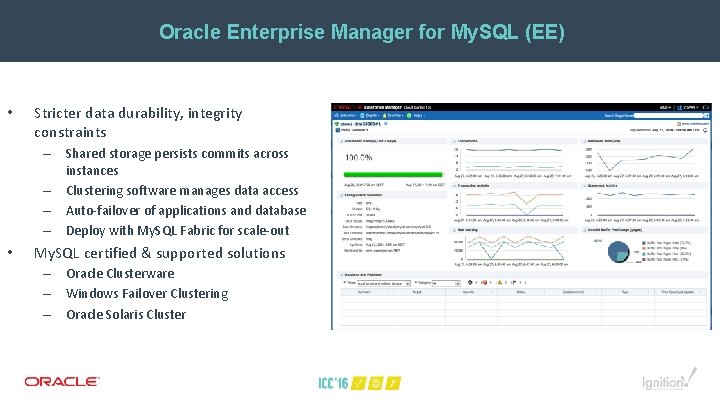

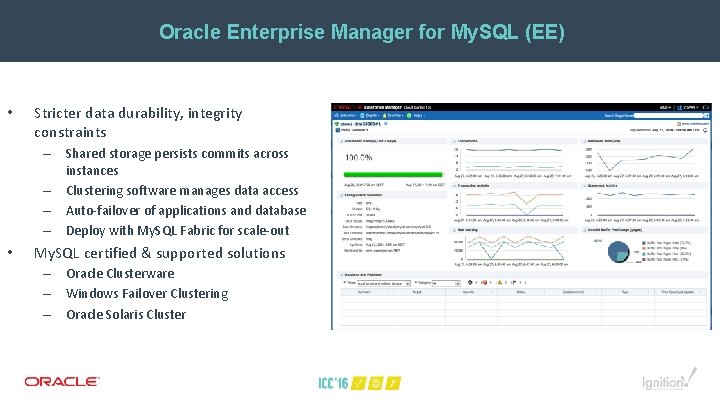

Oracle Enterprise Manager for My. SQL (EE) • Stricter data durability, integrity constraints – Shared storage persists commits across instances – Clustering software manages data access – Auto-failover of applications and database – Deploy with My. SQL Fabric for scale-out • My. SQL certified & supported solutions – Oracle Clusterware – Windows Failover Clustering – Oracle Solaris Cluster

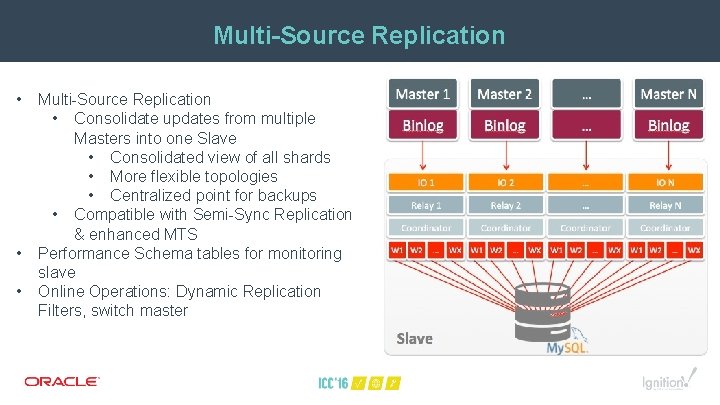

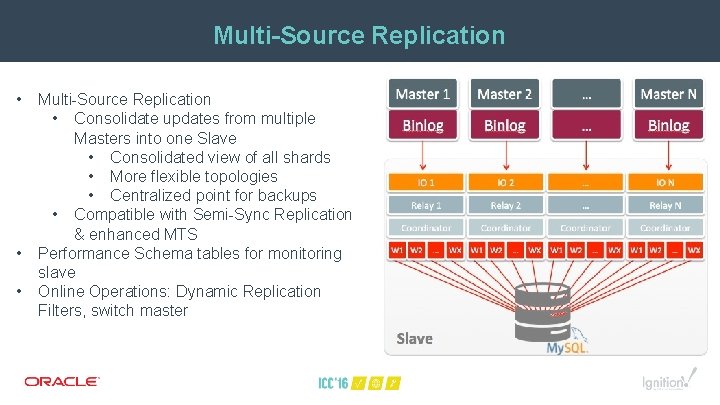

Multi-Source Replication • • • Multi-Source Replication • Consolidate updates from multiple Masters into one Slave • Consolidated view of all shards • More flexible topologies • Centralized point for backups • Compatible with Semi-Sync Replication & enhanced MTS Performance Schema tables for monitoring slave Online Operations: Dynamic Replication Filters, switch master

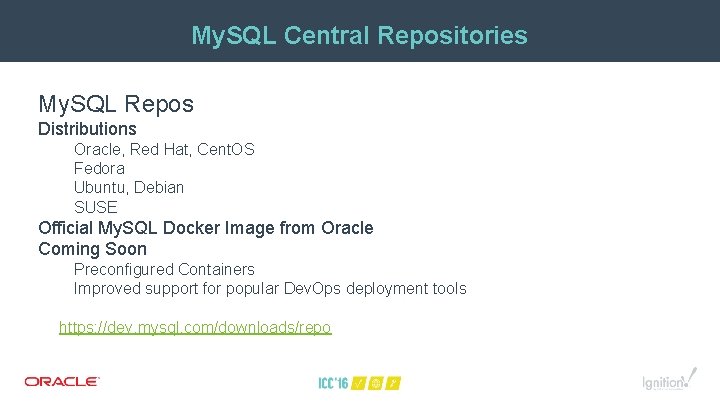

My. SQL Central Repositories My. SQL Repos Distributions Oracle, Red Hat, Cent. OS Fedora Ubuntu, Debian SUSE Official My. SQL Docker Image from Oracle Coming Soon Preconfigured Containers Improved support for popular Dev. Ops deployment tools https: //dev. mysql. com/downloads/repo

My. SQL Cluster • 200 Million No. SQL Reads/Sec • 2. 5 M SQL Ops/Sec • 20 M Writes/Sec • Active-Active Geographic Redundancy • Conflict Detection/Resolution • Online Operations • Elastic Growth • Detailed Reporting Performance Active-Active Management

Questions?

Thank You