MXNet Internals Cyrus M Vahid Principal Solutions Architect

- Slides: 19

MXNet Internals. Cyrus M. Vahid, Principal Solutions Architect @ AWS Deep Learning, cyrusmv@amazon.com. June 2017. © 2017, Amazon Web Services, Inc. or its Affiliates. All rights reserved.

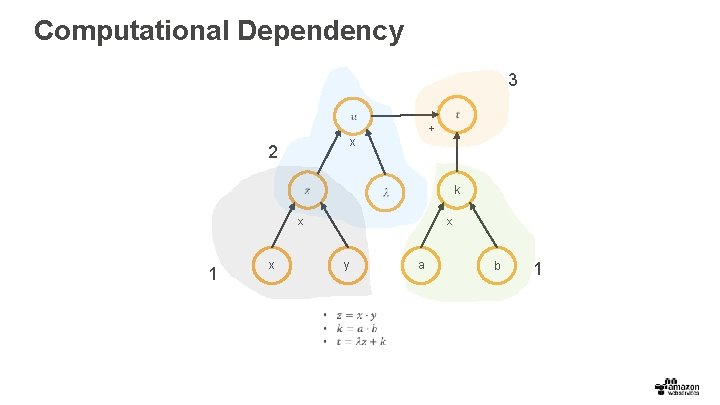

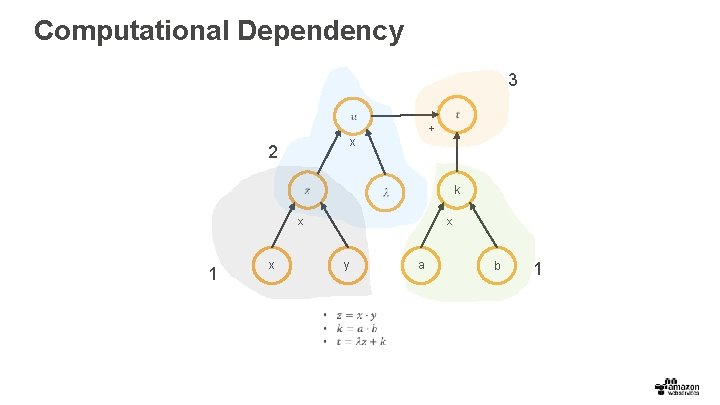

Computational Dependency (figure: computation graph)

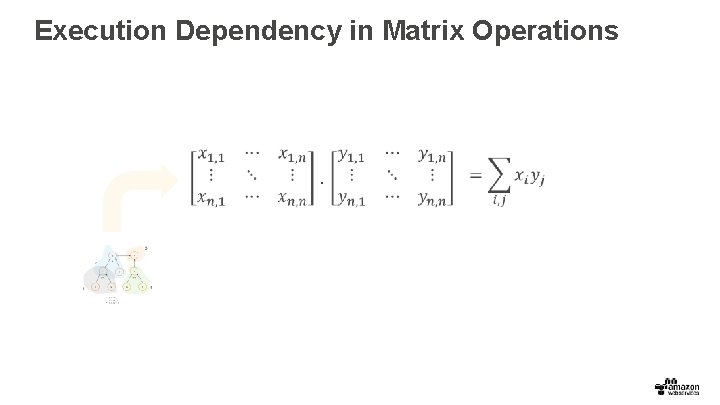

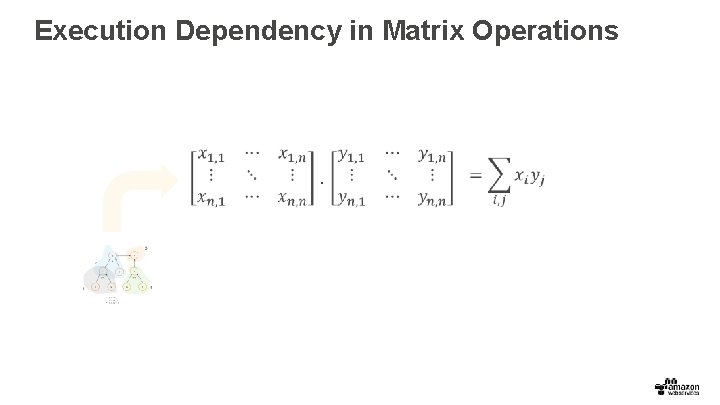

Execution Dependency in Matrix Operations

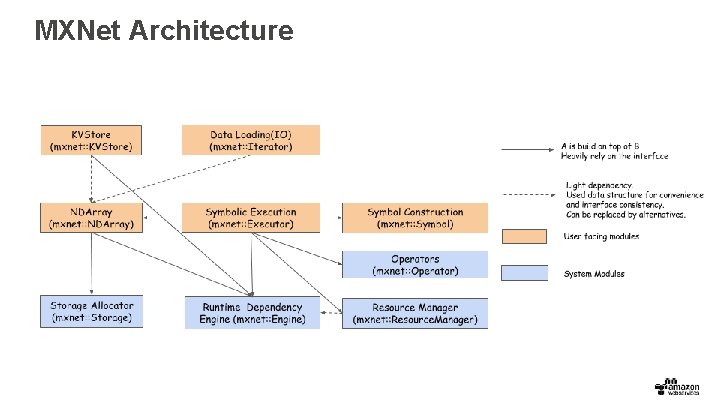

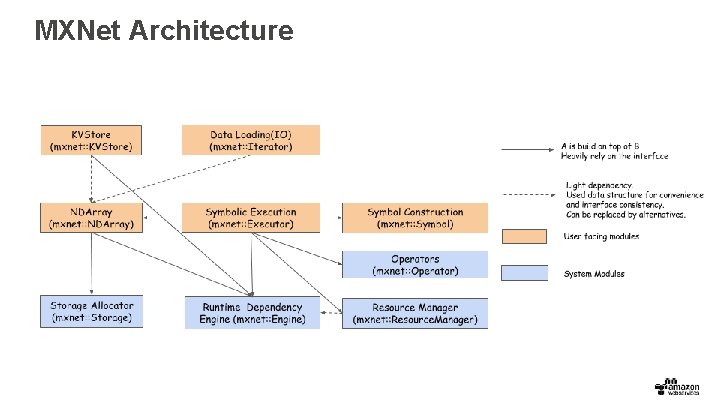

MXNet Architecture

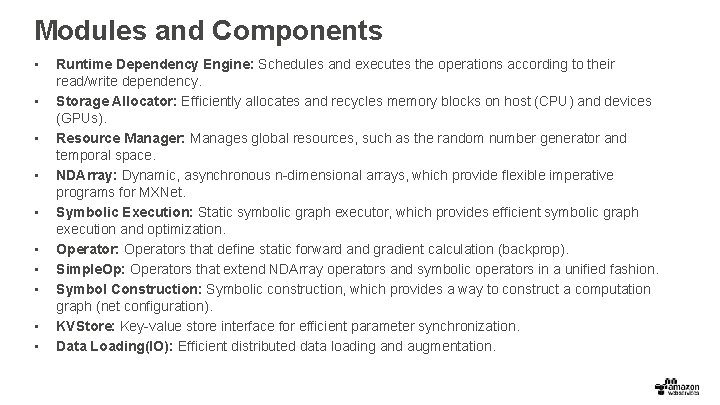

Modules and Components

- Runtime Dependency Engine: Schedules and executes operations according to their read/write dependencies.
- Storage Allocator: Efficiently allocates and recycles memory blocks on the host (CPU) and devices (GPUs).
- Resource Manager: Manages global resources, such as the random number generator and temporary space.
- NDArray: Dynamic, asynchronous n-dimensional arrays, which provide flexible imperative programs for MXNet.
- Symbolic Execution: Static symbolic graph executor, which provides efficient symbolic graph execution and optimization.
- Operator: Operators that define static forward and gradient calculation (backprop).
- SimpleOp: Operators that extend NDArray operators and symbolic operators in a unified fashion.
- Symbol Construction: Symbolic construction, which provides a way to construct a computation graph (net configuration).
- KVStore: Key-value store interface for efficient parameter synchronization.
- Data Loading (IO): Efficient distributed data loading and augmentation.
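To make "schedules operations according to their read/write dependency" concrete, here is a minimal pure-Python sketch. It is not MXNet's engine: the `Op` record and the `schedule` function are hypothetical names invented for illustration. It only shows the idea that an operation must wait for any earlier operation that writes something it reads, writes something it writes, or reads something it writes.

```python
from collections import namedtuple

# Hypothetical op record (not an MXNet type): a name plus the
# variables the operation reads and the variables it writes.
Op = namedtuple("Op", ["name", "reads", "writes"])


def schedule(ops):
    """Group ops into waves. Ops in the same wave have no read/write
    conflict with each other, so they could run in parallel."""
    level = {}
    for i, op in enumerate(ops):
        lvl = 0
        for prev in ops[:i]:
            conflict = (
                set(prev.writes) & set(op.reads)      # read after write
                or set(prev.writes) & set(op.writes)  # write after write
                or set(prev.reads) & set(op.writes)   # write after read
            )
            if conflict:
                lvl = max(lvl, level[prev.name] + 1)
        level[op.name] = lvl

    waves = {}
    for op in ops:
        waves.setdefault(level[op.name], []).append(op.name)
    return [waves[k] for k in sorted(waves)]


ops = [
    Op("b = a + 1", reads=["a"], writes=["b"]),
    Op("c = a * 2", reads=["a"], writes=["c"]),
    Op("d = b + c", reads=["b", "c"], writes=["d"]),
    Op("a = d", reads=["d"], writes=["a"]),
]
waves = schedule(ops)
for wave in waves:
    print(wave)
```

The first two operations only read `a`, so they land in the same wave; the last one overwrites `a` and therefore has to wait for every earlier reader.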

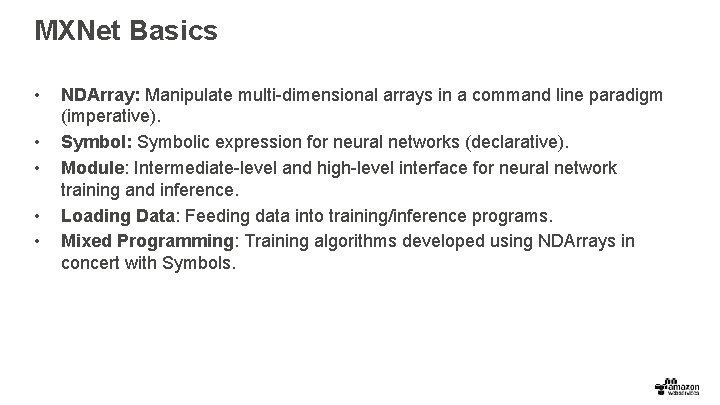

MXNet Basics

- NDArray: Manipulate multi-dimensional arrays in a command line paradigm (imperative).
- Symbol: Symbolic expression for neural networks (declarative).
- Module: Intermediate-level and high-level interface for neural network training and inference.
- Loading Data: Feeding data into training/inference programs.
- Mixed Programming: Training algorithms developed using NDArrays in concert with Symbols.

NDArray

- The intention is to replicate NumPy's API, but optimized for GPU.
- It provides matrix operations.
- API docs are located here. Tutorials are here.

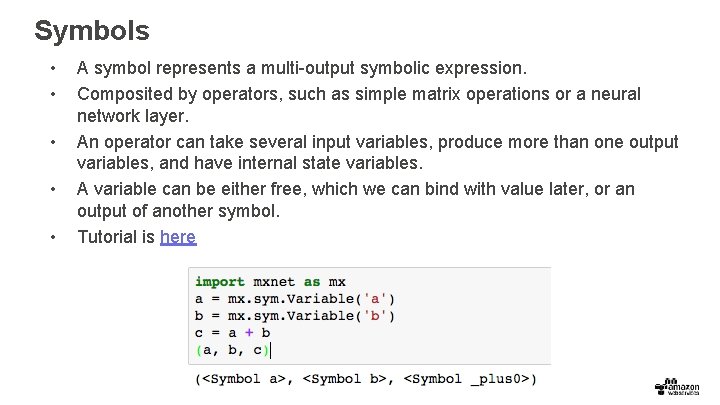

Symbols

- A symbol represents a multi-output symbolic expression.
- Symbols are composed of operators, such as simple matrix operations or a neural network layer.
- An operator can take several input variables, produce more than one output variable, and have internal state variables.
- A variable can be either free, in which case we can bind it to a value later, or an output of another symbol.
- Tutorial is here.
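The idea of a free variable that is bound to a value later can be sketched with a toy expression class. This is an illustration in plain Python, not MXNet's Symbol implementation: the `Sym` class is hypothetical, and its `list_arguments` and `eval` methods are only loosely modeled on the idea of listing free variables and binding them at evaluation time.

```python
class Sym:
    """Toy symbolic node (hypothetical, not mx.sym.Symbol): either a
    free variable or an operator applied to other symbols."""

    def __init__(self, name=None, op=None, inputs=()):
        self.name, self.op, self.inputs = name, op, inputs

    def __add__(self, other):
        return Sym(op=lambda x, y: x + y, inputs=(self, other))

    def __mul__(self, other):
        return Sym(op=lambda x, y: x * y, inputs=(self, other))

    def list_arguments(self):
        """Names of the free variables, in first-seen order."""
        if self.op is None:
            return [self.name]
        names = []
        for sym in self.inputs:
            for name in sym.list_arguments():
                if name not in names:
                    names.append(name)
        return names

    def eval(self, **bindings):
        """Bind free variables to values and compute the result."""
        if self.op is None:
            return bindings[self.name]
        return self.op(*(s.eval(**bindings) for s in self.inputs))


a = Sym("a")
b = Sym("b")
c = (a + b) * a          # nothing is computed yet; c is only a graph

args = c.list_arguments()
result = c.eval(a=2, b=3)
print(args, result)
```

Building `c` performs no arithmetic. The values are supplied only in the `eval` call, which is the declarative pattern the slide describes.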

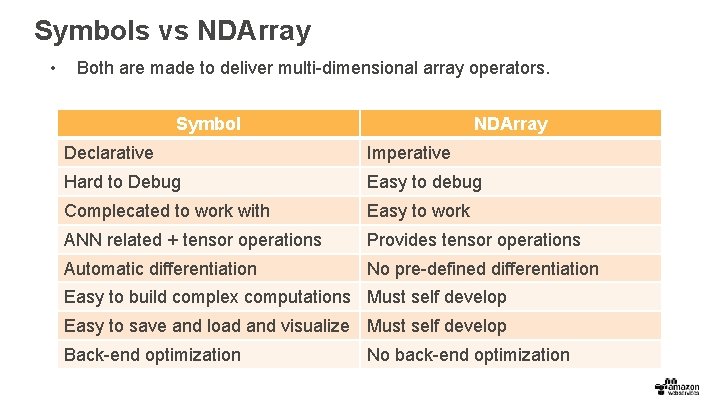

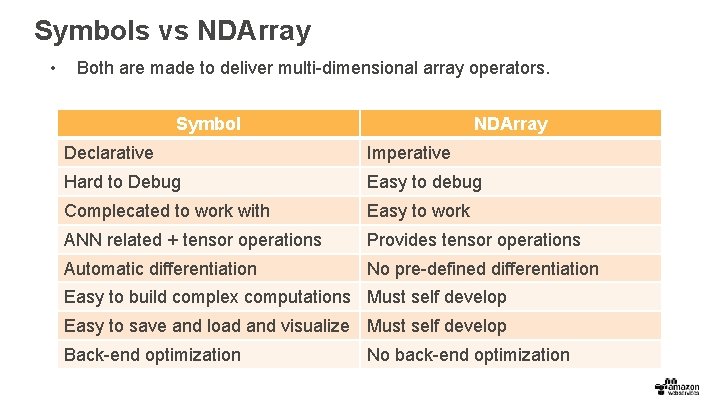

Symbols vs NDArray

- Both are made to deliver multi-dimensional array operators.

| Symbol | NDArray |
| --- | --- |
| Declarative | Imperative |
| Hard to debug | Easy to debug |
| Complicated to work with | Easy to work with |
| ANN-related and tensor operations | Provides tensor operations |
| Automatic differentiation | No pre-defined differentiation |
| Easy to build complex computations | Must self-develop |
| Easy to save, load, and visualize | Must self-develop |
| Back-end optimization | No back-end optimization |

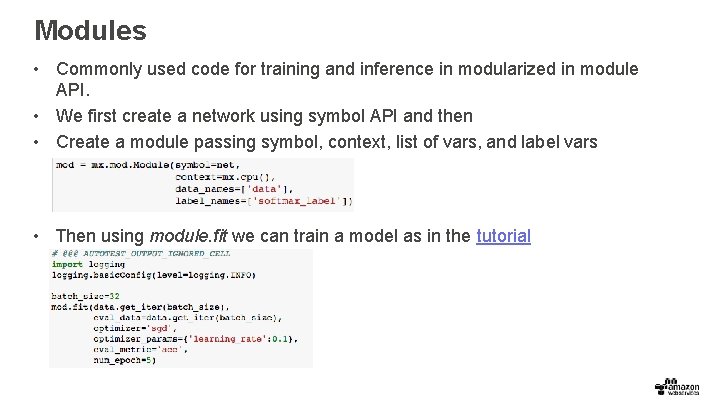

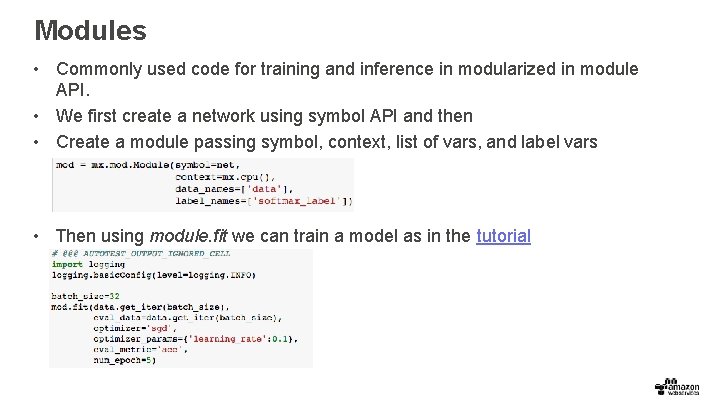

Modules

- Commonly used code for training and inference is modularized in the module API.
- We first create a network using the symbol API.
- We then create a module, passing the symbol, the context, the list of data variables, and the label variables.
- Then, using module.fit, we can train a model as in the tutorial.

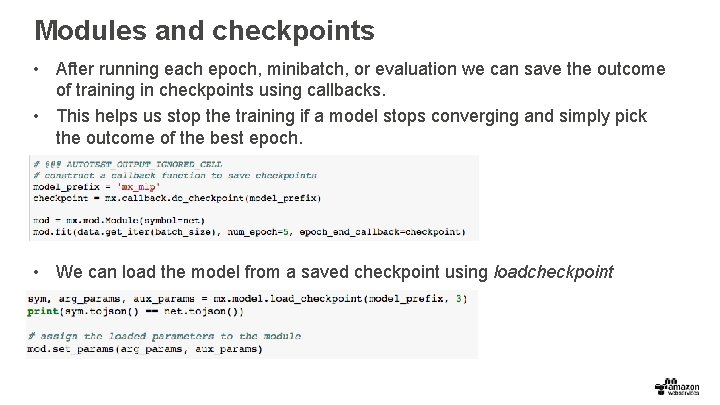

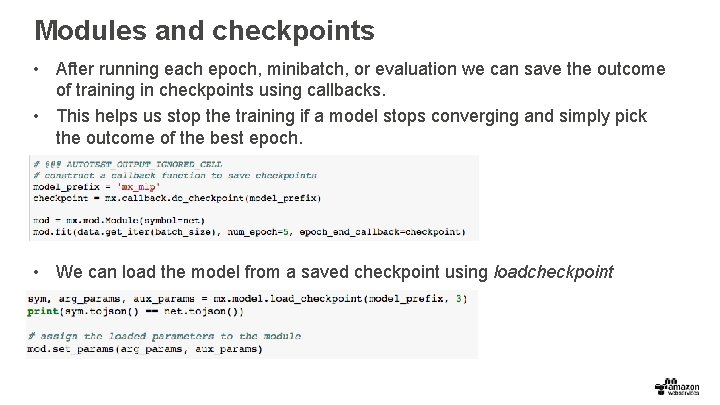

Modules and checkpoints

- After each epoch, minibatch, or evaluation, we can save the outcome of training in checkpoints using callbacks.
- This lets us stop training if a model stops converging and simply pick the outcome of the best epoch.
- We can load the model from a saved checkpoint using load_checkpoint.

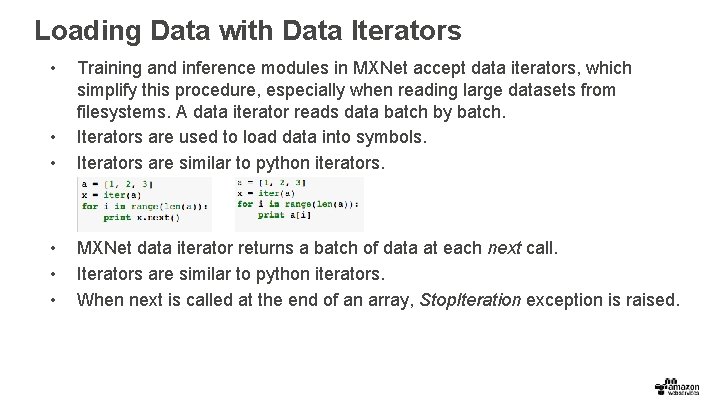

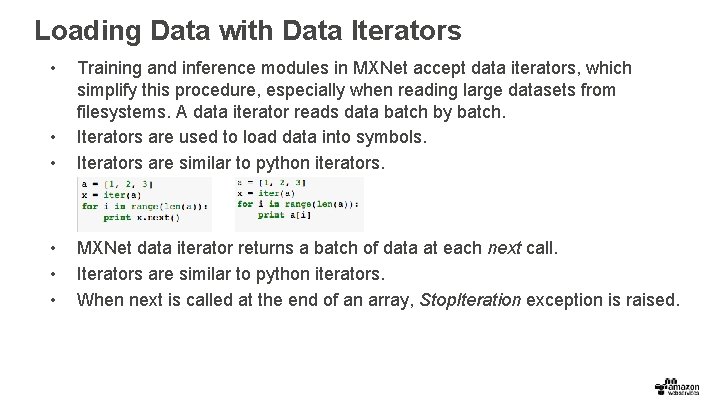

Loading Data with Data Iterators

- Training and inference modules in MXNet accept data iterators, which simplify data loading, especially when reading large datasets from filesystems.
- A data iterator reads data batch by batch.
- Iterators are used to load data into symbols.
- Iterators are similar to Python iterators.
- An MXNet data iterator returns a batch of data at each next call.
- When next is called at the end of an array, a StopIteration exception is raised.

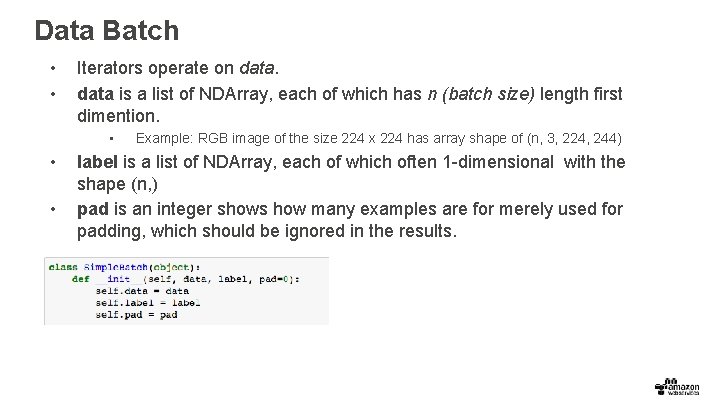

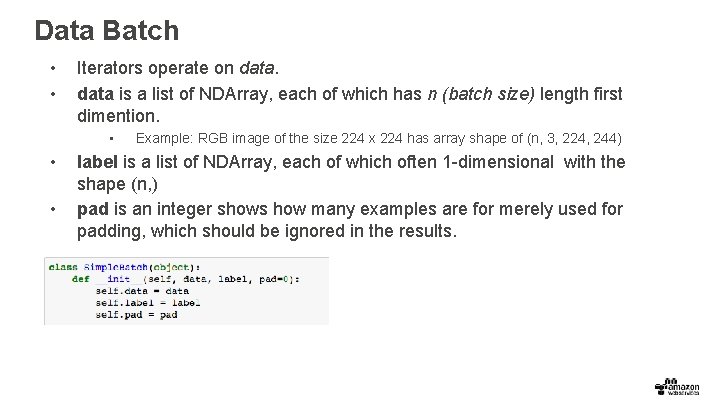

Data Batch

- Iterators operate on data batches, which contain:
- data: a list of NDArray, each of which has n (the batch size) as the length of its first dimension.
- Example: a batch of RGB images of size 224 x 224 has array shape (n, 3, 224, 224).
- label: a list of NDArray, each of which is often 1-dimensional with shape (n,).
- pad: an integer that shows how many examples are used merely for padding and should be ignored in the results.
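A small worked example may help with the shapes and the meaning of pad. The sketch below is plain Python arithmetic, not an MXNet call; `batch_layout` is a hypothetical helper, and it assumes the last batch is filled up to the full batch size with padding examples.

```python
def batch_layout(num_examples, batch_size, channels=3, height=224, width=224):
    """Describe each batch an iterator would yield, assuming the final
    batch is padded up to batch_size (hypothetical helper)."""
    layout = []
    for start in range(0, num_examples, batch_size):
        real = min(batch_size, num_examples - start)  # real examples in batch
        layout.append({
            "data_shape": (batch_size, channels, height, width),
            "label_shape": (batch_size,),
            "pad": batch_size - real,  # trailing examples to ignore
        })
    return layout


layout = batch_layout(num_examples=10, batch_size=4)
for batch in layout:
    print(batch)
```

With 10 examples and a batch size of 4, the third batch holds only 2 real examples, so its pad is 2 and the last two results of that batch should be discarded.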

MXNet Data Iterators

| Iterator | Description |
| --- | --- |
| io.NDArrayIter | Iterating on either mx.nd.NDArray or numpy.ndarray. |
| io.CSVIter | Iterating on CSV files. |
| io.ImageRecordIter | Iterating on image RecordIO files. |
| io.ImageRecordUInt8Iter | Create iterator for dataset packed in recordio. |
| io.MNISTIter | Iterating on the MNIST dataset. |
| recordio.MXRecordIO | Read/write RecordIO format data. |
| recordio.MXIndexedRecordIO | Read/write RecordIO format data supporting random access. |
| image.ImageIter | Image data iterator with a large number of augmentation choices. |

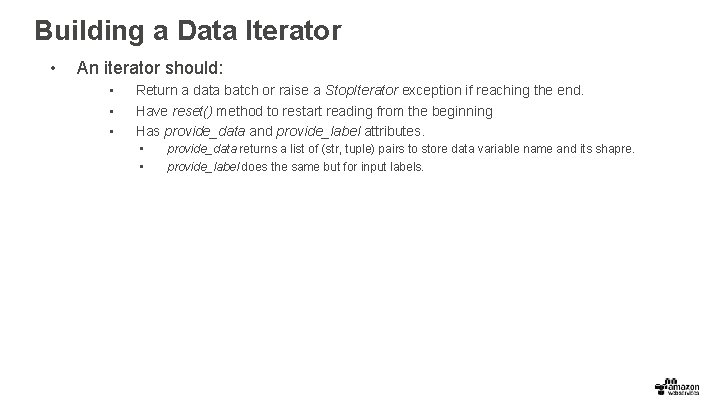

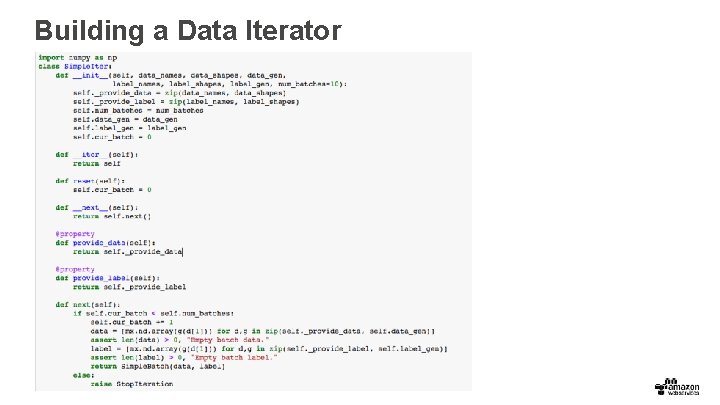

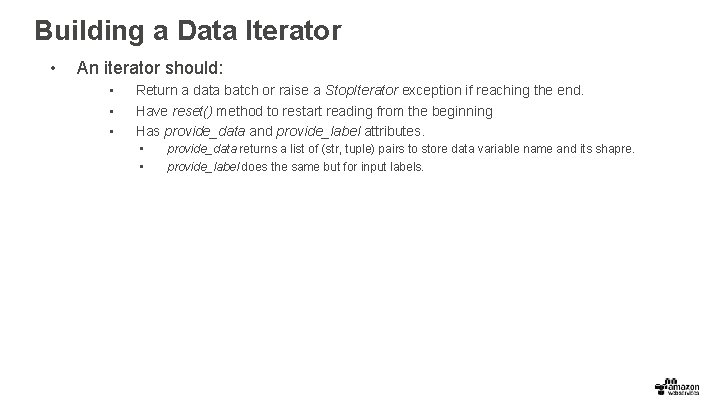

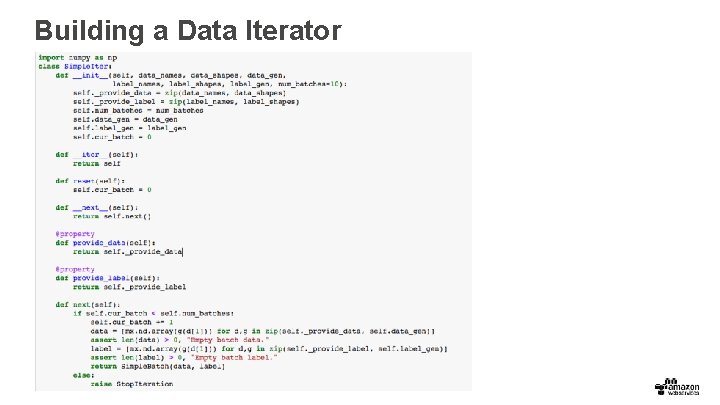

Building a Data Iterator

An iterator should:

- Return a data batch, or raise a StopIteration exception when reaching the end.
- Have a reset() method to restart reading from the beginning.
- Have provide_data and provide_label attributes.
- provide_data returns a list of (str, tuple) pairs that store each data variable's name and its shape.
- provide_label does the same, but for input labels.
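The requirements above can be sketched in plain Python. This toy class does not subclass any MXNet type and returns simple tuples instead of real data batches; the class name `SimpleIter` and the variable names "data" and "softmax_label" are assumptions chosen for illustration.

```python
class SimpleIter:
    """Toy iterator over in-memory lists (hypothetical, not an MXNet
    class). It yields full batches only and drops any remainder."""

    def __init__(self, data, labels, batch_size):
        self.data, self.labels, self.batch_size = data, labels, batch_size
        self.cursor = 0

    @property
    def provide_data(self):
        # List of (name, shape) pairs for the data variables.
        return [("data", (self.batch_size, len(self.data[0])))]

    @property
    def provide_label(self):
        # Same structure, but for the input labels.
        return [("softmax_label", (self.batch_size,))]

    def reset(self):
        """Restart reading from the beginning."""
        self.cursor = 0

    def __iter__(self):
        return self

    def __next__(self):
        end = self.cursor + self.batch_size
        if end > len(self.data):
            raise StopIteration
        batch = (self.data[self.cursor:end], self.labels[self.cursor:end])
        self.cursor = end
        return batch

    next = __next__  # allow it.next() as well as next(it)


data = [[float(i), float(i) + 0.5] for i in range(6)]
labels = [i % 2 for i in range(6)]
it = SimpleIter(data, labels, batch_size=2)

first_pass = list(it)   # consumes all batches
exhausted = list(it)    # nothing left until reset() is called
it.reset()
second_pass = list(it)
print(len(first_pass), len(exhausted), len(second_pass))
```

Without the call to reset(), a second pass over the iterator yields nothing, which is why a training loop that runs several epochs needs that method.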

Building a Data Iterator

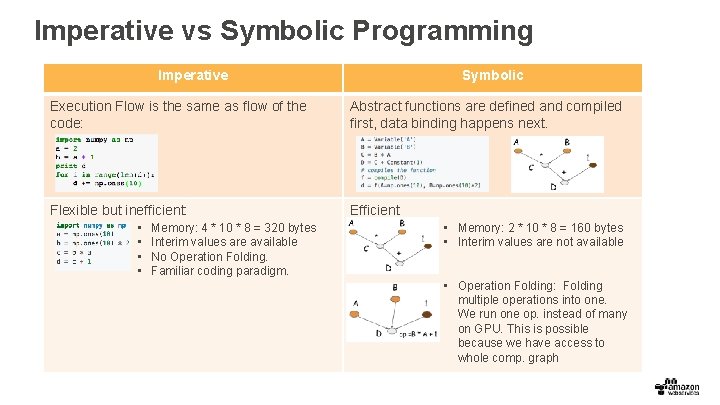

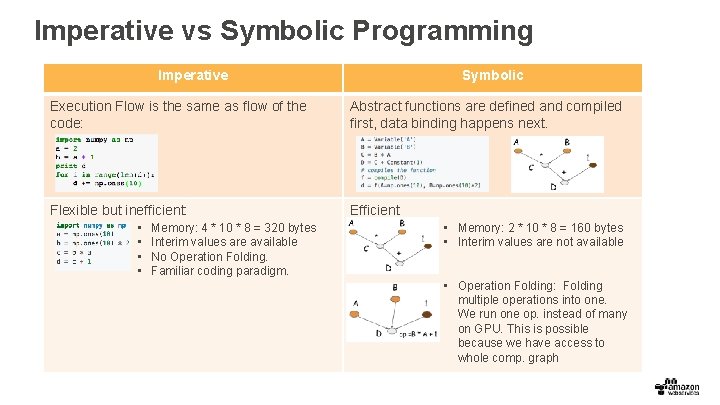

Imperative vs Symbolic Programming

Imperative:

- Execution flow is the same as the flow of the code.
- Flexible but inefficient.
- Memory: 4 * 10 * 8 = 320 bytes.
- Interim values are available.
- No operation folding.
- Familiar coding paradigm.

Symbolic:

- Abstract functions are defined and compiled first; data binding happens next.
- Efficient.
- Memory: 2 * 10 * 8 = 160 bytes.
- Interim values are not available.
- Operation folding: folding multiple operations into one. We run one op instead of many on the GPU. This is possible because we have access to the whole computation graph.
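The memory figures can be reproduced with a short sketch. It assumes the numbers refer to arrays of 10 elements at 8 bytes each and to a program of the form `c = b * a; d = c + 1`; that program is an assumption, since the slide's code is only available as an image. The sketch uses Python's standard `array` module rather than MXNet, and the in-place loop merely imitates what a graph optimizer could do.

```python
from array import array

N = 10  # elements per array; each "d" (double) element takes 8 bytes


def ones(n, scale=1.0):
    return array("d", [scale] * n)


# Imperative style: every line allocates a new array, so a, b, c and d
# all exist at the same time and interim values stay inspectable.
a = ones(N)
b = ones(N, 2.0)
c = array("d", (x * y for x, y in zip(b, a)))
d = array("d", (x + 1.0 for x in c))
imperative_bytes = sum(len(v) * v.itemsize for v in (a, b, c, d))

# Symbolic style (imitated): knowing the whole graph and that only d is
# needed, multiply and add are folded into one pass and the result is
# written into the buffer that held b. The interim value c never exists.
buf_a = ones(N)
buf_b = ones(N, 2.0)
for i in range(N):
    buf_b[i] = buf_b[i] * buf_a[i] + 1.0
symbolic_bytes = sum(len(v) * v.itemsize for v in (buf_a, buf_b))

print(imperative_bytes, symbolic_bytes)
```

Both versions compute the same final values, but the second holds two buffers instead of four, at the price of losing access to `c`.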

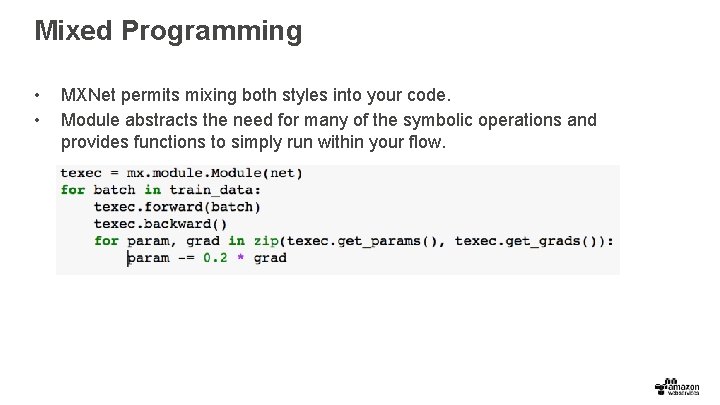

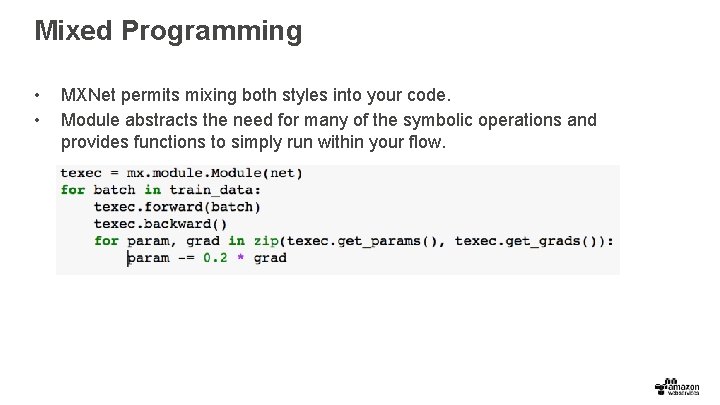

Mixed Programming

- MXNet permits mixing both styles in your code.
- Module abstracts away many of the symbolic operations and provides functions that you can simply run within your flow.

Thank you! Cyrus M. Vahid, cyrusmv@amazon.com