
MV-RLU: Scaling Read-Log-Update with Multi-Versioning

JAEHO KIM, AJIT MATHEW, SANIDHYA KASHYAP†, MADHAVA KRISHNAN RAMANATHAN, CHANGWOO MIN

Core count continues to rise…

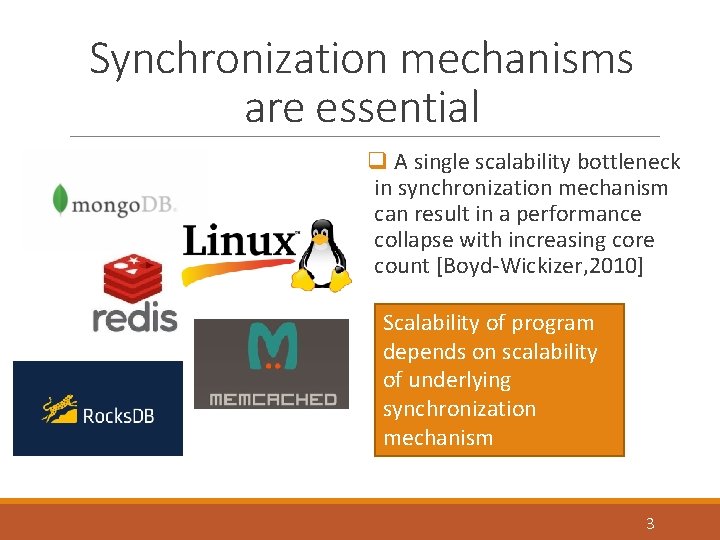

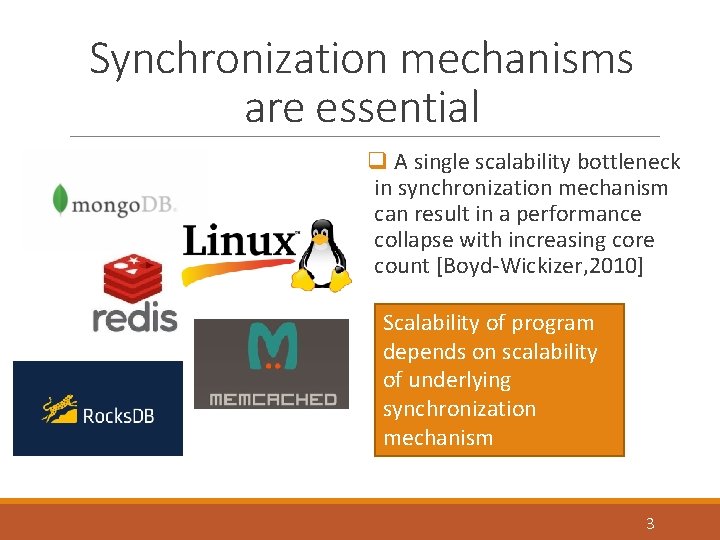

Synchronization mechanisms are essential

- A single scalability bottleneck in a synchronization mechanism can cause performance to collapse as the core count increases [Boyd-Wickizer, 2010].
- The scalability of a program depends on the scalability of its underlying synchronization mechanism.

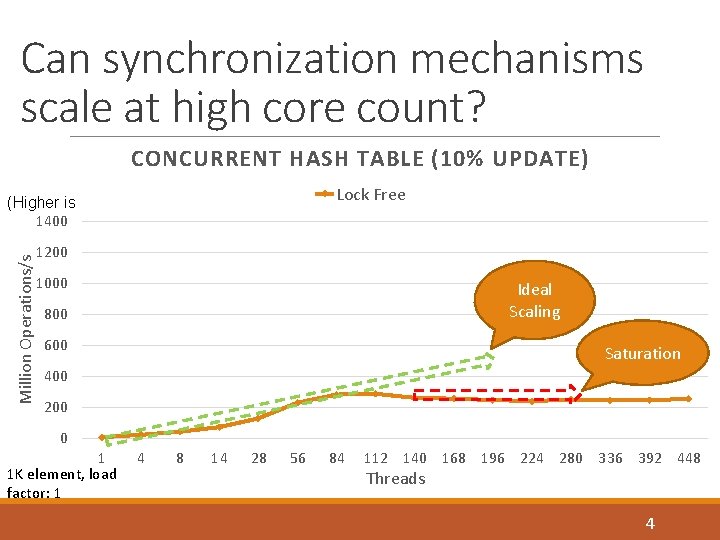

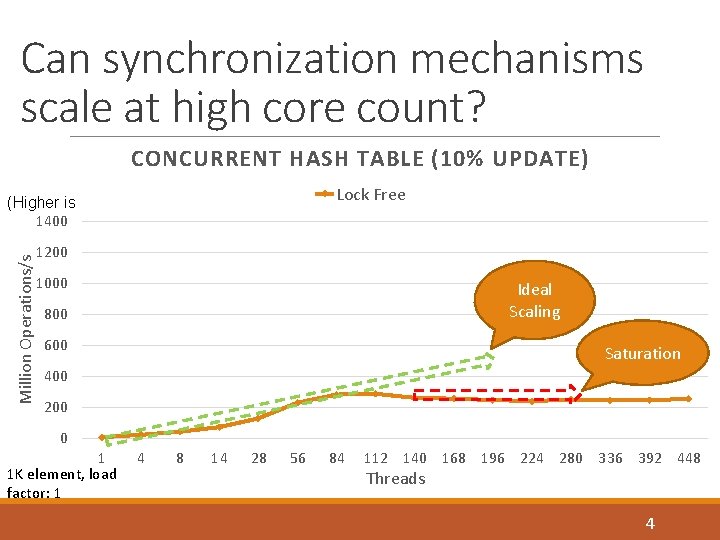

Can synchronization mechanisms scale at high core count?

[Figure: Concurrent hash table (10% update; 1K elements, load factor 1). Throughput in million operations/s (higher is better) vs. threads, from 1 to 448, for a lock-free hash table compared with ideal scaling; throughput saturates.]

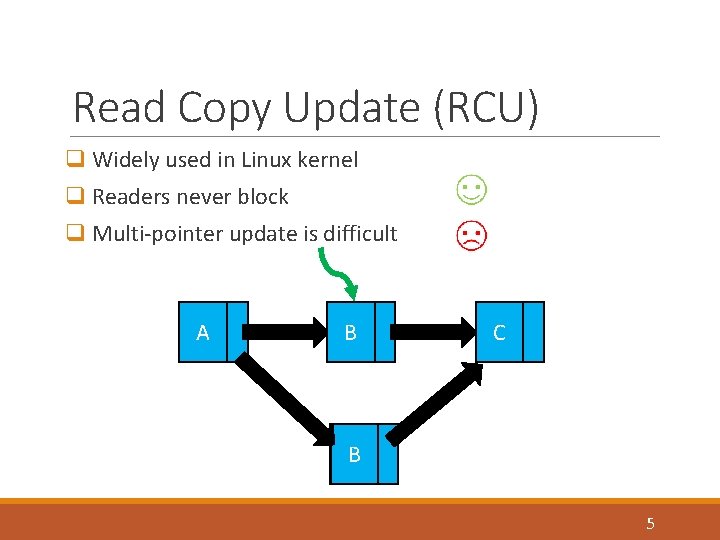

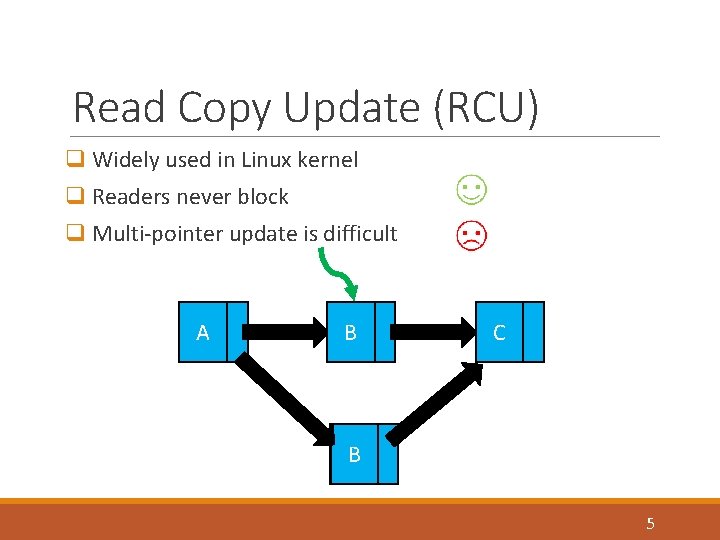

Read-Copy-Update (RCU)

- Widely used in the Linux kernel
- Readers never block
- Multi-pointer updates are difficult

[Figure: in a list A, B, C, node B is updated by creating a copy B’ and publishing it in place of B.]
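Why a single-pointer update is easy while a multi-pointer update is hard can be sketched with a small, single-threaded Python simulation. All names here (`Node`, `snapshot`, `rcu_replace`) are hypothetical, and the plain assignment stands in for an atomic pointer publication such as `rcu_assign_pointer` in the Linux kernel. Replacing B publishes one pointer, so a reader sees either B or B’; updating B and D needs two publications, and a reader running between them sees the new B next to the old D.

```python
class Node:
    def __init__(self, key, value, nxt=None):
        self.key = key
        self.value = value
        self.next = nxt


def snapshot(head):
    """A reader's traversal: collect (key, value) pairs in list order."""
    out, node = [], head
    while node is not None:
        out.append((node.key, node.value))
        node = node.next
    return out


def rcu_replace(prev, new_value):
    """Copy prev.next, modify the copy, and publish it with one pointer store."""
    old = prev.next
    new = Node(old.key, new_value, old.next)  # B' shares B's successor
    prev.next = new   # single publication point (atomic in real RCU)
    return old        # old node may only be freed after a grace period


# List A -> B -> C -> D
d = Node("D", 4)
c = Node("C", 3, d)
b = Node("B", 2, c)
a = Node("A", 1, b)

# Updating B and D "together" takes two separate publications.
rcu_replace(a, 20)    # publish B'
mid = snapshot(a)     # a reader that runs between the two stores
rcu_replace(c, 40)    # publish D'
```

The reader captured in `mid` observes B’ together with the old D, a state that never logically existed; avoiding this requires extra machinery, which is the gap RLU targets.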

![Read-Log-Update (RLU) slide](https://slidetodoc.com/presentation_image_h/7381bbf0aac578e08796c67261679485/image-6.jpg)

Read-Log-Update (RLU) [Matveev’15]

- Readers do not block
- Multi-pointer updates are allowed
- Key idea: use a global clock and per-thread logs to make updates atomically visible
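The key idea can be sketched as a single-threaded Python simulation, assuming hypothetical names (`Clock`, `Obj`, `RLUThread`) and omitting the atomics, fences, and the wait-for-readers step a real implementation needs. A writer copies objects into its per-thread log; at commit it takes a write clock of global clock + 1 and advances the global clock, so every copy in its log becomes visible at once to readers whose local clock is at least that write clock.

```python
import math


class Clock:
    """Global clock shared by all threads (hypothetical name)."""
    def __init__(self):
        self.value = 0


class Obj:
    def __init__(self, value):
        self.value = value   # the original object
        self.copy = None     # RLU allows at most one copy per object
        self.owner = None    # writer whose log holds the copy


class RLUThread:
    def __init__(self, clock):
        self.clock = clock
        self.local_clock = 0
        self.write_clock = math.inf  # infinity until the writer commits
        self.log = []                # per-thread log of locked objects

    def reader_lock(self):
        # Record the global clock at the start of the critical section.
        self.local_clock = self.clock.value

    def dereference(self, obj):
        owner = obj.owner
        if owner is None:
            return obj.value
        # Read the copy if it is ours or was committed before our section began.
        if owner is self or self.local_clock >= owner.write_clock:
            return obj.copy.value
        return obj.value

    def try_lock(self, obj):
        if obj.owner is self:
            return True
        if obj.owner is not None:
            return False  # another writer already holds the only copy
        obj.copy, obj.owner = Obj(obj.value), self
        self.log.append(obj)
        return True

    def commit(self):
        # One clock increment makes every copy in the log visible at once.
        self.write_clock = self.clock.value + 1
        self.clock.value += 1

    def write_back(self):
        # Real RLU first waits for readers that started before the commit.
        for obj in self.log:
            obj.value = obj.copy.value
            obj.copy = obj.owner = None
        self.log.clear()
        self.write_clock = math.inf
```

A reader that started before the commit keeps seeing both old values, while one that starts afterwards sees both new values. The `False` returned by `try_lock` to a second writer is the one-copy-per-object restriction that forces RLU writers to wait.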

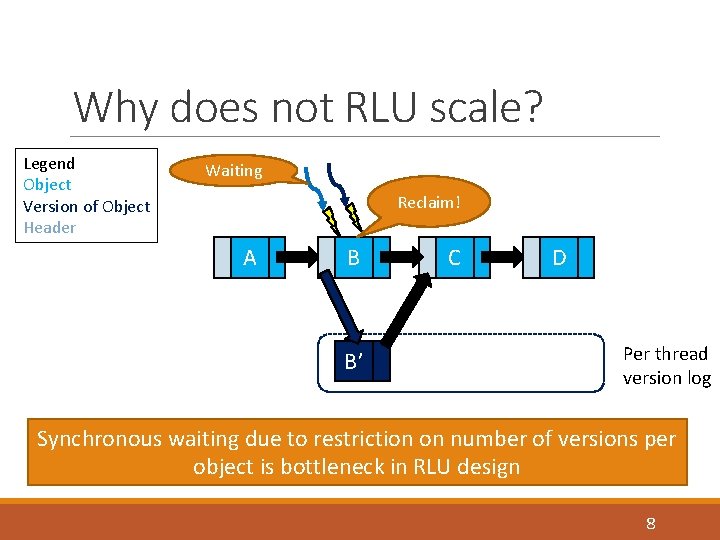

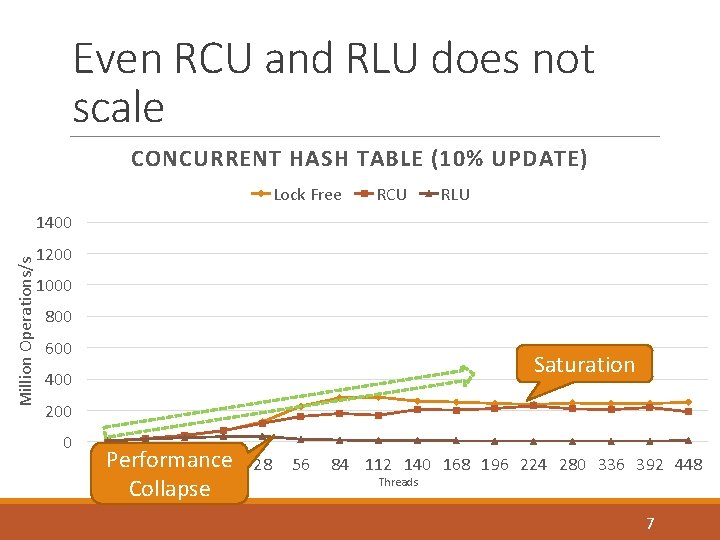

Even RCU and RLU do not scale

[Figure: Concurrent hash table (10% update). Throughput in million operations/s vs. threads, from 1 to 448, for lock-free, RCU and RLU; the plot marks saturation and a performance collapse.]

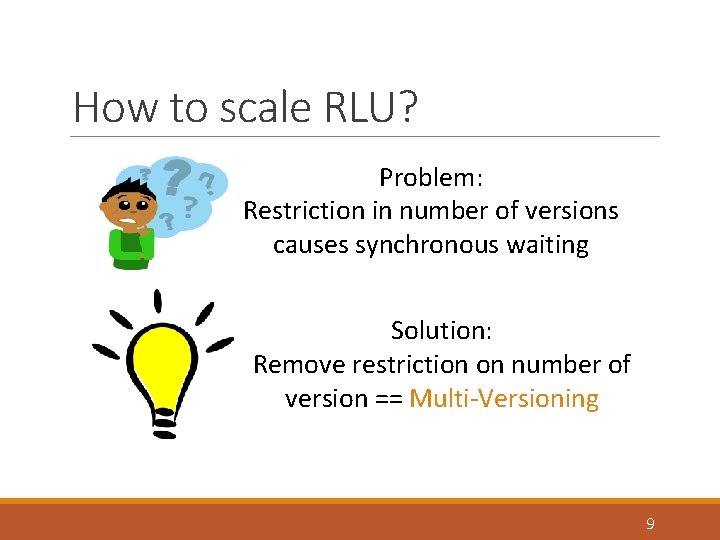

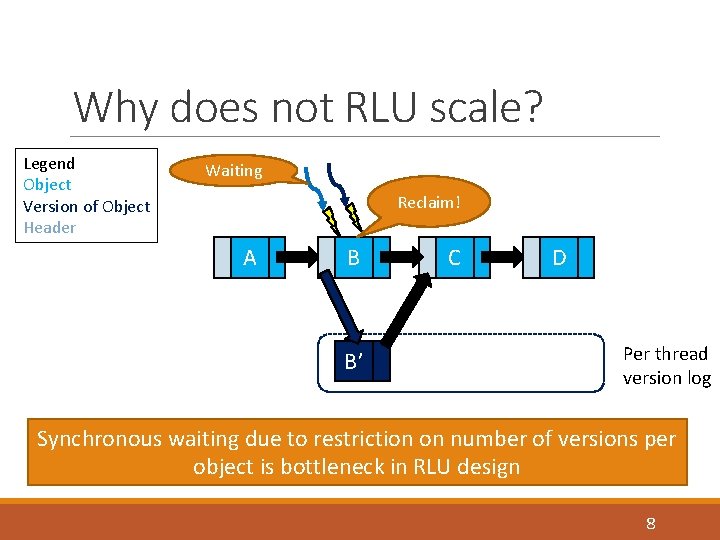

Why doesn't RLU scale?

[Figure: objects A, B, C and D with object headers and a per-thread version log; a writer must wait for the copy B’ to be reclaimed before it can create a new version of B.]

Synchronous waiting, caused by the restriction on the number of versions per object, is the bottleneck in RLU's design.

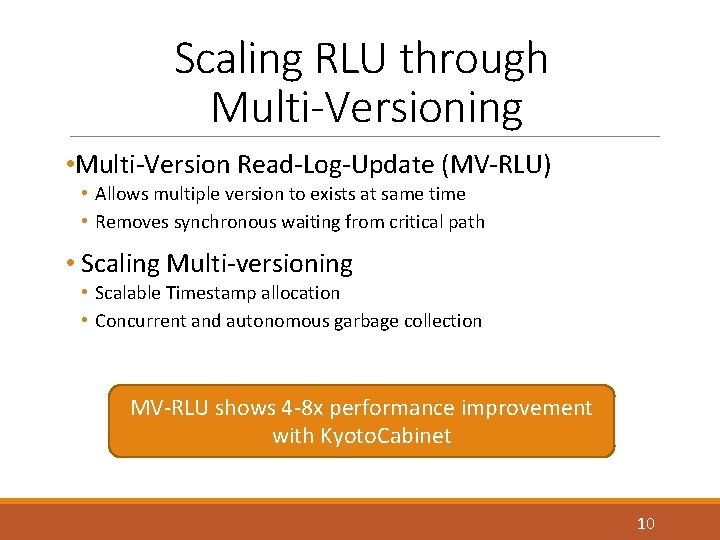

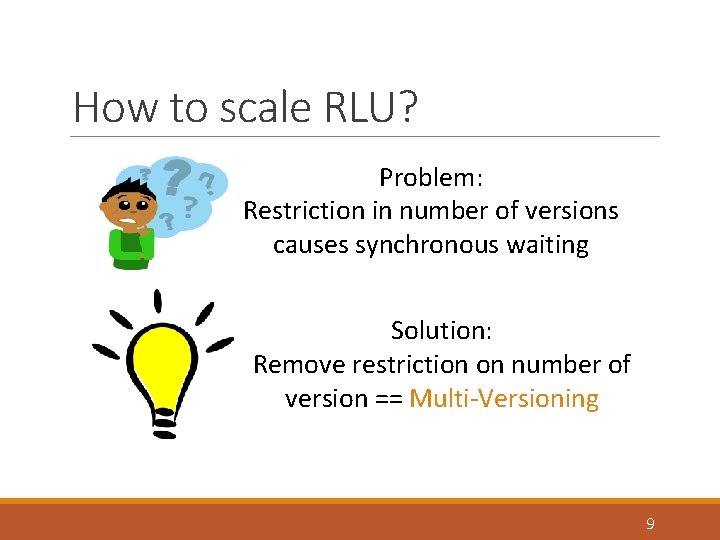

How to scale RLU?

- Problem: the restriction on the number of versions per object causes synchronous waiting.
- Solution: remove the restriction on the number of versions, i.e., multi-versioning.

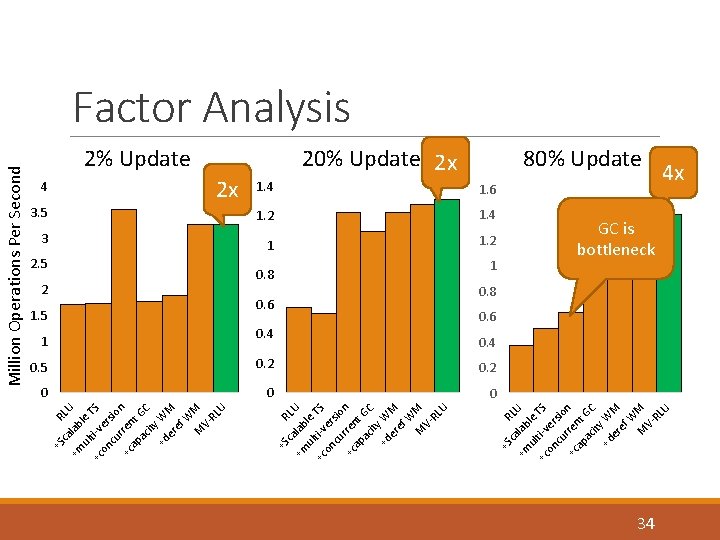

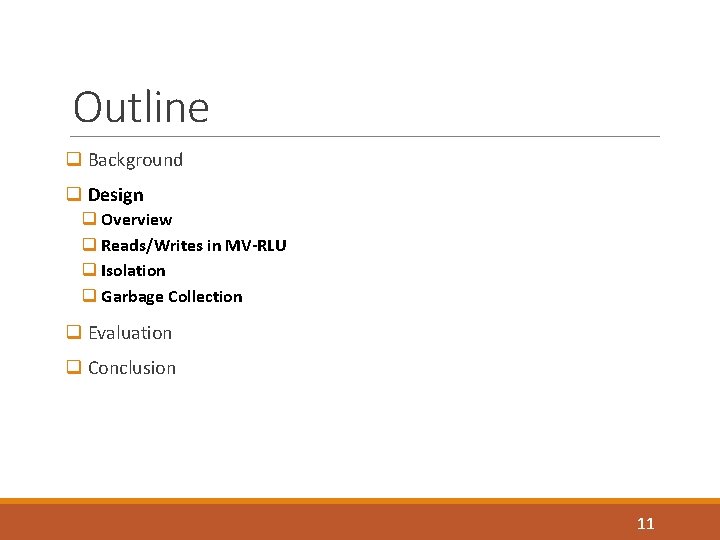

Scaling RLU through Multi-Versioning

- Multi-Version Read-Log-Update (MV-RLU)
  - Allows multiple versions of an object to exist at the same time
  - Removes synchronous waiting from the critical path
- Scaling multi-versioning
  - Scalable timestamp allocation
  - Concurrent and autonomous garbage collection

MV-RLU shows a 4-8x performance improvement with Kyoto Cabinet.

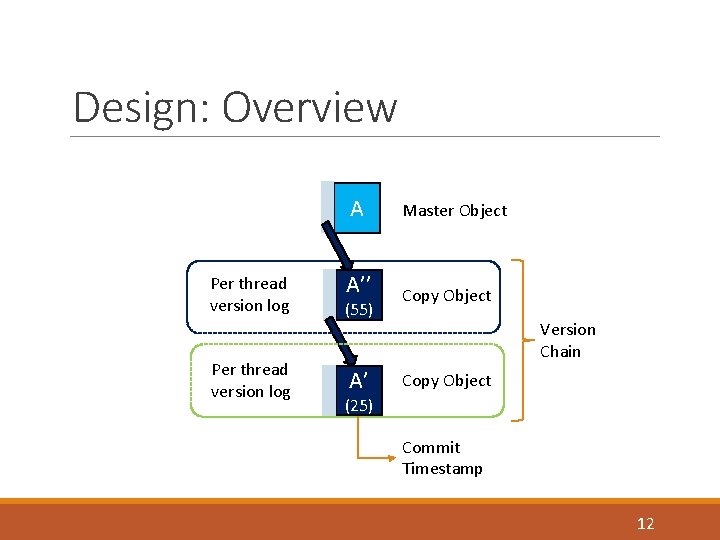

Outline

- Background
- Design
  - Overview
  - Reads/Writes in MV-RLU
  - Isolation
  - Garbage Collection
- Evaluation
- Conclusion

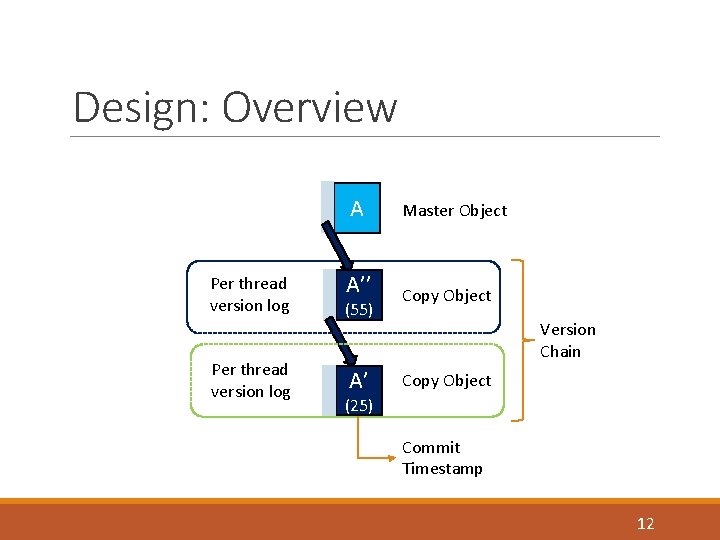

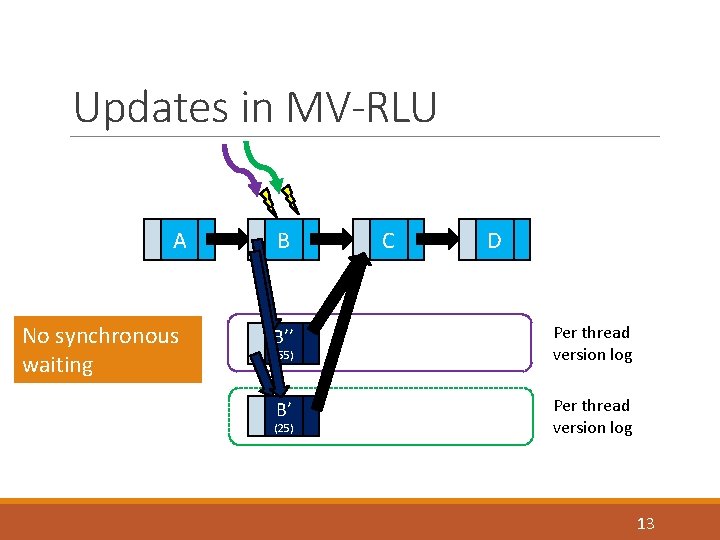

Design: Overview

[Figure: master object A points to a version chain of copy objects stored in per-thread version logs: A’’ (commit timestamp 55), then A’ (commit timestamp 25).]

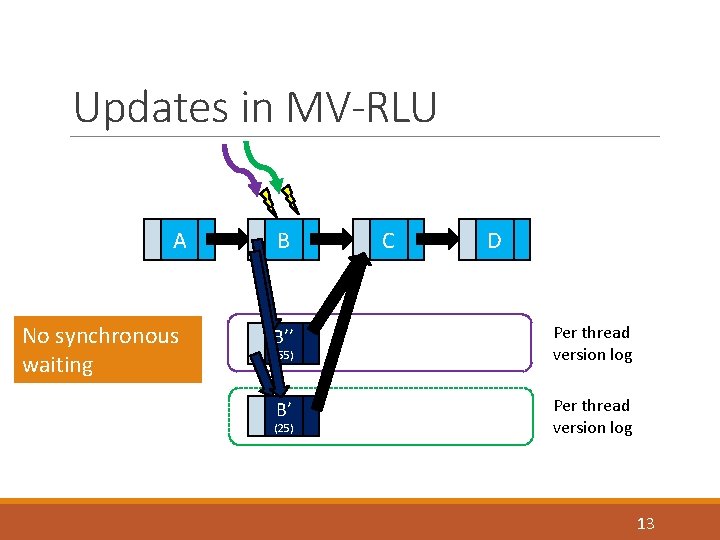

Updates in MV-RLU

[Figure: list A, B, C, D; object B has two versions, B’’ (55) and B’ (25), held in different per-thread version logs. A writer adds a new version without synchronous waiting.]
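The update path can be sketched in Python under stated assumptions: the names `Version`, `MasterObject`, `VersionLog`, `update` and `chain` are hypothetical, the chain is kept newest first, and the commit step is folded into `update` for brevity (the caller supplies the commit timestamp instead of it coming from timestamp allocation). Because a new copy is simply prepended, a writer never has to wait for an older copy to be reclaimed.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Version:
    value: int
    commit_ts: int
    older: Optional["Version"] = None   # next older copy in the chain


@dataclass
class MasterObject:
    value: int                          # state older than every copy
    head: Optional[Version] = None      # newest copy object first


@dataclass
class VersionLog:
    """Hypothetical per-thread version log: the copies this thread created."""
    entries: list = field(default_factory=list)


def update(master: MasterObject, new_value: int, commit_ts: int,
           log: VersionLog) -> Version:
    # Prepend a new copy; older copies stay where they are, so nothing waits.
    v = Version(new_value, commit_ts, master.head)
    master.head = v
    log.entries.append(v)
    return v


def chain(master: MasterObject):
    """List (value, commit_ts) pairs from newest to oldest."""
    out, v = [], master.head
    while v is not None:
        out.append((v.value, v.commit_ts))
        v = v.older
    return out
```

With two writers updating B at timestamps 25 and 55, `chain` returns the newer copy first, and each copy lives in the log of the thread that created it, mirroring B’’ and B’ in the figure.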

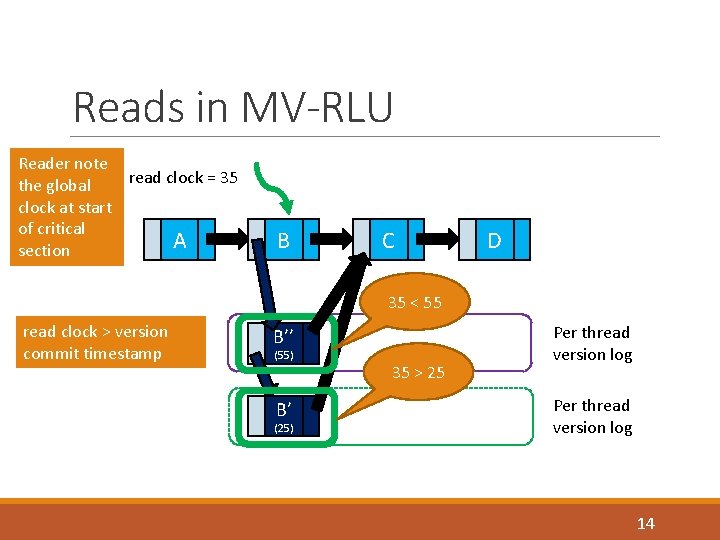

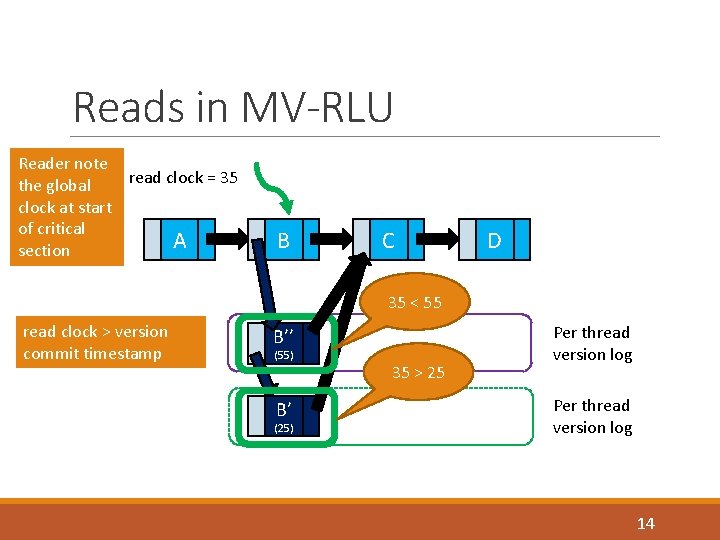

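Reads in MV-RLU

- At the start of a critical section, a reader notes the global clock as its read clock (here, 35).
- The reader walks the version chain of B and reads the newest version whose commit timestamp is lower than its read clock: it skips B’’ (35 < 55) and reads B’ (35 > 25). The versions live in per-thread version logs.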
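The read rule can be sketched in Python; `Version`, `MasterObject` and `dereference` are hypothetical names. Two points are assumptions of this sketch: a version is visible when its commit timestamp is strictly lower than the read clock (matching the slide's comparison; tie handling is not shown there), and the master object holds a state older than every copy, so it is read when no copy is visible. An uncommitted copy carries `math.inf` and is therefore skipped by every reader.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Version:
    value: str
    commit_ts: float                    # math.inf until the writer commits
    older: Optional["Version"] = None


@dataclass
class MasterObject:
    value: str
    head: Optional[Version] = None      # newest version first


def dereference(master: MasterObject, read_clock: int) -> str:
    """Return the newest version committed before the reader's read clock."""
    v = master.head
    while v is not None:
        if v.commit_ts < read_clock:
            return v.value
        v = v.older                     # too new for this snapshot: keep walking
    return master.value                 # no copy is visible: read the master
```

With B’’ at 55 and B’ at 25, a reader whose read clock is 35 gets B’, one at 60 gets B’’, and one at 20 falls back to the master object.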

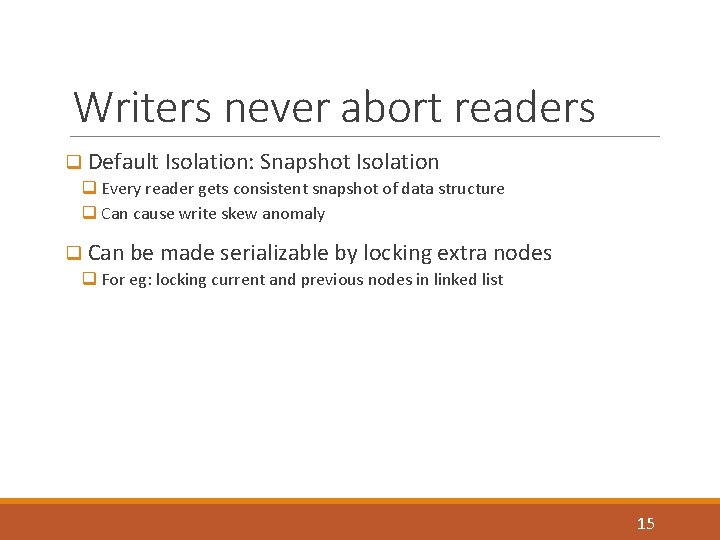

Writers never abort readers

- Default isolation: snapshot isolation
- Every reader gets a consistent snapshot of the data structure
- Can cause the write skew anomaly
- Can be made serializable by locking extra nodes
- For example, locking both the current and the previous node in a linked list
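Write skew, and the fix of locking extra nodes, can be illustrated with a toy multi-version store in Python. This is not MV-RLU's API: `SIStore`, `go_off_call` and `run` are hypothetical, conflicts are detected only on write sets (first committer wins), and "locking" a node is modeled as writing it back unchanged so that it joins the write set. The scenario is the classic on-call example (at least one of two people must stay on call) rather than the slide's linked list.

```python
class SIStore:
    """Toy multi-version store: snapshot reads, first-committer-wins writes."""

    def __init__(self, data):
        self.versions = {k: [(0, v)] for k, v in data.items()}  # key -> [(ts, value)]
        self.clock = 0

    def begin(self):
        return {"start": self.clock, "writes": {}}

    def read(self, tx, key):
        if key in tx["writes"]:
            return tx["writes"][key]
        # Newest version committed at or before this transaction's snapshot.
        return max((ts, v) for ts, v in self.versions[key] if ts <= tx["start"])[1]

    def lock(self, tx, key):
        # Model "locking an extra node" as writing it back unchanged,
        # which puts it in the write set and hence in conflict detection.
        tx["writes"].setdefault(key, self.read(tx, key))

    def commit(self, tx):
        # Abort if any key we write was committed by someone after our snapshot.
        for key in tx["writes"]:
            if self.versions[key][-1][0] > tx["start"]:
                return False
        self.clock += 1
        for key, value in tx["writes"].items():
            self.versions[key].append((self.clock, value))
        return True


def go_off_call(store, tx, me, other, lock_other=False):
    """Leave the on-call rota only if the snapshot shows both still on call."""
    if lock_other:
        store.lock(tx, other)
    if store.read(tx, me) + store.read(tx, other) >= 2:
        tx["writes"][me] = 0


def run(lock_other):
    store = SIStore({"alice": 1, "bob": 1})
    t1, t2 = store.begin(), store.begin()   # concurrent: same snapshot
    go_off_call(store, t1, "alice", "bob", lock_other)
    go_off_call(store, t2, "bob", "alice", lock_other)
    ok = (store.commit(t1), store.commit(t2))
    final = {k: vs[-1][1] for k, vs in store.versions.items()}
    return ok, final
```

Without locking, both transactions commit because their write sets are disjoint, and nobody is left on call. With the extra lock, the second commit conflicts and fails, preserving the invariant.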

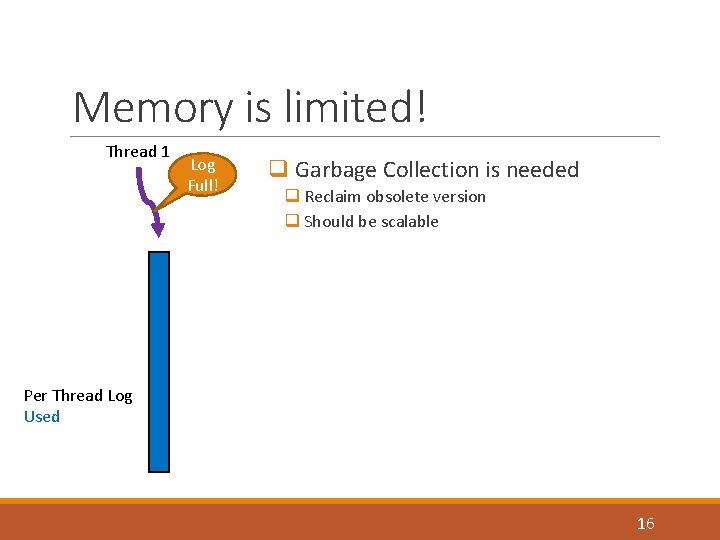

Memory is limited!

[Figure: Thread 1's per-thread log is full.]

- Garbage collection is needed
  - Reclaim obsolete versions
  - Should be scalable

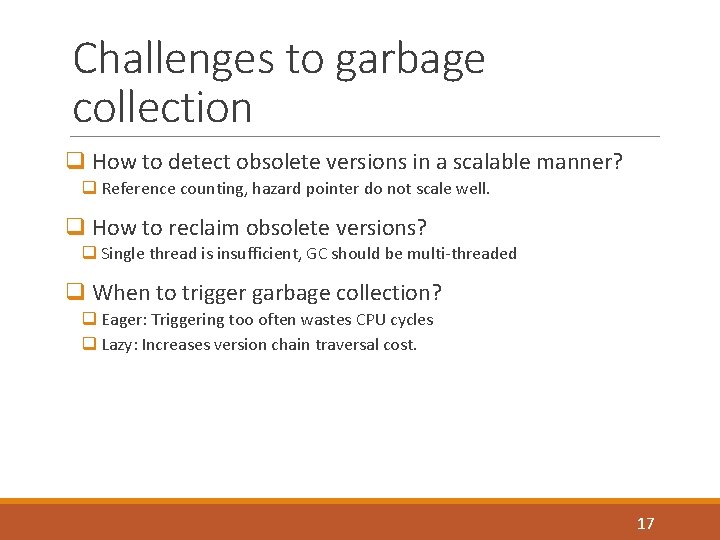

Challenges to garbage collection

- How to detect obsolete versions in a scalable manner?
  - Reference counting and hazard pointers do not scale well.
- How to reclaim obsolete versions?
  - A single thread is insufficient; GC should be multi-threaded.
- When to trigger garbage collection?
  - Eager: triggering too often wastes CPU cycles.
  - Lazy: increases version chain traversal cost.

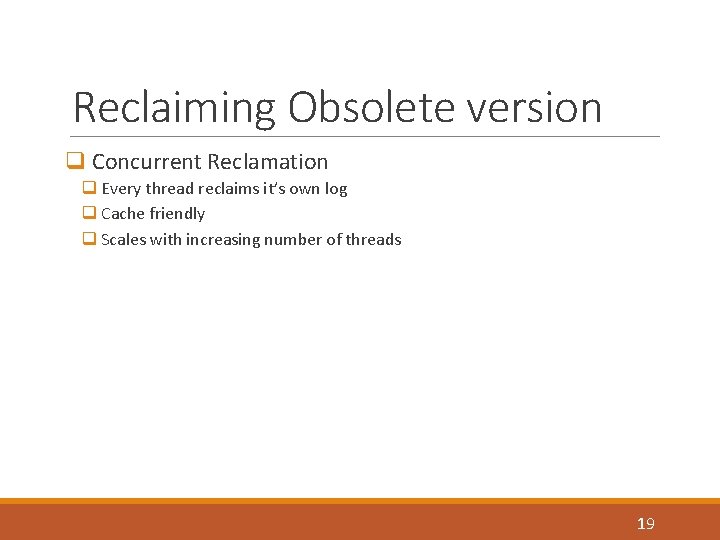

Detecting obsolete versions

- Quiescent-state-based reclamation (QSBR)
- Grace period: a time interval during which every thread has been outside a critical section at least once
- Grace period detection is delegated to a special thread
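The grace period definition can be sketched as the bookkeeping a detector thread might poll. This is a single-threaded Python simulation with hypothetical names (`QSBR`, `start_grace_period`, `grace_period_over`), not MV-RLU's code. Each thread bumps a counter whenever it leaves a critical section; the detector snapshots the counters of threads currently inside one, and the grace period ends once each of those counters has moved. Threads that were already outside a critical section satisfy the condition immediately.

```python
class QSBR:
    """Toy QSBR bookkeeping for a grace period detector (hypothetical names)."""

    def __init__(self, n_threads):
        self.in_cs = [False] * n_threads
        # Incremented every time a thread leaves its critical section.
        self.quiescent_count = [0] * n_threads

    def enter(self, tid):
        self.in_cs[tid] = True

    def exit(self, tid):
        self.in_cs[tid] = False
        self.quiescent_count[tid] += 1

    def start_grace_period(self):
        # Snapshot the counters of threads that are inside a critical section now.
        return {tid: self.quiescent_count[tid]
                for tid, busy in enumerate(self.in_cs) if busy}

    def grace_period_over(self, snap):
        # Over once every busy thread has left its critical section at least once.
        return all(self.quiescent_count[tid] != count
                   for tid, count in snap.items())
```

A thread that leaves and immediately re-enters a critical section still counts as having passed a quiescent state, which is what makes the check cheap: no per-object reference counts are touched.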

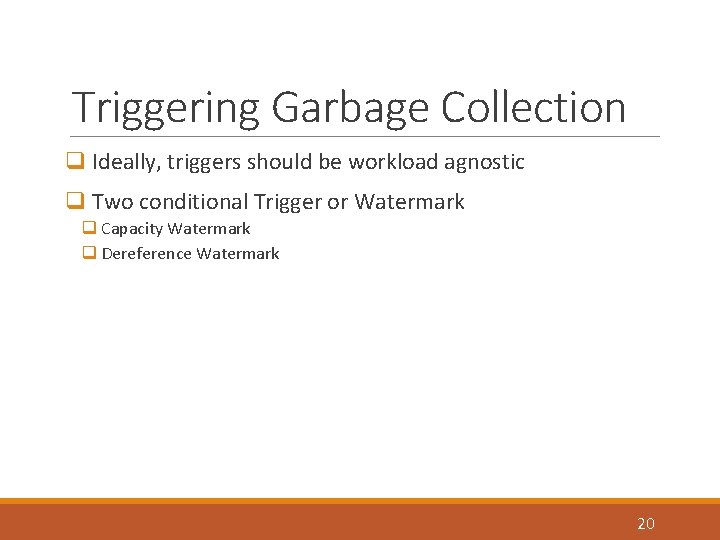

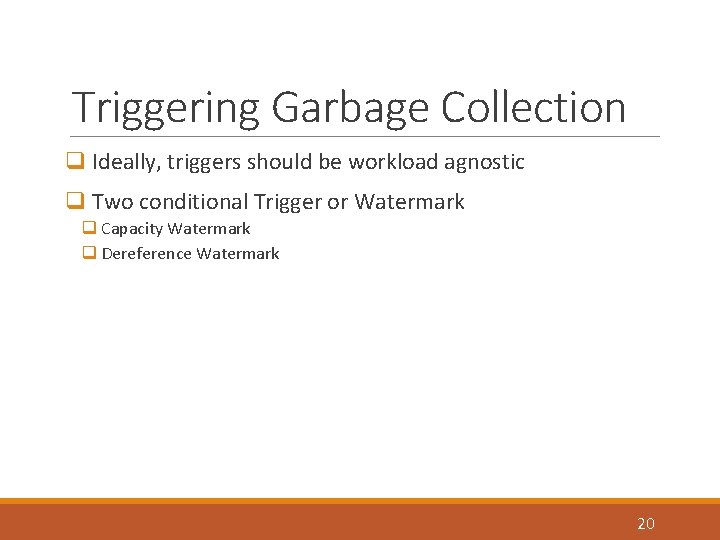

Reclaiming obsolete versions

- Concurrent reclamation
  - Every thread reclaims its own log
  - Cache-friendly
  - Scales with an increasing number of threads
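Per-thread reclamation can be sketched in Python under these assumptions: `LogEntry`, `ThreadLog`, `reclaim` and `gp_ts` are hypothetical; `gp_ts` stands for the oldest read clock still in use, as reported by grace period detection; and a version counts as obsolete once a newer version of the same object committed before `gp_ts`, so (with the strict read rule above) no reader can reach it. Each thread scans only its own log from the oldest end, which is what keeps the work local and cache-friendly.

```python
import math
from collections import deque
from dataclasses import dataclass


@dataclass
class LogEntry:
    commit_ts: int
    superseded_ts: float = math.inf   # commit ts of the next newer version, if any


class ThreadLog:
    """Hypothetical per-thread version log, appended in commit order."""

    def __init__(self):
        self.entries = deque()

    def append(self, entry):
        self.entries.append(entry)

    def reclaim(self, gp_ts):
        """Free obsolete entries from the oldest end of this thread's own log.

        An entry is obsolete when a newer version committed before gp_ts,
        the oldest read clock still in use.
        """
        freed = 0
        while self.entries and self.entries[0].superseded_ts < gp_ts:
            self.entries.popleft()   # sequential scan of our own memory
            freed += 1
        return freed
```

The sketch stops at the first live entry, so an obsolete entry behind it waits for a later pass, and it ignores how the master object is kept up to date; both are simplifications.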

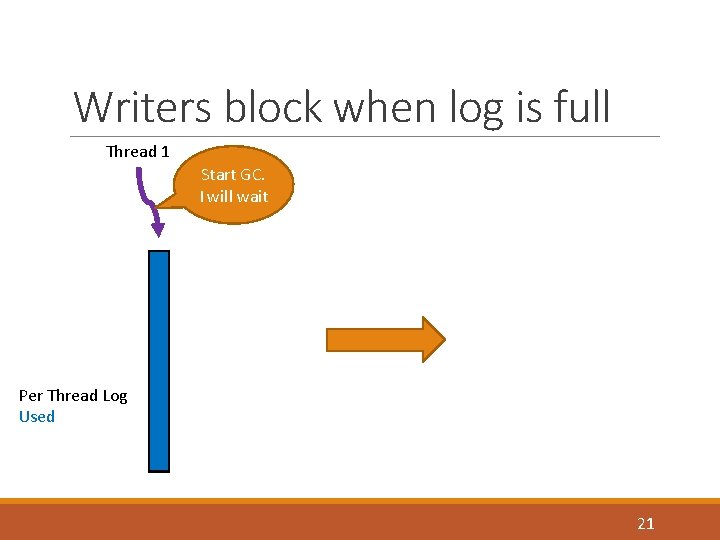

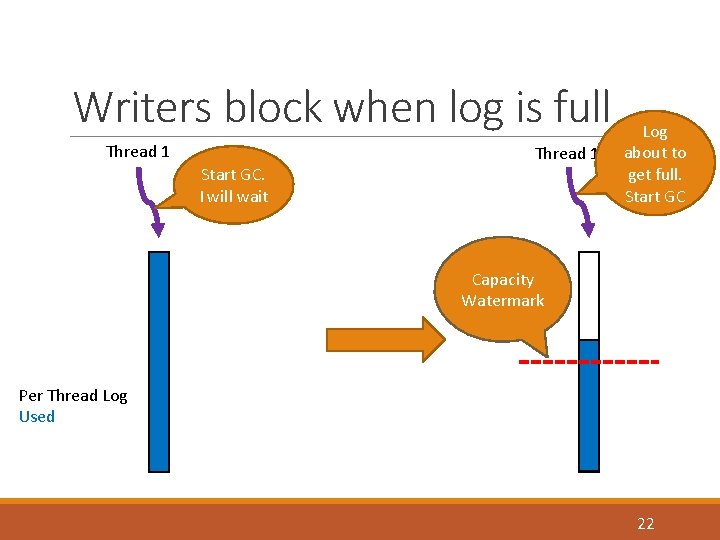

Triggering garbage collection

- Ideally, triggers should be workload-agnostic.
- Two conditional triggers, or watermarks:
  - Capacity watermark
  - Dereference watermark

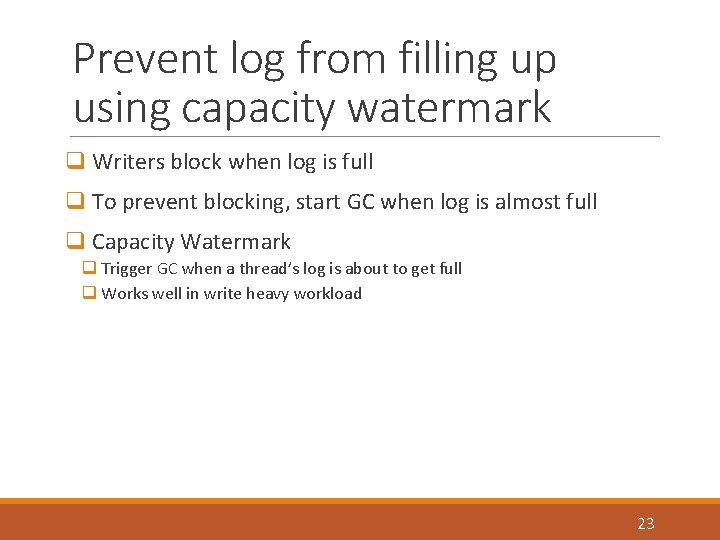

Writers block when log is full

[Figure: when Thread 1's per-thread log is full, it starts GC and waits. With a capacity watermark, GC is started as soon as Thread 1's log is about to get full.]

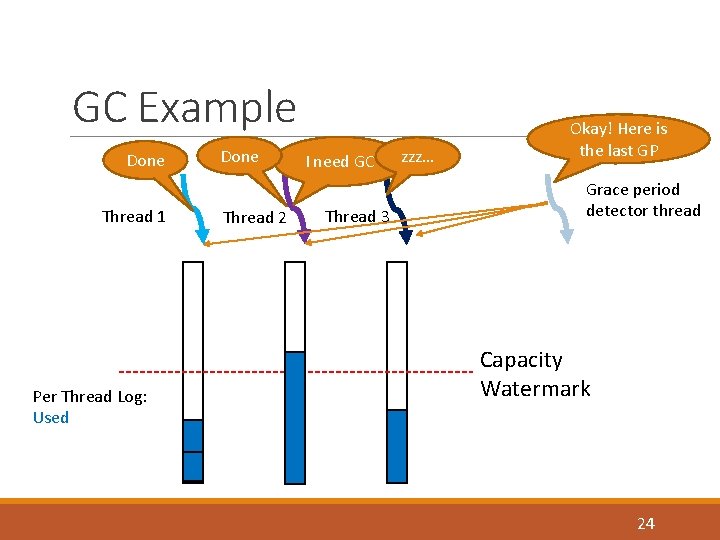

Preventing the log from filling up with a capacity watermark

- Writers block when their log is full.
- To prevent blocking, start GC when the log is almost full.
- Capacity watermark
  - Triggers GC when a thread's log is about to get full
  - Works well in write-heavy workloads
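The capacity watermark can be sketched as a check on every log allocation. `PerThreadLog`, `allocate` and the 75% threshold are hypothetical choices for illustration; the slides only say GC starts when the log is "about to get full".

```python
from dataclasses import dataclass


@dataclass
class PerThreadLog:
    capacity: int                      # space available to this thread's log
    used: int = 0
    capacity_watermark: float = 0.75   # hypothetical threshold (tuning knob)

    def allocate(self, size: int) -> str:
        if self.used + size > self.capacity:
            return "block"             # log full: the writer must wait for GC
        self.used += size
        if self.used >= self.capacity_watermark * self.capacity:
            return "request_gc"        # crossed the watermark: start GC early
        return "ok"
```

With a capacity of 100, allocating 50 is fine, the next 30 crosses the watermark and requests GC while there is still room, and only an allocation that would overflow the log blocks. In a write-heavy workload the watermark fires regularly, so writers rarely reach the blocking case.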

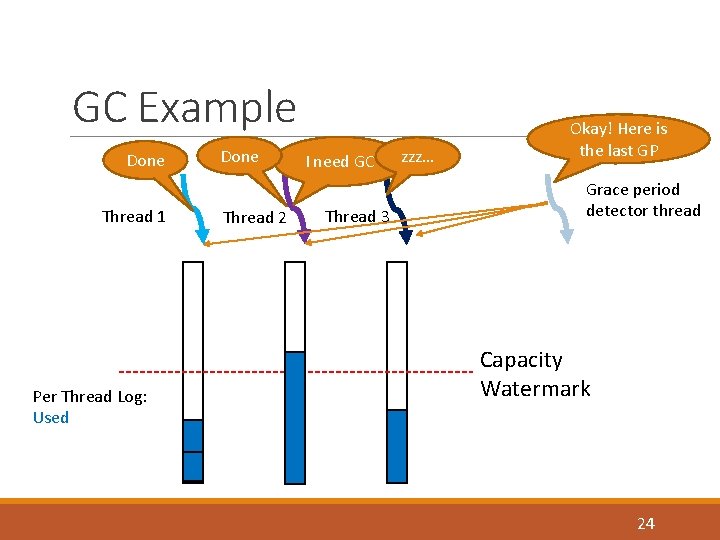

GC example

[Figure: a thread's per-thread log crosses the capacity watermark and the thread asks for GC; the grace period detector thread answers with the last grace period (GP), and the threads reclaim their own logs.]

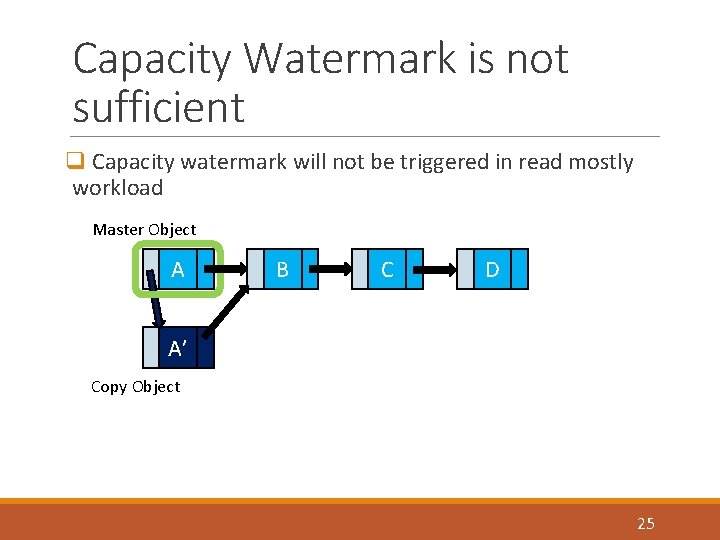

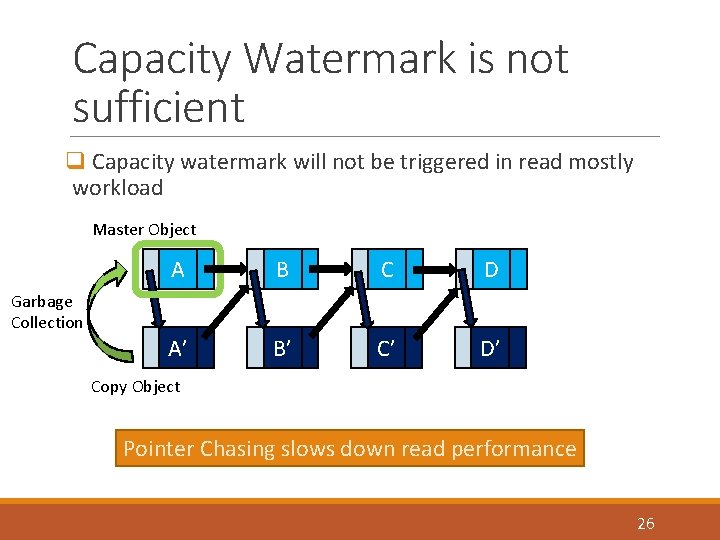

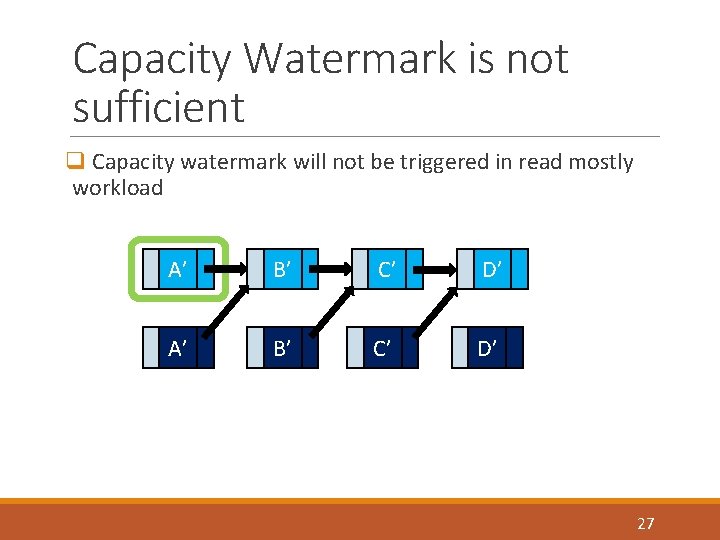

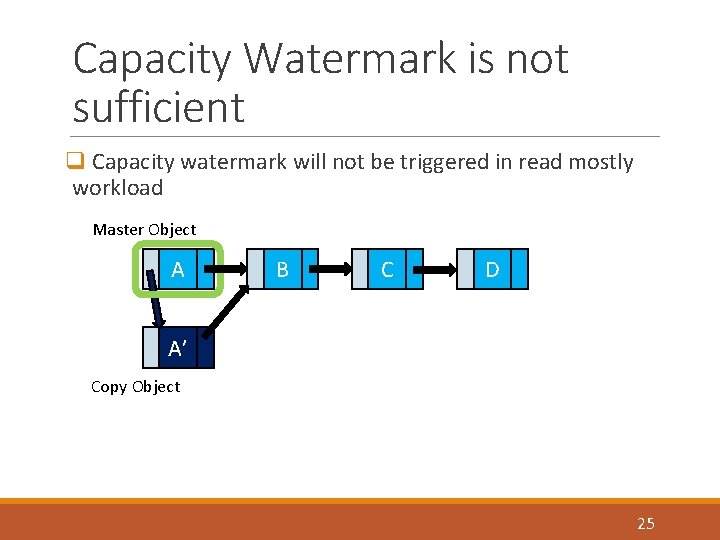

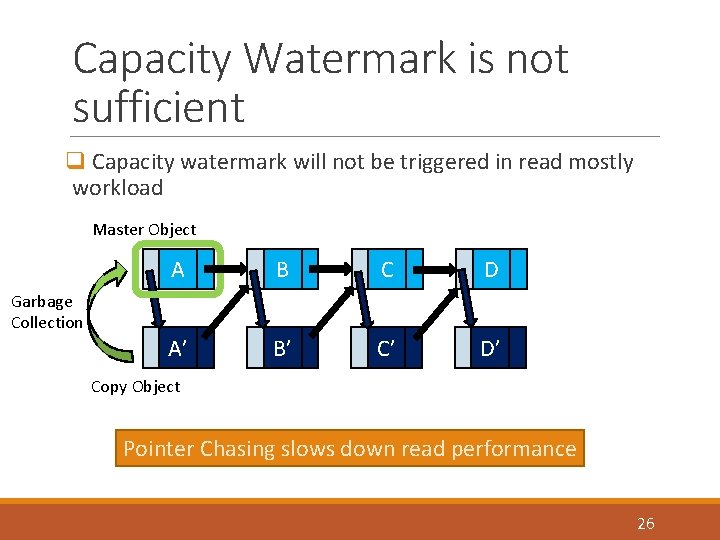

Capacity Watermark is not sufficient q Capacity watermark will not be triggered in read mostly workload Master Object A B C D A’ Copy Object 25

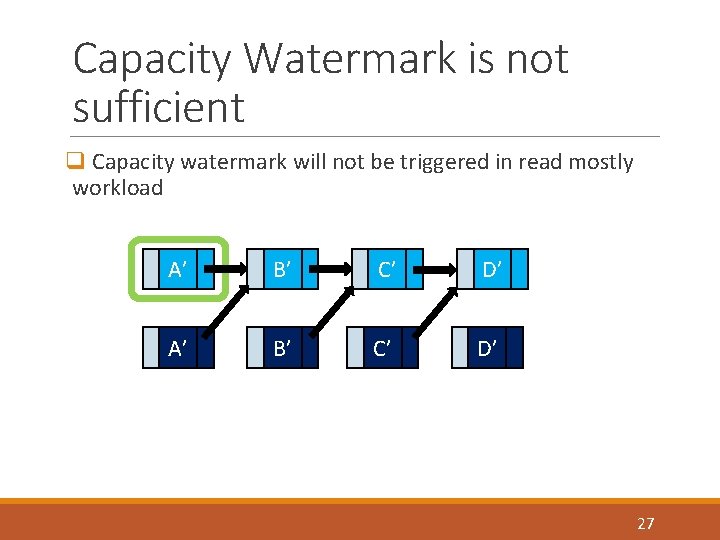

Capacity Watermark is not sufficient q Capacity watermark will not be triggered in read mostly workload Master Object Garbage Collection A B C D A’ B’ C’ D’ Copy Object Pointer Chasing slows down read performance 26

Capacity Watermark is not sufficient q Capacity watermark will not be triggered in read mostly workload A’ B’ C’ D’ 27

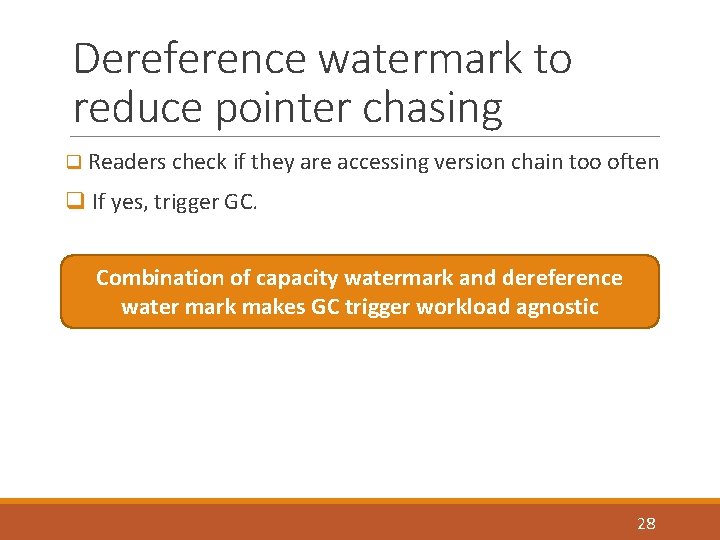

Dereference watermark to reduce pointer chasing

- Readers check whether they are accessing version chains too often.
- If so, they trigger GC.

The combination of the capacity watermark and the dereference watermark makes the GC trigger workload-agnostic.
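A reader-side dereference watermark can be sketched as a counter over a window of dereferences. `DerefWatermark`, `record`, the 50% ratio and the window length are hypothetical; the slide only says readers trigger GC when they access version chains too often.

```python
class DerefWatermark:
    """Hypothetical per-reader dereference watermark (ratio and window are assumptions)."""

    def __init__(self, ratio=0.5, window=8):
        self.ratio = ratio      # fraction of dereferences that may hit a chain
        self.window = window    # dereferences per measurement window
        self.derefs = 0
        self.chain_hits = 0

    def record(self, walked_chain: bool) -> bool:
        """Record one dereference; return True when GC should be triggered."""
        self.derefs += 1
        self.chain_hits += walked_chain
        if self.derefs < self.window:
            return False
        trigger = self.chain_hits / self.derefs >= self.ratio
        self.derefs = self.chain_hits = 0   # start a new window
        return trigger
```

Because readers drive this check themselves, a read-mostly workload that never fills any log can still trigger GC once pointer chasing becomes common, which is the gap the capacity watermark leaves.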

More details

- Scalable timestamp allocation
- Version management
- Proof of correctness
- Implementation details

Outline

- Background
- Design
- Evaluation
- Conclusion

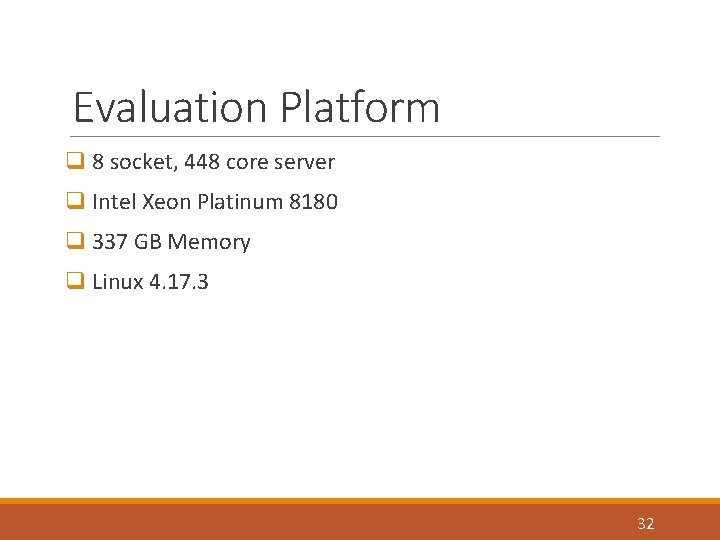

Evaluation questions

- Does MV-RLU scale?
- What is the impact of our proposed approaches?
- What is its impact on real-world workloads?

Evaluation platform

- 8-socket, 448-core server
- Intel Xeon Platinum 8180
- 337 GB memory
- Linux 4.17.3

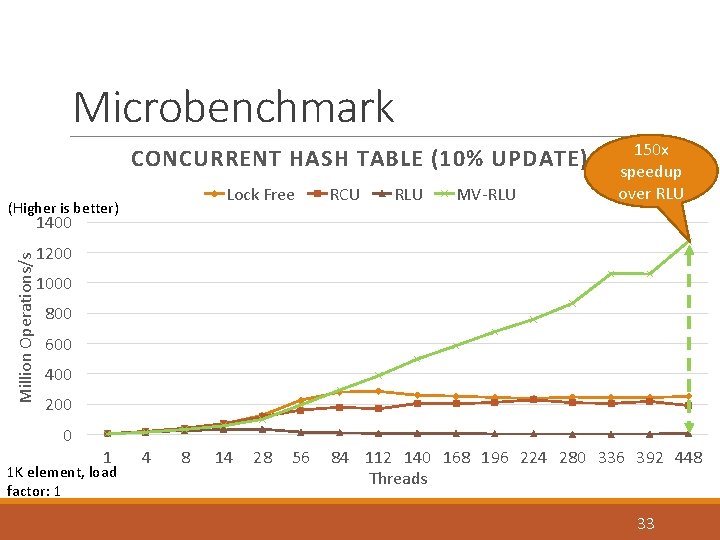

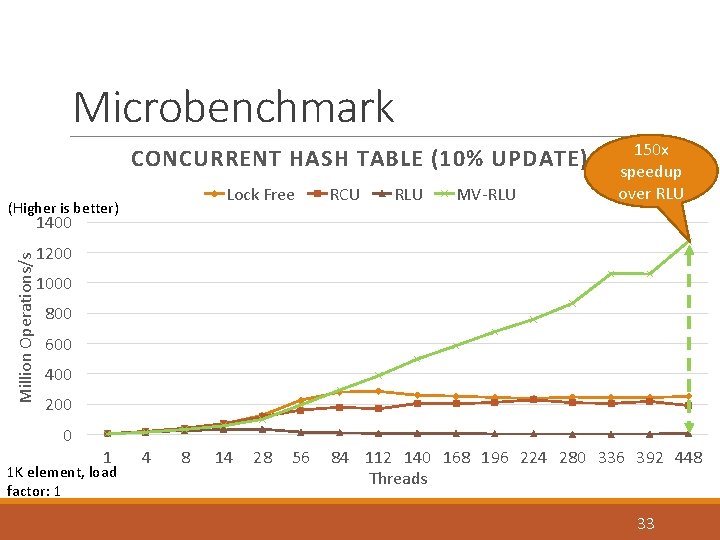

Microbenchmark

[Figure: Concurrent hash table (10% update; 1K elements, load factor 1). Throughput in million operations/s (higher is better) vs. threads, from 1 to 448, for lock-free, RCU, RLU and MV-RLU. MV-RLU achieves a 150x speedup over RLU.]

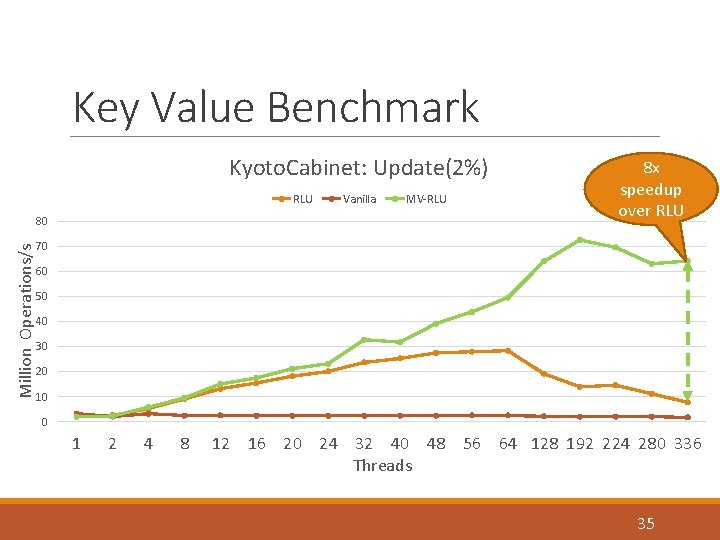

Factor analysis

[Figure: Factor analysis, throughput in million operations per second for three workloads (2%, 20% and 80% updates). Each panel shows RLU, configurations that cumulatively add scalable timestamps (TS), multi-versioning, concurrent GC, the capacity watermark (WM) and the dereference watermark, and MV-RLU. Annotations mark 2x, 2x and 4x gains and note that GC is the bottleneck.]

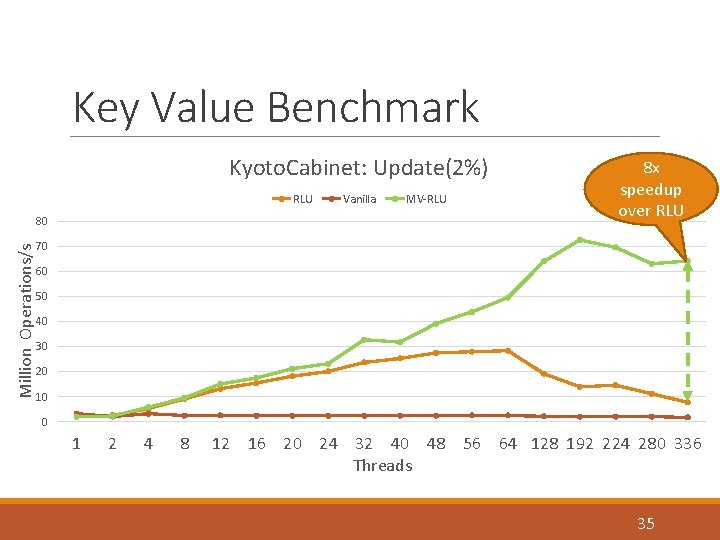

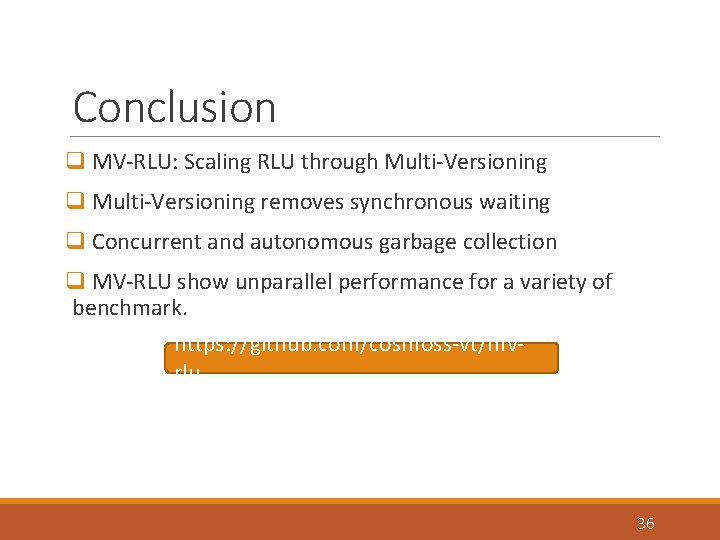

Key-value benchmark

[Figure: Kyoto Cabinet with 2% updates. Throughput in million operations/s vs. threads, from 1 to 336, for vanilla Kyoto Cabinet, RLU and MV-RLU. MV-RLU achieves an 8x speedup over RLU.]

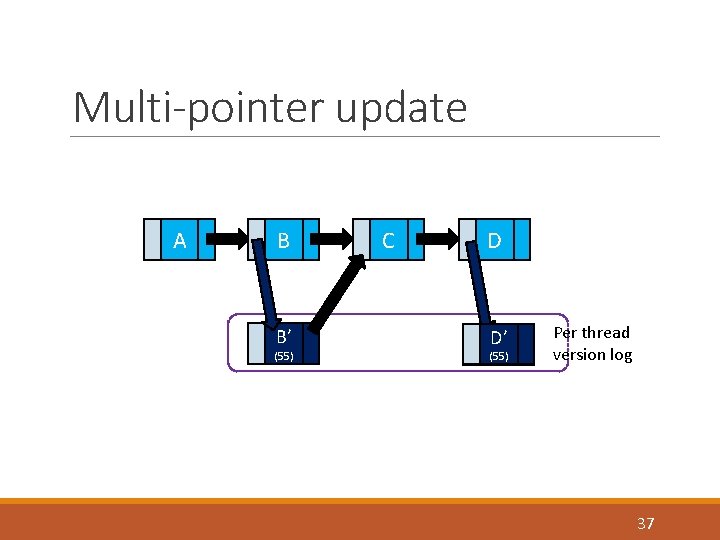

Conclusion

- MV-RLU: scaling RLU through multi-versioning
  - Multi-versioning removes synchronous waiting
  - Concurrent and autonomous garbage collection
- MV-RLU shows unparalleled performance across a variety of benchmarks.

https://github.com/cosmoss-vt/mvrlu

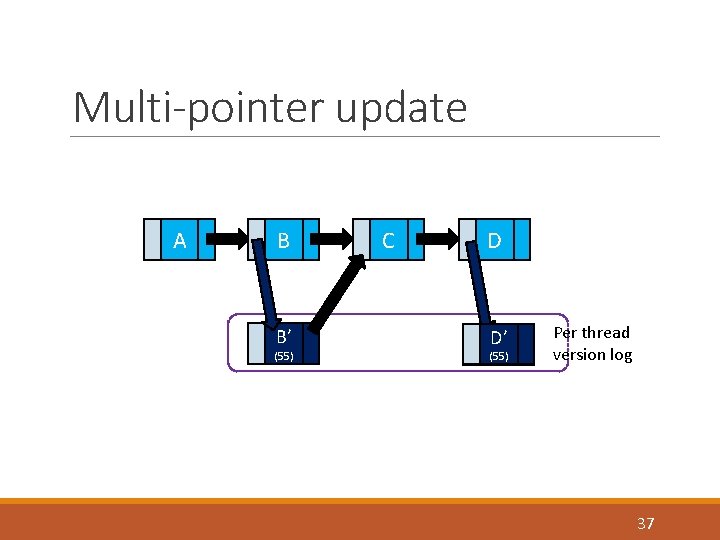

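Multi-pointer update

[Figure: a writer updates B and D in list A, B, C, D by creating copies B’ and D’ in its per-thread version log. Both copies carry timestamp ∞ until commit, when both receive commit timestamp 55.]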
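The atomic visibility of a multi-pointer update can be sketched in Python. As an assumption of this sketch (not a claim about how MV-RLU lays out its headers), all copies created by one writer point to a shared, hypothetical `WriteSet` object holding the commit timestamp, so a single store moves B’ and D’ from ∞ to 55 together. The read rule is the strict one used earlier; `Copy`, `Master`, `read` and `commit` are also hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class WriteSet:
    commit_ts: float = math.inf   # shared by every copy this writer creates


@dataclass
class Copy:
    value: str
    ws: WriteSet
    older: Optional["Copy"] = None


@dataclass
class Master:
    value: str
    head: Optional[Copy] = None   # newest copy first


def read(obj: Master, read_clock: int) -> str:
    c = obj.head
    while c is not None:
        if c.ws.commit_ts < read_clock:   # pending copies (inf) are never visible
            return c.value
        c = c.older
    return obj.value


def commit(ws: WriteSet, ts: int) -> None:
    # One store makes every copy in the write set visible at once.
    ws.commit_ts = ts
```

Before `commit`, every reader sees the old B and D; afterwards, readers with a read clock above 55 see both B’ and D’, and readers with an older clock still see both old versions, so no reader observes a half-applied update.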

Snapshot isolation

- MV-RLU Serializable Snapshot Isolation
- SSI works well for any application that can tolerate stale reads.
- RCU is widely used, which means there are many potential applications for MV-RLU.

Log size

- Memory capacity in computer systems is increasing.
- Persistent memory can significantly increase total main memory.
- Log size is a tuning parameter.