Multithreading Hyperthreading Chip Multiprocessing CMP Beyond ILP thread

Multi-threading, Hyperthreading & Chip Multiprocessing (CMP)
Beyond ILP: thread level parallelism (TLP)
Multithreaded microarchitectures
12/14 Multi-Hyper thread. 1

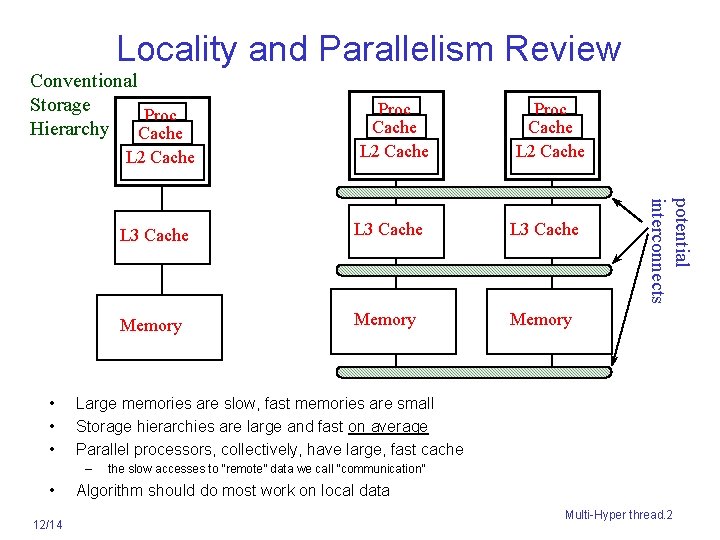

Locality and Parallelism Review
[Figure: conventional storage hierarchy (Proc, Cache, L2 Cache, L3 Cache, Memory) next to several Proc / Cache / L2 Cache stacks joined by potential interconnects]
• Large memories are slow; fast memories are small
• Storage hierarchies are large and fast on average
• Parallel processors, collectively, have large, fast caches
  – The slow accesses to “remote” data we call “communication”
• Algorithm should do most work on local data

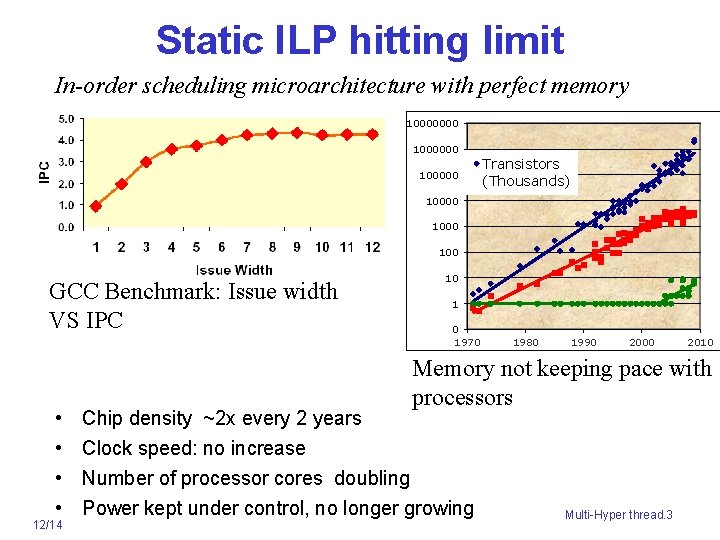

Static ILP hitting limit
• In-order scheduling microarchitecture with perfect memory
• GCC benchmark: issue width vs. IPC
[Figure: transistors (thousands) per chip, log scale, 1970 to 2010]
• Chip density ~2x every 2 years
• Clock speed: no increase
• Memory not keeping pace with processors
• Number of processor cores doubling
• Power kept under control, no longer growing

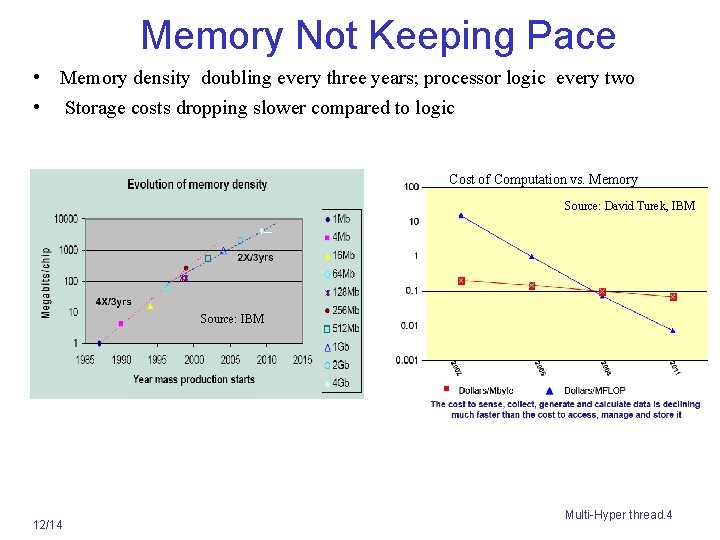

Memory Not Keeping Pace
• Memory density doubling every three years; processor logic every two
• Storage costs dropping more slowly than logic costs
[Figure: Cost of Computation vs. Memory. Source: David Turek, IBM]

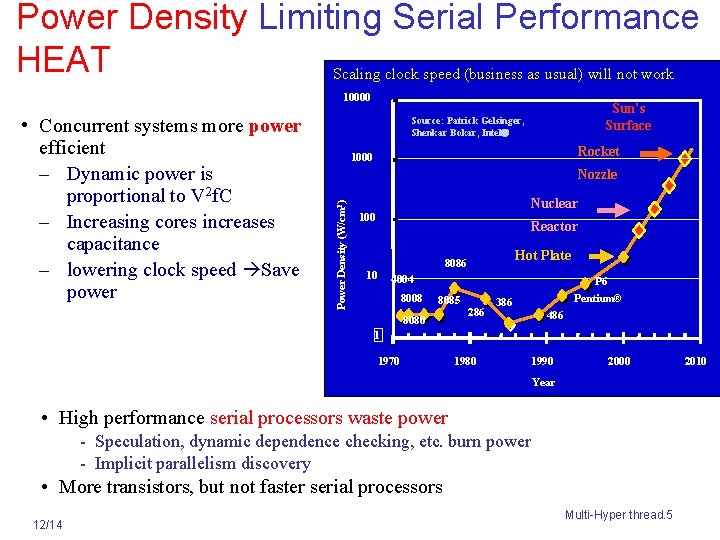

Power Density Limiting Serial Performance
• Scaling clock speed (business as usual) will not work
[Figure: power density (W/cm²) vs. year, 1970 to 2010, for the 4004, 8008, 8080, 8085, 8086, 286, 386, 486, Pentium® and P6, against reference levels: hot plate, nuclear reactor, rocket nozzle, Sun’s surface. Source: Patrick Gelsinger, Shekhar Borkar, Intel]
• Concurrent systems are more power efficient
  – Dynamic power is proportional to V²fC
  – Increasing cores increases capacitance (C)
  – Lowering clock speed (f) saves power
• High performance serial processors waste power
  – Speculation, dynamic dependence checking, etc. burn power
  – Implicit parallelism discovery
• More transistors, but not faster serial processors
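The V²fC relation can be turned into a quick back-of-the-envelope comparison. The sketch below is illustrative only: all quantities are normalized to 1 for a single fast core, and the 0.8 voltage figure for the slower cores is an assumed number, not one taken from the slide.

```python
def dynamic_power(v: float, f: float, c: float) -> float:
    """Dynamic power up to a constant factor: P ~ V^2 * f * C."""
    return v * v * f * c


# Baseline: one core at nominal voltage, frequency and capacitance (normalized to 1).
one_fast_core = dynamic_power(v=1.0, f=1.0, c=1.0)

# Two cores double the switched capacitance; each runs at half the clock.
# ASSUMPTION: the lower clock allows the supply voltage to drop to 0.8 of nominal.
two_slow_cores = dynamic_power(v=0.8, f=0.5, c=2.0)

print(f"one fast core : {one_fast_core:.2f}")
print(f"two slow cores: {two_slow_cores:.2f}")
```

Under these assumed numbers the two-core design has the same peak instruction rate (2 x 0.5) at roughly two thirds of the dynamic power, provided the workload can actually keep both cores busy.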

Parallelism Today: Multicore
• All processor vendors produce multicore chips
  – Every machine is a parallel machine
  – To double performance, double parallelism
• Can commercial applications use parallelism? Must they be rewritten from scratch?
• Will all programmers be parallel programmers?
  – New software models needed
  – Hide complexity from most programmers
  – In the meantime, need to understand it
• Computer industry is betting on parallelism, but does not have all the answers
  – Berkeley Par Lab & Stanford are working on parallelism

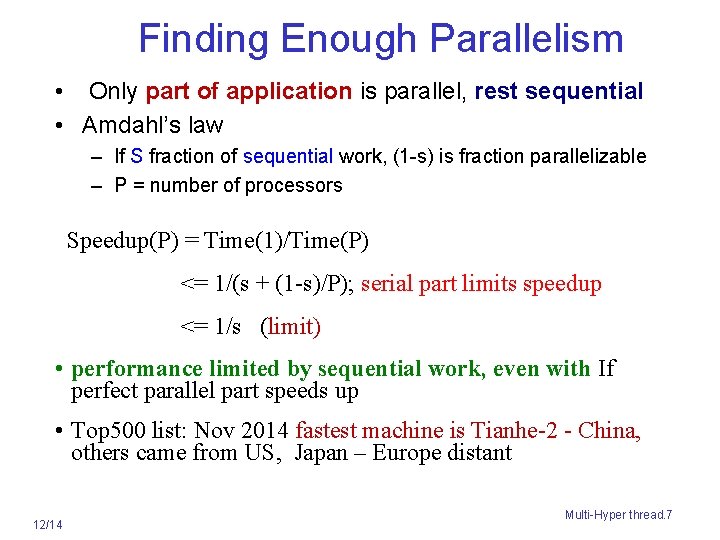

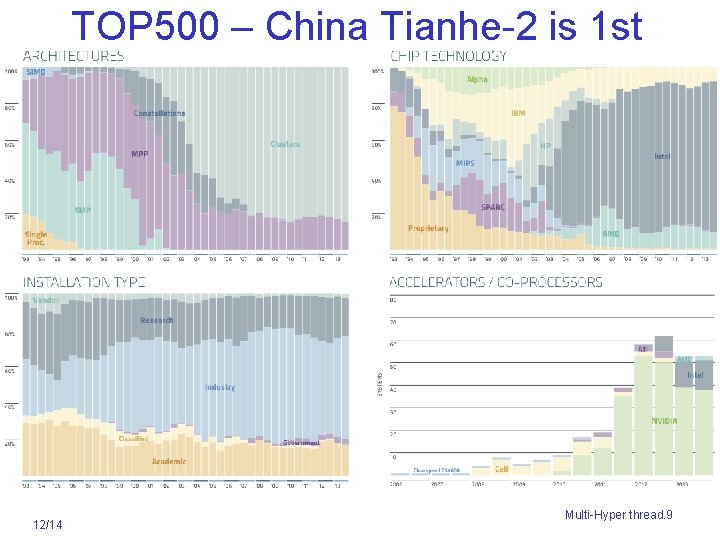

Finding Enough Parallelism
• Only part of an application is parallel; the rest is sequential
• Amdahl’s law
  – If s is the fraction of sequential work, (1 - s) is the fraction parallelizable
  – P = number of processors
  – Speedup(P) = Time(1)/Time(P) <= 1/(s + (1 - s)/P) <= 1/s
  – The serial part limits speedup to 1/s, however large P becomes
• Even if the parallel part speeds up perfectly, performance is limited by the sequential work
• Top 500 list: as of Nov 2014 the fastest machine is Tianhe-2 (China); others came from the US and Japan, with Europe distant
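A minimal sketch of the Amdahl bound, to make the 1/s ceiling concrete. The function name and the 10% sequential fraction are illustrative choices, not values from the slide.

```python
def amdahl_speedup(s: float, p: int) -> float:
    """Upper bound on speedup for sequential fraction s on p processors."""
    if not 0.0 <= s <= 1.0:
        raise ValueError("s must be in [0, 1]")
    if p < 1:
        raise ValueError("p must be >= 1")
    return 1.0 / (s + (1.0 - s) / p)


s = 0.10  # hypothetical: 10% of the work is sequential
for p in (1, 2, 8, 64, 1024):
    print(f"P={p:5d}  speedup <= {amdahl_speedup(s, p):6.2f}")
print(f"limit as P grows: 1/s = {1.0 / s:.1f}")
```

With s = 0.10 the bound never reaches 10, no matter how many processors are added.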

TOP 500 – China Tianhe-2 1st, Nov 2014

TOP 500 – China Tianhe-2 is 1st

Parallelism has Overhead: a barrier to speedup
• Parallelism overheads:
  – Starting a thread / process
  – Communicating shared data
  – Synchronizing
• Each can cost milliseconds (millions of flops)
• Tradeoff: algorithm needs large units of work to run fast in parallel (i.e., large granularity), but not so large that there is not enough parallel work
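The granularity tradeoff can be illustrated with a toy cost model. This is a sketch under stated assumptions: work splits into equal tasks, every task pays the same fixed overhead, and tasks run in rounds of at most one task per processor. The function name and the numbers are hypothetical.

```python
import math


def parallel_time(total_work: float, n_tasks: int, n_procs: int, overhead: float) -> float:
    """Run time when total_work (seconds) is split into n_tasks equal tasks.

    ASSUMPTION: each task pays a fixed overhead (start, communicate, synchronize),
    and tasks execute in rounds of at most n_procs tasks.
    """
    rounds = math.ceil(n_tasks / n_procs)
    return rounds * (total_work / n_tasks + overhead)


work, procs, cost = 1.0, 8, 0.001  # 1 s of work, 8 processors, 1 ms overhead per task
for n in (2, 8, 64, 8000):
    print(f"{n:5d} tasks -> {parallel_time(work, n, procs, cost):.3f} s")
```

Too few tasks leave processors idle, while too many make the per-task overhead dominate; the minimum sits in between.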

Performance beyond single thread: TLP
• Natural parallelism in applications (e.g., database / scientific)
• Explicit Thread Level Parallelism or Data Level Parallelism
• Thread: instruction stream with its own PC and data
  – E.g., online transaction processing, scientific modeling of nature, ...
  – Each thread has everything (instructions, data, PC, register state, and so on) necessary to execute
• Data Level Parallelism: e.g., multimedia; identical operations on data; vector processing was the predecessor

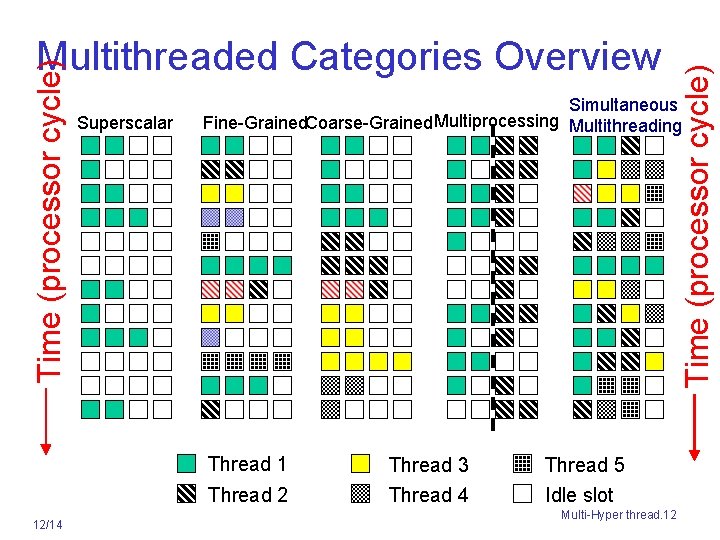

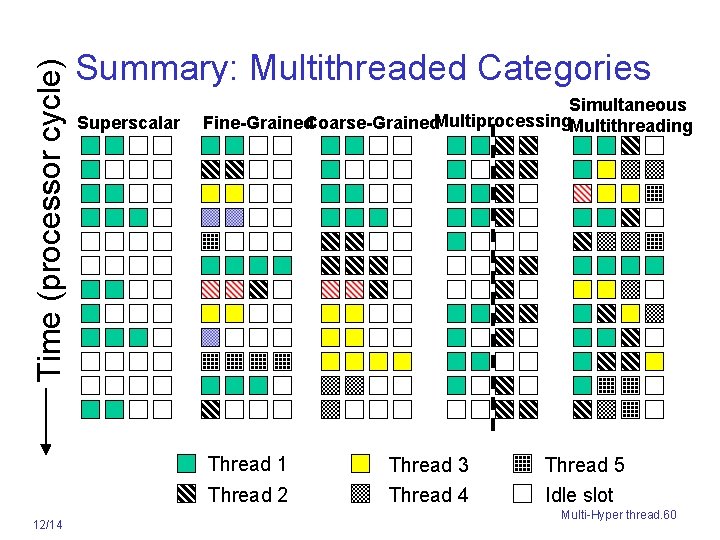

Multithreaded Categories Overview
[Figure: issue slots over time (processor cycles) for Superscalar, Fine-Grained, Coarse-Grained, Multiprocessing, and Simultaneous Multithreading; legend: Thread 1 to Thread 5, idle slot]

Multithreaded Execution
• Multiple threads share the processor's functional units
  – Processor duplicates the independent state of each thread, e.g., a separate copy of the register file, a separate PC, and, for running independent programs, a separate page table
  – Memory shared through virtual memory mechanisms
  – HW for fast thread switch; much faster than a full process switch, which takes 100s to 1000s of clocks
• When to switch?
  – Fine grain: alternate instructions per thread
  – Coarse grain: when a thread stalls, e.g., on a cache miss

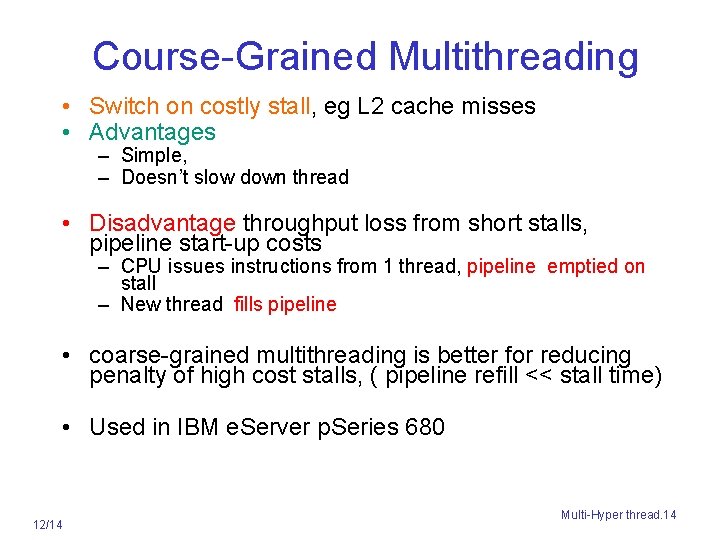

Coarse-Grained Multithreading
• Switch on costly stalls, e.g., L2 cache misses
• Advantages
  – Simple
  – Doesn’t slow down the running thread
• Disadvantage: throughput loss from short stalls, because of pipeline start-up costs
  – CPU issues instructions from 1 thread; pipeline is emptied on a stall
  – New thread must fill the pipeline
• Coarse-grained multithreading is better for reducing the penalty of high-cost stalls (pipeline refill << stall time)
• Used in IBM eServer pSeries 680
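The "pipeline refill << stall time" condition can be sketched as a simple cost rule. The model below is a deliberately crude illustration: it assumes another thread is always ready after a switch, and the cycle counts and the threshold are hypothetical.

```python
def idle_cycles(stall: int, refill: int, switch_threshold: int) -> int:
    """Issue cycles lost to one stall under a switch-on-long-stall policy.

    ASSUMPTION: after a switch, another thread is ready and only the
    pipeline refill is lost; stalls below the threshold are waited out.
    """
    if stall >= switch_threshold:
        # Long stall: switch threads and pay only the refill cost.
        return min(refill, stall)
    # Short stall: no switch, so the whole stall is lost.
    return stall


# Hypothetical numbers: 10-cycle refill, switch only on stalls of 50+ cycles.
print(idle_cycles(stall=200, refill=10, switch_threshold=50))  # long miss
print(idle_cycles(stall=3, refill=10, switch_threshold=50))    # short stall
```

A long miss costs only the refill, but every short stall is still paid in full, which is the throughput loss the slide lists as the disadvantage.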

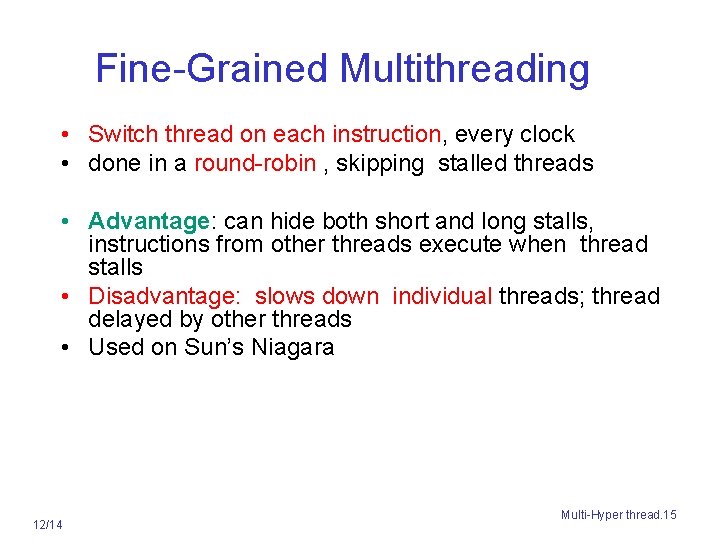

Fine-Grained Multithreading
• Switch threads on each instruction, every clock
• Done round-robin, skipping stalled threads
• Advantage: can hide both short and long stalls; instructions from other threads execute when a thread stalls
• Disadvantage: slows down individual threads; a ready thread is delayed by the other threads
• Used on Sun’s Niagara
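Round-robin selection that skips stalled threads can be sketched in a few lines. This is an illustrative model, not a description of any particular processor's selection logic; the function name and the stall pattern are made up for the example.

```python
from typing import Optional, Sequence


def next_thread(last: int, stalled: Sequence[bool]) -> Optional[int]:
    """Pick the next ready thread after `last` in round-robin order.

    Returns None when every thread is stalled (an idle issue cycle).
    """
    n = len(stalled)
    for step in range(1, n + 1):
        candidate = (last + step) % n
        if not stalled[candidate]:
            return candidate
    return None


# Four hardware threads; thread 1 is stalled for the whole trace.
stalled = [False, True, False, False]
last, order = 3, []
for _ in range(6):
    last = next_thread(last, stalled)
    order.append(last)
print(order)  # [0, 2, 3, 0, 2, 3]
```

Thread 1's stall is hidden completely, but each ready thread now issues only every third cycle, which is the single-thread slowdown noted above.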

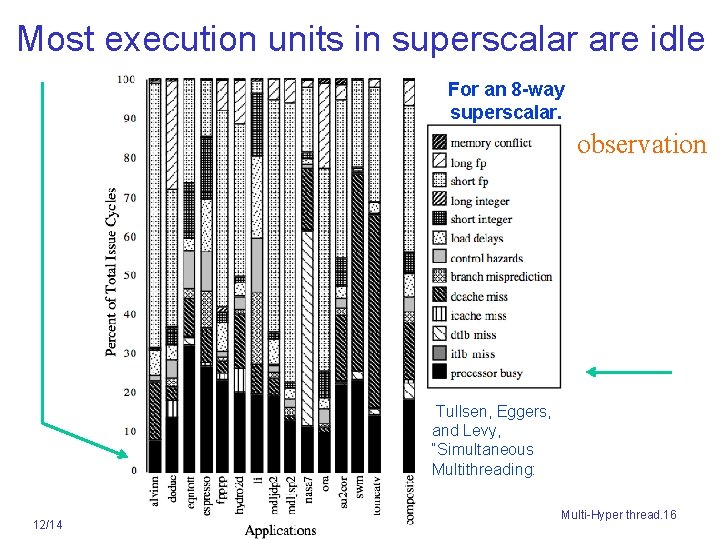

Most execution units in superscalar are idle
• Observation for an 8-way superscalar
• Source: Tullsen, Eggers, and Levy, “Simultaneous Multithreading:

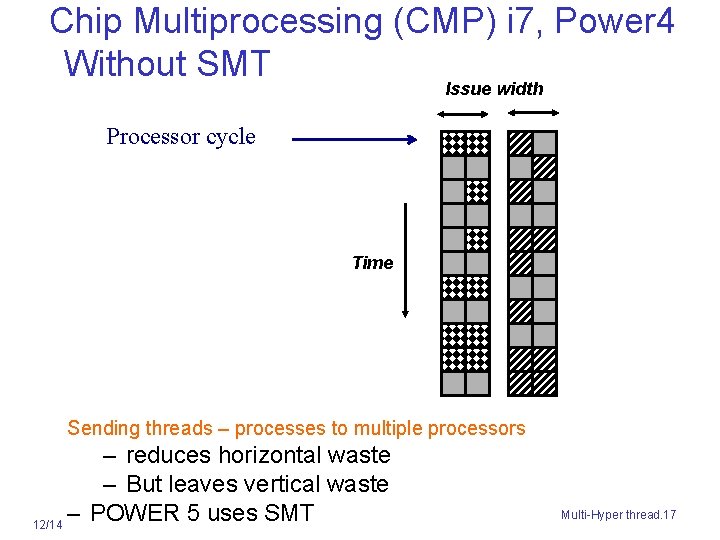

Chip Multiprocessing (CMP): i7, Power 4 (without SMT)
[Figure: issue width vs. time (processor cycles)]
• Sending threads / processes to multiple processors
  – Reduces horizontal waste
  – But leaves vertical waste
  – POWER 5 uses SMT
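Horizontal and vertical waste can be counted directly from an issue trace. The sketch below uses the usual definitions from the SMT literature: a cycle in which nothing issues wastes the full issue width (vertical waste), and a cycle in which some but not all slots are filled wastes the remainder (horizontal waste). The trace itself is invented for illustration.

```python
from typing import Sequence, Tuple


def classify_waste(issued_per_cycle: Sequence[int], issue_width: int) -> Tuple[int, int]:
    """Return (horizontal, vertical) wasted issue slots for a trace."""
    horizontal = vertical = 0
    for issued in issued_per_cycle:
        if not 0 <= issued <= issue_width:
            raise ValueError("issued count must be within the issue width")
        if issued == 0:
            vertical += issue_width             # whole cycle empty
        else:
            horizontal += issue_width - issued  # partly filled cycle
    return horizontal, vertical


# Hypothetical 4-wide core, six cycles.
trace = [2, 0, 1, 4, 0, 3]
h, v = classify_waste(trace, issue_width=4)
print(f"horizontal waste: {h} slots, vertical waste: {v} slots")
```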

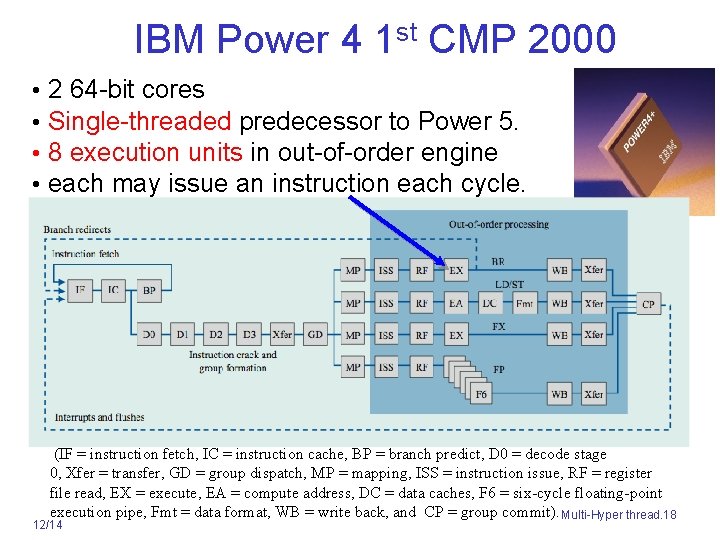

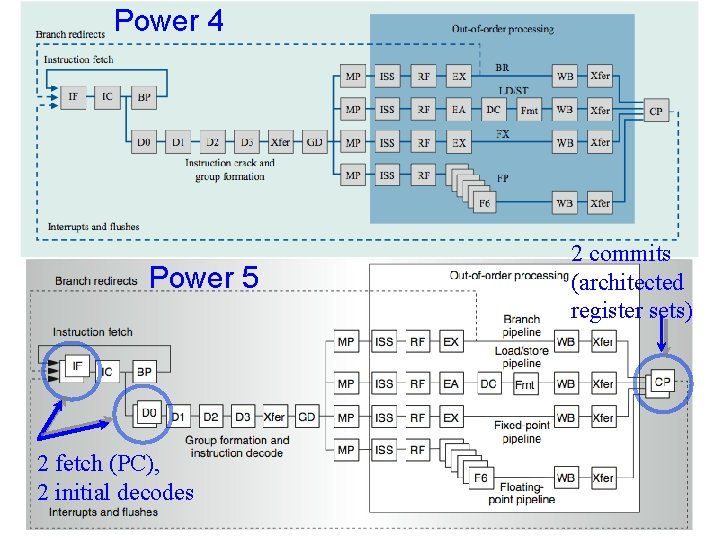

IBM Power 4: 1st CMP, 2000
• 2 64-bit cores
• Single-threaded predecessor to Power 5
• 8 execution units in the out-of-order engine; each may issue an instruction each cycle
• Pipeline stage abbreviations: IF = instruction fetch, IC = instruction cache, BP = branch predict, D0 = decode stage 0, Xfer = transfer, GD = group dispatch, MP = mapping, ISS = instruction issue, RF = register file read, EX = execute, EA = compute address, DC = data caches, F6 = six-cycle floating-point execution pipe, Fmt = data format, WB = write back, and CP = group commit

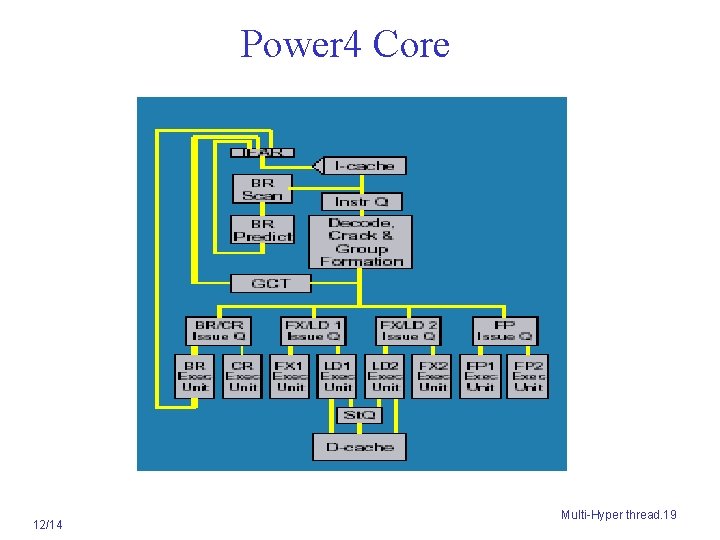

Power 4 Core

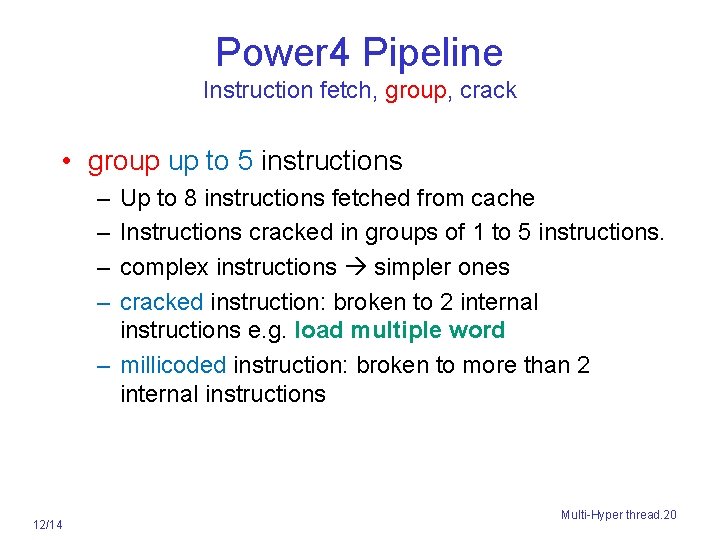

Power 4 Pipeline: instruction fetch, group, crack
• Group up to 5 instructions
  – Up to 8 instructions fetched from cache
  – Instructions cracked and formed into groups of 1 to 5 instructions
• Complex instructions are broken into simpler ones
  – Cracked instruction: broken into 2 internal instructions, e.g., load multiple word
  – Millicoded instruction: broken into more than 2 internal instructions

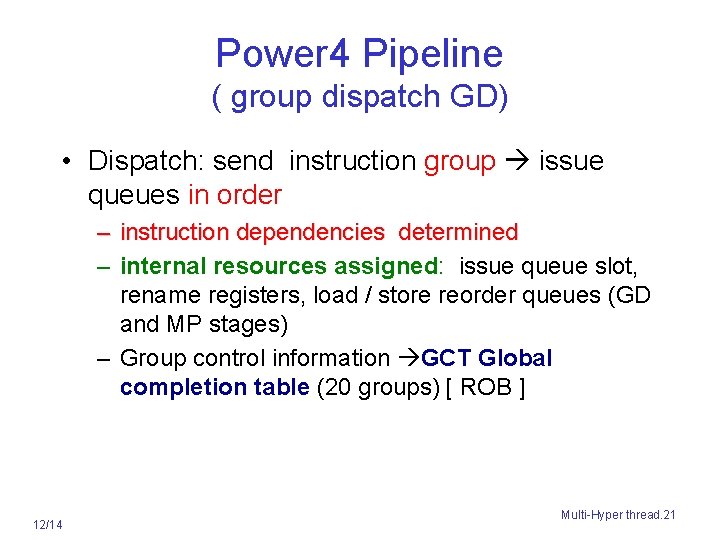

Power 4 Pipeline (group dispatch, GD)
• Dispatch: send the instruction group to the issue queues in order
  – Instruction dependencies determined
  – Internal resources assigned: issue queue slot, rename registers, load / store reorder queues (GD and MP stages)
  – Group control information kept in the GCT, the Global Completion Table (20 groups) [ROB]

Power 4 Pipeline (group dispatch: one group / cycle)
• Group is dispatched to separate issue queues: floating-point, branch execution, fixed-point and load/store units
• Fixed-point (integer) and load/store units share common issue queues
• Issue stage (ISS): instructions that are ready to execute are pulled out of the issue queues

Power 4 Pipeline
• Instruction execution (EX), speculation, rename resources (GPRs from 32 to 80)
• Branch prediction (BP)
  – Conditional branches are predicted; instructions are fetched and speculatively executed
  – 3 history tables used
  – If the prediction is correct, processing continues
  – Else, instructions are flushed and instruction fetching is redirected

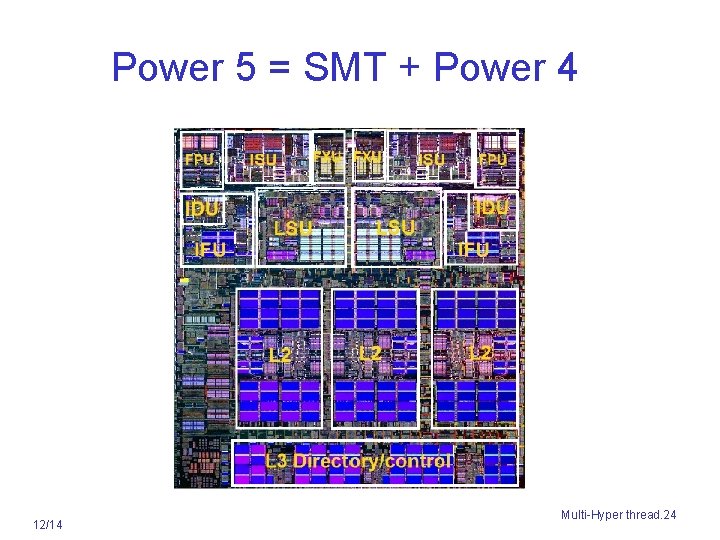

Power 5 = SMT + Power 4

[Figure: Power 4 vs. Power 5 pipelines; labels: 2 fetch (PC), 2 initial decodes; 2 commits (architected register sets)]

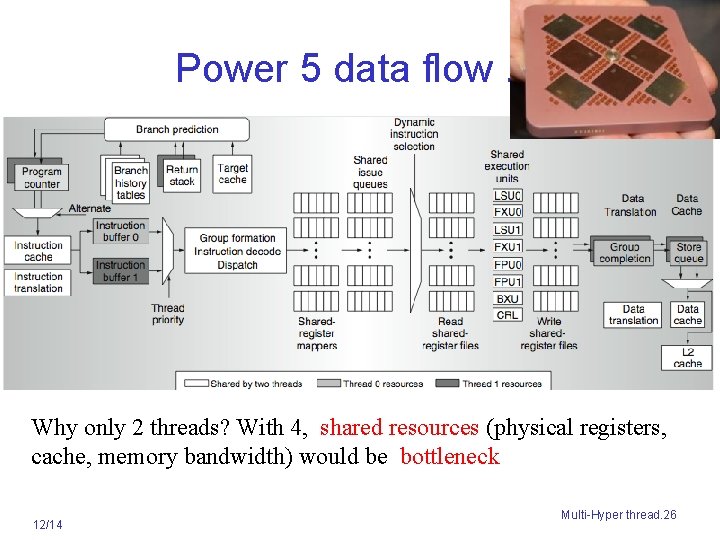

Power 5 data flow
• Why only 2 threads? With 4, shared resources (physical registers, cache, memory bandwidth) would be the bottleneck

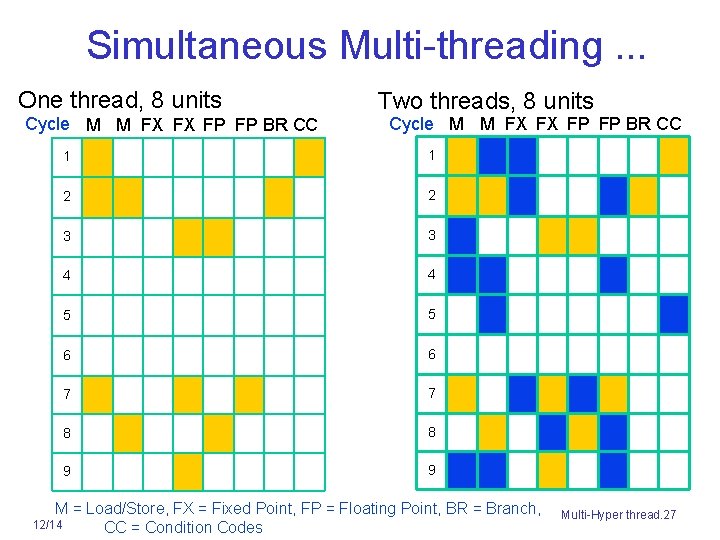

Simultaneous Multi-threading
[Figure: execution unit occupancy by cycle (1 to 9) across 8 units (M, M, FX, FX, FP, FP, BR, CC), shown for one thread and for two threads]
M = Load/Store, FX = Fixed Point, FP = Floating Point, BR = Branch, CC = Condition Codes

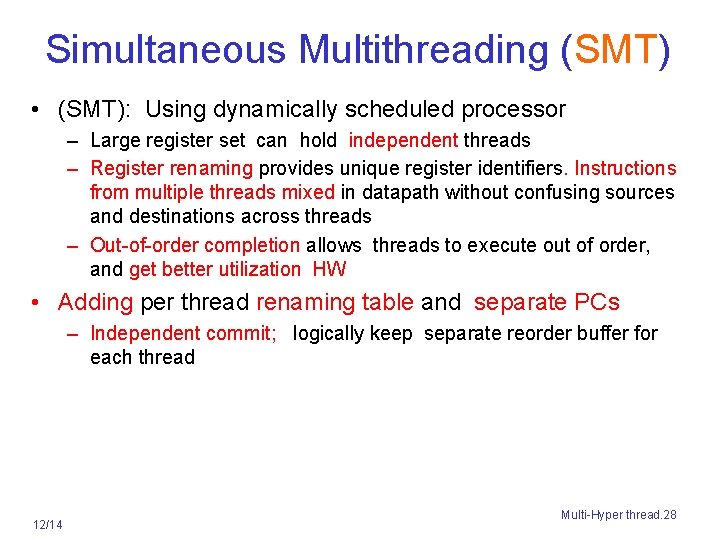

Simultaneous Multithreading (SMT)
• SMT: uses a dynamically scheduled processor
  – Large register set can hold independent threads
  – Register renaming provides unique register identifiers, so instructions from multiple threads can be mixed in the datapath without confusing sources and destinations across threads
  – Out-of-order completion allows threads to execute out of order and get better HW utilization
• Requires adding a per-thread renaming table and separate PCs
  – Independent commit: logically keep a separate reorder buffer for each thread

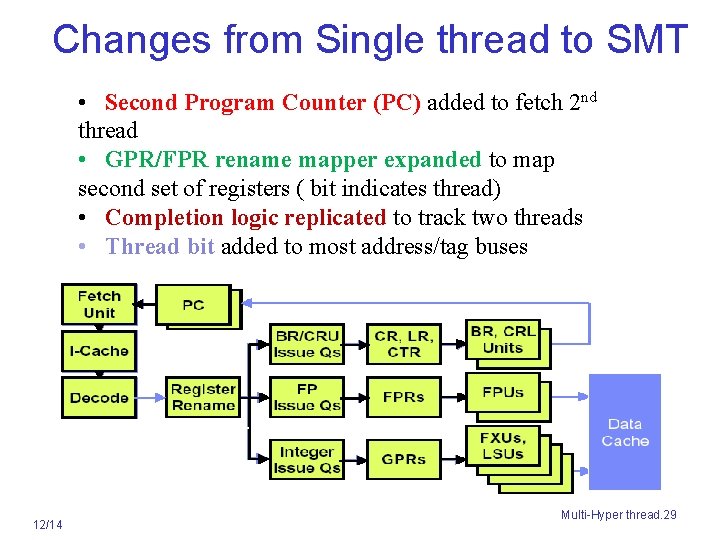

Changes from Single thread to SMT
• Second Program Counter (PC) added to fetch the 2nd thread
• GPR/FPR rename mapper expanded to map a second set of registers (a bit indicates the thread)
• Completion logic replicated to track two threads
• Thread bit added to most address/tag buses

Changes in Power 5 to support SMT
• Increased associativity of the L1 I-cache and the instruction address translation buffers (ITLB)
• Added per-thread load/store queues
• Increased L2, L3 size (1.92 vs. 1.44 MB)
• Separate instruction prefetch and buffering per thread
• Increased number of virtual registers from 152 to 240 (rename registers)
• Increased the size of the issue queues
• Power 5 core is 24% larger than the Power 4 core to support SMT

SMT Design Issues
• SMT: what is the impact on single-thread performance?
• Larger register file needed to hold multiple contexts
• Clock cycle time, especially in:
  – Instruction issue: more candidate instructions need to be considered
  – Instruction completion: choosing which instructions to commit is challenging
• Cache and TLB conflicts generated by SMT degrade performance

Resource Sharing: effects
• Threads share many resources
  – GCT, BHT, TLB, ...
• Performance is higher when resources are balanced across threads
• Drifting to extremes reduces performance
• Solution: dynamically adjust resource utilization

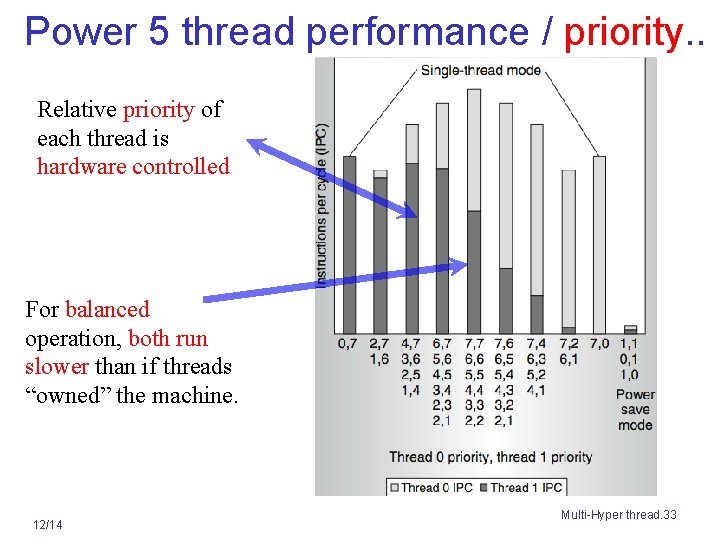

Power 5 thread performance / priority
• Relative priority of each thread is hardware controlled
• For balanced operation, both threads run slower than if each “owned” the machine

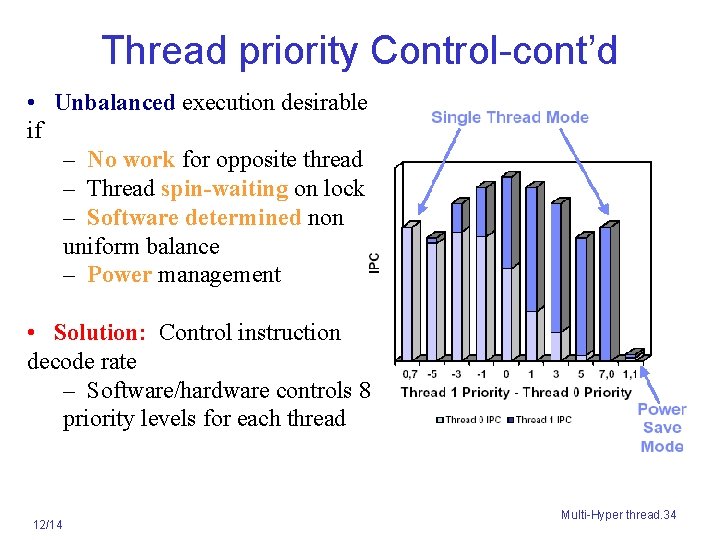

Thread priority control (cont’d)
• Unbalanced execution is desirable if
  – No work for the opposite thread
  – Thread is spin-waiting on a lock
  – Software determined non-uniform balance
  – Power management
• Solution: control the instruction decode rate
  – Software/hardware controls 8 priority levels for each thread

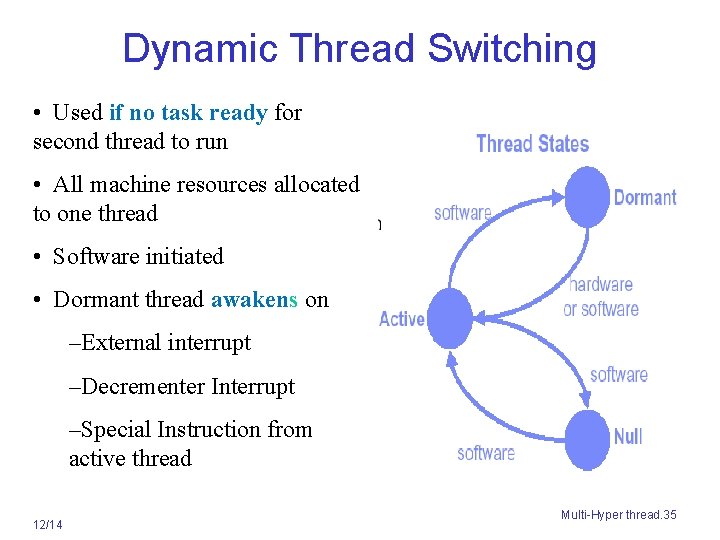

Dynamic Thread Switching
• Used if no task is ready for the second thread to run
• All machine resources allocated to one thread
• Software initiated
• Dormant thread awakens on
  – External interrupt
  – Decrementer interrupt
  – Special instruction from the active thread

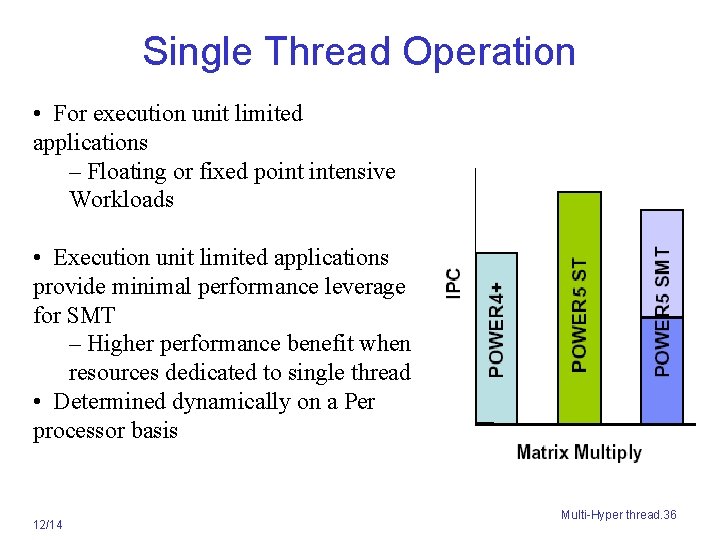

Single Thread Operation
• For execution-unit-limited applications
  – Floating-point or fixed-point intensive workloads
• Execution-unit-limited applications provide minimal performance leverage for SMT
  – Higher performance benefit when resources are dedicated to a single thread
• Determined dynamically on a per-processor basis

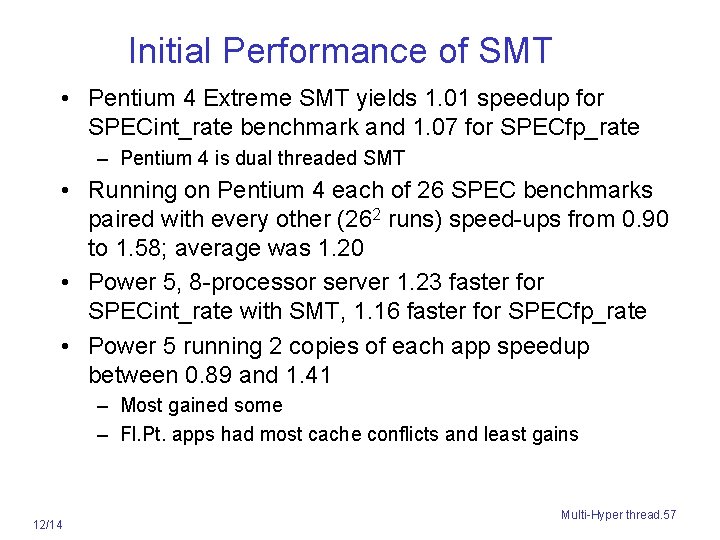

Initial Performance of SMT
• Pentium 4 Extreme SMT yields 1.01 speedup for the SPECint_rate benchmark and 1.07 for SPECfp_rate
  – Pentium 4 is dual-threaded SMT
  – SPECRate requires that each SPEC benchmark be run against a vendor-selected number of copies of the same benchmark
• Running on Pentium 4, each of 26 SPEC benchmarks paired with every other (26^2 runs): speedups from 0.90 to 1.58; average was 1.20
• Power 5, 8-processor server: 1.23 faster for SPECint_rate with SMT, 1.16 faster for SPECfp_rate
• Power 5 running 2 copies of each app: speedup between 0.89 and 1.41
  – Most gained some
  – Floating-point apps had the most cache conflicts and the least gains

Limits to ILP
• Doubling issue rates above today’s 3-6 instructions per clock, say to 6 to 12 instructions, probably requires a processor to
  – issue 3 or 4 data memory accesses per cycle,
  – resolve 2 or 3 branches per cycle,
  – rename and access more than 20 registers per cycle, and
  – fetch 12 to 24 instructions per cycle.
• The complexity of implementing these capabilities is likely to mean sacrifices in the maximum clock rate
  – E.g., the widest-issue processor is the Itanium 2, but it also has the slowest clock rate, despite the fact that it consumes the most power!

Limits to ILP
• Most techniques for increasing performance increase power consumption
• The key question is whether a technique is energy efficient: does it increase power consumption faster than it increases performance?
• Multiple-issue processor techniques are all energy inefficient:
  1. Issuing multiple instructions incurs some overhead in logic that grows faster than the issue rate grows
  2. Growing gap between peak issue rates and sustained performance
• Number of transistors switching = f(peak issue rate), and performance = f(sustained rate); the growing gap between peak and sustained performance means increasing energy per unit of performance

Commentary
• Itanium architecture does not represent a significant breakthrough in scaling ILP or in avoiding the problems of complexity and power consumption
• Instead of more ILP, architects are focusing on TLP implemented with CMP
• IBM announced Power 4, the 1st commercial CMP: 2 Power 3 processors + L2 cache
  – Sun Microsystems and Intel have switched to CMP rather than aggressive uniprocessors
• Right balance of ILP and TLP is not clear
  – Servers can exploit more TLP
  – On the desktop, single-thread performance is a primary requirement

And in conclusion …
• Limits to ILP (power efficiency, compilers, dependencies …) seem to limit practical designs to 3 to 6 issue
• Explicit parallelism (data level parallelism or thread level parallelism) is the next step to performance
• Coarse-grained vs. fine-grained multithreading
  – Switch only on a big stall vs. every clock cycle
• Simultaneous Multithreading is fine-grained multithreading based on a superscalar microarchitecture
  – Instead of replicating registers, reuse rename registers

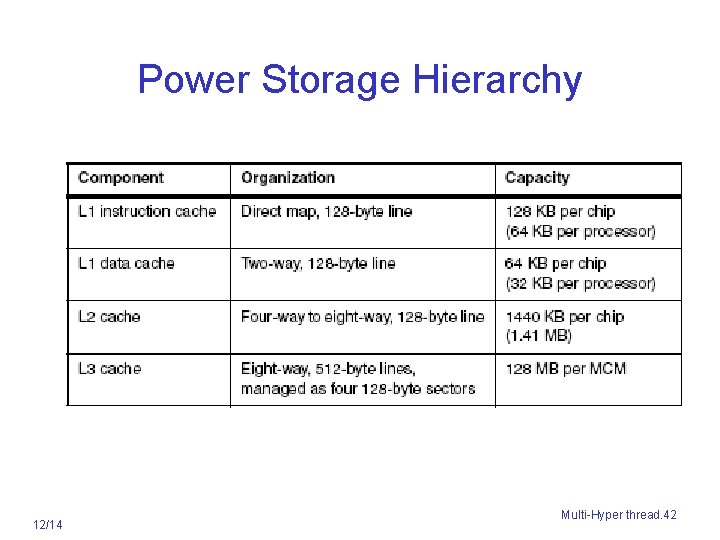

Power Storage Hierarchy

Power Storage Hierarchy
• Hardware data prefetch
  – Hardware prefetches data from L2, L3 & memory: hides memory latency and transparently loads the L1 data cache
  – Triggered by data cache line misses
• L1 prefetches 1 cache line ahead
• L2 prefetches 5 cache lines ahead
• L3 prefetches 17 to 20 lines ahead

Moore’s Law reinterpreted
• Number of cores per chip will double every two years
• Clock speed will not increase (possibly decrease)
• Need to deal with systems with millions of concurrent threads
• Need to deal with inter-chip parallelism as well as intra-chip parallelism

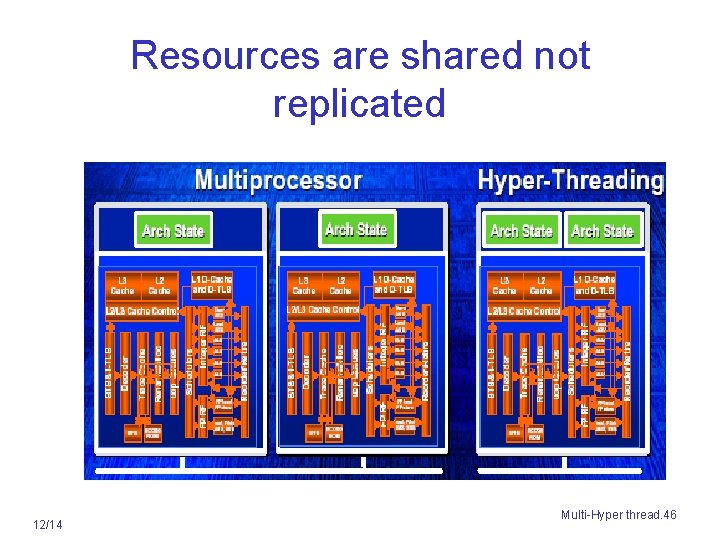

Intel’s Hyper-threading technology is SMT: Pentium 4 (Xeon)
• Executes two tasks simultaneously
  – Two different applications
  – Two threads of the same application
• CPU maintains architecture state for two processors
  – Two logical processors per physical processor
• Implemented on Intel® Xeon™ and most Pentium 4
  – Two logical processors for < 5% additional die area
  – Power efficient performance gain

Resources are shared, not replicated

Multithreaded Microarchitecture
• Dedicated local context per running thread
• Efficient resource sharing
  – Time sharing
  – Space sharing
• Fast thread synchronization / communication
  – Explicit instructions
  – Implicit via shared registers / cache / buffer

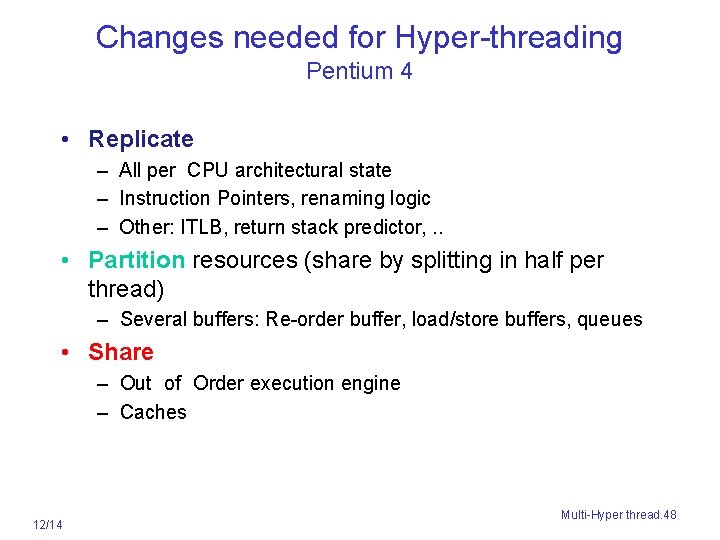

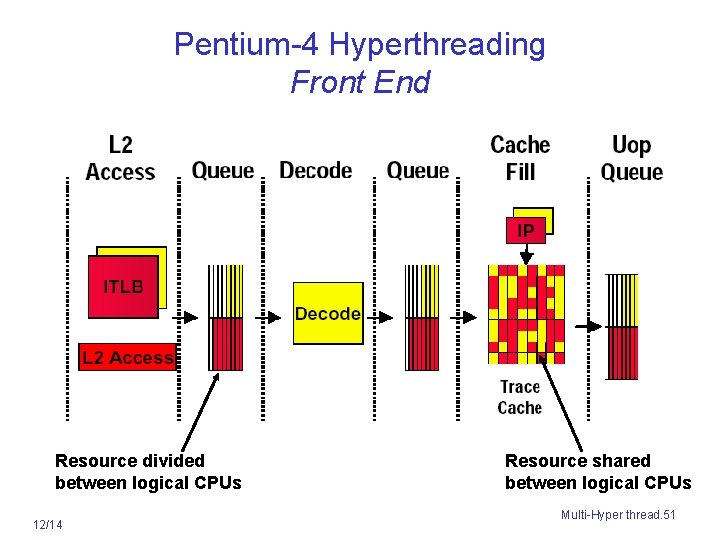

Changes needed for Hyper-threading Pentium 4
• Replicate
  – All per-CPU architectural state
  – Instruction pointers, renaming logic
  – Other: ITLB, return stack predictor, ...
• Partition resources (share by splitting in half per thread)
  – Several buffers: re-order buffer, load/store buffers, queues
• Share
  – Out-of-order execution engine
  – Caches

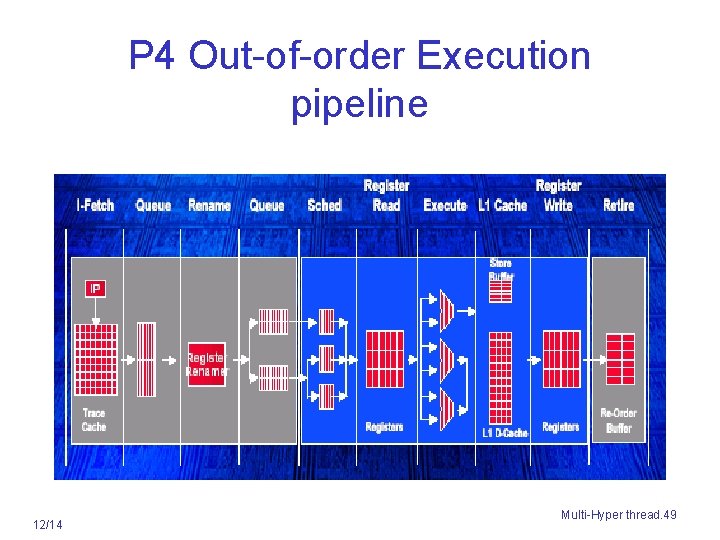

P4 Out-of-order Execution pipeline

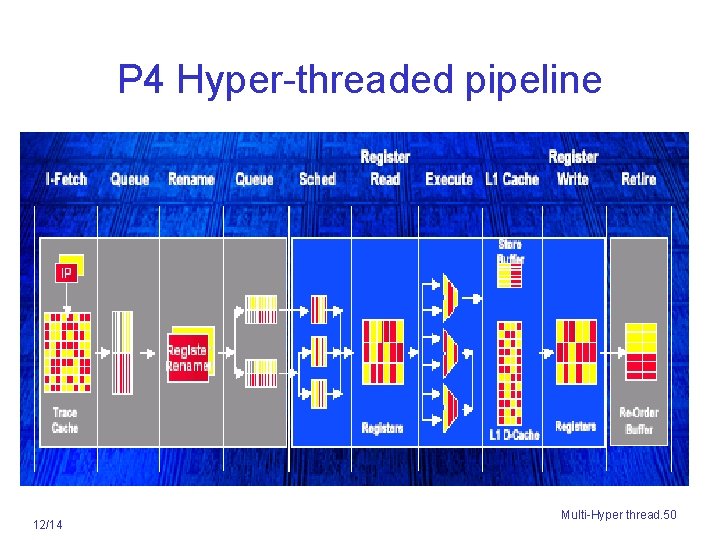

P4 Hyper-threaded pipeline

Pentium 4 Hyperthreading Front End
[Figure legend: resource divided between logical CPUs; resource shared between logical CPUs]

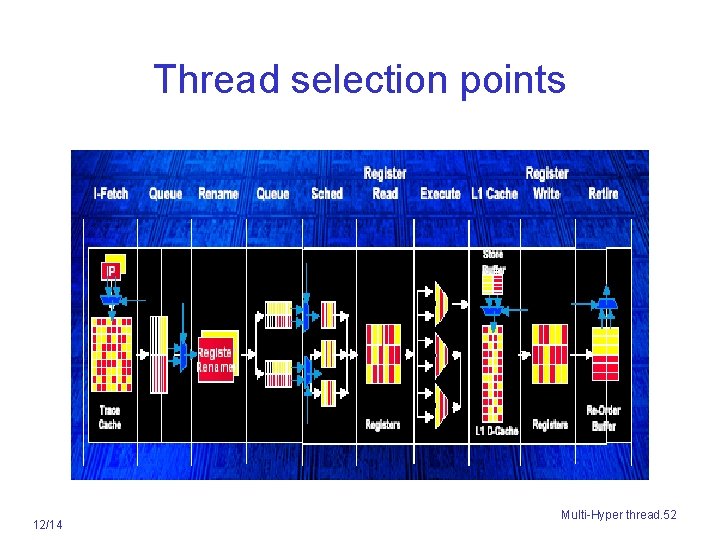

Thread selection points

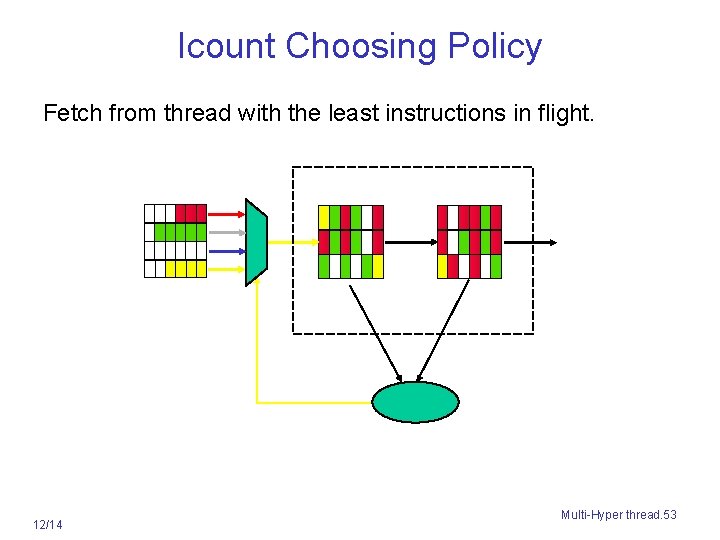

Icount Choosing Policy
• Fetch from the thread with the fewest instructions in flight
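The Icount rule reduces to picking the minimum of a per-thread counter. The sketch below is a simplified model: the counters are plain integers, and ties go to the lowest-numbered thread, which is an assumption made here rather than something the slide specifies.

```python
from typing import Sequence


def icount_pick(in_flight: Sequence[int]) -> int:
    """Return the index of the thread with the fewest instructions in flight.

    ASSUMPTION: ties are broken in favor of the lowest thread index.
    """
    if not in_flight:
        raise ValueError("need at least one thread")
    return min(range(len(in_flight)), key=lambda t: in_flight[t])


# Hypothetical in-flight counts for three hardware threads.
counts = [12, 5, 9]
chosen = icount_pick(counts)
print(f"fetch from thread {chosen}")
```

Favoring the thread with the fewest in-flight instructions steers fetch bandwidth away from threads that are clogging the queues and toward threads that are draining them quickly.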

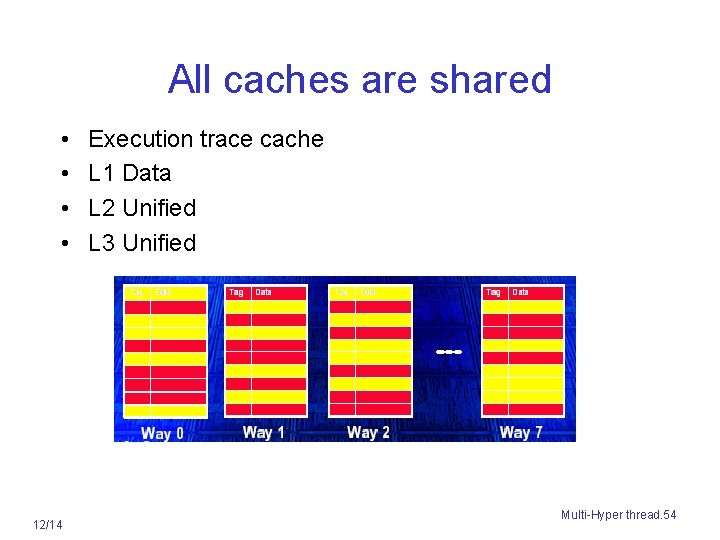

All caches are shared
• Execution trace cache
• L1 Data
• L2 Unified
• L3 Unified

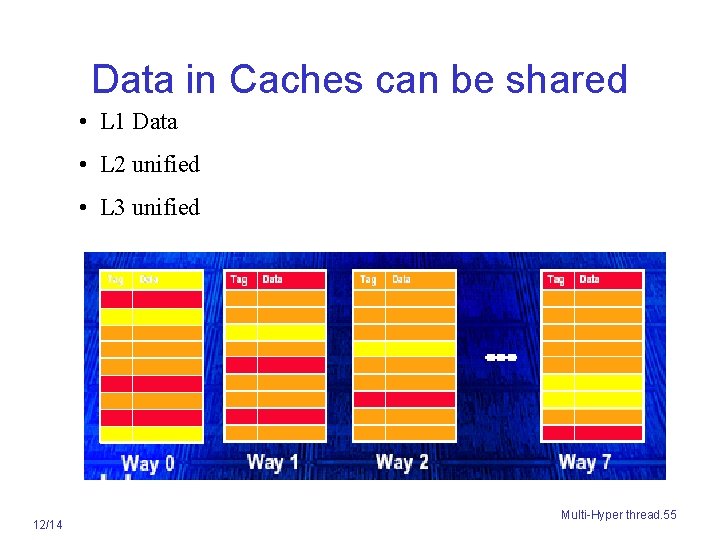

Data in caches can be shared
• L1 Data
• L2 Unified
• L3 Unified

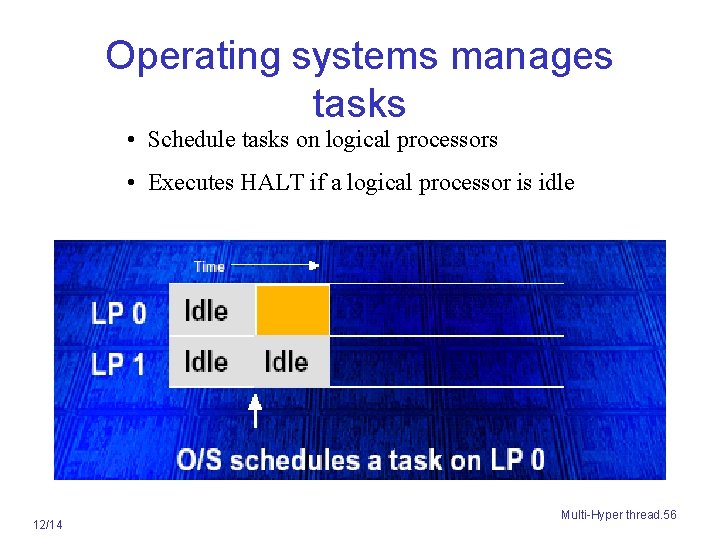

Operating system manages tasks
• Schedules tasks on logical processors
• Executes HALT if a logical processor is idle


Hyper-threading technology
• Significant new technology direction for Intel’s future CPUs
• Exploits parallelism in today’s applications and usage
  – Two logical processors on one physical processor
• Accelerates performance for low silicon and power costs
• Implemented in Xeon MP, Pentium 4, Itanium 2

Multicore & Manycore
• Revolution needed
• Software or architecture alone can’t fix the parallel programming problem; need innovations in both
• “Multicore”: 2X cores per generation: 2, 4, 8, …
• “Manycore”: 100s of cores is highest performance per unit area and per Watt, then 2X per generation: 64, 128, 256, 512, 1024 …
• Multicore architectures & programming models good for 2 to 32 cores won’t evolve to Manycore systems of 1000s of processors
• Desperately need HW/SW models that work for Manycore, or will run out of steam (as ILP ran out of steam at 4 instructions)

Summary: Multithreaded Categories
[Figure: issue slots over time (processor cycles) for Superscalar, Fine-Grained, Coarse-Grained, Multiprocessing, and Simultaneous Multithreading; legend: Thread 1 to Thread 5, idle slot]

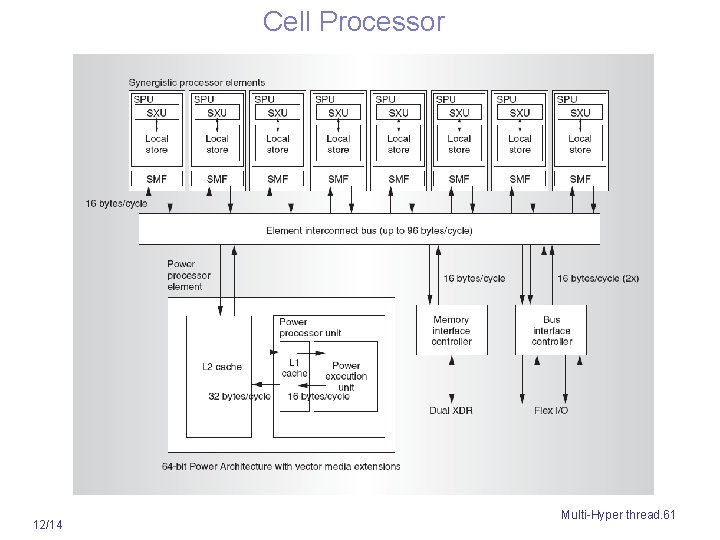

Cell Processor

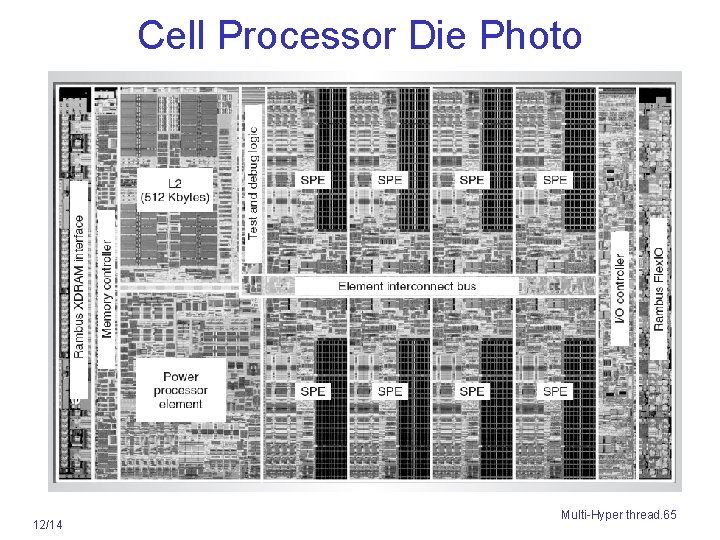

Cell Processor Features
• 64-bit Power core & its L2 cache
• 8 SPEs: processing elements with local memory
• High bandwidth interconnect bus
• Memory interface controller
• 10 simultaneous threads: 8 on SPEs + 2 on Power core
• 234M transistors, 90 nm, SOI, 8-level copper
• On-chip temperature monitored; cooling adjusted


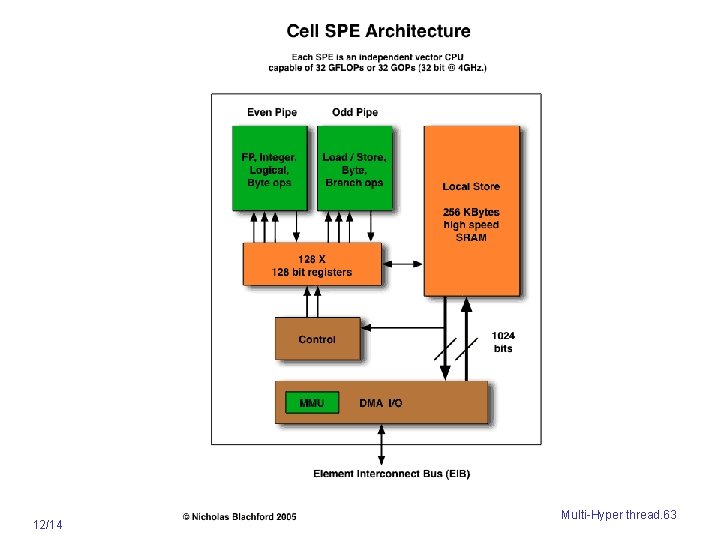

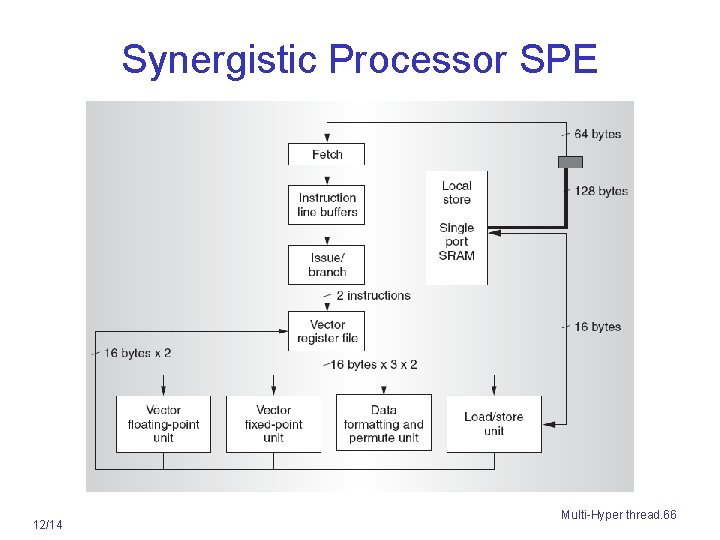

SPE
• SPE optimized for compute-intensive applications
• Both types of processor cores share access to a common address space, which includes main memory and address ranges corresponding to each SPE’s local store, control registers, and I/O devices
• Simple high speed pipeline
• Pervasive parallel computing: SIMD data level parallelism
• 128 x 128-bit register file (scalar and vector)
• Optimized scalar: uses the same h/w path as vector instructions
• 256 KB local store (similar to, but not, a cache: no tags, etc.)

Cell Processor Die Photo

Synergistic Processor SPE

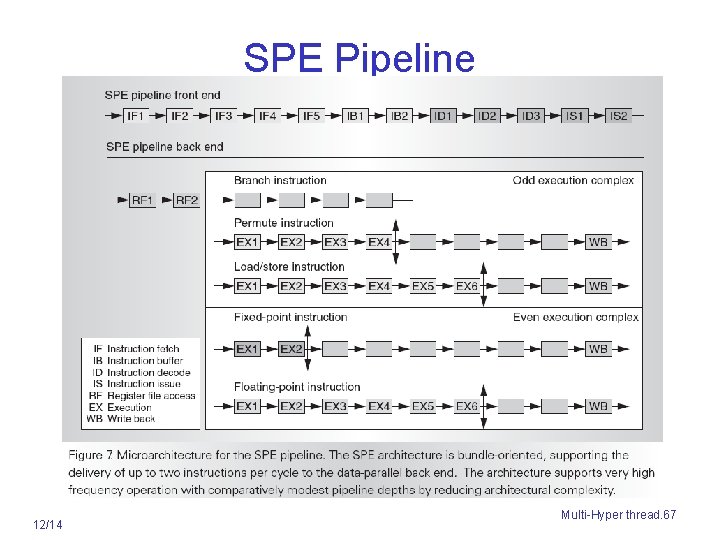

SPE Pipeline

- Slides: 67