Multithreading vs. Event Driven in Code Development of High Performance Servers

Goals

- Maximize server utilization and decrease its latency.
  - The server process doesn't block!
- Robust under heavy loads.
- Relatively easy to develop.

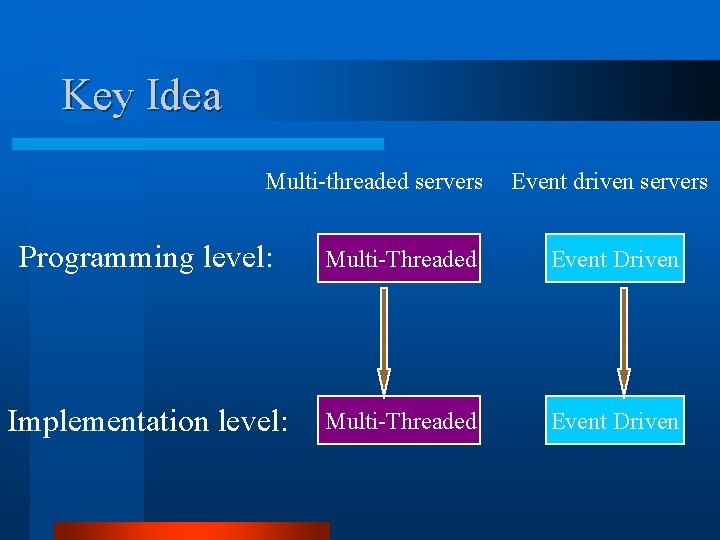

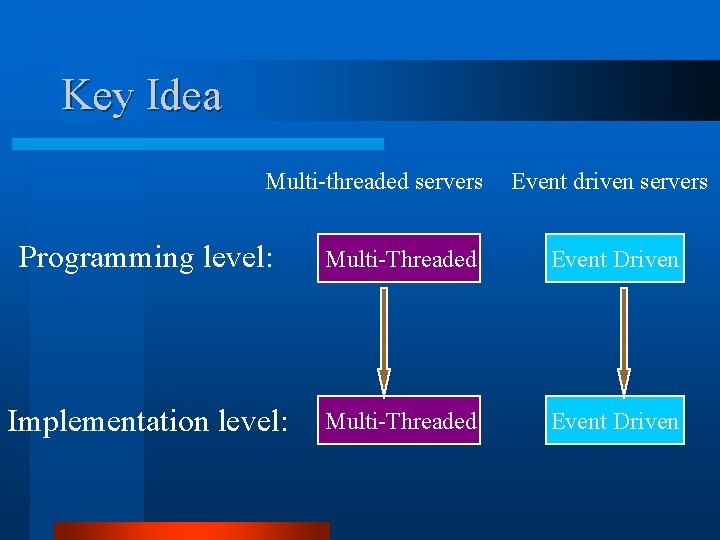

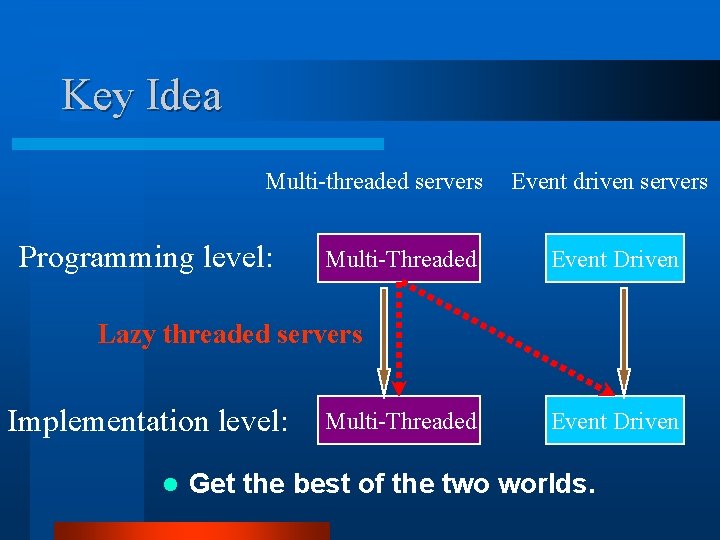

Key Idea

| Server type | Programming level | Implementation level |
| --- | --- | --- |
| Multi-threaded servers | Multi-threaded | Multi-threaded |
| Event driven servers | Event driven | Event driven |

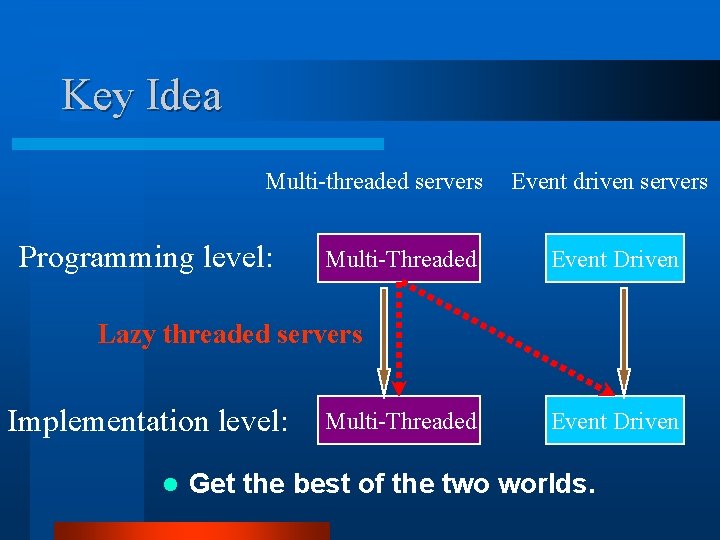

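Key Idea

| Server type | Programming level | Implementation level |
| --- | --- | --- |
| Multi-threaded servers | Multi-threaded | Multi-threaded |
| Event driven servers | Event driven | Event driven |
| Lazy threaded servers | Multi-threaded | Event driven |

Lazy threaded servers get the best of the two worlds.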

Background: AIO

- Asynchronous I/O (AIO) is mainly used for disk reads/writes.
- A disk request is issued and the call returns immediately, without blocking for results.
- On completion, an event is raised.

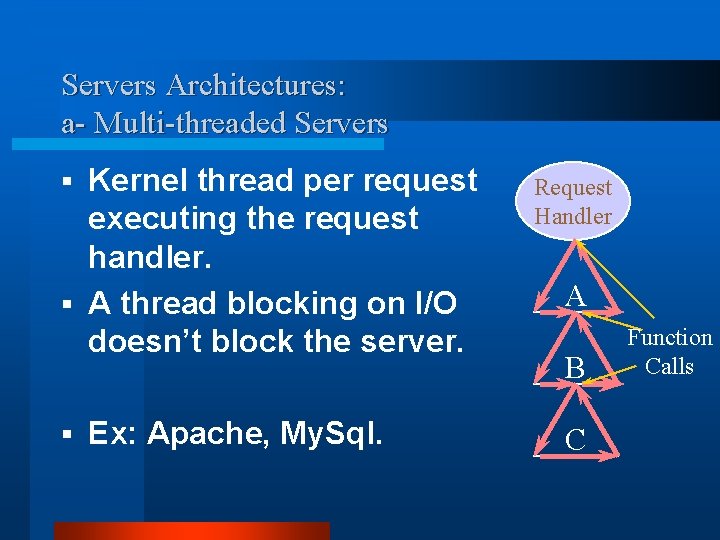

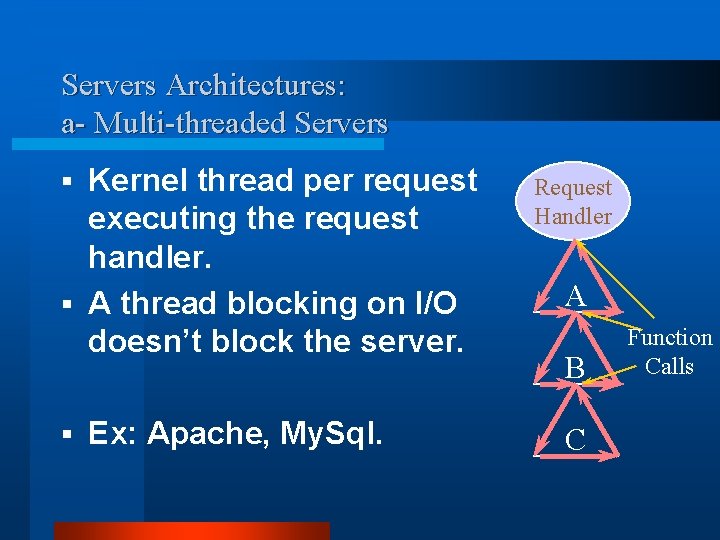

Server Architectures: (a) Multi-threaded Servers

- A kernel thread per request executes the request handler.
- A thread blocking on I/O doesn't block the server.
- Examples: Apache, MySQL.

(Figure: a request handler making function calls A, B, C.)

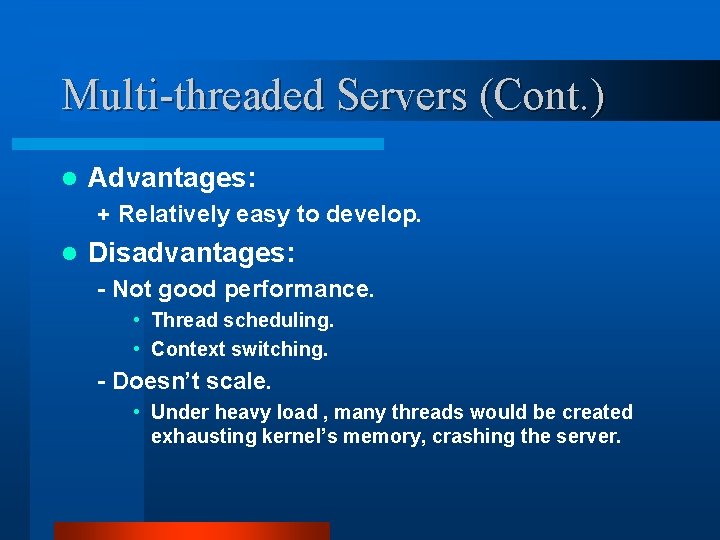

Multi-threaded Servers (Cont.)

- Advantages:
  - Relatively easy to develop.
- Disadvantages:
  - Poor performance, because of:
    - Thread scheduling.
    - Context switching.
  - Doesn't scale:
    - Under heavy load, many threads are created, which can exhaust the kernel's memory and crash the server.
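
As a minimal sketch of the thread-per-request design, assuming POSIX threads: each request gets its own kernel thread, and the handler is ordinary sequential code that is free to block. The names `request_t`, `handle_request`, and `serve_all` are hypothetical and exist only for this illustration; the arithmetic in the handler stands in for real work and blocking I/O.

```c
#include <pthread.h>
#include <stdlib.h>

/* Hypothetical request type; a real server would carry a socket here. */
typedef struct {
    int id;      /* request identifier */
    int result;  /* filled in by the handler */
} request_t;

/* Request handler: plain sequential code that is allowed to block. */
static void *handle_request(void *arg) {
    request_t *req = arg;
    req->result = req->id * 2;   /* stand-in for real work and blocking I/O */
    return NULL;
}

/* Serve n requests with one kernel thread each.
 * Returns 0 on success, -1 if a thread could not be created. */
int serve_all(request_t *reqs, int n) {
    pthread_t *tids = malloc(sizeof *tids * (size_t)n);
    if (tids == NULL) return -1;

    int started = 0, rc = 0;
    for (; started < n; started++) {
        if (pthread_create(&tids[started], NULL,
                           handle_request, &reqs[started]) != 0) {
            rc = -1;   /* under heavy load, thread creation itself can fail */
            break;
        }
    }
    /* Wait for every thread that was actually started. */
    for (int i = 0; i < started; i++) pthread_join(tids[i], NULL);
    free(tids);
    return rc;
}
```

The failure branch is where the scaling problem shows up: the number of threads grows with the number of in-flight requests, so resources run out exactly when load is highest.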

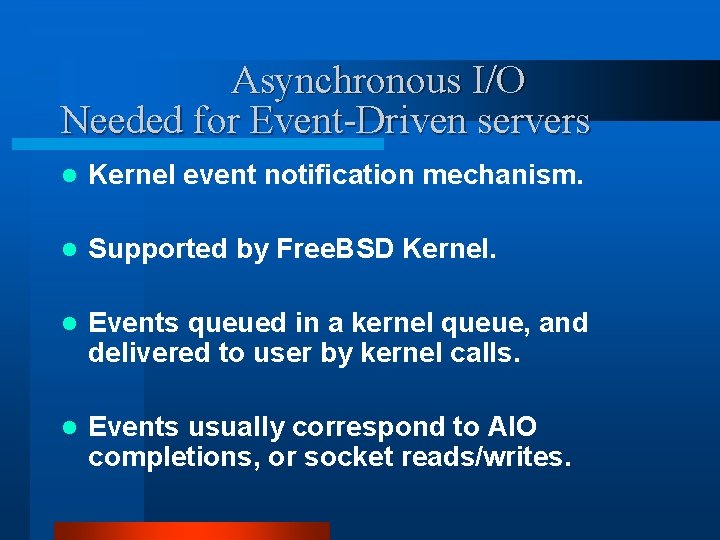

Asynchronous I/O Needed for Event-Driven Servers

- Kernel event notification mechanism.
- Supported by the FreeBSD kernel.
- Events are queued in a kernel queue and delivered to the user through kernel calls.
- Events usually correspond to AIO completions or socket reads/writes.

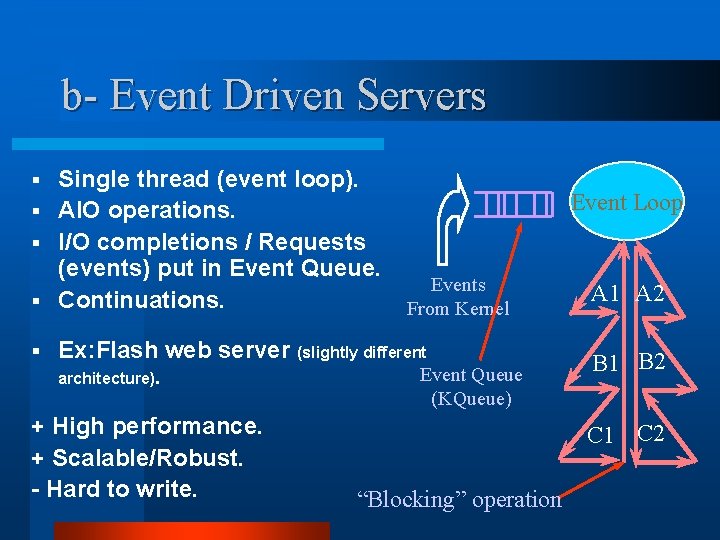

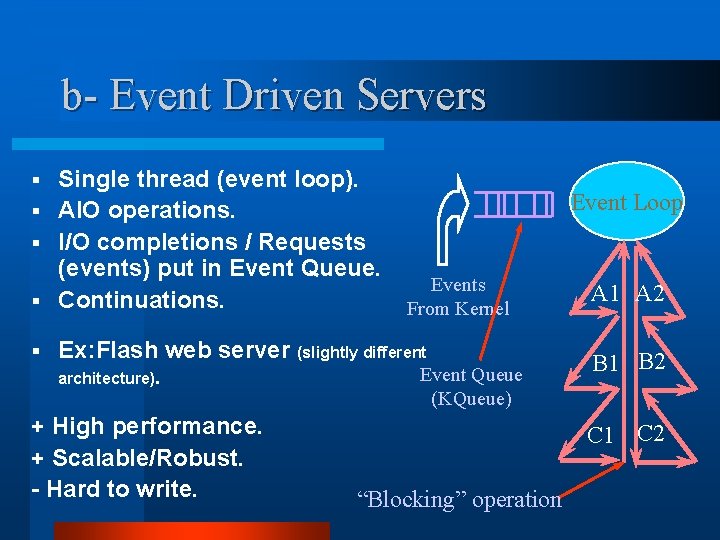

(b) Event Driven Servers

- Single thread (event loop).
- AIO operations.
- I/O completions and requests (events) are put in the event queue (KQueue).
- Continuations.
- Example: Flash web server (slightly different architecture).

Trade-offs:

- + High performance.
- + Scalable/Robust.
- - Hard to write.

(Figure: events from the kernel enter the event queue (KQueue) and are consumed by the event loop; each handler is split at its "blocking" operation into continuations A1/A2, B1/B2, C1/C2.)
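
The continuation style can be sketched in plain C as follows. This is only an illustration under stated assumptions: the event queue is a small user-space array standing in for the kernel queue, the "I/O" completes as soon as its event is dequeued, and every name (`event_t`, `post_event`, `handler_part1`, `handler_part2`, `submit_request`, `run_event_loop`, `trace`) is hypothetical. The point is the shape of the code: a handler is cut in two at its "blocking" operation, and the second half is registered as a continuation.

```c
#include <stddef.h>

#define QUEUE_CAP 16
#define TRACE_CAP 32

/* An event carries the continuation to run plus the state it needs. */
typedef struct event {
    void (*cont)(struct event *ev);  /* code to run when the event fires */
    int request_id;
    int state;                       /* data saved by hand across the split */
} event_t;

static event_t queue[QUEUE_CAP];     /* stand-in for the kernel event queue */
static size_t q_head, q_tail;

const char *trace[TRACE_CAP];        /* order in which handler halves ran */
size_t trace_len;

static void record(const char *step) {
    if (trace_len < TRACE_CAP) trace[trace_len++] = step;
}

static int post_event(void (*cont)(event_t *), int request_id, int state) {
    if (q_tail - q_head == QUEUE_CAP) return -1;   /* queue full */
    event_t *slot = &queue[q_tail++ % QUEUE_CAP];
    slot->cont = cont;
    slot->request_id = request_id;
    slot->state = state;
    return 0;
}

/* Second half of the handler: runs when the simulated I/O completes. */
static void handler_part2(event_t *ev) {
    record(ev->request_id == 0 ? "A2" : "B2");
}

/* First half: do some work, start the I/O, then return to the loop. */
static void handler_part1(event_t *ev) {
    record(ev->request_id == 0 ? "A1" : "B1");
    /* Instead of blocking, register the continuation and unwind the stack. */
    post_event(handler_part2, ev->request_id, ev->state + 1);
}

int submit_request(int request_id) {
    return post_event(handler_part1, request_id, 0);
}

/* Single-threaded event loop; returns the number of events handled. */
int run_event_loop(void) {
    int handled = 0;
    while (q_head != q_tail) {
        event_t ev = queue[q_head++ % QUEUE_CAP];  /* copy: slot may be reused */
        ev.cont(&ev);
        handled++;
    }
    return handled;
}
```

Submitting requests 0 and 1 and then running the loop interleaves the halves as A1, B1, A2, B2. Any local variable needed after the split has to be carried in the event by hand (the `state` field here), which is a large part of why this style is hard to write.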

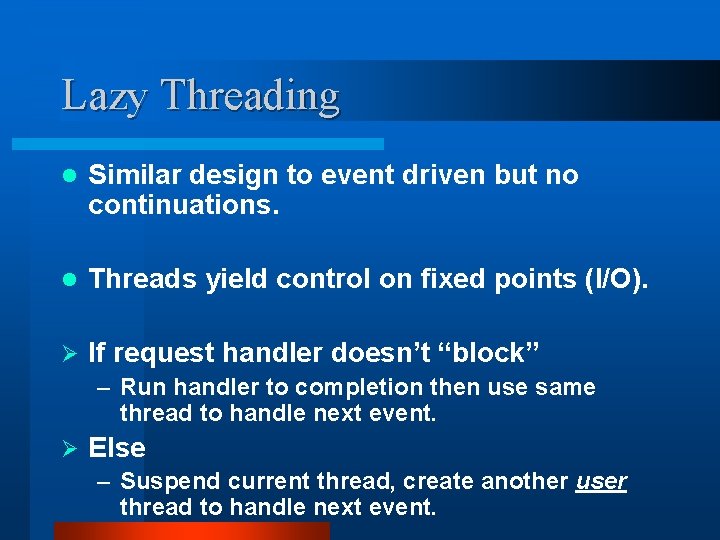

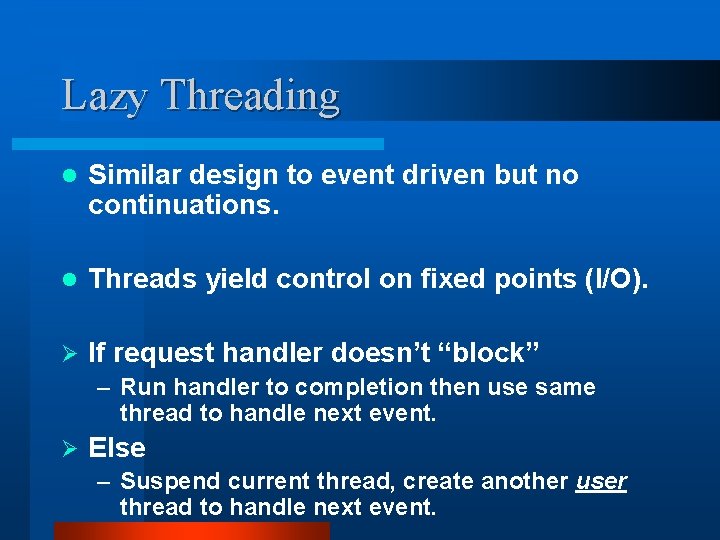

Lazy Threading

- Similar design to event driven, but with no continuations.
- Threads yield control only at fixed points (I/O).
  - If the request handler doesn't "block": run the handler to completion, then use the same thread to handle the next event.
  - Else: suspend the current thread and create another user thread to handle the next event.

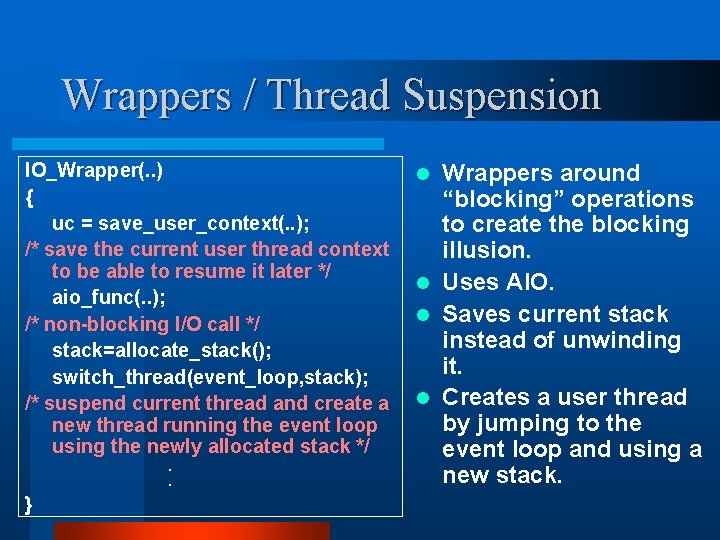

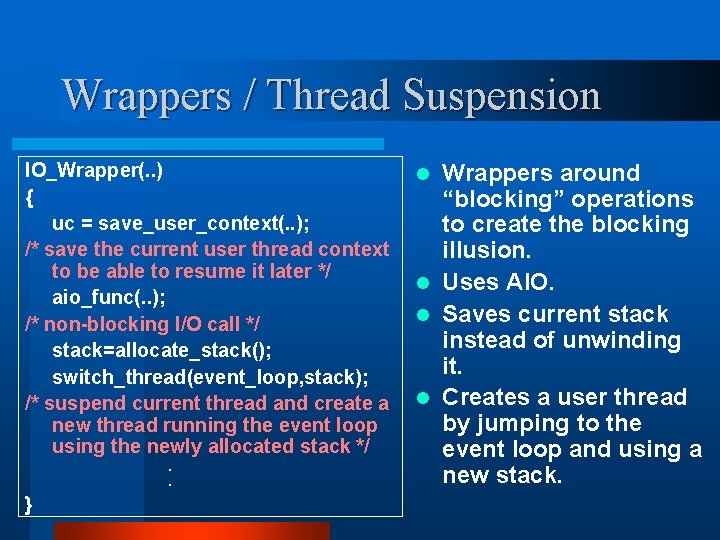

Wrappers / Thread Suspension

- Wrappers around "blocking" operations create the blocking illusion.
- Uses AIO.
- Saves the current stack instead of unwinding it.
- Creates a user thread by jumping to the event loop and using a new stack.

```
IO_Wrapper(..) {
    uc = save_user_context(..);        /* save the current user thread context
                                          to be able to resume it later */
    aio_func(..);                      /* non-blocking I/O call */
    stack = allocate_stack();
    switch_thread(event_loop, stack);  /* suspend the current thread and create a
                                          new thread running the event loop on
                                          the newly allocated stack */
    ..
}
```

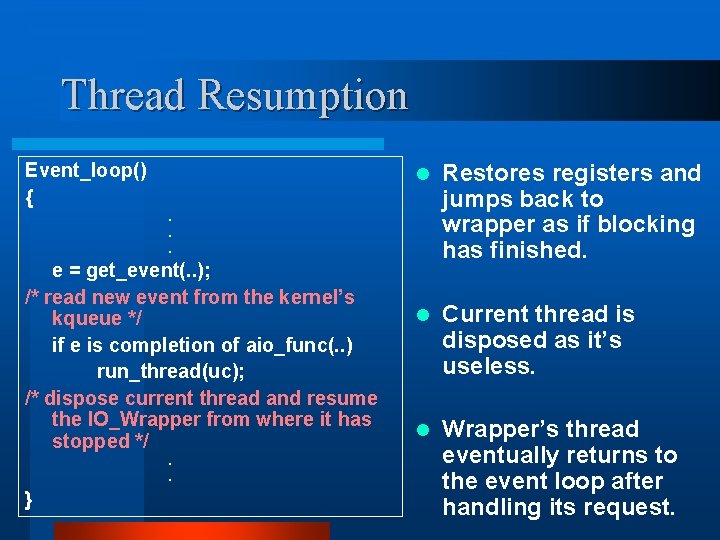

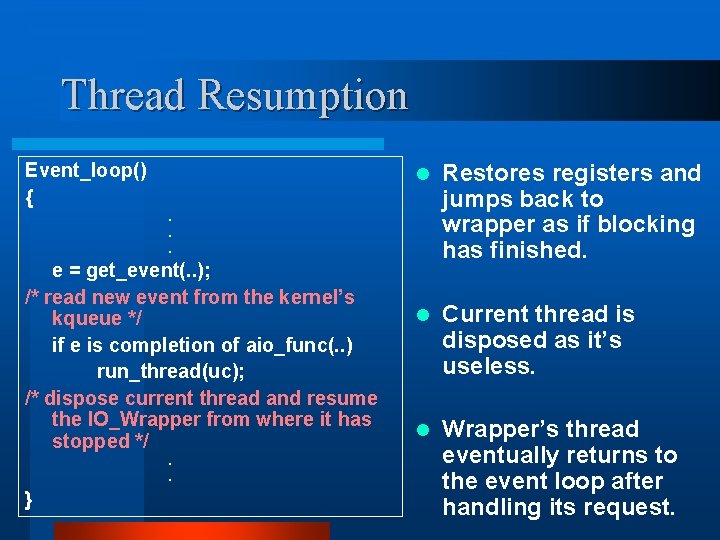

Thread Resumption

- Restores registers and jumps back to the wrapper as if the blocking call has finished.
- The current thread is disposed of, as it is no longer needed.
- The wrapper's thread eventually returns to the event loop after handling its request.

```
Event_loop() {
    ..
    e = get_event(..);   /* read new event from the kernel's kqueue */
    if e is completion of aio_func(..)
        run_thread(uc);  /* dispose of the current thread and resume the
                            IO_Wrapper from where it stopped */
    ..
}
```
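
Suspension and resumption can be tried out together in a small, self-contained sketch built on the `<ucontext.h>` calls `getcontext`, `makecontext`, `swapcontext`, and `setcontext` (assuming a platform that still provides them, such as glibc on Linux). It is not the slides' implementation: the kernel queue and the AIO call are simulated by a user-space array whose completion event is posted immediately, and all names (`event_t`, `push_event`, `switch_to_new_loop`, `io_wrapper`, `handle_request`, `run_server`, `trace`) are hypothetical. One further assumption: the disposed thread's stack is freed only after switching away from it, since a thread cannot free the stack it is running on.

```c
#include <stdlib.h>
#include <ucontext.h>

#define STACK_SIZE (64 * 1024)
#define MAX_EVENTS 16

typedef enum { EV_REQUEST, EV_IO_DONE } ev_type;
typedef struct {
    ev_type type;
    int id;              /* request id (EV_REQUEST) */
    ucontext_t *uc;      /* suspended thread to resume (EV_IO_DONE) */
} event_t;

static event_t events[MAX_EVENTS];   /* simulated kernel queue */
static int ev_head, ev_tail;
static ucontext_t main_uc;           /* where run_server() waits */
static void *current_stack;          /* stack of the running user thread */
static void *dead_stack;             /* disposed thread's stack, freed after the switch */

char trace[32];                      /* execution order, for inspection */
int trace_len;

static void record(char c) { if (trace_len < 31) trace[trace_len++] = c; }

void push_event(ev_type type, int id, ucontext_t *uc) {
    if (ev_tail < MAX_EVENTS) events[ev_tail++] = (event_t){type, id, uc};
}

static void event_loop(void);

/* Suspend the caller into *self and run a fresh event loop on a new stack. */
static void switch_to_new_loop(ucontext_t *self) {
    ucontext_t loop_uc;
    void *my_stack = current_stack;
    void *stack = malloc(STACK_SIZE);
    if (stack == NULL || getcontext(&loop_uc) != 0) abort();
    loop_uc.uc_stack.ss_sp = stack;
    loop_uc.uc_stack.ss_size = STACK_SIZE;
    loop_uc.uc_link = NULL;
    makecontext(&loop_uc, event_loop, 0);
    current_stack = stack;
    swapcontext(self, &loop_uc);
    /* Resumed by some event loop: free the thread it disposed of. */
    free(dead_stack);
    dead_stack = NULL;
    current_stack = my_stack;
}

/* Looks like a blocking call to the handler, but never blocks the process. */
static void io_wrapper(void) {
    ucontext_t self;
    push_event(EV_IO_DONE, 0, &self);   /* stand-in for aio_func(): completes later */
    switch_to_new_loop(&self);
}

/* Handler written as straight-line code, with no continuations. */
static void handle_request(int id) {
    record((char)('a' + id));           /* before the "blocking" call */
    if (id != 2) io_wrapper();          /* request 2 never blocks */
    record((char)('A' + id));           /* after the I/O has completed */
}

static void event_loop(void) {
    for (;;) {
        ucontext_t *next = &main_uc;    /* queue empty: return to run_server() */
        if (ev_head < ev_tail) {
            event_t ev = events[ev_head++];
            if (ev.type == EV_REQUEST) { handle_request(ev.id); continue; }
            next = ev.uc;               /* I/O completion: resume its thread */
        }
        dead_stack = current_stack;     /* this loop thread is no longer needed */
        setcontext(next);
    }
}

void run_server(void) {
    switch_to_new_loop(&main_uc);
}
```

Queuing requests 0, 1, and 2 with `push_event(EV_REQUEST, id, NULL)` and calling `run_server()` leaves `abcCAB` in `trace`: requests 0 and 1 suspend at their I/O, request 2 runs to completion on the same thread that picked it up, and the two suspended handlers then resume in completion order.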

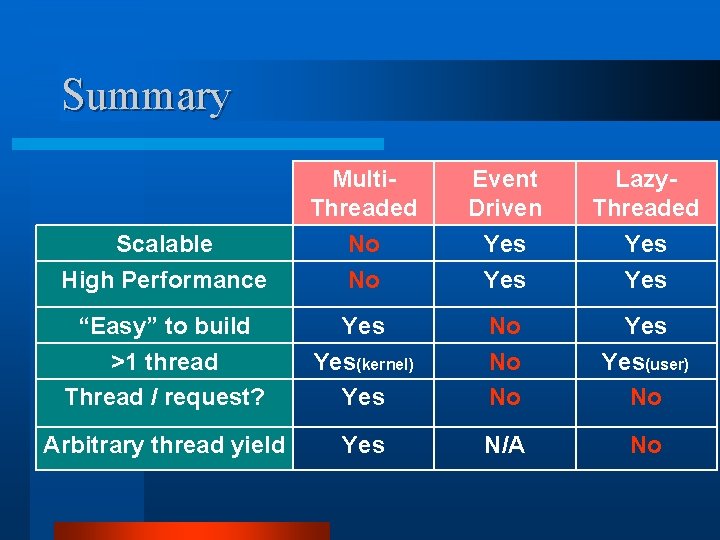

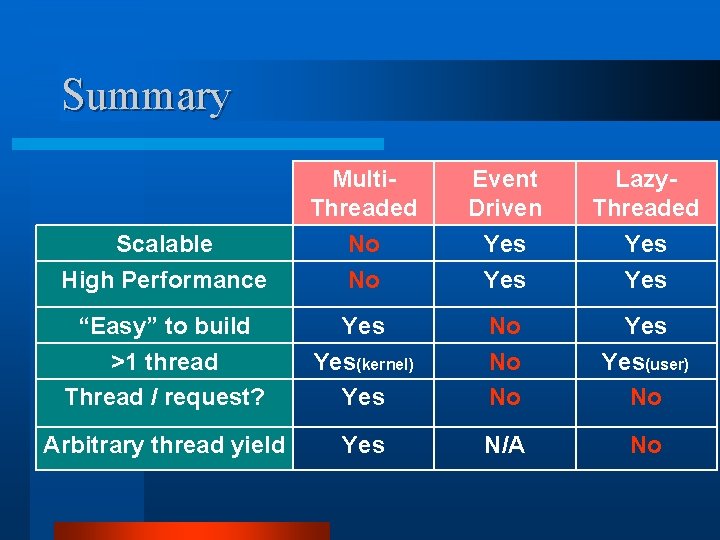

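Summary

| | Scalable | High performance | "Easy" to build | >1 thread | Thread / request? | Arbitrary thread yield |
| --- | --- | --- | --- | --- | --- | --- |
| Multi-threaded | No | No | Yes | Yes (kernel) | Yes | Yes |
| Event driven | Yes | Yes | No | No | No | N/A |
| Lazy-threaded | Yes | Yes | Yes | Yes (user) | No | No |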