Multithreaded Architectures Dr Avi Mendelson Architecture fall 2003


Multithreaded Architectures
Dr. Avi Mendelson
Advanced Computer Architecture – fall 2003, Technion
(based on a presentation made by Dr. Amir Roth)

Overview
- Multithreaded Architecture
- Multithreaded Micro-Architecture
- Conclusions

Goals of Multithreaded Architecture
- A successful MTA must have:
  - Minimal impact on the conventional design
  - Improved throughput on multiple-thread workloads
    - Multiple thread = multithreaded or multiprogrammed workload
  - Good cost/throughput
  - Minimal impact on single-thread performance
- Would also like:
  - Performance gain on multithreaded applications
    - Shared resources = faster communication/synchronization

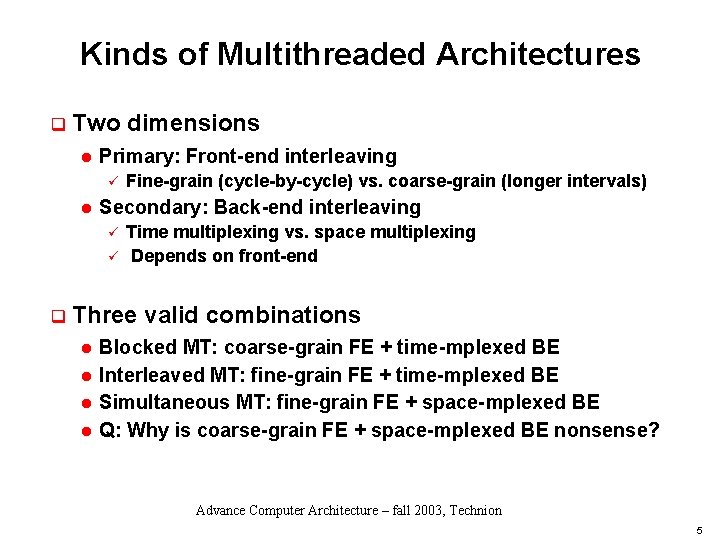

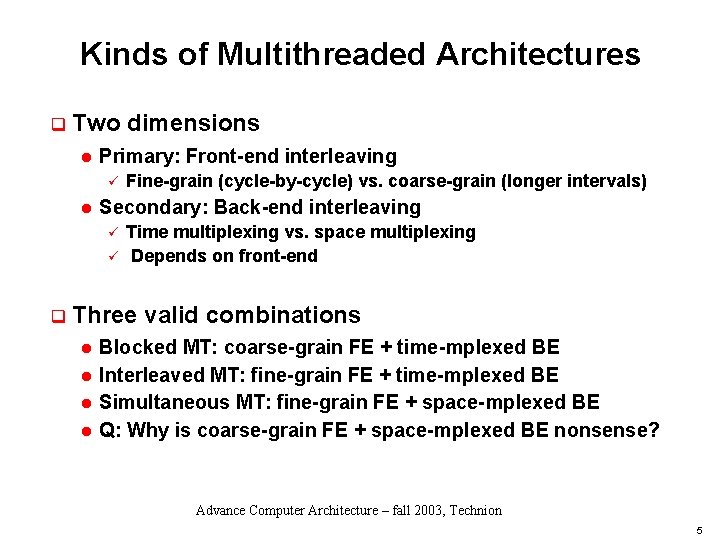

Kinds of Multithreaded Architectures
- Two dimensions
  - Primary: front-end (FE) interleaving
    - Fine-grain (cycle-by-cycle) vs. coarse-grain (longer intervals)
  - Secondary: back-end (BE) interleaving
    - Time multiplexing vs. space multiplexing
    - Depends on front-end
- Three valid combinations
  - Blocked MT: coarse-grain FE + time-multiplexed BE
  - Interleaved MT: fine-grain FE + time-multiplexed BE
  - Simultaneous MT: fine-grain FE + space-multiplexed BE
- Q: Why is coarse-grain FE + space-multiplexed BE nonsense?

Throughput vs. Utilization
- Not the same thing
  - Throughput: how many instructions complete per cycle
  - Utilization: how many resources are busy per cycle
  - Can increase one without the other
  - Can increase one while decreasing the other
  - We would like to increase both
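The distinction between the two metrics can be made concrete with a small sketch. The schedule encoding, the function names, and the three toy schedules below are illustrative assumptions, not taken from the slides: each row is one cycle, each entry is one issue slot, and a trailing "!" marks work that occupies a slot but is later thrown away.

```python
from typing import List, Optional

# One inner list per cycle, one entry per issue slot (hypothetical encoding).
# None is an empty slot; a name ending in "!" is discarded work.
Schedule = List[List[Optional[str]]]


def utilization(schedule: Schedule) -> float:
    """Fraction of issue slots that are busy, whether the work is useful or not."""
    slots = sum(len(cycle) for cycle in schedule)
    busy = sum(1 for cycle in schedule for op in cycle if op is not None)
    return busy / slots


def throughput(schedule: Schedule) -> float:
    """Useful instructions completed per cycle."""
    useful = sum(
        1
        for cycle in schedule
        for op in cycle
        if op is not None and not op.endswith("!")
    )
    return useful / len(schedule)


# Four useful instructions (a, b, c, d) under three toy schedules.
scalar = [["a"], [None], ["b"], ["c"], ["d"]]           # one stall cycle
superscalar = [["a", None], ["b", "c"], ["d", None]]    # 2-wide, some empty slots
predicated = [["a", "x!"], ["b", "c"], ["d", "y!"]]     # empty slots filled with discarded work

for name, s in [("scalar", scalar), ("superscalar", superscalar), ("predicated", predicated)]:
    print(f"{name:12s} utilization={utilization(s):.2f} throughput={throughput(s):.2f}")
```

In this toy data the 2-wide schedule raises throughput while lowering utilization, and the predicated schedule raises utilization without raising throughput further, because the extra busy slots hold discarded work.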

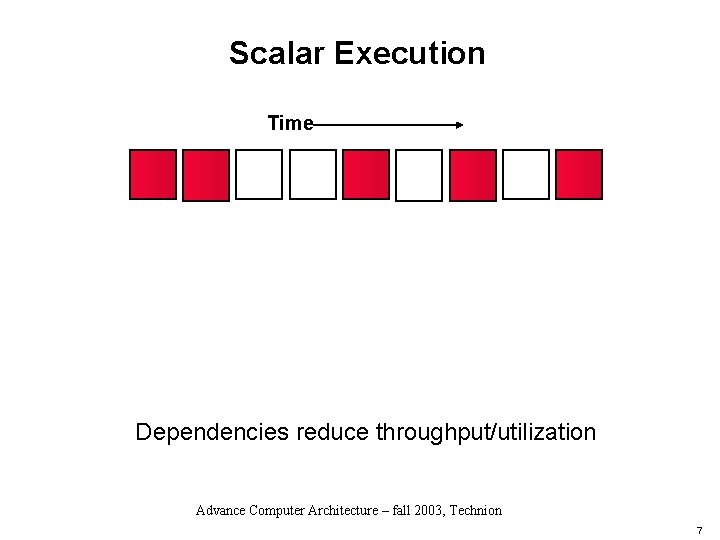

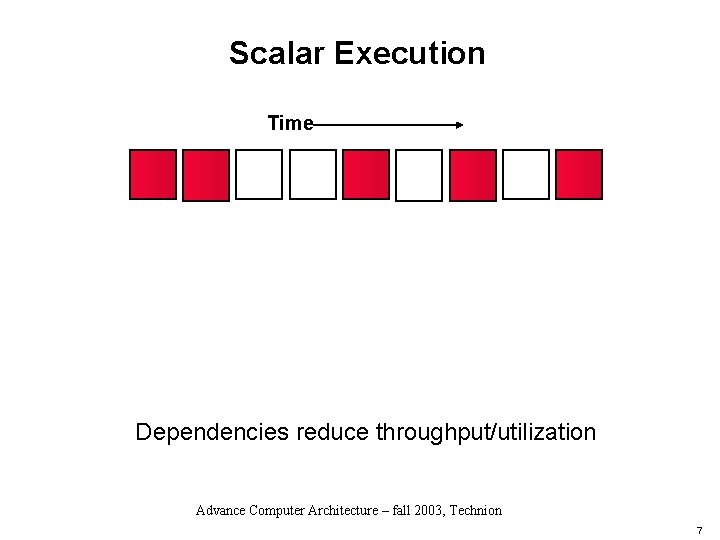

Scalar Execution (diagram axis: time)
- Dependencies reduce throughput/utilization

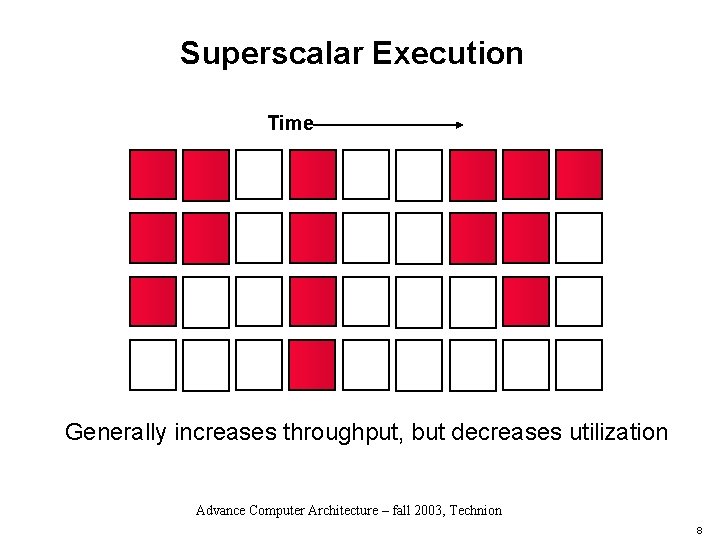

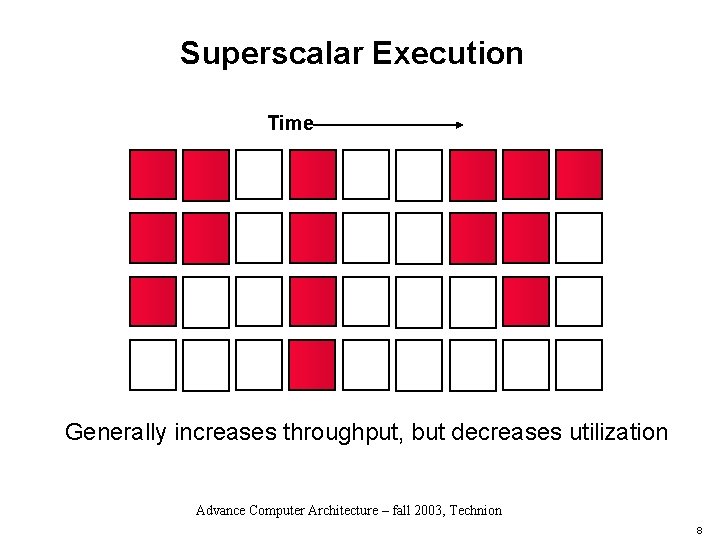

Superscalar Execution (diagram axis: time)
- Generally increases throughput, but decreases utilization

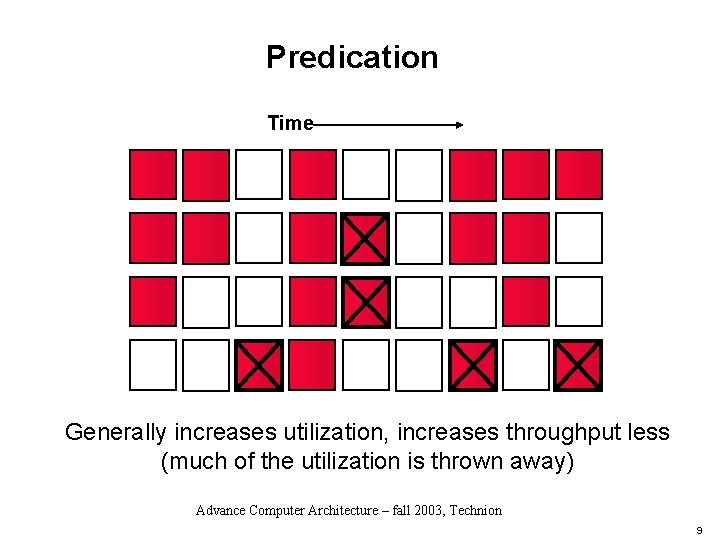

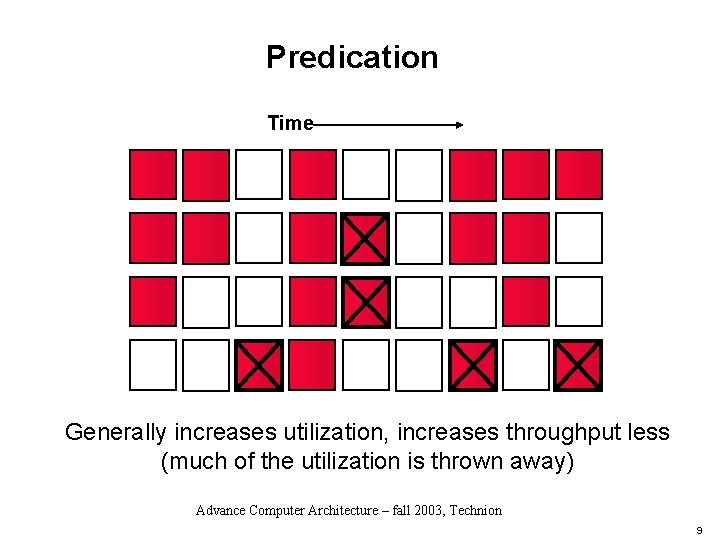

Predication (diagram axis: time)
- Generally increases utilization; increases throughput less (much of the utilization is thrown away)

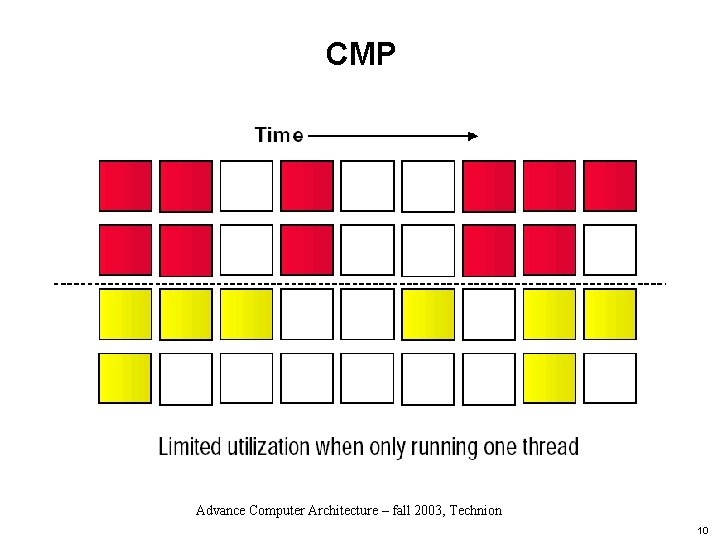

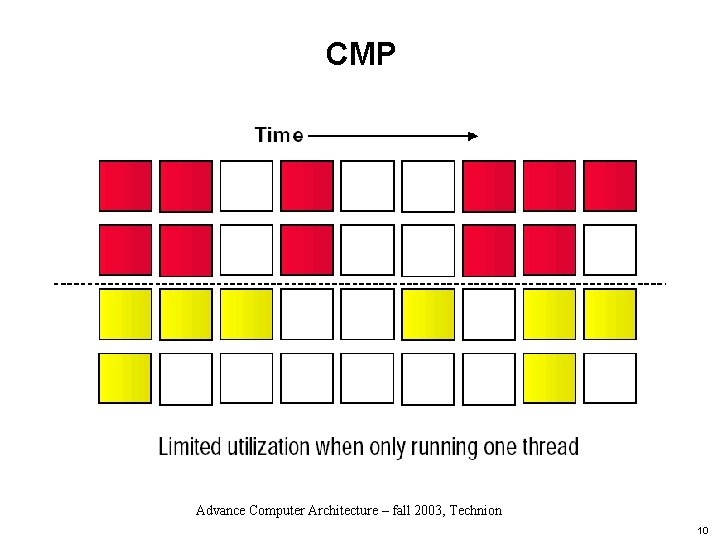

CMP (chip multiprocessor)

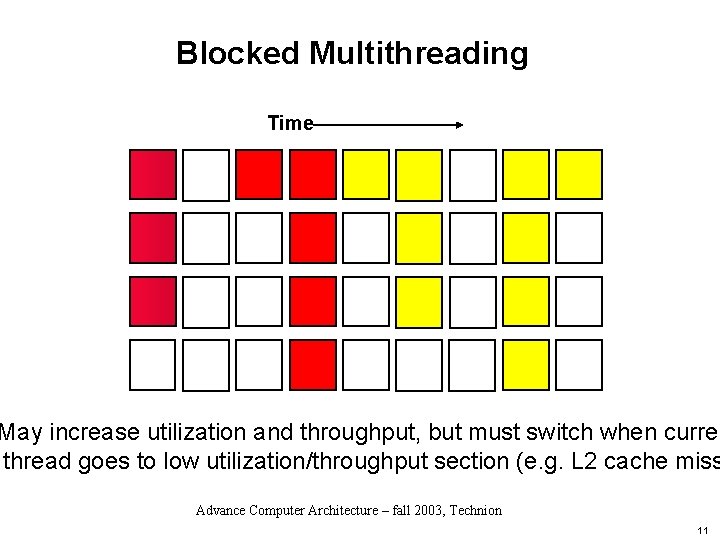

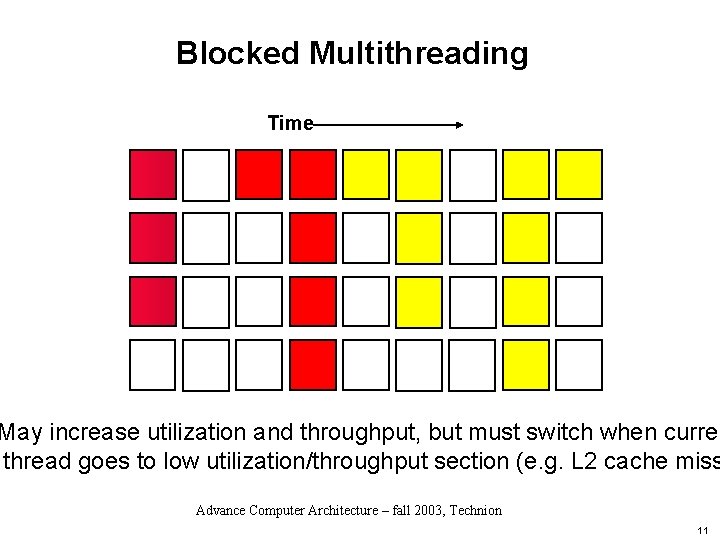

Blocked Multithreading (diagram axis: time)
- May increase utilization and throughput, but must switch when the current thread enters a low utilization/throughput section (e.g., an L2 cache miss)

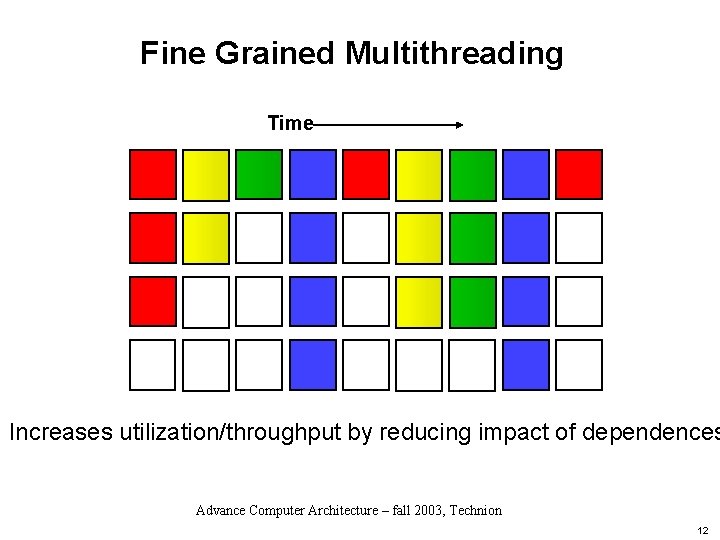

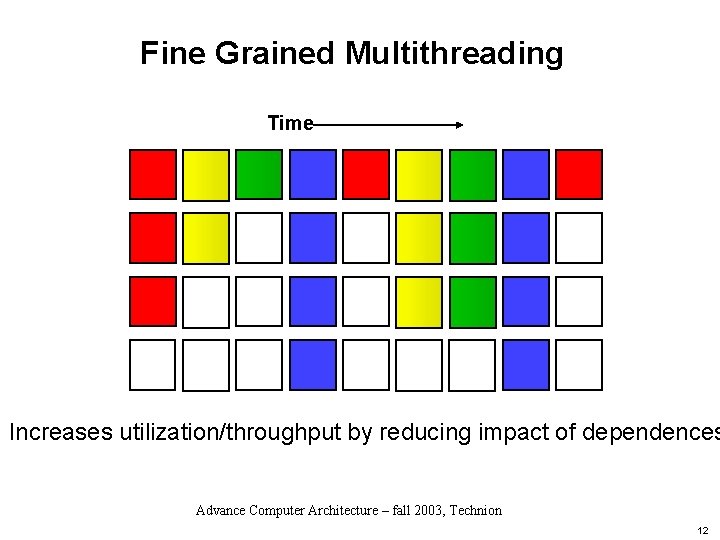

Fine-Grained Multithreading (diagram axis: time)
- Increases utilization/throughput by reducing the impact of dependences

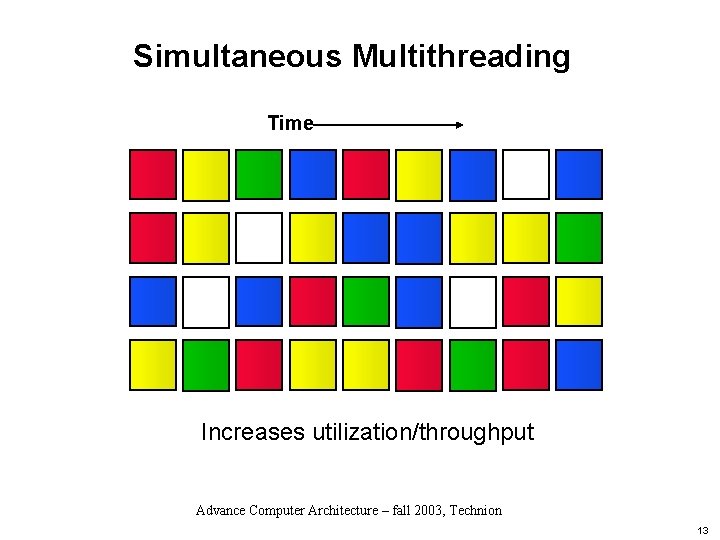

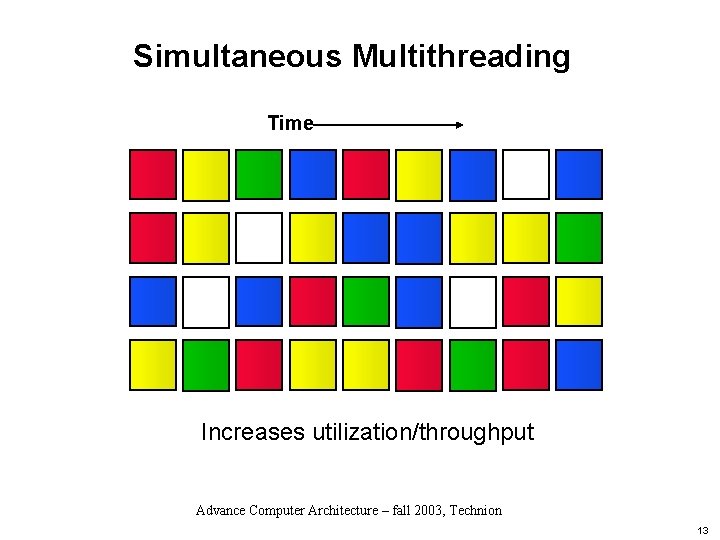

Simultaneous Multithreading (diagram axis: time)
- Increases utilization/throughput

Blocked Multithreading
- Critical decision: when to switch threads
  - Answer: when the current thread's utilization/throughput is about to drop
    - Primary example: L2 cache miss
- Requirements for throughput:
  - Thread-switch + pipe-fill time << blocking latency
    - Would like to get some work done before the other thread comes back
  - Fast thread-switch: multiple register banks
  - Fast pipe-fill: short pipe
- Examples
  - Macro-dataflow machine
  - MIT Alewife
  - IBM Northstar
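The requirement that thread-switch plus pipe-fill time be much smaller than the blocking latency can be illustrated with a simplified analytical model. This is a sketch under stated assumptions, not a model from the slides: every thread runs a fixed number of cycles between misses, every miss has the same latency, and `switch_overhead` (a hypothetical parameter) lumps thread-switch and pipe-fill time together.

```python
def blocked_mt_efficiency(run_cycles: int, switch_overhead: int,
                          miss_latency: int, n_threads: int) -> float:
    """Fraction of cycles spent on useful work under a simplified model.

    run_cycles      cycles a thread executes before it blocks (assumed constant)
    switch_overhead thread-switch + pipe-fill cycles paid on every switch
    miss_latency    cycles a blocked thread waits (e.g. an L2 miss)
    """
    if n_threads == 1:
        # Nothing to switch to: the pipeline idles for the whole miss.
        return run_cycles / (run_cycles + miss_latency)
    # Unsaturated: each thread completes one run per (run + overhead + miss) period.
    unsaturated = n_threads * run_cycles / (run_cycles + switch_overhead + miss_latency)
    # Saturated: a ready thread always exists, so only the switch overhead is lost.
    saturated = run_cycles / (run_cycles + switch_overhead)
    return min(unsaturated, saturated)


# Cheap switch (5 cycles) against a 100-cycle miss: more threads help.
for n in (1, 2, 10):
    print(n, round(blocked_mt_efficiency(20, 5, 100, n), 3))

# Expensive switch (200 cycles) against the same miss: switching hurts.
print(round(blocked_mt_efficiency(20, 200, 100, 2), 3))
```

With a cheap switch the efficiency rises with thread count until it saturates at run / (run + overhead); with a switch that costs more than the miss itself, two threads do worse than one, which is why fast switching and a short pipe are listed as requirements.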

Interleaved Multithreading
- Critical decision: none?
- Requirements for throughput:
  - Enough threads to eliminate intra-thread hazards
    - Increasing the number of threads reduces single-thread performance
- Examples:
  - Denelcor HEP: 8 threads (latencies were shorter then)
  - TERA: 128 threads
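A toy simulation shows why the thread count must cover the dependence latency. The model is an assumption made for illustration: strict round-robin issue, one slot per cycle, and every instruction depends on the previous instruction of the same thread with a fixed `latency`; the function name is hypothetical.

```python
def interleaved_utilization(n_threads: int, latency: int, cycles: int = 1000) -> float:
    """Fraction of issue slots used by a strict round-robin interleaved pipeline.

    A thread may issue only if its previous instruction was issued at least
    `latency` cycles earlier; otherwise its slot is wasted.
    """
    ready_at = [0] * n_threads       # earliest cycle each thread may issue again
    issued = 0
    for cycle in range(cycles):
        t = cycle % n_threads        # strict round-robin thread selection
        if ready_at[t] <= cycle:
            issued += 1
            ready_at[t] = cycle + latency
    return issued / cycles


for n in (1, 2, 4, 8):
    u = interleaved_utilization(n, latency=4)
    # Per-thread rate: what a single thread sees in instructions per cycle.
    print(f"threads={n} utilization={u:.2f} per-thread rate={u / n:.3f}")
```

Once the number of threads reaches the latency, every slot is filled; adding more threads beyond that point keeps utilization at 1.0 but lowers each thread's individual rate, which is the single-thread cost noted above.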

Simultaneous Multithreading
- Critical decision: fetch-interleaving policy
- Requirements for throughput:
  - Enough threads to utilize resources
    - Notice: many fewer than needed to stretch dependences
- Examples:
  - Compaq Alpha EV8
  - Intel P4

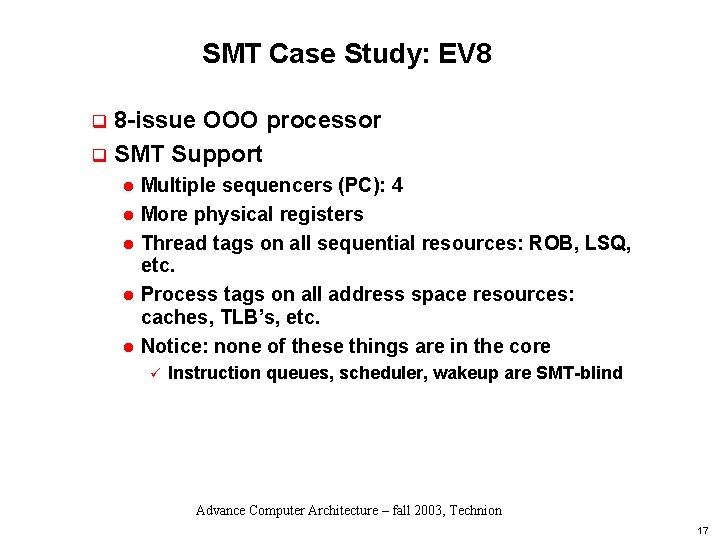

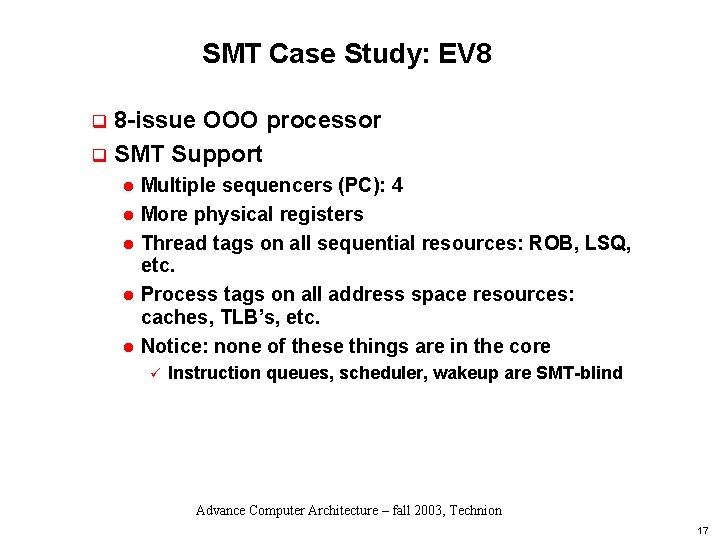

SMT Case Study: EV8
- 8-issue OOO processor
- SMT support
  - Multiple sequencers (PC): 4
  - More physical registers
  - Thread tags on all sequential resources: ROB, LSQ, etc.
  - Process tags on all address-space resources: caches, TLBs, etc.
- Notice: none of these things are in the core
  - Instruction queues, scheduler, wakeup are SMT-blind
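The idea of thread tags on sequential resources can be sketched with a shared reorder buffer whose entries carry a thread identifier. This is a hypothetical illustration, not the EV8 design: the `RobEntry` fields and the `squash_thread` helper are invented names, and the sketch only shows that recovery for one thread can leave other threads' entries untouched.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RobEntry:
    thread_id: int      # thread tag: which hardware thread owns this entry
    seq: int            # position in that thread's program order
    done: bool = False  # execution finished, waiting to retire


def squash_thread(rob: List[RobEntry], thread_id: int, from_seq: int) -> List[RobEntry]:
    """Remove one thread's entries at or after from_seq (e.g. after a mispredict).

    Entries that belong to other threads are kept in their original order.
    """
    return [e for e in rob if not (e.thread_id == thread_id and e.seq >= from_seq)]


# Two threads interleaved in one shared buffer.
rob = [RobEntry(0, 0), RobEntry(1, 0), RobEntry(0, 1), RobEntry(1, 1)]
rob = squash_thread(rob, thread_id=0, from_seq=1)
print([(e.thread_id, e.seq) for e in rob])
```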

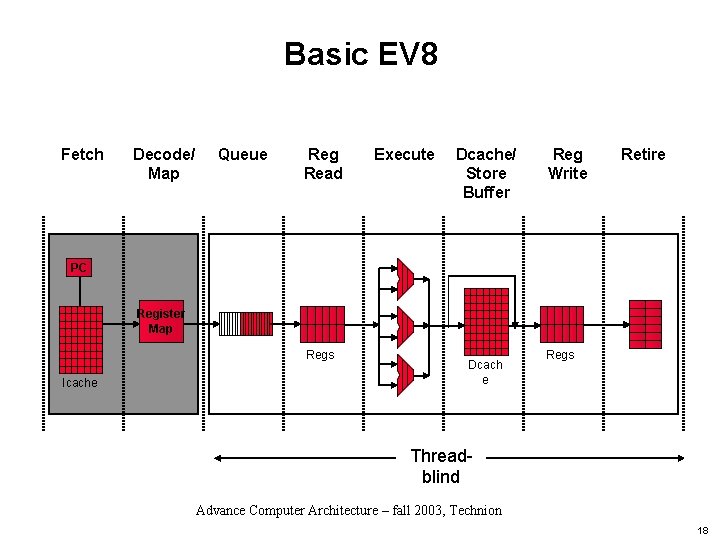

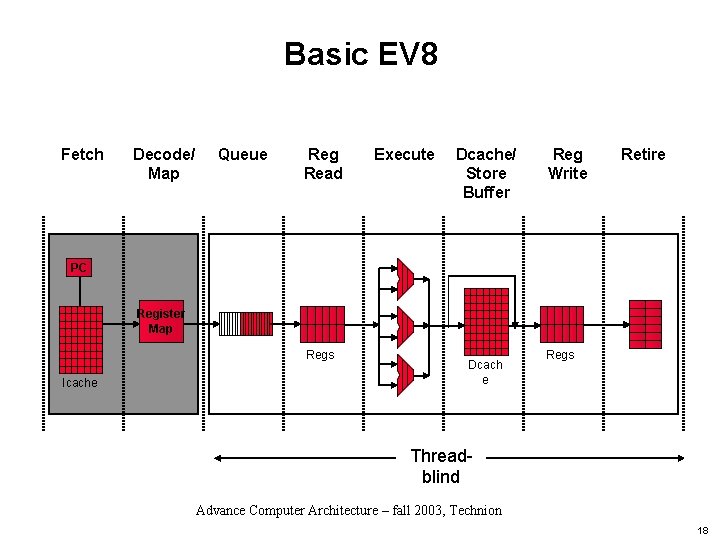

Basic EV8 (pipeline diagram)
- Stages: Fetch, Decode/Map, Queue, Reg Read, Execute, Dcache/Store Buffer, Reg Write, Retire
- Structures shown: PC, Register Map, Regs, Icache, Dcache, Regs
- Label: Thread-blind

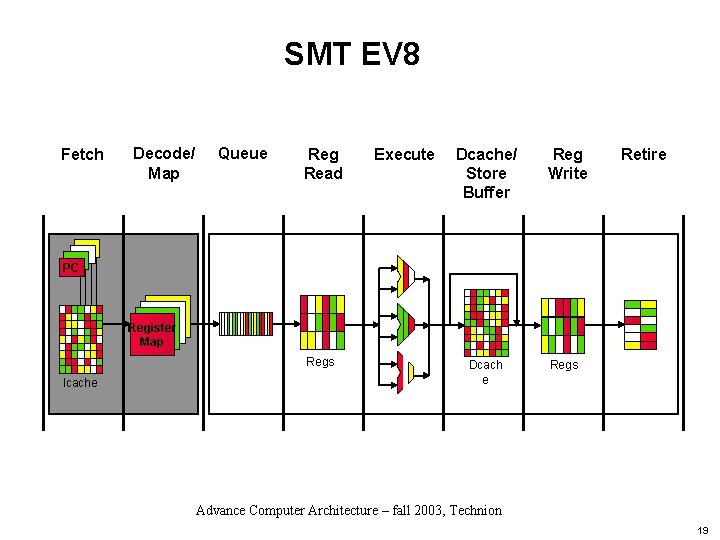

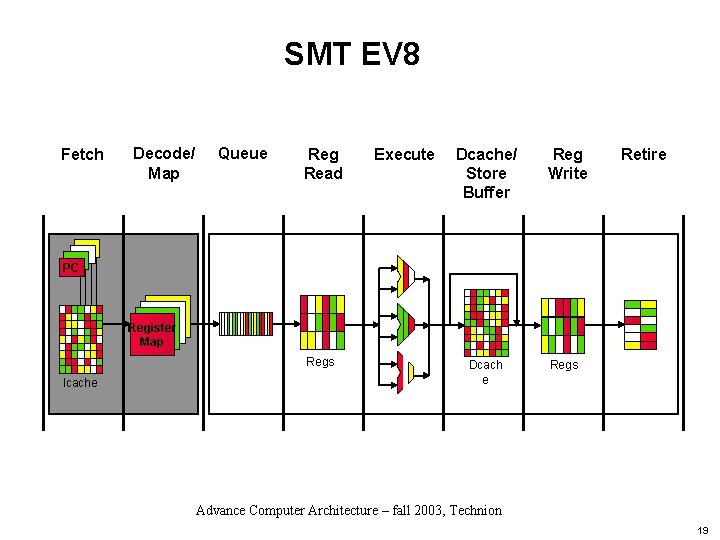

SMT EV8 (pipeline diagram)
- Stages: Fetch, Decode/Map, Queue, Reg Read, Execute, Dcache/Store Buffer, Reg Write, Retire
- Structures shown: PC, Register Map, Regs, Icache, Dcache, Regs

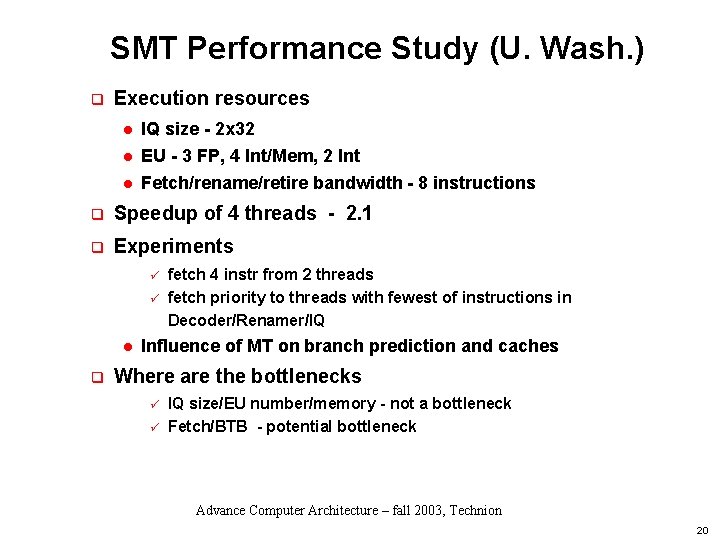

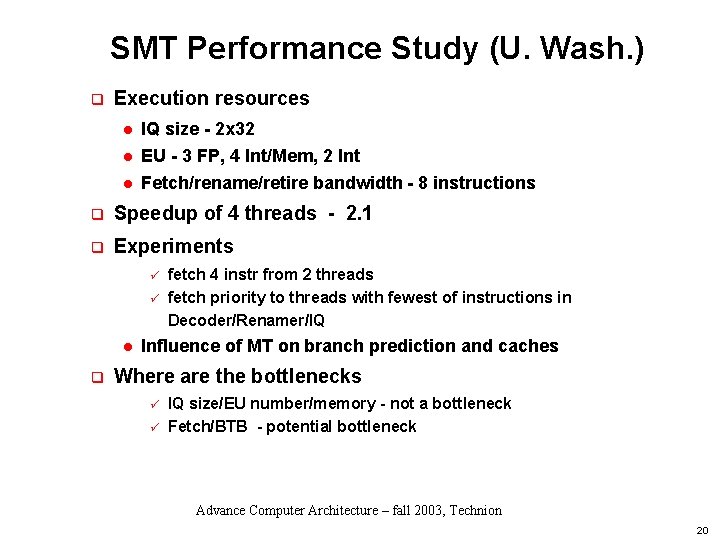

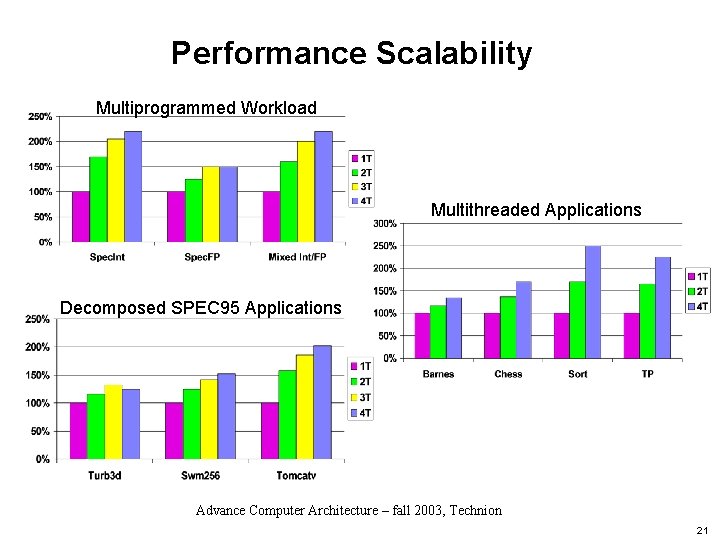

SMT Performance Study (U. Wash.)
- Execution resources
  - IQ size: 2 x 32
  - EU: 3 FP, 4 Int/Mem, 2 Int
  - Fetch/rename/retire bandwidth: 8 instructions
- Speedup with 4 threads: 2.1
- Experiments
  - Fetch 4 instructions from 2 threads
  - Fetch priority to threads with the fewest instructions in Decoder/Renamer/IQ
  - Influence of MT on branch prediction and caches
- Where are the bottlenecks?
  - IQ size/EU number/memory: not a bottleneck
  - Fetch/BTB: potential bottleneck

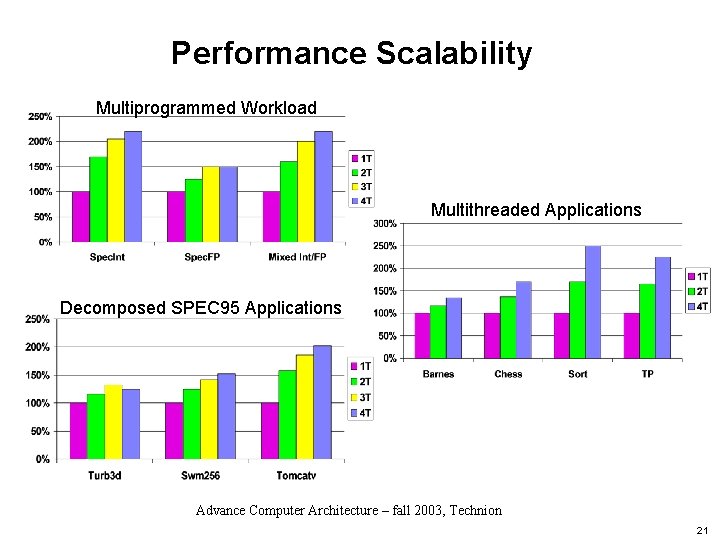

Performance Scalability
- Chart panels: Multiprogrammed Workload; Multithreaded Applications; Decomposed SPEC95 Applications

Fetch Interleaving on SMT
- What if one thread gets “stuck”?
  - Round-robin: eventually it will fill up the machine (not good)
  - ICOUNT: thread with the fewest instructions in the pipe has priority
    - Translation: a thread doesn't get to fetch until it gets “unstuck”
- Variation: what if one thread is spinning?
  - Not really stuck, so it gets to keep fetching
  - Have to stick it artificially (QUIESCE)
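The difference between the two fetch policies can be seen in a toy model. Everything here is an illustrative assumption rather than a description of a real pipeline: one instruction is fetched per cycle into a shared queue of `queue_size` entries, a stuck thread never drains its entries, and an unstuck thread completes one instruction per cycle. The function names are hypothetical.

```python
from typing import Callable, List, Sequence


def pick_round_robin(cycle: int, in_flight: List[int]) -> int:
    """Alternate between threads regardless of what they have in the pipe."""
    return cycle % len(in_flight)


def pick_icount(cycle: int, in_flight: List[int]) -> int:
    """Prefer the thread with the fewest in-flight instructions (ties: lowest id)."""
    return min(range(len(in_flight)), key=lambda t: (in_flight[t], t))


def simulate(policy: Callable[[int, List[int]], int], cycles: int = 100,
             queue_size: int = 8, stuck: Sequence[bool] = (True, False)) -> List[int]:
    """Return the number of instructions each thread completes."""
    n = len(stuck)
    in_flight = [0] * n
    completed = [0] * n
    for cycle in range(cycles):
        t = policy(cycle, in_flight)
        if sum(in_flight) < queue_size:      # fetch only if the shared queue has room
            in_flight[t] += 1
        for u in range(n):                   # unstuck threads drain one entry per cycle
            if not stuck[u] and in_flight[u] > 0:
                in_flight[u] -= 1
                completed[u] += 1
    return completed


print("round-robin:", simulate(pick_round_robin))
print("ICOUNT:     ", simulate(pick_icount))
```

Under round-robin the stuck thread keeps fetching until its instructions occupy the whole queue, after which the healthy thread can no longer fetch. Under ICOUNT the stuck thread's growing in-flight count denies it fetch slots, so the healthy thread keeps running.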

Improving Performance on MT Apps
- Shared-memory apps:
  - Communicate through caches
    - Communication is faster if it happens in the same cache
    - No coherence overhead

Summary
- Multithreaded Software
- Multithreaded Architecture
  - Advantageous cost/throughput
  - Blocked MT
    - Good single-thread performance
    - Good throughput
    - Needs fast thread switch and short pipe
  - Interleaved MT
    - Bad single-thread performance
    - Good throughput
    - Needs many threads
  - Simultaneous MT
    - Good throughput
    - Good single-thread performance
    - Good utilization
    - Needs fewer threads