Multitask Recurrent Model for Speech and Speaker Recognition

![Experiments: Speech and Speaker Recognition on WSJ (Kaldi nnet3), ASR Baseline, SRE Baseline](https://slidetodoc.com/presentation_image_h2/3ffb38c9c17054a883d9162d3d0135a9/image-16.jpg)


Multi-task Recurrent Model for Speech and Speaker Recognition. Zhiyuan Tang, Lantian Li and Dong Wang*, CSLT/RIIT, Tsinghua University (wangdong99@mails.tsinghua.edu.cn). Presented by Lantian Li at APSIPA ASC, Dec 13-16, 2016, Jeju, Korea.

Contents

1. Introduction
2. Multi-task Recurrent Model
3. Experiments
4. Conclusions

Introduction: Speech and Speaker. Speech bubbles: "I do think so!", "Wahaha, wahaha!", "A wonderful place!" Questions: Who are they? What are they talking about? Are they happy? ...

Introduction: Multi-task Learning. Tasks: Speech Recognition, Speaker Recognition, Emotion Recognition, ...
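As a generic illustration of multi-task learning (not the specific model in these slides), the sketch below combines the per-frame cross-entropy losses of two tasks into a single objective. The interpolation weight `alpha` and the function names are hypothetical; the slides do not say how, or whether, the task losses are weighted.

```python
import math

def cross_entropy(probs, target):
    """Negative log-probability of the target class for one frame."""
    return -math.log(probs[target])

def joint_loss(asr_probs, asr_target, spk_probs, spk_target, alpha=0.5):
    """Weighted sum of an ASR loss and a speaker-recognition loss.

    ASSUMPTION: alpha is a hypothetical task weight, not taken from the slides.
    """
    return (alpha * cross_entropy(asr_probs, asr_target)
            + (1 - alpha) * cross_entropy(spk_probs, spk_target))

# One frame: posteriors over three phone states and over two speakers.
loss = joint_loss([0.7, 0.2, 0.1], 0, [0.25, 0.75], 1)
print(round(loss, 4))
```

Both tasks share the same input frames, so one backward pass through this summed loss updates any shared parameters with gradients from both tasks.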

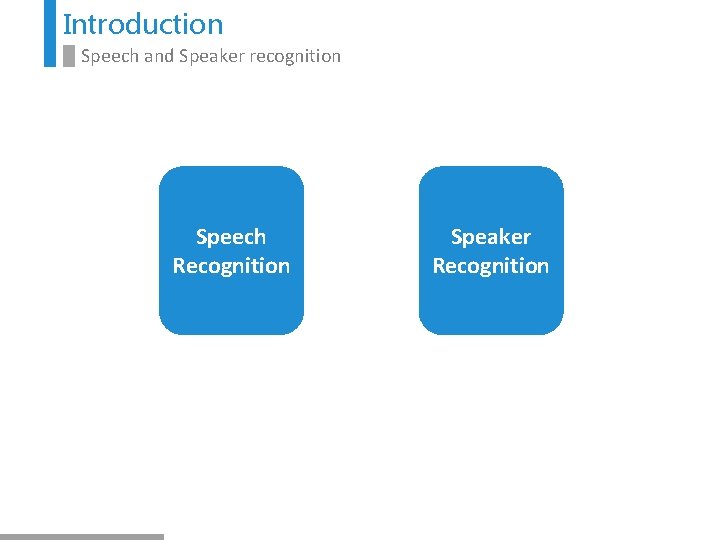

Introduction: Speech and Speaker Recognition. Speech Recognition; Speaker Recognition.

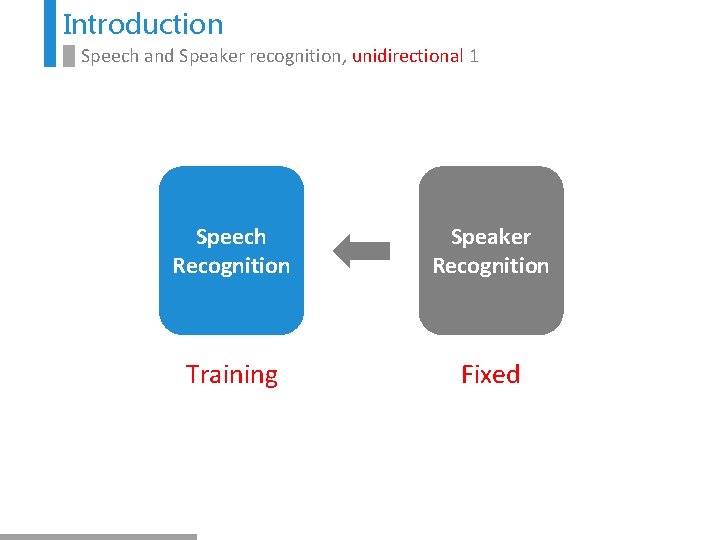

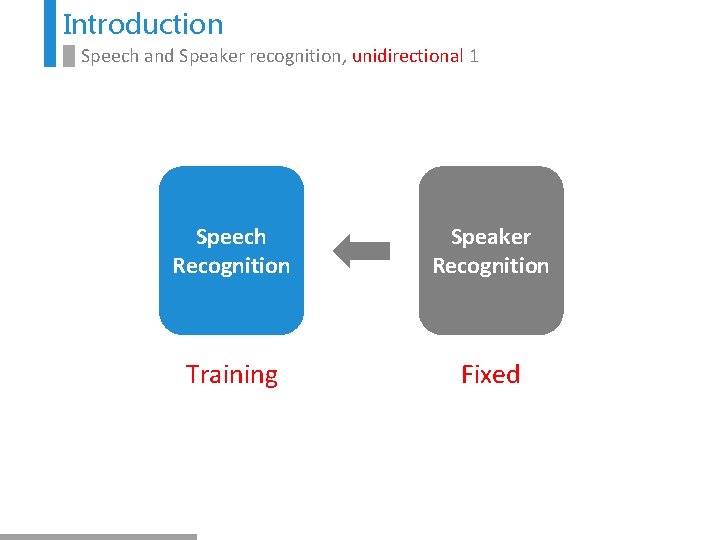

Introduction: Speech and Speaker Recognition, unidirectional (1). Speech Recognition: training; Speaker Recognition: fixed.

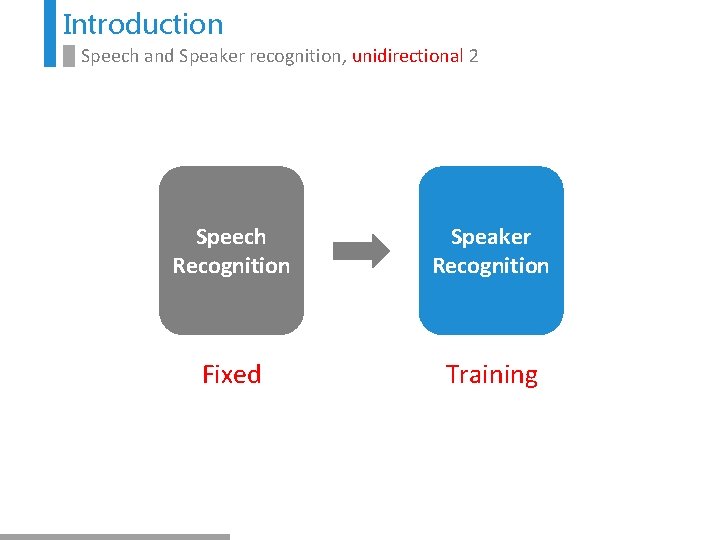

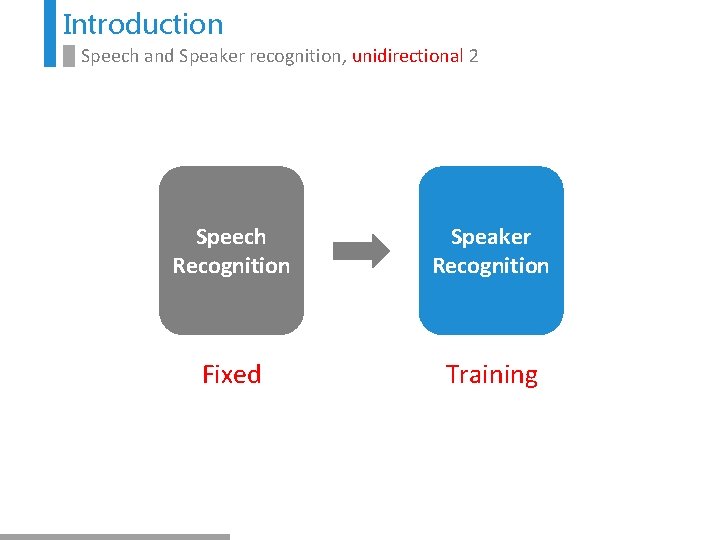

Introduction: Speech and Speaker Recognition, unidirectional (2). Speech Recognition: fixed; Speaker Recognition: training.

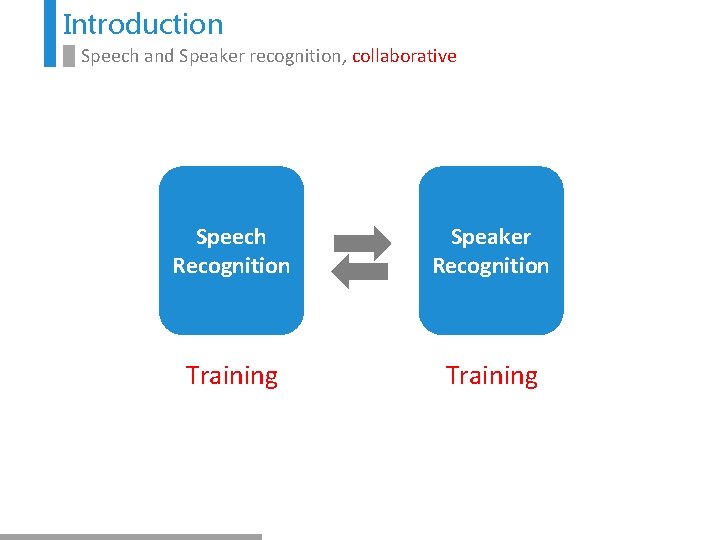

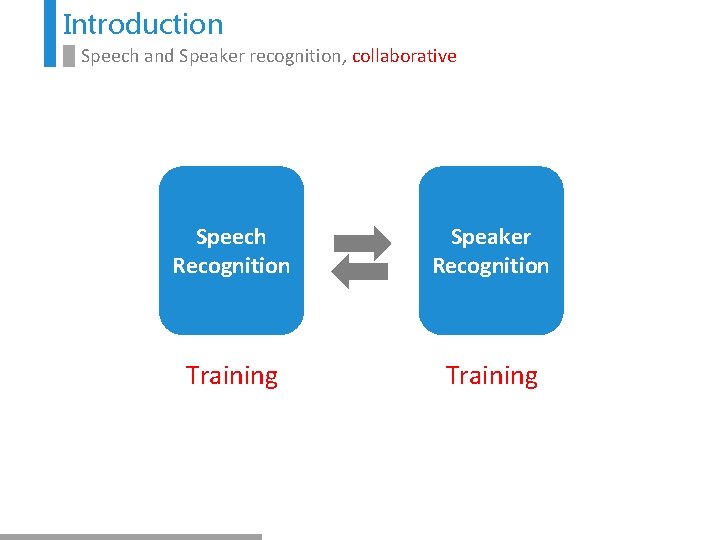

Introduction: Speech and Speaker Recognition, collaborative. Speech Recognition and Speaker Recognition: training together.
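Reading the slide labels positionally, the three schemes differ only in which model's parameters are updated: unidirectional (1) trains the speech model with the speaker model fixed, unidirectional (2) swaps the roles, and the collaborative scheme trains both. The sketch below expresses that with plain SGD; the task keys `"asr"`/`"spk"`, the scheme names and the flat parameter lists are hypothetical simplifications.

```python
# ASSUMPTION: scheme-to-trainable mapping read positionally from the slide labels.
TRAINABLE = {
    "unidirectional_1": {"asr"},        # speech model trains, speaker model fixed
    "unidirectional_2": {"spk"},        # speaker model trains, speech model fixed
    "collaborative": {"asr", "spk"},    # both models train together
}

def sgd_step(params, grads, scheme, lr=0.1):
    """Return updated parameters; tasks that are fixed under `scheme` keep their values."""
    trainable = TRAINABLE[scheme]
    return {
        task: ([w - lr * g for w, g in zip(weights, grads[task])]
               if task in trainable else list(weights))
        for task, weights in params.items()
    }

params = {"asr": [1.0, 2.0], "spk": [3.0, 4.0]}
grads = {"asr": [0.5, 0.5], "spk": [1.0, 1.0]}
print(sgd_step(params, grads, "unidirectional_1"))
```

In the unidirectional schemes the fixed model still runs forward and supplies information to the other one; only its parameter update is skipped.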


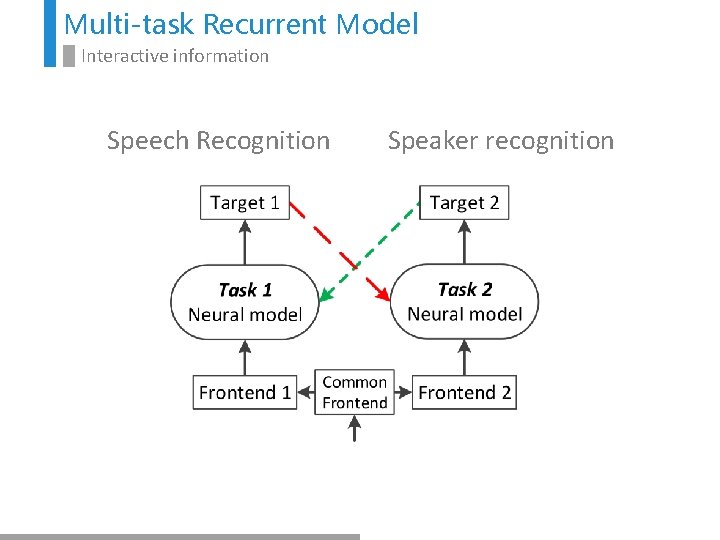

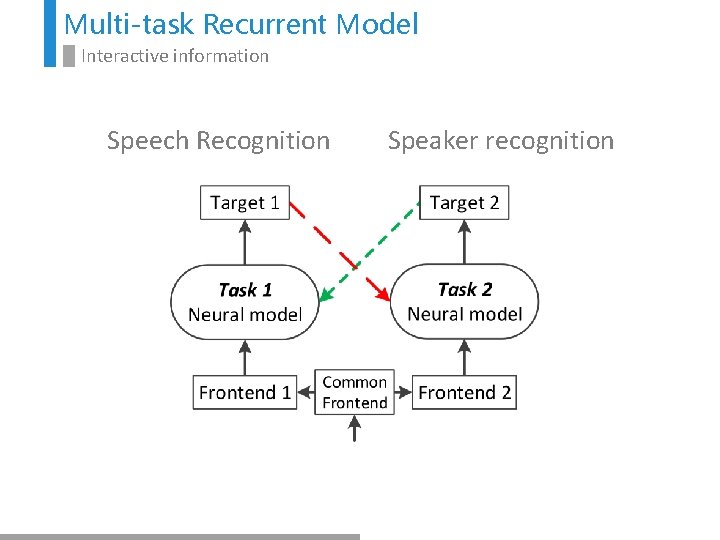

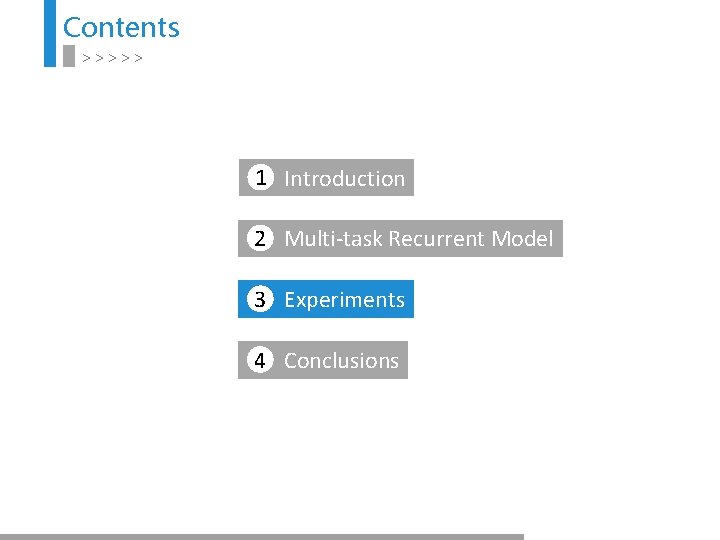

Multi-task Recurrent Model: Interactive information between Speech Recognition and Speaker Recognition.

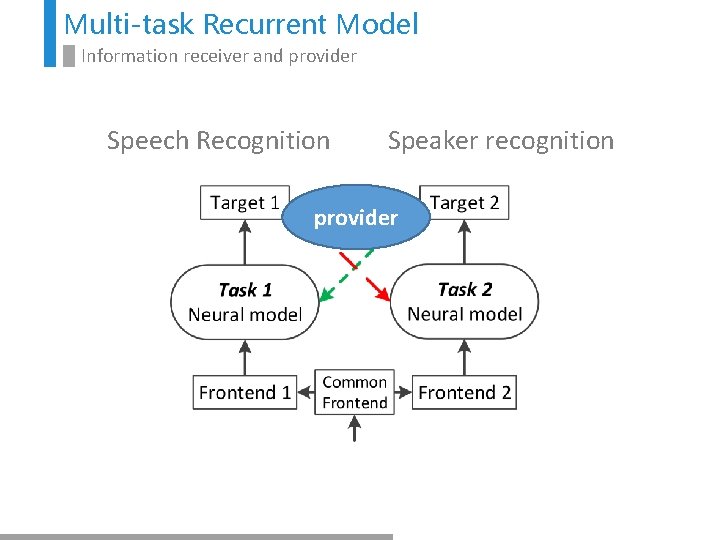

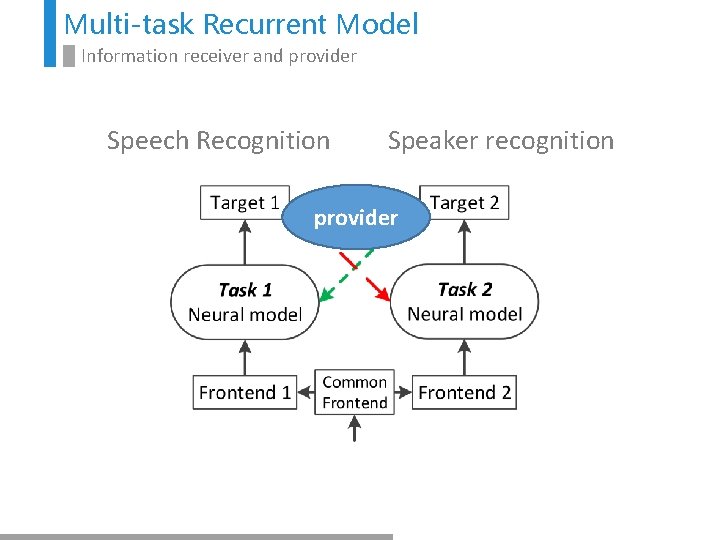

Multi-task Recurrent Model: Information receiver and provider. Diagram labels: Speech Recognition, Speaker Recognition, provider.

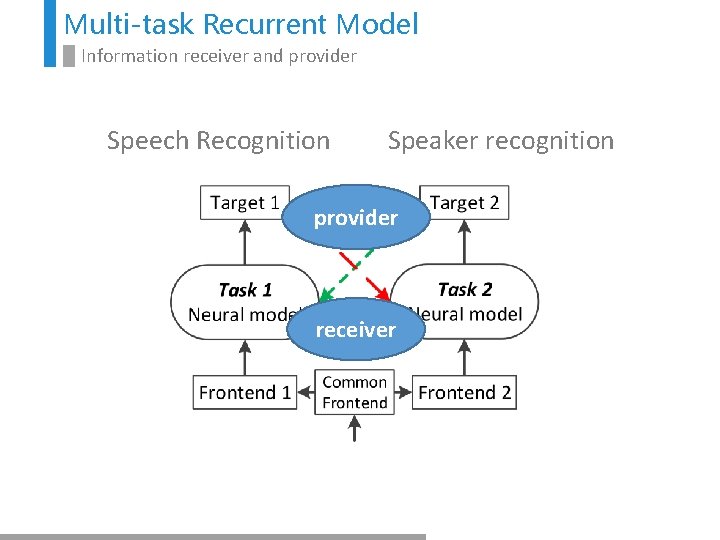

Multi-task Recurrent Model: Information receiver and provider. Diagram labels: Speech Recognition, Speaker Recognition, provider, receiver.

Multi-task Recurrent Model: Neural model, Long Short-Term Memory (LSTM). Diagram components: forget gate, output gate, input gate, recurrent, output, g function, h function, projection.
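The component names on this slide (input, forget and output gates, g and h functions, recurrent and projection outputs) match the LSTM with recurrent and non-recurrent projection layers (LSTMP) of Sak et al. (2014). Assuming that formulation, which the slide itself does not spell out, one time step with input $x_t$ reads:

$$
\begin{aligned}
i_t &= \sigma(W_{ix} x_t + W_{ir} r_{t-1} + W_{ic} c_{t-1} + b_i) \\
f_t &= \sigma(W_{fx} x_t + W_{fr} r_{t-1} + W_{fc} c_{t-1} + b_f) \\
c_t &= f_t \odot c_{t-1} + i_t \odot g(W_{cx} x_t + W_{cr} r_{t-1} + b_c) \\
o_t &= \sigma(W_{ox} x_t + W_{or} r_{t-1} + W_{oc} c_t + b_o) \\
m_t &= o_t \odot h(c_t) \\
r_t &= W_{rm} m_t, \qquad p_t = W_{pm} m_t \\
y_t &= W_{yr} r_t + W_{yp} p_t + b_y
\end{aligned}
$$

Here $\sigma$ is the logistic sigmoid, $\odot$ the elementwise product, $g$ and $h$ the cell input and output activations (typically $\tanh$), $r_t$ the recurrent projection fed back at the next step, $p_t$ the non-recurrent projection, and the peephole matrices $W_{ic}, W_{fc}, W_{oc}$ are diagonal.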

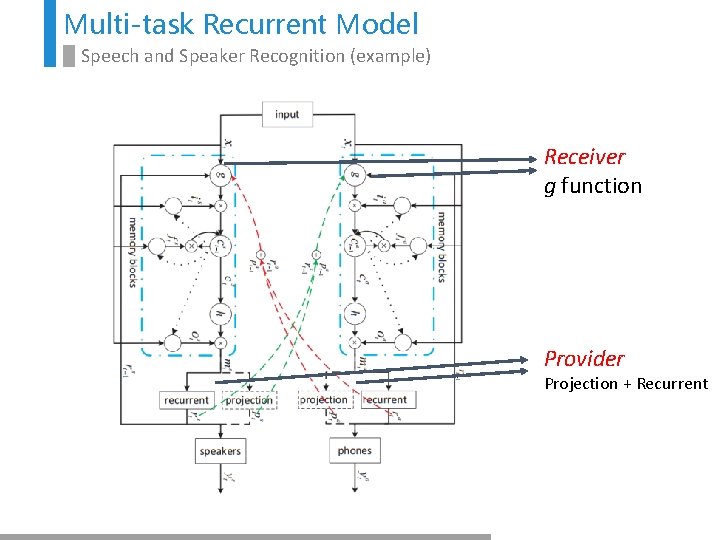

Multi-task Recurrent Model: Speech and Speaker Recognition (example). Receiver: g function; Provider: projection + recurrent.
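In this example the provider's projection and recurrent outputs feed the receiver's g function (the cell-input nonlinearity). A minimal pure-Python sketch of that wiring follows, under assumptions the slide does not state: the provider's outputs come from the previous frame, they are concatenated and mapped by an extra matrix (the hypothetical `Wce`) into the receiver's cell-input term, peepholes are dropped, g and h are both tanh, and information flows in both directions as in the collaborative scheme. Weights are constant placeholders, not trained values.

```python
import math

def matvec(W, v):
    """Dense matrix-vector product; W is a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def add(*vs):
    """Elementwise sum of equal-length vectors."""
    return [sum(xs) for xs in zip(*vs)]

def sigmoid(v):
    return [1.0 / (1.0 + math.exp(-x)) for x in v]

def tanh(v):
    return [math.tanh(x) for x in v]

def lstmp_step(P, x, r_prev, c_prev, extra=None):
    """One LSTMP step without peepholes; returns (r_t, p_t, c_t).

    ASSUMPTION: `extra` (the provider's [r; p]) enters only the g function,
    through the hypothetical matrix P["Wce"].
    """
    i = sigmoid(add(matvec(P["Wix"], x), matvec(P["Wir"], r_prev), P["bi"]))
    f = sigmoid(add(matvec(P["Wfx"], x), matvec(P["Wfr"], r_prev), P["bf"]))
    g_in = add(matvec(P["Wcx"], x), matvec(P["Wcr"], r_prev), P["bc"])
    if extra is not None:
        g_in = add(g_in, matvec(P["Wce"], extra))  # receiver side of the exchange
    c = add([a * b for a, b in zip(f, c_prev)], [a * b for a, b in zip(i, tanh(g_in))])
    o = sigmoid(add(matvec(P["Wox"], x), matvec(P["Wor"], r_prev), P["bo"]))
    m = [a * b for a, b in zip(o, tanh(c))]  # h = tanh
    return matvec(P["Wrm"], m), matvec(P["Wpm"], m), c

def multitask_step(P_asr, P_spk, x, s_asr, s_spk):
    """Advance both tasks by one frame; each state is (r, p, c).

    Each task acts as provider for the other: its previous r and p are
    concatenated and passed to the other task's g function.
    """
    new_asr = lstmp_step(P_asr, x, s_asr[0], s_asr[2], s_spk[0] + s_spk[1])
    new_spk = lstmp_step(P_spk, x, s_spk[0], s_spk[2], s_asr[0] + s_asr[1])
    return new_asr, new_spk

def init_params(n_in, n_cell, n_rec, n_proj, n_extra, value=0.1):
    """Hypothetical constant initialisation, only to make the sketch runnable."""
    M = lambda rows, cols: [[value] * cols for _ in range(rows)]
    P = {k: M(n_cell, n_in) for k in ("Wix", "Wfx", "Wcx", "Wox")}
    P.update({k: M(n_cell, n_rec) for k in ("Wir", "Wfr", "Wcr", "Wor")})
    P.update({k: [0.0] * n_cell for k in ("bi", "bf", "bc", "bo")})
    P["Wce"] = M(n_cell, n_extra)
    P["Wrm"], P["Wpm"] = M(n_rec, n_cell), M(n_proj, n_cell)
    return P

n_in, n_cell, n_rec, n_proj = 3, 4, 2, 2
P_asr = init_params(n_in, n_cell, n_rec, n_proj, n_rec + n_proj)
P_spk = init_params(n_in, n_cell, n_rec, n_proj, n_rec + n_proj, value=-0.1)
s_asr = ([0.0] * n_rec, [0.0] * n_proj, [0.0] * n_cell)
s_spk = ([0.0] * n_rec, [0.0] * n_proj, [0.0] * n_cell)
for x in ([1.0, 0.5, -0.5], [0.2, 0.1, 0.0]):
    s_asr, s_spk = multitask_step(P_asr, P_spk, x, s_asr, s_spk)
```

Other placements (for example feeding the provider's outputs into a gate instead of g) would change only the line that adds `extra`.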



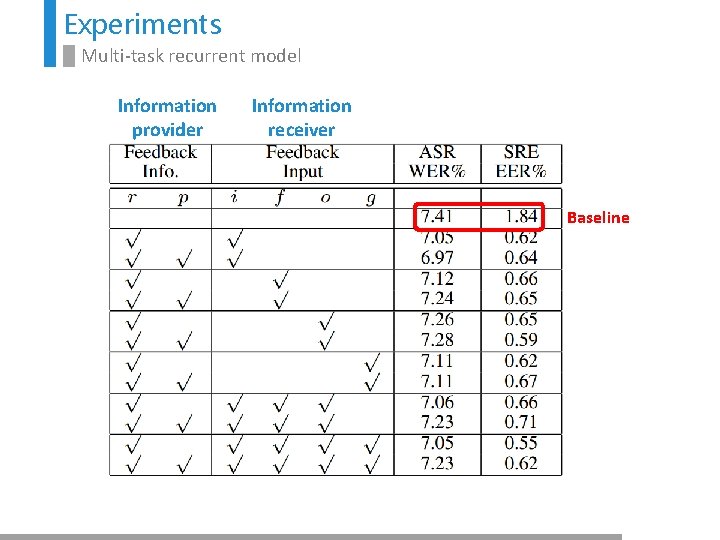

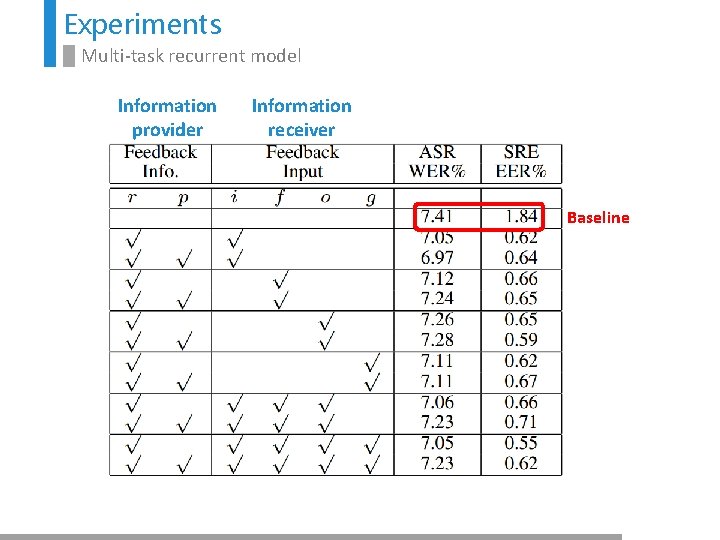

Experiments: Speech and Speaker Recognition on WSJ [Kaldi nnet3]. ASR baseline; SRE baseline.
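The slide does not say which metrics the two baselines report. Assuming the conventional ones, word error rate (WER) for ASR and equal error rate (EER) for speaker verification, the sketch below shows how each is computed. The function names and the threshold-sweep approximation of EER are illustrative choices, not the evaluation scripts used in these experiments.

```python
def wer(ref, hyp):
    """Word error rate: (substitutions + deletions + insertions) / reference length."""
    r, h = ref.split(), hyp.split()
    d = list(range(len(h) + 1))  # edit distances for the empty reference prefix
    for i in range(1, len(r) + 1):
        prev_diag, d[0] = d[0], i
        for j in range(1, len(h) + 1):
            cur = min(d[j] + 1,                                # deletion
                      d[j - 1] + 1,                            # insertion
                      prev_diag + (r[i - 1] != h[j - 1]))      # substitution or match
            prev_diag, d[j] = d[j], cur
    return d[len(h)] / len(r)

def eer(target_scores, nontarget_scores):
    """Approximate EER by trying every observed score as the accept threshold.

    Returns the mean of the false-acceptance and false-rejection rates
    at the threshold where the two are closest.
    """
    best = None
    for t in sorted(set(target_scores) | set(nontarget_scores)):
        frr = sum(s < t for s in target_scores) / len(target_scores)
        far = sum(s >= t for s in nontarget_scores) / len(nontarget_scores)
        if best is None or abs(far - frr) < best[0]:
            best = (abs(far - frr), (far + frr) / 2)
    return best[1]

print(wer("the cat sat on the mat", "the cat sit on mat"))
print(eer([0.6, 0.9, 0.3], [0.4, 0.1, 0.7]))
```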

Experiments: Multi-task recurrent model. Information provider; information receiver.

Experiments: Multi-task recurrent model. Information provider; information receiver; baseline.


Conclusions

- Preliminary results show that ASR and SRE can be trained at the same time and that the two tasks can improve each other.
- Future work includes:
  - partially labeled databases;
  - Collaborative Joint Training with Multi-task Recurrent Model for Speech and Speaker Recognition [TASLP];
  - more than two tasks.

Thanks a lot.