Multi-Site Clustering with Windows Server 2008 R2

- Slides: 58

Multi-Site Clustering with Windows Server 2008 R2. Elden Christensen, Senior Program Manager Lead, Microsoft. Session Code: SVR319

Session Objectives and Takeaways
- Session objectives:
  - Understand the need for and benefit of multi-site clusters
  - Know what to consider as you plan, design, and deploy your first multi-site cluster
- Takeaway: Windows Server Failover Clustering is a great solution not only for high availability, but also for disaster recovery

Multi-Site Clustering agenda: Introduction, Networking, Storage, Quorum, Workloads

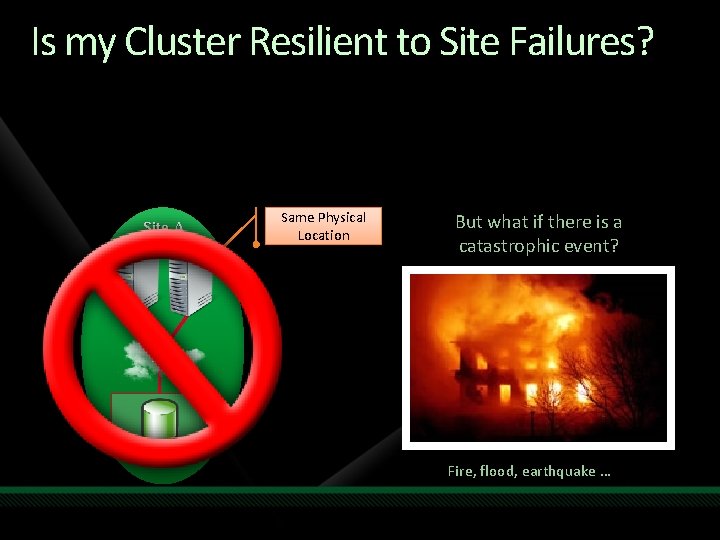

Is My Cluster Resilient to Site Failures?
- All nodes and the SAN are in the same physical location (Site A)
- But what if there is a catastrophic event such as a fire, flood, or earthquake?

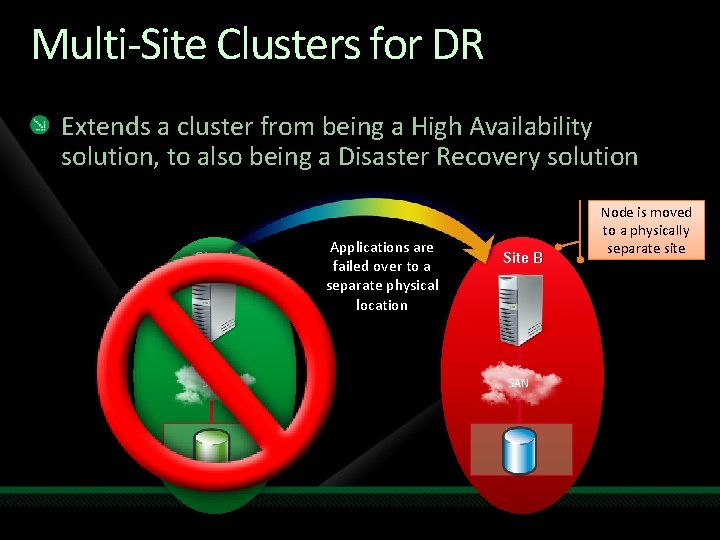

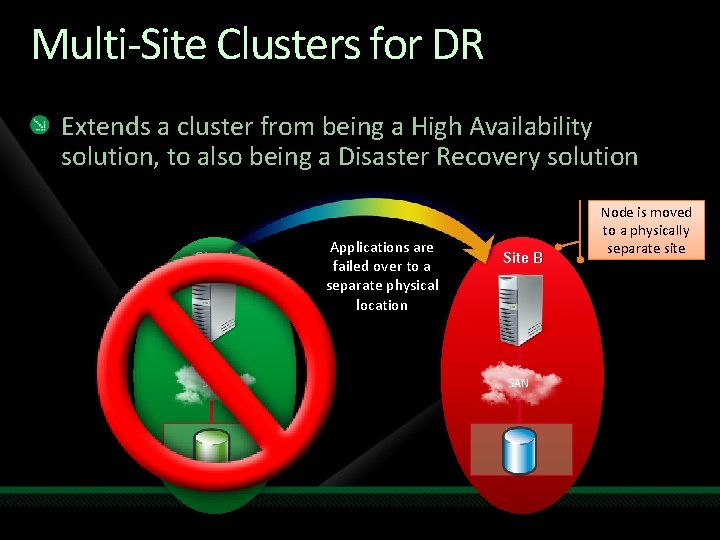

Multi-Site Clusters for DR
- Extends a cluster from being a high availability solution to also being a disaster recovery solution
- A node is moved to a physically separate site (Site B); each site has its own SAN
- Applications are failed over to the separate physical location

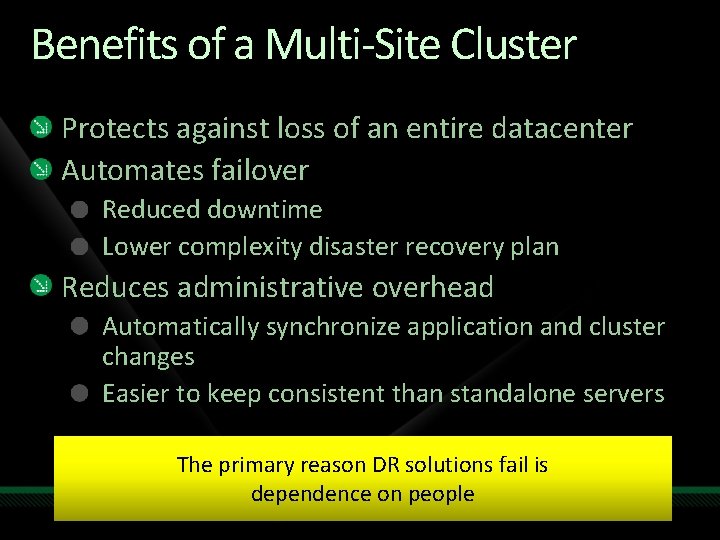

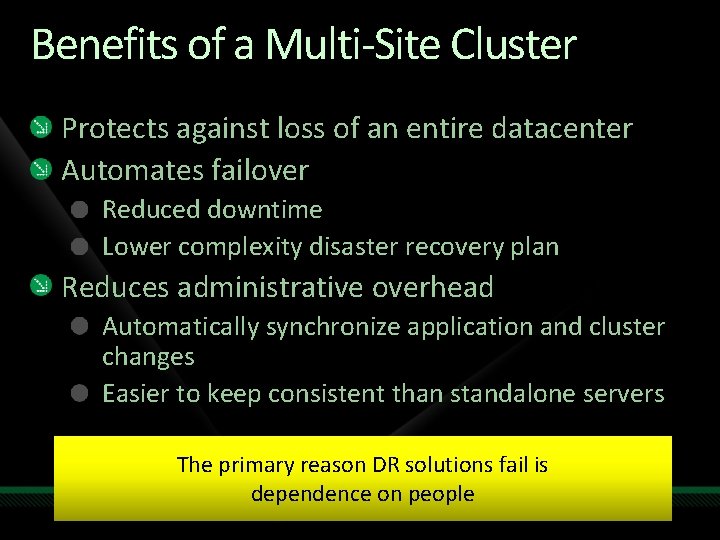

Benefits of a Multi-Site Cluster
- Protects against loss of an entire datacenter
- Automates failover
  - Reduced downtime
  - Lower-complexity disaster recovery plan
- Reduces administrative overhead
  - Automatically synchronizes application and cluster changes
  - Easier to keep consistent than standalone servers
- The primary reason DR solutions fail is dependence on people

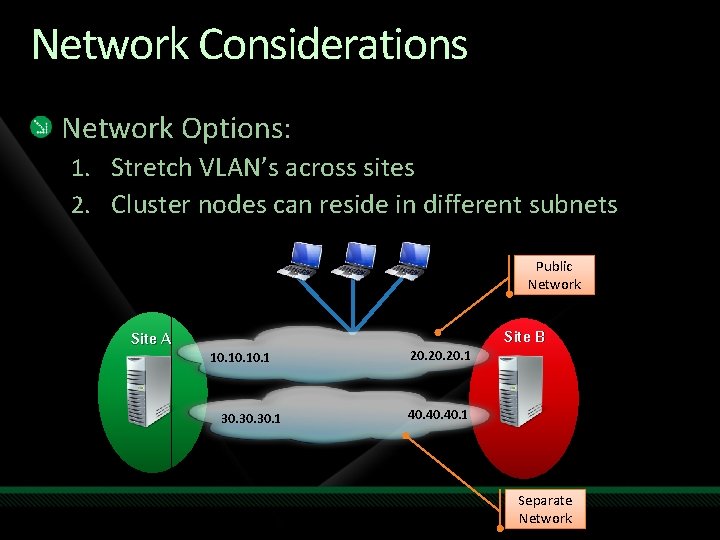

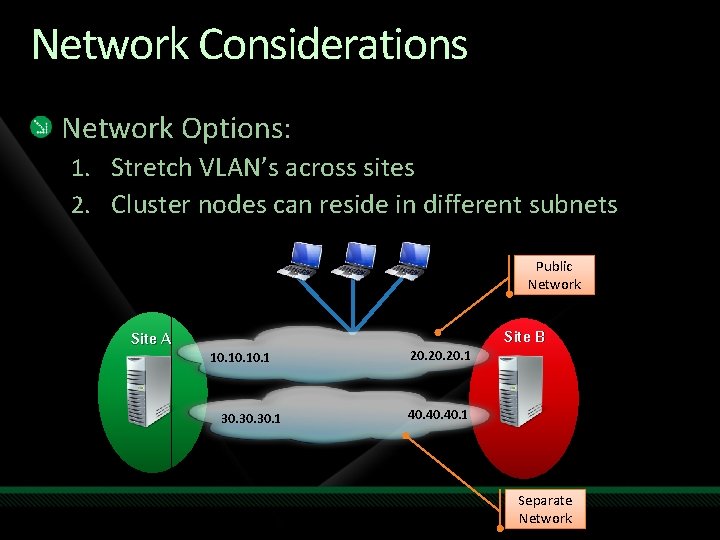

Network Considerations
- Network options:
  1. Stretch VLANs across sites
  2. Cluster nodes can reside in different subnets
- [Diagram: Site A and Site B nodes connected by a public network and a separate network, with a different subnet for each network at each site]

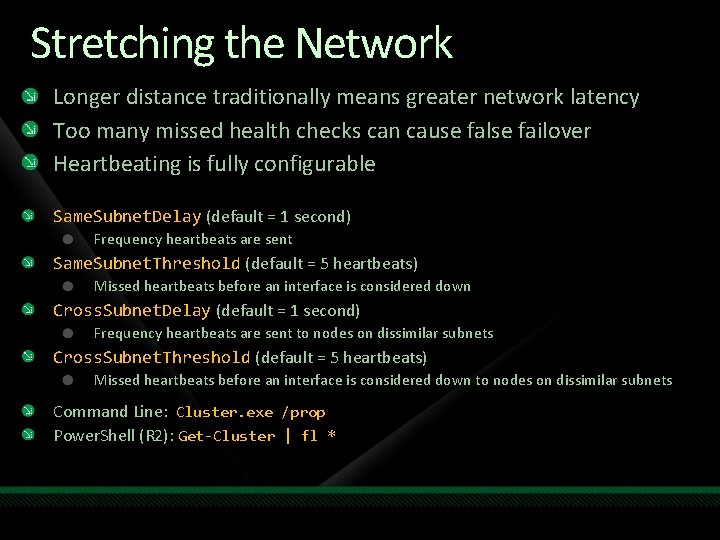

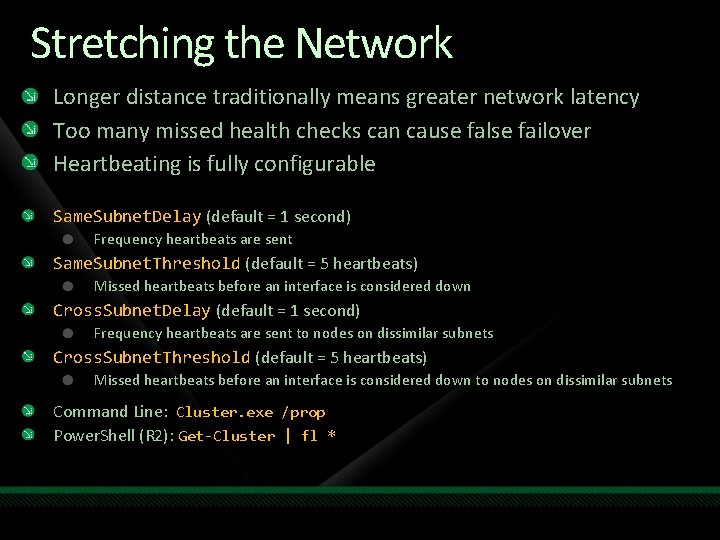

Stretching the Network
- Longer distance traditionally means greater network latency
- Too many missed health checks can cause false failover
- Heartbeating is fully configurable:
  - SameSubnetDelay (default = 1 second): how often heartbeats are sent
  - SameSubnetThreshold (default = 5 heartbeats): missed heartbeats before an interface is considered down
  - CrossSubnetDelay (default = 1 second): how often heartbeats are sent to nodes on dissimilar subnets
  - CrossSubnetThreshold (default = 5 heartbeats): missed heartbeats before an interface is considered down to nodes on dissimilar subnets
- Command line: `Cluster.exe /prop`
- PowerShell (R2): `Get-Cluster | fl *`
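As a rough worked example of how the delay and threshold settings interact: if a heartbeat is sent every `delay` seconds and an interface is declared down after `threshold` consecutive misses, the time to detect a failure is approximately their product. The helper name below is hypothetical, the relaxed values are illustrative only, and the model ignores network jitter and the cluster's own processing time.

```python
def detection_time_seconds(delay_seconds: float, threshold: int) -> float:
    """Approximate time before an interface is considered down.

    Simplified model (an assumption, not the cluster's exact algorithm):
    detection time = heartbeat delay x number of missed heartbeats tolerated.
    """
    if delay_seconds <= 0 or threshold < 1:
        raise ValueError("delay must be positive and threshold at least 1")
    return delay_seconds * threshold


# Defaults quoted on the slide: 1 second delay, 5 missed heartbeats.
default_detection = detection_time_seconds(1.0, 5)

# Hypothetical relaxed cross-subnet tuning for a higher-latency WAN link.
relaxed_detection = detection_time_seconds(2.0, 10)

print(default_detection, relaxed_detection)
```

Raising either value makes false failovers over a slow link less likely, at the cost of slower detection of a real failure.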

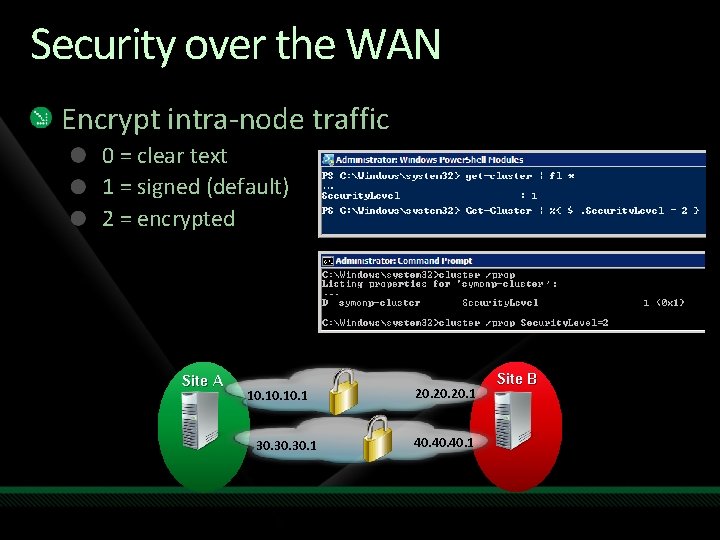

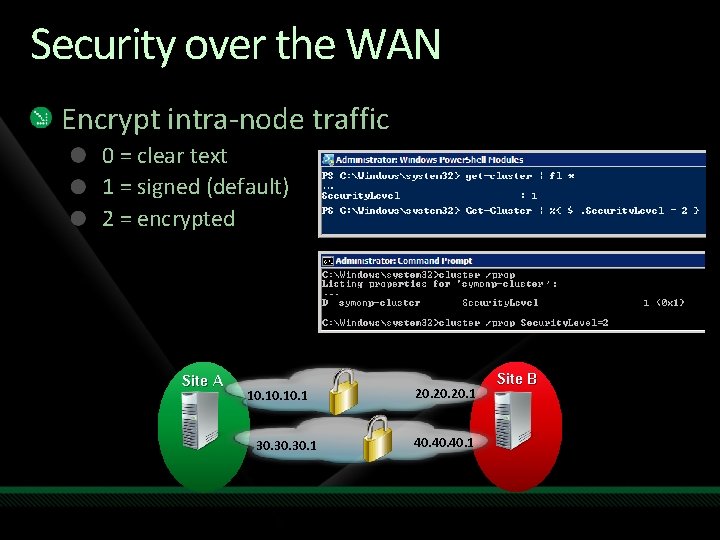

Security over the WAN
- Encrypt inter-node traffic (cluster SecurityLevel property):
  - 0 = clear text
  - 1 = signed (default)
  - 2 = encrypted
- [Diagram: cluster traffic crossing the WAN between Site A and Site B]

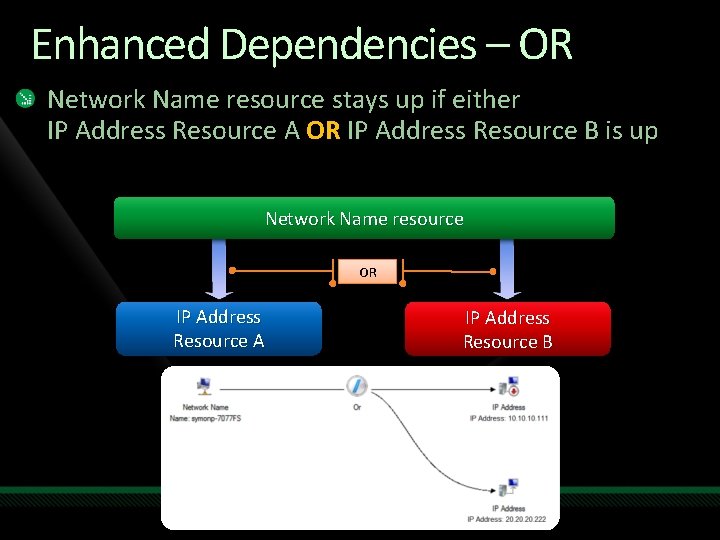

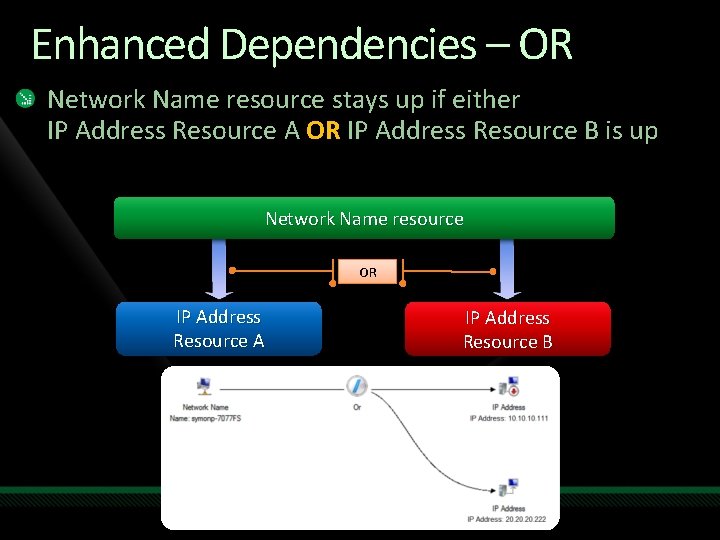

Enhanced Dependencies – OR
- The Network Name resource stays up if either IP Address Resource A OR IP Address Resource B is up
- [Diagram: Network Name resource depending on IP Address Resource A OR IP Address Resource B]
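The OR dependency can be pictured as a simple boolean rule. The sketch below is only a model of that rule, with a hypothetical function name; it contrasts OR with an AND dependency, where every IP address would have to be online.

```python
def network_name_can_stay_up(ip_a_online: bool, ip_b_online: bool,
                             use_or: bool = True) -> bool:
    """Model of a Network Name that depends on two IP Address resources.

    use_or=True  -> OR dependency: one online IP address is enough.
    use_or=False -> AND dependency: both IP addresses must be online.
    """
    if use_or:
        return ip_a_online or ip_b_online
    return ip_a_online and ip_b_online


# After a cross-subnet failover only the Site B address is online.
print(network_name_can_stay_up(False, True))                # OR dependency
print(network_name_can_stay_up(False, True, use_or=False))  # AND dependency
```

With nodes in different subnets, only the IP address that matches the current node's subnet can come online, which is why the OR form matters here.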

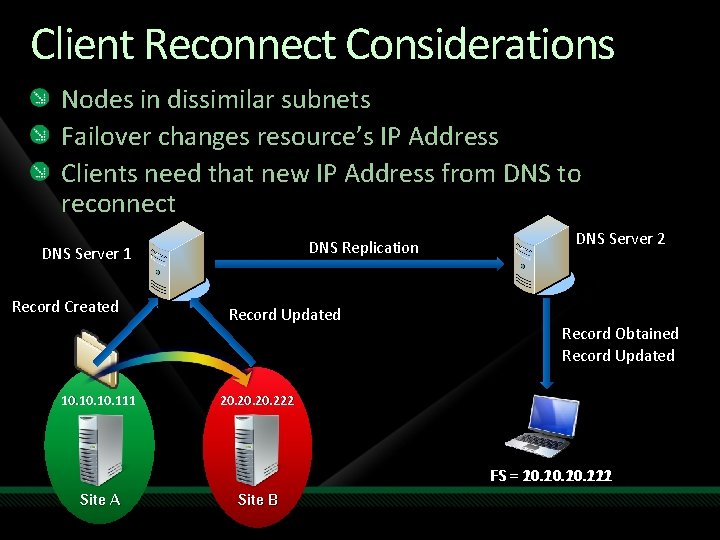

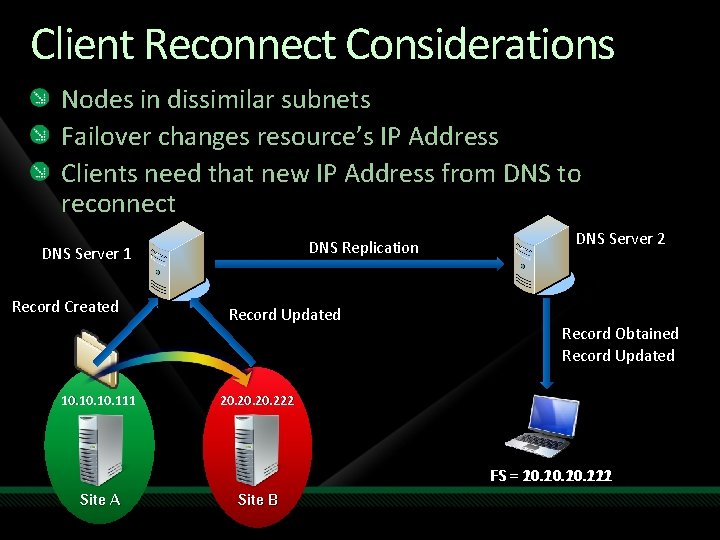

Client Reconnect Considerations
- Nodes are in dissimilar subnets
- Failover changes the resource's IP address
- Clients need that new IP address from DNS to reconnect
- [Diagram: the DNS record for the file server (FS) is created on DNS Server 1 with the Site A address; after failover it is updated to the Site B address, and DNS Server 2 obtains the updated record through DNS replication]

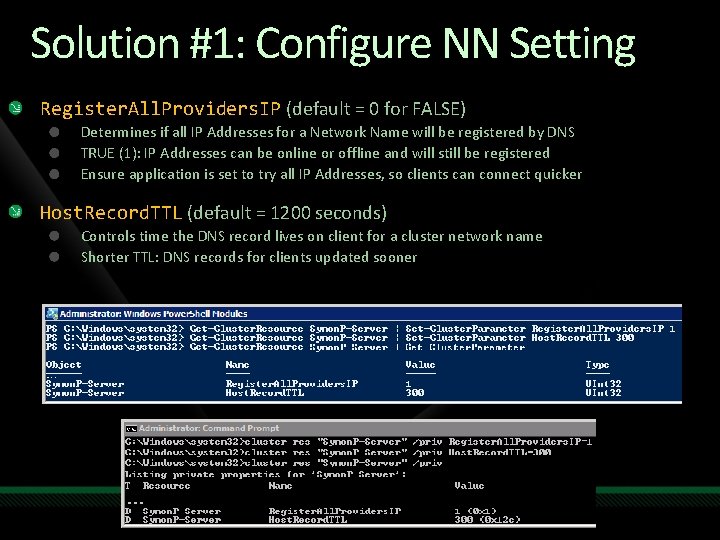

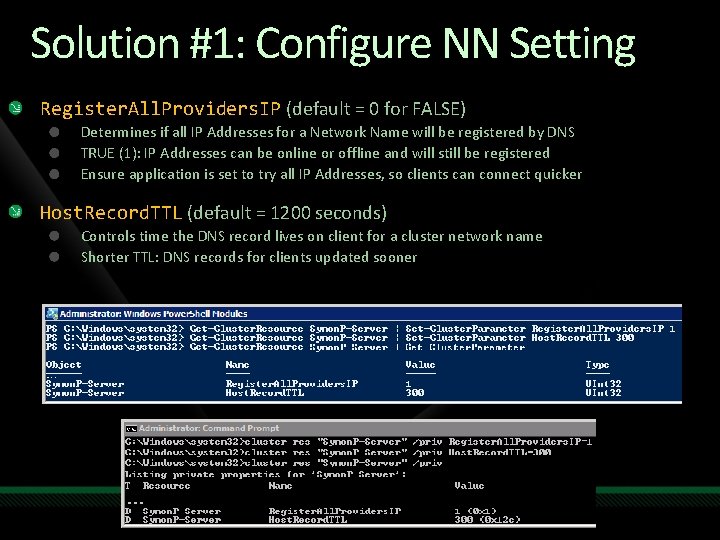

Solution #1: Configure Network Name (NN) Settings
- RegisterAllProvidersIP (default = 0 for FALSE)
  - Determines whether all IP addresses for a Network Name are registered in DNS
  - TRUE (1): IP addresses can be online or offline and are still registered
  - Ensure the application is set to try all IP addresses, so clients can connect more quickly
- HostRecordTTL (default = 1200 seconds)
  - Controls how long the DNS record for a cluster network name lives on the client
  - Shorter TTL: DNS records on clients are updated sooner
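When all IP addresses are registered, the client application has to try each one. The following is a minimal sketch of that client-side loop, assuming the addresses have already been resolved from DNS; `connect_any` is a hypothetical helper, and the addresses and port in the usage note are placeholders rather than values from the session.

```python
import socket


def connect_any(addresses, port, timeout=2.0, connect=socket.create_connection):
    """Try each address in turn; return (address, connection) for the first success.

    `connect` defaults to socket.create_connection and can be swapped
    for a stand-in when testing without a network.
    """
    last_error = None
    for address in addresses:
        try:
            return address, connect((address, port), timeout)
        except OSError as exc:
            # This address is offline or unreachable; move on to the next one.
            last_error = exc
    raise ConnectionError(f"no address reachable: {last_error}")
```

A caller might use `connect_any(["10.10.10.111", "20.20.20.222"], 445)`. A short timeout keeps the wait small when the first address belongs to the site that is currently offline.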

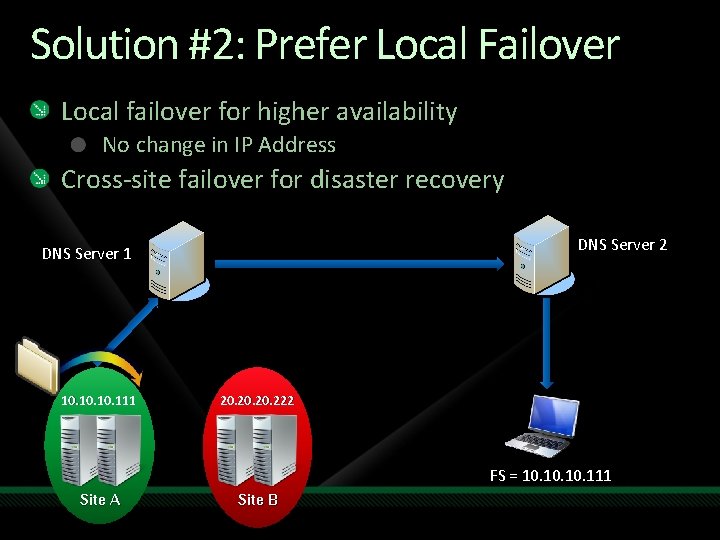

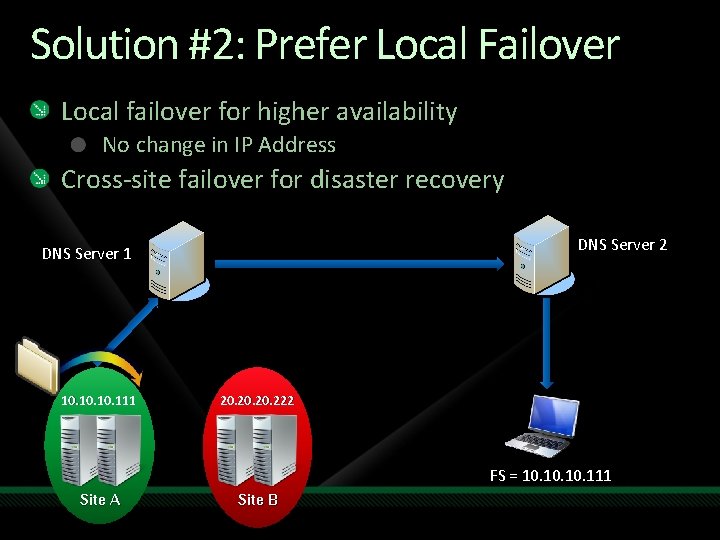

Solution #2: Prefer Local Failover
- Local failover for higher availability
  - No change in IP address
- Cross-site failover for disaster recovery
- [Diagram: FS fails over to another node within Site A, so the address registered in DNS does not change]

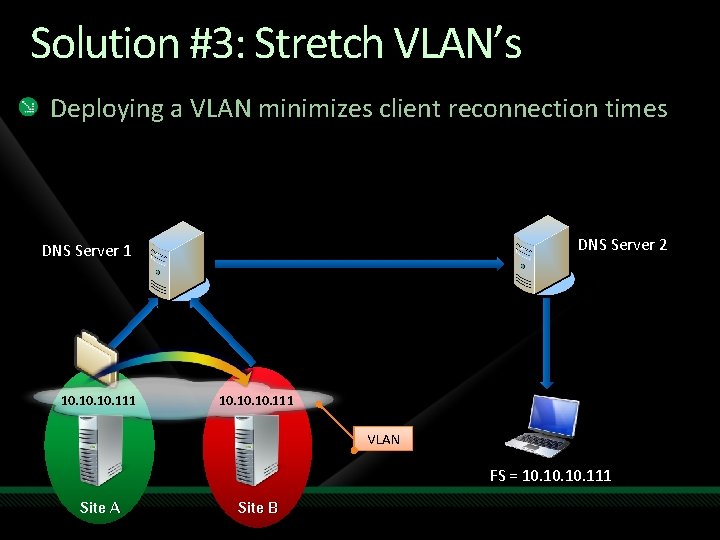

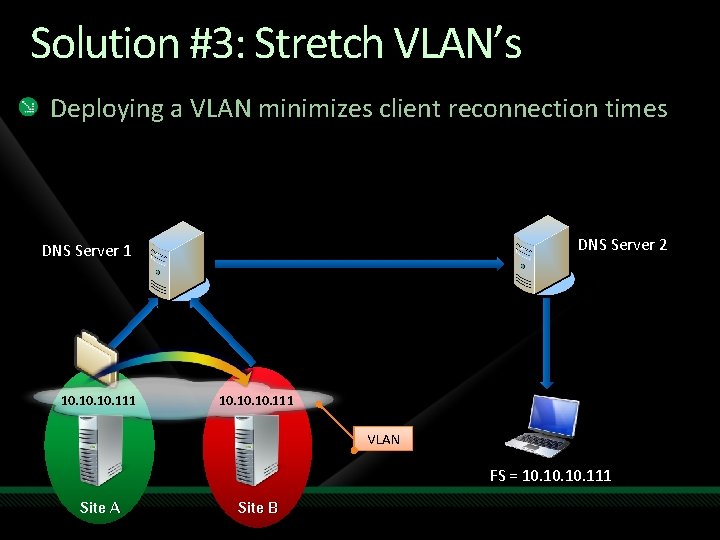

Solution #3: Stretch VLANs
- Deploying a VLAN minimizes client reconnection times
- [Diagram: a VLAN spans Site A and Site B, so FS keeps the address 10.10.10.111 after failover]

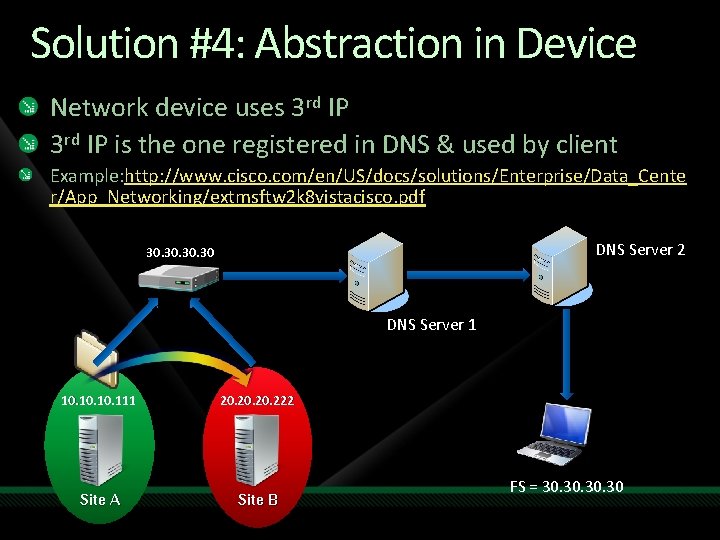

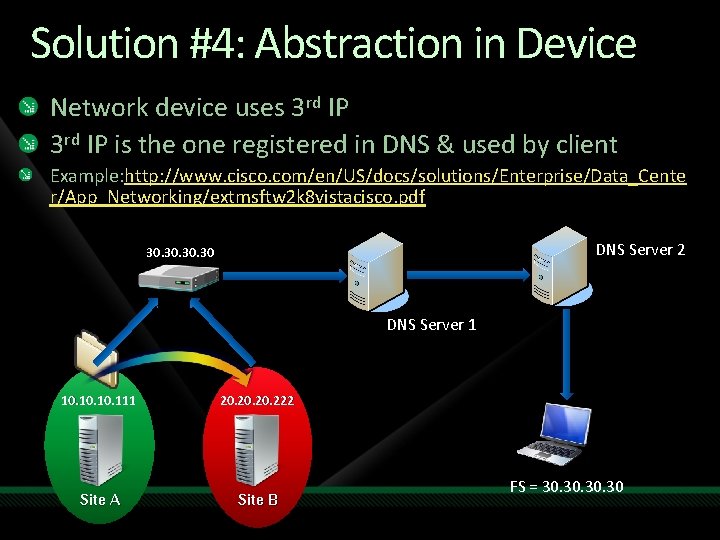

Solution #4: Abstraction in Device
- The network device uses a 3rd IP address
- The 3rd IP address is the one registered in DNS and used by clients
- Example: http://www.cisco.com/en/US/docs/solutions/Enterprise/Data_Center/App_Networking/extmsftw2k8vistacisco.pdf
- [Diagram: DNS registers FS with the network device's address, which fronts the Site A and Site B addresses]

This is generic guidance… If you have other creative ideas, that’s ok!

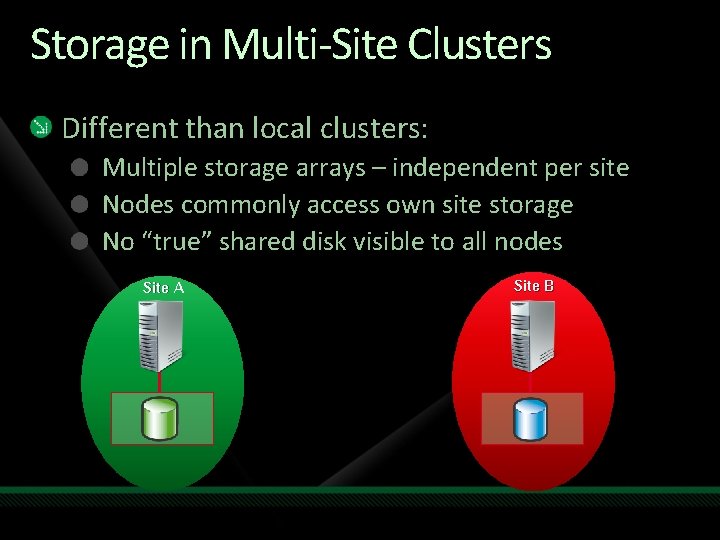

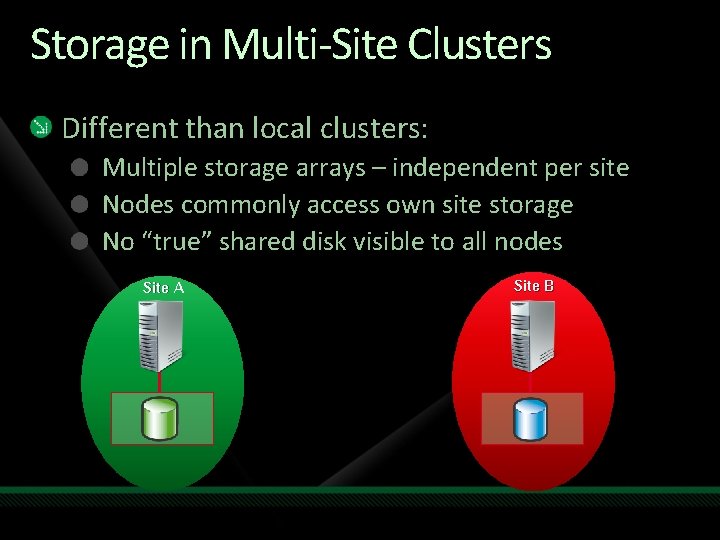

Storage in Multi-Site Clusters
- Different from local clusters:
  - Multiple storage arrays, independent per site
  - Nodes commonly access their own site's storage
  - No "true" shared disk visible to all nodes

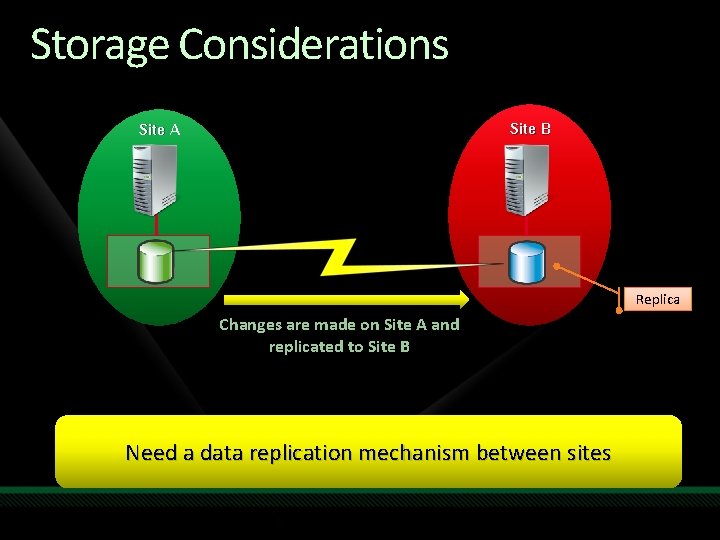

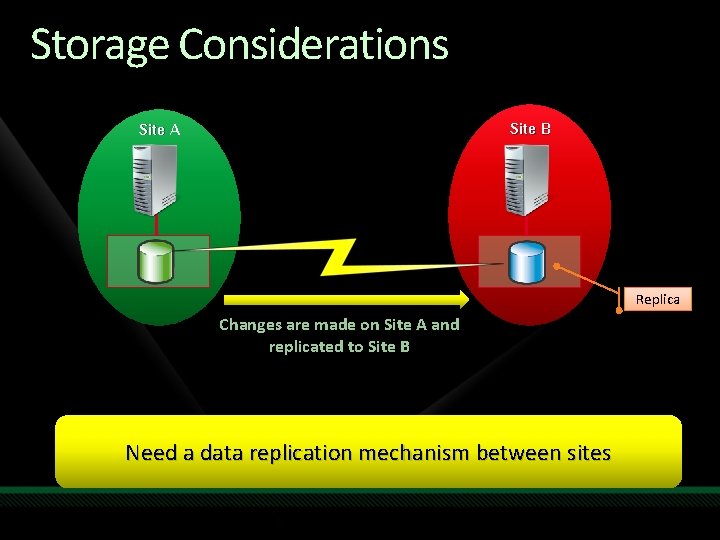

Storage Considerations
- Changes are made on Site A and replicated to the replica on Site B
- A data replication mechanism is needed between sites

Replication Options
- Replication levels:
  - Hardware storage-based replication
  - Software host-based replication
  - Application-based replication

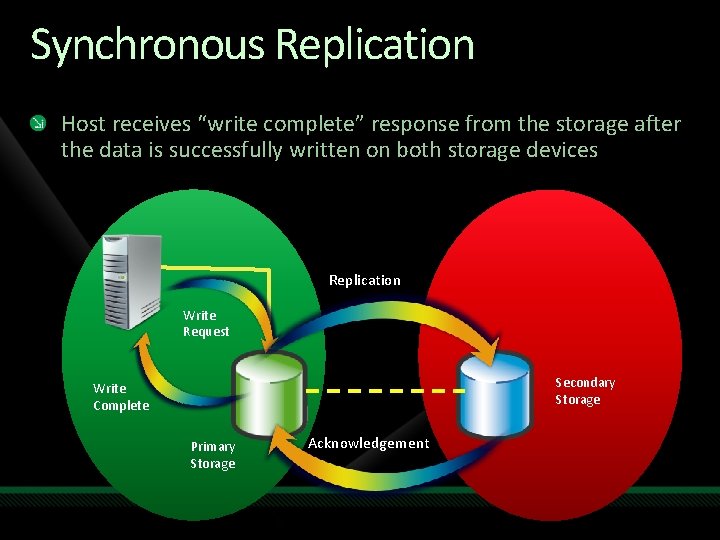

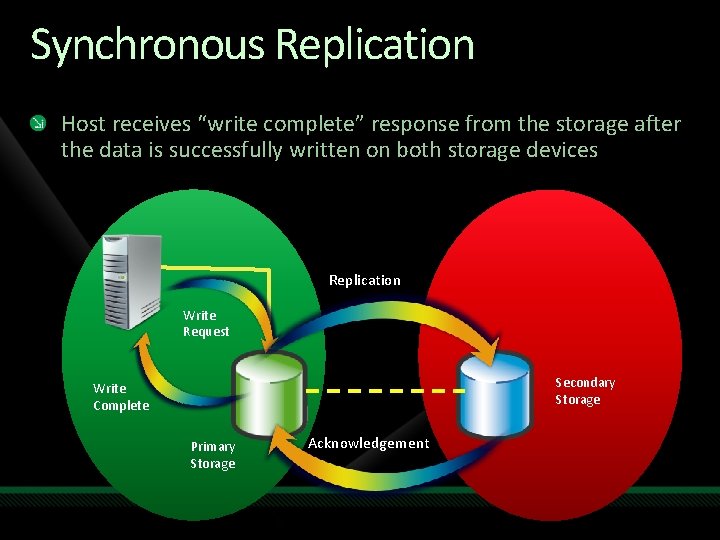

Synchronous Replication
- The host receives the "write complete" response from the storage after the data is successfully written on both storage devices
- [Diagram: write request to primary storage, replication to secondary storage, acknowledgement back to primary storage, then write complete to the host]

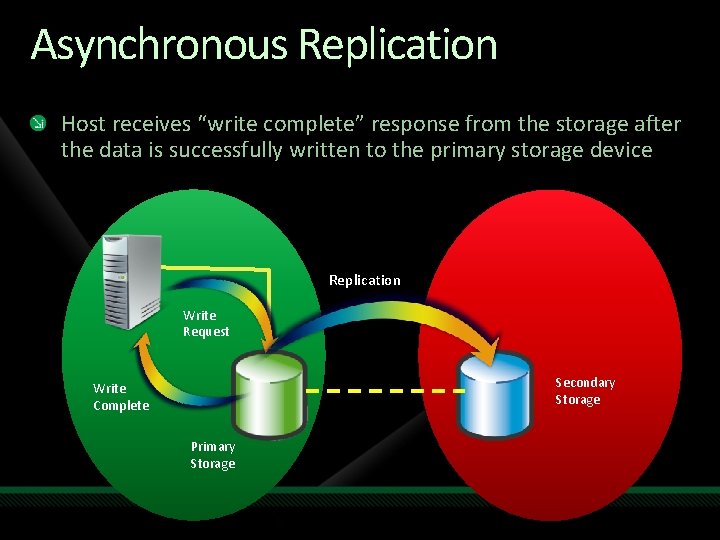

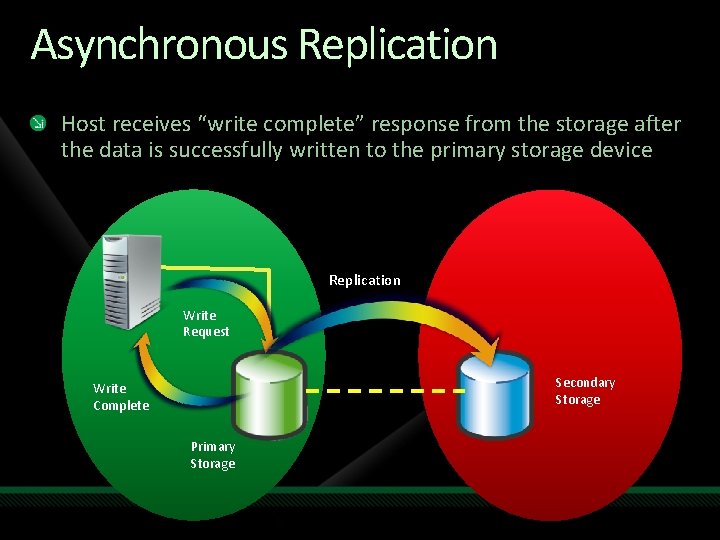

Asynchronous Replication
- The host receives the "write complete" response from the storage after the data is successfully written to the primary storage device
- [Diagram: write request to primary storage, write complete to the host, with replication to secondary storage happening separately]

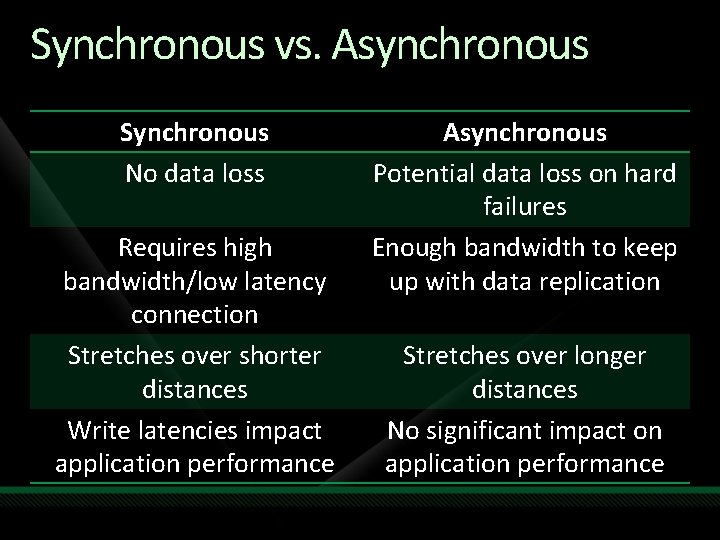

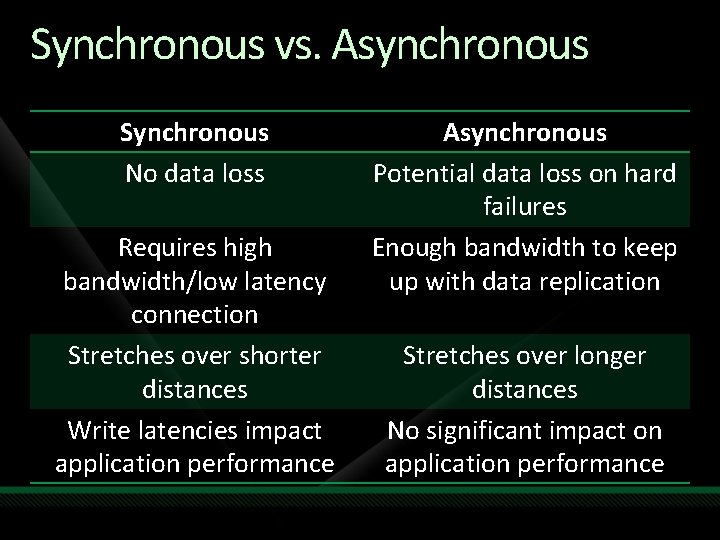

Synchronous vs. Asynchronous
- Synchronous
  - No data loss
  - Requires a high-bandwidth, low-latency connection
  - Stretches over shorter distances
  - Write latencies impact application performance
- Asynchronous
  - Potential data loss on hard failures
  - Needs enough bandwidth to keep up with data replication
  - Stretches over longer distances
  - No significant impact on application performance
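The distance trade-off can be made concrete with a back-of-the-envelope latency model. This is a sketch under stated assumptions, not vendor data: signals travel roughly 200 km per millisecond in fiber, a synchronous write waits for the local write, one round trip, and the remote write in sequence, and an asynchronous write waits only for the local write. All function names and input values are hypothetical.

```python
FIBER_KM_PER_MS = 200.0  # assumed propagation speed of light in fiber


def round_trip_ms(distance_km: float) -> float:
    """Propagation delay for a request and its acknowledgement."""
    return 2 * distance_km / FIBER_KM_PER_MS


def sync_write_ms(local_write_ms: float, remote_write_ms: float,
                  distance_km: float) -> float:
    """Host-visible latency when the write must land on both arrays."""
    return local_write_ms + round_trip_ms(distance_km) + remote_write_ms


def async_write_ms(local_write_ms: float) -> float:
    """Host-visible latency when only the primary array must acknowledge."""
    return local_write_ms


# Compare the two modes at a few illustrative distances, with 1 ms array writes.
for km in (10, 100, 1000):
    print(km, "km:", sync_write_ms(1.0, 1.0, km), "ms sync vs",
          async_write_ms(1.0), "ms async")
```

In this model the synchronous penalty grows linearly with distance, while asynchronous latency stays flat and the unreplicated writes become the potential data loss.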

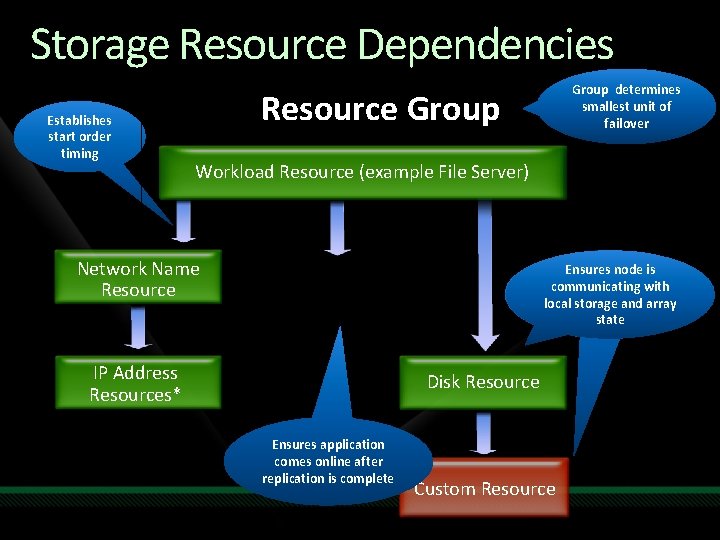

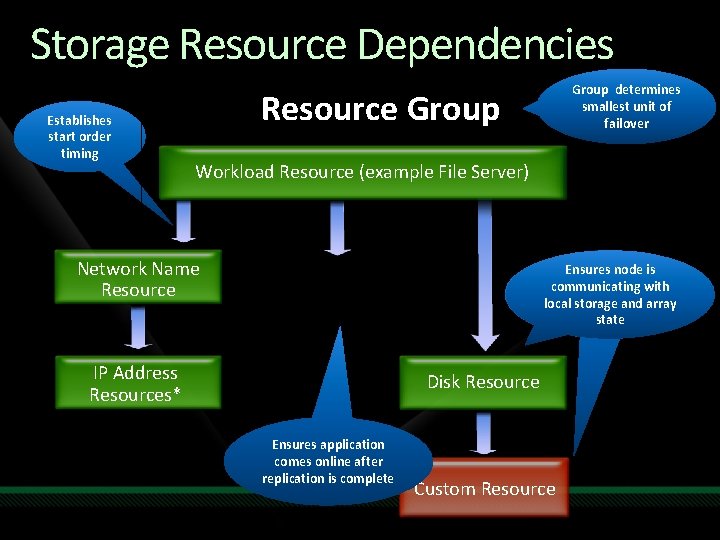

Storage Resource Dependencies
- Dependencies establish start order timing and ensure the application comes online after replication is complete
- The group determines the smallest unit of failover
- The custom resource ensures the node is communicating with local storage and checks the array state
- [Diagram: a resource group containing the workload resource (for example, File Server), the Network Name resource, the IP Address resources*, the Disk resource, and the custom resource]

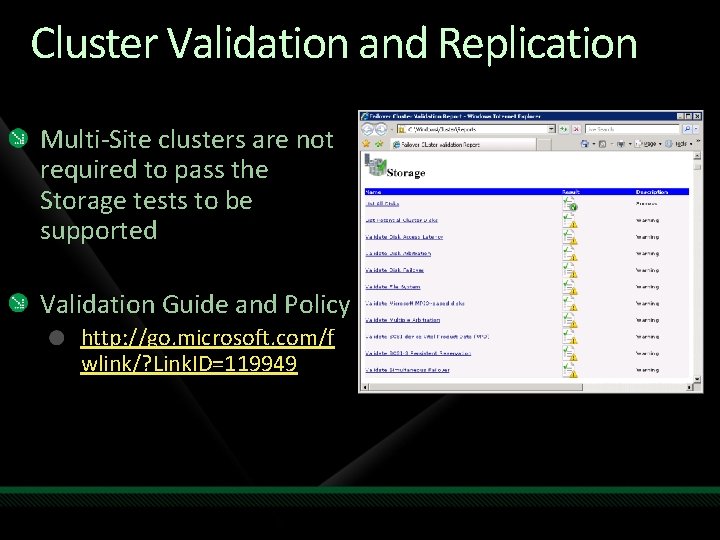

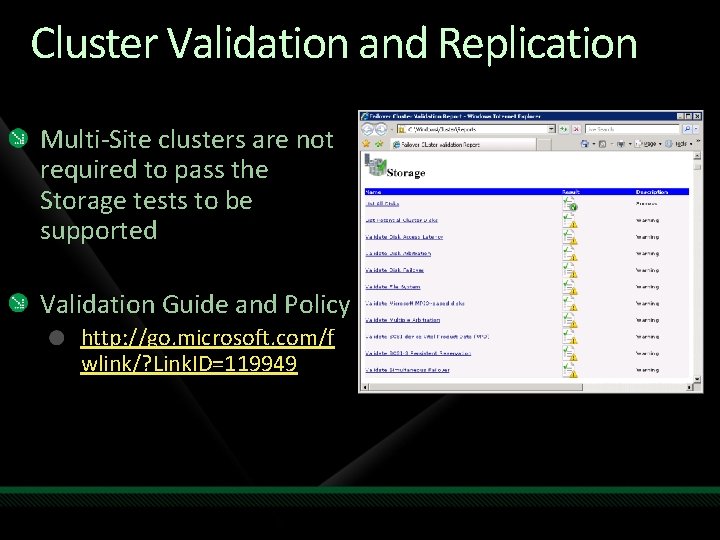

Cluster Validation and Replication
- Multi-site clusters are not required to pass the Storage tests to be supported
- Validation Guide and Policy: http://go.microsoft.com/fwlink/?LinkID=119949

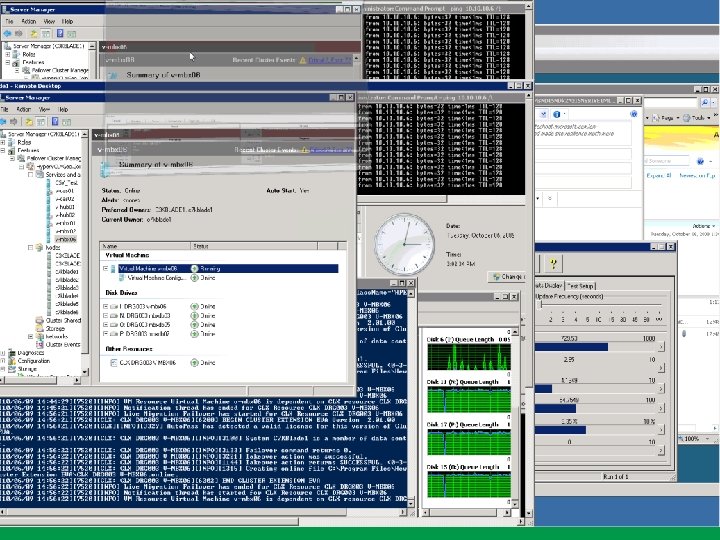

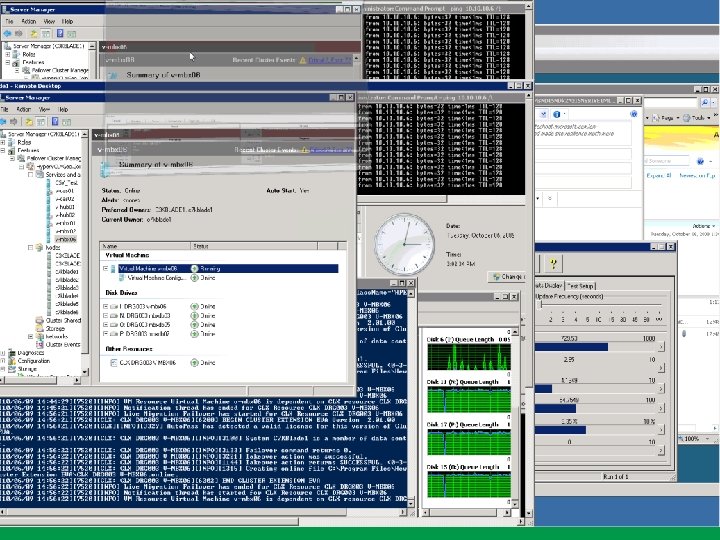

Partner: HP's Multi-Site Implementation & Demo. Matthias Popp, Architect, HP

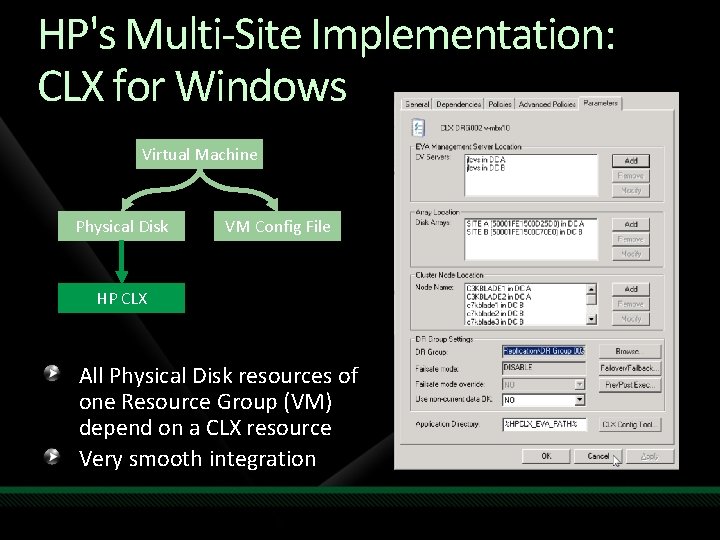

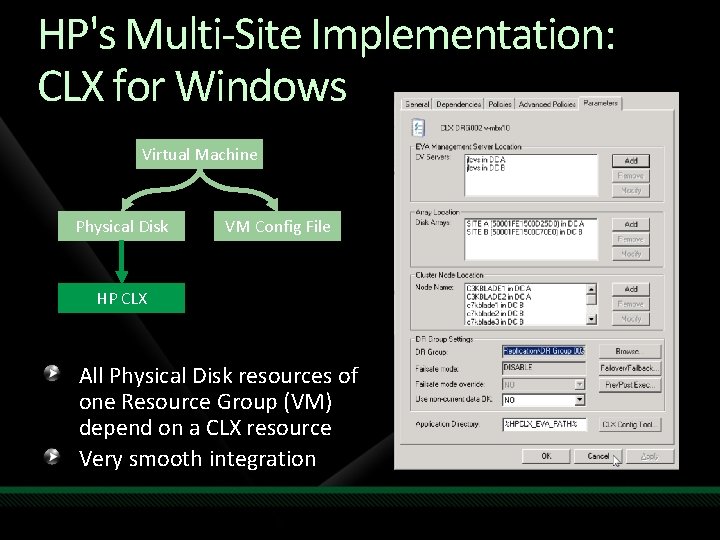

HP's Multi-Site Implementation: CLX for Windows
- All Physical Disk resources of one resource group (VM) depend on a CLX resource
- Very smooth integration
- [Diagram: Virtual Machine, VM Config File, Physical Disk, and HP CLX resources]

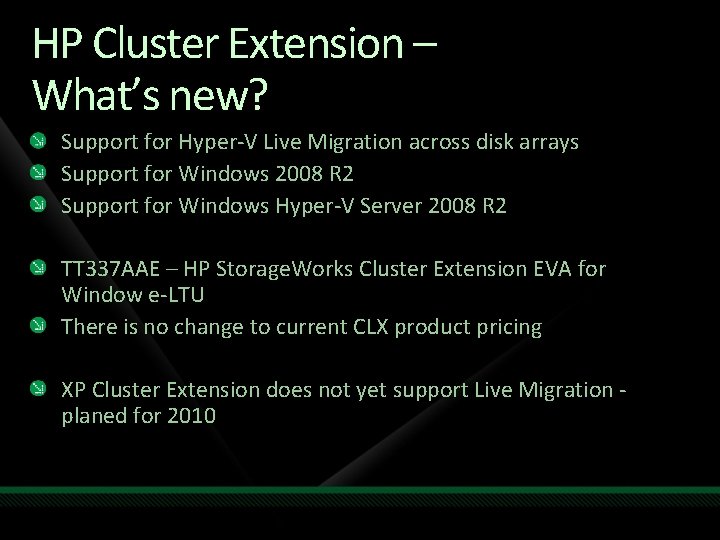

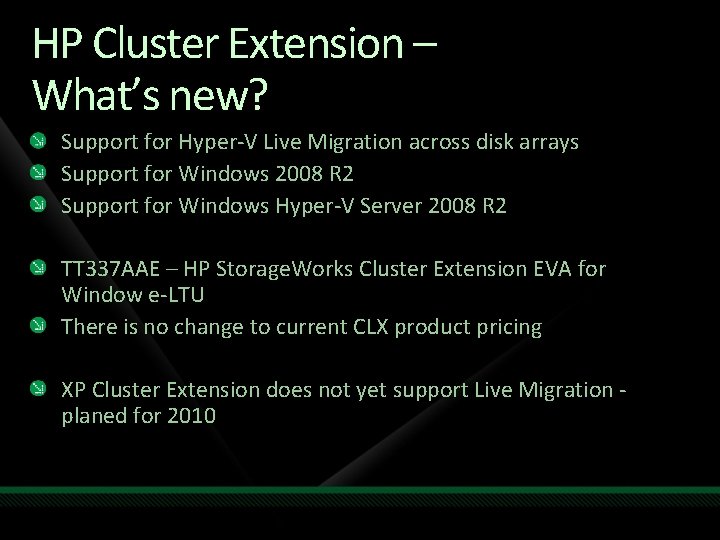

HP Cluster Extension: What's New?
- Support for Hyper-V Live Migration across disk arrays
- Support for Windows 2008 R2
- Support for Windows Hyper-V Server 2008 R2
- TT337AAE: HP StorageWorks Cluster Extension EVA for Windows e-LTU
- There is no change to current CLX product pricing
- XP Cluster Extension does not yet support Live Migration; support is planned for 2010

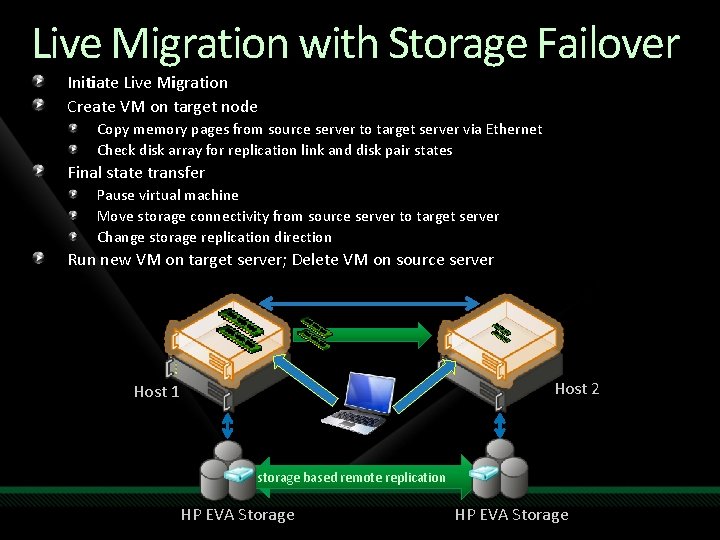

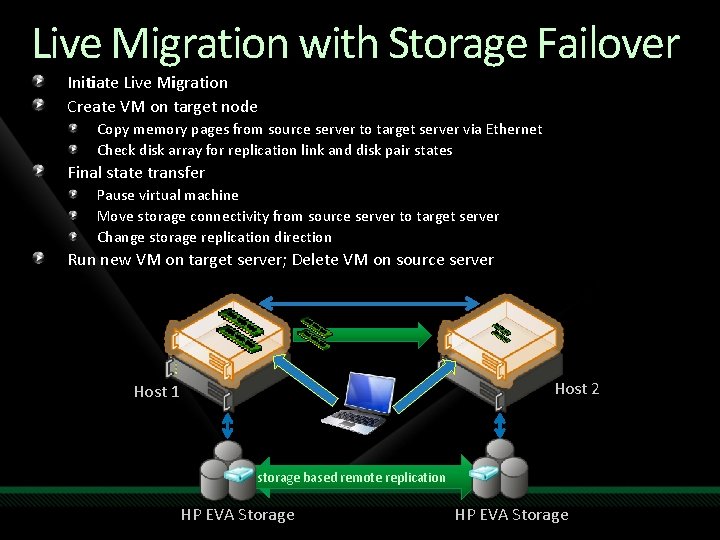

Live Migration with Storage Failover
- Initiate Live Migration
- Create VM on target node
- Copy memory pages from source server to target server via Ethernet
- Check disk array for replication link and disk pair states
- Final state transfer
- Pause virtual machine
- Move storage connectivity from source server to target server
- Change storage replication direction
- Run new VM on target server; delete VM on source server
- [Diagram: Host 1 and Host 2 attached to HP EVA storage with storage-based remote replication]

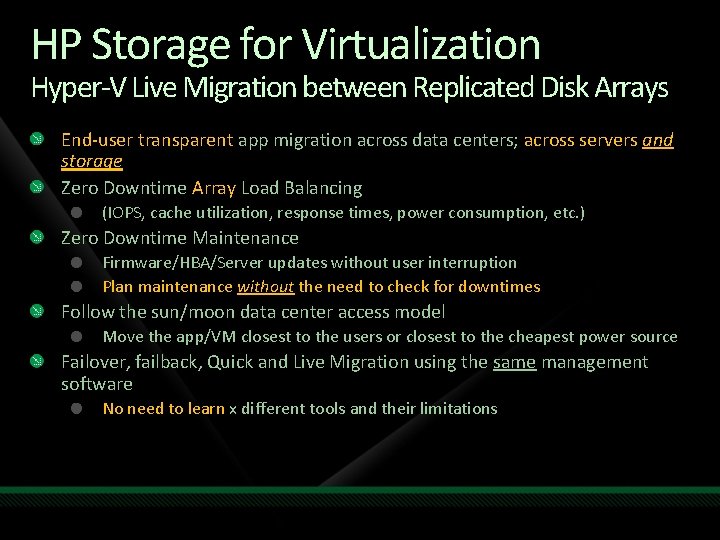

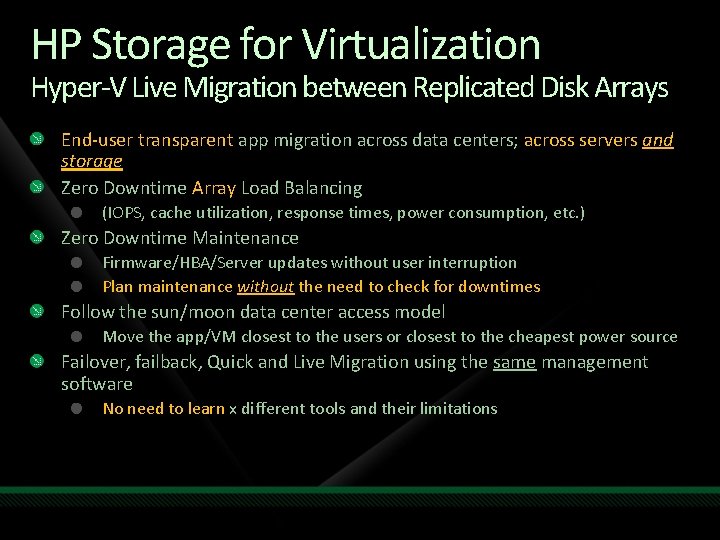

HP Storage for Virtualization: Hyper-V Live Migration between Replicated Disk Arrays
- End-user-transparent app migration across data centers, servers, and storage
- Zero Downtime Array Load Balancing (IOPS, cache utilization, response times, power consumption, etc.)
- Zero Downtime Maintenance
  - Firmware/HBA/server updates without user interruption
  - Plan maintenance without the need to check for downtimes
- Follow-the-sun/moon data center access model
  - Move the app/VM closest to the users or closest to the cheapest power source
- Failover, failback, Quick and Live Migration using the same management software
  - No need to learn x different tools and their limitations

Demo: EVA CLX with Exchange 2010 Live Migration

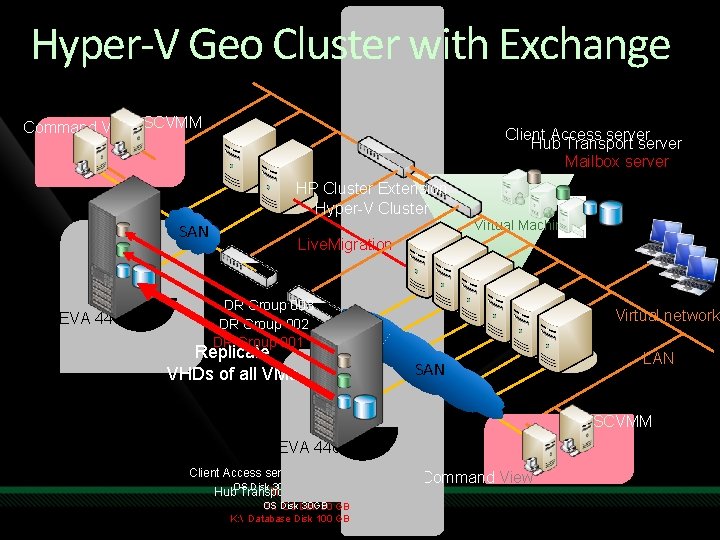

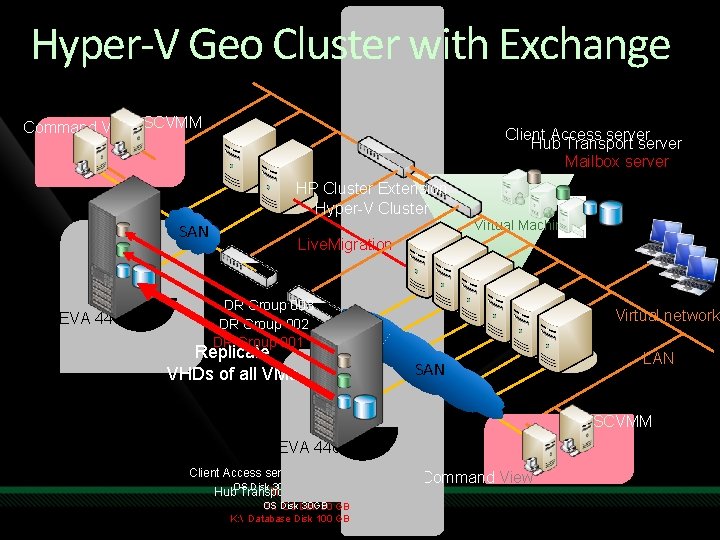

Hyper-V Geo Cluster with Exchange
- [Diagram: a Hyper-V cluster with HP Cluster Extension spanning two sites, each with an EVA 4400 array on its SAN, Command View, and SCVMM. The Client Access server, Hub Transport server, and Mailbox server run as virtual machines with 30 GB OS disks and a 100 GB database disk. DR Groups 001 to 003 replicate the VHDs of all VMs between the arrays, and the sites are connected by the virtual network, SAN, and LAN for Live Migration.]

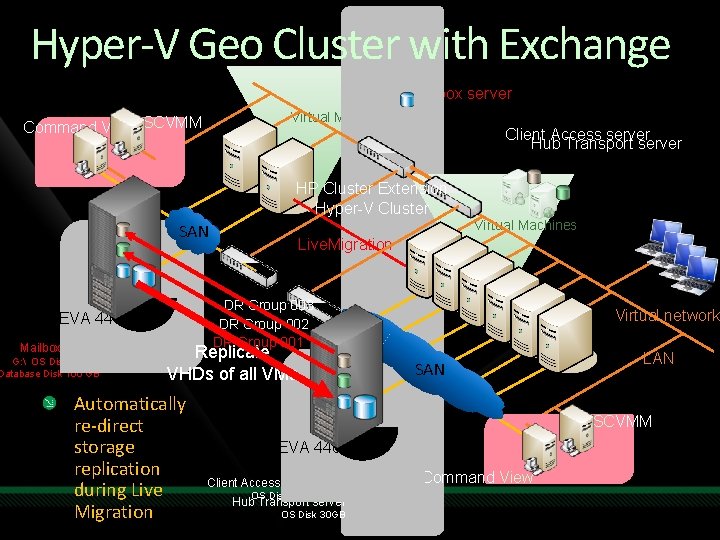

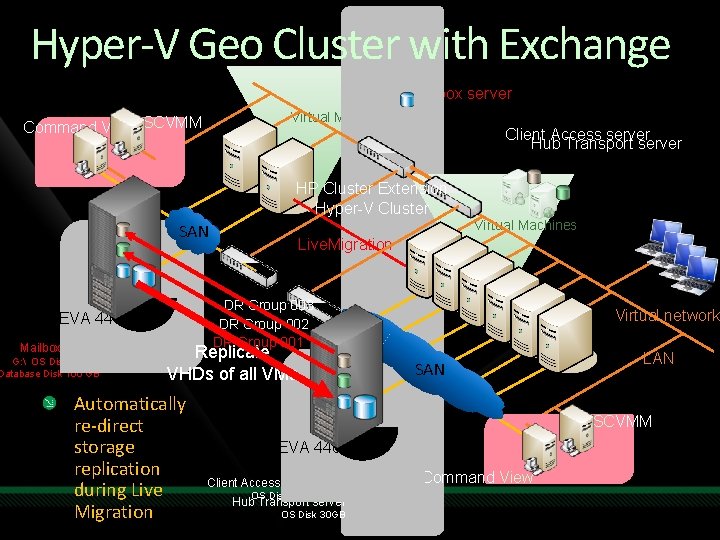

Hyper-V Geo Cluster with Exchange
- Storage replication is automatically redirected during Live Migration
- [Diagram: the same two-site Hyper-V cluster with HP Cluster Extension, EVA 4400 arrays, Command View, and SCVMM, showing the Mailbox server virtual machine (30 GB OS disk, 100 GB database disk) during Live Migration while DR Groups 001 to 003 replicate the VHDs of all VMs]

Additional HP Resources
- HP website for Hyper-V: www.hp.com/go/hyper-v
- HP and Microsoft Frontline Partnership website: www.hp.com/go/microsoft
- HP website for Windows Server 2008 R2: www.hp.com/go/ws2008r2
- HP website for management tools: www.hp.com/go/insight
- HP OS Support Matrix: www.hp.com/go/osssupport
- Information on HP ProLiant Network Adapter Teaming for Hyper-V: http://h20000.www2.hp.com/bc/docs/support/SupportManual/c01663264/c01663264.pdf
- Technical overview on HP ProLiant Network Adapter Teaming: http://h20000.www2.hp.com/bc/docs/support/SupportManual/c01415139/c01415139.pdf?jumpid=reg_R1002_USEN
- Whitepaper: Disaster Tolerant Virtualization Architecture with HP StorageWorks Cluster Extension and Microsoft Hyper-V™: http://h20195.www2.hp.com/V2/getdocument.aspx?docname=4AA26905ENW.pdf

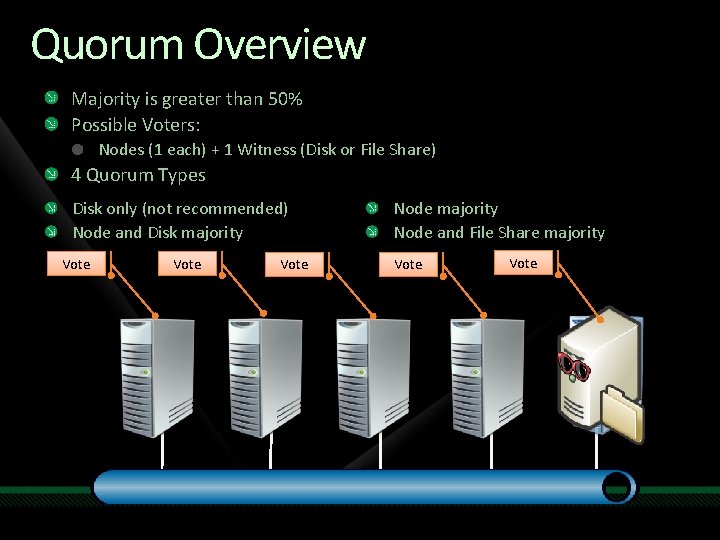

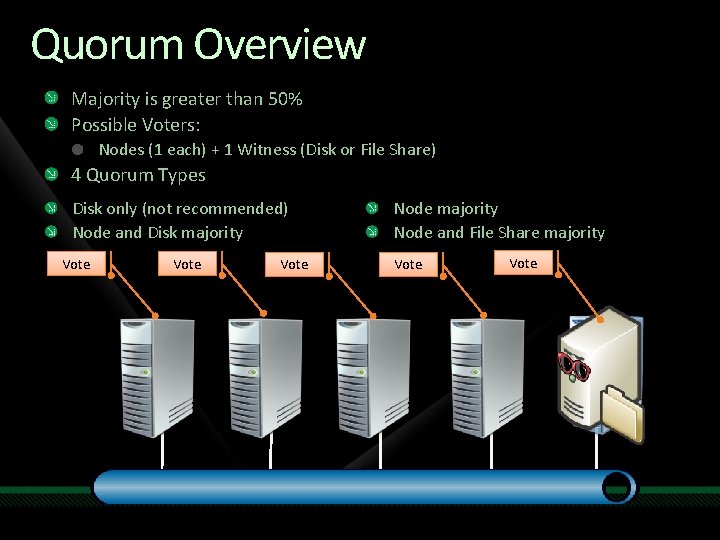

Quorum Overview
- Majority is greater than 50%
- Possible voters: nodes (1 vote each) + 1 witness (disk or file share)
- 4 quorum types:
  - Disk only (not recommended)
  - Node and Disk majority
  - Node majority
  - Node and File Share majority

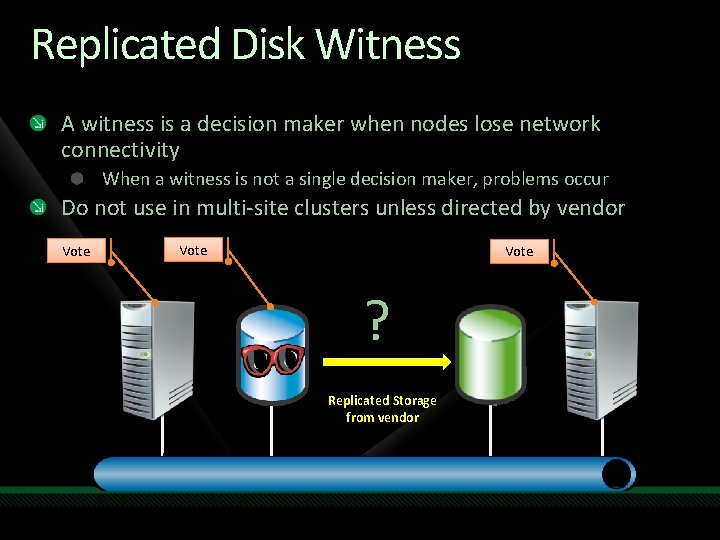

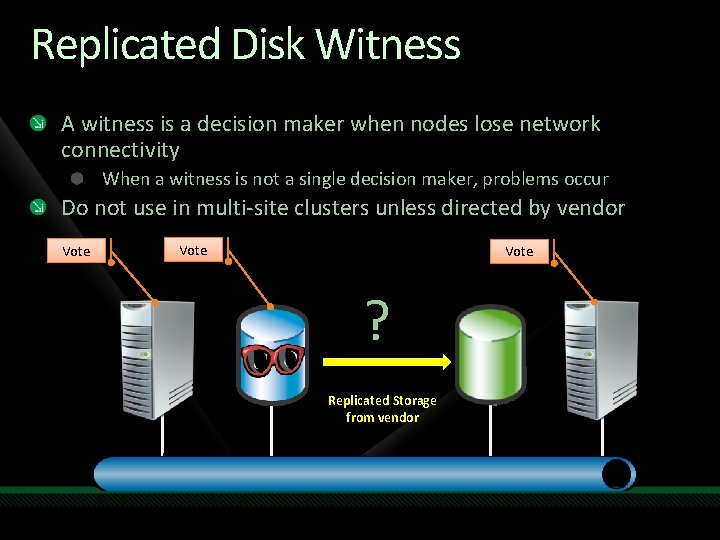

Replicated Disk Witness
- A witness is a decision maker when nodes lose network connectivity
- When a witness is not a single decision maker, problems occur
- Do not use in multi-site clusters unless directed by vendor
- [Diagram: a witness disk on replicated storage from the vendor, with a question mark over which copy holds the vote]

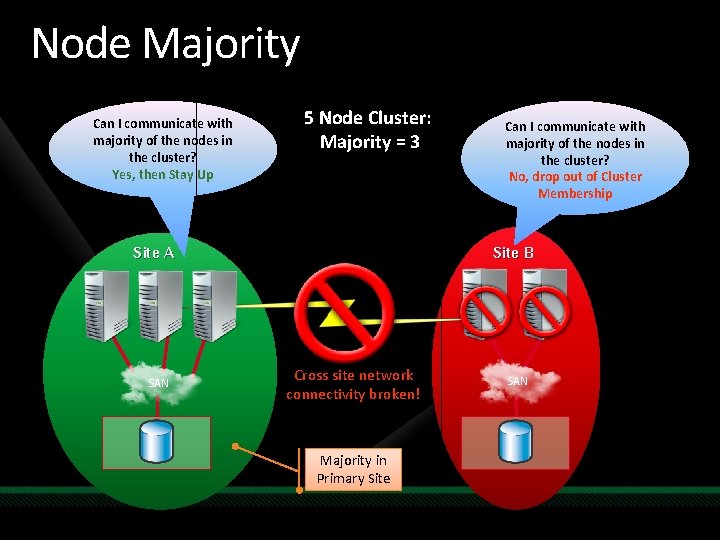

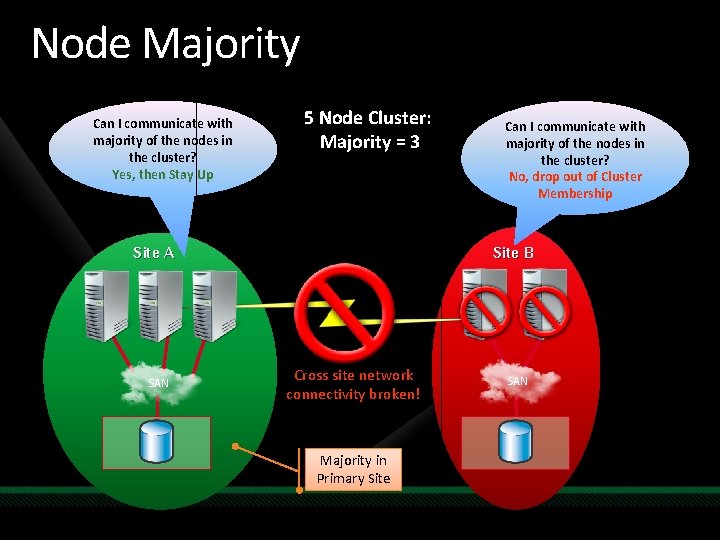

Node Majority
- 5-node cluster: majority = 3, with the majority of nodes in the primary site
- Scenario: cross-site network connectivity is broken
- Nodes in the primary site: "Can I communicate with a majority of the nodes in the cluster?" Yes, so stay up
- Nodes in the other site: "Can I communicate with a majority of the nodes in the cluster?" No, so drop out of cluster membership
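The majority rule is simple arithmetic: a partition stays up only if it can reach more than half of all votes. The sketch below works through the 5-node split from the slide and a 4-node cluster with one witness vote; the function names are hypothetical.

```python
def majority(total_votes: int) -> int:
    """Smallest number of votes that is greater than 50% of the total."""
    return total_votes // 2 + 1


def has_quorum(reachable_votes: int, total_votes: int) -> bool:
    """True if this partition can see a majority of all votes."""
    return reachable_votes >= majority(total_votes)


# 5-node cluster split 3 / 2 by a broken cross-site link.
print(majority(5))        # votes needed to stay up
print(has_quorum(3, 5))   # partition with three nodes
print(has_quorum(2, 5))   # partition with two nodes

# 4 nodes plus 1 witness vote: the site that also reaches the witness has 3 of 5.
print(has_quorum(2 + 1, 5))
```

The same check explains why a surviving minority site cannot start on its own after the majority site is lost.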

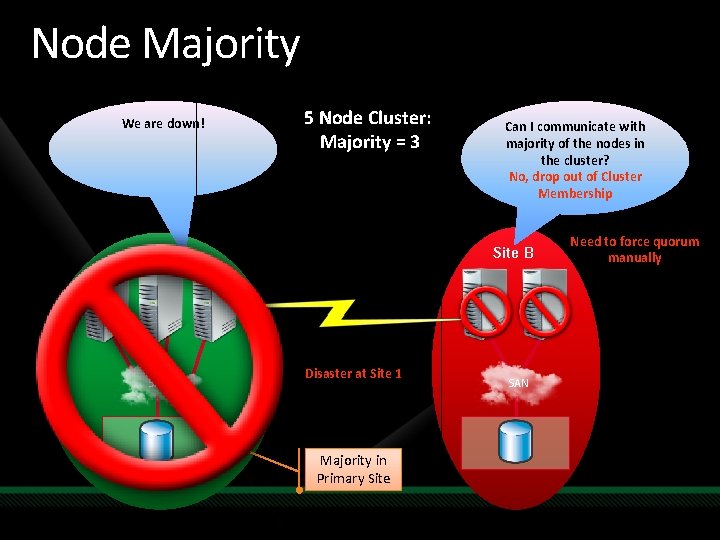

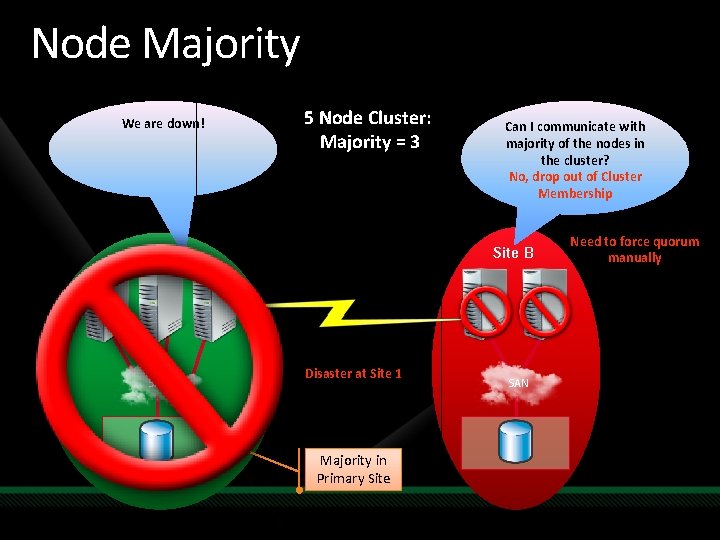

Node Majority
- 5-node cluster: majority = 3, with the majority of nodes in the primary site
- Scenario: disaster at the primary site ("We are down!")
- Nodes in the surviving site: "Can I communicate with a majority of the nodes in the cluster?" No, so drop out of cluster membership
- Quorum must be forced manually

Forcing Quorum
- Always understand why quorum was lost
- Used to bring the cluster online without quorum
- The cluster starts in a special "forced" state
- Once majority is achieved, the cluster leaves the "forced" state
- Command line: `net start clussvc /fixquorum` (or `/fq`)
- PowerShell (R2): `Start-ClusterNode -FixQuorum` (or `-fq`)

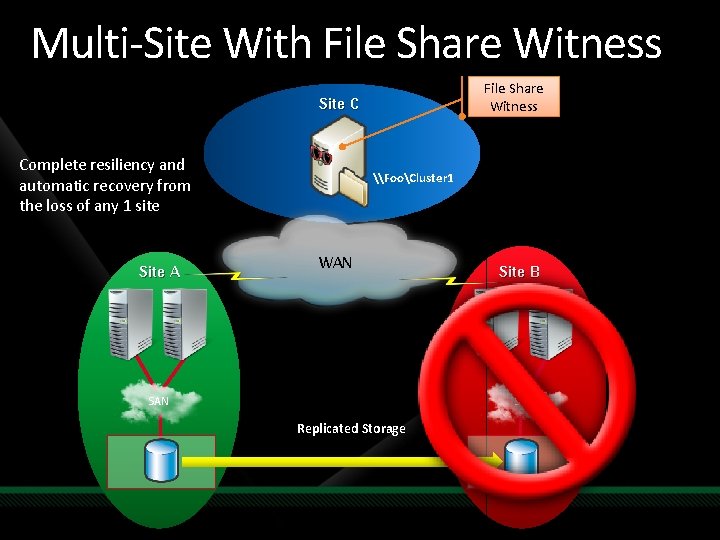

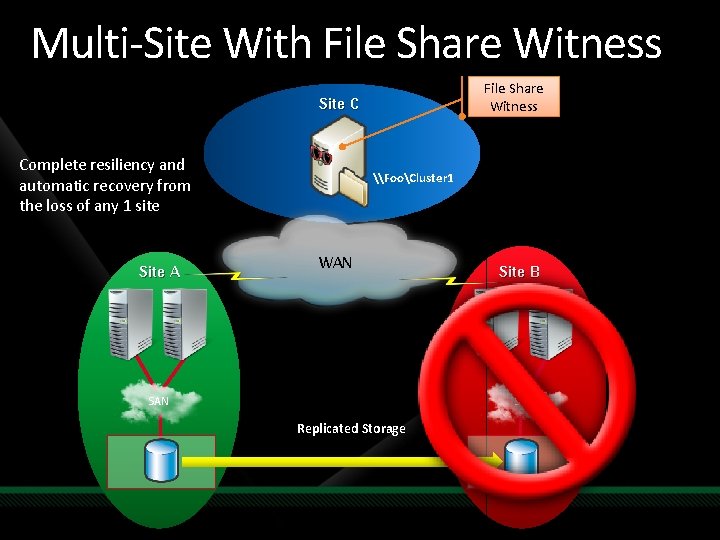

Multi-Site with File Share Witness
- Complete resiliency and automatic recovery from the loss of any 1 site
- [Diagram: Site A and Site B, each with its own SAN and replicated storage, connected over the WAN to a file share witness in Site C]

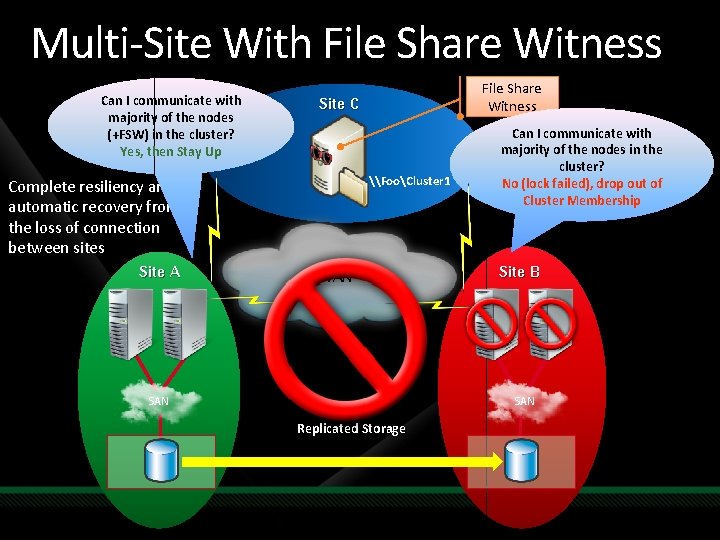

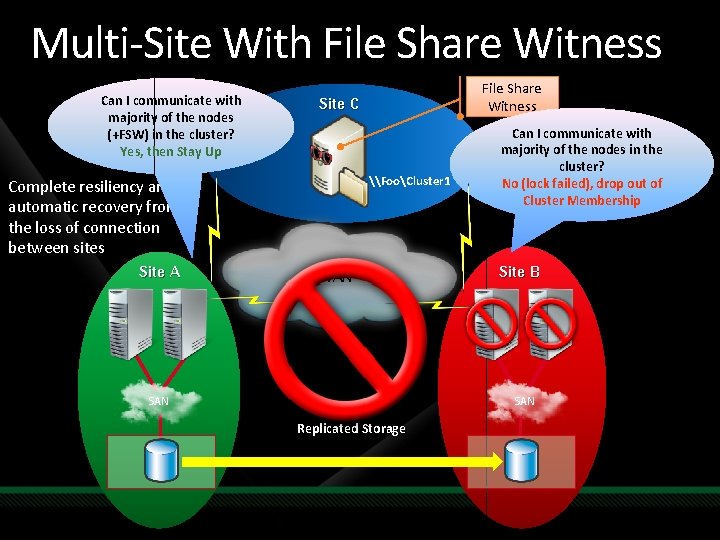

Multi-Site with File Share Witness
- Complete resiliency and automatic recovery from the loss of connection between sites
- Site that locks the file share witness: "Can I communicate with a majority of the nodes (+FSW) in the cluster?" Yes, so stay up
- Other site: "Can I communicate with a majority of the nodes in the cluster?" No (lock failed), so drop out of cluster membership
- [Diagram: Site A and Site B, each with its own SAN and replicated storage, connected over the WAN to the file share witness in Site C]

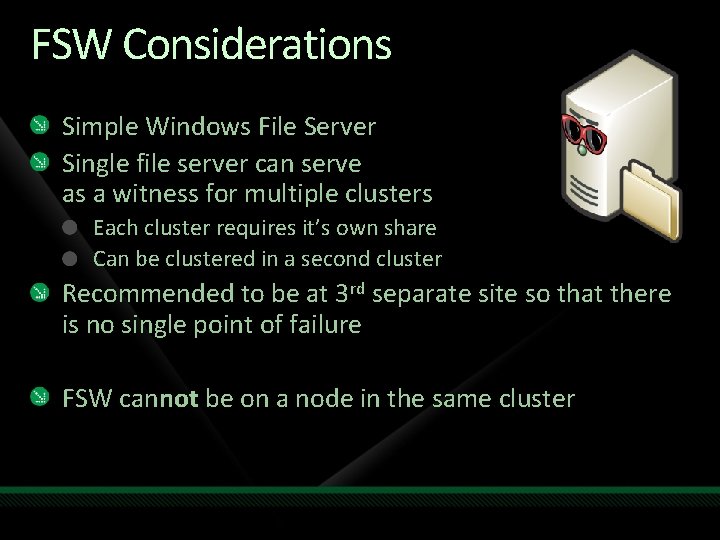

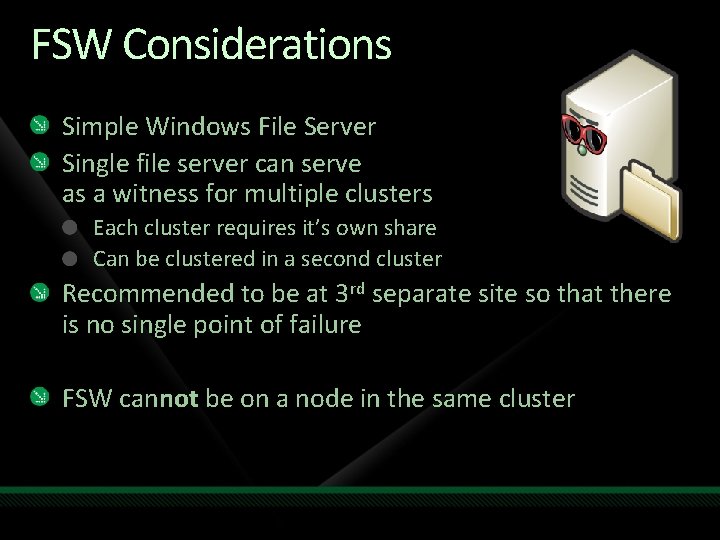

FSW Considerations
- Simple Windows file server
- A single file server can serve as a witness for multiple clusters
  - Each cluster requires its own share
  - Can be clustered in a second cluster
- Recommended to be at a 3rd, separate site so that there is no single point of failure
- The FSW cannot be on a node in the same cluster

Quorum Model Summary
- No Majority: Disk Only
  - Not recommended
  - Use as directed by vendor
- Node and Disk Majority
  - Use as directed by vendor
- Node Majority
  - Odd number of nodes
  - More nodes in primary site
- Node and File Share Majority
  - Even number of nodes
  - Best availability solution: FSW in 3rd site
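The node-count guidance in the summary can be written as a small decision helper. This is a sketch of the rule of thumb only, with a hypothetical function name; it deliberately ignores the disk-based models, which the summary reserves for vendor direction.

```python
def suggest_quorum_model(node_count: int) -> str:
    """Rule of thumb from the summary: odd -> Node Majority, even -> add a file share witness."""
    if node_count < 1:
        raise ValueError("a cluster needs at least one node")
    if node_count % 2 == 1:
        return "Node Majority"
    return "Node and File Share Majority"


for nodes in (3, 4, 5):
    print(nodes, "nodes:", suggest_quorum_model(nodes))
```

Adding the witness to an even-node cluster makes the total vote count odd, so a clean two-way split always leaves one side with a majority.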

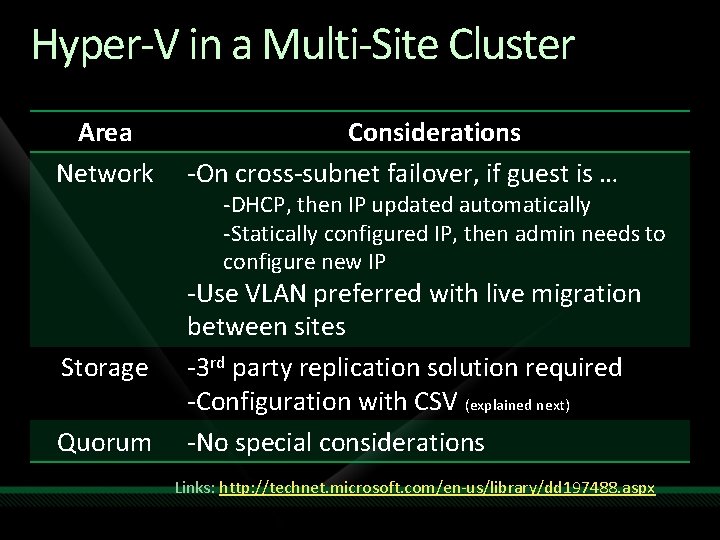

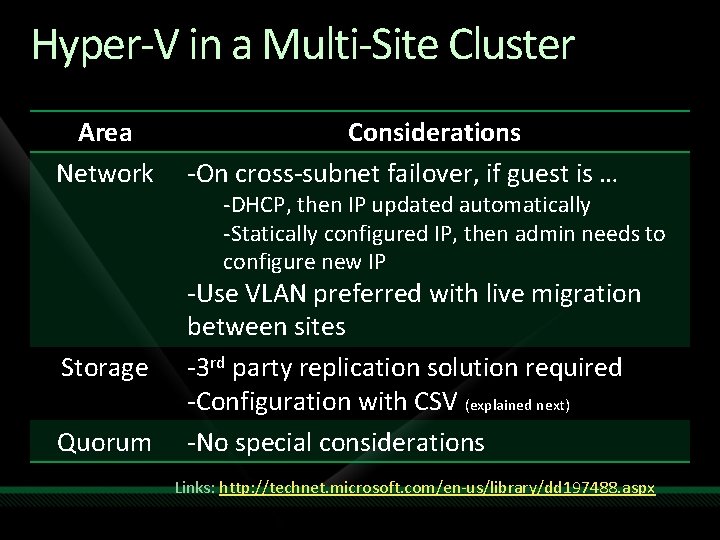

Hyper-V in a Multi-Site Cluster

| Area | Considerations |
| --- | --- |
| Network | On cross-subnet failover, if the guest uses DHCP, its IP is updated automatically; if the guest has a statically configured IP, the admin needs to configure the new IP. A stretched VLAN is preferred with live migration between sites. |
| Storage | 3rd-party replication solution required. Configuration with CSV (explained next). |
| Quorum | No special considerations. |

Links: http://technet.microsoft.com/en-us/library/dd197488.aspx

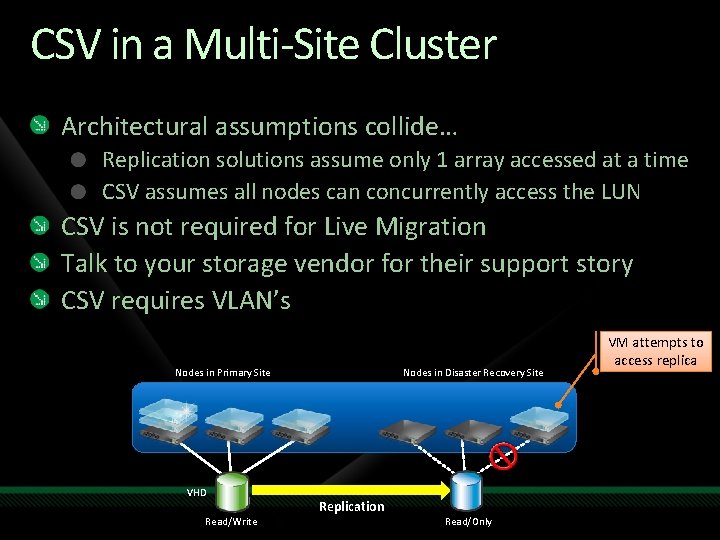

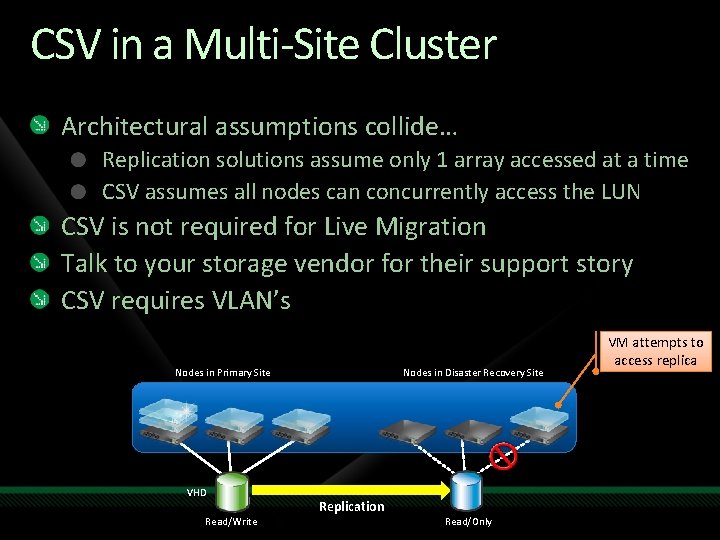

CSV in a Multi-Site Cluster
- Architectural assumptions collide…
  - Replication solutions assume only 1 array is accessed at a time
  - CSV assumes all nodes can concurrently access the LUN
- CSV is not required for Live Migration
- Talk to your storage vendor for their support story
- CSV requires VLANs
- [Diagram: nodes in the primary site have read/write access to the VHD; the replica at the disaster recovery site is read-only, and a VM on a disaster recovery site node attempts to access the replica]

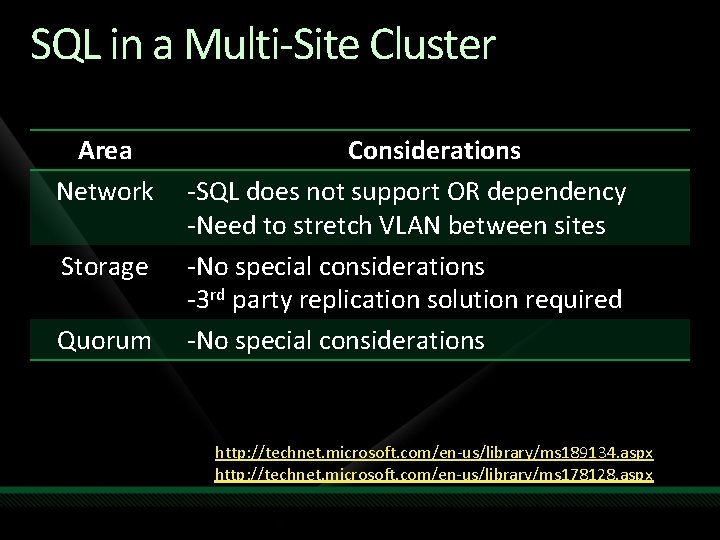

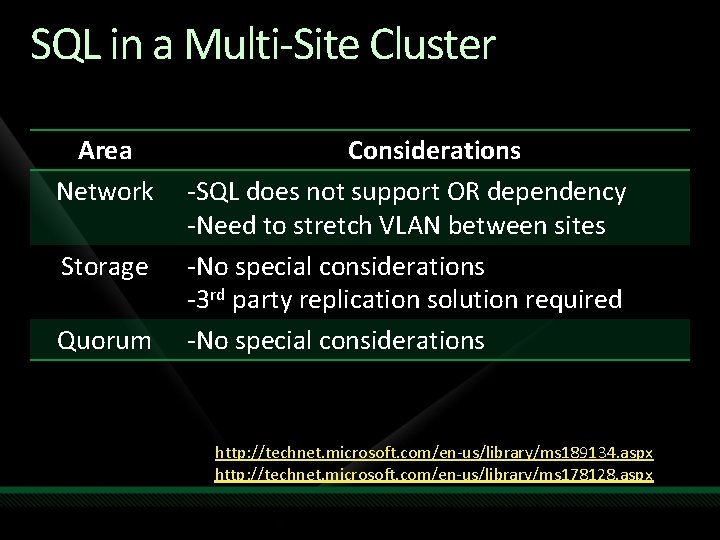

SQL in a Multi-Site Cluster

| Area | Considerations |
| --- | --- |
| Network | SQL does not support OR dependency. Need to stretch VLAN between sites. |
| Storage | No special considerations. 3rd-party replication solution required. |
| Quorum | No special considerations. |

Links: http://technet.microsoft.com/en-us/library/ms189134.aspx and http://technet.microsoft.com/en-us/library/ms178128.aspx

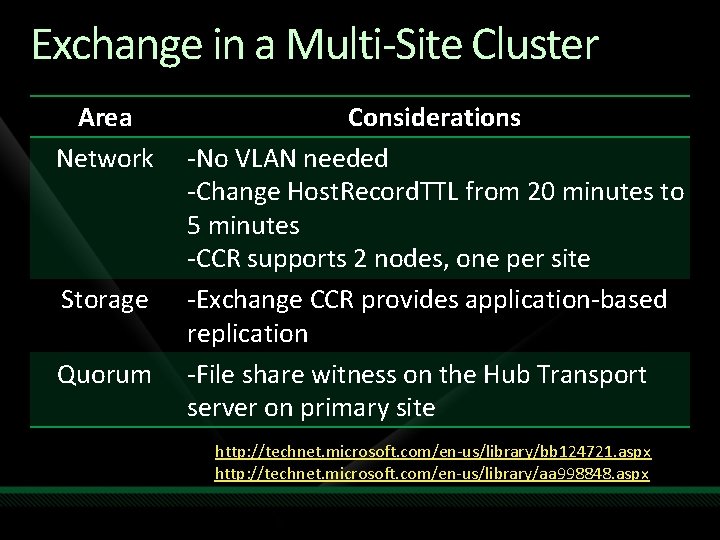

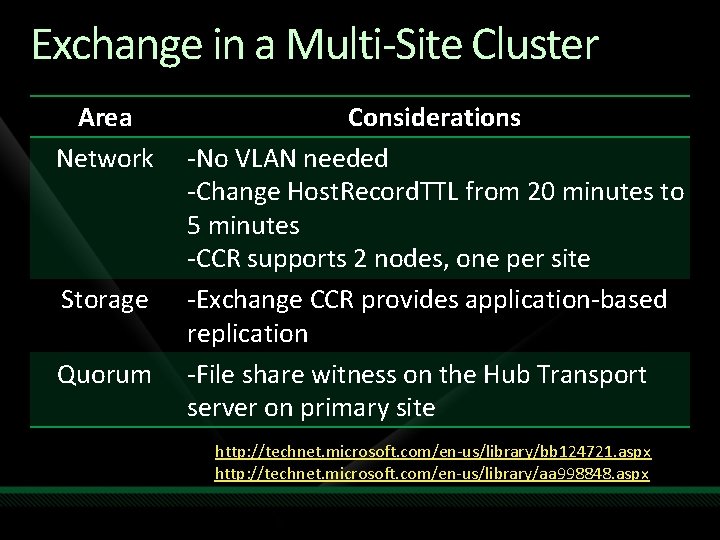

Exchange in a Multi-Site Cluster

| Area | Considerations |
| --- | --- |
| Network | No VLAN needed. Change HostRecordTTL from 20 minutes to 5 minutes. CCR supports 2 nodes, one per site. |
| Storage | Exchange CCR provides application-based replication. |
| Quorum | File share witness on the Hub Transport server in the primary site. |

Links: http://technet.microsoft.com/en-us/library/bb124721.aspx and http://technet.microsoft.com/en-us/library/aa998848.aspx

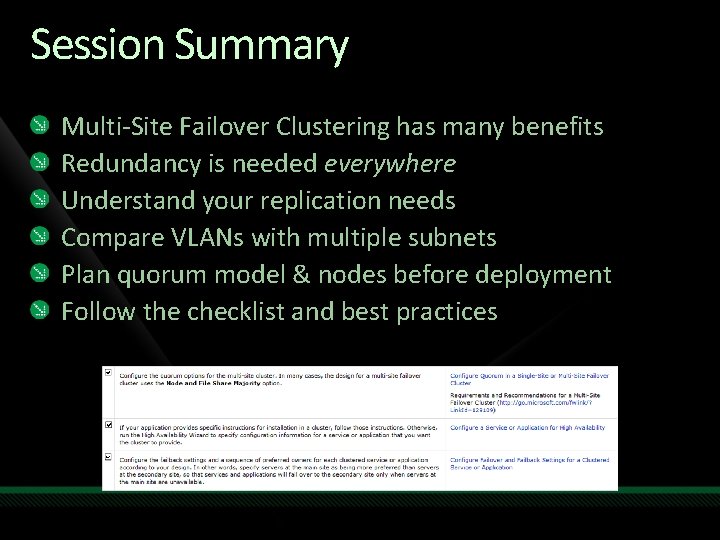

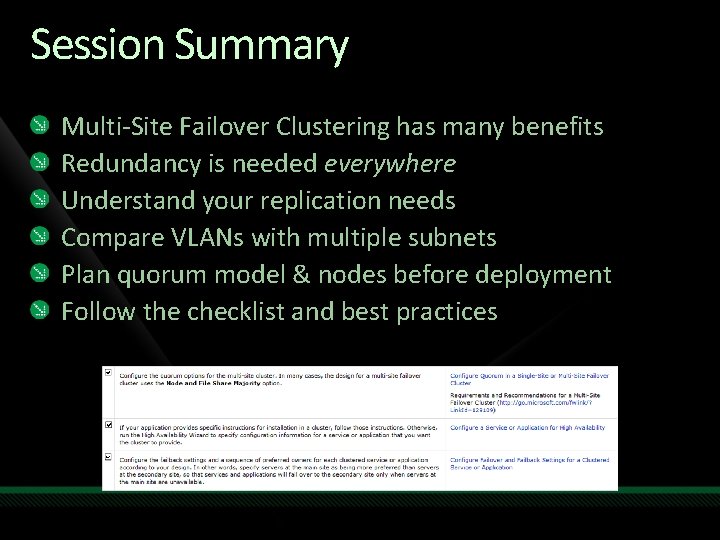

Session Summary
- Multi-site failover clustering has many benefits
- Redundancy is needed everywhere
- Understand your replication needs
- Compare VLANs with multiple subnets
- Plan quorum model and nodes before deployment
- Follow the checklist and best practices

Resources
- Sessions On-Demand & Community: www.microsoft.com/teched
- Microsoft Certification & Training Resources: www.microsoft.com/learning
- Resources for IT Professionals: http://microsoft.com/technet
- Resources for Developers: http://microsoft.com/msdn

Related Content
- Breakout sessions:
  - SVR208 Gaining Higher Availability with Windows Server 2008 R2 Failover Clustering
  - SVR319 Multi-Site Clustering with Windows Server 2008 R2
  - DAT312 All You Needed to Know about Microsoft SQL Server 2008 Failover Clustering
  - UNC307 Microsoft Exchange Server 2010 High Availability
  - SVR211 The Challenges of Building and Managing a Scalable and Highly Available Windows Server 2008 R2 Virtualisation Solution
  - SVR314 From Zero to Live Migration. How to Set Up a Live Migration
- Demo sessions:
  - SVR01-DEMO Free Live Migration and High Availability with Microsoft Hyper-V Server 2008 R2
- Hands-on labs:
  - UNC12-HOL Microsoft Exchange Server 2010 High Availability and Storage Scenarios

Multi-Site Clustering Content
- Design guide: http://technet.microsoft.com/en-us/library/dd197430.aspx
- Deployment guide/checklist: http://technet.microsoft.com/en-us/library/dd197546.aspx

© 2009 Microsoft Corporation. All rights reserved. Microsoft, Windows Vista and other product names are or may be registered trademarks and/or trademarks in the U.S. and/or other countries. The information herein is for informational purposes only and represents the current view of Microsoft Corporation as of the date of this presentation. Because Microsoft must respond to changing market conditions, it should not be interpreted to be a commitment on the part of Microsoft, and Microsoft cannot guarantee the accuracy of any information provided after the date of this presentation. MICROSOFT MAKES NO WARRANTIES, EXPRESS, IMPLIED OR STATUTORY, AS TO THE INFORMATION IN THIS PRESENTATION.