Multiresolution Analysis, Computational Chemistry, and Implications for High Productivity Parallel Programming

- Slides: 1

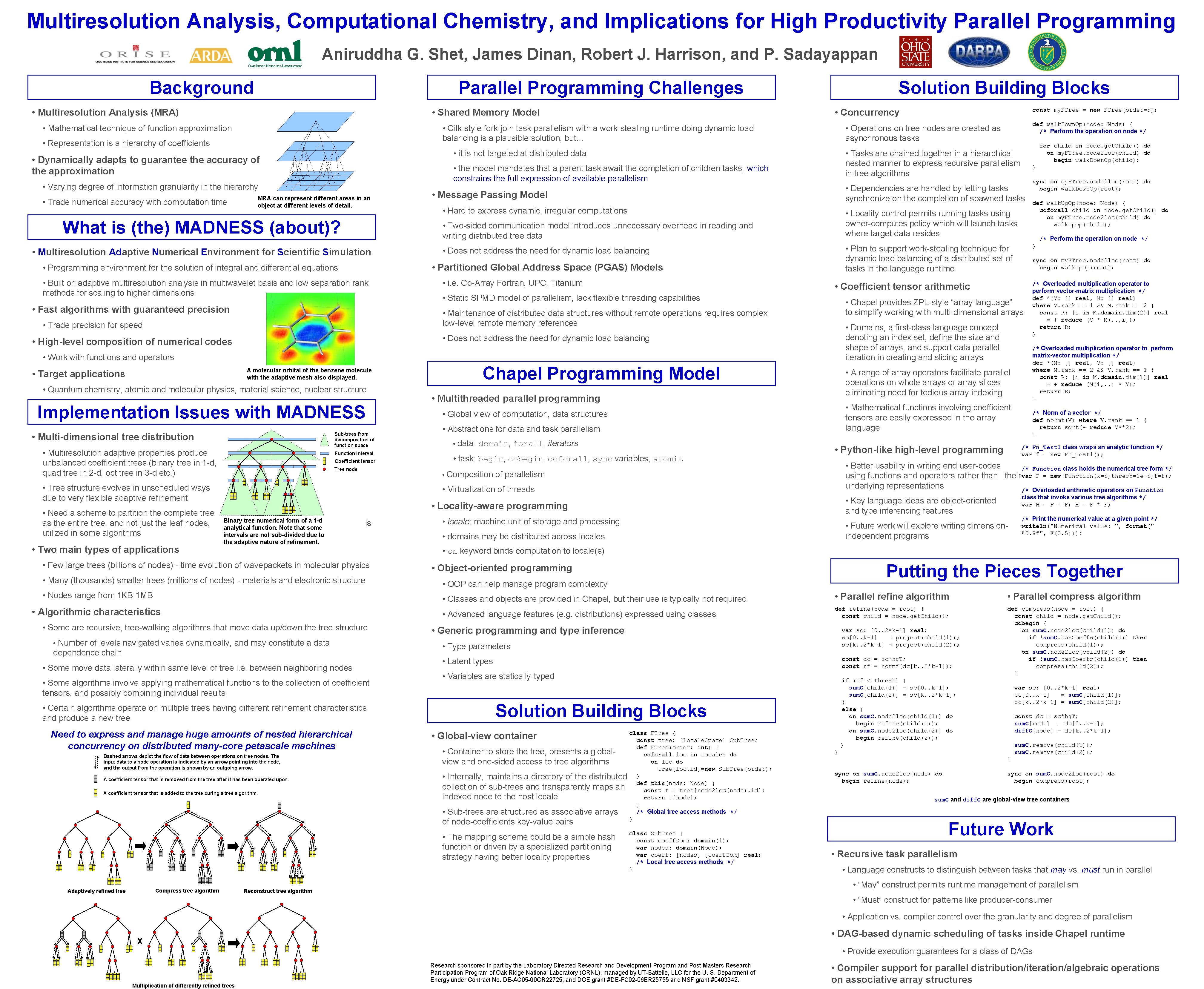

Multiresolution Analysis, Computational Chemistry, and Implications for High Productivity Parallel Programming

Aniruddha G. Shet, James Dinan, Robert J. Harrison, and P. Sadayappan

Background

- Multiresolution Analysis (MRA)
  - Mathematical technique of function approximation
  - Representation is a hierarchy of coefficients
  - Varying degree of information granularity in the hierarchy
  - Dynamically adapts to guarantee the accuracy of the approximation
  - Trade numerical accuracy for computation time
- What is (the) MADNESS (about)?
  - Multiresolution Adaptive Numerical Environment for Scientific Simulation
  - Programming environment for the solution of integral and differential equations
  - Built on adaptive multiresolution analysis in multiwavelet basis and low separation rank methods for scaling to higher dimensions
  - Fast algorithms with guaranteed precision
  - Trade precision for speed
  - High-level composition of numerical codes
    - Work with functions and operators
  - Target applications
    - Quantum chemistry, atomic and molecular physics, materials science, nuclear structure

Figure: MRA can represent different areas in an object at different levels of detail.

Figure: A molecular orbital of the benzene molecule with the adaptive mesh also displayed.

Implementation Issues with MADNESS

- Multi-dimensional tree distribution
  - Multiresolution adaptive properties produce unbalanced coefficient trees (binary tree in 1-d, quadtree in 2-d, octree in 3-d, etc.)
  - Tree structure evolves in unscheduled ways due to very flexible adaptive refinement
  - Need a scheme to partition the complete tree, because the entire tree, and not just the leaf nodes, is used in some algorithms
- Two main types of applications
  - Few large trees (billions of nodes): time evolution of wavepackets in molecular physics
  - Many (thousands) smaller trees (millions of nodes): materials and electronic structure
  - Nodes range from 1 KB to 1 MB
- Algorithmic characteristics
  - Some are recursive, tree-walking algorithms that move data up/down the tree structure
    - Number of levels navigated varies dynamically, and may constitute a data dependence chain
  - Some move data laterally within the same level of the tree, i.e. between neighboring nodes
  - Some algorithms involve applying mathematical functions to the collection of coefficient tensors, and possibly combining individual results
  - Certain algorithms operate on multiple trees having different refinement characteristics and produce a new tree

Need to express and manage huge amounts of nested hierarchical concurrency on distributed many-core petascale machines.

Figure: Binary tree numerical form of a 1-d analytical function. Note that some intervals are not sub-divided due to the adaptive nature of refinement. Labels: function interval, coefficient tensor, tree node, sub-trees from decomposition of function space.

Parallel Programming Challenges

- Message Passing Model
  - Hard to express dynamic, irregular computations
  - Maintaining distributed data structures without remote operations requires complex low-level remote memory references
  - Two-sided communication model introduces unnecessary overhead in reading and writing distributed tree data
  - Does not address the need for dynamic load balancing
- Partitioned Global Address Space (PGAS) Models
  - e.g. Co-Array Fortran, UPC, Titanium
  - Static SPMD model of parallelism, lacking flexible threading capabilities
  - Does not address the need for dynamic load balancing
- Shared Memory Model
  - Cilk-style fork-join task parallelism with a work-stealing runtime doing dynamic load balancing is a plausible solution, but…
    - it is not targeted at distributed data
    - the model mandates that a parent task await the completion of children tasks, which constrains the full expression of available parallelism

Chapel Programming Model

- Multithreaded parallel programming
  - Global view of computation, data structures
  - Abstractions for data and task parallelism
    - data: domain, forall, iterators
    - task: begin, coforall, sync variables, atomic
  - Composition of parallelism
  - Virtualization of threads
- Locality-aware programming
  - locale: machine unit of storage and processing
  - domains may be distributed across locales
  - on keyword binds computation to locale(s)
- Object-oriented programming
  - OOP can help manage program complexity
  - Classes and objects are provided in Chapel, but their use is typically not required
  - Advanced language features (e.g. distributions) expressed using classes
- Generic programming and type inference
  - Type parameters
  - Latent types
  - Variables are statically-typed

Solution Building Blocks

- Global-view container
  - Container to store the tree, presents a global view and one-sided access to tree algorithms
  - Internally, maintains a directory of the distributed collection of sub-trees and transparently maps an indexed node to the host locale
  - The mapping scheme could be a simple hash function or driven by a specialized partitioning strategy having better locality properties
  - Sub-trees are structured as associative arrays of node-coefficients key-value pairs

Global-view container classes:

    class FTree {
      const tree: [LocaleSpace] SubTree;

      def FTree(order: int) {
        coforall loc in Locales do
          on loc do tree[loc.id] = new SubTree(order);
      }

      def this(node: Node) {
        const t = tree[node2loc(node).id];
        return t[node];
      }

      /* Global tree access methods */
    }

    class SubTree {
      const coeffDom: domain(1);
      var nodes: domain(Node);
      var coeff: [nodes] [coeffDom] real;

      /* Local tree access methods */
    }

    const myFTree = new FTree(order=5);

- Concurrency
  - Operations on tree nodes are created as asynchronous tasks
  - Tasks are chained together in a hierarchical nested manner to express recursive parallelism in tree algorithms
  - Dependencies are handled by letting tasks synchronize on the completion of spawned tasks
  - Locality control permits running tasks using an owner-computes policy, which launches tasks where the target data resides
  - Plan to support work-stealing technique for dynamic load balancing of a distributed set of tasks in the language runtime

Downward and upward tree walks:

    def walkDownOp(node: Node) {
      /* Perform the operation on node */
      for child in node.getChild() do
        on myFTree.node2loc(child) do begin walkDownOp(child);
    }

    sync on myFTree.node2loc(root) do begin walkDownOp(root);

    def walkUpOp(node: Node) {
      coforall child in node.getChild() do
        on myFTree.node2loc(child) do walkUpOp(child);
      /* Perform the operation on node */
    }

    sync on myFTree.node2loc(root) do begin walkUpOp(root);

- Coefficient tensor arithmetic
  - Chapel provides ZPL-style “array language” to simplify working with multi-dimensional arrays
  - Domains, a first-class language concept denoting an index set, define the size and shape of arrays, and support data parallel iteration in creating and slicing arrays
  - A range of array operators facilitate parallel operations on whole arrays or array slices, eliminating the need for tedious array indexing
  - Mathematical functions involving coefficient tensors are easily expressed in the array language
  - Future work will explore writing dimension-independent programs

Coefficient tensor operations in the array language:

    /* Norm of a vector */
    def normf(V) where V.rank == 1 {
      return sqrt(+ reduce V**2);
    }

    /* Overloaded multiplication operator to perform matrix-vector multiplication */
    def *(M: [] real, V: [] real) where M.rank == 2 && V.rank == 1 {
      const R: [i in M.domain.dim(1)] real = + reduce (M(i, ..) * V);
      return R;
    }

    /* Overloaded multiplication operator to perform vector-matrix multiplication */
    def *(V: [] real, M: [] real) where V.rank == 1 && M.rank == 2 {
      const R: [i in M.domain.dim(2)] real = + reduce (V * M(.., i));
      return R;
    }

Putting the Pieces Together

- Python-like high-level programming
  - Key language ideas are object-oriented and type inferencing features
  - Better usability in writing end-user codes using functions and operators rather than their underlying representations

End-user code:

    /* Fn_Test1 class wraps an analytic function */
    var f = new Fn_Test1();

    /* Function class holds the numerical tree form */
    var F = new Function(k=5, thresh=1e-5, f=f);

    /* Overloaded arithmetic operators on Function class that invoke various tree algorithms */
    var H = F + F;
    H = F * F;

    /* Print the numerical value at a given point */
    writeln("Numerical value: ", format("%0.8f", F(0.5)));

Parallel refine algorithm:

    def refine(node = root) {
      const child = node.getChild();

      var sc: [0..2*k-1] real;
      sc[0..k-1] = project(child(1));
      sc[k..2*k-1] = project(child(2));

      const dc = sc*hgT;
      const nf = normf(dc[k..2*k-1]);

      if (nf < thresh) {
        sumC[child(1)] = sc[0..k-1];
        sumC[child(2)] = sc[k..2*k-1];
      } else {
        on sumC.node2loc(child(1)) do begin refine(child(1));
        on sumC.node2loc(child(2)) do begin refine(child(2));
      }
    }

    sync on sumC.node2loc(node) do begin refine(node);

Parallel compress algorithm:

    def compress(node = root) {
      const child = node.getChild();

      cobegin {
        on sumC.node2loc(child(1)) do
          if !sumC.hasCoeffs(child(1)) then compress(child(1));
        on sumC.node2loc(child(2)) do
          if !sumC.hasCoeffs(child(2)) then compress(child(2));
      }

      var sc: [0..2*k-1] real;
      sc[0..k-1] = sumC[child(1)];
      sc[k..2*k-1] = sumC[child(2)];

      const dc = sc*hgT;
      sumC[node] = dc[0..k-1];
      diffC[node] = dc[k..2*k-1];

      sumC.remove(child(1));
      sumC.remove(child(2));
    }

    sync on sumC.node2loc(root) do begin compress(root);

sumC and diffC are global-view tree containers.

Figures: Adaptively refined tree; Compress tree algorithm; Reconstruct tree algorithm; Multiplication of differently refined trees. Dashed arrows depict the flow of data between operations on tree nodes. The input data to a node operation is indicated by an arrow pointing into the node, and the output from the operation is shown by an outgoing arrow. The legend also marks coefficient tensors that are removed from the tree after being operated upon and coefficient tensors that are added to the tree during a tree algorithm.

Future Work

- Recursive task parallelism
  - Language constructs to distinguish between tasks that may vs. must run in parallel
  - “May” construct permits runtime management of parallelism
  - “Must” construct for patterns like producer-consumer
  - Application vs. compiler control over the granularity and degree of parallelism
- DAG-based dynamic scheduling of tasks inside Chapel runtime
  - Provide execution guarantees for a class of DAGs
- Compiler support for parallel distribution/iteration/algebraic operations on associative array structures

Research sponsored in part by the Laboratory Directed Research and Development Program and Post Masters Research Participation Program of Oak Ridge National Laboratory (ORNL), managed by UT-Battelle, LLC for the U.S. Department of Energy under Contract No. DE-AC05-00OR22725, and DOE grant #DE-FC02-06ER25755 and NSF grant #0403342.
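To make the data flow of the refine and compress algorithms easier to follow, here is a minimal sequential sketch in Python rather than Chapel. It assumes the simplest possible basis (Haar, i.e. k = 1, so the two-scale filter `hgT` reduces to a 2×2 sum/difference matrix), a midpoint-rule `project`, and plain dictionaries standing in for the `sumC` and `diffC` containers. The names `children`, `max_level`, and the `(level, index)` node encoding are illustrative assumptions, not part of the poster.

```python
import math

SQRT2 = math.sqrt(2.0)

def project(f, level, index):
    """Haar scaling coefficient of f on one interval (midpoint-rule assumption)."""
    width = 2.0 ** -level
    return math.sqrt(width) * f((index + 0.5) * width)

def children(node):
    """Left and right child of a node, encoded as (level, index)."""
    level, index = node
    return (level + 1, 2 * index), (level + 1, 2 * index + 1)

def refine(f, sum_c, node=(0, 0), thresh=1e-3, max_level=20):
    """Project onto the children; keep them as leaves if the difference
    (wavelet) coefficient is below thresh, otherwise recurse."""
    left, right = children(node)
    s0, s1 = project(f, *left), project(f, *right)
    d = (s0 - s1) / SQRT2          # difference half of sc * hgT for k = 1
    if abs(d) < thresh or left[0] >= max_level:
        sum_c[left], sum_c[right] = s0, s1
    else:
        refine(f, sum_c, left, thresh, max_level)
        refine(f, sum_c, right, thresh, max_level)

def compress(sum_c, diff_c, node=(0, 0)):
    """Post-order walk: combine the children's sum coefficients into the
    parent's sum and difference coefficients, then drop the children."""
    left, right = children(node)
    for child in (left, right):
        if child not in sum_c:     # interior child: compress it first
            compress(sum_c, diff_c, child)
    s0, s1 = sum_c.pop(left), sum_c.pop(right)
    sum_c[node] = (s0 + s1) / SQRT2
    diff_c[node] = (s0 - s1) / SQRT2

def demo():
    """Refine a narrow Gaussian, then compress the resulting tree."""
    sum_c, diff_c = {}, {}
    refine(lambda x: math.exp(-200.0 * (x - 0.3) ** 2), sum_c)
    leaves = len(sum_c)
    compress(sum_c, diff_c)
    return leaves, len(diff_c)
```

In this sketch the recursive calls run one after another; in the poster's Chapel version the same calls are spawned with `begin` or `cobegin` on the locale that owns each child, which is where the nested parallelism comes from. Because each sum/difference step is orthogonal, the squared norm of the leaf coefficients is preserved by `compress`.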
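The global-view container idea (a directory of sub-trees plus a node-to-locale mapping) can also be sketched in a few lines of Python. This is a single-process stand-in, assuming that "locales" are just list slots and that the mapping is a simple hash; the formula in `node2loc` and the method names `has_coeffs` and `remove` are hypothetical choices for illustration, and a real implementation might use a partitioning strategy with better locality.

```python
class SubTree:
    """Local part of the tree: an associative array from node to coefficients."""
    def __init__(self):
        self.coeff = {}

class FTree:
    """Global-view container: callers index by node and never see the sub-trees."""
    def __init__(self, num_locales):
        self.tree = [SubTree() for _ in range(num_locales)]

    def node2loc(self, node):
        """Map a (level, index) node to its owning locale (assumed simple hash)."""
        level, index = node
        return (31 * level + index) % len(self.tree)

    def __setitem__(self, node, coeffs):
        self.tree[self.node2loc(node)].coeff[node] = coeffs

    def __getitem__(self, node):
        return self.tree[self.node2loc(node)].coeff[node]

    def has_coeffs(self, node):
        return node in self.tree[self.node2loc(node)].coeff

    def remove(self, node):
        del self.tree[self.node2loc(node)].coeff[node]
```

A tree algorithm written against this interface only ever says `t[node]`; swapping the hash for a locality-preserving mapping would change `node2loc` alone.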