Multiprocessor Architectures David Gregg Department of Computer Science

- Slides: 32

Multiprocessor Architectures. David Gregg, Department of Computer Science, University of Dublin, Trinity College

Multiprocessors

- Machines with multiple processors are what we usually have in mind when we talk about parallel computing
- Multiple processors cooperate and communicate to solve problems fast
- A multiprocessor architecture consists of
  - Individual processor computer architecture
  - Communication architecture

All kinds of everything

- Two kinds of physical memory organization:
- Physically centralized memory
  - Allows only a few dozen processor chips
- Physically distributed memory
  - Larger number of chips and cores
  - Simpler hardware
  - More memory bandwidth
  - More variable memory latency
    - Latency depends heavily on distance to memory

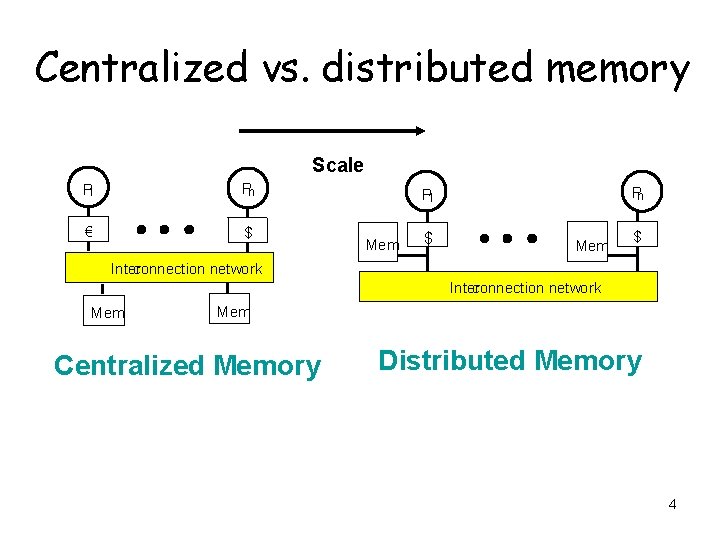

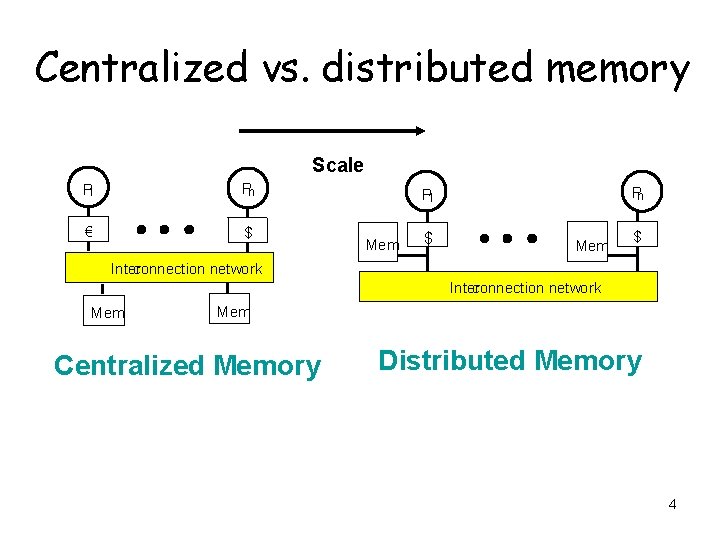

Centralized vs. distributed memory

[Figure: centralized memory vs. distributed memory. Labels: processors P1 … Pn, caches ($), Mem, interconnection network, scale.]

More kinds of everything

- Two logical views of memory
  - Logically shared memory
  - Logically distributed memory
- Logical view does not have to follow physical implementation
  - Logically shared memory can be implemented on top of a physically distributed memory
    - Often with hardware support
  - Logically distributed memory can be built on top of a physically centralized memory

Logical view of memory

- Logically shared memory programming model
  - Communication between processors uses shared variables and locks
  - E.g. OpenMP, pthreads, Java threads
- Logically distributed memory
  - Communication between processors uses message passing
  - E.g. MPI, Occam
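
The two programming models can be contrasted with a minimal Python sketch. It is an illustration only, not code from the slides: the function names `shared_memory_sum` and `message_passing_sum` are hypothetical, and Python threads with a `queue.Queue` stand in for real message passing such as MPI. The second function still runs on physically shared memory, which is an instance of a logically distributed view built on top of a centralized memory.

```python
import queue
import threading


def shared_memory_sum(values, n_threads=4):
    """Shared-memory style: workers update one shared variable under a lock."""
    total = 0
    lock = threading.Lock()

    def worker(chunk):
        nonlocal total
        partial = sum(chunk)      # private computation, no communication
        with lock:                # the lock protects the shared variable
            total += partial

    threads = [
        threading.Thread(target=worker, args=(values[i::n_threads],))
        for i in range(n_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def message_passing_sum(values, n_workers=4):
    """Message-passing style: workers send results instead of sharing variables."""
    mailbox = queue.Queue()

    def worker(chunk):
        mailbox.put(sum(chunk))   # explicit send of one message

    threads = [
        threading.Thread(target=worker, args=(values[i::n_workers],))
        for i in range(n_workers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    # Explicit receives: one message per worker.
    return sum(mailbox.get() for _ in range(n_workers))
```

Both functions compute the same result; the difference is only in how the workers communicate.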

UMA versus NUMA

- Shared memory may be physically centralized or physically distributed
- Physically centralized
  - All processors have equal access to memory
  - Symmetric multiprocessor model
  - Uniform memory access (UMA) costs
  - Scales to a few dozen processors
  - Cost increases rapidly as number of processors increases

UMA versus NUMA (contd.)

- Physically distributed shared memory
  - Each processor has its own local memory
  - Cost of accessing local memory is low
  - But address space is shared, so each processor can access any memory in system
  - Accessing memories of other processors has higher cost than local memory
  - Some memories may be very distant
  - Non-uniform memory access (NUMA) costs
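
The effect of non-uniform access costs can be estimated with a simple weighted average. The sketch below is an assumption-laden model, not a measurement: the function name `average_access_time` is hypothetical, and the latencies in the usage note are made-up illustrative values.

```python
def average_access_time(local_fraction, t_local_ns, t_remote_ns):
    """Average memory access time when a fraction of accesses hit local memory.

    local_fraction: share of accesses served by the processor's own memory (0..1).
    t_local_ns, t_remote_ns: assumed latencies for local and remote memory.
    """
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * t_local_ns + (1.0 - local_fraction) * t_remote_ns
```

With an assumed 100 ns local latency and 300 ns remote latency, a program that keeps 90% of its accesses local averages about 120 ns per access, while one that keeps only 50% local averages about 200 ns. On a UMA machine the two latencies are equal, so data placement does not change the average.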

Summary

- Four main categories of multiprocessor
  - Physically centralized, logically shared memory
    - UMA, symmetric multiprocessor
  - Physically distributed, logically shared memory
    - NUMA
  - Physically distributed, logically distributed memory
    - Message passing parallel machines
    - Most supercomputers today
  - Physically centralized, logically distributed memory
    - No specific machines designed to do this
    - Significant cost of building a centralized memory
    - But programming in languages with a message passing model is not unknown on SMP machines

Symmetric Multiprocessors (SMP)

- Common type of shared memory machine
  - Physically centralized memory
  - Logically shared address space
- Local caches are used to reduce bus traffic to the central memory
- Programming is relatively simple
  - Parallel threads sharing memory
  - Memory access costs are uniform
  - Need to avoid cache misses, as in sequential programming

SMP Multi-core

- Symmetric multi-processing is the most common model for multi-core processors
- Multi-core is a relatively new term
  - The earlier name was chip multiprocessor (CMP)
- Multiple cores on a single chip, sharing a single external memory
  - With caches to reduce memory traffic

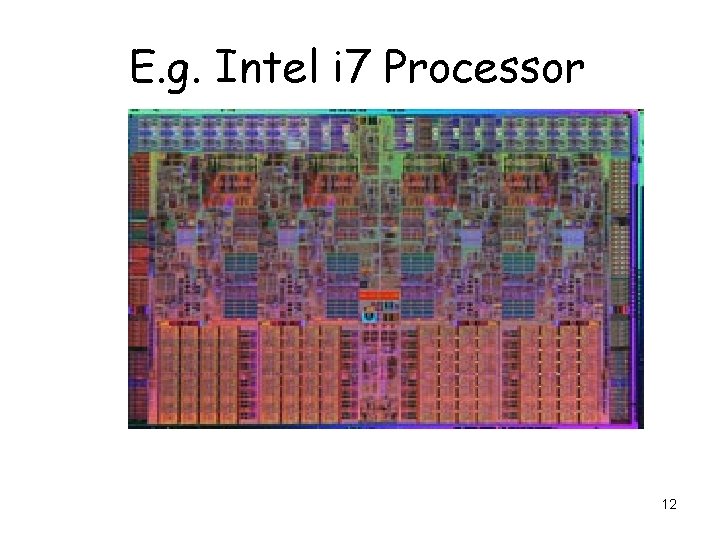

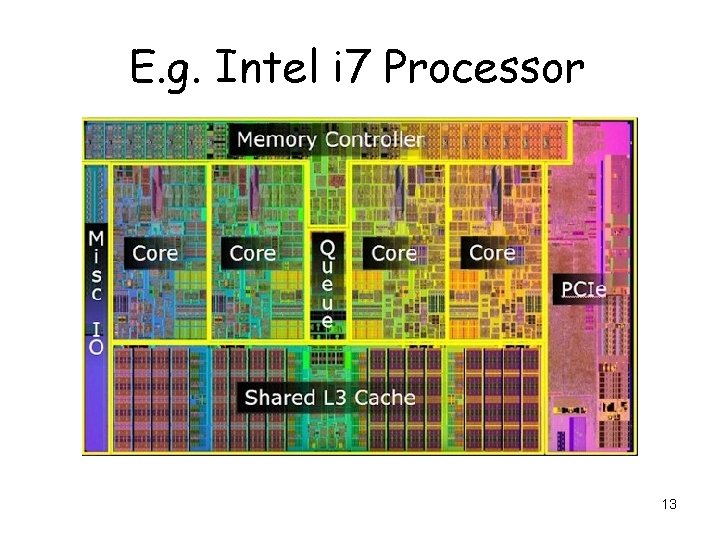

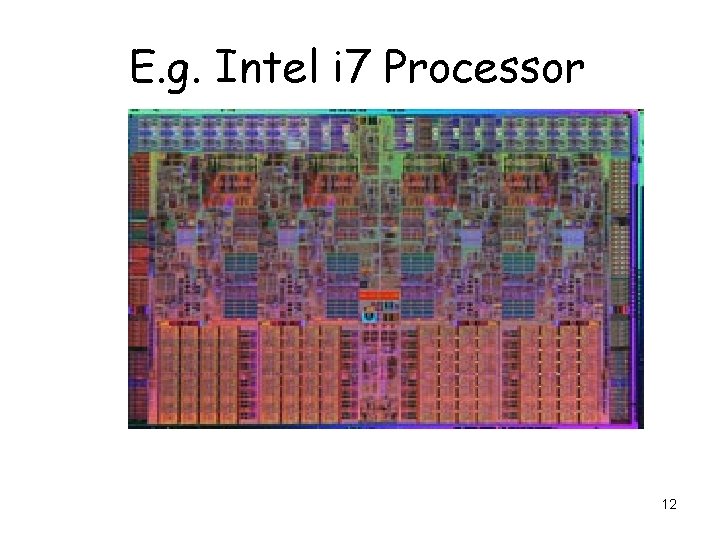

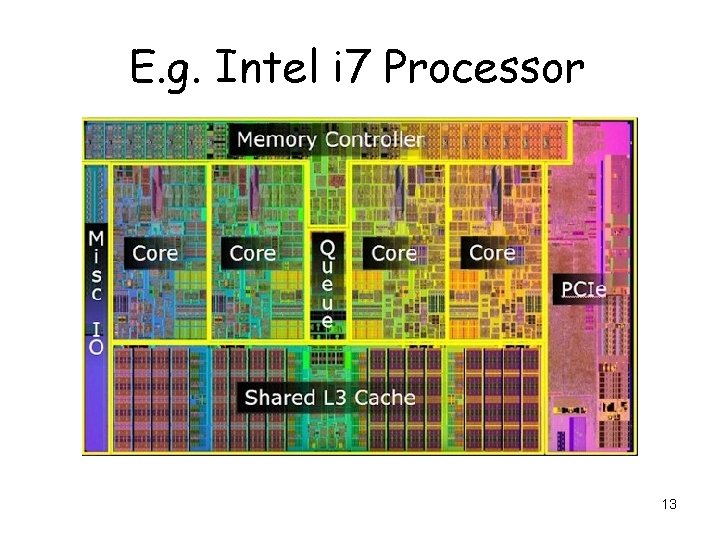

E.g. Intel i7 Processor

E.g. Intel i7 Processor

- Family of processors; we consider one example
- Four cores per chip
  - Each core has its own L1 and L2 cache
    - 4 × 256 KB
  - Single shared L3 cache
    - 8 MB
- External bus from L3 cache to external memory

Other SMP Multi-cores

- Another common pattern is to reuse configurations from chips with fewer cores
- E.g. dual-core processors
  - Popular to take a dual-core processor and replicate it on a chip
  - E.g. four cores
  - Each has its own L1 cache
  - Each pair has a shared L2 cache
  - L2 caches connected to memory

SMP Multi-cores

- SMP multi-cores scale pretty well, but they may not scale forever
- Immediate issue: cache coherency
  - Cache coherency is much simpler on a single chip than between chips
  - But it is still complex and doesn't scale well
  - In the medium to long term we may see more multi-core processors that do not share all their memory
    - E.g. Cell B/E or Movidius Myriad; but how do we program these?
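
To make the coherency problem concrete, here is a toy write-invalidate model in Python. It is a deliberately simplified sketch: the slides do not name a protocol, so the write-through, invalidate-on-write scheme and the class name `ToyCoherentSystem` are assumptions made for illustration. Real processors use more elaborate protocols.

```python
class ToyCoherentSystem:
    """Toy model: private caches kept coherent by invalidating copies on writes."""

    def __init__(self, n_caches):
        self.memory = {}                              # address -> value
        self.caches = [dict() for _ in range(n_caches)]
        self.invalidations = 0                        # stale copies discarded so far

    def read(self, cpu, addr):
        cache = self.caches[cpu]
        if addr not in cache:                         # miss: fetch from memory
            cache[addr] = self.memory.get(addr, 0)
        return cache[addr]

    def write(self, cpu, addr, value):
        # Every other cache holding this address must drop its stale copy.
        for other, cache in enumerate(self.caches):
            if other != cpu and addr in cache:
                del cache[addr]
                self.invalidations += 1
        self.caches[cpu][addr] = value
        self.memory[addr] = value                     # write-through for simplicity
```

If four cores have all read the same address and one of them then writes it, the other three copies are invalidated and must be fetched again on their next read. The work per write grows with the number of caches sharing the data, which hints at why coherency does not scale well.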

SMP Multi-cores

- Immediate issue: memory bandwidth
  - As the number of cores rises, the amount of memory bandwidth needed will also rise
    - Moore's law means that the number of cores can double every 18 to 24 months (at least in the medium term)
    - But the number of pins is limited
  - Experimental architectures put pins through chip to stacked memory
  - Latency can be traded for bandwidth
  - Bigger on-chip caches
    - Caches may be much larger and much slower in future
    - Possible move to placing processor in memory?
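
The bandwidth squeeze can be shown with a little arithmetic. The sketch below assumes, purely for illustration, that off-chip bandwidth stays fixed because the pin count is limited while the core count doubles each generation. The function name `bandwidth_per_core` and the 32 GB/s figure are hypothetical.

```python
def bandwidth_per_core(total_bandwidth_gbs, initial_cores, generations):
    """Per-core share of a fixed off-chip bandwidth as cores double each generation."""
    return [
        total_bandwidth_gbs / (initial_cores * 2 ** g)
        for g in range(generations + 1)
    ]


# Assumed figures: 32 GB/s of pin-limited bandwidth, starting from 4 cores.
shares = bandwidth_per_core(32.0, 4, 3)   # [8.0, 4.0, 2.0, 1.0]
```

Under these assumptions each core's share halves with every doubling of the core count, which is why larger caches and stacked memory are attractive.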

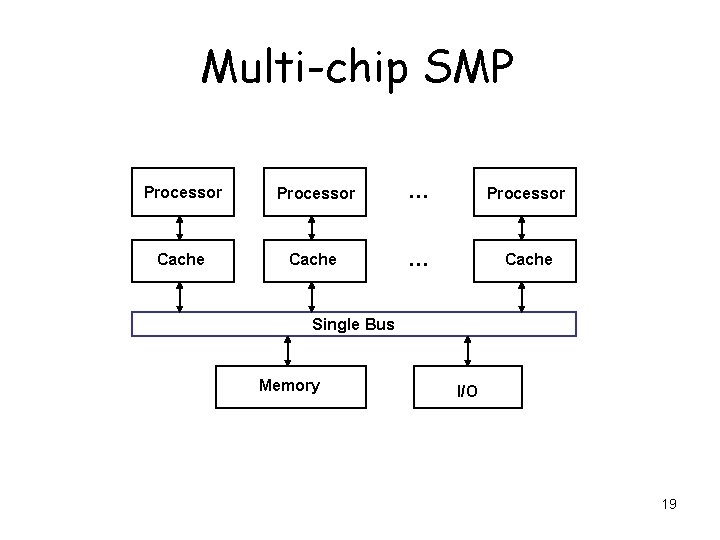

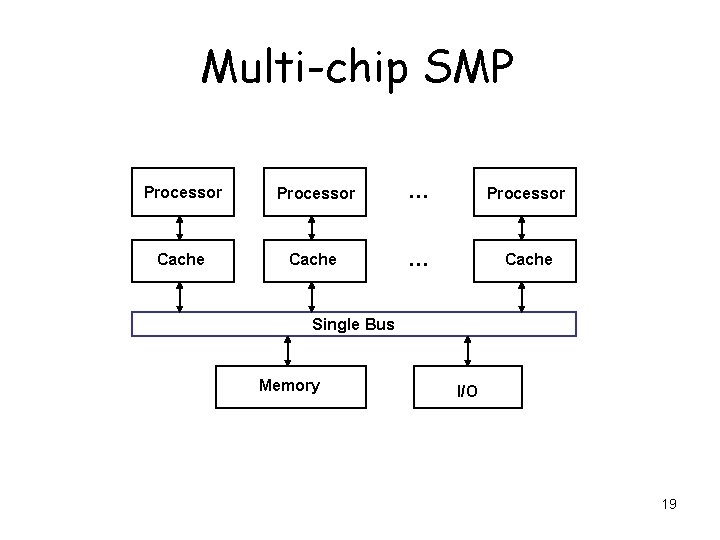

Multi-chip SMP

- Traditional type of SMP machine
- More difficult to build than multi-core
  - Running wires within a chip is cheap
  - Running wires between chips is expensive
- Caches are essential to lower bus traffic
- Must provide hardware to ensure that caches and memory are consistent (cache coherency)
  - Expensive across multiple chips
- Must provide a hardware mechanism to support process synchronization

Multi-chip SMP

[Figure: processors, each with its own cache, connected by a single bus to memory and I/O.]

Multi-chip SMP

- Whether the SMP is single or multiple chip, the programming model is the same
- But performance trade-offs may be different
  - Communication may be much more expensive in multi-chip SMP

Multi-chip SMP

- Multiple processors sharing the same memory tends to cause bottlenecks
  - Memory access time (i.e. latency) becomes slow for all processors
  - Shared memory bandwidth becomes a bottleneck
- Computers are physical things, and a single physical shared memory is a problem

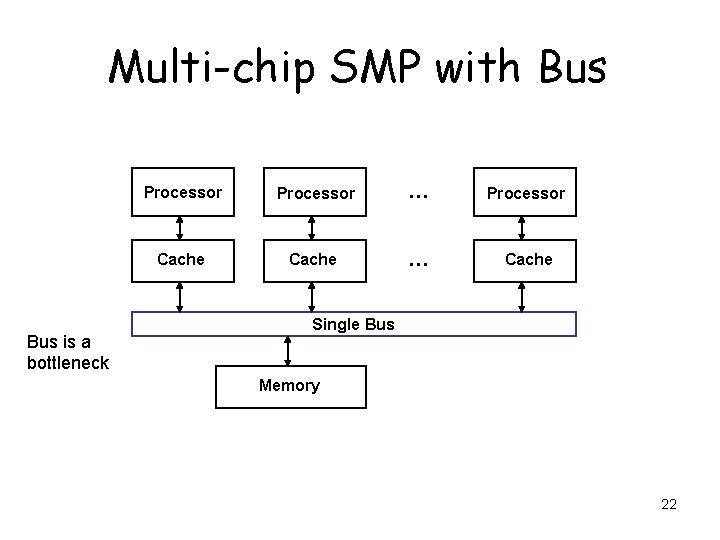

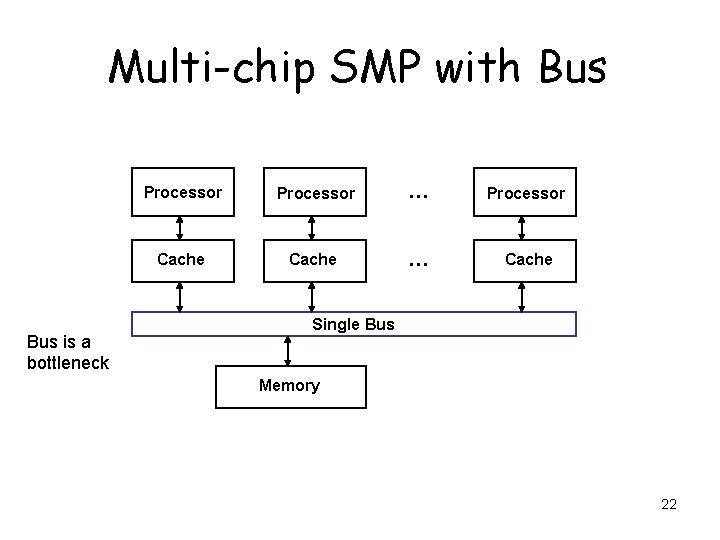

Multi-chip SMP with Bus

[Figure: processors, each with its own cache, connected by a single bus to memory. Annotation: the bus is a bottleneck.]

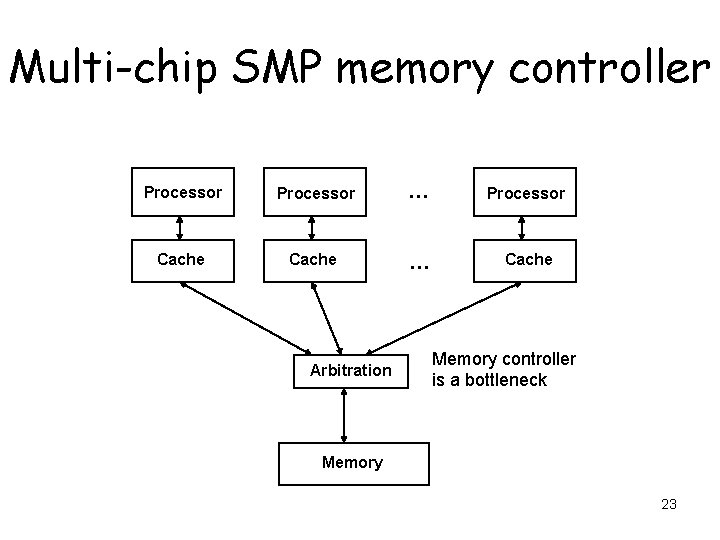

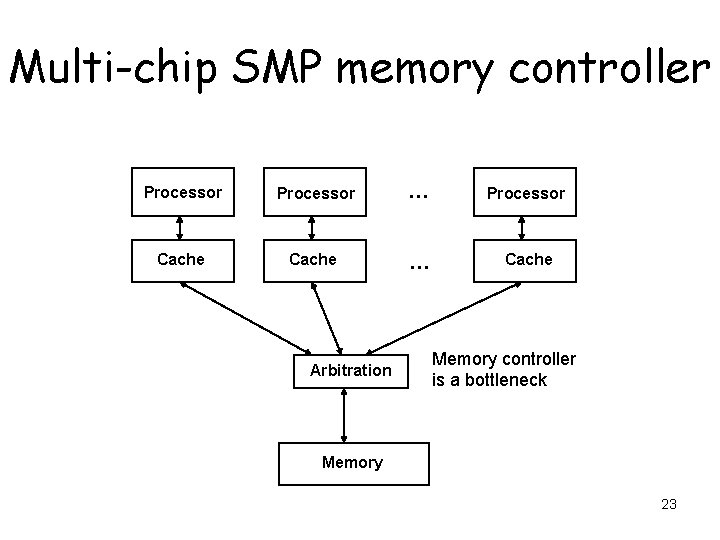

Multi-chip SMP memory controller

[Figure: processors, each with its own cache, connected through arbitration to memory. Annotation: the memory controller is a bottleneck.]

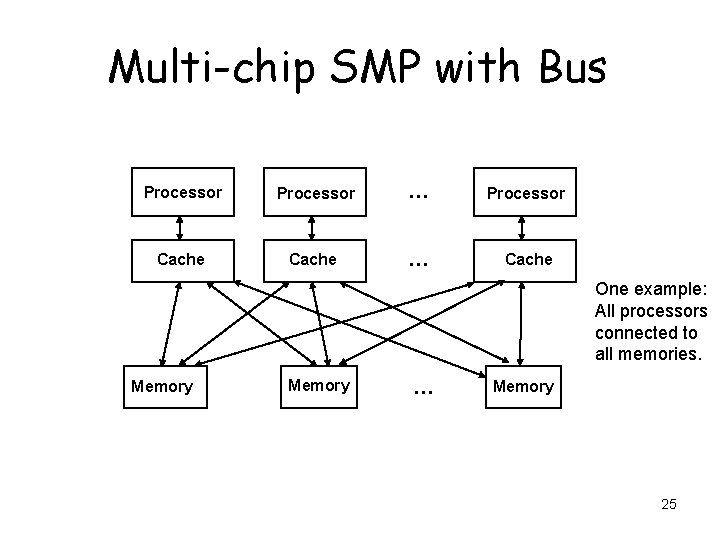

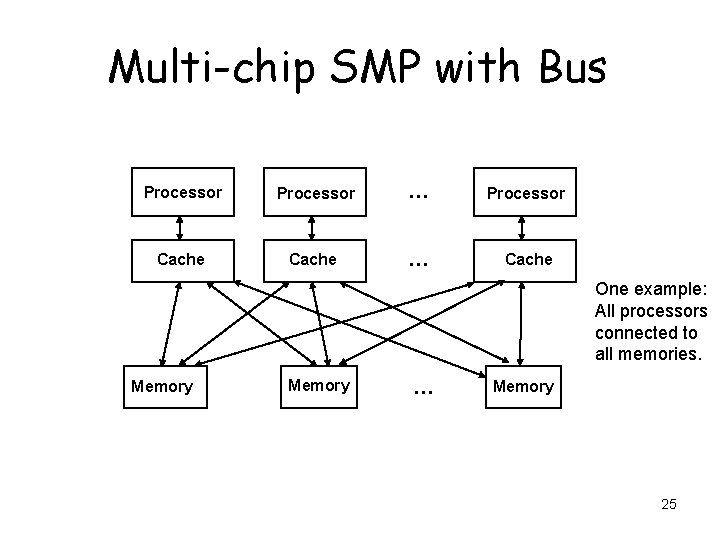

Multi-chip SMP

- An alternative to having a single physical memory shared between all processors is a distributed memory
  - Many arrangements are possible
- Multiple processors and multiple memories
  - All-to-all connections
  - Crossbar
  - Multiple buses
- Similar to distributed memory machines
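
A rough way to compare these arrangements is to count how many memory transfers can proceed at once and how much switching hardware is needed. The Python sketch below is a simplified model with hypothetical function names; it assumes each processor issues at most one request at a time and each memory module serves at most one request at a time.

```python
def max_parallel_transfers(n_processors, n_memories, n_buses=None):
    """Upper bound on simultaneous processor-to-memory transfers.

    n_buses=None models a crossbar; otherwise n_buses shared buses are assumed.
    """
    # Assumption: one outstanding request per processor, one per memory module.
    limit = min(n_processors, n_memories)
    if n_buses is None:
        return limit
    return min(limit, n_buses)


def crossbar_crosspoints(n_processors, n_memories):
    """Number of switch points in a full processor-to-memory crossbar."""
    return n_processors * n_memories
```

For 8 processors and 8 memories, a single bus allows one transfer at a time and two buses allow two, while a crossbar allows up to eight at the cost of 64 crosspoints. This trade between parallelism and hardware cost is the reason many arrangements exist.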

Multi-chip SMP with Bus

[Figure: processors, each with its own cache, and multiple memories. One example: all processors connected to all memories.]

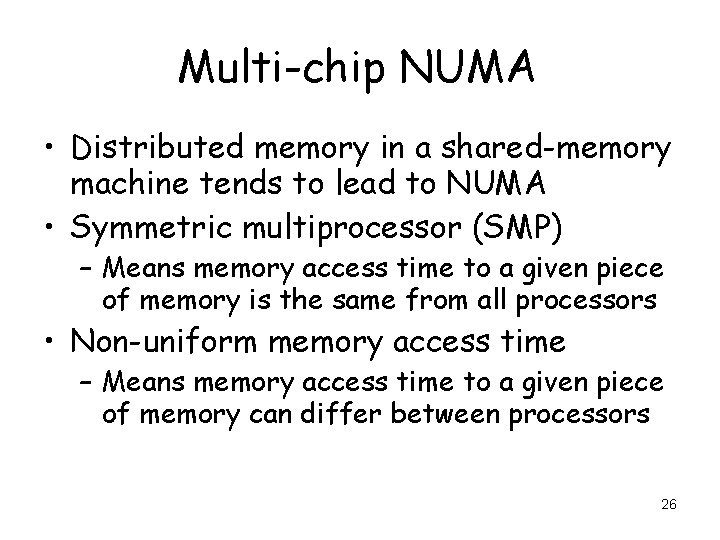

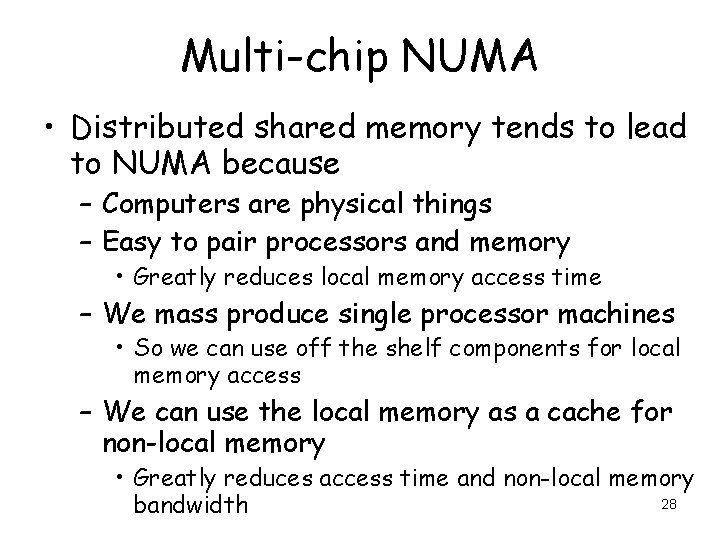

Multi-chip NUMA

- Distributed memory in a shared-memory machine tends to lead to NUMA
- Symmetric multiprocessor (SMP)
  - Means memory access time to a given piece of memory is the same from all processors
- Non-uniform memory access time
  - Means memory access time to a given piece of memory can differ between processors

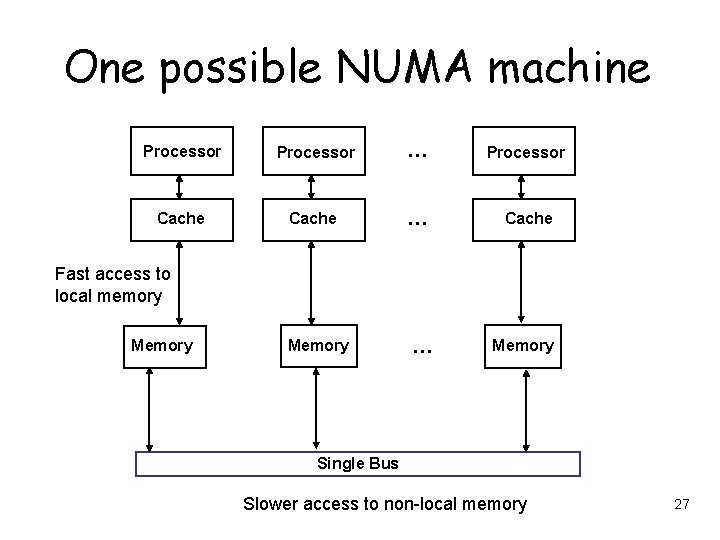

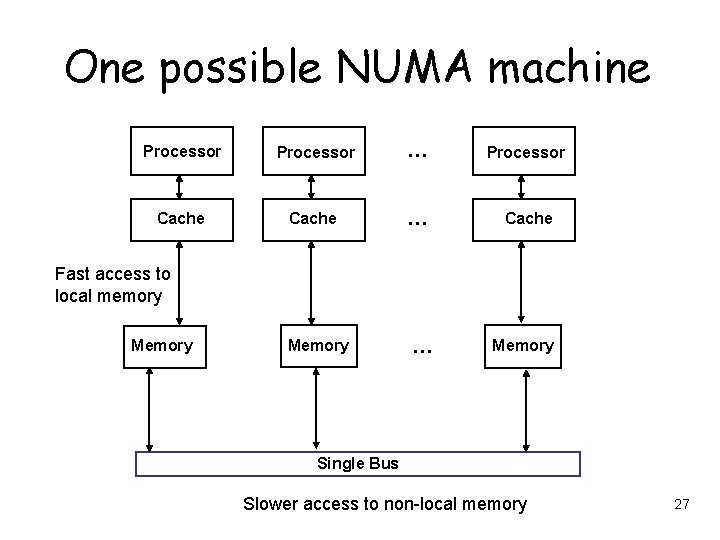

One possible NUMA machine

[Figure: processors, each with its own cache and local memory, joined by a single bus. Annotations: fast access to local memory; slower access to non-local memory.]

Multi-chip NUMA

- Distributed shared memory tends to lead to NUMA because
  - Computers are physical things
  - Easy to pair processors and memory
    - Greatly reduces local memory access time
  - We mass produce single processor machines
    - So we can use off-the-shelf components for local memory access
  - We can use the local memory as a cache for non-local memory
    - Greatly reduces access time and non-local memory bandwidth

Shared memory and 3D stacking

- Most current multicore processors have a single shared SMP memory
  - (There are exceptions such as the Cell processor, which has distributed on-chip memory)
  - The distance between different cores on the processor is much smaller than the distance between any core and memory
  - It mostly does not make sense to have non-uniform memory access times within a single chip

Shared memory and 3D stacking

- But this may all change with 3D stacking
- 3D stacking
  - Place the memory chip directly on top of the processor
  - A “through-silicon via” (TSV) is a tiny connector on the face of each chip
  - Hundreds of TSVs connecting processor and memory
  - Processor and memory communicate along TSVs

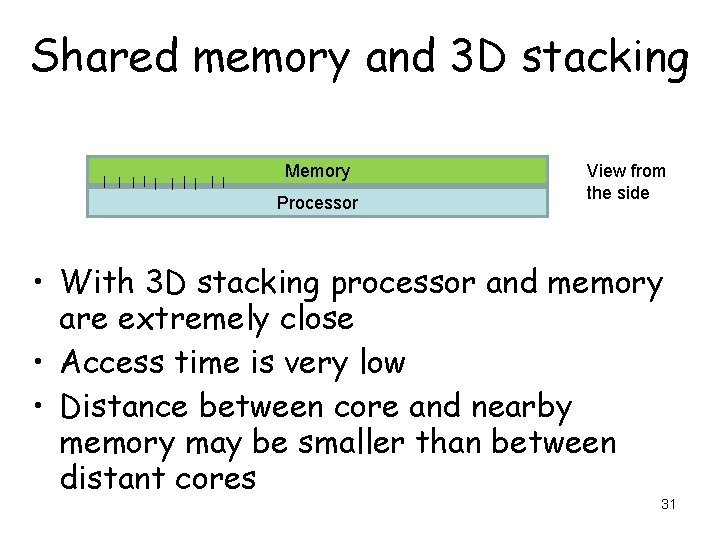

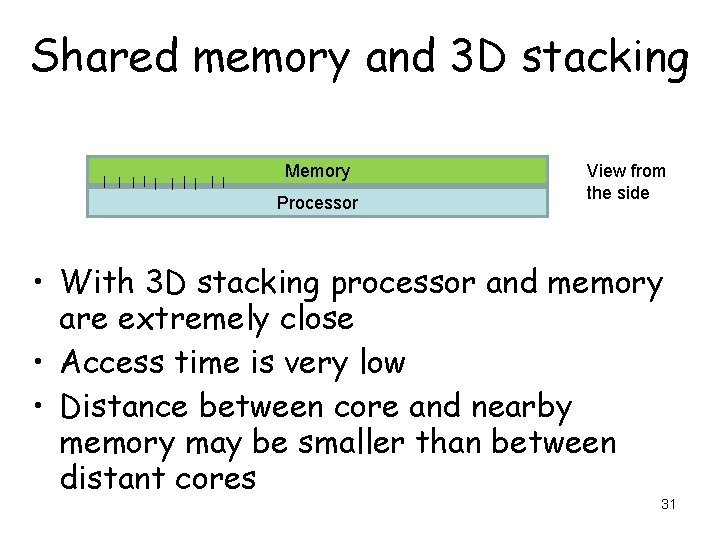

Shared memory and 3D stacking

[Figure: memory stacked on top of the processor, viewed from the side.]

- With 3D stacking, processor and memory are extremely close
- Access time is very low
- Distance between a core and nearby memory may be smaller than between distant cores

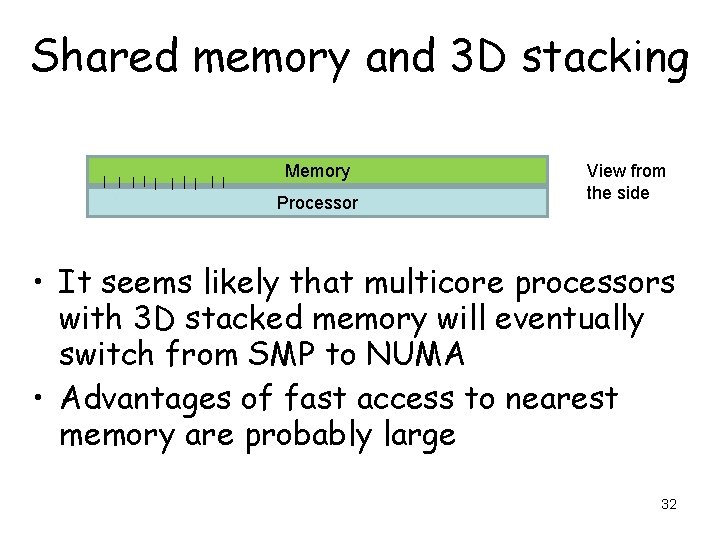

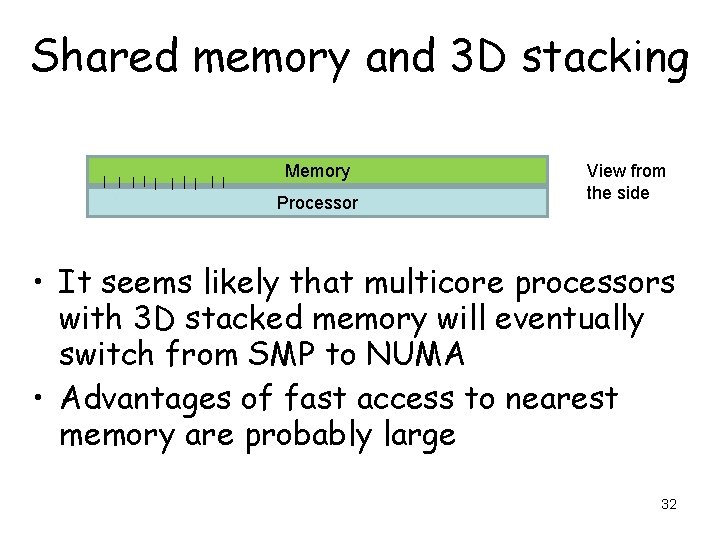

Shared memory and 3D stacking

[Figure: memory stacked on top of the processor, viewed from the side.]

- It seems likely that multicore processors with 3D stacked memory will eventually switch from SMP to NUMA
- Advantages of fast access to nearest memory are probably large