Multiplicative updates for L1-regularized regression

- Slides: 25

Multiplicative updates for L1-regularized regression
Prof. Lawrence Saul
Dept of Computer Science & Engineering, UC San Diego
(Joint work with Fei Sha & Albert Park)

Trends in data analysis
• Larger data sets
  – In the 1990s: thousands of examples
  – In 2000+: millions or billions
• Increased dimensionality
  – High resolution, multispectral images
  – Large vocabulary text processing
  – Gene expression data

How do we scale?
• Faster computers:
  – Moore’s law is not enough.
  – Data acquisition is too fast.
• Massive parallelism:
  – Effective, but expensive.
  – Not always easy to program.
• Brain over brawn:
  – New, better algorithms.
  – Intelligent data analysis.

Searching for sparse models
• Less is more: the number of nonzero parameters should not scale with the size or dimensionality of the data.
• Models with sparse solutions:
  – Support vector machines
  – Nonnegative matrix factorization
  – L1-norm regularized regression

An unexpected connection
• Different problems
  – large margin classification
  – high dimensional data analysis
  – linear and logistic regression
• Similar learning algorithms
  – Multiplicative vs additive updates
  – Guarantees of monotonic convergence

This talk
I. Multiplicative updates
  – Unusual form
  – Attractive properties
II. Sparse regression
  – L1 norm regularization
  – Relation to quadratic programming
III. Experimental results
  – Sparse solutions
  – Convex duality
  – Large-scale problems

Part I. Multiplicative updates
Be fruitful and multiply.

Nonnegative quadratic programming (NQP)
• Optimization: minimize F(v) = (1/2) v^T A v + b^T v subject to v ≥ 0
• Solutions
  – Cannot be found analytically.
  – Tend to be sparse.

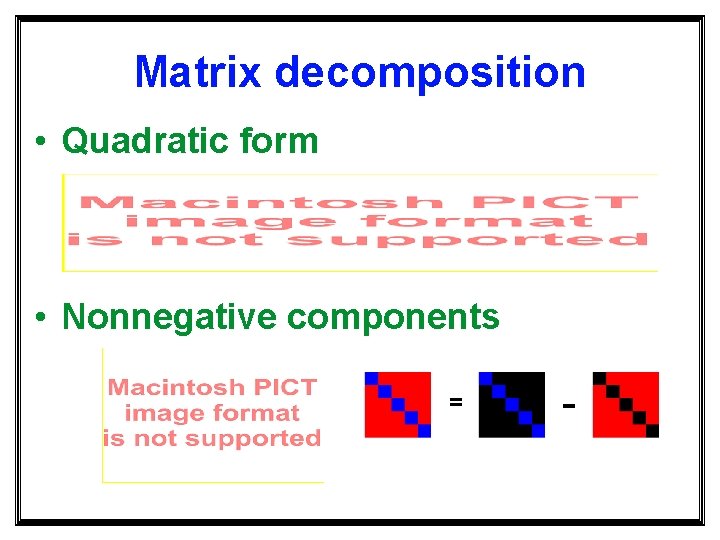

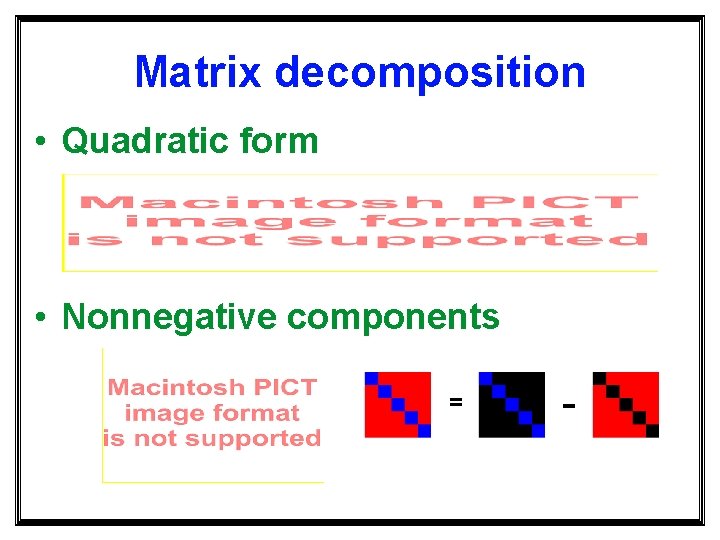

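Matrix decomposition
• Quadratic form: v^T A v, with A symmetric
• Nonnegative components: A = A⁺ − A⁻, where A⁺ keeps the positive elements of A and A⁻ keeps the magnitudes of the negative elements, so both matrices are elementwise nonnegative.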

Multiplicative update
• Matrix-vector products of the nonnegative components of A with v: by construction, these vectors are nonnegative.
• Iterative update
  – multiplicative
  – elementwise
  – no learning rate
  – enforces nonnegativity
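The update equation itself did not survive on this slide. The sketch below assumes the standard multiplicative update for NQP, v_i ← v_i · (−b_i + sqrt(b_i² + 4 a_i c_i)) / (2 a_i), with a = A⁺v and c = A⁻v. The function names and the small 2×2 test problem are illustrative choices, not taken from the talk.

```python
import math

def split_matrix(A):
    """Return (A_plus, A_minus), both elementwise nonnegative, with A = A_plus - A_minus."""
    A_plus = [[max(x, 0.0) for x in row] for row in A]
    A_minus = [[max(-x, 0.0) for x in row] for row in A]
    return A_plus, A_minus

def matvec(M, v):
    """Plain matrix-vector product for lists of lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def objective(A, b, v):
    """F(v) = 0.5 * v'Av + b'v."""
    Av = matvec(A, v)
    return 0.5 * sum(x * y for x, y in zip(v, Av)) + sum(x * y for x, y in zip(b, v))

def multiplicative_step(A_plus, A_minus, b, v):
    """One elementwise multiplicative update (assumed form, see lead-in)."""
    a = matvec(A_plus, v)   # nonnegative because A_plus >= 0 and v >= 0
    c = matvec(A_minus, v)  # nonnegative because A_minus >= 0 and v >= 0
    new_v = []
    for vi, ai, bi, ci in zip(v, a, b, c):
        if ai > 0.0:
            factor = (-bi + math.sqrt(bi * bi + 4.0 * ai * ci)) / (2.0 * ai)
            new_v.append(vi * factor)
        else:
            # With a positive diagonal in A, ai == 0 implies vi == 0 already.
            new_v.append(vi)
    return new_v

def solve_nqp(A, b, v0, iters=200):
    """Iterate the update from a positive start; also record the objective values."""
    A_plus, A_minus = split_matrix(A)
    v = list(v0)
    history = [objective(A, b, v)]
    for _ in range(iters):
        v = multiplicative_step(A_plus, A_minus, b, v)
        history.append(objective(A, b, v))
    return v, history

# Illustrative problem: the unconstrained minimum (1/3, -1/3) is infeasible,
# so the constrained minimum sits on the boundary at (0.5, 0).
A = [[2.0, -1.0], [-1.0, 2.0]]
b = [-1.0, 1.0]
v, history = solve_nqp(A, b, [1.0, 1.0])
print(v)
```

Note that the loop has no step size to tune and never projects back onto the feasible set: a nonnegative start stays nonnegative because every factor is nonnegative.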

Fixed points
• v_i = 0: when the multiplicative factor is less than unity, the element decays quickly to zero.
• v_i > 0: when the multiplicative factor equals unity, the partial derivative vanishes: (Av + b)_i = 0.
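The second fixed-point condition can be checked with a short derivation. It assumes the multiplicative factor has the form (−b_i + sqrt(b_i² + 4 a_i c_i)) / (2 a_i), with a = A⁺v and c = A⁻v so that a − c = Av; this notation is an assumption, since the slide's equations were lost. For a_i > 0:

```latex
\begin{align*}
\frac{-b_i + \sqrt{b_i^2 + 4 a_i c_i}}{2 a_i} = 1
  &\iff \sqrt{b_i^2 + 4 a_i c_i} = 2 a_i + b_i \\
  &\iff b_i^2 + 4 a_i c_i = 4 a_i^2 + 4 a_i b_i + b_i^2
        \quad\text{and}\quad 2 a_i + b_i \ge 0 \\
  &\iff c_i = a_i + b_i \\
  &\iff a_i - c_i + b_i = (A v + b)_i = 0 .
\end{align*}
```

The side condition is automatic in the last two lines, because c_i = a_i + b_i gives 2 a_i + b_i = a_i + c_i ≥ 0. The same algebra with inequalities shows that the factor is below one exactly when (Av + b)_i > 0, so an element shrinks whenever increasing it would raise the objective.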

Attractive properties for NQP
• Theoretical guarantees
  – Objective decreases at each iteration.
  – Updates converge to global minimum.
• Practical advantages
  – No learning rate.
  – No constraint checking.
  – Easy to implement (and vectorize).

Part II. Sparse regression
Feature selection via L1 norm regularization…

Linear regression
• Training examples
  – vector inputs
  – scalar outputs
• Model fitting
  – tractable: least squares
  – ill-posed: if the dimensionality d exceeds the number of examples n

Regularization
• L2 norm
• L1 norm
What is the difference?

L2 versus L1
• L2 norm
  – Differentiable
  – Analytically tractable
  – Favors small (but nonzero) weights.
• L1 norm
  – Non-differentiable, but convex
  – Requires iterative solution.
  – Estimated weights are sparse!
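A one-dimensional problem makes the contrast concrete. The sketch below assumes the objectives 0.5·(w − z)² + 0.5·γ·w² for the L2 penalty and 0.5·(w − z)² + γ·|w| for the L1 penalty, where z is the unregularized estimate. These scalings and the function names are illustrative assumptions, not taken from the slides.

```python
def ridge_1d(z, gamma):
    """Minimizer of 0.5*(w - z)**2 + 0.5*gamma*w**2: shrinks z but never to exactly zero."""
    return z / (1.0 + gamma)

def lasso_1d(z, gamma):
    """Minimizer of 0.5*(w - z)**2 + gamma*abs(w): soft thresholding."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0  # any |z| <= gamma is set exactly to zero

gamma = 0.5
for z in (2.0, 0.3, -0.1):
    print(z, ridge_1d(z, gamma), lasso_1d(z, gamma))
```

Under the L2 penalty every weight is scaled by the same factor and stays nonzero, while under the L1 penalty any weight whose unregularized estimate is smaller than γ in magnitude is set exactly to zero.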

Reformulation as NQP
• L1-regularized regression
• Change of variables
  – Separate out +/- elements of w.
  – Introduce nonnegativity constraints.

L1 norm as NQP
Under the change of variables, the L1-regularized problem and the NQP are equivalent!
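The change of variables can be sketched as follows. Assume the objective 0.5·‖y − Xw‖² + γ·‖w‖₁ (the scaling is an assumption) and write w = u − v with u ≥ 0 and v ≥ 0. With C = XᵀX and r = Xᵀy, stacking z = [u; v] gives an NQP with A = [[C, −C], [−C, C]] and b = [γ − r; γ + r]. The helper names and the toy data are hypothetical, and the solver assumes the standard NQP multiplicative update.

```python
import math

def matvec(M, v):
    """Plain matrix-vector product for lists of lists."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def l1_regression_as_nqp(X, y, gamma):
    """Build (A, b) so that 0.5*z'Az + b'z, z = [u; v] >= 0, matches the L1 objective up to a constant."""
    n, d = len(X), len(X[0])
    C = [[sum(X[k][i] * X[k][j] for k in range(n)) for j in range(d)] for i in range(d)]  # X'X
    r = [sum(X[k][i] * y[k] for k in range(n)) for i in range(d)]                         # X'y
    top = [C[i] + [-c for c in C[i]] for i in range(d)]      # [ C, -C]
    bottom = [[-c for c in C[i]] + C[i] for i in range(d)]   # [-C,  C]
    b = [gamma - ri for ri in r] + [gamma + ri for ri in r]
    return top + bottom, b

def solve_nqp(A, b, z0, iters=500):
    """Multiplicative updates for min 0.5*z'Az + b'z subject to z >= 0 (assumed update form)."""
    A_plus = [[max(x, 0.0) for x in row] for row in A]
    A_minus = [[max(-x, 0.0) for x in row] for row in A]
    z = list(z0)
    for _ in range(iters):
        a = matvec(A_plus, z)
        c = matvec(A_minus, z)
        z = [zi * (-bi + math.sqrt(bi * bi + 4.0 * ai * ci)) / (2.0 * ai) if ai > 0.0 else zi
             for zi, ai, bi, ci in zip(z, a, b, c)]
    return z

# Toy data with orthonormal inputs, so the answer is known in closed form:
# soft thresholding of y at gamma gives w = (1.5, 0).
X = [[1.0, 0.0], [0.0, 1.0]]
y = [2.0, 0.3]
gamma = 0.5

A, b = l1_regression_as_nqp(X, y, gamma)
z = solve_nqp(A, b, [1.0] * len(b))
d = len(X[0])
w = [z[i] - z[i + d] for i in range(d)]  # recover w = u - v
print(w)
```

At the minimum, u_i and v_i are never both positive for γ > 0, so the sum of u_i + v_i equals ‖w‖₁ and the smooth constrained problem reproduces the non-differentiable one.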

Why reformulate?
• Differentiability: simpler to optimize a smooth function, even with constraints.
• Multiplicative updates
  – Well-suited to NQP.
  – Monotonic convergence.
  – No learning rate.
  – Enforce nonnegativity.

Logistic regression
• Training examples
  – vector inputs
  – binary (0/1) outputs
• L1-regularized model-fitting: solve the optimization via multiple L1-regularized linear regressions.
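The slide does not say how the sequence of linear regressions is constructed. One standard construction, shown here only as an assumption about what such a scheme can look like, is iteratively reweighted least squares: each pass replaces the logistic loss at the current estimate with a weighted least-squares problem and keeps the L1 penalty. The symbols γ, p_k, s_k, and t_k are introduced for illustration.

```latex
\begin{align*}
\text{Objective:}\quad
  L(w) &= -\sum_{k=1}^{n} \Big[ y_k \log \sigma(w^\top x_k)
          + (1 - y_k) \log\big(1 - \sigma(w^\top x_k)\big) \Big]
          + \gamma \lVert w \rVert_1,
  \qquad \sigma(a) = \frac{1}{1 + e^{-a}} \\[4pt]
\text{At the current } w^{(t)}:\quad
  p_k &= \sigma\big(w^{(t)\top} x_k\big), \qquad
  s_k = p_k (1 - p_k), \qquad
  t_k = w^{(t)\top} x_k + \frac{y_k - p_k}{s_k} \\[4pt]
\text{Next estimate:}\quad
  w^{(t+1)} &= \arg\min_{w}\; \frac{1}{2} \sum_{k=1}^{n} s_k \big(t_k - w^\top x_k\big)^2
          + \gamma \lVert w \rVert_1
\end{align*}
```

Each inner problem is an L1-regularized (weighted) linear regression, so it can be recast as an NQP with the same change of variables used for the linear case.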

Part III. Experimental results

Convergence to sparse solution
Evolution of the weight vector under multiplicative updates for L1-regularized linear regression.

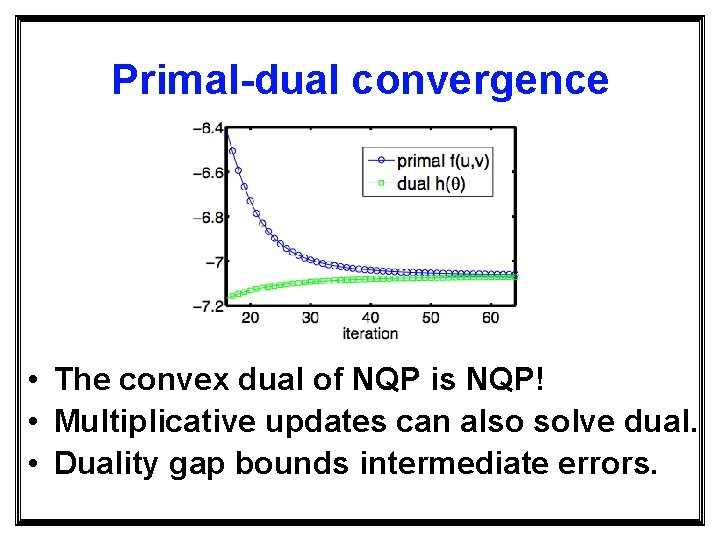

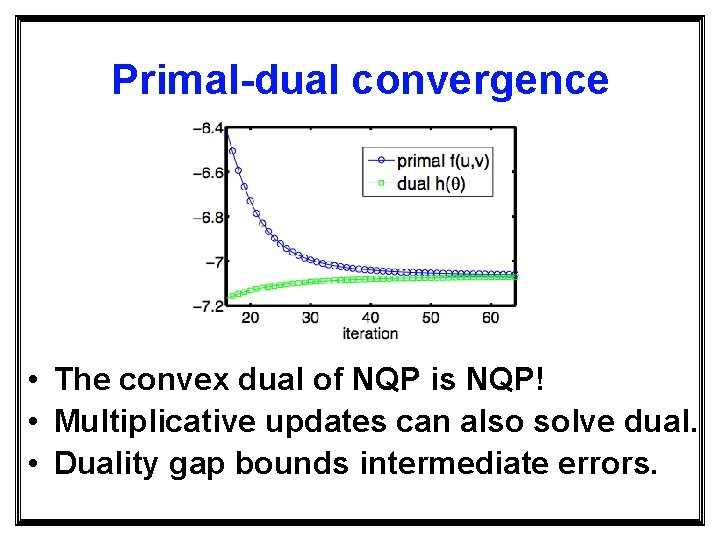

Primal-dual convergence
• The convex dual of NQP is NQP!
• Multiplicative updates can also solve the dual.
• Duality gap bounds intermediate errors.
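The dual used in the talk is not recoverable from the slide text. As an illustration of the first bullet, the derivation below works out the Lagrange dual of a generic NQP under the extra assumption that A is positive definite, which does not hold for every problem in this talk. The multiplier λ is introduced here for illustration.

```latex
\begin{align*}
\text{Primal:}\quad
  & \min_{v \ge 0}\; F(v) = \tfrac{1}{2} v^\top A v + b^\top v \\[4pt]
\text{Lagrangian:}\quad
  & \mathcal{L}(v, \lambda) = \tfrac{1}{2} v^\top A v + b^\top v - \lambda^\top v,
    \qquad \lambda \ge 0 \\[4pt]
\text{Stationarity in } v:\quad
  & A v + b - \lambda = 0 \;\Longrightarrow\; v = A^{-1} (\lambda - b) \\[4pt]
\text{Dual:}\quad
  & \max_{\lambda \ge 0}\; G(\lambda) = -\tfrac{1}{2} (\lambda - b)^\top A^{-1} (\lambda - b) \\
  & \iff \min_{\lambda \ge 0}\; \tfrac{1}{2} \lambda^\top A^{-1} \lambda
      - \big(A^{-1} b\big)^\top \lambda + \tfrac{1}{2} b^\top A^{-1} b
\end{align*}
```

The dual is again a quadratic objective over the nonnegative orthant. For any feasible pair, G(λ) ≤ F(v*) ≤ F(v), so the gap F(v) − G(λ) bounds how far an intermediate iterate is from the optimum.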

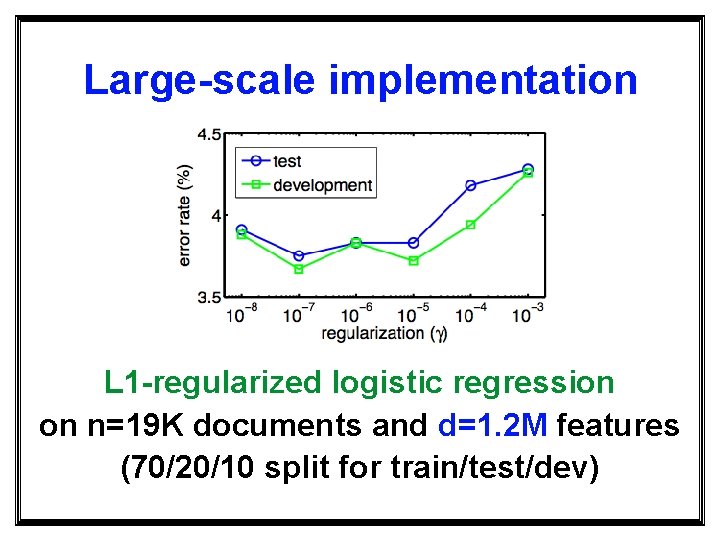

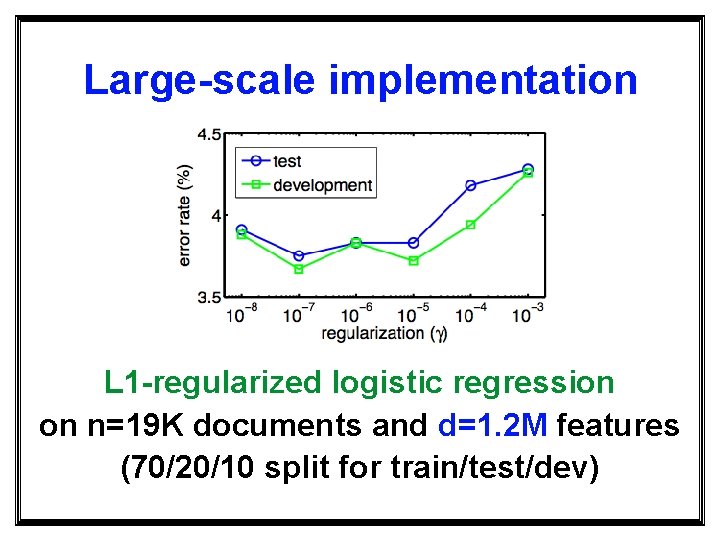

Large-scale implementation
L1-regularized logistic regression on n = 19K documents and d = 1.2M features (70/20/10 split for train/test/dev)

Discussion
• Related work based on:
  – auxiliary functions
  – iterative least squares
  – nonnegativity constraints
• Strengths of our approach:
  – simplicity
  – scalability
  – modularity
  – insights from related models