Multiple regression

Overview
• Simple linear regression
  – SPSS output
  – Linearity assumption
• Multiple regression
  – … in action: 7 steps
  – Checking assumptions (and repairing)
  – Presenting multiple regression in a paper

Simple linear regression
Class attendance and language learning
• Bob: 10 classes; 100 words
• Carol: 15 classes; 150 words
• Dave: 12 classes; 120 words
• Ann: 17 classes; 170 words
Here's some data. We expect that the more classes someone attends, the more words they learn.

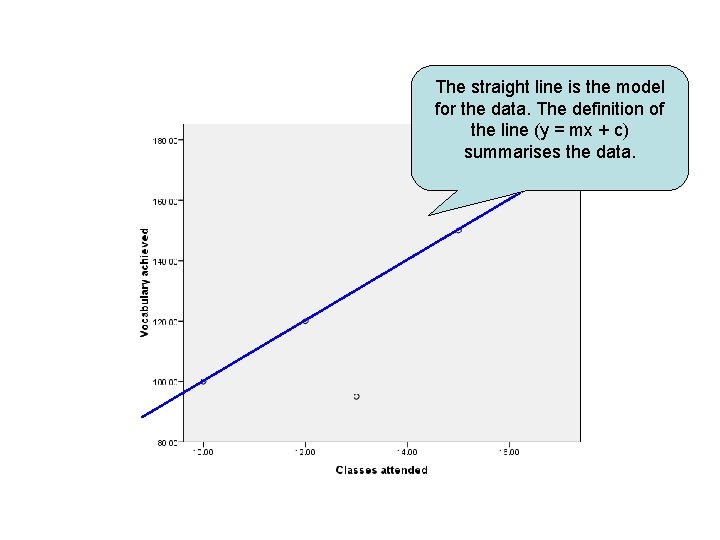

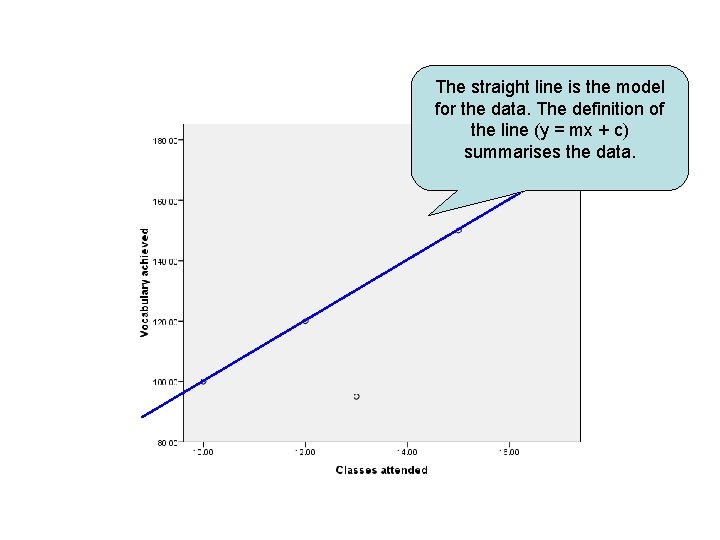

The straight line is the model for the data. Its equation, y = mx + c (m is the slope, c is the intercept or constant), summarises the data.
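As a minimal sketch (plain Python, standard library only; the helper name `fit_line` is ours, not SPSS's), the least-squares line for the four learners above can be computed directly from the usual formulas m = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)² and c = ȳ − m·x̄:

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares fit of y = m*x + c; returns (m, c)."""
    x_bar, y_bar = mean(x), mean(y)
    # Slope: sum of cross-products divided by the sum of squares of x
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    m = sxy / sxx
    # Intercept: the fitted line passes through the point of means
    c = y_bar - m * x_bar
    return m, c


classes = [10, 15, 12, 17]        # Bob, Carol, Dave, Ann
vocabulary = [100, 150, 120, 170]
m, c = fit_line(classes, vocabulary)
print(f"vocabulary = {m:.2f} * classes + {c:.2f}")
```

These four points happen to lie exactly on a line, so the fit is perfect: m = 10 words per class and c = 0.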

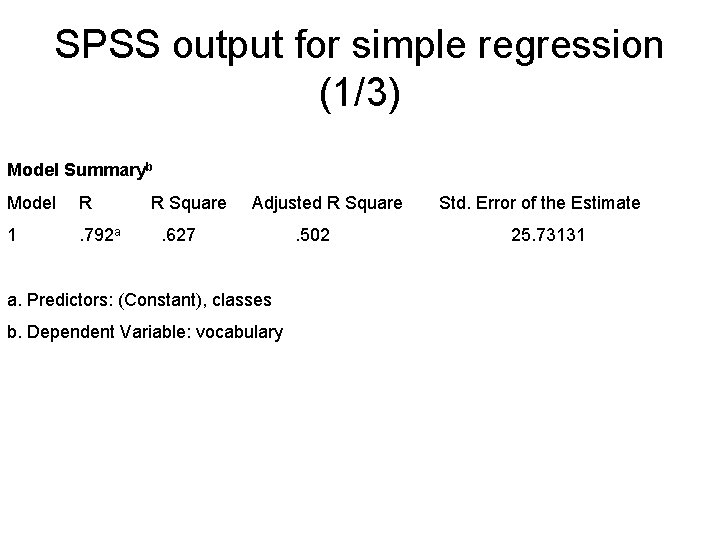

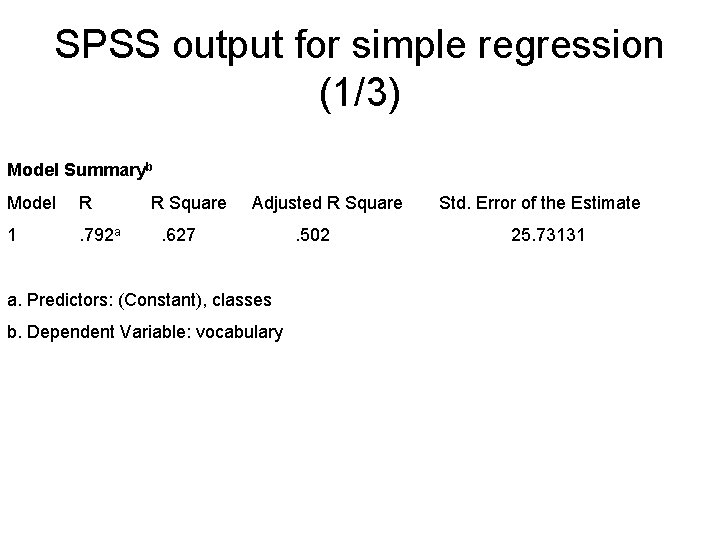

SPSS output for simple regression (1/3)

Model Summary (b)

| Model | R | R Square | Adjusted R Square | Std. Error of the Estimate |
|---|---|---|---|---|
| 1 | .792 (a) | .627 | .502 | 25.73131 |

a. Predictors: (Constant), classes
b. Dependent Variable: vocabulary
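Adjusted R² shrinks R² to allow for the number of predictors, using adjusted R² = 1 − (1 − R²)(N − 1)/(N − k − 1), where N is the number of cases and k the number of predictors. A minimal sketch follows; N is not shown in the Model Summary, so N = 5 below is our assumption for illustration. With R² = .627 and k = 1 it gives about .503, close to the .502 SPSS prints.

```python
def adjusted_r_squared(r2, n, k):
    """Adjusted R² for a model with k predictors fitted to n cases."""
    if n - k - 1 <= 0:
        raise ValueError("need more cases than predictors + 1")
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


# Assumed N = 5 (not shown in the Model Summary); one predictor (classes)
print(round(adjusted_r_squared(0.627, n=5, k=1), 3))
```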

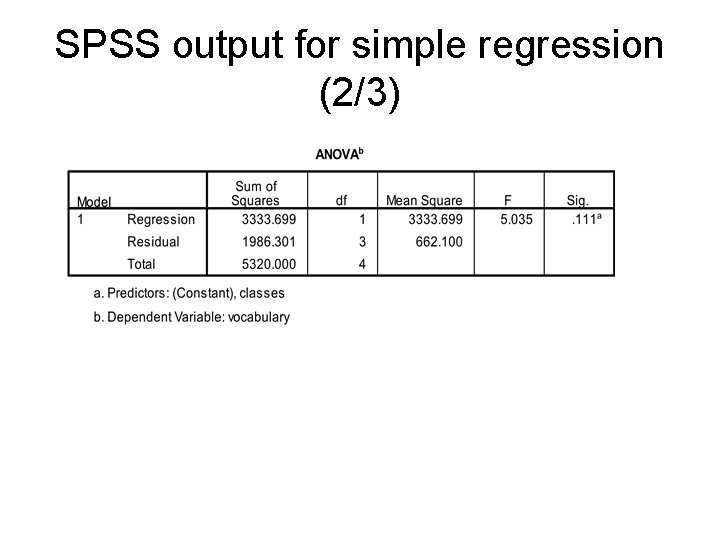

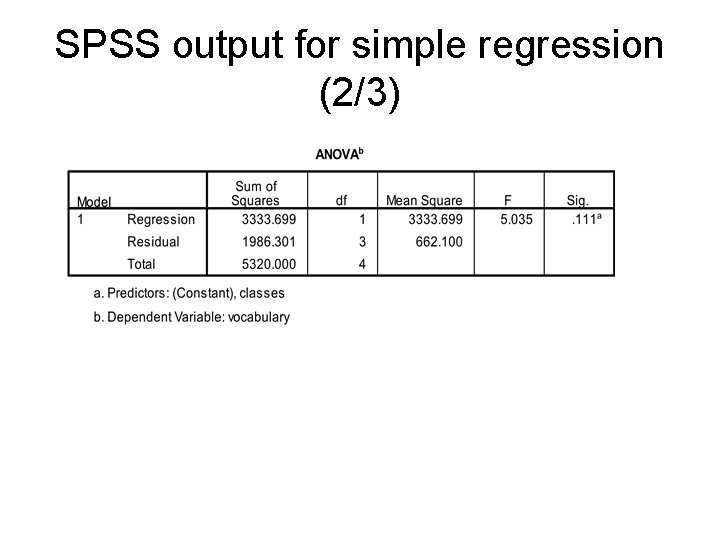

SPSS output for simple regression (2/3)

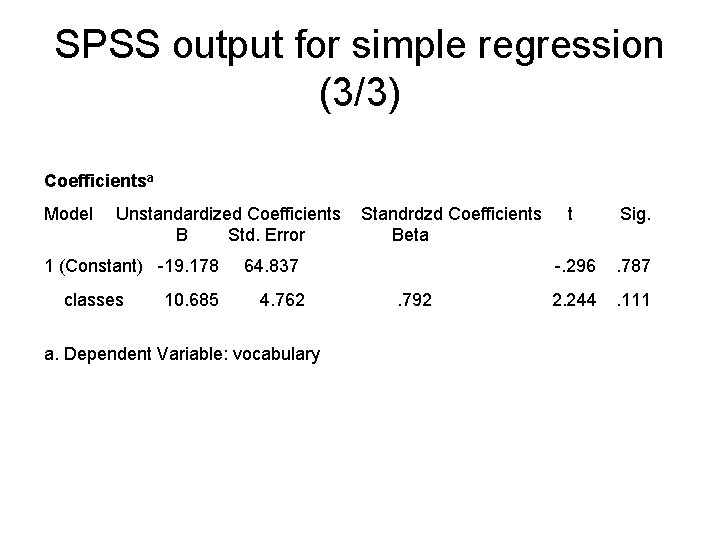

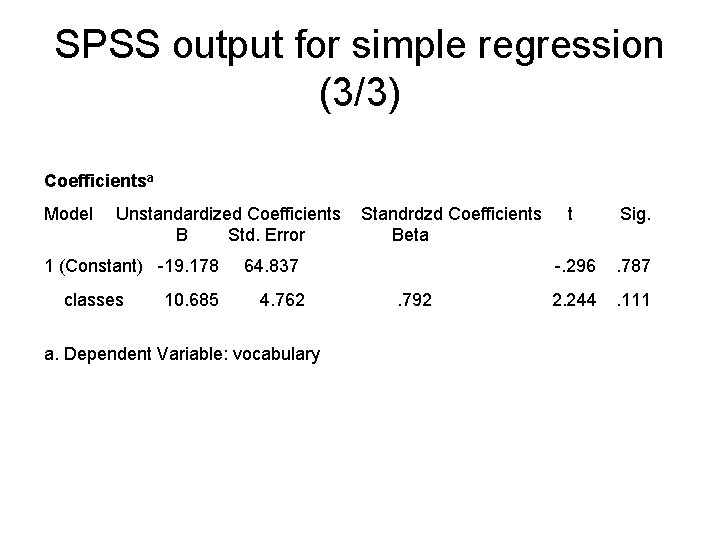

SPSS output for simple regression (3/3)

Coefficients (a)

| Model | Unstandardized B | Unstandardized Std. Error | Standardized Beta | t | Sig. |
|---|---|---|---|---|---|
| 1 (Constant) | -19.178 | 64.837 | | -.296 | .787 |
| classes | 10.685 | 4.762 | .792 | 2.244 | .111 |

a. Dependent Variable: vocabulary
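Each t in the Coefficients table is the unstandardized coefficient divided by its standard error (its p-value then comes from a t distribution with N − k − 1 degrees of freedom). A quick check against the printed values; `t_ratio` is our own helper name:

```python
def t_ratio(b, se):
    """t statistic for a regression coefficient: B / Std. Error."""
    return b / se


# Values copied from the Coefficients table above
for name, b, se in [("(Constant)", -19.178, 64.837), ("classes", 10.685, 4.762)]:
    print(f"{name}: t = {t_ratio(b, se):.3f}")
```

With a single predictor, the standardized Beta equals Pearson's r between predictor and outcome, which is why Beta for classes (.792) matches R in the Model Summary.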

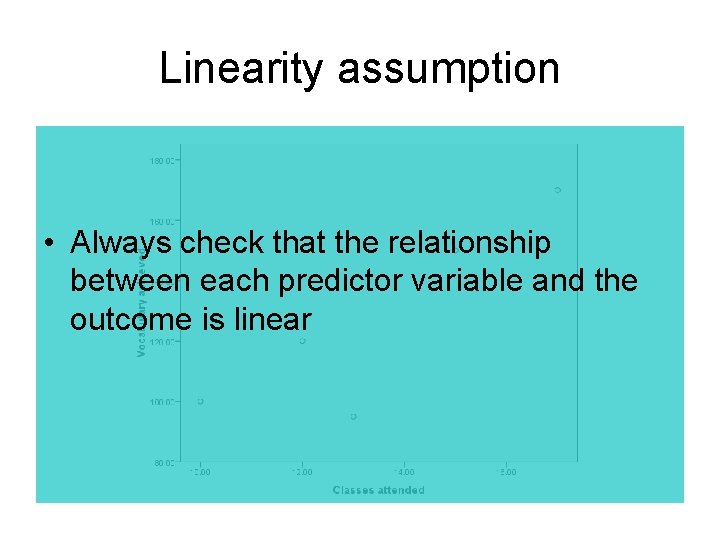

Linearity assumption
• Always check that the relationship between each predictor variable and the outcome is linear.
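Besides eyeballing a scatterplot, one rough numerical check (our own supplement, not part of the SPSS workflow on these slides) is to compare a straight-line fit with a quadratic one: if adding an x² term removes much of the residual variation, the relationship is probably curved. A sketch with NumPy, using made-up data; `residual_ss` is a hypothetical helper name:

```python
import numpy as np


def residual_ss(x, y, degree):
    """Residual sum of squares after a least-squares polynomial fit of the given degree."""
    coeffs = np.polyfit(x, y, degree)
    fitted = np.polyval(coeffs, x)
    return float(np.sum((y - fitted) ** 2))


# Hypothetical data with an obviously curved relationship (y = x squared)
x = np.array([1, 2, 3, 4, 5], dtype=float)
y = x ** 2
linear_ss = residual_ss(x, y, 1)
quadratic_ss = residual_ss(x, y, 2)
print(f"linear RSS = {linear_ss:.2f}, quadratic RSS = {quadratic_ss:.2f}")
```

Here the straight line leaves a residual sum of squares of 14 while the quadratic fits exactly, a clear sign that the linearity assumption fails for these data.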

Multiple regression
More than one predictor, e.g. predict vocabulary from classes + homework + L1 (first-language) vocabulary
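The regression equation simply gains one coefficient per predictor: vocabulary = c + m₁·classes + m₂·homework + m₃·L1 vocabulary. A minimal NumPy sketch of the ordinary least-squares fit (the criterion SPSS linear regression uses); the data are invented and built to lie exactly on a known equation so the recovered coefficients can be checked, and `fit_multiple_regression` is our own name:

```python
import numpy as np


def fit_multiple_regression(predictors, outcome):
    """Least-squares coefficients [constant, b1, b2, ...] for outcome ~ predictors."""
    X = np.column_stack([np.ones(len(outcome)), predictors])  # add the constant column
    coefs, *_ = np.linalg.lstsq(X, outcome, rcond=None)
    return coefs


# Hypothetical data: classes attended, homework hours, L1 vocabulary score
classes = np.array([1, 2, 3, 4, 5, 6], dtype=float)
homework = np.array([2, 1, 4, 3, 6, 5], dtype=float)
l1_vocab = np.array([1, 1, 2, 3, 5, 8], dtype=float)
# Constructed to satisfy vocabulary = 5 + 2*classes + 3*homework - 1*l1_vocab exactly
vocabulary = 5 + 2 * classes + 3 * homework - 1 * l1_vocab

coefs = fit_multiple_regression(np.column_stack([classes, homework, l1_vocab]), vocabulary)
print(np.round(coefs, 6))
```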

Multiple regression in action
1. Bivariate correlations & scatterplots: check for outliers
2. Analyse / Regression
3. Overall fit (R²) and its significance (F)
4. Coefficients for each predictor (the 'm's)
5. Regression equation
6. Check multicollinearity (Tolerance)
7. Check residuals are normally distributed
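Step 3 can be made concrete: R² is the proportion of variance in the outcome accounted for by the model, and its F test uses F = (R²/k) / ((1 − R²)/(N − k − 1)) on k and N − k − 1 degrees of freedom. A small standard-library sketch; the function names and the example numbers are ours:

```python
def r_squared(observed, predicted):
    """Proportion of variance in the outcome accounted for by the predictions."""
    mean_obs = sum(observed) / len(observed)
    ss_total = sum((y - mean_obs) ** 2 for y in observed)
    ss_resid = sum((y - p) ** 2 for y, p in zip(observed, predicted))
    return 1 - ss_resid / ss_total


def f_statistic(r2, n, k):
    """F for the overall model; returns (F, df_model, df_residual)."""
    df_model, df_resid = k, n - k - 1
    f = (r2 / df_model) / ((1 - r2) / df_resid)
    return f, df_model, df_resid


# Hypothetical example: 10 cases, 2 predictors, R² = .50
print(f_statistic(0.5, n=10, k=2))
```

With a single predictor, this F equals the square of that predictor's t.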

Bivariate outlier

Multivariate outlier
Test with Mahalanobis distance (in SPSS, click the 'Save' button in the Regression dialog). To test its significance, treat it as a chi-square value with df = number of predictors.
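Outside SPSS the same check can be sketched in Python: compute each case's squared Mahalanobis distance D² from the centroid of the predictors, then a chi-square upper-tail p-value with df = number of predictors. This is a minimal sketch under our own assumptions: the helper names are ours, the chi-square tail uses the standard series for the regularized incomplete gamma function (so only NumPy and the standard library are needed; it is intended for the small df and moderate D² values met here), and the p < .001 threshold is a commonly used conservative cut-off rather than something stated on the slide.

```python
import math

import numpy as np


def mahalanobis_d2(X):
    """Squared Mahalanobis distance of each row of X from the column means."""
    X = np.asarray(X, dtype=float)
    diff = X - X.mean(axis=0)
    inv_cov = np.linalg.inv(np.cov(X, rowvar=False))  # sample covariance (n - 1 divisor)
    return np.einsum("ij,jk,ik->i", diff, inv_cov, diff)


def chi2_sf(x, df):
    """Upper-tail chi-square probability P(X > x), via the incomplete gamma series."""
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    term = total = 1.0 / a
    n = 0
    while term > total * 1e-16:  # sum terms until they stop mattering
        n += 1
        term *= z / (a + n)
        total += term
    lower = math.exp(a * math.log(z) - z - math.lgamma(a) + math.log(total))
    return max(0.0, 1.0 - lower)


# Hypothetical predictor scores (rows = cases, columns = predictors)
X = np.array([[10, 3], [12, 4], [11, 5], [13, 4], [12, 3], [30, 1]], dtype=float)
d2 = mahalanobis_d2(X)
p_values = [chi2_sf(d, df=X.shape[1]) for d in d2]
for case, (d, p) in enumerate(zip(d2, p_values), start=1):
    flag = "  <- possible multivariate outlier" if p < 0.001 else ""
    print(f"case {case}: D2 = {d:.2f}, p = {p:.4f}{flag}")
```

With only six hypothetical cases no D² can be large enough to reach p < .001 (with N cases, D² cannot exceed (N − 1)²/N), so the example mainly shows the mechanics; the sixth case, extreme on the first predictor, gets the largest D².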

Multicollinearity
Tolerance should not be too close to zero:
T = 1 − R², where R² is for the prediction of this predictor by the other predictors.
If it fails, you need to reduce the number of predictors (you don't need the extra ones anyway).
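The tolerance calculation can be sketched with NumPy: regress each predictor on the others (with a constant), take that R², and subtract it from 1. The predictor values are hypothetical and `tolerances` is our own name:

```python
import numpy as np


def tolerances(X):
    """Tolerance T = 1 - R² for each column of X regressed on the other columns."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    result = []
    for j in range(p):
        y = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coefs, *_ = np.linalg.lstsq(others, y, rcond=None)
        resid = y - others @ coefs
        r2 = 1 - (resid @ resid) / np.sum((y - y.mean()) ** 2)
        result.append(1 - r2)
    return np.array(result)


# Hypothetical predictors; x3 is almost exactly x1 + x2
x1 = np.array([1, 2, 3, 4, 5, 6], dtype=float)
x2 = np.array([2, 1, 4, 3, 6, 5], dtype=float)
x3 = x1 + x2 + np.array([0.1, -0.1, 0.0, 0.1, -0.1, 0.0])
print(np.round(tolerances(np.column_stack([x1, x2])), 3))
print(np.round(tolerances(np.column_stack([x1, x2, x3])), 3))
```

The first two predictors (r ≈ .83) each get a tolerance of about .31, which is 1 − r² because with two predictors the R² is just the squared correlation; adding a third predictor that is nearly their sum drives all three tolerances towards zero. SPSS's collinearity statistics also show VIF, which is simply 1/T.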

Failed normality assumption
If the residuals do not (roughly) follow a normal distribution, it is often because one or more predictors are not normally distributed. You may be able to transform the offending predictor.
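One quick way to spot a skewed predictor, and to see whether a transformation helps, is to compare its skewness before and after transforming it, e.g. taking logs of a positively skewed variable. A standard-library sketch using the simple moment coefficient of skewness (packages such as SPSS usually report a small-sample-adjusted version, so their numbers differ slightly); the data are hypothetical:

```python
import math


def skewness(values):
    """Moment coefficient of skewness: m3 / m2**1.5 (0 for symmetric data)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    return m3 / m2 ** 1.5


# Hypothetical positively skewed predictor (each value doubles the last)
predictor = [1, 2, 4, 8, 16, 32]
logged = [math.log(v) for v in predictor]
print(f"raw skew = {skewness(predictor):.2f}, log skew = {skewness(logged):.2f}")
```

After the log transform the values are equally spaced, so their skewness is zero. Log transforms need strictly positive values; a constant is usually added first if a predictor contains zeros.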

Categorical predictor
Typically, predictors are continuous variables. A categorical predictor such as Sex (male, female) can also be used: code it as 0 and 1.
Compare the simple regression with a t-test (vocabulary = constant + Sex).
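The comparison can be checked numerically. In this standard-library sketch (hypothetical scores; function names are ours), regressing vocabulary on a 0/1 code gives a constant equal to the mean of the group coded 0, a slope equal to the difference between the group means, and a slope t identical to the equal-variance independent-samples t-test:

```python
import math


def slope_t(x, y):
    """Constant, slope and the slope's t statistic for simple regression of y on x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    a = y_bar - b * x_bar
    ss_res = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x, y))
    se_b = math.sqrt(ss_res / (n - 2) / sxx)
    return a, b, b / se_b


def pooled_t(group0, group1):
    """Independent-samples t-test assuming equal variances (pooled variance)."""
    n0, n1 = len(group0), len(group1)
    m0, m1 = sum(group0) / n0, sum(group1) / n1
    ss = sum((v - m0) ** 2 for v in group0) + sum((v - m1) ** 2 for v in group1)
    sp2 = ss / (n0 + n1 - 2)
    return (m1 - m0) / math.sqrt(sp2 * (1 / n0 + 1 / n1))


# Hypothetical vocabulary scores; Sex coded 0 for one group, 1 for the other
group0 = [100, 120, 110]
group1 = [130, 150, 140]
sex = [0] * len(group0) + [1] * len(group1)
vocabulary = group0 + group1
constant, slope, t_reg = slope_t(sex, vocabulary)
print(constant, slope, round(t_reg, 3), round(pooled_t(group0, group1), 3))
```

Here the constant is 110 (the mean of the group coded 0), the slope is 30 (the difference between the group means), and the two t values agree.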

Presenting multiple regression
A table is a good idea:
• Include the (bivariate) correlations
• Adjusted R²
• Report F (df, df), and its p, for the overall model
• Report N
• Coefficient, t, and p (Sig.) for each predictor
Mention that the assumptions of linearity, normality, and absence of multicollinearity were checked and satisfied.
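For the overall-model line, the two df values are k (number of predictors) and N − k − 1. A small formatting sketch; the helper names are ours, the numbers in the example are invented, and dropping the leading zero for quantities that cannot exceed 1 (R², p) follows a common APA-style convention:

```python
def no_leading_zero(value, decimals):
    """Format a value bounded by 1 in magnitude without the leading zero (e.g. .50)."""
    if abs(value) > 1:
        raise ValueError("only for quantities that cannot exceed 1 in magnitude")
    return f"{value:.{decimals}f}".replace("0.", ".", 1)


def report_overall(r2_adj, f, k, n, p):
    """One-line summary of the overall model: adjusted R², F(df, df), p and N."""
    df_model, df_resid = k, n - k - 1
    p_text = "p < .001" if p < 0.001 else f"p = {no_leading_zero(p, 3)}"
    return (f"adjusted R² = {no_leading_zero(r2_adj, 2)}, "
            f"F({df_model}, {df_resid}) = {f:.2f}, {p_text}, N = {n}")


# Invented values for illustration: 3 predictors, 60 cases
print(report_overall(0.41, 12.3, k=3, n=60, p=0.00002))
```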

Further reading
Tabachnick & Fidell (2001, 2007). Using Multivariate Statistics. Ch. 5: Multiple regression.