Multiple Camera Object Tracking
Helmy Eltoukhy and Khaled Salama
EE 392J Final Project, March 20, 2002

Outline

- Introduction
- Point correspondence between multiple cameras
- Robust object tracking
- Camera communication and decision making
- Results

Object Tracking

- The objective is to obtain an accurate estimate of the position (x, y) of the tracked object.
- Tracking algorithms can be classified into:
  - Single object & single camera
  - Multiple objects & multiple cameras
  - Single object & multiple cameras

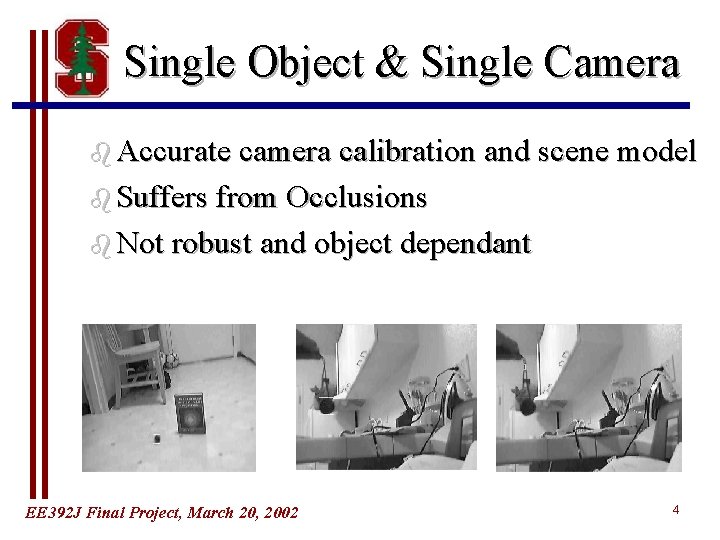

Single Object & Single Camera

- Requires accurate camera calibration and an accurate scene model.
- Suffers from occlusions.
- Not robust, and object dependent.

Single Object & Multiple Cameras

- Requires accurate point correspondence between scenes.
- Occlusions can be minimized or even avoided.
- Redundant information allows better estimation.
- Introduces a multiple-camera communication problem.

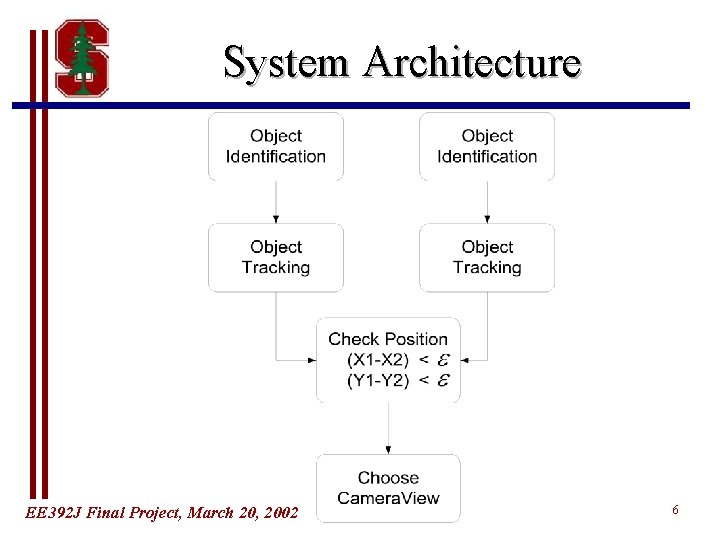

System Architecture

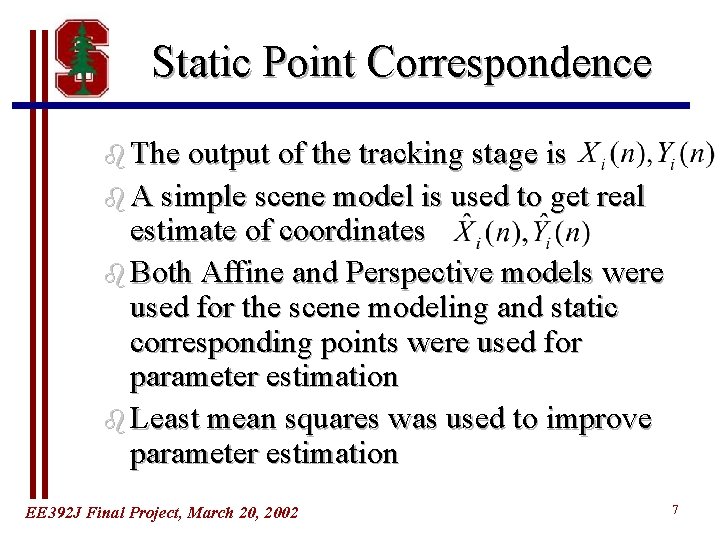

Static Point Correspondence

- The output of the tracking stage is
- A simple scene model is used to get a real estimate of the coordinates.
- Both affine and perspective models were used for the scene modeling, and static corresponding points were used for parameter estimation.
- Least mean squares was used to improve parameter estimation.
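The parameter estimation step can be illustrated with a minimal sketch. It assumes the affine scene model has the usual six-parameter form and fits it to static corresponding points by ordinary batch least squares with NumPy; the slides do not say which least-squares variant was used, and the function names `fit_affine` and `apply_affine` are hypothetical.

```python
import numpy as np

def fit_affine(src_pts, dst_pts):
    """Least-squares fit of x' = a*x + b*y + c, y' = d*x + e*y + f."""
    src = np.asarray(src_pts, dtype=float)
    dst = np.asarray(dst_pts, dtype=float)
    # Design matrix: one row [x, y, 1] per static correspondence.
    A = np.hstack([src, np.ones((len(src), 1))])
    # Solve A @ P ~= dst for the 3x2 parameter matrix P.
    P, *_ = np.linalg.lstsq(A, dst, rcond=None)
    return P.T  # 2x3 matrix [[a, b, c], [d, e, f]]

def apply_affine(M, pts):
    """Map (N, 2) points through the 2x3 affine matrix M."""
    pts = np.asarray(pts, dtype=float)
    return pts @ M[:, :2].T + M[:, 2]
```

At least three non-collinear correspondences are needed; using more than three lets the least-squares fit average out measurement noise in the marked points.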

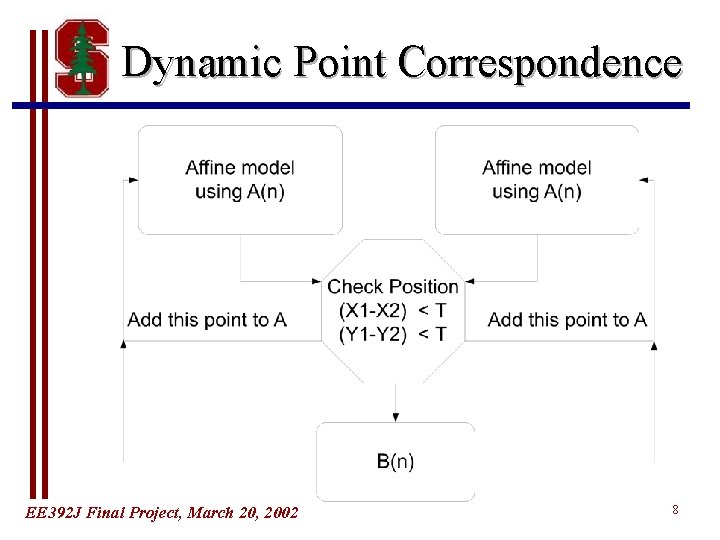

Dynamic Point Correspondence

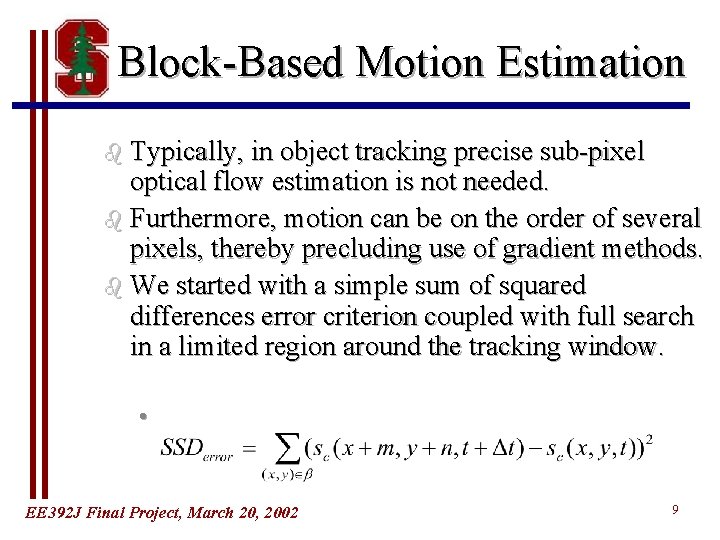

Block-Based Motion Estimation

- Typically, precise sub-pixel optical flow estimation is not needed in object tracking.
- Furthermore, motion can be on the order of several pixels, which precludes the use of gradient methods.
- We started with a simple sum of squared differences (SSD) error criterion coupled with full search in a limited region around the tracking window.
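A minimal sketch of SSD block matching with full search follows. It assumes grayscale frames stored as 2-D NumPy arrays and an integer search radius; the function name `ssd_full_search` and its parameters are illustrative, not taken from the project.

```python
import numpy as np

def ssd_full_search(prev, curr, top, left, h, w, radius):
    """Return ((dy, dx), error) minimizing SSD within +/- radius pixels."""
    block = prev[top:top + h, left:left + w].astype(float)
    best, best_err = (0, 0), np.inf
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            y, x = top + dy, left + dx
            # Skip candidate windows that fall outside the current frame.
            if y < 0 or x < 0 or y + h > curr.shape[0] or x + w > curr.shape[1]:
                continue
            cand = curr[y:y + h, x:x + w].astype(float)
            err = np.sum((cand - block) ** 2)
            if err < best_err:
                best, best_err = (dy, dx), err
    return best, best_err
```

The cost grows with the square of the search radius, which is why the search is limited to a small region around the tracking window.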

Adaptive Window Sizing

- Although simple block-based motion estimation may work reasonably well when motion is purely translational, it can lose the object if the object's relative size changes.
  - If the object shrinks in the camera's field of view, the SSD error is strongly influenced by the background.
  - If the object grows in the camera's field of view, the window fails to use all of the object's information and can slip away.

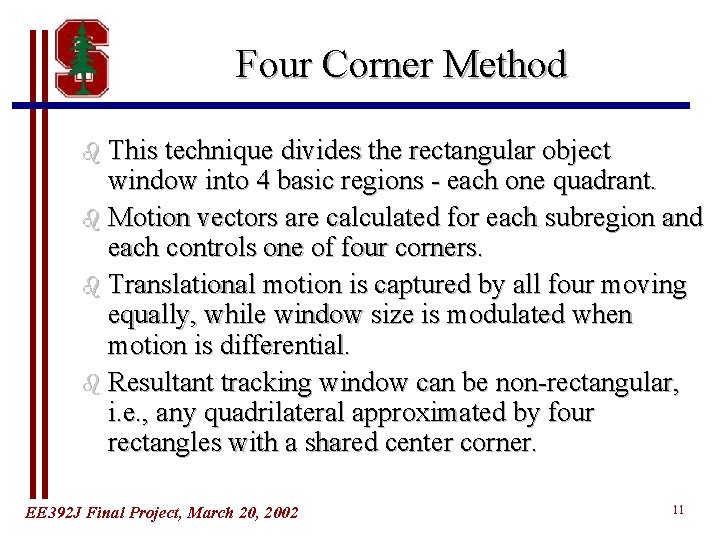

Four Corner Method

- This technique divides the rectangular object window into four basic regions, one per quadrant.
- Motion vectors are calculated for each subregion, and each controls one of the four corners.
- Translational motion is captured by all four corners moving equally, while window size is modulated when motion is differential.
- The resultant tracking window can be non-rectangular, i.e., any quadrilateral approximated by four rectangles with a shared center corner.
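The corner update can be sketched as follows. This is an illustration under assumptions, not the project's code: the window is split at its integer midpoint, each quadrant's motion vector comes from an SSD full search, and the names `ssd_search` and `four_corner_update` are hypothetical. How the shared center corner is updated is not stated on the slide, so the sketch returns only the four outer corners.

```python
import numpy as np

def ssd_search(prev, curr, y0, x0, y1, x1, radius):
    """Motion vector (dy, dx) of the block prev[y0:y1, x0:x1] by SSD full search."""
    block = prev[y0:y1, x0:x1].astype(float)
    best, best_err = (0, 0), np.inf
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            ya, xa, yb, xb = y0 + dy, x0 + dx, y1 + dy, x1 + dx
            if ya < 0 or xa < 0 or yb > curr.shape[0] or xb > curr.shape[1]:
                continue
            err = np.sum((curr[ya:yb, xa:xb].astype(float) - block) ** 2)
            if err < best_err:
                best, best_err = (dy, dx), err
    return best

def four_corner_update(prev, curr, top, left, bottom, right, radius=3):
    """Move each window corner by the motion vector of its own quadrant."""
    cy, cx = (top + bottom) // 2, (left + right) // 2  # assumed split point
    quadrants = {
        "tl": (top, left, cy, cx), "tr": (top, cx, cy, right),
        "bl": (cy, left, bottom, cx), "br": (cy, cx, bottom, right),
    }
    corners = {
        "tl": (top, left), "tr": (top, right),
        "bl": (bottom, left), "br": (bottom, right),
    }
    new_corners = {}
    for name, (y0, x0, y1, x1) in quadrants.items():
        dy, dx = ssd_search(prev, curr, y0, x0, y1, x1, radius)
        y, x = corners[name]
        new_corners[name] = (y + dy, x + dx)
    return new_corners
```

When all four quadrants report the same vector the window simply translates; unequal vectors move the corners apart or together, which changes the window size.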

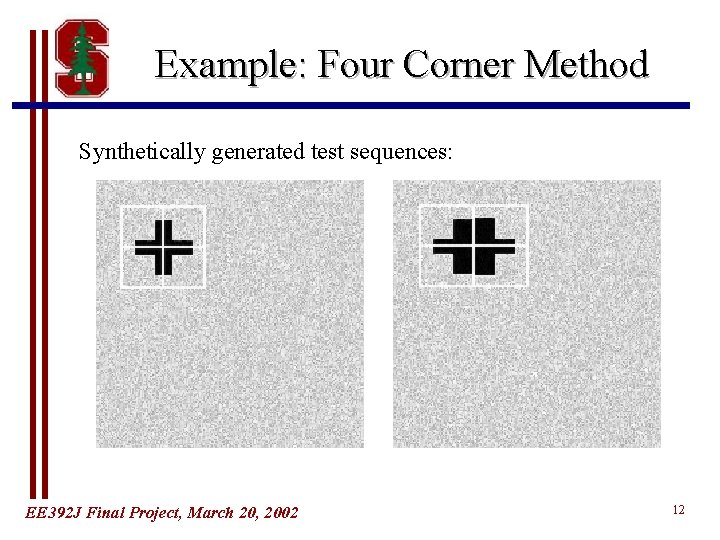

Example: Four Corner Method

Synthetically generated test sequences:

Correlative Method

- The four corner method is strongly subject to error accumulation, which can result in drift of one or more of the tracking window quadrants.
- Once drift occurs, the sizing of the window is highly inaccurate.
- A method with some corrective feedback is needed, so that the window can converge to the correct size even after some errors.
- Correlating current object features with a template view is one solution.

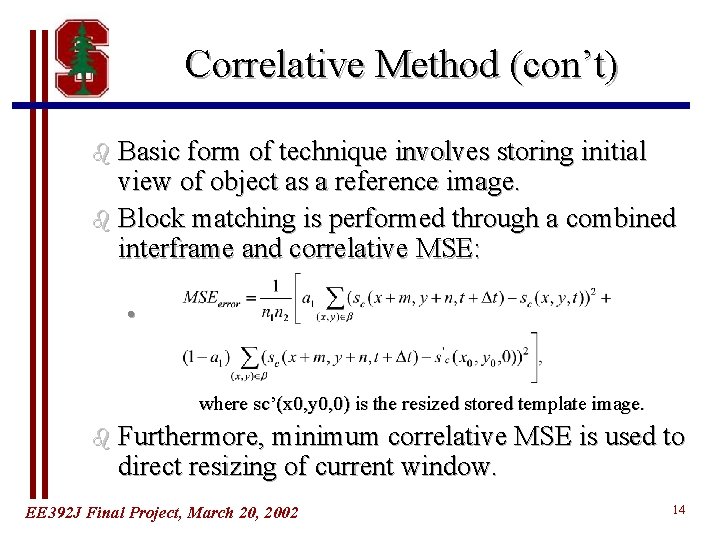

Correlative Method (cont'd)

- The basic form of the technique involves storing the initial view of the object as a reference image.
- Block matching is performed through a combined interframe and correlative MSE, where s_c'(x_0, y_0, 0) is the resized stored template image.
- Furthermore, the minimum correlative MSE is used to direct resizing of the current window.
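The exact form of the combined criterion is not recoverable from the slide text, so the following is only a sketch of one plausible reading: the interframe MSE and the correlative MSE are added, with a hypothetical `weight` parameter on the template term, and the stored template is resized by nearest-neighbor sampling. The names `resize_nearest` and `combined_mse` are assumptions.

```python
import numpy as np

def resize_nearest(img, h, w):
    """Nearest-neighbor resize of a 2-D image to h x w (assumed resizing method)."""
    rows = np.arange(h) * img.shape[0] // h
    cols = np.arange(w) * img.shape[1] // w
    return img[np.ix_(rows, cols)]

def combined_mse(candidate, prev_block, template, weight=1.0):
    """Return (total, correlative) for one candidate window.

    total = interframe MSE + weight * correlative MSE; this weighted sum
    is an assumed form, not the equation from the slide.
    """
    cand = candidate.astype(float)
    interframe = np.mean((cand - prev_block.astype(float)) ** 2)
    resized = resize_nearest(template, *cand.shape).astype(float)
    correlative = np.mean((cand - resized) ** 2)
    return interframe + weight * correlative, correlative
```

Returning the correlative term separately lets a caller evaluate several candidate window sizes and keep the one with the smallest template error, which is how the minimum correlative MSE could direct resizing.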

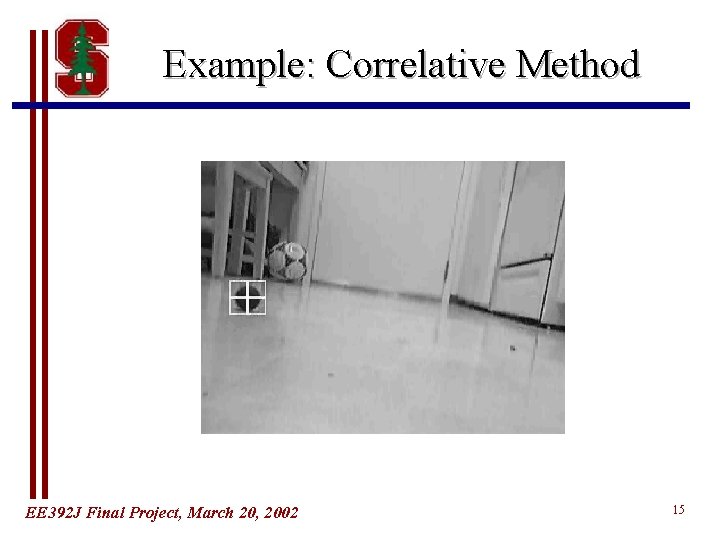

Example: Correlative Method

Occlusion Detection

- For multi-camera feature tracking to work, each camera must be able to assess the validity of its tracking (e.g., to detect occlusion).
- Comparing the minimum error at each point to some absolute threshold is problematic, since error can grow even when tracking is still valid.
- The threshold must be adaptive to current conditions.
- One solution is to use a threshold of k (constant > 1) times the moving average of the MSE.
- Thus, only precipitous changes in error trigger an indication of possibly fallacious tracking.
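The adaptive threshold can be sketched in a few lines. The class name `OcclusionDetector`, the default values of `k` and `window`, and the choice to leave flagged frames out of the moving average are all assumptions made for illustration.

```python
from collections import deque

class OcclusionDetector:
    """Flag a frame when its MSE exceeds k times the moving average of past MSEs."""

    def __init__(self, k=2.0, window=10):
        self.k = k                           # constant > 1
        self.history = deque(maxlen=window)  # recent MSE values from valid frames

    def update(self, mse):
        if self.history:
            avg = sum(self.history) / len(self.history)
            occluded = mse > self.k * avg
        else:
            occluded = False  # no history yet, so nothing to compare against
        if not occluded:
            # Assumption: errors from flagged frames do not update the average.
            self.history.append(mse)
        return occluded
```

Because the threshold follows the recent average, a slow rise in error (for example from gradual lighting change) does not trigger the detector, while a sudden jump does.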

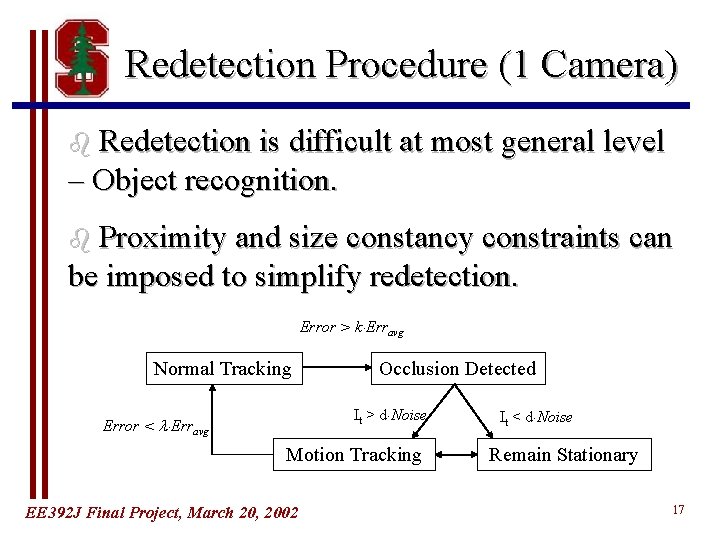

Redetection Procedure (1 Camera)

- Redetection is difficult at the most general level, where it amounts to object recognition.
- Proximity and size constancy constraints can be imposed to simplify redetection.

State diagram (states and transition labels recovered from the slide):

- States: Normal Tracking, Occlusion Detected, Motion Tracking, Remain Stationary
- Transition conditions: Error > k·Err_avg; Error < Err_avg; I_t > d_Noise; I_t < d_Noise
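The state diagram on this slide survives only as a set of state names and transition labels, so the wiring below is one plausible reading rather than the authors' procedure: a large error leaves normal tracking, the temporal change I_t compared with a noise level chooses between motion tracking and remaining stationary, and an error below the average returns to normal tracking. The function name `next_state`, the `motion` argument, and the default values are hypothetical.

```python
NORMAL = "normal_tracking"
OCCLUDED = "occlusion_detected"
MOTION = "motion_tracking"
STATIONARY = "remain_stationary"

def next_state(state, error, err_avg, motion, k=2.0, noise=5.0):
    """One step of an assumed redetection state machine.

    error   : current matching error
    err_avg : moving average of the matching error
    motion  : temporal change measure (I_t on the slide)
    noise   : noise level that motion is compared against (assumed parameter)
    """
    if state == NORMAL:
        # Error > k * Err_avg: tracking is no longer trusted.
        return OCCLUDED if error > k * err_avg else NORMAL
    # In any occluded state, Error < Err_avg means the object was found again.
    if error < err_avg:
        return NORMAL
    # Otherwise follow motion if there is any above the noise level.
    return MOTION if motion > noise else STATIONARY
```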

Example: Occlusion

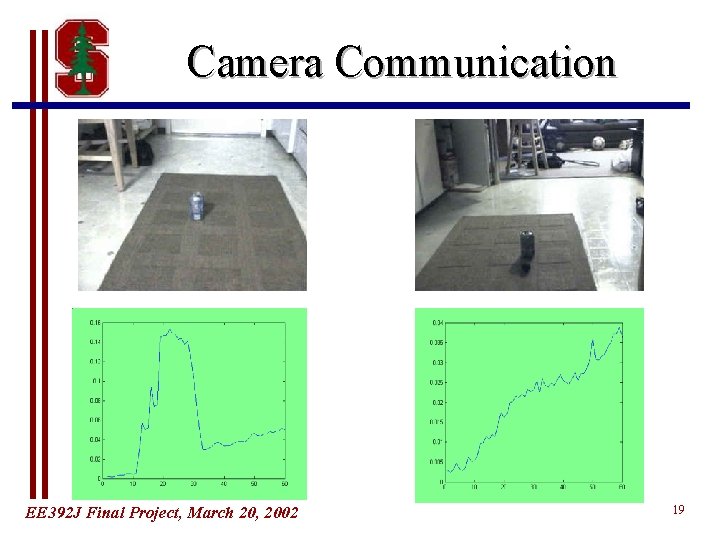

Camera Communication

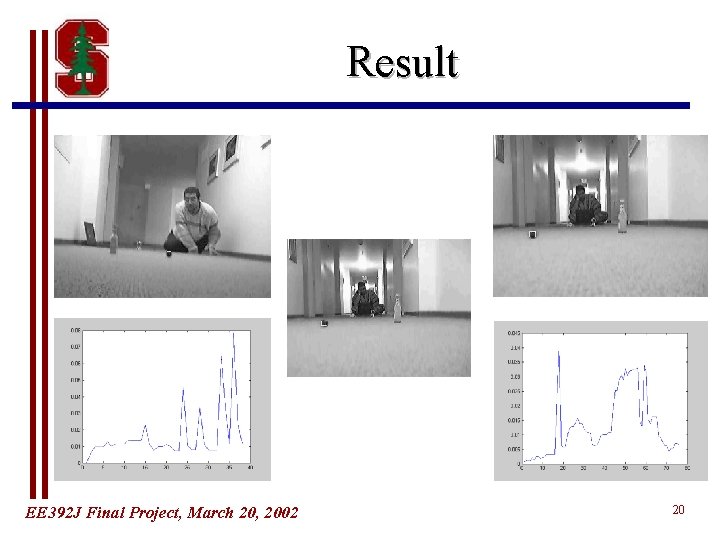

Result

Conclusion

- Multiple cameras can do more than just 3D imaging.
- Camera calibration only works if you have an accurate scene and camera model.
- Tracking is sensitive to the camera characteristics (noise, blur, frame rate, ...).
- Tracking accuracy can be improved using multiple cameras.

- Slides: 21