Multimodal Input for Meeting Browsing and Retrieval Interfaces

Multimodal Input for Meeting Browsing and Retrieval Interfaces: Preliminary Findings

Agnes Lisowska & Susan Armstrong
ISSCO/TIM/ETI, University of Geneva
IM2.HMI

The Problem

- Many meeting-centered projects have resulted in databases of meeting data, but …
- How can a real-world user best exploit this data?

MLMI’06 - May 2nd, 2006

Mouse-keyboard vs. Multimodal Input

- Web: similar media (video, pictures, text, sound), and we are used to manipulating them with keyboard and mouse, but …
- Multimedia meeting domain is novel
  - interesting information found across media in the database, so …
- Multimodal interaction could be the most efficient way to exploit cross-media information

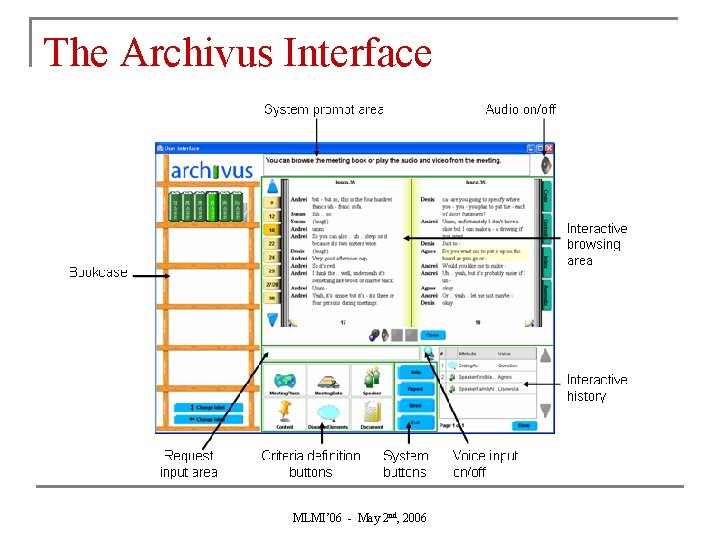

The Archivus System

- Designed based on
  - a user requirements study
  - data and annotations available in the IM2 project
- Flexibly multimodal
  - can study the system with minimal a priori assumptions about interaction modalities
- Input
  - pointing: mouse, touchscreen
  - language: voice, keyboard
    - freeform questions allowed, but not a QA system
- Output
  - text, graphics, video, audio

The Archivus Interface

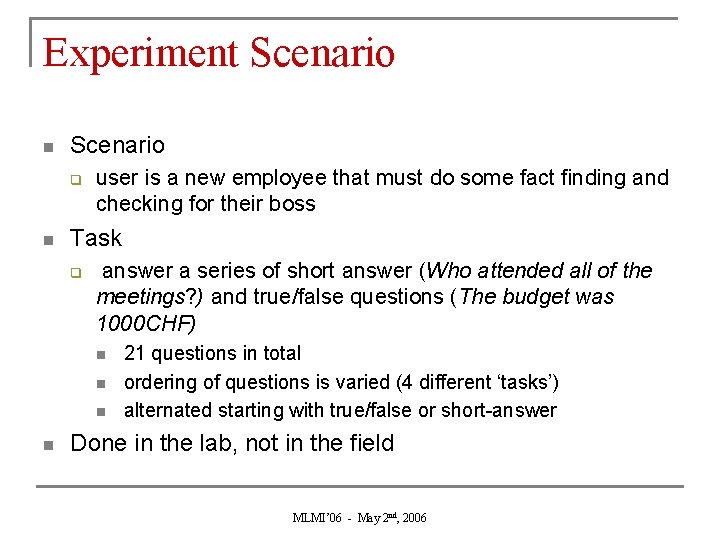

Experiment Scenario

- Scenario
  - user is a new employee who must do some fact finding and checking for their boss
- Task
  - answer a series of short-answer questions (Who attended all of the meetings?) and true/false questions (The budget was 1000 CHF)
    - 21 questions in total
    - ordering of questions is varied (4 different ‘tasks’), alternated starting with true/false or short-answer
- Done in the lab, not in the field

Experiment Methodology: Wizard of Oz

- What it is …
  - user interacts with what they think is a fully functioning system … but a human is actually controlling the system and processing (language) input
- Why …
  - allows experimenting with natural language input without having to implement speech recognition (SR) and NLP
- Data
  - video and audio
    - user’s face (reaction to the system)
    - user’s input devices
    - user’s screen

Experiment Environment

- User’s room
  - PC with speakers
  - wireless mouse, keyboard
  - touchscreen
  - 2 cameras
  - recording equipment
- Wizard’s room
  - NL processing simulation
  - view of the user’s screen

Procedure

- Pre-experiment questionnaire (demographic information), consent form
- Read scenario description and software manual
- Phase 1: 20 minutes
  - subset of modalities
  - 11 questions (5 true/false, 6 short answer)
- Phase 2: 20 minutes
  - all modalities
  - 10 questions (5 true/false, 5 short answer)
- Post-experiment questionnaire and interview (time permitting)

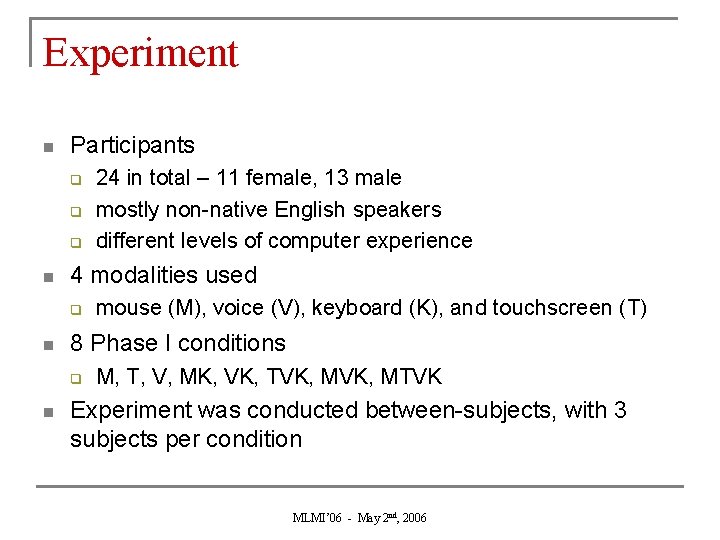

Experiment

- Participants
  - 24 in total: 11 female, 13 male
  - mostly non-native English speakers
  - different levels of computer experience
- 4 modalities used
  - mouse (M), voice (V), keyboard (K), and touchscreen (T)
- 8 Phase 1 conditions
  - M, T, V, MK, VK, MVK, TVK, MTVK
- Experiment was conducted between-subjects, with 3 subjects per condition
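The design arithmetic (8 conditions, 3 subjects each, 24 participants) can be checked with a short sketch. The condition codes are taken from this slide and from Table 1; the round-robin allocation and the numeric participant IDs are assumptions for illustration only, since the slides do not say how participants were assigned to conditions.

```python
# Phase 1 conditions: M = mouse, T = touchscreen, V = voice, K = keyboard.
CONDITIONS = ["M", "T", "V", "MK", "VK", "MVK", "TVK", "MTVK"]
SUBJECTS_PER_CONDITION = 3


def assign_participants(n_participants):
    """Assign participant IDs to conditions round-robin (hypothetical scheme)."""
    assignment = {condition: [] for condition in CONDITIONS}
    for participant_id in range(1, n_participants + 1):
        condition = CONDITIONS[(participant_id - 1) % len(CONDITIONS)]
        assignment[condition].append(participant_id)
    return assignment


# 8 conditions x 3 subjects per condition = 24 participants.
assignment = assign_participants(len(CONDITIONS) * SUBJECTS_PER_CONDITION)
for condition, participants in assignment.items():
    print(condition, participants)
```

In a between-subjects design each participant appears in exactly one Phase 1 condition, which is what the dictionary of disjoint ID lists represents.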

What we were looking at …

- Task completion
  - Which modalities result in most success?
- Learning Effect
  - Does learning with a novel modality encourage its use later on?
- Number of Interactions
  - Are users equally active with functionally equivalent modalities?

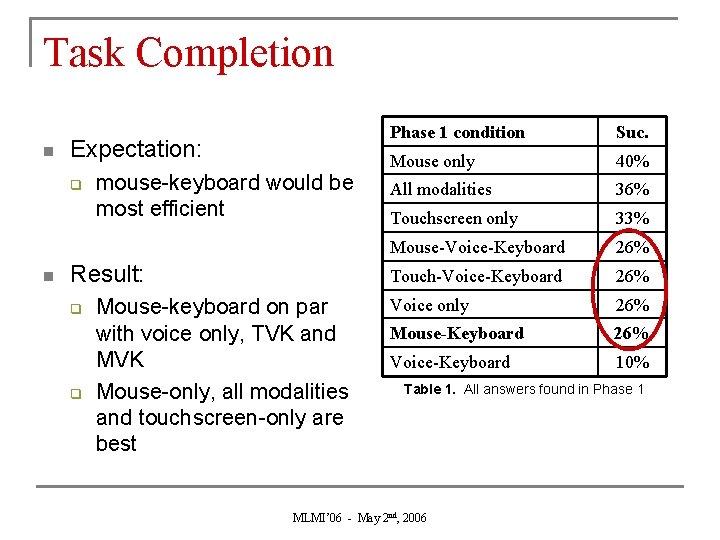

Task Completion

- Expectation:
  - mouse-keyboard would be most efficient
- Result:
  - Mouse-keyboard on par with voice only, TVK and MVK
  - Mouse-only, all modalities and touchscreen-only are best

| Phase 1 condition | Suc. |
| --- | --- |
| Mouse only | 40% |
| All modalities | 36% |
| Touchscreen only | 33% |
| Mouse-Voice-Keyboard | 26% |
| Touch-Voice-Keyboard | 26% |
| Voice only | 26% |
| Mouse-Keyboard | 26% |
| Voice-Keyboard | 10% |

Table 1. All answers found in Phase 1
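The tiers described in the result bullets can be read off Table 1 by grouping conditions with the same success rate. The sketch below uses only the percentages reported in the table; the function and variable names are illustrative.

```python
from collections import defaultdict

# Success rates (percent) as reported in Table 1.
SUCCESS = {
    "Mouse only": 40,
    "All modalities": 36,
    "Touchscreen only": 33,
    "Mouse-Voice-Keyboard": 26,
    "Touch-Voice-Keyboard": 26,
    "Voice only": 26,
    "Mouse-Keyboard": 26,
    "Voice-Keyboard": 10,
}


def group_by_rate(success):
    """Group conditions that share a success rate, highest rate first."""
    tiers = defaultdict(list)
    for condition, rate in success.items():
        tiers[rate].append(condition)
    return dict(sorted(tiers.items(), reverse=True))


tiers = group_by_rate(SUCCESS)
for rate, conditions in tiers.items():
    print(f"{rate}%: {', '.join(conditions)}")
```

With three subjects per condition, differences of a few percentage points between tiers should be read as indicative rather than conclusive.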

Task Completion

- In the mouse-only and touchscreen-only conditions the user can only make ‘correct’ moves
  - not the case when voice interaction is involved
- Touchscreen-only was worse than mouse-only
  - lower pointing accuracy with touchscreen
  - blocking effect due to unfamiliarity with touchscreen
  - similar results with MVK and TVK
- Combining voice with other modalities does add value to the interaction

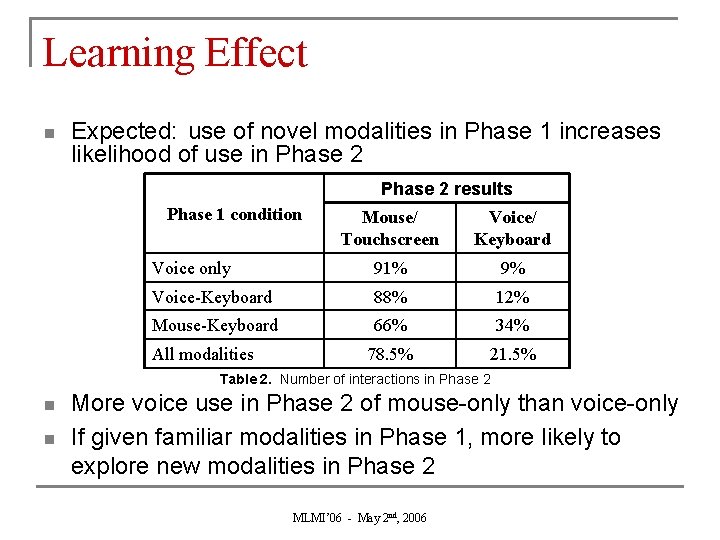

Learning Effect

- Expected: use of novel modalities in Phase 1 increases likelihood of use in Phase 2
- Results:

| Phase 1 condition | Mouse/Touchscreen | Voice/Keyboard |
| --- | --- | --- |
| Voice only | 91% | 9% |
| Voice-Keyboard | 88% | 12% |
| Mouse-Keyboard | 66% | 34% |
| All modalities | 78.5% | 21.5% |

Table 2. Number of interactions in Phase 2

- More voice use in Phase 2 of mouse-only than voice-only
- If given familiar modalities in Phase 1, more likely to explore new modalities in Phase 2

Learning Effect

- Lack of learning effect could be caused by …
  - unconscious need to feel comfortable with the system and input modalities at early stages of interaction
  - comfort can manifest in two ways
    - with system itself (same for all users)
      - knowing what graphics represent, type of info available and where it can be found
    - with interaction methods (different in conditions)
      - what input modalities are available
- System is slower with voice

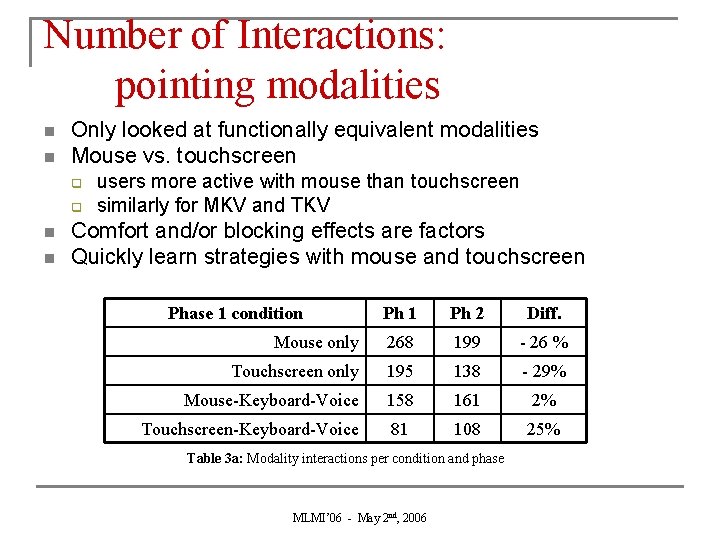

Number of Interactions: pointing modalities

- Only looked at functionally equivalent modalities
- Mouse vs. touchscreen
  - users more active with mouse than touchscreen
  - similarly for MKV and TKV
- Comfort and/or blocking effects are factors
- Quickly learn strategies with mouse and touchscreen

| Phase 1 condition | Ph 1 | Ph 2 | Diff. |
| --- | --- | --- | --- |
| Mouse only | 268 | 199 | -26% |
| Touchscreen only | 195 | 138 | -29% |
| Mouse-Keyboard-Voice | 158 | 161 | 2% |
| Touchscreen-Keyboard-Voice | 81 | 108 | 25% |

Table 3a: Modality interactions per condition and phase

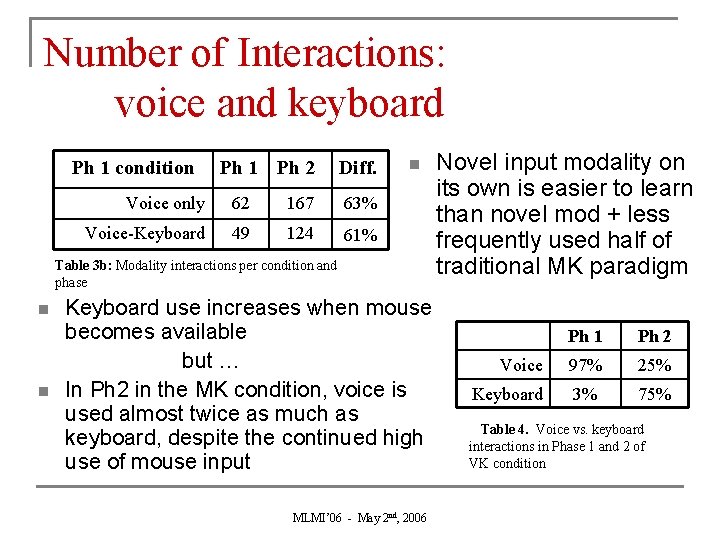

Number of Interactions: voice and keyboard

| Ph 1 condition | Ph 1 | Ph 2 | Diff. |
| --- | --- | --- | --- |
| Voice only | 62 | 167 | 63% |
| Voice-Keyboard | 49 | 124 | 61% |

Table 3b: Modality interactions per condition and phase

- Keyboard use increases when mouse becomes available, but …
- In Ph 2 in the MK condition, voice is used almost twice as much as keyboard, despite the continued high use of mouse input
- Novel input modality on its own is easier to learn than novel modality + less frequently used half of traditional MK paradigm

| Modality | Ph 1 | Ph 2 |
| --- | --- | --- |
| Voice | 97% | 25% |
| Keyboard | 3% | 75% |

Table 4. Voice vs. keyboard interactions in Phase 1 and 2 of VK condition
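The slides do not state how the "Diff." column in Tables 3a and 3b is computed. One formula that appears consistent with the reported values is the change from Phase 1 to Phase 2 expressed as a percentage of the larger of the two counts; this is an inference from the numbers, not something the authors state. The sketch below compares that assumed formula against the reported figures, which it matches to within one percentage point (Voice-Keyboard comes out at about 60.5% against a reported 61%).

```python
# (condition, Phase 1 count, Phase 2 count, reported Diff. in percent)
# Counts and reported values are copied from Tables 3a and 3b.
ROWS = [
    ("Mouse only", 268, 199, -26),
    ("Touchscreen only", 195, 138, -29),
    ("Mouse-Keyboard-Voice", 158, 161, 2),
    ("Touchscreen-Keyboard-Voice", 81, 108, 25),
    ("Voice only", 62, 167, 63),
    ("Voice-Keyboard", 49, 124, 61),
]


def relative_diff(ph1, ph2):
    """Phase 1 -> Phase 2 change as a percentage of the larger count.

    Assumed formula, inferred from the tables; not given in the slides.
    """
    return 100.0 * (ph2 - ph1) / max(ph1, ph2)


for condition, ph1, ph2, reported in ROWS:
    computed = relative_diff(ph1, ph2)
    print(f"{condition}: computed {computed:+.1f}%, reported {reported:+d}%")
```

A conventional percent change relative to Phase 1 would give much larger values for the voice conditions (for example, 62 to 167 is an increase of roughly 169%), so the reported figures should not be read that way.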

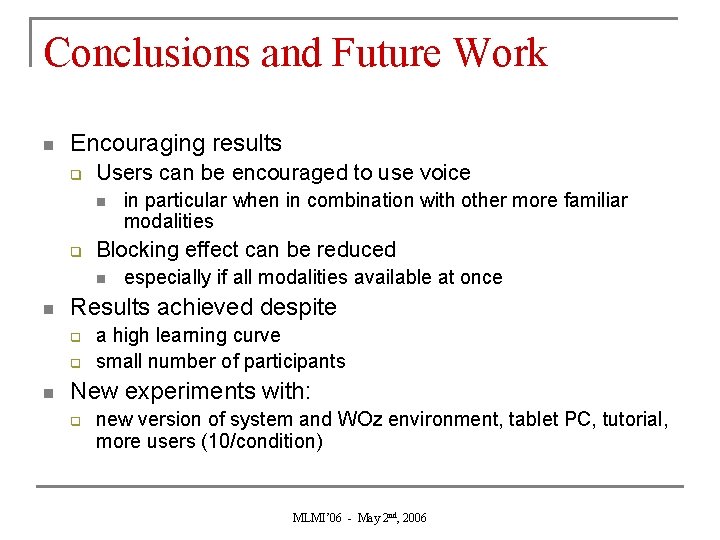

Conclusions and Future Work

- Encouraging results
  - Users can be encouraged to use voice
    - especially if all modalities available at once
  - Blocking effect can be reduced
    - in particular when in combination with other more familiar modalities
- Results achieved despite
  - a high learning curve
  - small number of participants
- New experiments with:
  - new version of system and WOz environment, tablet PC, tutorial, more users (10/condition)
