Multimodal emotion recognition


- Slides: 24

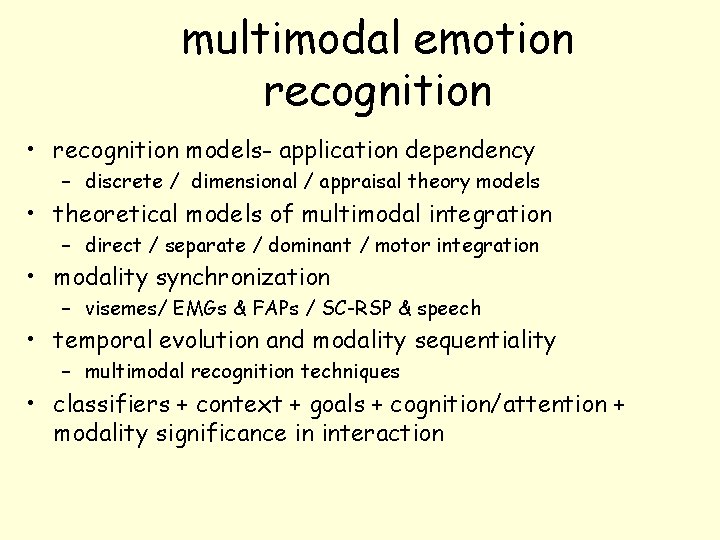

Multimodal emotion recognition

- Recognition models, application dependency
  - discrete / dimensional / appraisal theory models
- Theoretical models of multimodal integration
  - direct / separate / dominant / motor integration
- Modality synchronization
  - visemes / EMGs & FAPs / SC-RSP & speech
- Temporal evolution and modality sequentiality
  - multimodal recognition techniques
- Classifiers + context + goals + cognition/attention + modality significance in interaction

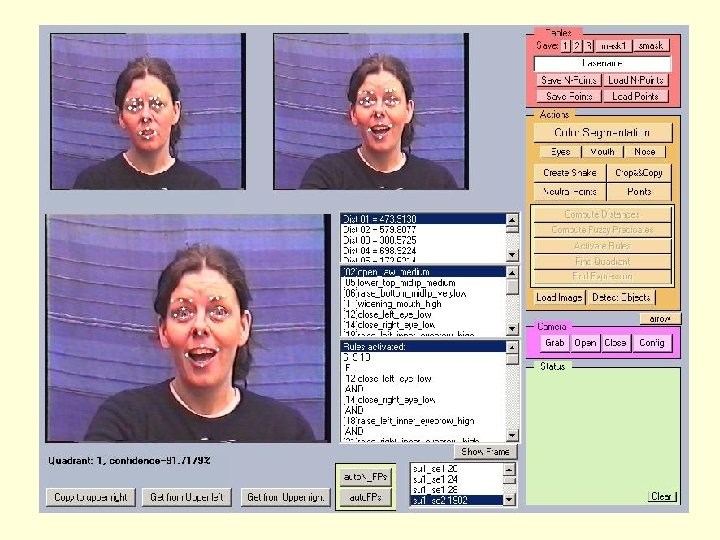

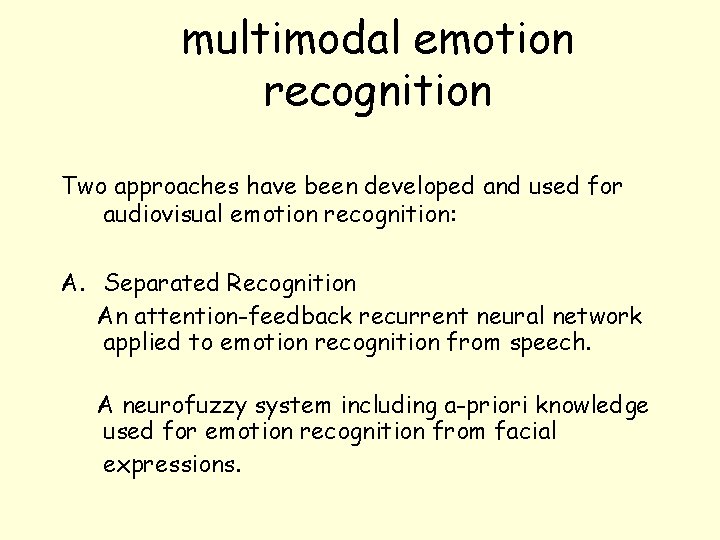

Multimodal emotion recognition

Two approaches have been developed and used for audiovisual emotion recognition:

A. Separated Recognition

- An attention-feedback recurrent neural network applied to emotion recognition from speech.
- A neurofuzzy system including a-priori knowledge, used for emotion recognition from facial expressions.

Separated Recognition

- The goal was to evaluate the recognition performance obtained from each modality.
- Visual feeltracing was required.
- Pause detection and tune-based analysis, with speech playing the main role, were the means of synchronising the two modalities.

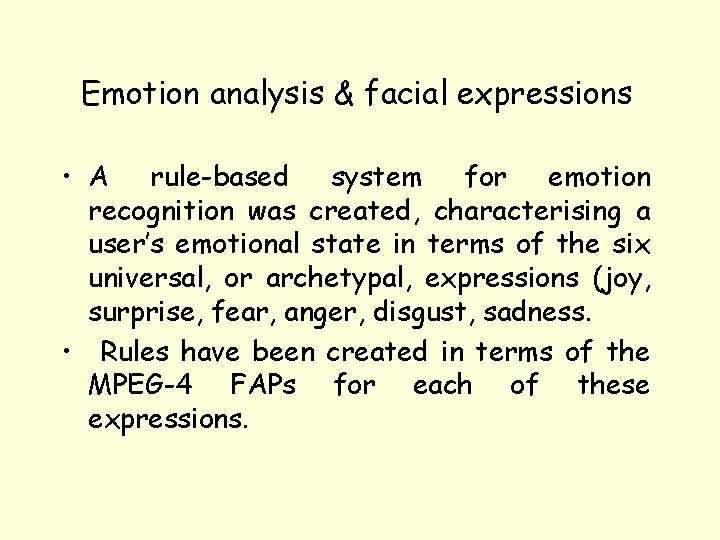

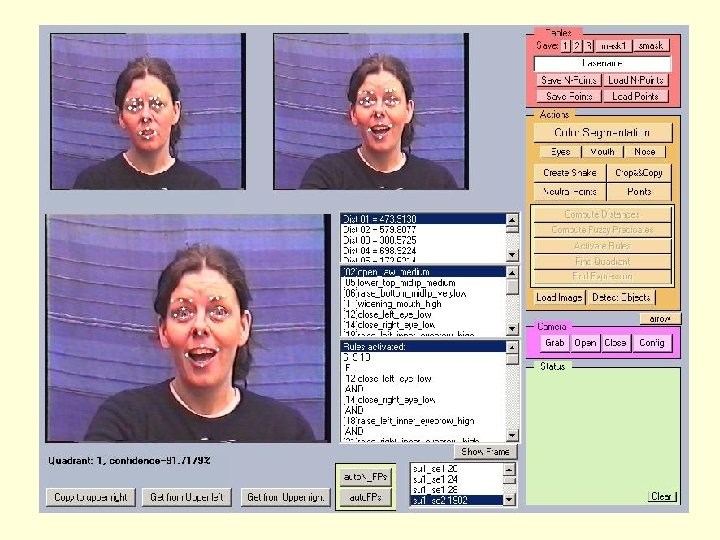

Emotion analysis & facial expressions

- A rule-based system for emotion recognition was created, characterising a user’s emotional state in terms of the six universal, or archetypal, expressions (joy, surprise, fear, anger, disgust, sadness).
- Rules have been created in terms of the MPEG-4 FAPs for each of these expressions.

![Sample Profiles of Anger](https://slidetodoc.com/presentation_image_h/27f2dfc70094812cf4677025ff996b9c/image-5.jpg)

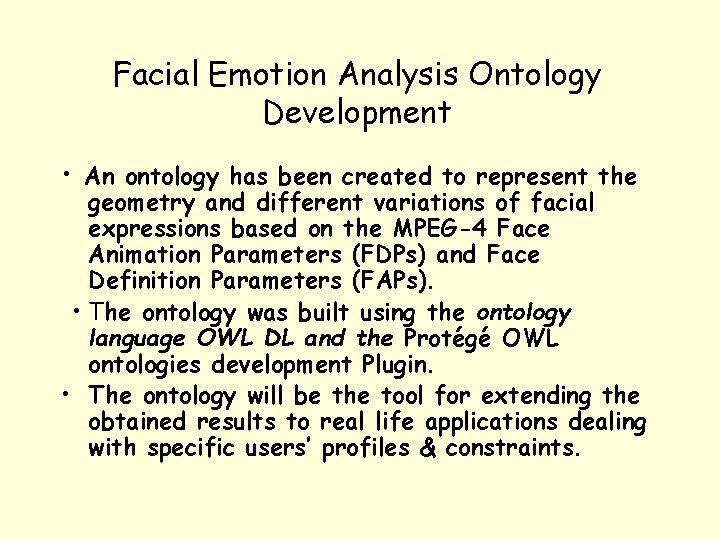

Sample Profiles of Anger

- A1: F4 [22, 124], F31 [-131, -25], F32 [-136, -34], F33 [-189, -109], F34 [183, -105], F35 [-101, -31], F36 [-108, -32], F37 [29, 85], F38 [27, 89]
- A2: F19 [-330, -200], F20 [-335, -205], F21 [200, 330], F22 [205, 335], F31 [-200, -80], F32 [-194, -74], F33 [-190, -70], F34 [-190, -70]
- A3: F19 [-330, -200], F20 [-335, -205], F21 [200, 330], F22 [205, 335], F31 [-200, -80], F32 [-194, -74], F33 [70, 190], F34 [70, 190]
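Each profile above is a set of per-FAP value ranges, so checking a measured face against a profile can be sketched as a range test. The following is a minimal illustration only, assuming that a profile matches when every listed FAP falls inside its range; the function names and the `sample` measurement are hypothetical, and only profiles A2 and A3 are transcribed here.

```python
# Profiles A2 and A3 as listed on the slide: FAP number -> (low, high) range.
ANGER_PROFILES = {
    "A2": {19: (-330, -200), 20: (-335, -205), 21: (200, 330), 22: (205, 335),
           31: (-200, -80), 32: (-194, -74), 33: (-190, -70), 34: (-190, -70)},
    "A3": {19: (-330, -200), 20: (-335, -205), 21: (200, 330), 22: (205, 335),
           31: (-200, -80), 32: (-194, -74), 33: (70, 190), 34: (70, 190)},
}


def matches_profile(fap_values, profile):
    """Return True if every FAP named in the profile is measured and in range.

    Assumption: all listed FAPs must match (a strict conjunction).
    """
    return all(
        fap in fap_values and low <= fap_values[fap] <= high
        for fap, (low, high) in profile.items()
    )


def matching_profiles(fap_values, profiles=ANGER_PROFILES):
    """Return the names of all profiles that the measurement satisfies."""
    return [name for name, profile in profiles.items()
            if matches_profile(fap_values, profile)]


# Hypothetical measurement, invented for illustration only.
sample = {19: -250, 20: -260, 21: 250, 22: 260,
          31: -100, 32: -100, 33: 100, 34: 100}
print(matching_profiles(sample))  # ['A3']
```

A2 and A3 differ only in the sign of the F33 and F34 ranges, which is why the sample satisfies one and not the other.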

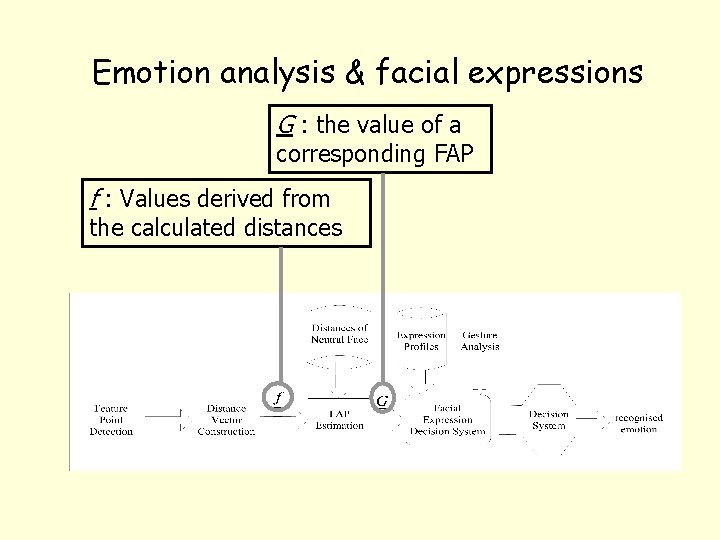

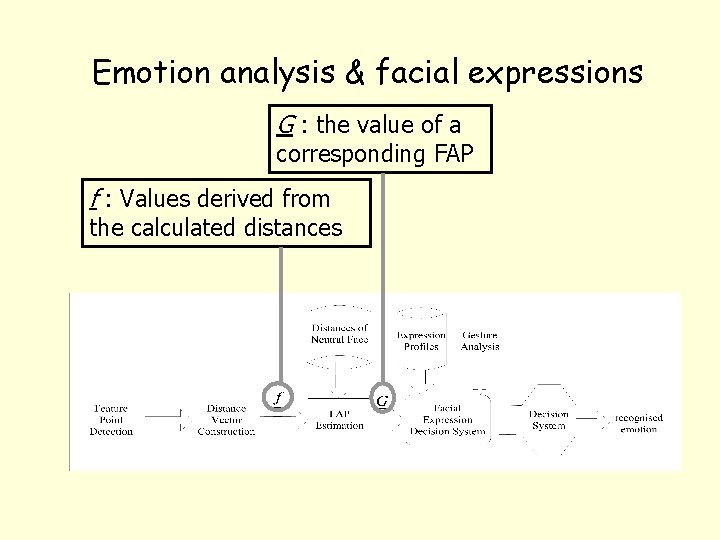

Emotion analysis & facial expressions

- G: the value of a corresponding FAP
- f: values derived from the calculated distances

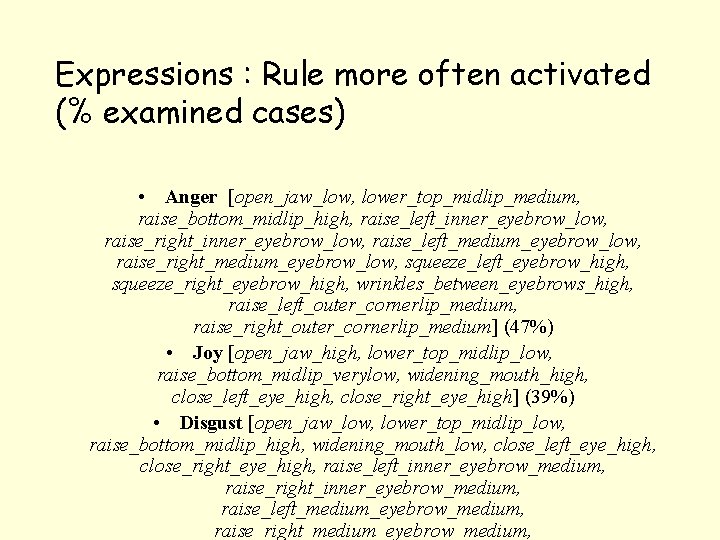

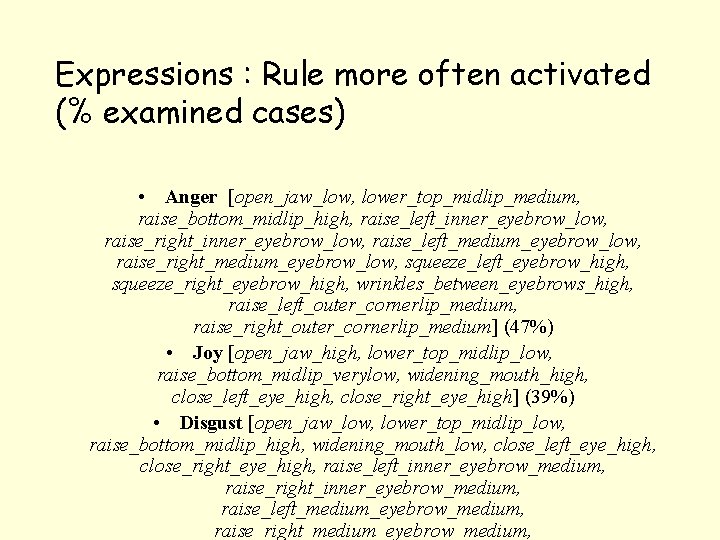

Expressions: rule most often activated (% of examined cases)

- Anger [open_jaw_low, lower_top_midlip_medium, raise_bottom_midlip_high, raise_left_inner_eyebrow_low, raise_right_inner_eyebrow_low, raise_left_medium_eyebrow_low, raise_right_medium_eyebrow_low, squeeze_left_eyebrow_high, squeeze_right_eyebrow_high, wrinkles_between_eyebrows_high, raise_left_outer_cornerlip_medium, raise_right_outer_cornerlip_medium] (47%)
- Joy [open_jaw_high, lower_top_midlip_low, raise_bottom_midlip_verylow, widening_mouth_high, close_left_eye_high, close_right_eye_high] (39%)
- Disgust [open_jaw_low, lower_top_midlip_low, raise_bottom_midlip_high, widening_mouth_low, close_left_eye_high, close_right_eye_high, raise_left_inner_eyebrow_medium, raise_right_inner_eyebrow_medium, raise_left_medium_eyebrow_medium, raise_right_medium_eyebrow_medium,

Expressions: rule most often activated (% of examined cases)

- Surprise [open_jaw_high, raise_bottom_midlip_verylow, widening_mouth_low, close_left_eye_low, close_right_eye_low, raise_left_inner_eyebrow_high, raise_right_inner_eyebrow_high, raise_left_medium_eyebrow_high, raise_right_medium_eyebrow_high, raise_left_outer_eyebrow_high, raise_right_outer_eyebrow_high, squeeze_left_eyebrow_low, squeeze_right_eyebrow_low, wrinkles_between_eyebrows_low] (71%)
- Neutral [open_jaw_low, lower_top_midlip_medium, raise_left_inner_eyebrow_medium, raise_right_inner_eyebrow_medium, raise_left_medium_eyebrow_medium, raise_right_medium_eyebrow_medium, raise_left_outer_eyebrow_medium, raise_right_outer_eyebrow_medium, squeeze_left_eyebrow_medium, squeeze_right_eyebrow_medium, wrinkles_between_eyebrows_medium,
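Each rule above is a conjunction of terms of the form `feature_level`. As a rough sketch of how such a rule could be evaluated, the code below splits each term into a feature and a linguistic level and reports the fraction of terms that agree with an observation. This crisp matching is a simplification swapped in for illustration; the slides describe a neurofuzzy system, whose membership functions are not given here. The function names and the `observed` values are hypothetical.

```python
# The Joy rule as listed on the slide.
JOY_RULE = [
    "open_jaw_high", "lower_top_midlip_low", "raise_bottom_midlip_verylow",
    "widening_mouth_high", "close_left_eye_high", "close_right_eye_high",
]


def parse_term(term):
    """Split 'raise_bottom_midlip_verylow' into (feature, level)."""
    feature, level = term.rsplit("_", 1)
    return feature, level


def rule_activation(rule, observed):
    """Fraction of rule terms whose level equals the observed level.

    Assumption: crisp equality per term, averaged over the rule. A fuzzy
    system would combine graded memberships instead.
    """
    terms = [parse_term(t) for t in rule]
    hits = sum(1 for feature, level in terms if observed.get(feature) == level)
    return hits / len(terms)


# Hypothetical observation: one eye feature disagrees with the rule.
observed = {
    "open_jaw": "high", "lower_top_midlip": "low",
    "raise_bottom_midlip": "verylow", "widening_mouth": "high",
    "close_left_eye": "high", "close_right_eye": "medium",
}
print(rule_activation(JOY_RULE, observed))  # 5 of 6 terms match
```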

Expression-based Emotion Analysis Results

- These rules were extended to deal with the 2-D continuous (activation-evaluation), four-quadrant emotional space.
- They were applied to data generated with QUB SALAS to test performance on real-life, emotionally expressive data sets.
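The four-quadrant view of the activation-evaluation space can be illustrated with a small helper. This is a sketch under stated assumptions: both axes are taken to be centred on zero, a value of exactly zero is assigned to the positive side, and the quadrant labels are invented for this example.

```python
def quadrant(activation, evaluation):
    """Map an (activation, evaluation) point to one of four quadrant labels.

    Assumptions: axes are centred at 0; zero counts as positive.
    """
    if activation >= 0:
        return "+A/+E" if evaluation >= 0 else "+A/-E"
    return "-A/+E" if evaluation >= 0 else "-A/-E"


print(quadrant(0.6, 0.7))    # high activation, positive evaluation
print(quadrant(0.8, -0.5))   # high activation, negative evaluation
print(quadrant(-0.4, -0.3))  # low activation, negative evaluation
```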

Clustering/Neurofuzzy Analysis of Facial Features

- The rule-based expression/emotion analysis system was extended to handle the specific characteristics of each user in continuous 2-D emotional analysis.
- Novel clustering and fuzzy reasoning techniques were developed and used to produce specific FAP ranges (around 10 clusters) for each user and to provide rules to handle them.
- Results on the continuous 2-D emotional framework with SALAS data indicate that good performance (reaching 80%) was obtained when the adapted systems were applied to each specific user.

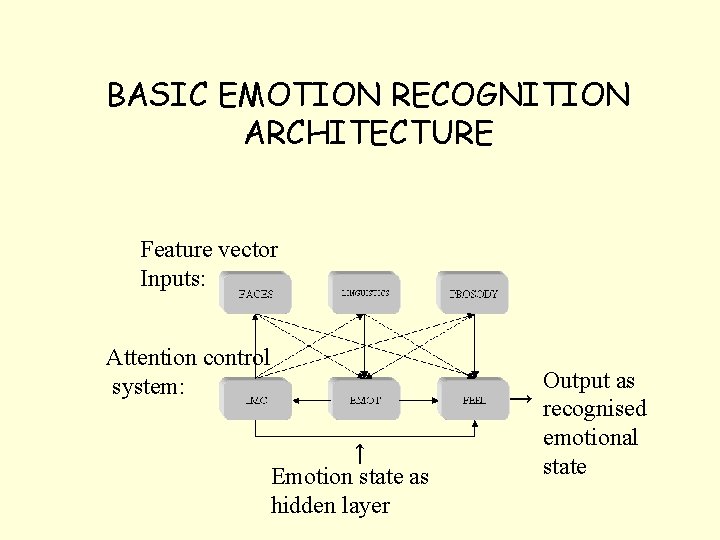

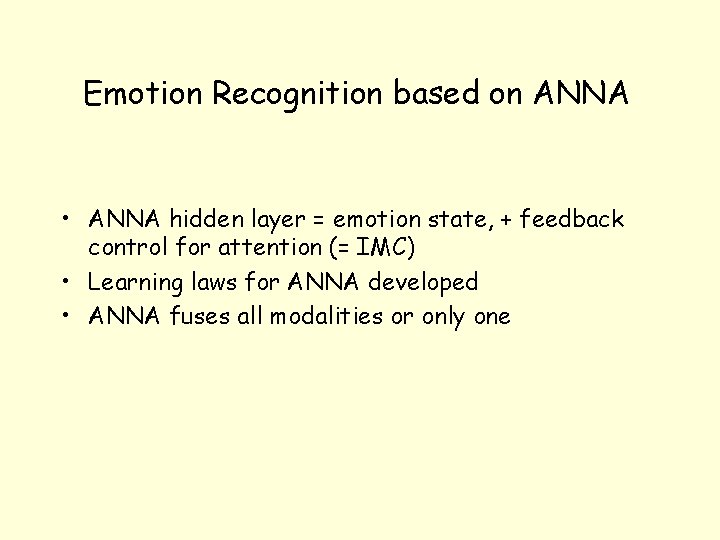

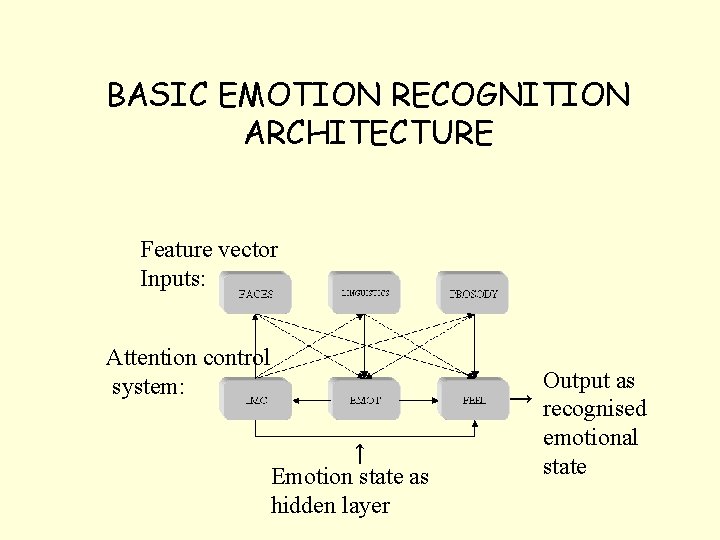

Direct Multimodal Recognition

- The attention-feedback recurrent neural network architecture (ANNA) was applied to emotion recognition based on all input modalities.
- Features extracted from all input modalities (linguistic, paralinguistic speech, FAPs) were obtained by processing and analysing common SALAS emotionally expressive data.

Emotion Recognition based on ANNA

- ANNA hidden layer = emotion state, + feedback control for attention (= IMC)
- Learning laws for ANNA developed
- ANNA fuses all modalities or only one

BASIC EMOTION RECOGNITION ARCHITECTURE

- Inputs: feature vector
- Attention control system
- Emotion state as hidden layer
- Output: recognised emotional state

Text Post-Processing Module

- Prof. Whissell compiled the ‘Dictionary of Affect in Language (DAL)’
- Mapping of ~9000 words → (activation-evaluation), based on students’ assessment
- Take words from meaningful segments obtained by pause detection → (activation-evaluation) space
- But humans use context to assign emotional content to words
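The word-to-space mapping described above can be sketched as a dictionary lookup over the words of one pause-delimited segment. The entries in `TOY_DAL` are placeholders invented for illustration and are not taken from the DAL; averaging the per-word scores is also an assumption, since the slides do not say how words are combined.

```python
# Placeholder entries: word -> (activation, evaluation).
# These numbers are invented for illustration, not real DAL values.
TOY_DAL = {
    "happy": (0.4, 0.9),
    "angry": (0.8, -0.7),
    "tired": (-0.6, -0.2),
}


def segment_affect(words, dal=TOY_DAL):
    """Average (activation, evaluation) over the words found in the dictionary.

    Returns None when no word of the segment is covered.
    Assumption: a plain mean is used; context is ignored entirely.
    """
    scored = [dal[w.lower()] for w in words if w.lower() in dal]
    if not scored:
        return None
    n = len(scored)
    activation = sum(a for a, _ in scored) / n
    evaluation = sum(e for _, e in scored) / n
    return activation, evaluation


print(segment_affect(["I", "am", "happy"]))
print(segment_affect(["the", "of"]))  # no covered words
```

A context-free lookup like this is exactly what the last bullet cautions about: the same word can carry different emotional content depending on its surroundings.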

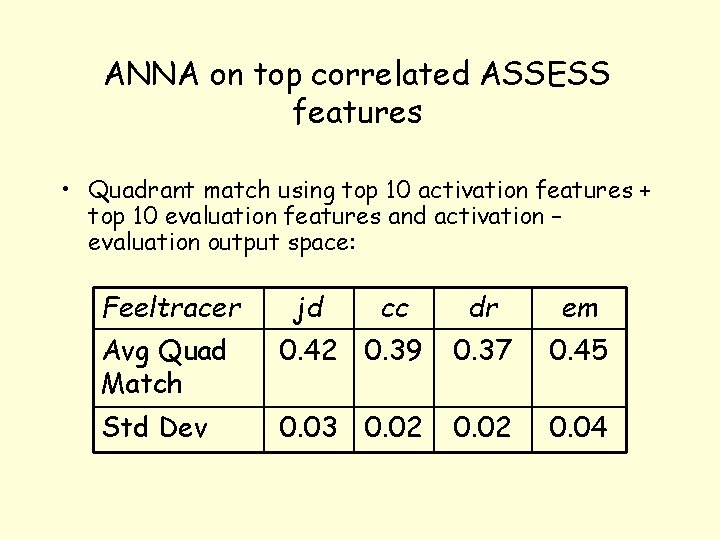

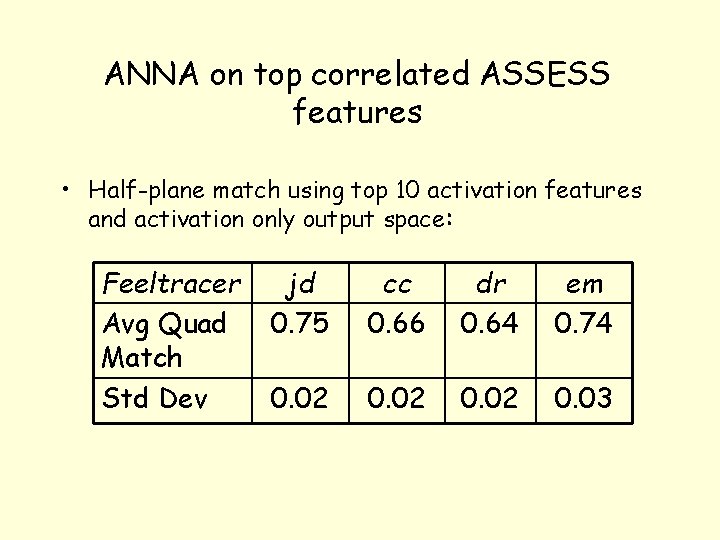

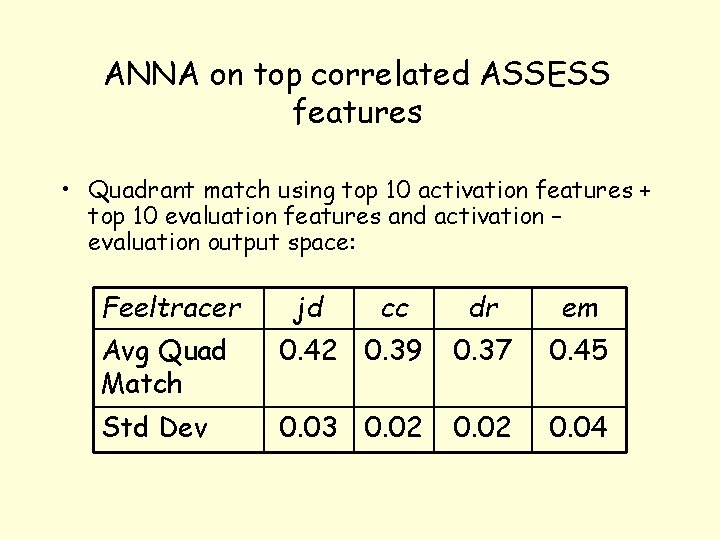

ANNA on top correlated ASSESS features

- Quadrant match using the top 10 activation features + top 10 evaluation features, with an activation-evaluation output space:

| Feeltracer | jd | cc | dr | em |
|---|---|---|---|---|
| Avg Quad Match | 0.42 | 0.39 | 0.37 | 0.45 |

Std Dev (three values recoverable): 0.03, 0.02, 0.04

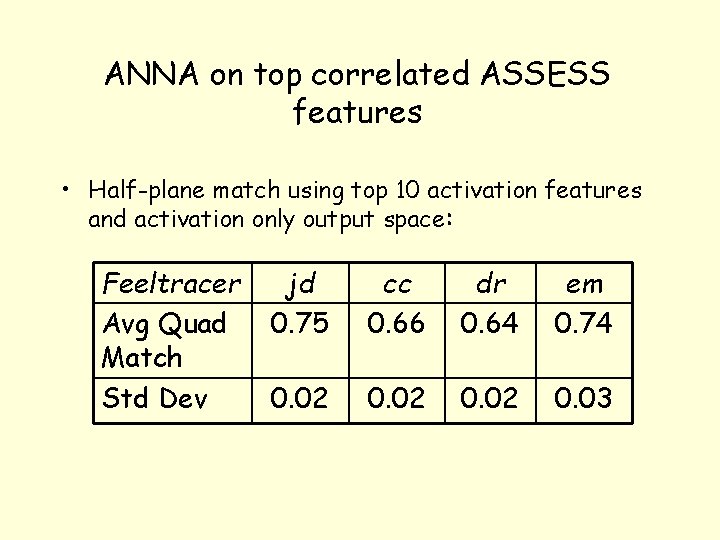

ANNA on top correlated ASSESS features

- Half-plane match using the top 10 activation features, with an activation-only output space:

| Feeltracer | jd | cc | dr | em |
|---|---|---|---|---|
| Avg Quad Match | 0.75 | 0.66 | 0.64 | 0.74 |

Std Dev (two values recoverable): 0.02, 0.03
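The two scores reported above can be read as agreement rates between the network output and the FeelTracer supervisor. The sketch below assumes that a half-plane match means the predicted and target activation have the same sign, and that a quadrant match means both activation and evaluation signs agree; the slides do not spell out these definitions, and the function names are hypothetical.

```python
def sign(x):
    """Return 1 for non-negative values and -1 otherwise (zero counts as positive)."""
    return 1 if x >= 0 else -1


def half_plane_match(predicted_a, target_a):
    """Fraction of samples whose predicted activation sign equals the target sign.

    Assumes equal-length, non-empty sequences.
    """
    hits = sum(1 for p, t in zip(predicted_a, target_a) if sign(p) == sign(t))
    return hits / len(target_a)


def quadrant_match(predicted, target):
    """Fraction of (activation, evaluation) pairs falling in the same quadrant."""
    hits = sum(
        1
        for (pa, pe), (ta, te) in zip(predicted, target)
        if sign(pa) == sign(ta) and sign(pe) == sign(te)
    )
    return hits / len(target)


# Invented toy values, for illustration only.
print(half_plane_match([0.2, -0.1, 0.5, -0.3], [0.4, 0.3, 0.1, -0.9]))
print(quadrant_match([(0.2, 0.1), (-0.3, 0.4)], [(0.5, 0.9), (-0.1, -0.2)]))
```

With four quadrants but only two half-planes, the chance level differs between the two scores, which is worth keeping in mind when comparing the two tables.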

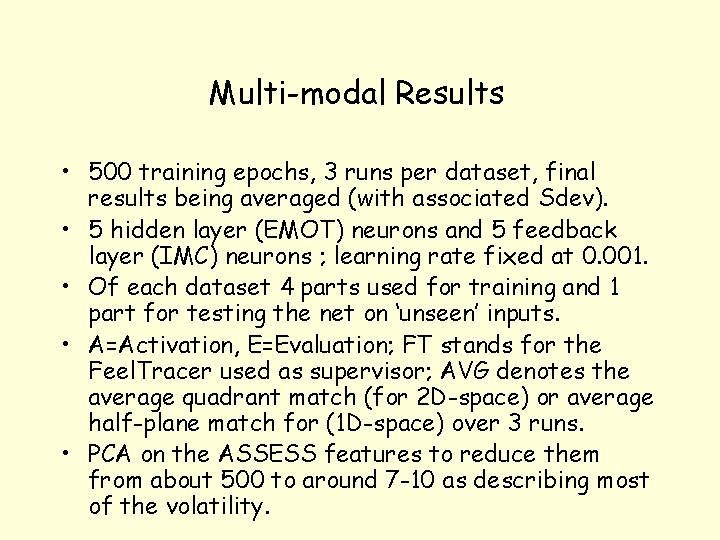

Multi-modal Results

- 500 training epochs, 3 runs per dataset, with the final results averaged (and the associated standard deviation reported).
- 5 hidden layer (EMOT) neurons and 5 feedback layer (IMC) neurons; learning rate fixed at 0.001.
- For each dataset, 4 parts were used for training and 1 part for testing the net on ‘unseen’ inputs.
- A = Activation, E = Evaluation; FT stands for the FeelTracer used as supervisor; AVG denotes the average quadrant match (for the 2-D space) or the average half-plane match (for the 1-D space) over 3 runs.
- PCA was applied to the ASSESS features to reduce them from about 500 to around 7-10 components, which describe most of the variability.
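The evaluation protocol above (4 parts for training, 1 for testing, 3 runs averaged with a standard deviation) can be sketched as follows. The slides do not say whether the split was contiguous or random, nor whether the sample or population standard deviation was used; this example assumes a contiguous split and the sample standard deviation, and the per-run scores are invented.

```python
from statistics import mean, stdev


def split_four_to_one(samples):
    """Use the first 4/5 of the samples for training and the rest for testing.

    Assumption: a contiguous split; the original protocol may have shuffled.
    """
    cut = (len(samples) * 4) // 5
    return samples[:cut], samples[cut:]


def summarise_runs(scores):
    """Return (average, sample standard deviation) over repeated runs."""
    return mean(scores), stdev(scores)


train, test = split_four_to_one(list(range(10)))
print(len(train), len(test))  # 8 2

# Invented match rates from 3 hypothetical runs.
avg, sdev = summarise_runs([0.74, 0.76, 0.75])
print(round(avg, 2), round(sdev, 2))
```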

Multi-modal Results: Using A Output only

- Classification using the A output only is relatively high (up to 98% in three cases, with two more at 90% or above).
- Effectiveness of data from FeelTracer EM: average success rates of (86%, 88%, 98%, 89%, 95%, 98%) for 7 choices of input combinations (ass/fap/dal/a+f/d+f/a+d+f).
- Success is also high with FeelTracer JD.
- Values are consistently lower for FeelTracer DR (all in the 60-66% band) and for CC (64%, 54%, 75%, 63%, 73%, 73%).

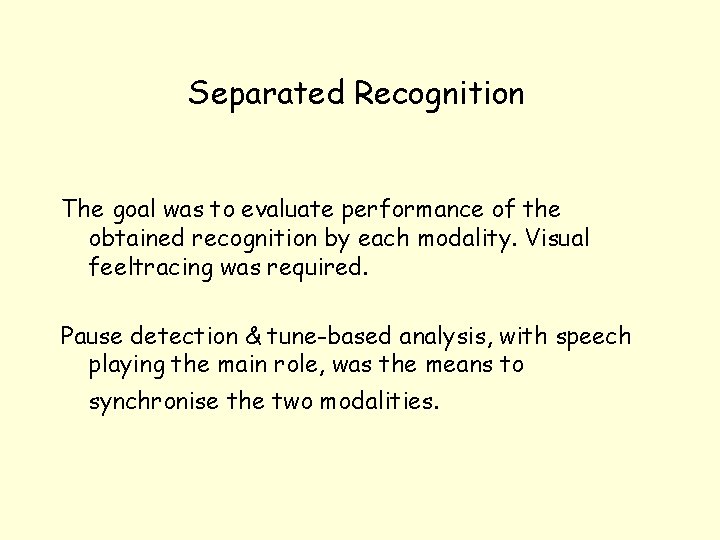

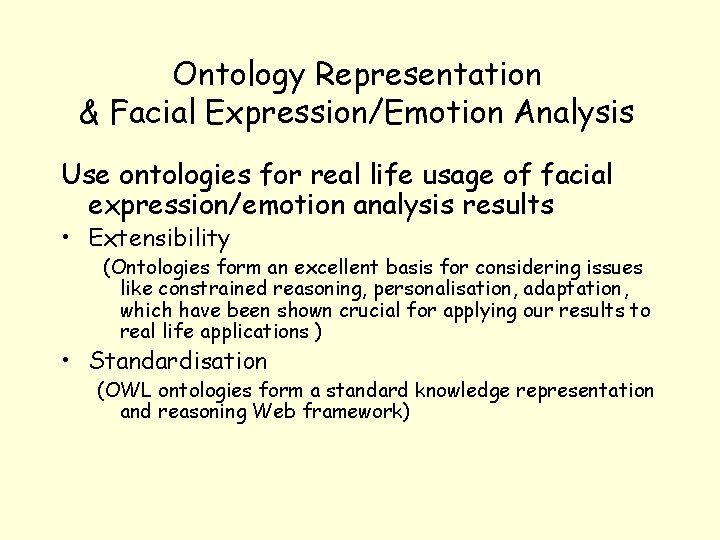

Ontology Representation & Facial Expression/Emotion Analysis

Use ontologies for real-life usage of facial expression/emotion analysis results:

- Extensibility: ontologies form an excellent basis for considering issues such as constrained reasoning, personalisation and adaptation, which have been shown to be crucial for applying our results to real-life applications.
- Standardisation: OWL ontologies form a standard Web framework for knowledge representation and reasoning.

Facial Emotion Analysis Ontology Development

- An ontology has been created to represent the geometry and different variations of facial expressions, based on the MPEG-4 Face Animation Parameters (FAPs) and Face Definition Parameters (FDPs).
- The ontology was built using the ontology language OWL DL and the Protégé OWL plugin for ontology development.
- The ontology will be the tool for extending the obtained results to real-life applications dealing with specific users’ profiles and constraints.

Concept and Relation Examples

Concepts

- Face_Animation_Parameter
- Face_Definition_Parameter
- Facial_Expression

Relations

- is_Defined_By
- is_Animated_By
- has_Facial_Expression