
- Slides: 61

Multimedia Operating Systems Chapter 9

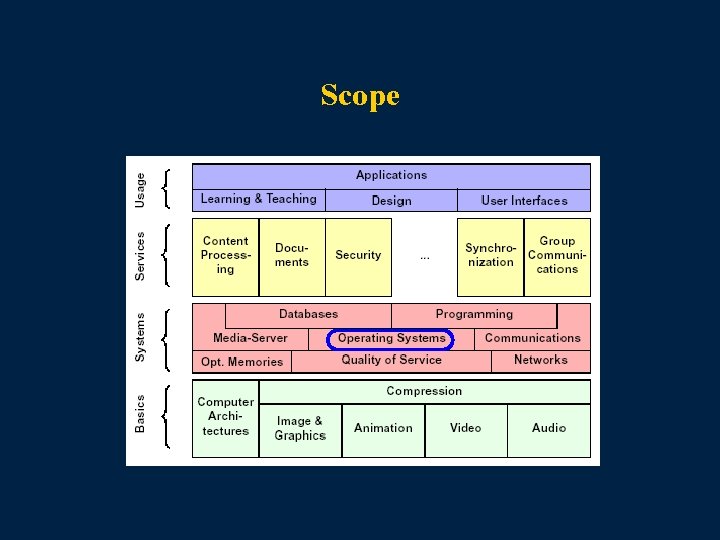

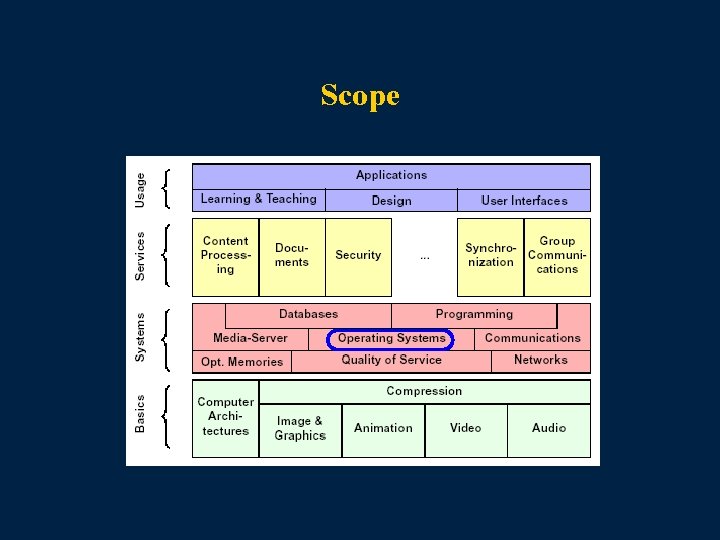

Scope

Contents

1. Real-Time Characterization
2. Resource Scheduling: Motivation
3. Properties of Multimedia Streams
4. MM Scheduling Algorithms
   a. Deadline-Based Scheduling – EDF
   b. Rate-Monotonic Scheduling
   c. Deadline-Based vs. Rate-Monotonic Scheduling

Multimedia Operating Systems • Resource management – How to coordinate the processing of all operating system functions in order to meet QoS requirements. • Process management – How to schedule processes so that they meet their deadlines • Rate monotonic scheduling • Earliest deadline first • Scheduling strategies

Continuous Media • A distributed integrated computer system supports continuous media (CM) if – The system stores and transmits CM in digital form – CM is handled by the same hardware (CPU, network, I/O) as the other data – CM data is handled in the same software framework

Considerations • Perception of audio and video media in a natural, error-free way. • Real-time processing of continuous media data • Main tasks of operating systems are – Resource reservation – Communication and synchronization requirements and timing relations among different media – Memory management: access to data with guaranteed timing delay and efficient data manipulation functions. – Database management: file systems should allow transparent and guaranteed continuous retrieval of audio and video. – Device management: integration of audio and video devices in a similar way to other I/O devices.

Real-Time Characterization Real-time process: “A process which delivers the results of the processing in a given time-span.” Real-time system: “A system in which the correctness of a computation depends not only on obtaining the right result, but also upon providing the result on time.”

Real-Time Characterization • Programs for the processing of data must be available during the entire run time of the system. • The real-time system receives information from the environment spontaneously (at random) or at periodic intervals (deterministically) and delivers it to the environment within certain time constraints. • The data may require processing at a point in time known a priori, or on demand at a time not known in advance. • The main characteristic of a real-time system is the correctness (freedom from errors and timeliness) of its computations. • Timing and logical dependencies among related tasks that are processed at the same time must also be considered.

Real-time application: • Example: Control of temperature in a chemical plant – Driven by interrupts from an external device – These interrupts occur at irregular and unpredictable intervals • Example: control of flight simulator – Execution at periodic intervals – Scheduled by timer-service which the application requests from the OS

Deadlines • A deadline represents the latest acceptable time for the presentation of a processing result Soft deadlines: • in some cases the deadline may be missed – not too many deadlines are missed – deadlines are not missed by much • a result presented late still has some value • Example: train/plane arrival and departure Hard deadlines: • should never be violated – violation means system failure • a result presented too late has no value Critical: • violation means severe (potentially catastrophic) system failure

Real-Time Operating System – Requirements • Processing guarantees for time-critical tasks • A high degree of schedulability: the resource utilization at or below which the deadline of each time-critical task can be taken into account. • Stability under transient load – Under system overload, processing of time-critical tasks must still be ensured.

Real-Time and Multimedia • Audio and video consist of periodically changing continuous media data (video frames or audio samples). – Jitter is only allowed before the final presentation to the user. • Each logical data unit must be presented by a well-determined deadline.

Real-Time and Multimedia Real-time requirements of multimedia systems: • The fault-tolerance requirements of multimedia systems are usually less strict than those of real-time systems that have a direct physical impact. • Missing a deadline is not a severe failure. • A sequence of digital continuous media data is the result of periodically sampling a sound or image signal, and schedulability is easier to establish for periodic tasks. • The bandwidth demand of continuous media need not be fixed a priori. The quality may be adjusted according to the available bandwidth by changing the encoding parameters. This is known as scalable video.

Resource Management • Multimedia systems with integrated audio and video are at the limit of their capacity, even with data compression and utilization of new technologies. • In an integrated distributed multimedia system, several applications compete for system resources. • The system must employ adequate resource allocation schedules to meet the needs of the applications. A resource is therefore first allocated and then managed.

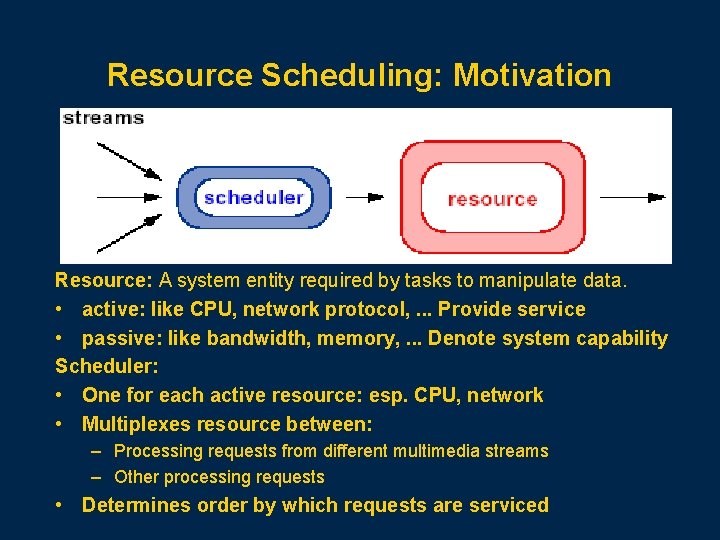

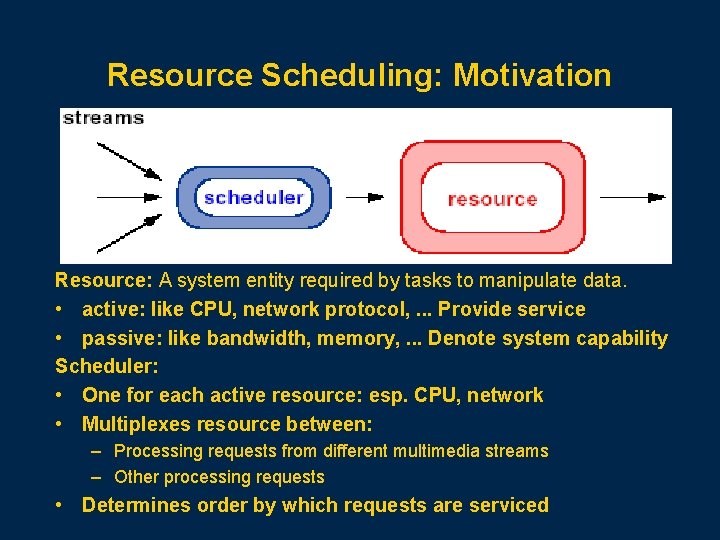

Resource Scheduling: Motivation Resource: A system entity required by tasks to manipulate data. • active: like CPU, network protocol, ... Provide a service • passive: like bandwidth, memory, ... Denote a system capability Scheduler: • One for each active resource, especially CPU and network • Multiplexes the resource between: – Processing requests from different multimedia streams – Other processing requests • Determines the order in which requests are serviced

Requirements Resource management maps the requirements onto the respective capacity. The specifications for the transmission and processing requirements of the applications (QoS) are as follows: • The throughput: the size and rate of data needed to satisfy the requirement • Local and global (end-to-end) delay: – The delay at the resource is the maximum time span for the completion of a certain task at this resource. – The end-to-end delay is the total delay for a data unit to be transmitted from the source to its destination. • The jitter determines the maximum allowed variance in the arrival of data at the destination. • The reliability defines the error detection and correction mechanisms used for the transmission and processing of multimedia tasks.

Components and Phases Resource allocation and management may be based on the interaction between clients and their respective resource managers. A client requests an allocation by specifying its QoS requirements. The resource manager checks its resources and decides whether the request can be met. Since existing reservations are stored, each client's share of the resource capacity is guaranteed. During the connection establishment phase, the QoS parameters are usually negotiated between the requester (the client) and the resource manager (resource reservation protocols like ST-II are needed).

Phases of Resource Reservation and Management Process • Schedulability test: Check whether the remaining capacity is sufficient to meet the request. • Quality of Service calculation: After the schedulability test, the resource manager calculates the best performance the resource can guarantee for the new process. • Resource reservation: Allocate the required capacity according to the QoS parameters. • Resource scheduling: Incoming requests are scheduled according to the QoS guarantees.
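The first three phases can be sketched as a toy admission controller. This is only an illustration under simplifying assumptions: the resource is reduced to a single scalar capacity, and the names `ResourceManager`, `schedulability_test`, `best_guarantee` and `reserve` are hypothetical, not taken from the slides or from any real reservation protocol. The fourth phase (scheduling of admitted requests) is not modelled here.

```python
from dataclasses import dataclass, field


@dataclass
class ResourceManager:
    """Toy manager for one resource with a scalar capacity (hypothetical units)."""
    capacity: float
    reservations: dict = field(default_factory=dict)

    def remaining(self) -> float:
        # Stored reservations determine how much capacity is still free.
        return self.capacity - sum(self.reservations.values())

    def schedulability_test(self, demand: float) -> bool:
        # Phase 1: is the remaining capacity enough for this request?
        return demand <= self.remaining()

    def best_guarantee(self, demand: float) -> float:
        # Phase 2: the best share the resource could still guarantee.
        return min(demand, self.remaining())

    def reserve(self, client: str, demand: float) -> bool:
        # Phase 3: record the reservation only if the test passes.
        if not self.schedulability_test(demand):
            return False
        self.reservations[client] = demand
        return True


manager = ResourceManager(capacity=1.0)
accepted_audio = manager.reserve("audio", 0.3)
accepted_video = manager.reserve("video", 0.6)
accepted_extra = manager.reserve("extra", 0.2)  # more than what is left
```

With these made-up demands the first two requests are admitted and the third is rejected, because only about 0.1 of the capacity remains.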

Allocation Scheme Pessimistic Approach: • Allocations are made for the worst case (longest processing time, largest bandwidth) • QoS is guaranteed • Resources are underutilized Optimistic Approach: • Resources are reserved according to the average workload • Overloading may cause failures • Resources are used efficiently

Continuous Media Resource Model • A resource can be a single schedulable device like CPU or a complex subsystem like a network. • Linear Bounded Arrival Process (LBAP) : A distributed system is decomposed into chain of resources traversed by the messages on their end-to-end path. • The data streams consist of LDUs (messages). Various data streams are independent of each other. • A burst of messages consist of messages that arrived ahead of schedule.

LBAP Model • LBAP is a message arrival process at a resource defined by three parameters. – M = Maximum message size (byte/message) – R = Maximum message rate (message/second) – B = Maximum burstiness (message) (workahead limit)

LBAP Model Example: • Two workstations are connected by a LAN. A CD player is connected to one of the workstations. Single-channel audio data are transferred from the CD player over the network to the other computer. At this station the audio data are delivered to a speaker. The audio signal is sampled with a frequency of 44.1 kHz. Each sample is coded with 16 bits. This results in a data rate of: Rbyte = 44100 Hz x 16 bits / (8 bits/byte) = 88200 bytes/s

LBAP Model The samples on a CD are assembled into frames. These frames are the audio messages to be transmitted. 75 of these audio messages are transmitted per second according to the CD-format standard. Therefore the maximum message size is: M = (88200 bytes/s) / (75 messages/s)= 1176 bytes/message Up to 12000 bytes are assembled into one packet and transmitted over the LAN. 12000 bytes / (1176 bytes/message) >= 10 messages = B

LBAP Model It follows that: M = 1176 bytes/message R = 75 messages/s B = 10 messages
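The arithmetic of the CD example can be replayed in a few lines. This is a minimal sketch; the constant names are chosen here for illustration, and the numbers are the ones given on the slides.

```python
SAMPLE_RATE_HZ = 44_100        # sampling frequency of the audio signal
BITS_PER_SAMPLE = 16           # single channel, 16 bits per sample
MESSAGES_PER_SECOND = 75       # audio frames per second in the CD format
PACKET_BYTES = 12_000          # bytes assembled into one LAN packet

byte_rate = SAMPLE_RATE_HZ * BITS_PER_SAMPLE // 8   # bytes/s
M = byte_rate // MESSAGES_PER_SECOND                # bytes/message
R = MESSAGES_PER_SECOND                             # messages/s
B = PACKET_BYTES // M                               # whole messages per packet

print(byte_rate, M, R, B)  # 88200 1176 75 10
```

Integer division is used for B because only whole messages fit into a packet (12000 / 1176 is about 10.2).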

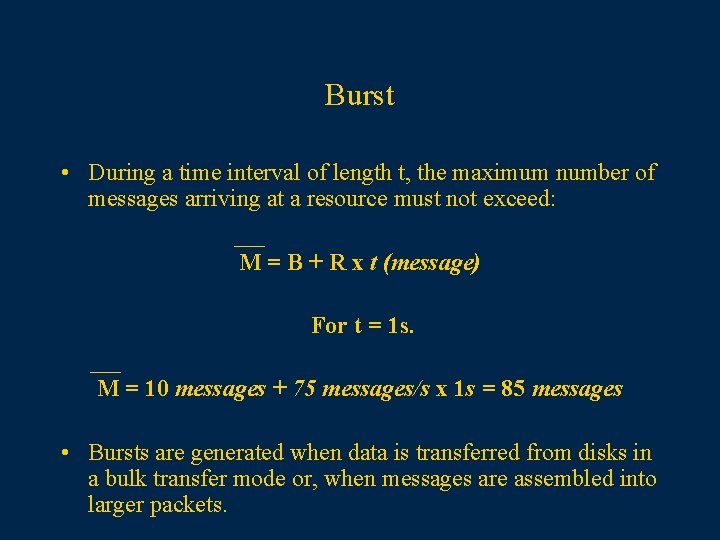

Burst • During a time interval of length t, the maximum number of messages arriving at a resource must not exceed: $\overline{M} = B + R \times t$ (messages). For t = 1 s: $\overline{M}$ = 10 messages + 75 messages/s x 1 s = 85 messages • Bursts are generated when data is transferred from disks in bulk transfer mode or when messages are assembled into larger packets.

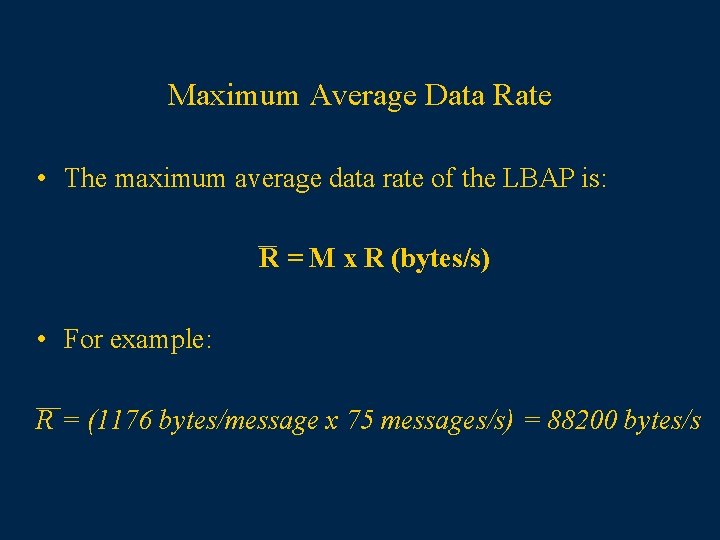

Maximum Average Data Rate • The maximum average data rate of the LBAP is: $\overline{R} = M \times R$ (bytes/s) • For example: $\overline{R}$ = 1176 bytes/message x 75 messages/s = 88200 bytes/s

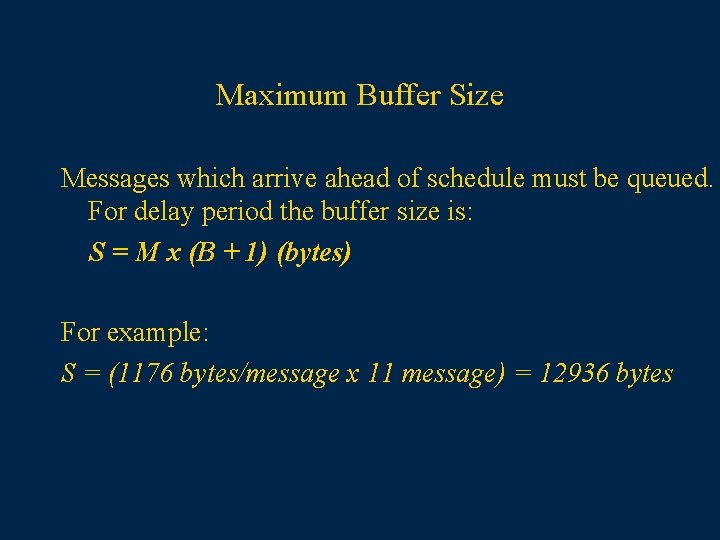

Maximum Buffer Size Messages which arrive ahead of schedule must be queued. For the delay period, the buffer size is: S = M x (B + 1) (bytes) For example: S = 1176 bytes/message x 11 messages = 12936 bytes
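The three quantities derived from the LBAP parameters (burst bound, maximum average data rate and maximum buffer size) can be collected in small helper functions. This is a sketch with function names invented for this example; the formulas are the ones stated on the preceding slides.

```python
def max_messages(B: int, R: float, t: float) -> float:
    """Upper bound on messages arriving in an interval of length t seconds."""
    return B + R * t


def max_average_data_rate(M: int, R: float) -> float:
    """Maximum average data rate in bytes/s."""
    return M * R


def max_buffer_size(M: int, B: int) -> int:
    """Buffer space in bytes for messages that arrive ahead of schedule."""
    return M * (B + 1)


# Parameters of the CD audio example: M = 1176 bytes, R = 75 msg/s, B = 10 msg
burst_bound = max_messages(B=10, R=75, t=1)     # 85 messages
data_rate = max_average_data_rate(M=1176, R=75) # 88200 bytes/s
buffer_bytes = max_buffer_size(M=1176, B=10)    # 12936 bytes
```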

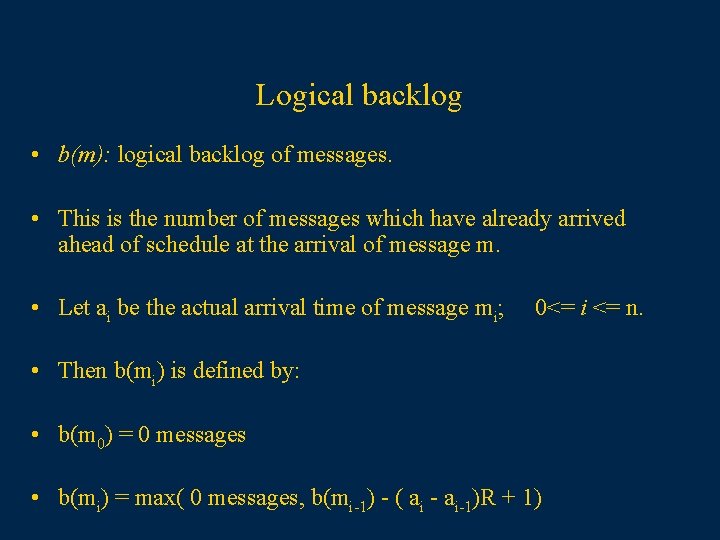

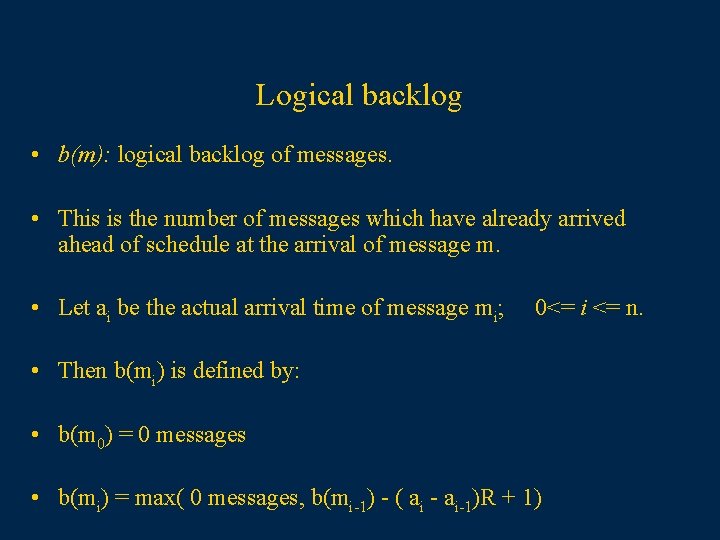

Logical backlog • b(m): logical backlog of messages. • This is the number of messages which have already arrived ahead of schedule at the arrival of message m. • Let $a_i$ be the actual arrival time of message $m_i$, $0 \le i \le n$. • Then $b(m_i)$ is defined by: • $b(m_0) = 0$ messages • $b(m_i) = \max\bigl(0 \text{ messages},\; b(m_{i-1}) - (a_i - a_{i-1})\,R + 1\bigr)$

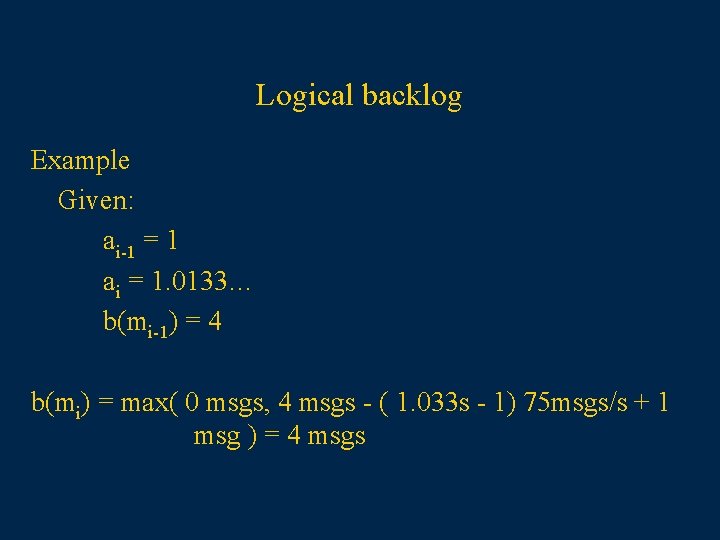

Logical backlog Example Given: $a_{i-1}$ = 1 s, $a_i$ = 1.0133… s, $b(m_{i-1})$ = 4 messages. Then $b(m_i)$ = max(0 msgs, 4 msgs - (1.0133 s - 1 s) x 75 msgs/s + 1 msg) = 4 msgs

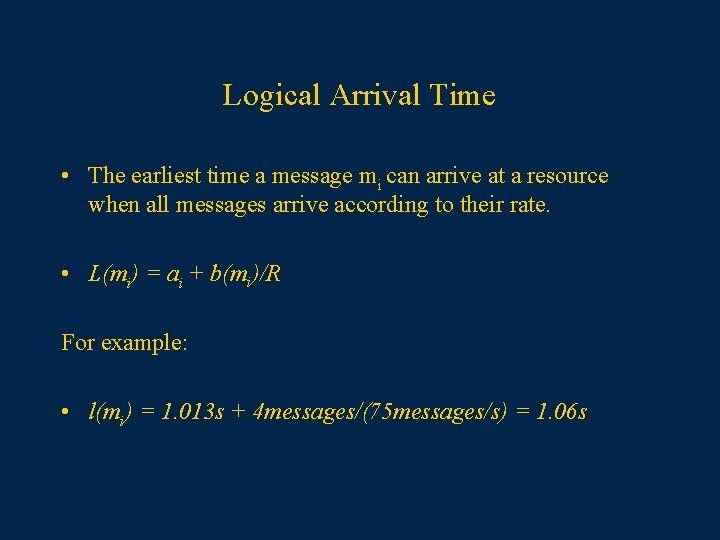

Logical Arrival Time • The earliest time a message $m_i$ can arrive at a resource when all messages arrive according to their rate. • $l(m_i) = a_i + b(m_i)/R$ For example: • $l(m_i)$ = 1.0133 s + 4 messages / (75 messages/s) ≈ 1.067 s
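The backlog recurrence and the logical arrival time can be checked numerically. The sketch below follows the two definitions above; the function names are illustrative, and the second arrival is taken as exactly 1/75 s after the first, which is what the rounded value 1.0133 s stands for.

```python
def logical_backlog(prev_backlog: float, prev_arrival: float,
                    arrival: float, rate: float) -> float:
    """b(m_i) = max(0, b(m_{i-1}) - (a_i - a_{i-1}) * R + 1)."""
    return max(0.0, prev_backlog - (arrival - prev_arrival) * rate + 1)


def logical_arrival(arrival: float, backlog: float, rate: float) -> float:
    """l(m_i) = a_i + b(m_i) / R."""
    return arrival + backlog / rate


R = 75                      # messages per second
a_prev = 1.0                # arrival time of m_{i-1} in seconds
a_i = a_prev + 1 / R        # m_i arrives exactly one period later

b_i = logical_backlog(prev_backlog=4, prev_arrival=a_prev, arrival=a_i, rate=R)
l_i = logical_arrival(a_i, b_i, R)
print(round(b_i, 6), round(l_i, 4))  # 4.0 1.0667
```

A message that arrives exactly one period after its predecessor leaves the backlog unchanged: one period of backlog is consumed and the new message adds one.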

Guaranteed Logical Delay • The maximum time between the logical arrival time of m and its latest completion time. It results from the waiting time and the processing time of the message. • The deadline d(m) is derived from the delay for the processing of a message m at a resource. The deadline is the sum of the logical arrival time and its logical delay.

Workahead Messages • If a message arrives ahead of schedule and the resource is idle, it can be processed immediately. This is called a workahead message. • A maximum workahead time A can be specified by the application for each process. This results in a maximum workahead limit W: W = A x R For example: A = 0.04 s, W = 0.04 s x 75 messages/s = 3 messages

Process Management • The process manager maps single processes onto resources according to a specific scheduling policy such that all processes meet their requirements. A process can be in one of the following states: • Idle: no resource is assigned to the process • Waiting: a process is waiting for an event • Ready to run: all necessary resources are assigned to the process. The process is waiting for execution • Running: a process runs as long as the processor is assigned to it

Process management • The process manager is the scheduler. • It transfers a process into the ready-to-run state by assigning it a position in the respective queue of the dispatcher. • The dispatcher manages the transition from ready-to-run to running. The next process to run is chosen according to a priority policy.

Real Time Process Management in Conventional Operating Systems: OS/2 Threads A thread is the dispatchable unit of execution in the operating system. A thread belongs to exactly one address space. Each thread has its own stack, register values, and dispatch state. Each thread belongs to one of the following priority classes: – The time-critical class: threads that require immediate attention – The fixed-high class: threads that require good responsiveness – The regular class: normal tasks – The idle-time class: threads with the lowest priority

Priorities • Within each class 32 priorities exist • Through time slicing threads with equal priority have equal chance for execution • Threads are preemptive • Threads of the regular class may be subject to dynamic rise of priority as a function of the waiting time.

Physical Device Driver as Process Manager • In OS/2, applications with real-time requirements can run as Physical Device Drivers (PDD) at ring 0 (kernel mode). • As soon as an interrupt occurs at a device, the PDD gets control and handles the interrupt. A PDD is bound to its device. • This is insufficient for MM applications, where messages may arrive at different adapter cards.

Enhanced System Scheduler as Process Manager • All time-critical threads can run in the application layer (ring 3) with higher priority than others. • The scheduler controls and coordinates threads according to the adopted scheduling algorithm and the respective processing requirements. • The resource manager – determines feasible schedules – takes care of the QoS calculation – reserves resources • The use of an internal scheduling strategy and resource management provides processing guarantees.

Meta-Scheduler as Process Manager • Continuous media require processing at predetermined times, and the processing must be completed by certain deadlines. • System components (CPU, scheduler, network, file system, etc.) must offer performance guarantees. • The meta-scheduler coordinates these components by negotiating end-to-end guarantees.

Real Time Processing Requirements • In MM systems continuous and discrete (non time critical) data are processed concurrently. • For scheduling of MM tasks two conflicting goals must be considered. – An uncritical process should not suffer from starvation because time critical processes are executed. – A time critical process must never be subject to priority inversion.

Traditional vs. Real-Time Scheduling • The goal of traditional scheduling on time-sharing computers is – optimal throughput – optimal resource utilization – fair queuing • The main goal of real-time scheduling is to allow as many time-critical tasks as possible to be processed in time according to their deadlines • The scheduling algorithm must map tasks onto resources such that all tasks meet their time requirements.

Real Time Scheduling: System Model • The essential component of real time scheduling algorithms are: – resources – tasks – scheduling goals • Task is a schedulable entity of the system - corresponds to threads • A task is characterized by its: – timing constraints – resource requirements

Scheduler Requirements Support QoS scheme: • Allow calculation of QoS guarantees • Enforce given QoS guarantees – support high, continuous media data throughput – take deadlines into account Account for stream-specific properties: • Streams with periodic processing requirements – real-time requests • Streams with aperiodic requirements – should not starve multimedia service – should not be starved by multimedia service

Overall Approach Adapt real-time scheduling to continuous media • Deadline-based (EDF) and rate-monotonic (RM) • Preemptive and non-preemptive

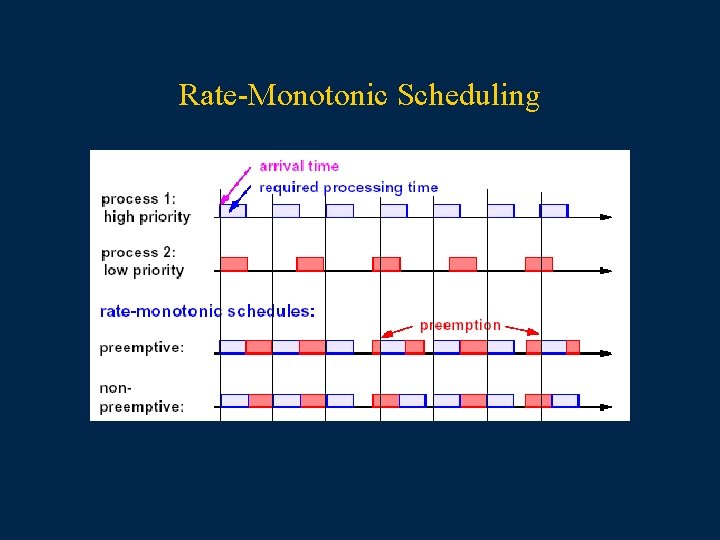

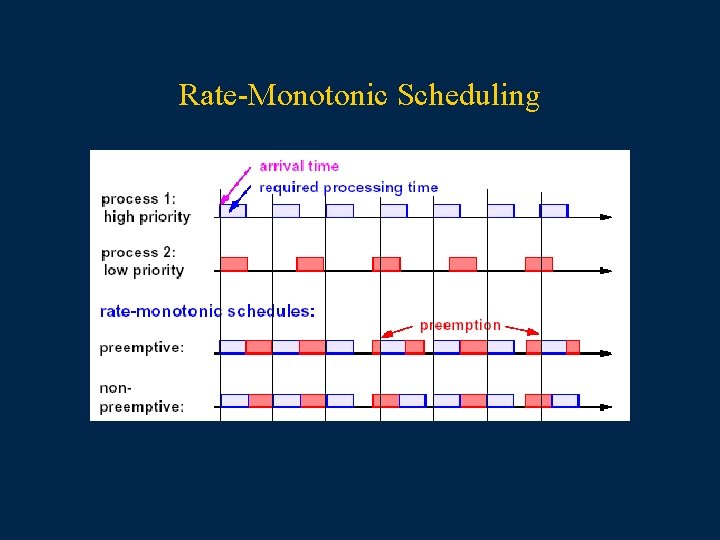

Preemptions Preemptive scheduling: • Running process is preempted when a process with higher priority arrives • For CPU scheduling: often directly supported by the operating system • Overhead for process switching Non-preemptive scheduling: • High-priority process must wait until the running process finishes • Inherent property of, e.g., the network • Less frequent process switches • Non-preemptive scheduling can be a better choice if processing times are short

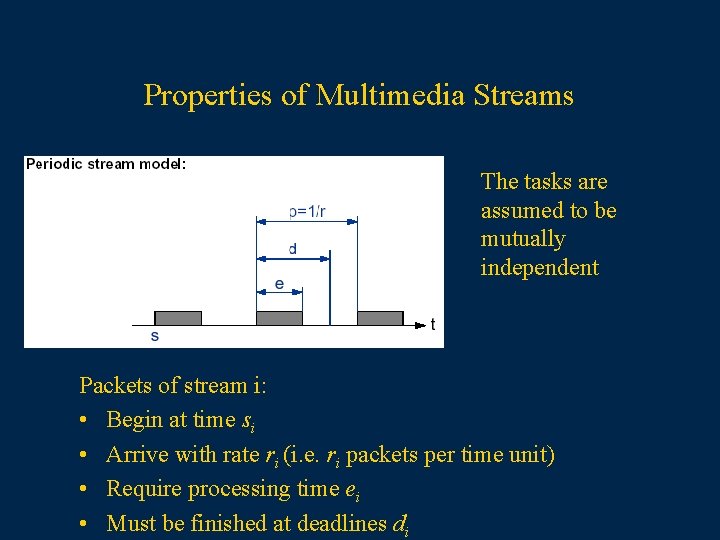

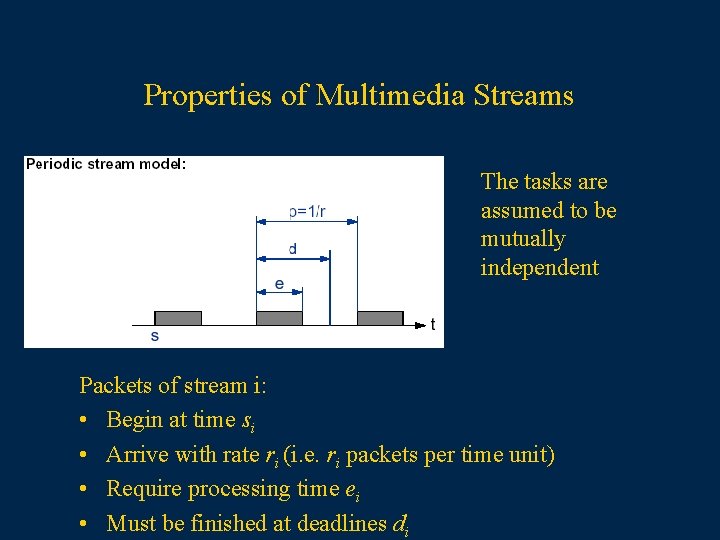

Properties of Multimedia Streams The tasks are assumed to be mutually independent. Packets of stream i: • Begin at time s_i • Arrive with rate r_i (i.e. r_i packets per time unit, so the period is p_i = 1/r_i) • Require processing time e_i • Must be finished by deadline d_i

Properties of Multimedia Streams • 0 <= e <= d <= p, for processing time e, deadline d and period p • At s + (k - 1)p the task T is ready for processing for the kth time. • At s + (k - 1)p + d the processing of T in period k must be finished. • A scheduling algorithm is said to guarantee a newly arrived task if the algorithm can find a schedule where the new task and all previously guaranteed tasks finish processing by their deadlines in every period over the whole run time.
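The periodic task model can be written down as a small data type. This is a sketch under the slide's notation (s, e, d, p); the class name `PeriodicTask` and the millisecond values in the example are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PeriodicTask:
    s: float  # start time of the first period
    e: float  # processing time per period
    d: float  # deadline relative to the start of each period
    p: float  # period

    def __post_init__(self):
        # The model requires 0 <= e <= d <= p.
        if not (0 <= self.e <= self.d <= self.p):
            raise ValueError("task must satisfy 0 <= e <= d <= p")

    def ready_time(self, k: int) -> float:
        """Time at which the task is ready for the k-th time (k starts at 1)."""
        return self.s + (k - 1) * self.p

    def deadline(self, k: int) -> float:
        """Time by which processing in period k must be finished."""
        return self.ready_time(k) + self.d


# Hypothetical video task: 10 ms of work, due 30 ms into each 40 ms period.
video = PeriodicTask(s=0, e=10, d=30, p=40)
third_ready = video.ready_time(3)    # 80
third_deadline = video.deadline(3)   # 110
```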

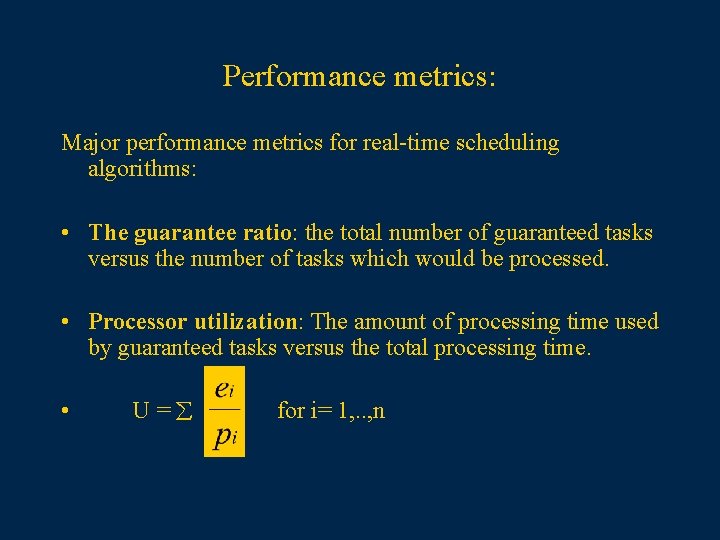

Performance metrics: Major performance metrics for real-time scheduling algorithms: • The guarantee ratio: the total number of guaranteed tasks versus the number of tasks which could be processed. • Processor utilization: the amount of processing time used by guaranteed tasks versus the total processing time. • $U = \sum_{i=1}^{n} \frac{e_i}{p_i}$
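Processor utilization is straightforward to compute once each task is given as a pair of processing time and period. The sketch below assumes the utilization formula is the usual sum of e_i / p_i over all guaranteed tasks; the function name and the use of exact fractions are choices made for this example.

```python
from fractions import Fraction


def utilization(tasks):
    """Sum of e_i / p_i for an iterable of (e_i, p_i) pairs, as an exact fraction."""
    return sum(Fraction(e) / Fraction(p) for e, p in tasks)


# Three tasks with periods 3, 4 and 5 and one unit of processing time each.
tasks = [(1, 3), (1, 4), (1, 5)]
U = utilization(tasks)
print(U, float(U))  # 47/60 0.7833333333333333
```

Exact fractions avoid rounding surprises when U is later compared against a bound that it only narrowly meets or misses.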

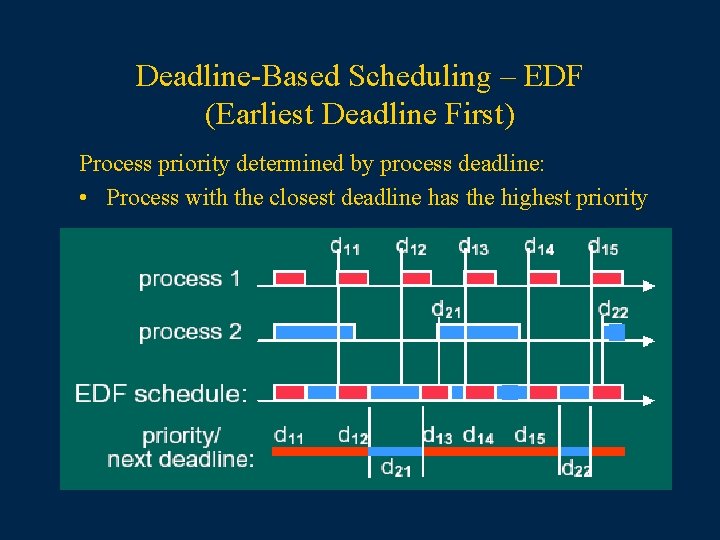

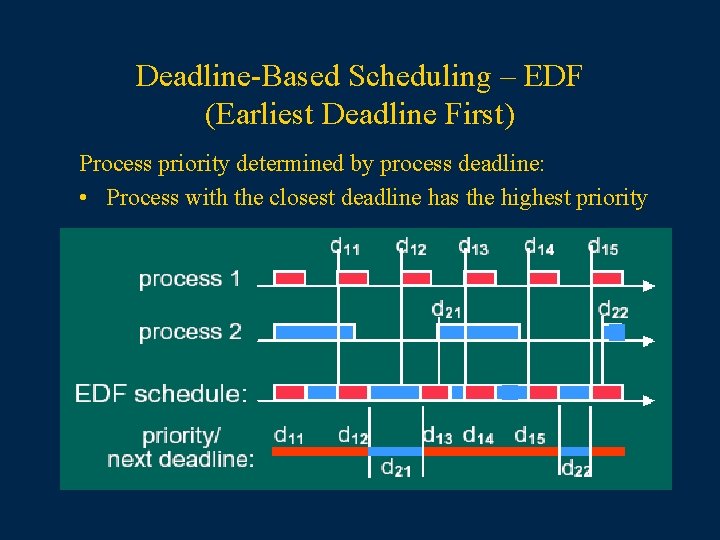

Deadline-Based Scheduling – EDF (Earliest Deadline First) Process priority determined by process deadline: • Process with the closest deadline has the highest priority
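A small discrete-time simulation illustrates the EDF rule. It is a sketch under simplifying assumptions that are not stated on the slide: time advances in integer units, every task is released at time 0, each deadline equals the end of the period, and a job that is still unfinished at its next release is recorded as a miss and dropped. The function name `edf_schedule` is hypothetical.

```python
def edf_schedule(tasks, horizon):
    """Simulate preemptive EDF for `horizon` time units.

    tasks: dict mapping a task name to (e, p) with integer e and p.
    Returns (timeline, missed): the task run in each slot (None when idle)
    and a list of (name, time) pairs for jobs that missed their deadline.
    """
    remaining = {name: 0 for name in tasks}
    deadline = {name: 0 for name in tasks}
    timeline, missed = [], []
    for t in range(horizon):
        for name, (e, p) in tasks.items():
            if t % p == 0:                      # a new period starts
                if remaining[name] > 0:         # previous job did not finish
                    missed.append((name, t))
                remaining[name] = e
                deadline[name] = t + p
        ready = [name for name in tasks if remaining[name] > 0]
        if ready:
            # Closest deadline first; the name only breaks ties.
            run = min(ready, key=lambda name: (deadline[name], name))
            remaining[run] -= 1
            timeline.append(run)
        else:
            timeline.append(None)
    return timeline, missed


tasks = {"A": (1, 3), "B": (1, 4), "C": (1, 5)}
timeline, missed = edf_schedule(tasks, 60)
print(missed, timeline.count(None))  # [] 13
```

Over one hyperperiod of 60 units the three tasks need 20 + 15 + 12 = 47 slots, so 13 slots stay idle and no deadline is missed.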

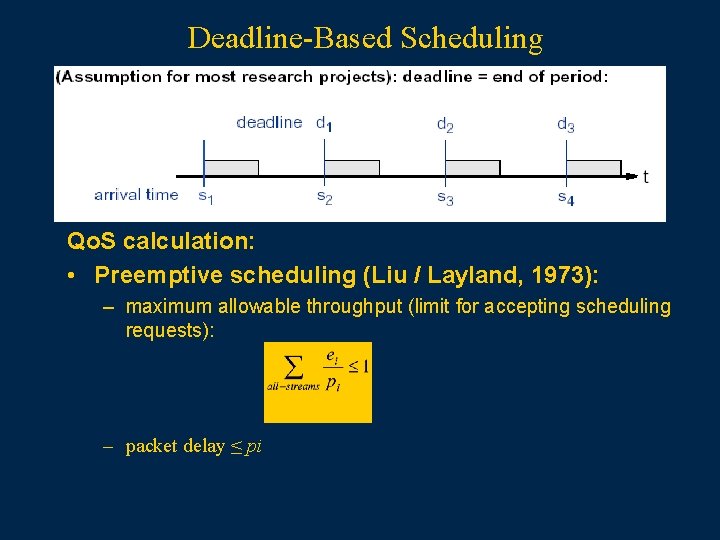

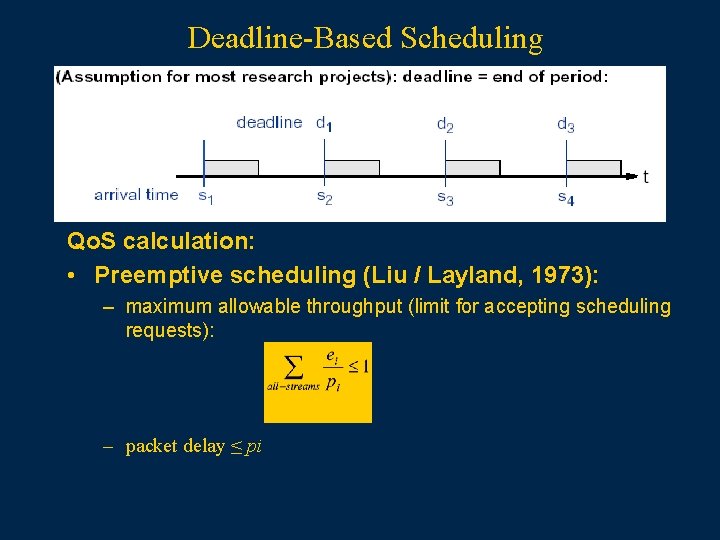

Deadline-Based Scheduling QoS calculation: • Preemptive scheduling (Liu / Layland, 1973): – maximum allowable throughput (limit for accepting scheduling requests): – packet delay ≤ p_i

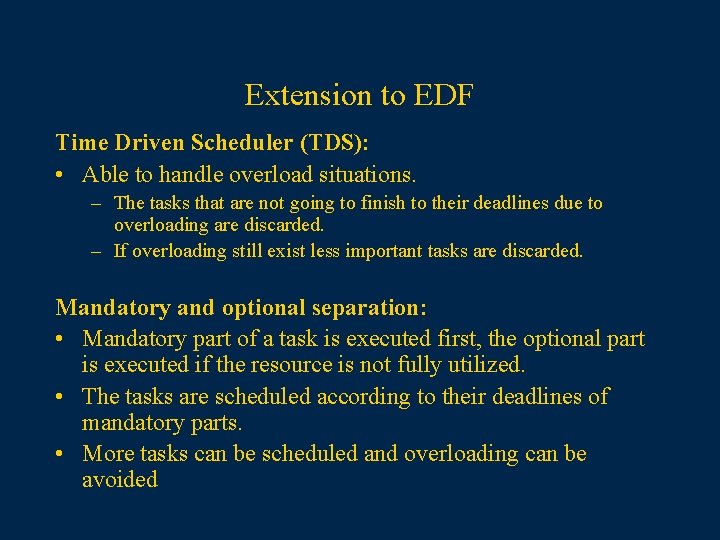

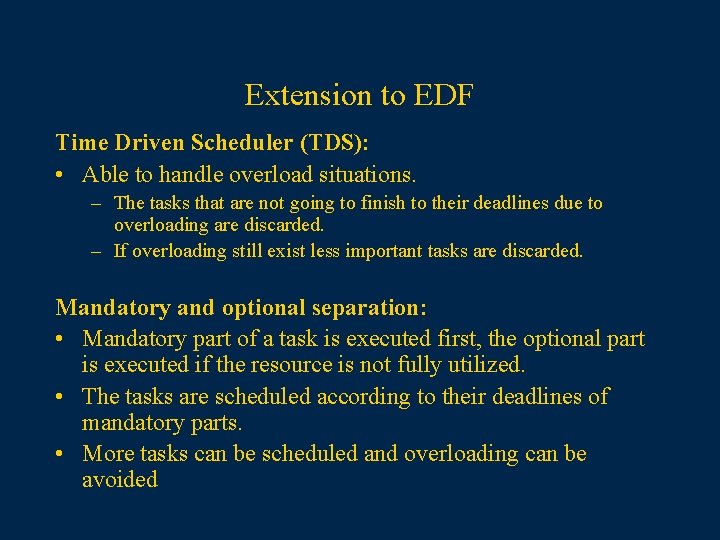

Extension to EDF Time Driven Scheduler (TDS): • Able to handle overload situations. – Tasks that cannot finish by their deadlines because of the overload are discarded. – If the overload persists, less important tasks are discarded. Mandatory and optional separation: • The mandatory part of a task is executed first; the optional part is executed if the resource is not fully utilized. • The tasks are scheduled according to the deadlines of their mandatory parts. • More tasks can be scheduled and overloading can be avoided

Rate Monotonic Algorithm • Optimal, static priority driven algorithm for preemptive, periodic jobs. Assumptions for the application of the algorithm: 1. The requests for all tasks with deadlines are periodic 2. Each task must be completed before the next arrives 3. All tasks are independent 4. Run time for each request of a task is constant 5. Any non periodic task in the system has no required deadline

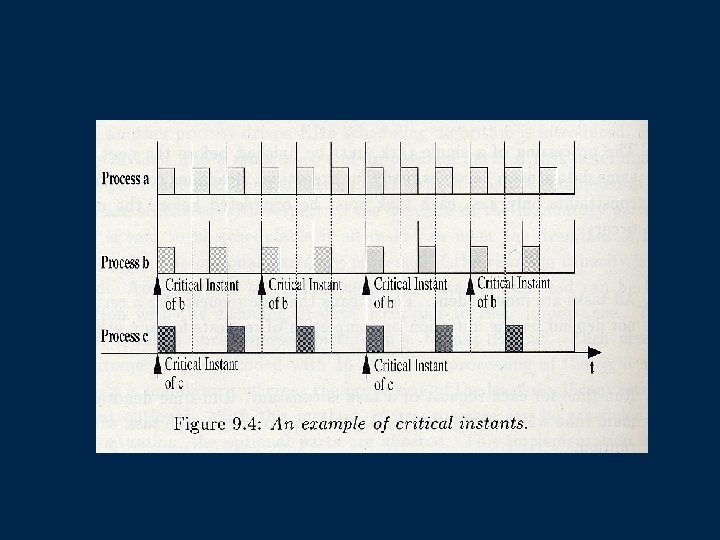

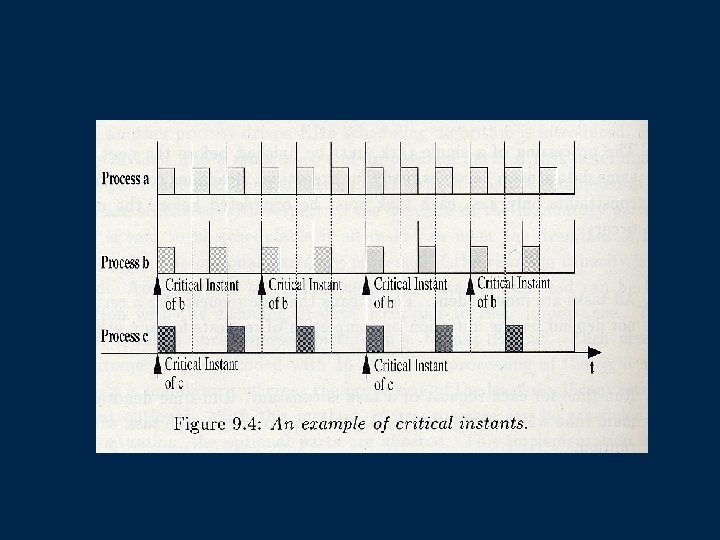

Rate Monotonic Algorithm • Static priorities are assigned to tasks at the set up phase according to their request rates • The task with the shortest period gets the highest priority. • The response time is the time span between the request and the end of processing time • The response time is longest at the critical instant – Critical instant occurs when all processes with a higher priority request to be processed at the same time. – The critical time zone is the time interval between the critical instant and a completion of a task.
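The priority assignment itself is a one-time sort by period. In this sketch the function name, the convention that 0 is the highest priority, and the task names and periods are assumptions made for the example.

```python
def rate_monotonic_priorities(periods):
    """Map each task name to a static priority; 0 is the highest.

    periods: dict mapping a task name to its period.
    The shortest period (highest request rate) gets the highest priority;
    the name only breaks ties between equal periods.
    """
    ordered = sorted(periods, key=lambda name: (periods[name], name))
    return {name: rank for rank, name in enumerate(ordered)}


priorities = rate_monotonic_priorities({"audio": 10, "video": 40, "control": 5})
print(priorities)  # {'control': 0, 'audio': 1, 'video': 2}
```

Because the priorities depend only on the periods, they are computed once at set-up and never change at run time, in contrast to deadline-based scheduling.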

Rate-Monotonic Scheduling

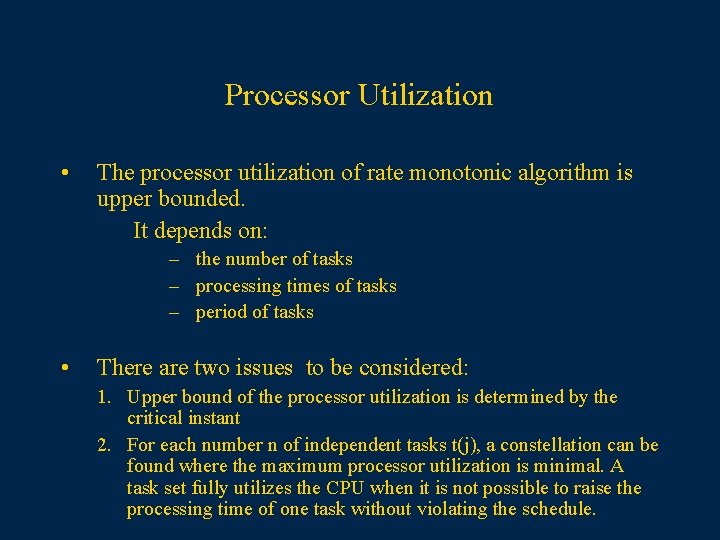

Processor Utilization • The processor utilization of rate monotonic algorithm is upper bounded. It depends on: – the number of tasks – processing times of tasks – period of tasks • There are two issues to be considered: 1. Upper bound of the processor utilization is determined by the critical instant 2. For each number n of independent tasks t(j), a constellation can be found where the maximum processor utilization is minimal. A task set fully utilizes the CPU when it is not possible to raise the processing time of one task without violating the schedule.

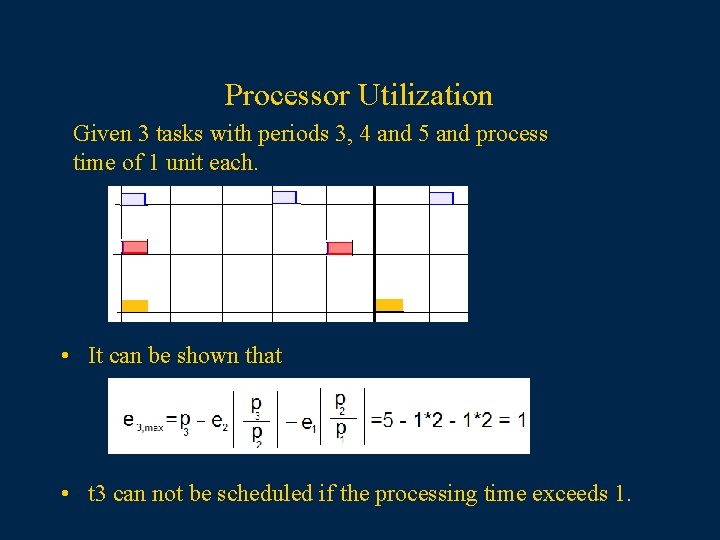

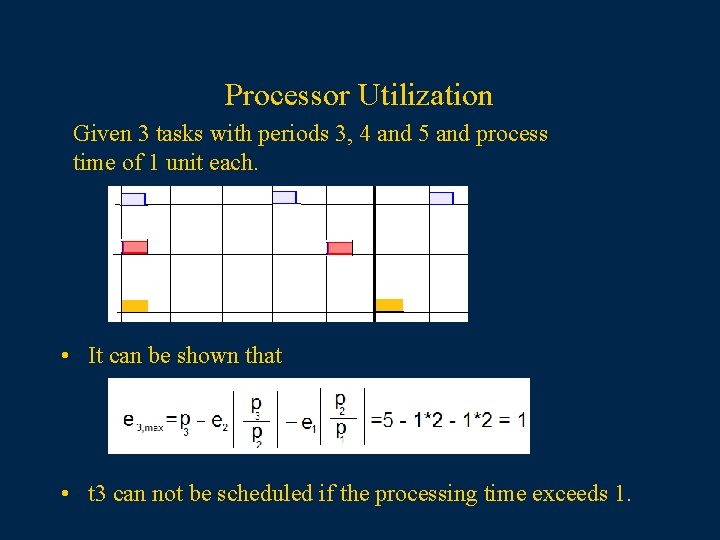

Processor Utilization Given 3 tasks with periods 3, 4 and 5 and a processing time of 1 unit each. • It can be shown that • t3 cannot be scheduled if its processing time exceeds 1.
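The claim about t3 can be checked with the standard response-time iteration for fixed priorities. Note that this technique is not named on the slides; it is brought in here only as a way to evaluate the critical instant numerically. The sketch assumes that deadlines equal periods and that the higher-priority tasks are the ones with periods 3 and 4. The function name is hypothetical.

```python
from fractions import Fraction
from math import ceil


def worst_case_response_time(e, p, higher):
    """Response time of a task released at the critical instant.

    e, p: processing time and period (deadline = p) of the task under test.
    higher: list of (e_j, p_j) pairs for all higher-priority tasks.
    Returns the response time, or None if it exceeds the period.
    """
    e, p = Fraction(e), Fraction(p)
    r = e
    while True:
        # Own work plus all higher-priority jobs released within [0, r).
        nxt = e + sum(ceil(r / Fraction(pj)) * Fraction(ej) for ej, pj in higher)
        if nxt > p:
            return None
        if nxt == r:
            return r
        r = nxt


higher = [(1, 3), (1, 4)]
rt_ok = worst_case_response_time(1, 5, higher)                  # 3
rt_bad = worst_case_response_time(Fraction(11, 10), 5, higher)  # None
```

With a processing time of 1, t3 finishes 3 time units after the critical instant. Raising it to 1.1 already pushes the response time past the period of 5, because a second job of each higher-priority task then interferes.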

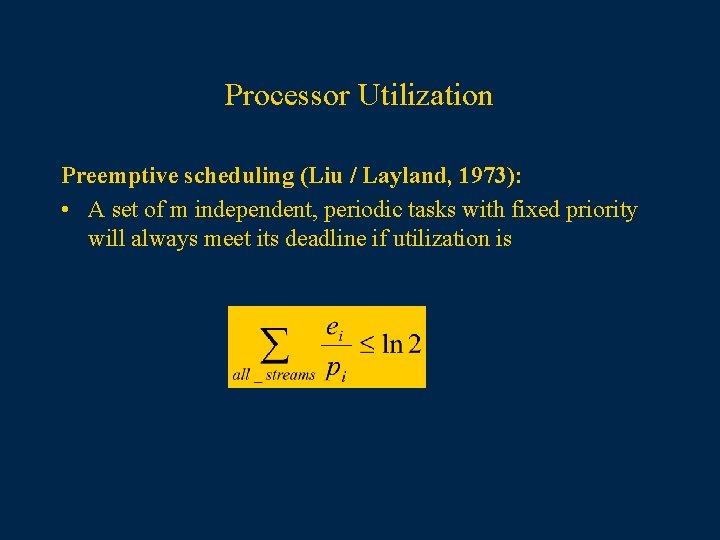

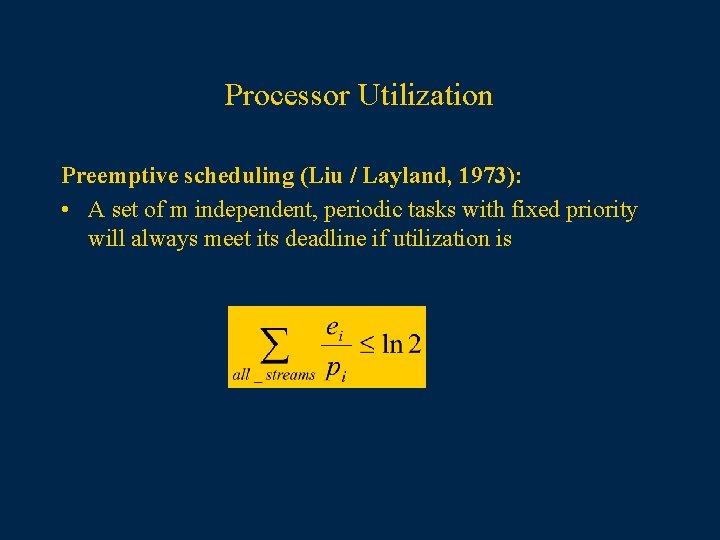

Processor Utilization Preemptive scheduling (Liu / Layland, 1973): • A set of m independent, periodic tasks with fixed priorities will always meet its deadlines if the utilization is
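The bound itself is contained in an image that did not survive extraction. The sketch below assumes it is the commonly cited Liu and Layland bound U ≤ m(2^(1/m) − 1) for rate-monotonic priorities; the function name is illustrative.

```python
def rm_utilization_bound(m: int) -> float:
    """Assumed Liu/Layland bound m * (2**(1/m) - 1) for m periodic tasks."""
    return m * (2 ** (1 / m) - 1)


bound_3 = rm_utilization_bound(3)      # about 0.7798
U = 1 / 3 + 1 / 4 + 1 / 5              # about 0.7833 for periods 3, 4, 5
passes_sufficient_test = U <= bound_3  # False

for m in (1, 2, 3, 10):
    print(m, round(rm_utilization_bound(m), 4))
```

The test is sufficient but not necessary: the task set with periods 3, 4 and 5 lies slightly above the bound for m = 3, so the test is inconclusive for it even though the set can still be scheduled. As m grows the bound falls towards ln 2, roughly 0.693.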

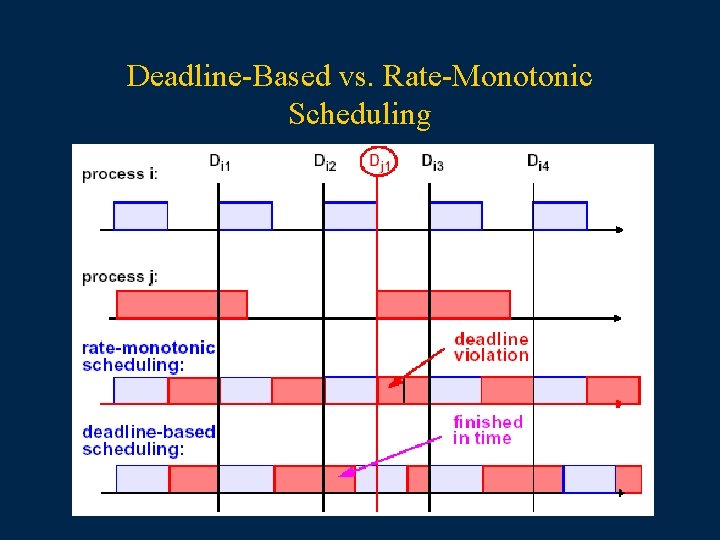

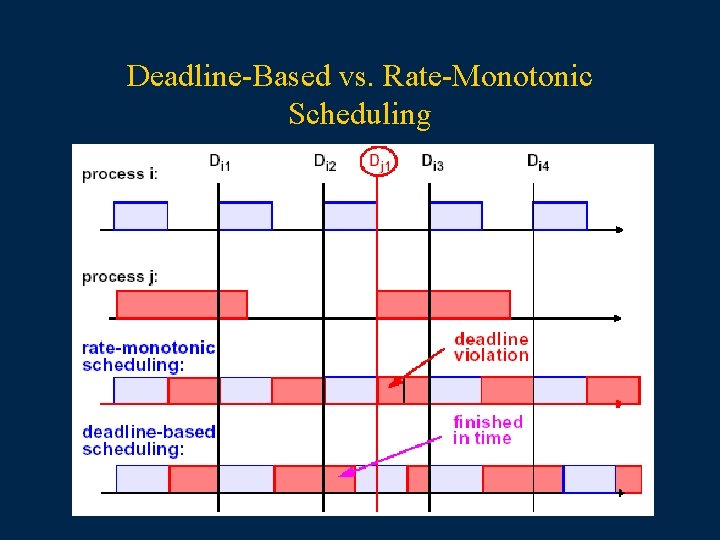

Deadline-Based vs. Rate-Monotonic Scheduling

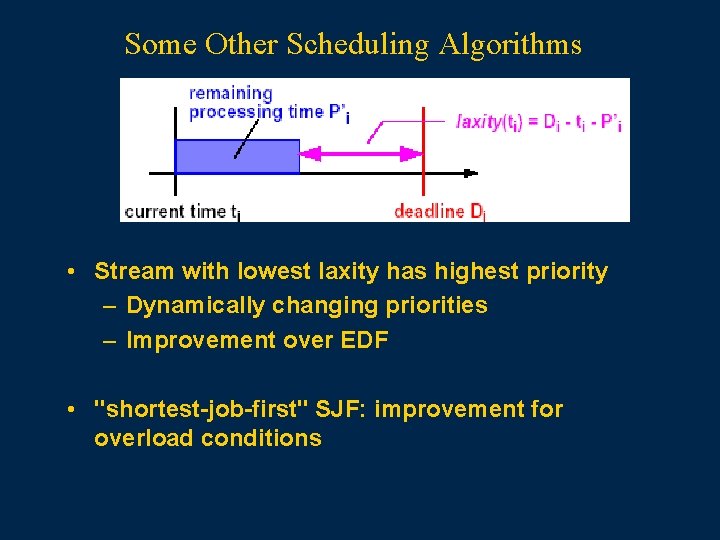

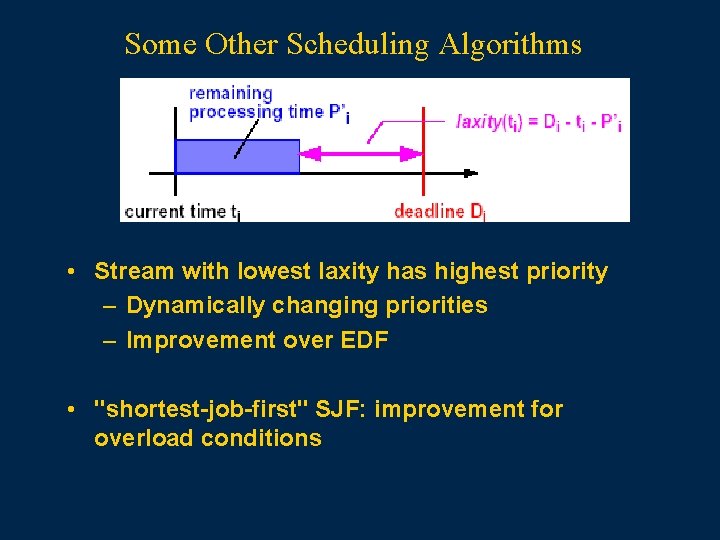

Some Other Scheduling Algorithms • Stream with lowest laxity has highest priority – Dynamically changing priorities – Improvement over EDF • "shortest-job-first" SJF: improvement for overload conditions
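Laxity is the slack a job still has: the time until its deadline minus its remaining processing time. The sketch below uses that usual definition, which the slide does not spell out, and compares the choice made by a lowest-laxity rule with the EDF choice. The job names and numbers are made up.

```python
def laxity(deadline: float, remaining: float, now: float) -> float:
    """Slack of a job: time left until the deadline minus work still to do."""
    return deadline - now - remaining


def pick_least_laxity(jobs, now):
    """jobs: dict mapping a name to (deadline, remaining processing time)."""
    return min(jobs, key=lambda name: (laxity(*jobs[name], now), name))


def pick_earliest_deadline(jobs):
    return min(jobs, key=lambda name: (jobs[name][0], name))


jobs = {"A": (8, 2), "B": (10, 7)}         # (deadline, remaining)
llf_choice = pick_least_laxity(jobs, now=0)  # "B": laxity 3 versus 6 for A
edf_choice = pick_earliest_deadline(jobs)    # "A": deadline 8 versus 10
```

Because laxity shrinks while a job waits and stays constant while it runs, the priorities change dynamically, which is the property the slide points out.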

Reference Prof. Dr.-Ing. Ralf Steinmetz, Prof. Dr. Max Mühlhäuser, MM: TU Darmstadt - Darmstadt University of Technology, Dept. of Computer Science, TK - Telecooperation, Tel. +49 6151 16-3709, Fax +49 6151 16-3052, Alexanderstr. 6, D-64283 Darmstadt, Germany, max@informatik.tu-darmstadt.de