Multimedia Interfaces

- Slides: 27

Multimedia Interfaces
• What is a multimedia interface?
  – Almost anything where users do not just interact with text
  – e.g., audio, speech, images, faces, video, sensor data, …

Working with Multimedia
• Symbolic vs. non-symbolic content
  – How can users search and browse for the content they need?
  – What is represented and what is not?
  – Interface design must suit the particular content-processing techniques being used
• Static vs. dynamic content
  – How can users locate particular states within a piece of content?
  – Need visualizations that support indexing by state or segment

General Audio
• Mapping audio cues to events
  – Recognizing sounds related to particular events (e.g., gunshot, falling, scream)
• Mapping events to audio cues
  – Audio debugger to speed up stepping through code
• Spatialized audio
  – Provides an additional geographic/navigational channel
  – Example: Michael Joyce’s Interactive Central Park

Spatialized Audio
• Spatialized audio is easier to produce with headphones, because the system controls exactly what reaches each ear
• Head-related transfer function (HRTF)
  – Differences in timing and signal strength between the two ears determine how we identify the position of a sound
• Beamforming
  – Times each speaker's output so the waves interfere constructively, creating a stronger signal at the desired location
• Crosstalk cancellation
  – Uses destructive interference to remove parts of the signal at the desired location
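
To make the beamforming idea concrete, here is a minimal delay-and-sum sketch. It assumes free-field, straight-line propagation at about 343 m/s and 2-D speaker and target positions in metres; `focusing_delays` is a hypothetical helper, not part of any system named in these slides. Each speaker is delayed so its wavefront reaches the target point at the same moment as the farthest speaker's, which is what makes the signals add constructively there.

```python
import math

SPEED_OF_SOUND = 343.0  # metres per second in air at roughly 20 °C (assumption)


def focusing_delays(speakers, target, c=SPEED_OF_SOUND):
    """Delay-and-sum focusing: per-speaker delays (seconds) so that every
    speaker's wavefront arrives at `target` at the same time.

    speakers: list of (x, y) positions in metres.
    target:   (x, y) position in metres.
    """
    travel = [math.dist(s, target) / c for s in speakers]
    latest = max(travel)
    # The farthest speaker plays immediately; nearer speakers wait so that
    # delay + travel time is identical for all of them.
    return [latest - t for t in travel]
```

For example, with speakers at (0, 0) and (1, 0) focusing on (0, 0), the left speaker waits about 1/343 s, which is how long the right speaker's sound takes to cover the extra metre.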

Audio Signal Analysis
• Fast Fourier Transform (FFT) and Discrete Wavelet Transform (DWT)
  – Transforms commonly used on audio signals
  – Allow analysis of frequency features over time (e.g., the power contained in a frequency interval)
  – FFT-based analysis uses equal-sized windows, whereas wavelet windows vary with frequency
• Mel-frequency cepstral coefficients (MFCC)
  – Based on FFTs
  – Map the results into bands approximating the human auditory system
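
The sketch below shows what "power contained in a frequency interval" means in practice. It uses a plain recursive radix-2 FFT, so frame lengths must be powers of two, and sums the squared magnitudes of the bins that fall in a band. `hz_to_mel` uses one common mel-scale formula, 2595·log10(1 + f/700). That formula is an assumption: several variants exist, and the slides do not specify one. All function names are hypothetical. A real system would use an optimized FFT library and apply a window function to each frame.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("input length must be a power of two")
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    half = n // 2
    out = [0j] * n
    for k in range(half):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + half] = even[k] - twiddle
    return out


def band_power(frame, sample_rate, f_lo, f_hi):
    """Sum of |X[k]|^2 over bins whose frequency lies in [f_lo, f_hi)."""
    n = len(frame)
    spectrum = fft(frame)
    total = 0.0
    for k in range(n // 2 + 1):  # non-negative frequencies only
        freq = k * sample_rate / n
        if f_lo <= freq < f_hi:
            total += abs(spectrum[k]) ** 2
    return total


def hz_to_mel(f):
    """One common mel-scale mapping used when building MFCC filter banks."""
    return 2595.0 * math.log10(1.0 + f / 700.0)
```

With a 64-sample frame at 64 Hz containing an 8 Hz sine, nearly all the power lands in the band that contains 8 Hz, and the other bands stay close to zero.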

Speech
• Speaker segmentation
  – Identify when a change in speaker occurs
  – Useful for basic indexing or summarization of speech content
• Speaker identification
  – Identify who is speaking during a segment
  – Enables search (and other features) based on speaker
• Speech recognition
  – Identify the content of speech
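
As a rough illustration of speaker segmentation, the sketch below flags a speaker change where the average feature vector (for example, per-frame MFCCs) in a window before a frame differs strongly from the window after it. This distance-between-means test is a simplified stand-in chosen for brevity. Practical systems often use statistical criteria such as the Bayesian Information Criterion. The window size and threshold are hypothetical parameters.

```python
import math


def mean_vector(frames):
    """Component-wise mean of a list of equal-length feature vectors."""
    n = len(frames)
    return [sum(col) / n for col in zip(*frames)]


def speaker_change_points(features, window, threshold):
    """Return frame indices where the mean of the `window` frames before
    differs from the mean of the `window` frames after by more than
    `threshold` (Euclidean distance), keeping only local maxima."""
    scores = {}
    for t in range(window, len(features) - window + 1):
        before = mean_vector(features[t - window:t])
        after = mean_vector(features[t:t + window])
        scores[t] = math.dist(before, after)
    changes = []
    for t, s in scores.items():
        if s <= threshold:
            continue
        left = scores.get(t - 1, -1.0)
        right = scores.get(t + 1, -1.0)
        if s >= left and s > right:  # peak of the distance curve
            changes.append(t)
    return changes
```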

Speech Recognition
• Start by segmenting utterances and characterizing phonemes
  – Use gaps (silences) to segment
  – Group segments into words
• Limited vocabulary of commands
  – Classifiers for a limited vocabulary (e.g., hidden Markov models, HMMs)
• Continuous speech
  – Language models for disambiguation
  – Speaker-dependent or speaker-independent
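
For a limited command vocabulary, one common HMM approach is to train one model per word, then pick the word whose model assigns the highest likelihood to the observed sequence. The sketch below assumes discrete observation symbols (for example, vector-quantized acoustic features) and scores each model with the forward algorithm. The toy models used for testing are hypothetical. Real recognizers typically use continuous emission densities and work in the log domain, or with scaling, to avoid numerical underflow on long utterances.

```python
def forward_likelihood(obs, start, trans, emit):
    """P(obs | model) for a discrete HMM, computed with the forward algorithm.

    start[i]:    probability of starting in state i
    trans[i][j]: probability of moving from state i to state j
    emit[i][o]:  probability of emitting symbol o in state i
    """
    n = len(start)
    alpha = [start[i] * emit[i][obs[0]] for i in range(n)]
    for o in obs[1:]:
        alpha = [
            sum(alpha[i] * trans[i][j] for i in range(n)) * emit[j][o]
            for j in range(n)
        ]
    return sum(alpha)


def recognize(obs, models):
    """Return the vocabulary word whose HMM gives `obs` the highest likelihood.

    models: dict mapping word -> (start, trans, emit)
    """
    return max(models, key=lambda word: forward_likelihood(obs, *models[word]))
```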

Music
• Music processing can support a variety of activities
• Composition
  – From traditional to interactive
• Selection
  – Examples: iTunes, Pandora
  – Selection for shared spaces
• Playback
  – Interactive playback, social playback
• Management & summarization
  – Example: MusicWiz
• Games
  – Guitar Hero, Rock Band, etc.

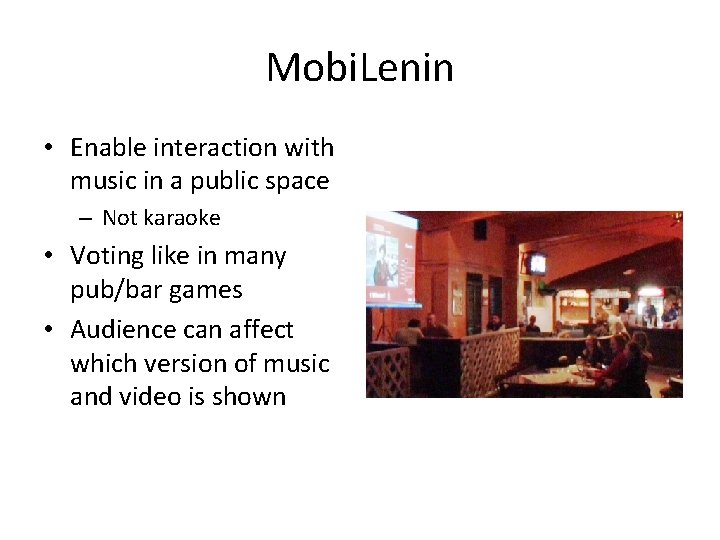

MobiLenin
• Enables interaction with music in a public space
  – Not karaoke
• Voting, as in many pub/bar games
• Audience can affect which version of the music and video is shown

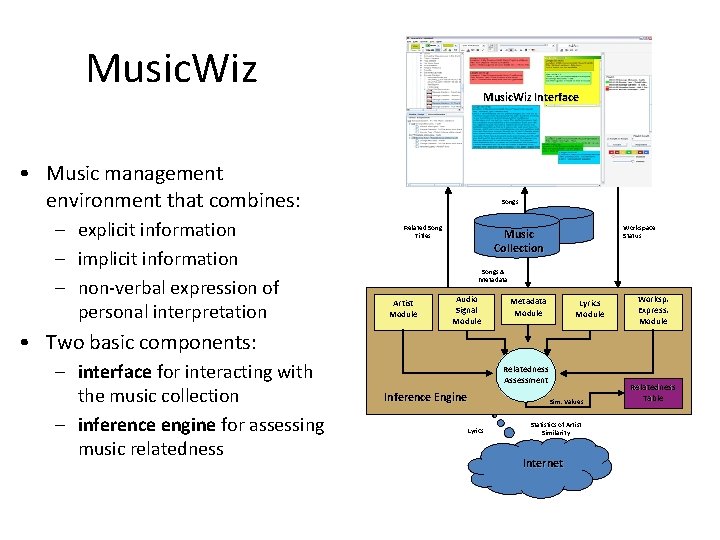

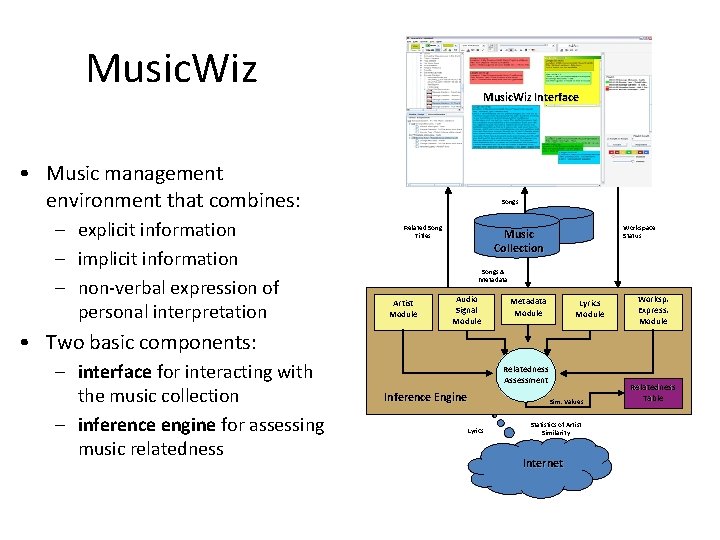

MusicWiz Interface
• Music management environment that combines:
  – explicit information
  – implicit information
  – non-verbal expression of personal interpretation
• Two basic components:
  – interface for interacting with the music collection
  – inference engine for assessing music relatedness
[Figure: MusicWiz architecture. Interface panels: Songs, Related Song Titles, Workspace, Status, backed by the Music Collection (Songs & Metadata). Inference engine: Artist, Audio Signal, Metadata, Lyrics, and Workspace Expression modules feeding Relatedness Assessment. Other labels: Lyrics Similarity Values, Statistics of Artist Similarity, Internet, Relatedness Table.]
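
The slides do not say how the inference engine combines its modules, so the following is only a hypothetical sketch. Each module (artist, audio signal, metadata, lyrics, workspace expression) is assumed to produce a similarity score in [0, 1] for a pair of songs, and relatedness is the weighted average of those scores. The weights, including the extra weight on workspace expression (the user's non-verbal arrangement of songs), are invented for illustration.

```python
# Hypothetical module weights; the slides do not specify how MusicWiz weights them.
WEIGHTS = {
    "artist": 1.0,
    "audio_signal": 1.0,
    "metadata": 1.0,
    "lyrics": 1.0,
    "workspace_expression": 2.0,
}


def relatedness(module_scores, weights=WEIGHTS):
    """Weighted average of per-module similarity scores (each in [0, 1]).
    Modules without a score for this pair simply do not contribute."""
    total = 0.0
    weight_sum = 0.0
    for module, score in module_scores.items():
        w = weights.get(module, 0.0)
        total += w * score
        weight_sum += w
    return total / weight_sum if weight_sum else 0.0


def relatedness_table(pair_scores, weights=WEIGHTS):
    """Map each (song_a, song_b) pair to its combined relatedness value."""
    return {pair: relatedness(scores, weights) for pair, scores in pair_scores.items()}
```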

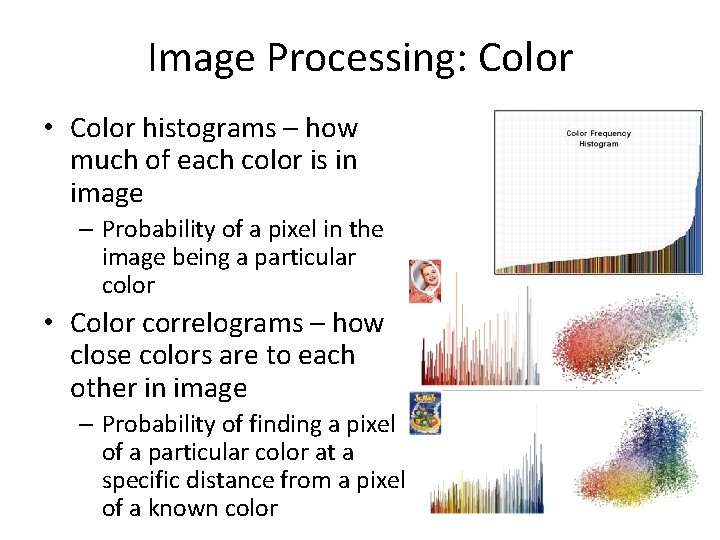

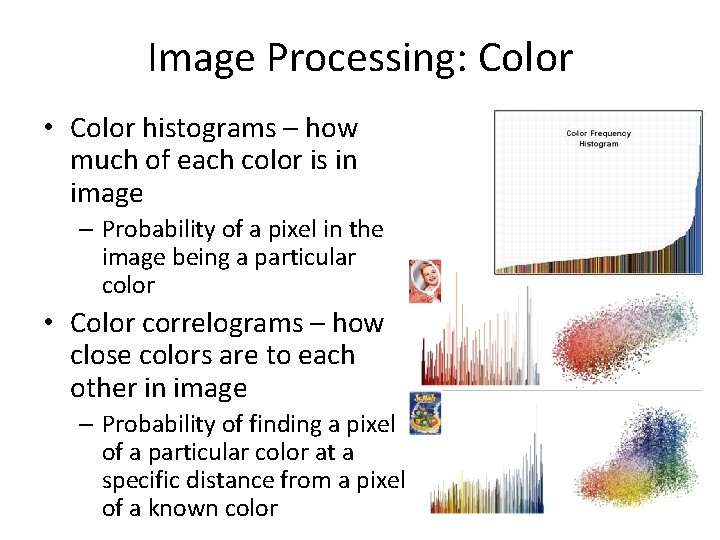

Image Processing: Color
• Color histograms: how much of each color is in the image
  – Probability of a pixel in the image being a particular color
• Color correlograms: how close colors are to each other in the image
  – Probability of finding a pixel of a particular color at a specific distance from a pixel of a known color
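
The two color representations can be sketched directly from their probabilistic definitions. This version assumes the image has already been quantized to `num_colors` color indices. As a further assumption, the correlogram measures distance with the chessboard (L∞) metric and counts only pixels exactly `d` away. Function names are hypothetical, and plain loops are used for clarity rather than speed.

```python
def color_histogram(image, num_colors):
    """h[c] = probability that a pixel has quantized color c."""
    counts = [0] * num_colors
    for row in image:
        for c in row:
            counts[c] += 1
    total = sum(counts)
    return [n / total for n in counts]


def color_correlogram(image, num_colors, d):
    """gamma[i][j] = probability that a pixel at chessboard distance d
    from a pixel of color i has color j."""
    h, w = len(image), len(image[0])
    counts = [[0] * num_colors for _ in range(num_colors)]
    for y in range(h):
        for x in range(w):
            ci = image[y][x]
            for dy in range(-d, d + 1):
                for dx in range(-d, d + 1):
                    if max(abs(dx), abs(dy)) != d:
                        continue  # only pixels exactly d away
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        counts[ci][image[ny][nx]] += 1
    gamma = []
    for row in counts:
        n = sum(row)
        gamma.append([v / n if n else 0.0 for v in row])
    return gamma
```

A histogram cannot tell a half-red, half-blue image from a red-and-blue checkerboard. The correlogram can, because it records how often each color appears near each other color.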

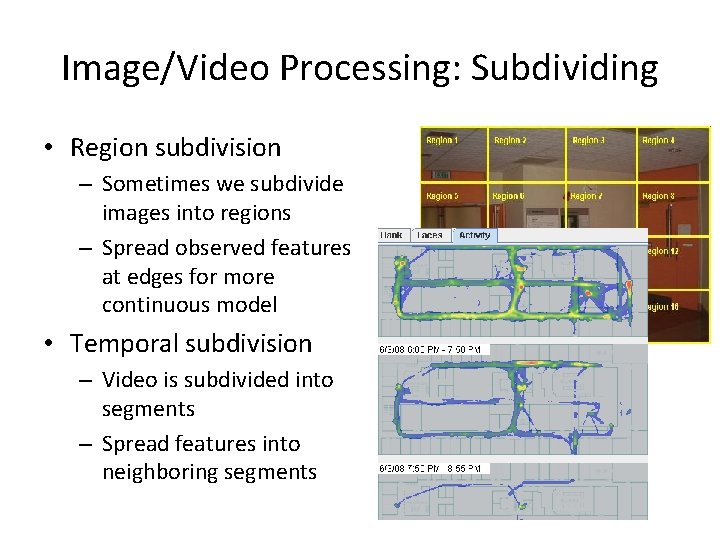

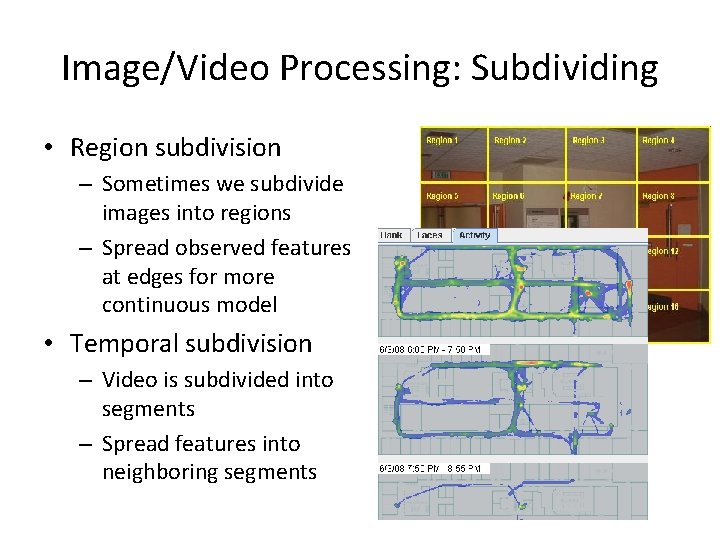

Image/Video Processing: Subdividing
• Region subdivision
  – Images are sometimes subdivided into regions
  – Features observed at region edges are spread into neighboring regions for a more continuous model
• Temporal subdivision
  – Video is subdivided into segments
  – Features are spread into neighboring segments
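
The slides do not specify how features are spread, so here is one hypothetical scheme for the temporal case. Each segment's feature vector is averaged with those of its immediate neighbours, and the neighbours get a smaller weight (0.25 here, an arbitrary choice). The same idea could be applied to image regions by treating spatially adjacent regions as neighbours.

```python
def spread_features(segment_features, neighbor_weight=0.25):
    """Blend each segment's feature vector with its temporal neighbours.

    segment_features: list of equal-length feature vectors, one per segment.
    Each output vector is a weighted average of the segment itself (weight 1)
    and each existing neighbour (weight `neighbor_weight`).
    """
    n = len(segment_features)
    result = []
    for i, own in enumerate(segment_features):
        acc = list(own)
        total_weight = 1.0
        for j in (i - 1, i + 1):
            if 0 <= j < n:  # first and last segments have only one neighbour
                acc = [a + neighbor_weight * v for a, v in zip(acc, segment_features[j])]
                total_weight += neighbor_weight
        result.append([a / total_weight for a in acc])
    return result
```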

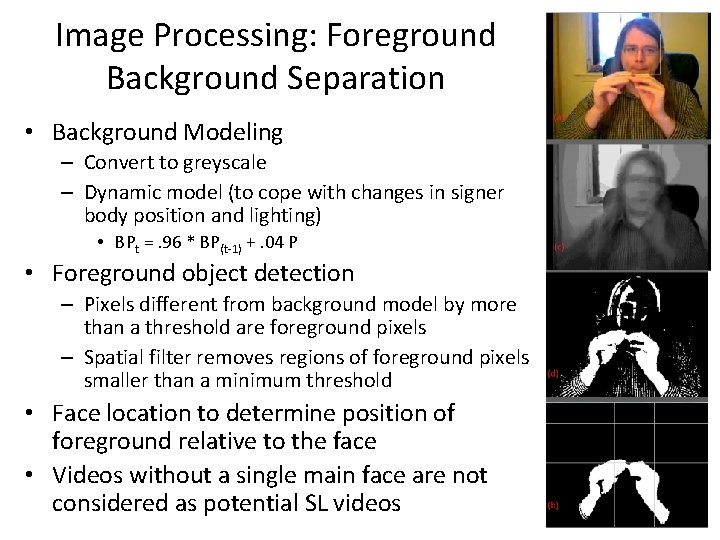

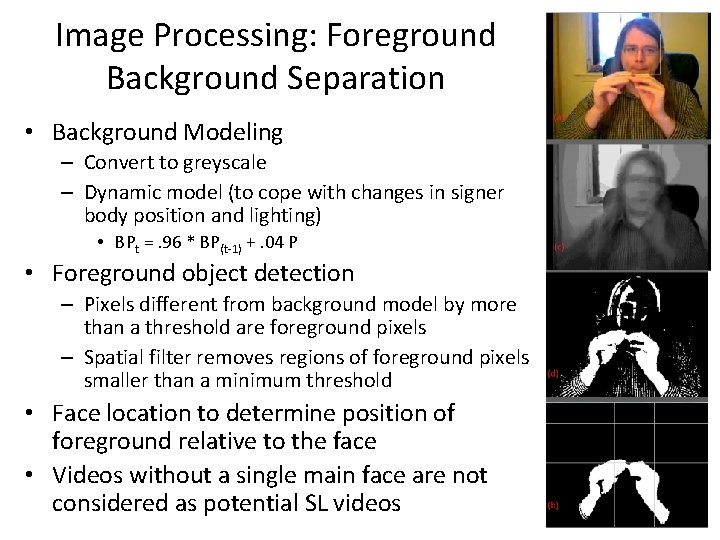

Image Processing: Foreground-Background Separation
• Background modeling
  – Convert to greyscale
  – Dynamic model (to cope with changes in the signer's body position and in lighting)
  – $BP_t = 0.96\,BP_{t-1} + 0.04\,P_t$, where $BP_t$ is the background model value of a pixel at frame $t$ and $P_t$ is that pixel's observed value
• Foreground object detection
  – Pixels that differ from the background model by more than a threshold are foreground pixels
  – A spatial filter removes regions of foreground pixels smaller than a minimum size
• Face location is used to determine the position of the foreground relative to the face
• Videos without a single main face are not considered potential sign language (SL) videos
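
The background model and the foreground steps can be sketched in plain Python. Frames are assumed to be greyscale images stored as lists of rows. The update applies the per-pixel rule from the slide (new background = 0.96 × old background + 0.04 × current pixel). The difference threshold, the minimum region size, and the choice of 4-connectivity for the spatial filter are assumptions, and the face-location step is not shown. Function names are hypothetical.

```python
from collections import deque

ALPHA = 0.04  # weight of the current frame in the running-average background


def update_background(background, frame, alpha=ALPHA):
    """Running-average background model: BP_t = (1 - alpha) * BP_{t-1} + alpha * P_t."""
    return [
        [(1 - alpha) * b + alpha * p for b, p in zip(brow, frow)]
        for brow, frow in zip(background, frame)
    ]


def foreground_mask(background, frame, diff_threshold):
    """Pixels differing from the background by more than diff_threshold are foreground."""
    return [
        [abs(p - b) > diff_threshold for b, p in zip(brow, frow)]
        for brow, frow in zip(background, frame)
    ]


def remove_small_regions(mask, min_size):
    """Spatial filter: drop 4-connected foreground regions smaller than min_size pixels."""
    h, w = len(mask), len(mask[0])
    out = [[False] * w for _ in range(h)]
    seen = [[False] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if not mask[y][x] or seen[y][x]:
                continue
            # Breadth-first search collects one connected region.
            region = []
            queue = deque([(y, x)])
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                region.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) >= min_size:
                for ry, rx in region:
                    out[ry][rx] = True
    return out
```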

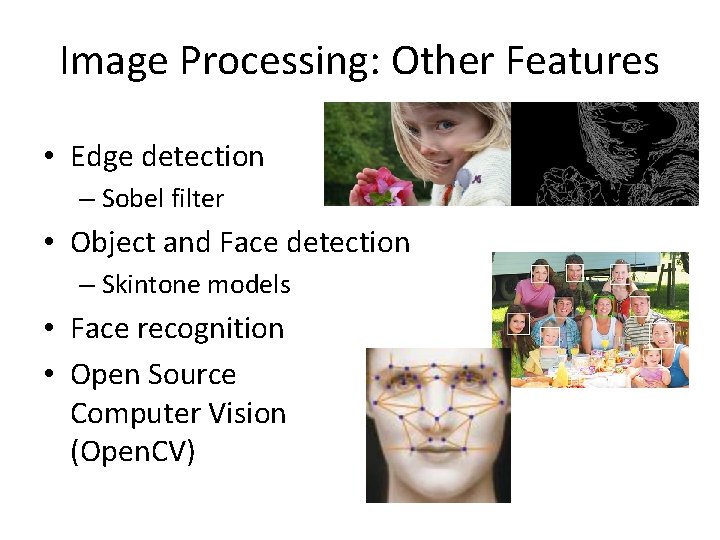

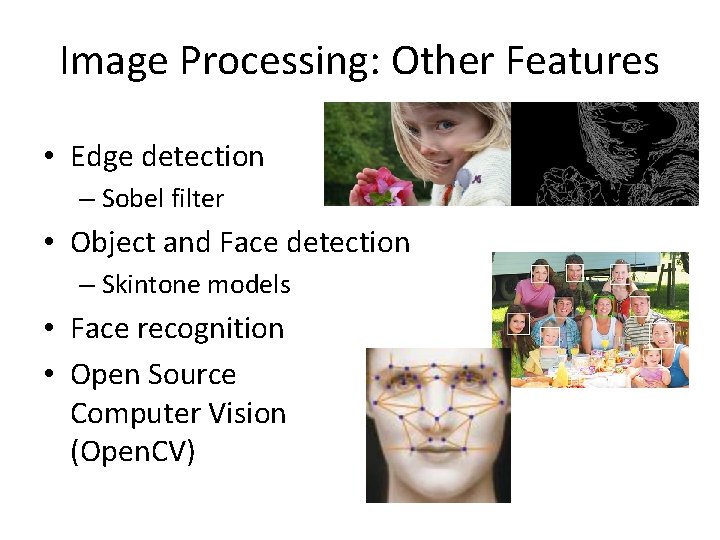

Image Processing: Other Features
• Edge detection
  – Sobel filter
• Object and face detection
  – Skin-tone models
• Face recognition
• Open Source Computer Vision (OpenCV)
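
To show what the Sobel filter computes, here is a pure-Python sketch of the gradient magnitude using the standard 3×3 Sobel kernels. In practice one would call OpenCV's `cv2.Sobel` instead. For simplicity, this version leaves border pixels at 0, and the function name is hypothetical.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]  # responds to horizontal change
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]  # responds to vertical change


def sobel_magnitude(image):
    """Gradient magnitude of a greyscale image (list of rows).
    Border pixels are left at 0 for simplicity."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0
            for ky in range(3):
                for kx in range(3):
                    p = image[y + ky - 1][x + kx - 1]
                    gx += SOBEL_X[ky][kx] * p
                    gy += SOBEL_Y[ky][kx] * p
            out[y][x] = math.hypot(gx, gy)
    return out
```

On an image with a vertical step from 0 to 10, the magnitude is large in the columns next to the step and zero in the flat areas.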

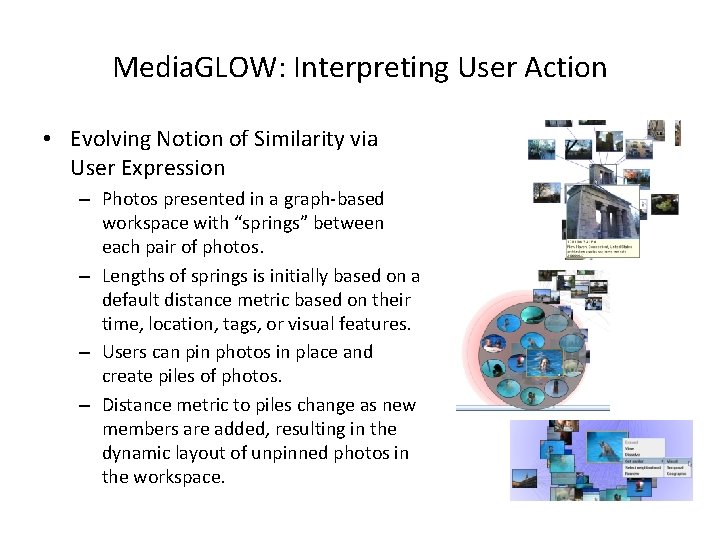

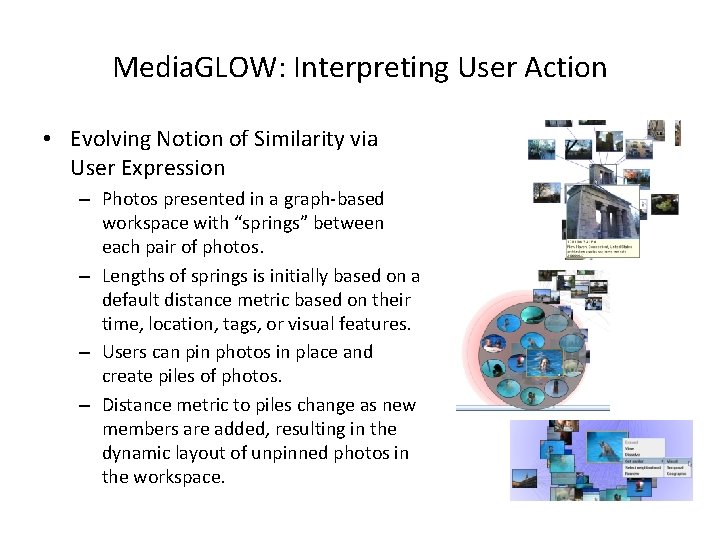

MediaGLOW: Interpreting User Action
• Evolving notion of similarity via user expression
  – Photos are presented in a graph-based workspace with “springs” between each pair of photos.
  – Spring lengths are initially based on a default distance metric computed from the photos' time, location, tags, or visual features.
  – Users can pin photos in place and create piles of photos.
  – The distance metric to each pile changes as new members are added, resulting in a dynamic layout of the unpinned photos in the workspace.
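
The spring behaviour can be approximated with a simple relaxation step. The sketch below makes three assumptions: each spring follows Hooke's law toward a rest length taken from the distance metric, pinned photos never move, and the stiffness of 0.1 is an arbitrary choice. The slides do not describe how MediaGLOW actually computes its layout, and `spring_layout_step` and its parameters are hypothetical. Adding a photo to a pile would correspond to recomputing the rest lengths between that pile and the other photos, then running more steps.

```python
import math


def spring_layout_step(positions, rest_lengths, pinned, stiffness=0.1):
    """One relaxation step of a spring layout.

    positions:    {photo: (x, y)}
    rest_lengths: {(photo_a, photo_b): desired distance}
    pinned:       set of photos the user has fixed in place
    """
    forces = {p: [0.0, 0.0] for p in positions}
    for (a, b), rest in rest_lengths.items():
        (ax, ay), (bx, by) = positions[a], positions[b]
        dx, dy = bx - ax, by - ay
        dist = math.hypot(dx, dy) or 1e-9  # avoid division by zero
        f = stiffness * (dist - rest)  # > 0: spring too long, pull ends together
        fx, fy = f * dx / dist, f * dy / dist
        forces[a][0] += fx
        forces[a][1] += fy
        forces[b][0] -= fx
        forces[b][1] -= fy
    return {
        p: pos if p in pinned else (pos[0] + forces[p][0], pos[1] + forces[p][1])
        for p, pos in positions.items()
    }
```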

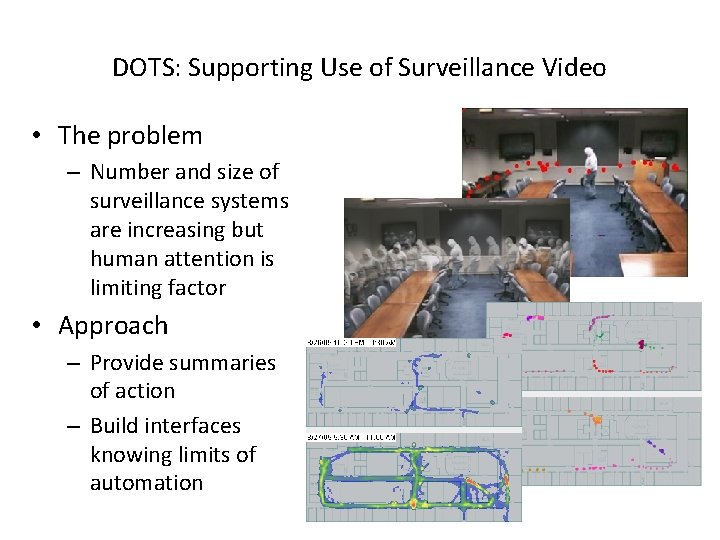

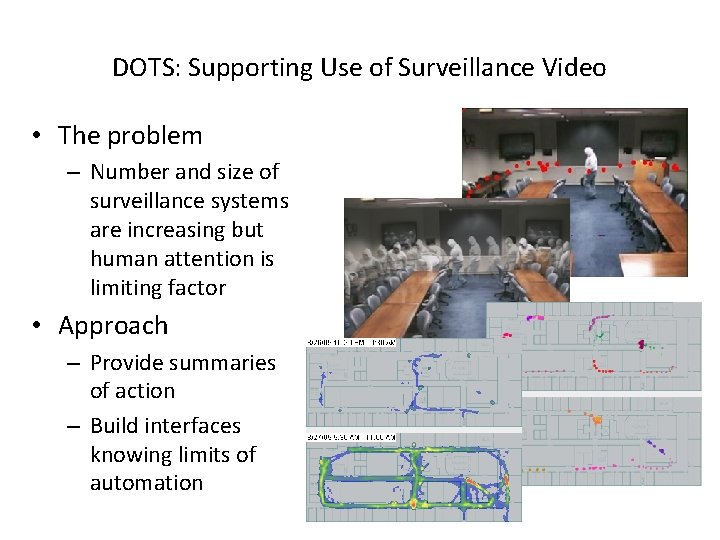

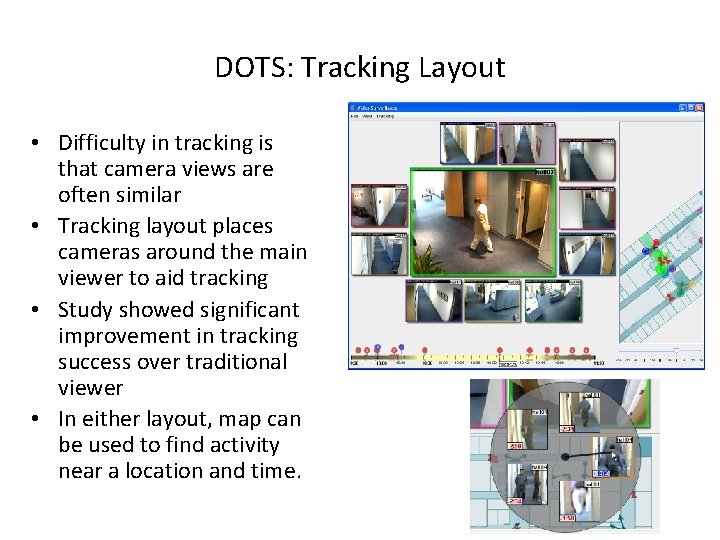

DOTS: Supporting Use of Surveillance Video
• The problem
  – The number and size of surveillance systems are increasing, but human attention is the limiting factor
• Approach
  – Provide summaries of action
  – Build interfaces with the limits of automation in mind

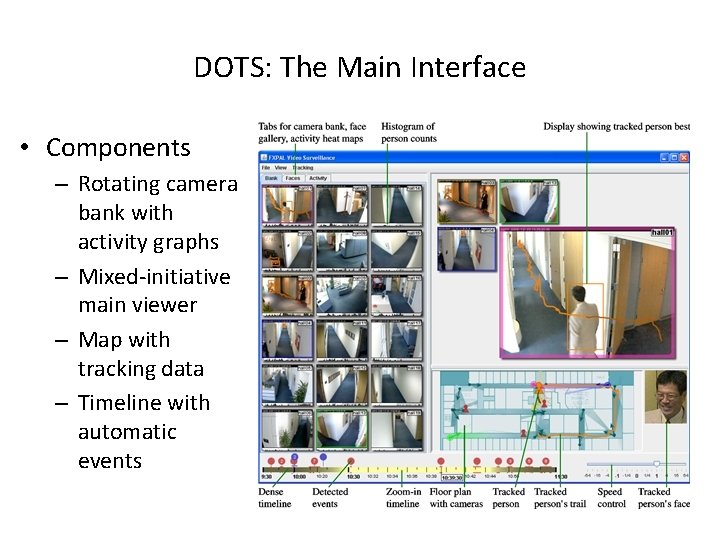

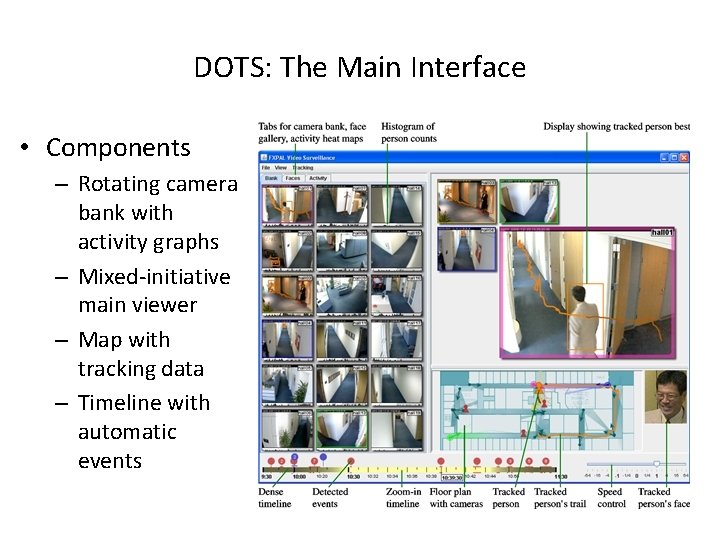

DOTS: The Main Interface
• Components
  – Rotating camera bank with activity graphs
  – Mixed-initiative main viewer
  – Map with tracking data
  – Timeline with automatically detected events

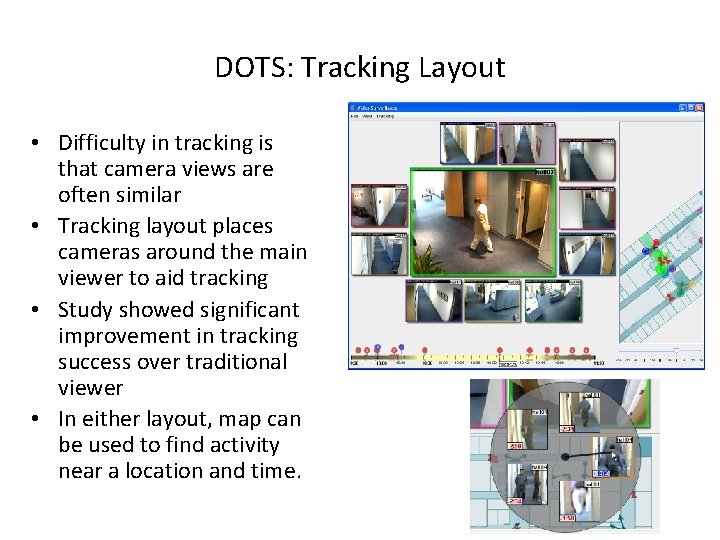

DOTS: Tracking Layout
• Tracking is difficult because camera views often look similar
• The tracking layout places cameras around the main viewer to aid tracking
• A study showed significantly better tracking success than with a traditional viewer
• In either layout, the map can be used to find activity near a location and time

Hyper-Hitchcock: Interactive Video
• Issue
  – Vision: seamlessly interact with characters in the show
  – Reality: even simple interactive videos are difficult to author
• Today, video is embedded within pages of content, but links between playing videos are uncommon

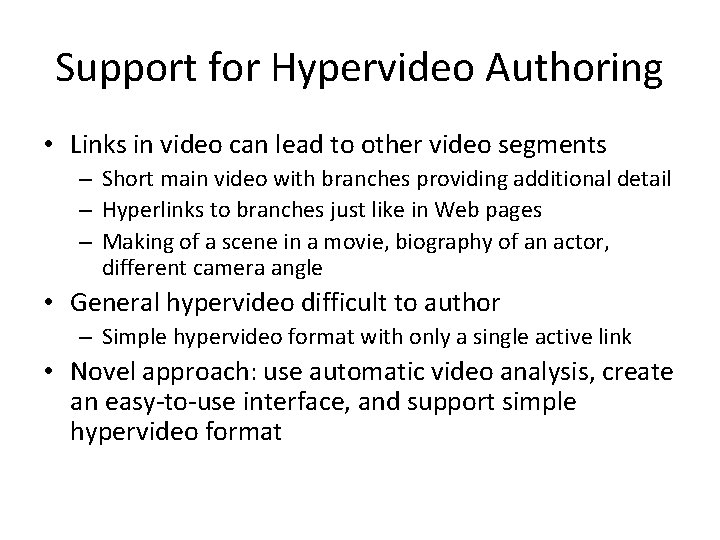

Support for Hypervideo Authoring
• Links in video can lead to other video segments
  – Short main video with branches providing additional detail
  – Hyperlinks to branches, just like in Web pages
  – Examples: the making of a scene in a movie, a biography of an actor, a different camera angle
• General hypervideo is difficult to author
  – Simple hypervideo format with only a single active link at a time
• Novel approach: use automatic video analysis, create an easy-to-use interface, and support a simple hypervideo format

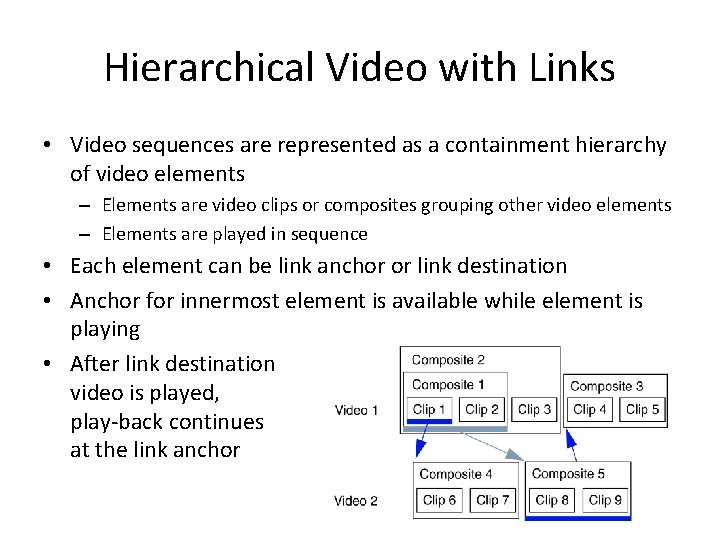

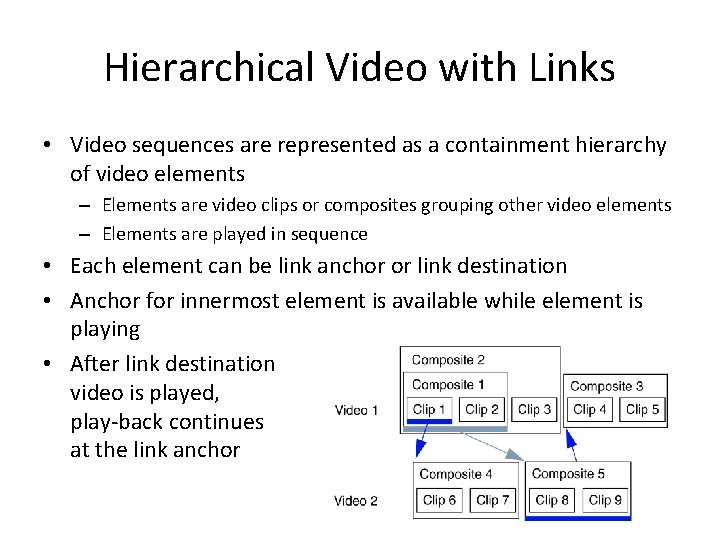

Hierarchical Video with Links
• Video sequences are represented as a containment hierarchy of video elements
  – Elements are video clips or composites grouping other video elements
  – Elements are played in sequence
• Each element can be a link anchor or a link destination
• The anchor of the innermost element is available while that element is playing
• After the link destination video has played, playback continues at the link anchor
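
The containment hierarchy and the innermost-anchor rule map naturally onto a small recursive data structure. In this hypothetical sketch, the class and field names are illustrative and are not Hyper-Hitchcock's actual format. A composite plays its children in order. The active link at time t is the link on the innermost element that has one, so an inner element's link overrides a link on its enclosing composite.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Union


@dataclass
class Clip:
    name: str
    duration: float  # seconds
    link: Optional[str] = None  # hypothetical: name of the link destination


@dataclass
class Composite:
    name: str
    children: List["Element"] = field(default_factory=list)
    link: Optional[str] = None


Element = Union[Clip, Composite]


def duration(e):
    """Total play time of an element; composites play children in sequence."""
    return e.duration if isinstance(e, Clip) else sum(duration(c) for c in e.children)


def active_anchor(e, t, inherited=None):
    """Link of the innermost element with a link that is playing at time t
    (seconds from the start of e), or None if no link is active."""
    link = e.link or inherited  # an inner link overrides the enclosing one
    if isinstance(e, Clip):
        return link
    for child in e.children:
        d = duration(child)
        if t < d:
            return active_anchor(child, t, link)
        t -= d
    return None  # t is past the end of e
```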

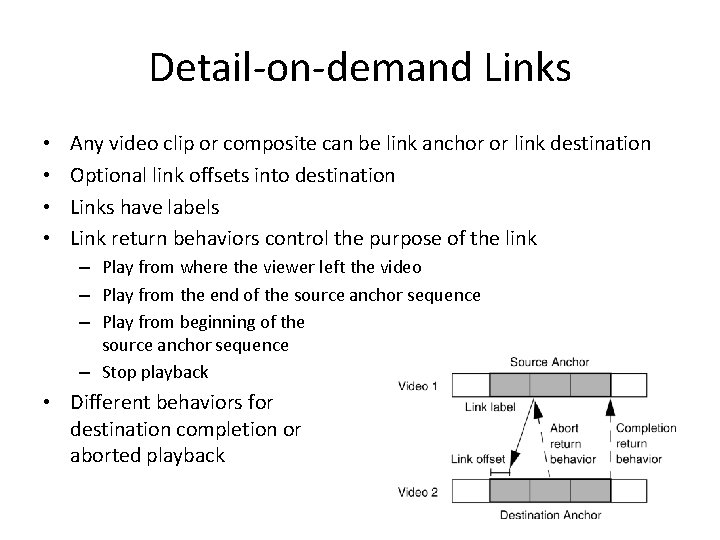

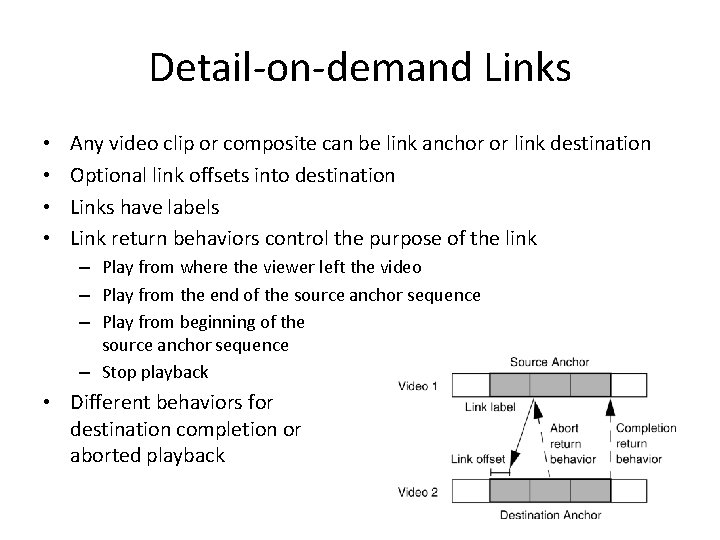

Detail-on-demand Links
• Any video clip or composite can be a link anchor or link destination
• Optional link offsets into the destination
• Links have labels
• Link return behaviors control the purpose of the link
  – Play from where the viewer left the video
  – Play from the end of the source anchor sequence
  – Play from the beginning of the source anchor sequence
  – Stop playback
• Different behaviors for destination completion and for aborted playback
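
The link options and return behaviors could be modelled as follows. This is a hypothetical sketch: the class and field names are illustrative, times are in seconds within the source video, and `None` stands for "stop playback". Each link carries one behavior for when the destination plays to completion and another for when the viewer aborts it.

```python
from dataclasses import dataclass
from enum import Enum


class ReturnBehavior(Enum):
    RESUME = "play from where the viewer left the video"
    END_OF_ANCHOR = "play from the end of the source anchor sequence"
    START_OF_ANCHOR = "play from the beginning of the source anchor sequence"
    STOP = "stop playback"


@dataclass
class Link:
    label: str
    destination: str
    offset: float = 0.0  # optional offset into the destination (seconds)
    on_complete: ReturnBehavior = ReturnBehavior.RESUME
    on_abort: ReturnBehavior = ReturnBehavior.RESUME


def return_position(behavior, left_at, anchor_start, anchor_end):
    """Source-video time at which playback resumes, or None to stop."""
    if behavior is ReturnBehavior.RESUME:
        return left_at
    if behavior is ReturnBehavior.END_OF_ANCHOR:
        return anchor_end
    if behavior is ReturnBehavior.START_OF_ANCHOR:
        return anchor_start
    return None  # ReturnBehavior.STOP


def resume_after(link, completed, left_at, anchor_start, anchor_end):
    """Choose the behavior for a completed or aborted destination and apply it."""
    behavior = link.on_complete if completed else link.on_abort
    return return_position(behavior, left_at, anchor_start, anchor_end)
```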

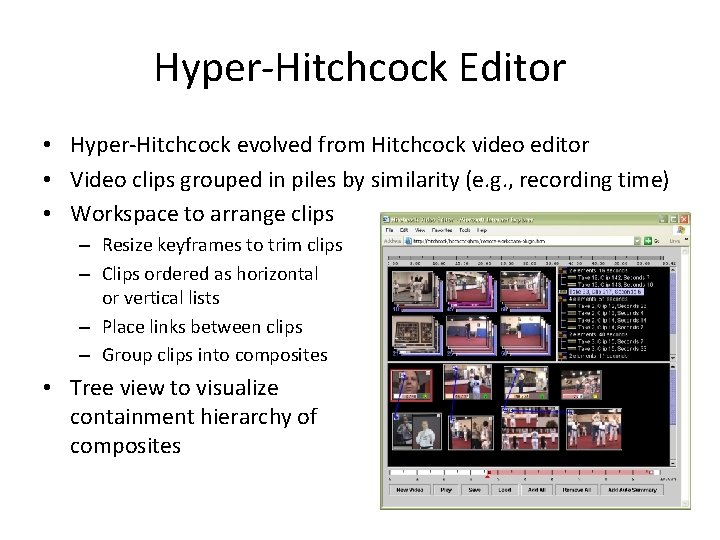

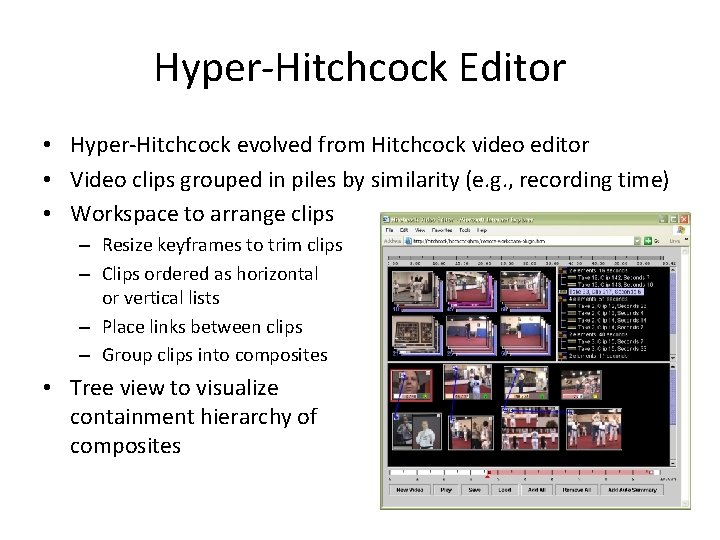

Hyper-Hitchcock Editor
• Hyper-Hitchcock evolved from the Hitchcock video editor
• Video clips are grouped in piles by similarity (e.g., recording time)
• Workspace to arrange clips
  – Resize keyframes to trim clips
  – Clips ordered as horizontal or vertical lists
  – Place links between clips
  – Group clips into composites
• Tree view to visualize the containment hierarchy of composites
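
The slides do not say how similarity is computed when grouping clips into piles. As one hypothetical illustration based on recording time, the sketch below starts a new pile whenever the gap between the end of the clips so far and the next clip's start exceeds a threshold. The tuple format and the `max_gap` value are assumptions.

```python
def pile_by_recording_time(clips, max_gap):
    """Group clips into piles by recording time.

    clips: list of (name, start, end) tuples, times in seconds.
    Clips are sorted by start time; a new pile begins when a clip starts
    more than max_gap seconds after the latest end time seen so far.
    """
    piles = []
    last_end = None
    for name, start, end in sorted(clips, key=lambda c: c[1]):
        if last_end is None or start - last_end > max_gap:
            piles.append([])
        piles[-1].append(name)
        last_end = end if last_end is None else max(last_end, end)
    return piles
```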

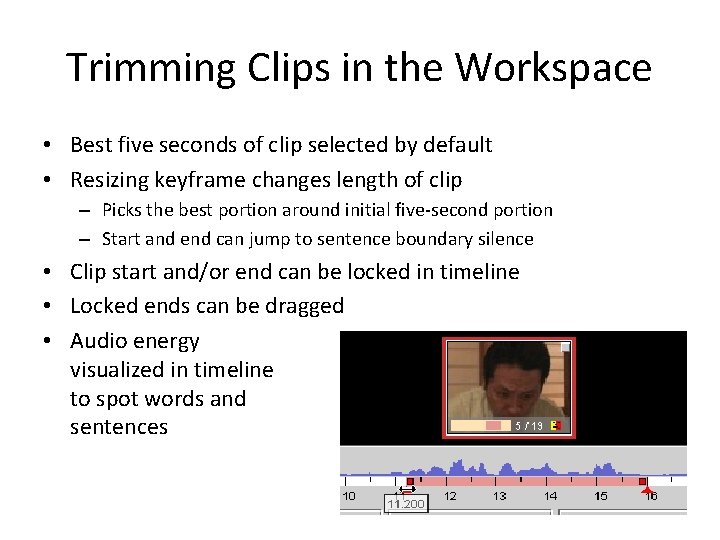

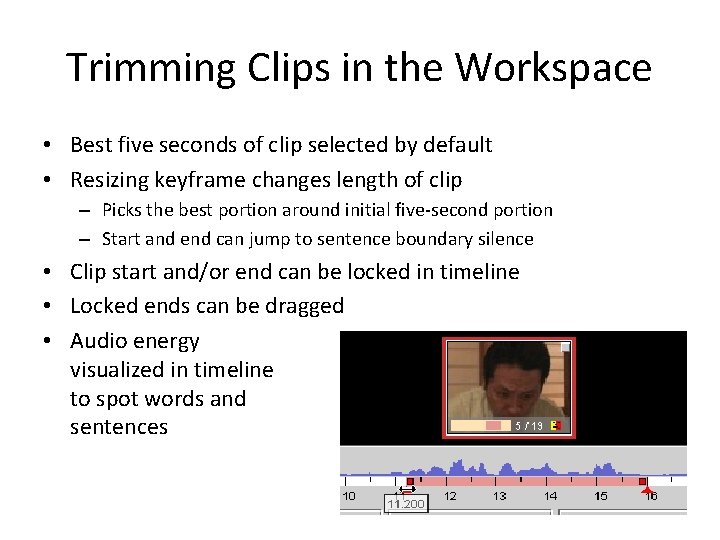

Trimming Clips in the Workspace
• The best five seconds of a clip are selected by default
• Resizing a keyframe changes the length of the clip
  – Picks the best portion around the initial five-second portion
  – Start and end can jump to silences at sentence boundaries
• Clip start and/or end can be locked in the timeline
• Locked ends can be dragged
• Audio energy is visualized in the timeline to help spot words and sentences
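
The jump to silence at sentence boundaries can be sketched as snapping a clip boundary to the nearest low-energy frame within a search window. The per-frame energy values, the silence threshold, and the maximum shift are assumptions, and `snap_to_silence` is a hypothetical name. The slides do not describe how the editor chooses the best portion of a clip, so that step is not modelled here.

```python
def snap_to_silence(time, energy, frame_duration, silence_threshold, max_shift):
    """Move a clip boundary to the nearest silent frame within max_shift seconds.

    energy: per-frame audio energy; frame i covers
            [i * frame_duration, (i + 1) * frame_duration).
    Returns the original time if no silent frame is close enough.
    """
    best = time
    best_shift = None
    for i, e in enumerate(energy):
        if e >= silence_threshold:
            continue  # not silent
        candidate = (i + 0.5) * frame_duration  # centre of the silent frame
        shift = abs(candidate - time)
        if shift <= max_shift and (best_shift is None or shift < best_shift):
            best, best_shift = candidate, shift
    return best
```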

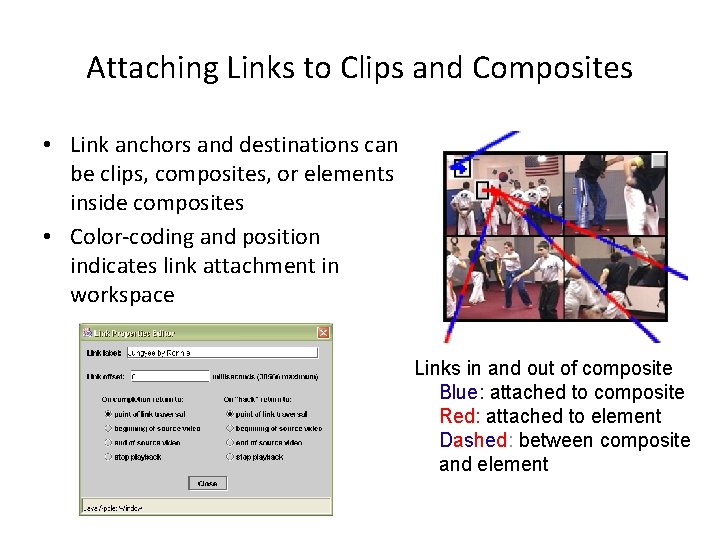

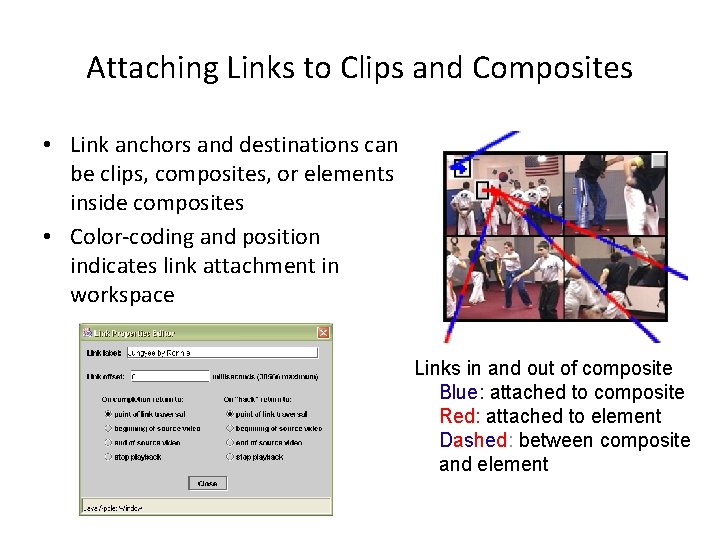

Attaching Links to Clips and Composites
• Link anchors and destinations can be clips, composites, or elements inside composites
• Color coding and position indicate link attachment in the workspace
[Figure legend, links in and out of a composite. Blue: attached to composite; Red: attached to element; Dashed: between composite and element]

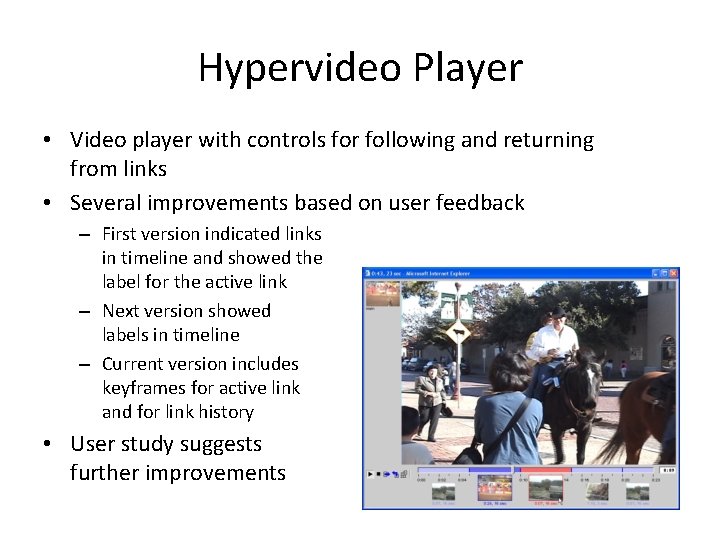

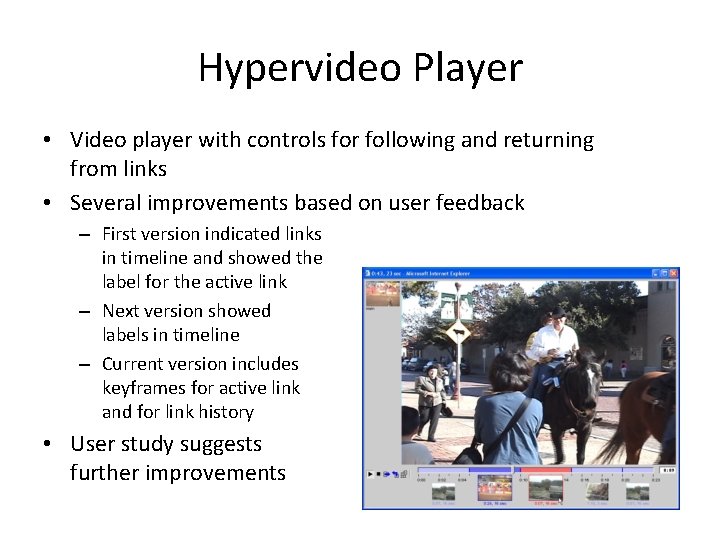

Hypervideo Player
• Video player with controls for following and returning from links
• Several improvements based on user feedback
  – First version indicated links in the timeline and showed the label for the active link
  – Next version showed labels in the timeline
  – Current version includes keyframes for the active link and for the link history
• A user study suggests further improvements

Today’s Topics
• General audio
  – Audio cues, spatialized audio
• Speech
  – Segmentation, speaker identification, recognition
• Music
  – Interactive music, summarization, organization
• Image and video processing
  – Color-oriented representations
  – Region and temporal segmentation
  – Foreground-background separation
  – Edge and face detection
• Image and video applications
  – MediaGLOW: image selection
  – DOTS: surveillance
  – Hyper-Hitchcock: interactive video