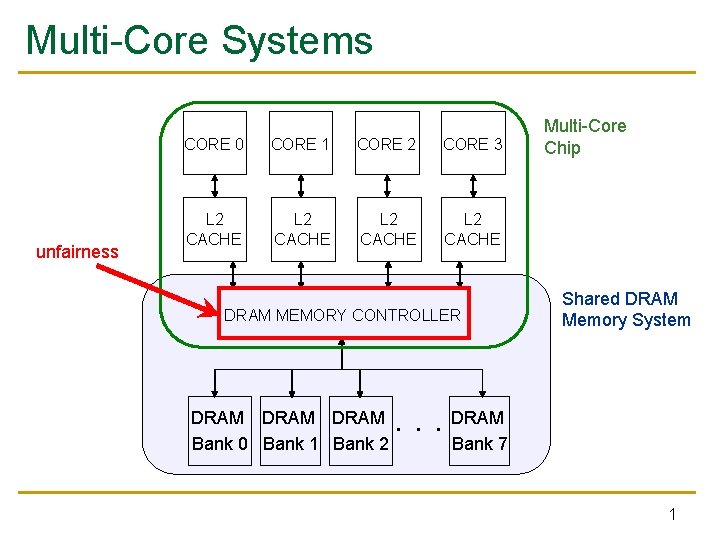

- Slides: 30

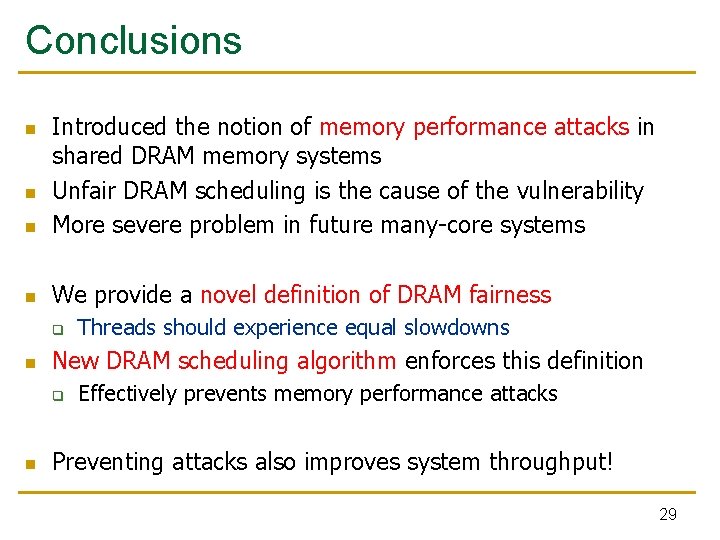

Multi-Core Systems

(Figure: a multi-core chip with Core 0 through Core 3, an L2 cache, and a DRAM memory controller, attached to a shared DRAM memory system with DRAM Bank 0 through DRAM Bank 7. Figure label: "unfairness".)

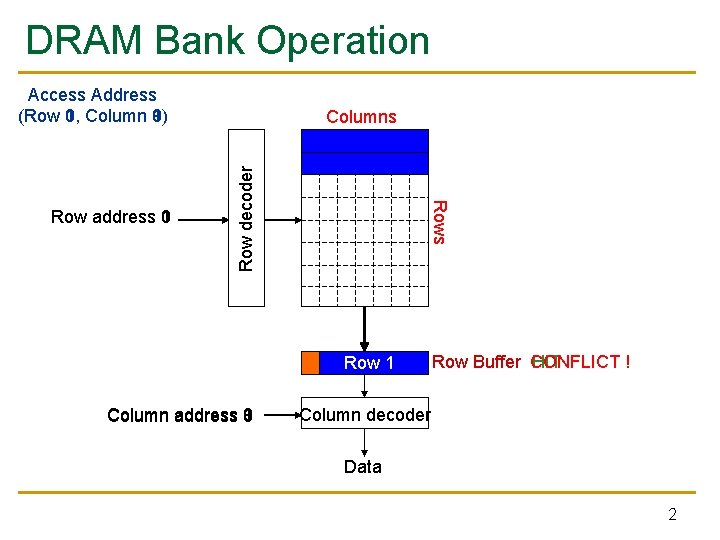

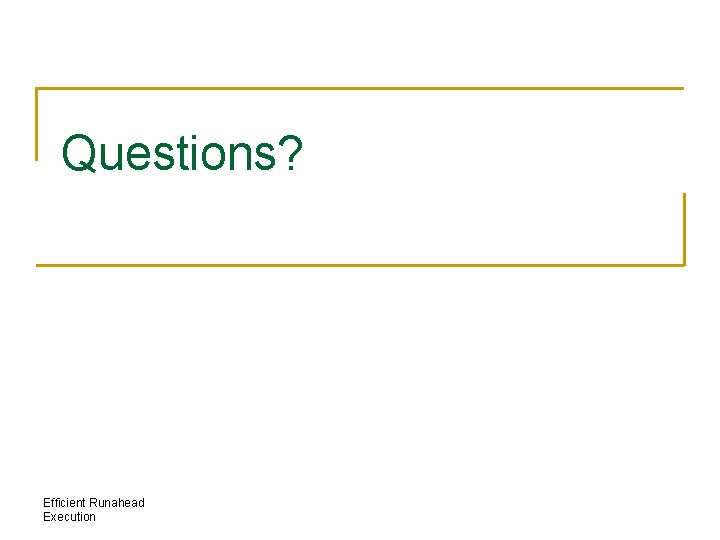

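DRAM Bank Operation

(Figure: a DRAM bank with rows and columns, a row decoder fed by the row address, a column decoder fed by the column address, and a row buffer that starts out empty. The animation steps through access addresses in Row 0 (Columns 0, 1, and 9) and Row 1 (Column 0), marking each access as a row-buffer HIT or CONFLICT.)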

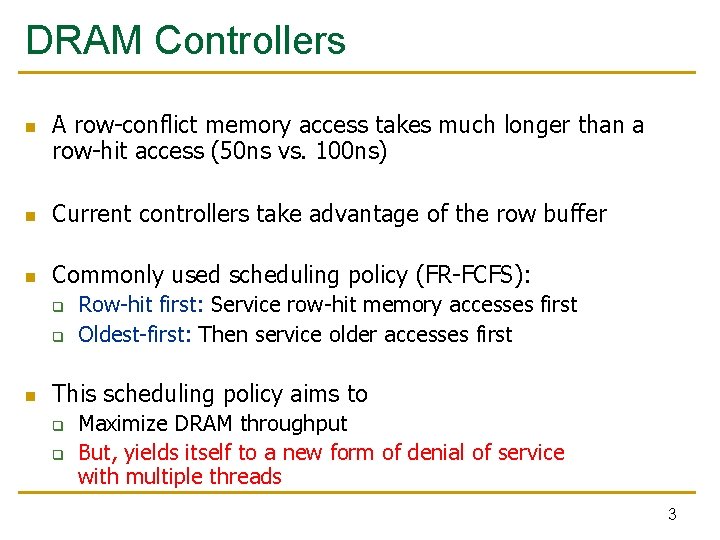

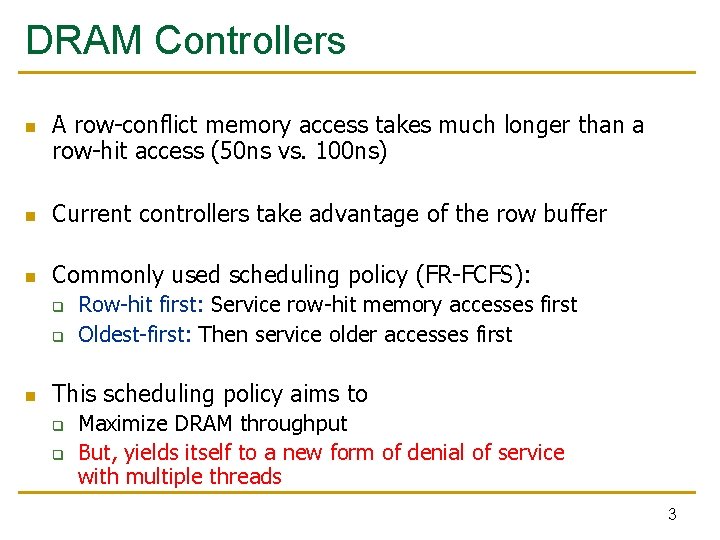

DRAM Controllers

- A row-conflict memory access takes much longer than a row-hit access (100 ns vs. 50 ns)
- Current controllers take advantage of the row buffer
- Commonly used scheduling policy (FR-FCFS):
  - Row-hit first: service row-hit memory accesses first
  - Oldest-first: then service older accesses first
- This scheduling policy aims to
  - Maximize DRAM throughput
  - But it lends itself to a new form of denial of service with multiple threads
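The FR-FCFS policy described above can be sketched for a single bank as follows. This is a minimal illustration, not the controller's actual logic: the `Request` type, `pick_fr_fcfs`, and `service_order` are hypothetical names, and the model ignores timing, multiple banks, and newly arriving requests.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Request:
    thread: int   # id of the issuing thread (hypothetical field)
    row: int      # DRAM row the request targets
    arrival: int  # arrival time; a smaller value means an older request


def pick_fr_fcfs(queue: List[Request], open_row: Optional[int]) -> Request:
    """Pick the next request: row hits first, then oldest first."""
    # Key is (is_not_a_row_hit, arrival). False sorts before True, so
    # row hits win; among equals, the oldest request wins.
    return min(queue, key=lambda r: (r.row != open_row, r.arrival))


def service_order(queue: List[Request], open_row: Optional[int]) -> List[Request]:
    """Drain a fixed queue and return the order in which requests are served."""
    pending = list(queue)
    order = []
    while pending:
        req = pick_fr_fcfs(pending, open_row)
        pending.remove(req)
        open_row = req.row  # the serviced row is now in the row buffer
        order.append(req)
    return order


# Thread 1 arrived first but targets a closed row; thread 0 keeps hitting row 0.
queue = [Request(1, 5, 0), Request(0, 0, 1), Request(0, 0, 2), Request(0, 0, 3)]
print([r.thread for r in service_order(queue, open_row=0)])  # [0, 0, 0, 1]
```

Even in this toy model the oldest request is served last, which is the behavior the denial-of-service argument relies on.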

Memory Performance Attacks: Denial of Memory Service in Multi-Core Systems

Thomas Moscibroda and Onur Mutlu

Computer Architecture Group, Microsoft Research

Outline

- The Problem
  - Memory Performance Hogs
- Preventing Memory Performance Hogs
- Our Solution
  - Fair Memory Scheduling
- Experimental Evaluation
- Conclusions

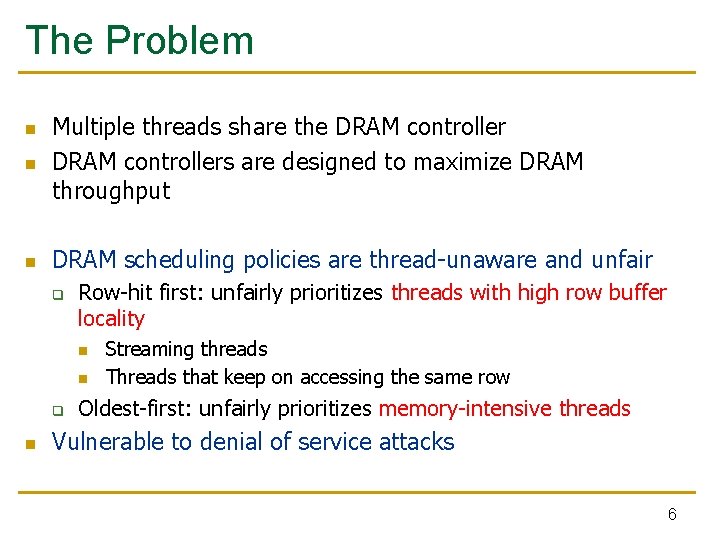

The Problem

- Multiple threads share the DRAM controller
- DRAM controllers are designed to maximize DRAM throughput
- DRAM scheduling policies are thread-unaware and unfair
  - Row-hit first: unfairly prioritizes threads with high row buffer locality
    - Streaming threads
    - Threads that keep on accessing the same row
  - Oldest-first: unfairly prioritizes memory-intensive threads
- Vulnerable to denial of service attacks

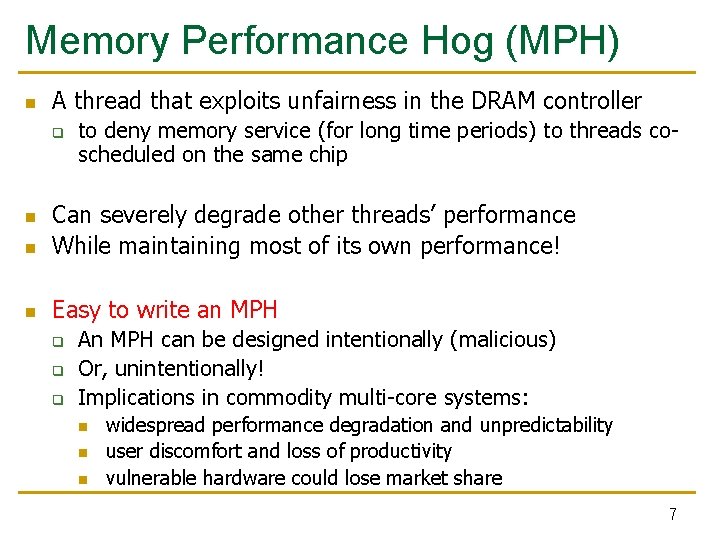

Memory Performance Hog (MPH)

- A thread that exploits unfairness in the DRAM controller
  - to deny memory service (for long time periods) to threads co-scheduled on the same chip
- Can severely degrade other threads’ performance
  - While maintaining most of its own performance!
- Easy to write an MPH
  - An MPH can be designed intentionally (malicious)
  - Or unintentionally!
- Implications in commodity multi-core systems:
  - Widespread performance degradation and unpredictability
  - User discomfort and loss of productivity
  - Vulnerable hardware could lose market share

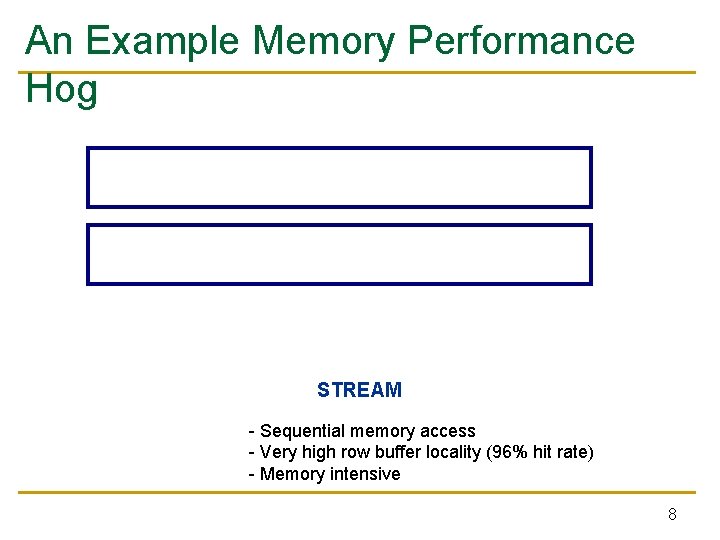

An Example Memory Performance Hog: STREAM

- Sequential memory access
- Very high row buffer locality (96% hit rate)
- Memory intensive

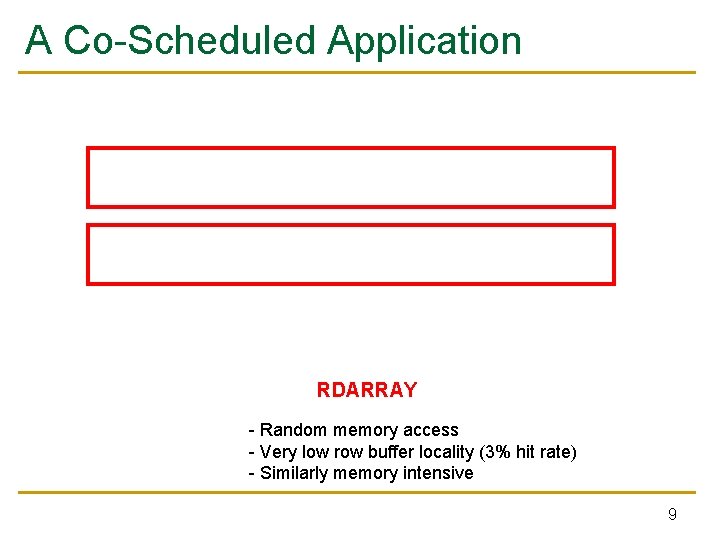

A Co-Scheduled Application: RDARRAY

- Random memory access
- Very low row buffer locality (3% hit rate)
- Similarly memory intensive
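The difference in row buffer locality between the two access patterns can be illustrated with a toy model. This is a sketch under stated assumptions, not the benchmarks themselves: it models a single bank, the constants `ROW_SIZE` and `LINE_SIZE` and the 64 MB array size are assumed values, and `row_hit_rate` is a hypothetical helper. It will not reproduce the measured 96% and 3% hit rates, only the direction of the gap.

```python
import random

ROW_SIZE = 8 * 1024   # bytes per DRAM row (assumed)
LINE_SIZE = 64        # bytes fetched per memory request (assumed)


def row_hit_rate(addresses):
    """Fraction of accesses whose row is already open in the row buffer."""
    open_row = None
    hits = 0
    for addr in addresses:
        row = addr // ROW_SIZE
        if row == open_row:
            hits += 1
        open_row = row  # the accessed row replaces the buffer contents
    return hits / len(addresses)


n = 100_000
array_bytes = 64 * 1024 * 1024  # assumed array size

# STREAM-like pattern: walk the array one cache line at a time.
sequential = [i * LINE_SIZE for i in range(n)]

# RDARRAY-like pattern: jump to a random cache line on every access.
rng = random.Random(0)
random_access = [rng.randrange(array_bytes // LINE_SIZE) * LINE_SIZE
                 for _ in range(n)]

print(f"sequential hit rate: {row_hit_rate(sequential):.3f}")
print(f"random hit rate:     {row_hit_rate(random_access):.3f}")
```

In this model the sequential walk misses only when it crosses into a new row, while the random pattern almost never finds its row open.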

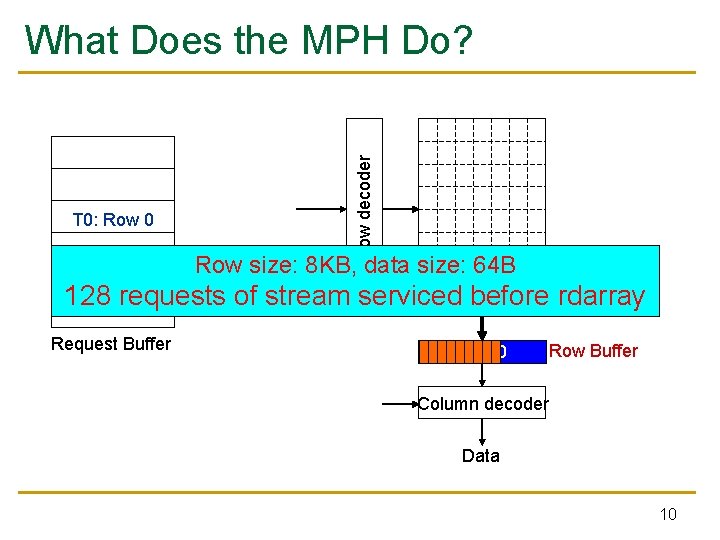

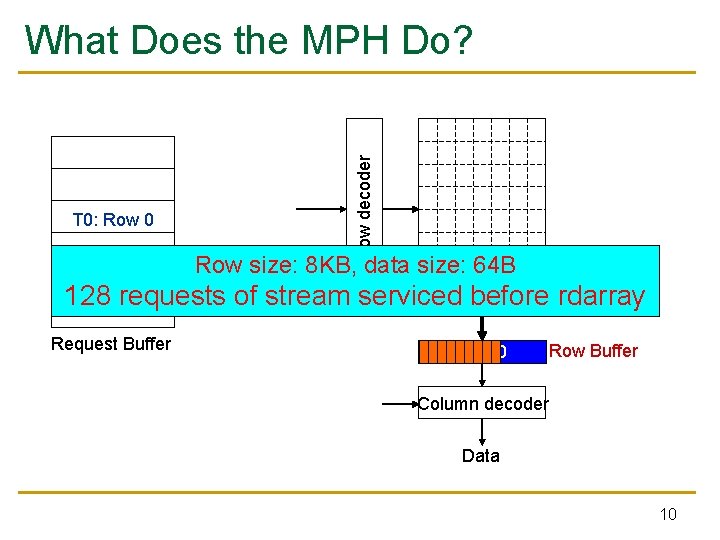

What Does the MPH Do?

(Figure: a request buffer holding T0 requests to Row 0 interleaved with T1 requests to Rows 5, 111, and 16, feeding a bank with a row decoder, column decoder, and a row buffer that holds Row 0.)

- Row size: 8 KB, data size: 64 B
- 128 requests of stream serviced before rdarray
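The figure of 128 requests follows directly from the row and request sizes on this slide:

$$
\frac{8\ \text{KB}}{64\ \text{B}} = \frac{8192\ \text{B}}{64\ \text{B}} = 128
$$

As a rough illustration only, assuming each of those requests is a row hit costing the 50 ns quoted on the DRAM Controllers slide and that they are serviced back to back, a waiting rdarray request would be delayed by about

$$
128 \times 50\ \text{ns} = 6400\ \text{ns} = 6.4\ \mu\text{s}
$$

The real delay depends on the system's actual DRAM timing, which this slide does not give.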

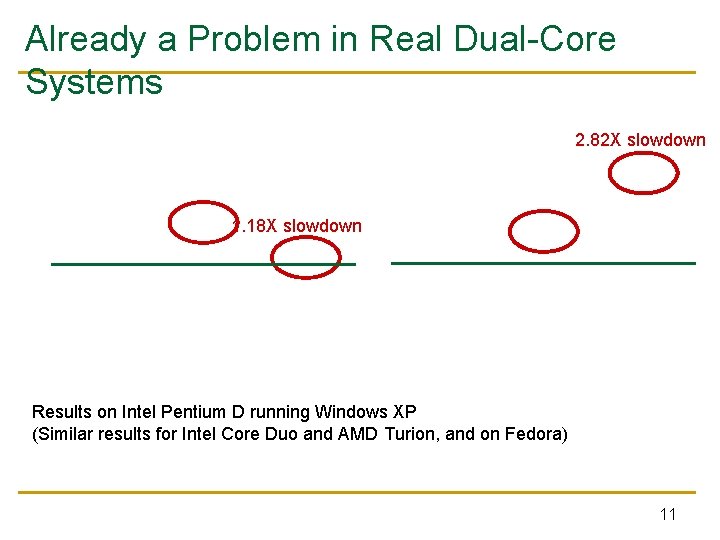

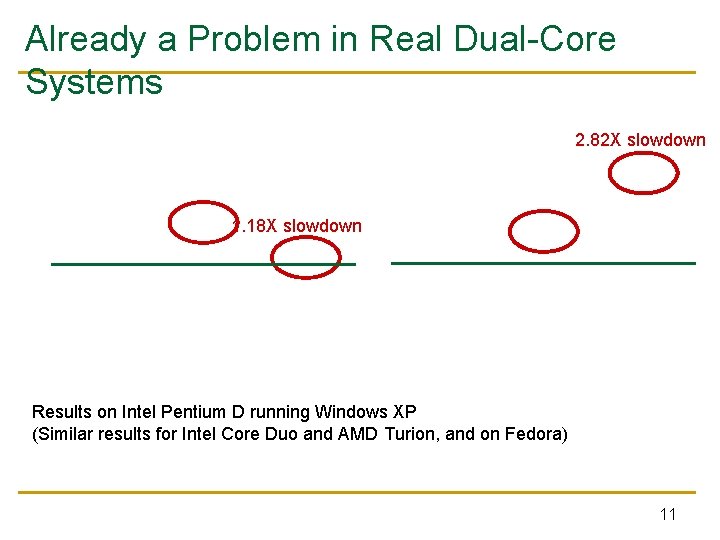

Already a Problem in Real Dual-Core Systems

(Figure labels: 2.82X slowdown, 1.18X slowdown.)

- Results on Intel Pentium D running Windows XP
- Similar results for Intel Core Duo and AMD Turion, and on Fedora

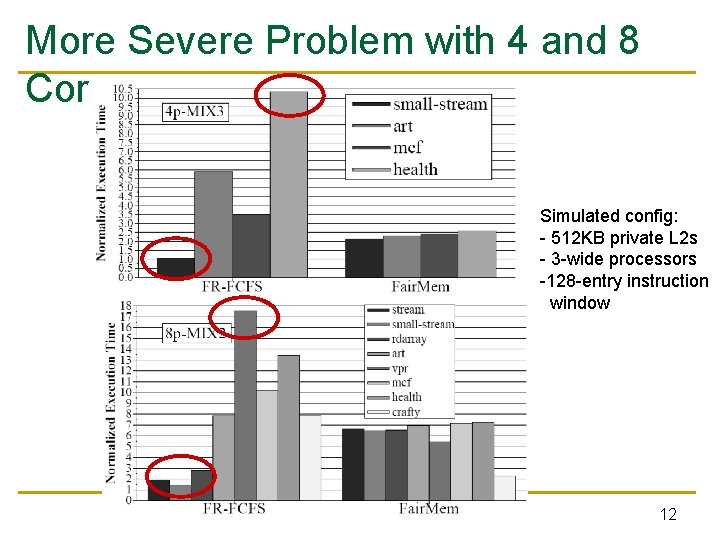

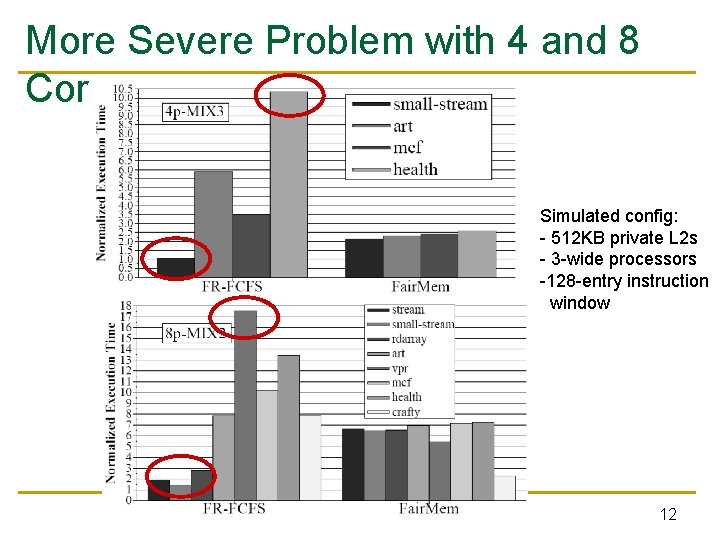

More Severe Problem with 4 and 8 Cores

- Simulated configuration:
  - 512 KB private L2s
  - 3-wide processors
  - 128-entry instruction window

Preventing Memory Performance Hogs

- MPHs cannot be prevented in software
  - Fundamentally hard to distinguish between malicious and unintentional MPHs
- Software has no direct control over the DRAM controller’s scheduling
  - OS/VMM can adjust applications’ virtual-to-physical page mappings
  - No control over the DRAM controller’s hardwired page-to-bank mappings
- MPHs should be prevented in hardware
  - Hardware also cannot distinguish malicious vs. unintentional
  - Goal: contain and limit MPHs by providing fair memory scheduling

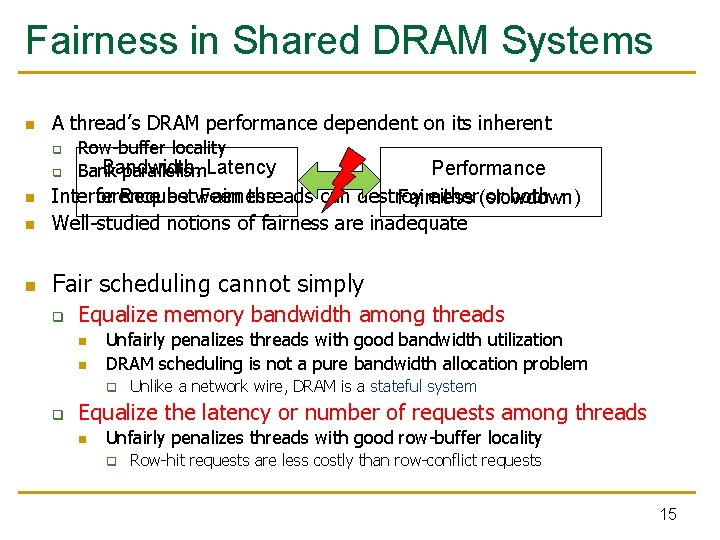

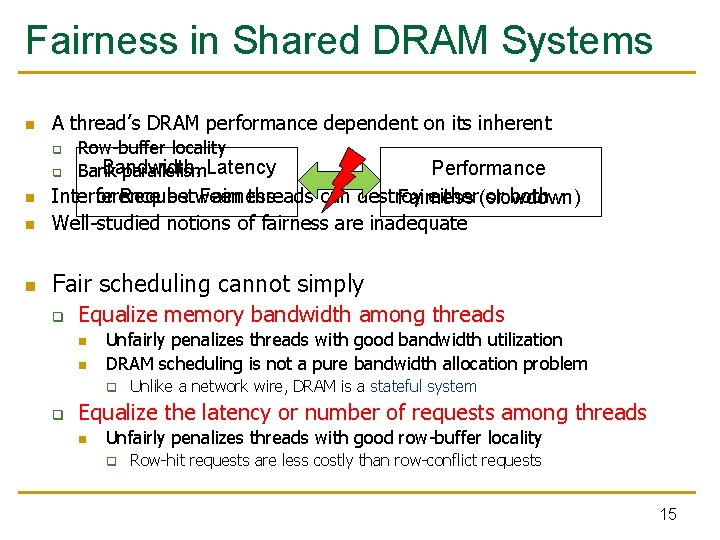

Fairness in Shared DRAM Systems

(Figure labels: Bandwidth Fairness, Latency or Request Fairness, Performance (slowdown) Fairness.)

- A thread’s DRAM performance is dependent on its inherent
  - Row-buffer locality
  - Bank parallelism
- Interference between threads can destroy either or both
- Well-studied notions of fairness are inadequate
- Fair scheduling cannot simply
  - Equalize memory bandwidth among threads
    - Unfairly penalizes threads with good bandwidth utilization
    - DRAM scheduling is not a pure bandwidth allocation problem
    - Unlike a network wire, DRAM is a stateful system
  - Equalize the latency or number of requests among threads
    - Unfairly penalizes threads with good row-buffer locality
    - Row-hit requests are less costly than row-conflict requests

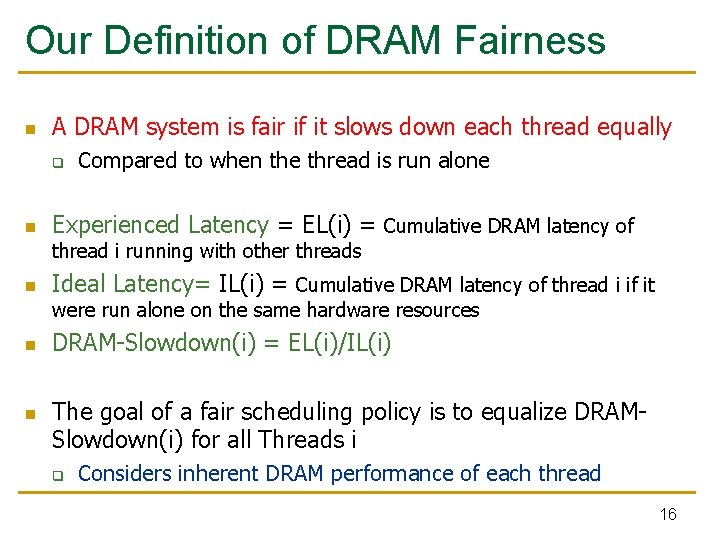

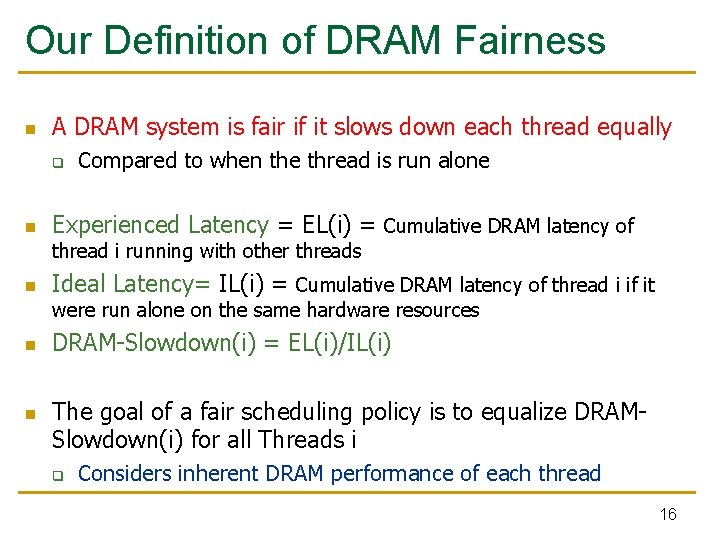

Our Definition of DRAM Fairness

- A DRAM system is fair if it slows down each thread equally
  - Compared to when the thread is run alone
- Experienced Latency = EL(i) = cumulative DRAM latency of thread i running with other threads
- Ideal Latency = IL(i) = cumulative DRAM latency of thread i if it were run alone on the same hardware resources
- DRAM-Slowdown(i) = EL(i) / IL(i)
- The goal of a fair scheduling policy is to equalize DRAM-Slowdown(i) for all threads i
  - Considers inherent DRAM performance of each thread
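The definition can be made concrete with a short calculation. The sketch below uses made-up latency totals purely for illustration; `dram_slowdown` and `unfairness_index` are hypothetical helper names, and the max/min ratio is the unfairness index that the scheduling algorithm slides define.

```python
def dram_slowdown(experienced_latency, ideal_latency):
    """DRAM-Slowdown(i) = EL(i) / IL(i)."""
    return experienced_latency / ideal_latency


def unfairness_index(slowdowns):
    """Ratio of the largest to the smallest slowdown; 1.0 means perfectly fair."""
    return max(slowdowns) / min(slowdowns)


# Hypothetical cumulative latencies in nanoseconds (not measured data).
el = {"thread0": 1100, "thread1": 2800}   # running together
il = {"thread0": 1000, "thread1": 1000}   # each running alone

slowdowns = {t: dram_slowdown(el[t], il[t]) for t in el}
print(slowdowns)
print(unfairness_index(slowdowns.values()))
```

Here both threads have the same ideal latency, yet one is slowed far more than the other, so the system is unfair under this definition even though both threads make progress.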

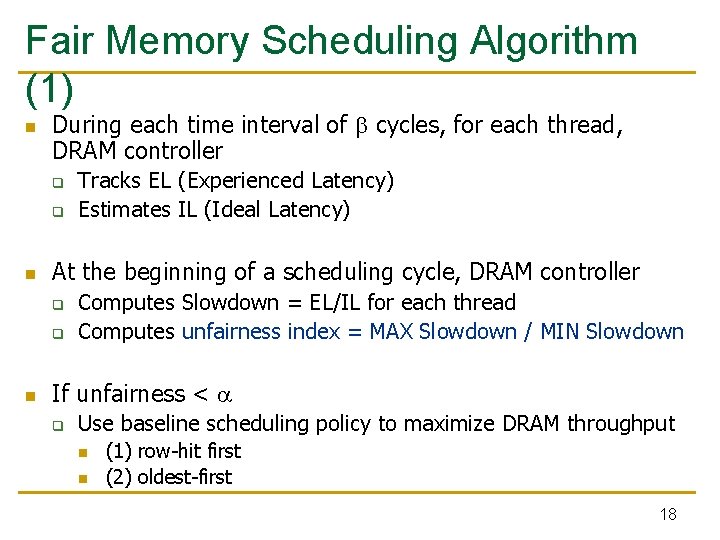

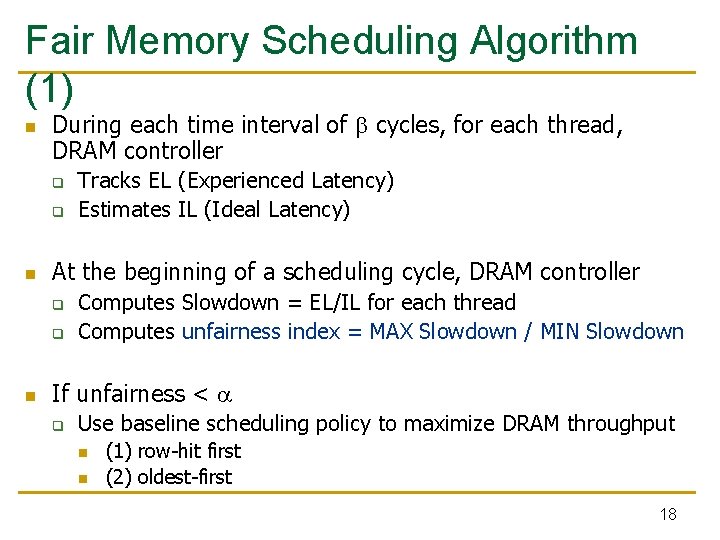

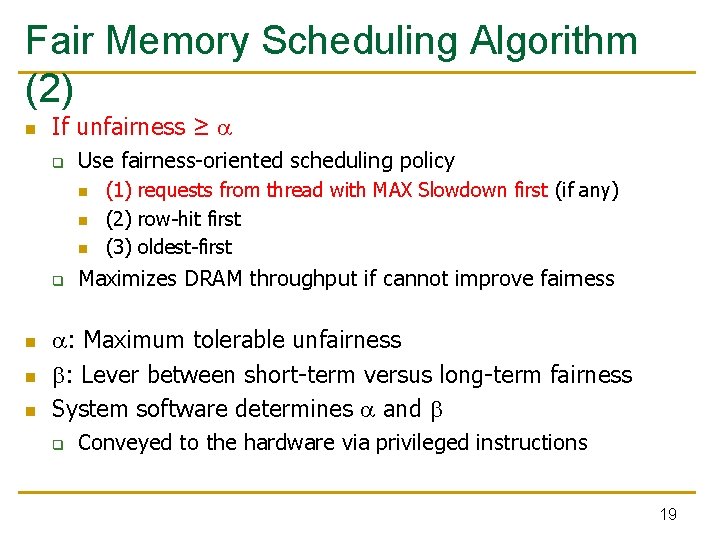

Fair Memory Scheduling Algorithm (1)

- During each time interval of β cycles, for each thread, the DRAM controller
  - Tracks EL (Experienced Latency)
  - Estimates IL (Ideal Latency)
- At the beginning of a scheduling cycle, the DRAM controller
  - Computes Slowdown = EL/IL for each thread
  - Computes unfairness index = MAX Slowdown / MIN Slowdown
- If unfairness < α
  - Use baseline scheduling policy to maximize DRAM throughput
    - (1) row-hit first
    - (2) oldest-first

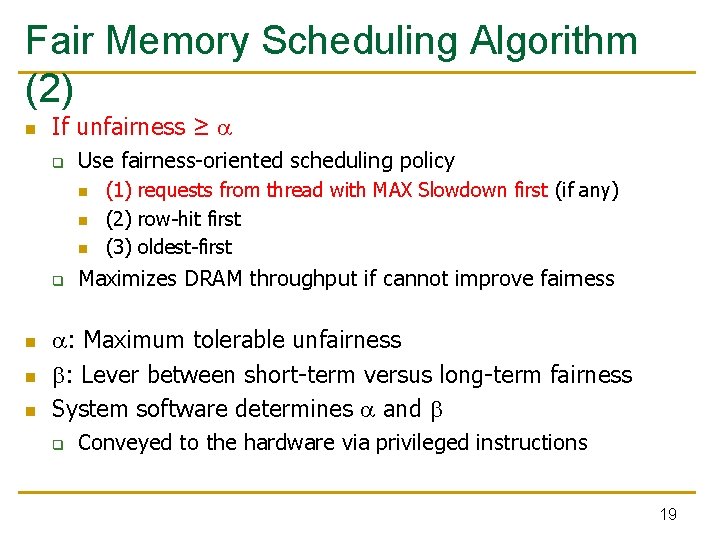

Fair Memory Scheduling Algorithm (2)

- If unfairness ≥ α
  - Use fairness-oriented scheduling policy
    - (1) requests from thread with MAX Slowdown first (if any)
    - (2) row-hit first
    - (3) oldest-first
  - Maximizes DRAM throughput if it cannot improve fairness
- α: maximum tolerable unfairness
- β: lever between short-term versus long-term fairness
- System software determines α and β
  - Conveyed to the hardware via privileged instructions
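The two-mode policy from these algorithm slides can be sketched as a single selection function. This is a simplified single-bank illustration, not the hardware design: `Request`, `pick_fair`, and the `alpha` parameter name (standing for the maximum tolerable unfairness threshold) are assumptions, and the per-thread slowdowns are passed in rather than tracked.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Request:
    thread: int   # id of the issuing thread (hypothetical field)
    row: int      # DRAM row the request targets
    arrival: int  # arrival time; a smaller value means an older request


def pick_fair(queue: List[Request], open_row: Optional[int],
              slowdown: Dict[int, float], alpha: float) -> Request:
    """Choose the next request given per-thread slowdowns (EL/IL)."""
    unfairness = max(slowdown.values()) / min(slowdown.values())
    candidates = queue
    if unfairness >= alpha:
        # Fairness mode: prefer the most slowed-down thread, if it has requests.
        slowest = max(slowdown, key=slowdown.get)
        preferred = [r for r in queue if r.thread == slowest]
        if preferred:
            candidates = preferred
    # In both modes, finish with row-hit first, then oldest first.
    return min(candidates, key=lambda r: (r.row != open_row, r.arrival))


queue = [Request(0, 0, 1), Request(1, 5, 0)]
slowdown = {0: 1.0, 1: 1.2}
print(pick_fair(queue, 0, slowdown, alpha=1.1).thread)  # 1: fairness mode
print(pick_fair(queue, 0, slowdown, alpha=1.5).thread)  # 0: baseline, row hit wins
```

When the most slowed-down thread has nothing queued, the function falls back to the baseline order, matching the slide's note that throughput is maximized when fairness cannot be improved.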

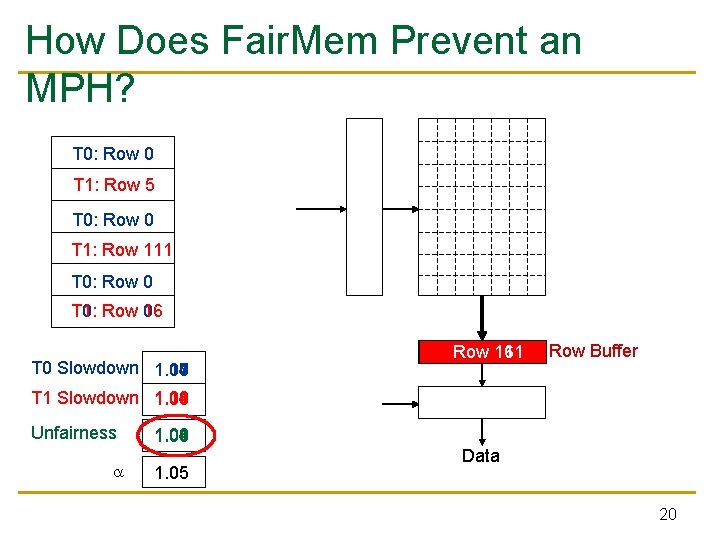

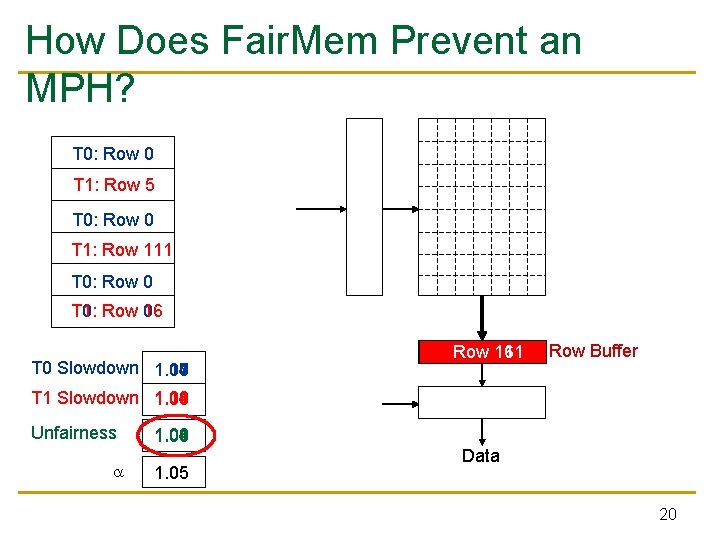

How Does FairMem Prevent an MPH?

(Animated figure: a request buffer holding T0 requests to Row 0 and T1 requests to Rows 5, 111, and 16, with the row buffer contents changing as requests are serviced. Values shown during the animation:)

- T0 Slowdown: 1.10, 1.04, 1.07, 1.03
- T1 Slowdown: 1.14, 1.03, 1.06, 1.08, 1.11, 1.00
- Unfairness: 1.06, 1.04, 1.03, 1.00, 1.05

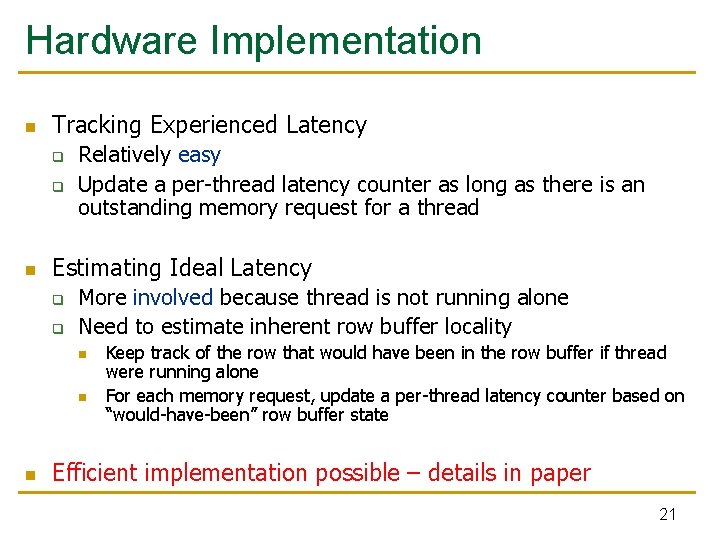

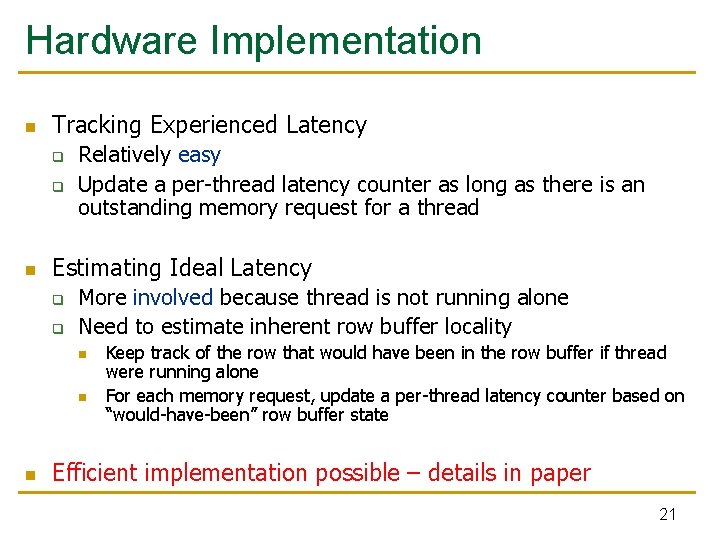

Hardware Implementation

- Tracking Experienced Latency
  - Relatively easy
  - Update a per-thread latency counter as long as there is an outstanding memory request for a thread
- Estimating Ideal Latency
  - More involved because the thread is not running alone
  - Need to estimate inherent row buffer locality
    - Keep track of the row that would have been in the row buffer if the thread were running alone
    - For each memory request, update a per-thread latency counter based on the “would-have-been” row buffer state
  - Efficient implementation possible (details in paper)
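The "would-have-been" row buffer idea can be sketched in software as follows. This is only an illustration of the bookkeeping, not the hardware design from the paper: `IdealLatencyEstimator` and its fields are hypothetical, the latencies are the 50 ns and 100 ns figures quoted in this deck, the first access to a bank is charged as a conflict, and bank-level parallelism is ignored.

```python
ROW_HIT_NS = 50        # row-hit latency quoted in this deck
ROW_CONFLICT_NS = 100  # row-conflict latency quoted in this deck


class IdealLatencyEstimator:
    """Estimates each thread's cumulative latency as if it ran alone."""

    def __init__(self):
        # (thread, bank) -> row that would be open if the thread ran alone
        self.alone_row = {}
        # thread -> estimated cumulative ideal latency in ns
        self.ideal_latency = {}

    def on_request(self, thread, bank, row):
        key = (thread, bank)
        # Hit or conflict is judged against the thread's private shadow
        # state, not the real row buffer that other threads disturb.
        hit = self.alone_row.get(key) == row
        cost = ROW_HIT_NS if hit else ROW_CONFLICT_NS
        self.ideal_latency[thread] = self.ideal_latency.get(thread, 0) + cost
        self.alone_row[key] = row


est = IdealLatencyEstimator()
# Thread 0 streams through row 0; thread 1 interleaves accesses to other rows.
for thread, row in [(0, 0), (1, 5), (0, 0), (1, 111), (0, 0)]:
    est.on_request(thread, bank=0, row=row)
print(est.ideal_latency)  # {0: 200, 1: 200}
```

Thread 0 is charged one conflict and two hits despite thread 1's interleaved accesses, because its shadow row state is unaffected by the other thread.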

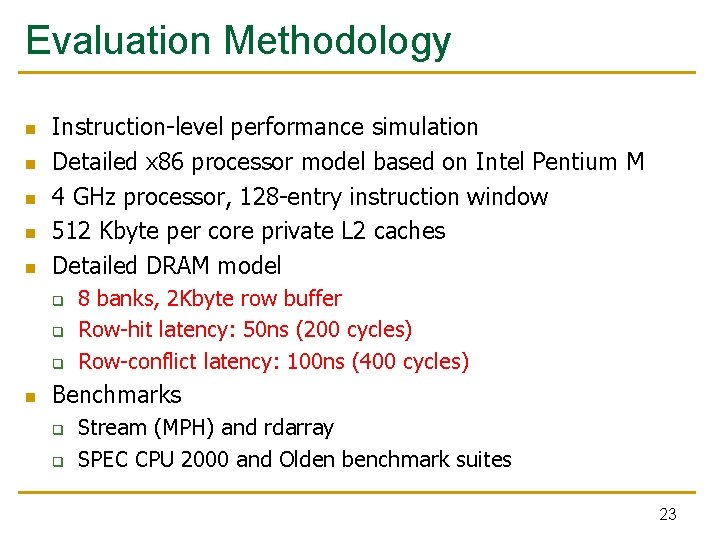

Evaluation Methodology

- Instruction-level performance simulation
- Detailed x86 processor model based on Intel Pentium M
  - 4 GHz processor, 128-entry instruction window
  - 512 Kbyte per core private L2 caches
- Detailed DRAM model
  - 8 banks, 2 Kbyte row buffer
  - Row-hit latency: 50 ns (200 cycles)
  - Row-conflict latency: 100 ns (400 cycles)
- Benchmarks
  - Stream (MPH) and rdarray
  - SPEC CPU 2000 and Olden benchmark suites
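The cycle counts in the DRAM model are consistent with the 4 GHz clock, assuming they are expressed in processor cycles:

$$
50\ \text{ns} \times 4\ \text{GHz} = 50 \times 10^{-9}\ \text{s} \times 4 \times 10^{9}\ \tfrac{\text{cycles}}{\text{s}} = 200\ \text{cycles}
$$

$$
100\ \text{ns} \times 4\ \text{GHz} = 400\ \text{cycles}
$$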

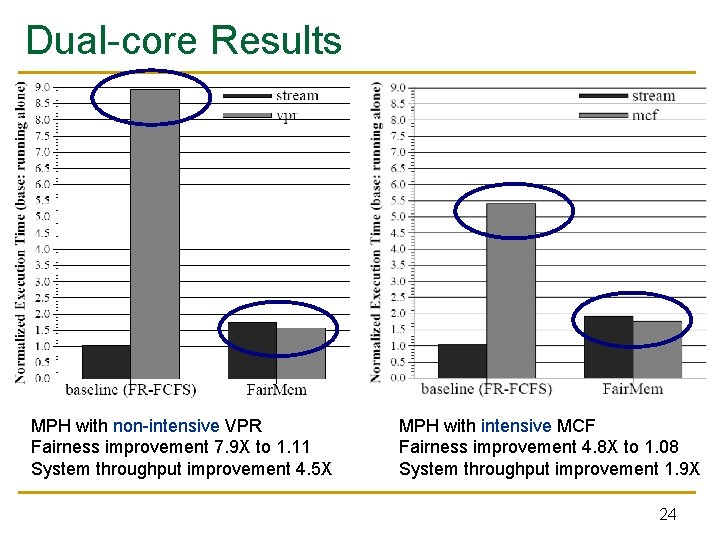

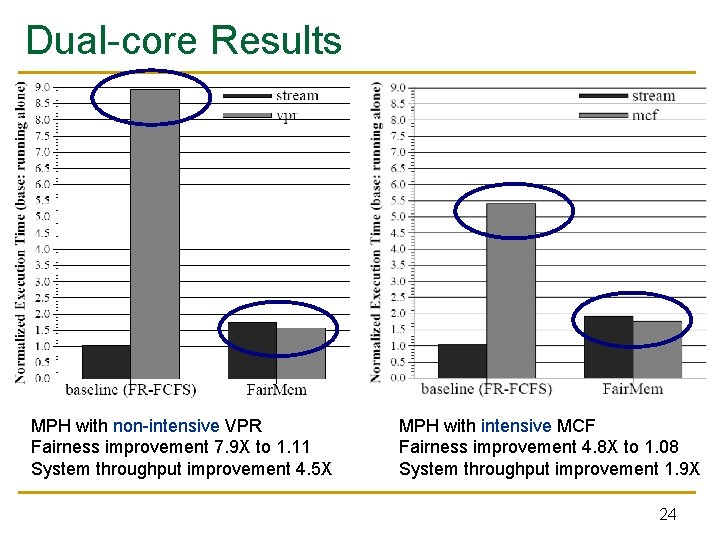

Dual-core Results

- MPH with non-intensive VPR
  - Fairness improvement: 7.9X to 1.11
  - System throughput improvement: 4.5X
- MPH with intensive MCF
  - Fairness improvement: 4.8X to 1.08
  - System throughput improvement: 1.9X

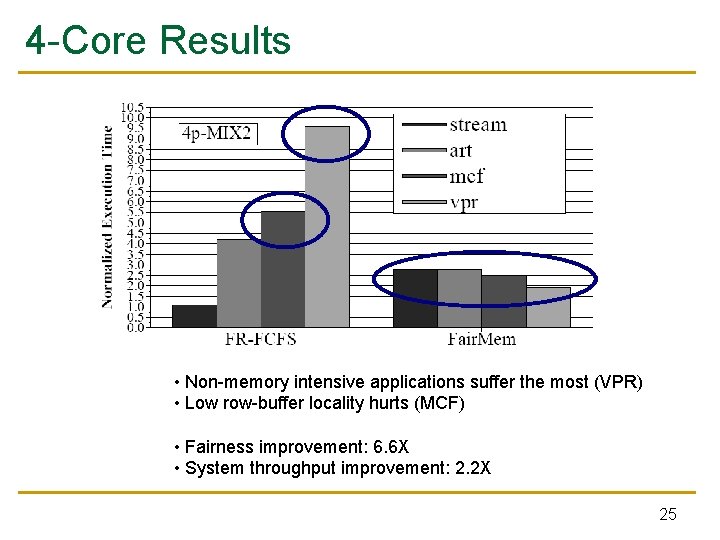

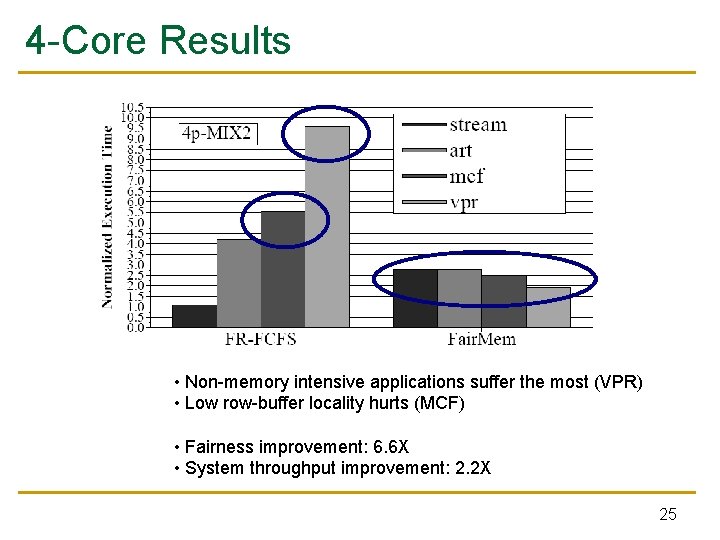

4-Core Results

- Non-memory-intensive applications suffer the most (VPR)
- Low row-buffer locality hurts (MCF)
- Fairness improvement: 6.6X
- System throughput improvement: 2.2X

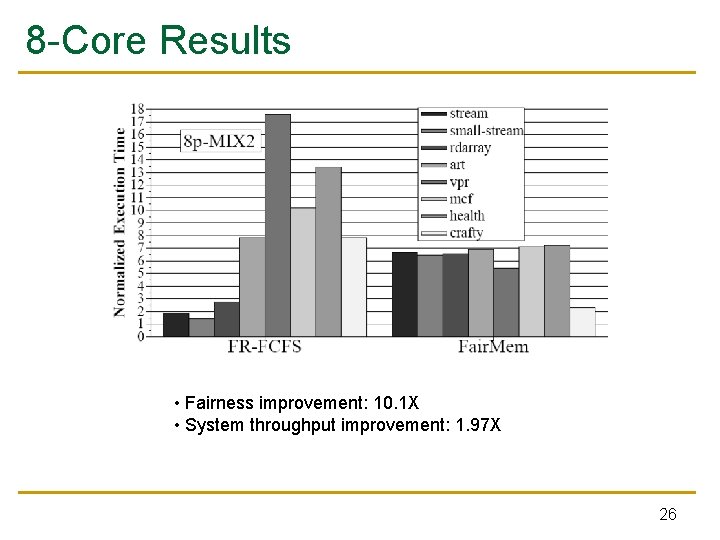

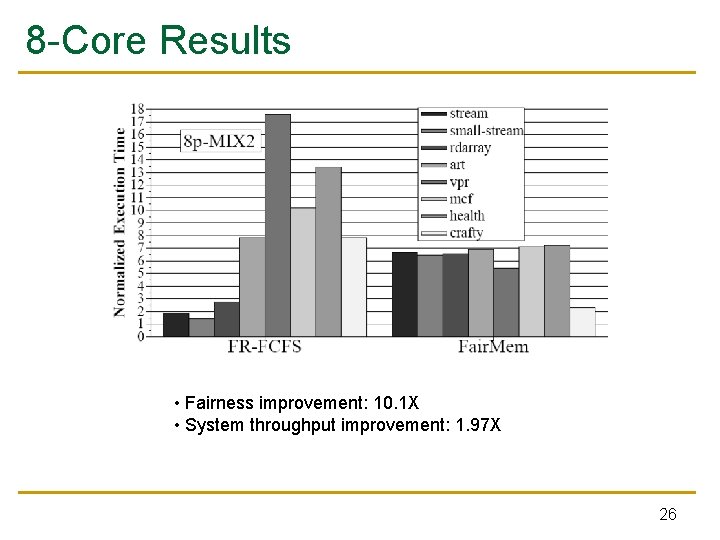

8-Core Results

- Fairness improvement: 10.1X
- System throughput improvement: 1.97X

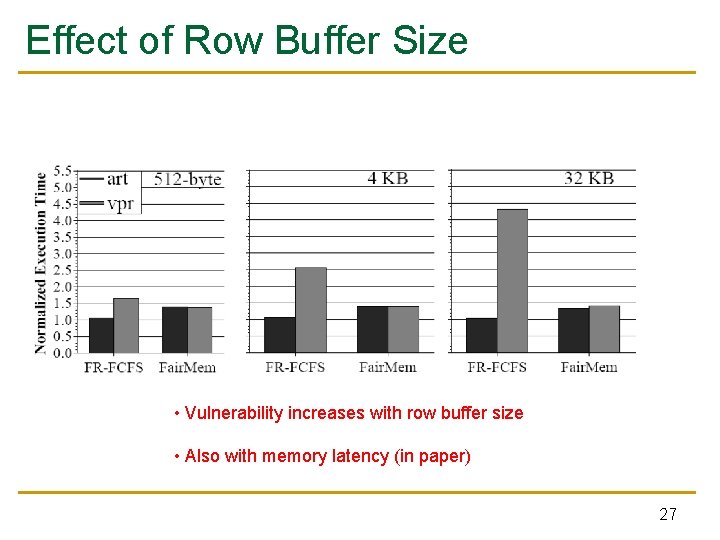

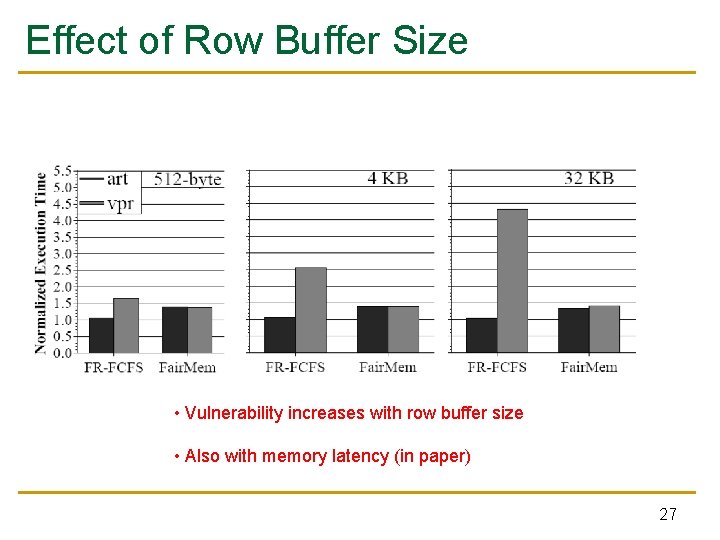

Effect of Row Buffer Size

- Vulnerability increases with row buffer size
- Also with memory latency (in paper)

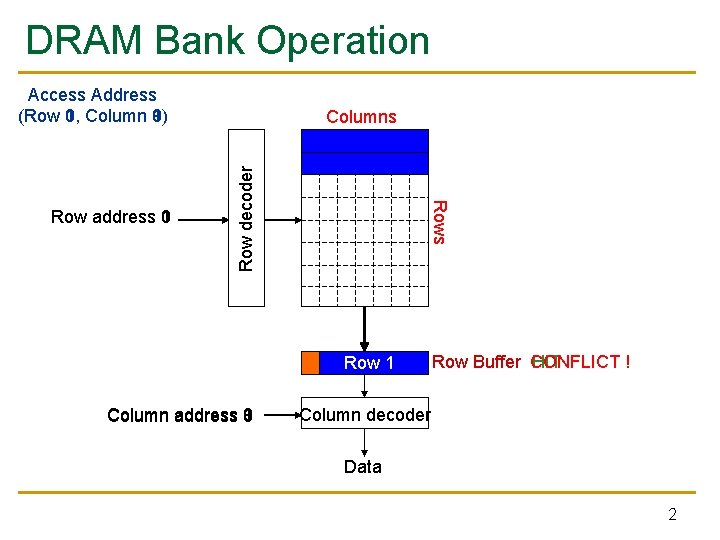

Conclusions

- Introduced the notion of memory performance attacks in shared DRAM memory systems
- Unfair DRAM scheduling is the cause of the vulnerability
- More severe problem in future many-core systems
- We provide a novel definition of DRAM fairness
  - Threads should experience equal slowdowns
- New DRAM scheduling algorithm enforces this definition
  - Effectively prevents memory performance attacks
- Preventing attacks also improves system throughput!

Questions?