MULTICLOUD AS THE NEXT GENERATION CLOUD INFRASTRUCTURE

- Slides: 43

MULTICLOUD AS THE NEXT GENERATION CLOUD INFRASTRUCTURE Deepti Chandra, Jacopo Pianigiani

Agenda

- The “Application-aware” Cloud Principle
- Problem Statement in Multicloud Deployment
- SDN in the Multicloud
- Building Blocks
- Building the Private Cloud – DC Fabric
- Building the Private Cloud – DC Interconnect (DCI)
- Building the Private Cloud – WAN Integration
- Building the Private Cloud – Traffic Optimization

© 2018 Juniper Networks, Inc. All rights reserved.

THE APPLICATION-AWARE CLOUD PRINCIPLE

The Big Picture: Cooperative Clouds

End users (people, vehicles, appliances, devices) USE applications made of software components, RUNNING in MULTIPLE ENVIRONMENTS (containers, VMs, BMS) at MULTIPLE LOCATIONS (embedded, e.g. in a device or vehicle, or in a data center), and REQUIRING connectivity, security, and manageability & operations.

Locations in the diagram: home, CPE, remote branch office, Telco POPs, public cloud (VPCs), multi-site DC / private cloud, and data centers (IP fabric, firewall, VMs, containers, BMS).

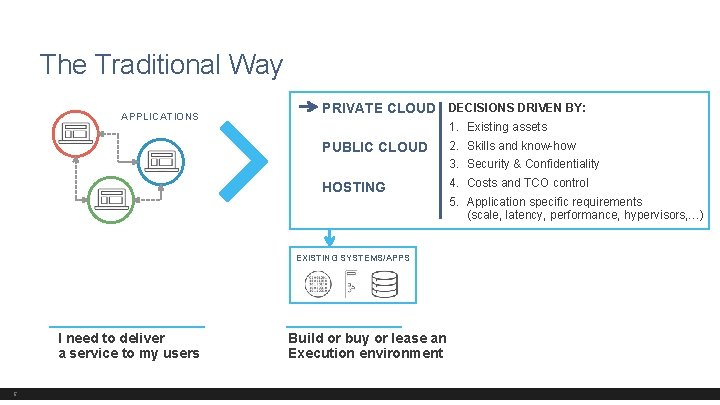

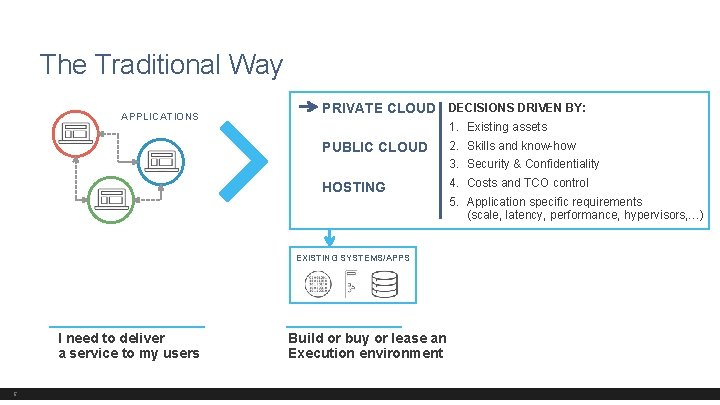

The Traditional Way

"I need to deliver a service to my users": build, buy, or lease an execution environment for the applications (private cloud, public cloud, hosting, or existing systems/apps).

Decisions driven by:

1. Existing assets
2. Skills and know-how
3. Security & confidentiality
4. Costs and TCO control
5. Application-specific requirements (scale, latency, performance, hypervisors, …)

Why Do Data Centers Need Multicloud?

Today – The New Cloud: "I need to deliver a service to my users" is now answered by new applications spanning private cloud, public cloud, hosting, and existing systems/apps. Today most applications leverage cooperation between components deployed across multiple cloud infrastructures.

What has changed: execution has moved from centralized racks to distributed resources (VMs, containers, BMS, SaaS, PaaS, …), with resource pooling, replication, DR, and bursting across clouds.

Decisions driven by:

1. User experience
2. Costs and TCO control
3. Agility (time to change)
4. Security and confidentiality
5. Skills and know-how
6. Application-specific requirements (scale, latency, performance, hypervisors, …)

PROBLEM STATEMENT IN THE MULTICLOUD DEPLOYMENT

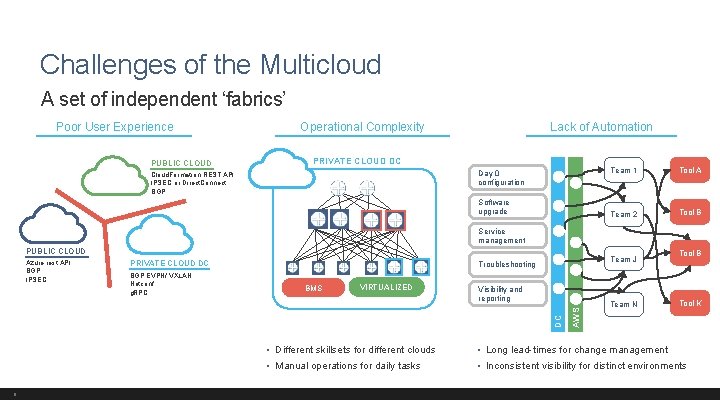

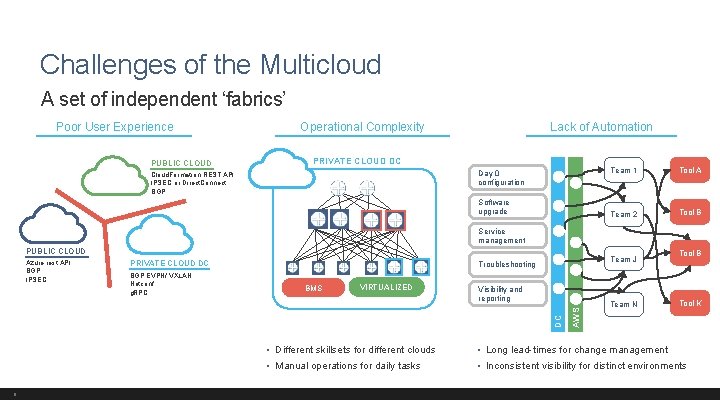

Challenges of the Multicloud

A set of independent "fabrics" leads to poor user experience, operational complexity, and lack of automation. Each environment is handled by a different team with a different tool (Team 1/Tool A, Team 2/Tool B, …, Team N/Tool K) for Day 0 configuration, software upgrade, service management, visibility and reporting, and troubleshooting:

- Public cloud, AWS: CloudFormation, REST API, IPsec or Direct Connect, BGP
- Public cloud, Azure: REST API, BGP, IPsec
- Private cloud DC (BMS, virtualized): BGP EVPN/VXLAN, NETCONF, gRPC

The result:

- Different skillsets for different clouds
- Long lead-times for change management
- Manual operations for daily tasks
- Inconsistent visibility for distinct environments

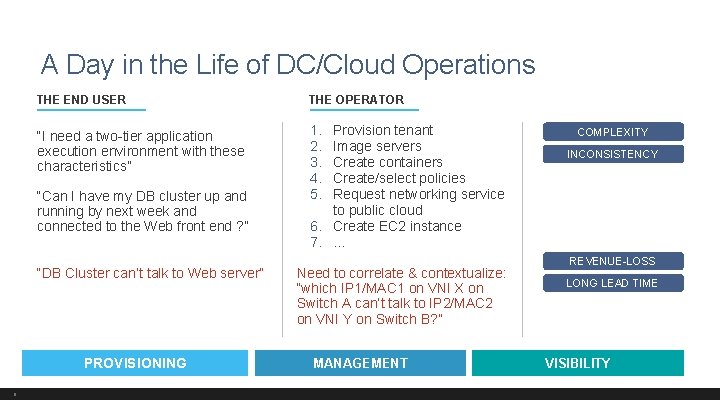

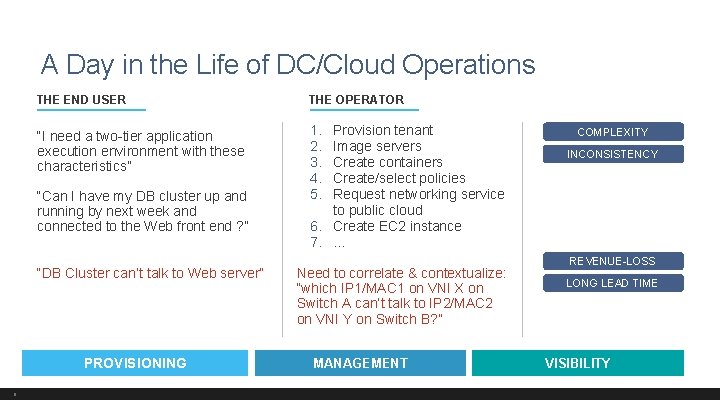

A Day in the Life of DC/Cloud Operations

The end user asks:

- "I need a two-tier application execution environment with these characteristics."
- "Can I have my DB cluster up and running by next week and connected to the Web front end?"
- Later: "The DB cluster can't talk to the Web server."

The operator handles provisioning:

1. Provision tenant
2. Image servers
3. Create containers
4. Create/select policies
5. Request networking service to public cloud
6. Create EC2 instance
7. …

When something breaks, the operator needs to correlate and contextualize: "Which IP1/MAC1 on VNI X on Switch A can't talk to IP2/MAC2 on VNI Y on Switch B?"

Outcome: management complexity, inconsistency, revenue loss, long lead times, and visibility problems.
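
The correlation question can be sketched in a few lines of Python. This is a minimal illustration, assuming a hypothetical inventory that maps each endpoint IP to its MAC, VNI, and switch; the `Endpoint` type, `INVENTORY`, `explain`, and all addresses are invented for this example. A real controller would build such a view from EVPN MAC/IP routes and device telemetry.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Endpoint:
    ip: str
    mac: str
    vni: int
    switch: str


# Hypothetical inventory, e.g. assembled by a controller from EVPN MAC/IP routes.
INVENTORY: Dict[str, Endpoint] = {
    "10.0.1.10": Endpoint("10.0.1.10", "00:00:5e:00:53:01", 5010, "switch-A"),
    "10.0.2.20": Endpoint("10.0.2.20", "00:00:5e:00:53:02", 5020, "switch-B"),
}


def explain(src_ip: str, dst_ip: str) -> str:
    """Put two endpoints in network context: where they live and what joins them."""
    src: Optional[Endpoint] = INVENTORY.get(src_ip)
    dst: Optional[Endpoint] = INVENTORY.get(dst_ip)
    if src is None or dst is None:
        missing = src_ip if src is None else dst_ip
        return f"{missing} is not learned on any switch"
    if src.vni == dst.vni:
        # Same VNI: a pure L2 path, so check VTEP reachability between the switches.
        return f"same VNI {src.vni}: check L2 path {src.switch} -> {dst.switch}"
    # Different VNIs: traffic must be routed, so an L3 gateway and policy are involved.
    return (f"VNI {src.vni} on {src.switch} -> VNI {dst.vni} on {dst.switch}: "
            f"check L3 gateway and policy")


if __name__ == "__main__":
    print(explain("10.0.1.10", "10.0.2.20"))
```

Keeping IP, MAC, VNI, and switch in one record is what lets the question be answered with one lookup instead of inspecting Switch A and Switch B separately.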

SDN IN THE MULTICLOUD

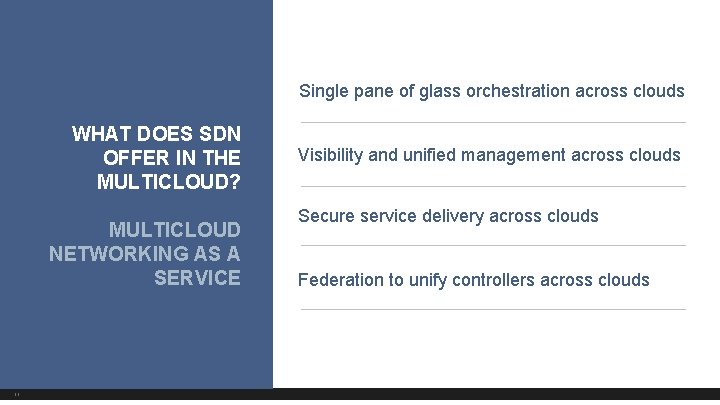

What Does SDN Offer in the Multicloud?

Multicloud networking as a service:

- Single pane of glass orchestration across clouds
- Visibility and unified management across clouds
- Secure service delivery across clouds
- Federation to unify controllers across clouds

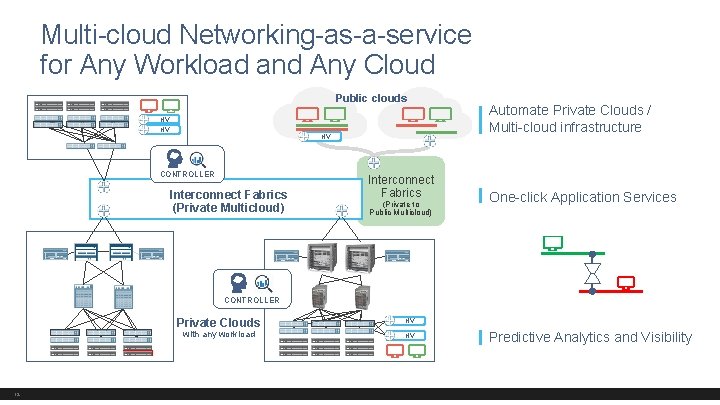

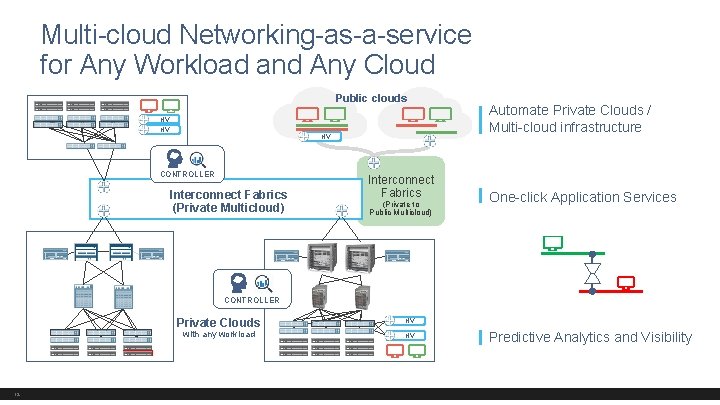

Multicloud Networking-as-a-Service for Any Workload and Any Cloud

- Automate private clouds / multicloud infrastructure
- One-click application services
- Predictive analytics and visibility
- Private clouds with any workload, with controllers managing the hypervisors (HV)
- Interconnect fabrics (private multicloud)
- Interconnect fabrics (private to public multicloud), reaching the public clouds

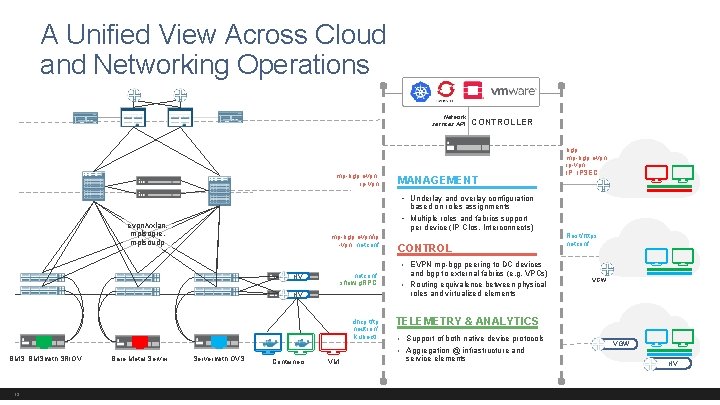

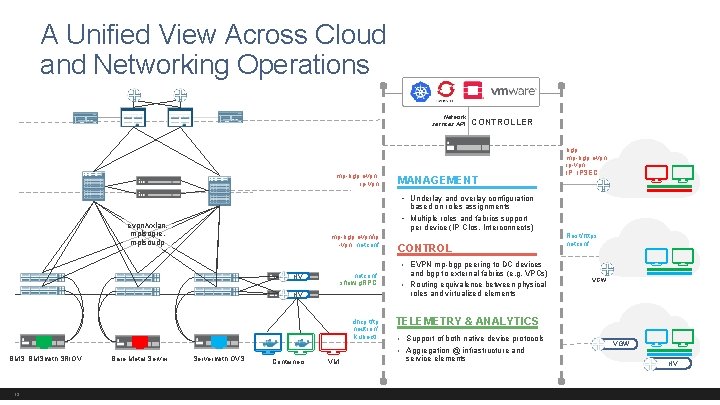

A Unified View Across Cloud and Networking Operations

The controller spans three functions:

- Management (network services API; REST/HTTPS, NETCONF)
  - Underlay and overlay configuration based on role assignments
  - Multiple roles and fabrics supported per device (IP Clos, interconnects)
- Control (BGP, MP-BGP EVPN, IP-VPN; EVPN/VXLAN, MPLSoGRE, MPLSoUDP; IP, IPsec)
  - EVPN MP-BGP peering to DC devices, and BGP to external fabrics (e.g. VPCs)
  - Routing equivalence between physical roles and virtualized elements
- Telemetry & analytics
  - Support of both native device protocols (sFlow, gRPC)
  - Aggregation @ infrastructure and service elements

Endpoints in the diagram: hypervisors (HV) hosting VMs and containers (via neutron/kubectl), bare metal servers (BMS, BMS with SR-IOV, bare metal server with OVS; provisioned with DHCP/TFTP), and VGWs.

BUILDING BLOCKS

Data Center Requirements

| Design Requirement | Technology Attribute |
|---|---|
| Rising east-west (EW) traffic | Easy scale-out |
| Resiliency and low latency | Non-blocking, fast fail-over |
| Agility and speed | Any service anywhere |
| Open architecture | No vendor lock-in |
| Design simplicity | No steep learning curve |
| Architectural flexibility | EW, NS & DCI |

Common Building Blocks for Data Centers

- Data center fabric: Clos fabric (IP, MPLS) of TORs and spines, topped by DC edge and core layers (the DC edge can be collapsed into the spine or core)
- Data center interconnect: Data Center 1 and Data Center 2 connected over the WAN or dark fiber through DCI peering routers
- WAN integration: DC edge connected to a private/public WAN or MPLS/IP backbone, with high-performance routing at the service edge and boundary, and access to the public Internet
- Hybrid cloud connectivity: on-prem DC extension into the public cloud, e.g. a colo-based interconnect between the DC edge of Data Center 1 (on-prem) and the cloud edge of Data Center 1 (public cloud)

BUILDING THE PRIVATE CLOUD – DC FABRIC

Defining Terminology…

- E: Edge (DC edge)
- F: Fabric (DC core/interconnect)
- S: Spine (DC aggregation layer)
- L: Leaf (DC access layer)

Spines and leaves form PODs (POD-1 … POD-N), which the fabric layer interconnects. The edge and fabric layers are optional and can be collapsed into one layer.

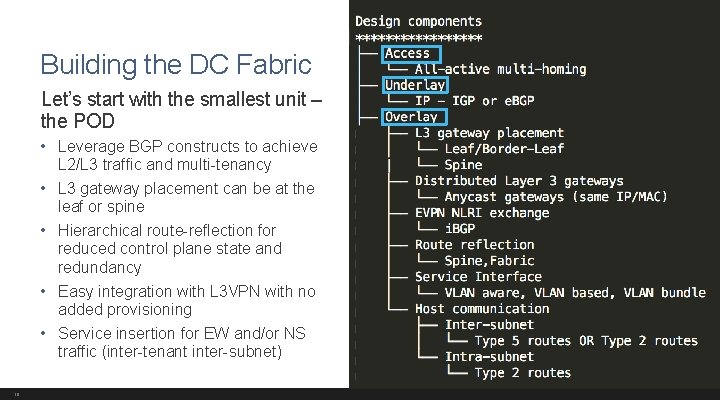

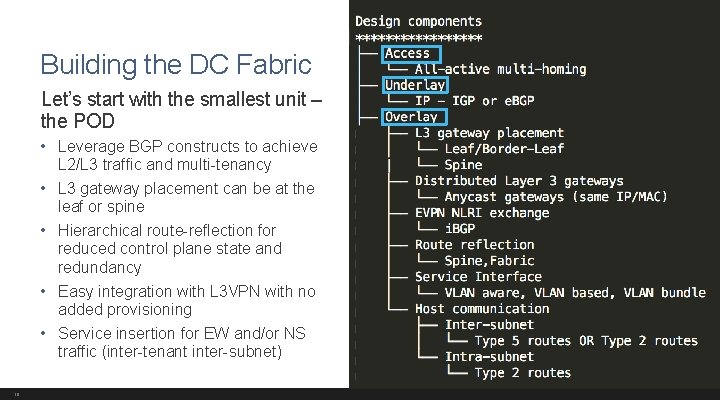

Building the DC Fabric

Let’s start with the smallest unit – the POD:

- Leverage BGP constructs to achieve L2/L3 traffic and multi-tenancy
- L3 gateway placement can be at the leaf or spine
- Hierarchical route reflection for reduced control plane state and redundancy
- Easy integration with L3VPN with no added provisioning
- Service insertion for EW and/or NS traffic (inter-tenant, inter-subnet)
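
To see why route reflection reduces control-plane state, compare iBGP session counts. A minimal sketch, assuming a single POD whose leaves are route-reflector clients of every spine and whose spines are fully meshed with each other; the POD size is hypothetical. A hierarchical design repeats the same pattern one level up (spine reflectors as clients of super-spine reflectors).

```python
def full_mesh_sessions(speakers: int) -> int:
    """iBGP sessions if every speaker peers with every other: n(n-1)/2."""
    return speakers * (speakers - 1) // 2


def route_reflector_sessions(clients: int, reflectors: int) -> int:
    """Each client peers only with each reflector; reflectors form a full mesh."""
    return clients * reflectors + full_mesh_sessions(reflectors)


if __name__ == "__main__":
    leaves, spines = 32, 2  # hypothetical POD size
    print("full mesh among leaves:", full_mesh_sessions(leaves))
    print("spines as route reflectors:", route_reflector_sessions(leaves, spines))
```

For 32 leaves and 2 spines this gives 496 sessions for a leaf full mesh versus 65 with the spines acting as route reflectors, and the gap widens quadratically as leaves are added.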

Architectural Flexibility: Containerization's Influence on Network Infrastructure

Problem statement: communication needs to be enabled between 2000 containers (c1 … c2000) residing on servers spread across racks attached to an IP fabric. The connection between servers and TORs can be Layer 2 or Layer 3.

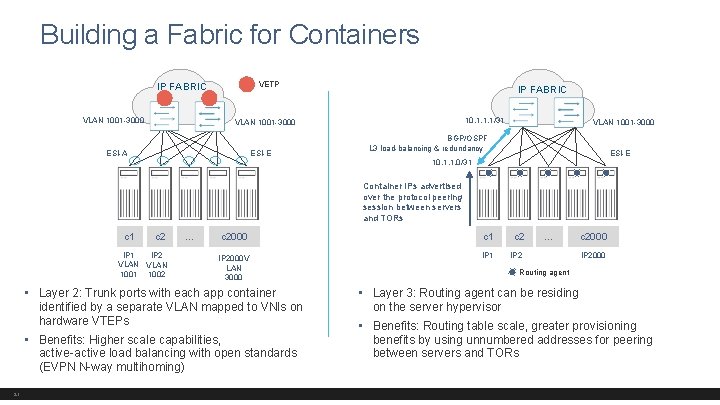

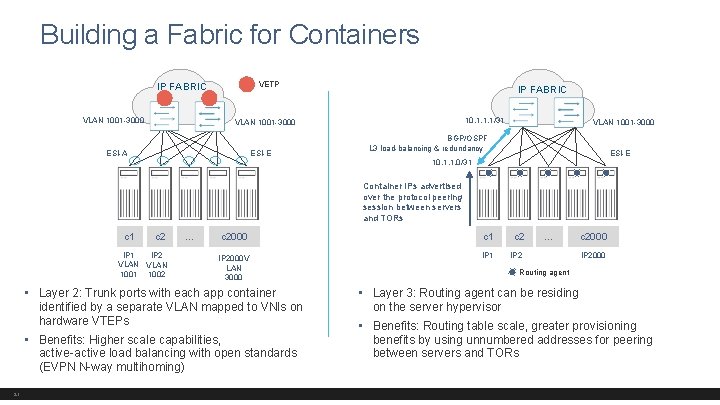

Building a Fabric for Containers

Two options, shown side by side:

- Layer 2: trunk ports (VLAN 1001-3000) with each app container identified by a separate VLAN, mapped to VNIs on hardware VTEPs. Servers are multihomed to the TORs with EVPN Ethernet segments (ESI).
  - Benefits: higher scale capabilities, active-active load balancing with open standards (EVPN N-way multihoming)
- Layer 3: a routing agent residing on the server hypervisor peers with the TORs over BGP/OSPF (the diagram shows 10.1.1.0/31 and 10.1.1.1/31 on either end of a link), and container IPs are advertised over that peering session, giving L3 load balancing and redundancy.
  - Benefits: routing table scale, greater provisioning benefits by using unnumbered addresses for peering between servers and TORs
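
The Layer 2 option relies on a one-to-one VLAN-to-VNI mapping, and the Layer 3 option on a point-to-point peering link. A minimal sketch of both, assuming a hypothetical mapping VNI = VLAN + 10000 (any collision-free 1:1 scheme would do) and reading the 10.1.1.0/31 and 10.1.1.1/31 addresses on the slide as the two ends of one server-TOR link:

```python
import ipaddress

# From the slide: containers c1..c2000 on VLANs 1001-3000 (range end is exclusive).
VLAN_RANGE = range(1001, 3001)
# Hypothetical 1:1 VLAN-to-VNI scheme; real deployments choose their own mapping.
VNI_OFFSET = 10000
MAX_VNI = 2**24 - 1  # the VNI is a 24-bit field


def vlan_to_vni(vlan: int) -> int:
    if vlan not in VLAN_RANGE:
        raise ValueError(f"VLAN {vlan} is not trunked to the server")
    vni = vlan + VNI_OFFSET
    if vni > MAX_VNI:
        raise ValueError(f"VNI {vni} does not fit in 24 bits")
    return vni


# One VLAN per container: c1 -> 1001, ..., c2000 -> 3000.
container_vlan = {f"c{i}": vlan for i, vlan in enumerate(VLAN_RANGE, start=1)}

# Layer 3 option: a /31 point-to-point link (RFC 3021) holds exactly two
# addresses, one for each end of the server-TOR peering session.
link = ipaddress.ip_network("10.1.1.0/31")
end_a, end_b = list(link)

if __name__ == "__main__":
    print(len(container_vlan), container_vlan["c2000"], vlan_to_vni(1001))
    print(end_a, end_b)
```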

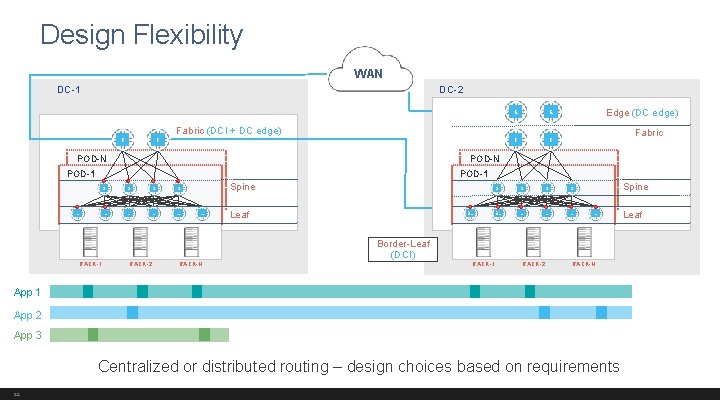

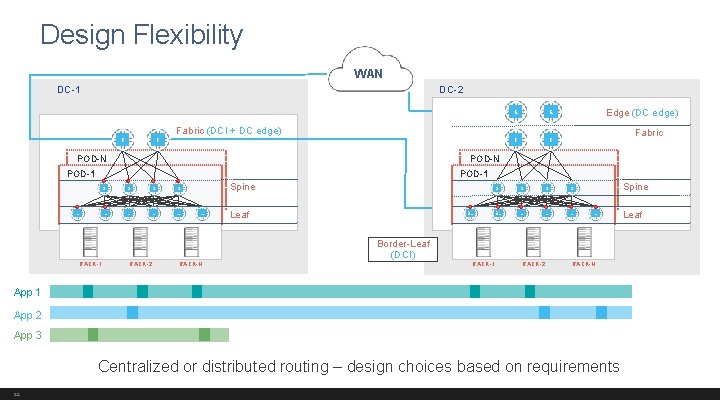

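Design Flexibility

Centralized or distributed routing – design choices based on requirements. The diagram shows DC-1 and DC-2 connected across the WAN:

- One DC uses a fabric layer (F) that combines DCI and DC edge above its PODs (POD-1 … POD-N) of spines (S) and leaves (L).
- The other uses a separate edge layer (E, DC edge) above the fabric, with border leaves (BL) providing DCI.

Racks (RACK-1 … RACK-N) host the applications (App 1, App 2, App 3).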

BUILDING THE PRIVATE CLOUD – DC INTERCONNECT (DCI)

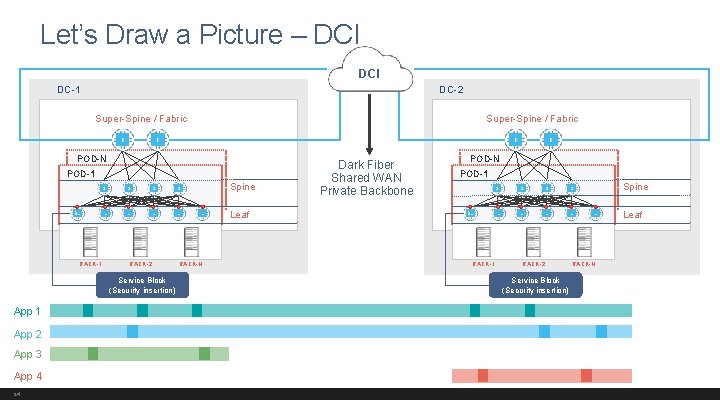

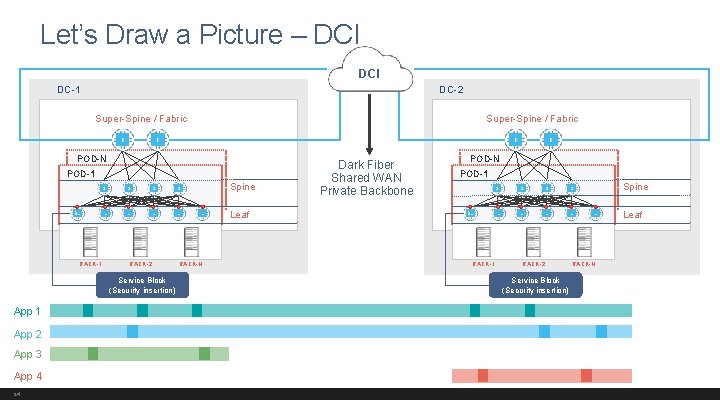

Let’s Draw a Picture – DCI

DC-1 and DC-2 each consist of a super-spine/fabric layer (F) above PODs (POD-1 … POD-N) of spines (S) and leaves (L), with racks (RACK-1 … RACK-N) hosting App 1 … App 4, a border leaf (BL), and a service block for security insertion. The DCs are interconnected over dark fiber, a shared WAN, or a private backbone.

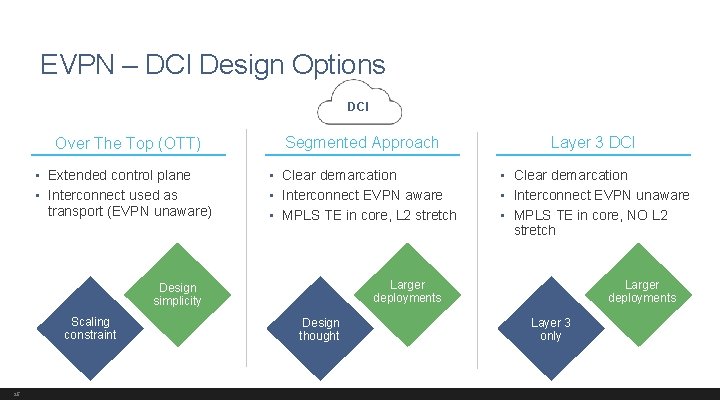

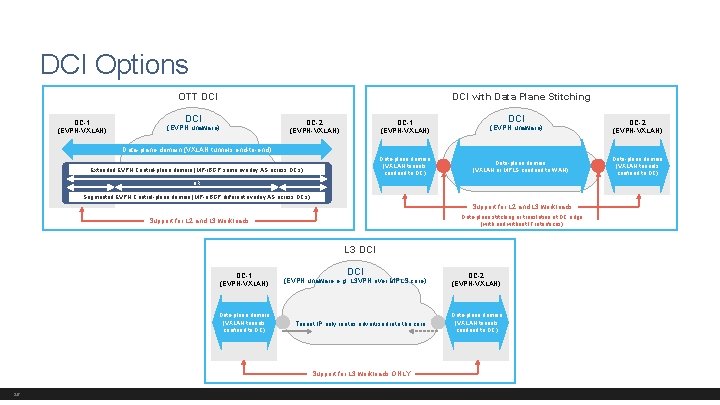

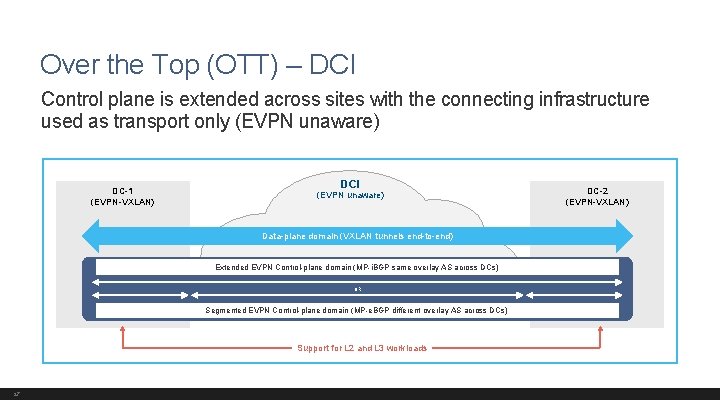

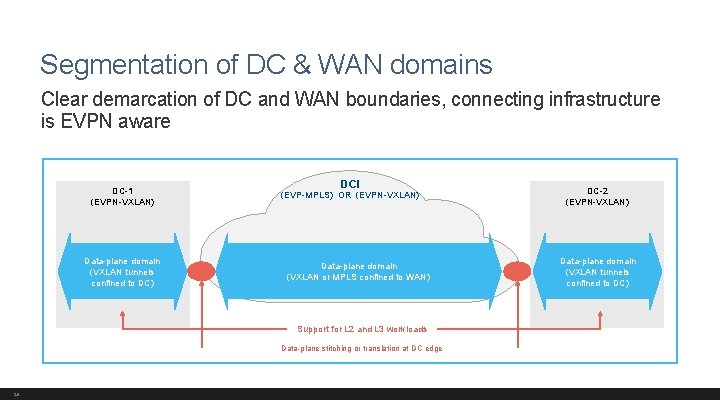

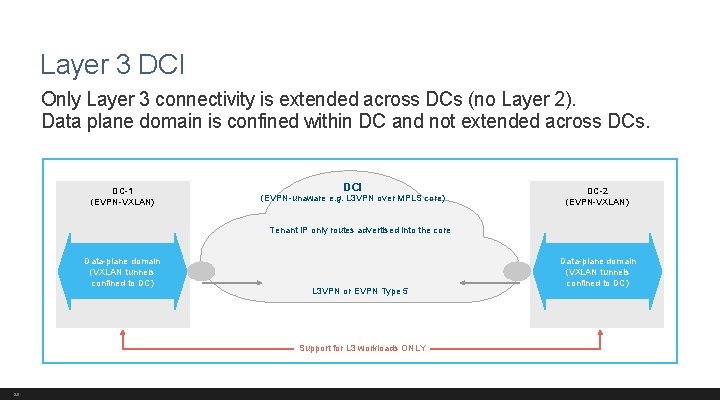

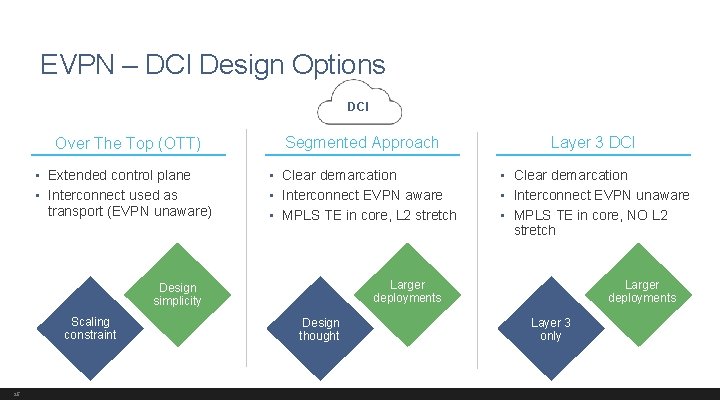

EVPN – DCI Design Options

- DCI Over The Top (OTT): extended control plane; interconnect used as transport only (EVPN unaware)
- Segmented approach: clear demarcation; interconnect is EVPN aware; MPLS TE in core, L2 stretch
- Layer 3 DCI: clear demarcation; interconnect is EVPN unaware; MPLS TE in core, NO L2 stretch

Design thoughts listed on the slide: design simplicity, scaling constraint, larger deployments, Layer 3 only.
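
The three options can be read as a decision on two questions: is a Layer 2 stretch needed, and is the interconnect EVPN aware? A simplified sketch of that reading follows; `choose_dci` is a hypothetical helper, and it ignores the scaling and deployment-size considerations the slide also lists.

```python
from enum import Enum


class DciOption(Enum):
    OTT = "over the top: EVPN-unaware transport, VXLAN end to end"
    SEGMENTED = "segmented: EVPN-aware interconnect, stitching at the DC edge"
    LAYER3 = "Layer 3 DCI: tenant IP routes only"


def choose_dci(need_l2_stretch: bool, interconnect_evpn_aware: bool) -> DciOption:
    """Simplified reading of the design-options slide (hypothetical helper)."""
    if not need_l2_stretch:
        return DciOption.LAYER3      # no L2 stretch: keep VXLAN confined to each DC
    if interconnect_evpn_aware:
        return DciOption.SEGMENTED   # clear DC/WAN demarcation with L2 stretch
    return DciOption.OTT             # interconnect only transports the VXLAN tunnels


if __name__ == "__main__":
    for l2 in (False, True):
        for aware in (False, True):
            print(f"L2 stretch={l2}, EVPN-aware={aware}: {choose_dci(l2, aware).name}")
```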

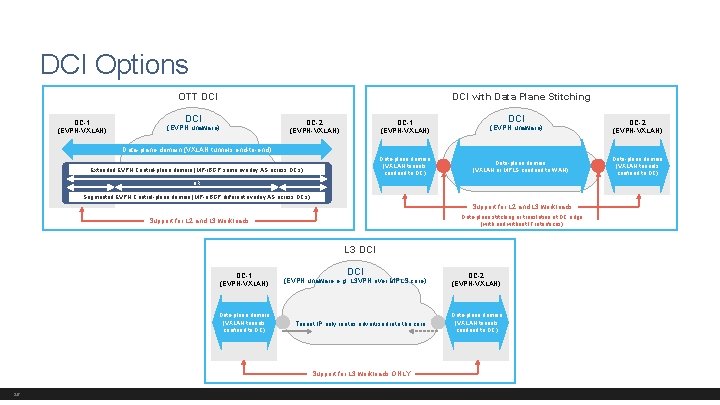

DCI Options

- OTT DCI: DC-1 (EVPN-VXLAN) – DCI (EVPN unaware) – DC-2 (EVPN-VXLAN). Data-plane domain: VXLAN tunnels end to end. Control plane: an extended EVPN domain (MP-iBGP, same overlay AS across DCs) OR a segmented EVPN domain (MP-eBGP, different overlay AS across DCs). Supports L2 and L3 workloads.
- DCI with data-plane stitching: VXLAN tunnels confined to each DC, and a separate data-plane domain (VXLAN or MPLS) confined to the WAN, with data-plane stitching or translation at the DC edge (with and without IT interfaces). Supports L2 and L3 workloads.
- L3 DCI: DC-1 (EVPN-VXLAN) – DCI (EVPN unaware, e.g. L3VPN over MPLS core) – DC-2 (EVPN-VXLAN). VXLAN tunnels confined to each DC; only tenant IP routes are advertised into the core. Supports L3 workloads ONLY.

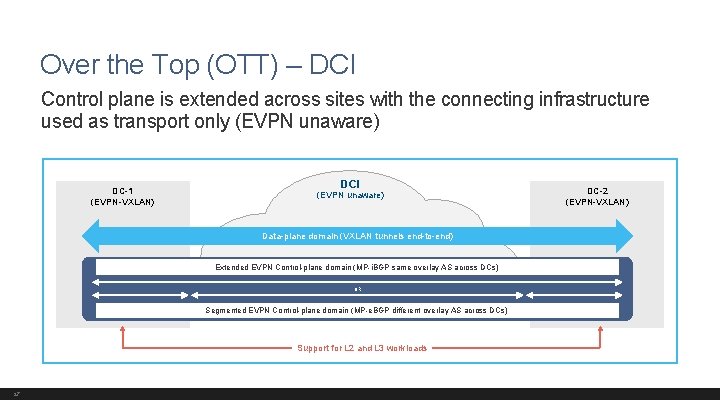

Over the Top (OTT) – DCI

The control plane is extended across sites, with the connecting infrastructure used as transport only (EVPN unaware).

- DC-1 (EVPN-VXLAN) – DCI (EVPN unaware) – DC-2 (EVPN-VXLAN)
- Data-plane domain: VXLAN tunnels end to end
- Control plane: extended EVPN domain (MP-iBGP, same overlay AS across DCs) OR segmented EVPN domain (MP-eBGP, different overlay AS across DCs)
- Supports L2 and L3 workloads

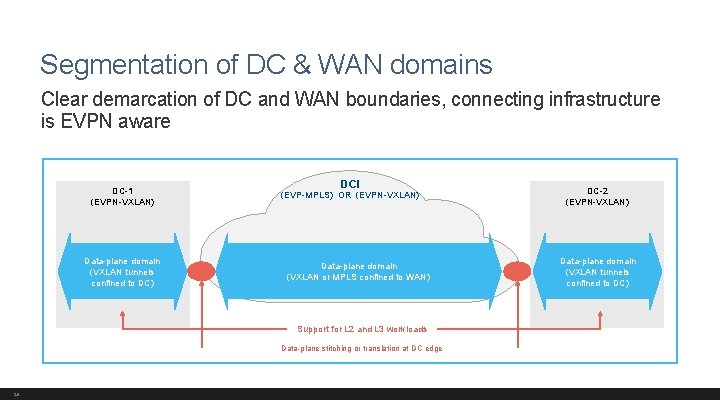

Segmentation of DC & WAN Domains

Clear demarcation of DC and WAN boundaries; the connecting infrastructure is EVPN aware.

- DC-1 (EVPN-VXLAN) – DCI (EVPN-MPLS or EVPN-VXLAN) – DC-2 (EVPN-VXLAN)
- Data-plane domains: VXLAN tunnels confined to each DC; VXLAN or MPLS confined to the WAN
- Data-plane stitching or translation at the DC edge
- Supports L2 and L3 workloads

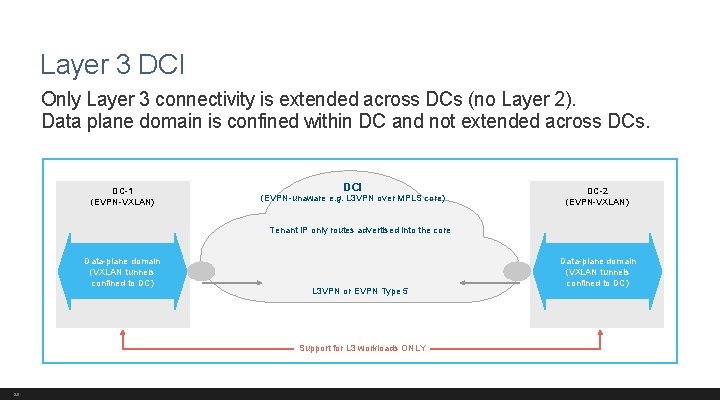

Layer 3 DCI

Only Layer 3 connectivity is extended across DCs (no Layer 2). The data-plane domain is confined within each DC and not extended across DCs.

- DC-1 (EVPN-VXLAN) – DCI (EVPN unaware, e.g. L3VPN over MPLS core) – DC-2 (EVPN-VXLAN)
- Only tenant IP routes are advertised into the core, using L3VPN or EVPN Type 5
- Data-plane domain: VXLAN tunnels confined to each DC
- Supports L3 workloads ONLY

BUILDING THE PRIVATE CLOUD – WAN INTEGRATION

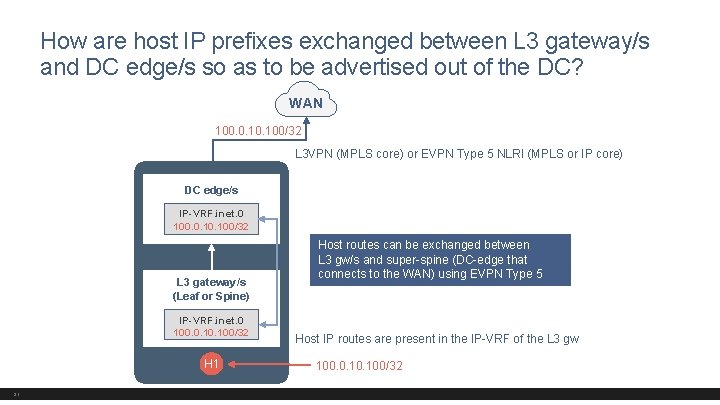

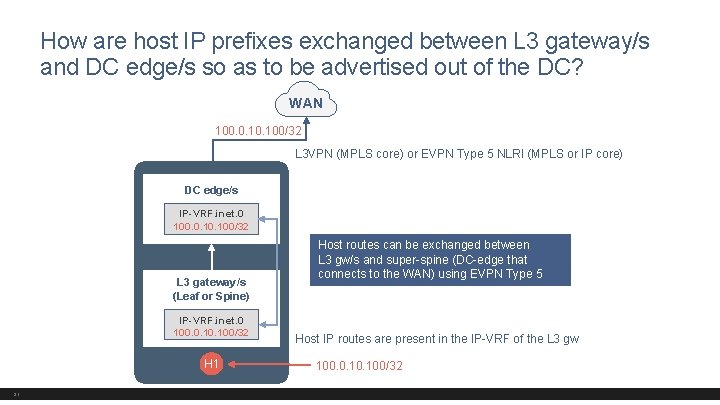

How are host IP prefixes exchanged between the L3 gateway(s) and the DC edge(s) so that they can be advertised out of the DC?

- Host IP routes (e.g. H1, 100.0.10.100/32) are present in the IP-VRF (IP-VRF.inet.0) of the L3 gateway(s), on the leaf or spine.
- Host routes can be exchanged between the L3 gateway(s) and the super-spine (the DC edge that connects to the WAN) using EVPN Type 5.
- The DC edge(s) hold them in their own IP-VRF.inet.0 and advertise them to the WAN as L3VPN routes (MPLS core) or EVPN Type 5 NLRI (MPLS or IP core).

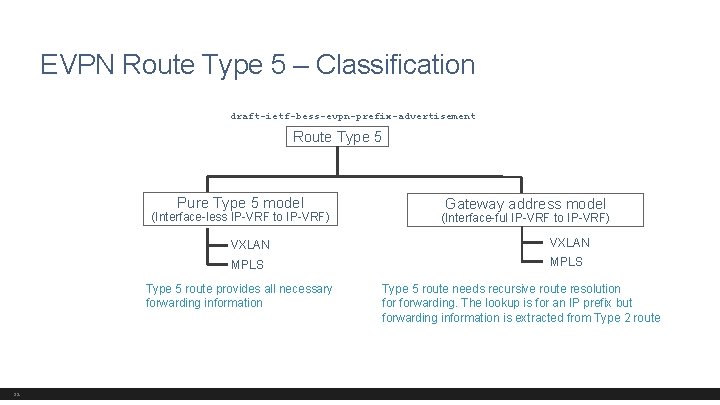

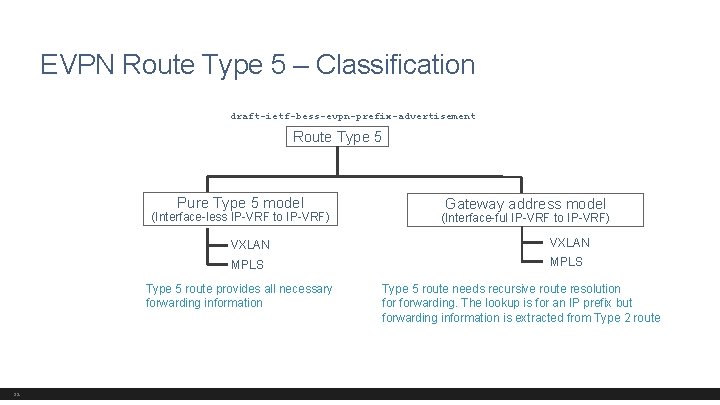

EVPN Route Type 5 – Classification (draft-ietf-bess-evpn-prefix-advertisement)

- Pure Type 5 model (interface-less IP-VRF to IP-VRF), over VXLAN or MPLS: the Type 5 route provides all necessary forwarding information.
- Gateway address model (interface-ful IP-VRF to IP-VRF): the Type 5 route needs recursive route resolution for forwarding. The lookup is for an IP prefix, but the forwarding information is extracted from a Type 2 route.
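
The difference between the two models is whether forwarding can be derived from the Type 5 route alone. A minimal sketch, assuming a zero gateway IP marks the pure (interface-less) model and a non-zero gateway IP is resolved recursively through a Type 2 route, as described above; the class names, `resolve`, and every address and VNI in the usage are hypothetical.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address, IPv4Network, ip_address, ip_network
from typing import Dict, NamedTuple, Optional

ZERO_GW = IPv4Address("0.0.0.0")


class Forwarding(NamedTuple):
    vtep: IPv4Address  # remote tunnel endpoint
    vni: int           # VNI to put in the VXLAN header


@dataclass(frozen=True)
class Type2Route:  # MAC/IP advertisement
    ip: IPv4Address
    mac: str
    vtep: IPv4Address
    vni: int


@dataclass(frozen=True)
class Type5Route:  # IP prefix advertisement
    prefix: IPv4Network
    bgp_next_hop: IPv4Address
    vni: int
    gw_ip: IPv4Address = ZERO_GW  # 0.0.0.0 => pure (interface-less) model


def resolve(route: Type5Route,
            type2_by_ip: Dict[IPv4Address, Type2Route]) -> Optional[Forwarding]:
    if route.gw_ip == ZERO_GW:
        # Pure model: the Type 5 route itself carries everything needed.
        return Forwarding(route.bgp_next_hop, route.vni)
    # Gateway address model: recurse on the gateway IP via a Type 2 route.
    overlay = type2_by_ip.get(route.gw_ip)
    if overlay is None:
        return None  # unresolvable: the prefix should not be installed
    return Forwarding(overlay.vtep, overlay.vni)


if __name__ == "__main__":
    gw_ip = ip_address("192.0.2.1")
    table2 = {gw_ip: Type2Route(gw_ip, "00:00:5e:00:53:10", ip_address("10.1.1.40"), 5010)}
    pure = Type5Route(ip_network("100.0.30.0/24"), ip_address("10.1.1.30"), 1020)
    via_gw = Type5Route(ip_network("101.0.30.0/24"), ip_address("10.1.1.30"), 1020, gw_ip)
    print(resolve(pure, table2))    # next hop and VNI straight from the Type 5 route
    print(resolve(via_gw, table2))  # next hop and VNI taken from the Type 2 route
```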

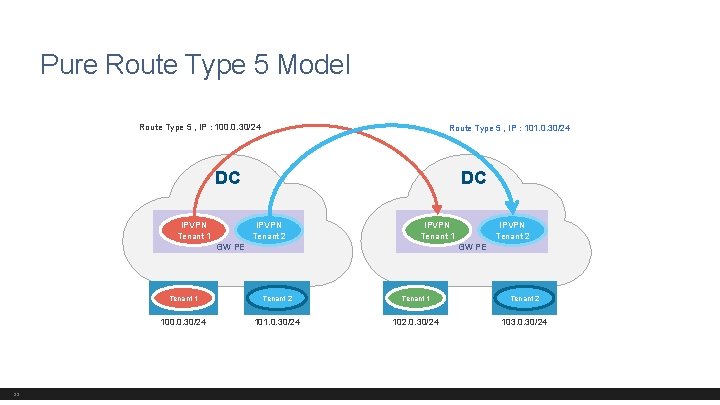

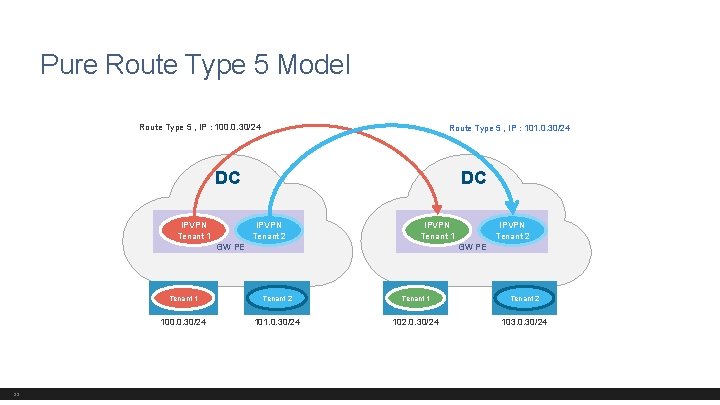

Pure Route Type 5 Model

Route Type 5 advertisements carry tenant IP prefixes (e.g. 100.0.30.0/24 and 101.0.30.0/24) between the DC and the per-tenant IPVPNs (DC IPVPN Tenant 1, DC IPVPN Tenant 2) through the IPVPN Tenant 1 and Tenant 2 GW PEs. Tenant prefixes in the diagram: 100.0.30.0/24, 101.0.30.0/24, 102.0.30.0/24, 103.0.30.0/24.

Packet Walk – Pure Route Type 5

H1 (VRF_TENANT_1, VLAN 10; IP1 = 100.0.30.100, MAC1 = 00:1e:63:c8:7c:34) sends traffic to H4 (VRF_TENANT_1, VLAN 20; IP4 = 102.0.30.100, MAC4 = 00:00:93:3c:f4):

1. H1 to the ingress VTEPs (LEAF-1, LEAF-2): S-MAC MAC1, D-MAC VRRP MAC, S-IP IP1, D-IP IP4.
2. The ingress VTEPs route the packet from the MAC-VRF (VNI 5010, irb.5010) into the IP-VRF and encapsulate it in VXLAN toward the egress VTEPs: outer S-IP LEAF-1/LEAF-2, D-IP LEAF-3/LEAF-4, VNI 1020; inner S-MAC router MAC (LEAF-1, LEAF-2), D-MAC router MAC (LEAF-3, LEAF-4), S-IP IP1, D-IP IP4.
3. The egress VTEPs (LEAF-3, LEAF-4) decapsulate, look up IP4 in the IP-VRF, and route the packet into the destination MAC-VRF through irb.5020.
4. Egress VTEPs to H4: S-MAC IRB MAC, D-MAC MAC4, S-IP IP1, D-IP IP4.
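
In step 2 the ingress VTEPs wrap the routed frame in an outer IP/UDP/VXLAN header carrying VNI 1020. A sketch of just the 8-byte VXLAN header defined in RFC 7348 (flags, reserved bits, 24-bit VNI), with the outer Ethernet, IP, and UDP headers left out:

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned destination port (RFC 7348)
FLAG_I = 0x08          # "I" flag: the VNI field is valid


def vxlan_header(vni: int) -> bytes:
    """8-byte header: flags(8) | reserved(24) | VNI(24) | reserved(8)."""
    if not 0 <= vni < 1 << 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!B3xI", FLAG_I, vni << 8)


def parse_vni(header: bytes) -> int:
    flags, word = struct.unpack("!B3xI", header[:8])
    if not flags & FLAG_I:
        raise ValueError("I flag not set")
    return word >> 8


if __name__ == "__main__":
    hdr = vxlan_header(1020)
    print(hdr.hex(), parse_vni(hdr))  # 080000000003fc00 1020
```

The egress VTEPs in step 3 perform the reverse: they read the VNI to pick the IP-VRF for the lookup on IP4.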

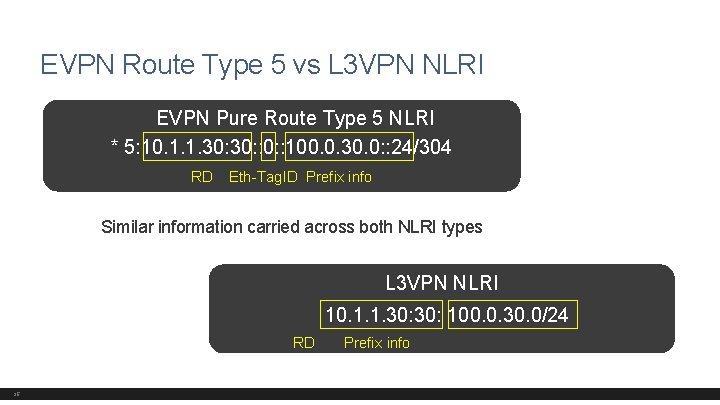

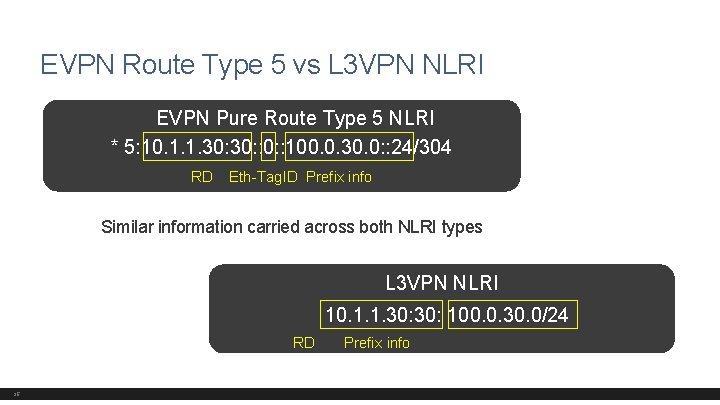

EVPN Route Type 5 vs L3VPN NLRI

Similar information is carried in both NLRI types:

- EVPN pure Route Type 5 NLRI: 5:10.1.1.30::100.0.30.0::24/304 (RD, Ethernet Tag ID, prefix info)
- L3VPN NLRI: 10.1.1.30:100.0.30.0/24 (RD, prefix info)
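
The similarity of the two NLRI types is easiest to see at the byte level. A sketch, assuming the IPv4 Route Type 5 layout of RFC 9136 (the published form of draft-ietf-bess-evpn-prefix-advertisement), the VNI carried in the label field as RFC 8365 specifies for VXLAN, and the labeled VPN-IPv4 layout of RFC 4364/RFC 8277; the RD assigned number 100 and the MPLS label 16 are hypothetical values, not taken from the slide.

```python
import ipaddress
import struct


def rd_type1(admin_ip: str, assigned: int) -> bytes:
    """8-byte Type 1 Route Distinguisher: type=1, IPv4 admin, 2-byte number (RFC 4364)."""
    return struct.pack("!H4sH", 1, ipaddress.IPv4Address(admin_ip).packed, assigned)


def evpn_type5_ipv4(rd: bytes, prefix: str, vni: int, eth_tag: int = 0,
                    gw_ip: str = "0.0.0.0") -> bytes:
    """EVPN Route Type 5 NLRI for IPv4; the 3-byte label field carries the VNI."""
    net = ipaddress.IPv4Network(prefix)
    body = (rd
            + bytes(10)                                 # ESI: all zeros
            + struct.pack("!IB", eth_tag, net.prefixlen)
            + net.network_address.packed                # IP prefix, always 4 octets
            + ipaddress.IPv4Address(gw_ip).packed       # 0.0.0.0 => pure Type 5 model
            + vni.to_bytes(3, "big"))                   # 24-bit VNI
    return struct.pack("!BB", 5, len(body)) + body      # route type 5, length in octets


def l3vpn_ipv4(rd: bytes, prefix: str, label: int) -> bytes:
    """VPN-IPv4 NLRI: length in bits, label, RD, prefix truncated to its length."""
    net = ipaddress.IPv4Network(prefix)
    label_entry = ((label << 4) | 1).to_bytes(3, "big")  # bottom-of-stack bit set
    prefix_bytes = net.network_address.packed[: (net.prefixlen + 7) // 8]
    bit_len = 24 + 64 + net.prefixlen
    return bytes([bit_len]) + label_entry + rd + prefix_bytes


if __name__ == "__main__":
    rd = rd_type1("10.1.1.30", 100)
    print(evpn_type5_ipv4(rd, "100.0.30.0/24", 1020).hex())
    print(l3vpn_ipv4(rd, "100.0.30.0/24", 16).hex())
```

Both encodings carry an RD, the prefix, and a label (or VNI); the Type 5 route adds the ESI, Ethernet Tag ID, and gateway IP fields, which is what lets it also express the gateway address model.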

Benefits with EVPN Type 5

- Unified solution end to end, with one address family inside and outside the DC
- Data plane flexibility with EVPN: use over an MPLS or IP core
- If you do not have MPLS between DCs for DCI:
  - It is not possible to run L3VPN over VXLAN
  - For the control plane, Route Type 5 is the only option
- Hybrid cloud connectivity (Type 5 with VXLAN over GRE/IPsec)

BUILDING THE PRIVATE CLOUD – TRAFFIC OPTIMIZATION

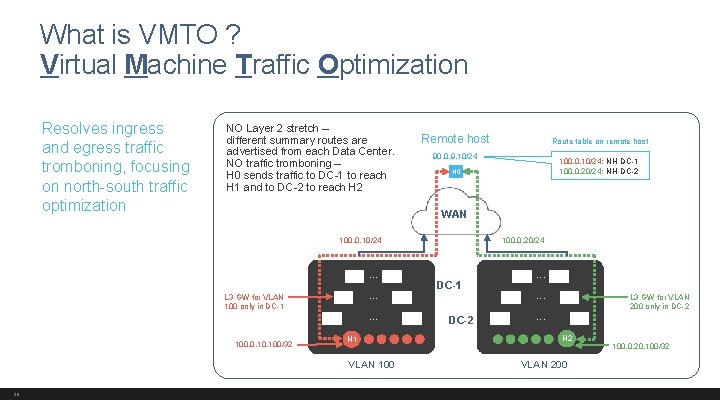

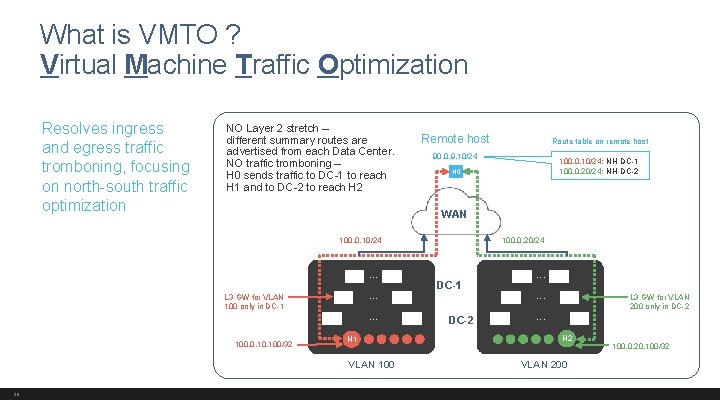

What is VMTO?

Virtual Machine Traffic Optimization (VMTO) resolves ingress and egress traffic tromboning, focusing on north-south traffic optimization.

With NO Layer 2 stretch, each data center advertises a different summary route, so there is NO traffic tromboning: remote host H0 (90.0.9.10/24) sends traffic to DC-1 to reach H1 and to DC-2 to reach H2.

- DC-1: L3 GW for VLAN 100 only; H1 (VLAN 100) is 100.0.10.100/32 within 100.0.10.0/24
- DC-2: L3 GW for VLAN 200 only; H2 (VLAN 200) is 100.0.20.100/32 within 100.0.20.0/24
- Route table on the remote host: 100.0.10.0/24 via next hop DC-1; 100.0.20.0/24 via next hop DC-2

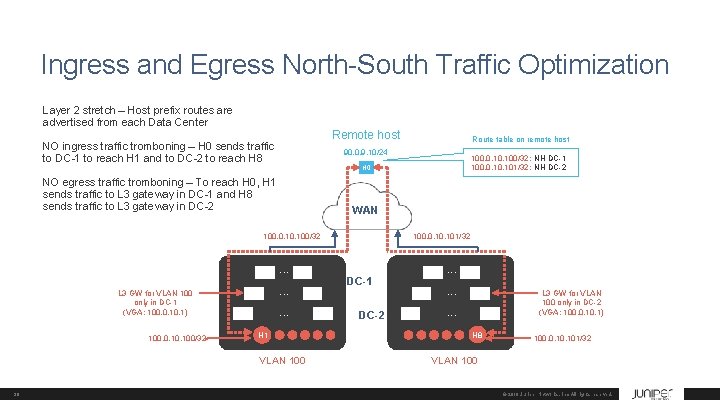

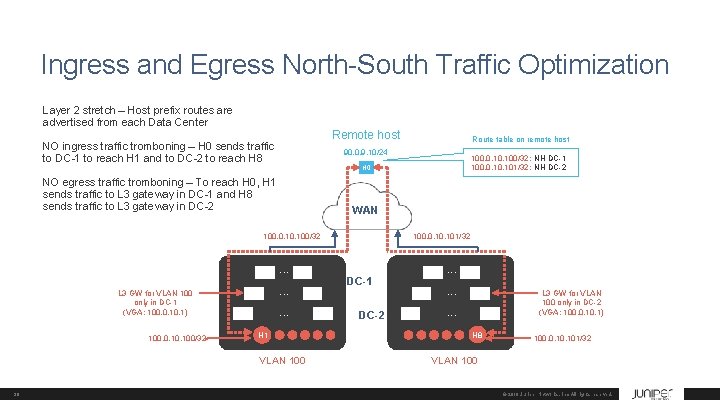

Ingress and Egress North-South Traffic Optimization

With a Layer 2 stretch (VLAN 100 in both DCs), host prefix routes are advertised from each data center. H1 (100.0.10.100/32) is in DC-1 and H8 (100.0.10.101/32) is in DC-2.

- NO ingress traffic tromboning: H0 sends traffic to DC-1 to reach H1 and to DC-2 to reach H8. Route table on the remote host (90.0.9.10/24): 100.0.10.100/32 via next hop DC-1; 100.0.10.101/32 via next hop DC-2.
- NO egress traffic tromboning: to reach H0, H1 sends traffic to the L3 gateway in DC-1 and H8 sends traffic to the L3 gateway in DC-2. Each DC has its own L3 GW for VLAN 100, using the same virtual gateway address (VGA).

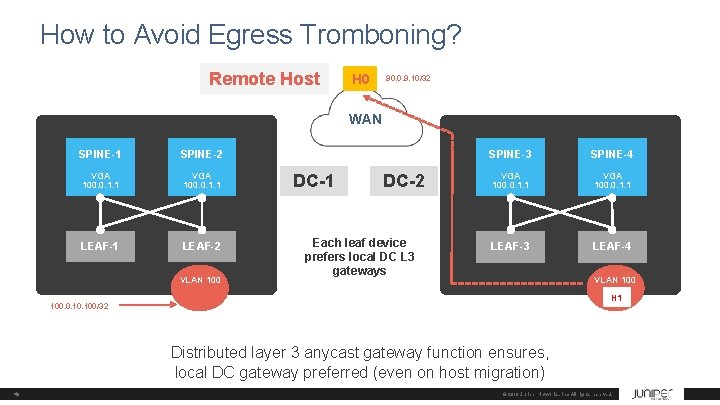

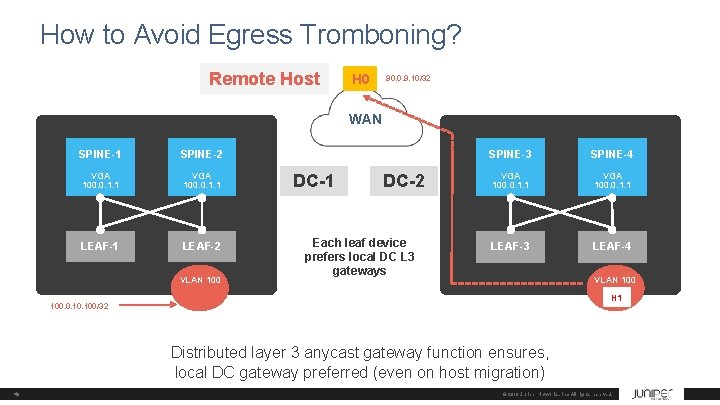

How to Avoid Egress Tromboning?

VLAN 100 is stretched across DC-1 (SPINE-1, SPINE-2, LEAF-1, LEAF-2) and DC-2 (SPINE-3, SPINE-4, LEAF-3, LEAF-4), with the same virtual gateway address (VGA 100.0.1.1) configured in both DCs. Host H1 (100.0.10.100/32) reaches remote host H0 (90.0.9.10/32) across the WAN.

Each leaf device prefers the local DC L3 gateways: the distributed Layer 3 anycast gateway function ensures that the local DC gateway is preferred, even after host migration.

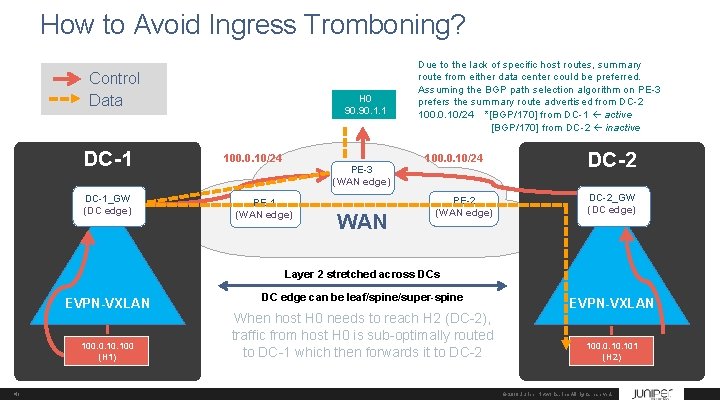

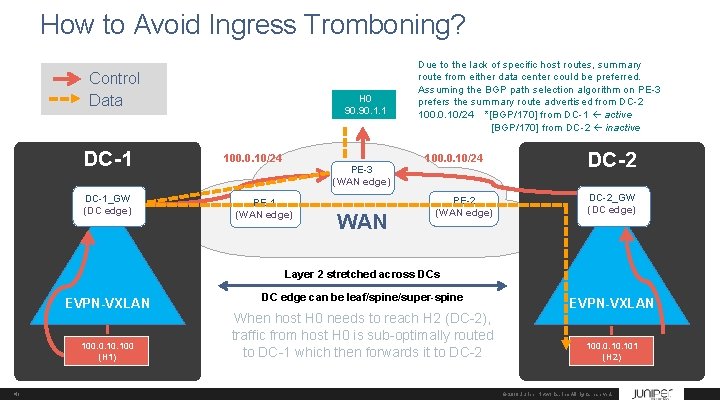

How to Avoid Ingress Tromboning?

Layer 2 is stretched across DC-1 and DC-2 with EVPN-VXLAN: H1 (100.0.10.100) is in DC-1 and H2 (100.0.10.101) is in DC-2. Each DC edge (DC-1_GW, DC-2_GW; the DC edge can be a leaf, spine, or super-spine) advertises only the summary route 100.0.10.0/24 to its WAN edge (PE-1, PE-2). Remote host H0 sits behind PE-3.

Due to the lack of specific host routes, the summary route from either data center could be preferred. Assuming the BGP path selection algorithm on PE-3 prefers the summary route advertised from DC-1 (100.0.10.0/24 *[BGP/170] from DC-1 active; [BGP/170] from DC-2 inactive), when host H0 needs to reach H2 (in DC-2), its traffic is sub-optimally routed to DC-1, which then forwards it to DC-2.

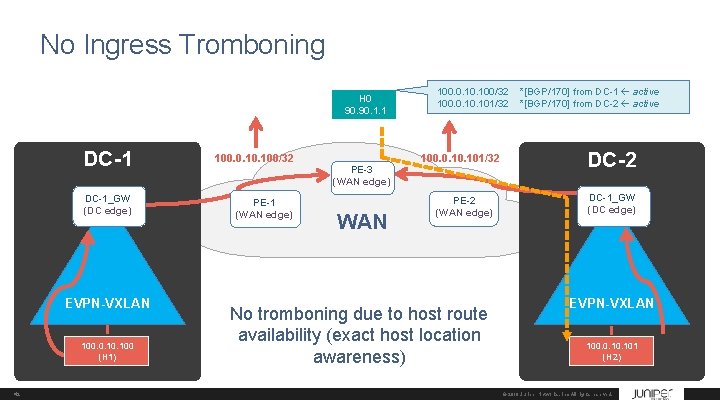

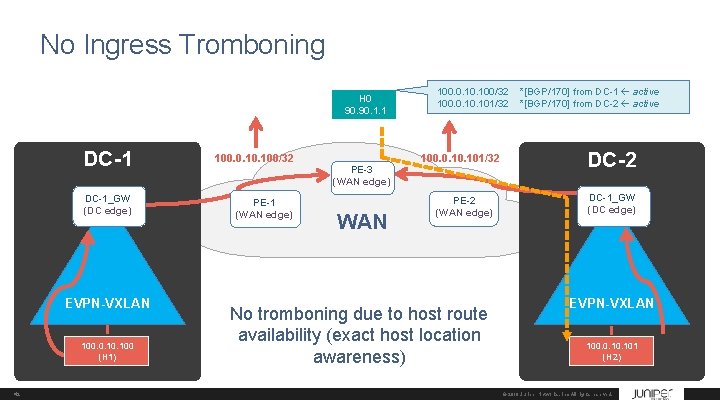

No Ingress Tromboning

With host routes there is no tromboning, thanks to exact host location awareness:

- DC-1_GW (DC edge) advertises H1's host route 100.0.10.100/32 through PE-1; DC-2_GW (DC edge) advertises H2's host route 100.0.10.101/32 through PE-2.
- On PE-3, both routes are active: 100.0.10.100/32 *[BGP/170] from DC-1, and 100.0.10.101/32 *[BGP/170] from DC-2.
- H0 therefore sends traffic for H1 directly to DC-1 and traffic for H2 directly to DC-2.
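
The effect of host routes on PE-3 comes down to longest-prefix match. A minimal sketch, assuming H1 = 100.0.10.100 in DC-1 and H2 = 100.0.10.101 in DC-2 (the host addresses used on these slides) and that PE-3 had selected the DC-1 summary as best path; `lookup` is a simple linear longest-prefix match for illustration, not how a router's FIB is built.

```python
import ipaddress
from typing import List, Optional, Tuple

Route = Tuple[str, str]  # (prefix, next-hop DC)


def lookup(rib: List[Route], dst: str) -> Optional[str]:
    """Longest-prefix match: the most specific route containing dst wins."""
    addr = ipaddress.ip_address(dst)
    best = None
    for prefix, next_hop in rib:
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, next_hop)
    return None if best is None else best[1]


# PE-3 before: only the stretched summary, best path via DC-1.
summary_only = [("100.0.10.0/24", "DC-1")]
# PE-3 after: each DC also advertises /32 host routes for its local hosts.
with_host_routes = summary_only + [("100.0.10.100/32", "DC-1"),  # H1
                                   ("100.0.10.101/32", "DC-2")]  # H2

if __name__ == "__main__":
    print(lookup(summary_only, "100.0.10.101"))      # DC-1: tromboned through DC-1
    print(lookup(with_host_routes, "100.0.10.101"))  # DC-2: delivered directly
```

The summary route stays useful as a fallback for hosts whose /32 has not been learned yet; the host routes only override it where the exact location is known.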

Thank You