MultiAgent Systems Lecture 2 Computer Science WPI Spring

- Slides: 27

Multi-Agent Systems, Lecture 2. Computer Science, WPI, Spring 2002. Adina Magda Florea, adina@wpi.edu

Models of agency and architectures

Lecture outline
- Links with other disciplines
- Subjects of study in MAS
- Cognitive agent architectures
- Reactive agent architectures
- Layered architectures
- Middleware (if time permits)

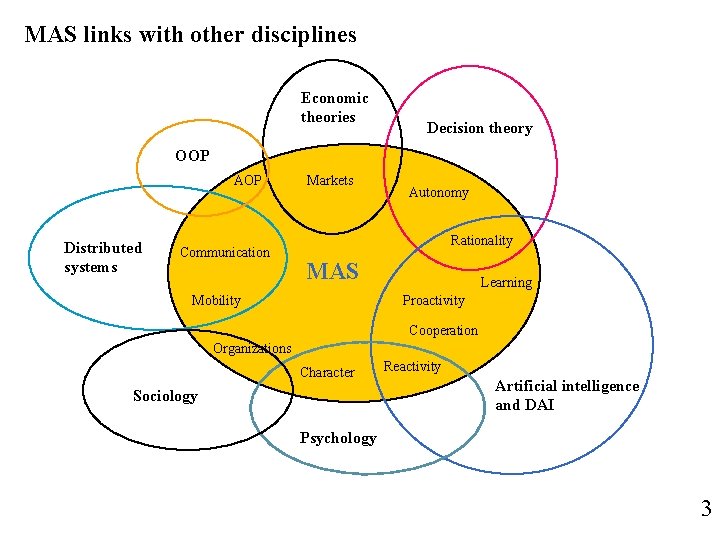

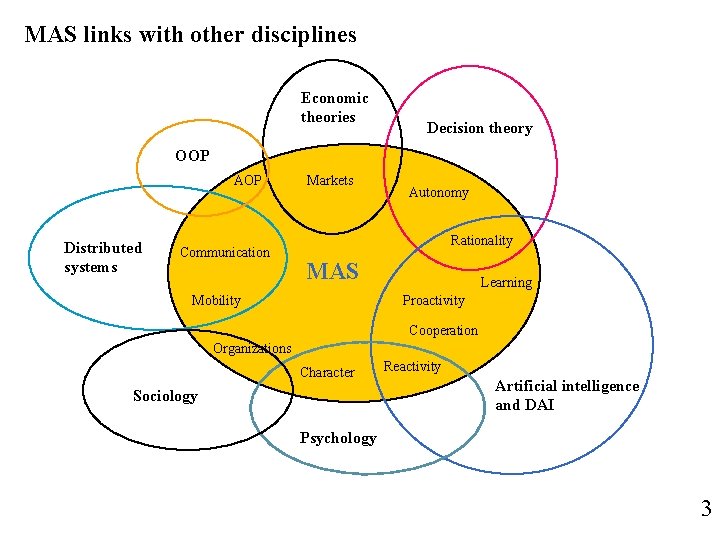

MAS links with other disciplines

- Related disciplines: economic theories, decision theory, OOP, AOP, distributed systems, sociology, psychology, artificial intelligence and DAI
- Concepts connecting them to MAS: communication, markets, autonomy, rationality, mobility, learning, proactivity, cooperation, organizations, character, reactivity

Areas of R&D in MAS

- Agent architectures
- Knowledge representation: of the world, of itself, of the other agents
- Communication: languages, protocols
- Planning: task sharing, result sharing, distributed planning
- Coordination, distributed search
- Decision making: negotiation, markets, coalition formation
- Learning
- Organizational theories
- Implementation:
  - Agent programming: paradigms, languages
  - Agent platforms
  - Middleware, mobility, security
- Applications:
  - Industrial applications: real-time monitoring and management of manufacturing and production processes, telecommunication networks, transportation systems, electricity distribution systems, etc.
  - Business process management, decision support
  - E-commerce, e-markets
  - CAI, Web-based learning
  - Information retrieval and filtering
  - Human-computer interaction
  - PDAs
  - Entertainment
  - CSCW

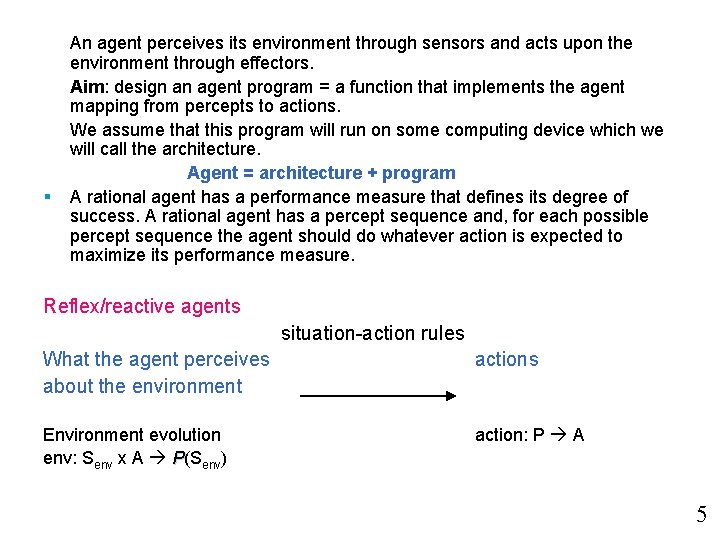

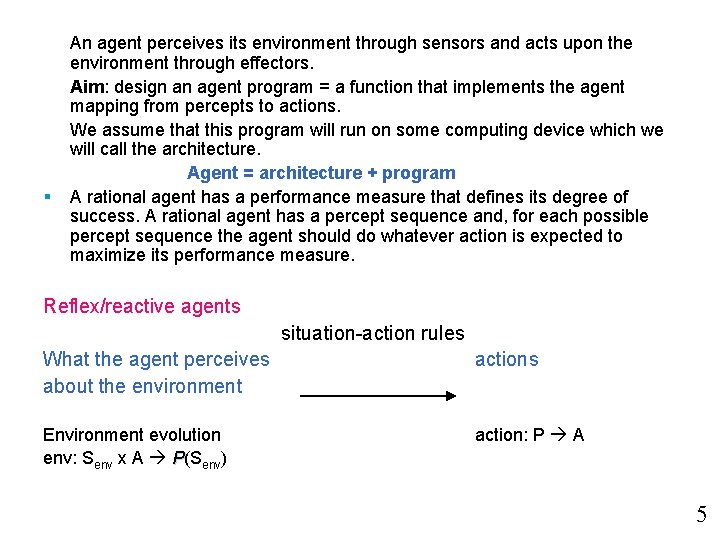

- An agent perceives its environment through sensors and acts upon the environment through effectors.
- Aim: design an agent program, that is, a function that implements the agent mapping from percepts to actions. We assume that this program will run on some computing device, which we will call the architecture.
- Agent = architecture + program
- A rational agent has a performance measure that defines its degree of success.
- A rational agent has a percept sequence and, for each possible percept sequence, it should do whatever action is expected to maximize its performance measure.

Reflex/reactive agents
- Situation-action rules map what the agent perceives about the environment directly to actions.
- Environment evolution: $env: S_{env} \times A \to \mathcal{P}(S_{env})$
- Action selection: $action: P \to A$
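A minimal sketch of a reflex agent, assuming percepts and actions are plain strings. The rule table and the names `RULES`, `action` and `run` are illustrative and do not come from the slides; the point is only that `action: P -> A` can be a stateless lookup.

```python
from typing import Dict, List, Optional

# Situation-action rules: each percept maps directly to an action.
# The percepts and actions are hypothetical, chosen for a maze-like setting.
RULES: Dict[str, str] = {
    "wall_ahead": "turn_left",
    "free_ahead": "move_forward",
    "at_exit": "stop",
}


def action(percept: str) -> Optional[str]:
    """action: P -> A as a table lookup; the agent keeps no internal state."""
    return RULES.get(percept)


def run(percepts: List[str]) -> List[Optional[str]]:
    """Apply the agent function to a sequence of percepts, one at a time."""
    return [action(p) for p in percepts]
```

A percept with no matching rule yields `None`, which is one way to make the limits of a pure rule table visible.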

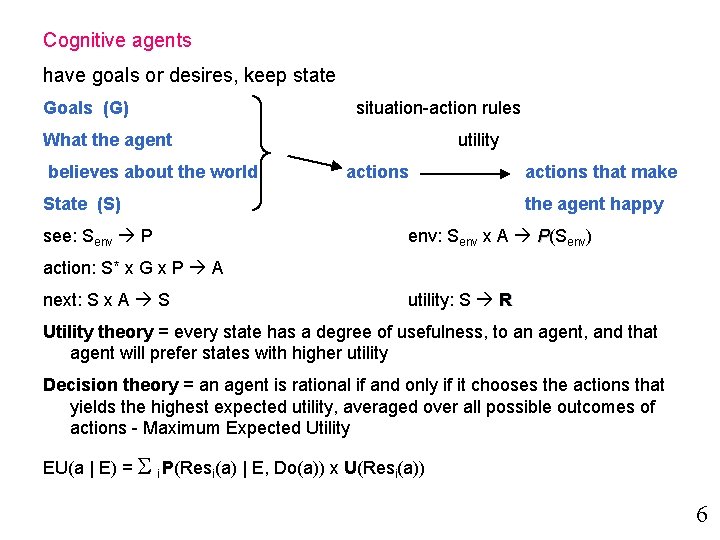

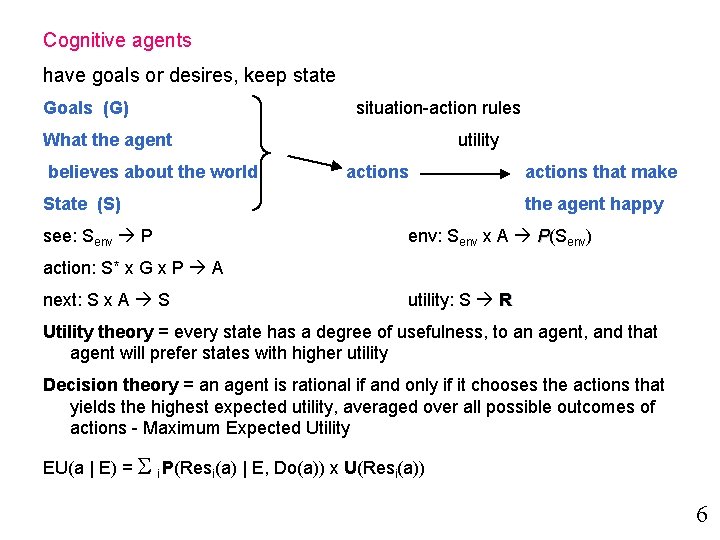

Cognitive agents have goals or desires and keep state

- Components: goals (G), state (S), situation-action rules, utility. The state records what the agent believes about the world; the utility singles out the actions that make the agent happy.
- $see: S_{env} \to P$
- $env: S_{env} \times A \to \mathcal{P}(S_{env})$
- $action: S^{*} \times G \times P \to A$
- $next: S \times A \to S$
- $utility: S \to \mathbb{R}$
- Utility theory: every state has a degree of usefulness to an agent, and the agent will prefer states with higher utility.
- Decision theory: an agent is rational if and only if it chooses the action that yields the highest expected utility, averaged over all possible outcomes of actions (Maximum Expected Utility):

$$EU(a \mid E) = \sum_{i} P(Res_i(a) \mid E, Do(a)) \times U(Res_i(a))$$
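The Maximum Expected Utility rule can be sketched as follows, assuming each action comes with a finite list of (probability, utility) pairs for its possible outcomes. The numbers in `MAZE` are invented for illustration only.

```python
from typing import Dict, List, Tuple

# For each action: (P(Res_i(a) | E, Do(a)), U(Res_i(a))) for every outcome i.
Outcomes = List[Tuple[float, float]]


def expected_utility(outcomes: Outcomes) -> float:
    """EU(a | E) = sum over i of P(Res_i(a) | E, Do(a)) * U(Res_i(a))."""
    return sum(p * u for p, u in outcomes)


def best_action(model: Dict[str, Outcomes]) -> str:
    """Pick the action with the highest expected utility."""
    return max(model, key=lambda a: expected_utility(model[a]))


# Hypothetical maze step: moving east usually helps but may hit a dead end.
MAZE: Dict[str, Outcomes] = {
    "move_east": [(0.8, 10.0), (0.2, -5.0)],  # EU = 8 - 1 = 7
    "move_north": [(1.0, 4.0)],               # EU = 4
}
```

With these made-up values `best_action(MAZE)` selects `move_east`, since 7 exceeds 4.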

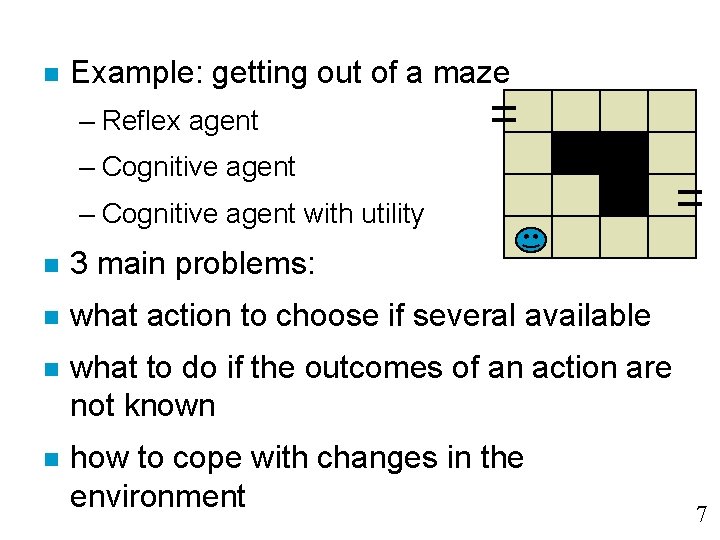

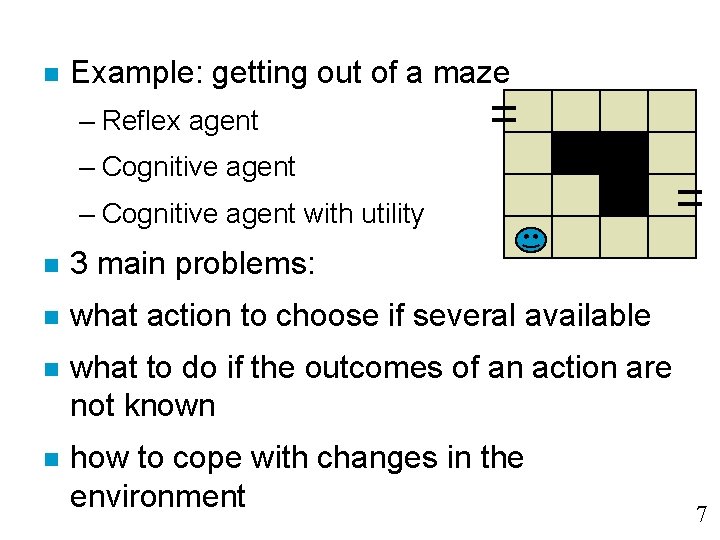

Example: getting out of a maze
- Reflex agent
- Cognitive agent with utility

3 main problems:
- what action to choose if several are available
- what to do if the outcomes of an action are not known
- how to cope with changes in the environment

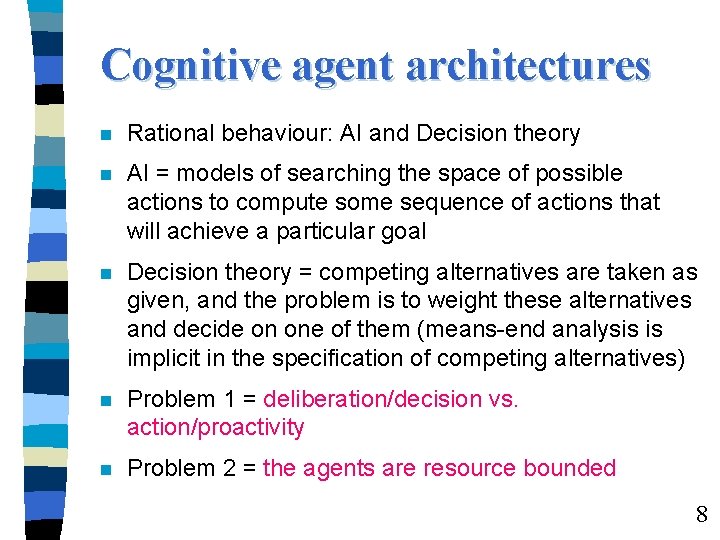

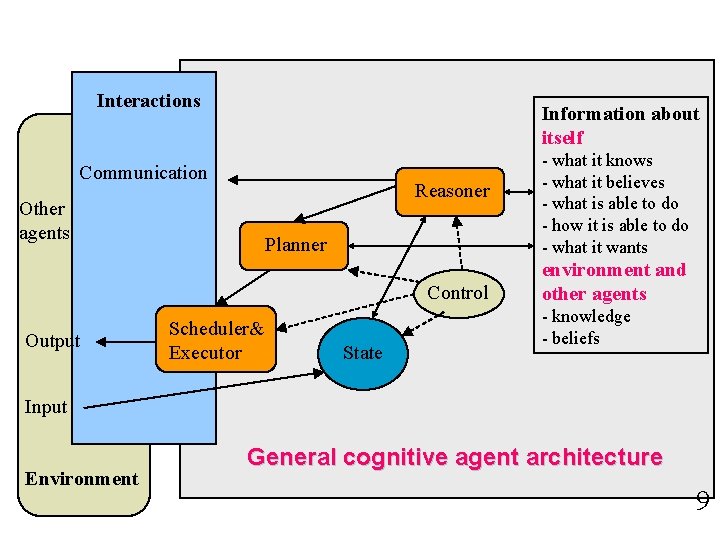

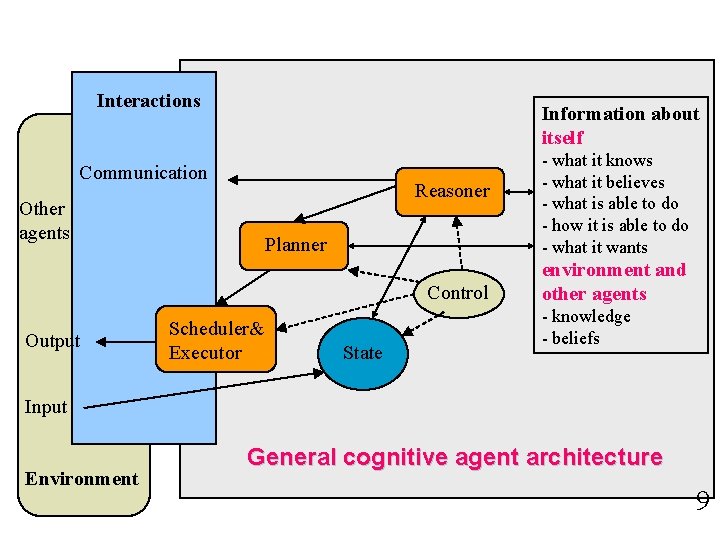

Cognitive agent architectures

- Rational behaviour: AI and decision theory
- AI = models of searching the space of possible actions to compute some sequence of actions that will achieve a particular goal
- Decision theory = competing alternatives are taken as given, and the problem is to weigh these alternatives and decide on one of them (means-end analysis is implicit in the specification of competing alternatives)
- Problem 1 = deliberation/decision vs. action/proactivity
- Problem 2 = the agents are resource-bounded

General cognitive agent architecture

- Input comes from the environment; output goes back to the environment
- Components: Communication, Reasoner, Planner, Scheduler & Executor, Control
- Interactions with other agents pass through Communication
- State:
  - information about itself: what it knows, what it believes, what it is able to do, how it is able to do it, what it wants
  - information about the environment and other agents: knowledge, beliefs

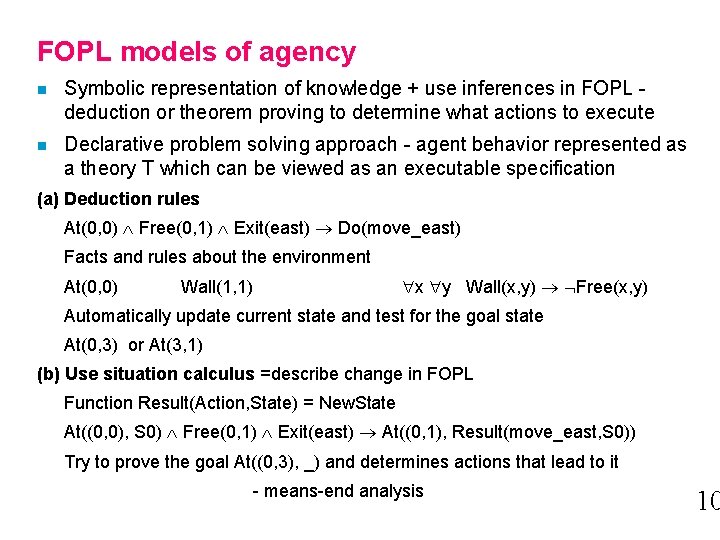

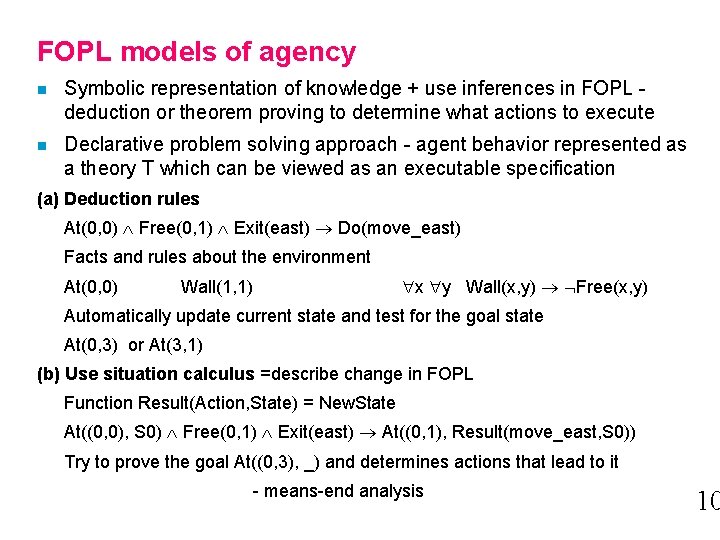

FOPL models of agency

- Symbolic representation of knowledge + use of inference in FOPL (deduction or theorem proving) to determine what actions to execute
- Declarative problem-solving approach: agent behavior is represented as a theory T, which can be viewed as an executable specification

(a) Deduction rules
- $At(0,0) \land Free(0,1) \land Exit(east) \to Do(move\_east)$
- Facts and rules about the environment: $At(0,0)$, $Wall(1,1)$, $\forall x\, \forall y\; Wall(x,y) \to \lnot Free(x,y)$
- Automatically update the current state and test for the goal state $At(0,3)$ or $At(3,1)$

(b) Use situation calculus = describe change in FOPL
- Function $Result(Action, State) = NewState$
- $At((0,0), S_0) \land Free(0,1) \land Exit(east) \to At((0,1), Result(move\_east, S_0))$
- Try to prove the goal $At((0,3), \_)$ and determine the actions that lead to it (means-end analysis)
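A rough sketch of approach (a), assuming all facts and rules are ground (no variables). This is a deliberate simplification: it is plain set matching, not FOPL theorem proving. The names `Fact`, `RULES` and `select_action` are illustrative.

```python
from typing import FrozenSet, List, Optional, Set, Tuple

Fact = Tuple  # a ground atom, e.g. ("At", 0, 0) or ("Exit", "east")
Rule = Tuple[FrozenSet[Fact], Fact]  # (premises, conclusion)

# Ground version of: At(0,0) and Free(0,1) and Exit(east) -> Do(move_east)
RULES: List[Rule] = [
    (
        frozenset({("At", 0, 0), ("Free", 0, 1), ("Exit", "east")}),
        ("Do", "move_east"),
    ),
]


def select_action(facts: Set[Fact], rules: List[Rule]) -> Optional[str]:
    """Return the action of the first rule whose premises all hold, if any."""
    for premises, conclusion in rules:
        if premises <= facts and conclusion[0] == "Do":
            return conclusion[1]
    return None


FACTS: Set[Fact] = {("At", 0, 0), ("Free", 0, 1), ("Exit", "east"), ("Wall", 1, 1)}
```

Removing `("Free", 0, 1)` from `FACTS` makes the rule inapplicable, so no action can be derived.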

Advantages of FOPL
- simple, elegant
- executable specifications

Disadvantages
- difficult to represent changes over time, which motivates other logics
- decision making is deduction and selection of a strategy
- intractable
- semi-decidable

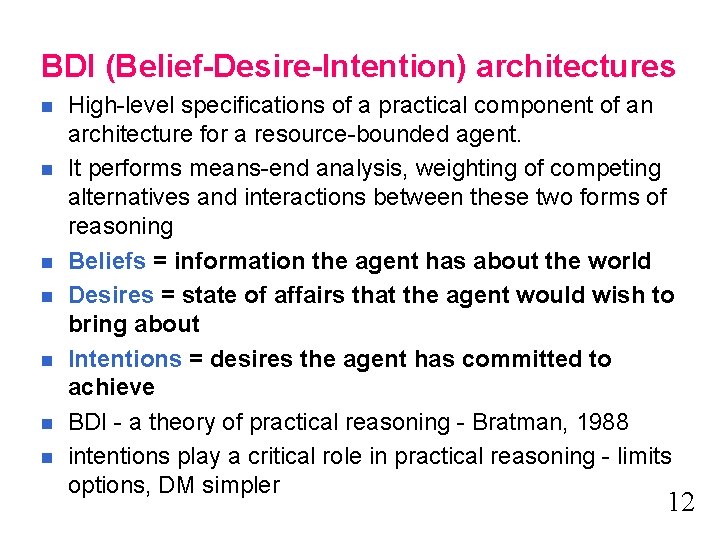

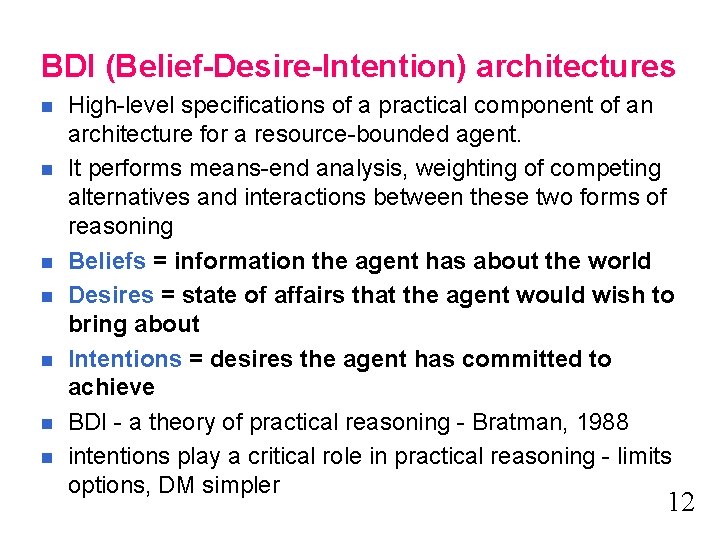

BDI (Belief-Desire-Intention) architectures

- High-level specifications of a practical component of an architecture for a resource-bounded agent.
- It performs means-end analysis and weighing of competing alternatives, and handles the interactions between these two forms of reasoning.
- Beliefs = information the agent has about the world
- Desires = states of affairs that the agent would wish to bring about
- Intentions = desires the agent has committed to achieve
- BDI is a theory of practical reasoning (Bratman, 1988).
- Intentions play a critical role in practical reasoning: they limit the options and so make decision making (DM) simpler.

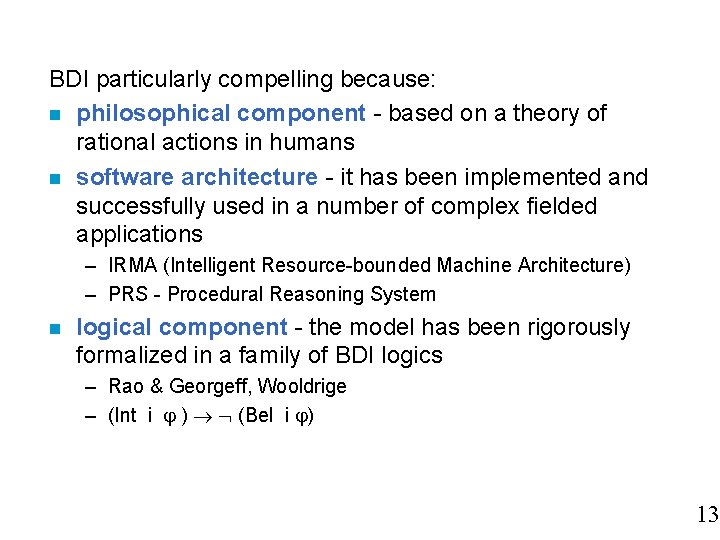

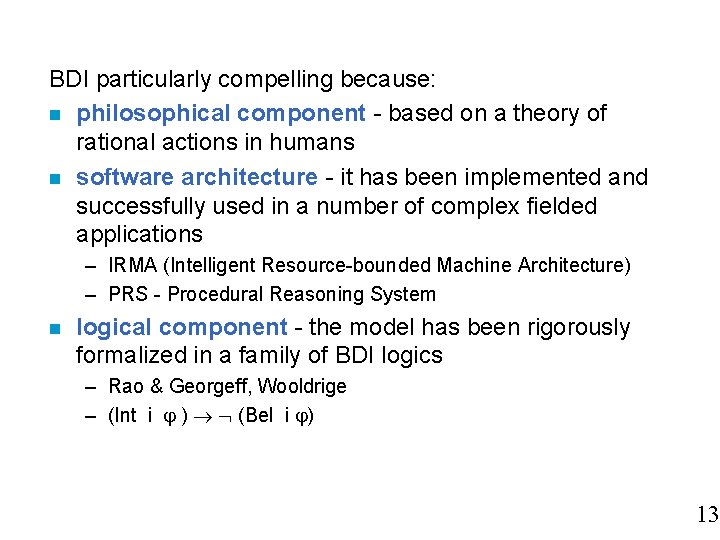

BDI is particularly compelling because of its:
- philosophical component: it is based on a theory of rational action in humans
- software architecture: it has been implemented and successfully used in a number of complex fielded applications
  - IRMA (Intelligent Resource-bounded Machine Architecture)
  - PRS (Procedural Reasoning System)
- logical component: the model has been rigorously formalized in a family of BDI logics (Rao & Georgeff, Wooldridge), built on modal operators such as $(Int\ i\ \varphi)$ and $(Bel\ i\ \varphi)$

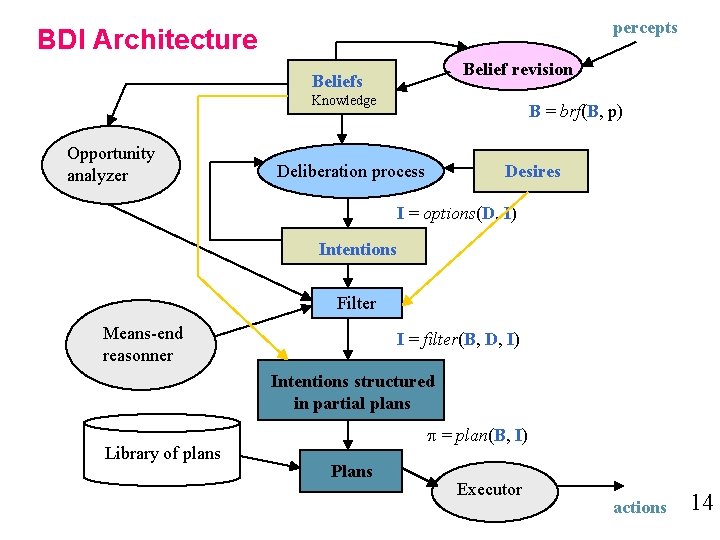

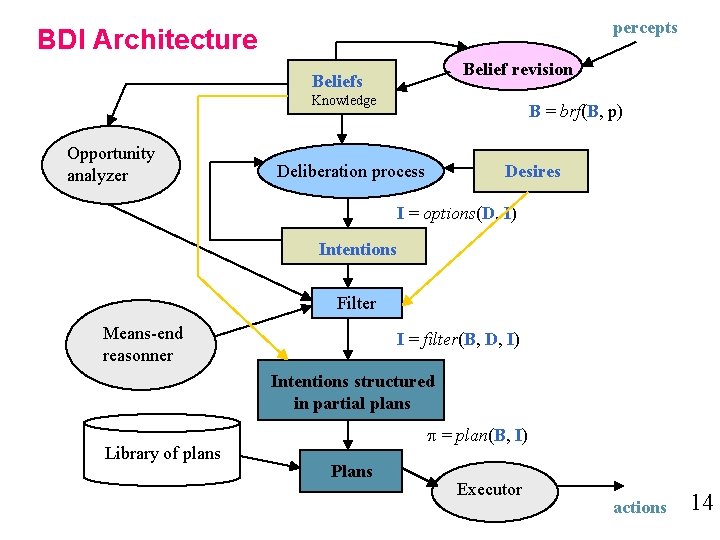

BDI architecture

- percepts enter Belief revision: B = brf(B, p), which updates Beliefs and Knowledge
- Opportunity analyzer and Deliberation process: I = options(D, I), working over Desires and Intentions
- Filter: I = filter(B, D, I), which yields Intentions structured in partial plans
- Means-end reasoner, using a Library of plans: π = plan(B, I), which yields Plans
- Executor: turns plans into actions

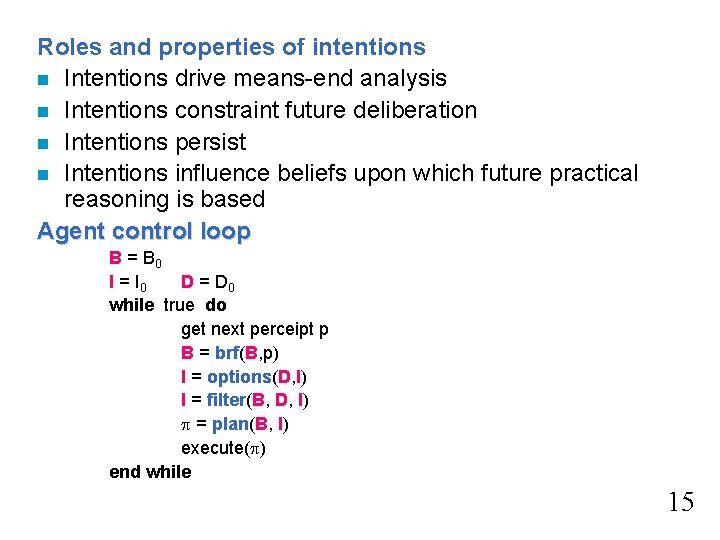

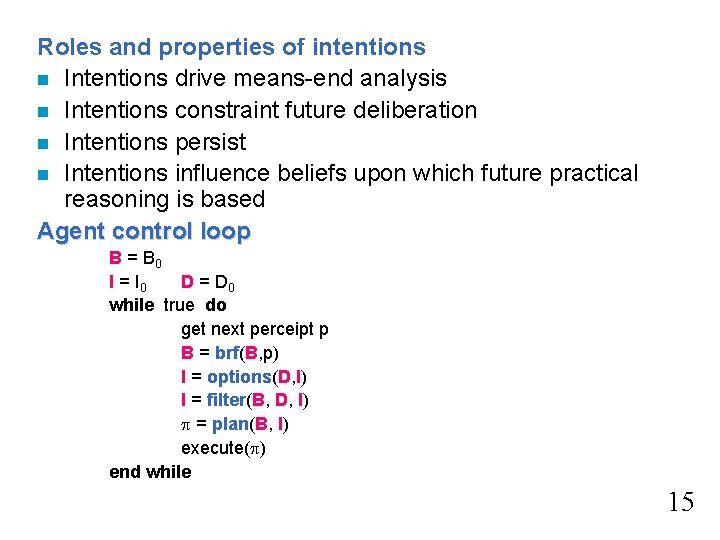

Roles and properties of intentions
- Intentions drive means-end analysis
- Intentions constrain future deliberation
- Intentions persist
- Intentions influence beliefs upon which future practical reasoning is based

Agent control loop

    B = B0
    I = I0
    D = D0
    while true do
        get next percept p
        B = brf(B, p)
        I = options(D, I)
        I = filter(B, D, I)
        π = plan(B, I)
        execute(π)
    end while
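The agent control loop above can be sketched in Python, assuming beliefs, desires and intentions are sets of strings and that the percept stream is finite (so the `while true` becomes a `for`). The function signatures follow the slide; the toy bodies of `brf`, `options`, `filter_` and `plan` are placeholders invented for this example.

```python
from typing import Callable, Iterable, List, Set, Tuple


def bdi_loop(
    percepts: Iterable[str],
    B: Set[str],
    D: Set[str],
    I: Set[str],
    brf: Callable[[Set[str], str], Set[str]],
    options: Callable[[Set[str], Set[str]], Set[str]],
    filter_: Callable[[Set[str], Set[str], Set[str]], Set[str]],
    plan: Callable[[Set[str], Set[str]], List[str]],
    execute: Callable[[List[str]], None],
) -> Tuple[Set[str], Set[str]]:
    """One pass of the loop body per percept; returns final beliefs and intentions."""
    for p in percepts:
        B = brf(B, p)          # belief revision
        I = options(D, I)      # candidate intentions from desires
        I = filter_(B, D, I)   # commit to a subset
        pi = plan(B, I)        # means-end reasoning
        execute(pi)
    return B, I


# Toy instantiation (all hypothetical).
log: List[str] = []
final_B, final_I = bdi_loop(
    percepts=["door_open", "has_key"],
    B=set(),
    D={"has_key", "at_exit"},
    I=set(),
    brf=lambda B, p: B | {p},
    options=lambda D, I: I | D,
    filter_=lambda B, D, I: {i for i in I if i not in B},  # drop what is already believed true
    plan=lambda B, I: ["achieve_" + i for i in sorted(I)],
    execute=log.extend,
)
```

After the second percept the agent believes `has_key`, so only `at_exit` remains as an intention.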

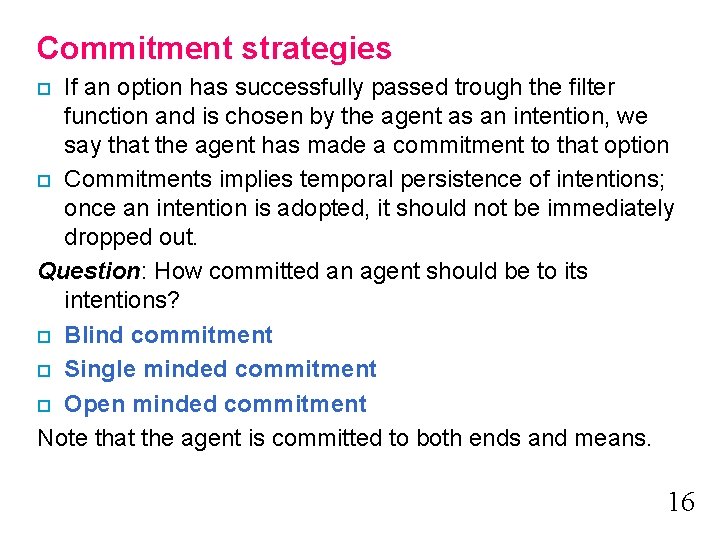

Commitment strategies

- If an option has successfully passed through the filter function and is chosen by the agent as an intention, we say that the agent has made a commitment to that option.
- Commitment implies temporal persistence of intentions; once an intention is adopted, it should not be dropped immediately.
- Question: how committed should an agent be to its intentions?
  - Blind commitment
  - Single-minded commitment
  - Open-minded commitment
- Note that the agent is committed to both ends and means.

Revised BDI agent control loop

- The inner loop drops intentions that are impossible or have succeeded.
- Reactivity: the agent replans when the current plan is no longer sound.

    B = B0
    I = I0
    D = D0
    while true do
        get next percept p
        B = brf(B, p)
        I = options(D, I)
        I = filter(B, D, I)
        π = plan(B, I)
        while not (empty(π) or succeeded(I, B) or impossible(I, B)) do
            α = head(π)
            execute(α)
            π = tail(π)
            get next percept p
            B = brf(B, p)
            if not sound(π, I, B) then
                π = plan(B, I)
        end while
    end while
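A hedged sketch of the inner loop of the revised control loop: execute one step at a time, observe, and replan when the plan is no longer sound. The corridor world, the `slips` counter and every helper body below are assumptions made up for this example; only the loop structure follows the slide.

```python
from typing import Callable, Dict, List


def execute_with_replanning(
    pi: List[str],
    I: Dict[str, int],
    B: Dict[str, int],
    next_percept: Callable[[], int],
    brf: Callable[[Dict[str, int], int], Dict[str, int]],
    plan: Callable[[Dict[str, int], Dict[str, int]], List[str]],
    execute: Callable[[str], None],
    succeeded: Callable[[Dict[str, int], Dict[str, int]], bool],
    impossible: Callable[[Dict[str, int], Dict[str, int]], bool],
    sound: Callable[[List[str], Dict[str, int], Dict[str, int]], bool],
) -> Dict[str, int]:
    """Run plan pi step by step, revising beliefs and replanning when needed."""
    while pi and not succeeded(I, B) and not impossible(I, B):
        alpha, pi = pi[0], pi[1:]     # head and tail of the plan
        execute(alpha)
        B = brf(B, next_percept())
        if not sound(pi, I, B):       # plan no longer fits the beliefs
            pi = plan(B, I)
    return B


# Hypothetical world: a corridor where the first move slips and has no effect.
world = {"pos": 0, "slips": 1}
steps: List[str] = []


def do(step: str) -> None:
    steps.append(step)
    if world["slips"] > 0:
        world["slips"] -= 1
    else:
        world["pos"] += 1


make_plan = lambda B, I: ["east"] * (I["goal"] - B["pos"])
goal = {"goal": 3}
beliefs = {"pos": 0}
final = execute_with_replanning(
    pi=make_plan(beliefs, goal), I=goal, B=beliefs,
    next_percept=lambda: world["pos"],
    brf=lambda B, p: {"pos": p},
    plan=make_plan,
    execute=do,
    succeeded=lambda I, B: B["pos"] == I["goal"],
    impossible=lambda I, B: False,
    sound=lambda pi, I, B: len(pi) == I["goal"] - B["pos"],
)
```

The slipped first step makes the remaining plan too short, so the agent replans once and needs four steps in total to reach position 3.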

Reactive agent architectures

Subsumption architecture (Brooks, 1986)
- DM = {Task Accomplishing Behaviours}
- A TAB is represented by a competence module (c.m.)
- Every c.m. is responsible for a clearly defined, but not particularly complex, task (a concrete behavior)
- The c.m. operate in parallel
- Lower layers in the hierarchy have higher priority and are able to inhibit operations of higher layers
- The modules located at the lower end of the hierarchy are responsible for basic, primitive tasks; the higher modules reflect more complex patterns of behaviour and incorporate a subset of the tasks of the subordinate modules, hence the name subsumption architecture

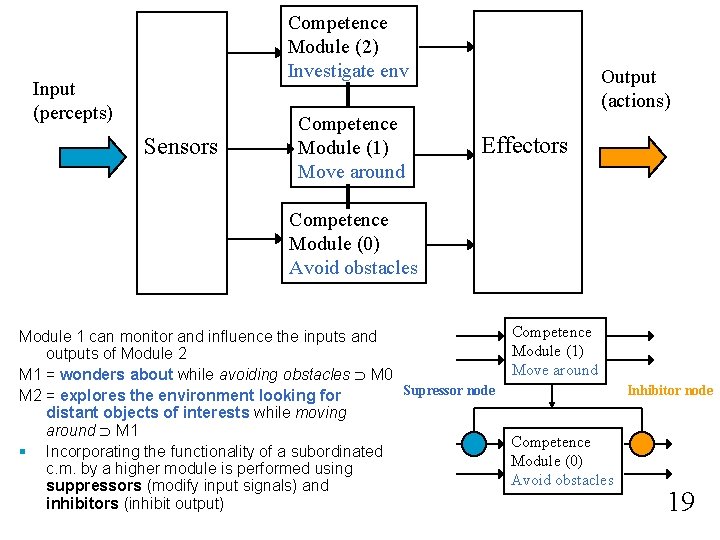

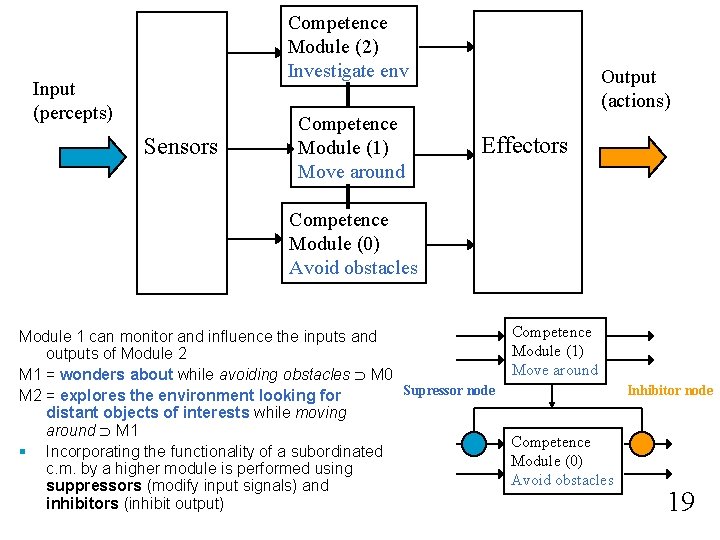

- Input (percepts) arrives from the sensors; output (actions) goes to the effectors.
- Competence Module (0), M0: avoid obstacles
- Competence Module (1), M1: move around; wanders about while avoiding obstacles (M0)
- Competence Module (2), M2: investigate the environment; explores the environment looking for distant objects of interest while moving around (M1)
- Competence Module 1 can monitor and influence the inputs and outputs of Module 2.
- Incorporating the functionality of a subordinate c.m. into a higher module is performed using suppressor nodes (which modify input signals) and inhibitor nodes (which inhibit output).
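The two node types can be sketched as small functions, assuming signals are strings and an absent signal is `None`. This is an illustration of the idea only; the signal names used in the usage note are hypothetical.

```python
from typing import Optional


def suppressor(original_input: Optional[str], override: Optional[str]) -> Optional[str]:
    """Suppressor node: an active override signal replaces the module's input."""
    return override if override is not None else original_input


def inhibitor(output: Optional[str], inhibit: bool) -> Optional[str]:
    """Inhibitor node: the module's output is blocked while the inhibit signal is on."""
    return None if inhibit else output
```

For example, `suppressor("heading_north", "heading_east")` passes on `"heading_east"`, while `inhibitor("turn_left", True)` lets nothing through.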

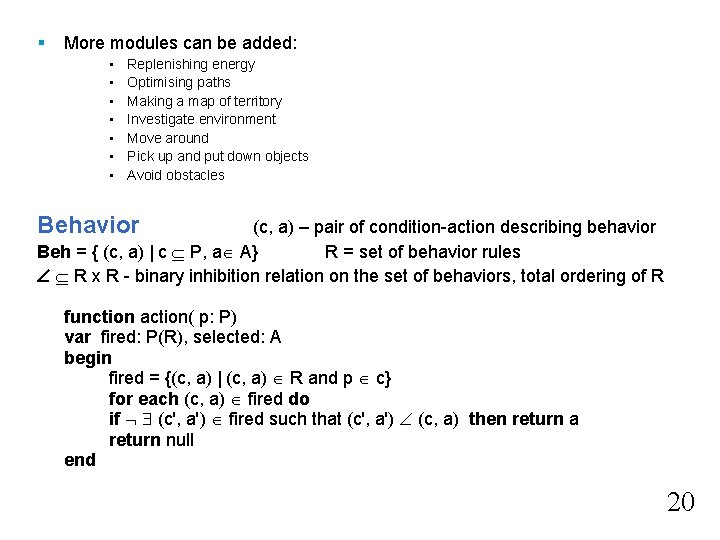

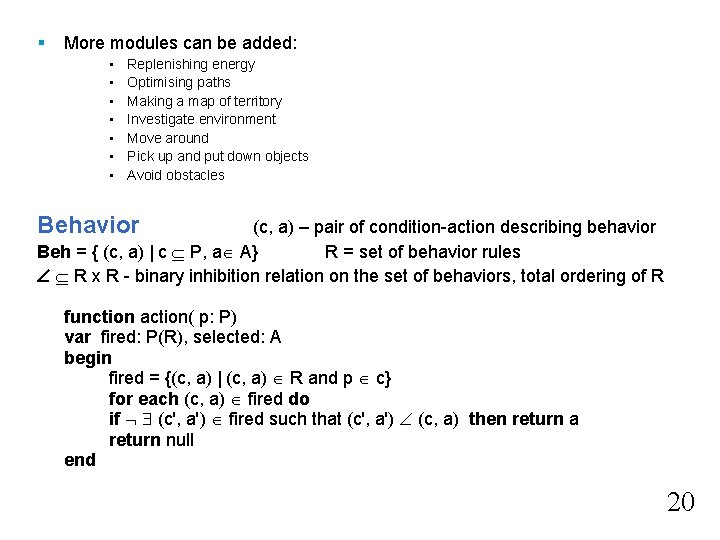

- More modules can be added, giving for example: Replenishing energy, Optimising paths, Making a map of territory, Investigate environment, Move around, Pick up and put down objects, Avoid obstacles
- Behavior: a pair $(c, a)$ of condition and action describing a behavior
- $Beh = \{ (c, a) \mid c \subseteq P,\ a \in A \}$
- $R \subseteq Beh$ = set of behavior rules
- $\prec\ \subseteq R \times R$ = binary inhibition relation on the set of behaviors, a total ordering of $R$

    function action(p: P)
    var fired: ℘(R), selected: A
    begin
        fired = {(c, a) | (c, a) ∈ R and p ∈ c}
        for each (c, a) ∈ fired do
            if ¬∃ (c', a') ∈ fired such that (c', a') ≺ (c, a) then
                return a
        return null
    end
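A minimal Python rendering of the `action` function, under two stated assumptions: conditions are predicates over a percept dictionary (standing in for membership of p in c), and the total inhibition ordering is encoded as list order, with earlier behaviors inhibiting later ones. The three behaviors are invented for illustration.

```python
from typing import Callable, Dict, List, Optional, Tuple

Percept = Dict[str, bool]
Behavior = Tuple[Callable[[Percept], bool], str]  # (condition c, action a)

# Ordered by priority: a behavior inhibits every behavior listed after it.
BEHAVIORS: List[Behavior] = [
    (lambda p: p.get("obstacle", False), "turn_away"),
    (lambda p: p.get("object_seen", False), "approach_object"),
    (lambda p: True, "wander"),
]


def action(percept: Percept, behaviors: List[Behavior] = BEHAVIORS) -> Optional[str]:
    """Return the action of the fired behavior that no other fired behavior inhibits."""
    fired = [(c, a) for c, a in behaviors if c(percept)]
    # With a total order, the uninhibited fired behavior is simply the first one.
    return fired[0][1] if fired else None
```

Because the ordering is total, picking the first fired behavior is equivalent to the search for a behavior that nothing else in `fired` inhibits.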

- Every c.m. is described using a subsumption language based on AFSMs (Augmented Finite State Machines).
- An AFSM initiates a response as soon as its input signal exceeds a specific threshold value.
- Every AFSM operates independently and asynchronously of the other AFSMs and is in continuous competition with the other c.m. for control of the agent: truly distributed internal control.
- 1990: Brooks extends the architecture to cope with a large number of c.m. (Behavior Language).
- Structured AFSM: one AFSM models the concrete behavior pattern of a group of agents / a group of AFSMs (competence modules).
- Steels: indirect communication, which takes into account the social feature of agents.
- Advantages of reactive architectures
- Disadvantages

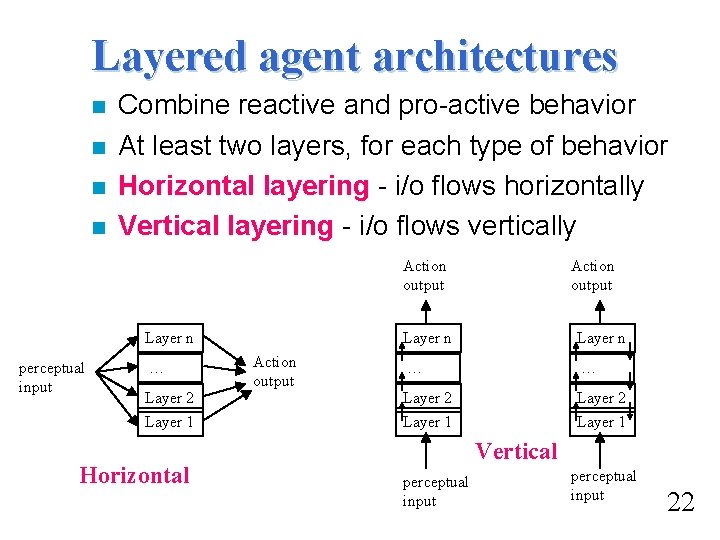

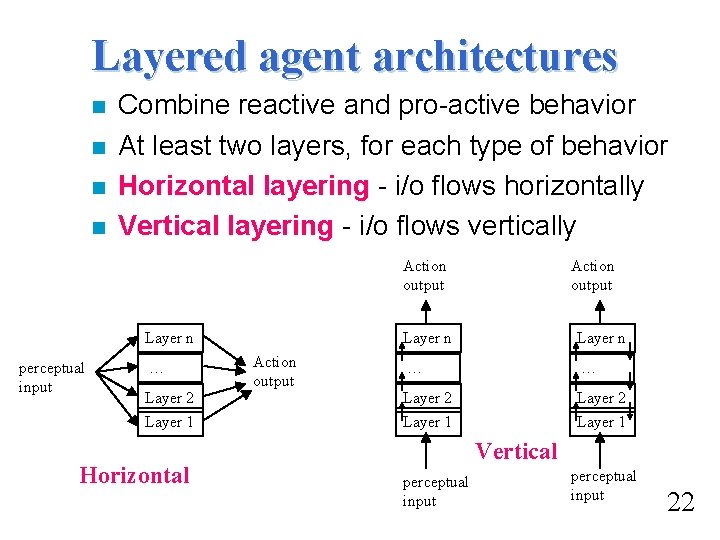

Layered agent architectures

- Combine reactive and pro-active behavior
- At least two layers, one for each type of behavior
- Horizontal layering: i/o flows horizontally; every layer (Layer 1, Layer 2, …, Layer n) receives the perceptual input and produces action output
- Vertical layering: i/o flows vertically; perceptual input enters at Layer 1 and passes up through Layer 2, …, Layer n, which produces the action output

TouringMachine

- Horizontal layering: 3 activity-producing layers; each layer produces suggestions for actions to be performed
- Reactive layer: a set of situation-action rules; reacts to percepts from the environment
- Planning layer: pro-active behavior; uses a library of plan skeletons called schemas; hierarchically structured plans are refined in this layer
- Modeling layer: represents the world, the agent and other agents; sets up goals and predicts conflicts; goals are given to the planning layer to be achieved
- Control subsystem: a centralized component that contains a set of control rules. The rules:
  - suppress information from a lower layer to give control to a higher one
  - censor actions of layers, so as to control which layer will do the actions
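One way to picture the control subsystem is sketched below, assuming each layer is a function from a percept to a suggested action and each control rule returns the names of layers to censor. The layer bodies, the single rule, and the tie-break (first uncensored suggestion in layer order) are all assumptions of this sketch, not details given on the slide.

```python
from typing import Callable, Dict, List, Optional, Set, Tuple

Percept = Dict[str, bool]
Layer = Callable[[Percept], Optional[str]]
Suggestions = Dict[str, Optional[str]]
ControlRule = Callable[[Percept, Suggestions], Set[str]]

# Hypothetical layers: each one proposes an action (or nothing).
LAYERS: List[Tuple[str, Layer]] = [
    ("reactive", lambda p: "swerve" if p.get("obstacle") else "keep_lane"),
    ("planning", lambda p: "follow_route"),
    ("modeling", lambda p: None),
]


def censor_reactive_when_clear(p: Percept, s: Suggestions) -> Set[str]:
    """Hypothetical control rule: with no obstacle, hand control to planning."""
    if not p.get("obstacle") and s.get("planning") is not None:
        return {"reactive"}
    return set()


def control(
    p: Percept,
    layers: List[Tuple[str, Layer]] = LAYERS,
    rules: Tuple[ControlRule, ...] = (censor_reactive_when_clear,),
) -> Optional[str]:
    """Collect all suggestions, apply censoring rules, return one action."""
    suggestions = {name: layer(p) for name, layer in layers}
    censored: Set[str] = set()
    for rule in rules:
        censored |= rule(p, suggestions)
    for name, _ in layers:
        if name not in censored and suggestions[name] is not None:
            return suggestions[name]
    return None
```

With an obstacle present the reactive suggestion wins; otherwise the rule censors it and the planning layer's suggestion is executed.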

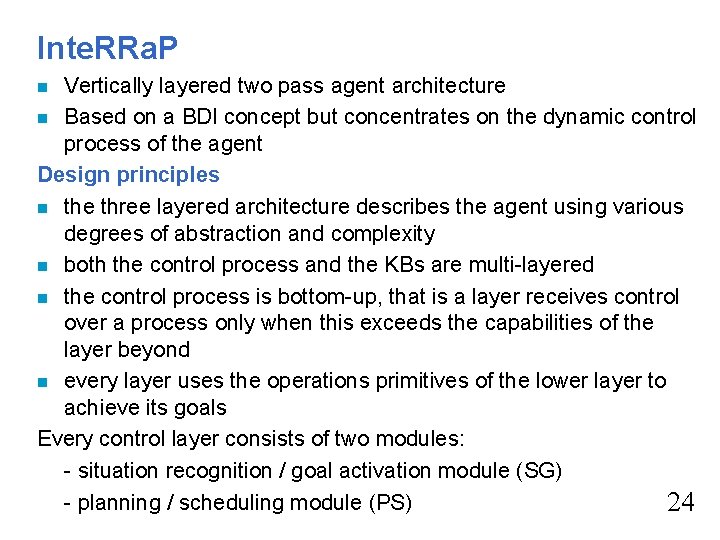

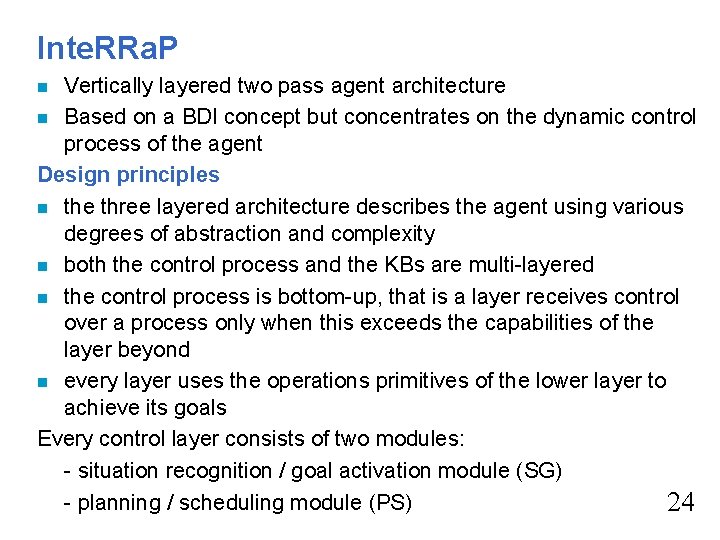

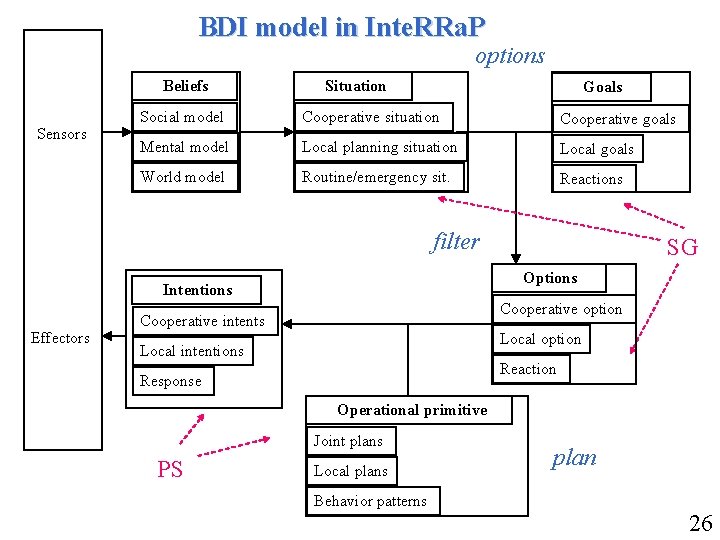

InteRRaP

Vertically layered, two-pass agent architecture
- Based on a BDI concept but concentrates on the dynamic control process of the agent

Design principles
- the three-layered architecture describes the agent using various degrees of abstraction and complexity
- both the control process and the KBs are multi-layered
- the control process is bottom-up, that is, a layer receives control over a process only when this exceeds the capabilities of the layer below
- every layer uses the operational primitives of the lower layer to achieve its goals

Every control layer consists of two modules:
- situation recognition / goal activation module (SG)
- planning / scheduling module (PS)
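The bottom-up control principle can be sketched as follows, assuming each layer exposes a competence test and a response. Only the upward activation pass is modelled; the situations and responses are hypothetical, loosely inspired by a fork-lift setting.

```python
from typing import Callable, List, Optional, Tuple

# (layer name, "can this layer handle the situation?", response), lowest layer first.
LayerSpec = Tuple[str, Callable[[str], bool], Callable[[str], str]]

LAYERS: List[LayerSpec] = [
    ("behavior-based", lambda s: s == "obstacle_ahead", lambda s: "swerve"),
    ("local planning", lambda s: s == "fetch_box", lambda s: "plan_route_to_bay"),
    (
        "cooperative planning",
        lambda s: s == "aisle_blocked_by_agent",
        lambda s: "negotiate_right_of_way",
    ),
]


def activate(situation: str, layers: List[LayerSpec] = LAYERS) -> Optional[Tuple[str, str]]:
    """Pass control upward until some layer is competent for the situation."""
    for name, competent, respond in layers:
        if competent(situation):
            return name, respond(situation)
    return None  # no layer can deal with the situation
```

A higher layer is consulted only after every layer below it has declined, which mirrors the rule that control moves up when a situation exceeds the capabilities of the lower layer.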

InteRRaP architecture

- Cooperative planning layer: SG and PS modules, Social KB
- Local planning layer: SG and PS modules, Planning KB
- Behavior-based layer: SG and PS modules, World KB
- World interface: Sensors (percepts), Effectors (actions), Communication

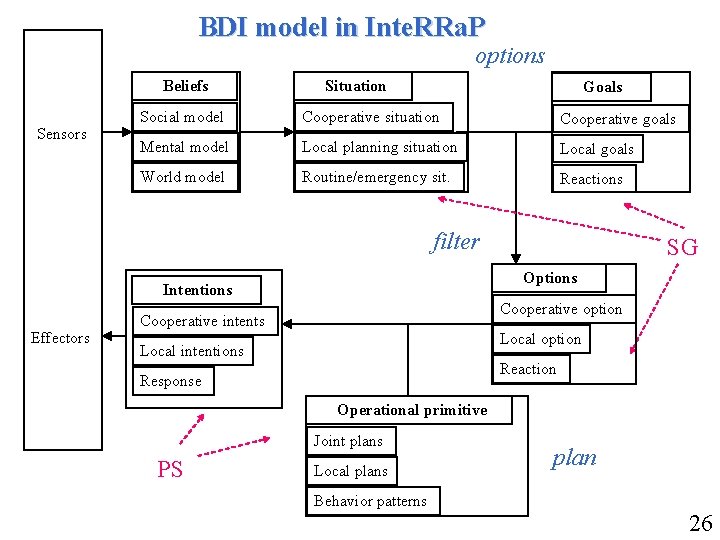

BDI model in InteRRaP

- Functions: options (SG), filter, plan (PS); Sensors feed the beliefs and Effectors carry out the operational primitives
- Beliefs: Social model, Mental model, World model
- Situation: Cooperative situation, Local planning situation, Routine/emergency situation
- Goals: Cooperative goals, Local goals, Reactions
- Options: Cooperative option, Local option, Reaction
- Intentions: Cooperative intents, Local intentions, Response
- Operational primitives: Joint plans, Local plans, Behavior patterns

Müller tested InteRRaP in a simulated loading area. A number of agents act as automatic fork-lifts that move in the loading area, remove and replace stock from various storage bays, and so compete with other agents for resources.