MPI: Message Passing Interface, Yvon Kermarrec


- Slides: 44


More readings

- "Parallel Programming with MPI", Peter Pacheco, Morgan Kaufmann Publishers
- LAM/MPI User Guide: http://www.lam-mpi.org/tutorials/lam/
- The MPI standard is available from http://www.mpi-forum.org/

Agenda

- Part 0 - The context
  - Slides extracted from a lecture from Hanjun Kin, Princeton U.
- Part 1 - Introduction
  - Basics of Parallel Computing
  - Six-function MPI
  - Point-to-Point Communications
- Part 2 - Advanced features of MPI
  - Collective Communication
- Part 3 - Examples and how to program an MPI application

Serial Computing

- A 1,000-piece puzzle
- Takes 10 hours

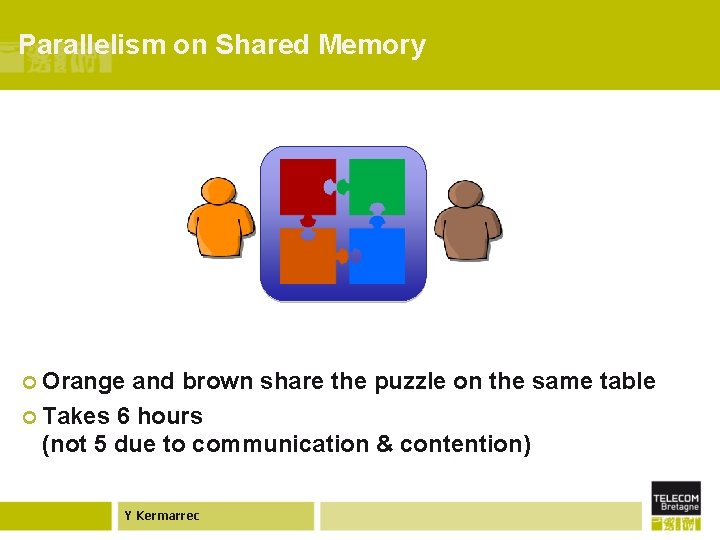

Parallelism on Shared Memory

- Orange and brown share the puzzle on the same table
- Takes 6 hours (not 5, because of communication and contention)

The more, the better?

- Lack of seats (resource limit)
- More contention among people

Parallelism on Distributed Systems

- Scalable seats (scalable resources)
- Less contention, thanks to private memory spaces

How to share the puzzle?

- DSM (Distributed Shared Memory)
- Message Passing

DSM (Distributed Shared Memory)

- Provides shared memory physically or virtually
- Pros: easy to use
- Cons: limited scalability, high coherence overhead

Message Passing

- Pros: scalable, flexible
- Cons: some say it is more difficult to use than DSM

Part 1 - Introduction

- Basics of Parallel Computing
- Six-function MPI
- Point-to-Point Communications

We need more computational power

- The weather forecast example by P. Pacheco:
  - Suppose we wish to predict the weather over the United States and Canada for the next 48 hours.
  - Also suppose that we want to model the atmosphere from sea level to an altitude of 20 km.
  - We use a cubical grid, with each cube measuring 0.1 km on a side, to model the atmosphere: 2.0 × 10⁷ km² × 20 km × 10³ cubes per km³ = 4 × 10¹¹ grid points.
  - Suppose we need to compute 100 instructions for each point for each hour of the forecast: for the next 48 hours we need 4 × 10¹³ × 48 operations.
  - If our computer executes 10⁹ operations per second, we need about 23 days.
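The arithmetic behind this estimate can be written out step by step. This is only a sketch that follows the figures on the slide: the symbols $N$, $W$ and $T$ are introduced here for illustration, and rounding $W$ up to $2 \times 10^{15}$ is an assumption made to recover the 23-day figure (without rounding, the result is closer to 22 days).

$$
\begin{aligned}
N &= 2.0\times10^{7}\,\text{km}^2 \times 20\,\text{km} \times 10^{3}\,\text{cubes/km}^3 = 4\times10^{11}\ \text{grid points} \\
W &= N \times 100 \times 48 = 4\times10^{13} \times 48 = 1.92\times10^{15} \approx 2\times10^{15}\ \text{operations} \\
T &= \frac{W}{10^{9}\ \text{operations/s}} \approx 2\times10^{6}\ \text{s} \approx 23\ \text{days}
\end{aligned}
$$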

The need for parallel programming

- We face numerous challenges in science (biology, simulation, earthquakes, …) and we cannot build computers that are fast enough.
- Data can be big ("big data") and memory is rather limited.
- Processors can do a lot, but to address figures like those mentioned above, programming smarter helps and still is not enough.

The need for parallel machines

- We can build a parallel machine, but there is still a huge amount of work to be done:
  - Decide on and implement an interconnection network for the processors and memory modules
  - Design and implement system software for the hardware
  - Design algorithms and data structures to solve our problem
  - Divide the algorithms and data structures into subproblems
  - Identify the communications and data exchanges
  - Assign subproblems to processors

The need for parallel machines

- Flynn's taxonomy (or how to work more!)
  - SISD (Single Instruction, Single Data): the common, classical machine
  - SIMD (Single Instruction, Multiple Data): the same instructions are carried out simultaneously on multiple data items
  - MIMD (Multiple Instructions, Multiple Data)
  - SPMD (Single Program, Multiple Data): the same version of the program is replicated and run on different data (a later addition to Flynn's original classes)

The need for parallel machines

- We can build one parallel computer, but that would be very expensive, time- and energy-consuming, and hard to maintain.
- We may want to integrate what is available in the labs, to aggregate the available computing resources and reuse ordinary machines:
  - US Department of Energy and the PVM project (Parallel Virtual Machine), from '89

MPI: Message Passing Interface?

- MPI: an Interface
- A message-passing library specification
  - extended message-passing model
  - not a language or compiler specification
  - not a specific implementation or product
- For parallel computers, clusters, and heterogeneous networks
- A rich set of features
- Designed to provide access to advanced parallel hardware for end users, library writers, and tool developers

MPI?

- An international product
- Early vendor systems (Intel's NX, IBM's EUI, TMC's CMMD) were not portable
- Early portable systems (PVM, p4, TCGMSG, Chameleon) were mainly research efforts
  - Were rather limited and lacked vendor support
  - Were not implemented at the most efficient level
- The MPI Forum was organized in 1992 with broad participation by:
  - vendors: IBM, Intel, TMC, SGI, Convex, …
  - users: application scientists and library writers

How big is the MPI library?

- Huge (125 functions)…
- Basic (6 functions)
- But only a subset is needed to program a distributed application

Environments for parallel programming

- Upshot, Jumpshot, and MPE tools
  - http://www.mcs.anl.gov/research/projects/perfvis/software/viewers/
- Pallas VAMPIR
  - http://www.vampir.eu/
- Paragraph
  - http://www.ncsa.uiuc.edu/Apps/MCS/ParaGraph/ParaGraph.html

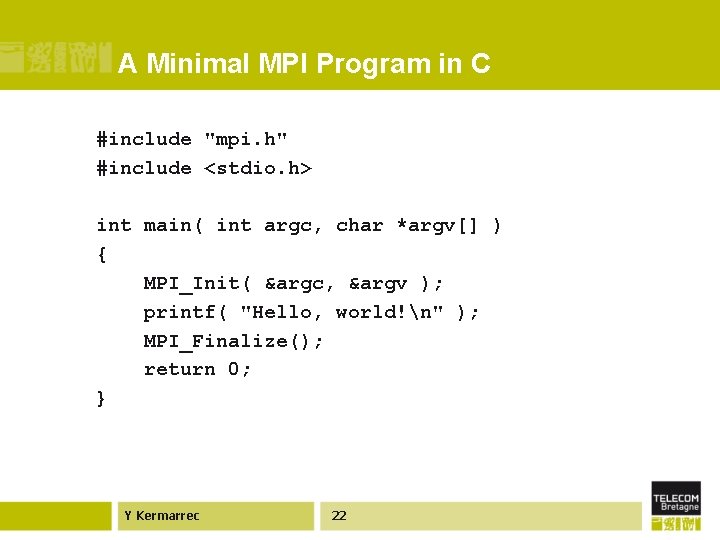

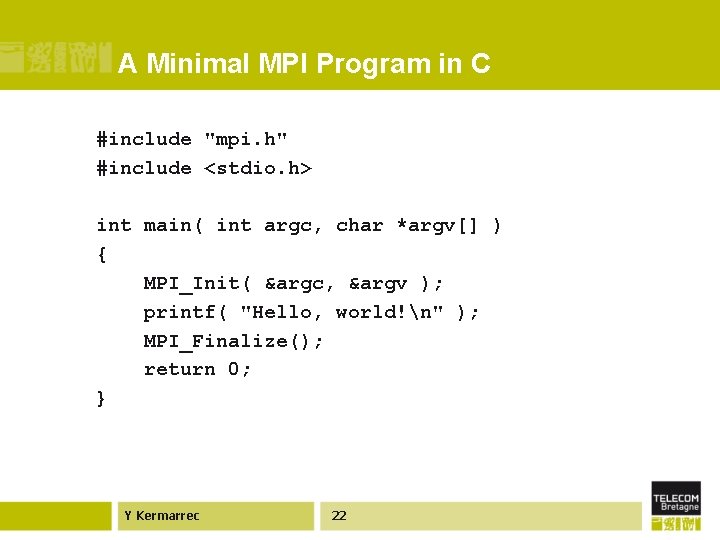

A Minimal MPI Program in C

    #include "mpi.h"
    #include <stdio.h>

    int main( int argc, char *argv[] )
    {
        MPI_Init( &argc, &argv );
        printf( "Hello, world!\n" );
        MPI_Finalize();
        return 0;
    }

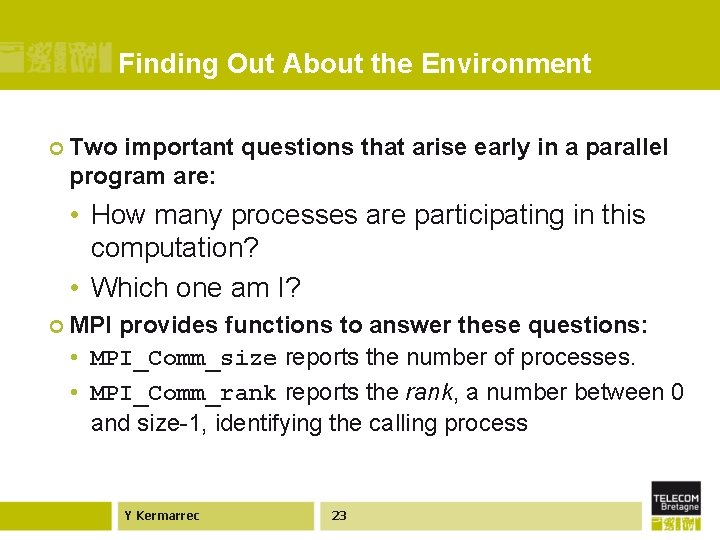

Finding Out About the Environment

- Two important questions that arise early in a parallel program are:
  - How many processes are participating in this computation?
  - Which one am I?
- MPI provides functions to answer these questions:
  - MPI_Comm_size reports the number of processes.
  - MPI_Comm_rank reports the rank, a number between 0 and size-1, identifying the calling process.

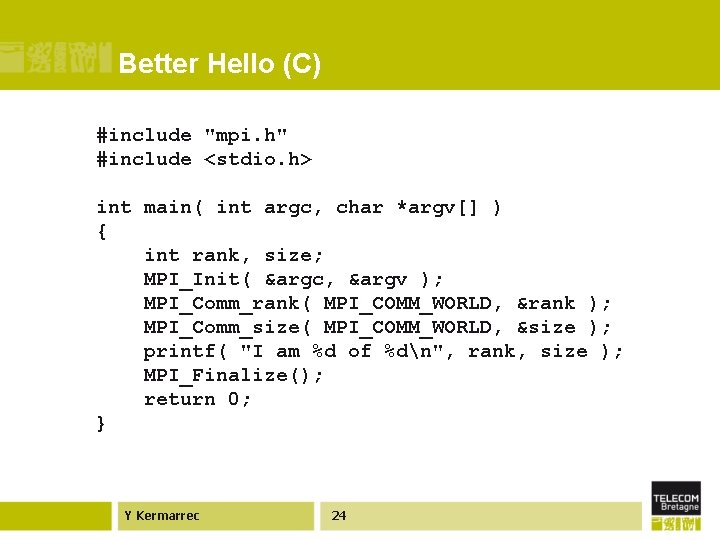

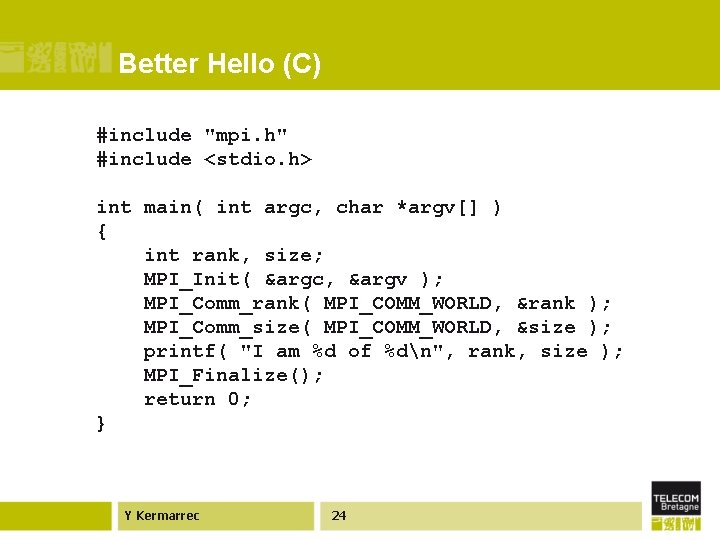

Better Hello (C)

    #include "mpi.h"
    #include <stdio.h>

    int main( int argc, char *argv[] )
    {
        int rank, size;
        MPI_Init( &argc, &argv );
        MPI_Comm_rank( MPI_COMM_WORLD, &rank );
        MPI_Comm_size( MPI_COMM_WORLD, &size );
        printf( "I am %d of %d\n", rank, size );
        MPI_Finalize();
        return 0;
    }

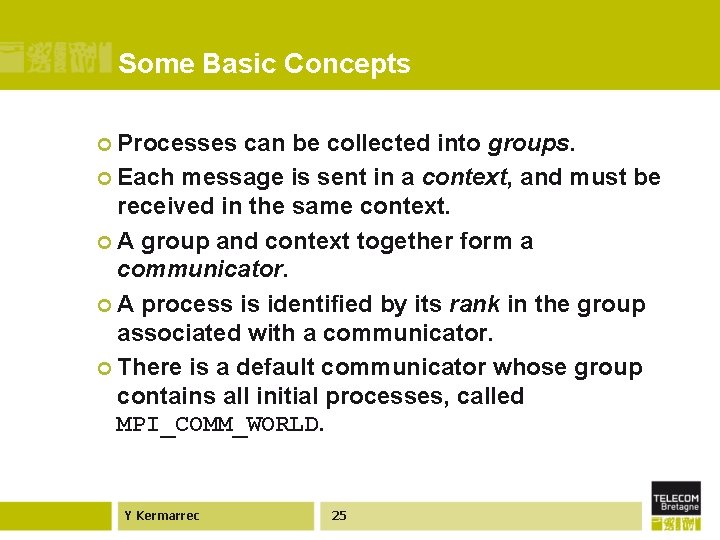

Some Basic Concepts

- Processes can be collected into groups.
- Each message is sent in a context, and must be received in the same context.
- A group and context together form a communicator.
- A process is identified by its rank in the group associated with a communicator.
- There is a default communicator whose group contains all initial processes, called MPI_COMM_WORLD.

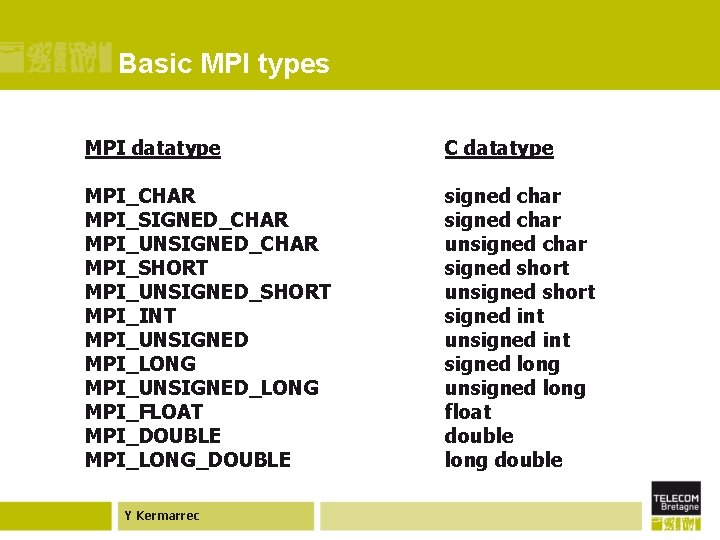

MPI Datatypes

- The data in a message to be sent or received is described by a triple (address, count, datatype), where an MPI datatype is recursively defined as:
  - predefined, corresponding to a data type from the language (e.g., MPI_INT in C, MPI_DOUBLE_PRECISION in Fortran)
  - a contiguous array of MPI datatypes
  - an indexed array of blocks of datatypes
  - an arbitrary structure of datatypes
- There are MPI functions to construct custom datatypes, such as an array of (int, float) pairs, or a row of a matrix stored columnwise.

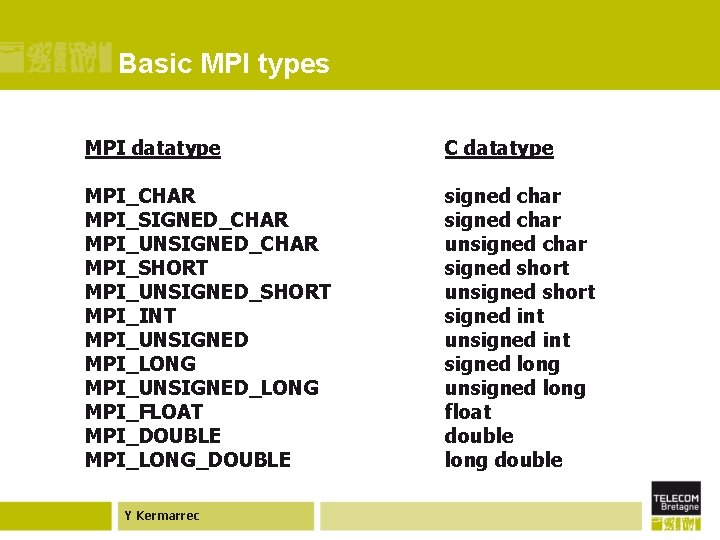

Basic MPI types

| MPI datatype | C datatype |
| --- | --- |
| MPI_CHAR | char |
| MPI_SIGNED_CHAR | signed char |
| MPI_UNSIGNED_CHAR | unsigned char |
| MPI_SHORT | signed short |
| MPI_UNSIGNED_SHORT | unsigned short |
| MPI_INT | signed int |
| MPI_UNSIGNED | unsigned int |
| MPI_LONG | signed long |
| MPI_UNSIGNED_LONG | unsigned long |
| MPI_FLOAT | float |
| MPI_DOUBLE | double |
| MPI_LONG_DOUBLE | long double |

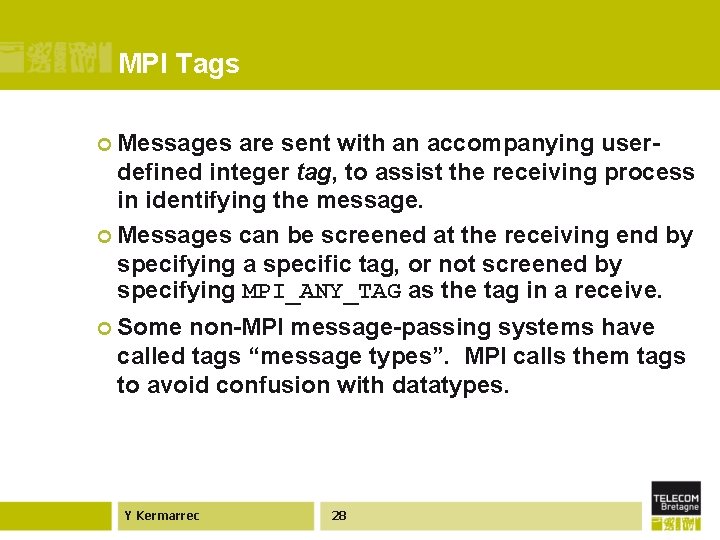

MPI Tags

- Messages are sent with an accompanying user-defined integer tag, to assist the receiving process in identifying the message.
- Messages can be screened at the receiving end by specifying a specific tag, or not screened by specifying MPI_ANY_TAG as the tag in a receive.
- Some non-MPI message-passing systems have called tags "message types". MPI calls them tags to avoid confusion with datatypes.

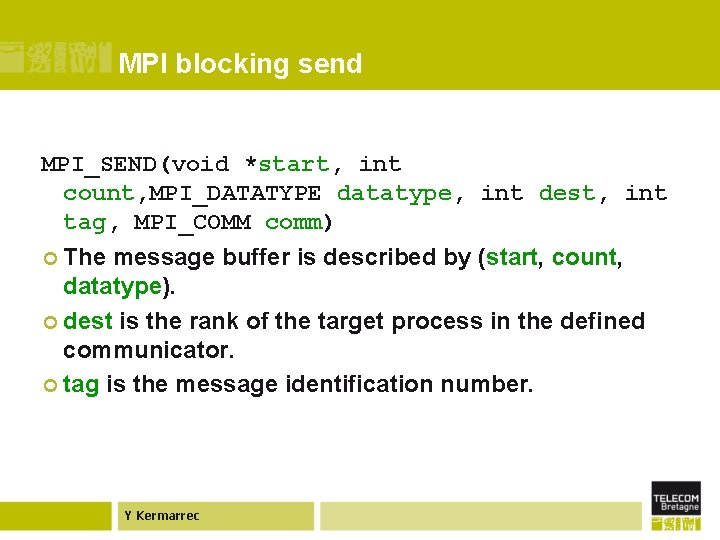

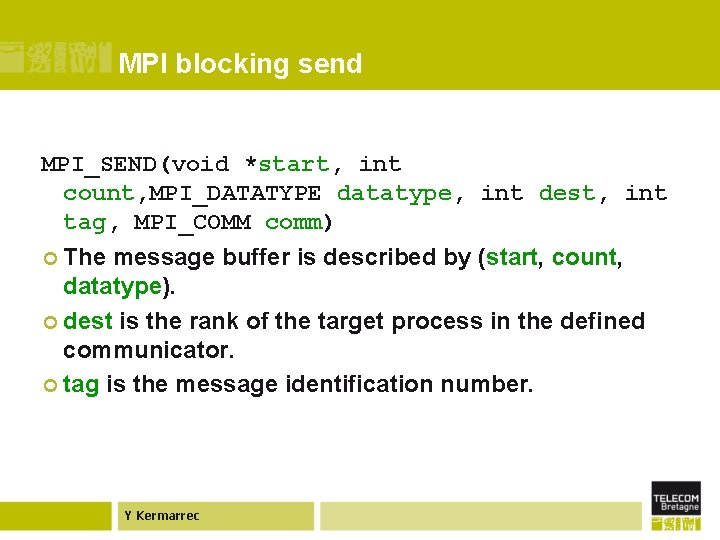

MPI blocking send

    int MPI_Send(void *start, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm)

- The message buffer is described by (start, count, datatype).
- dest is the rank of the target process in the communicator specified by comm.
- tag is the message identification number.

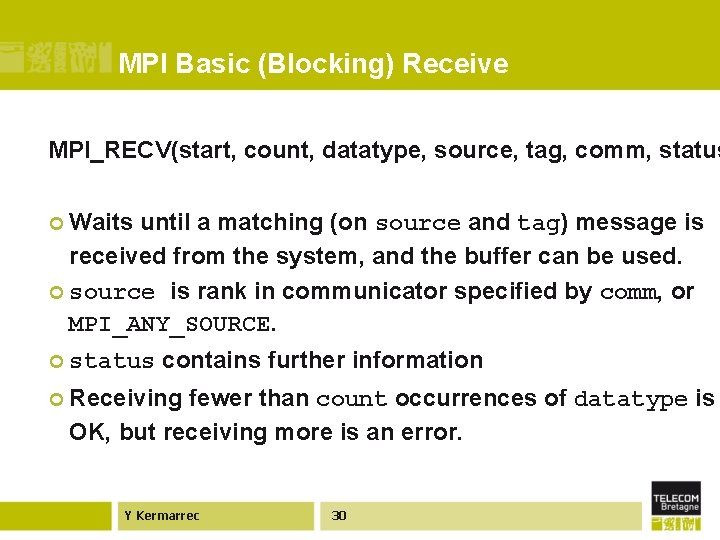

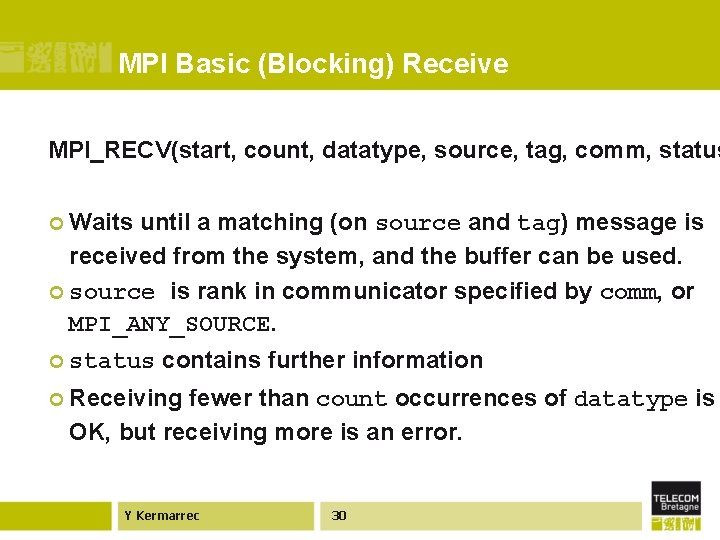

MPI Basic (Blocking) Receive

    MPI_RECV(start, count, datatype, source, tag, comm, status)

- Waits until a matching (on source and tag) message is received from the system, and the buffer can be used.
- source is the rank in the communicator specified by comm, or MPI_ANY_SOURCE.
- status contains further information.
- Receiving fewer than count occurrences of datatype is OK, but receiving more is an error.

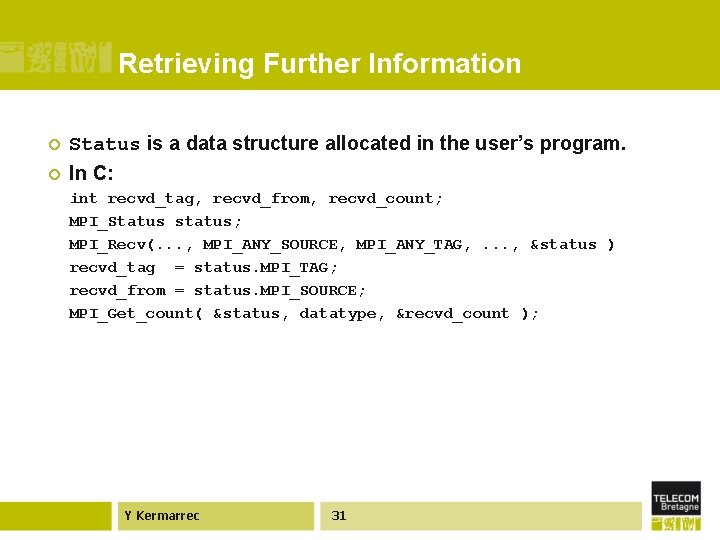

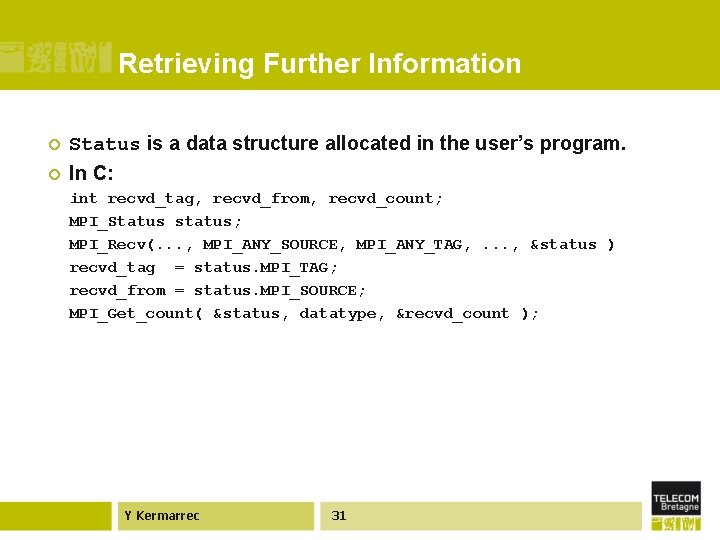

Retrieving Further Information

- Status is a data structure allocated in the user's program.

In C:

    int recvd_tag, recvd_from, recvd_count;
    MPI_Status status;
    MPI_Recv(..., MPI_ANY_SOURCE, MPI_ANY_TAG, ..., &status );
    recvd_tag = status.MPI_TAG;
    recvd_from = status.MPI_SOURCE;
    MPI_Get_count( &status, datatype, &recvd_count );

More info

- A receive operation may accept messages from an arbitrary sender, but a send operation must specify a unique receiver.
- Source equals destination is allowed, that is, a process can send a message to itself.

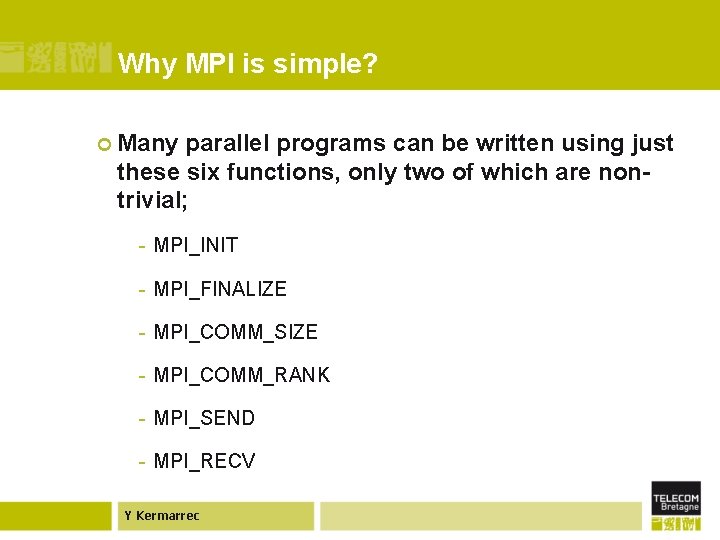

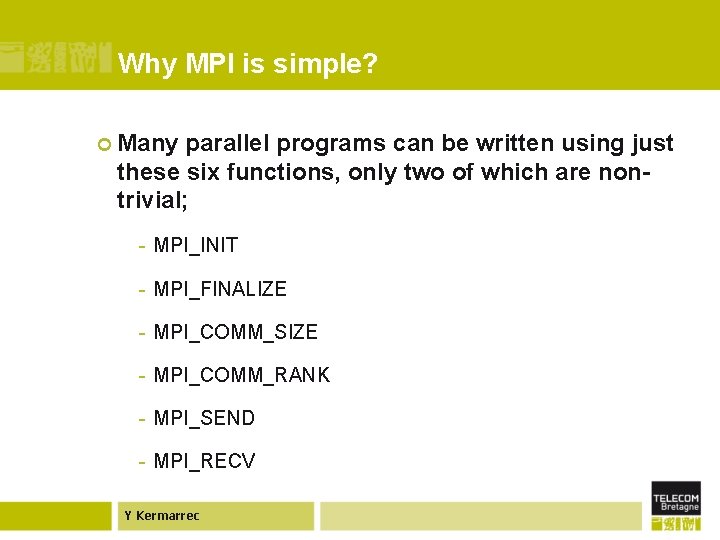

Why is MPI simple?

- Many parallel programs can be written using just these six functions, only two of which are nontrivial:
  - MPI_INIT
  - MPI_FINALIZE
  - MPI_COMM_SIZE
  - MPI_COMM_RANK
  - MPI_SEND
  - MPI_RECV

![Simple full example include stdio h include mpi h int mainint argc char argv Simple full example #include <stdio. h> #include <mpi. h> int main(int argc, char *argv[])](https://slidetodoc.com/presentation_image_h/050800e89fccfc0f2f07b7bdadf8849f/image-34.jpg)

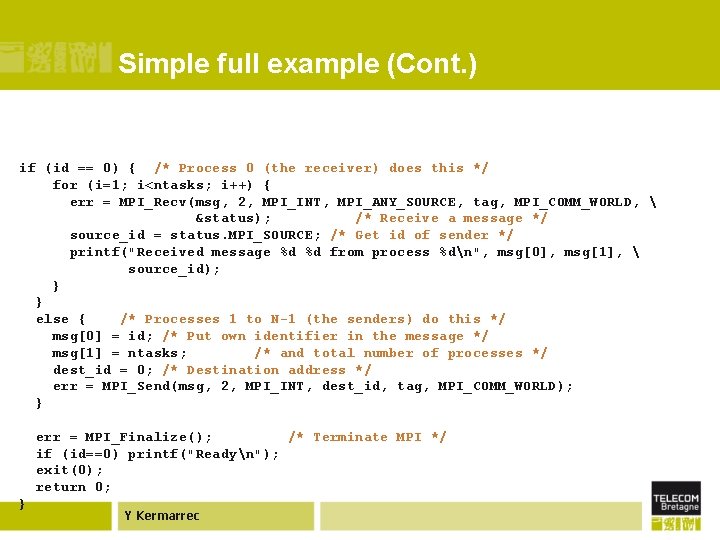

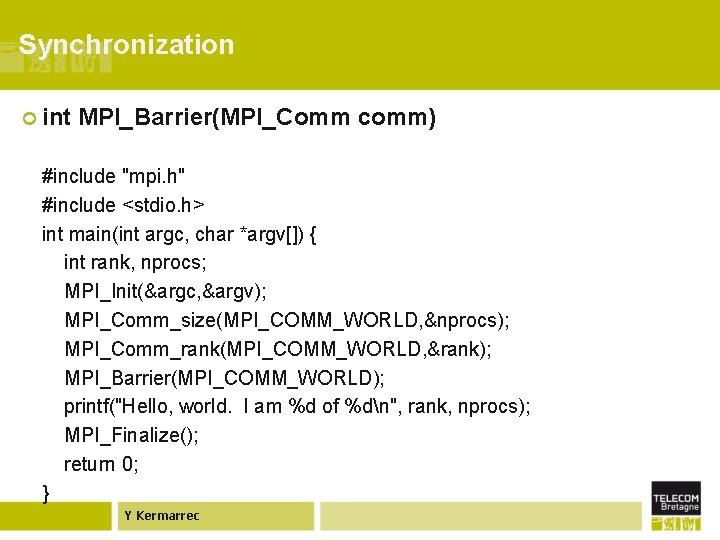

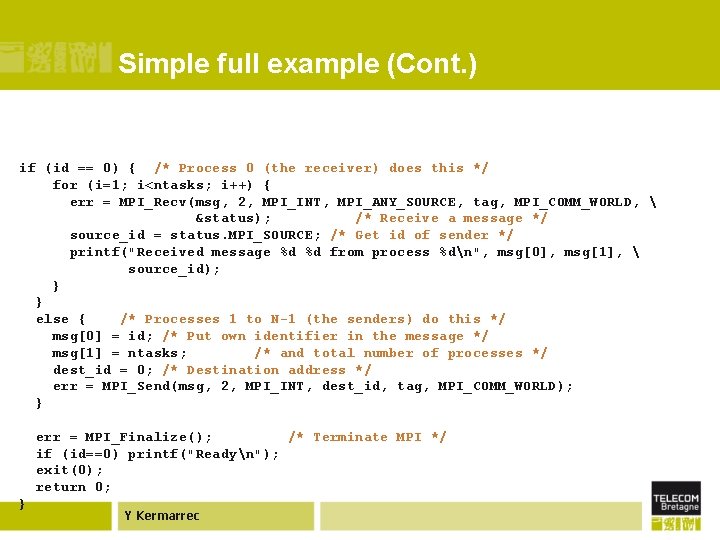

Simple full example

    #include <stdio.h>
    #include <stdlib.h>   /* for exit() */
    #include <mpi.h>

    int main(int argc, char *argv[])
    {
        const int tag = 42;               /* Message tag */
        int id, ntasks, source_id, dest_id, err, i;
        MPI_Status status;
        int msg[2];                       /* Message array */

        err = MPI_Init(&argc, &argv);     /* Initialize MPI */
        if (err != MPI_SUCCESS) {
            printf("MPI initialization failed!\n");
            exit(1);
        }
        err = MPI_Comm_size(MPI_COMM_WORLD, &ntasks);  /* Get nr of tasks */
        err = MPI_Comm_rank(MPI_COMM_WORLD, &id);      /* Get id of this process */
        if (ntasks < 2) {
            printf("You have to use at least 2 processors to run this program\n");
            MPI_Finalize();               /* Quit if there is only one processor */
            exit(0);
        }

        if (id == 0) {                    /* Process 0 (the receiver) does this */
            for (i = 1; i < ntasks; i++) {
                err = MPI_Recv(msg, 2, MPI_INT, MPI_ANY_SOURCE, tag,
                               MPI_COMM_WORLD, &status);  /* Receive a message */
                source_id = status.MPI_SOURCE;            /* Get id of sender */
                printf("Received message %d %d from process %d\n",
                       msg[0], msg[1], source_id);
            }
        } else {                          /* Processes 1 to N-1 (the senders) do this */
            msg[0] = id;                  /* Put own identifier in the message */
            msg[1] = ntasks;              /* and total number of processes */
            dest_id = 0;                  /* Destination address */
            err = MPI_Send(msg, 2, MPI_INT, dest_id, tag, MPI_COMM_WORLD);
        }

        err = MPI_Finalize();             /* Terminate MPI */
        if (id == 0) printf("Ready\n");
        return 0;
    }

Part 2 - Advanced features of MPI

- Collective Communication

Collective communications

- A single call handles the communication between all the processes in a communicator
- There are 3 types of collective communications
  - Data movement (e.g. MPI_Bcast)
  - Reduction (e.g. MPI_Reduce)
  - Synchronization (e.g. MPI_Barrier)

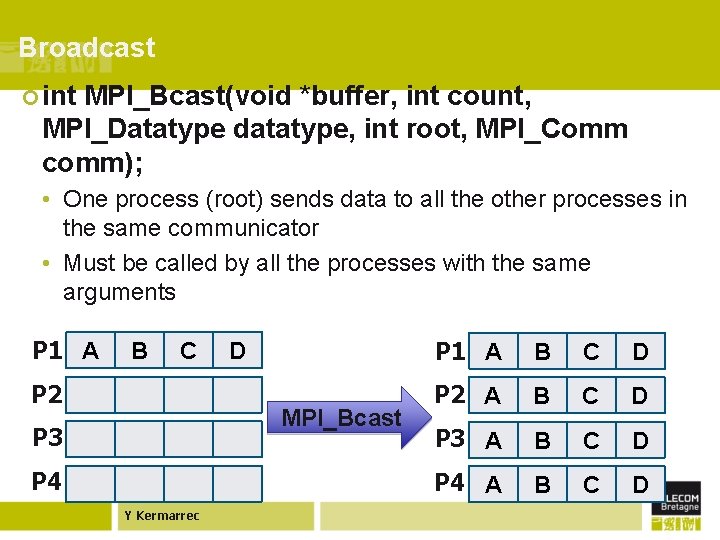

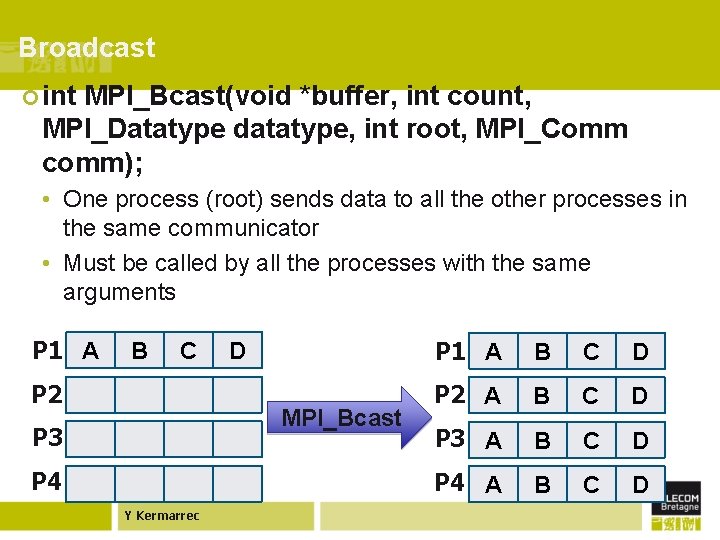

Broadcast

    int MPI_Bcast(void *buffer, int count, MPI_Datatype datatype, int root, MPI_Comm comm);

- One process (root) sends data to all the other processes in the same communicator
- Must be called by all the processes with the same arguments

Diagram: before the call, P1 holds A B C D and P2, P3, P4 hold nothing; after MPI_Bcast, P1, P2, P3 and P4 each hold A B C D.

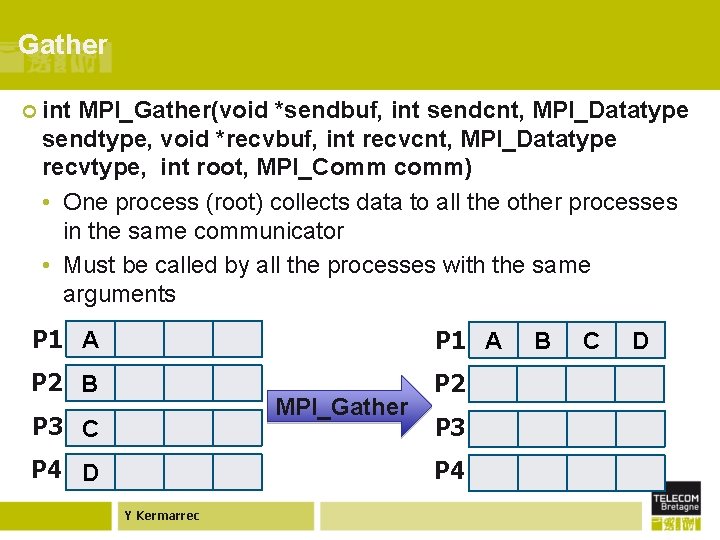

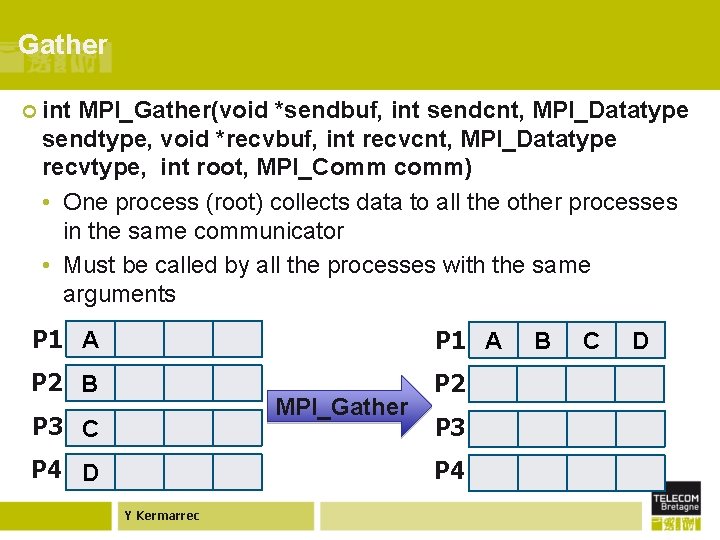

Gather

    int MPI_Gather(void *sendbuf, int sendcnt, MPI_Datatype sendtype, void *recvbuf, int recvcnt, MPI_Datatype recvtype, int root, MPI_Comm comm)

- One process (root) collects data from all the other processes in the same communicator
- Must be called by all the processes with the same arguments

Diagram: before the call, P1 holds A, P2 holds B, P3 holds C and P4 holds D; after MPI_Gather, the root P1 holds A B C D.

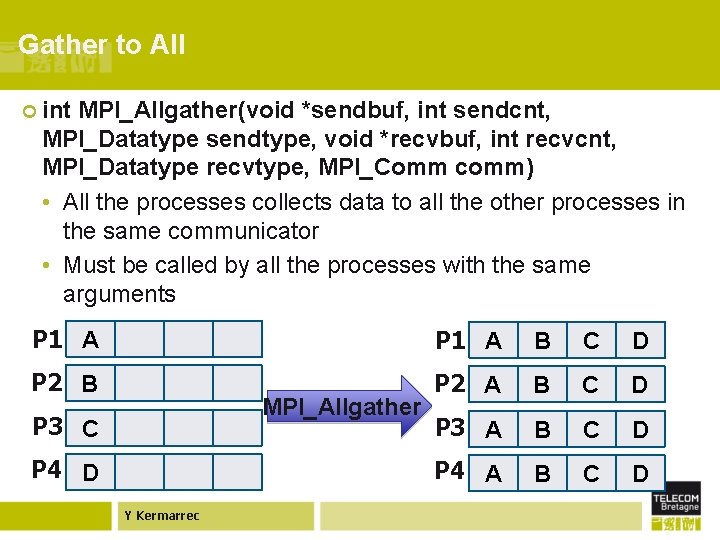

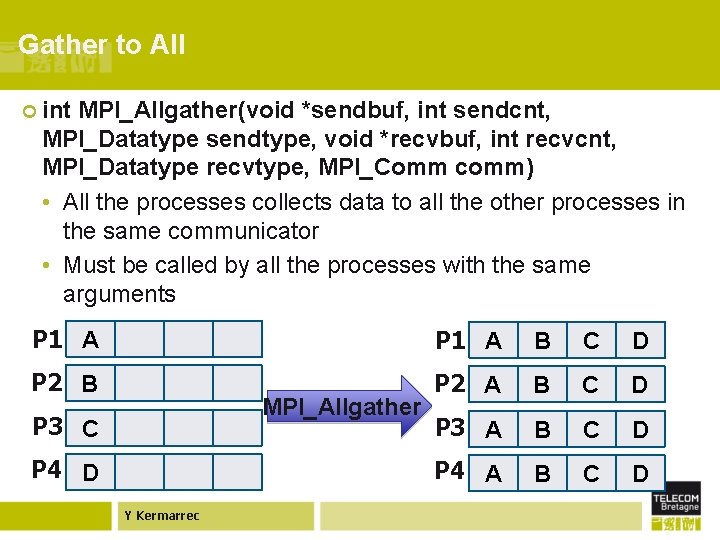

Gather to All

    int MPI_Allgather(void *sendbuf, int sendcnt, MPI_Datatype sendtype, void *recvbuf, int recvcnt, MPI_Datatype recvtype, MPI_Comm comm)

- Every process collects data from all the other processes in the same communicator
- Must be called by all the processes with the same arguments

Diagram: before the call, P1 holds A, P2 holds B, P3 holds C and P4 holds D; after MPI_Allgather, P1, P2, P3 and P4 each hold A B C D.

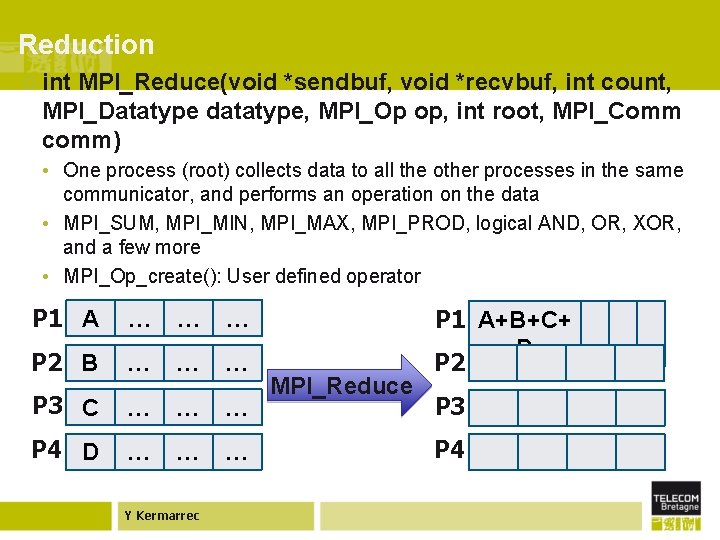

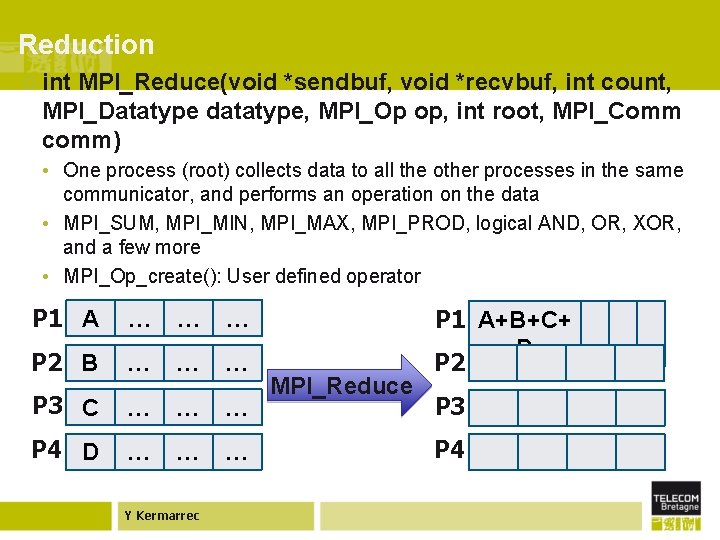

Reduction

    int MPI_Reduce(void *sendbuf, void *recvbuf, int count, MPI_Datatype datatype, MPI_Op op, int root, MPI_Comm comm)

- One process (root) collects data from all the other processes in the same communicator, and performs an operation on the data
- MPI_SUM, MPI_MIN, MPI_MAX, MPI_PROD, logical AND, OR, XOR, and a few more
- MPI_Op_create(): user-defined operator

Diagram: before the call, P1 holds A, P2 holds B, P3 holds C and P4 holds D; after MPI_Reduce, the root P1 holds A+B+C+D.

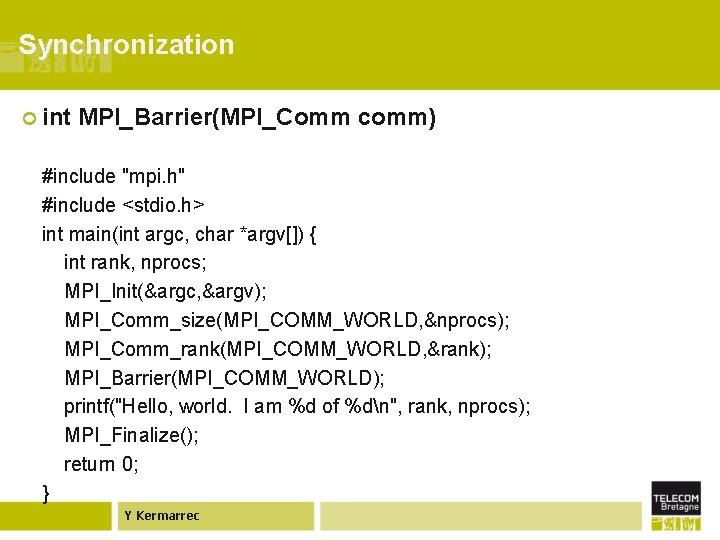

Synchronization

    int MPI_Barrier(MPI_Comm comm)

Example:

    #include "mpi.h"
    #include <stdio.h>

    int main(int argc, char *argv[])
    {
        int rank, nprocs;
        MPI_Init(&argc, &argv);
        MPI_Comm_size(MPI_COMM_WORLD, &nprocs);
        MPI_Comm_rank(MPI_COMM_WORLD, &rank);
        MPI_Barrier(MPI_COMM_WORLD);
        printf("Hello, world. I am %d of %d\n", rank, nprocs);
        MPI_Finalize();
        return 0;
    }

Examples

- Master and slaves

For more functions…

- http://www.mpi-forum.org
- http://www.llnl.gov/computing/tutorials/mpi/
- http://www.nersc.gov/nusers/help/tutorials/mpi/intro/
- http://www-unix.mcs.anl.gov/mpi/tutorial/
- MPICH (http://www-unix.mcs.anl.gov/mpich/)
- Open MPI (http://www.open-mpi.org/)
- http://w3.pppl.gov/~ethier/MPI_OpenMP_2011.pdf