- Slides: 44

Motion Detection And Analysis
Michael Knowles
Tuesday 13th January 2004

Introduction
- Brief discussion of motion analysis and its applications
- Static scene object tracking
- Motion compensation for moving-camera sequences

Applications of Motion Tracking
- Control applications
  - Object avoidance
  - Automatic guidance
  - Head tracking for video conferencing
- Surveillance/monitoring applications
  - Security cameras
  - Traffic monitoring
  - People counting

Two Approaches
- Optical flow
  - Compute motion within a region or the frame as a whole
- Object-based tracking
  - Detect objects within a scene
  - Track objects across a number of frames

My Work
- Started by tracking moving objects in a static scene
- Develop a statistical model of the background
- Mark all regions that do not conform to the model as moving objects

My Work
- Now working on object detection and classification from a moving camera
- Current focus is motion-compensated background filtering
- Determine the motion of the background and apply it to the model

Static Scene Object Detection and Tracking
- Model the background and subtract to obtain an object mask
- Filter to remove noise
- Group adjacent pixels to obtain objects
- Track objects between frames to develop trajectories

Background Modelling

Background Model

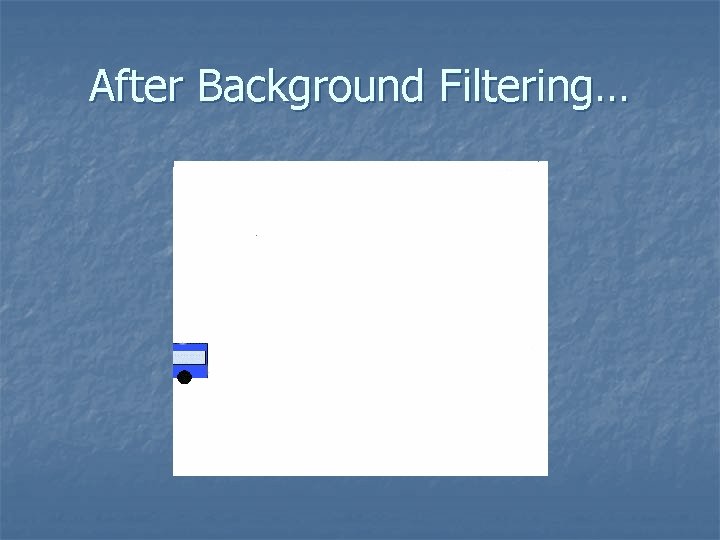

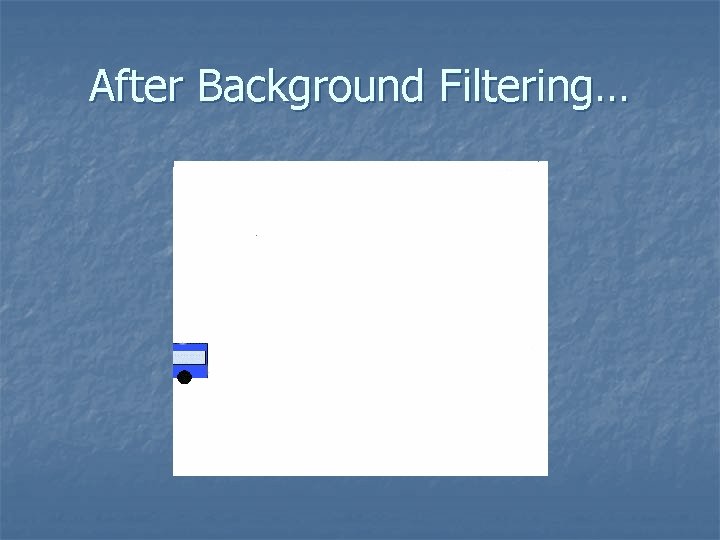

After Background Filtering…

Background Filtering
- My algorithm is based on: “Learning Patterns of Activity using Real-Time Tracking”, C. Stauffer and W. E. L. Grimson, IEEE Trans. on Pattern Analysis and Machine Intelligence, August 2000
- The history of each pixel is modelled by a sequence of Gaussian distributions

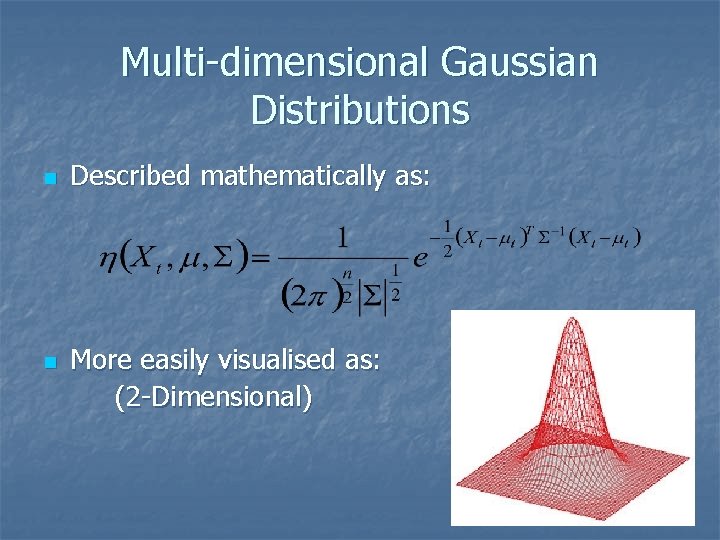

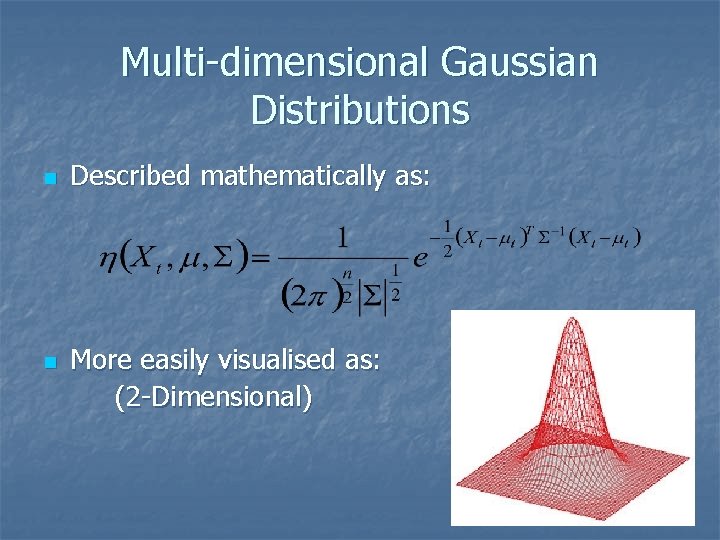

Multi-dimensional Gaussian Distributions
- Described mathematically as:
- More easily visualised as (2-dimensional):
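For reference, the standard density of a d-dimensional Gaussian is given below. The symbols (x for the pixel value vector, μ for the mean, Σ for the covariance matrix) are an assumption chosen to follow common usage.

$$
\eta(\mathbf{x};\, \boldsymbol{\mu}, \Sigma) = \frac{1}{(2\pi)^{d/2}\, |\Sigma|^{1/2}} \exp\!\left( -\tfrac{1}{2} (\mathbf{x} - \boldsymbol{\mu})^{\mathsf{T}} \Sigma^{-1} (\mathbf{x} - \boldsymbol{\mu}) \right)
$$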

Simplifying…
- Calculating the full Gaussian for every pixel in the frame is very, very slow
- Therefore I use a linear approximation

How do we use this to represent a pixel?
- Stauffer and Grimson suggest using a fixed number of Gaussians for each pixel
- This was found to be inefficient, so the number of Gaussians used to represent each pixel is variable

Weights
- Each Gaussian carries a weight value
- This weight is a measure of how well the Gaussian represents the history of the pixel
- If a pixel is found to match a Gaussian the weight is increased, and vice versa
- If the weight drops below a threshold then that Gaussian is eliminated
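A minimal sketch of this weight bookkeeping, assuming a running-average update similar in form to the one in the cited paper. The function name, the dictionary layout, and the `rate` and `min_weight` values are illustrative assumptions, not the values used in this work.

```python
def update_weights(gaussians, matched_index, rate=0.05, min_weight=0.01):
    """Raise the matched Gaussian's weight, decay the others, drop any below min_weight."""
    kept = []
    for i, g in enumerate(gaussians):
        target = 1.0 if i == matched_index else 0.0
        # Running average: moves towards 1 on a match and towards 0 otherwise.
        w = (1.0 - rate) * g["weight"] + rate * target
        if w >= min_weight:
            kept.append({**g, "weight": w})
    return kept
```

Passing `matched_index=None` decays every weight, which suits a frame in which no existing Gaussian matched.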

Matching
- Each incoming pixel value must be checked against all the Gaussians at that location
- If a match is found then the value of that Gaussian is updated
- If there is no match then a new Gaussian is created with a low weight
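The matching step could be sketched as follows for a single-channel pixel. The match test (within `k` standard deviations of the mean, with `k = 2.5`) and the initial variance and weight are assumptions for illustration; the slide does not state the exact criterion used.

```python
import math

def find_match(value, gaussians, k=2.5):
    """Return the index of the first Gaussian within k standard deviations of value, else None."""
    for i, g in enumerate(gaussians):
        if abs(value - g["mean"]) <= k * math.sqrt(g["var"]):
            return i
    return None

def match_or_create(value, gaussians, init_var=100.0, init_weight=0.05):
    """Check value against every Gaussian; append a new low-weight one if none match.

    Returns (index, matched) where matched is False when a new Gaussian was created.
    """
    i = find_match(value, gaussians)
    if i is None:
        gaussians.append({"mean": float(value), "var": init_var, "weight": init_weight})
        return len(gaussians) - 1, False
    return i, True
```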

Updating
- If a Gaussian matches a pixel, then the value of that Gaussian is updated using the current value
- The rate of learning is greater in the early stages when the model is being formed
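One way to get a learning rate that is high early on and then settles is to use 1/n until it falls to a floor value. This schedule, the `count` field and `min_rate` are assumptions made for the sketch; the slide does not say which schedule is actually used.

```python
def update_gaussian(g, value, min_rate=0.01):
    """Blend the current pixel value into a matched Gaussian's mean and variance."""
    n = g.get("count", 1) + 1          # how many values this Gaussian has absorbed
    g["count"] = n
    rate = max(1.0 / n, min_rate)      # large while the model forms, then constant
    diff = value - g["mean"]
    g["mean"] += rate * diff
    g["var"] = (1.0 - rate) * g["var"] + rate * diff * diff
    return rate
```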

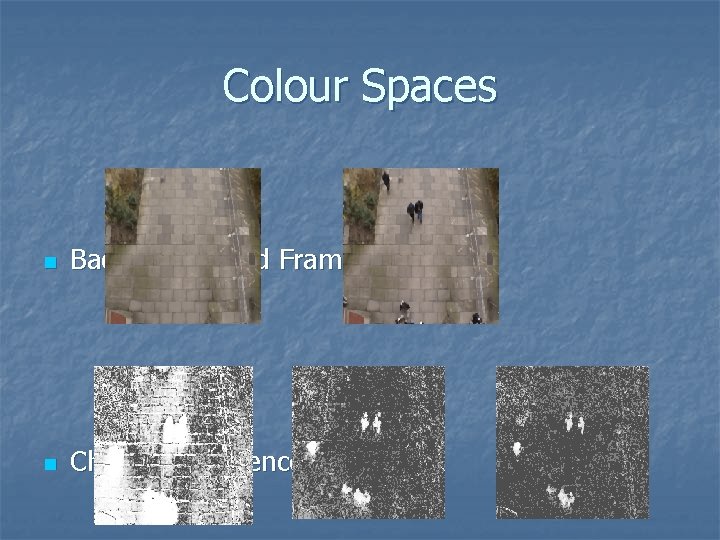

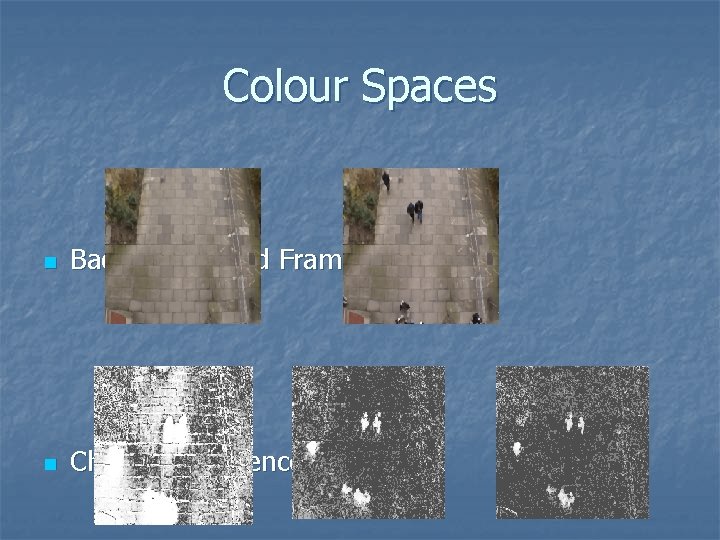

Colour Spaces
- If RGB is used then the background filtering is sensitive to shadows
- The use of a colour space that separates intensity information from chromatic information overcomes this
- For this reason the YUV colour space is used
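A small sketch of the conversion, assuming the common BT.601 analogue YUV weights (the slide does not say which YUV variant is used). Y carries intensity; U and V carry the chromatic information.

```python
def rgb_to_yuv(r, g, b):
    """Convert one RGB pixel to (Y, U, V) using BT.601 analogue weights."""
    y = 0.299 * r + 0.587 * g + 0.114 * b   # intensity
    u = 0.492 * (b - y)                     # blue-difference chroma
    v = 0.877 * (r - y)                     # red-difference chroma
    return y, u, v
```

As a hypothetical example, darkening the pixel (120, 100, 80) by 20% to (96, 80, 64) shifts Y by about 21 levels but U and V by under 3 each, so a shadow shows up mainly in the intensity channel.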

Colour Spaces
- Background and frame:
- Channel differences:

Isolate Objects
- Adjacent object pixels must be grouped to form objects
- A connected components algorithm is used
- The result is a list of objects with their positions and sizes
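A minimal connected-components sketch over a binary object mask, using a breadth-first flood fill with 4-connectivity. The connectivity choice and the output field names are assumptions; the slide only says that a connected components algorithm is used.

```python
from collections import deque

def connected_components(mask):
    """Group 4-connected foreground pixels; return one bounding box and area per object."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    objects = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Flood-fill outwards from this unvisited foreground pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            top, bottom, left, right, area = r, r, c, c, 0
            while queue:
                y, x = queue.popleft()
                area += 1
                top, bottom = min(top, y), max(bottom, y)
                left, right = min(left, x), max(right, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            objects.append({"x": left, "y": top,
                            "width": right - left + 1,
                            "height": bottom - top + 1,
                            "area": area})
    return objects
```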

Track Objects
- Objects are tracked from frame to frame using:
  - Location
  - Direction of motion
  - Size
  - Colour
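One possible way to combine these four cues is a weighted cost between each existing track and each new detection, followed by a greedy pairing. The cost terms, the weights, `max_cost` and the greedy strategy are all assumptions for illustration; the slide does not describe how the cues are combined.

```python
import math

def match_cost(track, det, w_pos=1.0, w_size=0.5, w_colour=0.5):
    """Weighted dissimilarity between a track's predicted state and a detection."""
    # Direction of motion: predict the new location from the last displacement.
    px, py = track["x"] + track["dx"], track["y"] + track["dy"]
    pos = math.hypot(det["x"] - px, det["y"] - py)
    size = abs(det["area"] - track["area"]) / max(track["area"], 1)
    colour = math.dist(det["colour"], track["colour"])
    return w_pos * pos + w_size * size + w_colour * colour

def associate(tracks, detections, max_cost=50.0):
    """Greedily pair each track with its cheapest unused detection."""
    pairs, used = [], set()
    for ti, track in enumerate(tracks):
        candidates = [(match_cost(track, d), di)
                      for di, d in enumerate(detections) if di not in used]
        if candidates:
            cost, di = min(candidates)
            if cost <= max_cost:
                pairs.append((ti, di))
                used.add(di)
    return pairs
```

Tracks left unpaired can be treated as lost, and unpaired detections as new objects.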

The Story So Far…
- Basic principle of background filtering
- Stages necessary in maintaining a background model
- How it is applied to tracking

Moving Camera Sequences
- Basic idea is the same as before
  - Detect and track objects moving within a scene
- BUT this time the camera is not stationary, so everything is moving

Motion Segmentation
- Use a motion estimation algorithm on the whole frame
- Iteratively apply the same algorithm to areas that do not conform to this motion to find all motions present
- Problem: this is very, very slow

Motion Compensated Background Filtering
- Basic principle
  - Develop and maintain the background model as previously
  - Determine global motion and use this to update the model between frames
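To illustrate the idea of moving the model with the background, here is a sketch that assumes the simplest possible global motion, a whole-pixel translation. `shift_model`, `dx`, `dy` and `fill` are hypothetical names; real camera motion generally needs a richer model and sub-pixel resampling.

```python
def shift_model(model, dx, dy, fill=None):
    """Shift a 2-D background model by (dx, dy) pixels; uncovered cells get `fill`."""
    rows, cols = len(model), len(model[0])
    out = [[fill] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            sx, sy = x - dx, y - dy      # where this cell's content sat before the motion
            if 0 <= sx < cols and 0 <= sy < rows:
                out[y][x] = model[sy][sx]
    return out
```

Cells left at `fill` correspond to newly revealed scene areas for which no background history exists yet.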

Advantages
- Only one motion model has to be found
  - This is therefore much faster
- Estimating motion for small regions can be unreliable
- Not as easy as it sounds though…

Motion Models
- Trying to determine the exact optical flow at every point in the frame would be ridiculously slow
- Therefore we try to fit a parametric model to the motion

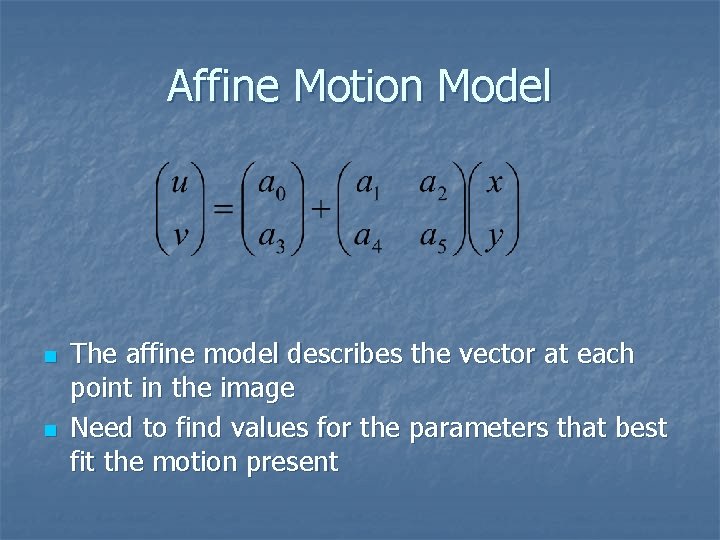

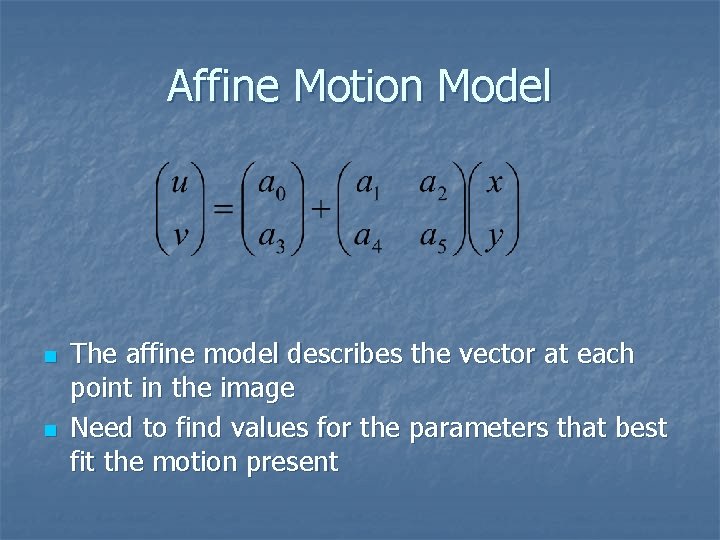

Affine Motion Model
- The affine model describes the motion vector at each point in the image
- Need to find values for the parameters that best fit the motion present
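A common six-parameter form of the affine model is shown below. The parameter names a_1 to a_6 and their ordering are an assumption; (u, v) is the motion vector at image position (x, y).

$$
u(x, y) = a_1 + a_2 x + a_3 y, \qquad v(x, y) = a_4 + a_5 x + a_6 y
$$

Here a_1 and a_4 give a pure translation, while the remaining four parameters cover rotation, scaling and shear.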

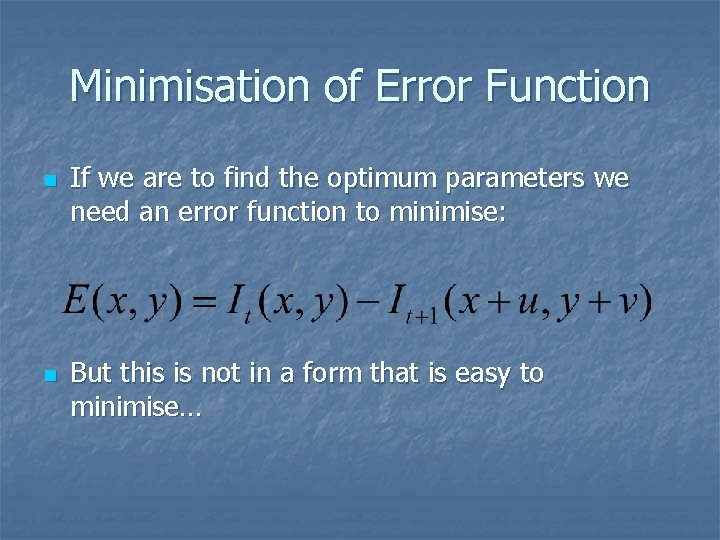

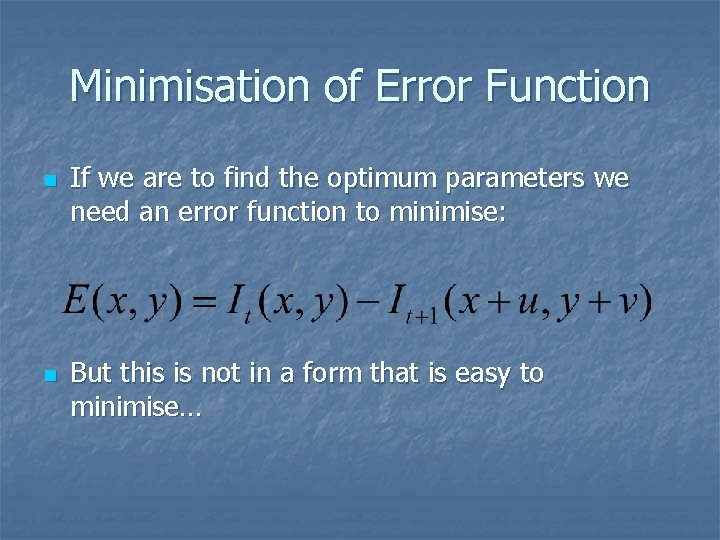

Minimisation of Error Function
- If we are to find the optimum parameters we need an error function to minimise:
- But this is not in a form that is easy to minimise…
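A typical error function of this kind is the sum of squared intensity differences between a frame and the next frame sampled at the displaced positions. The notation is an assumption: I(x, y, t) is the image intensity, (u, v) the motion vector produced by the parameter vector a, and the sum runs over all pixels in the region.

$$
E(\mathbf{a}) = \sum_{(x, y)} \bigl[ I(x + u,\; y + v,\; t + 1) - I(x, y, t) \bigr]^2
$$

The parameters appear inside the image function I, which is why this form is awkward to minimise directly.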

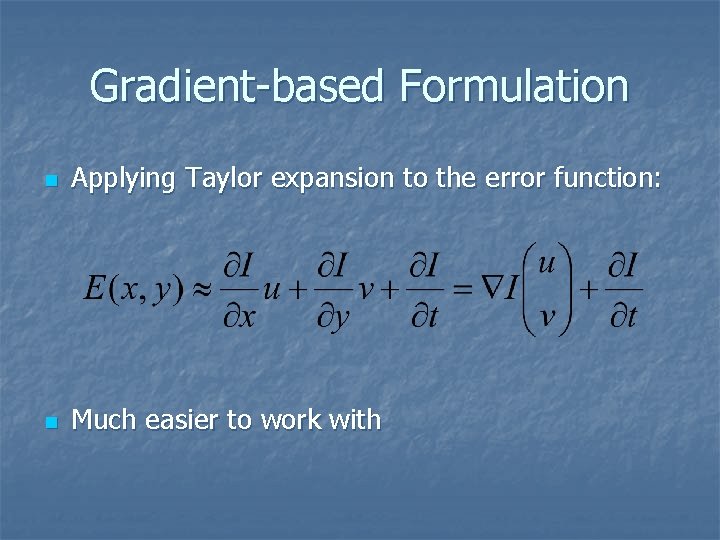

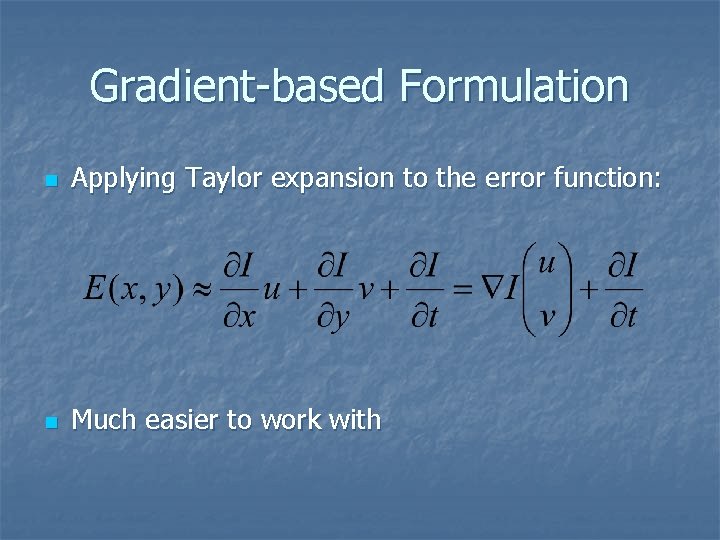

Gradient-based Formulation
- Applying Taylor expansion to the error function:
- Much easier to work with
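As a worked step, assuming the sum-of-squared-differences error and small motions: a first-order Taylor expansion of the displaced intensity gives

$$
I(x + u,\; y + v,\; t + 1) \approx I(x, y, t) + I_x u + I_y v + I_t
$$

so the error becomes

$$
E(\mathbf{a}) \approx \sum_{(x, y)} \bigl( I_x u + I_y v + I_t \bigr)^2
$$

where I_x, I_y and I_t are the spatial and temporal image derivatives (assumed notation). The motion (u, v) now enters linearly rather than inside I.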

Gradient-descent Minimisation
- If we know how the error changes with respect to the parameters, we can home in on the minimum error
- Various methods built on this principle:
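The simplest method of this kind is plain steepest descent with a fixed step, sketched here on a generic parameter vector. `grad`, `step` and `iterations` are illustrative names and values, and this is not necessarily the scheme used in this work.

```python
def gradient_descent(grad, start, step=0.1, iterations=100):
    """Repeatedly move the parameters a small step against the error gradient."""
    params = list(start)
    for _ in range(iterations):
        g = grad(params)
        params = [p - step * gi for p, gi in zip(params, g)]
    return params

# Usage: minimise E(a, b) = (a - 3)^2 + (b + 1)^2, whose gradient is known in closed form.
best = gradient_descent(lambda p: [2 * (p[0] - 3), 2 * (p[1] + 1)], [0.0, 0.0])
```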

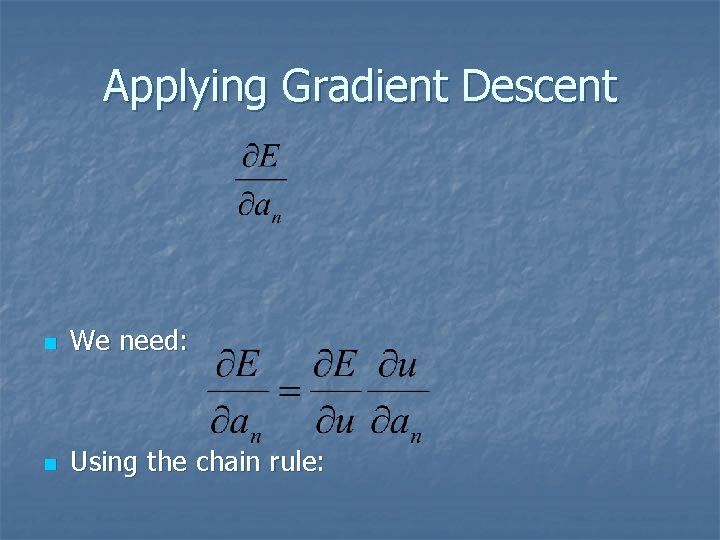

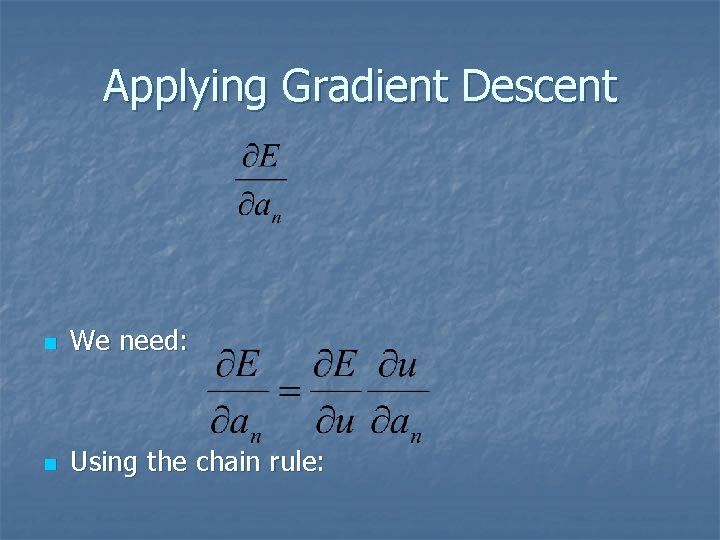

Applying Gradient Descent
- We need:
- Using the chain rule:
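Under the assumptions of a linearised squared error with residual r = I_x u + I_y v + I_t and an affine model u = a_1 + a_2 x + a_3 y, v = a_4 + a_5 x + a_6 y (assumed notation), the required derivatives follow from the chain rule:

$$
\frac{\partial E}{\partial a_i} = \sum_{(x, y)} 2\, r \left( I_x \frac{\partial u}{\partial a_i} + I_y \frac{\partial v}{\partial a_i} \right)
$$

with

$$
\frac{\partial u}{\partial a_1} = 1,\quad \frac{\partial u}{\partial a_2} = x,\quad \frac{\partial u}{\partial a_3} = y,\quad \frac{\partial v}{\partial a_4} = 1,\quad \frac{\partial v}{\partial a_5} = x,\quad \frac{\partial v}{\partial a_6} = y
$$

and all other partial derivatives of u and v equal to zero.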

Robust Estimation
- What about points that do not belong to the motion we are estimating?
- These will pull the solution away from the true one

Robust Estimators
- Robust estimators decrease the effect of outliers on estimation
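As an illustration, the Geman-McClure function is one widely used robust estimator; whether it is the one used here is an assumption. It is roughly quadratic for small residuals but levels off for large ones, so outliers contribute little. Its derivative with respect to the residual is the influence function.

```python
def geman_mcclure(r, sigma):
    """Robust error rho(r): roughly quadratic near zero, saturating towards 1 for large |r|."""
    return r * r / (sigma * sigma + r * r)

def influence(r, sigma):
    """Derivative of rho with respect to r: how strongly a residual pulls the fit."""
    s2 = sigma * sigma
    return 2.0 * r * s2 / (s2 + r * r) ** 2
```

With `sigma = 1`, a residual of 1 has influence 0.5 while a residual of 100 has influence of about 2e-6, which is how gross outliers are effectively ignored.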

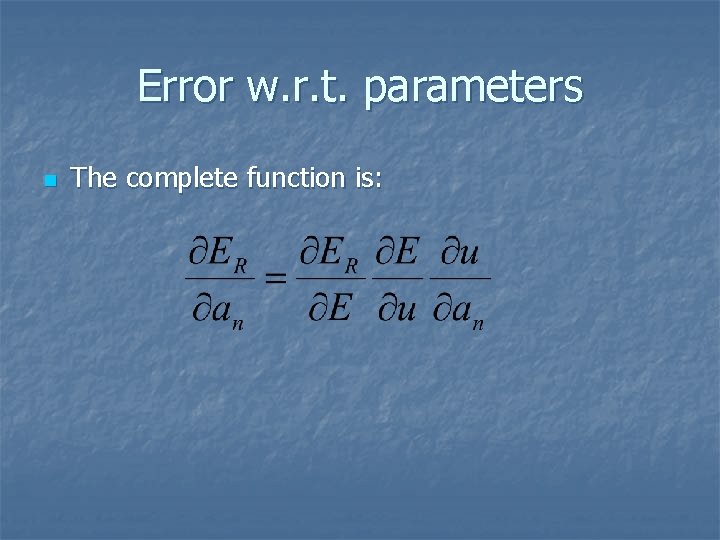

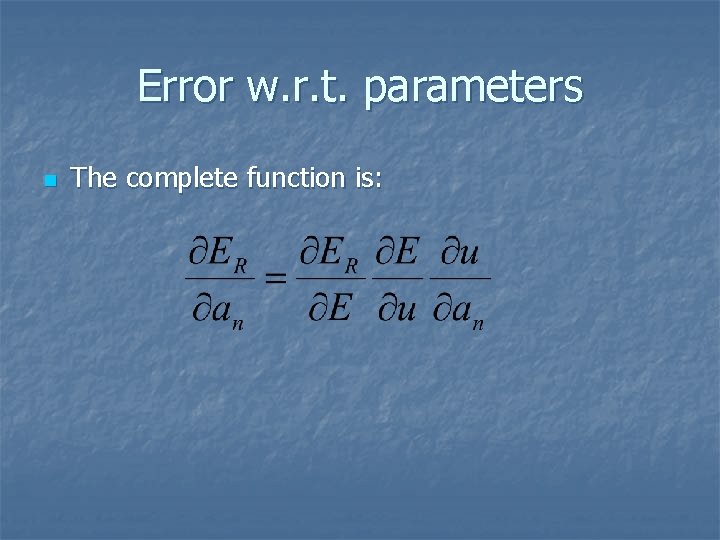

Error w.r.t. Parameters
- The complete function is:
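In assumed notation, with a robust estimator ρ of scale σ applied to the linearised residual r = I_x u + I_y v + I_t, the error and its derivative with respect to each motion parameter a_i take the form:

$$
E(\mathbf{a}) = \sum_{(x, y)} \rho(r, \sigma), \qquad
\frac{\partial E}{\partial a_i} = \sum_{(x, y)} \psi(r, \sigma) \left( I_x \frac{\partial u}{\partial a_i} + I_y \frac{\partial v}{\partial a_i} \right), \qquad
\psi(r, \sigma) = \frac{\partial \rho}{\partial r}
$$

Choosing ρ(r) = r² gives ψ = 2r, which recovers the ordinary least-squares gradient.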

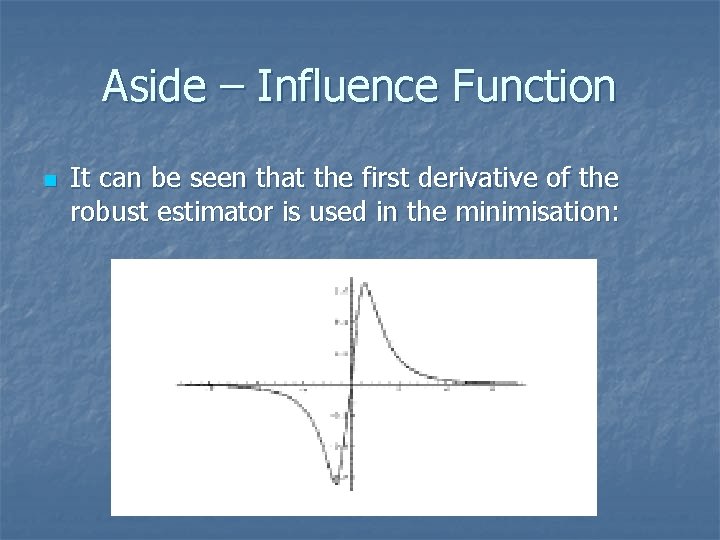

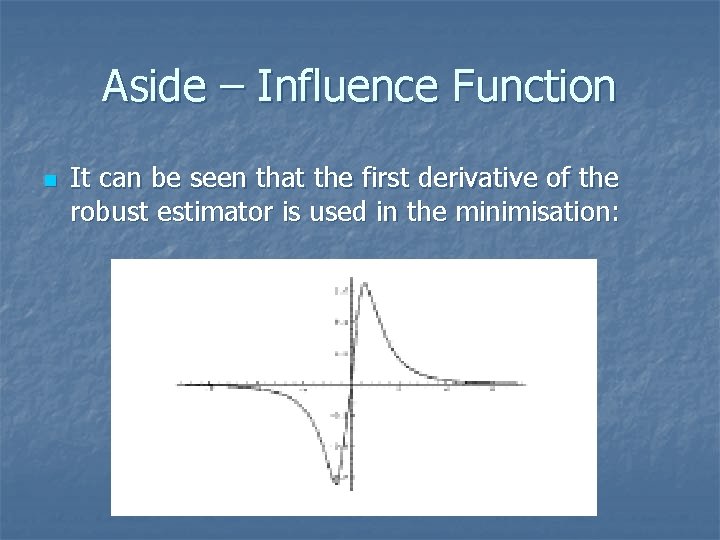

Aside – Influence Function
- It can be seen that the first derivative of the robust estimator is used in the minimisation:

Pyramid Approach
- Trying to estimate the parameters from scratch at full scale can be wasteful
- Therefore a ‘pyramid of resolutions’ or ‘Gaussian pyramid’ is used
- The principle is to estimate the parameters on a smaller scale and refine until full scale is reached

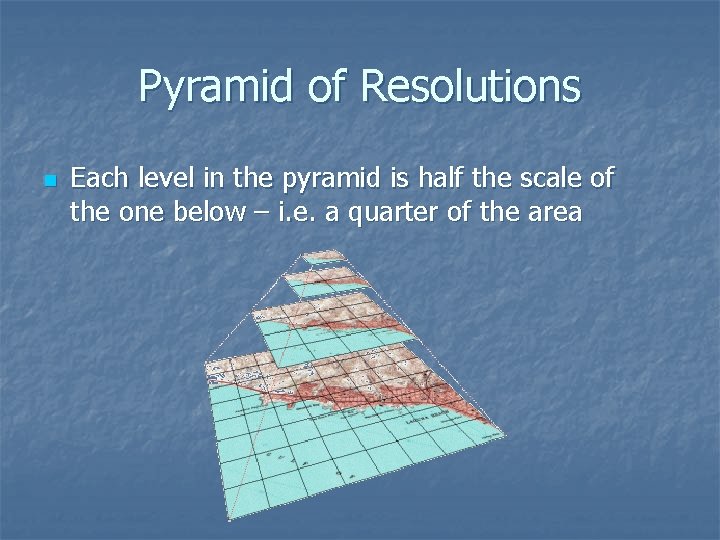

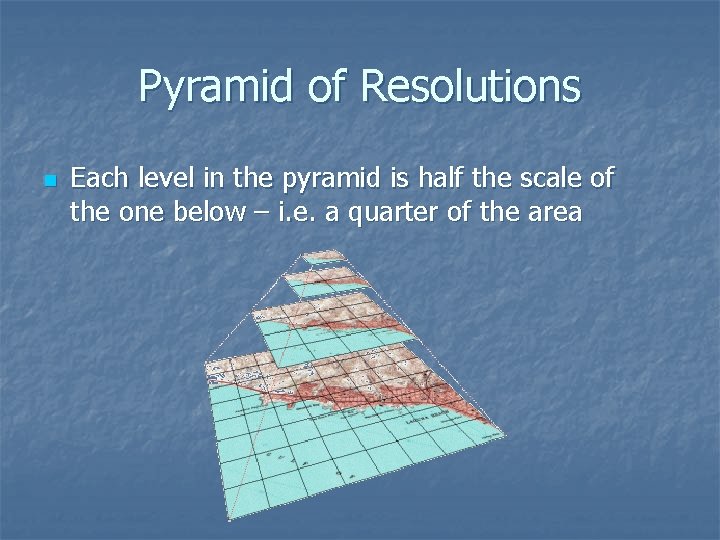

Pyramid of Resolutions
- Each level in the pyramid is half the scale of the one below, i.e. a quarter of the area
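A simplified sketch of building such a pyramid. It averages each 2x2 block to halve the scale, which is an assumption made for brevity; a true Gaussian pyramid smooths with a Gaussian kernel before subsampling.

```python
def downsample(image):
    """Halve width and height by averaging each 2x2 block of pixels."""
    rows, cols = len(image) // 2, len(image[0]) // 2
    return [[(image[2 * y][2 * x] + image[2 * y][2 * x + 1]
              + image[2 * y + 1][2 * x] + image[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(cols)]
            for y in range(rows)]

def build_pyramid(image, levels):
    """Return [full resolution, half, quarter, ...] with at most `levels` entries."""
    pyramid = [image]
    while (len(pyramid) < levels
           and len(pyramid[-1]) >= 2 and len(pyramid[-1][0]) >= 2):
        pyramid.append(downsample(pyramid[-1]))
    return pyramid
```

Estimation would start on the last (smallest) entry and work back towards the first.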

- Out pops the solution…
- When combined with a suitable gradient-based minimisation scheme…

Problems with this approach
- Resampling the background model:
  - Model cannot be too complex
  - Resampling will bring in errors
- Motion model is only an estimate of what is really happening
  - Can lead to false object detection, particularly close to boundaries

Background Model Design
- The background model needs to be robust to these problems
- We need some way to differentiate between genuine object detections and false ones from motion model and background model errors

My Approach
- Rather than updating model values with the current, matched values, replace them
- In this way resampling errors are not allowed to accumulate

Aside – ‘Real Time’
- The ability to process a sequence in real time is dependent on THREE key factors:
  - The speed of the algorithm
  - The frame rate required
  - The number of pixels
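The three factors combine into a simple per-pixel time budget. The function name and the frame sizes and frame rate in the usage lines are hypothetical figures chosen for illustration, not measurements from this work.

```python
def per_pixel_budget_ns(width, height, fps):
    """Nanoseconds available per pixel if each frame must finish before the next arrives."""
    pixels_per_second = width * height * fps
    return 1e9 / pixels_per_second

# Usage with hypothetical figures: doubling both dimensions quarters the budget.
small = per_pixel_budget_ns(320, 240, 25)   # about 521 ns per pixel
large = per_pixel_budget_ns(640, 480, 25)   # about 130 ns per pixel
```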

Recap
- Introduction to motion analysis
- Principles of background modelling
- Example of a static scene tracker
- Discussion of motion estimation
- Shortcomings when applied to background filtering