More than two groups: ANOVA and Chi-square

- Slides: 51

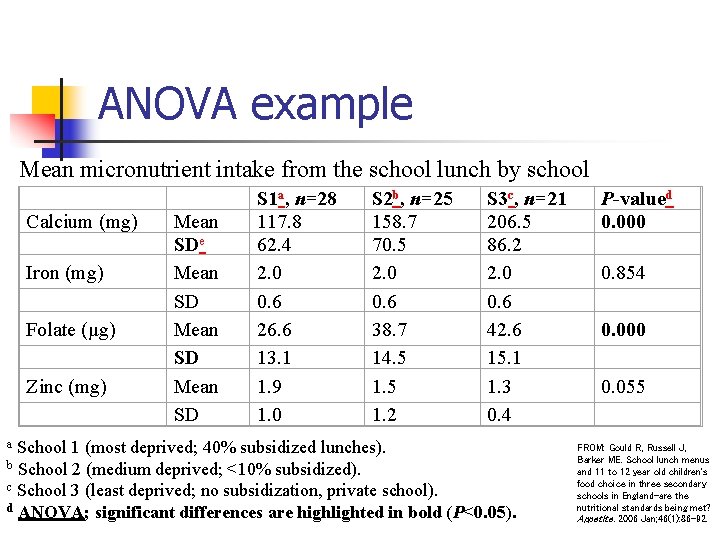

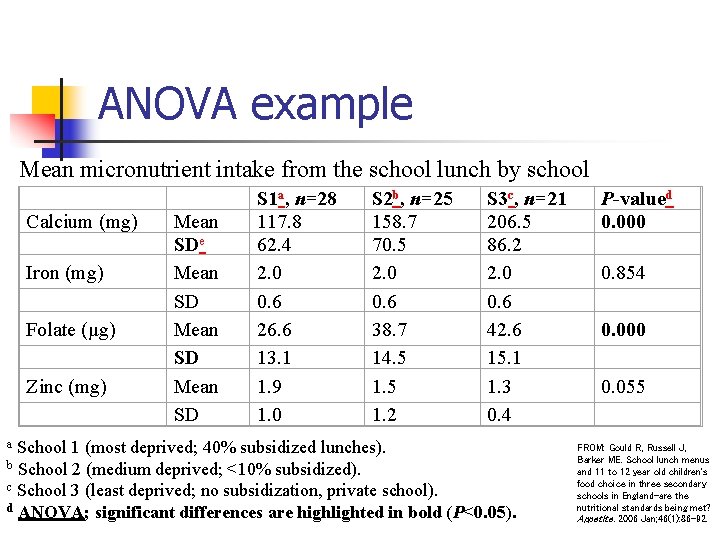

ANOVA example

Mean micronutrient intake from the school lunch, by school

| School | Calcium (mg), mean (SD) | Iron (mg), mean (SD) | Folate (μg), mean (SD) | Zinc (mg), mean (SD) |
|---|---|---|---|---|
| S1 (a), n=28 | 117.8 (62.4) | 2.0 (0.6) | 26.6 (13.1) | 1.9 (1.0) |
| S2 (b), n=25 | 158.7 (70.5) | 2.0 (0.6) | 38.7 (14.5) | 1.2 (other value missing) |
| S3 (c), n=21 | 206.5 (86.2) | 2.0 (0.6) | 42.6 (15.1) | 1.3 (0.4) |
| P-value (d) | **0.000** | 0.854 | **0.000** | 0.055 |

(a) School 1 (most deprived; 40% subsidized lunches). (b) School 2 (medium deprived; <10% subsidized). (c) School 3 (least deprived; no subsidization, private school). (d) ANOVA; significant differences are highlighted in bold (P<0.05).

FROM: Gould R, Russell J, Barker ME. School lunch menus and 11 to 12 year old children's food choice in three secondary schools in England-are the nutritional standards being met? Appetite. 2006 Jan; 46(1): 86-92.

ANOVA for comparing means between more than 2 groups

ANOVA (ANalysis Of VAriance)

- Idea: for two or more groups, test the difference between means, for normally distributed variables.
- Just an extension of the t-test (an ANOVA with only two groups is mathematically equivalent to a t-test).
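The equivalence between a two-group ANOVA and a t-test can be checked numerically. The sketch below uses made-up measurements (the data and the helper names `pooled_t` and `one_way_f` are illustrative, not from the slides) and shows that the ANOVA F statistic equals the square of the pooled-variance t statistic.

```python
from statistics import mean, variance

# Hypothetical measurements for two independent groups (illustrative only).
group_a = [4.1, 5.0, 5.6, 4.8, 5.3, 4.4]
group_b = [5.9, 6.4, 5.2, 6.8, 6.1, 5.7]


def pooled_t(x, y):
    """Two-sample t statistic with a pooled (equal-variance) estimate."""
    nx, ny = len(x), len(y)
    pooled_var = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    return (mean(x) - mean(y)) / (pooled_var * (1 / nx + 1 / ny)) ** 0.5


def one_way_f(*groups):
    """One-way ANOVA F statistic: between-group over within-group mean square."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((len(g) - 1) * variance(g) for g in groups)
    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


t_stat = pooled_t(group_a, group_b)
f_stat = one_way_f(group_a, group_b)
print(f"t = {t_stat:.4f}, t^2 = {t_stat ** 2:.4f}, F = {f_stat:.4f}")
```

The same `one_way_f` helper accepts three or more groups, which is the case the rest of the lecture is about.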

One-Way Analysis of Variance

Assumptions, same as the t-test:
- Normally distributed outcome
- Equal variances between the groups
- Groups are independent

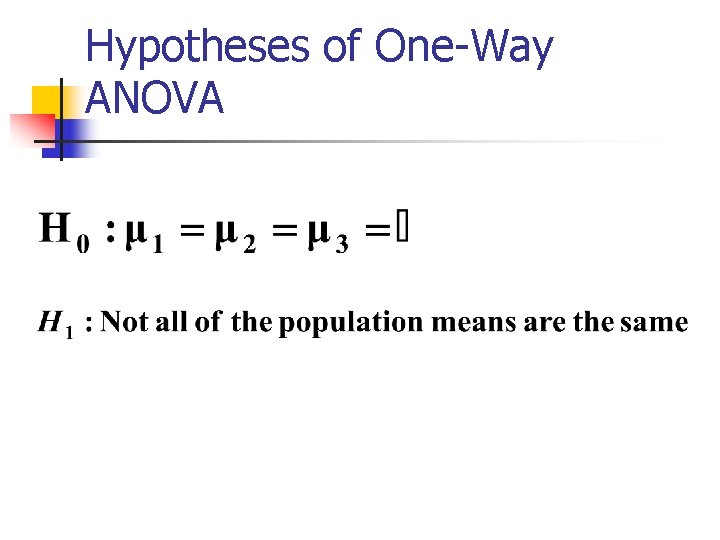

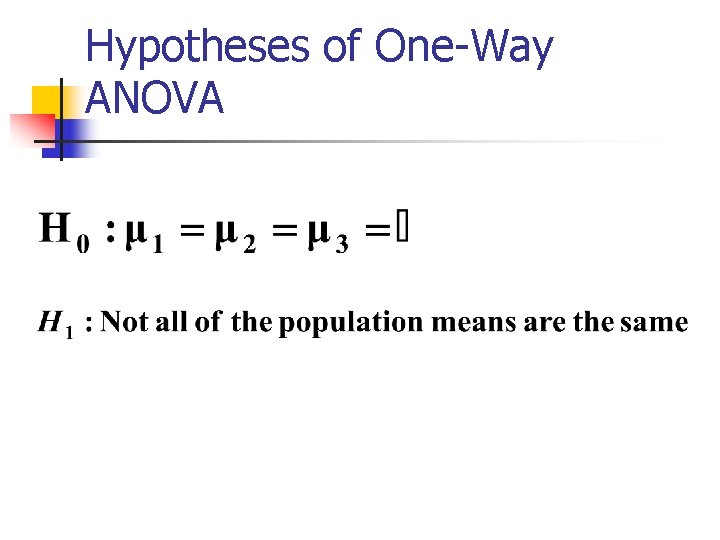

Hypotheses of One-Way ANOVA

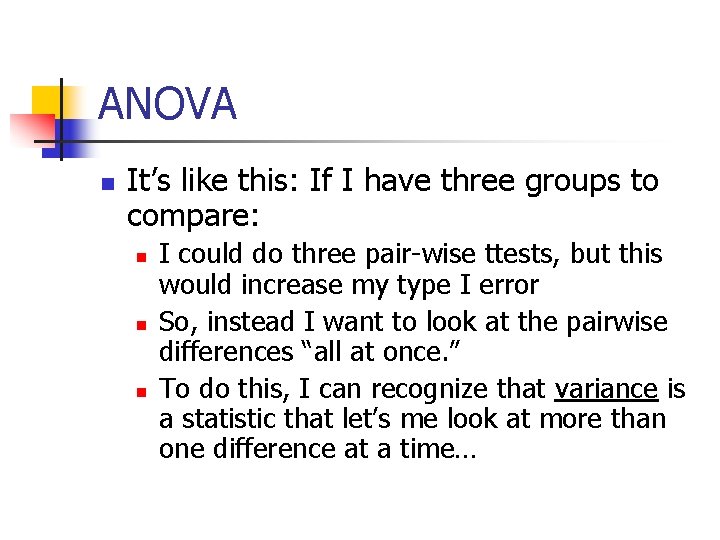

ANOVA

It’s like this: if I have three groups to compare:
- I could do three pairwise t-tests, but this would increase my type I error.
- So, instead, I want to look at the pairwise differences “all at once.”
- To do this, I can recognize that variance is a statistic that lets me look at more than one difference at a time…

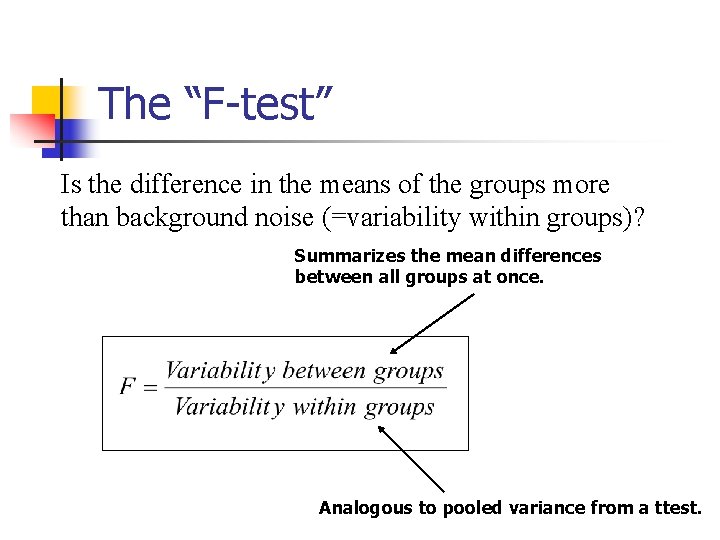

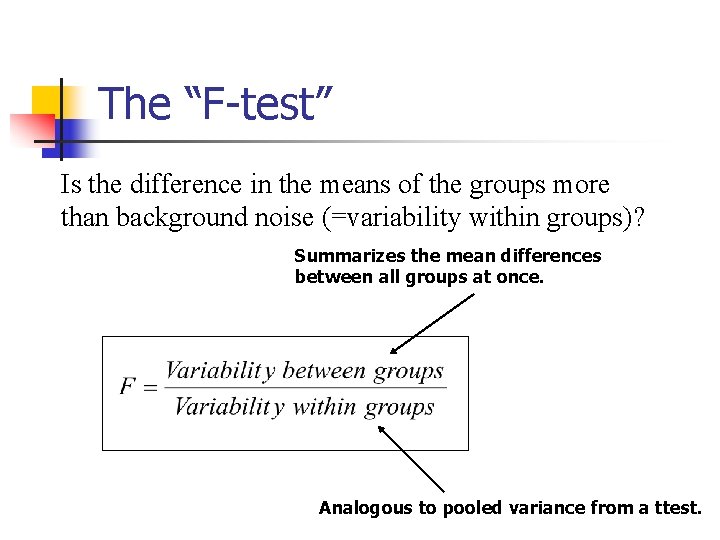

The “F-test”

Is the difference in the means of the groups more than background noise (= variability within groups)?

- The numerator (between-group variability) summarizes the mean differences between all groups at once.
- The denominator (within-group variability) is analogous to the pooled variance from a t-test.

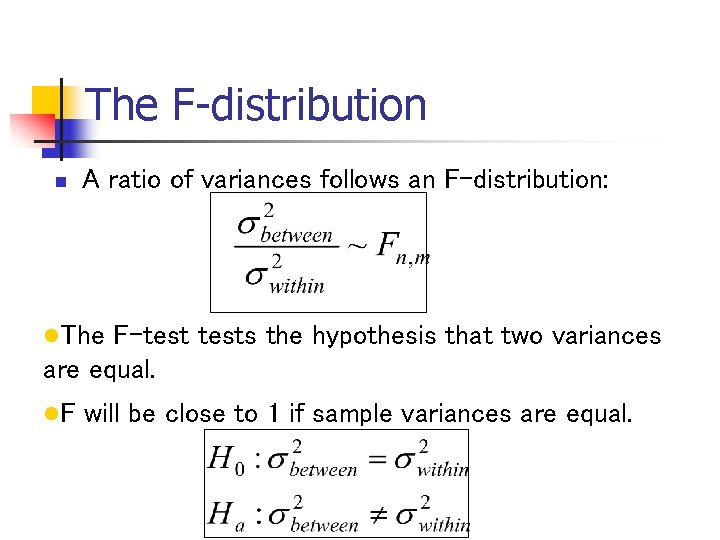

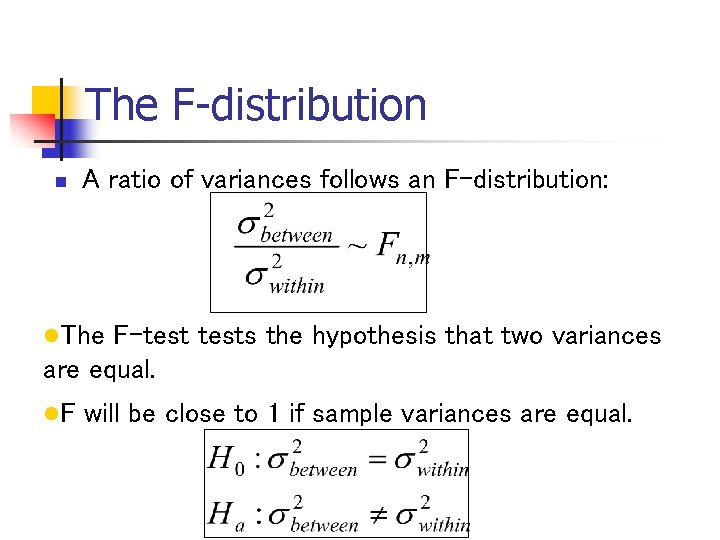

The F-distribution

A ratio of variances follows an F-distribution:
- The F-test tests the hypothesis that two variances are equal.
- F will be close to 1 if the sample variances are equal.
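A small simulation can illustrate why F sits near 1 when the underlying variances are equal. This is a sketch under assumed settings (standard normal data, 10 observations per sample, 2000 repetitions, a fixed seed), none of which come from the slides.

```python
import random
from statistics import median, variance

random.seed(0)  # fixed seed so the sketch is repeatable


def variance_ratio(n=10):
    """Ratio of two sample variances drawn from the same normal population."""
    a = [random.gauss(0, 1) for _ in range(n)]
    b = [random.gauss(0, 1) for _ in range(n)]
    return variance(a) / variance(b)


ratios = [variance_ratio() for _ in range(2000)]
typical = median(ratios)
share_above_3 = sum(r > 3 for r in ratios) / len(ratios)
print(f"median ratio = {typical:.2f}, share above 3 = {share_above_3:.3f}")
```

Most simulated ratios cluster around 1 and large ratios are rare, which is why a large observed F counts as evidence against equal variances.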

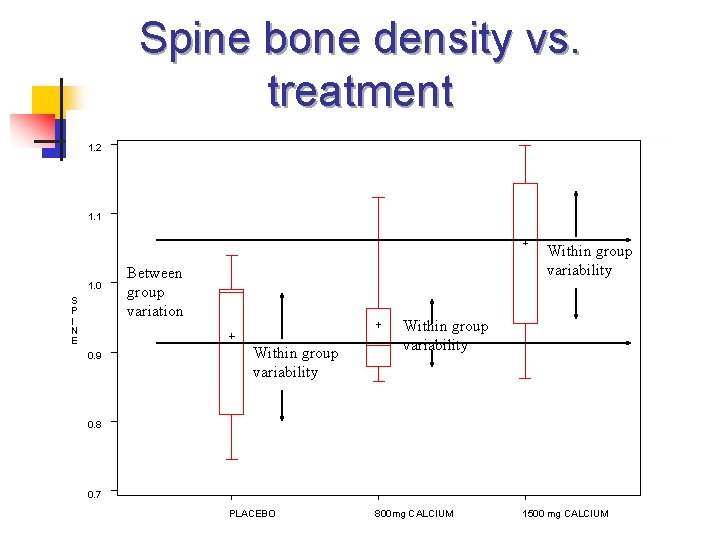

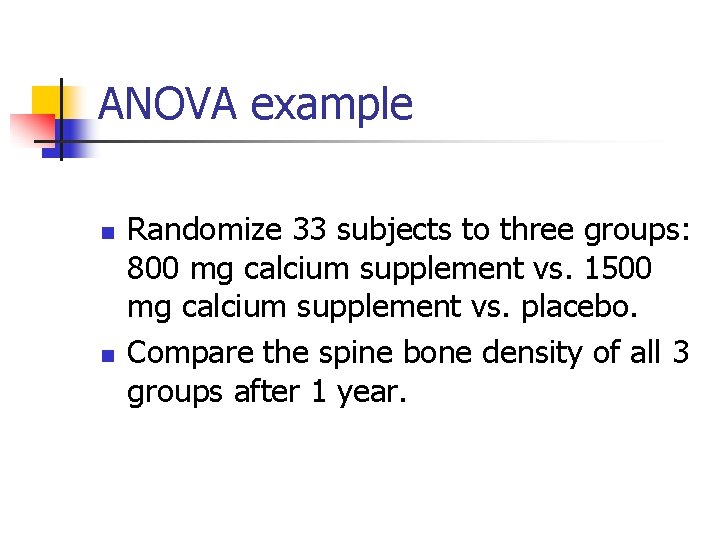

ANOVA example

- Randomize 33 subjects to three groups: 800 mg calcium supplement vs. 1500 mg calcium supplement vs. placebo.
- Compare the spine bone density of all 3 groups after 1 year.

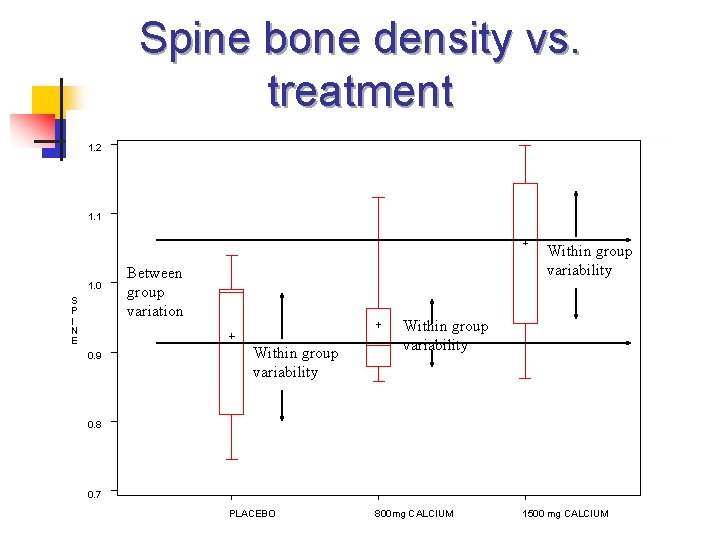

Figure: Spine bone density vs. treatment. Y-axis: spine bone density (about 0.7 to 1.2); x-axis: PLACEBO, 800 mg CALCIUM, 1500 mg CALCIUM. Annotations mark the within-group variability and the between-group variation.

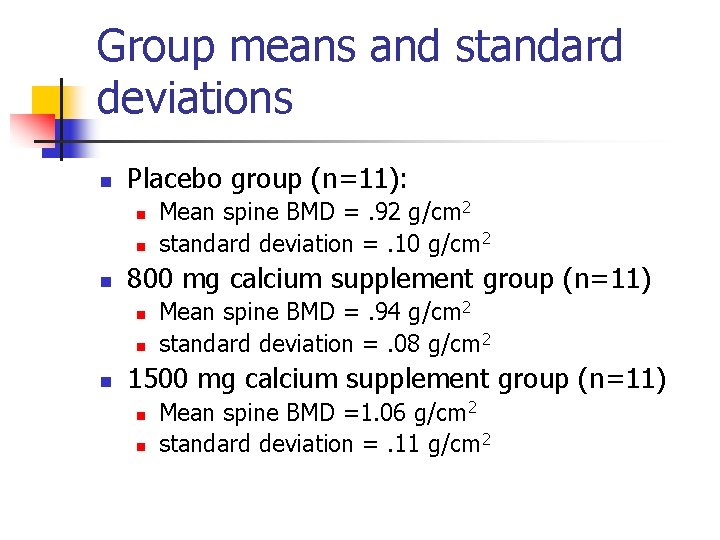

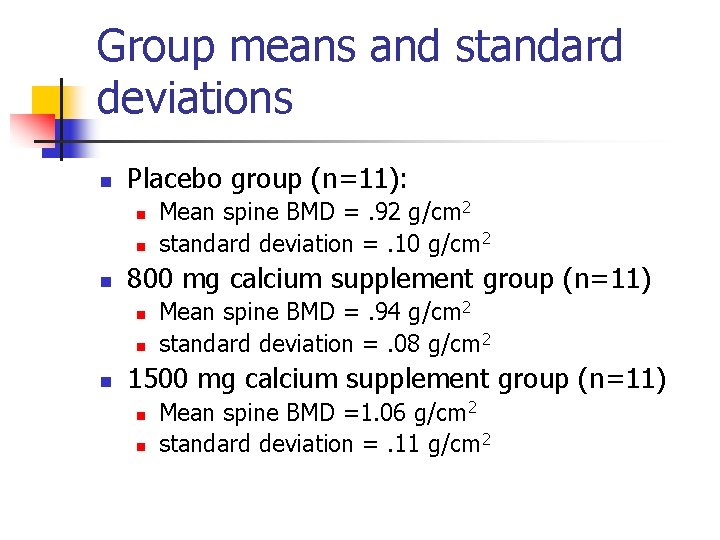

Group means and standard deviations

- Placebo group (n=11):
  - Mean spine BMD = .92 g/cm²
  - Standard deviation = .10 g/cm²
- 800 mg calcium supplement group (n=11):
  - Mean spine BMD = .94 g/cm²
  - Standard deviation = .08 g/cm²
- 1500 mg calcium supplement group (n=11):
  - Mean spine BMD = 1.06 g/cm²
  - Standard deviation = .11 g/cm²
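The F statistic for this example can be approximated from the summary statistics alone. The sketch below is an assumption-laden reconstruction: it uses the rounded means and standard deviations listed above, so its result may differ from the value obtained from the raw data on the slides. The variable names are illustrative.

```python
# Summary statistics as reported on the slide: (n, mean, SD) in g/cm^2.
groups = {
    "placebo": (11, 0.92, 0.10),
    "800 mg calcium": (11, 0.94, 0.08),
    "1500 mg calcium": (11, 1.06, 0.11),
}

k = len(groups)
n_total = sum(n for n, _, _ in groups.values())
grand_mean = sum(n * m for n, m, _ in groups.values()) / n_total

# Between-group mean square: spread of the group means around the grand mean.
ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups.values())
ms_between = ss_between / (k - 1)

# Within-group mean square: pooled variance inside the groups.
ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups.values())
ms_within = ss_within / (n_total - k)

f_stat = ms_between / ms_within
df_between, df_within = k - 1, n_total - k

# With exactly 2 numerator degrees of freedom (three groups), the upper tail
# of the F distribution has the closed form (1 + 2F/df_within) ** (-df_within/2).
p_value = (1 + 2 * f_stat / df_within) ** (-df_within / 2)

print(f"F({df_between}, {df_within}) = {f_stat:.2f}, p = {p_value:.4f}")
```

The closed-form p-value is a shortcut that only works with three groups; for other designs a statistics package would normally supply the F-distribution tail.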

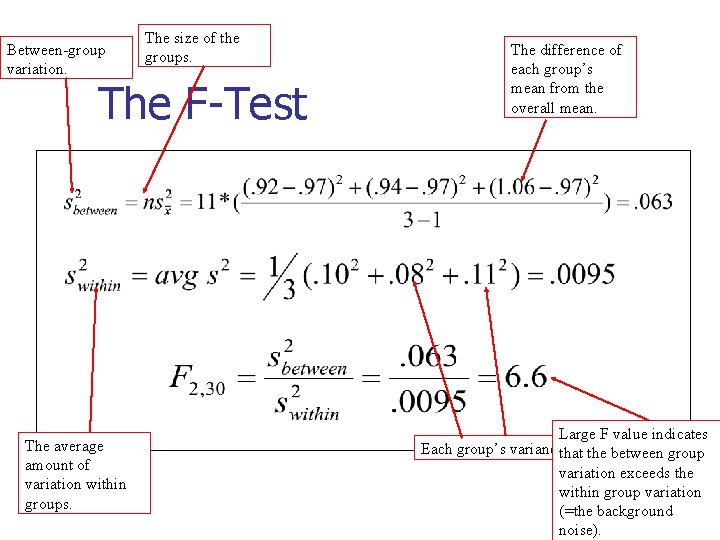

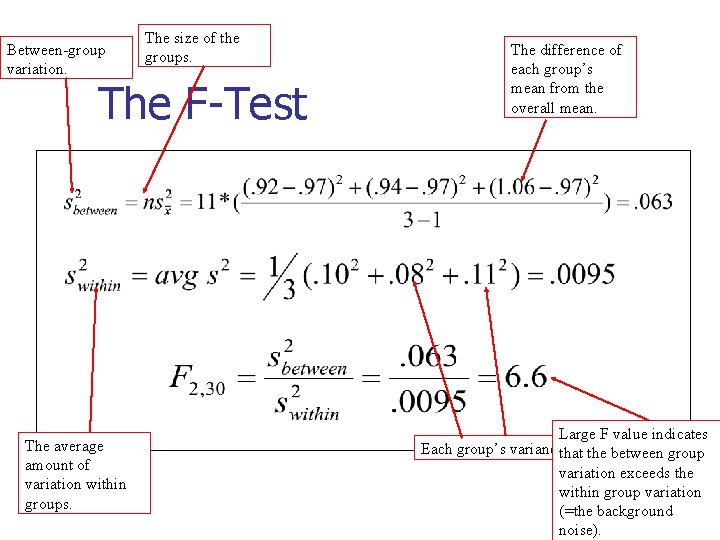

The F-Test

- Numerator: the between-group variation, built from the size of the groups and the difference of each group’s mean from the overall mean.
- Denominator: the average amount of variation within groups, built from each group’s variance.
- A large F value indicates that the between-group variation exceeds the within-group variation (= the background noise).

Review Question 1

Which of the following is an assumption of ANOVA?

- a. The outcome variable is normally distributed.
- b. The variance of the outcome variable is the same in all groups.
- c. The groups are independent.
- d. All of the above.
- e. None of the above.

Answer to Review Question 1: d. All of the above (normality, equal variances, and independent groups are all assumptions of one-way ANOVA).

ANOVA summary

- A statistically significant ANOVA (F-test) only tells you that at least two of the groups differ, but not which ones differ.
- Determining which groups differ (when it’s unclear) requires more sophisticated analyses to correct for the problem of multiple comparisons…

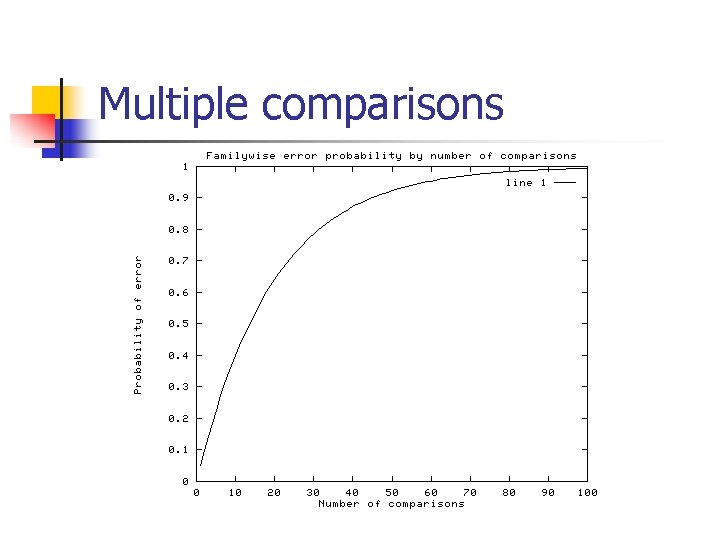

Question: Why not just do 3 pairwise t-tests?

- Answer: because, at an error rate of 5% for each test, you have an overall chance of up to 1 − (0.95)³ ≈ 14% of making a type I error (if all 3 comparisons were independent).
- If you wanted to compare 6 groups, you’d have to do 15 pairwise t-tests, which would give you a high chance of finding something significant just by chance.
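The arithmetic behind these figures is easy to reproduce. The sketch below assumes independent tests at alpha = 0.05, as the slide does; the function name `familywise_error` is illustrative.

```python
from math import comb


def familywise_error(n_tests, alpha=0.05):
    """Chance of at least one false positive across independent tests."""
    return 1 - (1 - alpha) ** n_tests


for n_groups in (3, 6):
    n_pairs = comb(n_groups, 2)  # number of pairwise comparisons
    print(n_groups, "groups:", n_pairs, "t-tests, familywise error up to",
          round(familywise_error(n_pairs), 3))
```

With 6 groups the 15 pairwise tests push the chance of at least one false positive above one half, which is the "high chance" the slide refers to.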

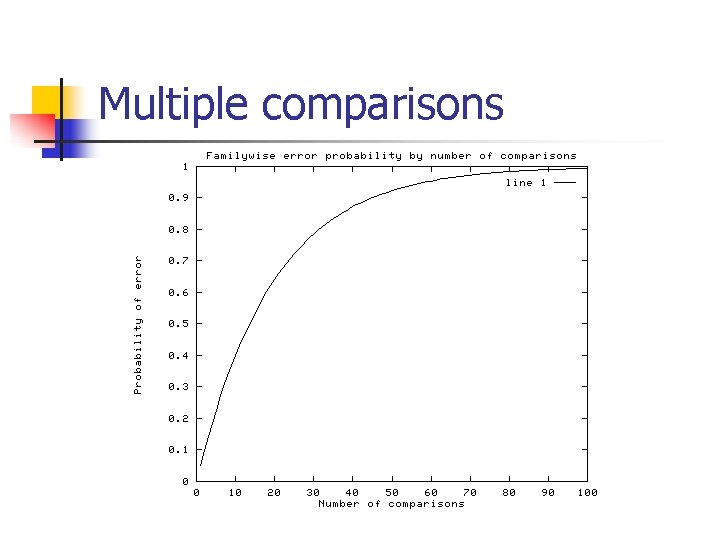

Multiple comparisons

Correction for multiple comparisons

How to correct for multiple comparisons post hoc…
- Bonferroni correction (adjusts p by the most conservative amount; treating all tests as independent, divide the alpha cut-off by the number of tests or, equivalently, multiply each p-value by it)
- Tukey (adjusts p)
- Scheffé (adjusts p)

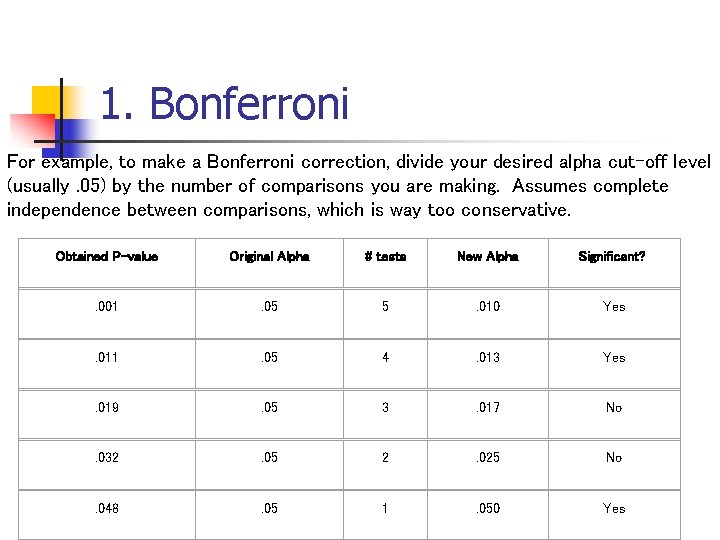

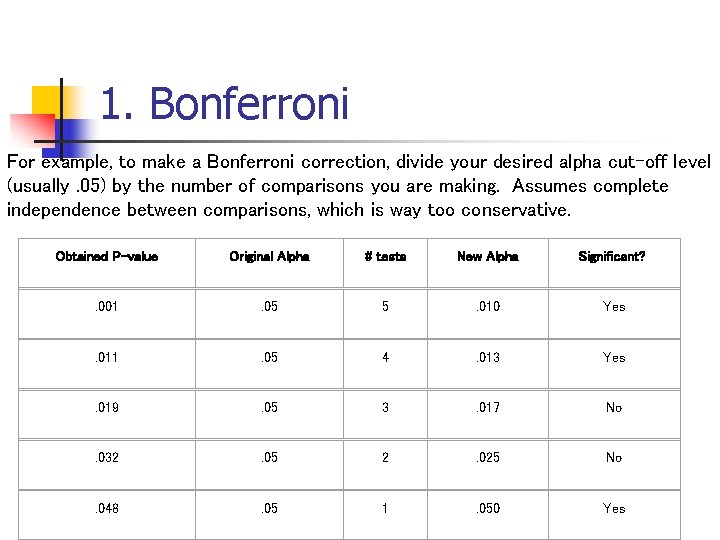

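1. Bonferroni

For example, to make a Bonferroni correction, divide your desired alpha cut-off level (usually .05) by the number of comparisons you are making. This treats the comparisons as completely independent, which is often too conservative.

| Obtained P-value | Original alpha | # tests | New alpha | Significant? |
|---|---|---|---|---|
| .001 | .05 | 5 | .010 | Yes |
| .011 | .05 | 4 | .013 | Yes |
| .019 | .05 | 3 | .017 | No |
| .032 | .05 | 2 | .025 | No |
| .048 | .05 | 1 | .050 | Yes |

The table divides alpha by the number of tests still remaining as it moves down the ordered p-values, which is the sequential (Holm) form of the correction; plain Bonferroni compares every p-value with .05/5 = .010. In Holm’s procedure testing stops at the first non-significant row, so the final “Yes” holds only when each row is judged on its own.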
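The thresholds in the table can be reproduced in a few lines. The sketch below compares three readings of the same five p-values: the row-by-row cut-offs as printed in the table, plain Bonferroni (one cut-off of alpha divided by the number of tests), and Holm's step-down rule. Holm's rule is not named on the slide and is included here as an assumption about what the shrinking divisor represents.

```python
ALPHA = 0.05
p_values = [0.001, 0.011, 0.019, 0.032, 0.048]  # from the table, sorted ascending
m = len(p_values)

# Row-by-row thresholds as printed in the table: alpha / (tests remaining).
table_alphas = [ALPHA / (m - i) for i in range(m)]
table_calls = [p < a for p, a in zip(p_values, table_alphas)]

# Plain Bonferroni: every p-value is compared with the same cut-off alpha / m.
bonferroni_calls = [p < ALPHA / m for p in p_values]

# Holm's step-down rule (an assumption here, not named on the slide): same
# thresholds as the table, but stop rejecting at the first non-significant row.
holm_calls = []
still_rejecting = True
for p, a in zip(p_values, table_alphas):
    still_rejecting = still_rejecting and p < a
    holm_calls.append(still_rejecting)

for row in zip(p_values, table_alphas, table_calls, bonferroni_calls, holm_calls):
    print(row)
```

The three readings agree on the smallest p-value and disagree further down the list, which is a reminder to state which correction was applied when reporting results.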

2/3. Tukey and Scheffé

Both methods increase your p-values to account for the fact that you’ve done multiple comparisons, but are less conservative than Bonferroni (let the computer calculate them for you!).

Review Question 2

I am doing an RCT of 4 treatment regimens for blood pressure. At the end of the day, I compare blood pressures in the 4 groups using ANOVA. My p-value is .03. I conclude:

- a. All of the treatment regimens differ.
- b. I need to use a Bonferroni correction.
- c. One treatment is better than all the rest.
- d. At least one treatment is different from the others.
- e. In pairwise comparisons, no treatment will be

Answer to Review Question 2: d. At least one treatment is different from the others (a significant ANOVA does not say which groups differ).

Chi-square test of Independence

The chi-square test allows you to compare proportions between 2 or more groups (ANOVA for means; chi-square for proportions).

Example

Asch, S. E. (1955). Opinions and social pressure. Scientific American, 193, 31-35.

The Experiment

- A Subject volunteers to participate in a “visual perception study.”
- Everyone else in the room is actually a conspirator in the study (unbeknownst to the Subject).
- The “experimenter” reveals a pair of cards…

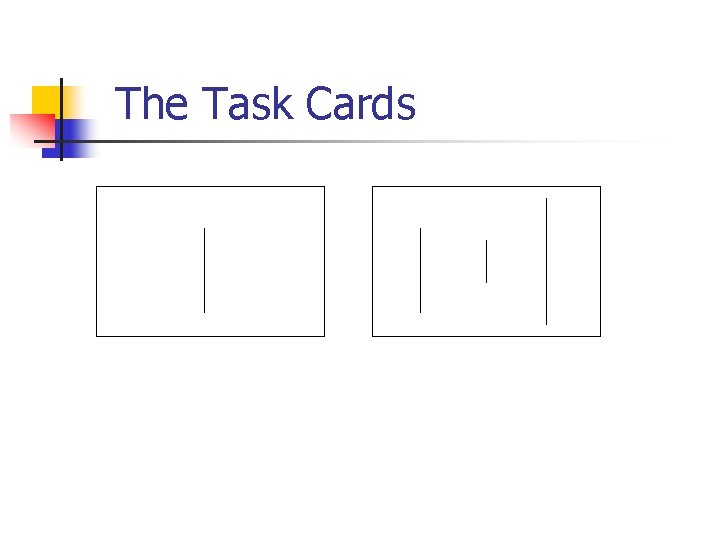

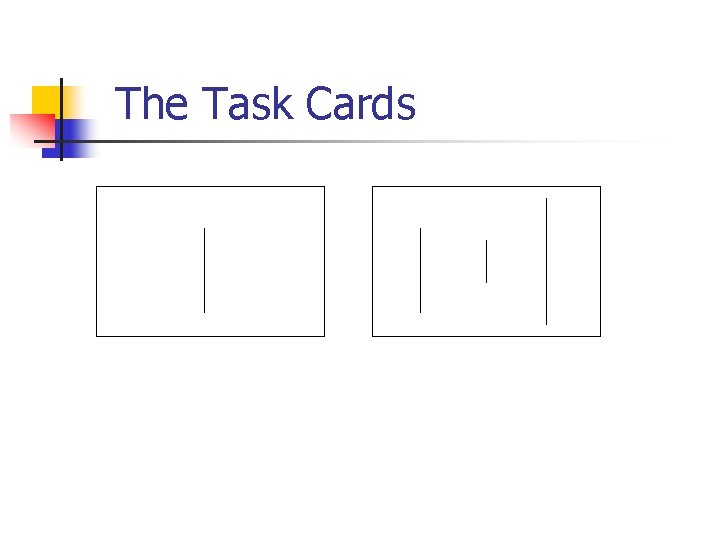

The Task Cards: one card shows the standard line; the other shows comparison lines A, B, and C.

The Experiment

- Everyone goes around the room and says which comparison line (A, B, or C) is correct; the true Subject always answers last, after hearing all the others’ answers.
- The first few times, the 7 “conspirators” give the correct answer.
- Then, they start purposely giving the (obviously) wrong answer.
- 75% of Subjects tested went along with the group’s consensus at least once.

Further Results

- In a further experiment, group size (number of conspirators) was varied from 2 to 10.
- Does the group size alter the proportion of subjects who conform?

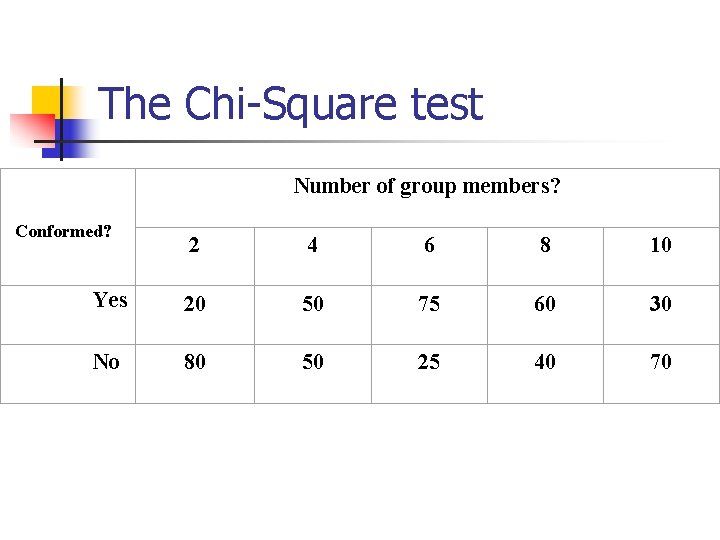

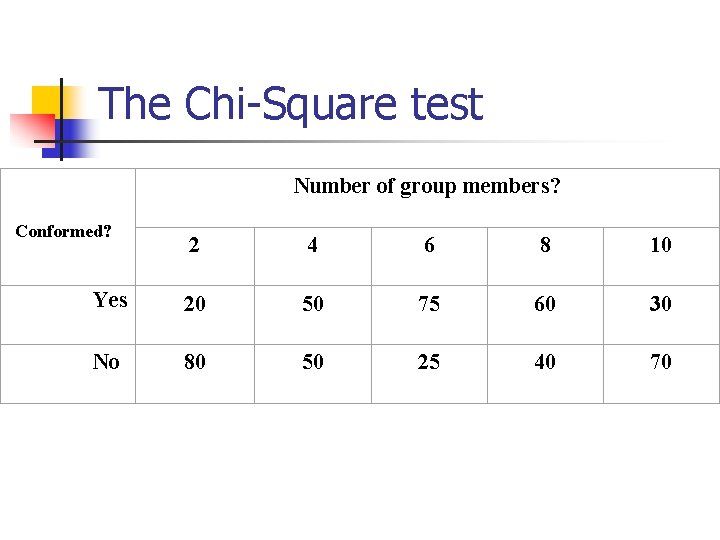

The Chi-Square test

| Conformed? | 2 group members | 4 | 6 | 8 | 10 |
|---|---|---|---|---|---|
| Yes | 20 | 50 | 75 | 60 | 30 |
| No | 80 | 50 | 25 | 40 | 70 |

Apparently, conformity is less likely with either fewer or more group members…

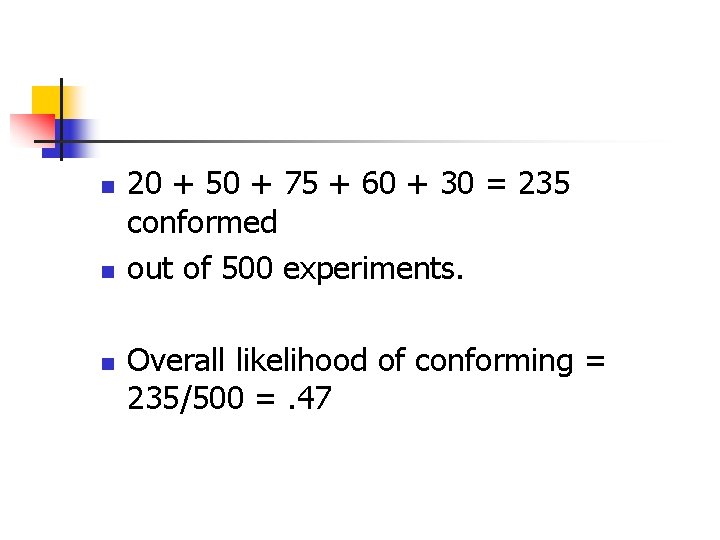

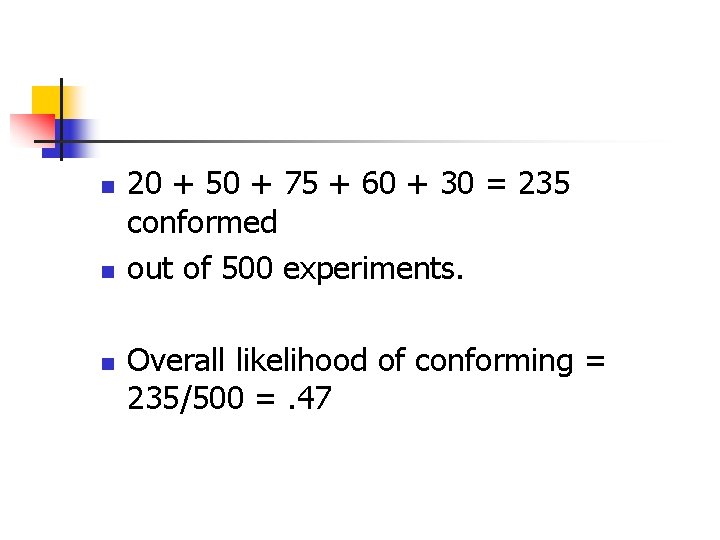

- 20 + 50 + 75 + 60 + 30 = 235 conformed out of 500 experiments.
- Overall likelihood of conforming = 235/500 = .47

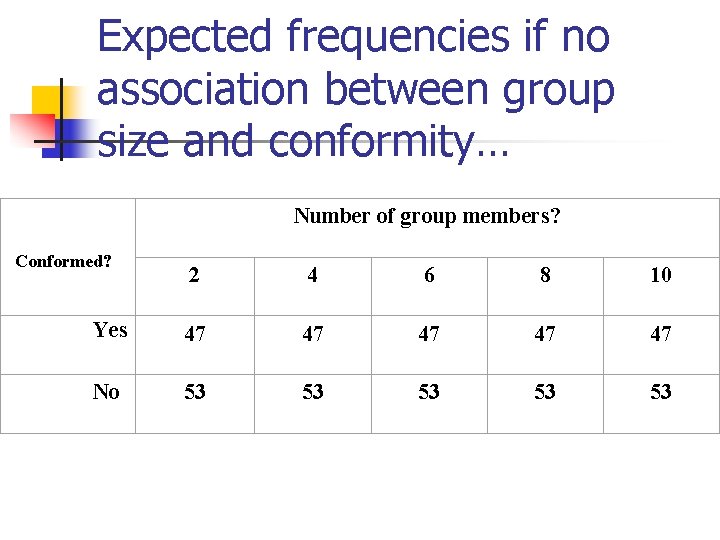

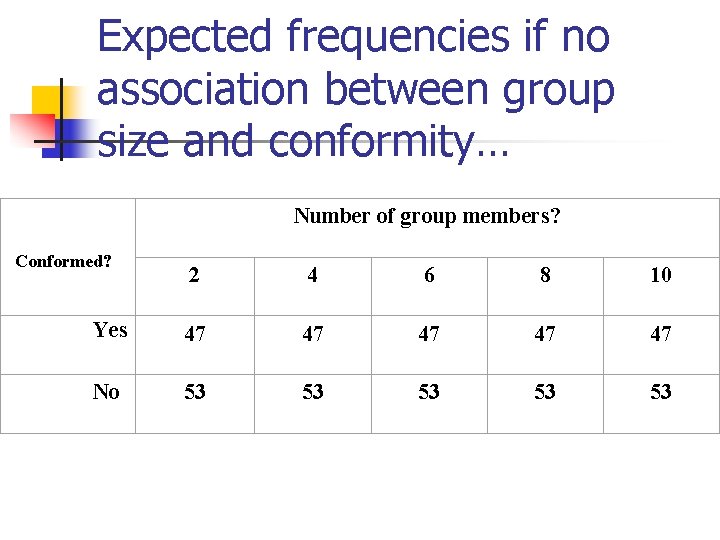

Expected frequencies if no association between group size and conformity…

| Conformed? | 2 group members | 4 | 6 | 8 | 10 |
|---|---|---|---|---|---|
| Yes | 47 | 47 | 47 | 47 | 47 |
| No | 53 | 53 | 53 | 53 | 53 |
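The expected frequencies follow directly from the overall conformity rate. A minimal sketch, using the observed counts from the Asch table (variable names are illustrative):

```python
group_sizes = [2, 4, 6, 8, 10]
conformed = [20, 50, 75, 60, 30]      # observed "Yes" counts
did_not = [80, 50, 25, 40, 70]        # observed "No" counts

total = sum(conformed) + sum(did_not)
overall_rate = sum(conformed) / total  # 235 / 500

# Under the null hypothesis every group size shares the overall rate.
expected_yes = [overall_rate * (y + n) for y, n in zip(conformed, did_not)]
expected_no = [(1 - overall_rate) * (y + n) for y, n in zip(conformed, did_not)]

for size, e_yes, e_no in zip(group_sizes, expected_yes, expected_no):
    print(f"group size {size}: expected yes = {e_yes:.0f}, expected no = {e_no:.0f}")
```

Because each group size was tested 100 times, the expected counts are the same in every column; with unequal group totals they would scale with the column total.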

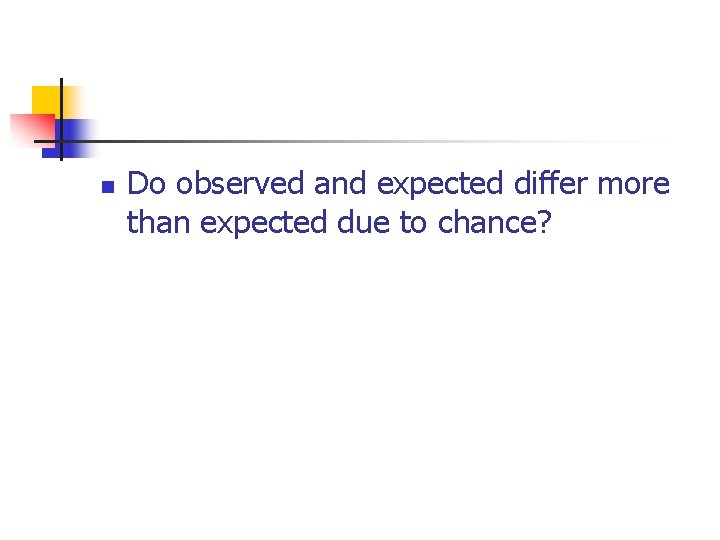

Do observed and expected differ more than expected due to chance?

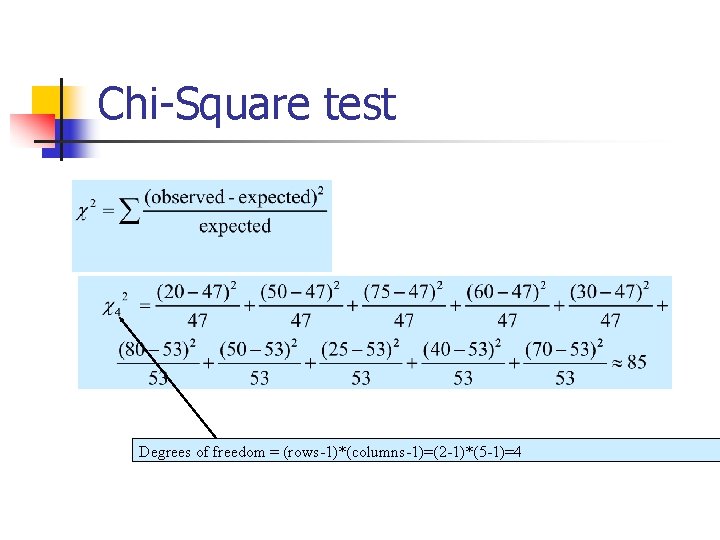

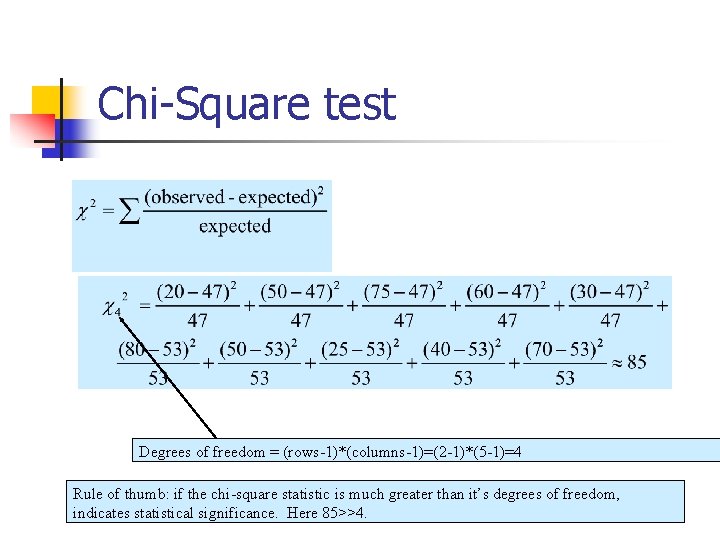

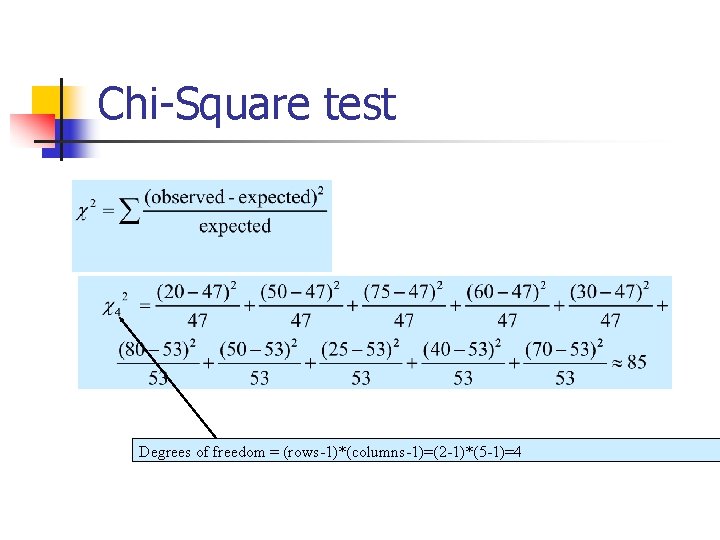

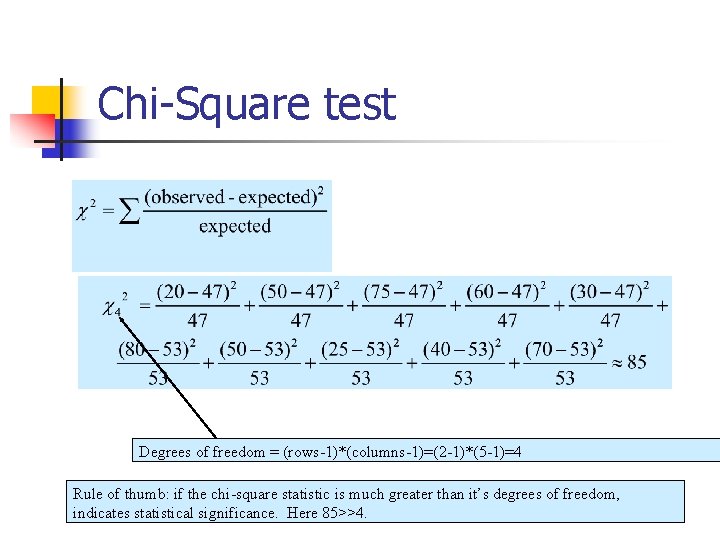

Chi-Square test

Degrees of freedom = (rows − 1) × (columns − 1) = (2 − 1) × (5 − 1) = 4

Rule of thumb: if the chi-square statistic is much greater than its degrees of freedom, this indicates statistical significance. Here 85 >> 4.
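The statistic can be recomputed from the observed table as a check. This is a sketch using only the counts shown in the extracted table; computed this way the statistic comes to roughly 79.5, a little below the 85 quoted on the slide, and either value is far larger than the 4 degrees of freedom. The closed-form p-value used below is valid only for 4 degrees of freedom.

```python
from math import exp

observed = [
    [20, 50, 75, 60, 30],  # conformed
    [80, 50, 25, 40, 70],  # did not conform
]

row_totals = [sum(row) for row in observed]
col_totals = [sum(col) for col in zip(*observed)]
grand_total = sum(row_totals)

# Expected count for each cell = row total * column total / grand total.
chi_square = 0.0
for i, row in enumerate(observed):
    for j, obs in enumerate(row):
        expected = row_totals[i] * col_totals[j] / grand_total
        chi_square += (obs - expected) ** 2 / expected

df = (len(observed) - 1) * (len(observed[0]) - 1)

# For 4 degrees of freedom the chi-square upper tail has a closed form:
# P(X > x) = exp(-x / 2) * (1 + x / 2). This shortcut only holds for df = 4.
p_value = exp(-chi_square / 2) * (1 + chi_square / 2)

print(f"chi-square = {chi_square:.1f} on {df} df, p = {p_value:.2e}")
```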

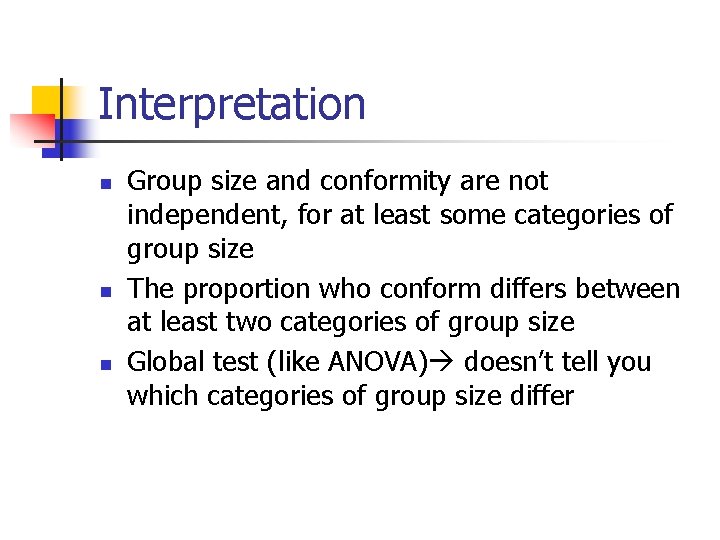

Interpretation

- Group size and conformity are not independent, for at least some categories of group size.
- The proportion who conform differs between at least two categories of group size.
- A global test (like ANOVA) doesn’t tell you which categories of group size differ.

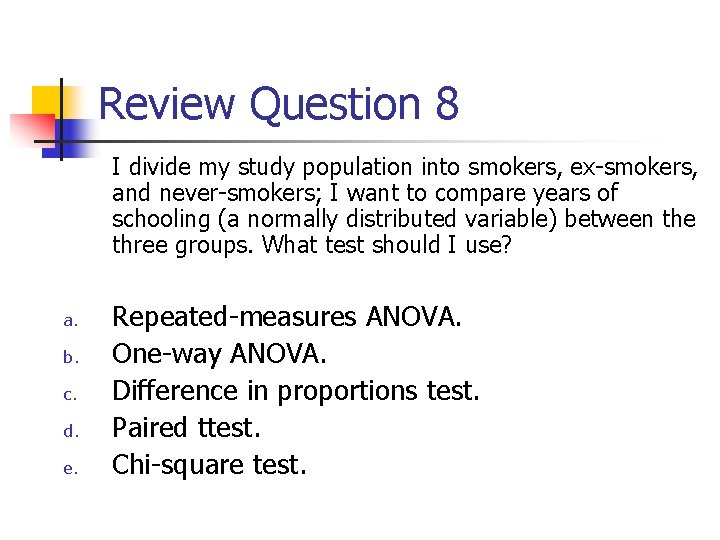

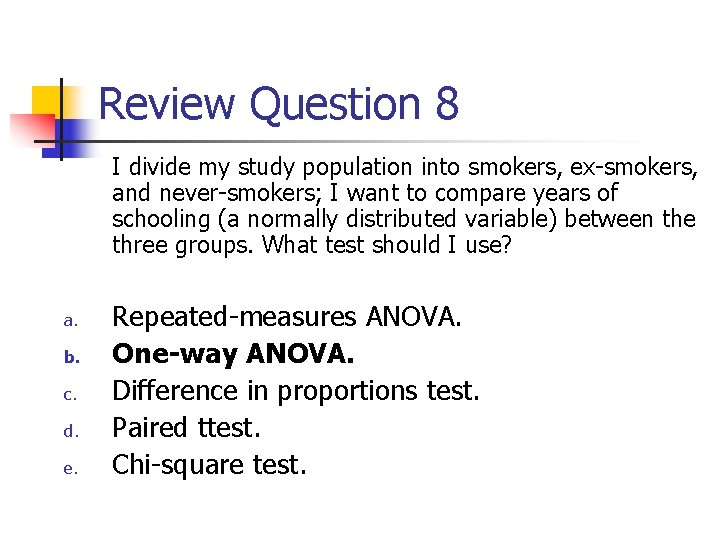

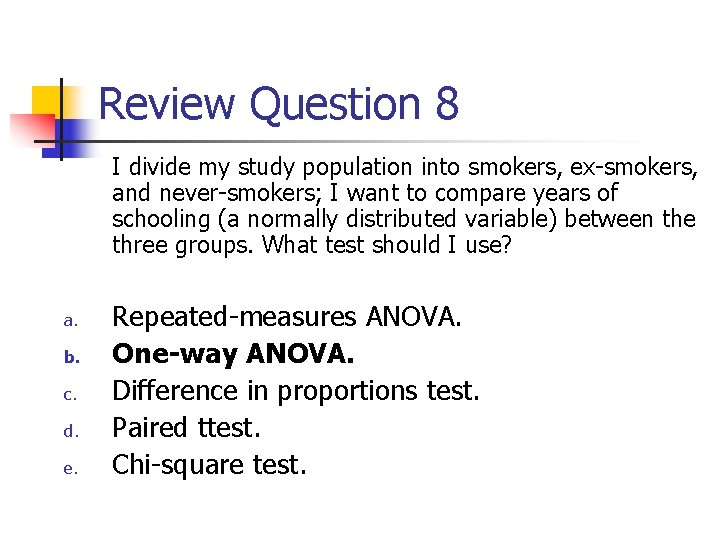

Review Question 8

I divide my study population into smokers, ex-smokers, and never-smokers; I want to compare years of schooling (a normally distributed variable) between the three groups. What test should I use?

- a. Repeated-measures ANOVA.
- b. One-way ANOVA.
- c. Difference in proportions test.
- d. Paired t-test.
- e. Chi-square test.

Answer to Review Question 8: b. One-way ANOVA (a continuous, normally distributed outcome compared across three independent groups).

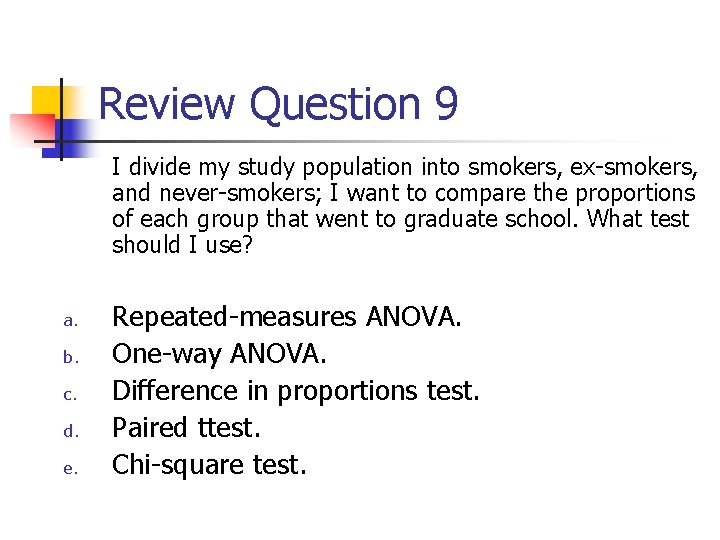

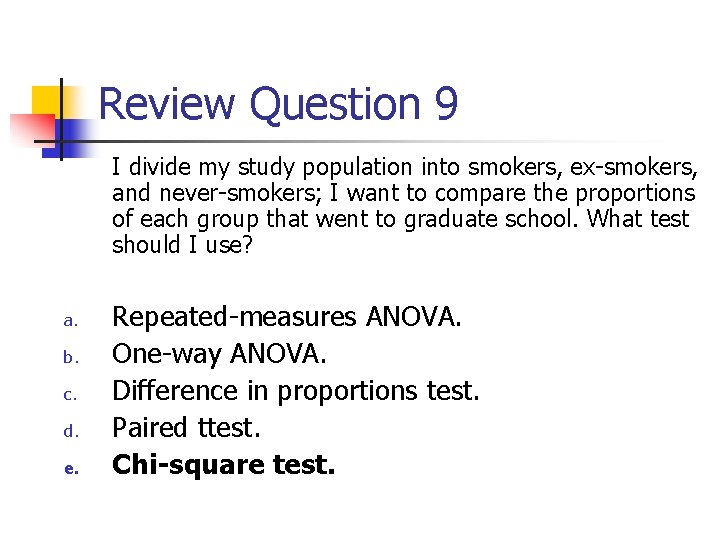

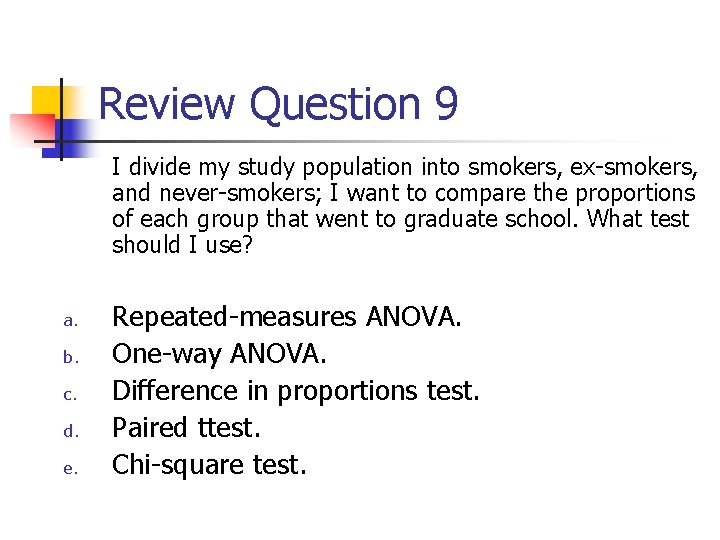

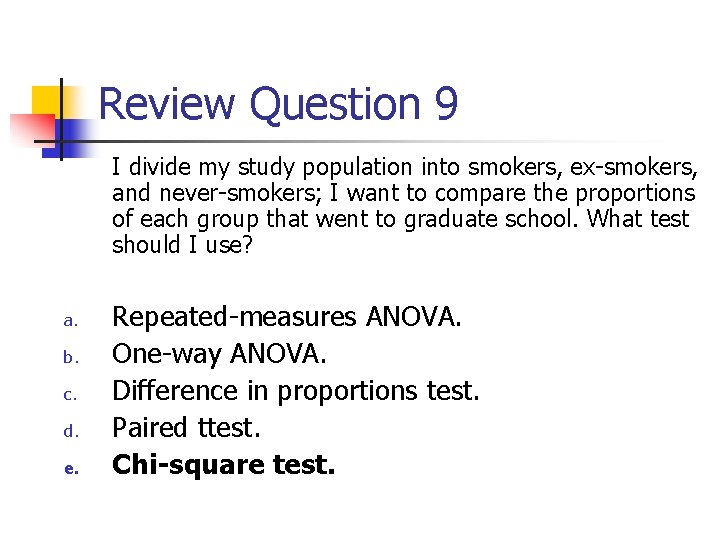

Review Question 9

I divide my study population into smokers, ex-smokers, and never-smokers; I want to compare the proportions of each group that went to graduate school. What test should I use?

- a. Repeated-measures ANOVA.
- b. One-way ANOVA.
- c. Difference in proportions test.
- d. Paired t-test.
- e. Chi-square test.

Answer to Review Question 9: e. Chi-square test (proportions compared across more than two independent groups).

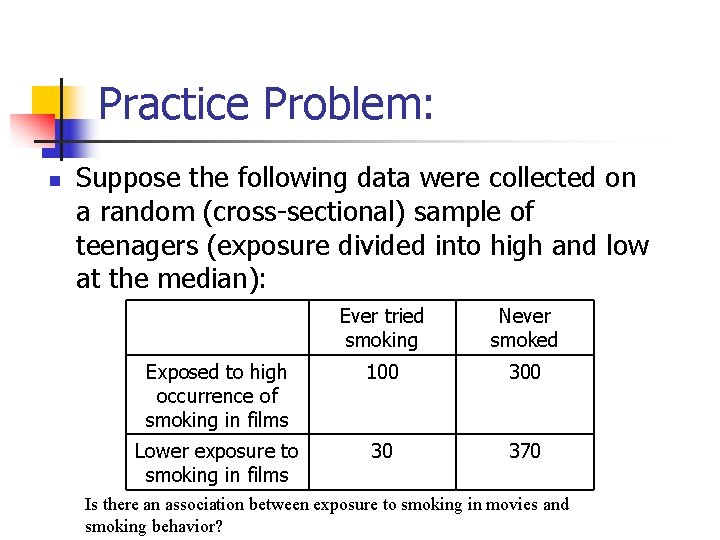

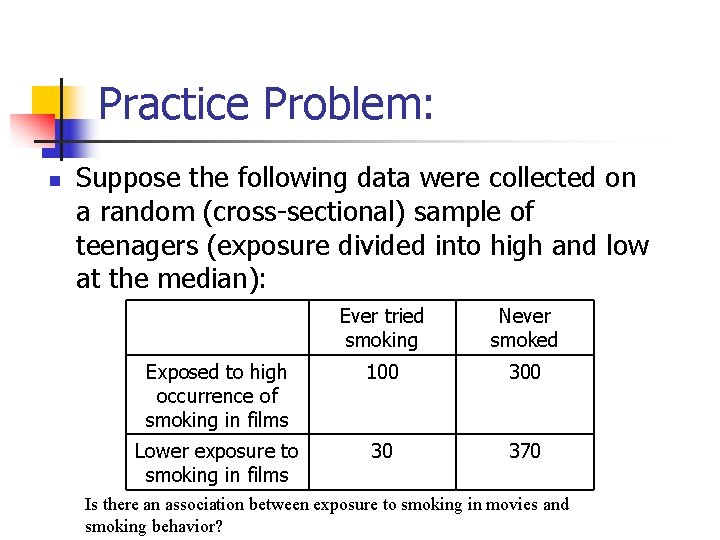

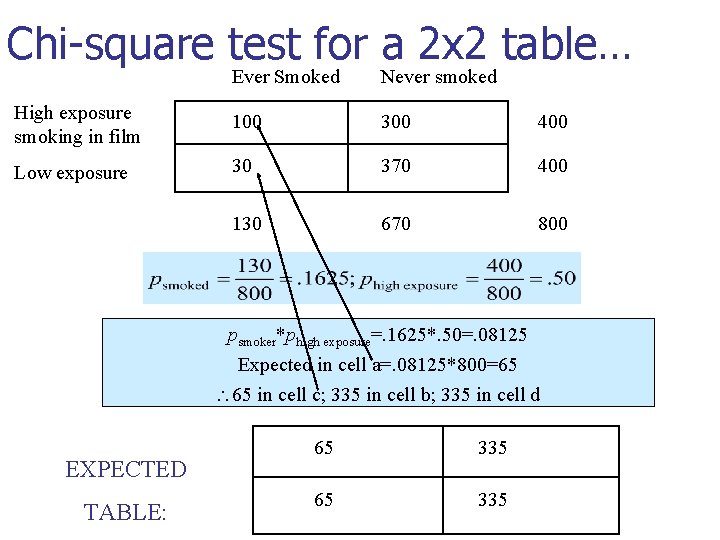

Practice Problem:

Suppose the following data were collected on a random (cross-sectional) sample of teenagers (exposure divided into high and low at the median):

| | Ever tried smoking | Never smoked |
|---|---|---|
| Exposed to high occurrence of smoking in films | 100 | 300 |
| Lower exposure to smoking in films | 30 | 370 |

Is there an association between exposure to smoking in movies and smoking behavior?

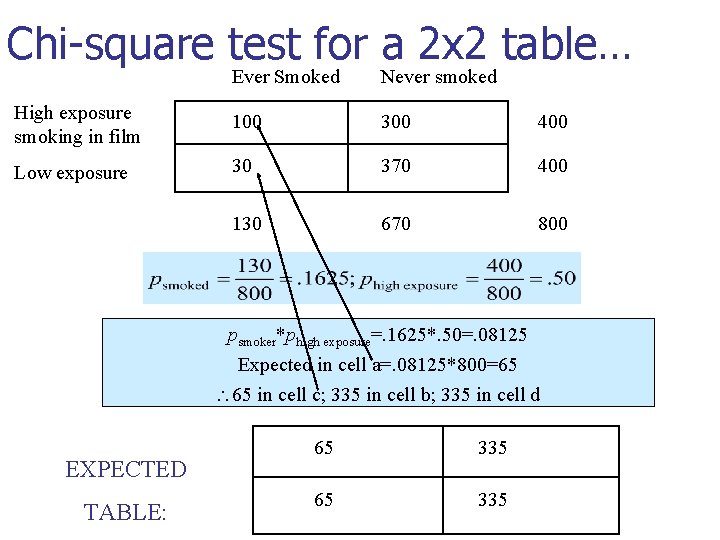

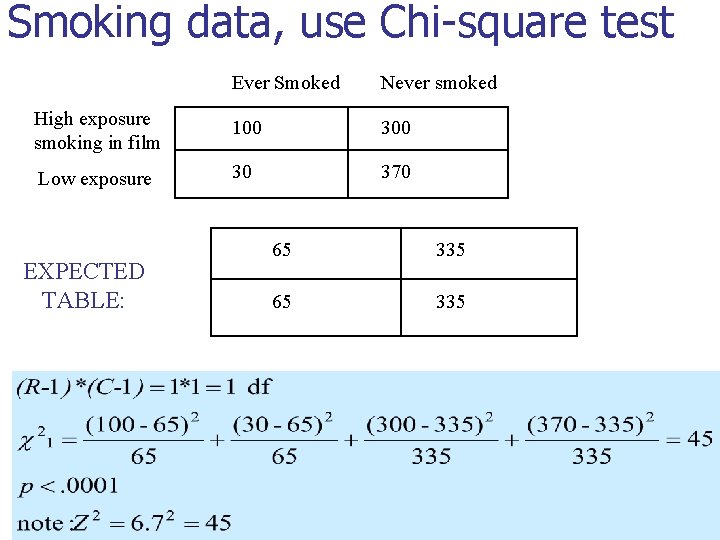

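Chi-square test for a 2 x 2 table…

| | Ever smoked | Never smoked | Total |
|---|---|---|---|
| High exposure to smoking in film | 100 (cell a) | 300 (cell b) | 400 |
| Low exposure | 30 (cell c) | 370 (cell d) | 400 |
| Total | 130 | 670 | 800 |

p(smoker) × p(high exposure) = .1625 × .50 = .08125

Expected in cell a = .08125 × 800 = 65; likewise 65 in cell c, 335 in cell b, and 335 in cell d.

EXPECTED TABLE:

| | Ever smoked | Never smoked |
|---|---|---|
| High exposure | 65 | 335 |
| Low exposure | 65 | 335 |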

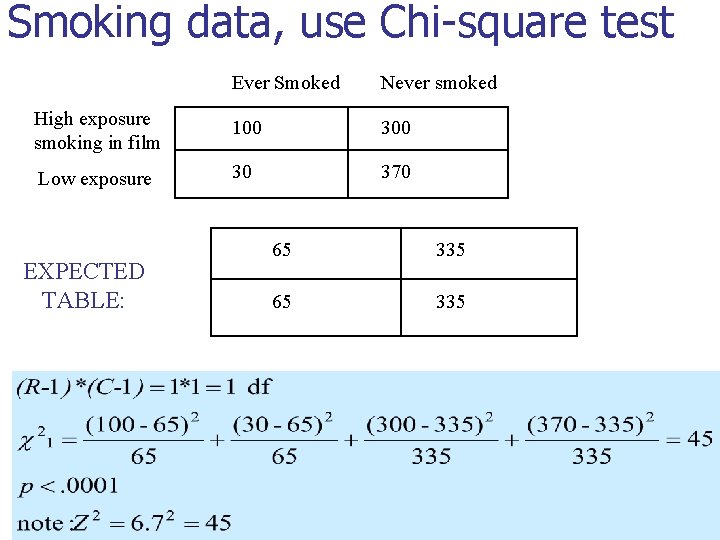

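Smoking data, use Chi-square test

| | Ever smoked (observed) | Never smoked (observed) | Ever smoked (expected) | Never smoked (expected) |
|---|---|---|---|---|
| High exposure to smoking in film | 100 | 300 | 65 | 335 |
| Low exposure | 30 | 370 | 65 | 335 |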
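The chi-square statistic for the smoking data can be worked through from the observed counts. This is a minimal sketch; the p-value shortcut via `erfc` is valid only for 1 degree of freedom, and the variable names are illustrative.

```python
from math import erfc, sqrt

# Observed counts: rows = high / low exposure, columns = ever / never smoked.
observed = [[100, 300],
            [30, 370]]

row_totals = [sum(row) for row in observed]          # 400, 400
col_totals = [sum(col) for col in zip(*observed)]    # 130, 670
grand_total = sum(row_totals)                        # 800

expected = [[r * c / grand_total for c in col_totals] for r in row_totals]

chi_square = sum(
    (observed[i][j] - expected[i][j]) ** 2 / expected[i][j]
    for i in range(2)
    for j in range(2)
)

# With 1 degree of freedom, chi-square is a squared standard normal, so the
# upper-tail p-value equals erfc(sqrt(x / 2)). This shortcut is for df = 1 only.
p_value = erfc(sqrt(chi_square / 2))

print(expected)
print(f"chi-square = {chi_square:.1f} on 1 df, p = {p_value:.1e}")
```

The statistic is far above 1, its degrees of freedom, so by the rule of thumb given earlier the association between film exposure and smoking is statistically significant.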

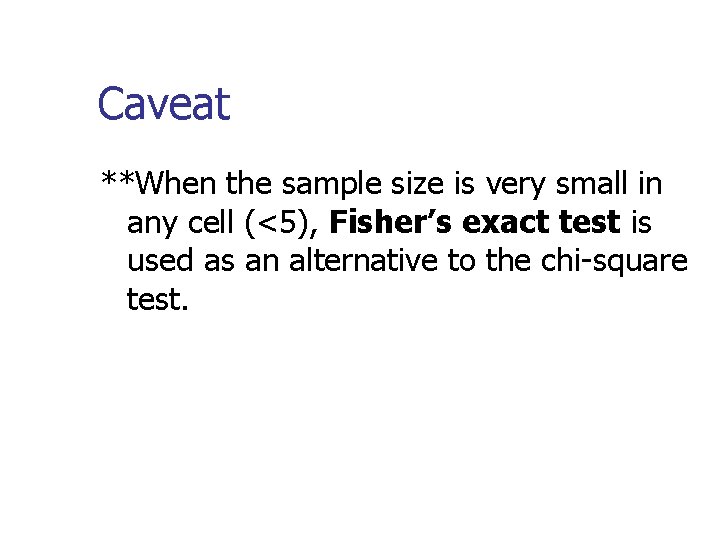

Caveat

When the sample size is very small in any cell (expected count <5), Fisher’s exact test is used as an alternative to the chi-square test.
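For a 2 x 2 table, Fisher's exact test can be computed directly from the hypergeometric distribution. The sketch below uses a hypothetical sparse table that is not from the slides, and it applies one common two-sided definition (sum the probabilities of all tables no more likely than the observed one); the function name is illustrative.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p-value for the 2 x 2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    n = row1 + row2

    def prob(x):
        # Hypergeometric probability of x in the top-left cell, margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    lo = max(0, col1 - row2)
    hi = min(row1, col1)
    p_observed = prob(a)
    # Sum the probabilities of all tables at least as unlikely as the observed one.
    return sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_observed * (1 + 1e-9))


# Hypothetical sparse table (not from the slides): expected counts are all 2.
p = fisher_exact_two_sided(3, 1, 1, 3)
print(f"Fisher's exact two-sided p = {p:.4f}")
```

The small tolerance in the comparison guards against floating-point ties between equally likely tables.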

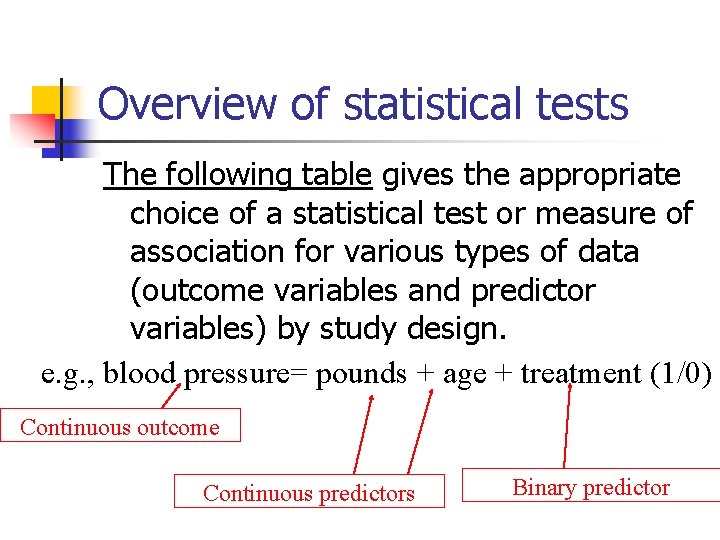

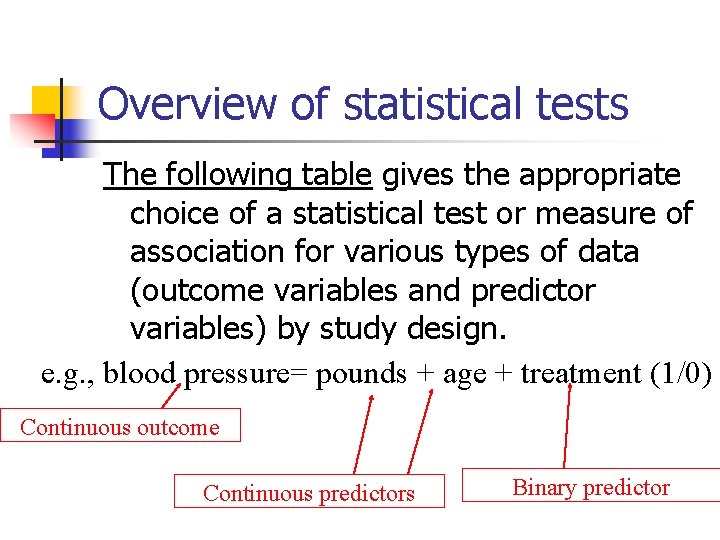

Overview of statistical tests

The following table gives the appropriate choice of a statistical test or measure of association for various types of data (outcome variables and predictor variables) by study design.

e.g., blood pressure = pounds + age + treatment (1/0), where blood pressure is a continuous outcome, pounds and age are continuous predictors, and treatment is a binary predictor.

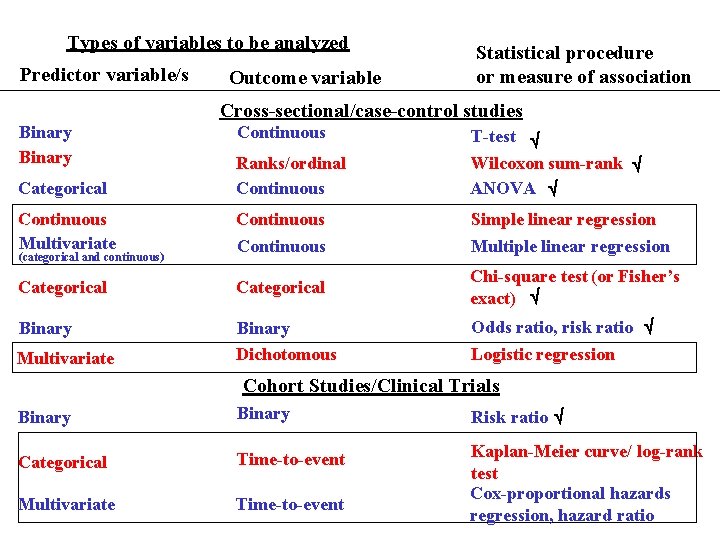

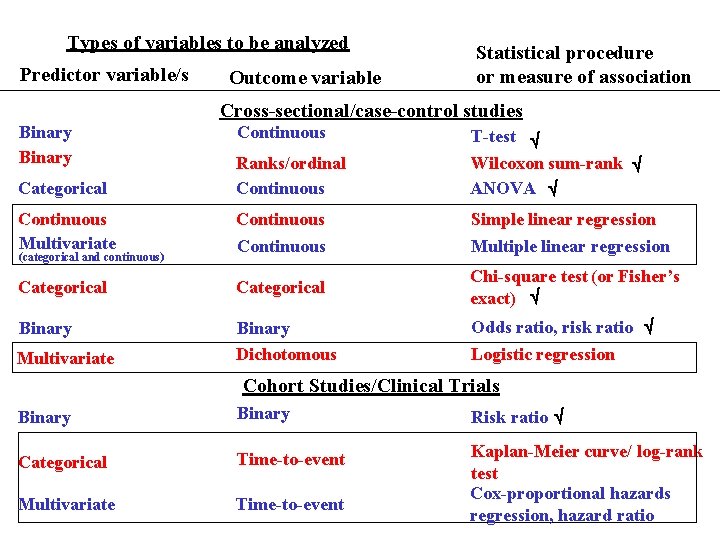

Types of variables to be analyzed

| Predictor variable/s | Outcome variable | Statistical procedure or measure of association |
|---|---|---|
| **Cross-sectional/case-control studies** | | |
| Binary | Continuous | T-test |
| Binary | Ranks/ordinal | Wilcoxon rank-sum test |
| Categorical | Continuous | ANOVA |
| Continuous | Continuous | Simple linear regression |
| Multivariate (categorical and continuous) | Continuous | Multiple linear regression |
| Categorical | Categorical | Chi-square test (or Fisher’s exact) |
| Binary | Binary | Odds ratio, risk ratio |
| Multivariate | Dichotomous | Logistic regression |
| **Cohort studies/clinical trials** | | |
| Binary | Binary | Risk ratio |
| Categorical | Time-to-event | Kaplan-Meier curve / log-rank test |
| Multivariate | Time-to-event | Cox proportional hazards regression, hazard ratio |

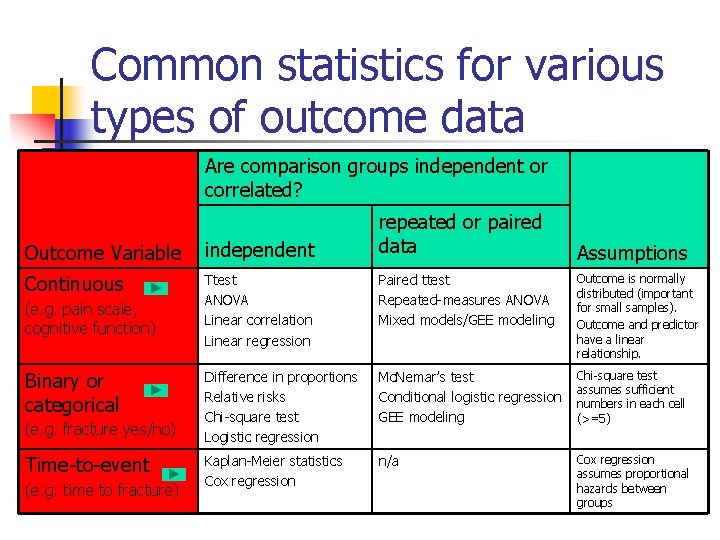

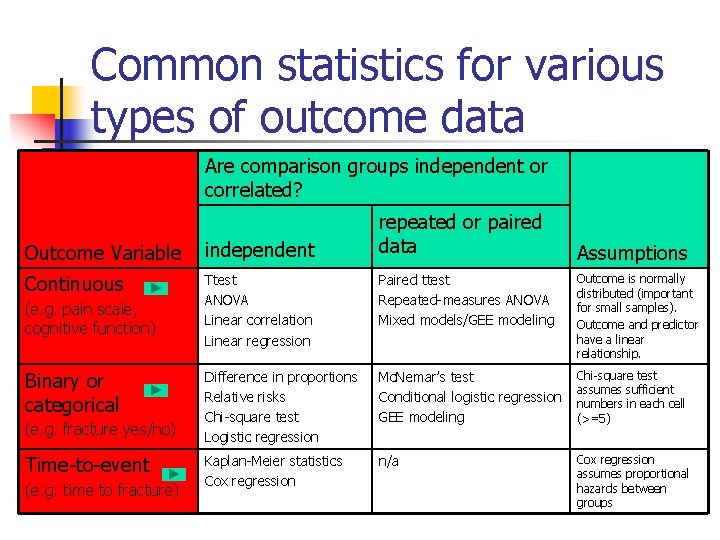

Common statistics for various types of outcome data

Are comparison groups independent or correlated?

| Outcome variable | Independent | Repeated or paired data | Assumptions |
|---|---|---|---|
| Continuous (e.g. pain scale, cognitive function) | T-test; ANOVA; linear correlation; linear regression | Paired t-test; repeated-measures ANOVA; mixed models/GEE modeling | Outcome is normally distributed (important for small samples). Outcome and predictor have a linear relationship. |
| Binary or categorical (e.g. fracture yes/no) | Difference in proportions; relative risks; chi-square test; logistic regression | McNemar’s test; conditional logistic regression; GEE modeling | Chi-square test assumes sufficient numbers in each cell (>=5) |
| Time-to-event (e.g. time to fracture) | Kaplan-Meier statistics; Cox regression | n/a | Cox regression assumes proportional hazards between groups |

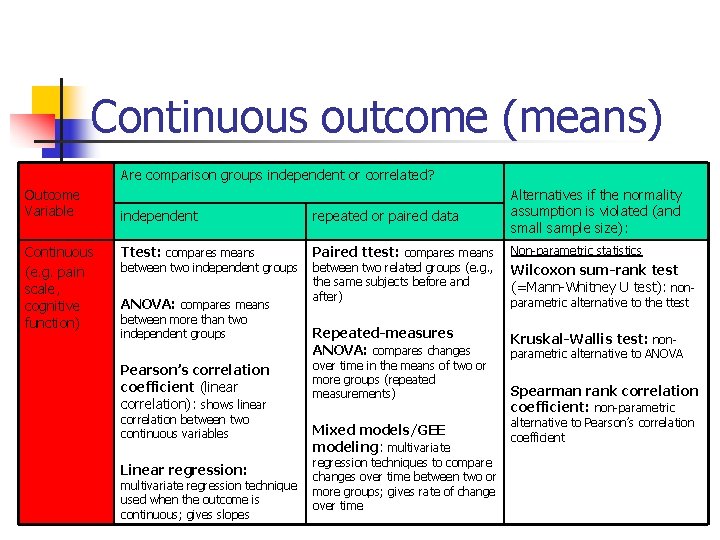

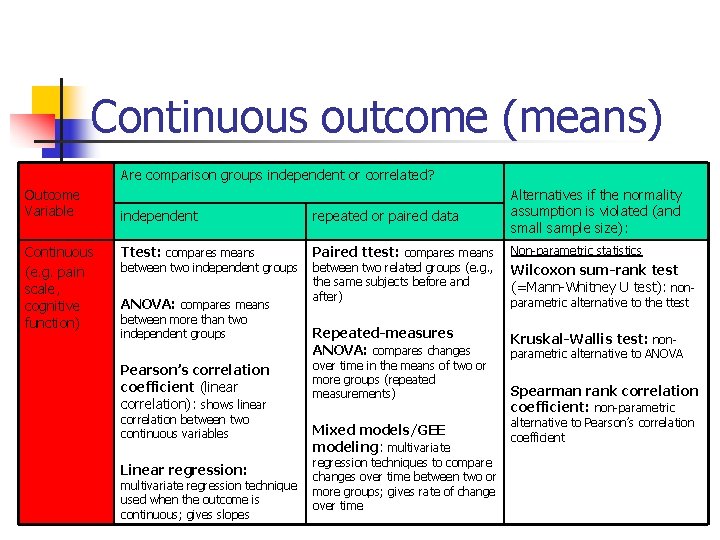

Continuous outcome (means)

Outcome variable: continuous (e.g. pain scale, cognitive function). Are comparison groups independent or correlated?

Independent:
- T-test: compares means between two independent groups
- ANOVA: compares means between more than two independent groups
- Pearson’s correlation coefficient (linear correlation): shows linear correlation between two continuous variables
- Linear regression: multivariate regression technique used when the outcome is continuous; gives slopes

Repeated or paired data:
- Paired t-test: compares means between two related groups (e.g., the same subjects before and after)
- Repeated-measures ANOVA: compares changes over time in the means of two or more groups (repeated measurements)
- Mixed models/GEE modeling: multivariate regression techniques to compare changes over time between two or more groups; gives rate of change over time

Alternatives if the normality assumption is violated (and small sample size): non-parametric statistics
- Wilcoxon rank-sum test (= Mann-Whitney U test): non-parametric alternative to the t-test
- Kruskal-Wallis test: non-parametric alternative to ANOVA
- Spearman rank correlation coefficient: non-parametric alternative to Pearson’s correlation coefficient

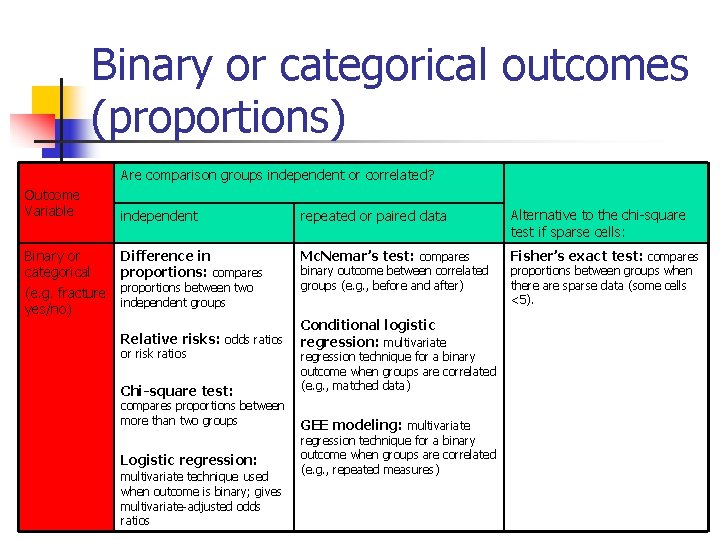

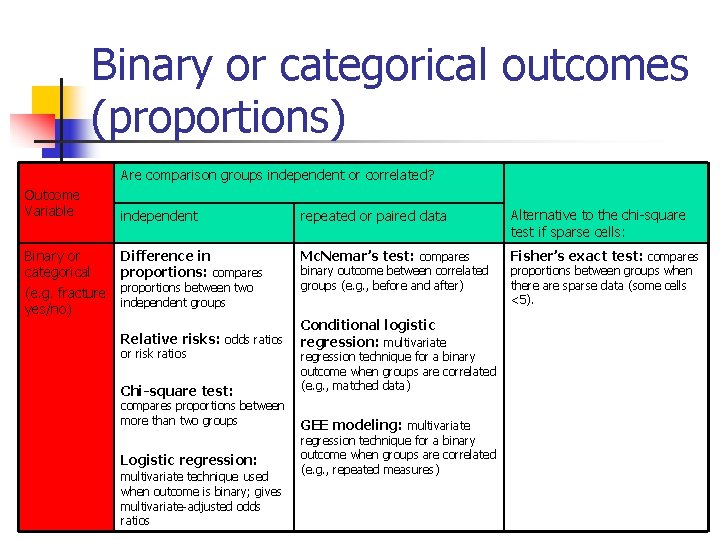

Binary or categorical outcomes (proportions)

Outcome variable: binary or categorical (e.g. fracture yes/no). Are comparison groups independent or correlated?

Independent:
- Difference in proportions: compares proportions between two independent groups
- Relative risks: odds ratios or risk ratios
- Chi-square test: compares proportions between more than two groups
- Logistic regression: multivariate technique used when outcome is binary; gives multivariate-adjusted odds ratios

Repeated or paired data:
- McNemar’s test: compares binary outcome between correlated groups (e.g., before and after)
- Conditional logistic regression: multivariate regression technique for a binary outcome when groups are correlated (e.g., matched data)
- GEE modeling: multivariate regression technique for a binary outcome when groups are correlated (e.g., repeated measures)

Alternative to the chi-square test if sparse cells:
- Fisher’s exact test: compares proportions between groups when there are sparse data (some cells <5).

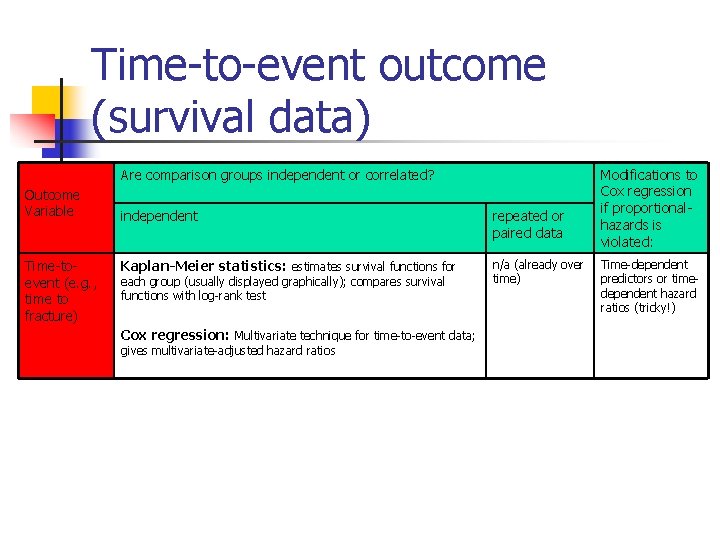

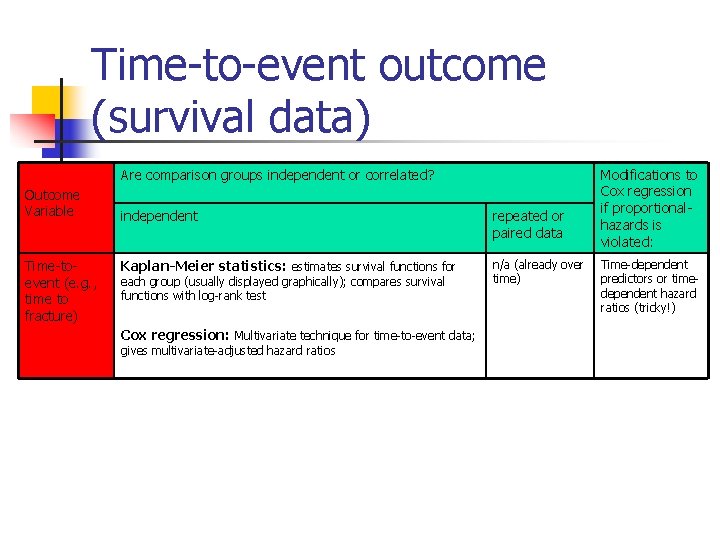

Time-to-event outcome (survival data)

Outcome variable: time-to-event (e.g., time to fracture). Are comparison groups independent or correlated?

Independent:
- Kaplan-Meier statistics: estimates survival functions for each group (usually displayed graphically); compares survival functions with log-rank test
- Cox regression: multivariate technique for time-to-event data; gives multivariate-adjusted hazard ratios

Repeated or paired data:
- n/a (already over time)

Modifications to Cox regression if proportional-hazards is violated:
- Time-dependent predictors or time-dependent hazard ratios (tricky!)

Homework

- Continue reading textbook
- Problem Set 7
- Journal Article