More Intel machine language and one more look at other architectures

Data manipulation instructions

- Includes: add, sub, cmp, and, or, not
- First operand is a register; second may require a memory fetch
- Takes 8-17 clock cycles, as described on the next slide

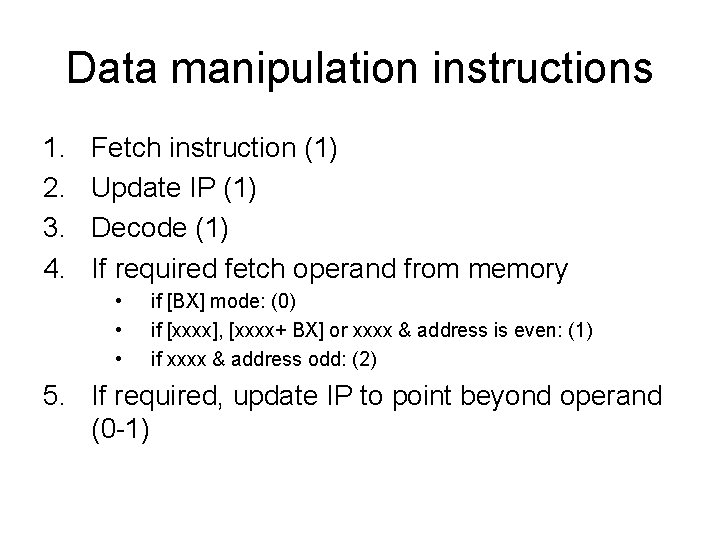

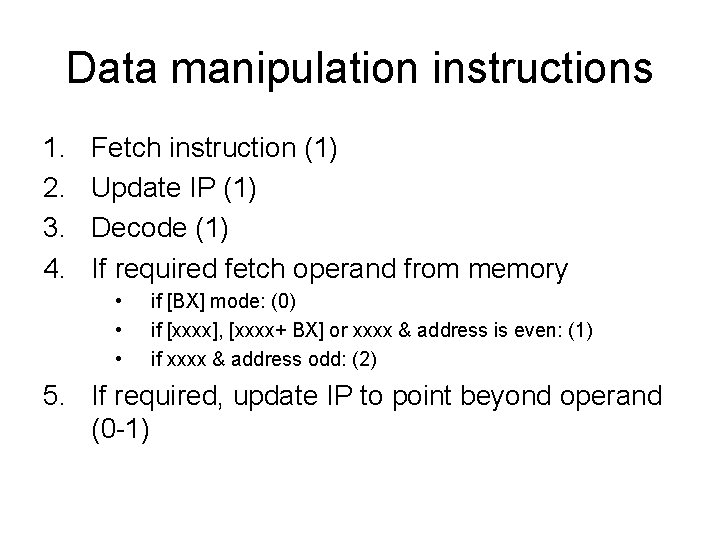

Data manipulation instructions

1. Fetch instruction (1)
2. Update IP (1)
3. Decode (1)
4. If required, fetch operand from memory
   - if [BX] mode: (0)
   - if [xxxx], [xxxx+BX] or xxxx & address is even: (1)
   - if xxxx & address is odd: (2)
5. If required, update IP to point beyond operand (0-1)

![Data manipulation instructions 6 Compute address of operand if not BX Data manipulation instructions 6. Compute address of operand • • • if not [BX]](https://slidetodoc.com/presentation_image/7015e81d45f33c4bf2b45db3ad5bad37/image-4.jpg)

Data manipulation instructions

6. Compute address of operand
   - if not [BX] or [xxxx+BX]: (0)
   - if [BX]: (1)
   - if [xxxx+BX]: (2)
7. Get value of operand & send to ALU
   - if constant: (0)
   - if register: (1)
   - if word-aligned RAM: (2)
   - if odd-addressed RAM: (3)

Data manipulation instructions

8. Fetch value of first operand (register) & send to ALU (1)
9. Perform operation (1)
10. Store result in first operand (register) (1)
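The per-case costs in steps 6 and 7 can be read as two lookup tables. The sketch below is only an illustration of that reading: the cycle numbers are copied from the slides, while the dictionary keys and the function name `operand_cycles` are made-up labels, not Intel terminology.

```python
# Cycle costs copied from steps 6 and 7 of the slides.
# The key names below are illustrative labels chosen for this sketch.
ADDRESS_CYCLES = {
    "none": 0,        # operand is neither [BX] nor [xxxx+BX]
    "[BX]": 1,
    "[xxxx+BX]": 2,
}

VALUE_CYCLES = {
    "constant": 0,
    "register": 1,
    "ram_even": 2,    # word-aligned RAM
    "ram_odd": 3,     # odd-addressed RAM
}


def operand_cycles(address_mode, value_source):
    """Cycles spent in steps 6 and 7 for the second operand."""
    return ADDRESS_CYCLES[address_mode] + VALUE_CYCLES[value_source]


print(operand_cycles("none", "register"))       # step 6 costs 0, step 7 costs 1
print(operand_cycles("[xxxx+BX]", "ram_odd"))   # step 6 costs 2, step 7 costs 3
```

The odd-address penalty shows up as one extra cycle in step 7, which is why the same instruction can take a different number of cycles depending on where its operand lives.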

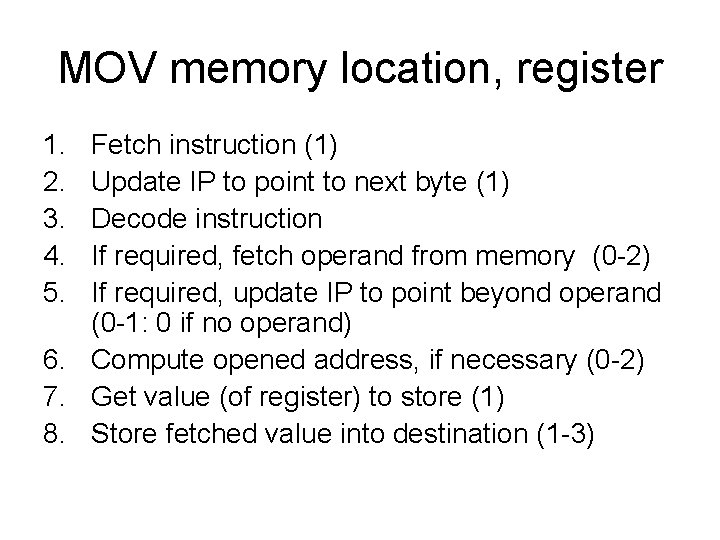

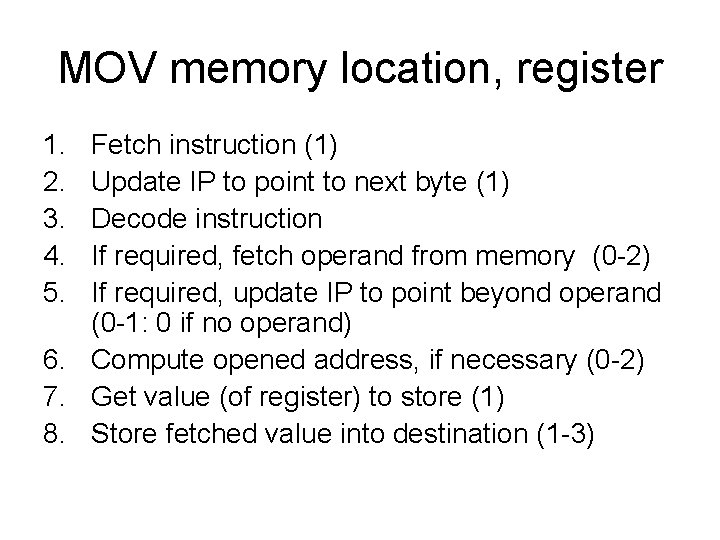

Data movement operation with RAM destination

- Takes 5-11 clock cycles
- As with most instructions, the variation is due to the number of memory fetches that may be required during execution

MOV memory location, register

1. Fetch instruction (1)
2. Update IP to point to next byte (1)
3. Decode instruction
4. If required, fetch operand from memory (0-2)
5. If required, update IP to point beyond operand (0-1: 0 if no operand)
6. Compute operand address, if necessary (0-2)
7. Get value (of register) to store (1)
8. Store fetched value into destination (1-3)

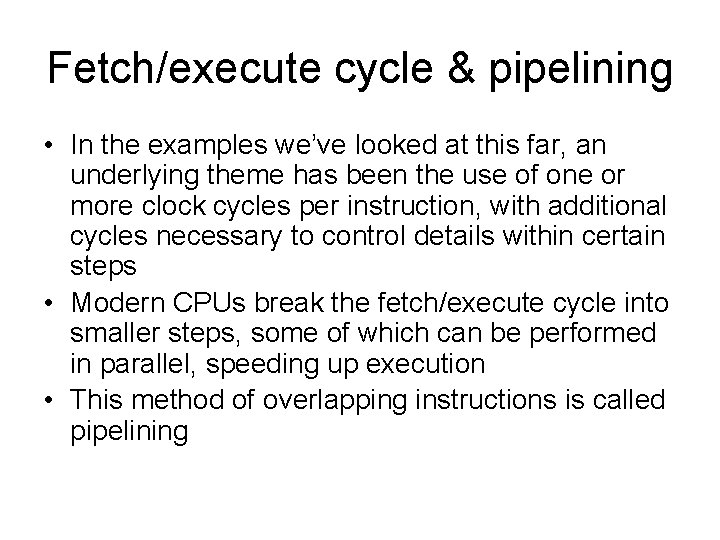

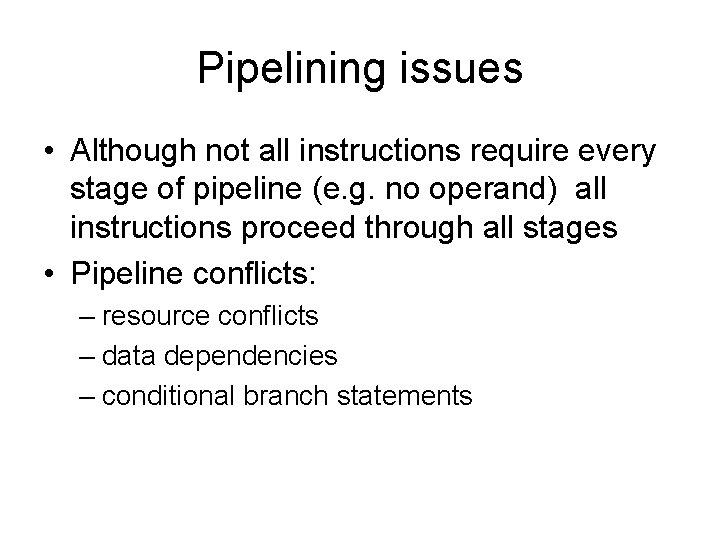

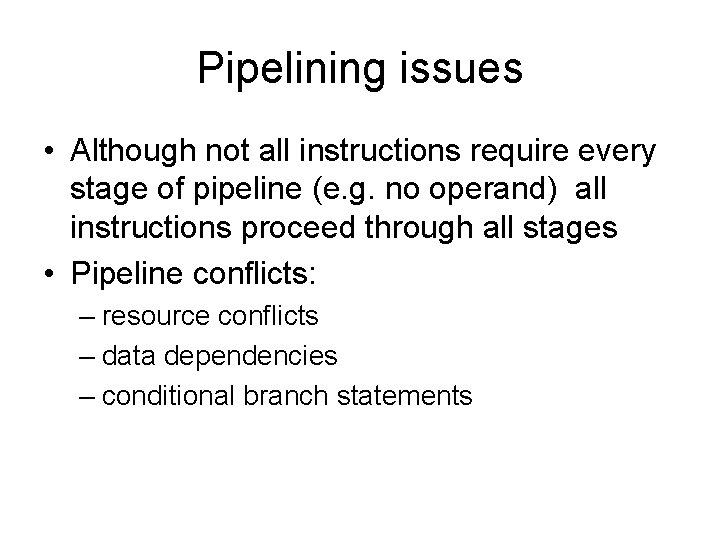

Fetch/execute cycle & pipelining

- In the examples we've looked at thus far, an underlying theme has been the use of one or more clock cycles per instruction, with additional cycles necessary to control details within certain steps
- Modern CPUs break the fetch/execute cycle into smaller steps, some of which can be performed in parallel, speeding up execution
- This method of overlapping instructions is called pipelining

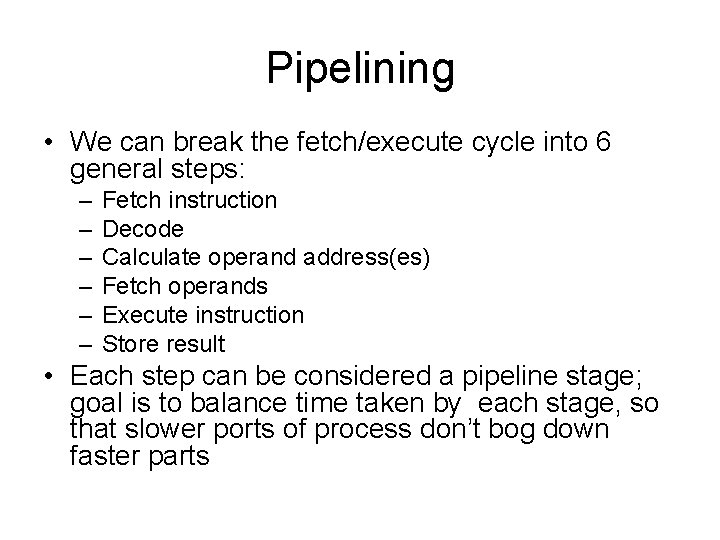

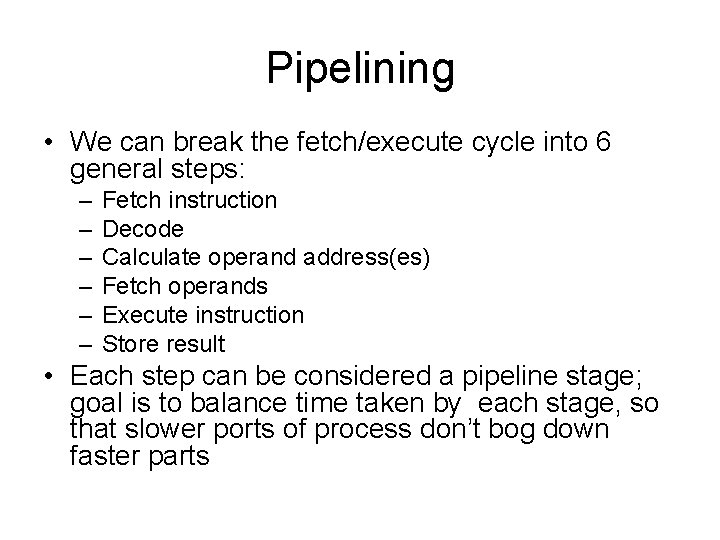

Pipelining

- We can break the fetch/execute cycle into 6 general steps:
  - Fetch instruction
  - Decode
  - Calculate operand address(es)
  - Fetch operands
  - Execute instruction
  - Store result
- Each step can be considered a pipeline stage; the goal is to balance the time taken by each stage, so that slower parts of the process don't bog down faster parts
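To see why overlapping the six stages helps, here is a minimal sketch that counts cycles with and without pipelining. It assumes an idealized pipeline in which every stage takes exactly one cycle and nothing ever stalls; the function names are invented for this example.

```python
STAGES = [
    "Fetch instruction",
    "Decode",
    "Calculate operand address(es)",
    "Fetch operands",
    "Execute instruction",
    "Store result",
]


def sequential_cycles(n_instructions, n_stages=len(STAGES)):
    """Each instruction finishes all stages before the next one starts."""
    return n_instructions * n_stages


def pipelined_cycles(n_instructions, n_stages=len(STAGES)):
    """Idealized pipeline: one new instruction enters per cycle, no stalls."""
    if n_instructions == 0:
        return 0
    return n_stages + (n_instructions - 1)


def timing_diagram(n_instructions, n_stages=len(STAGES)):
    """One row per instruction, one column per cycle; S1..S6 mark the stage."""
    total = pipelined_cycles(n_instructions, n_stages)
    rows = []
    for i in range(n_instructions):
        cells = ["--"] * total
        for s in range(n_stages):
            cells[i + s] = f"S{s + 1}"
        rows.append(f"I{i + 1}: " + " ".join(cells))
    return "\n".join(rows)


print(sequential_cycles(4), pipelined_cycles(4))
print(timing_diagram(3))
```

Under these assumptions the first instruction still takes six cycles, but each later instruction completes one cycle after the previous one, so the saving grows with the length of the instruction stream.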

Standard von Neumann model vs. pipelining

Source: http://www.cs.cmu.edu/afs/cs/academic/class/15745-s06/web/handouts/11.pdf

Pipelining issues

- Although not all instructions require every stage of the pipeline (e.g. no operand), all instructions proceed through all stages
- Pipeline conflicts:
  - resource conflicts
  - data dependencies
  - conditional branch statements
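A data dependency arises when an instruction reads a register that a nearby earlier instruction has not finished writing. The sketch below flags such read-after-write pairs in a short instruction sequence. The `Instr` record, the `window` parameter, and the sample program are all hypothetical; a real pipeline would decide this in hardware from the decoded instruction.

```python
from typing import NamedTuple


class Instr(NamedTuple):
    text: str            # assembly text, for display only
    writes: frozenset    # registers this instruction writes
    reads: frozenset     # registers this instruction reads


def raw_dependencies(program, window=1):
    """Return (producer_index, consumer_index, register) triples where the
    consumer reads a register written by one of the `window` preceding
    instructions."""
    found = []
    for j, consumer in enumerate(program):
        for i in range(max(0, j - window), j):
            for reg in sorted(program[i].writes & consumer.reads):
                found.append((i, j, reg))
    return found


# Two-operand instructions: the first operand is both read and written.
program = [
    Instr("add ax, bx", frozenset({"ax"}), frozenset({"ax", "bx"})),
    Instr("sub cx, ax", frozenset({"cx"}), frozenset({"cx", "ax"})),
    Instr("and dx, bx", frozenset({"dx"}), frozenset({"dx", "bx"})),
]

for producer, consumer, reg in raw_dependencies(program):
    print(f"{program[consumer].text!r} needs {reg} from {program[producer].text!r}")
```

Here `sub cx, ax` depends on the result of `add ax, bx`, so it cannot fetch its operands until the earlier instruction has stored its result; `and dx, bx` has no such conflict.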

Intel & pipelining

- 8086-80486 were single-stage pipeline architectures
- Pentium: 2 five-stage pipelines
  - Pentium II increased to 12 (mostly for MMX)
  - Pentium III: 14
  - Pentium IV: 24

MIPS: a RISC architecture

- Little-endian
- Word-addressable
- Fixed-length instructions
- Load-store architecture:
  - only load & store operations have RAM access
  - all other instructions must have register operands
  - requires large register set
- 5 or 8 stage pipelining
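The load-store rule can be illustrated with a toy machine in which only `load` and `store` touch memory and arithmetic works purely on registers. This is a simplified model for illustration, not MIPS itself: the class and method names are invented, memory is indexed by word, and the register count of 32 is chosen to match the MIPS general-purpose register set.

```python
class LoadStoreMachine:
    """Toy load-store machine: only load() and store() access memory."""

    def __init__(self, memory_words=16, register_count=32):
        self.memory = [0] * memory_words
        self.registers = [0] * register_count

    def load(self, reg, address):
        # RAM -> register
        self.registers[reg] = self.memory[address]

    def store(self, reg, address):
        # register -> RAM
        self.memory[address] = self.registers[reg]

    def add(self, dest, src1, src2):
        # Register operands only; no memory access is possible here.
        self.registers[dest] = self.registers[src1] + self.registers[src2]


machine = LoadStoreMachine()
machine.memory[0], machine.memory[1] = 7, 35

# memory[2] = memory[0] + memory[1] takes four instructions:
machine.load(1, 0)
machine.load(2, 1)
machine.add(3, 1, 2)
machine.store(3, 2)

print(machine.memory[2])
```

Adding two values held in memory needs two loads, one add, and one store, which is why such architectures rely on a large register set to keep intermediate values out of RAM.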

One more architecture: the Java Virtual Machine

- Java compiler is platform-independent: makes no assumptions about characteristics of underlying hardware
- JVM required to run Java byte code
- Works as a wrapper around a real machine's architecture, so the JVM itself is extremely platform-dependent

How it works

- Java compiler translates source code into Java byte code (JBC)
- JVM acts as interpreter: translates byte codes into machine instructions specific to the hardware platform it's running on
- Acts like a giant switch/case structure: each bytecode instruction triggers a jump to a specific block of code that implements the instruction in the architecture's native machine language
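The switch/case idea can be sketched as a small dispatch loop over a byte array. This is a minimal illustration, not how any production JVM is written: it handles only four instructions, Python branches stand in for the native-code blocks, and integer overflow is ignored. The opcode values are intended to match `bipush`, `iadd`, `imul`, and `ireturn` in the JVM instruction set, but treat them as illustrative.

```python
BIPUSH, IADD, IMUL, IRETURN = 0x10, 0x60, 0x68, 0xAC


def run(bytecode):
    """Interpret a tiny subset of stack-based bytecode."""
    stack = []
    pc = 0
    while pc < len(bytecode):
        opcode = bytecode[pc]          # one-byte opcode
        pc += 1
        if opcode == BIPUSH:           # one operand byte follows
            value = bytecode[pc]
            pc += 1
            if value > 127:            # operand is a signed byte
                value -= 256
            stack.append(value)
        elif opcode == IADD:           # no operand bytes
            b = stack.pop()
            a = stack.pop()
            stack.append(a + b)
        elif opcode == IMUL:
            b = stack.pop()
            a = stack.pop()
            stack.append(a * b)
        elif opcode == IRETURN:
            return stack.pop()
        else:
            raise ValueError(f"unsupported opcode 0x{opcode:02x}")
    raise ValueError("reached end of bytecode without ireturn")


# Computes 2 + 3 * 4 using only the operand stack.
program = bytes([BIPUSH, 2, BIPUSH, 3, BIPUSH, 4, IMUL, IADD, IRETURN])
print(run(program))
```

Every intermediate value lives on the stack rather than in a named register, so the same byte sequence can run on any platform that provides an interpreter like this one.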

Characteristics of JVM and JBC

- Stack-based language & machine
- Instructions consist of one-byte opcode followed by 0 or more operands
- 4 registers

Characteristics of JVM and JBC

- All memory references are based on register offsets; neither pointers nor absolute addresses are used
- No general-purpose registers
  - means more memory fetches, detrimental to performance
  - tradeoff is high degree of portability