Module IV Analyzing Evidence and Providing Effective Feedback

Caldwell Early College High School, March 29, 2012

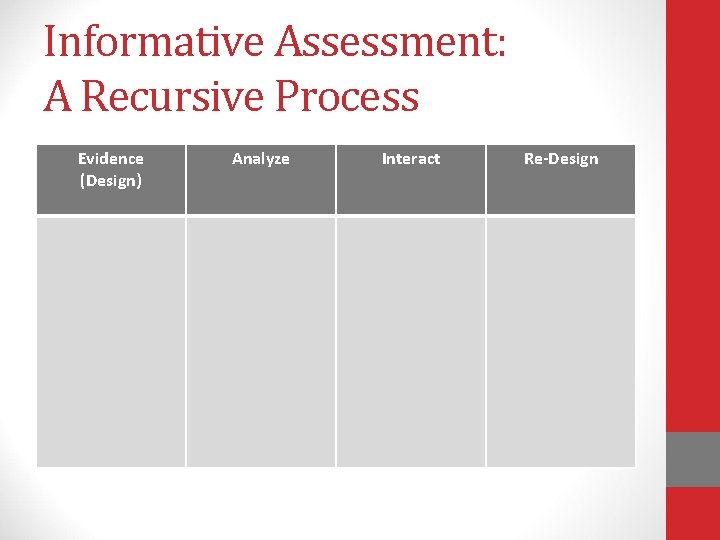

Informative Assessment: A Recursive Process
(Cycle diagram; stage labels: Design, Re-Design, Interact, Enact, Analyze)

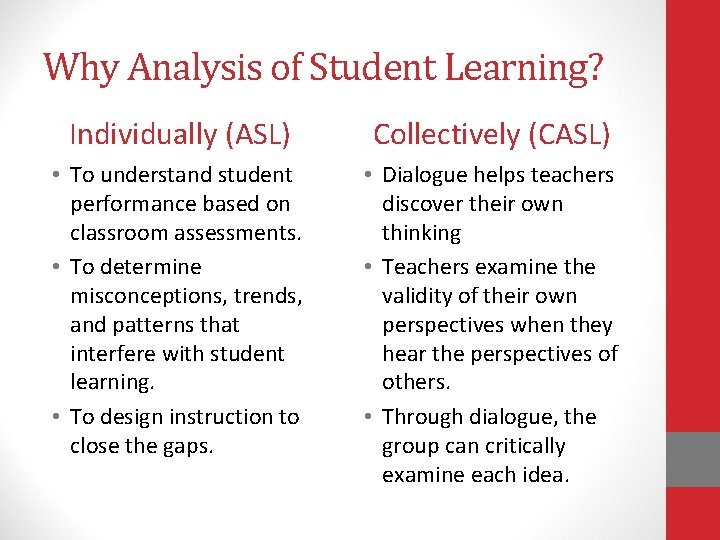

Why Analysis of Student Learning?

Individually (ASL)
• To understand student performance based on classroom assessments.
• To determine misconceptions, trends, and patterns that interfere with student learning.
• To design instruction to close the gaps.

Collectively (CASL)
• Dialogue helps teachers discover their own thinking.
• Teachers examine the validity of their own perspectives when they hear the perspectives of others.
• Through dialogue, the group can critically examine each idea.

Ladder of Inference (Argyris, 1985)
• Mental models influence how we understand the world and take action.
• We often react to situations without testing our assumptions.
• Teachers observe students in action and make decisions based on their models.
• As we become aware of our own thinking, we can avoid jumping to conclusions.
(Source: Langer, Colton, & Goff, 2003, p. 38.)

Informative Assessment: A Recursive Process
(Cycle diagram; stage labels: Evidence (Design), Analyze, Interact, Re-Design)

ASL helps us examine the right questions
• Content: What does the student understand? What misconceptions may be present?
• Assessment: How well did this assignment work in giving us information about the student’s understanding?
• Students: What characteristics of the student might have influenced this performance? What do we know about the student (e.g., learning style)?
• Pedagogy: How well did the instructional strategies work? What has worked in the past with this student?
• Context: What conditions may have affected this performance?
(Source: Langer, Colton, & Goff, 2003, p. 38.)

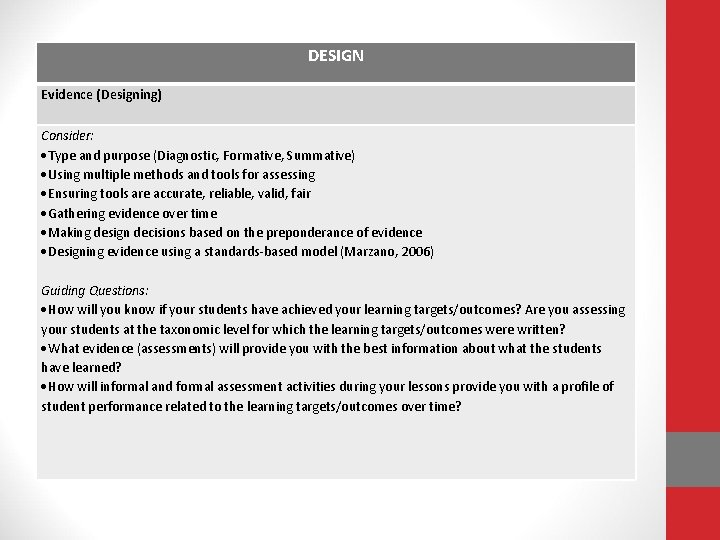

DESIGN
Evidence (Designing)

Consider:
• Type and purpose (Diagnostic, Formative, Summative)
• Using multiple methods and tools for assessing
• Ensuring tools are accurate, reliable, valid, fair
• Gathering evidence over time
• Making design decisions based on the preponderance of evidence
• Designing evidence using a standards-based model (Marzano, 2006)

Guiding Questions:
• How will you know if your students have achieved your learning targets/outcomes?
• Are you assessing your students at the taxonomic level for which the learning targets/outcomes were written?
• What evidence (assessments) will provide you with the best information about what the students have learned?
• How will informal and formal assessment activities during your lessons provide you with a profile of student performance related to the learning targets/outcomes over time?

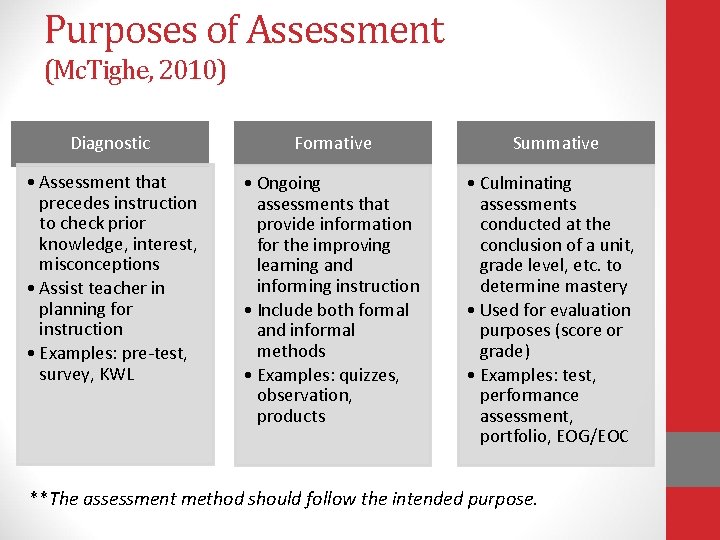

Purposes of Assessment (McTighe, 2010)

Diagnostic
• Assessment that precedes instruction to check prior knowledge, interest, misconceptions
• Assists the teacher in planning for instruction
• Examples: pre-test, survey, KWL

Formative
• Ongoing assessments that provide information for improving learning and informing instruction
• Include both formal and informal methods
• Examples: quizzes, observation, products

Summative
• Culminating assessments conducted at the conclusion of a unit, grade level, etc. to determine mastery
• Used for evaluation purposes (score or grade)
• Examples: test, performance assessment, portfolio, EOG/EOC

**The assessment method should follow the intended purpose.

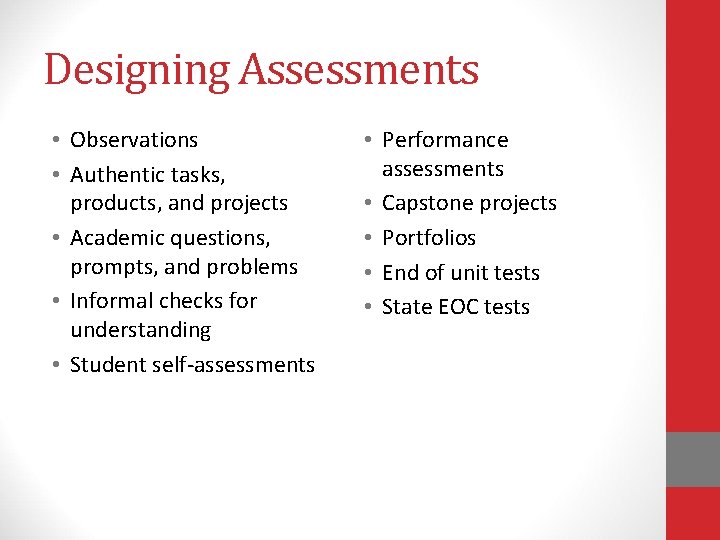

Designing Assessments
• Observations
• Authentic tasks, products, and projects
• Academic questions, prompts, and problems
• Informal checks for understanding
• Student self-assessments
• Performance assessments
• Capstone projects
• Portfolios
• End of unit tests
• State EOC tests

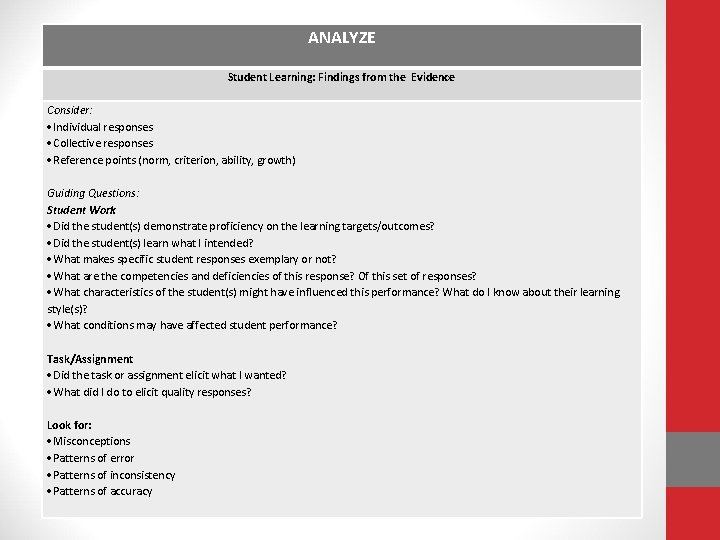

ANALYZE
Student Learning: Findings from the Evidence

Consider:
• Individual responses
• Collective responses
• Reference points (norm, criterion, ability, growth)

Guiding Questions: Student Work
• Did the student(s) demonstrate proficiency on the learning targets/outcomes?
• Did the student(s) learn what I intended?
• What makes specific student responses exemplary or not?
• What are the competencies and deficiencies of this response? Of this set of responses?
• What characteristics of the student(s) might have influenced this performance? What do I know about their learning style(s)?
• What conditions may have affected student performance?

Guiding Questions: Task/Assignment
• Did the task or assignment elicit what I wanted?
• What did I do to elicit quality responses?

Look for:
• Misconceptions
• Patterns of error
• Patterns of inconsistency
• Patterns of accuracy

Frames of Reference for Interpreting Performance
• Ability-referenced, in which a student’s performance is interpreted in light of that student’s maximum possible performance.
• Growth-referenced, in which performance is compared with the student’s prior performance.
• Norm-referenced, in which interpretation is provided by comparing the student’s performance with the performance of others or with the typical performance for that student.
• Criterion-referenced, in which meaning is provided by describing what the student can and cannot do.

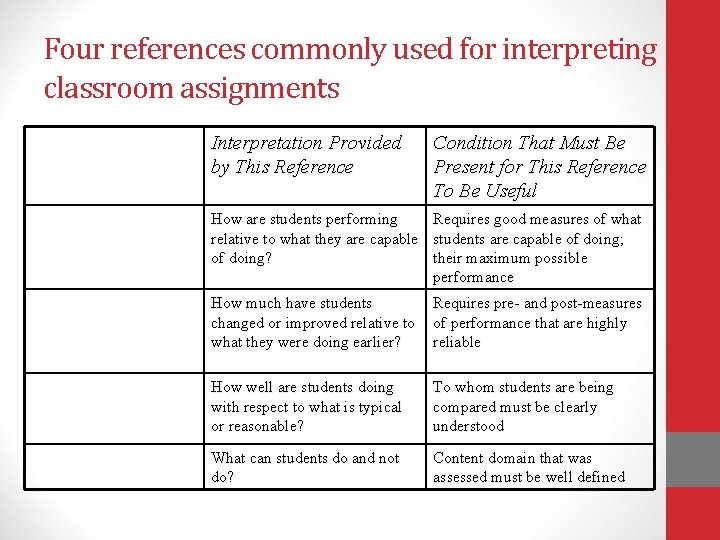

Four references commonly used for interpreting classroom assignments

| Reference | Interpretation Provided by This Reference | Condition That Must Be Present for This Reference To Be Useful |
| --- | --- | --- |
| Ability-referenced | How are students performing relative to what they are capable of doing? | Requires good measures of what students are capable of doing (their maximum possible performance) |
| Growth-referenced | How much have students changed or improved relative to what they were doing earlier? | Requires pre- and post-measures of performance that are highly reliable |
| Norm-referenced | How well are students doing with respect to what is typical or reasonable? | The group to whom students are being compared must be clearly understood |
| Criterion-referenced | What can students do and not do? | The content domain that was assessed must be well defined |
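The four frames of reference can be illustrated with a small arithmetic sketch. This is only an illustration, not part of the original presentation: the function names, the scores, and the skill labels are all hypothetical, and the "estimated maximum" for the ability frame is assumed to come from some separate, trustworthy measure.

```python
def ability_referenced(score: float, estimated_max: float) -> float:
    """Fraction of the student's estimated maximum performance that was reached.

    Assumes `estimated_max` comes from a good measure of capability.
    """
    if estimated_max <= 0:
        raise ValueError("estimated_max must be positive")
    return score / estimated_max


def growth_referenced(pre_score: float, post_score: float) -> float:
    """Change between an earlier and a later measure of the same skill."""
    return post_score - pre_score


def norm_referenced(score: float, group_scores: list[float]) -> float:
    """Percent of the comparison group scoring strictly below this score."""
    if not group_scores:
        raise ValueError("group_scores must not be empty")
    below = sum(1 for s in group_scores if s < score)
    return 100.0 * below / len(group_scores)


def criterion_referenced(results: dict[str, bool]) -> tuple[list[str], list[str]]:
    """Split a checklist of skills into what the student can and cannot do."""
    can_do = [skill for skill, ok in results.items() if ok]
    cannot_do = [skill for skill, ok in results.items() if not ok]
    return can_do, cannot_do


if __name__ == "__main__":
    # Hypothetical data for one student and a five-student comparison group.
    print(ability_referenced(78, 90))
    print(growth_referenced(62, 78))
    print(norm_referenced(78, [55, 60, 70, 78, 85]))
    print(criterion_referenced({"names elements": True, "balances equations": False}))
```

Note that the same score of 78 yields a different statement under each frame, which is why the condition in the right-hand column of the table matters: each calculation is only as meaningful as the measure it depends on.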

Criterion-referenced or norm-referenced interpretation?
• Ray scored 90 on a history exam.
• Using a periodic table, Ashley can name each of the elements.
• Matt knows more vocabulary words than 80% of his second-year German class.
• Using a map of the United States, Donna can show the location of the ten largest Civil War battles.
• In terms of grade-point average, Lawrence graduated 194th among 210 students in his high school class.
(Oosterhof, 2001)

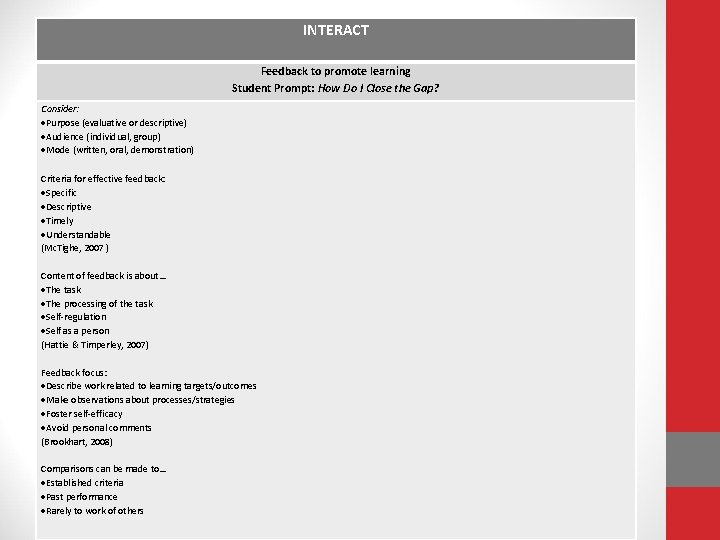

INTERACT
Feedback to promote learning
Student Prompt: How Do I Close the Gap?

Consider:
• Purpose (evaluative or descriptive)
• Audience (individual, group)
• Mode (written, oral, demonstration)

Criteria for effective feedback:
• Specific
• Descriptive
• Timely
• Understandable
(McTighe, 2007)

Content of feedback is about…
• The task
• The processing of the task
• Self-regulation
• Self as a person
(Hattie & Timperley, 2007)

Feedback focus:
• Describe work related to learning targets/outcomes
• Make observations about processes/strategies
• Foster self-efficacy
• Avoid personal comments
(Brookhart, 2008)

Comparisons can be made to…
• Established criteria
• Past performance
• Rarely to work of others

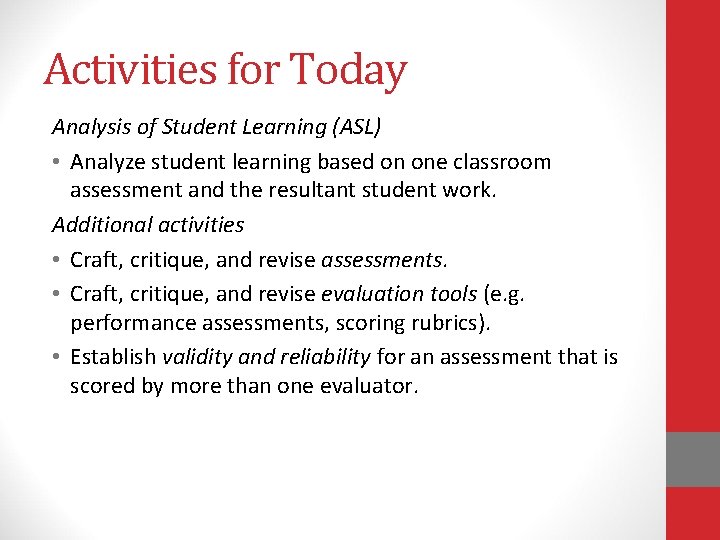

Activities for Today

Analysis of Student Learning (ASL)
• Analyze student learning based on one classroom assessment and the resultant student work.

Additional activities
• Craft, critique, and revise assessments.
• Craft, critique, and revise evaluation tools (e.g., performance assessments, scoring rubrics).
• Establish validity and reliability for an assessment that is scored by more than one evaluator.
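For the last activity, one common first check when two evaluators score the same student work is how often they agree. The sketch below is an assumption about how that check might be done, not a method named in the presentation: it computes simple percent agreement and Cohen's kappa (agreement corrected for chance), and the rubric scores shown are hypothetical. It addresses scorer consistency only, which is one part of reliability and says nothing about validity.

```python
from collections import Counter


def percent_agreement(rater_a: list[int], rater_b: list[int]) -> float:
    """Fraction of papers on which both raters gave the same rubric score."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set of papers")
    matches = sum(1 for a, b in zip(rater_a, rater_b) if a == b)
    return matches / len(rater_a)


def cohens_kappa(rater_a: list[int], rater_b: list[int]) -> float:
    """Agreement between two raters, adjusted for agreement expected by chance."""
    n = len(rater_a)
    observed = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both raters pick the same score independently.
    expected = sum(
        counts_a[score] * counts_b[score]
        for score in counts_a.keys() | counts_b.keys()
    ) / (n * n)
    if expected == 1.0:
        # Both raters used a single identical score for every paper.
        return 1.0
    return (observed - expected) / (1.0 - expected)


if __name__ == "__main__":
    # Hypothetical rubric scores (1-4) from two teachers on the same eight papers.
    teacher_1 = [4, 3, 3, 2, 4, 1, 2, 3]
    teacher_2 = [4, 3, 2, 2, 4, 1, 3, 3]
    print(percent_agreement(teacher_1, teacher_2))
    print(round(cohens_kappa(teacher_1, teacher_2), 2))
```

Papers where the two scores differ are good candidates for the group dialogue described earlier, since the disagreement often points to an ambiguous rubric criterion.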

The Road We’ve Traveled…
• Powerful Learning
  • Most important goal
  • Learning that makes a difference (think Threshold Concepts that are transformative)
  • Everything should be aligned to the learning targets/outcomes
• Powerful Teaching
  • Purposeful design
  • Critical Analysis
  • Recursive in nature
• Assessment as the Foundation for Powerful Teaching and Learning

The Road Ahead…
PLCs or large group meetings focused on one or more of the following:
• Designing performance assessments (including rubrics)
• Using student self-assessment in the classroom
• Using evidence from multiple sources to build profiles of performance
• Designing standards-based grading systems
• Using Collaborative Analysis of Student Learning (CASL) for course/program improvement
• Drafting a CECHS conceptual framework for assessment

References
• Argyris, C. (1985). Strategy, change and defensive routines. Boston, MA: Pitman.
• Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81-112.
• Langer, Colton, & Goff (2003). Collaborative analysis of student work. Alexandria, VA: Association for Supervision and Curriculum Development.
• Marzano, R. J. (2006). Classroom assessment and grading that work. Alexandria, VA: Association for Supervision and Curriculum Development.
• McTighe, J. (2007). Seven practices for effective learning. Educational Leadership, 63(3), 10-17.
• Oosterhof, A. (2001). Classroom applications of educational measurement (3rd ed.). Upper Saddle River, NJ: Merrill Prentice Hall.

- Slides: 18