


Modern Test Theory: Item Response Theory (IRT)

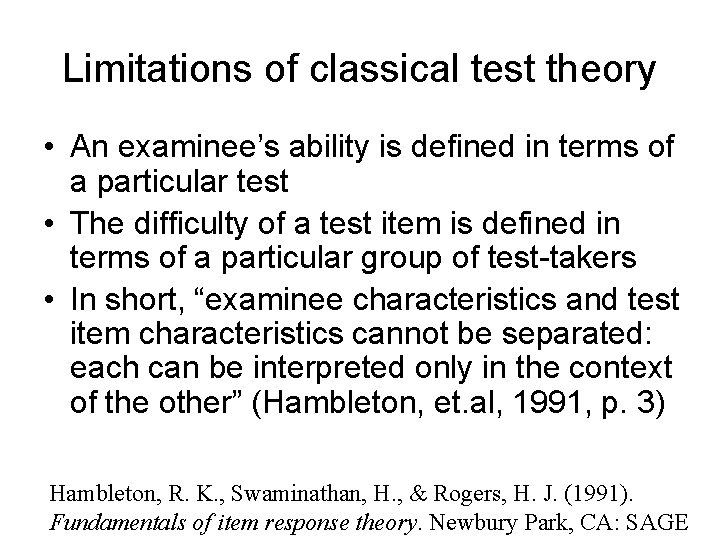

Limitations of classical test theory

- An examinee’s ability is defined in terms of a particular test
- The difficulty of a test item is defined in terms of a particular group of test-takers
- In short, “examinee characteristics and test item characteristics cannot be separated: each can be interpreted only in the context of the other” (Hambleton et al., 1991, p. 3)

Hambleton, R. K., Swaminathan, H., & Rogers, H. J. (1991). Fundamentals of item response theory. Newbury Park, CA: SAGE.

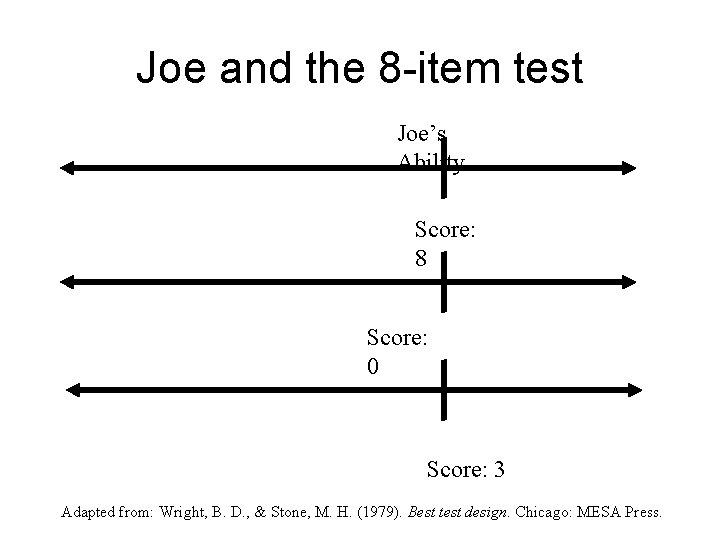

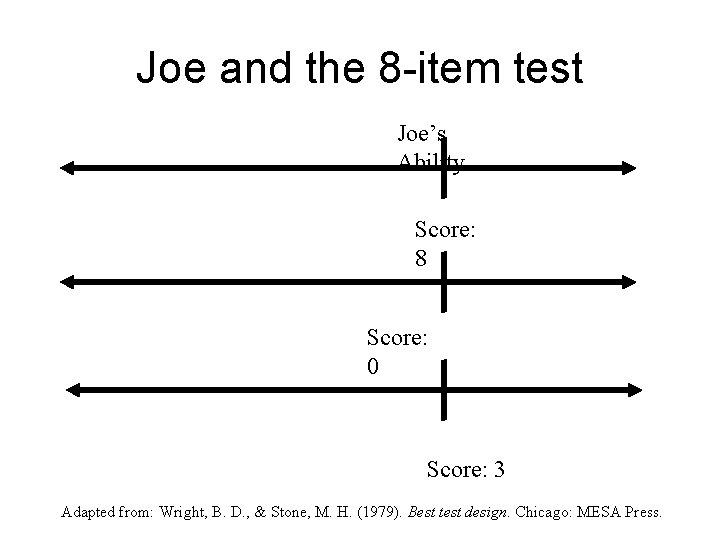

Joe and the 8-item test

Figure labels: Joe’s Ability; Score: 8; Score: 0; Score: 3

Adapted from: Wright, B. D., & Stone, M. H. (1979). Best test design. Chicago: MESA Press.

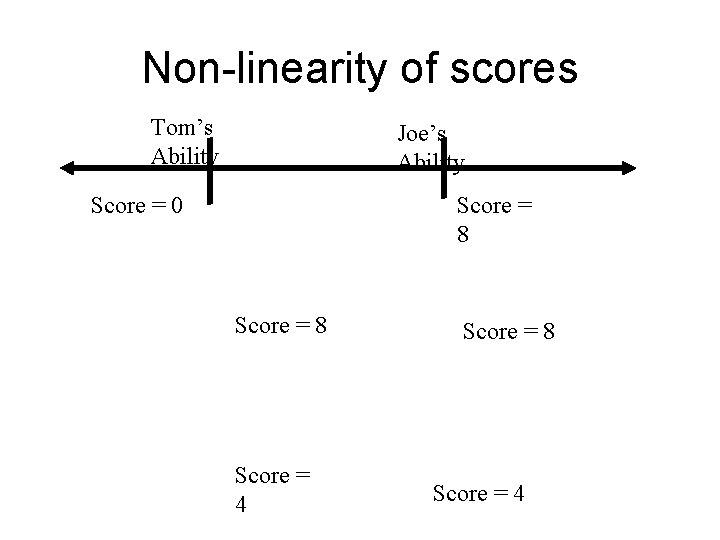

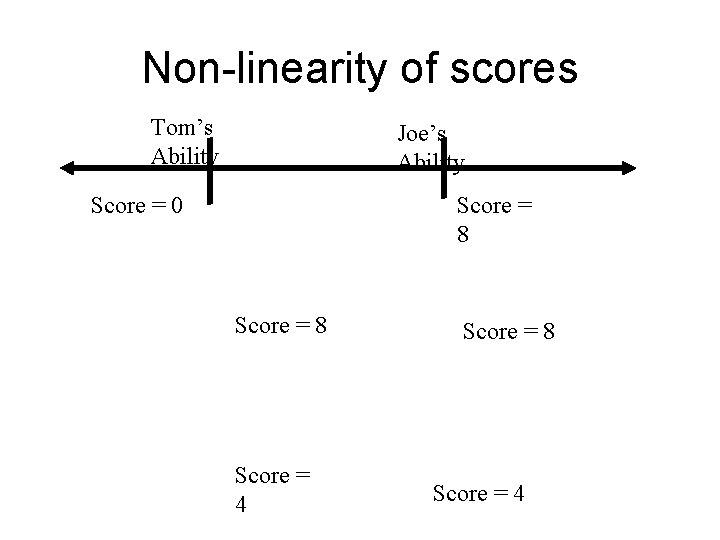

Non-linearity of scores

Figure labels: Tom’s Ability; Joe’s Ability; Score = 0; Score = 8; Score = 4

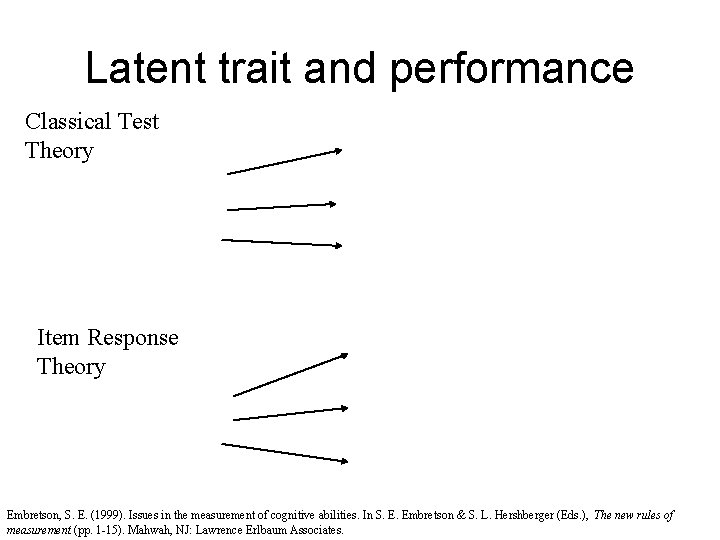

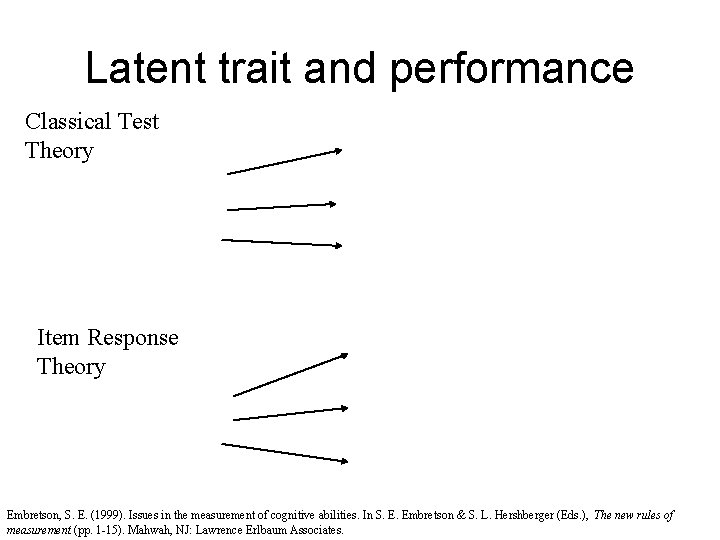

Latent trait and performance

Figure panels: Classical Test Theory; Item Response Theory

Embretson, S. E. (1999). Issues in the measurement of cognitive abilities. In S. E. Embretson & S. L. Hershberger (Eds.), The new rules of measurement (pp. 1-15). Mahwah, NJ: Lawrence Erlbaum Associates.

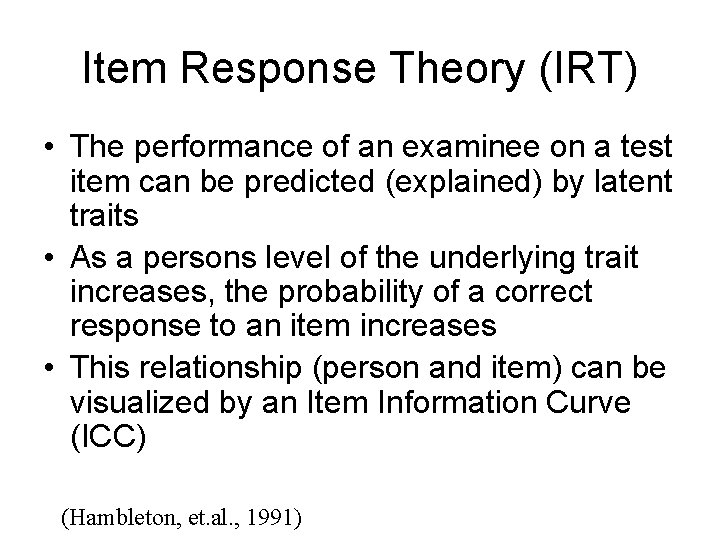

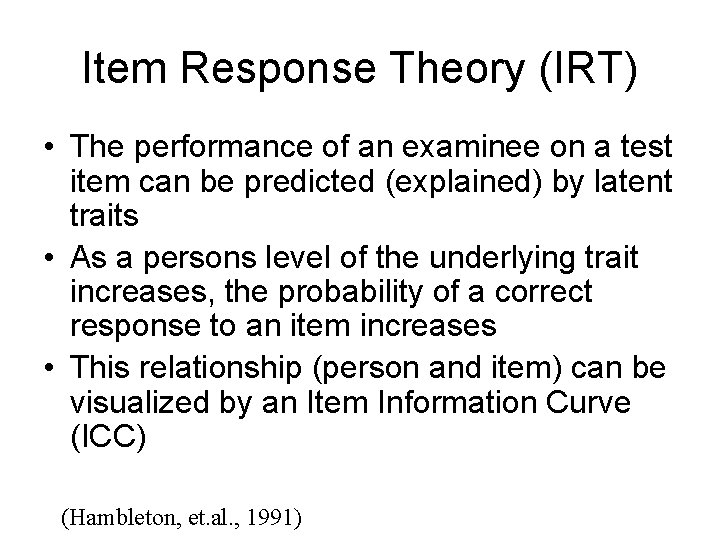

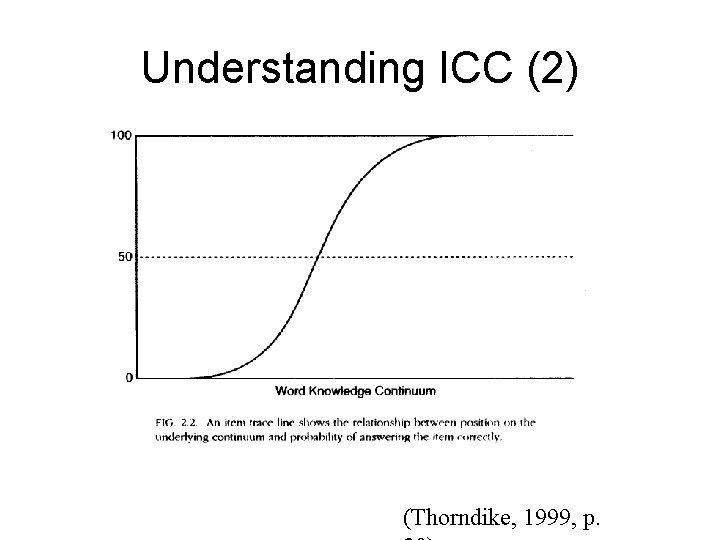

Item Response Theory (IRT)

- The performance of an examinee on a test item can be predicted (explained) by latent traits
- As a person’s level of the underlying trait increases, the probability of a correct response to an item increases
- This relationship (person and item) can be visualized by an Item Characteristic Curve (ICC) (Hambleton et al., 1991)

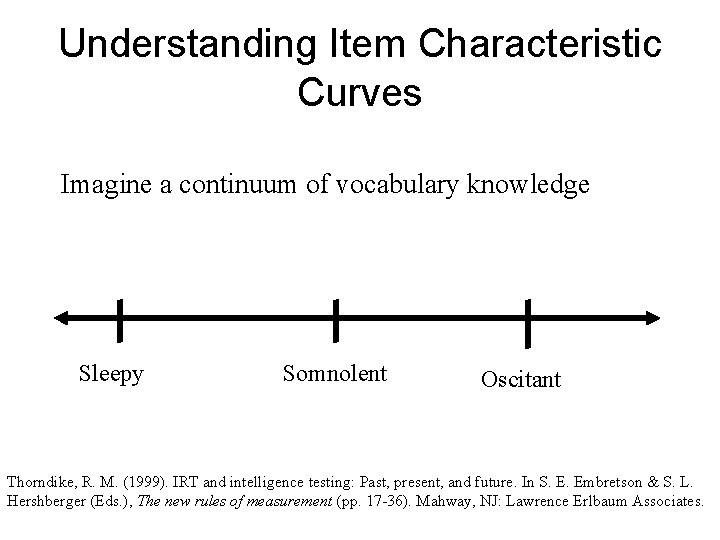

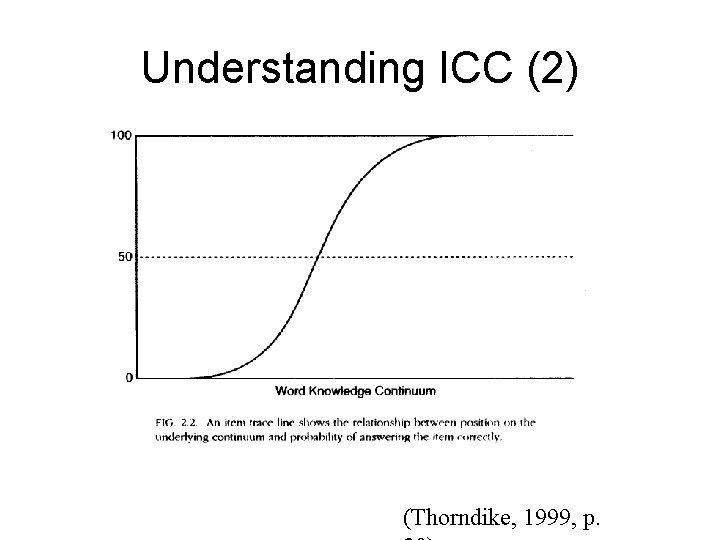

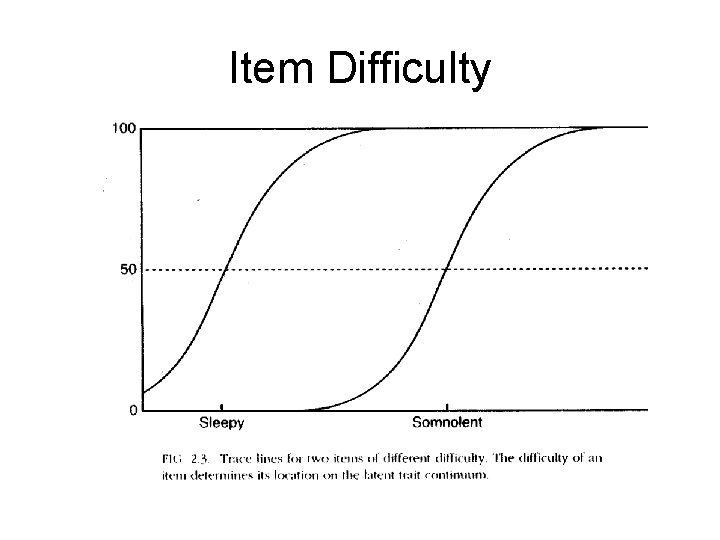

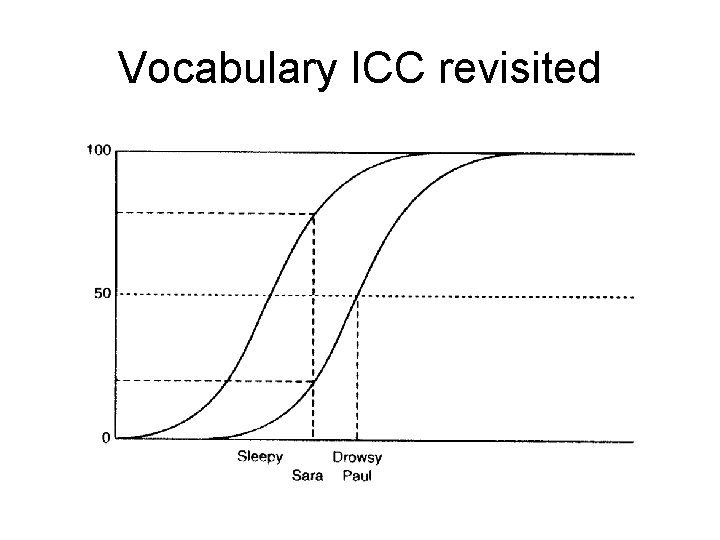

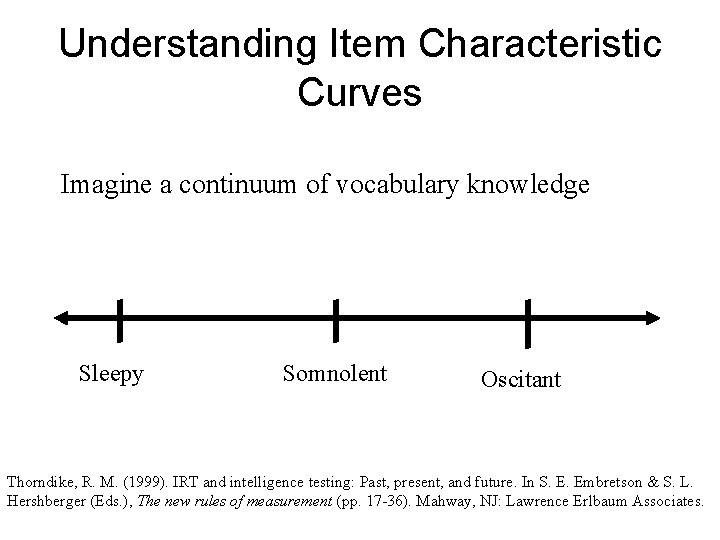

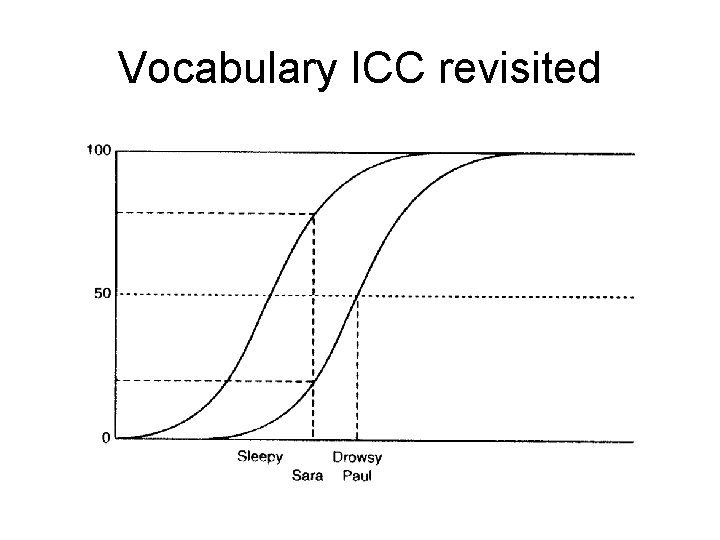

Understanding Item Characteristic Curves

Imagine a continuum of vocabulary knowledge: Sleepy, Somnolent, Oscitant

Thorndike, R. M. (1999). IRT and intelligence testing: Past, present, and future. In S. E. Embretson & S. L. Hershberger (Eds.), The new rules of measurement (pp. 17-36). Mahwah, NJ: Lawrence Erlbaum Associates.

Understanding ICC (2) (Thorndike, 1999, p.

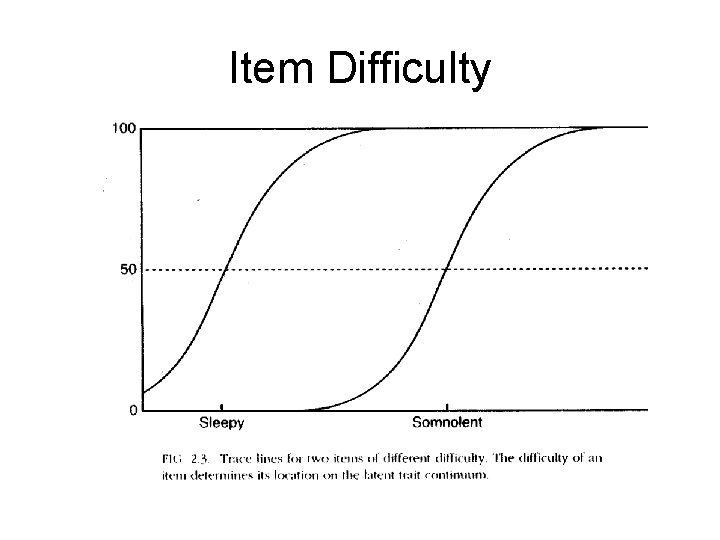

Item Difficulty

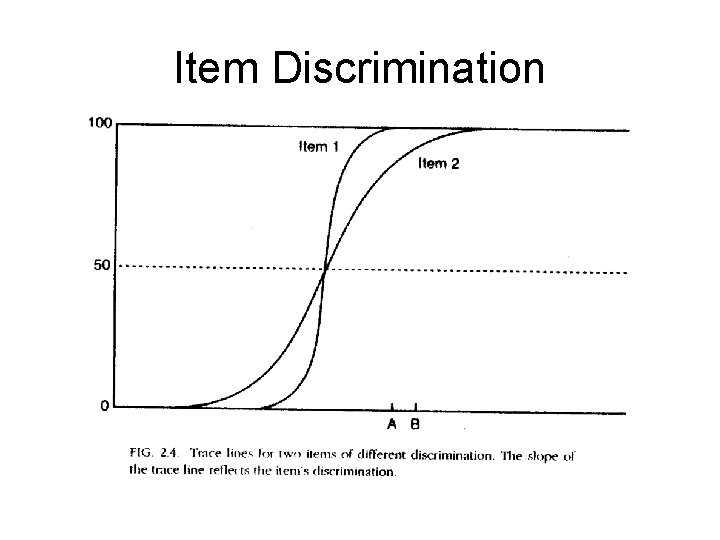

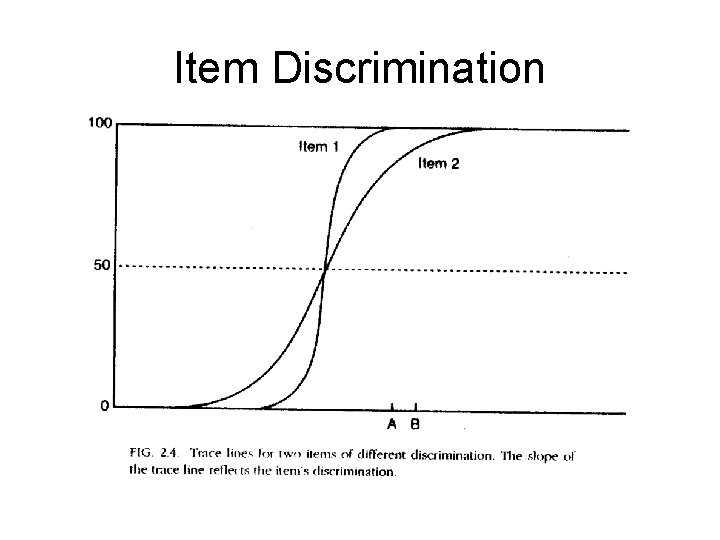

Item Discrimination

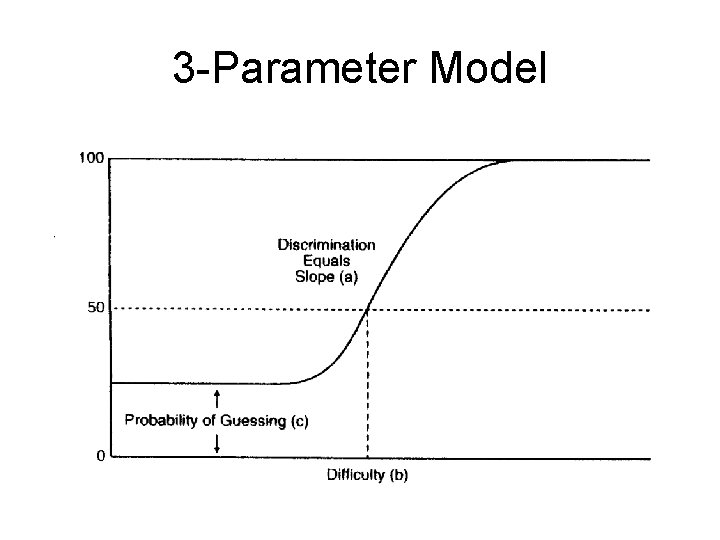

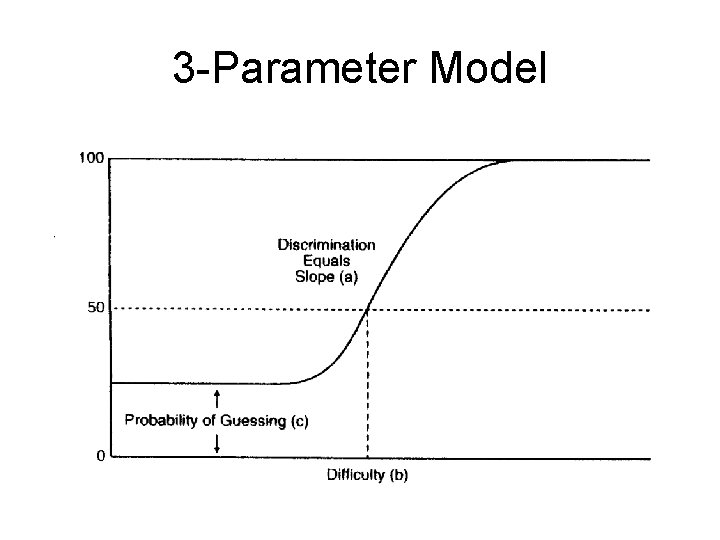

3-Parameter Model
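The slide gives only a title here, so the sketch below uses the standard three-parameter logistic form as an assumption: a discrimination `a`, a difficulty `b`, and a lower asymptote `c` for guessing. The function names and the example item values are hypothetical, and the optional scaling constant some texts include is omitted. The 1- and 2-parameter models fall out as special cases.

```python
import math

def prob_3pl(theta, a, b, c):
    """Probability of a correct response under the 3-parameter logistic model.

    theta: examinee trait level; a: item discrimination;
    b: item difficulty; c: lower asymptote (pseudo-guessing).
    """
    return c + (1.0 - c) / (1.0 + math.exp(-a * (theta - b)))

def prob_2pl(theta, a, b):
    """2-parameter model: the 3PL with no guessing (c = 0)."""
    return prob_3pl(theta, a, b, 0.0)

def prob_1pl(theta, b):
    """1-parameter model: no guessing and a common discrimination (a = 1 here)."""
    return prob_3pl(theta, 1.0, b, 0.0)

# Hypothetical four-option multiple-choice item (values are illustrative only).
for theta in (-3.0, 0.0, 3.0):
    print(theta, round(prob_3pl(theta, a=1.2, b=0.0, c=0.25), 3))
```

With `c` above zero, even a very low-ability examinee keeps a non-zero chance of passing the item, which is what separates the 3-parameter curve from the other two.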

Vocabulary ICC revisited

![Basic IRT concept: PROB(Item Passed) = FUNCTION[(Trait Level) - (Item Difficulty)]](https://slidetodoc.com/presentation_image/f0c73e19b0c0e6fa6630d4d840a8eb1f/image-13.jpg)

Basic IRT concept

PROB(Item Passed) = FUNCTION[(Trait Level) - (Item Difficulty)]
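One common choice for FUNCTION is the logistic function, which gives the Rasch (one-parameter) model. The slide does not name the function, so that choice is an assumption, as is the name `prob_pass`. The sketch shows that the probability depends only on the gap between trait level and item difficulty.

```python
import math

def prob_pass(trait_level, item_difficulty):
    """Rasch-style probability of passing an item (logistic of the difference)."""
    return 1.0 / (1.0 + math.exp(-(trait_level - item_difficulty)))

# Same gap between person and item gives the same probability,
# wherever the pair sits on the scale.
same_gap_low = prob_pass(0.0, -1.0)
same_gap_high = prob_pass(2.0, 1.0)

# When trait level equals item difficulty, the chance of passing is even.
matched = prob_pass(1.5, 1.5)

print(same_gap_low, same_gap_high, matched)
```

Both trait level and difficulty sit on the same scale, which is why a person and an item can be compared directly.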

Assumptions of IRT

- Unidimensionality – only one ability is measured by a set of items on a test
- Local independence – an examinee’s responses to any two items are statistically independent once ability is held constant
- 1-parameter model – no guessing; item discrimination is the same for all items
- 2-parameter model – no guessing
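Local independence is what lets the probability of a whole response pattern be written as a product of item probabilities. A minimal sketch, assuming the 1-parameter logistic model and a simple grid search for the most likely ability; the function names, the grid bounds, and the five-item data are all hypothetical.

```python
import math

def prob_correct(theta, b):
    """1-parameter logistic probability of a correct response."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))

def log_likelihood(theta, difficulties, responses):
    """Sum of item log-probabilities; the sum is valid under local independence."""
    total = 0.0
    for b, x in zip(difficulties, responses):
        p = prob_correct(theta, b)
        total += math.log(p) if x == 1 else math.log(1.0 - p)
    return total

def estimate_theta(difficulties, responses, lo=-4.0, hi=4.0, steps=801):
    """Grid-search maximum-likelihood ability estimate (illustrative, not efficient)."""
    grid = [lo + i * (hi - lo) / (steps - 1) for i in range(steps)]
    return max(grid, key=lambda t: log_likelihood(t, difficulties, responses))

# Hypothetical item difficulties and one examinee's responses (1 = correct).
difficulties = [-2.0, -1.0, 0.0, 1.0, 2.0]
responses = [1, 1, 1, 0, 0]
theta_hat = estimate_theta(difficulties, responses)
print(theta_hat)
```

The grid search is bounded, so all-correct or all-incorrect patterns simply land on an edge of the grid rather than running off to infinity.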

Advantages of IRT

- Sample-free item calibration
- Test-free person measurement
- Item banking facility
- Computer delivery of tests
- Test tailoring facility
- Score reporting facility
- Item bias detection

Henning, G. (1987). A guide to language testing: Development, evaluation, research. Boston: Heinle & Heinle.

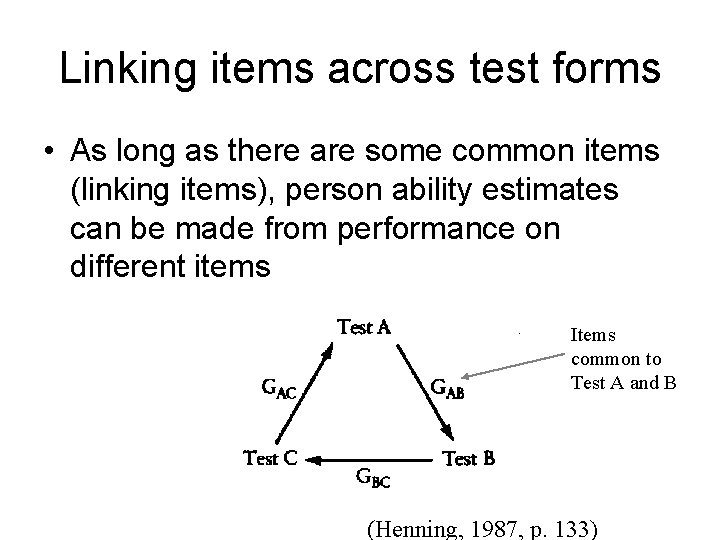

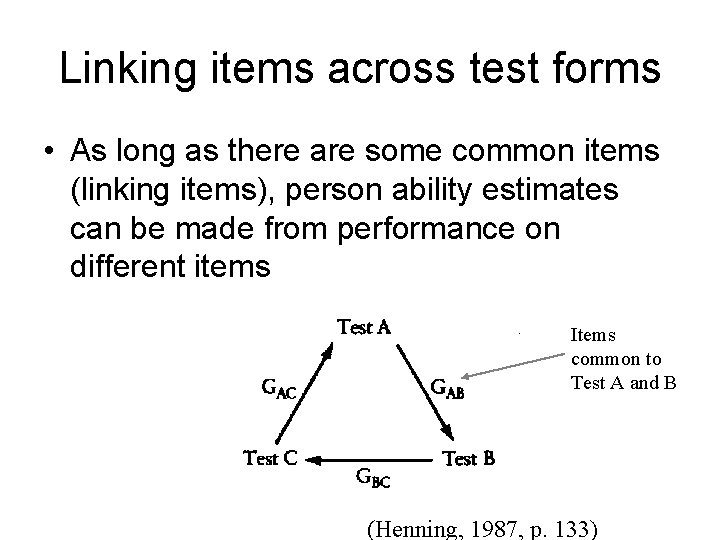

Linking items across test forms

- As long as there are some common items (linking items), person ability estimates can be made from performance on different items

Figure label: Items common to Test A and B (Henning, 1987, p. 133)
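One simple way to use the common items is a mean shift. If both forms were calibrated separately under a 1-parameter model, the difficulties of the linking items should differ only by a constant, and that constant moves the second form onto the first form's scale. This is a sketch of that one approach, not necessarily the procedure Henning describes; item identifiers and difficulty values are hypothetical.

```python
from statistics import mean

def linking_shift(diff_a, diff_b):
    """Average difficulty difference (scale A minus scale B) over the common items."""
    common = sorted(set(diff_a) & set(diff_b))
    if not common:
        raise ValueError("the two forms share no linking items")
    return mean(diff_a[item] - diff_b[item] for item in common)

def to_scale_a(diff_b, shift):
    """Re-express form B difficulties on form A's scale."""
    return {item: b + shift for item, b in diff_b.items()}

# Hypothetical separate calibrations; i3 and i4 appear on both forms.
test_a = {"i1": -1.0, "i2": 0.0, "i3": 0.5, "i4": 1.2}
test_b = {"i3": 0.1, "i4": 0.8, "i5": 1.5, "i6": -0.3}

shift = linking_shift(test_a, test_b)
linked_b = to_scale_a(test_b, shift)
print(shift, linked_b)
```

The same shift would be added to ability estimates obtained from form B, so examinees who took different forms end up on one scale.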

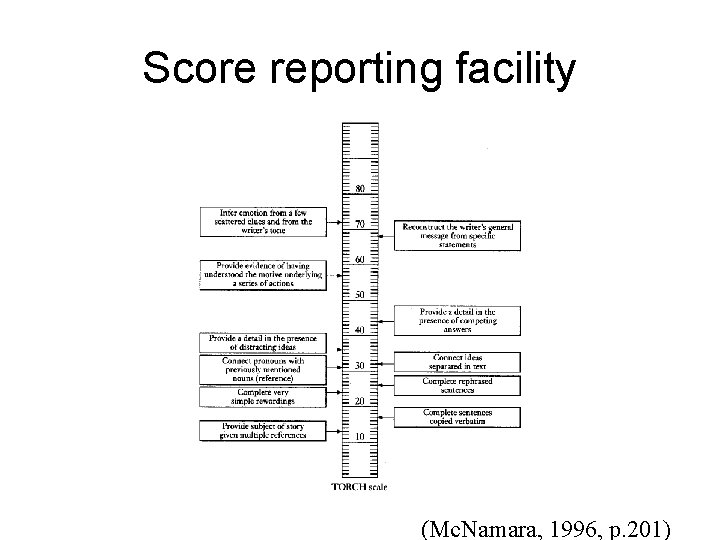

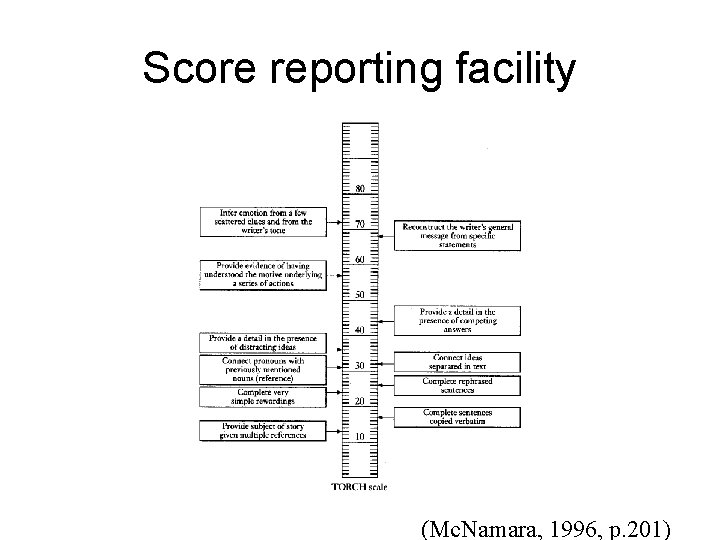

Score reporting facility (McNamara, 1996, p. 201)

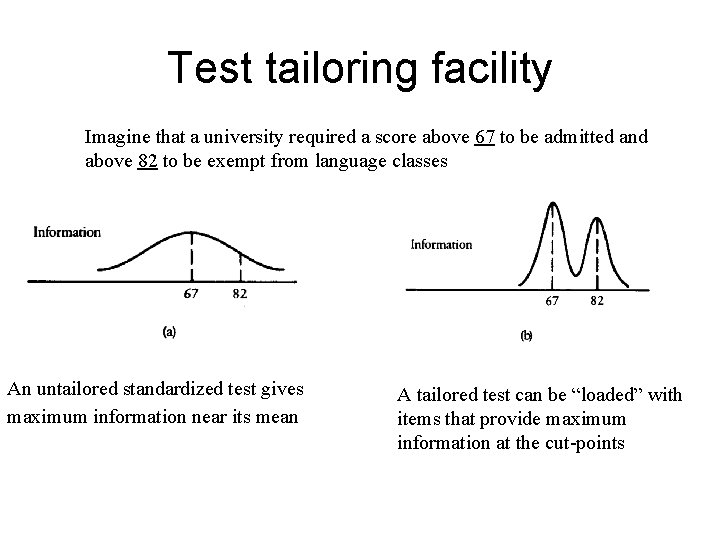

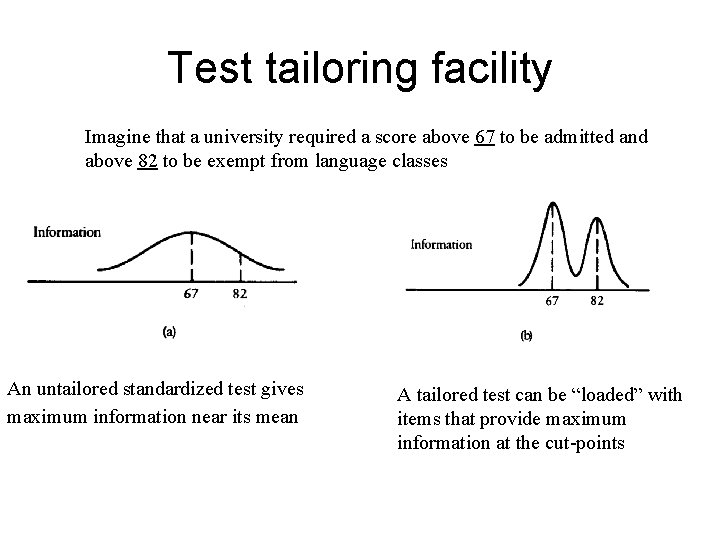

Test tailoring facility

- Imagine that a university required a score above 67 to be admitted and above 82 to be exempt from language classes
- An untailored standardized test gives maximum information near its mean
- A tailored test can be “loaded” with items that provide maximum information at the cut-points

Computerized testing

- Computer-delivered tests
  - Tests which use a computer rather than pencil and paper for test content delivery
  - Items can take advantage of computer’s multimedia capabilities
- Computer-adaptive tests
  - Test is created “on the fly” to match examinee’s ability level
- Web-based tests
  - Delivered over the World Wide Web
  - Test-takers can access from anywhere

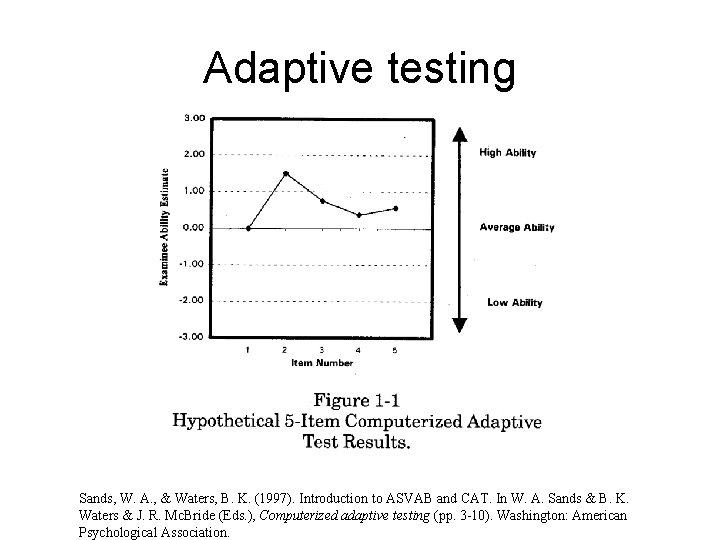

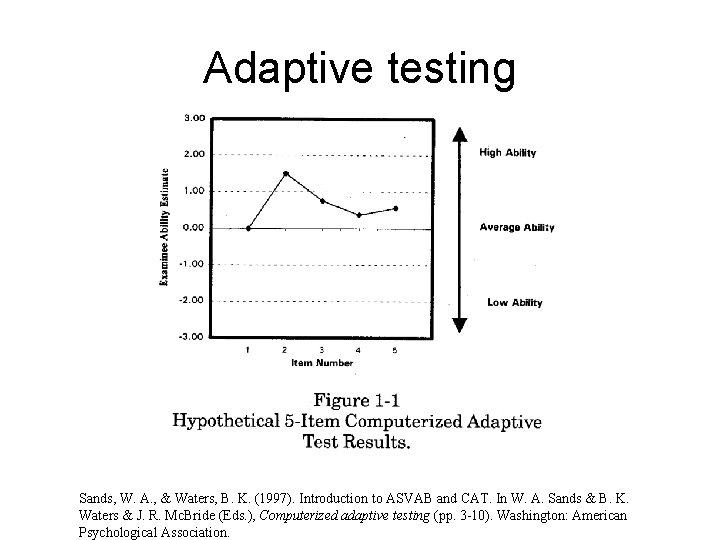

Adaptive testing

Sands, W. A., & Waters, B. K. (1997). Introduction to ASVAB and CAT. In W. A. Sands, B. K. Waters, & J. R. McBride (Eds.), Computerized adaptive testing (pp. 3-10). Washington: American Psychological Association.
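A toy version of the adaptive loop: pick the unused item whose difficulty is closest to the current ability estimate, score the response, and move the estimate up or down by a step that halves each time. The step-halving update is a deliberately simple heuristic standing in for the maximum-likelihood or Bayesian updates a real CAT would typically use. The item pool, the function names, and the deterministic simulated examinee are hypothetical.

```python
def next_item(theta, pool, used):
    """Choose the unused item whose difficulty is nearest the current estimate."""
    candidates = [item for item in pool if item not in used]
    return min(candidates, key=lambda item: abs(pool[item] - theta))

def run_cat(pool, answer, n_items=5, start=0.0, step=1.0):
    """Administer n_items adaptively; answer(difficulty) returns True if correct."""
    theta, used, log = start, set(), []
    for _ in range(n_items):
        item = next_item(theta, pool, used)
        used.add(item)
        correct = answer(pool[item])
        theta += step if correct else -step  # move toward the examinee's level
        step /= 2.0                          # smaller corrections as evidence grows
        log.append((item, correct, theta))
    return theta, log

# Hypothetical pool of nine items with evenly spaced difficulties.
difficulties = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.5, 1.0, 1.5, 2.0]
pool = {f"item{k}": b for k, b in enumerate(difficulties)}

# Simulated examinee who passes every item easier than their true level.
true_theta = 1.2
theta_hat, log = run_cat(pool, answer=lambda b: b < true_theta)
print(theta_hat, log)
```

Each examinee sees a different sequence of items, which is why a large item pool and control over item exposure matter in practice.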

CAT advantages

- Increased efficiency
  - More able examinees are not bored with easy questions
  - Less able examinees are not frustrated with incredibly difficult questions
- Immediate feedback is possible
- Examinees can work at own pace
- Audiovisual material can be incorporated
- Potential for “on demand” testing

CAT challenges

- Technical sophistication required to develop and administer CAT
- Need for large item pool
- Overexposure of best items
- Ensuring consistency of measures and content across candidates
- Public perception of computer-based scores
  - Completely infallible
  - Completely bogus