Modern general-purpose processors: Post-RISC architecture

- Slides: 52

Modern general-purpose processors

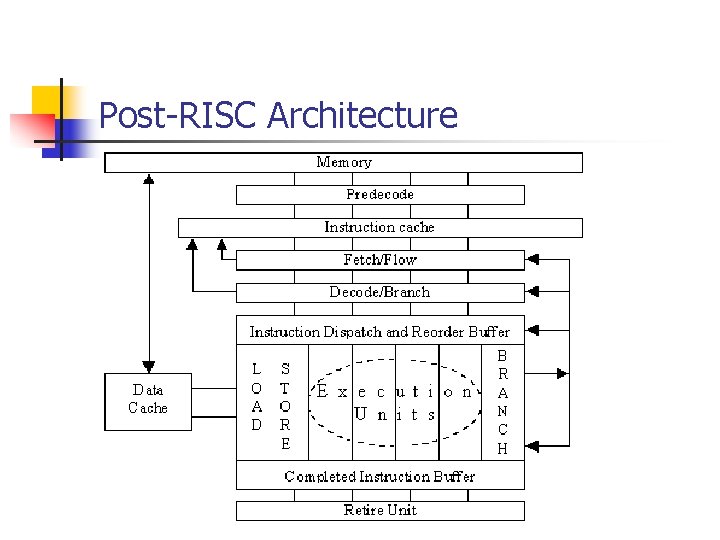

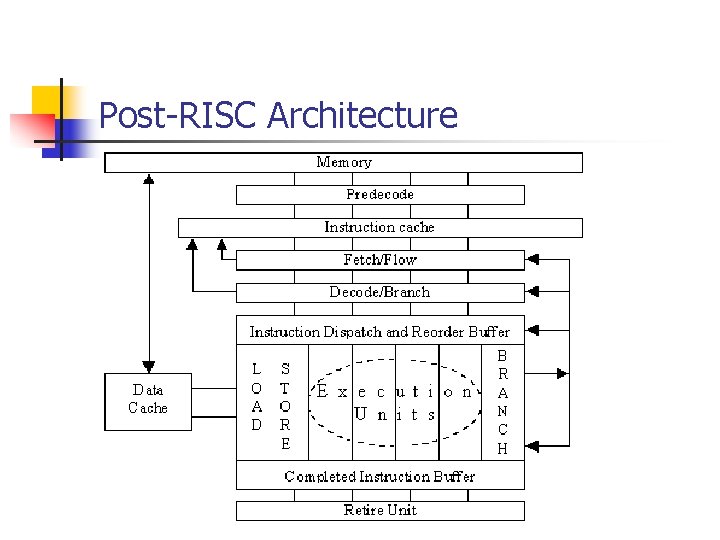

Post-RISC architecture

- Instruction & arithmetic pipelining
- Superscalar architecture
- Data flow analysis
- Branch prediction
- Cache memory
- Multimedia extensions

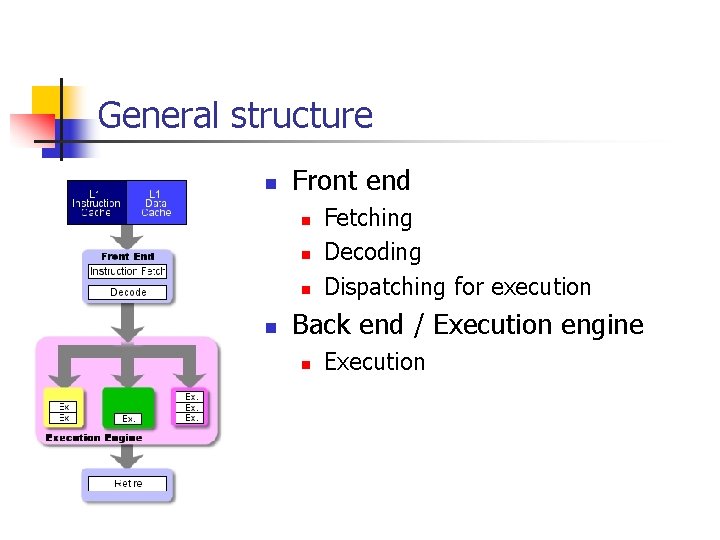

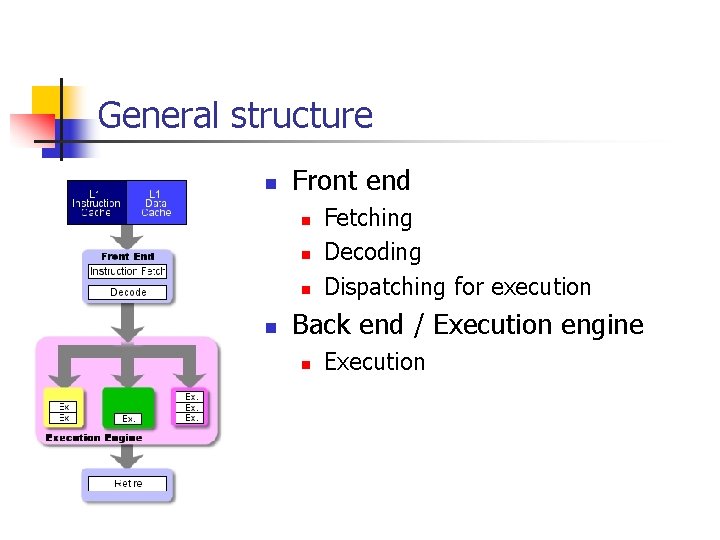

General structure

- Front end
  - Fetching
  - Decoding
  - Dispatching for execution
- Back end / Execution engine
  - Execution

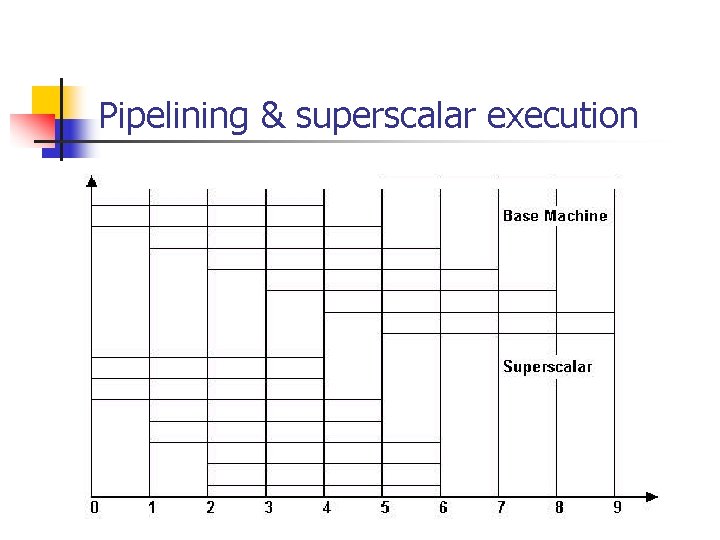

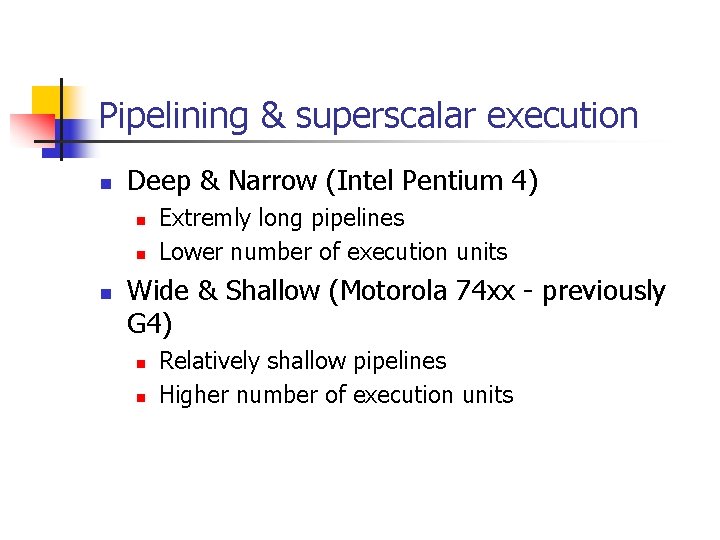

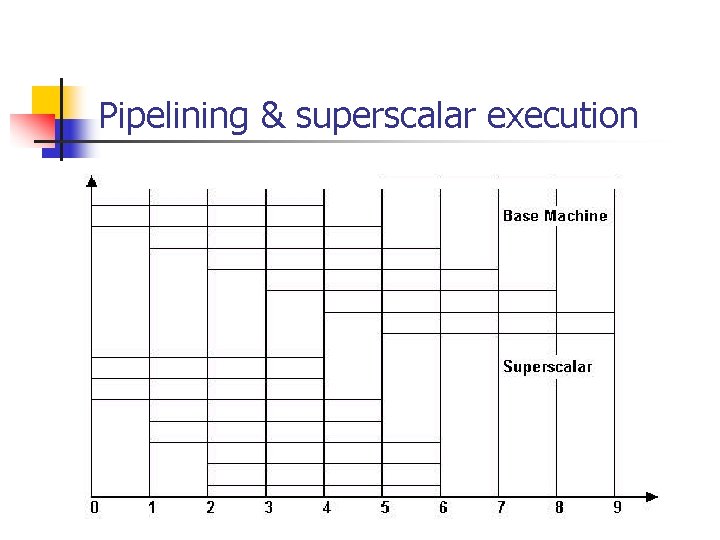

Pipelining & superscalar execution

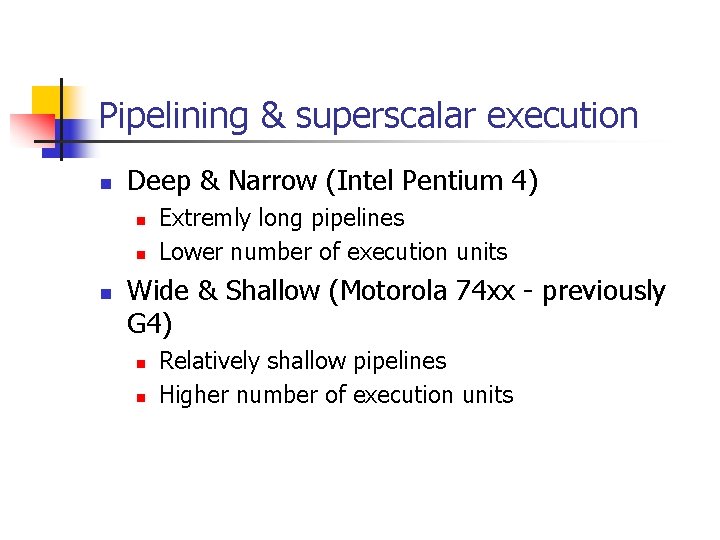

Pipelining & superscalar execution

- Deep & Narrow (Intel Pentium 4)
  - Extremely long pipelines
  - Lower number of execution units
- Wide & Shallow (Motorola 74xx, previously G4)
  - Relatively shallow pipelines
  - Higher number of execution units

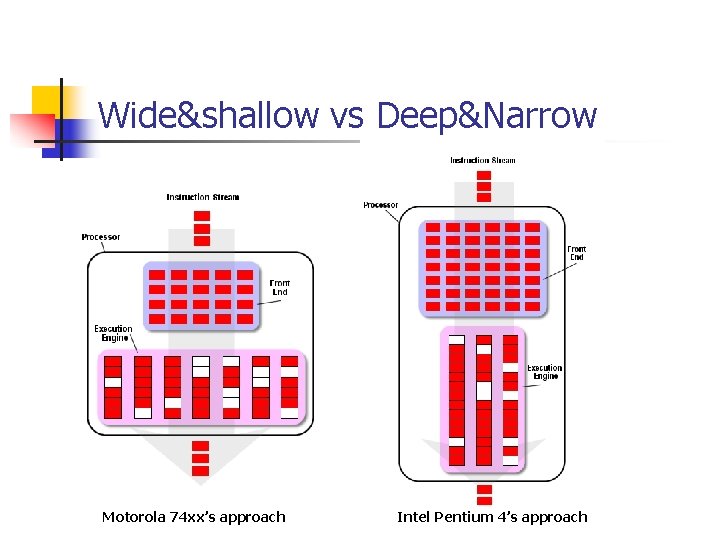

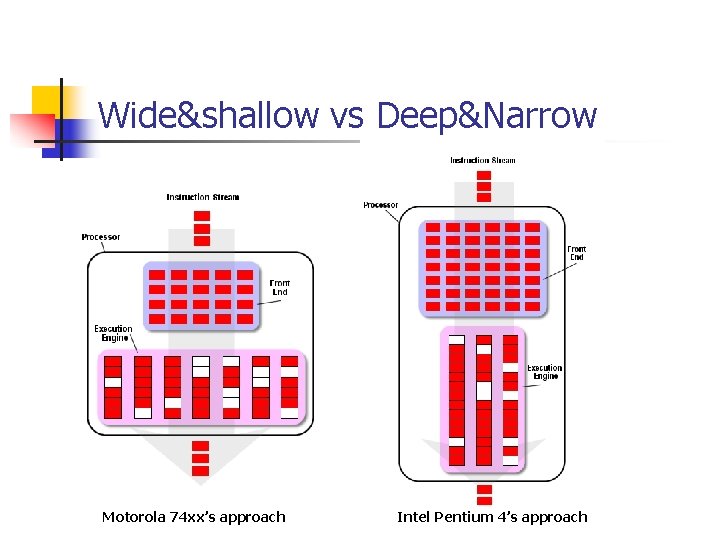

Wide & Shallow vs Deep & Narrow

- Motorola 74xx's approach
- Intel Pentium 4's approach

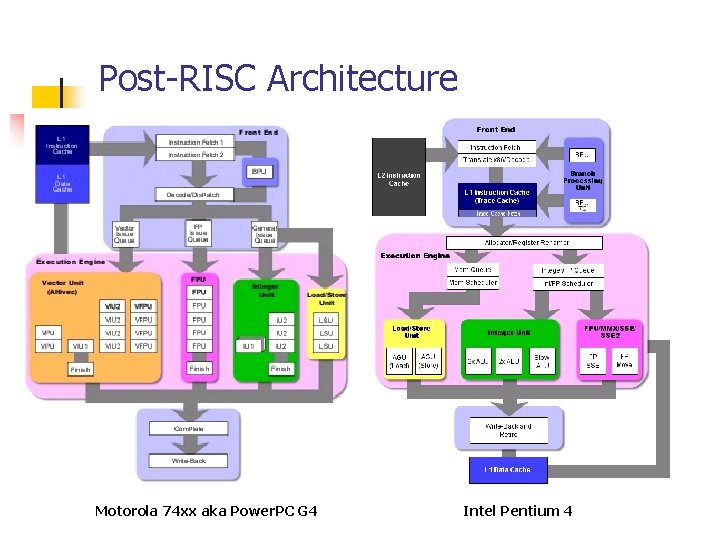

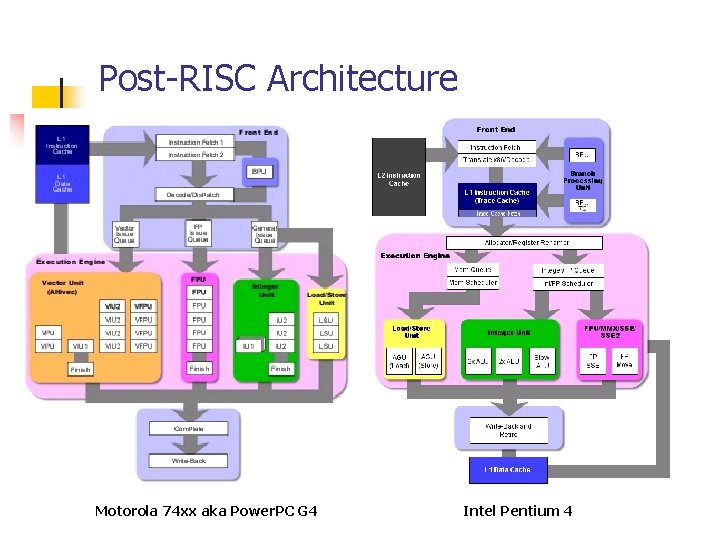

Post-RISC Architecture

- Motorola 74xx (aka PowerPC G4)
- Intel Pentium 4

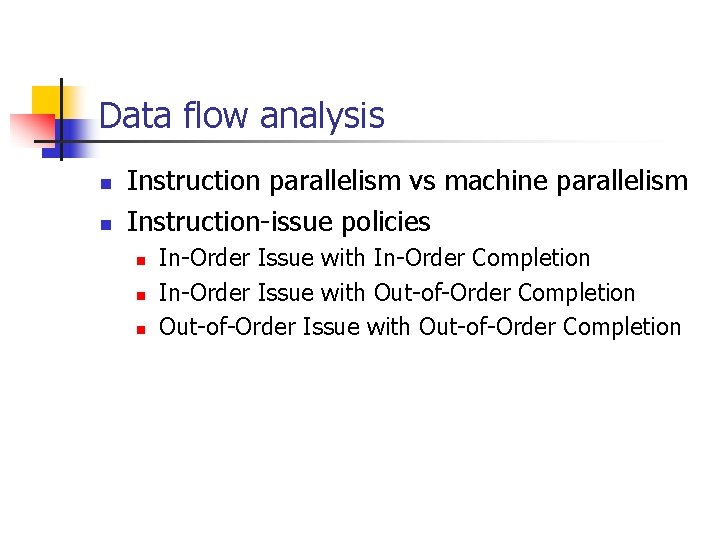

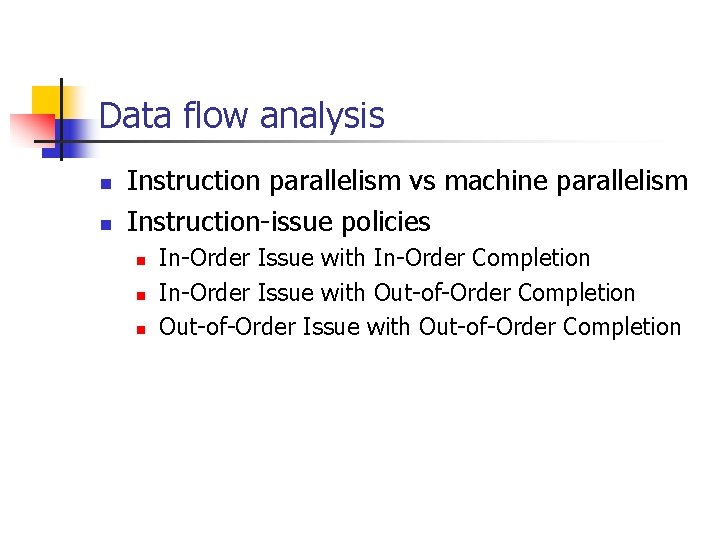

Data flow analysis

- Instruction parallelism vs machine parallelism
- Instruction-issue policies
  - In-Order Issue with In-Order Completion
  - In-Order Issue with Out-of-Order Completion
  - Out-of-Order Issue with Out-of-Order Completion

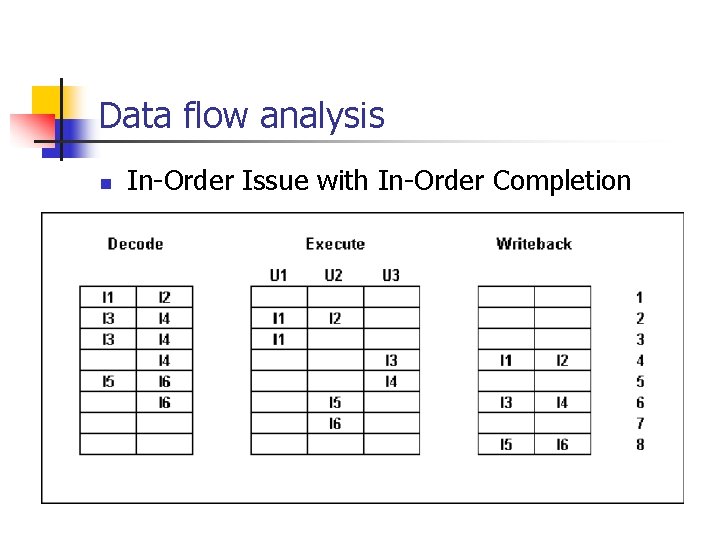

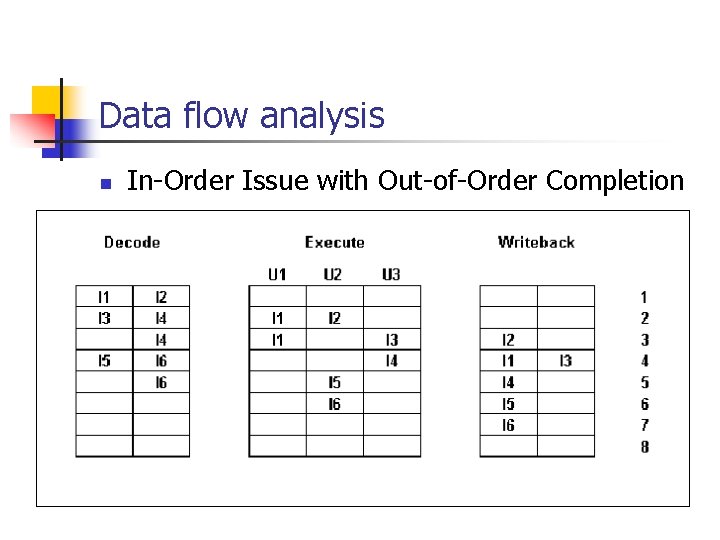

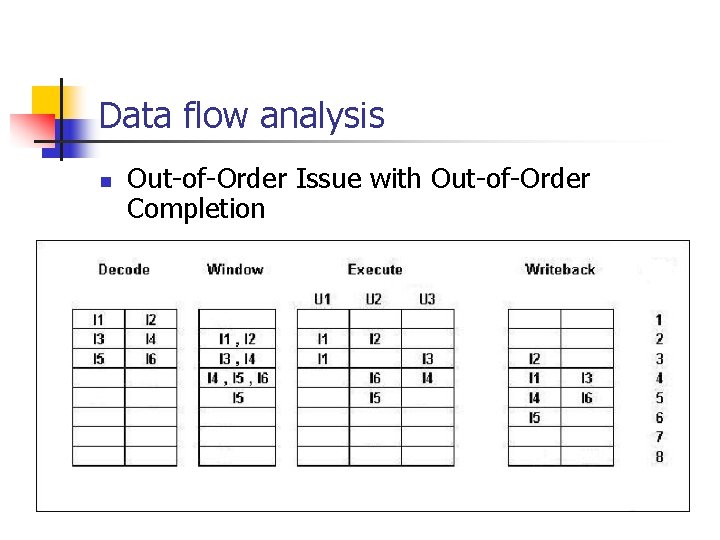

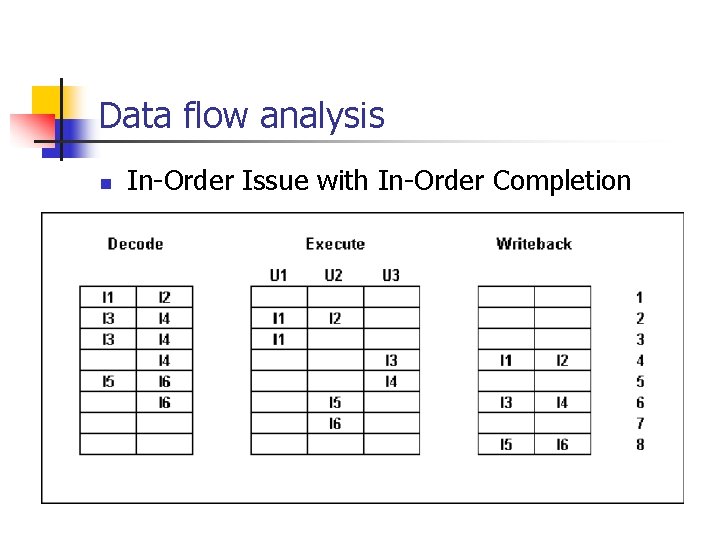

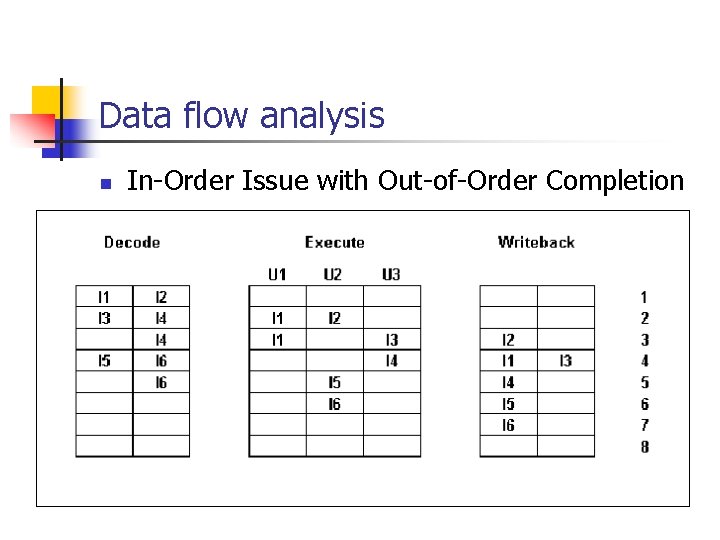

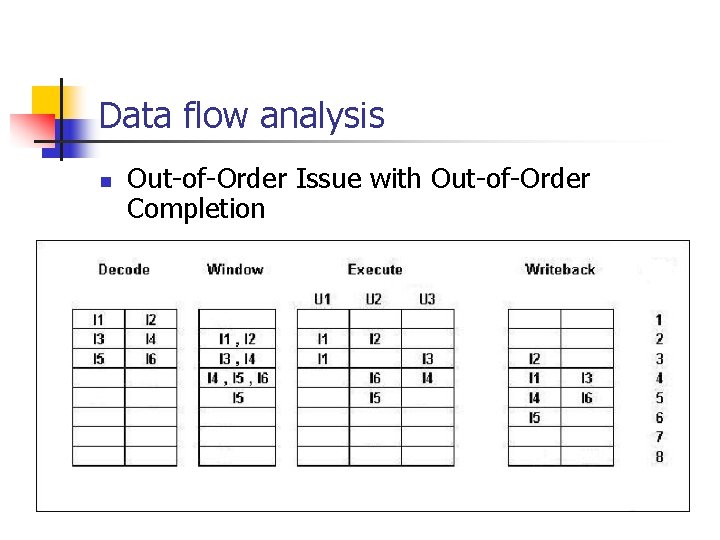

Data flow analysis

- Example:
  - i1 requires 2 cycles to execute,
  - i3 and i4 are executed by the same execution unit (U3),
  - i5 uses the result of i4,
  - i5 and i6 are executed by the same execution unit (U2).
- Hypothetical processor
  - Parallel fetching and decoding of 2 instructions (Decode)
  - Three execution units (Execute)
  - Parallel storing of 2 results (Writeback)

Data flow analysis

- In-Order Issue with In-Order Completion

Data flow analysis

- In-Order Issue with Out-of-Order Completion

Data flow analysis

- In-Order Issue with Out-of-Order Completion
- Problem of Output Dependency (Write-Write Dependency)
- Example:
  - R3 := R3 op R5 (i1)
  - R4 := R3 + 1 (i2)
  - R3 := R5 + 1 (i3)
  - R7 := R3 op R4 (i4)
- i1 and i2; i3 and i4: True Data Dependency (Flow Dependency, Write-Read Dependency)
- i1 and i3: Output Dependency (Write-Write Dependency)

Data flow analysis

- Out-of-Order Issue with Out-of-Order Completion

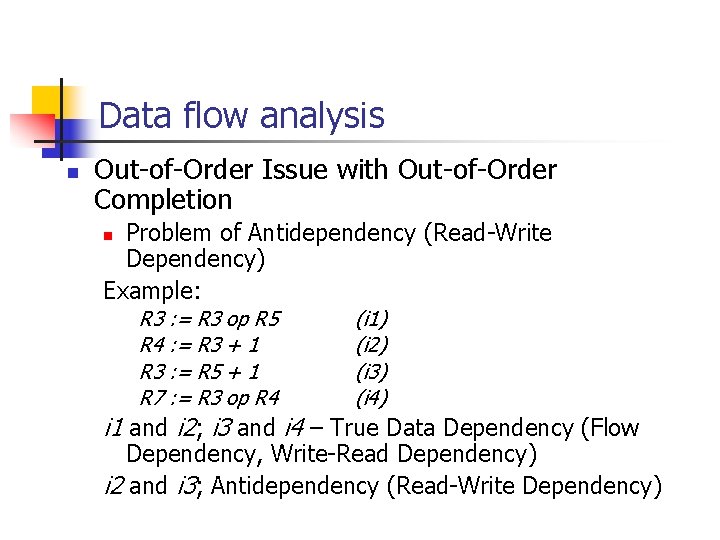

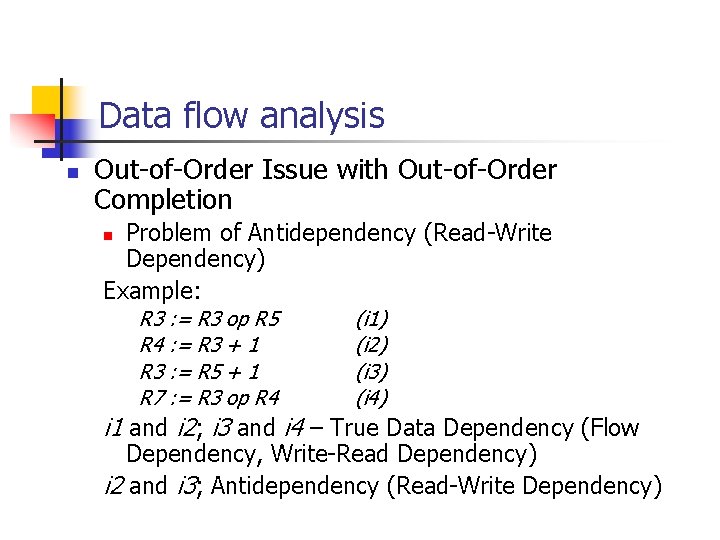

Data flow analysis

- Out-of-Order Issue with Out-of-Order Completion
- Problem of Antidependency (Read-Write Dependency)
- Example:
  - R3 := R3 op R5 (i1)
  - R4 := R3 + 1 (i2)
  - R3 := R5 + 1 (i3)
  - R7 := R3 op R4 (i4)
- i1 and i2; i3 and i4: True Data Dependency (Flow Dependency, Write-Read Dependency)
- i2 and i3: Antidependency (Read-Write Dependency)
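The three dependency kinds in the four-instruction example can be found mechanically. The following is a minimal sketch, not a model of any real issue logic: the `Instr` tuple and the `find_dependencies` helper are hypothetical names introduced here, and the pairwise scan reports every hazard it finds, so it also lists i2 → i4 through R4 and a read-write hazard between i1 and i3, which the slide does not enumerate.

```python
from typing import NamedTuple


class Instr(NamedTuple):
    name: str
    dest: str      # register written
    srcs: tuple    # registers read


# The example from the slide: (i1) R3 := R3 op R5, (i2) R4 := R3 + 1,
# (i3) R3 := R5 + 1, (i4) R7 := R3 op R4.
PROGRAM = [
    Instr("i1", "R3", ("R3", "R5")),
    Instr("i2", "R4", ("R3",)),
    Instr("i3", "R3", ("R5",)),
    Instr("i4", "R7", ("R3", "R4")),
]


def find_dependencies(program):
    """Return a list of (earlier, later, kind, register) tuples.

    kind is "RAW" (true/flow), "WAW" (output) or "WAR" (antidependency).
    A pair is reported only if no instruction between the two rewrites
    the register in question.
    """
    deps = []
    for j, later in enumerate(program):
        for i in range(j):
            earlier = program[i]
            rewritten = {mid.dest for mid in program[i + 1:j]}
            if earlier.dest in later.srcs and earlier.dest not in rewritten:
                deps.append((earlier.name, later.name, "RAW", earlier.dest))
            if earlier.dest == later.dest and earlier.dest not in rewritten:
                deps.append((earlier.name, later.name, "WAW", earlier.dest))
            if later.dest in earlier.srcs and later.dest not in rewritten:
                deps.append((earlier.name, later.name, "WAR", later.dest))
    return deps


if __name__ == "__main__":
    for dep in find_dependencies(PROGRAM):
        print(dep)
```

Only the RAW entries are real data flow; the WAW and WAR entries arise purely from reusing the name R3.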

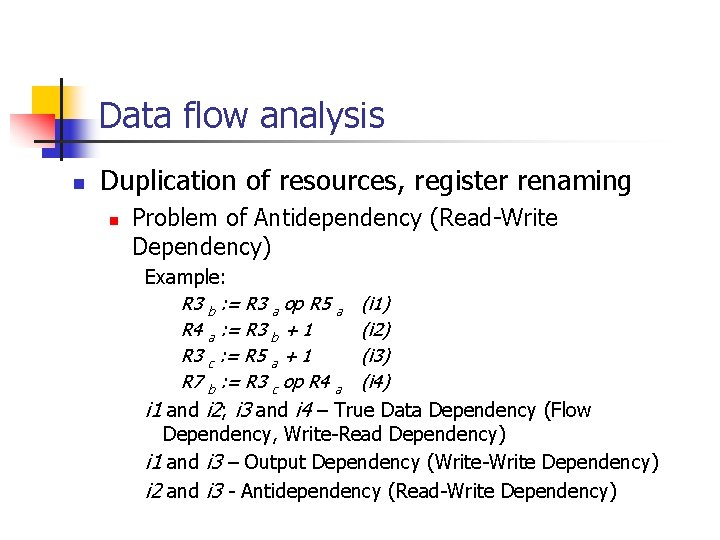

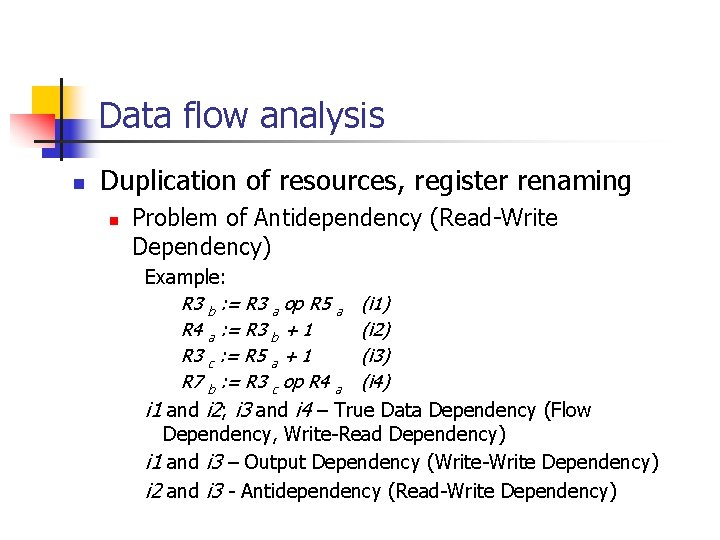

Data flow analysis

- Duplication of resources, register renaming
- Problem of Antidependency (Read-Write Dependency)
- Example:
  - R3b := R3a op R5a (i1)
  - R4a := R3b + 1 (i2)
  - R3c := R5a + 1 (i3)
  - R7b := R3c op R4a (i4)
- i1 and i2; i3 and i4: True Data Dependency (Flow Dependency, Write-Read Dependency)
- i1 and i3: Output Dependency (Write-Write Dependency)
- i2 and i3: Antidependency (Read-Write Dependency)
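Register renaming as shown in the example can be sketched in a few lines. This is an illustration under stated assumptions, not a description of any real rename stage: the `Instr` tuple and `rename` function are hypothetical, and numbered versions such as `R3.1` stand in for the slide's letter suffixes (`R3b`). Every write allocates a fresh version of its destination, and every read uses the newest version at that point in program order.

```python
from collections import defaultdict
from typing import NamedTuple


class Instr(NamedTuple):
    name: str
    dest: str
    srcs: tuple


PROGRAM = [
    Instr("i1", "R3", ("R3", "R5")),
    Instr("i2", "R4", ("R3",)),
    Instr("i3", "R3", ("R5",)),
    Instr("i4", "R7", ("R3", "R4")),
]


def rename(program):
    """Rewrite architectural registers into versioned (renamed) registers."""
    version = defaultdict(int)  # architectural register -> newest version
    renamed = []
    for ins in program:
        # Sources are looked up before the destination gets a new version,
        # so "R3 := R3 op R5" reads the old R3 and writes a new one.
        srcs = tuple(f"{reg}.{version[reg]}" for reg in ins.srcs)
        version[ins.dest] += 1
        dest = f"{ins.dest}.{version[ins.dest]}"
        renamed.append(Instr(ins.name, dest, srcs))
    return renamed


if __name__ == "__main__":
    for ins in rename(PROGRAM):
        print(ins.name, ins.dest, ":=", ", ".join(ins.srcs))
```

After renaming, no two instructions write the same name and no instruction writes a name that an earlier one reads, so only the true (write-read) dependencies remain.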

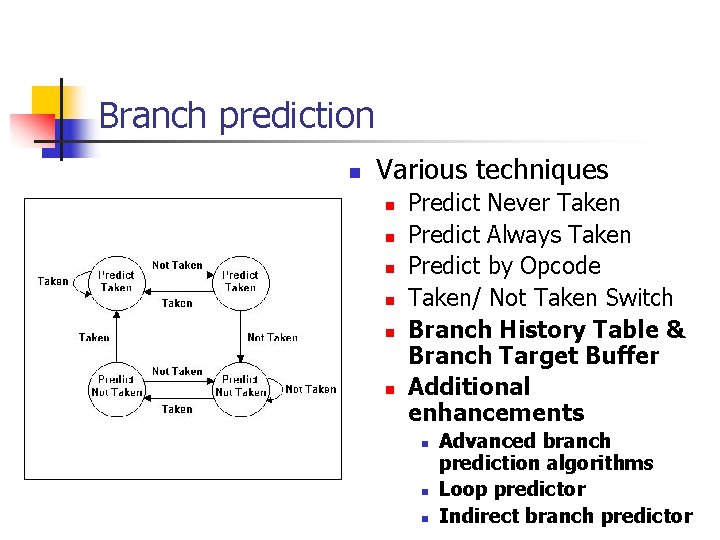

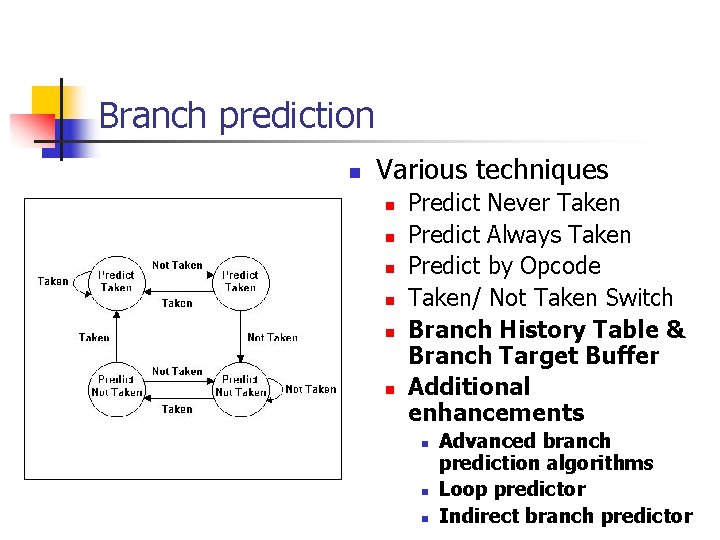

Branch prediction

- Various techniques
  - Predict Never Taken
  - Predict Always Taken
  - Predict by Opcode
  - Taken/Not Taken Switch
  - Branch History Table & Branch Target Buffer
- Additional enhancements
  - Advanced branch prediction algorithms
  - Loop predictor
  - Indirect branch predictor
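One common way to organise a branch history table entry is a 2-bit saturating counter. The sketch below assumes that scheme; the class name, the table size, the indexing by `pc % entries`, and the initial "weakly not taken" state are all illustrative choices, not details taken from the slides.

```python
class BranchHistoryTable:
    """Branch history table of 2-bit saturating counters (illustrative)."""

    def __init__(self, entries=16):
        self.entries = entries
        # Counter values 0 and 1 predict "not taken", 2 and 3 predict "taken".
        self.counters = [1] * entries

    def _index(self, pc):
        return pc % self.entries

    def predict(self, pc):
        return self.counters[self._index(pc)] >= 2

    def update(self, pc, taken):
        i = self._index(pc)
        if taken:
            self.counters[i] = min(3, self.counters[i] + 1)
        else:
            self.counters[i] = max(0, self.counters[i] - 1)


def accuracy(bht, pc, outcomes):
    """Fraction of correct predictions for one branch over a list of outcomes."""
    hits = 0
    for taken in outcomes:
        if bht.predict(pc) == taken:
            hits += 1
        bht.update(pc, taken)
    return hits / len(outcomes)


if __name__ == "__main__":
    # A loop-closing branch: taken 9 times, then not taken once; run 3 times.
    loop_outcomes = ([True] * 9 + [False]) * 3
    print(accuracy(BranchHistoryTable(), pc=0x40, outcomes=loop_outcomes))
```

With two bits of state, the single not-taken outcome at each loop exit does not flip the prediction for the next loop entry, which is the main advantage over a one-bit taken/not-taken switch.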

Post-RISC Architecture

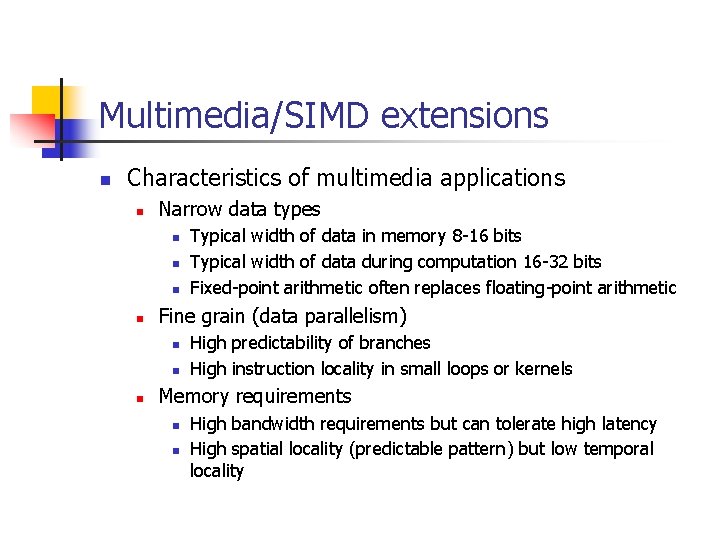

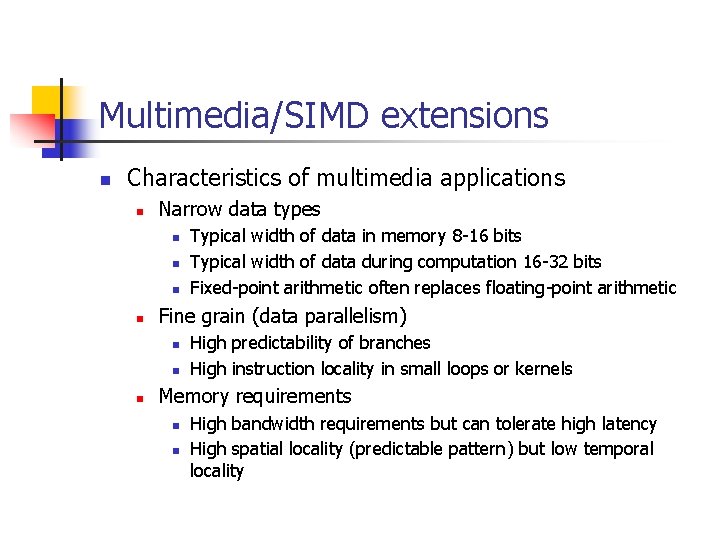

Multimedia/SIMD extensions

- Characteristics of multimedia applications
  - Narrow data types
    - Typical width of data in memory: 8-16 bits
    - Typical width of data during computation: 16-32 bits
    - Fixed-point arithmetic often replaces floating-point arithmetic
  - Fine grain (data parallelism)
  - High predictability of branches
  - High instruction locality in small loops or kernels
  - Memory requirements
    - High bandwidth requirements but can tolerate high latency
    - High spatial locality (predictable pattern) but low temporal locality
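A small example shows why 8-bit data in memory is typically widened to 16 bits during computation, and how fixed-point arithmetic stands in for floating point. The function below is a hypothetical illustration: it blends two 8-bit pixel values using a weight expressed as an integer in the range 0..256 (that is, a fraction with 8 fractional bits).

```python
def blend_u8(a, b, alpha):
    """Blend two 8-bit samples; alpha is a fixed-point weight in 0..256.

    alpha = 256 selects a, alpha = 0 selects b. The intermediate sum is at
    most 255 * 256 = 65280, so it fits in an unsigned 16-bit value.
    """
    assert 0 <= a <= 255 and 0 <= b <= 255 and 0 <= alpha <= 256
    wide = a * alpha + b * (256 - alpha)   # 16-bit intermediate
    assert wide < 1 << 16
    return wide >> 8                        # drop the 8 fractional bits


if __name__ == "__main__":
    print(blend_u8(200, 100, 128))  # equal-weight blend of 200 and 100
```

The same operation applied to every pixel of an image is the kind of narrow, regular, data-parallel work these extensions target.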

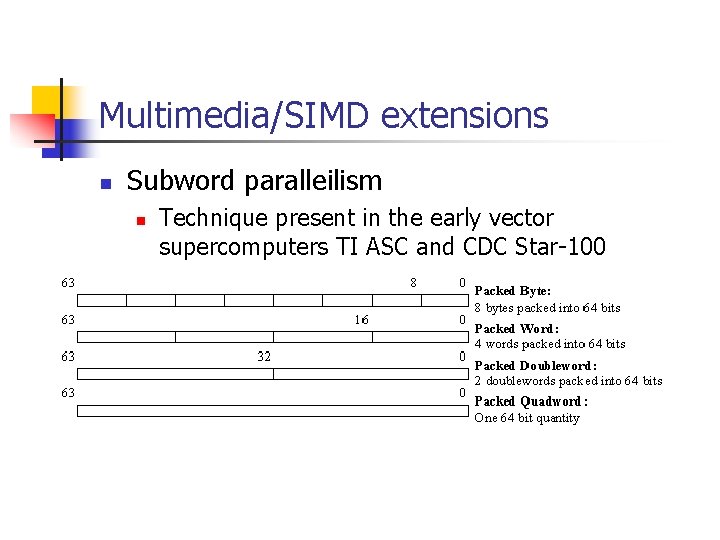

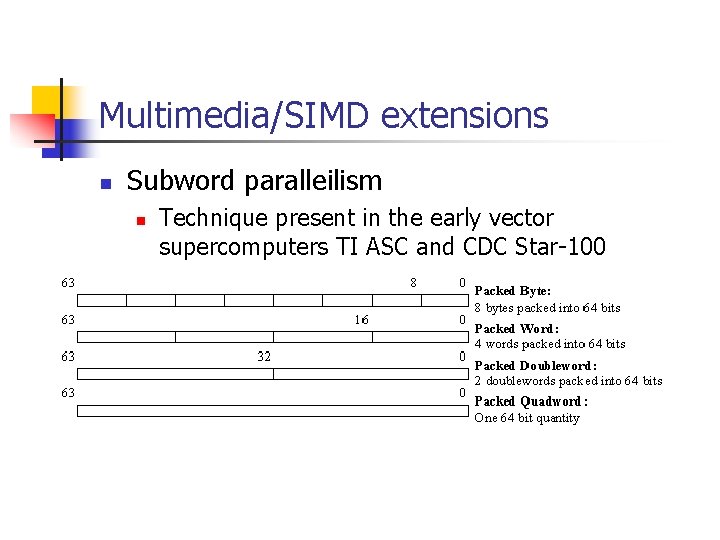

Multimedia/SIMD extensions

- Subword parallelism
  - Technique present in the early vector supercomputers TI ASC and CDC Star-100
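Subword parallelism can be imitated in software by packing several narrow values into one wide word and preventing carries from crossing lane boundaries. The sketch below adds four unsigned 8-bit lanes held in a 32-bit integer; the helper names are hypothetical, and real SIMD hardware performs this in a single instruction rather than with masks.

```python
HIGH_BITS = 0x80808080  # most significant bit of each 8-bit lane
LOW_BITS = 0x7F7F7F7F   # the remaining 7 bits of each lane


def pack(lanes):
    """Pack four values 0..255 into one 32-bit word (lane 0 in the low byte)."""
    return int.from_bytes(bytes(lanes), "little")


def unpack(word):
    """Split a 32-bit word back into its four 8-bit lanes."""
    return list(word.to_bytes(4, "little"))


def packed_add_u8x4(x, y):
    """Lane-wise wrap-around addition of four 8-bit lanes in one operation.

    Adding only the low 7 bits of each lane cannot carry into the next lane;
    the top bit of each lane is then fixed up with XOR.
    """
    low = (x & LOW_BITS) + (y & LOW_BITS)
    return (low ^ ((x ^ y) & HIGH_BITS)) & 0xFFFFFFFF


if __name__ == "__main__":
    total = packed_add_u8x4(pack([1, 2, 250, 255]), pack([1, 3, 10, 1]))
    print(unpack(total))
```

Each lane wraps modulo 256 independently, so an overflow in one lane never disturbs its neighbour.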

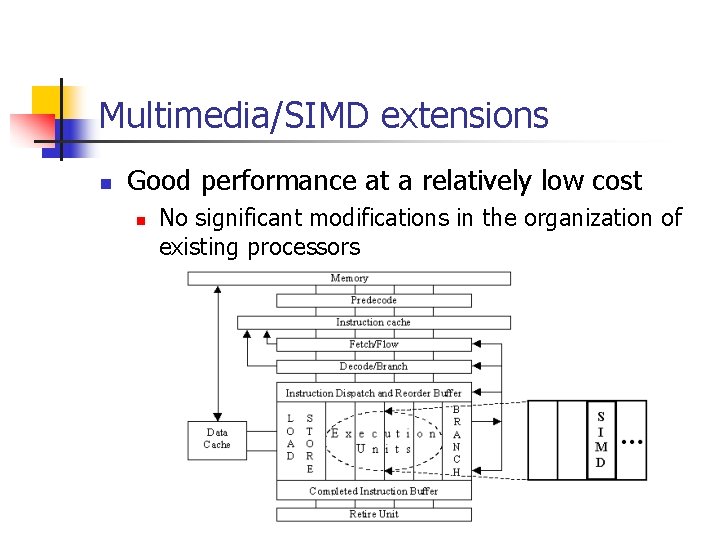

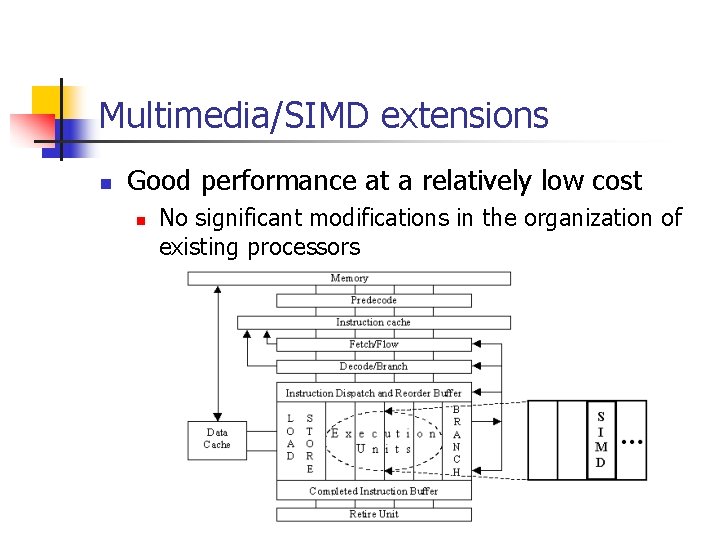

Multimedia/SIMD extensions

- Good performance at a relatively low cost
  - No significant modifications in the organization of existing processors

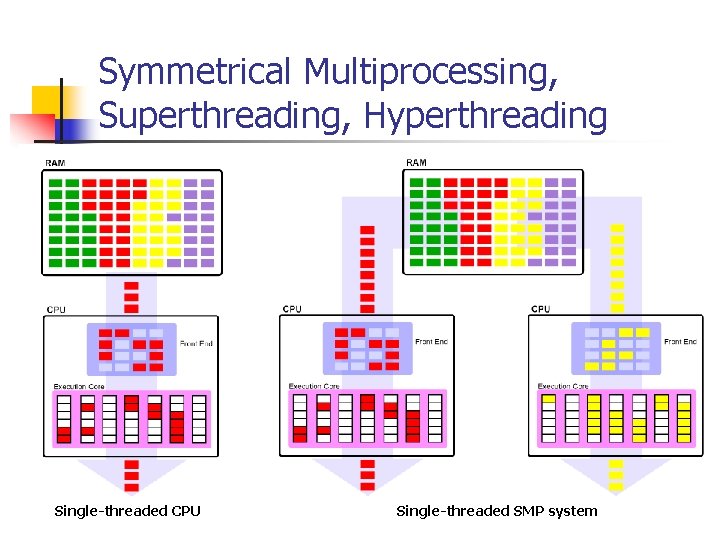

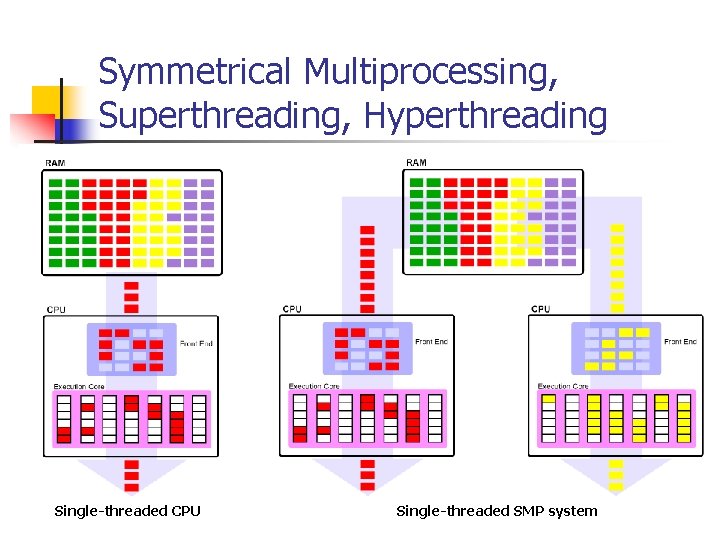

Symmetrical Multiprocessing, Superthreading, Hyperthreading

- Single-threaded CPU
- Single-threaded SMP system

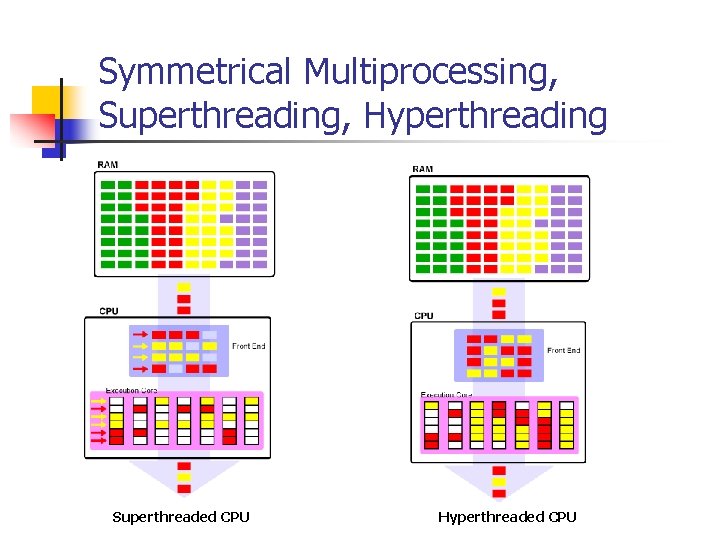

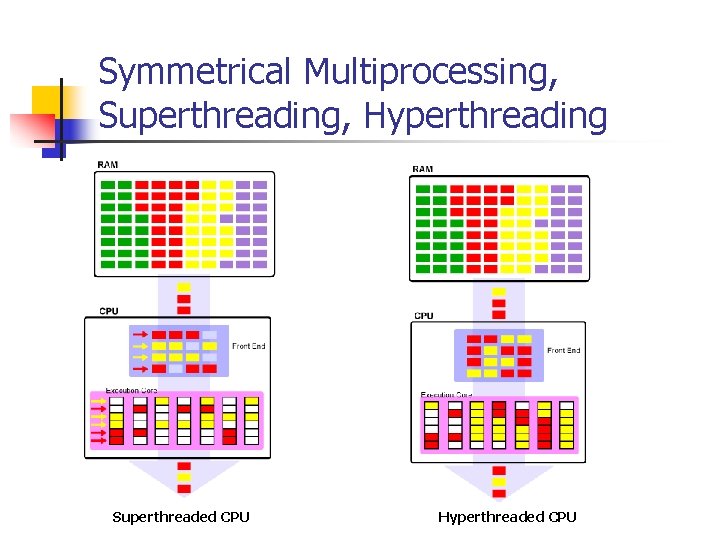

Symmetrical Multiprocessing, Superthreading, Hyperthreading

- Superthreaded CPU
- Hyperthreaded CPU

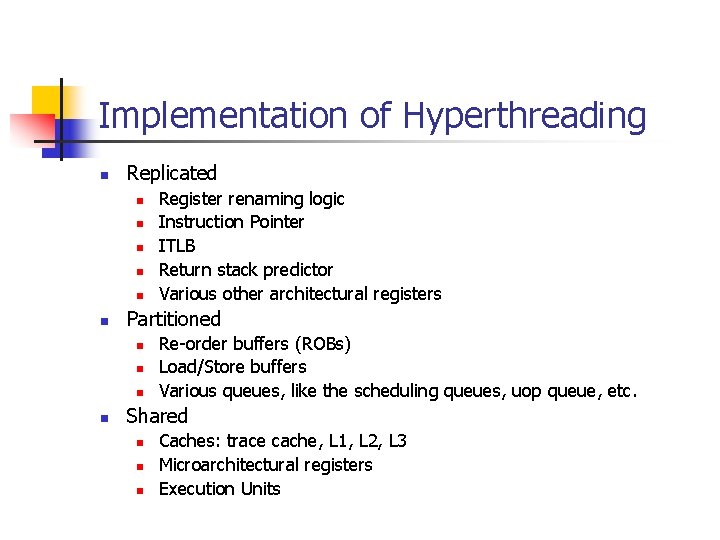

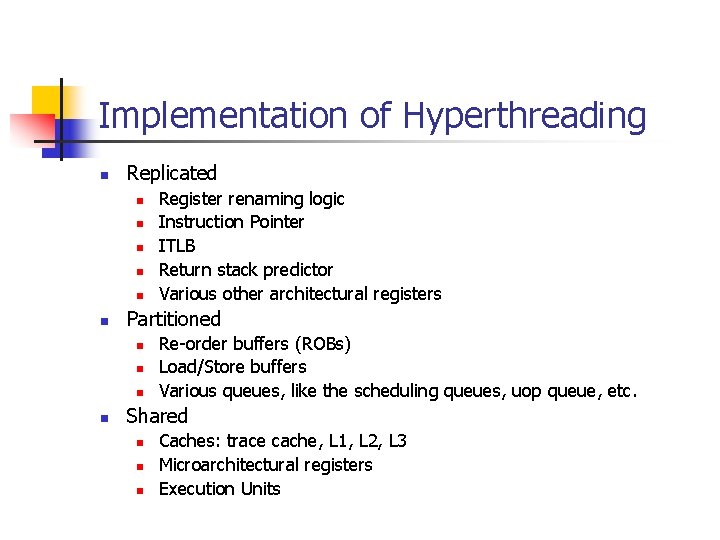

Implementation of Hyperthreading

- Replicated
  - Register renaming logic
  - Instruction Pointer
  - ITLB
  - Return stack predictor
  - Various other architectural registers
- Partitioned
  - Re-order buffers (ROBs)
  - Load/Store buffers
  - Various queues, like the scheduling queues, uop queue, etc.
- Shared
  - Caches: trace cache, L1, L2, L3
  - Microarchitectural registers
  - Execution Units

Approaches to 64-bit processing

- IA-64 (VLIW/EPIC)
- AMD x86-64 (Intel 64)

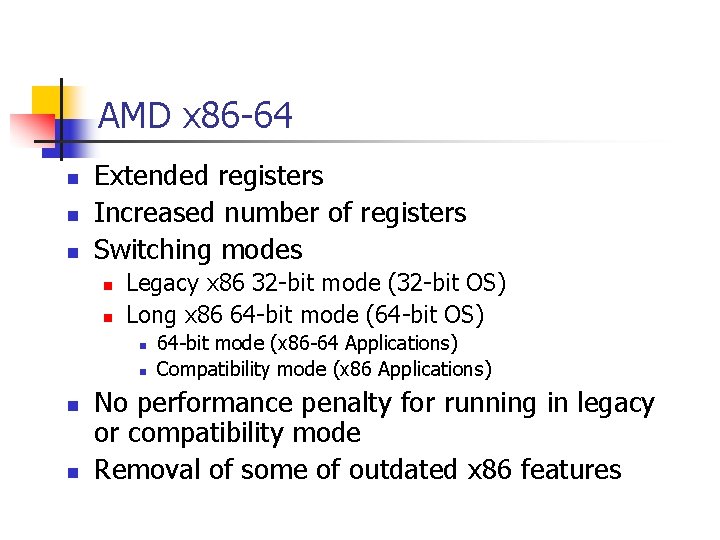

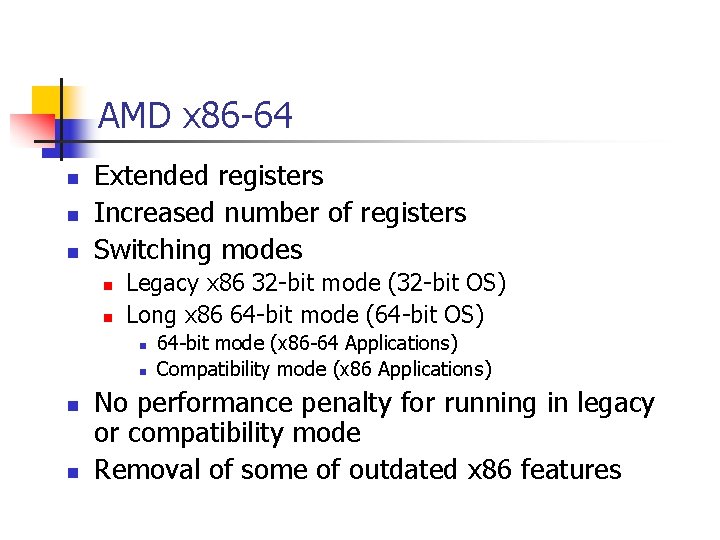

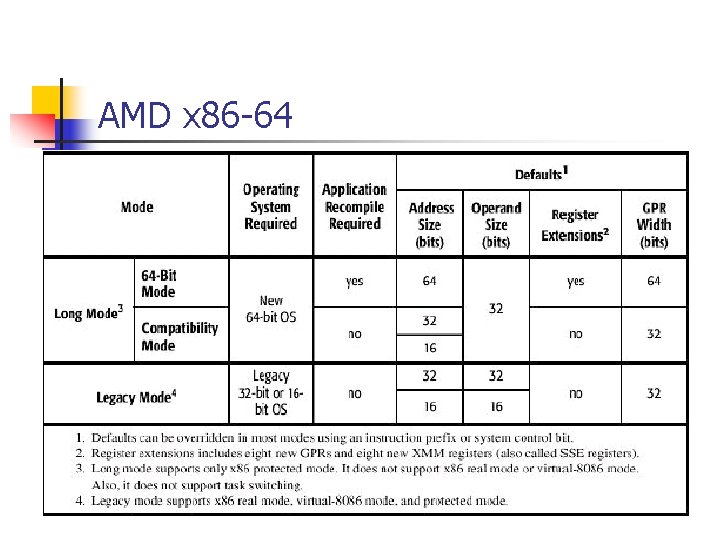

AMD x86-64

- Extended registers
- Increased number of registers
- Switching modes
  - Legacy x86 32-bit mode (32-bit OS)
  - Long x86 64-bit mode (64-bit OS)
    - 64-bit mode (x86-64 applications)
    - Compatibility mode (x86 applications)
- No performance penalty for running in legacy or compatibility mode
- Removal of some outdated x86 features

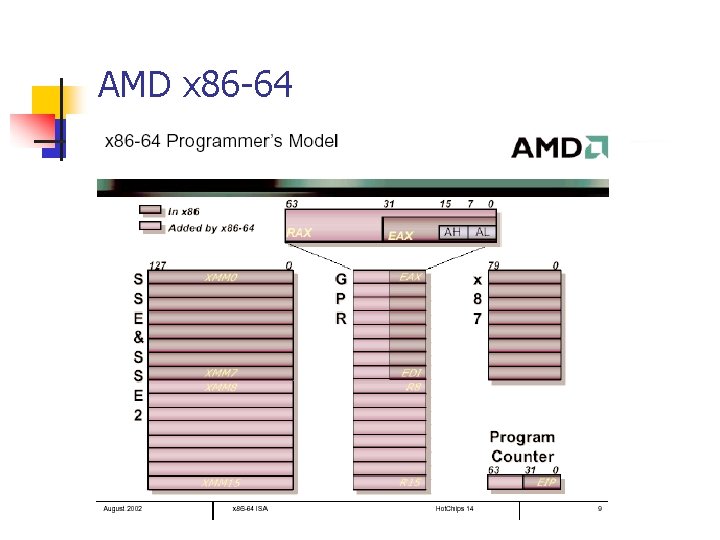

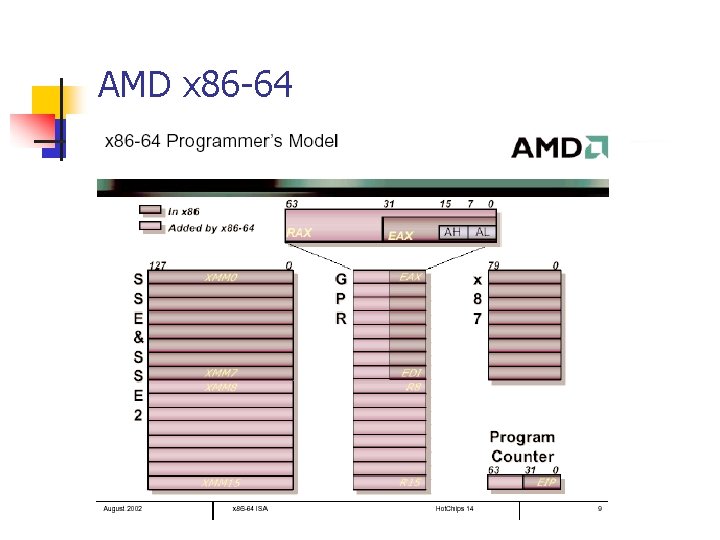

AMD x86-64

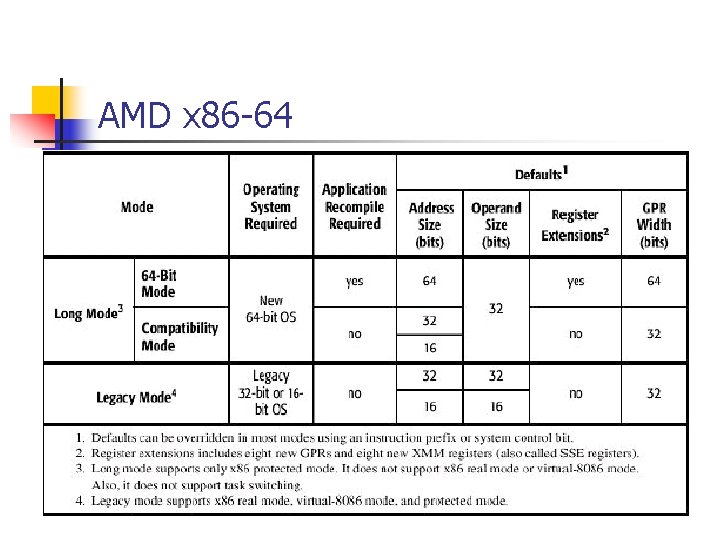

AMD x86-64

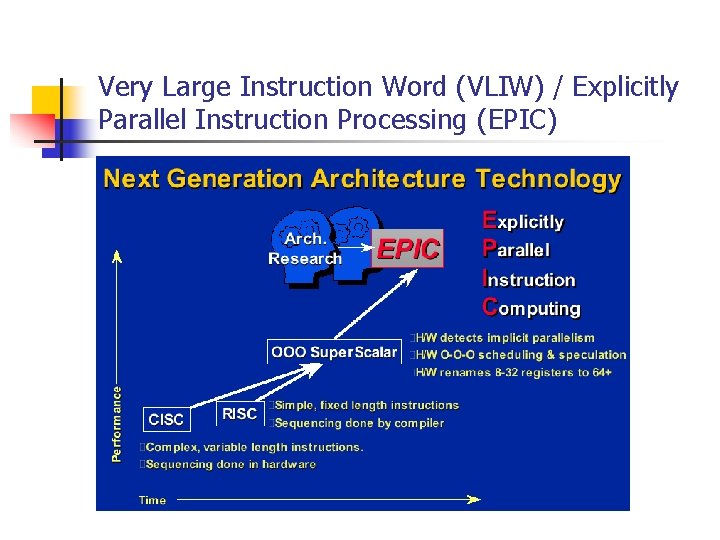

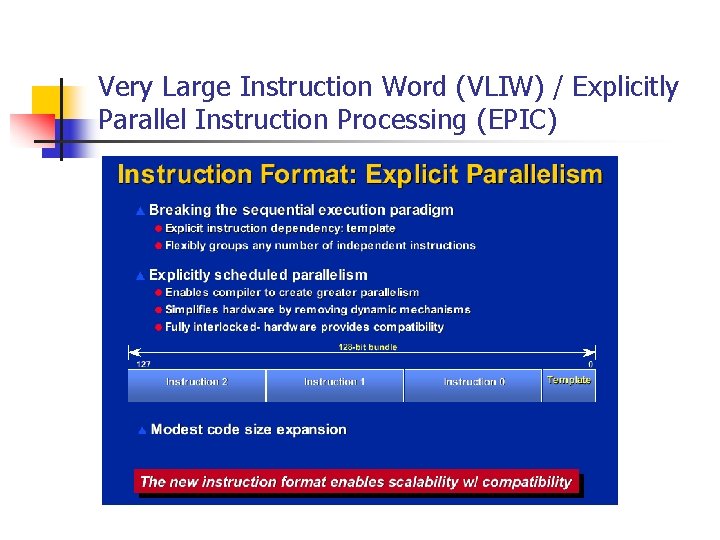

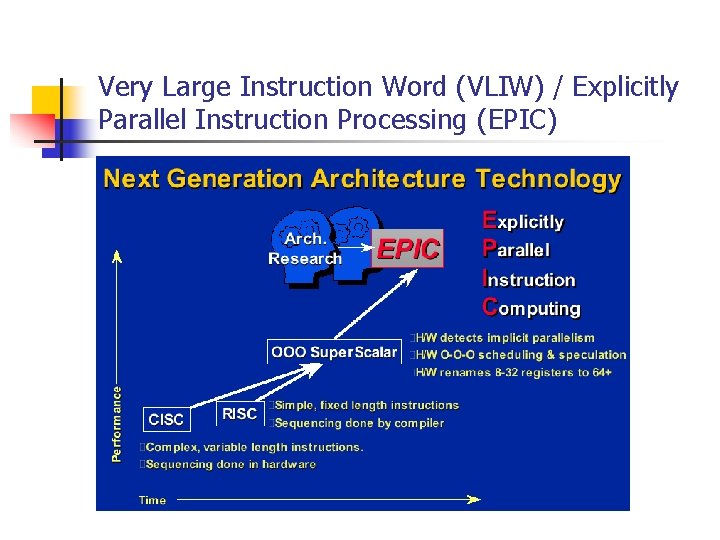

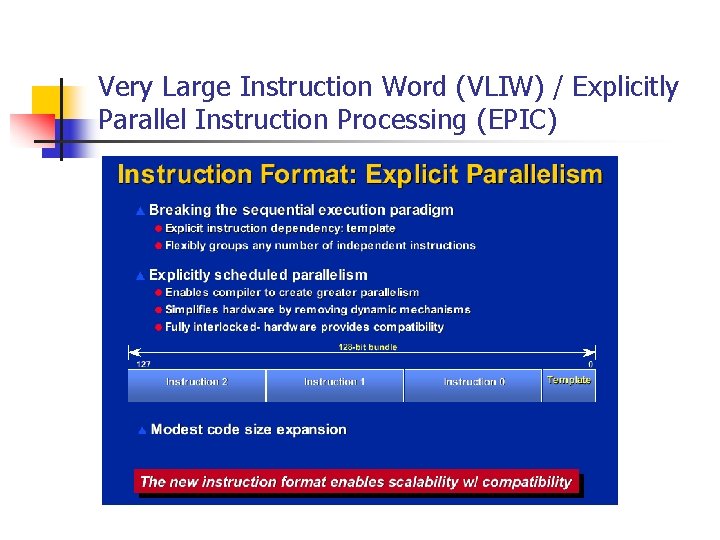

Very Long Instruction Word (VLIW) / Explicitly Parallel Instruction Computing (EPIC)

Very Long Instruction Word (VLIW) / Explicitly Parallel Instruction Computing (EPIC)

- Current performance limiters
  - Branches
  - Memory latency
  - Implicit parallel instruction processing

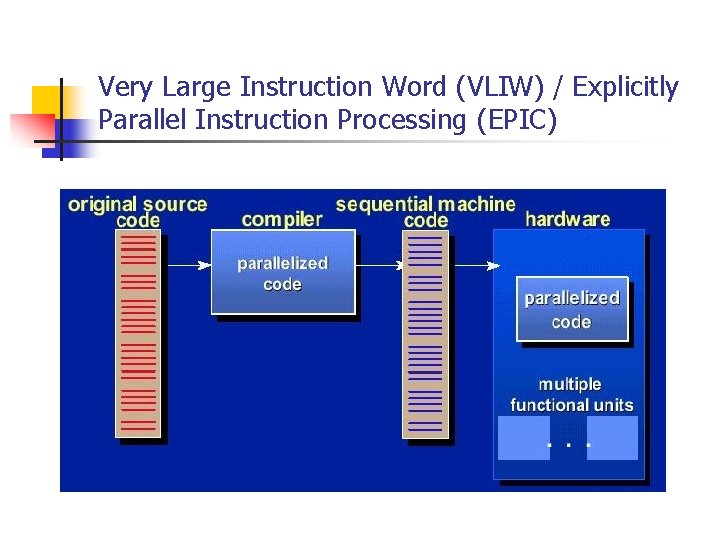

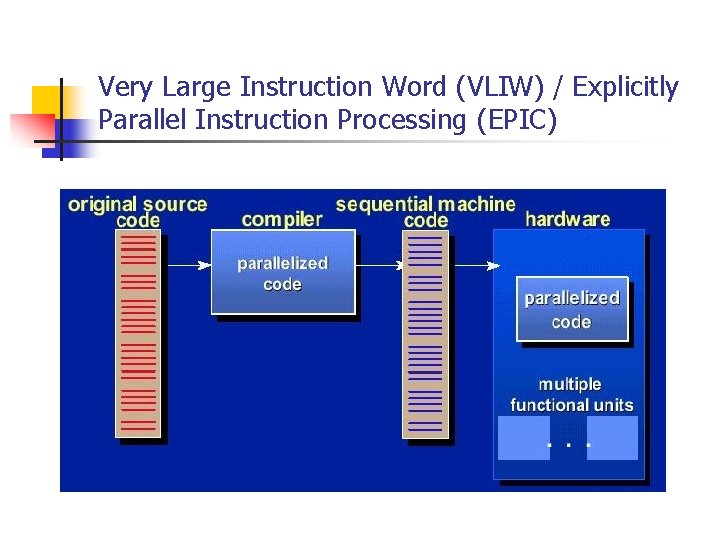

Very Long Instruction Word (VLIW) / Explicitly Parallel Instruction Computing (EPIC)

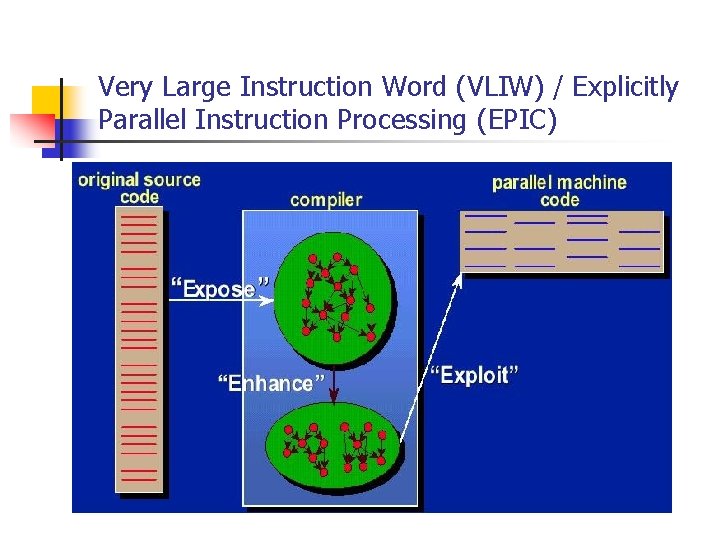

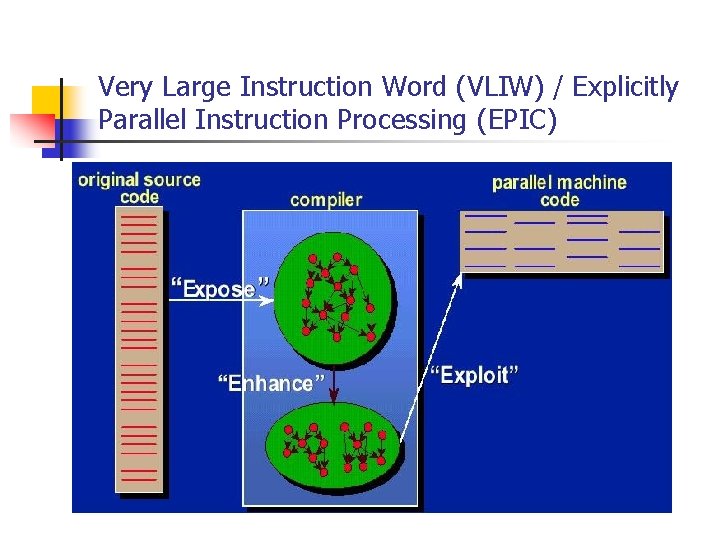

Very Long Instruction Word (VLIW) / Explicitly Parallel Instruction Computing (EPIC)

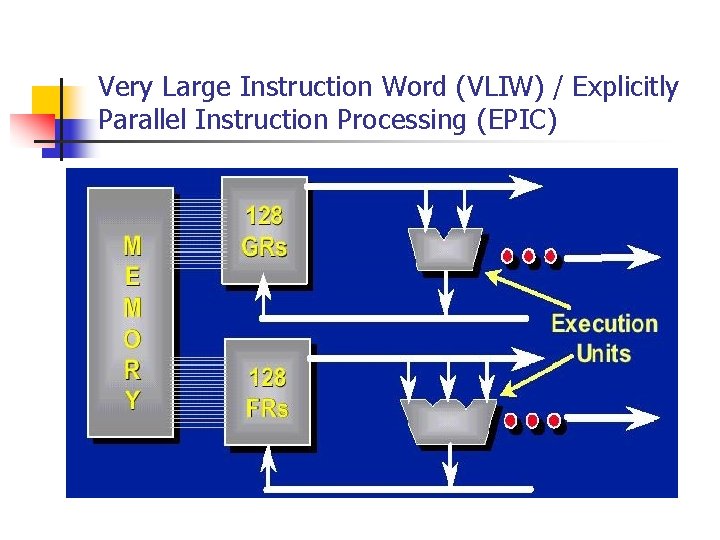

Very Long Instruction Word (VLIW) / Explicitly Parallel Instruction Computing (EPIC)

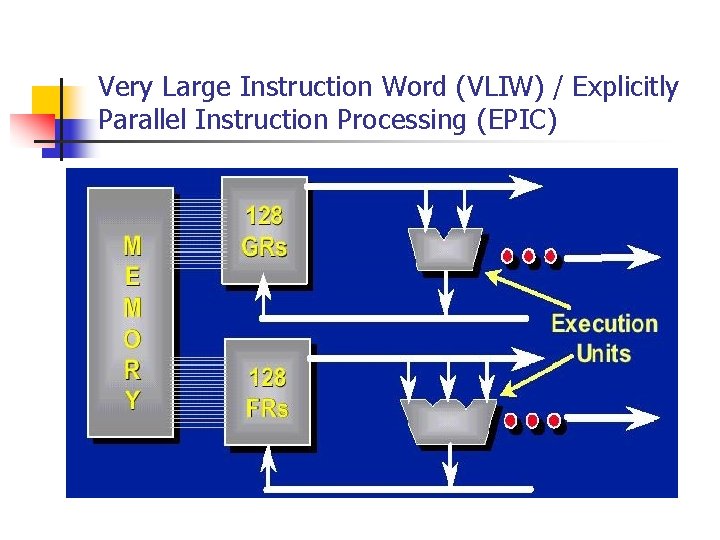

Very Long Instruction Word (VLIW) / Explicitly Parallel Instruction Computing (EPIC)

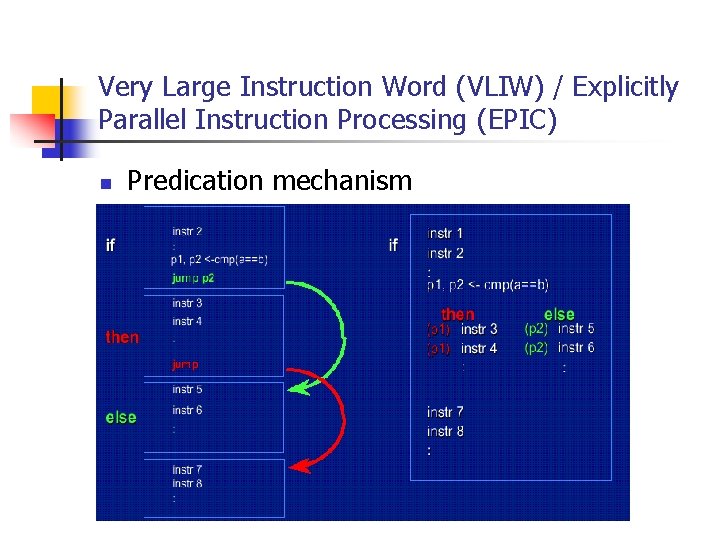

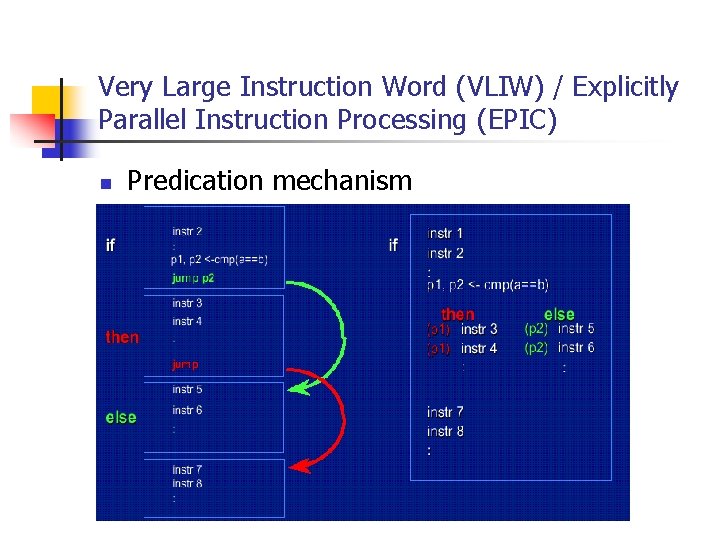

Very Long Instruction Word (VLIW) / Explicitly Parallel Instruction Computing (EPIC)

- Predication mechanism
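The idea behind predication is that a compare sets predicate bits, both arms of a conditional are issued as straight-line code, and each instruction commits its result only if its guarding predicate is true. The toy interpreter below is a sketch of that idea only; the instruction format, register names, and predicate names `p1`/`p2` are illustrative and do not reproduce IA-64 encoding.

```python
def run_predicated(instructions, regs, preds):
    """Execute straight-line code in which every instruction has a guard.

    Each instruction is (predicate_name, dest_register, operation). The
    operation is always evaluated; its result is written back only when
    the guard predicate is true. This `if` models the hardware commit
    gate, not a branch in the program.
    """
    for pred, dest, op in instructions:
        value = op(regs)
        if preds[pred]:
            regs[dest] = value
    return regs


def abs_diff(a, b):
    """Compute |a - b| without a branch in the instruction stream."""
    regs = {"a": a, "b": b, "r": 0}
    # The compare sets a pair of complementary predicates.
    preds = {"p1": a > b}
    preds["p2"] = not preds["p1"]
    code = [
        ("p1", "r", lambda r: r["a"] - r["b"]),  # "then" arm
        ("p2", "r", lambda r: r["b"] - r["a"]),  # "else" arm
    ]
    return run_predicated(code, regs, preds)["r"]


if __name__ == "__main__":
    print(abs_diff(7, 3), abs_diff(3, 7))
```

Because there is no branch left to predict, there is nothing to mispredict; the cost is that both arms occupy issue slots.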

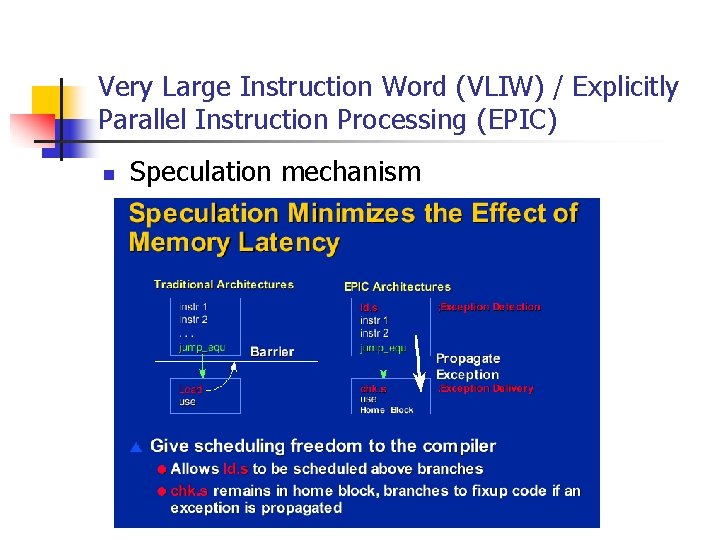

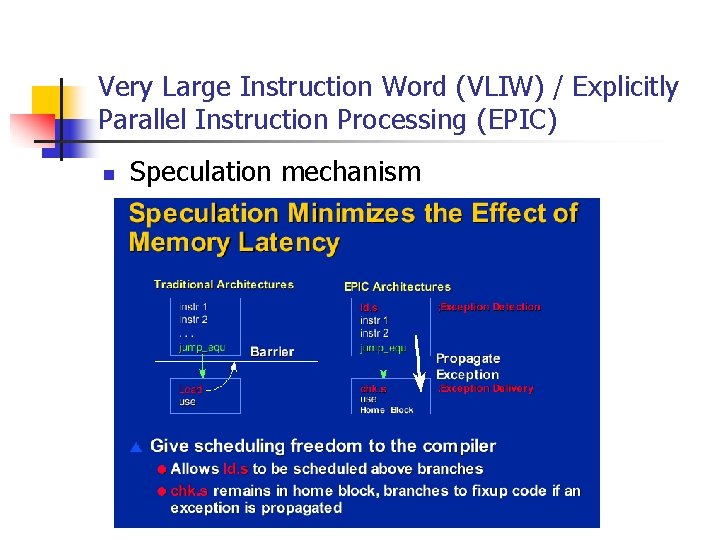

Very Long Instruction Word (VLIW) / Explicitly Parallel Instruction Computing (EPIC)

- Speculation mechanism

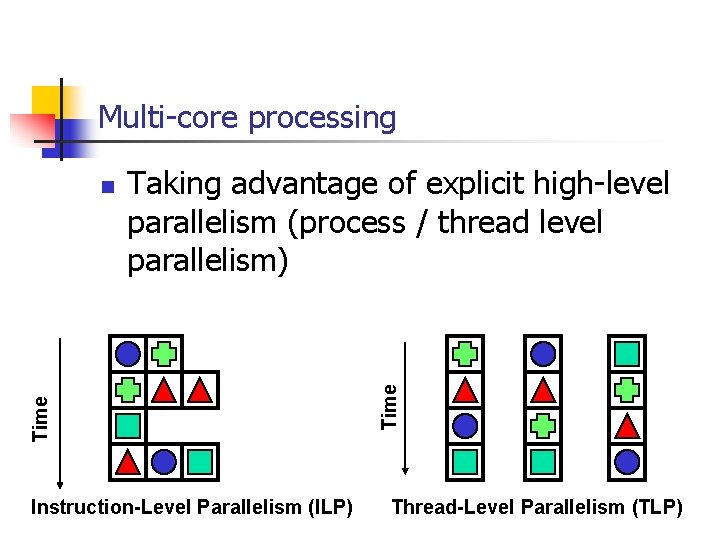

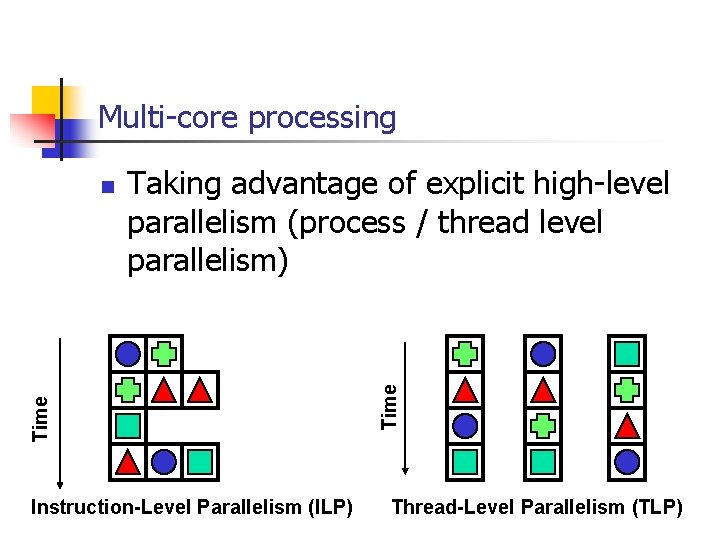

Multi-core processing

- Instruction-Level Parallelism (ILP)
- Thread-Level Parallelism (TLP)
  - Taking advantage of explicit high-level parallelism (process / thread level parallelism)

Intel Core microarchitecture

- Combination and improvement of solutions employed in earlier Intel architectures
  - mainly Pentium M (successor of the P6/Pentium Pro microarchitecture)
  - Pentium 4
- Design for multicore and lowered power consumption
  - No simplification of the core structure in favor of multiple cores on a single die

Intel Core microarchitecture: features summary

- Wide Dynamic Execution
  - 14-stage core pipeline
  - 4 decoders to decode up to 5 instructions per cycle
  - 3 clusters of arithmetic logic units
  - macro-fusion and micro-fusion to improve front-end throughput
  - peak dispatching rate of up to 6 micro-ops per cycle
  - peak retirement rate of up to 4 micro-ops per cycle
  - advanced branch prediction algorithms
  - stack pointer tracker to improve efficiency of procedure entries and exits
- Advanced Smart Cache
  - 2nd level cache up to 4 MB with 16-way associativity
  - 256-bit internal data path from L2 to L1 data caches
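The cache figures above translate into a set count once a line size is fixed. The slide gives the capacity (4 MB) and associativity (16-way) but not the line size, so the 64-byte line used below is an assumption made for illustration; the helper name is likewise hypothetical.

```python
def cache_geometry(size_bytes, ways, line_bytes):
    """Derive set count and address-bit split for a set-associative cache.

    Assumes size, ways and line size are powers of two.
    """
    sets = size_bytes // (ways * line_bytes)
    return {
        "sets": sets,
        "offset_bits": line_bytes.bit_length() - 1,  # log2(line_bytes)
        "index_bits": sets.bit_length() - 1,         # log2(sets)
    }


if __name__ == "__main__":
    # 4 MB, 16-way from the slide; the 64-byte line is an assumed value.
    print(cache_geometry(4 * 1024 * 1024, ways=16, line_bytes=64))
```

Under that assumption the cache has 4096 sets, so 6 address bits select the byte within a line and 12 bits select the set.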

Intel Core microarchitecture: features summary

- Smart Memory Access
  - hardware prefetchers to reduce effective latency of 2nd level cache misses
  - hardware prefetchers to reduce effective latency of 1st level data cache misses
  - "memory disambiguation" to improve efficiency of speculative instruction execution
- Advanced Digital Media Boost
  - single-cycle inter-completion latency of most 128-bit SIMD instructions
  - up to eight single-precision floating-point operations per cycle
  - 3 issue ports available for dispatching SIMD instructions for execution

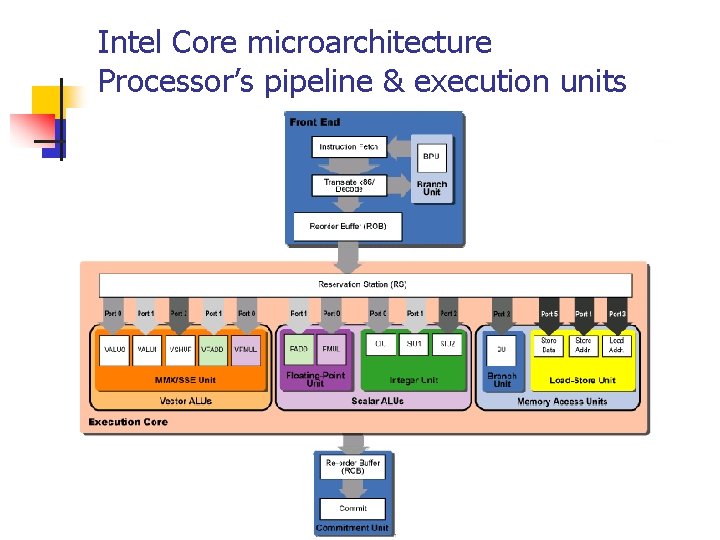

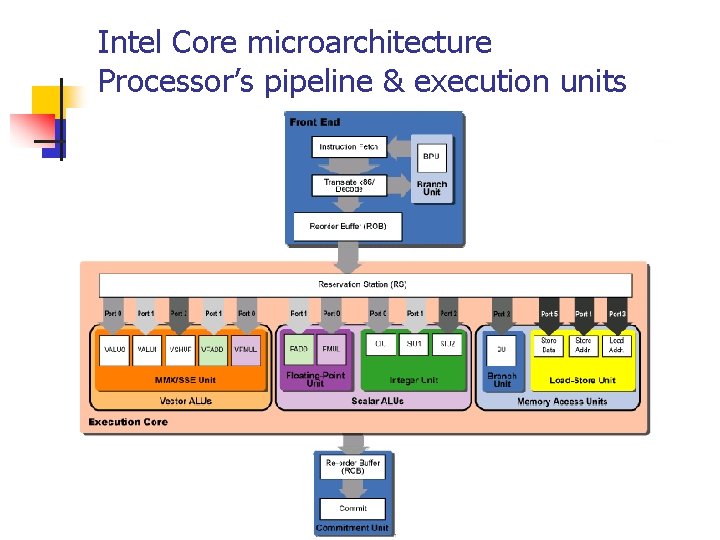

Intel Core microarchitecture: processor's pipeline & execution units

Intel Core microarchitecture: selected new features

- Instruction fusion
  - Macro-fusion: fusion of certain types of x86 instructions (compare and test, paired with the conditional jump that follows) into a single micro-op in the predecode phase
  - Micro-op fusion (first introduced with Pentium M): fusion/pairing of the micro-ops generated during translation of certain x86 instructions that decode into two micro-ops (load-and-op instructions, and stores, which split into store-address and store-data)

Intel Core microarchitecture: selected new features

- Memory disambiguation
  - Data-stream-oriented speculative execution as a way of dealing with false memory aliasing
    - The memory disambiguator predicts which loads will not depend on any previous stores
    - When the disambiguator predicts that a load does not have such a dependency, the load takes its data from the L1 data cache
    - Eventually, the prediction is verified. If an actual conflict is detected, the load and all succeeding instructions are re-executed
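The predict, execute, verify, replay sequence described above can be sketched for a single older store and a single younger load. Everything here is a simplified illustration: the function name is hypothetical, memory is a plain dictionary standing in for the L1 data cache, and the prediction is passed in as a flag rather than produced by a real predictor.

```python
def execute_with_disambiguation(memory, store, load_addr, predict_independent):
    """Run one older store and one younger load, returning (value, replayed).

    store is an (address, value) pair that precedes the load in program
    order, but whose address is not yet known when the load could issue.
    """
    store_addr, store_value = store
    replayed = False
    if predict_independent:
        # Speculation: the load issues early and reads the cache directly.
        value = memory.get(load_addr, 0)
        memory[store_addr] = store_value       # store address resolves later
        if store_addr == load_addr:
            # Verification failed: the load really depended on the store,
            # so it is re-executed and now sees the stored value.
            value = memory[load_addr]
            replayed = True
    else:
        # Conservative path: the load waits until the store has completed.
        memory[store_addr] = store_value
        value = memory.get(load_addr, 0)
    return value, replayed


if __name__ == "__main__":
    print(execute_with_disambiguation({0x10: 1, 0x20: 2}, (0x10, 99), 0x20, True))
    print(execute_with_disambiguation({0x10: 1, 0x20: 2}, (0x20, 99), 0x20, True))
```

In the first call the addresses differ, so the early load is correct and no replay is needed; in the second they alias, so the load is replayed and returns the stored value.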

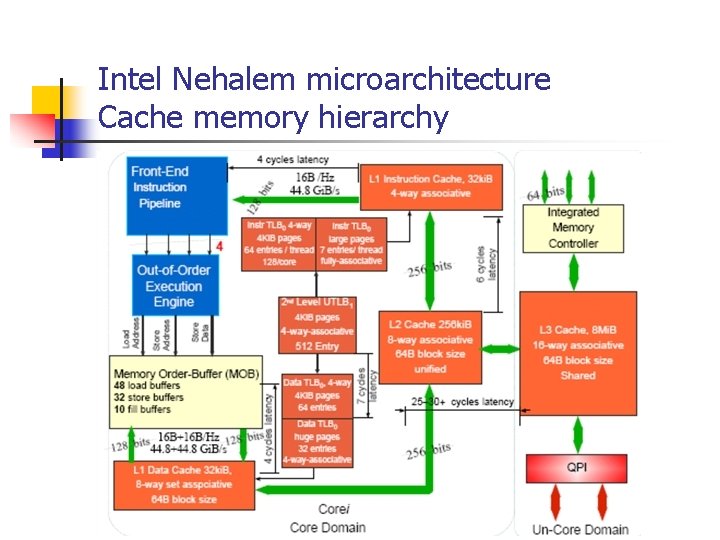

Intel Nehalem microarchitecture: feature summary

- Enhanced processor core
  - improved branch prediction and lower recovery cost after misprediction
  - enhancements in loop streaming to improve front-end performance and reduce power consumption
  - deeper buffering in the out-of-order engine to sustain higher levels of instruction-level parallelism
  - enhanced execution units with accelerated processing of CRC, string/text and data shuffling
- Hyper-threading technology (SMT)
  - support for two hardware threads (logical processors) per core

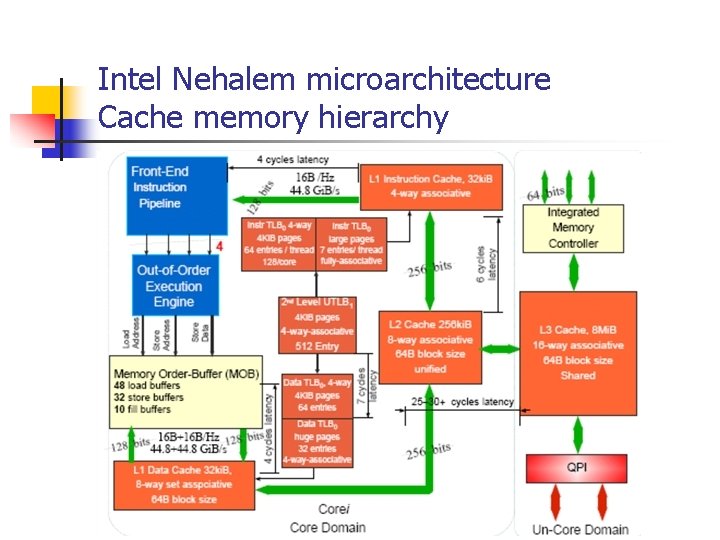

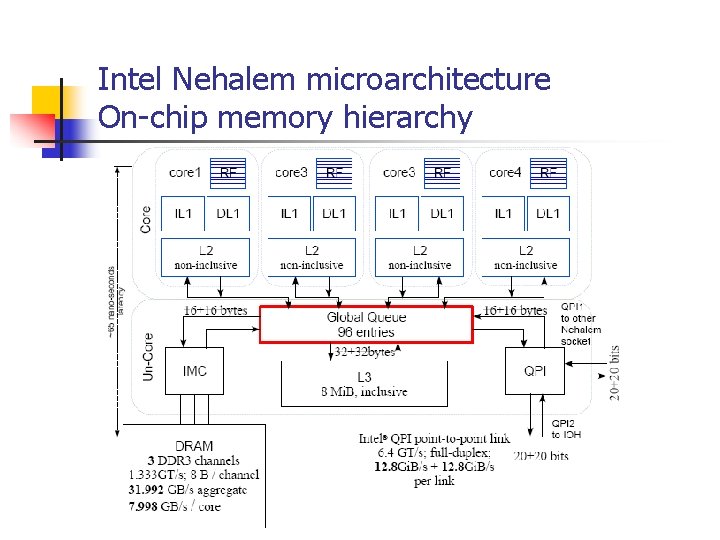

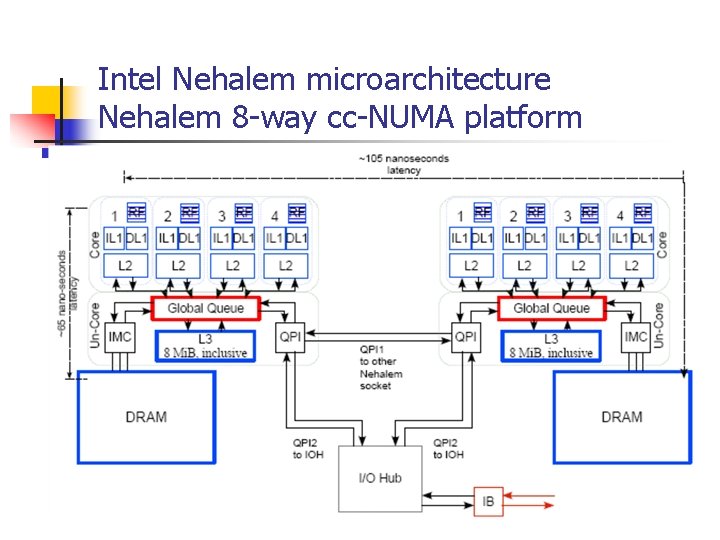

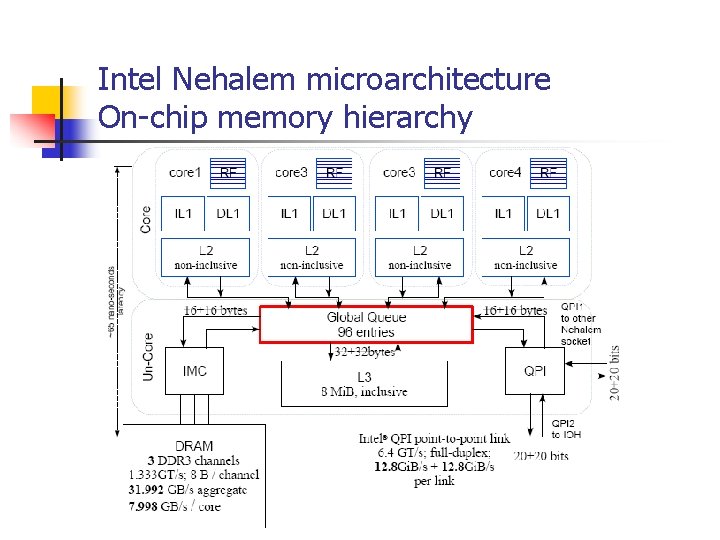

Intel Nehalem microarchitecture: feature summary

- Smarter Memory Access
  - integrated (on-chip) memory controller supporting low-latency access to local system memory and overall scalable memory bandwidth (previously the memory controller was hosted on a separate chip and it was common to all dual or quad socket systems)
  - new cache hierarchy organization with shared, inclusive L3 to reduce snoop traffic
  - two-level TLBs and increased TLB sizes
  - faster unaligned memory access

Intel Nehalem microarchitecture: feature summary

- Dedicated power management
  - integrated micro-controller with embedded firmware which manages power consumption
  - embedded real-time sensors for temperature, current, and power
  - integrated power gate to turn off/on per-core power consumption

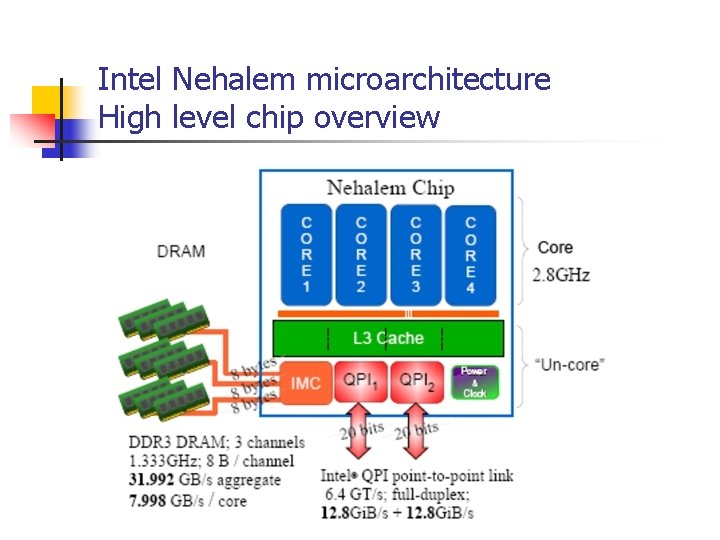

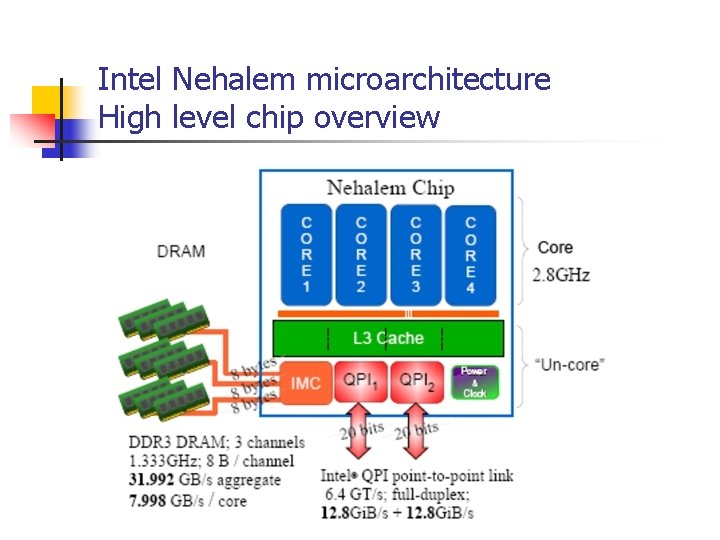

Intel Nehalem microarchitecture: high-level chip overview

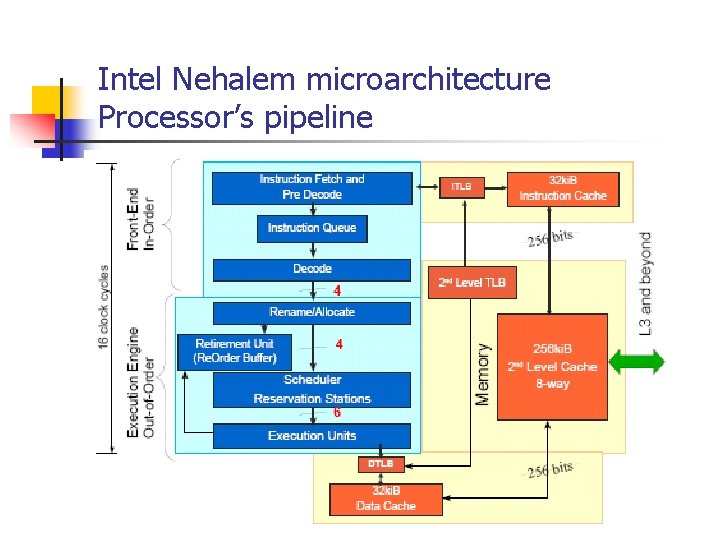

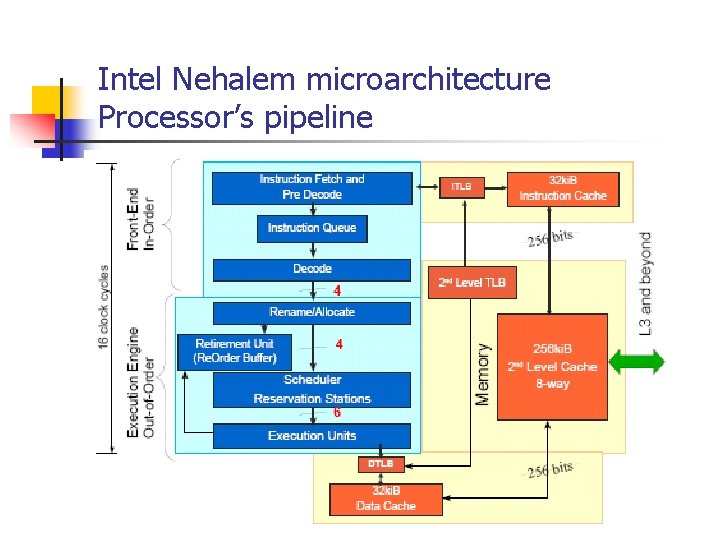

Intel Nehalem microarchitecture: processor's pipeline

Intel Nehalem microarchitecture: in-order front end

Intel Nehalem microarchitecture: out-of-order execution engine

Intel Nehalem microarchitecture: cache memory hierarchy

Intel Nehalem microarchitecture: on-chip memory hierarchy

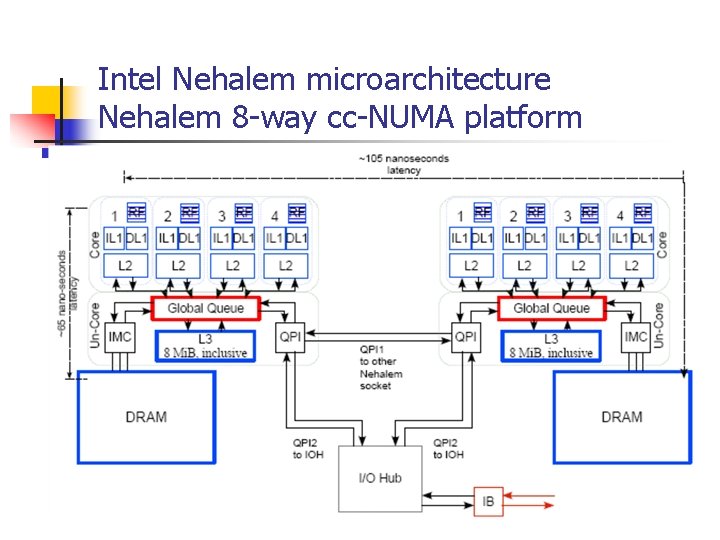

Intel Nehalem microarchitecture: Nehalem 8-way cc-NUMA platform