
Models of Assessment for University Student Learning Goal 2: Knowledge of the Physical and Natural World
John Jaszczak & Mike Meyer
Coffee Chat, October 12, 2017

Goal 2: Knowledge of the Physical and Natural World
• 2.1 Scientific Knowledge
• 2.2 Quantitative Problem Solving
• 2.3 Interpretation of Mathematical Representations
• 2.4 Assumptions (Quantitative Literacy)
• 2.5 Data Analysis
• 2.6 Proposes Solutions/Models/Hypotheses

Four Models:
1. Random Sampling of Student Work with Committee-Based Assessment
2. Department-Level Assessment and Reporting
3. Instructor-Level Assessment and Reporting
4. Scantron (Big Data) Assessment

1. Random Sampling of Student Work with Committee-Based Assessment
• Based on the HASS model of assessment
• Preserves course/instructor anonymity
• Relatively simple for instructors
• Instructors need to carefully identify relevant goal criteria
• Very challenging for assessors
• Variability of assignment types and sizes
• Expert content knowledge needed
• Small sample size per class (limits reliability of data)
• Little useful feedback to instructors for improving student learning

2. Department-Level Assessment and Reporting
• Outgrowth of evaluation of the “random sampling” assessment model
• Embraced by the Mathematical Sciences Department
• Actionable data
• Example report available upon request

3. Instructor-Level Assessment and Reporting
• Assessment is done by a content expert
• No sampling necessary
• More work on the part of the instructor
• Directly actionable data
• Course and instructor are more identifiable
• Example report available on Canvas

A Model of Instructor-Level Assessment: PH 1110, Fall 2016
Mike Meyer, Director, William G. Jackson Center for Teaching and Learning

Class description
• Introductory, first-term, algebra-based physics course
• 56 students (one did not take the final exam)
• Survey of Mechanics, Sound, Fluids, and Thermodynamics

Plan: Final Exam
• Q1-Q8: Multiple choice
  • Definitions, units, etc.
  • Drawn from pools, so variable
• Q9-Q12 and Q29: Targeted subgoal questions
  • Same questions for all students
• Q13-Q28: Numerical problems
  • Drawn from pools by topic
  • Values vary by student
  • Initially graded by computer as 100% right or wrong
  • Students meet to discuss their work and receive partial credit

Scientific Knowledge (Goal 2.1)
• Level 1: score ≥ 50% (4/8) on the multiple-choice section of the final exam (Q1-Q8); 100% of students met this criterion
• Level 2: score ≥ 75% (6/8) on the multiple-choice section of the final exam (Q1-Q8); 94% of students met this criterion

Problem Solving/Modeling (Goals 2.2 and 2.6)
• Level 1: score ≥ 40% (160/400) on the problem portion of the exam after partial-credit follow-up; 96% (53/55) of students met this criterion
• Level 2: score ≥ 60% (240/400) on the problem portion of the exam after partial-credit follow-up; 89% (49/55) of students met this criterion
• Level 3: score ≥ 80% (320/400) on the problem portion of the exam after partial-credit follow-up; 51% (28/55) of students met this criterion
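Each level above is a count of students whose score clears a fixed cut-off. A minimal Python sketch of that tally, assuming the per-student problem-portion scores (out of 400) are available as a list; the function name `percent_meeting` and the sample scores are hypothetical, not course data:

```python
def percent_meeting(scores, max_points, cutoff_pct):
    """Return (count, percent) of students scoring >= cutoff_pct% of max_points.

    Comparing score * 100 with cutoff_pct * max_points keeps the test in
    integer arithmetic, so a score exactly at the cut-off always counts.
    """
    count = sum(1 for s in scores if s * 100 >= cutoff_pct * max_points)
    return count, 100.0 * count / len(scores)


# Hypothetical problem-portion scores out of 400 (not real course data).
scores = [150, 175, 250, 300, 320, 390]

for level, cutoff in [(1, 40), (2, 60), (3, 80)]:
    count, pct = percent_meeting(scores, 400, cutoff)
    print(f"Level {level} (>= {cutoff}%): {count}/{len(scores)} = {pct:.0f}%")
```

With the sample list, this reports 5/6, 4/6, and 2/6 students for Levels 1 to 3.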

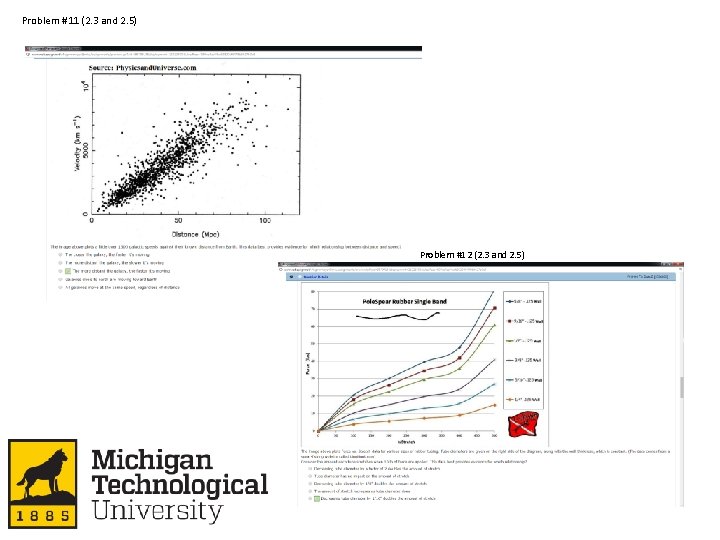

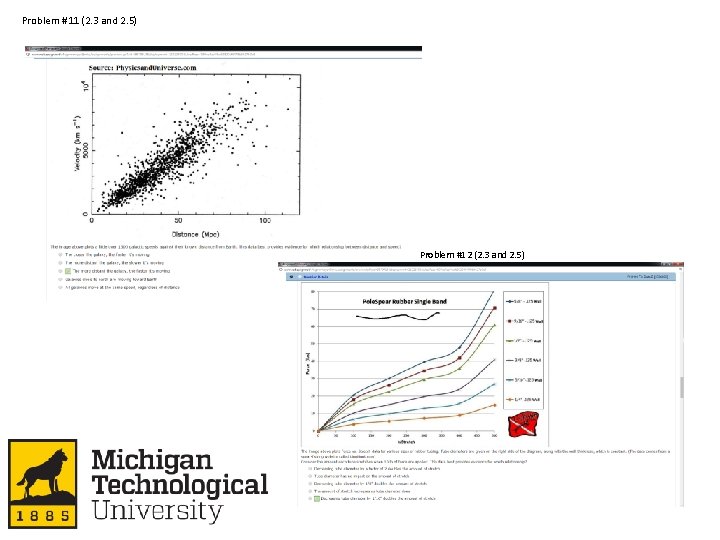

Problem #11 (2.3 and 2.5)
Problem #12 (2.3 and 2.5)

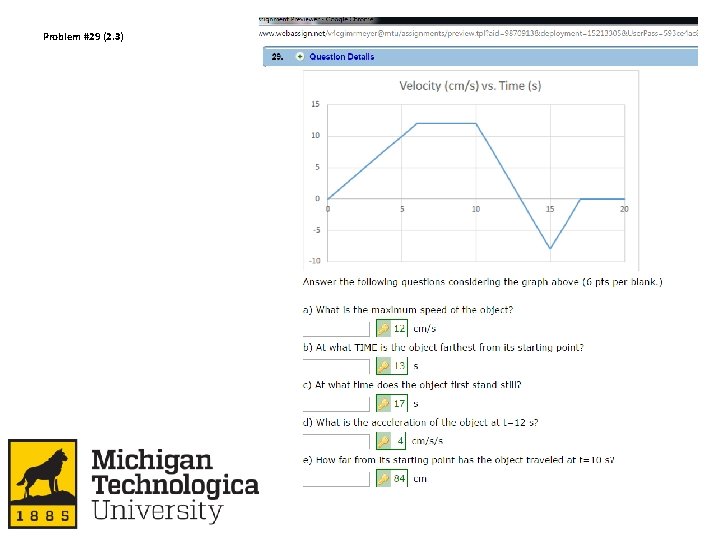

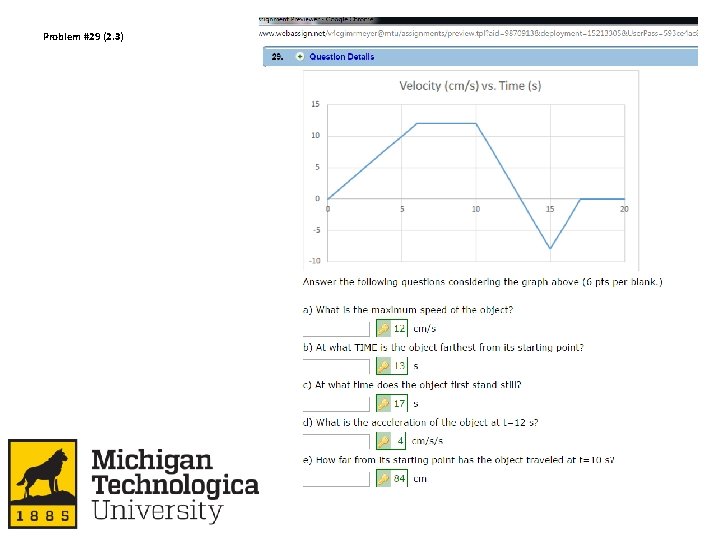

Problem #29 (2.3)

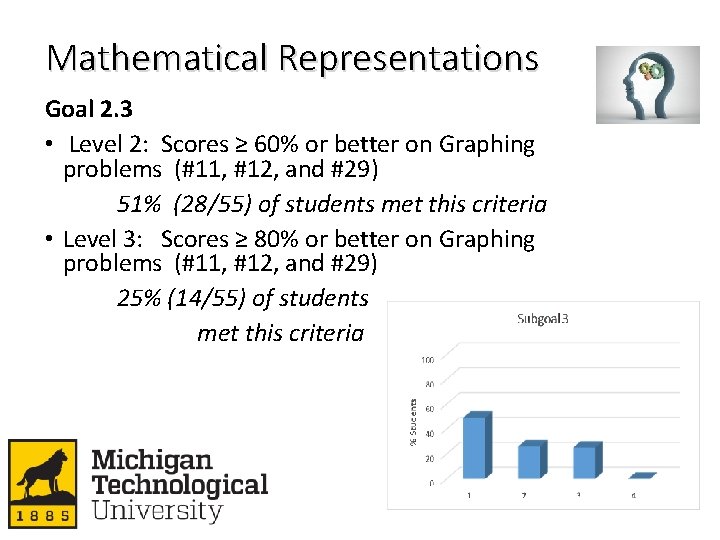

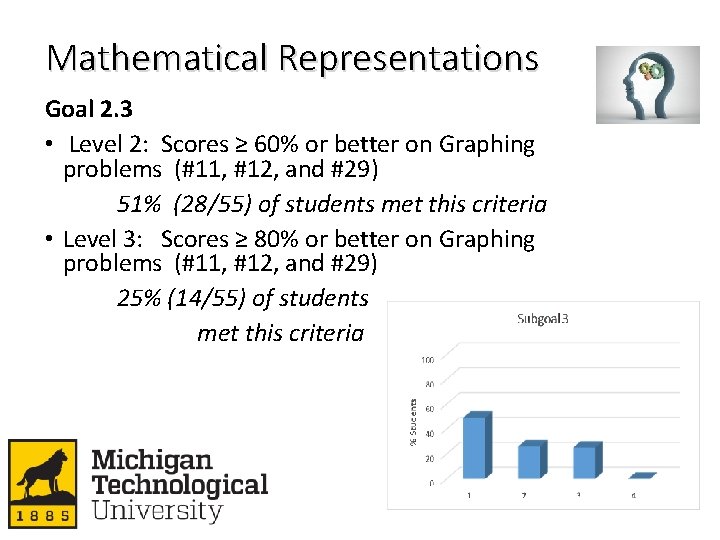

Mathematical Representations (Goal 2.3)
• Level 2: score ≥ 60% on the graphing problems (#11, #12, and #29); 51% (28/55) of students met this criterion
• Level 3: score ≥ 80% on the graphing problems (#11, #12, and #29); 25% (14/55) of students met this criterion

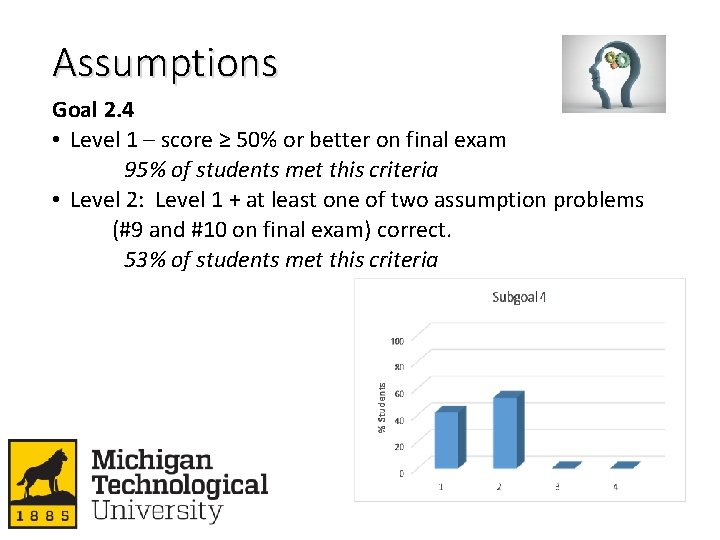

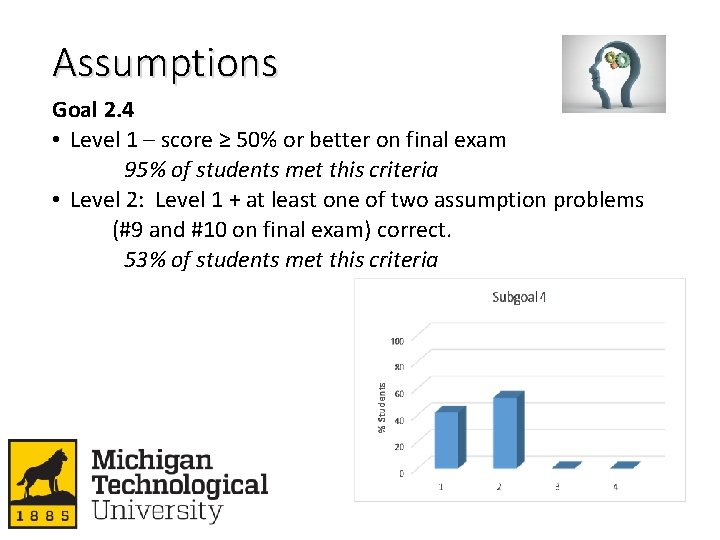

Assumptions (Goal 2.4)
• Level 1: score ≥ 50% on the final exam; 95% of students met this criterion
• Level 2: Level 1, plus at least one of the two assumption problems (#9 and #10 on the final exam) correct; 53% of students met this criterion
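Goal 2.4 Level 2 is a compound rule: Level 1 must hold, and at least one of #9 and #10 must be correct. A minimal Python sketch of that rule for one student, assuming the overall exam percentage and the correctness of #9 and #10 are already known; `assumptions_level` and the sample students are hypothetical:

```python
def assumptions_level(exam_pct, q9_correct, q10_correct):
    """Return the highest Goal 2.4 level met (0 if neither).

    Level 1: overall final-exam score >= 50%.
    Level 2: Level 1 and at least one of problems #9, #10 correct.
    """
    if exam_pct < 50:
        return 0
    return 2 if (q9_correct or q10_correct) else 1


# Hypothetical students: (exam %, #9 correct, #10 correct)
students = [(72, True, False), (64, False, False), (45, True, True)]
levels = [assumptions_level(*s) for s in students]
print(levels)  # [2, 1, 0]
```

Because Level 2 builds on Level 1, the third student gets no level even though both assumption problems are correct.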

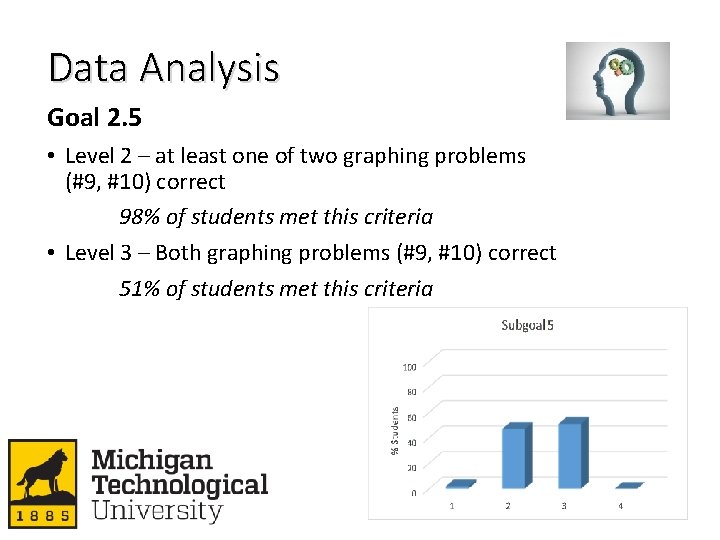

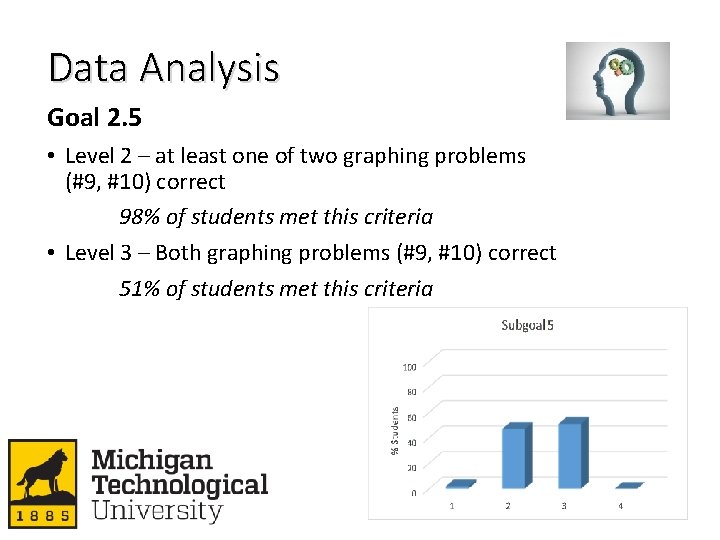

Data Analysis (Goal 2.5)
• Level 2: at least one of the two graphing problems (#11, #12) correct; 98% of students met this criterion
• Level 3: both graphing problems (#11, #12) correct; 51% of students met this criterion

Analysis/Summary
• Course seems to be accomplishing the Level 2 “Developing” goal for:
  • 2.1 Scientific Knowledge
  • 2.2 Quantitative Problem Solving
  • 2.6 Models/Hypotheses
• Assessment might need work for:
  • 2.4 Assumptions
  • 2.5 Data Analysis
• Content area that likely needs more focus:
  • 2.3 Graphing

Instructor-Level Assessment Model
• Intentionally designed student work meets the needs of both the course/instructor and the assessment process
• Subjective decisions are made at the root level
• Results are readily available for reflection and action
• Opportunities for discussion need to be fostered

4. Scantron (Big Data) Assessment
• Relatively simple for instructors
• Need to map questions to rubric criteria
• Need to help interpret results and targets
• Assessment is done by content experts
  • Biology, Chemistry, Physics
• No sampling necessary
• Directly actionable data
• Course and instructor are more identifiable
• Excel spreadsheet tool is available

Scantron Assessment of PH 2100 University Physics I
• Comprehensive 40-question multiple-choice final exam
• Not intentionally designed for Gen Ed assessment
• Same primary instructor over several years
• Spring enrollment is typically 550 to over 700 students in 2 sections

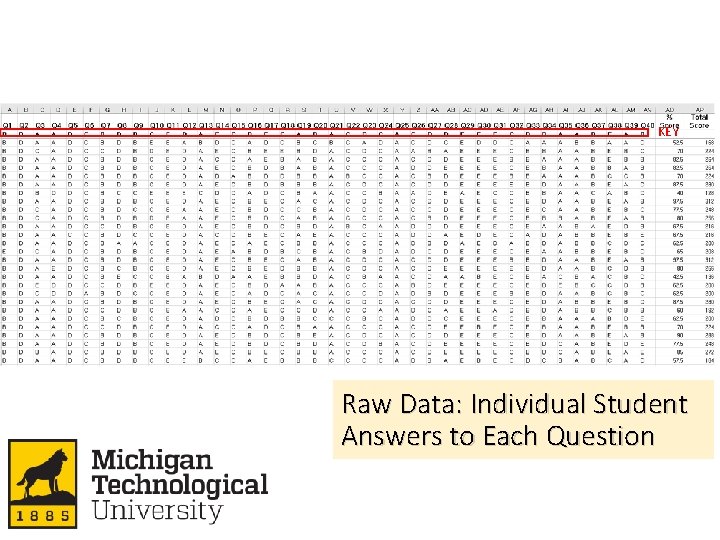

Raw Data: Individual Student Answers to Each Question (the KEY row holds the correct answers)

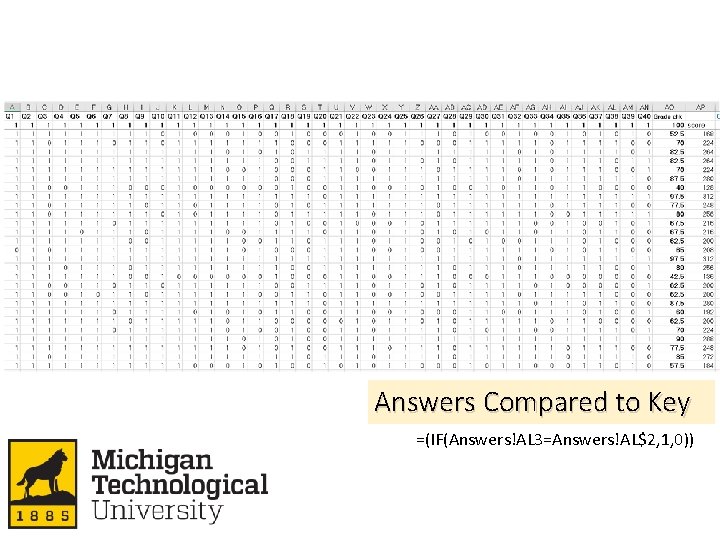

Answers Compared to Key
Each cell scores one student's answer to one question as 1 (matches the key in row 2) or 0:
=IF(Answers!AL3=Answers!AL$2, 1, 0)
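For readers not working in Excel, the same 0/1 scoring can be sketched in Python. This assumes the KEY row and each student's answers are lists in question order; `score_against_key` and the sample answers are hypothetical, not part of the spreadsheet tool:

```python
def score_against_key(key, answers):
    """Mirror =IF(answer=key, 1, 0) for every student and question.

    key     : correct answers, one per question (the KEY row)
    answers : one list of answers per student, same length as key
    Returns a 0/1 matrix with one row per student.
    """
    scored = []
    for row in answers:
        if len(row) != len(key):
            raise ValueError("each student row must have one answer per question")
        scored.append([1 if a == k else 0 for a, k in zip(row, key)])
    return scored


key = ["A", "C", "B", "D"]
answers = [["A", "C", "D", "D"], ["B", "C", "B", "D"]]
print(score_against_key(key, answers))  # [[1, 1, 0, 1], [0, 1, 1, 1]]
```

As in the spreadsheet, a blank or unreadable answer simply fails to match the key and scores 0.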

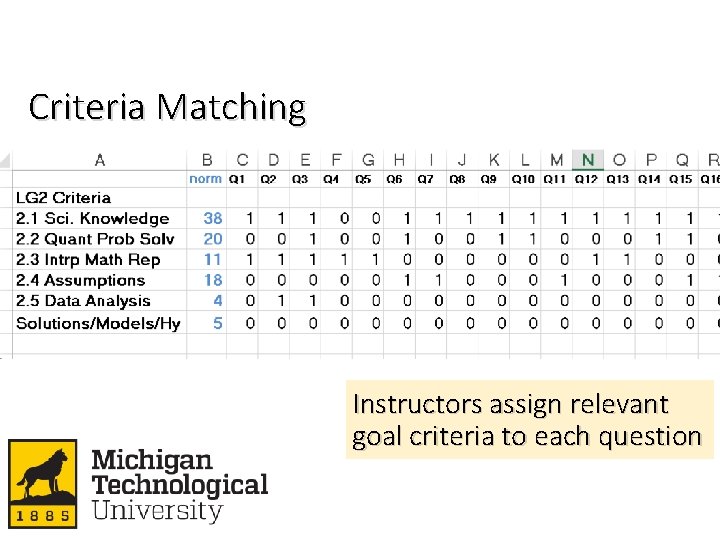

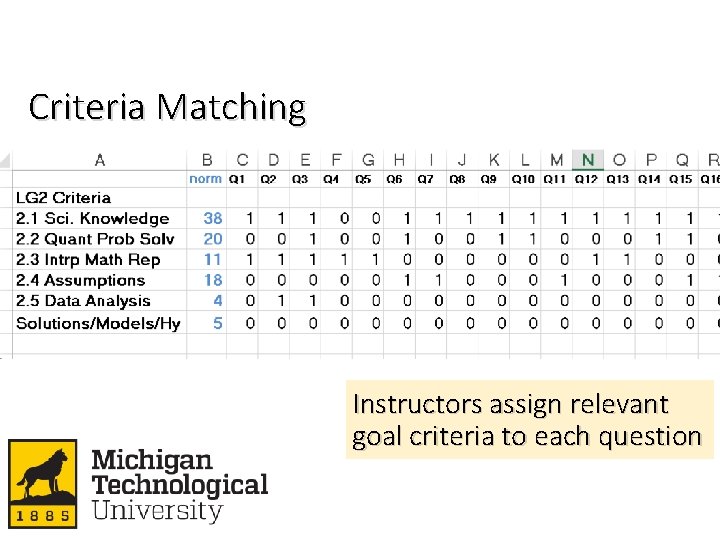

Criteria Matching
Instructors assign the relevant goal criteria to each question.

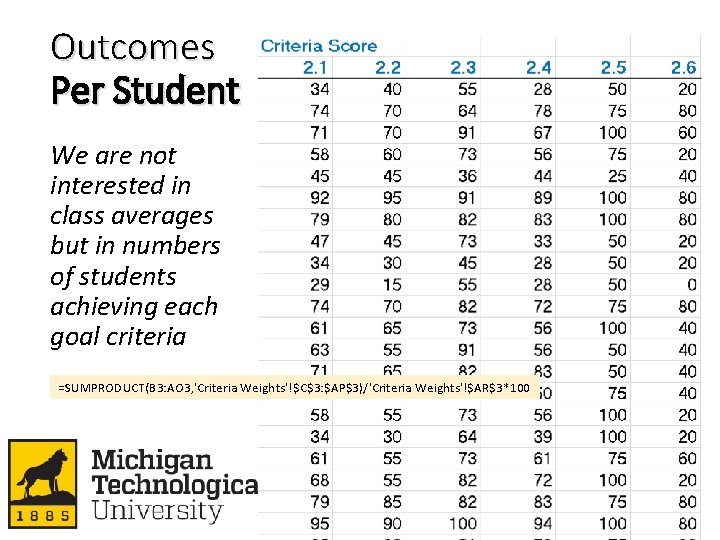

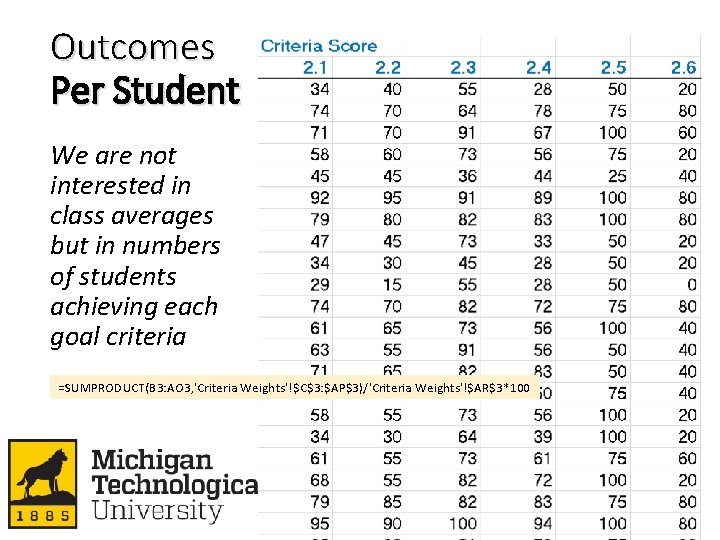

Outcomes Per Student
We are not interested in class averages but in the number of students achieving each goal criterion. Per-student score on a criterion (weighted sum of correct answers, scaled to a percentage):
=SUMPRODUCT(B3:AO3, 'Criteria Weights'!$C$3:$AP$3)/'Criteria Weights'!$AR$3*100
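The SUMPRODUCT step, followed by a count of students at or above a cut-off, can be sketched in Python as below. This assumes the cell `$AR$3` holds the sum of the criterion's weights (the slides do not say), and `criterion_percent`, `students_meeting`, and the sample data are hypothetical:

```python
def criterion_percent(scored_row, weights):
    """Weighted percent correct for one student on one criterion.

    Mirrors SUMPRODUCT(row, weights) / total_weight * 100, ASSUMING the
    total weight is simply sum(weights) (the role of $AR$3 is not stated).
    """
    total = sum(weights)
    if total == 0:
        raise ValueError("no questions are mapped to this criterion")
    weighted = sum(s * w for s, w in zip(scored_row, weights))
    return 100 * weighted / total


def students_meeting(scored, weights, cutoff_pct):
    """Count students (not a class average) at or above cutoff_pct."""
    return sum(1 for row in scored if criterion_percent(row, weights) >= cutoff_pct)


# Hypothetical 0/1 scores for two students on four questions, and a
# hypothetical criterion mapped to questions 1, 2, and 4 (weight 1 each).
scored = [[1, 1, 0, 1], [0, 1, 1, 1]]
weights = [1, 1, 0, 1]

print([round(criterion_percent(r, weights), 1) for r in scored])  # [100.0, 66.7]
print(students_meeting(scored, weights, 80))  # 1
```

Multiplying by 100 before the single division gives the same value as the spreadsheet formula while keeping the intermediate sums in integers.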

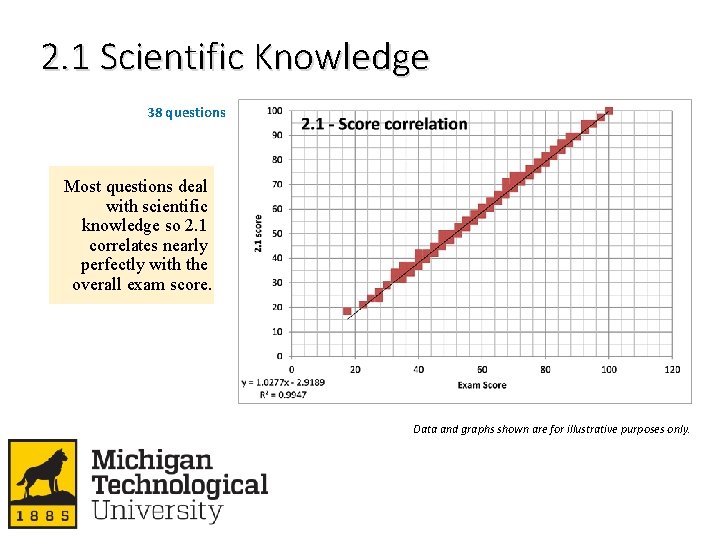

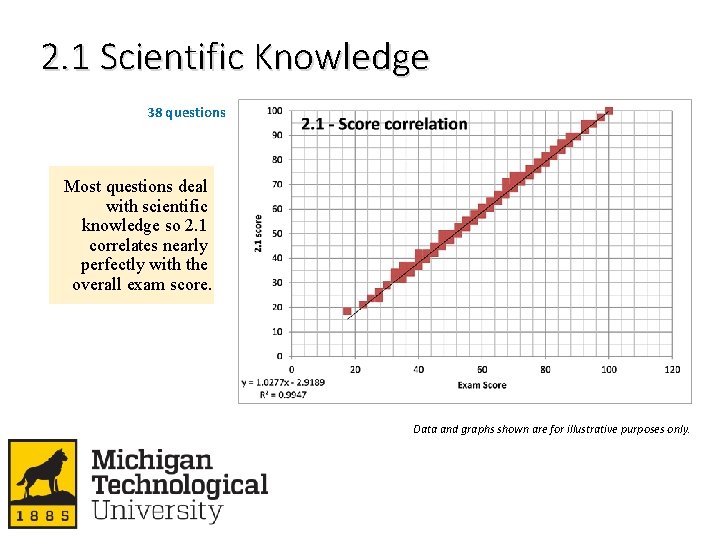

2.1 Scientific Knowledge (38 questions)
Most questions deal with scientific knowledge, so the 2.1 score correlates nearly perfectly with the overall exam score.
Data and graphs shown are for illustrative purposes only.

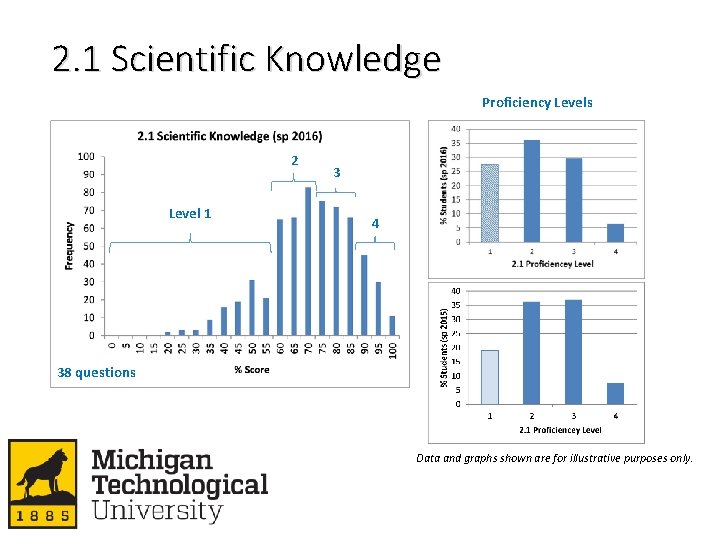

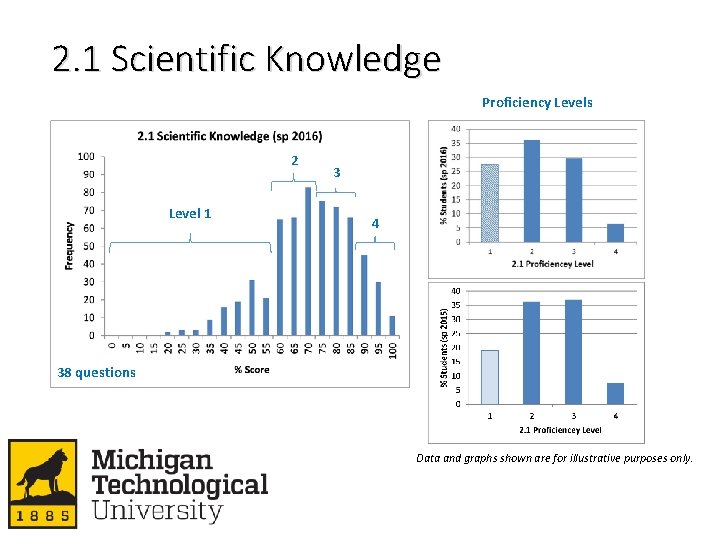

2.1 Scientific Knowledge Proficiency Levels (Levels 1-4; 38 questions)
Data and graphs shown are for illustrative purposes only.
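One way to bin criterion percentages into Levels 1-4 for a chart like this is a sorted list of cut-offs. The slides do not give the cut-offs (they are listed as a discussion topic), so the `CUTOFFS` values and helper names below are purely hypothetical:

```python
from bisect import bisect_right
from collections import Counter

# HYPOTHETICAL cut-offs (percent) between Levels 1|2, 2|3, and 3|4.
CUTOFFS = [40, 60, 80]


def proficiency_level(pct, cutoffs=CUTOFFS):
    """Map a criterion percentage to Level 1..len(cutoffs) + 1.

    bisect_right counts the cut-offs <= pct, so a score exactly on a
    cut-off is placed in the higher level.
    """
    return bisect_right(cutoffs, pct) + 1


def level_counts(percents, cutoffs=CUTOFFS):
    """Number of students at each proficiency level."""
    return Counter(proficiency_level(p, cutoffs) for p in percents)


print(sorted(level_counts([35, 45, 60, 79, 85, 100]).items()))
# [(1, 1), (2, 1), (3, 2), (4, 2)]
```

Keeping the cut-offs in one list makes it easy to test how sensitive the level counts are to where the boundaries are drawn.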

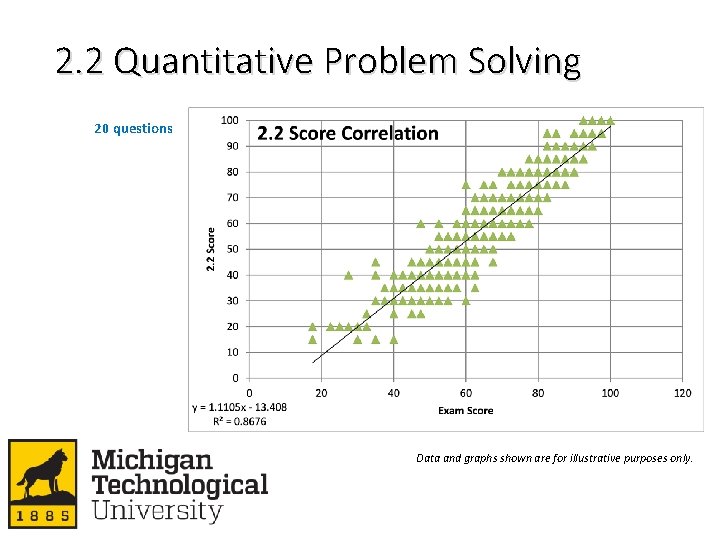

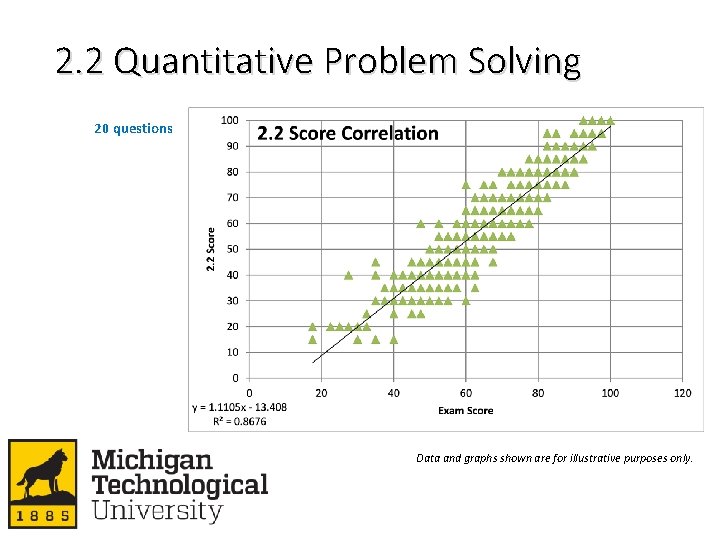

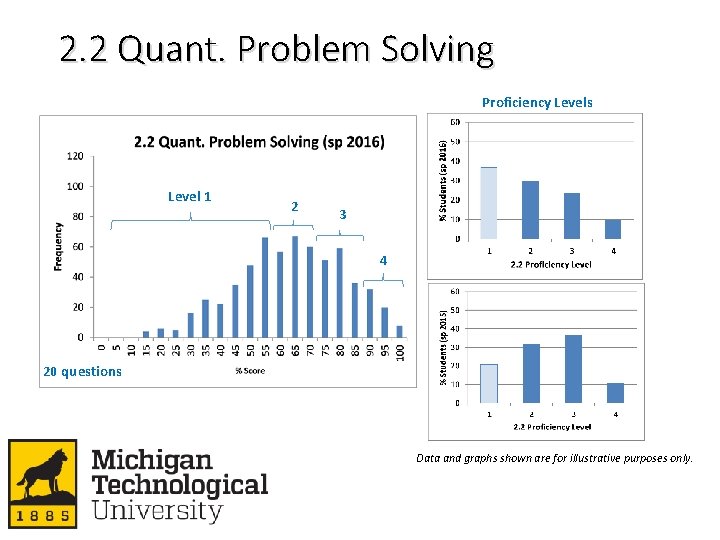

2.2 Quantitative Problem Solving (20 questions)
Data and graphs shown are for illustrative purposes only.

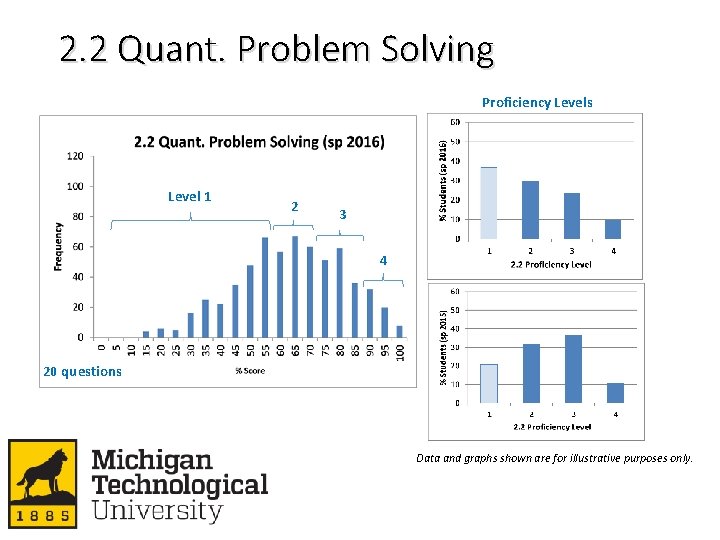

2.2 Quantitative Problem Solving Proficiency Levels (Levels 1-4; 20 questions)
Data and graphs shown are for illustrative purposes only.

Topics for Further Discussion
• Test Robustness Relative to the Subjective Judgements
• Discernment of Proficiency-Level Cut-Offs
• Instructor-Level Expectations
• General University-Level Expectations
• Encourage More Backwards Design
• Future Considerations?
  • Major, year, repeat status, math proficiency, drop & fail rates, etc.

Contact information:
• Mike Meyer: mrmeyer@mtu.edu, CTL: 487-3000
• John Jaszczak: jaszczak@mtu.edu, 487-2255
Please complete the Google Form for Goal 2 assessment plans that Jeannie emailed you about by Oct. 18.
Image credit: Johanne Bouchard, https://www.linkedin.com/pulse/20141125161326-18341-gratitude-and-our-ability-to-humbly-say-thank-you