Modelling Language Evolution Lecture 5 Iterated Learning Simon

- Slides: 24

Modelling Language Evolution, Lecture 5: Iterated Learning. Simon Kirby, University of Edinburgh, Language Evolution & Computation Research Unit.

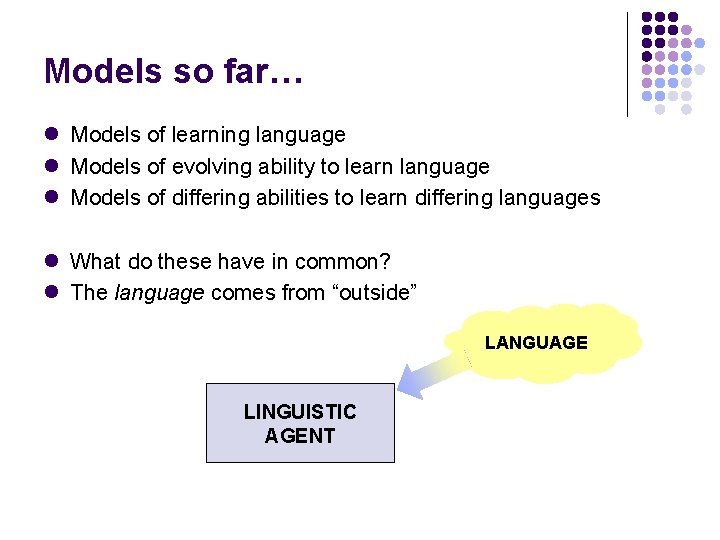

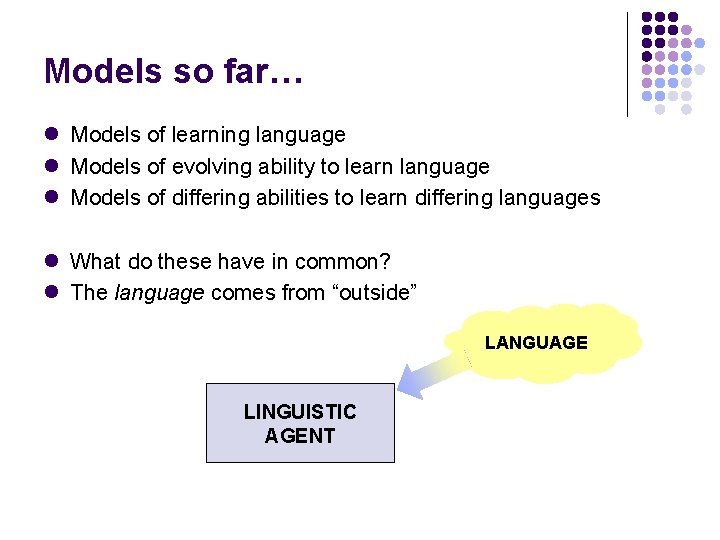

Models so far…

- Models of learning language
- Models of evolving ability to learn language
- Models of differing abilities to learn differing languages
- What do these have in common?
- The language comes from “outside”

Diagram labels: LANGUAGE, LINGUISTIC AGENT

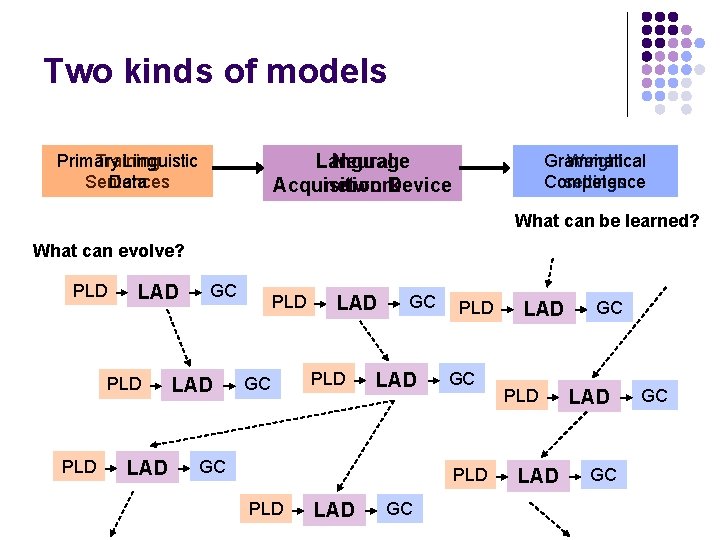

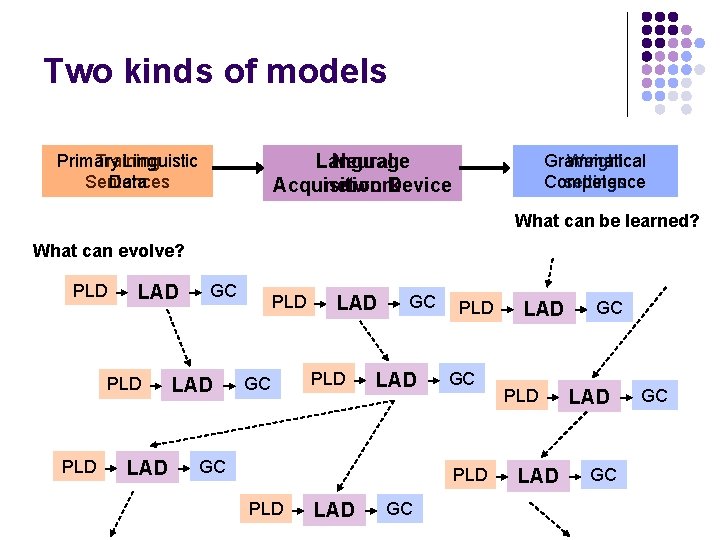

Two kinds of models

- Language Acquisition Device (LAD): neural network
- Primary Linguistic Data (PLD): training sentences
- Grammatical Competence (GC): weight settings

What can be learned? What can evolve?

Diagram labels: PLD, LAD, GC

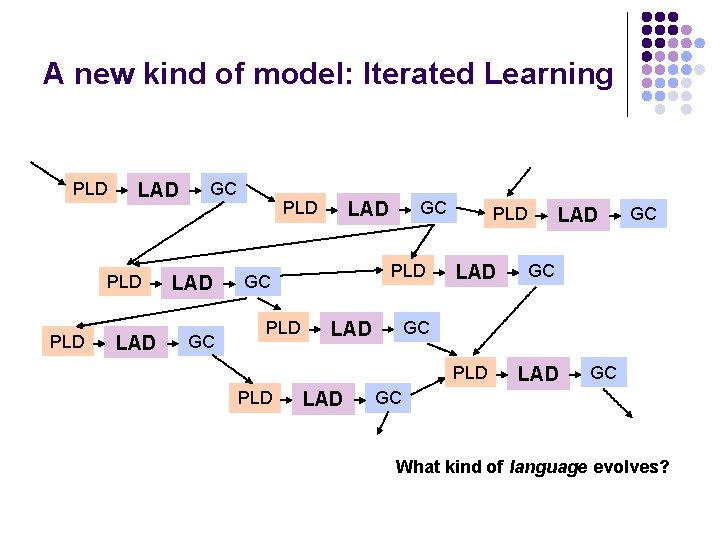

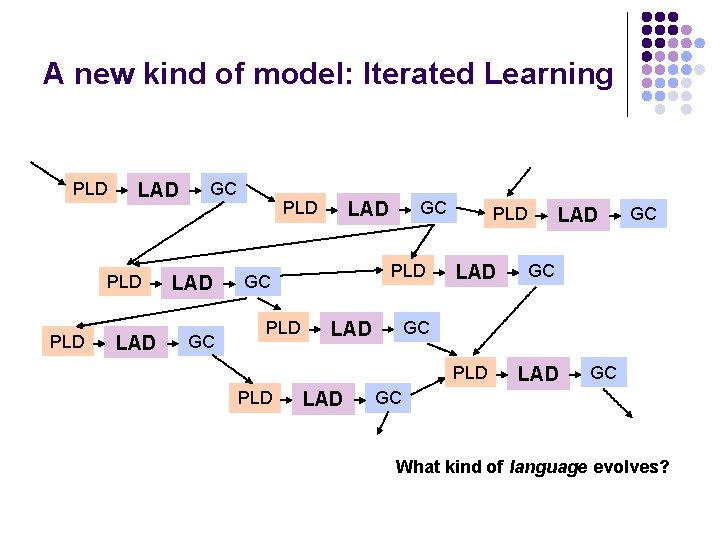

A new kind of model: Iterated Learning

Diagram labels: a chain of PLD, LAD and GC repeated across generations

What kind of language evolves?

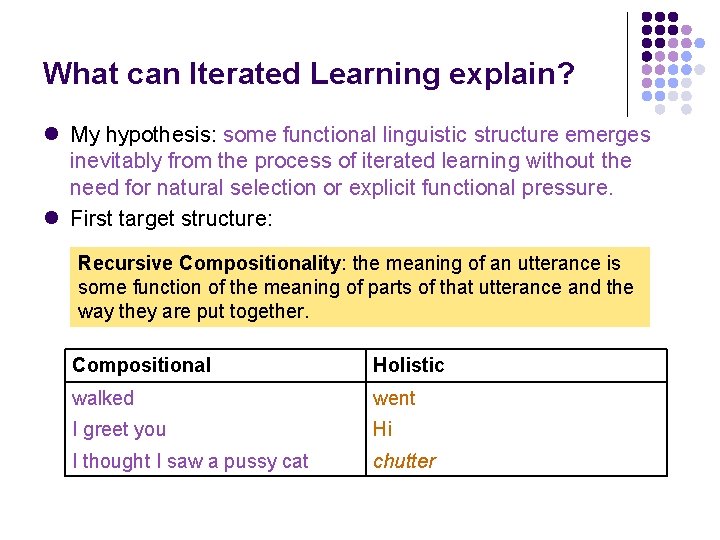

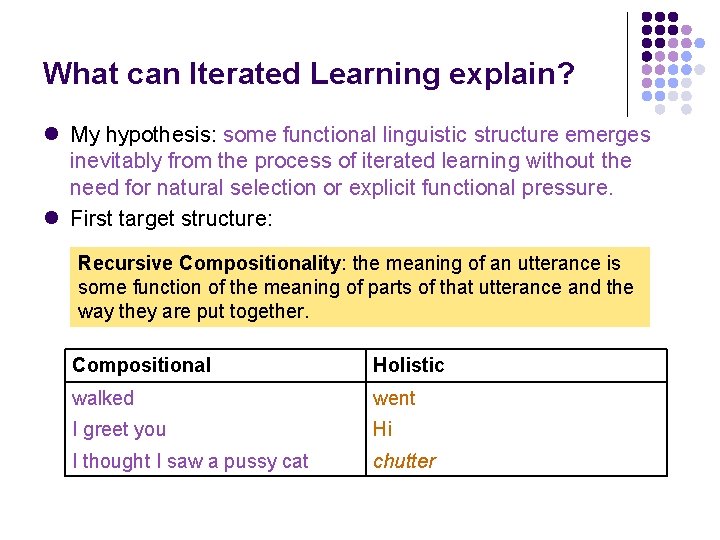

What can Iterated Learning explain?

- My hypothesis: some functional linguistic structure emerges inevitably from the process of iterated learning without the need for natural selection or explicit functional pressure.
- First target structure: Recursive Compositionality: the meaning of an utterance is some function of the meaning of parts of that utterance and the way they are put together.

| Compositional | Holistic |
| --- | --- |
| walked | went |
| I greet you | Hi |
| I thought I saw a pussy cat | chutter |

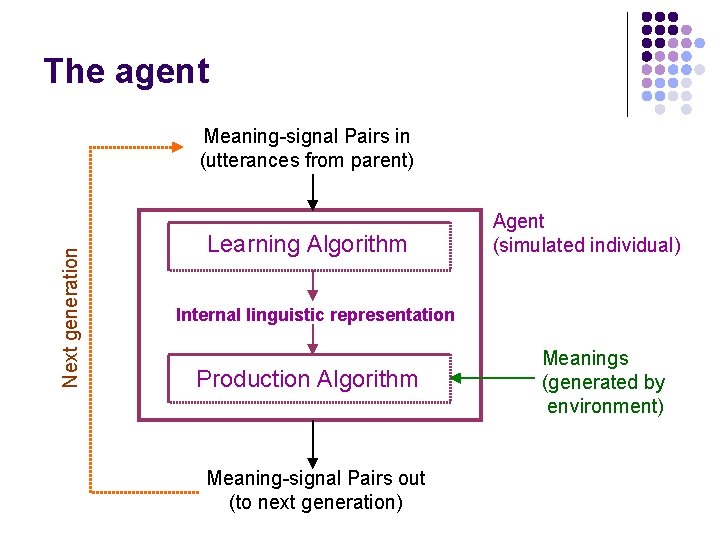

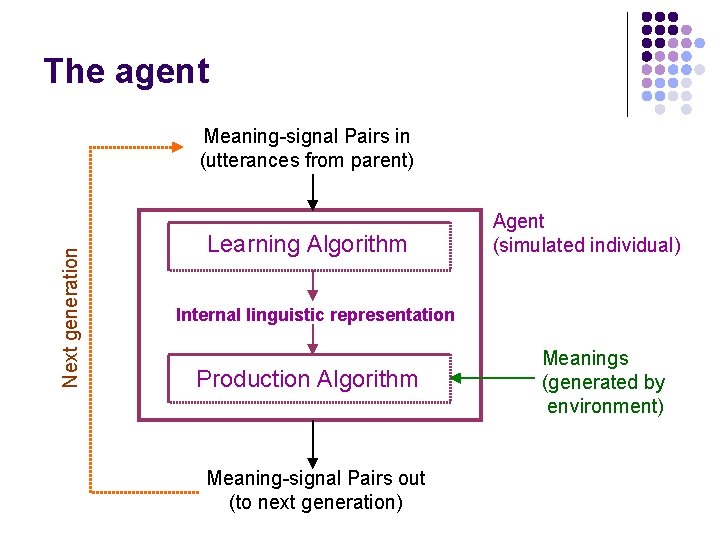

The agent

Diagram labels, in order:

- Meaning-signal pairs in (utterances from parent)
- Agent (simulated individual): learning algorithm, internal linguistic representation, production algorithm
- Meanings (generated by environment)
- Meaning-signal pairs out (to next generation)
- Next generation

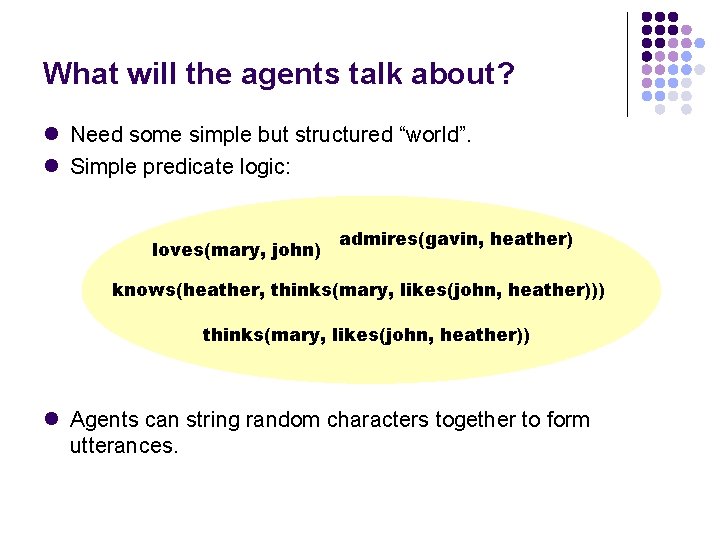

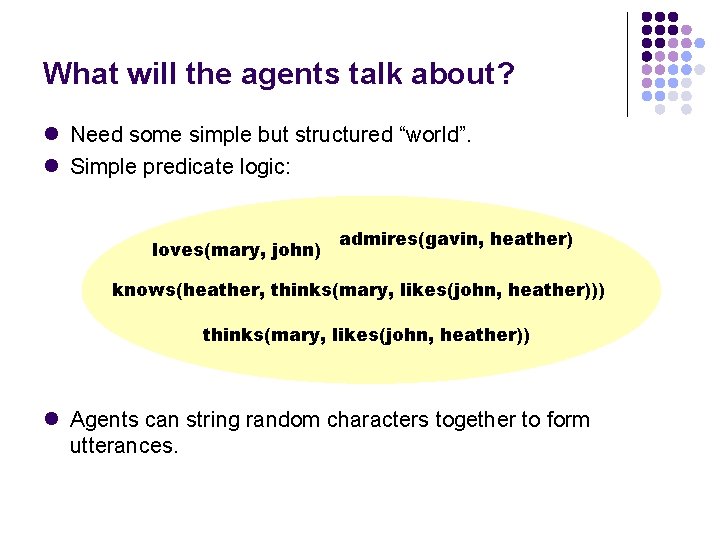

What will the agents talk about?

- Need some simple but structured “world”.
- Simple predicate logic:
  - loves(mary, john)
  - admires(gavin, heather)
  - thinks(mary, likes(john, heather))
  - knows(heather, thinks(mary, likes(john, heather)))
- Agents can string random characters together to form utterances.
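A minimal sketch of how such a world could be represented, assuming meanings are stored as nested tuples and utterances are invented as short random lowercase strings. The names `show`, `depth` and `invent_signal`, and the maximum string length, are illustrative assumptions rather than details from the lecture.

```python
import random
import string

# Assumed representation: a meaning is a nested tuple (predicate, arg1, arg2).
# An argument is either an atom (a string) or another meaning, which is what
# makes the meaning space recursive.
EXAMPLE = ("knows", "heather", ("thinks", "mary", ("likes", "john", "heather")))

def show(meaning):
    """Render a nested-tuple meaning in predicate-logic notation."""
    if isinstance(meaning, str):
        return meaning
    predicate, *args = meaning
    return f"{predicate}({', '.join(show(a) for a in args)})"

def depth(meaning):
    """Count levels of predicate nesting (1 for a simple predicate)."""
    if isinstance(meaning, str):
        return 0
    return 1 + max(depth(a) for a in meaning[1:])

def invent_signal(rng, max_len=4):
    """Invent an utterance as a random string (max_len is an assumption)."""
    length = rng.randint(1, max_len)
    return "".join(rng.choice(string.ascii_lowercase) for _ in range(length))
```

Calling `show(EXAMPLE)` reproduces the notation used on the slide, and `invent_signal(random.Random(0))` returns a random string of one to four letters.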

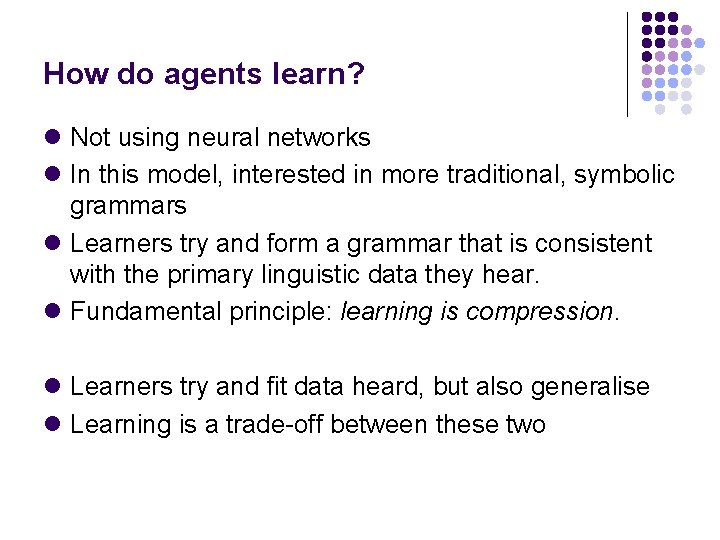

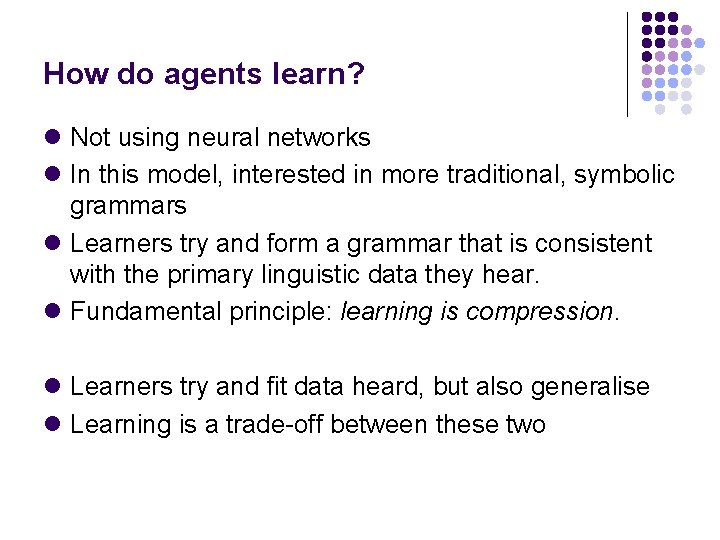

How do agents learn?

- Not using neural networks
- In this model, interested in more traditional, symbolic grammars
- Learners try and form a grammar that is consistent with the primary linguistic data they hear.
- Fundamental principle: learning is compression.
- Learners try and fit data heard, but also generalise
- Learning is a trade-off between these two

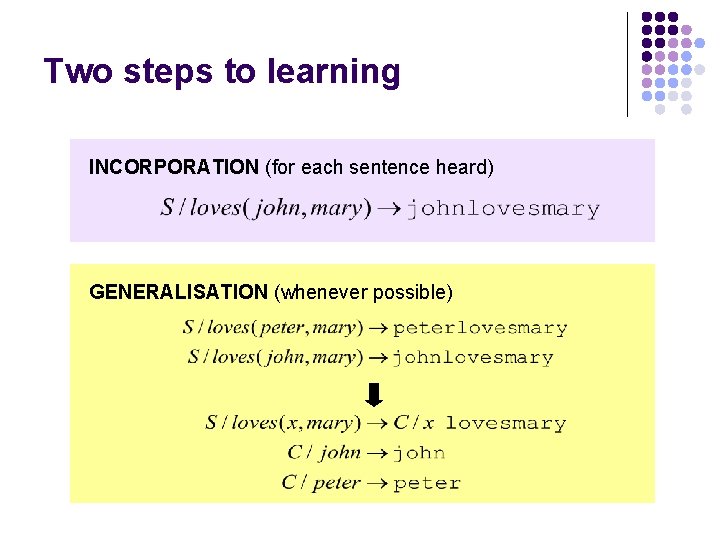

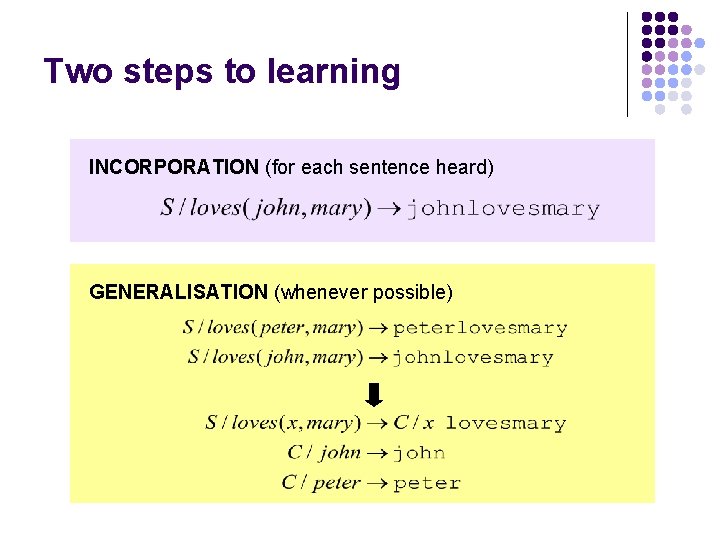

Two steps to learning

1. INCORPORATION (for each sentence heard)
2. GENERALISATION (whenever possible)

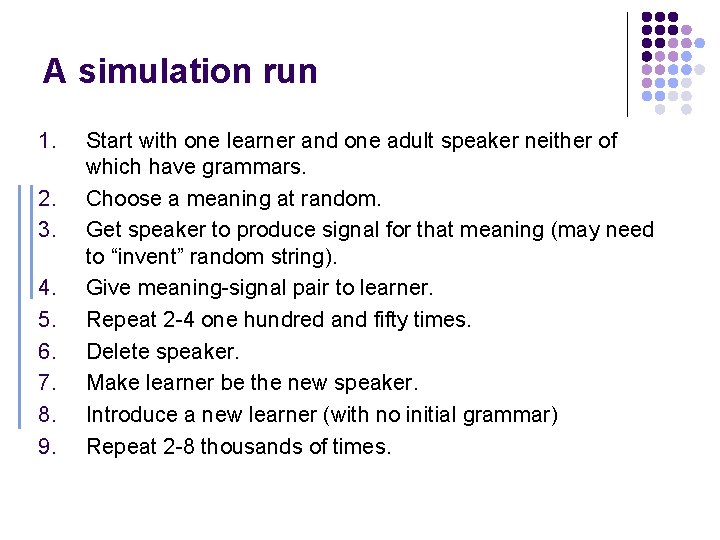

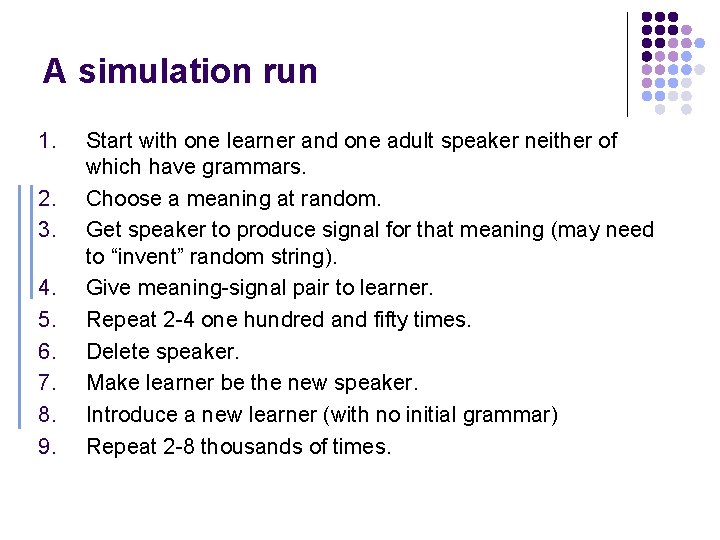

A simulation run

1. Start with one learner and one adult speaker, neither of which has a grammar.
2. Choose a meaning at random.
3. Get speaker to produce signal for that meaning (may need to “invent” random string).
4. Give meaning-signal pair to learner.
5. Repeat 2-4 one hundred and fifty times.
6. Delete speaker.
7. Make learner be the new speaker.
8. Introduce a new learner (with no initial grammar).
9. Repeat 2-8 thousands of times.
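The loop on this slide can be sketched as follows. This is a skeleton only: the learner here is a rote memoriser (a lookup table), standing in for the grammar-inducing learner of the lecture, so it shows the control flow and not the emergence of structure. The function names, the `seed` parameter, the maximum string length, and the choice that a speaker remembers its own inventions are all assumptions.

```python
import random
import string

def invent_signal(rng, max_len=4):
    """Invent a random lowercase string (the length limit is an assumption)."""
    length = rng.randint(1, max_len)
    return "".join(rng.choice(string.ascii_lowercase) for _ in range(length))

def run_iterated_learning(meanings, generations, exposures=150, seed=0):
    """Return the meaning-to-signal table learned by each generation."""
    rng = random.Random(seed)
    speaker = {}                      # step 1: the first speaker has no language
    history = []
    for _ in range(generations):      # step 9: repeat over many generations
        learner = {}                  # steps 1 and 8: a fresh learner
        for _ in range(exposures):    # step 5: repeat steps 2-4
            meaning = rng.choice(meanings)            # step 2
            if meaning not in speaker:                # step 3: invent if needed
                speaker[meaning] = invent_signal(rng)
            learner[meaning] = speaker[meaning]       # step 4
        history.append(dict(learner))  # record what this learner acquired
        speaker = learner             # steps 6-7: learner replaces speaker
    return history
```

Because the memoriser cannot generalise, any meaning it did not hear is missing from its table and has to be reinvented when it becomes the speaker. Replacing the `learner[meaning] = ...` line with a learner that generalises is where the interesting behaviour would enter.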

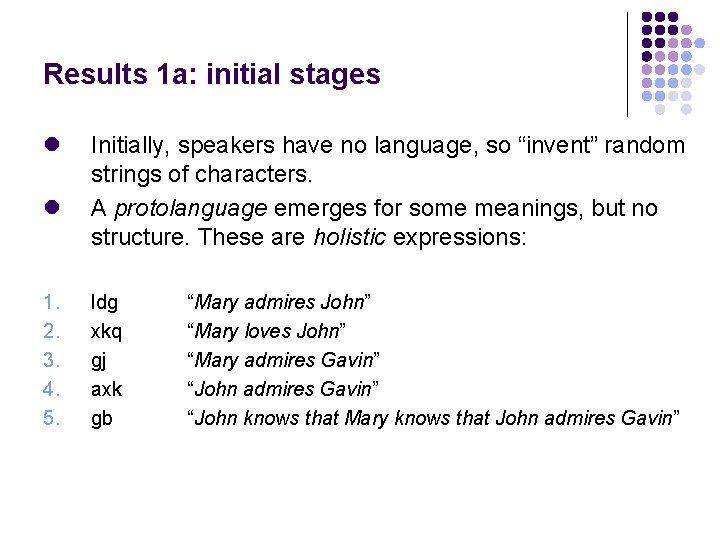

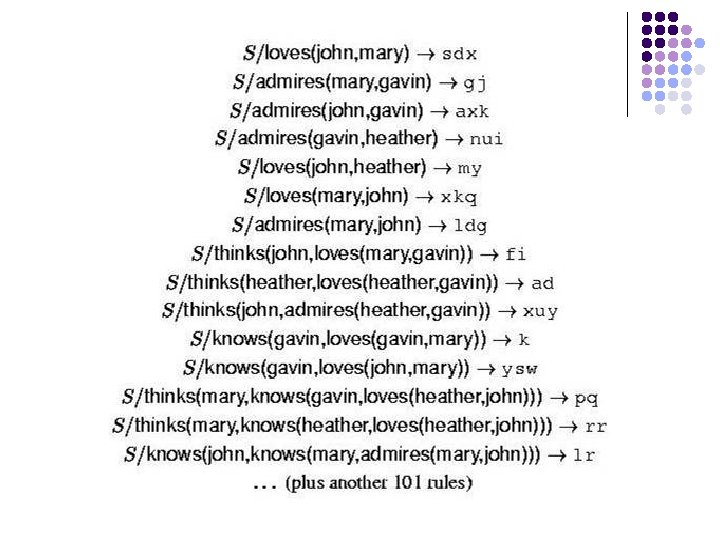

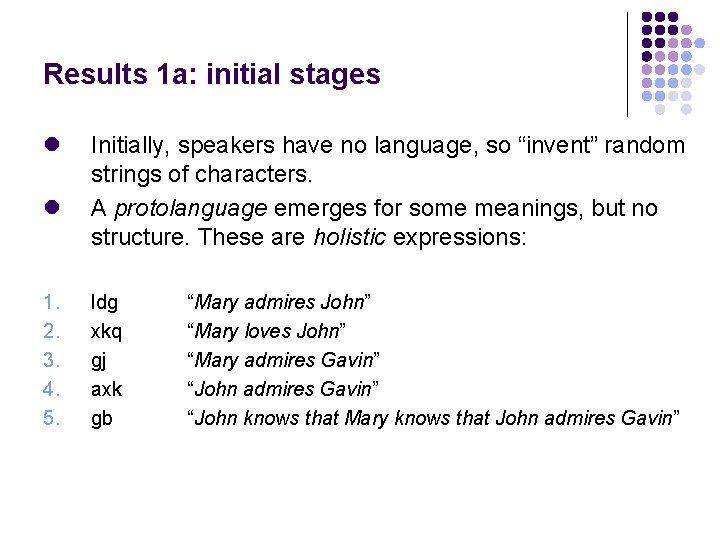

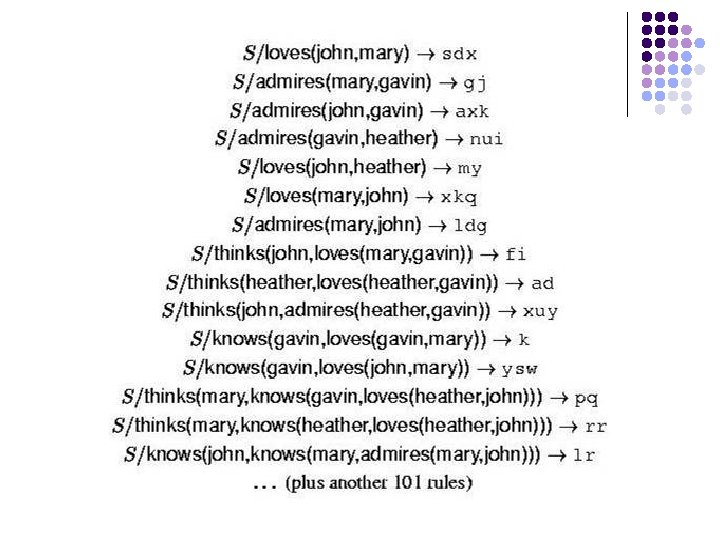

Results 1a: initial stages

- Initially, speakers have no language, so “invent” random strings of characters.
- A protolanguage emerges for some meanings, but no structure.
- These are holistic expressions:

1. ldg
2. xkq
3. gj
4. axk
5. gb

Glosses given: “Mary admires John”, “Mary loves John”, “Mary admires Gavin”, “John knows that Mary knows that John admires Gavin”

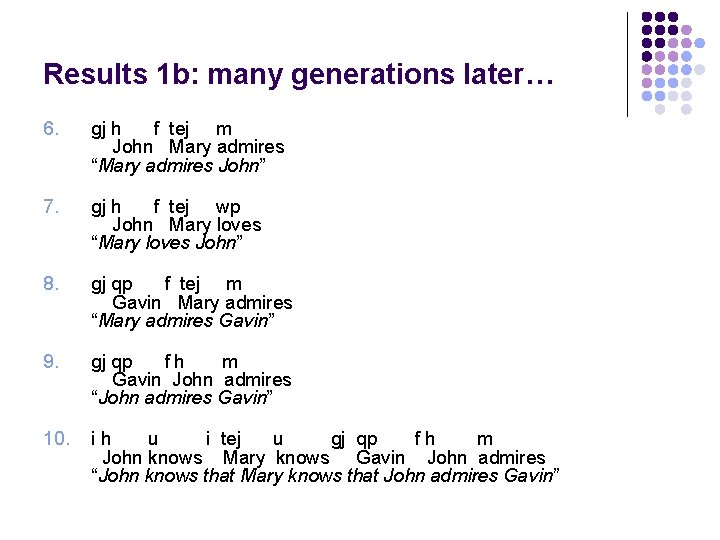

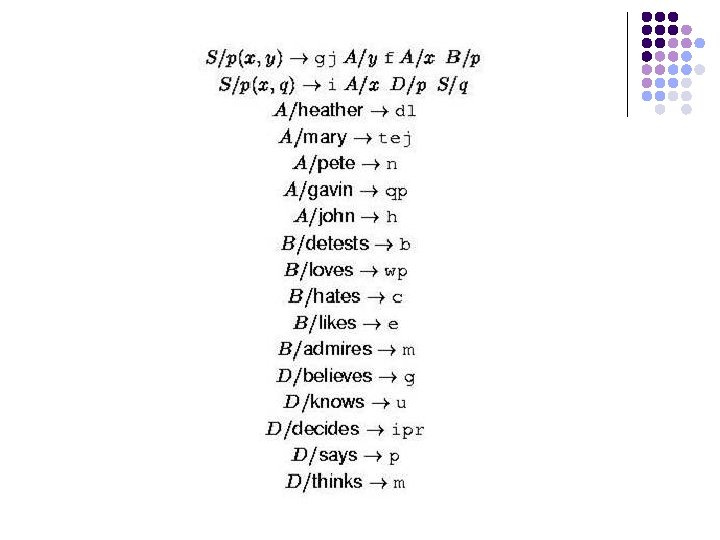

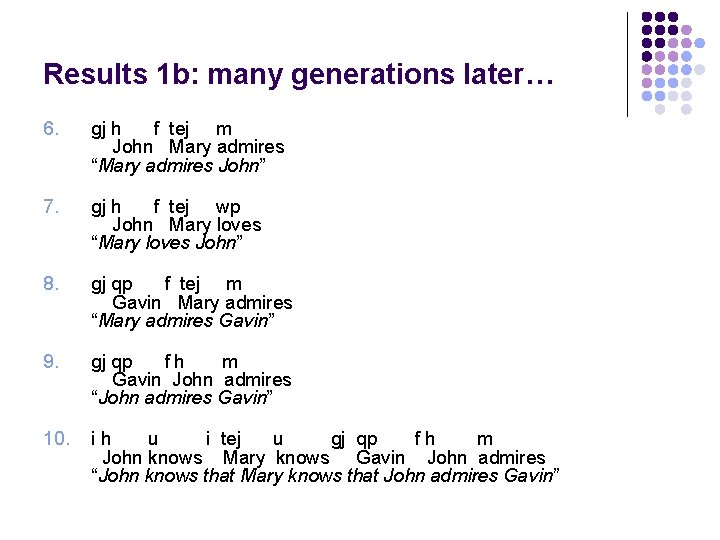

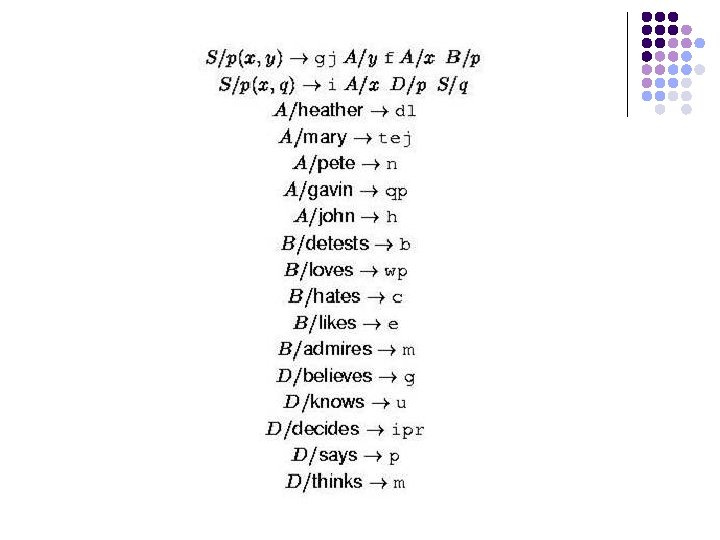

Results 1b: many generations later…

6. gj h f tej m (gloss: John Mary admires): “Mary admires John”
7. gj h f tej wp (gloss: John Mary loves): “Mary loves John”
8. gj qp f tej m (gloss: Gavin Mary admires): “Mary admires Gavin”
9. gj qp fh m (gloss: Gavin John admires): “John admires Gavin”
10. ih u i tej u gj qp fh m (gloss: John knows Mary knows Gavin John admires): “John knows that Mary knows that John admires Gavin”

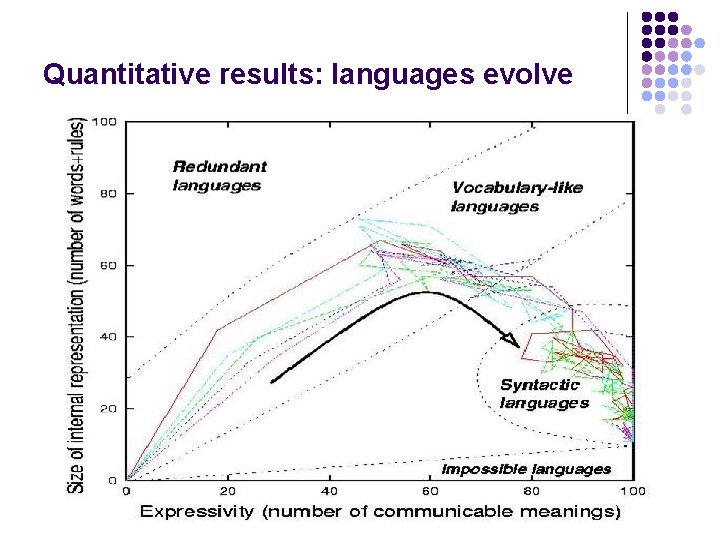

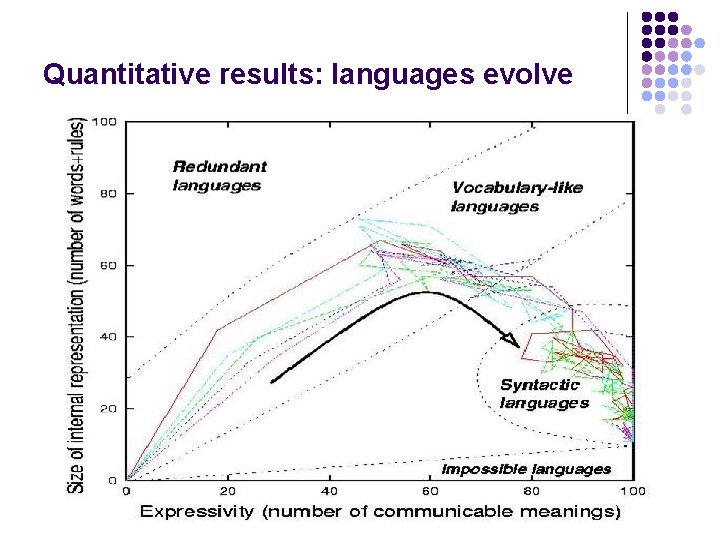

Quantitative results: languages evolve

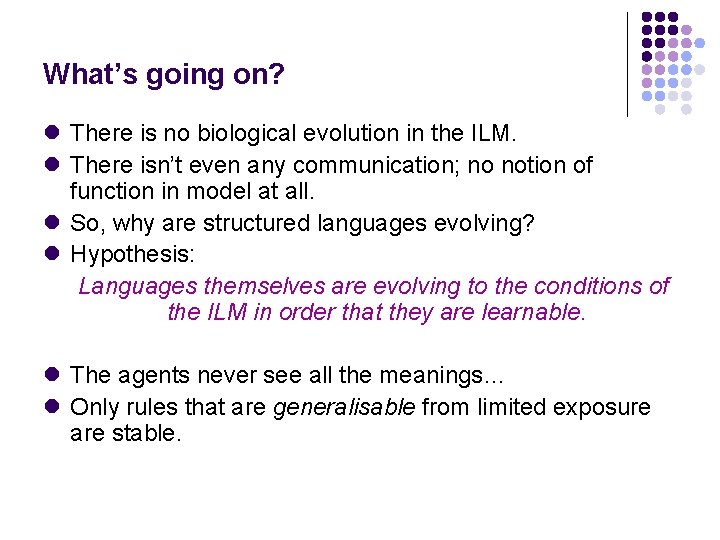

What’s going on?

- There is no biological evolution in the ILM (the iterated learning model).
- There isn’t even any communication; no notion of function in model at all.
- So, why are structured languages evolving?
- Hypothesis: Languages themselves are adapting to the conditions of the ILM so that they are learnable.
- The agents never see all the meanings…
- Only rules that are generalisable from limited exposure are stable.
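A rough way to see why agents never see all the meanings: if each exposure draws a meaning uniformly at random from a finite space, the expected fraction of the space heard at least once can be computed directly. The uniform-sampling assumption and the meaning-space size of 1000 used below are illustrative only; the slides do not state the size of the meaning space.

```python
def expected_coverage(n_meanings, n_exposures):
    """Expected fraction of meanings heard at least once.

    Assumes each exposure picks one of n_meanings uniformly at random,
    so a given meaning is missed every time with probability
    (1 - 1/n_meanings) ** n_exposures.
    """
    return 1.0 - (1.0 - 1.0 / n_meanings) ** n_exposures

# Hypothetical example: 150 exposures drawn from 1000 possible meanings.
coverage = expected_coverage(1000, 150)
print(f"Expected fraction of meanings heard: {coverage:.3f}")
```

Under these assumptions a learner hears only a small part of the space, so anything it can say about the rest must come from generalising.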

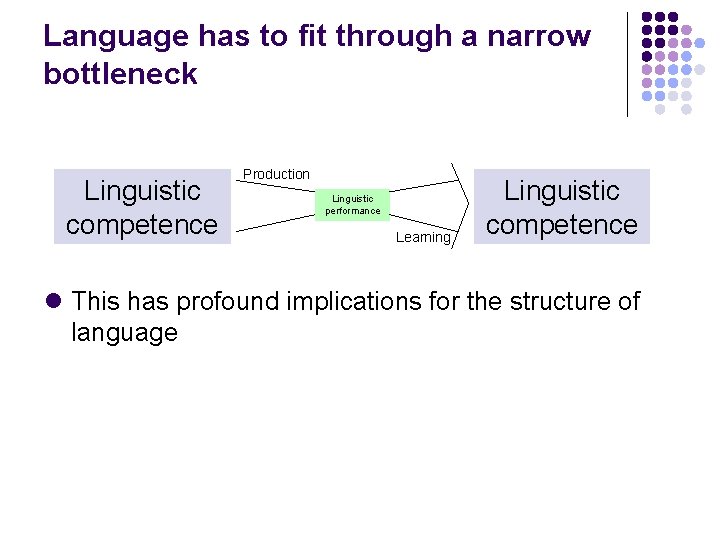

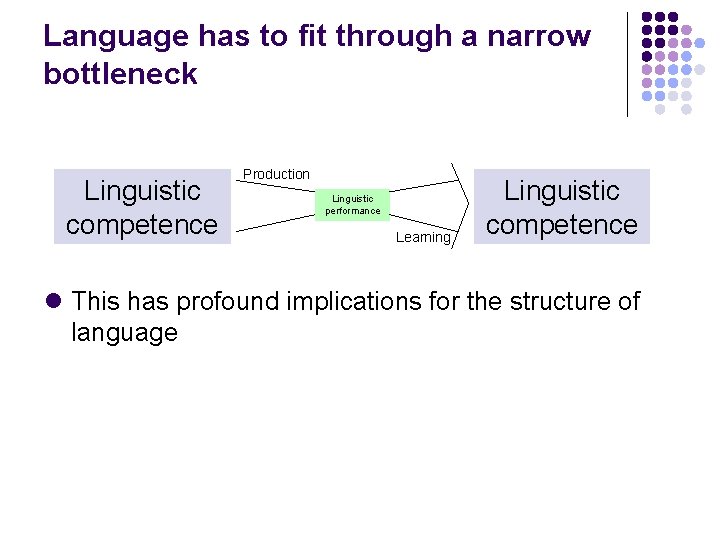

Language has to fit through a narrow bottleneck

Diagram: Linguistic competence → Production → Linguistic performance → Learning → Linguistic competence

- This has profound implications for the structure of language

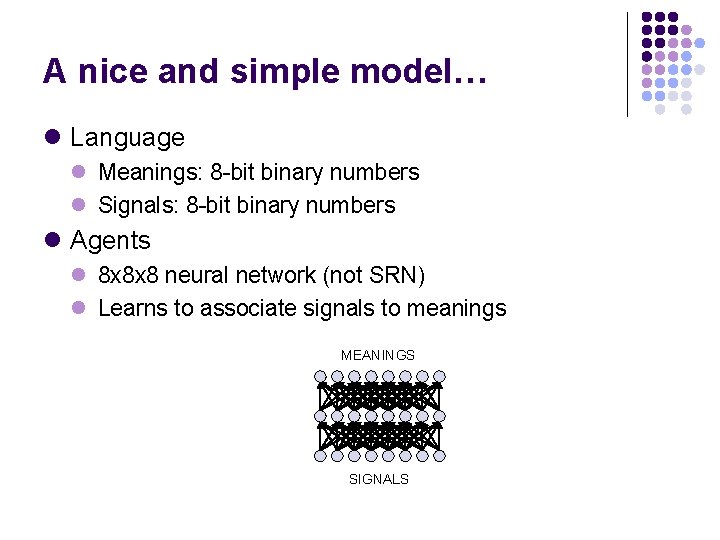

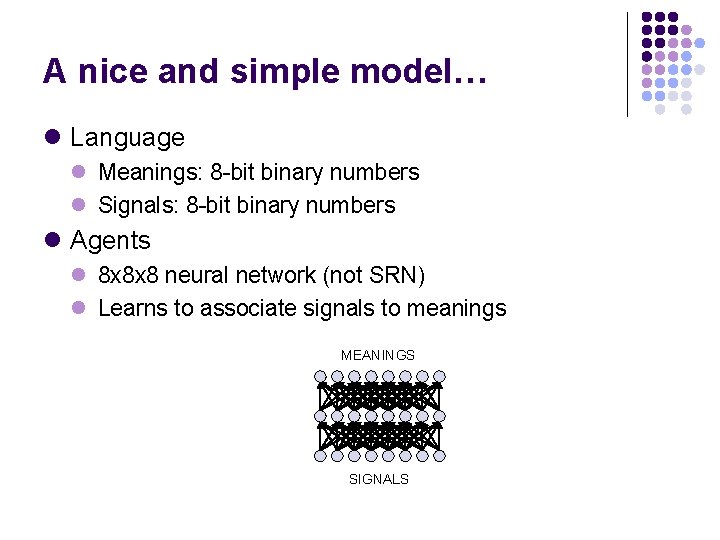

A nice and simple model…

- Language
  - Meanings: 8-bit binary numbers
  - Signals: 8-bit binary numbers
- Agents
  - 8 x 8 x 8 neural network (not SRN)
  - Learns to associate signals to meanings

Diagram labels: MEANINGS, SIGNALS

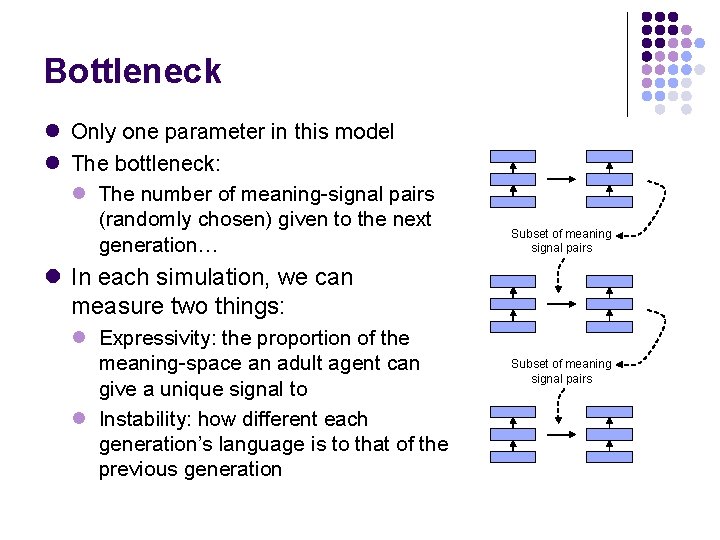

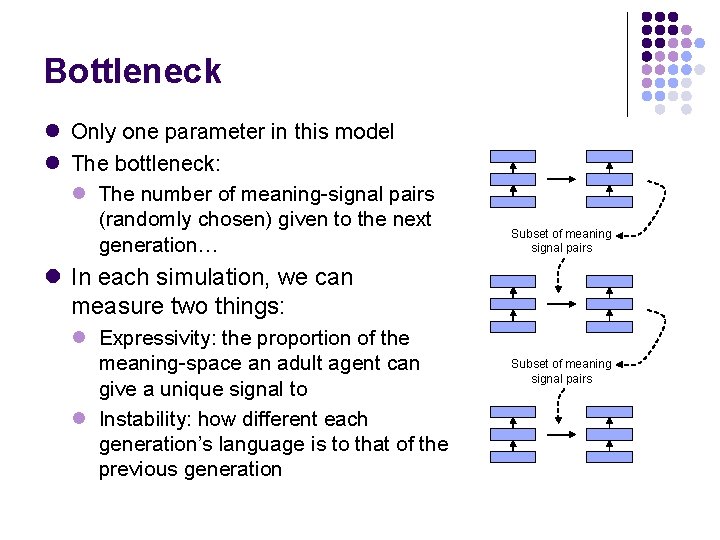

Bottleneck

- Only one parameter in this model
- The bottleneck:
  - The number of meaning-signal pairs (randomly chosen) given to the next generation…
- In each simulation, we can measure two things:
  - Expressivity: the proportion of the meaning-space an adult agent can give a unique signal to
  - Instability: how different each generation’s language is to that of the previous generation

Diagram label: Subset of meaning-signal pairs
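The two measures could be computed along the following lines, assuming a language is a dictionary from 8-bit meanings to 8-bit signals (both stored as integers). The slide does not say how "different" is quantified; the per-bit Hamming distance used for instability here is an assumption, as are the function names.

```python
N_BITS = 8  # meanings and signals are 8-bit numbers in this model

def expressivity(language, n_meanings=2 ** N_BITS):
    """Proportion of the meaning space whose signal is shared with no other meaning."""
    counts = {}
    for signal in language.values():
        counts[signal] = counts.get(signal, 0) + 1
    unique = sum(1 for signal in language.values() if counts[signal] == 1)
    return unique / n_meanings

def instability(previous, current, n_bits=N_BITS):
    """Mean fraction of signal bits that differ between two generations.

    Assumed metric: Hamming distance per bit, averaged over the meanings
    that both generations have a signal for.
    """
    shared = previous.keys() & current.keys()
    if not shared:
        return 0.0
    differing = sum(bin(previous[m] ^ current[m]).count("1") for m in shared)
    return differing / (n_bits * len(shared))
```

For instance, a language that maps every meaning to itself has expressivity 1.0, a language that maps every meaning to the same signal has expressivity 0.0, and two identical generations have instability 0.0.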

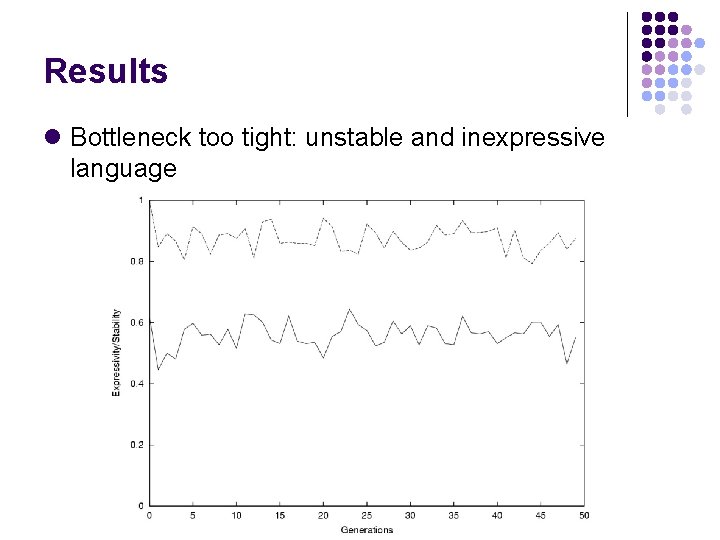

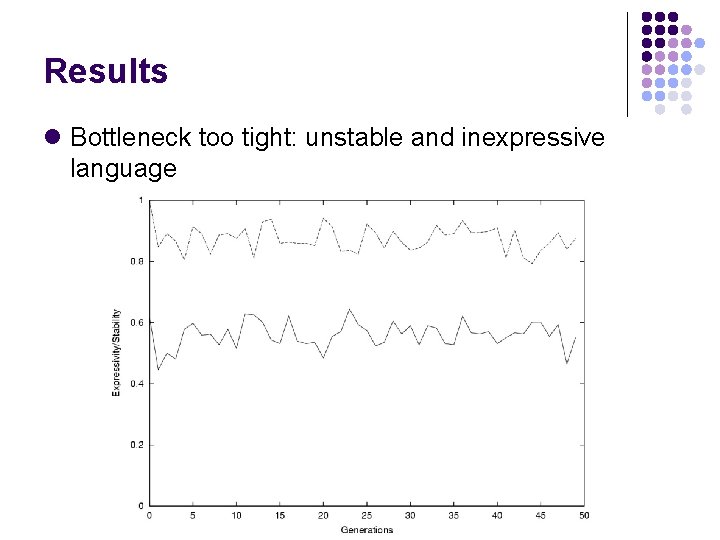

Results

- Bottleneck too tight: unstable and inexpressive language

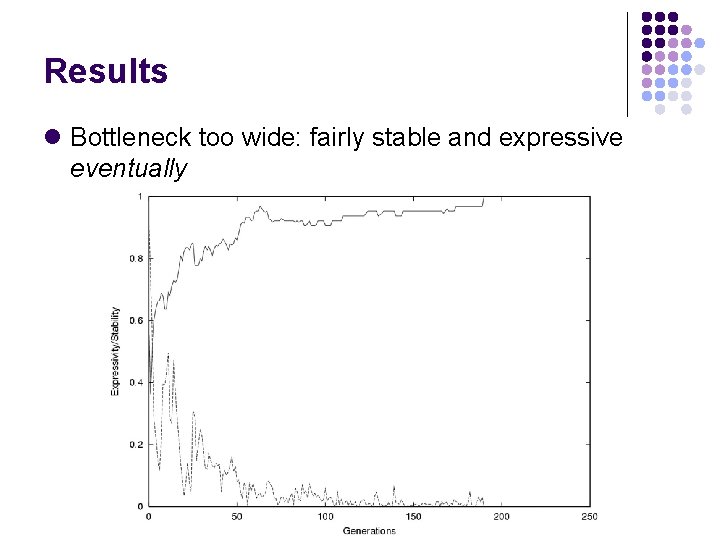

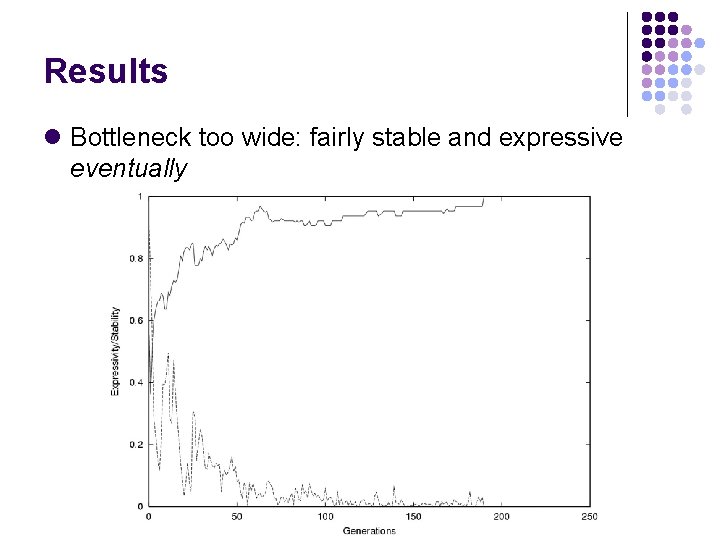

Results

- Bottleneck too wide: fairly stable and expressive eventually

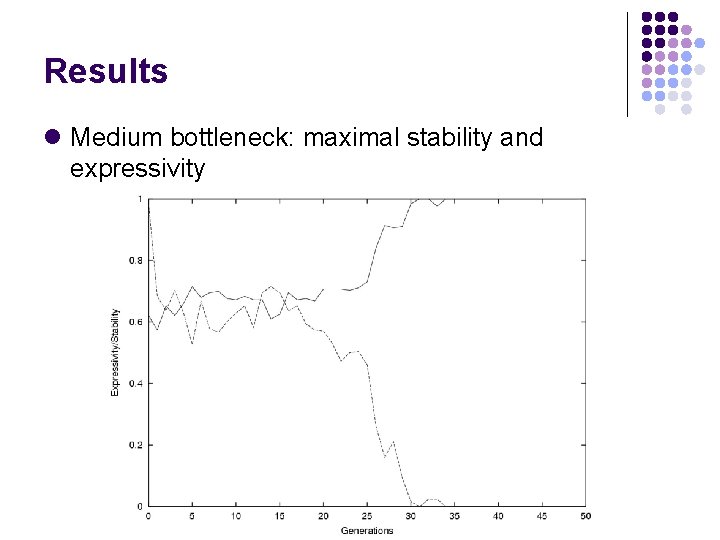

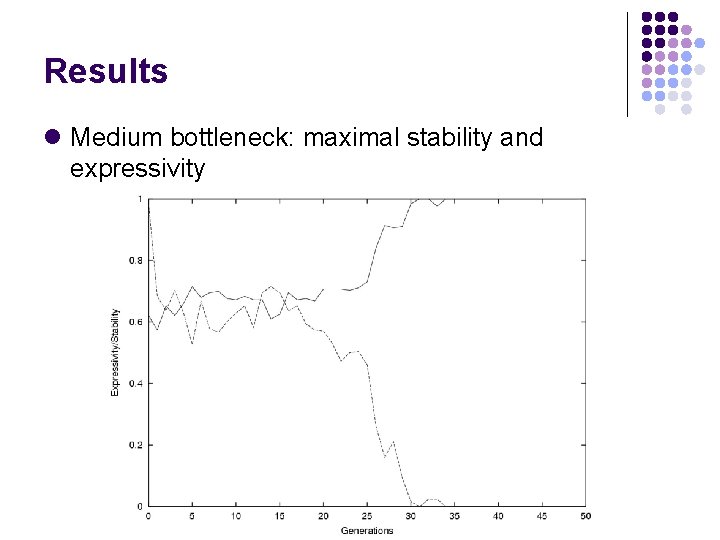

Results

- Medium bottleneck: maximal stability and expressivity

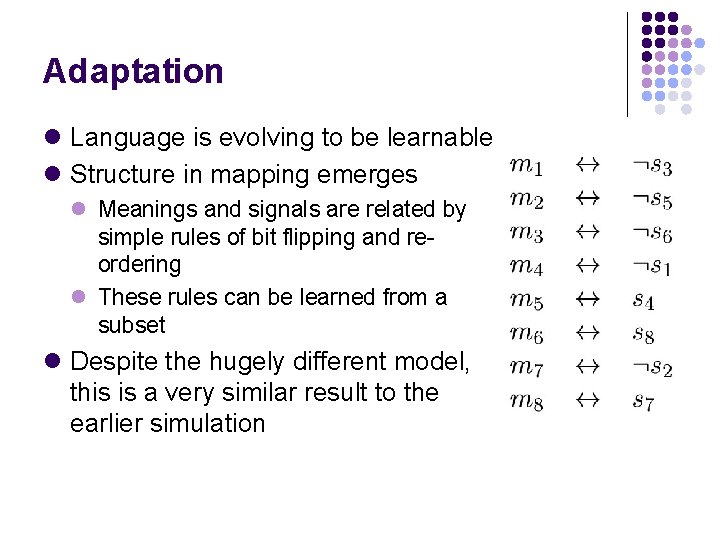

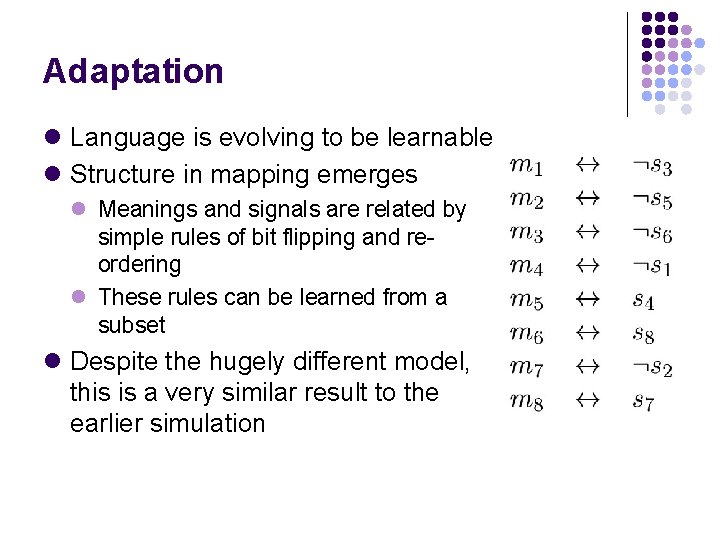

Adaptation

- Language is evolving to be learnable
- Structure in mapping emerges
- Meanings and signals are related by simple rules of bit flipping and reordering
- These rules can be learned from a subset
- Despite the hugely different model, this is a very similar result to the earlier simulation
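To make "bit flipping and reordering" concrete, here is a sketch of one such structured mapping from 8-bit meanings to 8-bit signals. The particular ordering and flip mask are arbitrary illustrations, not the mappings that emerged in the simulation, and `make_mapping` is a hypothetical helper.

```python
def make_mapping(order, flip_mask):
    """Build a meaning-to-signal function from a bit reordering and a flip mask.

    Signal bit i is copied from meaning bit order[i], then inverted
    wherever flip_mask has bit i set.
    """
    def to_signal(meaning):
        signal = 0
        for i, source in enumerate(order):
            bit = (meaning >> source) & 1
            signal |= bit << i
        return signal ^ flip_mask
    return to_signal

# Illustrative choice: reverse the bit order, then flip the low four bits.
to_signal = make_mapping(order=[7, 6, 5, 4, 3, 2, 1, 0], flip_mask=0b00001111)
signals = {to_signal(m) for m in range(256)}
print(len(signals))  # number of distinct signals across all 256 meanings
```

A mapping of this kind is fixed by 16 parameters (8 bit positions and 8 flips) rather than 256 independent entries, which is why it can in principle be recovered from a subset of meaning-signal pairs. Because `order` is a permutation, every meaning also gets a distinct signal.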

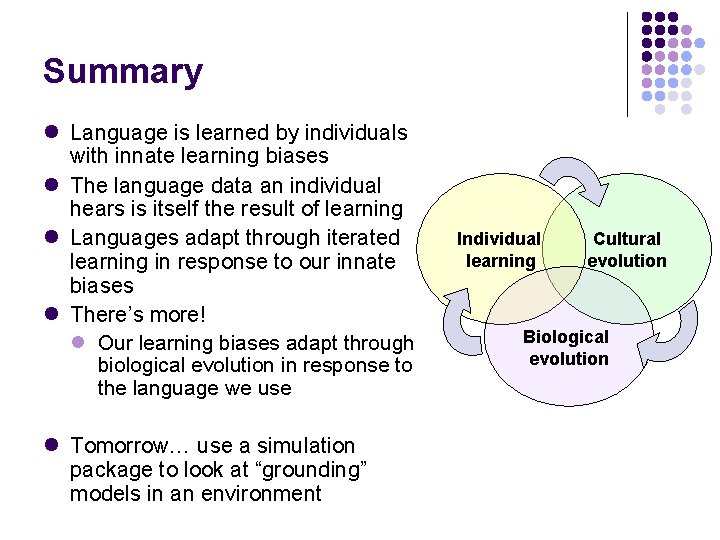

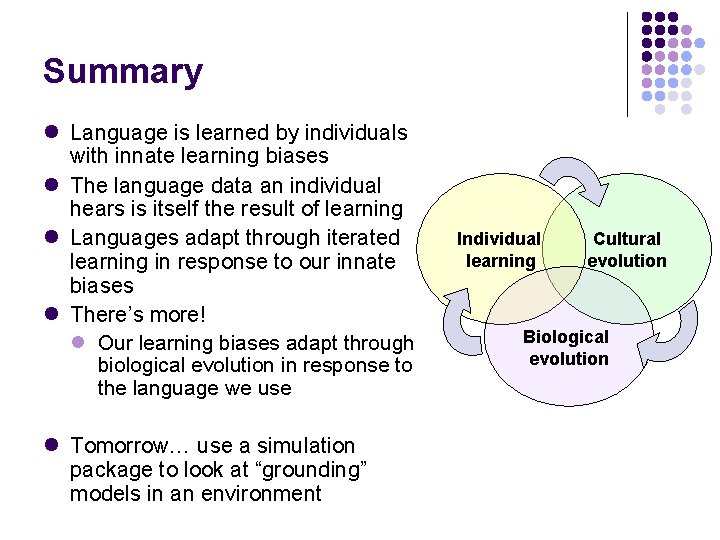

Summary

- Language is learned by individuals with innate learning biases
- The language data an individual hears is itself the result of learning
- Languages adapt through iterated learning in response to our innate biases
- There’s more!
- Our learning biases adapt through biological evolution in response to the language we use
- Tomorrow… use a simulation package to look at “grounding” models in an environment

Diagram labels: Individual learning, Cultural evolution, Biological evolution