
Modeling Document Dynamics: An Evolutionary Approach
Jahna Otterbacher, Dragomir Radev
Computational Linguistics And Information Retrieval (CLAIR)
{jahna, radev}@umich.edu

What are dynamic texts?
• Sets of topically related documents (news stories, Web pages, etc.)
• Multiple sources
• Written/published at different points in time
– may change over time
• Challenging features:
– Paraphrases
– Contradictions
– Incorrect/biased information

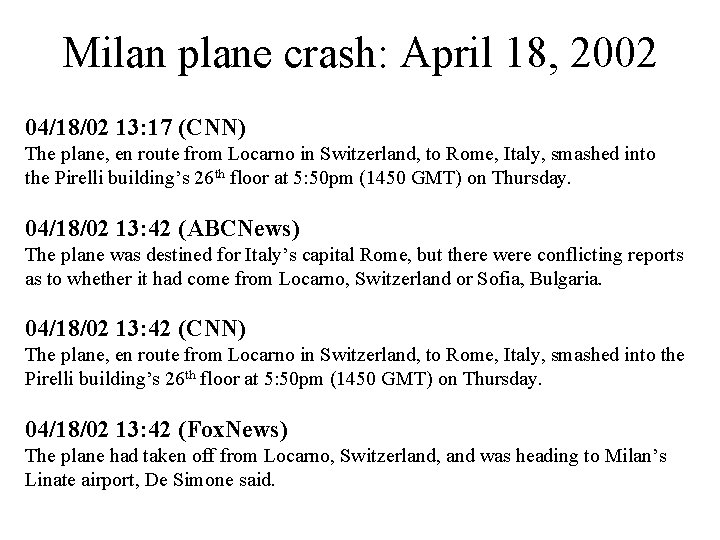

Milan plane crash: April 18, 2002

04/18/02 13:17 (CNN) The plane, en route from Locarno in Switzerland, to Rome, Italy, smashed into the Pirelli building’s 26th floor at 5:50 pm (1450 GMT) on Thursday.

04/18/02 13:42 (ABCNews) The plane was destined for Italy’s capital Rome, but there were conflicting reports as to whether it had come from Locarno, Switzerland or Sofia, Bulgaria.

04/18/02 13:42 (CNN) The plane, en route from Locarno in Switzerland, to Rome, Italy, smashed into the Pirelli building’s 26th floor at 5:50 pm (1450 GMT) on Thursday.

04/18/02 13:42 (FoxNews) The plane had taken off from Locarno, Switzerland, and was heading to Milan’s Linate airport, De Simone said.

Problem for IR systems
• User poses a question or query to a system
– Known facts change at different points in time
– Sources contradict one another
– Many paraphrases: similar, but not necessarily equivalent, information
• What is the “correct” information? What should be returned to the user?

Current Goals
• Propose that dynamic texts “evolve” over time
• Chronology recovery task
• Approaches
– Phylogenetics: reconstruct history of a set of species based on DNA
– Language modeling: LM constructed from first document should fit less well over time

![Phylogenetic models](http://slidetodoc.com/presentation_image_h2/fccc437f90500e8a8955ae0c2a5e53c9/image-6.jpg)

Phylogenetic models
• [Fitch & Margoliash, 67]
• Given a set of species and information about their DNA, construct a tree that describes how they are related, w.r.t. a common ancestor

Distance matrix (D = dog, W = wolf, B = bear):

|   | D | W | B |
|---|---|---|---|
| D | 0 | 4 | 56 |
| W | 4 | 0 | 44 |
| B | 56 | 44 | 0 |

Candidate tree: [Figure: candidate tree over dog, wolf and bear; extracted labels: 1, 22, 2, 24, dog, 20, bear, 24, wolf]

• Statistically optimal tree minimizes the deviation between the original distances and those represented in the tree
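The "deviation" a least-squares tree method minimizes can be sketched in a few lines. The version below assumes the commonly cited Fitch-Margoliash weighting, where each squared error is divided by the squared observed distance; the slide does not state the weighting, so treat that as an assumption. The two sets of branch lengths are hypothetical and are not the values from the candidate tree figure.

```python
# Observed pairwise distances from the slide (D = dog, W = wolf, B = bear).
observed = {("D", "W"): 4.0, ("D", "B"): 56.0, ("W", "B"): 44.0}


def tree_distance(branch, a, b):
    """Path length between two leaves of a three-leaf star tree.

    With three leaves, the unrooted tree has a single internal node,
    so the path between two leaves is the sum of their branch lengths.
    """
    return branch[a] + branch[b]


def fm_deviation(observed, branch):
    """Weighted squared deviation: sum of (D_ij - d_ij)^2 / D_ij^2.

    D_ij is the observed distance and d_ij the distance implied by the tree.
    The 1/D_ij^2 weighting is an assumption (see the lead-in).
    """
    return sum(
        (d_obs - tree_distance(branch, a, b)) ** 2 / d_obs ** 2
        for (a, b), d_obs in observed.items()
    )


# Hypothetical branch lengths, chosen only to illustrate the comparison.
candidate_a = {"D": 2.0, "W": 2.0, "B": 48.0}
candidate_b = {"D": 10.0, "W": 10.0, "B": 40.0}

score_a = fm_deviation(observed, candidate_a)
score_b = fm_deviation(observed, candidate_b)
best = "candidate_a" if score_a < score_b else "candidate_b"
```

Under this criterion the tree with the smaller score is preferred; a full implementation would also search over tree topologies and optimize the branch lengths rather than compare two fixed guesses.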

![Phylogenetic models (2)](http://slidetodoc.com/presentation_image_h2/fccc437f90500e8a8955ae0c2a5e53c9/image-7.jpg)

Phylogenetic models (2)
• History of chain letters [Bennett & al, 03]
– “Genes” were facts in the letters:
  • Names/titles of people
  • Dates
  • Threats to those who don’t send the letter on
– Distance metric was the amount of shared information between two chain letters
– Used Fitch/Margoliash method to construct trees
• Result: An almost perfect phylogeny. Letters that were close to one another in the tree shared similar dates, “genes” and even geographical properties.

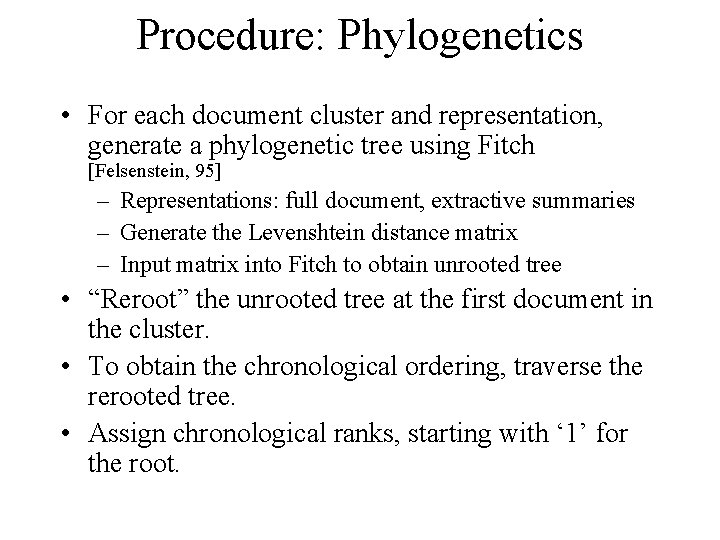

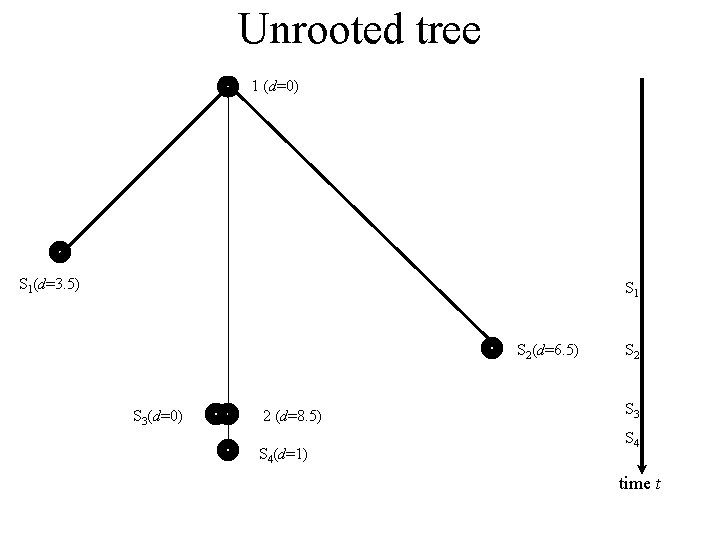

Procedure: Phylogenetics
• For each document cluster and representation, generate a phylogenetic tree using Fitch [Felsenstein, 95]
– Representations: full document, extractive summaries
– Generate the Levenshtein distance matrix
– Input matrix into Fitch to obtain unrooted tree
• “Reroot” the unrooted tree at the first document in the cluster.
• To obtain the chronological ordering, traverse the rerooted tree.
• Assign chronological ranks, starting with ‘1’ for the root.
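The distance-matrix step above can be sketched as follows. The slides do not say whether the Levenshtein distance was computed over characters or words, so this sketch assumes whitespace-separated word tokens; the three toy sentences are hypothetical and only loosely modeled on the Milan example.

```python
def levenshtein(a, b):
    """Edit distance between two sequences (insert, delete, substitute = 1)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        curr = [i]
        for j, y in enumerate(b, start=1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def distance_matrix(docs):
    """Symmetric matrix of word-level edit distances between documents."""
    tokens = [doc.split() for doc in docs]  # assumption: whitespace tokens
    n = len(tokens)
    return [[levenshtein(tokens[i], tokens[j]) for j in range(n)]
            for i in range(n)]


# Hypothetical toy documents.
docs = [
    "the plane came from Locarno",
    "the plane came from Sofia",
    "the plane was heading to Milan",
]
matrix = distance_matrix(docs)
```

A matrix like this would then be written out in the input format expected by the tree-building program; that formatting step is not shown here.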

Unrooted tree
[Figure: unrooted tree; node labels: 1 (d=0), S1 (d=3.5), S2 (d=6.5), 2 (d=8.5), S3 (d=0), S4 (d=1); leaves S1 to S4 placed along a time axis t]

Rerooted tree
[Figure: tree rerooted at S1; node labels: S1 (d=0), 1 (d=3.5), S2 (d=10), 2 (d=12), S3 (d=12), S4 (d=13); leaves S1 to S4 placed along a time axis t]
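The rerooting example can be reproduced with a short traversal. The sketch assumes a topology inferred from the d values on the two slides: internal node 1 joins S1, S2 and internal node 2, and node 2 joins S3 and S4. It also assumes that chronological rank follows cumulative distance from the new root, which is one plausible reading of "traverse the rerooted tree". The node names `n1` and `n2` are labels introduced here.

```python
from collections import deque

# Branch lengths read off the unrooted-tree slide (topology is an assumption).
edges = [
    ("n1", "S1", 3.5),
    ("n1", "S2", 6.5),
    ("n1", "n2", 8.5),
    ("n2", "S3", 0.0),
    ("n2", "S4", 1.0),
]


def distances_from(root, edges):
    """Cumulative branch length from `root` to every node of the tree."""
    adjacency = {}
    for u, v, length in edges:
        adjacency.setdefault(u, []).append((v, length))
        adjacency.setdefault(v, []).append((u, length))
    dist = {root: 0.0}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for neighbor, length in adjacency[node]:
            if neighbor not in dist:  # a tree has exactly one path per node
                dist[neighbor] = dist[node] + length
                queue.append(neighbor)
    return dist


def chronological_ranks(root, edges, documents):
    """Rank documents by distance from the root; the root gets rank 1."""
    dist = distances_from(root, edges)
    ordered = sorted(documents, key=lambda doc: dist[doc])
    return {doc: rank for rank, doc in enumerate(ordered, start=1)}


dist = distances_from("S1", edges)
ranks = chronological_ranks("S1", edges, ["S1", "S2", "S3", "S4"])
# ranks == {"S1": 1, "S2": 2, "S3": 3, "S4": 4}
```

Rooting at S1 gives distances of 3.5 for node 1, 10 for S2, 12 for node 2 and S3, and 13 for S4, matching the values on the rerooted-tree slide.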

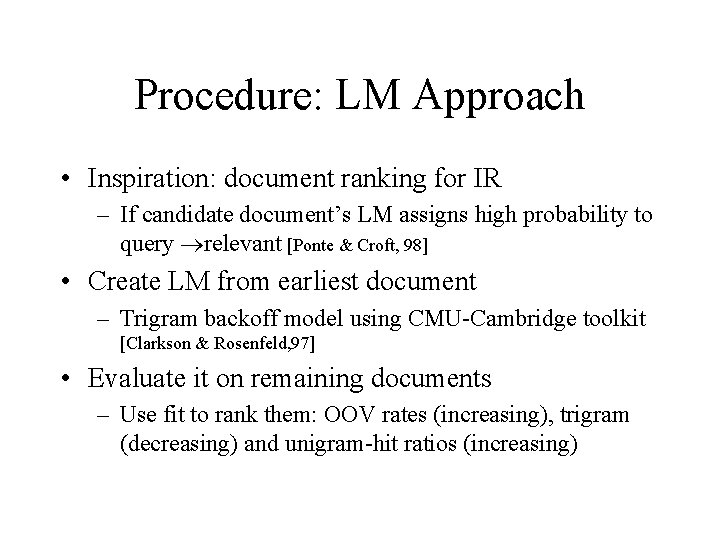

Procedure: LM Approach
• Inspiration: document ranking for IR
– A candidate document is considered relevant if its LM assigns high probability to the query [Ponte & Croft, 98]
• Create LM from earliest document
– Trigram backoff model using CMU-Cambridge toolkit [Clarkson & Rosenfeld, 97]
• Evaluate it on remaining documents
– Use fit to rank them: OOV rates (increasing), trigram-hit ratios (decreasing) and unigram-hit ratios (increasing)
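Of the three fit measures, the OOV rate is the simplest to illustrate. The sketch below is not the CMU-Cambridge toolkit and builds no trigram model; it only assumes lowercased whitespace tokens, takes the vocabulary from the earliest document, and orders the remaining documents by increasing OOV rate. The toy documents are hypothetical.

```python
def oov_rate(vocabulary, tokens):
    """Fraction of tokens that do not appear in the vocabulary."""
    if not tokens:
        return 0.0
    unseen = sum(1 for token in tokens if token not in vocabulary)
    return unseen / len(tokens)


def rank_by_oov(first_doc, later_docs):
    """Order later documents by increasing OOV rate w.r.t. the first one.

    Returns (order, rates): `order` lists indices into `later_docs` from
    the predicted earliest to the predicted latest document.
    """
    vocabulary = set(first_doc.lower().split())  # assumption: simple tokens
    rates = [oov_rate(vocabulary, doc.lower().split()) for doc in later_docs]
    order = sorted(range(len(later_docs)), key=lambda i: rates[i])
    return order, rates


# Hypothetical toy cluster: one earliest document and three later ones.
first = "a b c d"
later = ["a b x y", "a b c d", "a x y z"]
order, rates = rank_by_oov(first, later)
# rates == [0.5, 0.0, 0.75], order == [1, 0, 2]
```

The intuition matches the slide: the further a document has drifted from the first one, the more of its words fall outside the first document's vocabulary.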

![Evaluation](http://slidetodoc.com/presentation_image_h2/fccc437f90500e8a8955ae0c2a5e53c9/image-12.jpg)

Evaluation
• Metric: Kendall’s rank-order correlation coefficient (Kendall’s τ) [Siegel & Castellan, 88]
– −1 ≤ τ ≤ 1
– Expresses extent to which the chronological rankings assigned by the algorithm agree with the actual rankings
• Randomly assigned rankings have, on average, τ = 0.
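For rankings without ties, Kendall's τ is the number of concordant pairs minus the number of discordant pairs, divided by the total number of pairs. A minimal sketch of that form follows; whether the original evaluation applied a tie correction is not stated on the slide, so none is included here.

```python
from itertools import combinations


def kendall_tau(predicted, actual):
    """Kendall's tau for two rankings of the same items (no tie correction)."""
    if len(predicted) != len(actual):
        raise ValueError("rankings must have the same length")
    n = len(predicted)
    if n < 2:
        raise ValueError("need at least two items to compare")
    concordant = discordant = 0
    for i, j in combinations(range(n), 2):
        sign = (predicted[i] - predicted[j]) * (actual[i] - actual[j])
        if sign > 0:
            concordant += 1   # the pair is ordered the same way in both
        elif sign < 0:
            discordant += 1   # the pair is ordered in opposite ways
    return (concordant - discordant) / (n * (n - 1) // 2)


perfect = kendall_tau([1, 2, 3, 4], [1, 2, 3, 4])    # 1.0
reversed_ = kendall_tau([1, 2, 3, 4], [4, 3, 2, 1])  # -1.0
one_swap = kendall_tau([1, 2, 3, 4], [1, 3, 2, 4])   # (5 - 1) / 6
```

A ranking with a single adjacent swap out of four items still scores about 0.67, which gives a sense of scale for the median values reported in the result tables.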

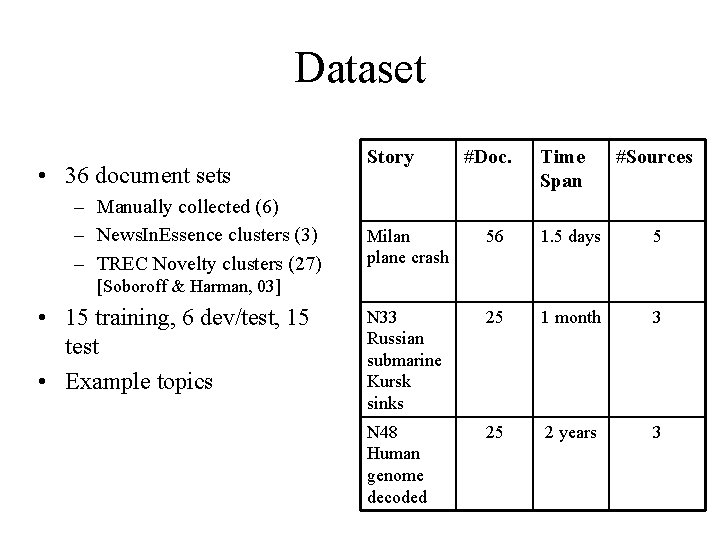

Dataset
• 36 document sets
– Manually collected (6)
– NewsInEssence clusters (3)
– TREC Novelty clusters (27) [Soboroff & Harman, 03]
• 15 training, 6 dev/test, 15 test
• Example topics

| Story | #Doc. | Time Span | #Sources |
|---|---|---|---|
| Milan plane crash | 56 | 1.5 days | 5 |
| N33 Russian submarine Kursk sinks | 25 | 1 month | 3 |
| N48 Human genome decoded | 25 | 2 years | 3 |

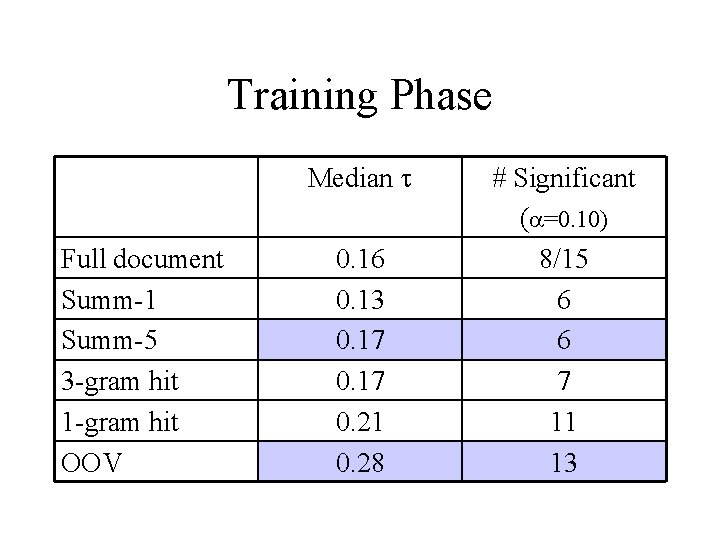

Training Phase

Columns, in order: Full document, Summ-1, Summ-5, 3-gram hit, 1-gram hit, OOV

Median τ: 0.16, 0.13, 0.17, 0.21, 0.28

# Significant (α = 0.10): 8/15, 6, 6, 7, 11, 13

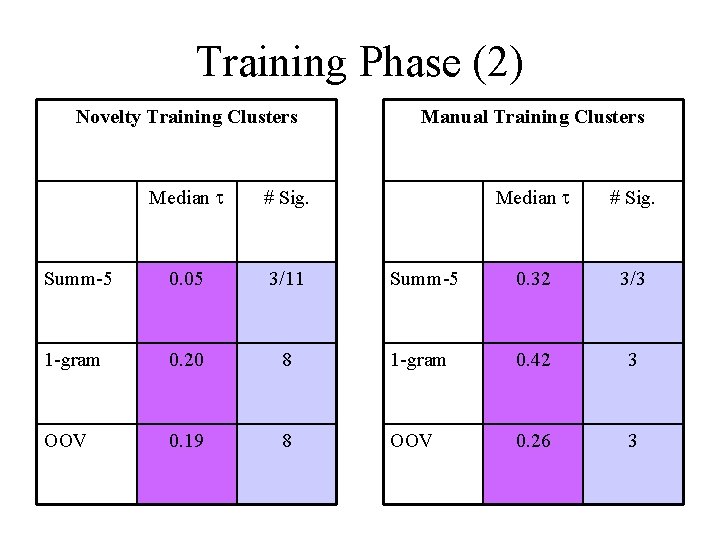

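Training Phase (2)

| | Novelty Training Clusters: Median | Novelty Training Clusters: # Sig. | Manual Training Clusters: Median | Manual Training Clusters: # Sig. |
|---|---|---|---|---|
| Summ-5 | 0.05 | 3/11 | 0.32 | 3/3 |
| 1-gram | 0.20 | 8 | 0.42 | 3 |
| OOV | 0.19 | 8 | 0.26 | 3 |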

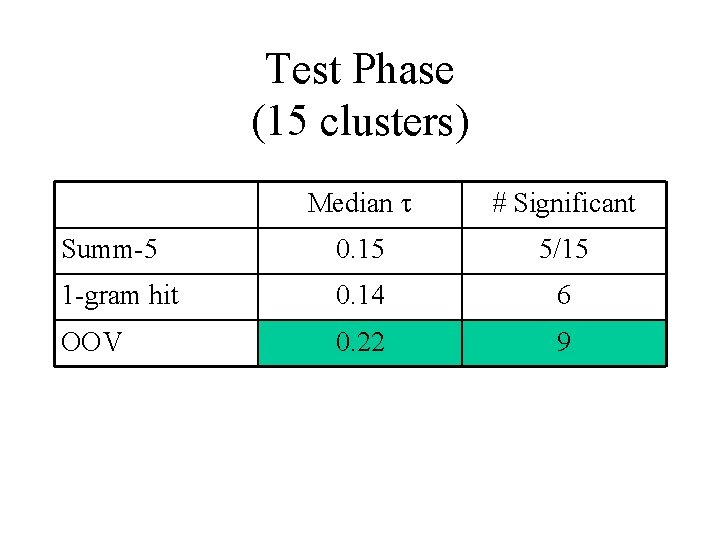

Test Phase (15 clusters)

| | Median | # Significant |
|---|---|---|
| Summ-5 | 0.15 | 5/15 |
| 1-gram hit | 0.14 | 6 |
| OOV | 0.22 | 9 |

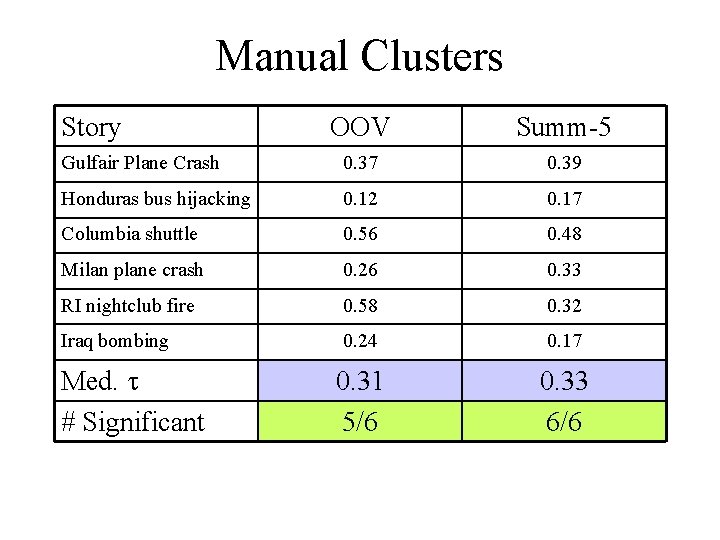

Manual Clusters

| Story | OOV | Summ-5 |
|---|---|---|
| Gulfair Plane Crash | 0.37 | 0.39 |
| Honduras bus hijacking | 0.12 | 0.17 |
| Columbia shuttle | 0.56 | 0.48 |
| Milan plane crash | 0.26 | 0.33 |
| RI nightclub fire | 0.58 | 0.32 |
| Iraq bombing | 0.24 | 0.17 |
| Med. | 0.31 | 0.33 |
| # Significant | 5/6 | 6/6 |

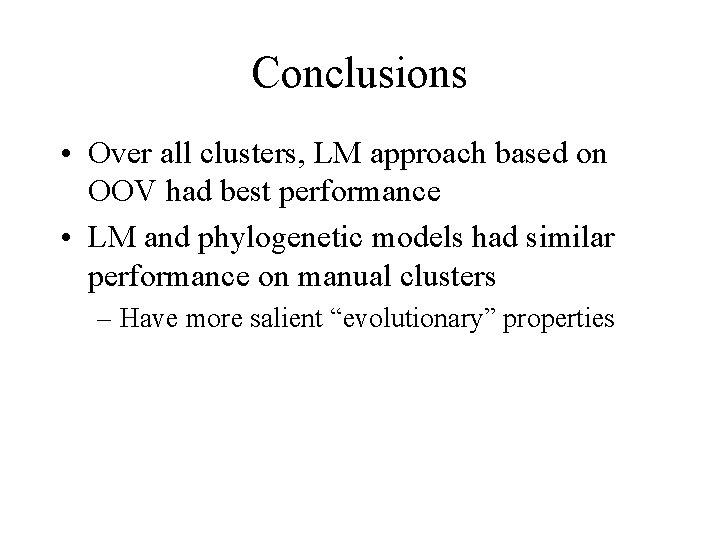

Conclusions
• Over all clusters, LM approach based on OOV had best performance
• LM and phylogenetic models had similar performance on manual clusters
– Manual clusters have more salient “evolutionary” properties

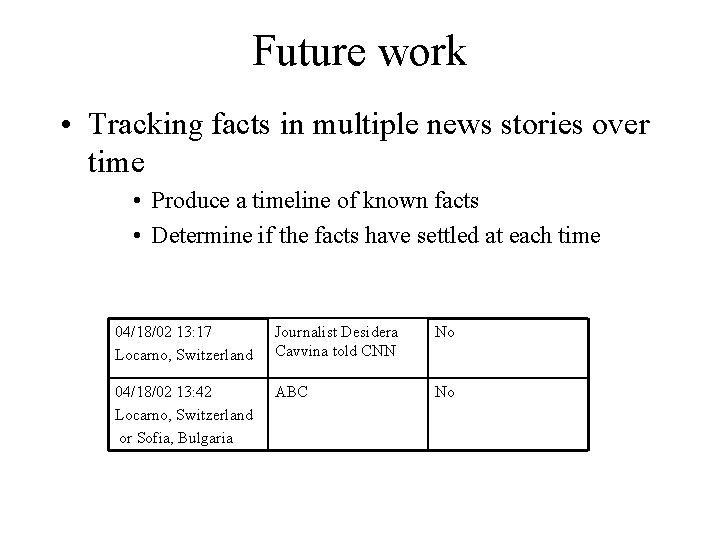

Future work
• Tracking facts in multiple news stories over time
• Produce a timeline of known facts
• Determine if the facts have settled at each time

04/18/02 13:17 | Locarno, Switzerland | Journalist Desidera Cavvina told CNN | No

04/18/02 13:42 | Locarno, Switzerland or Sofia, Bulgaria | ABC | No
