Modeling and Managing Software Productivity & Quality: Balancing Efficiency and Effectiveness

Modeling and Managing Software Productivity & Quality … Balancing Efficiency and Effectiveness

Softec 2011, Kuala Lumpur, Malaysia

Gary A. Gack, MBA, Six Sigma Black Belt, ASQ Certified Software Quality Engineer
Owner, Process-Fusion.net
GGack@Process-Fusion.net

© 2011 Process-Fusion.net

Agenda

- Measuring Efficiency (Productivity)
  - The Cost of Quality Framework
- Measuring Effectiveness (Quality)
  - Defect Containment
- Modeling & Managing Efficiency and Effectiveness
  - Why Modeling?
  - Scenarios Considered
  - Effectiveness Results
  - Efficiency Results
- “An Apple a Day …”

What is “Efficient” (Productive)? How can it be measured?

A Lean Perspective: The Cost of Quality Framework

“Productive”? What does that mean?

A software process is “productive” (efficient) if, relative to an alternative, it produces an equivalent or better result at lower cost.

- For example, suppose defect-finding strategy “A”
  - finds the same number of defects as strategy “B” (i.e., the two strategies are equally effective),
  - but does so at lower cost.
- Then strategy “A” is more efficient than strategy “B”: A is more “productive” than B.

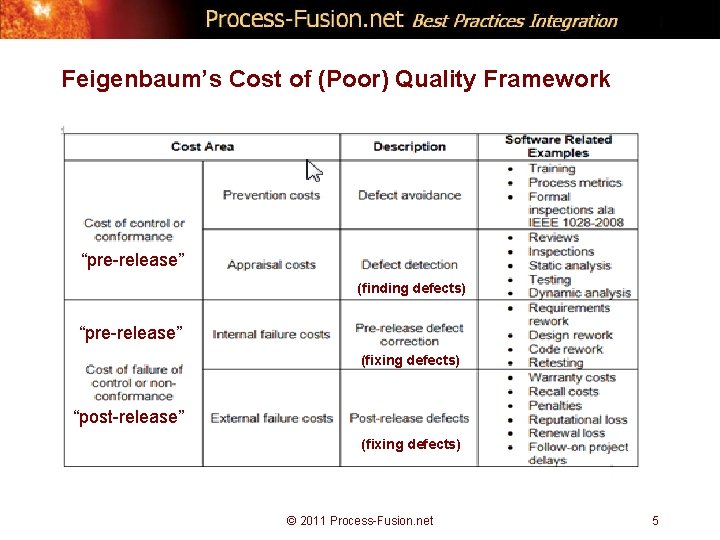
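The definition above amounts to a dominance check: one strategy is more efficient only if it is at least as effective and costs less. A minimal Python sketch, assuming each strategy can be summarized by a defect count and an effort figure; the `Strategy` type, the `more_efficient` helper and the sample numbers are hypothetical illustrations, not part of the original model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Strategy:
    """Hypothetical summary of a defect-finding strategy."""
    name: str
    defects_found: int
    cost_hours: float


def more_efficient(a: Strategy, b: Strategy) -> Optional[str]:
    """Return the name of the more efficient strategy, or None.

    A strategy is more efficient if it produces an equivalent or better
    result (finds at least as many defects) at lower cost. If neither
    strategy satisfies this, the comparison is inconclusive.
    """
    if a.defects_found >= b.defects_found and a.cost_hours < b.cost_hours:
        return a.name
    if b.defects_found >= a.defects_found and b.cost_hours < a.cost_hours:
        return b.name
    return None


# Hypothetical figures: equally effective (40 defects each), different cost.
strategy_a = Strategy("A", defects_found=40, cost_hours=60.0)
strategy_b = Strategy("B", defects_found=40, cost_hours=100.0)
print(more_efficient(strategy_a, strategy_b))  # A
```

Returning `None` when one strategy finds more defects but also costs more is deliberate: that case is a trade-off between effectiveness and efficiency, which the definition alone does not settle.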

Feigenbaum’s Cost of (Poor) Quality Framework

Diagram labels: “pre-release” (finding defects), “pre-release” (fixing defects), “post-release” (fixing defects)

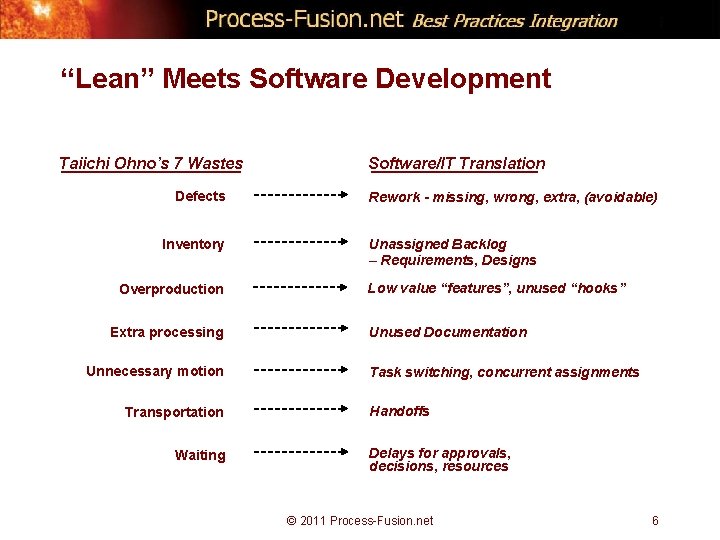

“Lean” Meets Software Development

| Taiichi Ohno’s 7 Wastes | Software/IT Translation |
|---|---|
| Defects | Rework (avoidable): missing, wrong, extra |
| Inventory | Unassigned backlog: requirements, designs |
| Overproduction | Low-value “features”, unused “hooks” |
| Extra processing | Unused documentation |
| Unnecessary motion | Task switching, concurrent assignments |
| Transportation | Handoffs |
| Waiting | Delays for approvals, decisions, resources |

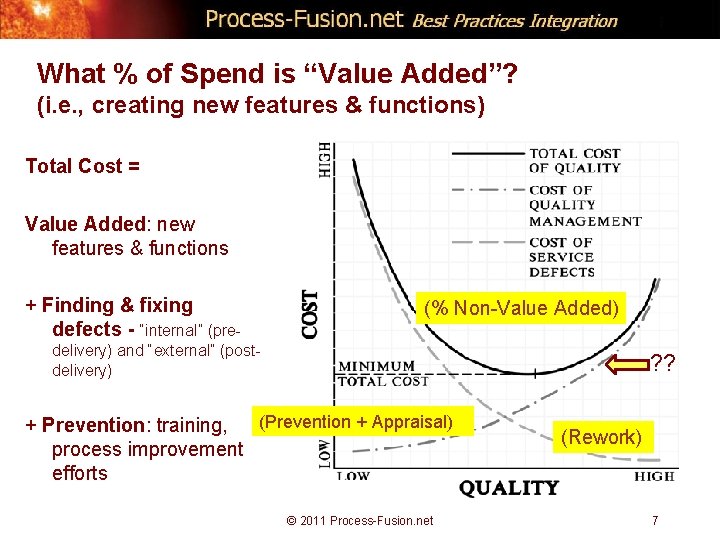

What % of Spend is “Value Added” (i.e., creating new features & functions)?

$$\text{Total Cost} = \text{Value Added} + \underbrace{(\text{Prevention} + \text{Appraisal}) + \text{Rework}}_{\text{Non-Value Added (NVA)}}$$

- Value Added: new features & functions
- Prevention: training, process improvement efforts
- Appraisal + Rework: finding & fixing defects, both “internal” (pre-delivery) and “external” (post-delivery)

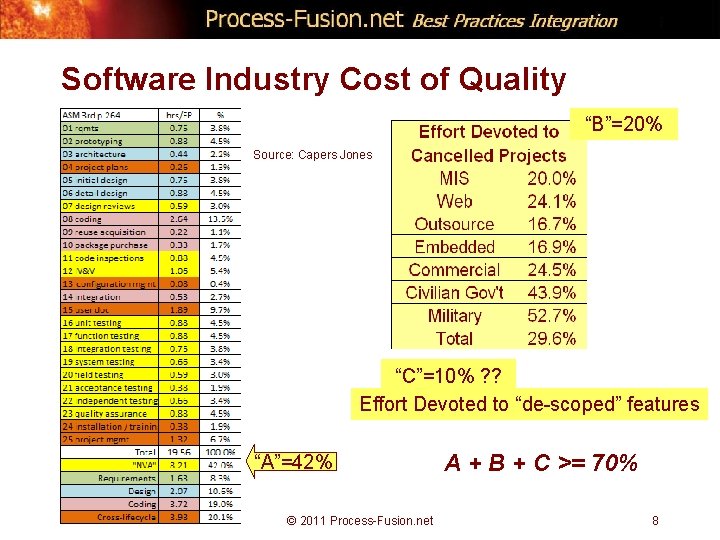
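The cost equation above can be turned into a one-line measurement of how much spend is non-value-added. A minimal sketch, assuming costs are tracked in a single unit (person-hours here) under the four categories in the equation; the `nva_share` helper and the sample breakdown are hypothetical, not benchmark data.

```python
def nva_share(value_added: float, prevention: float, appraisal: float, rework: float) -> float:
    """Fraction of total cost that is non-value-added (NVA).

    Total Cost = Value Added + (Prevention + Appraisal) + Rework,
    where the last three terms together make up NVA.
    """
    nva = prevention + appraisal + rework
    total = value_added + nva
    if total <= 0:
        raise ValueError("total cost must be positive")
    return nva / total


# Hypothetical cost breakdown in person-hours (not benchmark data).
share = nva_share(value_added=300, prevention=50, appraisal=250, rework=400)
print(f"NVA share: {share:.0%}")  # NVA share: 70%
```

Dropping the prevention term gives the approximation NVA ≈ Appraisal + Rework used in the key take-away.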

Software Industry Cost of Quality (Source: Capers Jones)

Chart labels: “A” = 42%, “B” = 20%, “C” = 10%, effort devoted to “de-scoped” features

A + B + C ≥ 70%

Key “Take-away”: To improve Efficiency (productivity), reduce NVA

- NVA ≈ Appraisal + Rework
- Optimization = what, when, how

What is “Effectiveness”? How can it be measured?

A Quality Perspective: Defect Containment

“Effective”? What does that mean?

Delivered software is “effective” if:

(1) it serves a valid organizational purpose. Efforts are made to quantify this aspect of effectiveness with return-on-investment estimates, yet it remains essentially a subjective evaluation; and

(2) it is acceptably defect-free. The term “defect” in this context is intended to be broadly construed, e.g., a missed or incorrect requirement is a defect; a user-unfriendly design is a defect.

Hence, once a project has been initiated, the effectiveness of the software process used to execute it is appropriately measured by defect containment, i.e., the percentage of defects removed before the software product is delivered to the customer.

Defect Containment Defined

- “Total Containment Effectiveness” (TCE) = % of defects found before release
  - e.g., 80 defects found in test and 20 found by customers = 80% TCE
  - Measure customer defects over agreed time frames (3/6/12 months)
- Defect Containment “Efficiency” considers cost
- Phase/iteration (“step”) appraisal containment = % of the defects present that are found by a specific appraisal event
  - e.g., of 50 defects present in requirements, 40 found by inspection = 80% “step” containment
  - Defects present can be estimated and/or evaluated in retrospect by identifying each defect’s “origin”

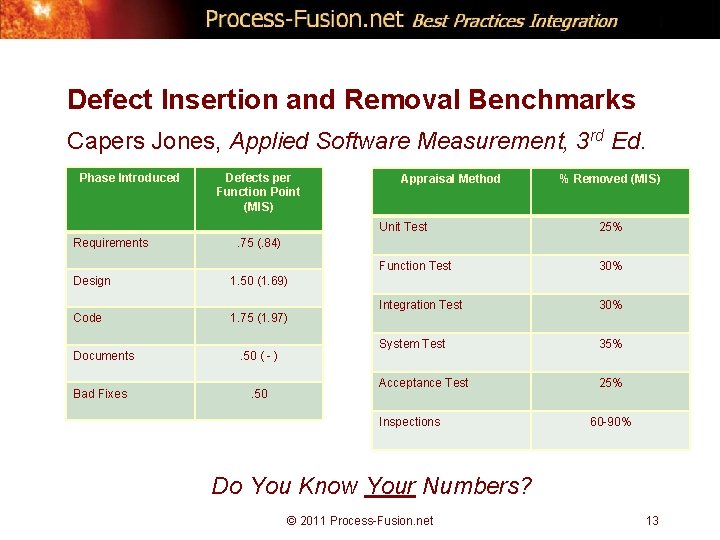
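Both containment measures are simple ratios; the sketch below computes them using the two worked examples from the slide. The function names are hypothetical, and the caller is assumed to supply customer-found defects already counted over the agreed post-release window.

```python
def total_containment_effectiveness(found_before_release: int, found_by_customers: int) -> float:
    """TCE: share of all known defects that were found before release.

    found_by_customers should be counted over an agreed window
    (e.g., 3, 6 or 12 months after delivery).
    """
    total = found_before_release + found_by_customers
    if total == 0:
        raise ValueError("no defects recorded")
    return found_before_release / total


def step_containment(found_by_step: int, present_at_step: int) -> float:
    """Share of the defects present at an appraisal step that the step found."""
    if present_at_step <= 0:
        raise ValueError("defects present must be positive")
    if found_by_step > present_at_step:
        raise ValueError("a step cannot find more defects than are present")
    return found_by_step / present_at_step


print(total_containment_effectiveness(80, 20))  # 0.8
print(step_containment(40, 50))  # 0.8
```

Because `present_at_step` is often only known in retrospect (by tracing each defect to its origin), step containment is usually estimated during a project and corrected afterwards.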

Defect Insertion and Removal Benchmarks (Source: Capers Jones, Applied Software Measurement, 3rd Ed.)

| Phase Introduced | Defects per Function Point (MIS) |
|---|---|
| Requirements | 1.50 (1.69) |
| Code | 1.75 (1.97) |

Design, Documents, and Bad Fixes are also listed, with the values .75 (.84), .50 (-), and .50.

| Appraisal Method | % Removed (MIS) |
|---|---|
| Unit Test | 25% |
| Function Test | 30% |
| Integration Test | 30% |
| System Test | 35% |
| Acceptance Test | 25% |
| Inspections | 60-90% |

Do You Know Your Numbers?
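The removal percentages compound: each appraisal step can only find defects that earlier steps missed. A minimal Python sketch of that chain, assuming each step removes its benchmark fraction of the defects still present, steps act independently, and no new defects (including bad fixes) are inserted along the way. The starting count of 1,000 defects and the `remaining_defects` helper are hypothetical.

```python
def remaining_defects(present: float, step_containment: list[float]) -> float:
    """Defects still present after a sequence of appraisal steps.

    Assumes each step finds the given fraction of the defects still present
    when it runs, independently of earlier steps, and that no new defects
    are inserted along the way (bad fixes are ignored).
    """
    for rate in step_containment:
        if not 0.0 <= rate <= 1.0:
            raise ValueError("containment rates must be fractions between 0 and 1")
        present *= 1.0 - rate
    return present


# Test removal rates from the benchmark table: unit, function, integration,
# system and acceptance test.
TEST_STEPS = [0.25, 0.30, 0.30, 0.35, 0.25]
INSPECTION = 0.60  # low end of the 60-90% inspection range

hypothetical_present = 1000.0  # illustrative defect count, not a benchmark

after_tests = remaining_defects(hypothetical_present, TEST_STEPS)
after_inspection_and_tests = remaining_defects(hypothetical_present, [INSPECTION] + TEST_STEPS)

print(f"Testing only:         {1 - after_tests / hypothetical_present:.1%} contained")
print(f"Inspection + testing: {1 - after_inspection_and_tests / hypothetical_present:.1%} contained")
```

Under these assumptions the five test stages together contain about 82% of the defects, and adding a single inspection at the low end of its range raises that to about 93%.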

Modeling & Managing Software Process Efficiency and Effectiveness

Leading Indicators Provide CONTROL

Why Modeling?

- In many software groups, finding and fixing defects consumes 50-70% of total cost
  - Best-practice groups reduce that by at least 50%
- Models allow you to think through the consequences of alternative strategies … quickly, at very low cost
- Models allow you to forecast both the quality and the financial consequences of alternatives
  - Creating a business case in the process
  - Creating a basis for “quality-adjusted” status evaluation
- Modeling motivates measurement and “management by fact”

Model Objectives

- Predict (1) delivered quality and (2) total non-value-added effort (cost)
- Predict defect “insertion”
  - Focus attention on defects, which account for the largest share of total development cost
  - Enable early monitoring of the relationship between the defects likely to be present and those actually found, providing early awareness
- Estimate the effort needed to execute the volume of appraisal necessary to find the number of defects we forecast to remove
  - A “sanity check” on the planned level of appraisal effort, i.e., is it actually plausible to remove an acceptable volume of defects with the level of effort planned?
- Forecast both “pre-release” (before delivery) and “post-release” (after delivery) NVA effort
  - When delivered quality is poor, post-release defect repair costs can reach 50% of the original project budget
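The objectives above can be met by a small defect-flow model: defects are inserted phase by phase, each appraisal step finds a fraction of those present, and effort per defect converts counts into NVA hours. A minimal Python sketch, not the author's actual model; the `Step` type, `forecast` helper, project size and effort-per-defect figures are hypothetical, while the insertion rates (1.50 and 1.75 per FP) and containment rates (60%, 35%) echo the benchmark table. Insertion from design, documents and bad fixes is left out for brevity.

```python
from dataclasses import dataclass


@dataclass
class Step:
    """One appraisal step in a hypothetical defect-flow model."""
    name: str
    inserted_per_fp: float  # defects inserted by the work preceding this step
    containment: float      # fraction of defects present that this step finds
    find_hours: float       # appraisal effort per defect found
    fix_hours: float        # pre-release rework effort per defect found


def forecast(size_fp: float, steps: list[Step], post_release_fix_hours: float) -> dict[str, float]:
    """Forecast delivered defects and NVA effort (appraisal + rework)."""
    present = 0.0
    appraisal_hours = 0.0
    pre_release_rework = 0.0
    for step in steps:
        present += step.inserted_per_fp * size_fp  # defect "insertion"
        found = present * step.containment
        appraisal_hours += found * step.find_hours
        pre_release_rework += found * step.fix_hours
        present -= found  # escapes flow on to the next step
    return {
        "delivered_defects": present,
        "appraisal_hours": appraisal_hours,
        "pre_release_rework_hours": pre_release_rework,
        "post_release_rework_hours": present * post_release_fix_hours,
    }


# Hypothetical project: 100 FP, effort figures chosen for illustration only.
STEPS = [
    Step("requirements inspection", 1.50, 0.60, find_hours=1.0, fix_hours=1.0),
    Step("code inspection", 1.75, 0.60, find_hours=1.0, fix_hours=1.0),
    Step("system test", 0.0, 0.35, find_hours=5.0, fix_hours=5.0),
]

result = forecast(size_fp=100, steps=STEPS, post_release_fix_hours=20.0)
for key, value in result.items():
    print(f"{key}: {value:.1f}")
```

With these inputs the sketch forecasts about 61 delivered defects and about 1,222 post-release rework hours, against roughly 791 pre-release NVA hours. Comparing `appraisal_hours` with the appraisal effort actually planned gives the sanity check described above: if the plan budgets far fewer hours than the forecast requires, the targeted containment is not plausible.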

IMPORTANT Caveats

- Don’t focus on the parameter values I have used
  - The thought process is the important part
  - Actual values vary considerably from place to place
- Where available, I have used industry benchmarks
  - All benchmarks conceal large variation
  - Where benchmarks are not available, I’ve used experience as a guide
  - Your local values may well be quite different
- Use models such as these to do “what if” analysis
  - “Simulate” a range of assumptions
  - Best/worst/most likely values

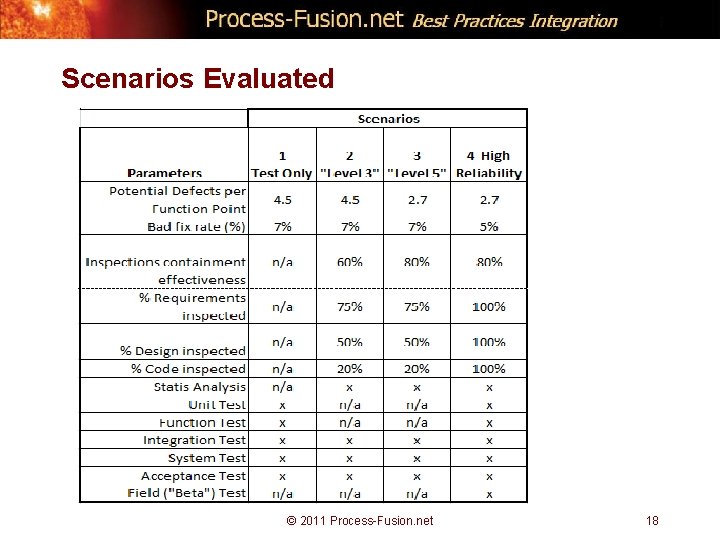
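The caveats above suggest treating each parameter as a range rather than a point. One way to do that, sketched here under stated assumptions, is a small Monte Carlo run that draws insertion and containment from triangular distributions defined by best, worst and most-likely values. The ranges, the seed and the helpers `delivered_defects` and `what_if` are hypothetical, and the triangular distribution is a common choice rather than one the slides prescribe.

```python
import random
import statistics


def delivered_defects(size_fp: float, insertion_per_fp: float, containment: float) -> float:
    """Delivered defects = defects inserted x fraction not contained."""
    return size_fp * insertion_per_fp * (1.0 - containment)


def what_if(size_fp, insertion, containment, runs=10_000, seed=2011):
    """Monte Carlo "what if" over best / worst / most-likely assumptions.

    insertion and containment are (low, high, most_likely) tuples, the
    argument order used by random.triangular(low, high, mode).
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    outcomes = sorted(
        delivered_defects(size_fp, rng.triangular(*insertion), rng.triangular(*containment))
        for _ in range(runs)
    )
    return {
        "p10": outcomes[int(0.10 * runs)],  # roughly the 10th percentile
        "mean": statistics.mean(outcomes),
        "p90": outcomes[int(0.90 * runs)],  # roughly the 90th percentile
    }


# Hypothetical ranges for a 100 FP project (not benchmarks).
summary = what_if(
    size_fp=100,
    insertion=(4.0, 6.0, 5.0),       # defects per FP: low, high, most likely
    containment=(0.80, 0.95, 0.85),  # TCE: low, high, most likely
)
print({k: round(v, 1) for k, v in summary.items()})
```

Reporting a spread (here roughly the 10th to 90th percentile) rather than a single number keeps attention on the thought process and the assumptions, not on any one parameter value.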

Scenarios Evaluated

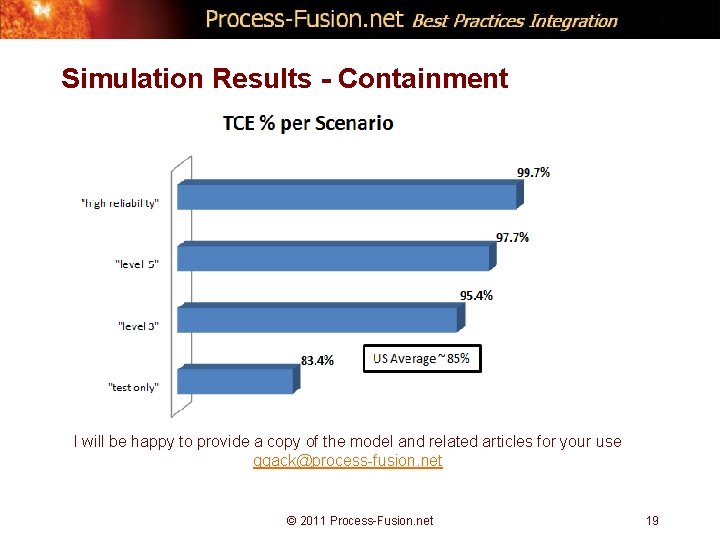

Simulation Results: Containment

I will be happy to provide a copy of the model and related articles for your use: ggack@process-fusion.net

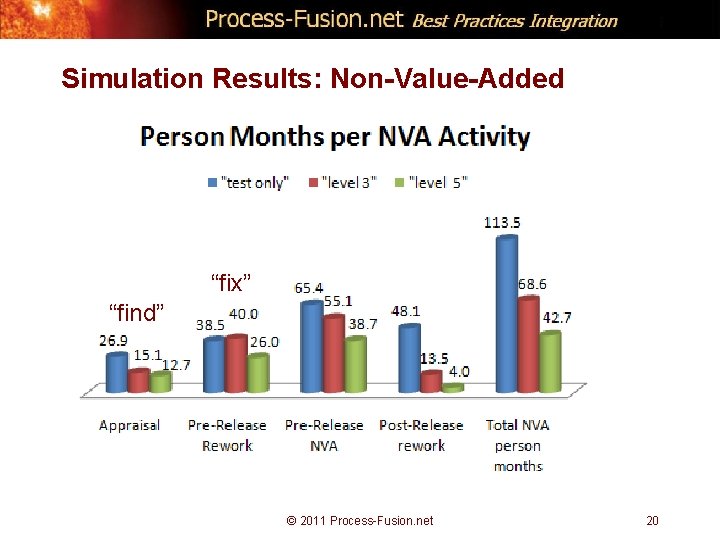

Simulation Results: Non-Value-Added

Chart labels: “fix”, “find”

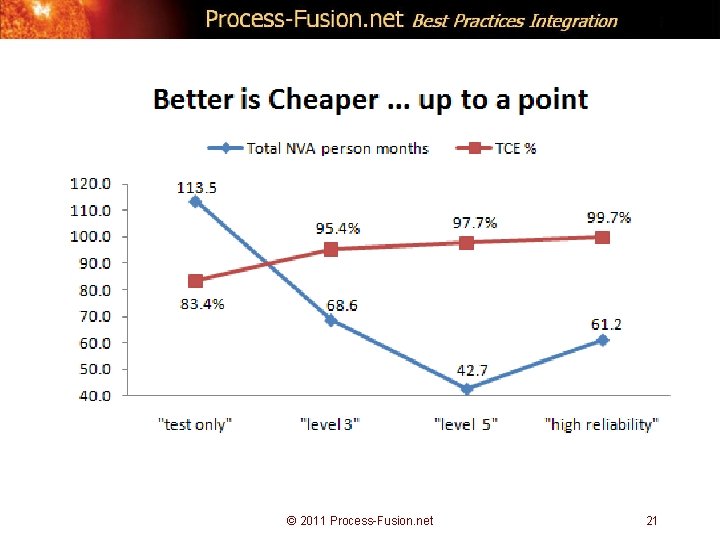


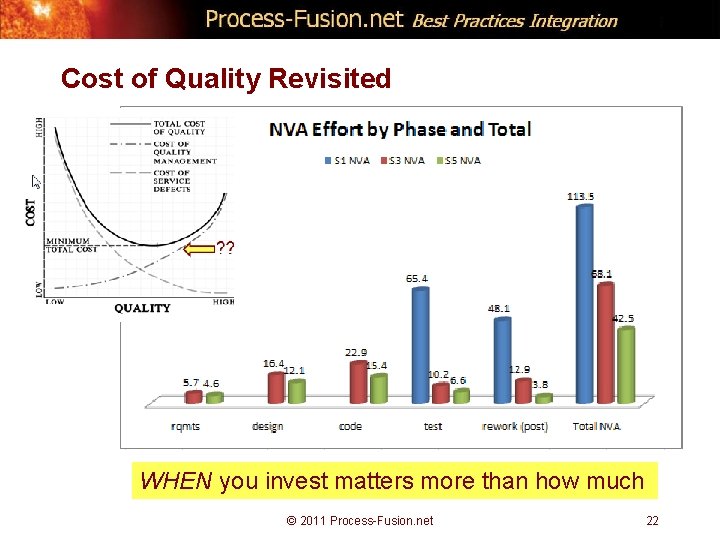

Cost of Quality Revisited

WHEN you invest matters more than how much

“An apple a day …”

- Formal inspections, conducted in accordance with IEEE Std 1028-2008, are always efficient & effective … better than any form of testing
- Maximum benefits come when they are applied to requirements, architecture, and design
- YOU can both reduce cost (improve productivity) and deliver better quality

Thank You! terima kasih 謝謝
