Model Validation & Verification I

- Slides: 31

Jon Zelner (jzelner@umich.edu), University of Michigan: Dept. of Sociology; Gerald R. Ford School of Public Policy; Center for the Study of Complex Systems. ICPSR Summer 2009

Validation

‘Fitting the model’ to data. This can be labor- and processor-intensive under the best of circumstances. Agent-based and many other computational models often violate the assumptions behind most standard social statistics and econometrics, such as linearity and homoskedasticity. We typically resort to running the model many times, or to making reasonable approximations to the model’s mechanics.

Verification

Often skipped over on the way to validation.

- Assumptions: Are they well thought out? Do they reflect the phenomenon of interest?
- Mechanics: What does the model do? Is that what you want it to do? If not, is the problem a bug, or is it conceptual?
- Parameters: Are they abstract, or meant to be measurable? Do they have the expected association with model output?

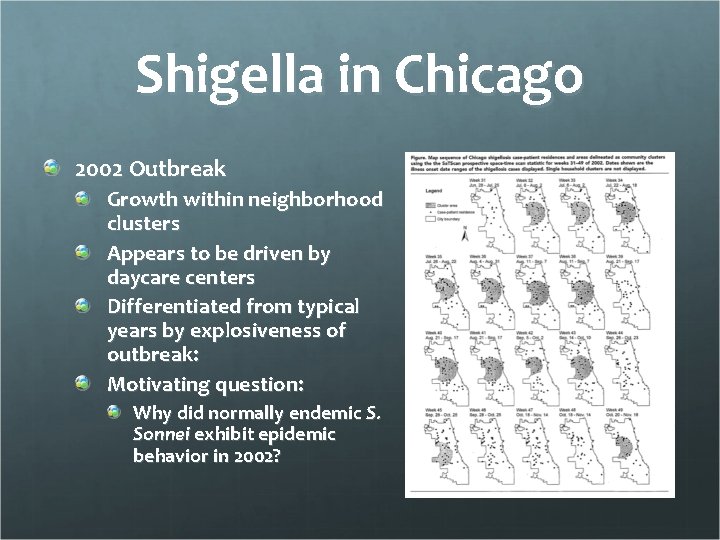

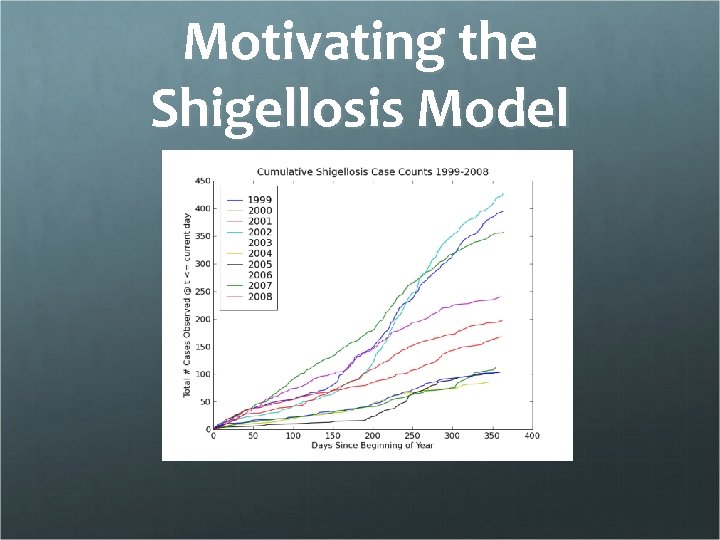

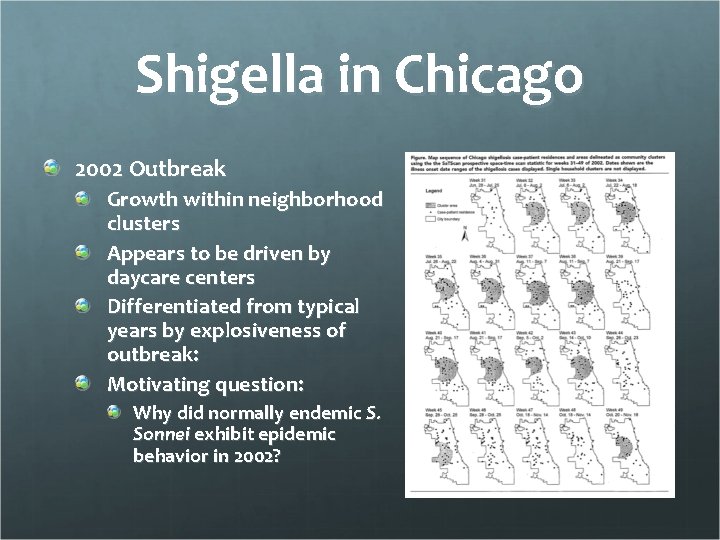

Shigella in Chicago

2002 outbreak: growth occurred within neighborhood clusters and appears to have been driven by daycare centers. The outbreak was differentiated from typical years by its explosiveness. Motivating question: Why did normally endemic S. sonnei exhibit epidemic behavior in 2002?

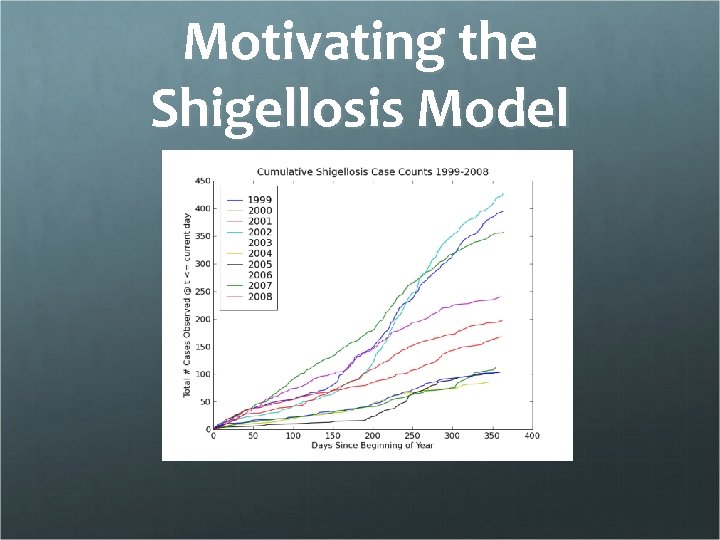

Motivating the Shigellosis Model

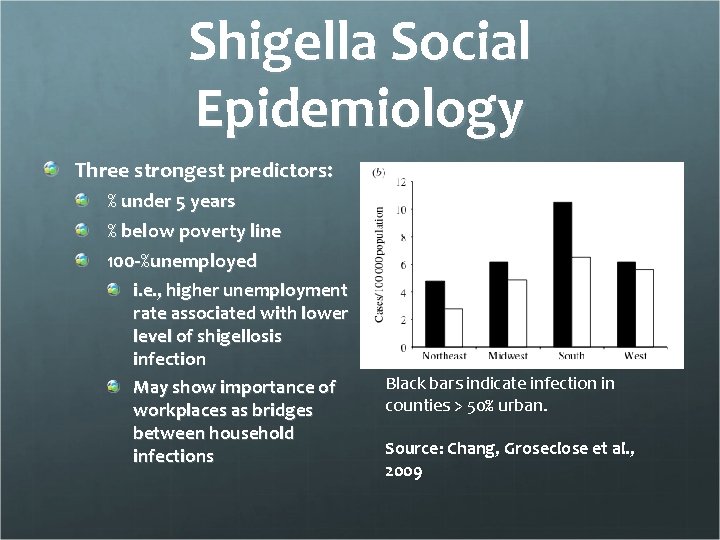

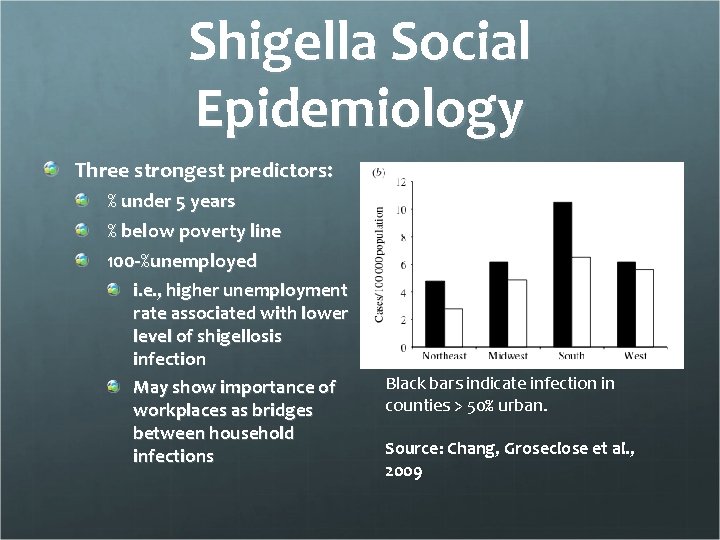

Shigella Social Epidemiology

Three strongest predictors:

- % under 5 years
- % below the poverty line
- 100 - % unemployed, i.e., a higher unemployment rate is associated with a lower level of shigellosis infection. This may show the importance of workplaces as bridges between household infections.

Black bars indicate infection in counties that are more than 50% urban. Source: Chang, Groseclose et al., 2009.

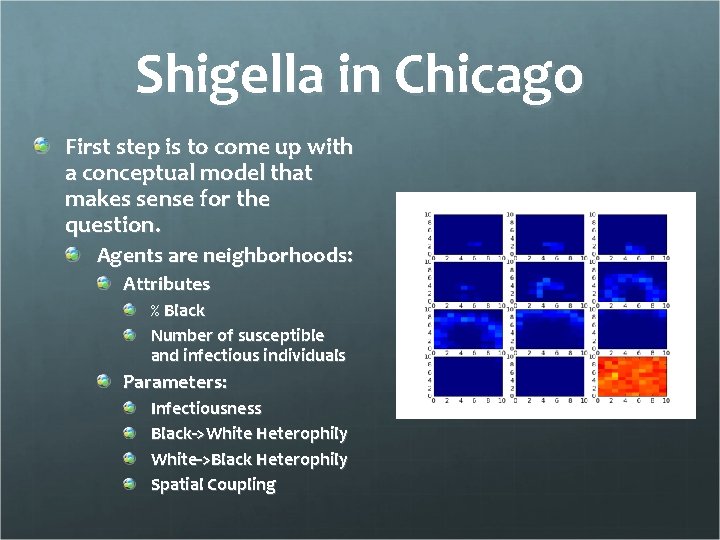

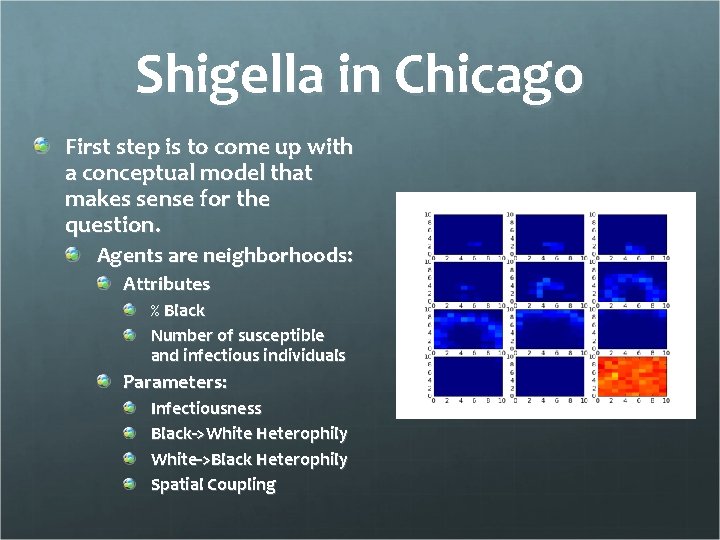

Shigella in Chicago

The first step is to come up with a conceptual model that makes sense for the question. Agents are neighborhoods.

- Attributes: % Black; number of susceptible and infectious individuals
- Parameters: infectiousness; Black->White heterophily; White->Black heterophily; spatial coupling

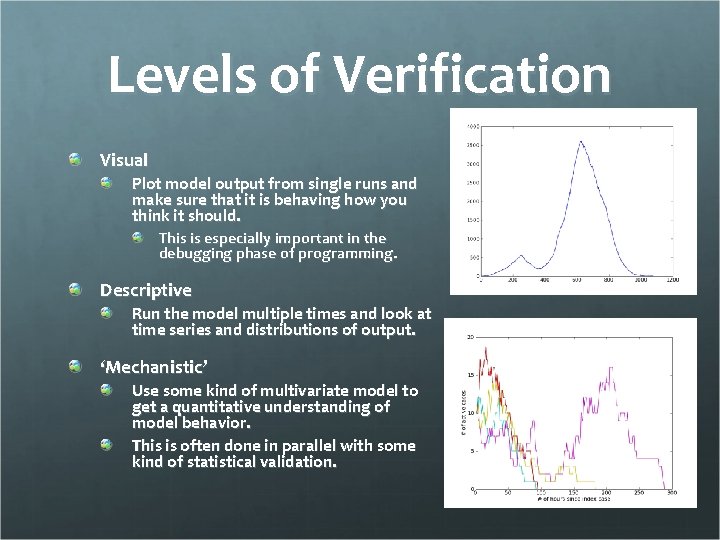

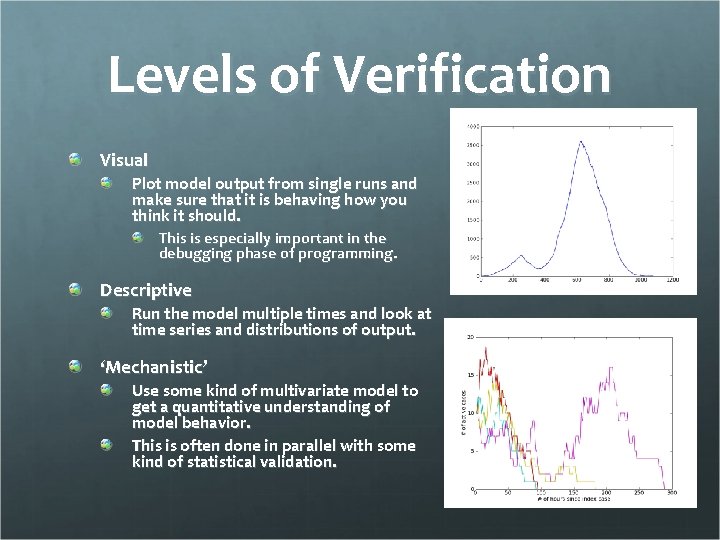

Levels of Verification

- Visual: Plot model output from single runs and make sure that the model is behaving the way you think it should. This is especially important in the debugging phase of programming.
- Descriptive: Run the model multiple times and look at time series and distributions of output.
- ‘Mechanistic’: Use some kind of multivariate model to get a quantitative understanding of model behavior. This is often done in parallel with some kind of statistical validation.
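As a minimal sketch of descriptive verification, the snippet below runs a toy stochastic epidemic many times and summarizes the distribution of outbreak sizes. The Reed-Frost chain-binomial model is a stand-in assumption, not the Shigellosis model; `reed_frost_final_size`, `replicate`, the transmission probability `q`, the population size, and the "minor outbreak" cutoff are all hypothetical choices for illustration.

```python
import random
import statistics


def reed_frost_final_size(n_susceptible, n_infected, q, rng):
    """Simulate one Reed-Frost chain-binomial epidemic; return total ever infected.

    Each generation, a susceptible escapes each current infective independently
    with probability (1 - q), so it is infected with probability 1 - (1 - q) ** I.
    """
    s, i = n_susceptible, n_infected
    total = n_infected
    while i > 0:
        p_inf = 1.0 - (1.0 - q) ** i
        new_i = sum(1 for _ in range(s) if rng.random() < p_inf)
        s -= new_i
        i = new_i  # infectives recover after one generation
        total += new_i
    return total


def replicate(n_runs, q, seed=1, n=100):
    """Run the model n_runs times from one seed infective; return final sizes."""
    rng = random.Random(seed)
    return [reed_frost_final_size(n - 1, 1, q, rng) for _ in range(n_runs)]


sizes = replicate(200, q=0.02)
print("mean final size:", statistics.mean(sizes))
print("median final size:", statistics.median(sizes))
# Hypothetical cutoff: runs with fewer than 5 total cases count as dying out early.
print("share of minor outbreaks:", sum(s < 5 for s in sizes) / len(sizes))
```

Fixing the seed makes each batch reproducible, which helps when a surprising distribution needs to be traced back to individual runs.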

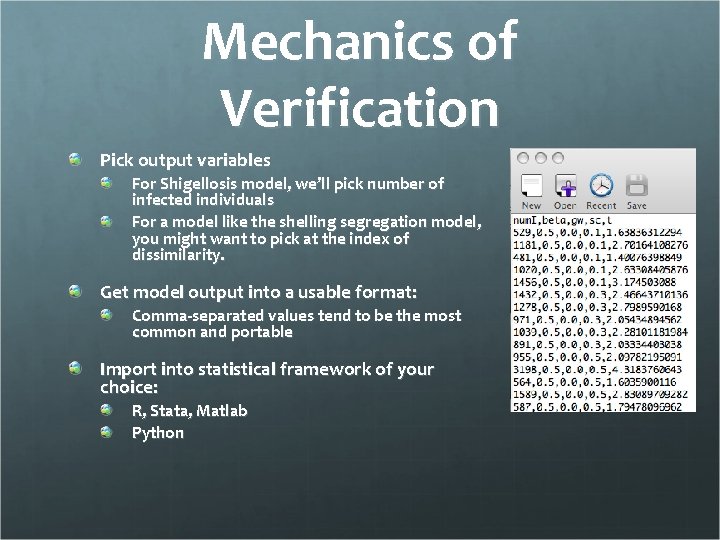

Mechanics of Verification

- Pick output variables. For the Shigellosis model, we’ll pick the number of infected individuals. For a model like the Schelling segregation model, you might pick the index of dissimilarity.
- Get model output into a usable format. Comma-separated values (CSV) tend to be the most common and portable.
- Import into the statistical framework of your choice: R, Stata, Matlab, Python.
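A minimal sketch of getting output into a portable format, assuming each run produces one row of parameter values plus the chosen output variable. It uses Python’s standard `csv` module; the column names (`run`, `beta`, `infected`), the file name, and the two rows are made-up illustrations, not model results.

```python
import csv
import os
import tempfile

FIELDNAMES = ["run", "beta", "infected"]  # hypothetical column layout


def write_runs(path, rows):
    """Write a list of dicts (one per model run) to a CSV file with a header row."""
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDNAMES)
        writer.writeheader()
        writer.writerows(rows)


def read_runs(path):
    """Read the CSV back, converting columns to numbers (csv yields strings)."""
    with open(path, newline="") as f:
        return [
            {"run": int(r["run"]), "beta": float(r["beta"]), "infected": int(r["infected"])}
            for r in csv.DictReader(f)
        ]


rows = [
    {"run": 1, "beta": 0.5, "infected": 3},
    {"run": 2, "beta": 1.0, "infected": 41},
]
path = os.path.join(tempfile.mkdtemp(), "model_output.csv")
write_runs(path, rows)
print(read_runs(path) == rows)  # True: the round trip preserves the data
```

A file in this shape loads directly into R with `read.csv`, one row per run.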

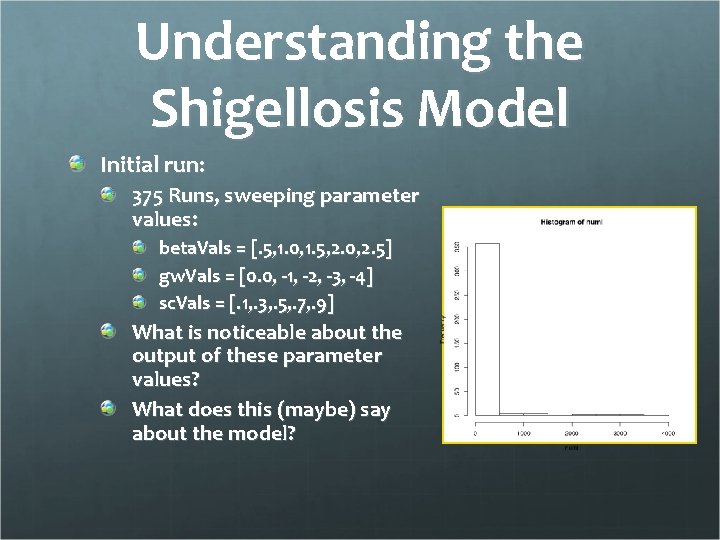

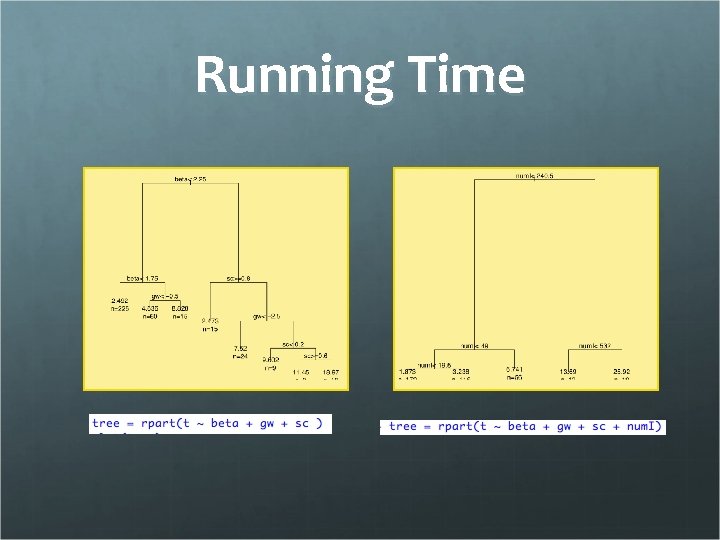

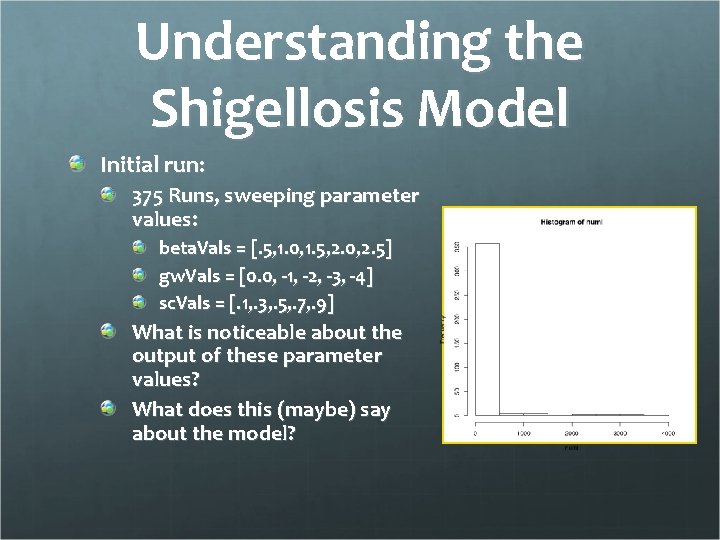

Understanding the Shigellosis Model

Initial run: 375 runs, sweeping parameter values:

- betaVals = [0.5, 1.0, 1.5, 2.0, 2.5]
- gwVals = [0.0, -1, -2, -3, -4]
- scVals = [0.1, 0.3, 0.5, 0.7, 0.9]

What is noticeable about the output of these parameter values? What does this (maybe) say about the model?
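The sweep’s arithmetic can be checked with a short sketch: 5 × 5 × 5 = 125 parameter combinations, so 375 runs corresponds to three replicates per combination. That replicate count is an inference from the totals, not something the slide states. The three lists mirror the slide’s betaVals, gwVals, and scVals; `REPLICATES` is the assumption.

```python
import itertools

beta_vals = [0.5, 1.0, 1.5, 2.0, 2.5]
gw_vals = [0.0, -1, -2, -3, -4]
sc_vals = [0.1, 0.3, 0.5, 0.7, 0.9]
REPLICATES = 3  # assumed: 375 runs / 125 combinations

# One entry per model run: (beta, gw, sc, replicate index).
runs = [
    (beta, gw, sc, rep)
    for beta, gw, sc in itertools.product(beta_vals, gw_vals, sc_vals)
    for rep in range(REPLICATES)
]
print(len(runs))  # 375
```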

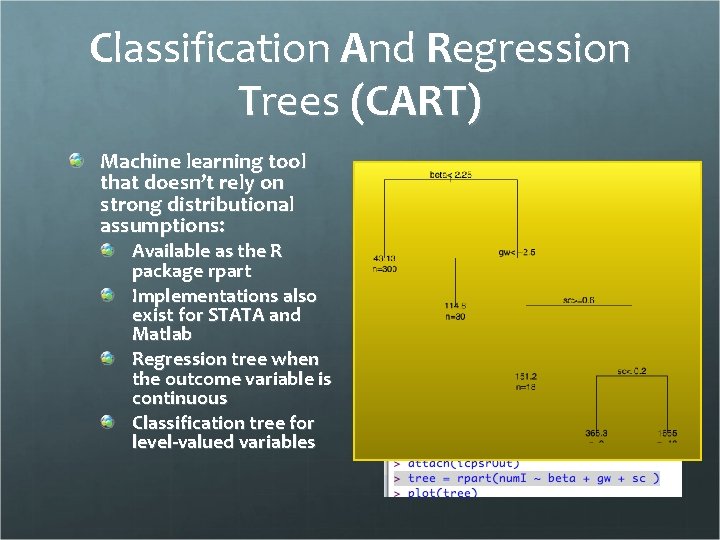

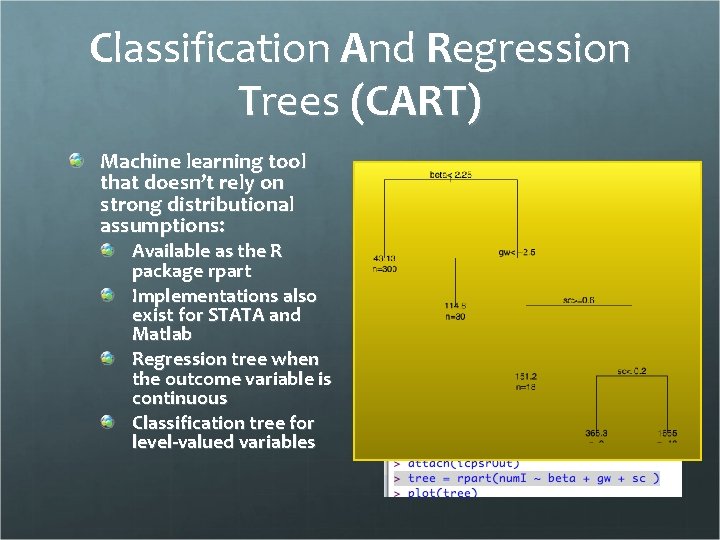

Classification and Regression Trees (CART)

A machine-learning tool that doesn’t rely on strong distributional assumptions. It is available as the R package rpart, and implementations also exist for Stata and Matlab. Use a regression tree when the outcome variable is continuous and a classification tree when it is categorical (level-valued).
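To show what a regression tree does at each node, here is a minimal sketch of the core CART step: trying every threshold on every predictor and keeping the split that most reduces the sum of squared errors. It is not rpart’s implementation, which adds recursion, cost-complexity pruning, surrogate splits, and more. `best_split` and the toy data (columns beta and sc, outcome number infected) are hypothetical.

```python
def sse(values):
    """Sum of squared deviations from the mean (0 for an empty list)."""
    if not values:
        return 0.0
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)


def best_split(X, y):
    """Return (feature index, threshold, SSE after split) for the best binary split.

    X is a list of rows (lists of numbers); y is the continuous outcome.
    A row goes left when X[row][j] <= threshold.
    """
    best = None
    for j in range(len(X[0])):
        thresholds = sorted(set(row[j] for row in X))
        # Splitting at the largest value would leave the right side empty.
        for t in thresholds[:-1]:
            left = [yi for row, yi in zip(X, y) if row[j] <= t]
            right = [yi for row, yi in zip(X, y) if row[j] > t]
            total = sse(left) + sse(right)
            if best is None or total < best[2]:
                best = (j, t, total)
    return best


# Toy data: columns are (beta, sc); outcome is number infected.
X = [[0.5, 0.1], [0.5, 0.9], [1.0, 0.1], [1.0, 0.9], [2.0, 0.1], [2.0, 0.9]]
y = [2, 3, 2, 4, 60, 65]
print(best_split(X, y))  # (0, 1.0, 15.25): split on beta at 1.0
```

A full tree applies the same search recursively to each side of the split until a stopping rule is met.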

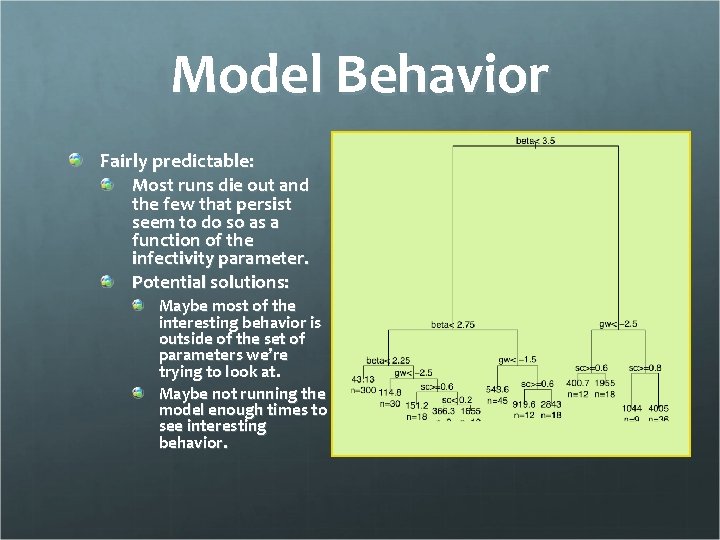

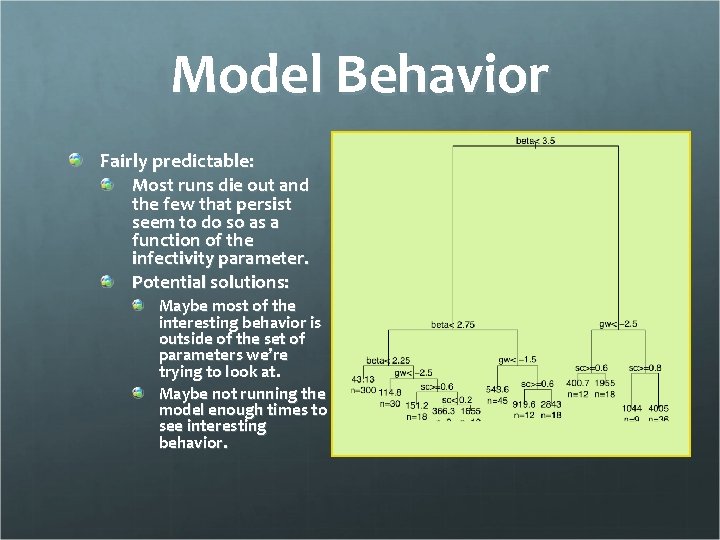

Model Behavior

Fairly predictable: most runs die out, and the few that persist seem to do so as a function of the infectivity parameter. Potential explanations:

- Maybe most of the interesting behavior lies outside the set of parameters we’re looking at.
- Maybe we are not running the model enough times to see interesting behavior.

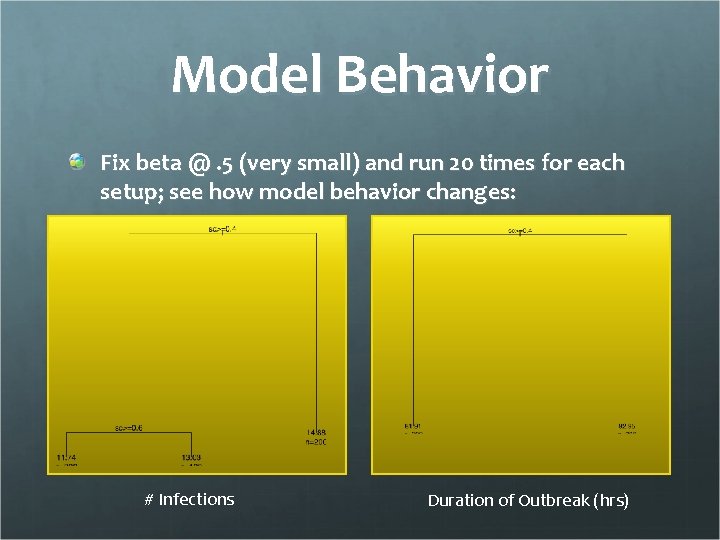

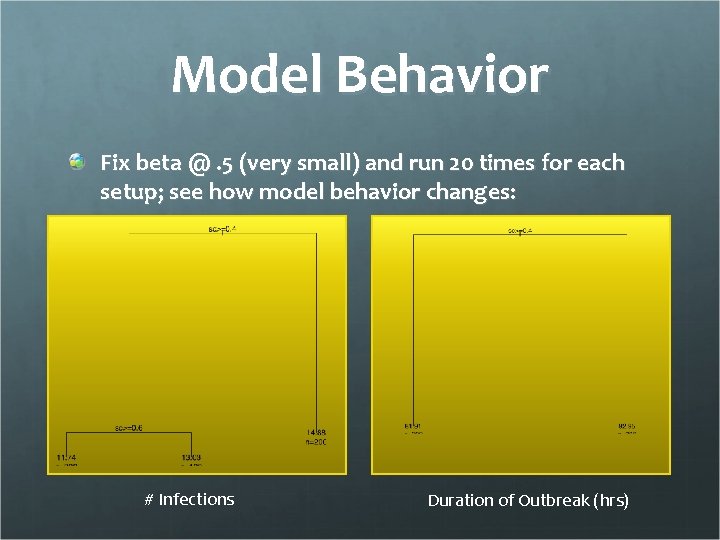

Model Behavior

Fix beta at 0.5 (very small), run 20 times for each setup, and see how model behavior changes. Panels: # infections; duration of outbreak (hrs).

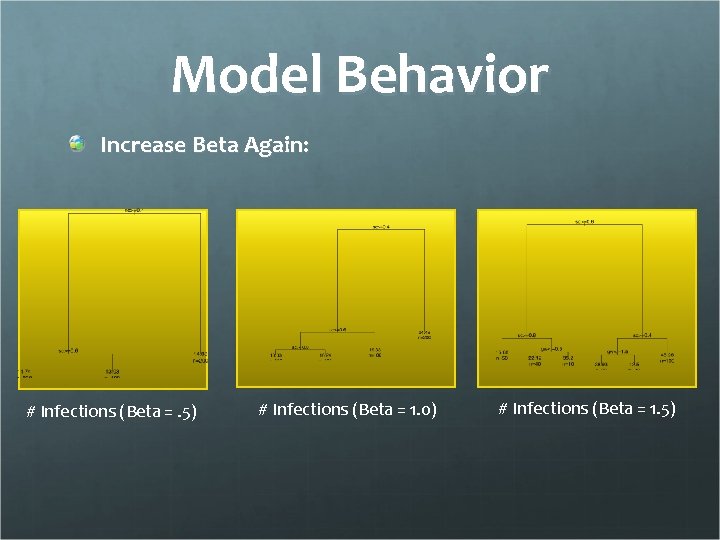

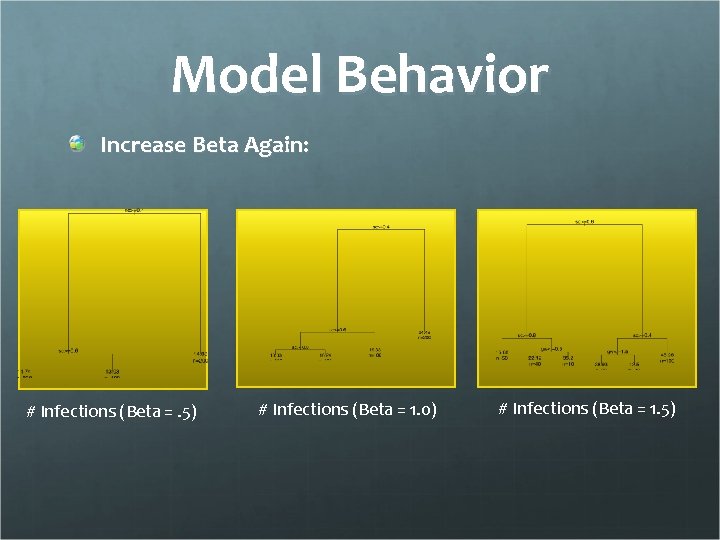

Model Behavior

Increase beta again. Panels: # infections (beta = 0.5); # infections (beta = 1.0); # infections (beta = 1.5).

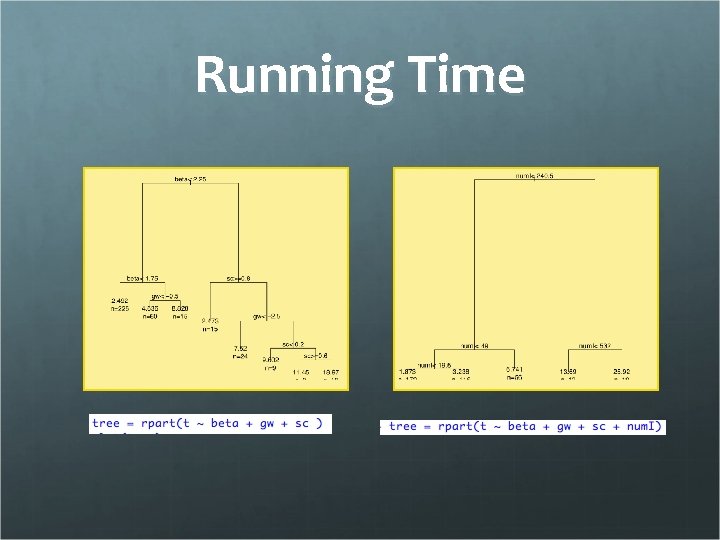

Running Time

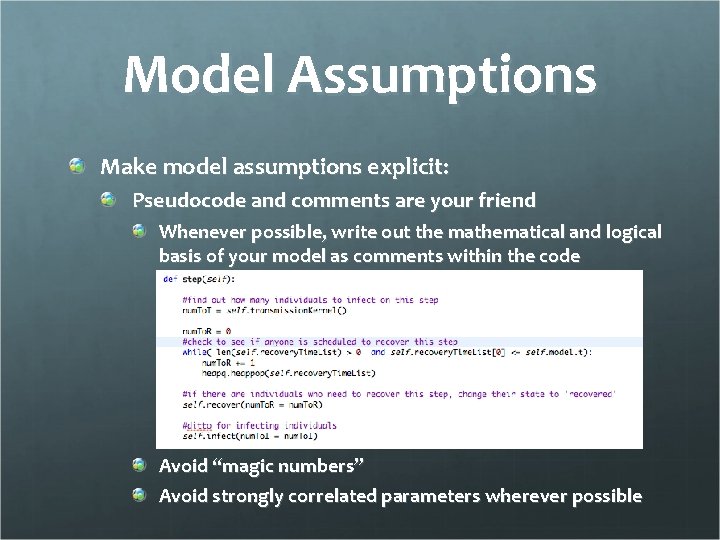

Model Assumptions

Make model assumptions explicit:

- Pseudocode and comments are your friends. Whenever possible, write out the mathematical and logical basis of your model as comments within the code.
- Avoid “magic numbers” (unexplained literal constants buried in the code).
- Avoid strongly correlated parameters wherever possible.

Simulation Strategy

PLANNING! Simulation can be costly in terms of effort and time, so an explicit strategy can save you hours of your life.

- It’s never too early to do batch runs.
- Start small: a small number of runs over a coarse-grained set of parameters.
- Explore areas of interest in greater depth.

DATA! Computational models generate a lot of output. How are you going to preserve the work you did in earlier simulations?
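The "start small, then explore areas of interest" strategy can be sketched as a coarse grid sweep followed by finer sweeps around the best coarse point. The objective here, a hypothetical `distance_to_target` with a known minimum at 1.37, stands in for running the model and comparing its output to a target; a real model would be stochastic, so each grid point would need replicate runs.

```python
def distance_to_target(beta):
    """Hypothetical stand-in for 'run the model at beta and score the output'."""
    return (beta - 1.37) ** 2


def grid(lo, hi, n):
    """n evenly spaced points from lo to hi inclusive."""
    step = (hi - lo) / (n - 1)
    return [lo + k * step for k in range(n)]


def coarse_to_fine(f, lo, hi, n=5, rounds=3):
    """Sweep a coarse grid, then re-sweep a narrower window around the best point."""
    best = min(grid(lo, hi, n), key=f)
    width = (hi - lo) / (n - 1)  # spacing of the current grid
    for _ in range(rounds):
        lo, hi = max(lo, best - width), min(hi, best + width)
        best = min(grid(lo, hi, n), key=f)
        width = (hi - lo) / (n - 1)
    return best


print(coarse_to_fine(distance_to_target, 0.5, 2.5))  # 1.375
```

Each round costs only `n` evaluations, so three refinements (20 evaluations in total) reach a resolution that a single uniform grid would need dozens of points to match.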

Getting Data Into R

- Output a comma-separated values (CSV) file from NetLogo.
- Use R’s `read.csv` command to bring the data in: `read.csv('/Users/jzelner/Desktop/testData.csv')` on Mac/Linux, or `read.csv('c:/Documents/testData.csv')` on a PC.
- Download and install the rpart package with `install.packages('rpart')`, load it with `library(rpart)`, and see its usage with `help(rpart)`.
- You can also use built-in functions such as `hist()` and `plot(x, y)`.

Docking: SIR Model Example

One model describes the rate of change in the number of infections when everyone has contact with everyone else; the other expresses the same mechanism in a model with spatial structure and local contacts.
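Docking can be illustrated with a minimal sketch that implements one mechanism two ways and checks that they agree. Here the two versions are a deterministic mass-action SIR model (dS/dt = -βSI/N, dI/dt = βSI/N - γI), integrated with a simple Euler scheme, and a stochastic individual-based version in which everyone can contact everyone else. This is the simplest docking case: both versions are well mixed, unlike the spatial model on the slide. The equations, β = 0.3, γ = 0.1, and N = 1000 are illustrative assumptions. With R0 = β/γ = 3, runs that take off should end with roughly 94% of the population infected, and about γ/β = 1/3 of stochastic runs should fizzle early.

```python
import random


def ode_final_size(beta, gamma, n, i0=1.0, dt=0.01, t_max=300.0):
    """Euler-integrate the mass-action SIR ODE; return the fraction ever infected.

    dS/dt = -beta*S*I/N,  dI/dt = beta*S*I/N - gamma*I
    """
    s, i = n - i0, i0
    for _ in range(int(t_max / dt)):
        new_inf = beta * s * i / n * dt
        new_rec = gamma * i * dt
        s, i = s - new_inf, i + new_inf - new_rec
    return (n - s) / n


def stochastic_final_size(beta, gamma, n, rng):
    """Well-mixed stochastic SIR via the Gillespie jump chain; return fraction ever infected.

    Event timing does not affect the final size, so only the order of events
    (infection vs. recovery, chosen in proportion to their rates) is simulated.
    """
    s, i = n - 1, 1
    while i > 0:
        infect_rate = beta * s * i / n
        recover_rate = gamma * i
        if rng.random() < infect_rate / (infect_rate + recover_rate):
            s, i = s - 1, i + 1
        else:
            i -= 1
    return (n - s) / n


def dock(beta=0.3, gamma=0.1, n=1000, runs=200, seed=42):
    rng = random.Random(seed)
    ode = ode_final_size(beta, gamma, n)
    sizes = [stochastic_final_size(beta, gamma, n, rng) for _ in range(runs)]
    # Compare only runs that take off; the rest die out while cases are few.
    major = [z for z in sizes if z > 0.1]
    return ode, sum(major) / len(major), 1 - len(major) / runs


ode, stoch, p_minor = dock()
print(f"ODE final size: {ode:.3f}")
print(f"stochastic final size (major outbreaks): {stoch:.3f}")
print(f"share of runs that fizzle: {p_minor:.2f}")
```

If the two implementations disagree beyond sampling noise, either one of them has a bug or they do not actually encode the same mechanism, which is exactly what docking is meant to reveal.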

Active Nonlinear Tests (ANT)

A framework for understanding the behavior and robustness of simulation models, outlined by Miller.

- Let $M_h(p)$ give the implication of the model for some hypothesis $h$ under assumptions $p$.
- Let $\hat{p}$ represent the original model assumptions.
- Let $O(M_h(p))$ represent some objective function that describes the model’s performance with respect to $h$.
- Use an optimization algorithm to maximize the objective function over all $p \in P(\hat{p})$, where $P(\hat{p})$ is the allowable set of perturbations to the model: $\max_{p \in P(\hat{p})} O(M_h(p))$.
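A minimal sketch of an ANT-style search, under stated assumptions. The model is a hypothetical deterministic function `model_output(p)` of two parameters. The objective measures how far a perturbed model’s implication moves from the original one at the baseline assumptions, so maximizing it hunts for the perturbation that most strains the hypothesis. The allowable set of perturbations is a box of ±20% around each baseline value. Simple stochastic hill climbing stands in for whatever optimizer one prefers; none of these names come from Miller’s framework itself.

```python
import random


def model_output(p):
    """Hypothetical model M_h(p): returns a scalar implication for hypothesis h."""
    a, b = p
    return a * b - 0.5 * a ** 2


def objective(p, p_hat):
    """O(M_h(p)): how far the perturbed model's implication moves from the original."""
    return abs(model_output(p) - model_output(p_hat))


def in_allowed_set(p, p_hat, frac=0.2):
    """P(p_hat): each parameter may move at most `frac` (relative) from its baseline."""
    return all(abs(x - x0) <= frac * abs(x0) for x, x0 in zip(p, p_hat))


def ant_search(p_hat, iters=5000, step=0.02, seed=0):
    """Stochastic hill climbing: keep any allowed perturbation that raises the objective."""
    rng = random.Random(seed)
    best, best_val = list(p_hat), objective(p_hat, p_hat)
    for _ in range(iters):
        cand = [x + rng.gauss(0, step) for x in best]
        if in_allowed_set(cand, p_hat):
            val = objective(cand, p_hat)
            if val > best_val:
                best, best_val = cand, val
    return best, best_val


p_hat = [1.0, 2.0]
worst_case, departure = ant_search(p_hat)
print(worst_case, departure)
```

The returned perturbation marks where, within the allowed set, the model’s implication is least robust. Hill climbing can stop at a local maximum, so restarting from several seeds or using a global optimizer is a sensible refinement.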

Objective Functions

For the segregation and Shigellosis models:

- Using known model parameters, find a parameter range that produces the behavior we’re looking for: a first peak inside a segregated neighborhood, followed by a traveling wave out into the rest of the city.
- Search for changes to parameter values and model components that break this pattern.

What other objective functions could we use? Are there less technical ways of doing this?
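One candidate objective for the "first peak, then traveling wave" pattern, offered as a hypothetical sketch. It finds each neighborhood’s peak time, then scores the fraction of neighborhood pairs whose peak order agrees with their distance order from the seed neighborhood, so 1.0 means a perfect outward wave. The input format (a dict of infection time series per neighborhood, plus distances from the seed) and the toy series are assumptions.

```python
from itertools import combinations


def peak_time(series):
    """Index of the first maximum in an infection time series."""
    return max(range(len(series)), key=lambda t: series[t])


def wave_score(series_by_hood, dist_from_seed):
    """Fraction of neighborhood pairs in which the one nearer the seed peaks first.

    Pairs at equal distance, or that peak at the same time, are skipped.
    """
    peaks = {h: peak_time(s) for h, s in series_by_hood.items()}
    agree = total = 0
    for a, b in combinations(peaks, 2):
        dd = dist_from_seed[a] - dist_from_seed[b]
        dp = peaks[a] - peaks[b]
        if dd == 0 or dp == 0:
            continue
        total += 1
        agree += (dd > 0) == (dp > 0)
    return agree / total if total else 0.0


series = {
    "seed": [1, 5, 9, 4, 1, 0, 0],
    "near": [0, 1, 3, 8, 5, 1, 0],
    "far": [0, 0, 0, 2, 6, 9, 3],
}
dist = {"seed": 0, "near": 1, "far": 2}
print(wave_score(series, dist))  # 1.0: peaks at t = 2, 3, 5 move outward
```

In an ANT-style search, minimizing this score over allowed perturbations would look for the changes that break the wave.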

Scripting Tools

Data processing/analysis: Python

- A scripting language that is easy to learn.
- Very good at processing text files and retrieving data from the internet and existing databases.
- Runs interactively and in batch mode, similar to R or Matlab; interpreted instead of compiled.
- Lots of existing packages (SciPy, NumPy, Matplotlib) for scientific computing.

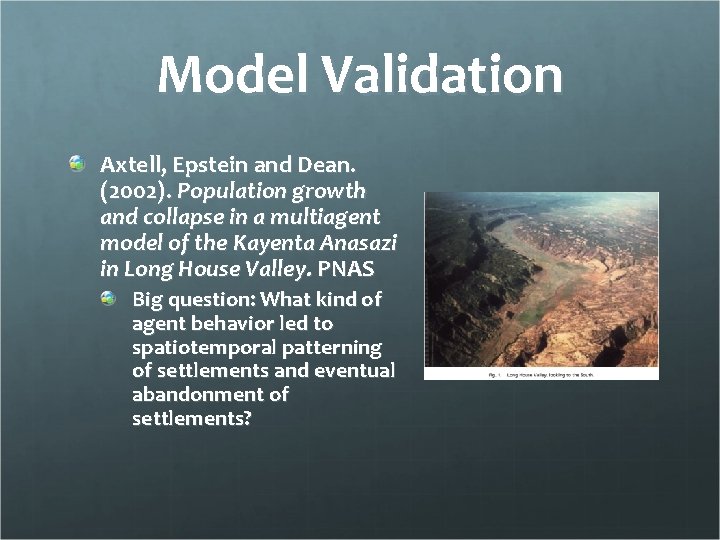

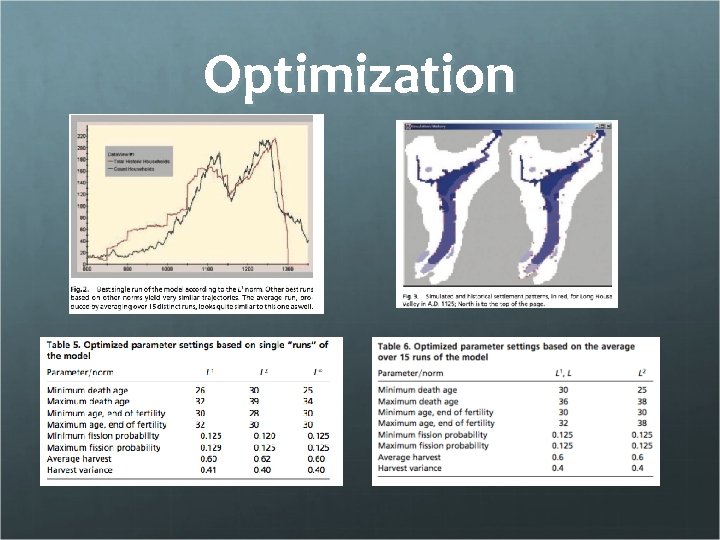

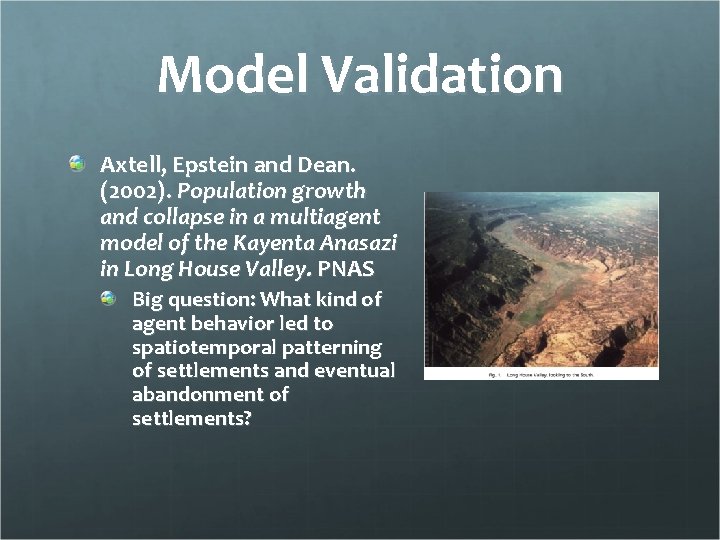

Model Validation

Axtell, Epstein, Dean, et al. (2002). Population growth and collapse in a multiagent model of the Kayenta Anasazi in Long House Valley. PNAS.

Big question: What kind of agent behavior led to the spatiotemporal patterning of settlements and their eventual abandonment?

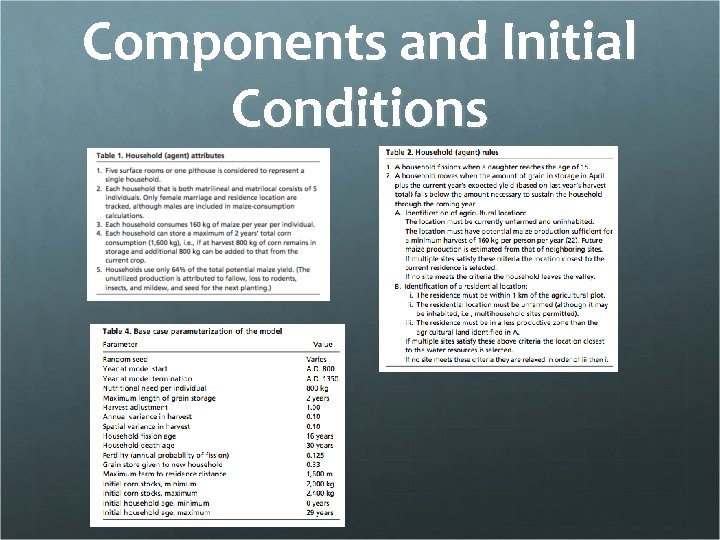

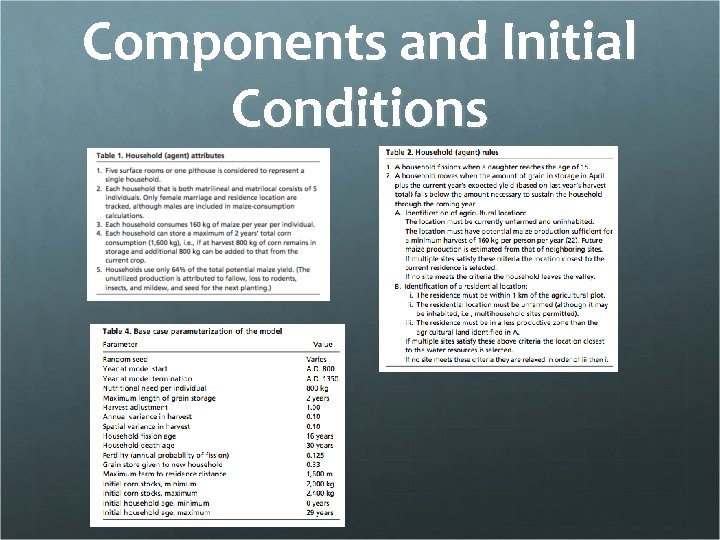

Components and Initial Conditions

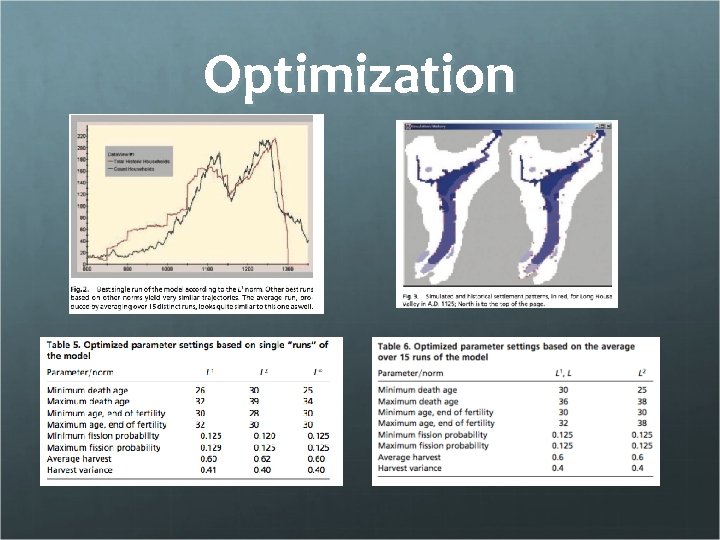

Optimization

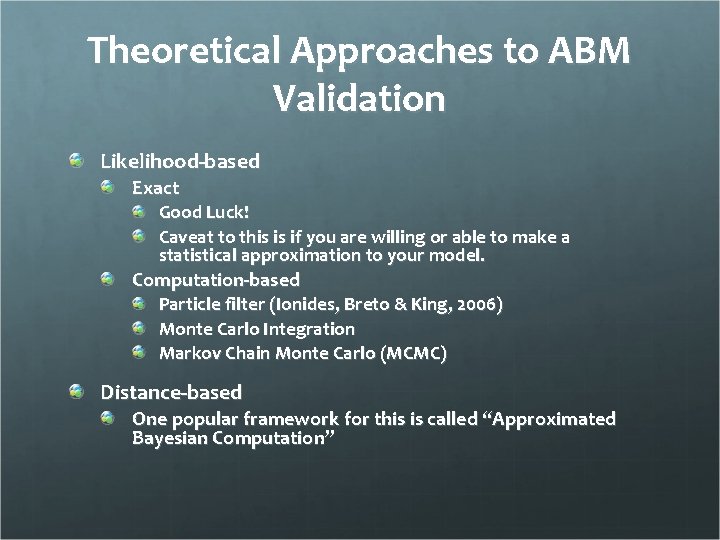

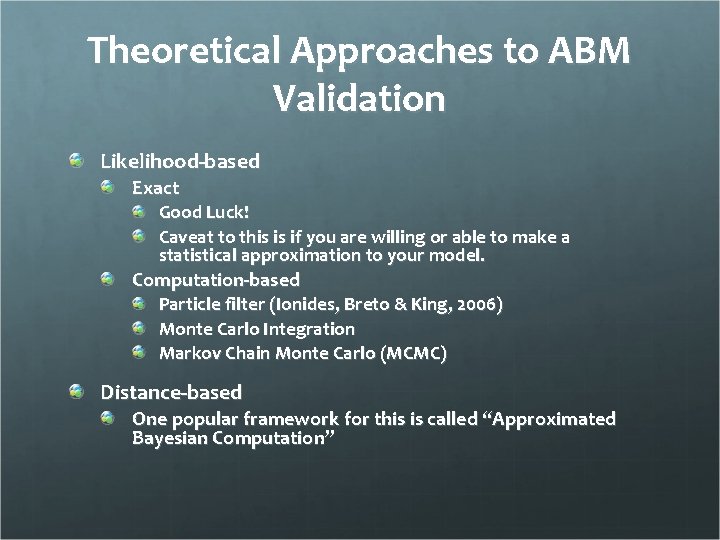

Theoretical Approaches to ABM Validation

- Likelihood-based
  - Exact: Good luck! The caveat is if you are willing or able to make a statistical approximation to your model.
  - Computation-based: particle filter (Ionides, Bretó & King, 2006); Monte Carlo integration; Markov chain Monte Carlo (MCMC).
- Distance-based: One popular framework for this is called “Approximate Bayesian Computation” (ABC).

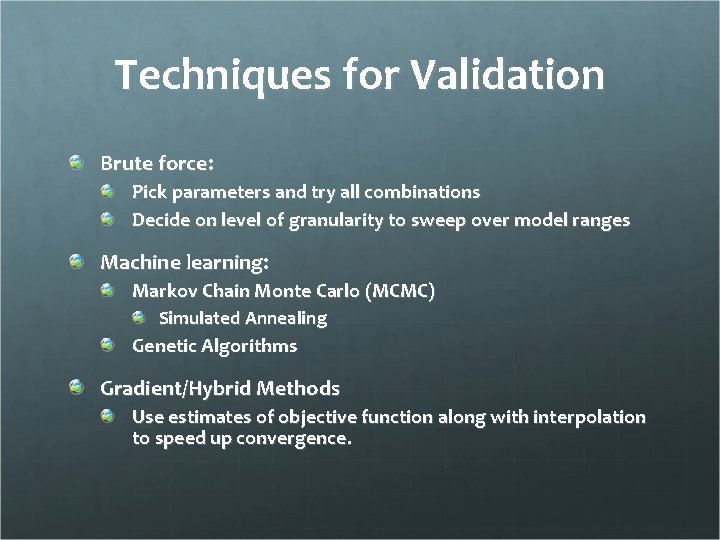

Techniques for Validation

- Brute force: Pick parameters and try all combinations. Decide on the level of granularity at which to sweep over model ranges.
- Machine learning: Markov chain Monte Carlo (MCMC), simulated annealing, genetic algorithms.
- Gradient/hybrid methods: Use estimates of the objective function along with interpolation to speed up convergence.
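As a sketch of one listed option, here is simulated annealing over a single parameter, minimizing a distance between model output and data. The `distance` function is a hypothetical deterministic stand-in with a global minimum at x = 2 and local minima near x = 1 and x = 3. The geometric cooling schedule, starting temperature, and step size are illustrative assumptions.

```python
import math
import random


def distance(x):
    """Hypothetical bumpy objective: global minimum 0 at x = 2, local minima near 1 and 3."""
    return (x - 2) ** 2 + 0.5 * (1 - math.cos(6 * (x - 2)))


def anneal(f, x0, lo, hi, iters=20000, step=0.3, t0=2.0, seed=0):
    """Minimize f on [lo, hi] by simulated annealing with geometric cooling."""
    rng = random.Random(seed)
    x, fx = x0, f(x0)
    best, f_best = x, fx
    cooling = (1e-3 / t0) ** (1 / iters)  # temperature falls from t0 to about 1e-3
    temp = t0
    for _ in range(iters):
        cand = min(hi, max(lo, x + rng.gauss(0, step)))  # clip proposals to the range
        f_cand = f(cand)
        # Always accept improvements; accept worse moves with prob exp(-delta / temp).
        if f_cand <= fx or rng.random() < math.exp(-(f_cand - fx) / temp):
            x, fx = cand, f_cand
            if fx < f_best:
                best, f_best = x, fx
        temp *= cooling
    return best, f_best


best, f_best = anneal(distance, x0=4.5, lo=0.0, hi=5.0)
print(best, f_best)
```

Early on, the high temperature lets the search climb out of the local minima near 1 and 3; as the temperature falls it behaves more like pure descent. With a stochastic model, `f` would be an average over replicate runs.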

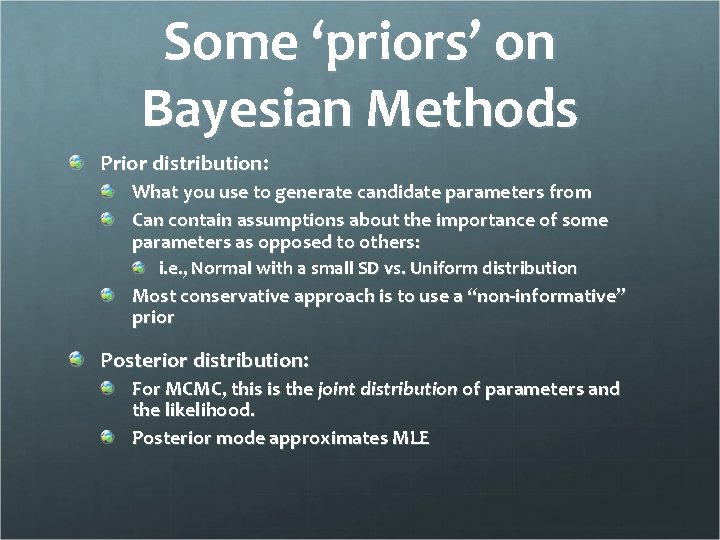

Some ‘priors’ on Bayesian Methods

- Prior distribution: what you use to generate candidate parameters. It can encode assumptions about the importance of some parameters relative to others, e.g., a Normal with a small SD vs. a Uniform distribution. The most conservative approach is to use a “non-informative” prior.
- Posterior distribution: the distribution of the parameters given the data, proportional to the likelihood times the prior; MCMC draws samples from it. With a non-informative (flat) prior, the posterior mode approximates the MLE.
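The link between prior, likelihood, and posterior is Bayes’ rule. In the notation used for MCMC (data D, model M(p), prior π(p)), and assuming a flat prior over the region being searched, the posterior mode and the maximum-likelihood estimate coincide:

```latex
\pi(p \mid D) \;\propto\; L(D \mid M(p))\,\pi(p),
\qquad
\pi(p) = \text{const} \;\Longrightarrow\;
\arg\max_p \pi(p \mid D) \;=\; \arg\max_p L(D \mid M(p)).
```

With an informative prior the two estimates can differ, and the prior pulls the posterior mode toward regions it favors.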

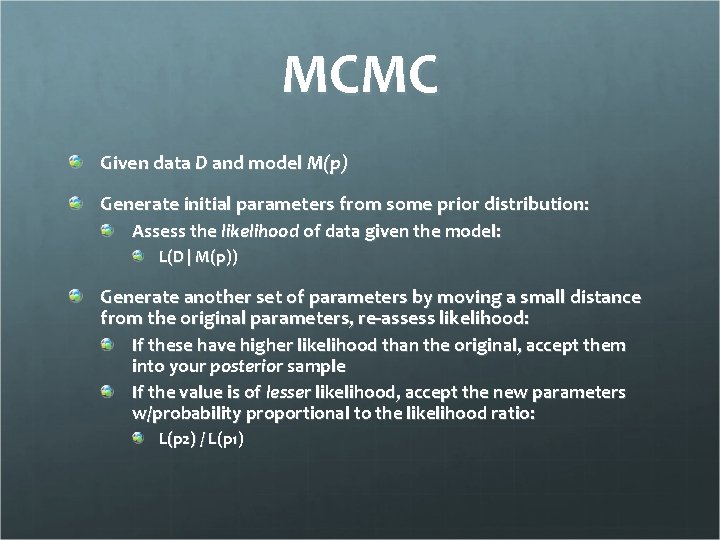

MCMC

Given data D and model M(p):

- Generate initial parameters p1 from some prior distribution and assess the likelihood of the data given the model, L(D | M(p1)).
- Generate another set of parameters p2 by moving a small distance from p1, and re-assess the likelihood.
- If p2 has higher likelihood than p1, accept it into your posterior sample.
- If p2 has lower likelihood, accept it with probability equal to the likelihood ratio L(D | M(p2)) / L(D | M(p1)); otherwise keep p1 and record it in the sample again.
- Repeat from the current parameters.

This is the Metropolis algorithm with a symmetric proposal and a flat prior; with an informative prior, the acceptance ratio uses likelihood × prior.
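A minimal sketch of these steps (random-walk Metropolis) on a toy problem. Assumptions: the "model" is a binomial process with unknown infection probability p, the data are k = 30 infections out of n = 100 (made-up numbers), and the prior is flat on (0, 1), so the acceptance ratio reduces to the likelihood ratio. Log-likelihoods avoid numerical underflow.

```python
import math
import random


def log_likelihood(p, k=30, n=100):
    """log L(D | M(p)) for k successes in n binomial trials (constant term dropped)."""
    if not 0.0 < p < 1.0:
        return -math.inf  # zero likelihood outside the flat prior's support
    return k * math.log(p) + (n - k) * math.log(1.0 - p)


def metropolis(n_iter=20000, step=0.05, burn_in=2000, seed=0):
    rng = random.Random(seed)
    p = rng.random()  # initial draw from the Uniform(0, 1) prior
    ll = log_likelihood(p)
    samples = []
    for it in range(n_iter):
        p_new = p + rng.gauss(0.0, step)  # symmetric random-walk proposal
        ll_new = log_likelihood(p_new)
        # Accept with probability min(1, L(p_new) / L(p)); 1 - random() lies in (0, 1].
        if math.log(1.0 - rng.random()) < ll_new - ll:
            p, ll = p_new, ll_new
        if it >= burn_in:
            samples.append(p)  # a rejected move records the current p again
    return samples


samples = metropolis()
print("posterior mean:", sum(samples) / len(samples))
# Under a flat prior the exact posterior is Beta(31, 71), with mean 31/102 (about 0.304).
```

Because this toy posterior is known exactly, it is a useful check that the sampler is coded correctly before pointing it at a simulation model whose likelihood must be approximated.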

MCMC Using Approximate Bayesian Computation (ABC)

- Generate random parameters, p, from the prior distribution (after the first step, by jumping from the current parameters).
- Run the model once at p and measure the distance of its output from D.
- If the model run is within some tolerance of the data, accept p; otherwise keep the last parameters and jump to new ones from there.

You can either have the MCMC sampler draw samples directly from your model, or import a large run into R and sample from that dataset. In the second case, be sure to constrain the sampler to parameter values you have already run, which requires thorough parameter sweeps.
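A minimal sketch of ABC within MCMC, following the steps above. Assumptions: a toy stochastic "model" that infects each of n = 100 individuals independently with probability p, observed data D = 30 infections (made up), distance |simulated - D|, tolerance 2, a flat prior on (0, 1), and a symmetric random-walk proposal. With a flat prior and symmetric proposal, a proposal inside (0, 1) is accepted exactly when one model run there lands within tolerance.

```python
import random


def simulate(p, rng, n=100):
    """Hypothetical stochastic model: number infected out of n at infection probability p."""
    return sum(1 for _ in range(n) if rng.random() < p)


def abc_mcmc(observed=30, tol=2, n_iter=10000, step=0.05, burn_in=1000, seed=0):
    rng = random.Random(seed)
    # Start from a prior draw whose simulated output is within tolerance.
    p = rng.random()
    while abs(simulate(p, rng) - observed) > tol:
        p = rng.random()
    samples = []
    for it in range(n_iter):
        p_new = p + rng.gauss(0.0, step)
        # Flat prior + symmetric proposal: accept iff p_new is in (0, 1)
        # and a single model run at p_new lands within tol of the data.
        if 0.0 < p_new < 1.0 and abs(simulate(p_new, rng) - observed) <= tol:
            p = p_new
        if it >= burn_in:
            samples.append(p)  # a rejected move records the current p again
    return samples


samples = abc_mcmc()
print("approximate posterior mean:", sum(samples) / len(samples))
```

No likelihood is ever computed: the model only has to be run, which is what makes this approach usable for agent-based models. A smaller tolerance gives a closer approximation to the true posterior at the cost of more rejected runs.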

Another approach

Another, less technical way to get similar results is to write a program in R, Stata, etc., that looks through your model output and records the parameter values whose output falls inside your prespecified tolerance. You can do this in a multidimensional way for several parameters, or look for the value of a single parameter that optimizes fit net of all other parameters.
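A minimal sketch of this approach, which amounts to ABC rejection applied to stored sweep output. The row format (a dict of parameter columns plus an `infected` output column), the toy rows, and the tolerance are hypothetical.

```python
def within_tolerance(rows, observed, tol, output_key="infected"):
    """Keep rows whose model output lies within tol of the observed value."""
    return [r for r in rows if abs(r[output_key] - observed) <= tol]


def accepted_values(rows, param):
    """Values of one parameter across the accepted runs (one entry per run)."""
    return [r[param] for r in rows]


sweep = [
    {"beta": 0.5, "sc": 0.1, "infected": 4},
    {"beta": 1.0, "sc": 0.1, "infected": 28},
    {"beta": 1.0, "sc": 0.5, "infected": 33},
    {"beta": 1.5, "sc": 0.5, "infected": 71},
    {"beta": 1.0, "sc": 0.9, "infected": 30},
]
kept = within_tolerance(sweep, observed=30, tol=3)
print(accepted_values(kept, "beta"))  # [1.0, 1.0, 1.0]
print(accepted_values(kept, "sc"))    # [0.1, 0.5, 0.9]
```

Reading the accepted values one parameter at a time shows which parameters the data pin down (here beta) and which they leave unconstrained (here sc).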