Model structure differences influence performance more than number of parameters: Findings from a 36-model, 559-catchment comparison study

Wouter Knoben (1), Jim Freer (2), Ross Woods (1)
(1) Department of Civil Engineering; (2) School of Geographical Sciences, University of Bristol

Model structures should represent the catchment they are applied to, but choosing the right model for the right place is not easy.
[Figure labels: Dominant process(es)? Process inclusion?]

Findings
1. It’s hard to choose the ‘best’ model based on efficiency values, because many models get similar values
2. There is no evidence of increased ‘overfitting’ of models with more parameters
3. Model performance correlates more with streamflow signatures than with catchment attributes
4. Model groups seem to exist, made up of models that share certain structural elements and have similar behaviour across the sample of catchments

Conceptual model structure uncertainty and the relation with catchment attributes
Wouter Knoben, Jim Freer, Ross Woods (w.j.m.knoben@bristol.ac.uk)
Sections: Method; Model structure uncertainty; Parameters vs performance; Catchment attributes; Summary and conclusions; References

Method [1/2]

CAMELS data; 559 basins. Meteorology and catchment attributes (Addor et al., 2017).
MARRMoT framework; 36 models. Conceptual models used in lumped fashion (Knoben et al., under review).
Calibration period: 1989-1998. Evaluation period: 1999-2009.

Method [2/2]

Model set-up:
1. CMA-ES calibration algorithm (Hansen et al., 2003)
2. Standardized parameter ranges across models (Knoben et al., under review)
3. Initial storages determined iteratively, by repeatedly running year 1 until storages converge (<1% change between years)
4. KGE objective function (Gupta et al., 2009)

Calibration period: 1989-1998. Evaluation period: 1999-2009.

Each model is calibrated 3 times per basin, using:
• Regular flows (KGE(Q); high flow focus)
• Inverted flows (KGE(1/Q); low flow focus)
• Average of both (½ [KGE(Q) + KGE(1/Q)])

Note that all results are shown for the evaluation period, unless explicitly stated otherwise.
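The three objective functions can be sketched as follows. This is a minimal illustration and not the code used in the study: the function names, the small `eps` added before inverting flows (to avoid division by zero on zero-flow days) and the convention of setting the correlation to 0 for a constant simulation are all assumptions made here. The KGE formula itself follows Gupta et al. (2009).

```python
import math


def kge(sim, obs):
    """Kling-Gupta efficiency: 1 - sqrt((r-1)^2 + (alpha-1)^2 + (beta-1)^2).

    Assumes obs has non-zero mean and non-zero variance.
    """
    n = len(obs)
    mu_s = sum(sim) / n
    mu_o = sum(obs) / n
    sd_s = math.sqrt(sum((s - mu_s) ** 2 for s in sim) / n)
    sd_o = math.sqrt(sum((o - mu_o) ** 2 for o in obs) / n)
    cov = sum((s - mu_s) * (o - mu_o) for s, o in zip(sim, obs)) / n
    # Assumption: correlation is taken as 0 when the simulation is constant.
    r = cov / (sd_s * sd_o) if sd_s > 0 else 0.0
    alpha = sd_s / sd_o  # variability ratio
    beta = mu_s / mu_o   # bias ratio
    return 1 - math.sqrt((r - 1) ** 2 + (alpha - 1) ** 2 + (beta - 1) ** 2)


def kge_inverse(sim, obs, eps=1e-6):
    """KGE on inverted flows (low flow focus); eps is a hypothetical offset."""
    return kge([1 / (s + eps) for s in sim], [1 / (o + eps) for o in obs])


def kge_combined(sim, obs):
    """Average of KGE(Q) and KGE(1/Q)."""
    return 0.5 * (kge(sim, obs) + kge_inverse(sim, obs))
```

With the constant-simulation convention above, simulating the observed mean flow gives r = 0, alpha = 0 and beta = 1, so KGE = 1 - √2, which is the mean flow benchmark value quoted on the slides.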

Model structure uncertainty [1/2]

How ‘good’ is the best model? Figure: maximum KGE(Q) value per catchment. In every catchment, at least 1 model outperforms the ‘mean flow’ benchmark (KGE = 1 - √2; equivalent to NSE = 0).

What is the range of KGE values? Figure: KGE(Q) difference between best and 32nd model. In many catchments, multiple models achieve similar KGE values. This makes it difficult to choose a ‘best’ model.
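The per-catchment quantities behind these two figures can be sketched as below. The input layout (a dictionary of model name to evaluation KGE(Q) for one catchment) and the function name are assumptions for illustration only.

```python
import math

# Mean flow benchmark quoted on the slide: KGE = 1 - sqrt(2).
MEAN_FLOW_KGE = 1 - math.sqrt(2)


def summarise_catchment(kge_by_model, nth=32):
    """Summarise model performance for one catchment.

    kge_by_model: hypothetical dict {model_name: KGE(Q) during evaluation}.
    nth: rank of the model compared against the best one (32 on the slide).
    """
    ranked = sorted(kge_by_model.values(), reverse=True)
    best = ranked[0]
    # Fall back to the worst model if fewer than `nth` models are available.
    nth_value = ranked[min(nth, len(ranked)) - 1]
    return {
        "best": best,
        "range_to_nth": best - nth_value,
        "n_above_benchmark": sum(v > MEAN_FLOW_KGE for v in ranked),
    }
```

A small `range_to_nth` means that most models perform almost as well as the best one, which is the situation in which picking a single ‘best’ structure is hardest.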

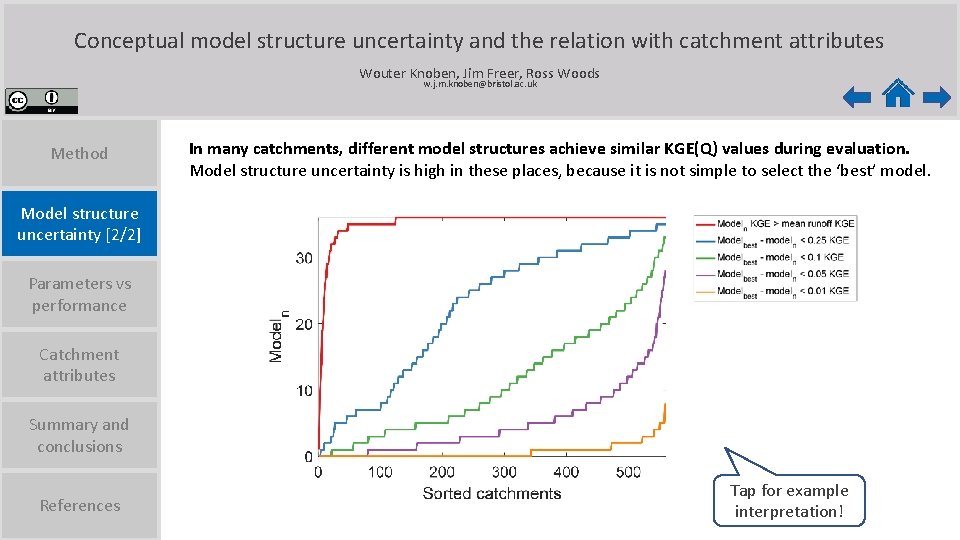

Model structure uncertainty [2/2]

In many catchments, different model structures achieve similar KGE(Q) values during evaluation. Model structure uncertainty is high in these places, because it is not simple to select the ‘best’ model.

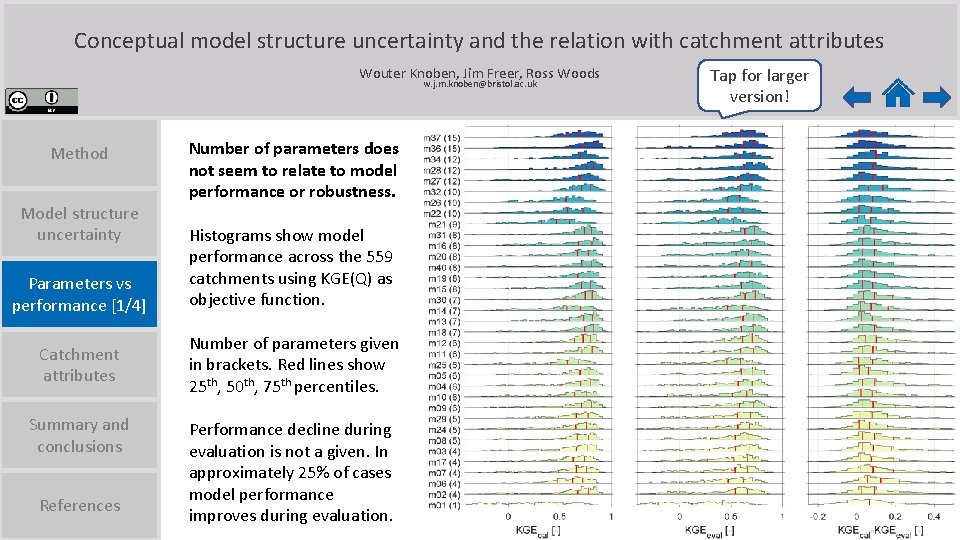

Parameters vs performance [1/4]

Number of parameters does not seem to relate to model performance or robustness. Histograms show model performance across the 559 catchments using KGE(Q) as objective function. Number of parameters given in brackets. Red lines show 25th, 50th, 75th percentiles.

Performance decline during evaluation is not a given. In approximately 25% of cases model performance improves during evaluation.

Parameters vs performance [2/4]

Mann-Kendall statistical tests indicate no significant relationship between number of parameters and median model performance. Within our sample of models and catchments:
1. Models with more parameters do not necessarily fit data better during calibration
2. Models with more parameters are not at increased risk of ‘overfitting’
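A Mann-Kendall test of this kind can be sketched in a few lines. The version below is a simplified illustration, not the authors' implementation: it uses the normal approximation without a correction for ties, and it assumes the median KGE values have already been ordered by number of parameters. The example numbers are made up.

```python
import math


def mann_kendall(values):
    """Two-sided Mann-Kendall trend test on an ordered sequence.

    Returns (S, Z, p). Uses the normal approximation and ignores ties,
    which is a simplifying assumption of this sketch.
    """
    n = len(values)
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = values[j] - values[i]
            s += (diff > 0) - (diff < 0)  # sign of the pairwise difference
    var_s = n * (n - 1) * (2 * n + 5) / 18
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)  # continuity correction
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return s, z, p


# Hypothetical (number of parameters, median KGE) pairs, one per model.
models = [(4, 0.61), (6, 0.58), (8, 0.63), (10, 0.60), (12, 0.62), (15, 0.59)]
medians_by_complexity = [kge for _, kge in sorted(models)]
s_stat, z_stat, p_value = mann_kendall(medians_by_complexity)
```

A large p-value means the test finds no monotonic trend in median performance as the number of parameters increases.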

Parameters vs performance [3/4]

Using relative model ranks shows that certain model structures are better suited for certain objectives, and that this has little to do with the number of parameters. Models are ranked per catchment, with rank 1 being the best model. Histograms are scaled individually.

Note that this figure only shows ranks using the KGE(Q) metric. Certain models are consistently ranked quite poorly. Rankings are different for the other two metrics (low and combined flows). In particular, m21, m22 and m26 rank much higher when used to simulate low flows, further showing that model structure strongly influences model capability.
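The ranking step can be illustrated as follows. The data layout (one dictionary of model name to KGE per catchment) and the function names are assumptions; ties are broken by sort order, which may differ from how the study handled them.

```python
from collections import Counter, defaultdict


def rank_models(kge_by_model):
    """Rank models within one catchment; rank 1 is the highest KGE."""
    ordered = sorted(kge_by_model, key=kge_by_model.get, reverse=True)
    return {model: rank for rank, model in enumerate(ordered, start=1)}


def rank_histograms(kge_table):
    """Count how often each model achieves each rank across catchments.

    kge_table: hypothetical dict {catchment_id: {model_name: KGE}}.
    """
    histograms = defaultdict(Counter)
    for scores in kge_table.values():
        for model, rank in rank_models(scores).items():
            histograms[model][rank] += 1
    return histograms
```

Repeating this with KGE(1/Q) or the combined metric as input would give the rankings for the low flow and combined flow objectives.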

Parameters vs performance [4/4]

However, there is no universally ‘best’ model for any objective function. Nearly every model is both one of the best choices in certain catchments, and one of the worst choices in others.

The figure shows for how many catchments each model is in the top/bottom 3 models. Blue is used for top 3 counts, red for bottom 3 counts, green is used to indicate zero counts.
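The top/bottom counts shown in the figure could be computed along these lines. This is a sketch under assumed inputs: `kge_table` is a hypothetical nested dictionary, and ties are resolved by sort order.

```python
from collections import Counter


def top_bottom_counts(kge_table, k=3):
    """Count, per model, the catchments where it is among the k best or k worst.

    kge_table: hypothetical dict {catchment_id: {model_name: KGE}}.
    Returns two Counters: (top_counts, bottom_counts).
    """
    top, bottom = Counter(), Counter()
    for scores in kge_table.values():
        ordered = sorted(scores, key=scores.get, reverse=True)
        top.update(ordered[:k])      # k best models in this catchment
        bottom.update(ordered[-k:])  # k worst models in this catchment
    return top, bottom
```

A model with non-zero entries in both counters is one of the best choices in some catchments and one of the worst in others, which is the pattern described above.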

Catchment attributes [1/2]

Spearman rank correlations between model ranks and CAMELS catchment attributes show that relative model performance relates most strongly to streamflow signatures, less so to physical characteristics. Model ranks are based on KGE(Q) during model evaluation. Lower ranks are assigned to the better models.

The plot includes only catchments where less than 10% of precipitation occurs as snow. When all catchments are used, the relation between fraction snowfall and models with and without snow modules is clear.

Attribute groups shown in the figure: vegetation, soil, streamflow, geology, climate.
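For reference, a Spearman rank correlation is a Pearson correlation computed on ranks. The sketch below shows one way to compute it for a single model and a single attribute; the function names and the average-rank treatment of ties are choices made for this illustration, and it assumes neither input is constant.

```python
import math


def average_ranks(values):
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank correlation between two equally long sequences."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / math.sqrt(vx * vy)
```

Here `x` would hold one attribute value per catchment and `y` the rank of one model in each of those catchments. Because rank 1 is best, a negative correlation means the model tends to do relatively better where the attribute value is higher.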

Catchment attributes [2/2]

Models (x-axis) are grouped by similarity of correlations, which shows that certain model groups exist. Analysis of model documentation shows that structural similarities exist within these groups, suggesting that certain model elements result in (lack of) suitability for certain flow regimes. However, our hypotheses about this relation were made as a result of this figure and are thus not currently proven.

Attribute groups shown in the figure: vegetation, soil, streamflow, geology, climate.

Summary and conclusions [1/2]

559 catchments, 36 lumped conceptual models, 3 objective functions

Findings
1. Different model structures achieve similar objective function values, making it hard to choose the ‘best’ model by only looking at efficiency metrics.
2. The number of model parameters does not relate to performance differences between models; there is no evidence of overfitting within our sample of models and catchments.
3. Relative model performance correlates more with streamflow signatures than with catchment attributes; models are more suited for certain flow regimes, but the relation with catchment form is elusive.
4. Results suggest that certain model groups exist in the total model space, and that specific model elements might dictate a model’s suitability for certain flow regimes.

Summary and conclusions [2/2]

Implications (numbered to match the findings above)
1. Strict model evaluation must become the norm. KGE (NSE) values are not enough.
2. Parameter identifiability can be limited with our calibration procedure, but having more parameters does not seem inherently worse.
3. Understanding of the relation between catchment input, form and response is lacking. Which info and metrics do we need?
4. If true, this principle might allow (guidance for) custom-built models for specific purposes.

References

Addor, N., Newman, A. J., Mizukami, N. and Clark, M. P.: The CAMELS data set: catchment attributes and meteorology for large-sample studies, Hydrol. Earth Syst. Sci., 21, 5293–5313, doi:10.5194/hess-2017-169, 2017.

Gupta, H. V., Kling, H., Yilmaz, K. K. and Martinez, G. F.: Decomposition of the mean squared error and NSE performance criteria: Implications for improving hydrological modelling, Journal of Hydrology, 377(1-2), 80–91, doi:10.1016/j.jhydrol.2009.08.003, 2009.

Hansen, N., Müller, S. D. and Koumoutsakos, P.: Reducing the Time Complexity of the Derandomized Evolution Strategy with Covariance Matrix Adaptation (CMA-ES), Evolutionary Computation, 11(1), 1–18, doi:10.1162/106365603321828970, 2003.

Knoben, W. J. M., Freer, J. E., Fowler, K. J. A., Peel, M. C. and Woods, R. A.: Modular Assessment of Rainfall-Runoff Models Toolbox (MARRMoT) v1.0: an open-source, extendable framework providing implementations of 46 conceptual hydrologic models as continuous state-space formulations, Geosci. Model Dev. Discuss., 1–26, doi:10.5194/gmd-2018-332, under review.

Additional slides: Method

Supplement: CAMELS dataset (Addor et al., 2017)

• 671 catchments
• Daily time series of
  • Meteorological forcing
  • Priestley-Taylor PET estimates
  • Streamflow
• Variety of catchment-scale attributes
  • Climate [11 attributes]
  • Topography [4 attributes]
  • Land cover [8 attributes]
  • Soil [11 attributes]
  • Geology [7 attributes]
  • Streamflow [13 attributes]

We use 559 basins (blue), removing places where area estimates from different sources differ by more than 10% and places that fall outside the limits of a Budyko curve.
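One possible reading of these two screening rules is sketched below. The slide does not spell out the exact criteria, so everything here is an assumption: the relative area difference is taken with respect to the larger estimate, and the Budyko limits are interpreted as the water limit (long-term evaporation P - Q cannot be negative) and the energy limit (P - Q cannot exceed PET). All argument names are hypothetical.

```python
def keep_catchment(area_a, area_b, p_mean, pet_mean, q_mean, max_area_diff=0.10):
    """Return True if a catchment passes both (assumed) screening rules.

    area_a, area_b: catchment area estimates from two sources (same units).
    p_mean, pet_mean, q_mean: long-term mean precipitation, potential
    evapotranspiration and streamflow (same units, e.g. mm/yr).
    """
    # Rule 1 (assumed form): area estimates agree to within 10%.
    area_ok = abs(area_a - area_b) / max(area_a, area_b) <= max_area_diff

    # Rule 2 (assumed form): long-term water balance stays within Budyko limits.
    evaporation = p_mean - q_mean
    water_limit_ok = evaporation >= 0          # streamflow does not exceed precipitation
    energy_limit_ok = evaporation <= pet_mean  # evaporation does not exceed PET

    return area_ok and water_limit_ok and energy_limit_ok
```

Applying such a filter to the 671 CAMELS catchments would give a reduced sample; whether it reproduces the 559 basins used here depends on the exact criteria, which this sketch only approximates.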

Supplement: MARRMoT framework (Knoben et al., under review)

• 46 models (the figure marks the models used in this work)
• Implicit Euler approximation of model equations
• Smoothing of model equations to avoid discontinuities
• Simultaneous solving of all model states
• Standardized parameter ranges across all models
• Run at daily time step here, but other step sizes possible

Code: https://github.com/wknoben/MARRMoT
Documentation: https://doi.org/10.5194/gmd-2018-332
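To illustrate what an implicit Euler step with smoothed equations looks like, here is a toy single-store example. It is not MARRMoT code: the store, its fluxes, the logistic smoother and all names are hypothetical, and MARRMoT solves all stores of a model simultaneously rather than one store by bisection.

```python
import math


def logistic_smoother(storage, s_max, r=0.01):
    """Smooth 0-to-1 switch around storage == s_max (replaces a hard threshold)."""
    x = (storage - s_max) / (r * s_max)
    x = max(-50.0, min(50.0, x))  # clamp so exp() stays well behaved
    return 1.0 / (1.0 + math.exp(-x))


def fluxes(storage, precip, k, s_max):
    """Toy fluxes: smoothed overflow of incoming rain plus linear baseflow."""
    overflow = precip * logistic_smoother(storage, s_max)
    baseflow = k * storage
    return overflow, baseflow


def implicit_euler_step(s_old, precip, k, s_max, dt=1.0, tol=1e-9):
    """Solve S_new = S_old + dt * (P - overflow(S_new) - baseflow(S_new))."""

    def residual(s_new):
        overflow, baseflow = fluxes(s_new, precip, k, s_max)
        return s_new - s_old - dt * (precip - overflow - baseflow)

    # The residual increases with s_new and changes sign on this bracket,
    # so bisection is guaranteed to converge.
    lo, hi = 0.0, s_old + dt * precip
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if residual(mid) > 0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)
```

Because the fluxes are evaluated at the end-of-step storage, the store fills smoothly towards `s_max` instead of jumping across the threshold within a time step, which is the kind of discontinuity the smoothing is meant to avoid.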


Additional slides: Q1

Zoom: How ‘good’ is the best model? Maximum KGE(Q) value per catchment.

Zoom: What is the range of KGE values? KGE(Q) difference between best and 32nd model.

Explanation: This figure shows cumulative distribution functions (CDFs) of differences in model performance per catchment. For each model and each catchment, we calculate the KGE(Q) difference between that model and the best model for that catchment. The CDFs show the number of models per catchment that are within a certain distance, in KGE(Q) terms, from the best model.

The red line shows that in more than 400 catchments, all models outperform the mean flow benchmark (KGE = 1 - √2; NSE = 0).

The orange line shows how many models in each catchment have a KGE(Q) value during evaluation that is within 0.01 KGE of that of the best model for that catchment. In approximately 200 catchments, … this number lies between 1 and 7. Lines in different colours show different thresholds.
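The counting behind these CDFs can be sketched as follows. The function names and input layout are assumptions, and the count is taken to include the best model itself, so it is never below 1.

```python
def models_within(kge_by_model, threshold):
    """Number of models whose KGE is within `threshold` of the best model.

    kge_by_model: hypothetical dict {model_name: KGE(Q) during evaluation}
    for a single catchment. The best model is included in the count.
    """
    best = max(kge_by_model.values())
    return sum(best - value <= threshold for value in kge_by_model.values())


def empirical_cdf(counts):
    """Cumulative number of catchments with at most each observed count.

    counts: one `models_within` result per catchment.
    Returns a sorted list of (count, number_of_catchments) pairs.
    """
    return [(c, sum(x <= c for x in counts)) for c in sorted(set(counts))]
```

Calling `models_within` for every catchment with one threshold (for example 0.01) and passing the results to `empirical_cdf` gives one line of the figure; repeating this with other thresholds gives the other coloured lines.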

Additional slides: Q2


Additional slides: Q3

Zoom: Spearman rank correlation between model ranks and CAMELS catchment attributes. Includes all catchments. Note the strong correlation between model rank and Fraction P as snow (4th row from the bottom), for models that include a snow module. Model ranks are based on KGE(Q) during model evaluation. Lower ranks are assigned to the better models (rank 1 is good, rank 36 is bad).
[Colour legend: whether the model’s rank decreases or increases as the attribute value increases or decreases.]

Zoom: Spearman rank correlation between model ranks and CAMELS catchment attributes. Includes only catchments where <10% of annual precipitation occurs as snowfall. Note the lack of correlation between model rank and Fraction P as snow (4th row from the bottom). Model ranks are based on KGE(Q) during model evaluation. Lower ranks are assigned to the better models (rank 1 is good, rank 36 is bad).
[Colour legend: whether the model’s rank decreases or increases as the attribute value increases or decreases.]

Zoom: Note that this figure is not particularly helpful for understanding model structure similarities and differences. Better sources are:
Code: https://github.com/wknoben/MARRMoT
Documentation: https://doi.org/10.5194/gmd-2018-332
