Model Selection Agenda Myung Pitt Kim Olsson Wennerholm

- Slides: 36

Model Selection

Agenda • Myung, Pitt, & Kim • Olsson, Wennerholm, & Lyxzen

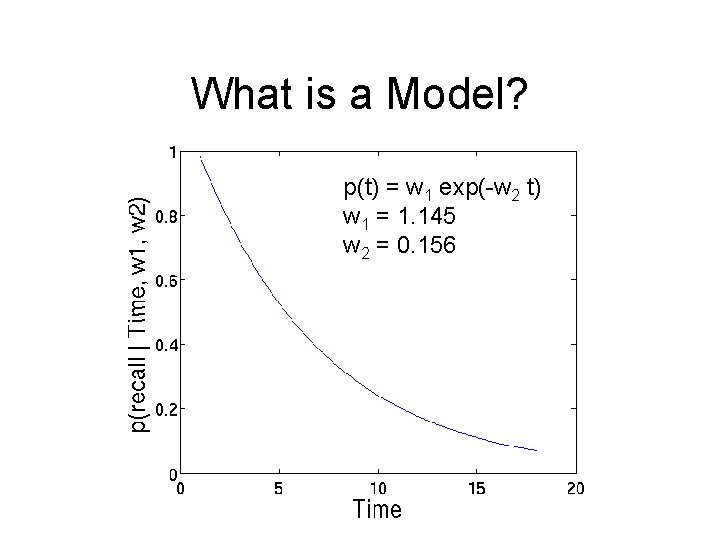

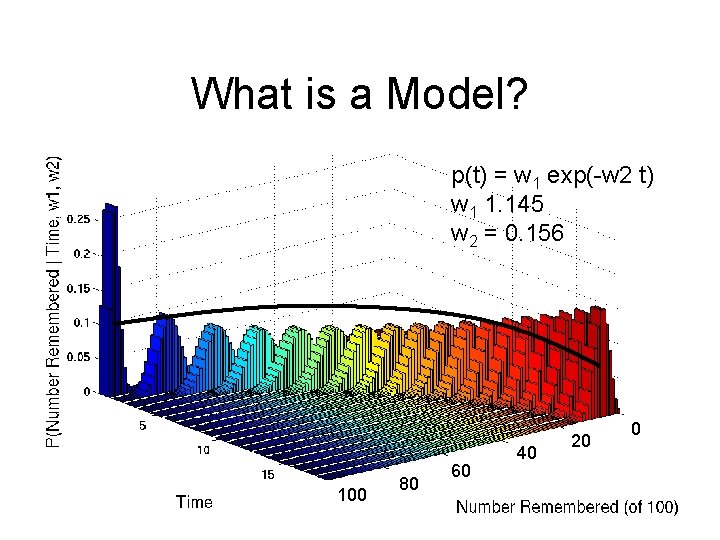

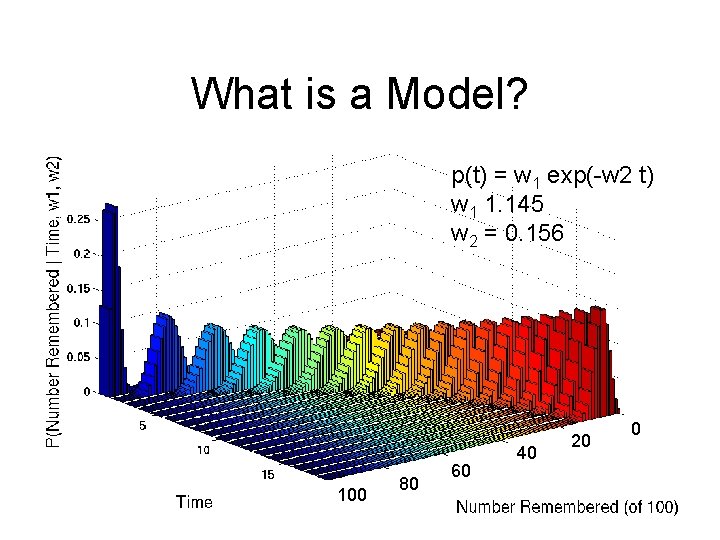

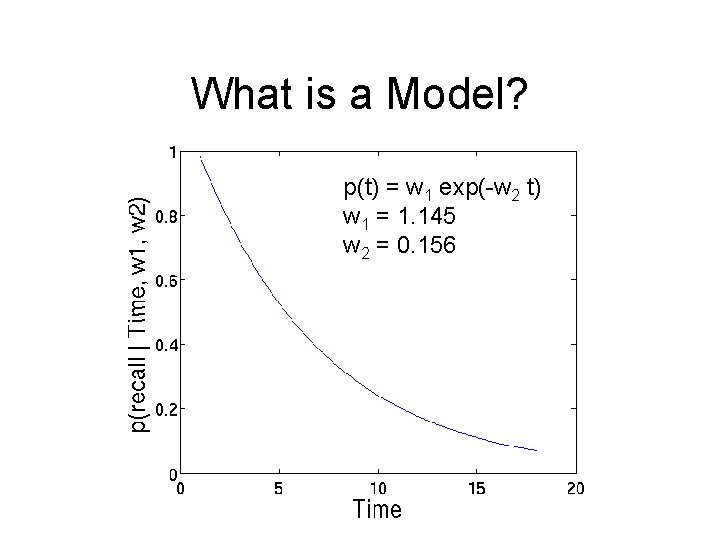

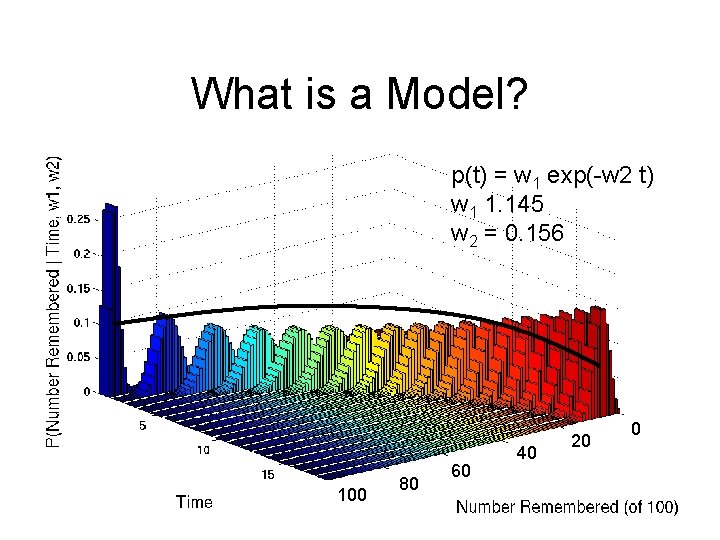

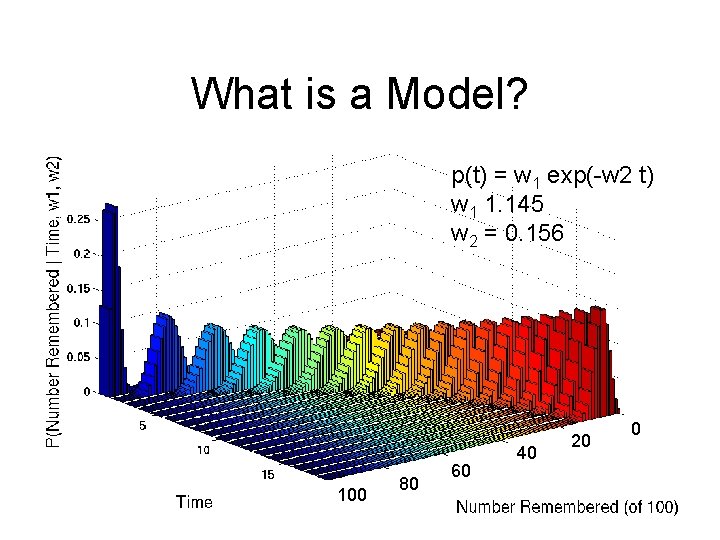

What is a Model? p(t) = w 1 exp(-w 2 t) w 1 = 1. 145 w 2 = 0. 156

What is a Model? p(t) = w 1 exp(-w 2 t) w 1 1. 145 w 2 = 0. 156 100 80 60 40 20 0

What is a Model? p(t) = w 1 exp(-w 2 t) w 1 1. 145 w 2 = 0. 156 100 80 60 40 20 0

What is a Model? • A model is a parametric family of probability distributions. – Parametric because the distributions depend on the parameters. – Distributions because they are stochastic models, not deterministic.

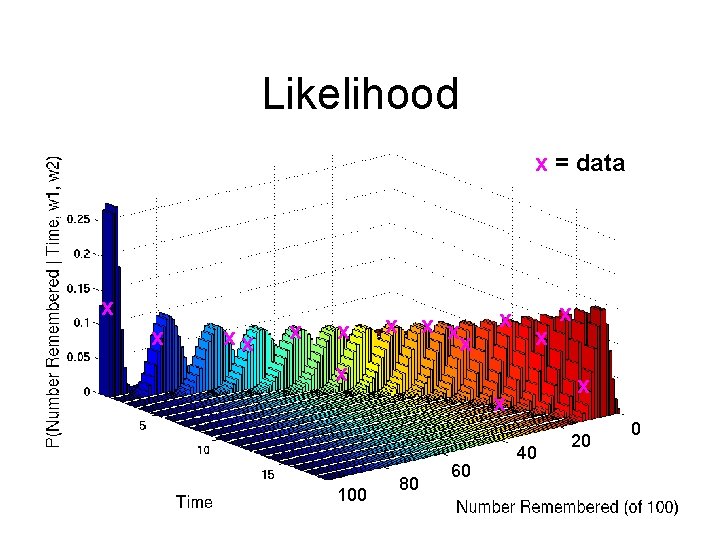

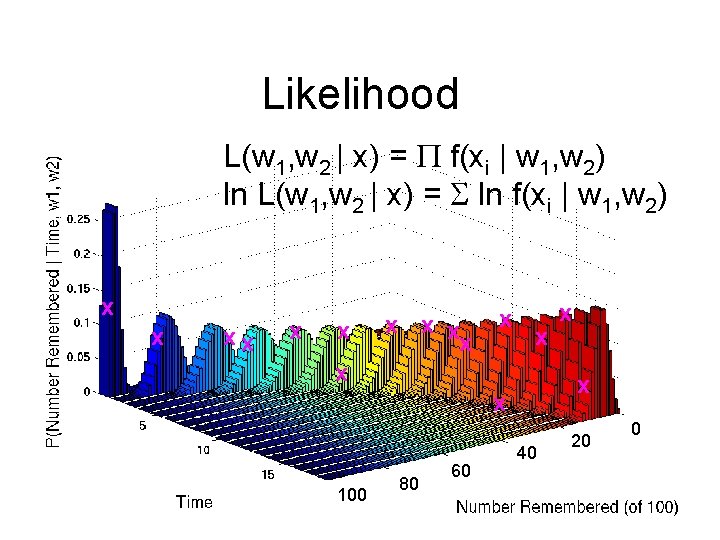

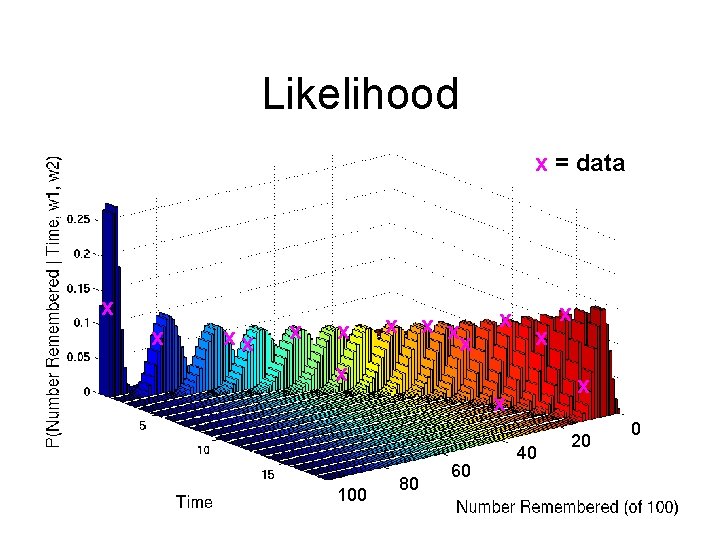

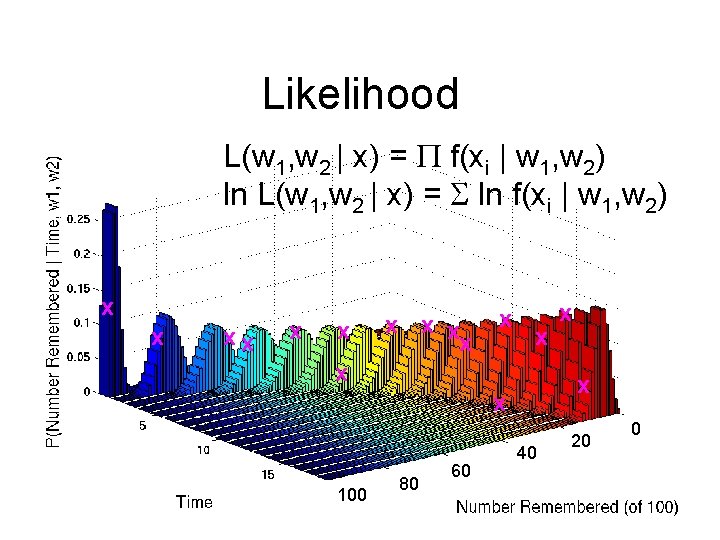

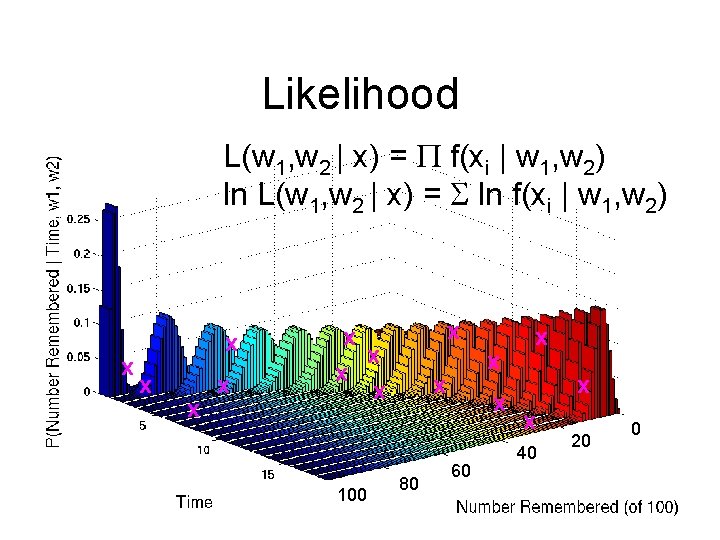

Likelihood x = data x x x x 100 80 60 x 40 20 0

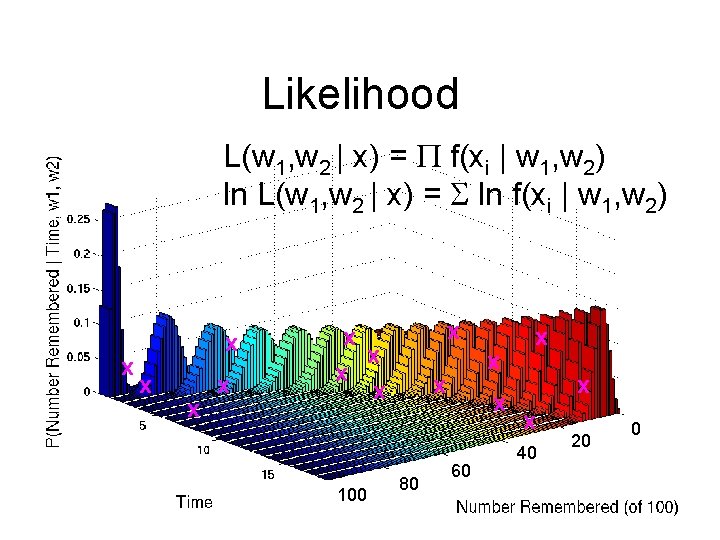

Likelihood L(w 1, w 2 | x) = f(xi | w 1, w 2) ln L(w 1, w 2 | x) = ln f(xi | w 1, w 2) x x x x 100 80 60 x 40 20 0

Likelihood L(w 1, w 2 | x) = f(xi | w 1, w 2) ln L(w 1, w 2 | x) = ln f(xi | w 1, w 2) x x x x x 100 x x 80 x 60 x x x 40 20 0

Likelihood • The w that maximize ln L(w 1, w 2 | x) = ln f(xi | w 1, w 2) are the maximum likelihood parameter estimates, w 1* & w 2*.

Falsifiability (Saturation) • Old rule of thumb: A model is falsifiable if the number of parameters are less than the number of data points. • New rule of thumb: A model is falsifiable iff the rank of the Jacobian is less than the number of data points. – A model is testable if the probability that a model’s predictions are right by chance is 0. – Holds under certain smoothness assumptions.

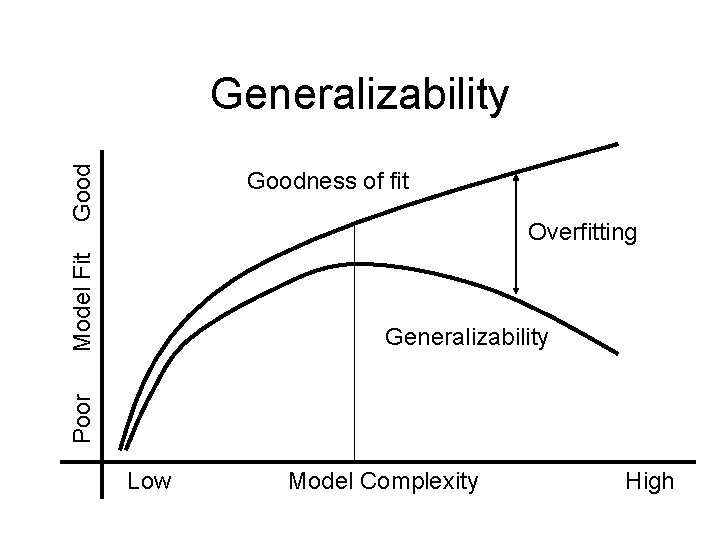

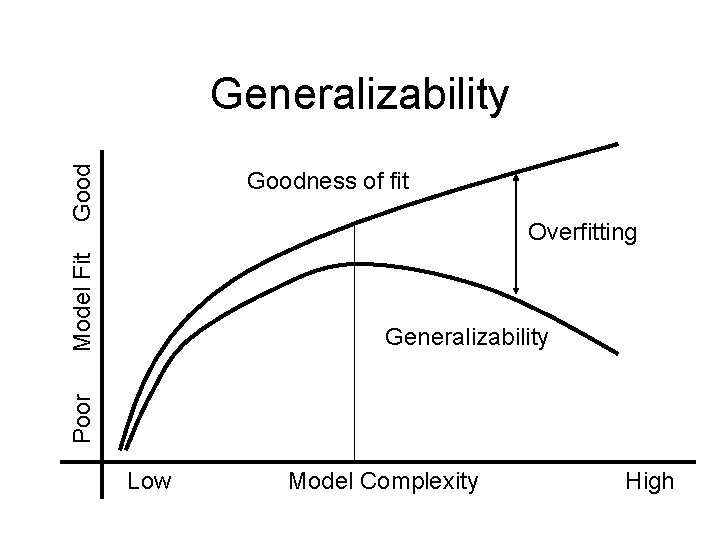

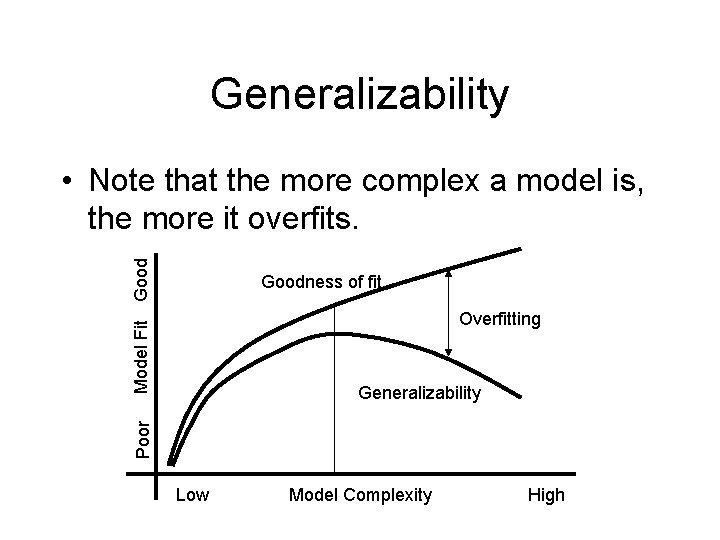

Generalizability • Generalizability (as defined by Myung) is the ability to generalize to new data from the same probability distribution. • Data are corrupted by random noise & so goodness of fit reflects the models ability to capture regularities and fit noise.

Generalizability • Goodness of fit = Fit to regularity (Generalizability) + Fit to noise (Overfitting)

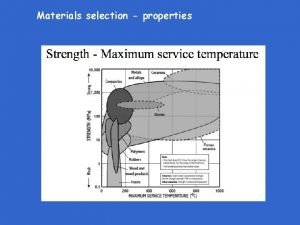

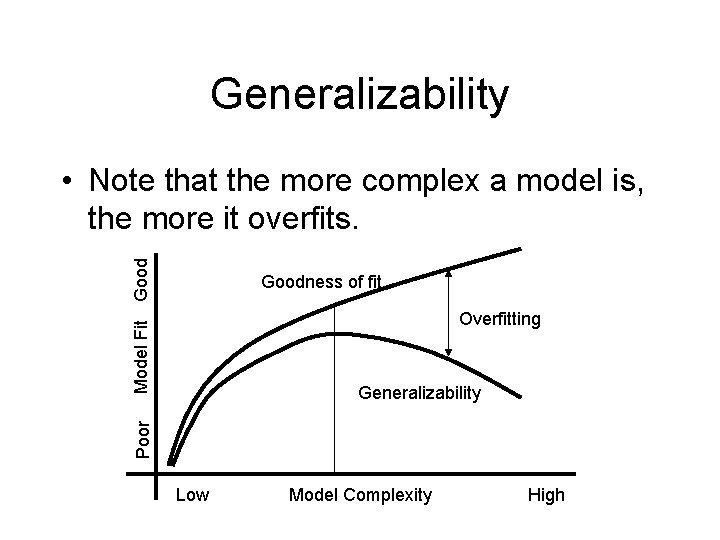

Good Generalizability Goodness of fit Model Fit Overfitting Poor Generalizability Low Model Complexity High

Generalizability Good • Note that the more complex a model is, the more it overfits. Goodness of fit Model Fit Overfitting Poor Generalizability Low Model Complexity High

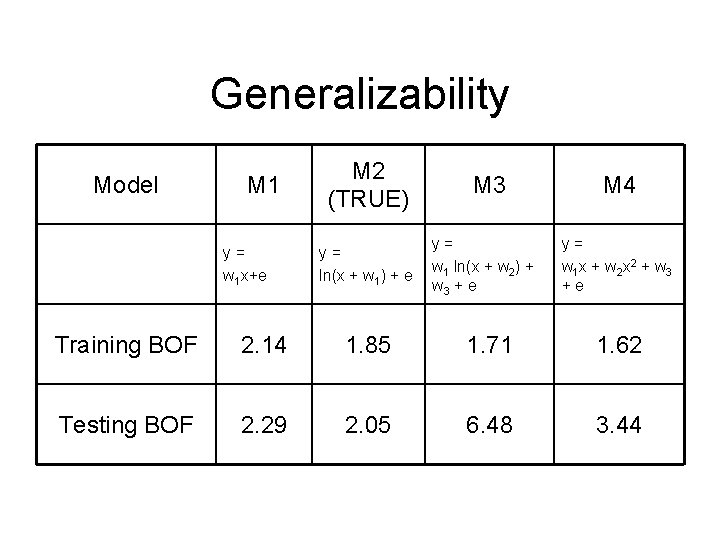

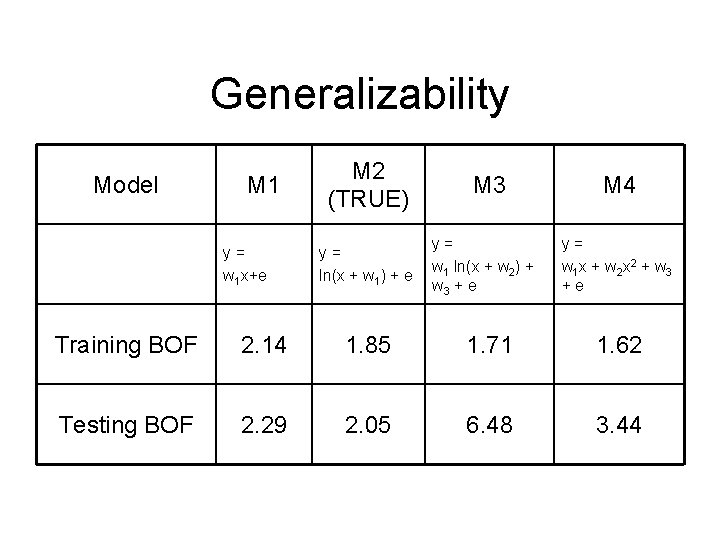

Generalizability Model M 1 y= w 1 x+e M 2 (TRUE) y= ln(x + w 1) + e M 3 y= w 1 ln(x + w 2) + w 3 + e M 4 y= w 1 x + w 2 x 2 + w 3 +e Training BOF 2. 14 1. 85 1. 71 1. 62 Testing BOF 2. 29 2. 05 6. 48 3. 44

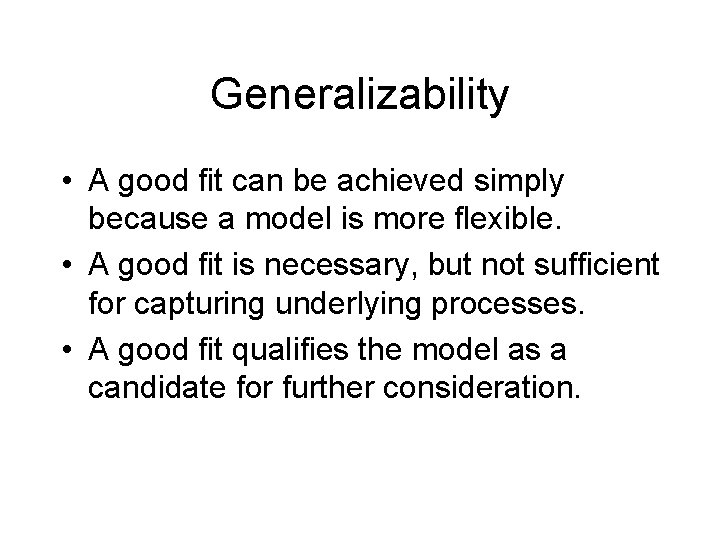

Generalizability • A good fit can be achieved simply because a model is more flexible. • A good fit is necessary, but not sufficient for capturing underlying processes. • A good fit qualifies the model as a candidate for further consideration.

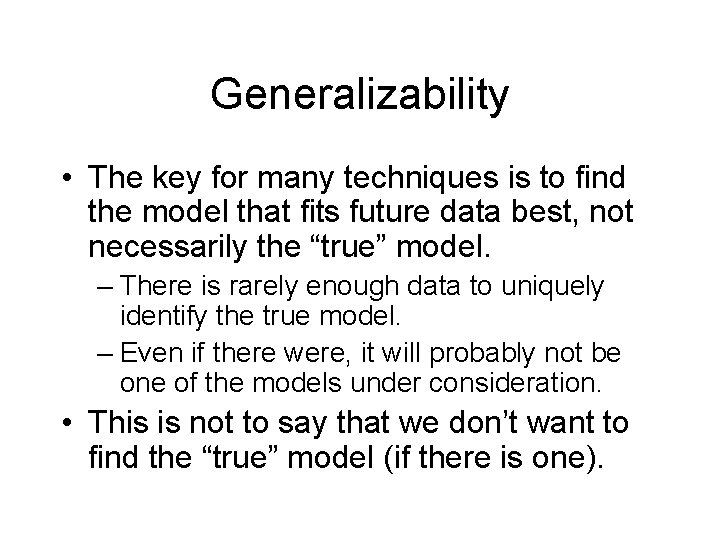

Generalizability • The key for many techniques is to find the model that fits future data best, not necessarily the “true” model. – There is rarely enough data to uniquely identify the true model. – Even if there were, it will probably not be one of the models under consideration. • This is not to say that we don’t want to find the “true” model (if there is one).

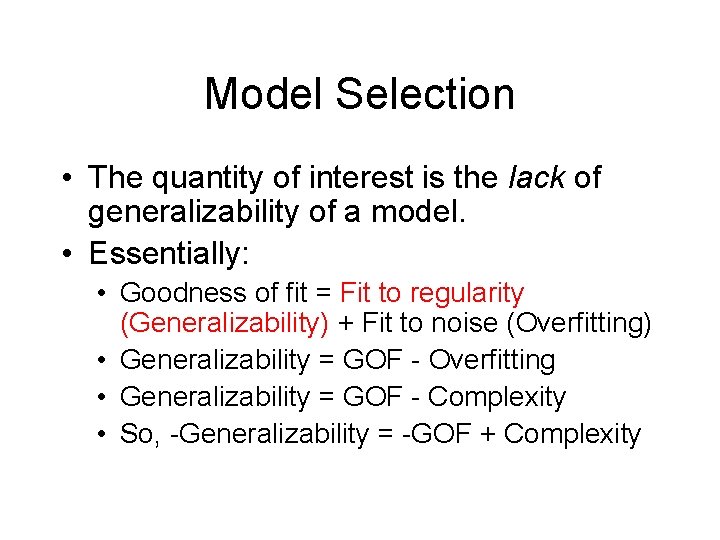

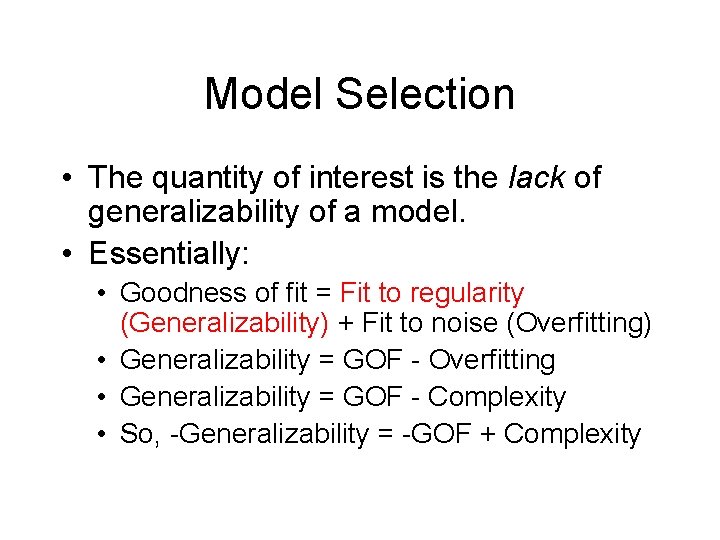

Model Selection • The quantity of interest is the lack of generalizability of a model. • Essentially: • Goodness of fit = Fit to regularity (Generalizability) + Fit to noise (Overfitting) • Generalizability = GOF - Overfitting • Generalizability = GOF - Complexity • So, -Generalizability = -GOF + Complexity

AIC • Akaike Information Criterion (AIC) • AIC is a lack of generalizability measure, so big is bad.

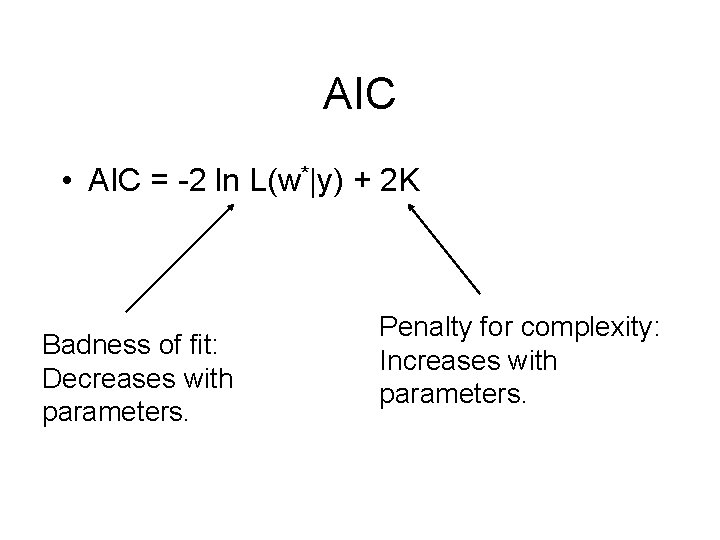

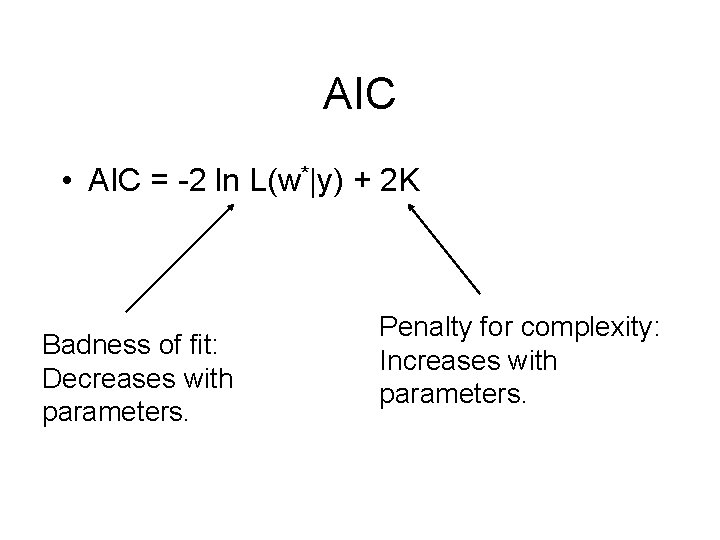

AIC • AIC = -2 ln L(w*|y) + 2 K – y are the data – w* are the MLE estimates – K is the number of model parameters

AIC • AIC = -2 ln L(w*|y) + 2 K Badness of fit: Decreases with parameters. Penalty for complexity: Increases with parameters.

AIC • AIC measures complexity via number of parameters. • Functional form is not considered. 1 parameter 2 parameters

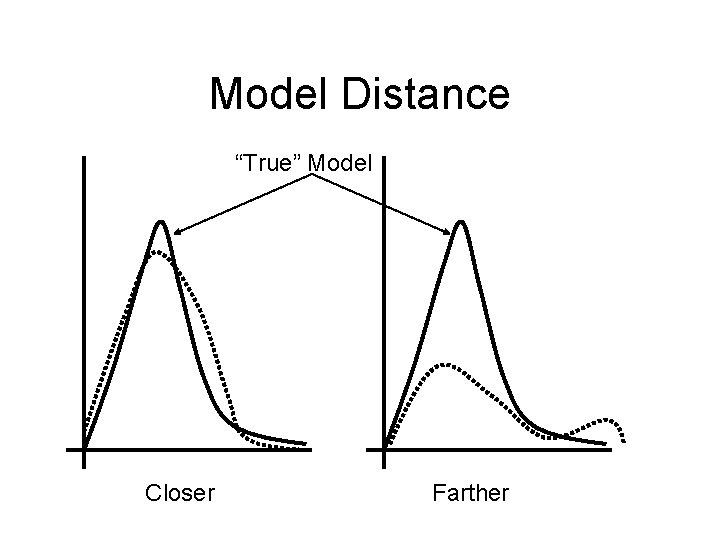

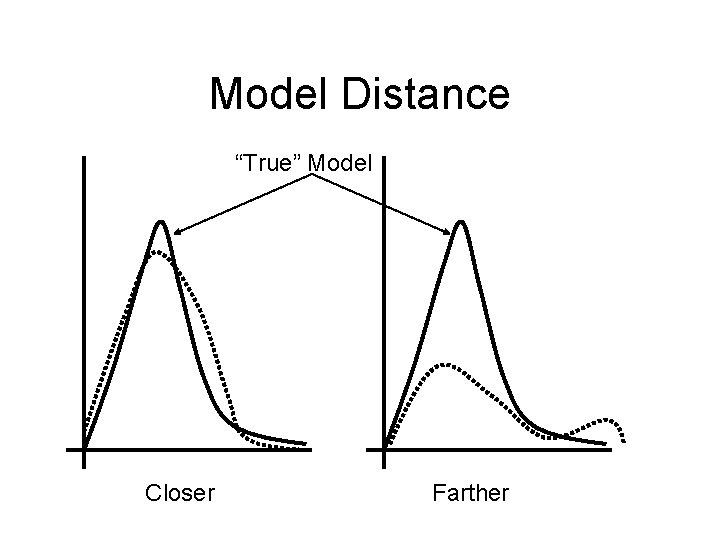

Model Distance “True” Model Closer Farther

AIC • AIC selects a model from a set of models that on average minimizes the distance between the model and the “True” model. • AIC does not depend on knowing the true model. • Given certain conditions, K corrects a statistical bias in estimating this distance.

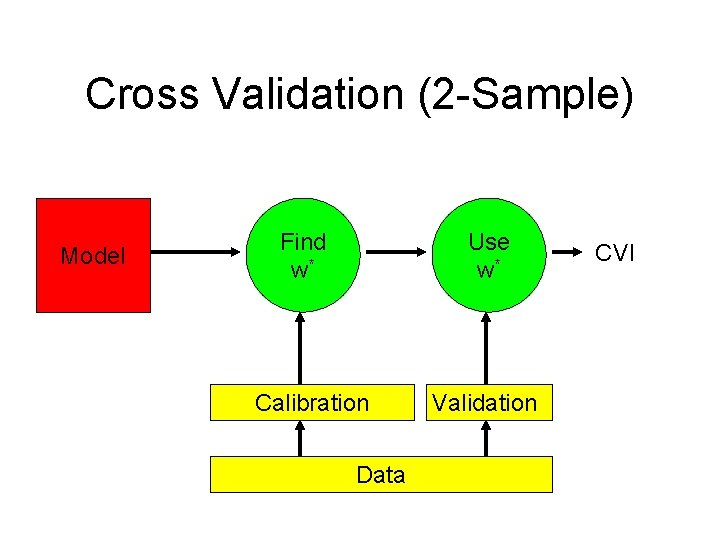

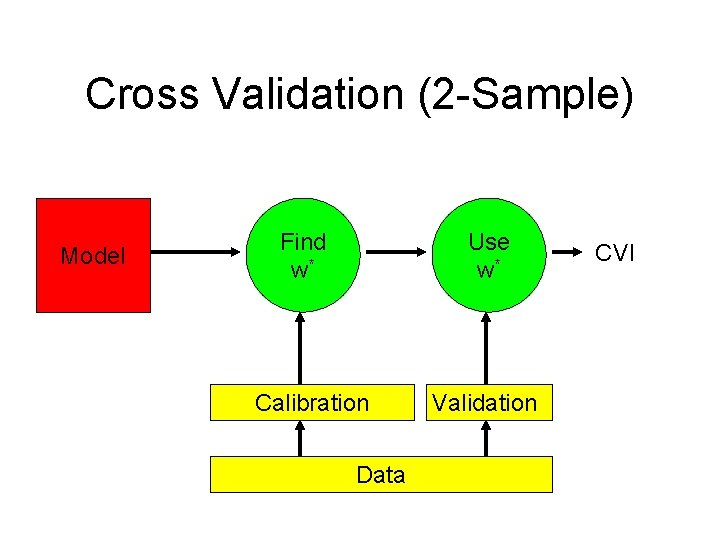

Cross Validation (2 -Sample) Model Find w* Use w* Calibration Data Validation CVI

Cross Validation • Easy to use. • Sensitive to functional form of model. • Not as theoretically grounded as other methods such as AIC.

Cross Validation & AIC • In single sample CV, the CVI is estimated from the calibration sample. • Where Ls is the likelihood of the saturated model with 0 df.

Minimum Descriptive Length • Suppose the following are data described in bits: – 0001000100010001 – 0111010000101011

Minimum Descriptive Length • The data can be coded as: – 0001000100010001 • for i=1: 7, disp(‘ 0001’), end – 0111010000101011 • disp(‘ 0111010000101011’)

Minimum Descriptive Length • Regularity in data can be used to compress the data. • The more regular the data are, relative to a particular coding method, the simpler the “program”. – The choice of coding method doesn’t matter so much.

Minimum Descriptive Length • Think of the ‘program’ as a model. • The model that best captures the regularities in the data will give the shortest code length. – 0001000100010001 • for i=1: 7, disp(‘ 0001’), end – 0111010000101011 • disp(‘ 0111010000101011’)

Minimum Descriptive Length • Capturing data regularities will lead to good prediction of future data, i. e. good generalization. • By finding the model with the minimum descriptive length, MDL will find the simplest model that predicts the data well.

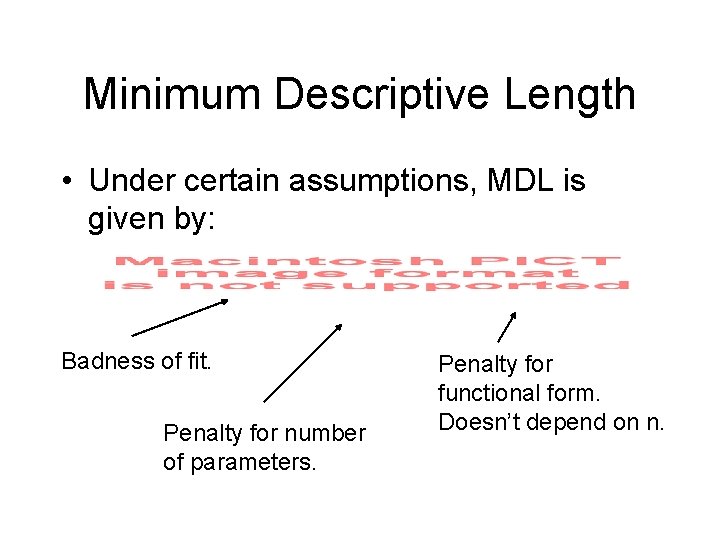

Minimum Descriptive Length • Under certain assumptions, MDL is given by: Badness of fit. Penalty for number of parameters. Penalty for functional form. Doesn’t depend on n.

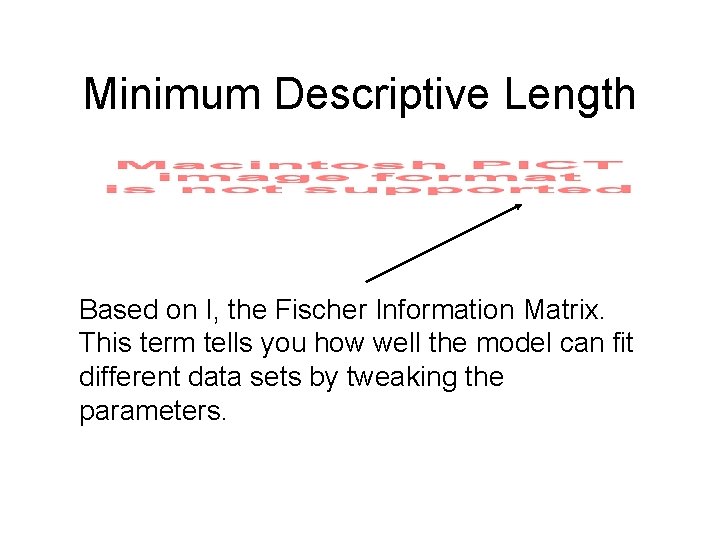

Minimum Descriptive Length Based on I, the Fischer Information Matrix. This term tells you how well the model can fit different data sets by tweaking the parameters.

Other Selection Criterion • • • Bayesian model selection Bayesian Information Criterion (BIC) Generalization Criterion Monte Carlo Techniques Etc…

Myung power

Myung power Carsten olsson

Carsten olsson Arvas foundation

Arvas foundation Mats olsson ki

Mats olsson ki Tom olsson

Tom olsson Ulf olsson son

Ulf olsson son Olsson

Olsson Jonas haage

Jonas haage Agenda sistemica y agenda institucional

Agenda sistemica y agenda institucional Balancing selection vs stabilizing selection

Balancing selection vs stabilizing selection Artificial selection vs natural selection

Artificial selection vs natural selection K selection r selection

K selection r selection Natural selection vs artificial selection

Natural selection vs artificial selection Artificial selection vs natural selection

Artificial selection vs natural selection Disruptive selection

Disruptive selection Logistic model of population growth

Logistic model of population growth Natural selection vs artificial selection

Natural selection vs artificial selection Two way selection and multiway selection

Two way selection and multiway selection Multiway selection in c

Multiway selection in c Mass selection

Mass selection Crc pitt

Crc pitt Pitt redcap

Pitt redcap Psychodynamische imaginative traumatherapie

Psychodynamische imaginative traumatherapie [email protected]

[email protected] Angelina jolie shrek

Angelina jolie shrek Mark pitt virginia tech

Mark pitt virginia tech Angelin ajolie

Angelin ajolie What is aaalac

What is aaalac Rorschach brad pitt

Rorschach brad pitt Primerx pitt

Primerx pitt Adam sandler brad pitt

Adam sandler brad pitt Cs 1520 pitt

Cs 1520 pitt Pitt sorc reimbursement

Pitt sorc reimbursement Dlar pitt

Dlar pitt Dr stephen wisniewski

Dr stephen wisniewski Brad pitt etnicity

Brad pitt etnicity Stat 1000 pitt

Stat 1000 pitt